Pain Level Classification from Speech Using GRU-Mixer Architecture with Log-Mel Spectrogram Features

Abstract

1. Introduction

- (1) An end-to-end differentiable architecture that integrates Mel-spectral analysis with GRU-Mixer temporal modeling, without hand-engineered prosodic features.

- (2) A speaker-independent, evidence-based preprocessing algorithm that standardizes recordings while preserving clinically relevant acoustic patterns.

- (3) Complementary representations that combine fixed spectral frames with non-stationary recurrent encodings to produce a rich joint spectral-temporal signature of pain and thermal state.

- (4) A multi-task training framework whose regularization techniques and balanced metrics together support robust, real-time audio monitoring across pain presence, temperature sensation, and graded pain intensity.

- (5) To the best of our knowledge, the first study to use the TAME Pain dataset for supervised classification of vocal expressions of pain. By applying an end-to-end deep learning architecture to this recently released corpus, we establish a benchmark for future work in speech-based pain recognition.
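Contribution (1) relies on Mel-spectral analysis as the front end. As a rough illustration of what such a front end computes, the sketch below maps frequencies onto the mel scale, lays out the band edges of a triangular filterbank, and applies log compression to a band energy. It uses the common HTK-style formula m = 2595 log10(1 + f/700). The function names, the 64-band count, the 0 to 8000 Hz range, and the epsilon floor are assumptions for illustration, not settings taken from this paper.

```python
import math

def hz_to_mel(f_hz: float) -> float:
    """HTK-style conversion from frequency in Hz to mels."""
    return 2595.0 * math.log10(1.0 + f_hz / 700.0)

def mel_to_hz(m: float) -> float:
    """Inverse of hz_to_mel."""
    return 700.0 * (10.0 ** (m / 2595.0) - 1.0)

def mel_band_edges(n_mels: int = 64, f_min: float = 0.0, f_max: float = 8000.0):
    """Return n_mels + 2 edge frequencies (Hz), equally spaced on the mel scale.

    Consecutive triples (edges[i], edges[i+1], edges[i+2]) delimit one
    triangular filter. n_mels, f_min and f_max are assumed values.
    """
    m_lo, m_hi = hz_to_mel(f_min), hz_to_mel(f_max)
    step = (m_hi - m_lo) / (n_mels + 1)
    return [mel_to_hz(m_lo + i * step) for i in range(n_mels + 2)]

def log_compress(energy: float, eps: float = 1e-10) -> float:
    """Convert a non-negative band energy to decibels, flooring at eps."""
    return 10.0 * math.log10(max(energy, eps))

edges = mel_band_edges()
# Bands are narrow at low frequencies and widen towards f_max.
print(f"first band width: {edges[1] - edges[0]:.1f} Hz")
print(f"last band width:  {edges[-1] - edges[-2]:.1f} Hz")
```

Equal spacing on the mel scale gives finer resolution at low frequencies, which is the usual motivation for choosing log-mel features over a linear-frequency spectrogram for speech.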

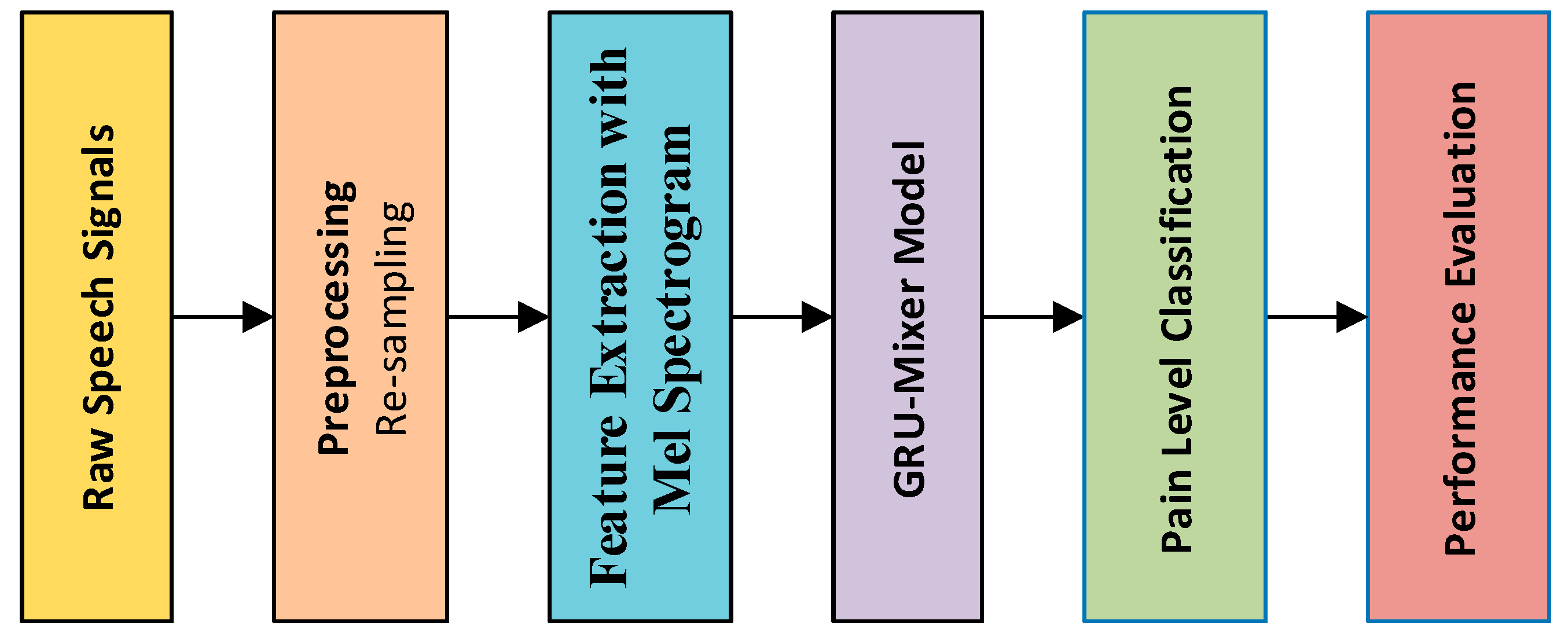

2. Materials and Methods

2.1. Preprocessing

2.2. Feature Extraction with Mel Spectrogram

2.3. GRU-Mixer

2.4. Dataset

3. Experimental Works and Results

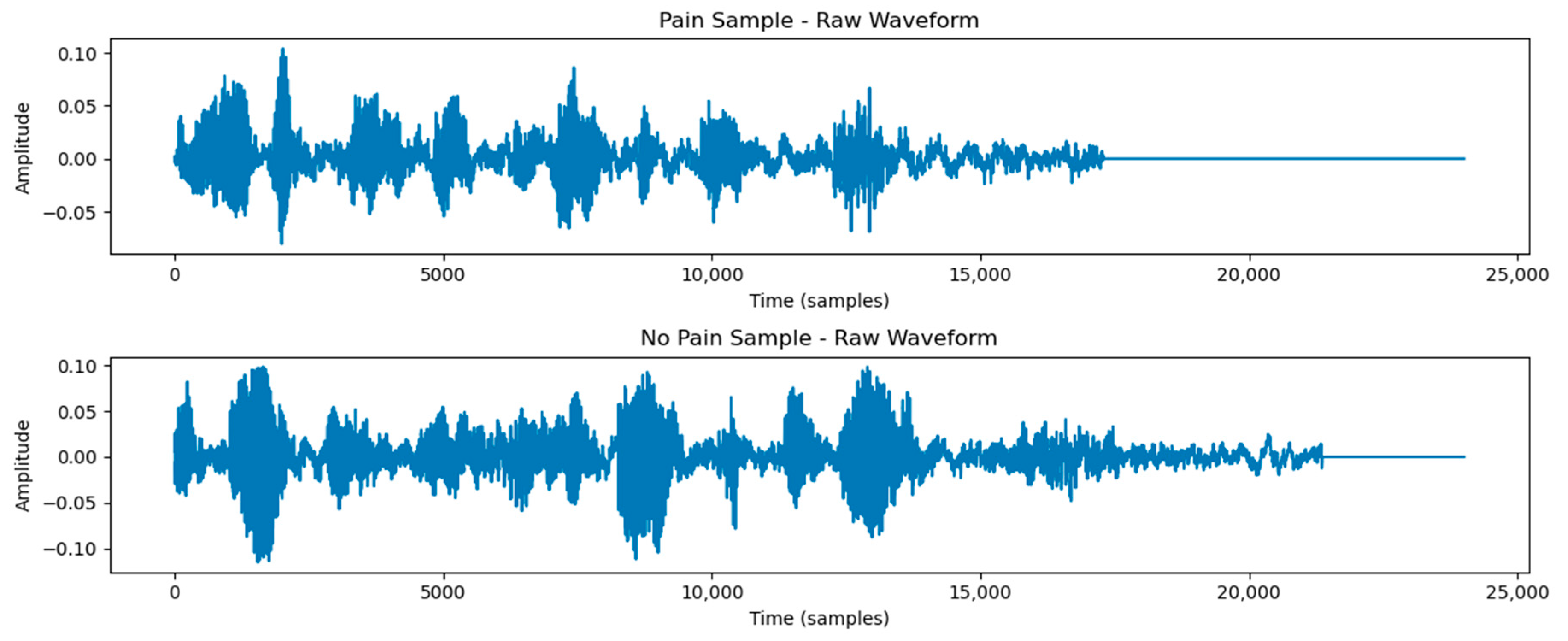

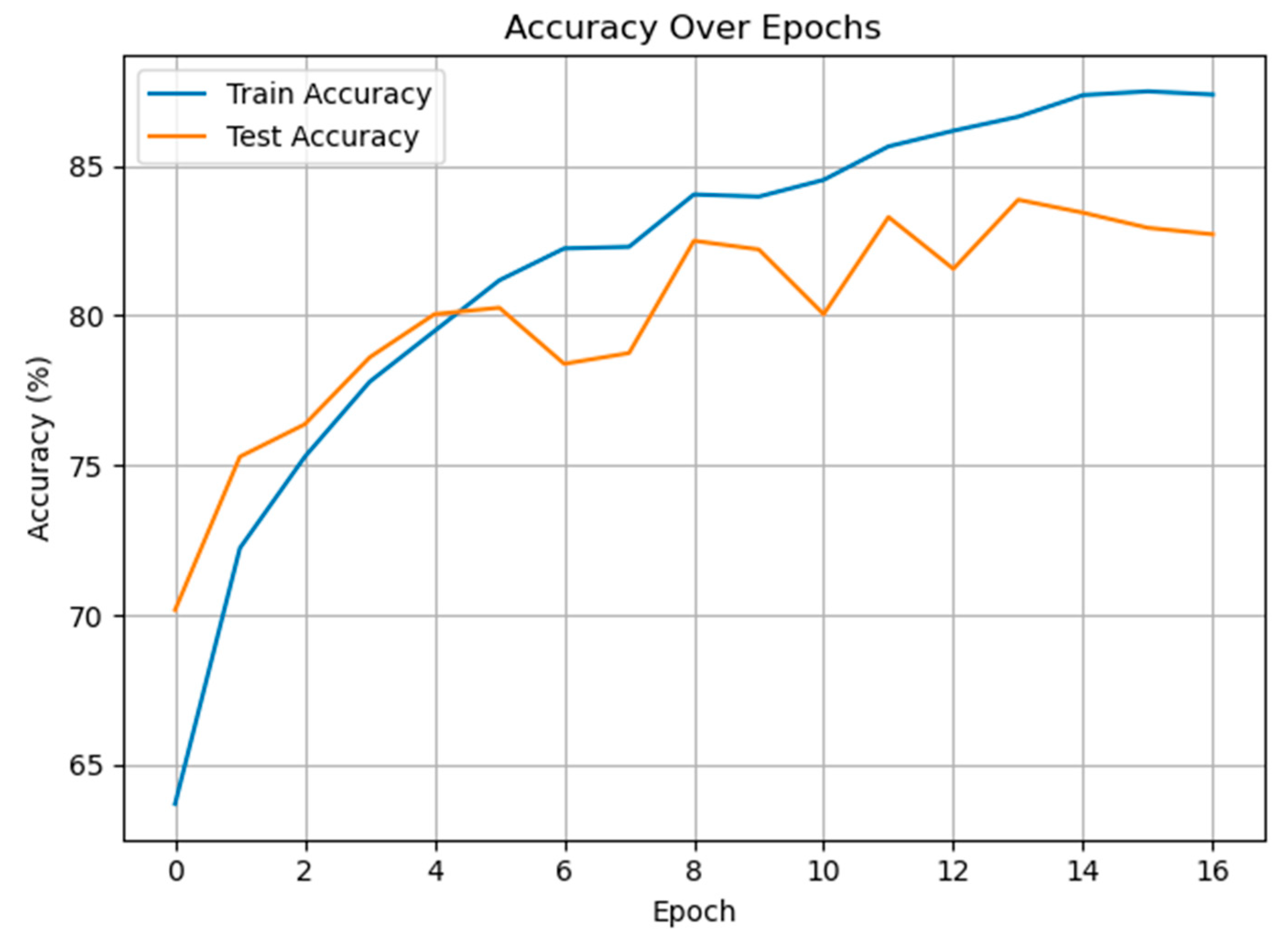

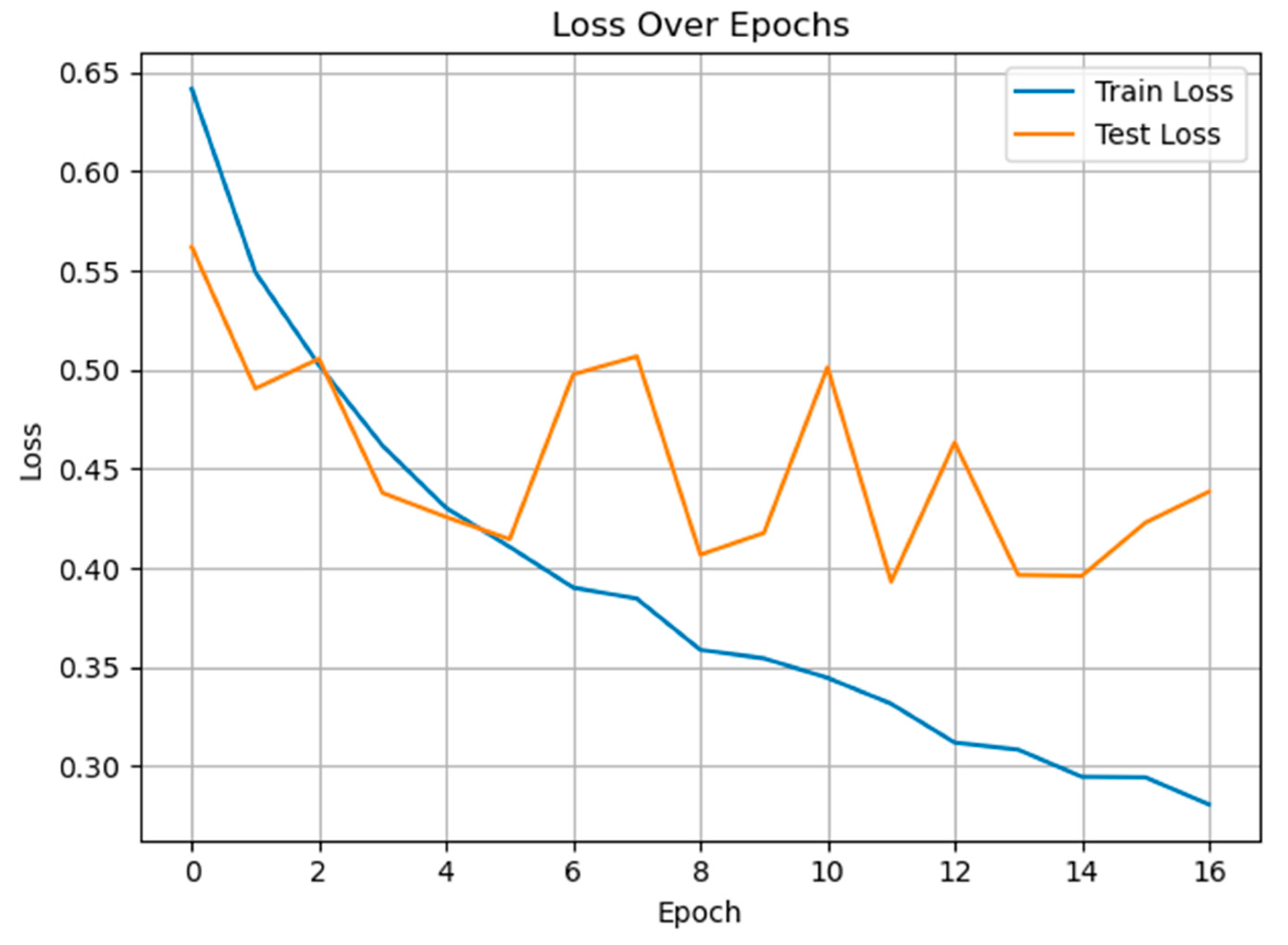

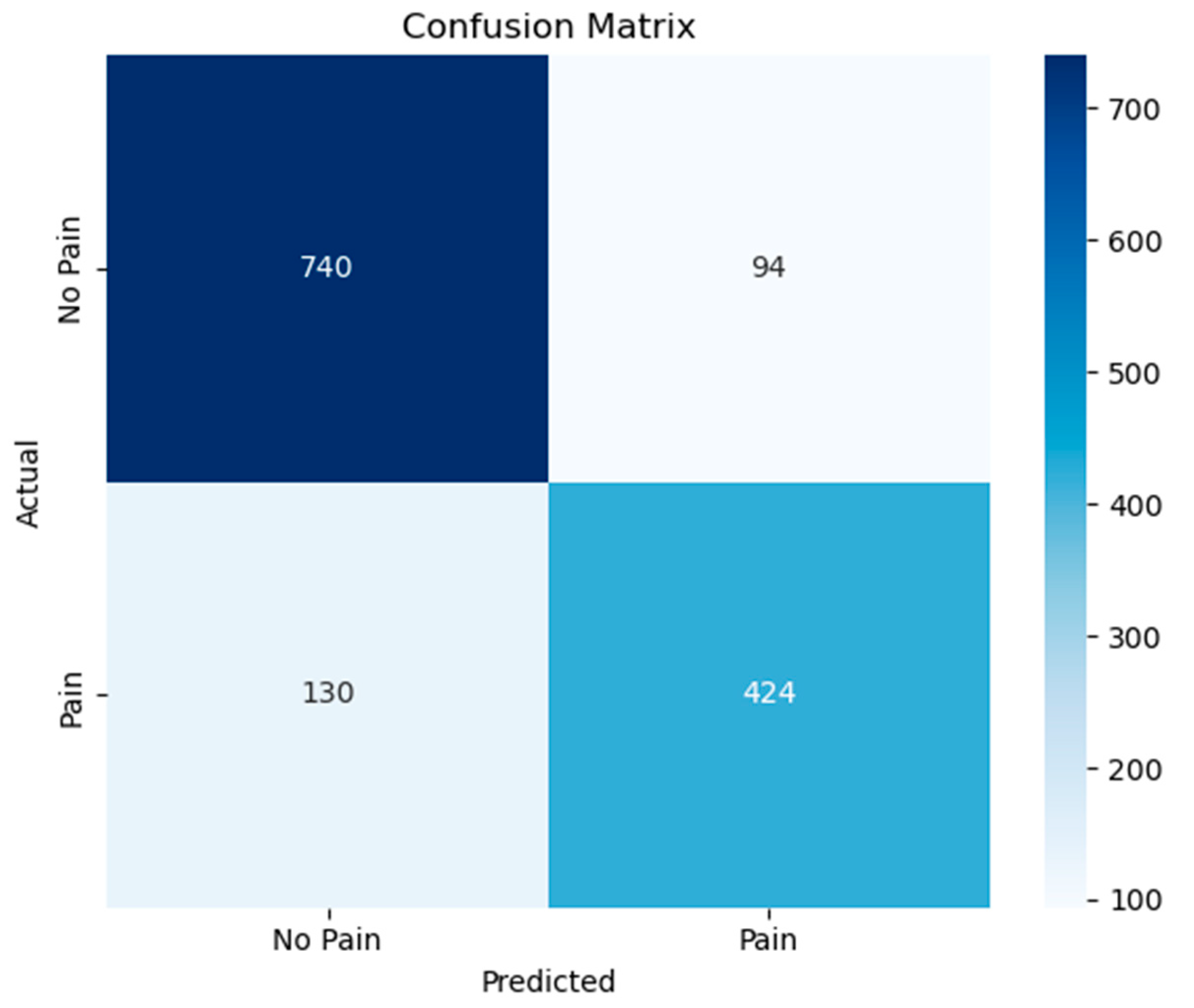

3.1. Classification of Pain and Non-Pain Scenarios

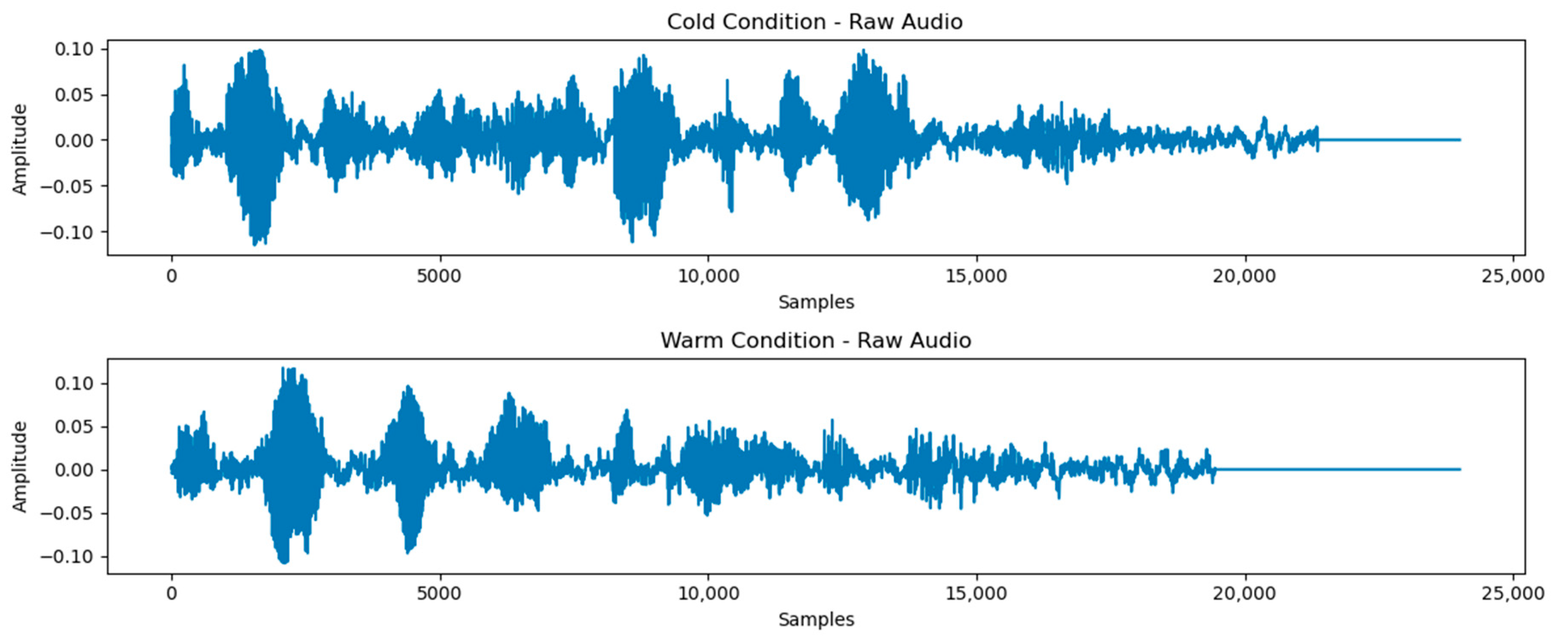

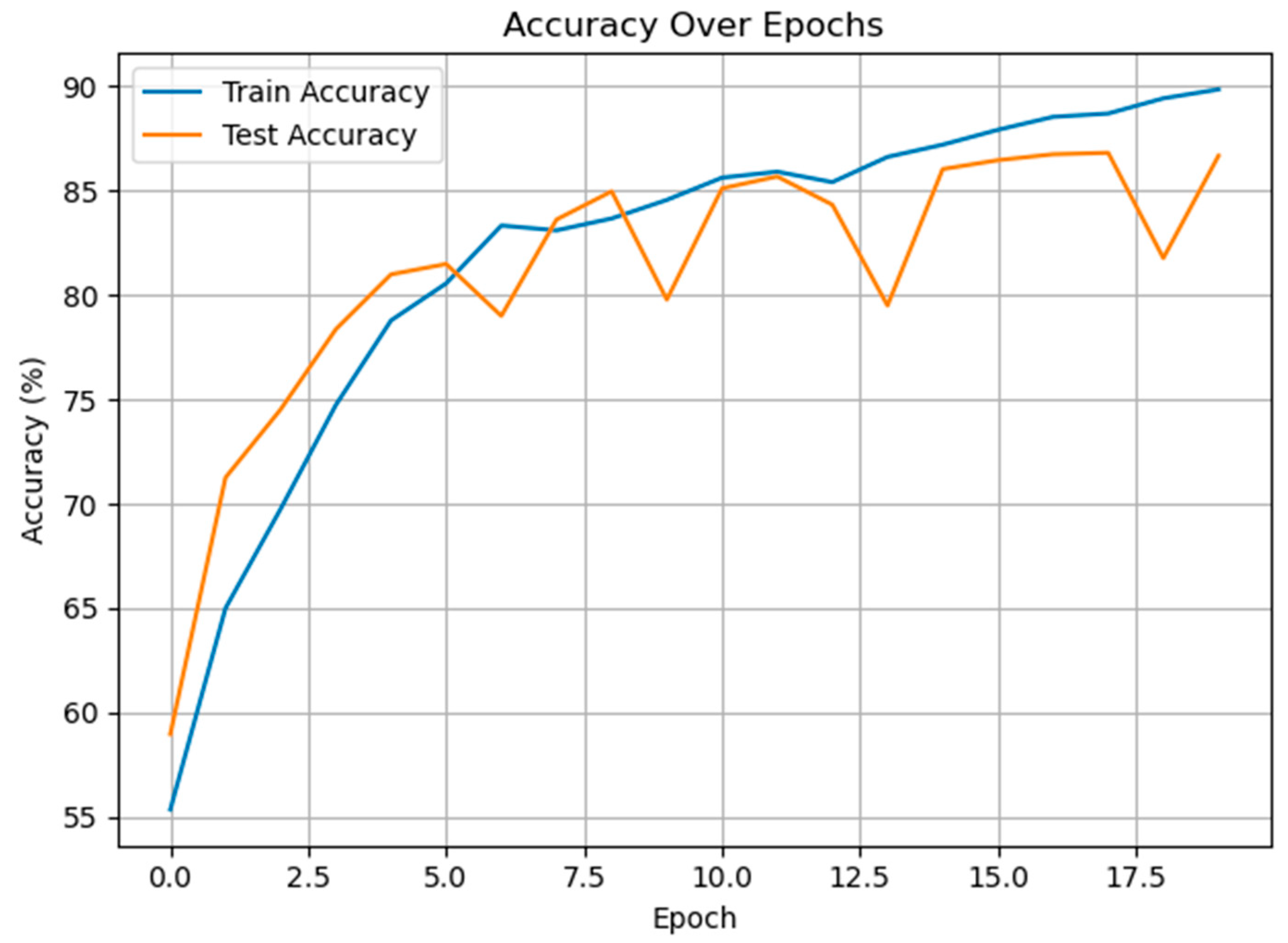

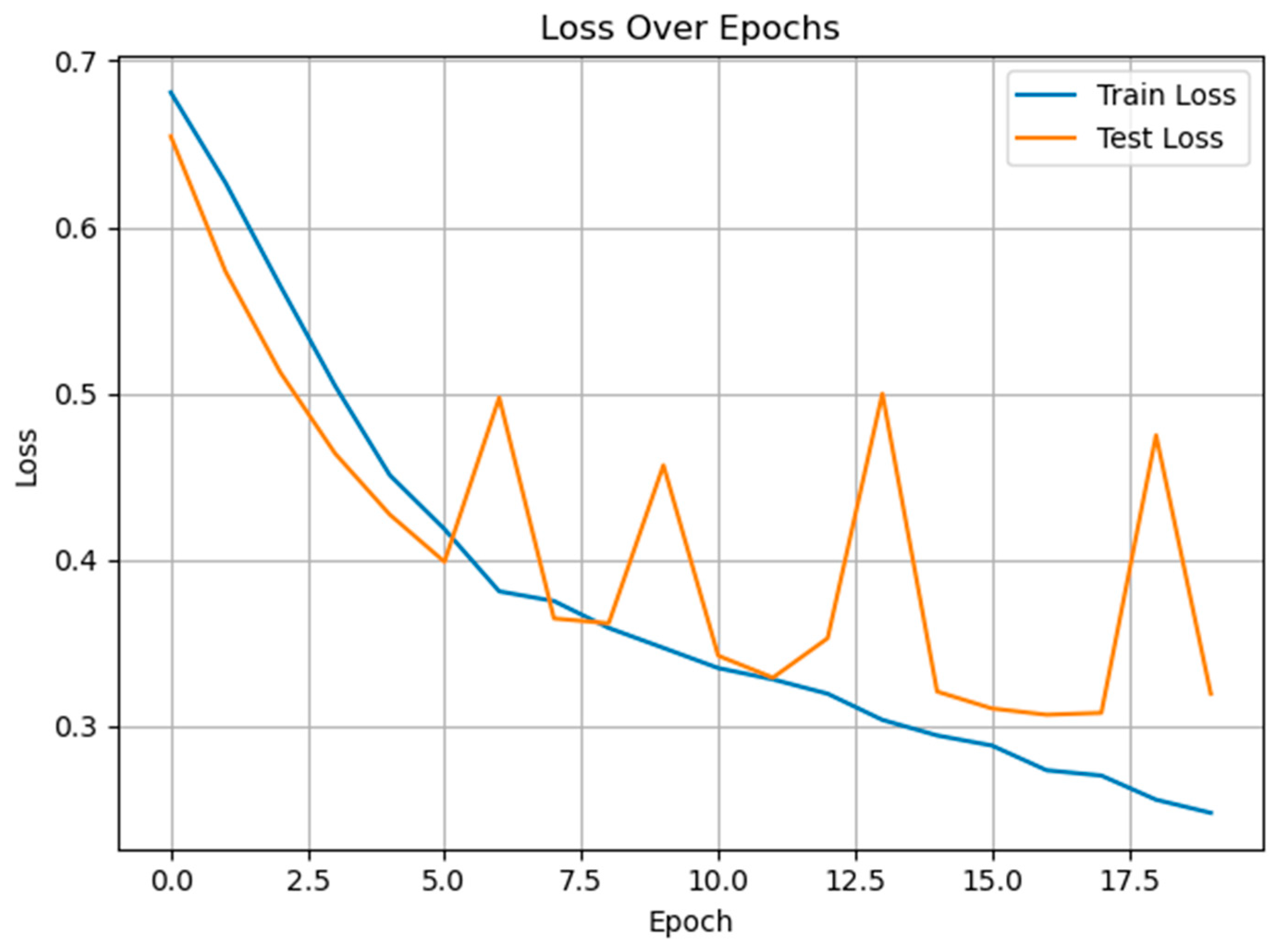

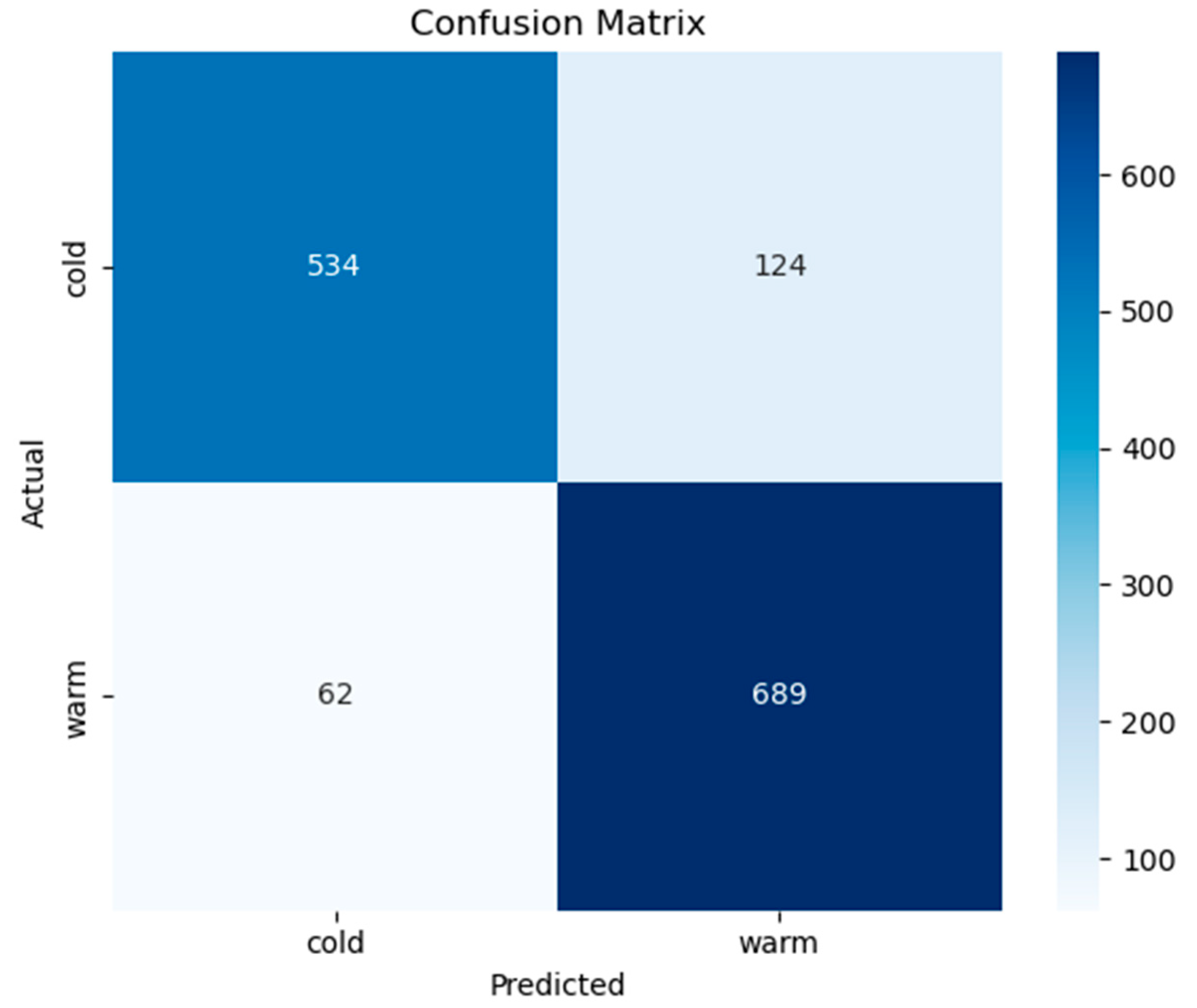

3.2. Classification of Cold and Warm Condition Classes

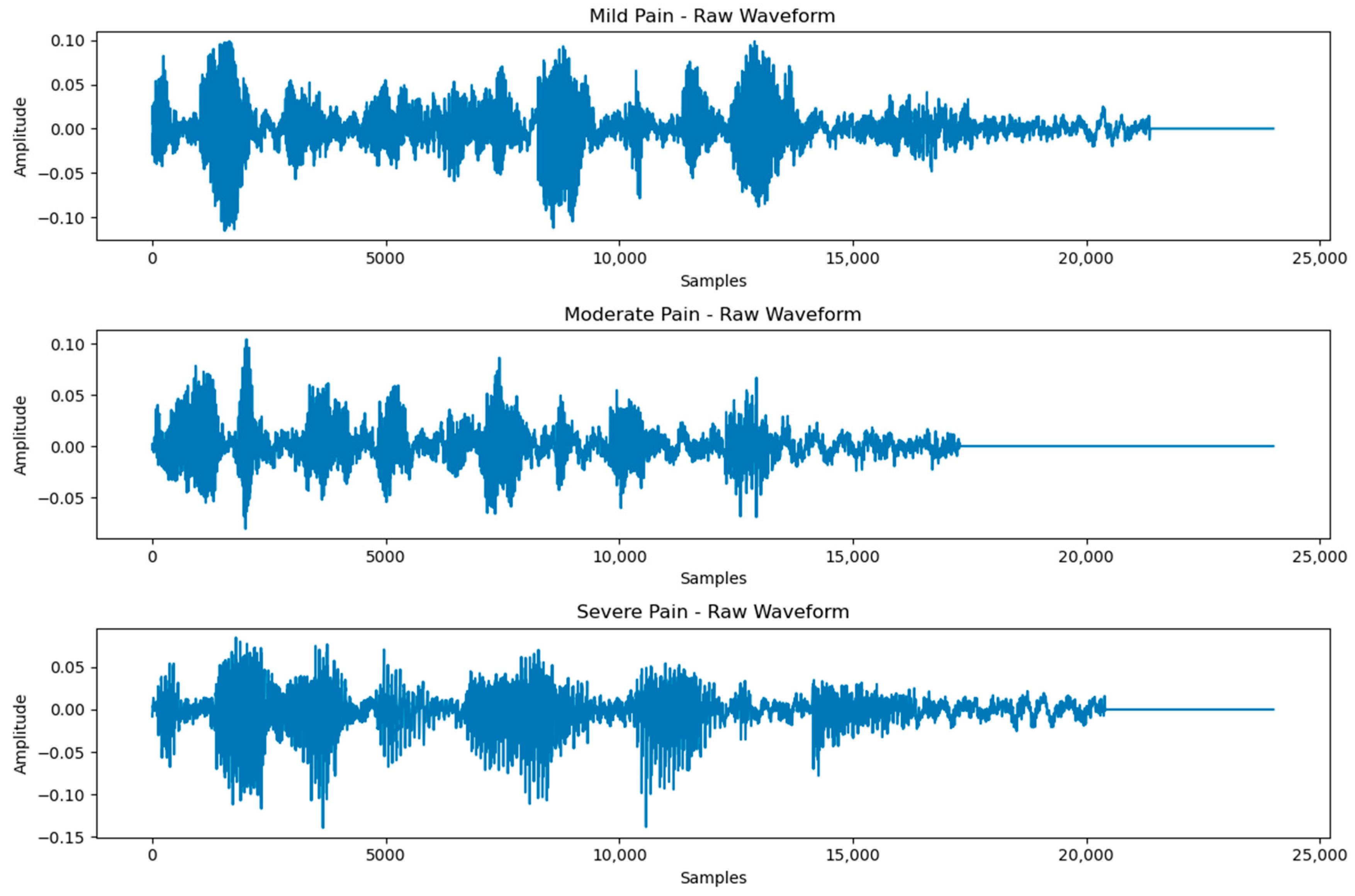

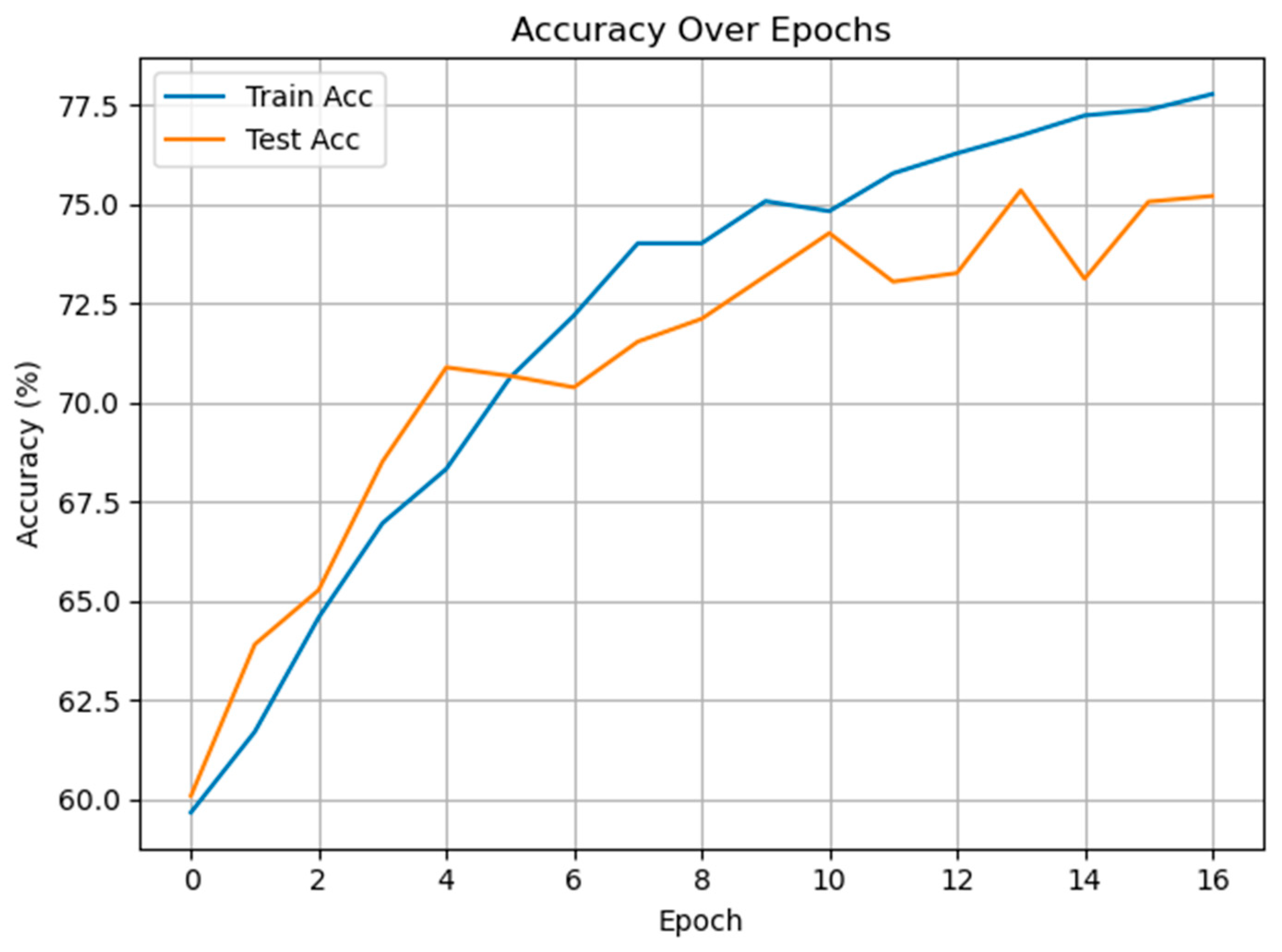

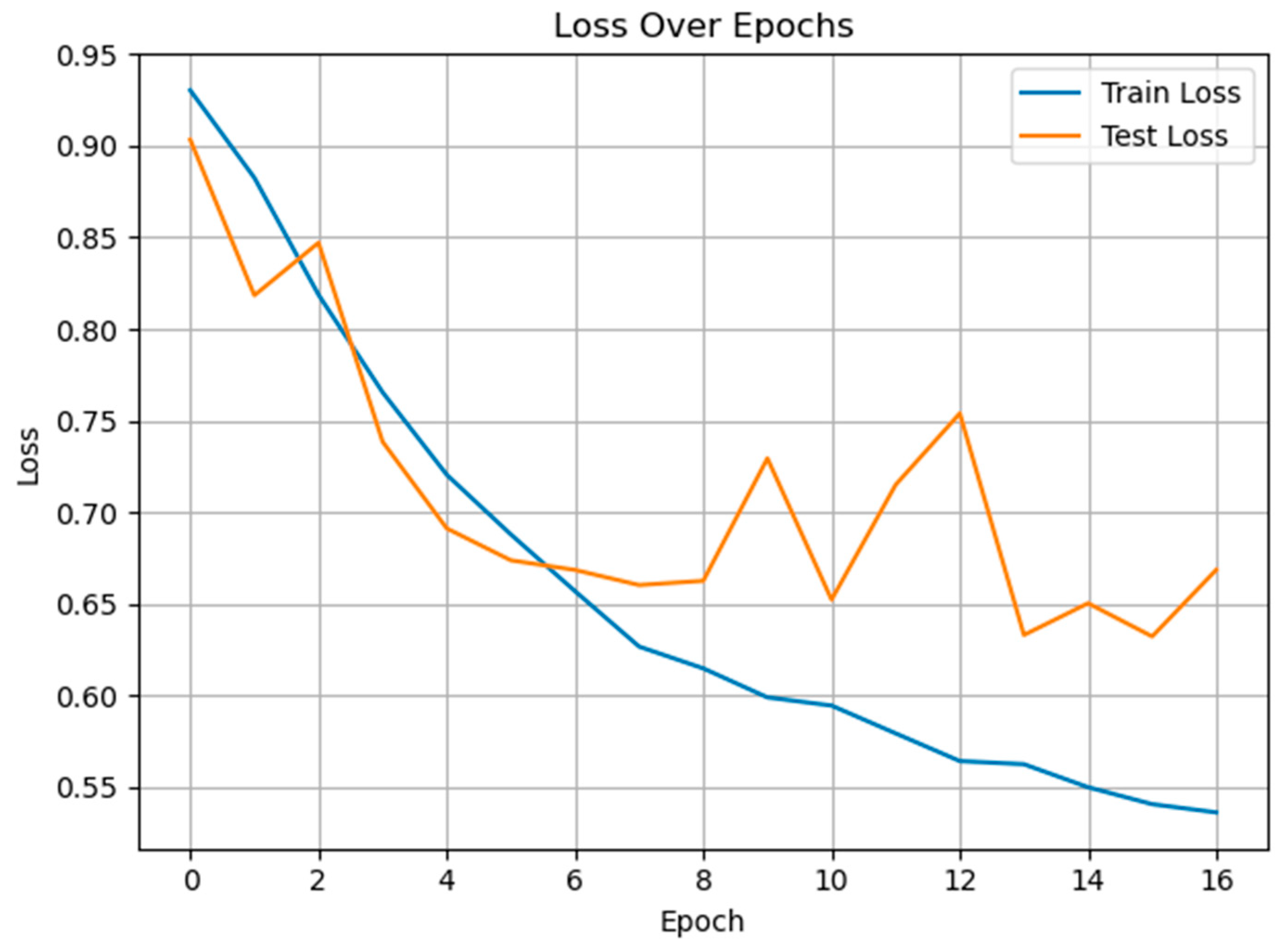

3.3. Classification of Multiclass Pain Classes

3.4. Ablation Study

4. Discussions

5. Conclusions and Future Work

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Evaluation | Class | Precision | Recall | F1-Score |
|---|---|---|---|---|
| Class-Based Evaluation | non-pain | 0.8506 | 0.8873 | 0.8685 |
| Class-Based Evaluation | pain | 0.8185 | 0.7653 | 0.7910 |
| General Average Evaluation | Macro Avg. | 0.8346 | 0.8263 | 0.8298 |
| General Average Evaluation | Weighted Avg. | 0.8378 | 0.8386 | 0.8376 |

Accuracy: 0.8386
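The per-class and macro-averaged figures in the table above are linked by simple formulas: F1 is the harmonic mean of precision and recall, and a macro average is the unweighted mean over classes. The sketch below recomputes both from the reported precision and recall values as a consistency check. The helper names are illustrative, and small last-digit differences are expected because the inputs are already rounded to four decimals.

```python
def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall."""
    return 2.0 * precision * recall / (precision + recall)

def macro_average(values) -> float:
    """Unweighted mean over classes."""
    values = list(values)
    return sum(values) / len(values)

# (precision, recall, reported F1) per class, copied from the table above.
reported = {
    "non-pain": (0.8506, 0.8873, 0.8685),
    "pain": (0.8185, 0.7653, 0.7910),
}

recomputed_f1 = {name: f1_score(p, r) for name, (p, r, _) in reported.items()}
macro_precision = macro_average(p for p, _, _ in reported.values())
macro_recall = macro_average(r for _, r, _ in reported.values())
macro_f1 = macro_average(f for _, _, f in reported.values())

for name, (_, _, f_reported) in reported.items():
    print(f"{name}: reported F1 {f_reported:.4f}, recomputed {recomputed_f1[name]:.4f}")
print(f"macro P/R/F1: {macro_precision:.4f} / {macro_recall:.4f} / {macro_f1:.4f}")
```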

| Evaluation | Class | Precision | Recall | F1-Score |
|---|---|---|---|---|
| Class-Based Evaluation | cold | 0.8960 | 0.8116 | 0.8517 |
| Class-Based Evaluation | warm | 0.8475 | 0.9174 | 0.8811 |
| General Average Evaluation | Macro Avg. | 0.8717 | 0.8645 | 0.8664 |
| General Average Evaluation | Weighted Avg. | 0.8701 | 0.8680 | 0.8673 |

Accuracy: 0.8680
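A weighted average mixes the per-class scores in proportion to class support, so for two classes the support share w can be back-solved from any one metric via weighted = w · cold + (1 − w) · warm. The sketch below does this with the recall column and then checks the inferred share against the precision and F1 columns. The resulting share is an inference from rounded table values, not a figure reported in this section, and the helper name is hypothetical.

```python
def infer_share(score_a: float, score_b: float, weighted: float) -> float:
    """Solve weighted = w * score_a + (1 - w) * score_b for w."""
    return (weighted - score_b) / (score_a - score_b)

# Values copied from the cold/warm table above.
cold = {"precision": 0.8960, "recall": 0.8116, "f1": 0.8517}
warm = {"precision": 0.8475, "recall": 0.9174, "f1": 0.8811}
weighted = {"precision": 0.8701, "recall": 0.8680, "f1": 0.8673}

# Inferred fraction of test samples belonging to the cold class.
w_cold = infer_share(cold["recall"], warm["recall"], weighted["recall"])

# Cross-check: the same share should reproduce the other weighted averages.
predicted = {
    key: w_cold * cold[key] + (1.0 - w_cold) * warm[key]
    for key in ("precision", "f1")
}

print(f"inferred cold share: {w_cold:.3f}")
for key, value in predicted.items():
    print(f"weighted {key}: reported {weighted[key]:.4f}, predicted {value:.4f}")
```

If the three columns are mutually consistent, the predicted weighted precision and F1 should agree with the reported ones to within rounding, which suggests the cold class makes up a little under half of the evaluated samples.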

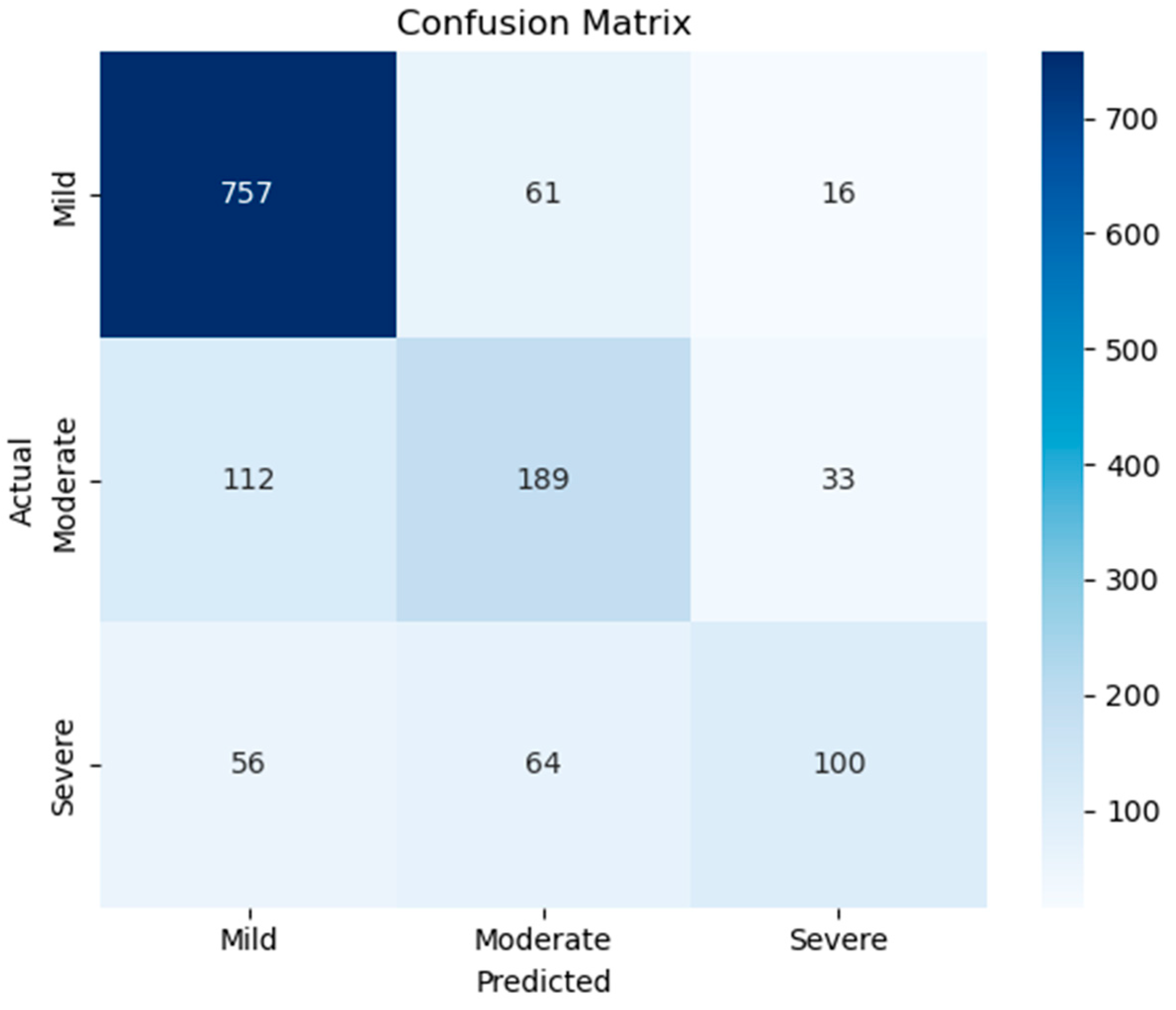

| Evaluation | Class | Precision | Recall | F1-Score |
|---|---|---|---|---|
| Class-Based Evaluation | Mild | 0.8184 | 0.9077 | 0.8607 |
| Class-Based Evaluation | Moderate | 0.6019 | 0.5659 | 0.5833 |
| Class-Based Evaluation | Severe | 0.6711 | 0.4545 | 0.5420 |
| General Average Evaluation | Macro Avg. | 0.6971 | 0.6427 | 0.6620 |
| General Average Evaluation | Weighted Avg. | 0.7430 | 0.7536 | 0.7435 |

Accuracy: 0.7536

| Model | Accuracy | Precision | Recall | F1-Score |
|---|---|---|---|---|
| Full Model | 0.8271 | 0.8257 | 0.7184 | 0.7683 |
| No Dropout | 0.8170 | 0.8378 | 0.6715 | 0.7455 |
| No Weight Decay | 0.8127 | 0.8467 | 0.6480 | 0.7342 |
| No Early Stopping | 0.8386 | 0.7957 | 0.8014 | 0.7986 |
| No Dropout + No Weight Decay | 0.8170 | 0.7187 | 0.8899 | 0.7952 |
| GRU Only | 0.8422 | 0.8587 | 0.7238 | 0.7855 |
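One way to read the ablation table above is as differences from the full model. The sketch below uses only the F1-scores listed in the table, computes each variant's change in F1 relative to the Full Model row, and sorts the variants by that change. The dictionary and variable names are illustrative.

```python
# F1-scores copied from the ablation table above.
ablation_f1 = {
    "Full Model": 0.7683,
    "No Dropout": 0.7455,
    "No Weight Decay": 0.7342,
    "No Early Stopping": 0.7986,
    "No Dropout + No Weight Decay": 0.7952,
    "GRU Only": 0.7855,
}

baseline = ablation_f1["Full Model"]

# Positive delta: the variant scores higher than the full model.
deltas = {
    name: round(f1 - baseline, 4)
    for name, f1 in ablation_f1.items()
    if name != "Full Model"
}

ranked = sorted(deltas.items(), key=lambda item: item[1], reverse=True)
for name, delta in ranked:
    print(f"{name:30s} {delta:+.4f}")
```

On these numbers, removing dropout or weight decay individually lowers F1, while the no-early-stopping, GRU-only, and combined no-dropout/no-weight-decay variants score higher than the full model, so differences of this size are best read with caution in the absence of variance estimates.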

| Model | Macro-Precision | Macro-Recall | Macro-F1-Score |
|---|---|---|---|
| LogReg | 0.5509 | 0.5857 | 0.5533 |
| SVM | 0.5974 | 0.6363 | 0.6057 |
| kNN-5 | 0.6297 | 0.5138 | 0.5378 |
| DT | 0.5483 | 0.5463 | 0.5473 |
| RF | 0.7633 | 0.5617 | 0.6006 |
| GRU-Mixer | 0.6971 | 0.6427 | 0.6620 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Alhudhaif, A. Pain Level Classification from Speech Using GRU-Mixer Architecture with Log-Mel Spectrogram Features. Diagnostics 2025, 15, 2362. https://doi.org/10.3390/diagnostics15182362
