A Comparative Analysis of the Mamba, Transformer, and CNN Architectures for Multi-Label Chest X-Ray Anomaly Detection in the NIH ChestX-Ray14 Dataset

Abstract

1. Introduction

2. Related Works

3. Materials and Methods

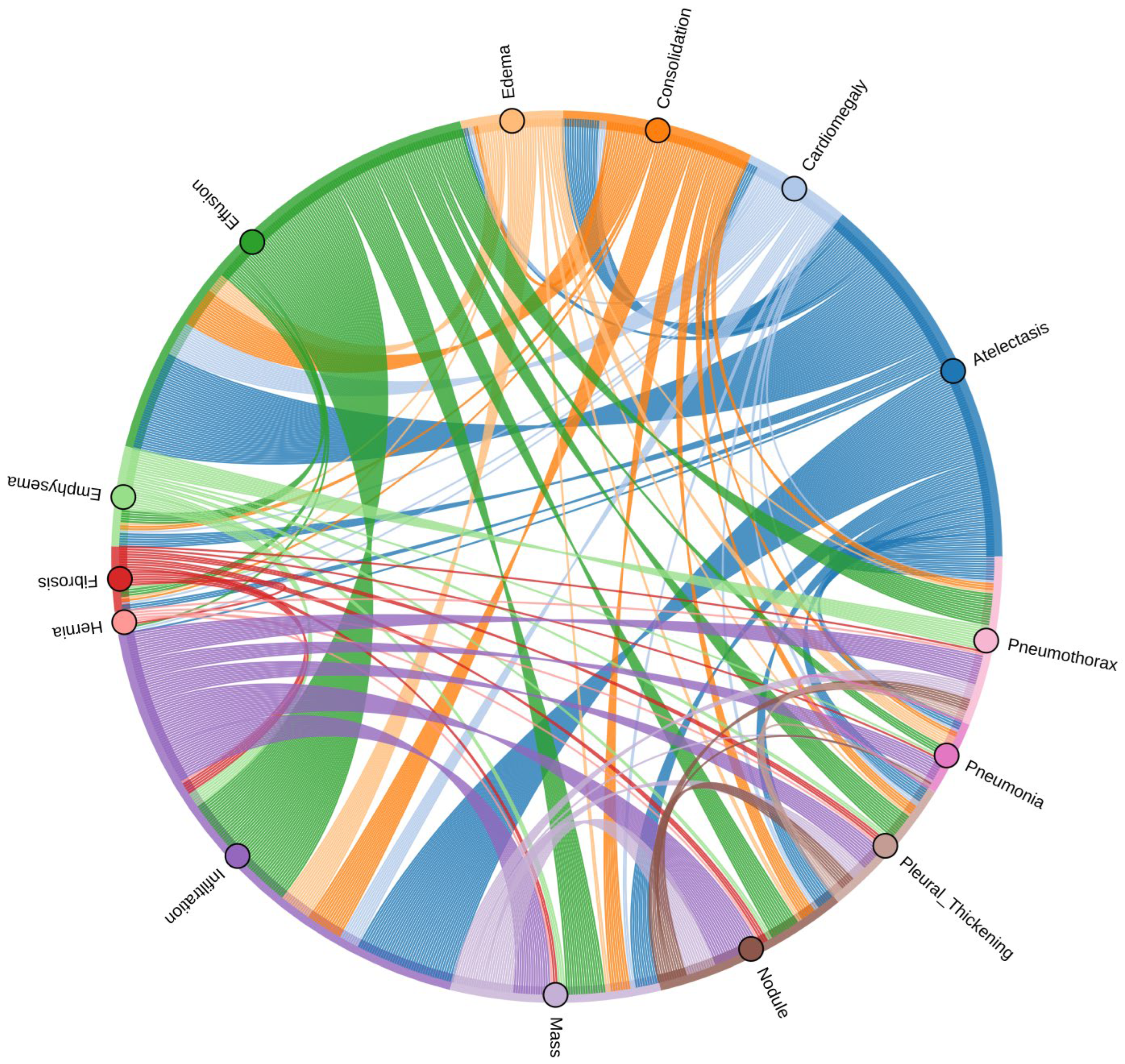

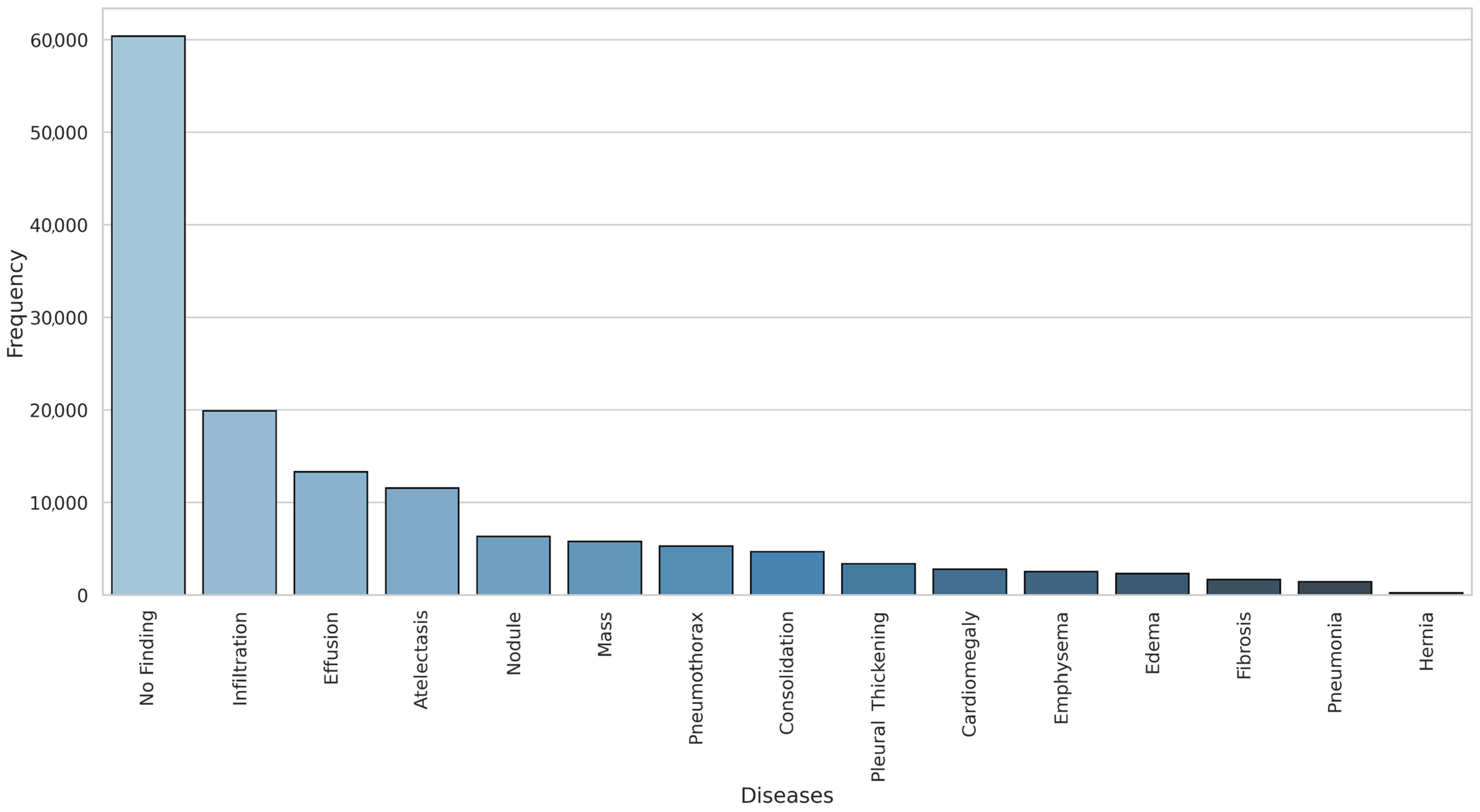

3.1. Dataset Description

3.2. Model Architectures

3.2.1. Convolutional Neural Networks (CNNs)

- DenseNet121 (8 M parameters): DenseNet’s dense connectivity pattern, where each layer receives inputs from all preceding layers in its dense block, promotes feature reuse and mitigates the vanishing gradient problem. This dense information flow enables the model to learn richer and more diverse features with fewer parameters [19]. DenseNet121’s success in CheXNet, which achieved radiologist-level pneumonia detection on NIH ChestX-ray14, illustrates its capability to capture subtle radiographic patterns critical for thoracic disease identification [10].

- ResNet34 (21 M)—ResNet’s introduction of residual connections fundamentally improved the trainability of deep networks by allowing gradients to bypass several layers, addressing degradation problems in very deep architectures. ResNet34 strikes a balance between depth and complexity, offering stable training and generalization. Its residual blocks help the model learn both low-level textures and higher-order semantic features, which are essential for differentiating overlapping pathologies in chest X-rays [20].

- InceptionV3 (24 M)—Inception architectures utilize parallel convolutional filters of varying sizes (e.g., 1 × 1, 3 × 3, and 5 × 5) within inception modules to capture multi-scale spatial features simultaneously. This design enables the network to efficiently aggregate local and global contextual information, enhancing its ability to discern complex lung patterns such as nodules, masses, or infiltrates. InceptionV3 has shown robustness in handling the heterogeneous data distributions commonly found in medical images [21].
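To make the dense connectivity pattern described above concrete, the following sketch tracks how many feature channels accumulate through the dense blocks. The growth rate of 32, the 64 initial channels, the block sizes (6, 12, 24, 16), and the 0.5 transition compression are the standard DenseNet121 settings from Huang et al. [19], assumed here rather than taken from this study; the function name is illustrative.

```python
def densenet_channels(block_layers=(6, 12, 24, 16), growth_rate=32,
                      init_channels=64, compression=0.5):
    """Return the channel count at the output of each dense block.

    Defaults are the usual DenseNet121 settings (an assumption, see lead-in).
    """
    channels = init_channels
    outputs = []
    for i, num_layers in enumerate(block_layers):
        # Every layer receives all earlier feature maps of its block and
        # appends `growth_rate` new channels to the running concatenation.
        channels += num_layers * growth_rate
        outputs.append(channels)
        # A transition layer between blocks compresses the channel count.
        if i < len(block_layers) - 1:
            channels = int(channels * compression)
    return outputs


print(densenet_channels())  # [256, 512, 1024, 1024]
```

Because each layer adds only 32 channels while reusing everything computed before it, the network reaches a 1024-channel representation with comparatively few weights.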

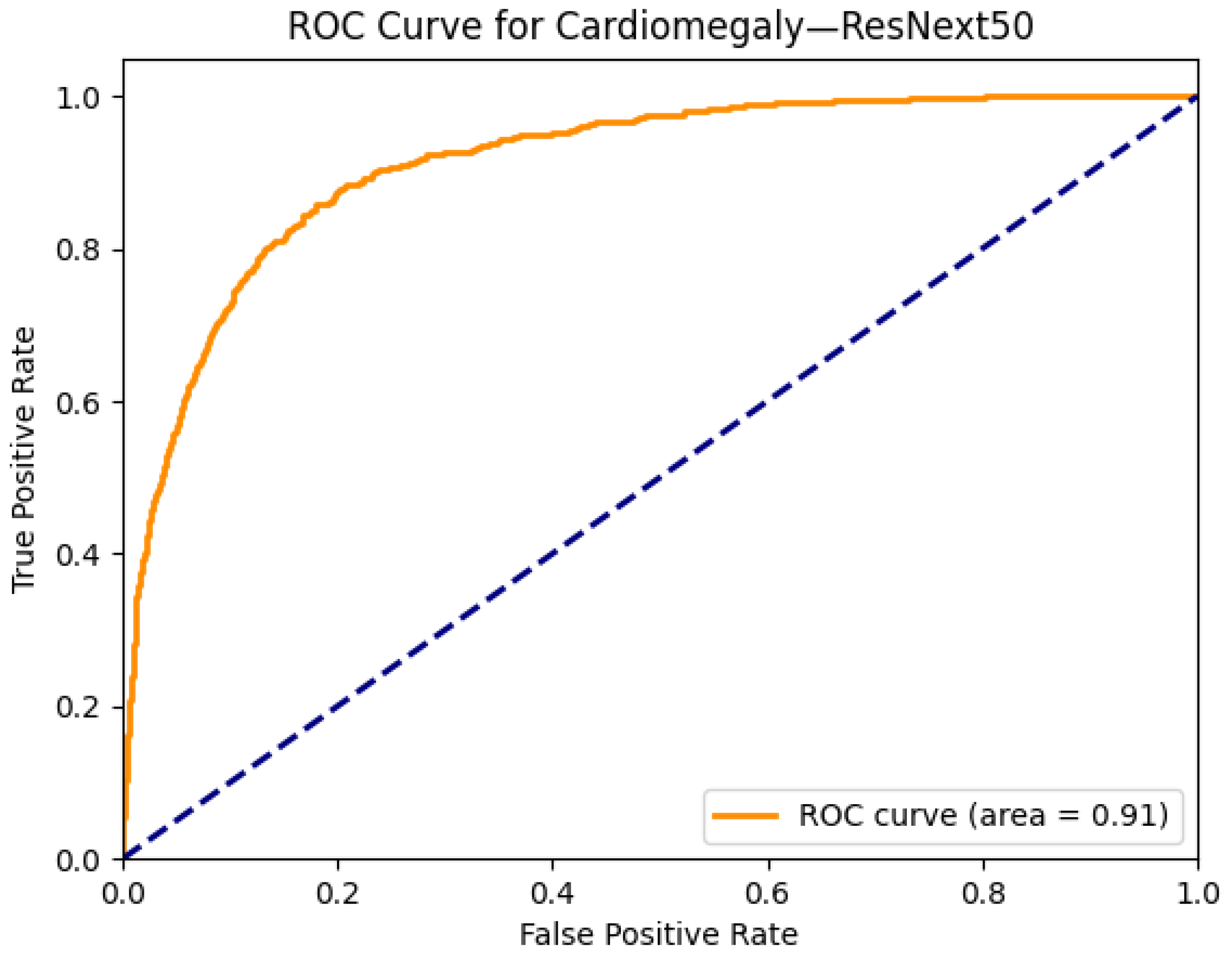

- ResNext50 (25 M)—ResNext employs grouped convolutions to increase cardinality—the number of independent paths through the network—thereby boosting representational power without a proportional increase in computational cost. This architecture excels in multi-label tasks by allowing the model to capture diverse disease features in parallel. ResNext50’s modular design facilitates flexible scaling and has demonstrated strong performance in thoracic abnormality classification benchmarks [22].
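The effect of grouped convolutions on model size can be checked with simple arithmetic. The sketch below counts the weights of a 3 × 3 convolution with and without grouping; the 128 channels and cardinality of 32 mirror the first-stage bottleneck of the 32 × 4d configuration in Xie et al. [22] and are assumptions, since the exact variant used in this study is not stated. The helper name is hypothetical.

```python
def conv_params(c_in, c_out, kernel, groups=1):
    """Weight count of a 2D convolution (bias terms ignored)."""
    if c_in % groups or c_out % groups:
        raise ValueError("channel counts must be divisible by groups")
    # Each output channel connects only to c_in / groups input channels.
    return kernel * kernel * (c_in // groups) * c_out


dense = conv_params(128, 128, 3)                # ordinary 3x3 convolution
grouped = conv_params(128, 128, 3, groups=32)   # 32 parallel paths, 4 channels each
print(dense, grouped, dense // grouped)         # 147456 4608 32
```

The grouped layer needs 32 times fewer weights for the same channel width, which is the budget ResNeXt spends on additional parallel paths.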

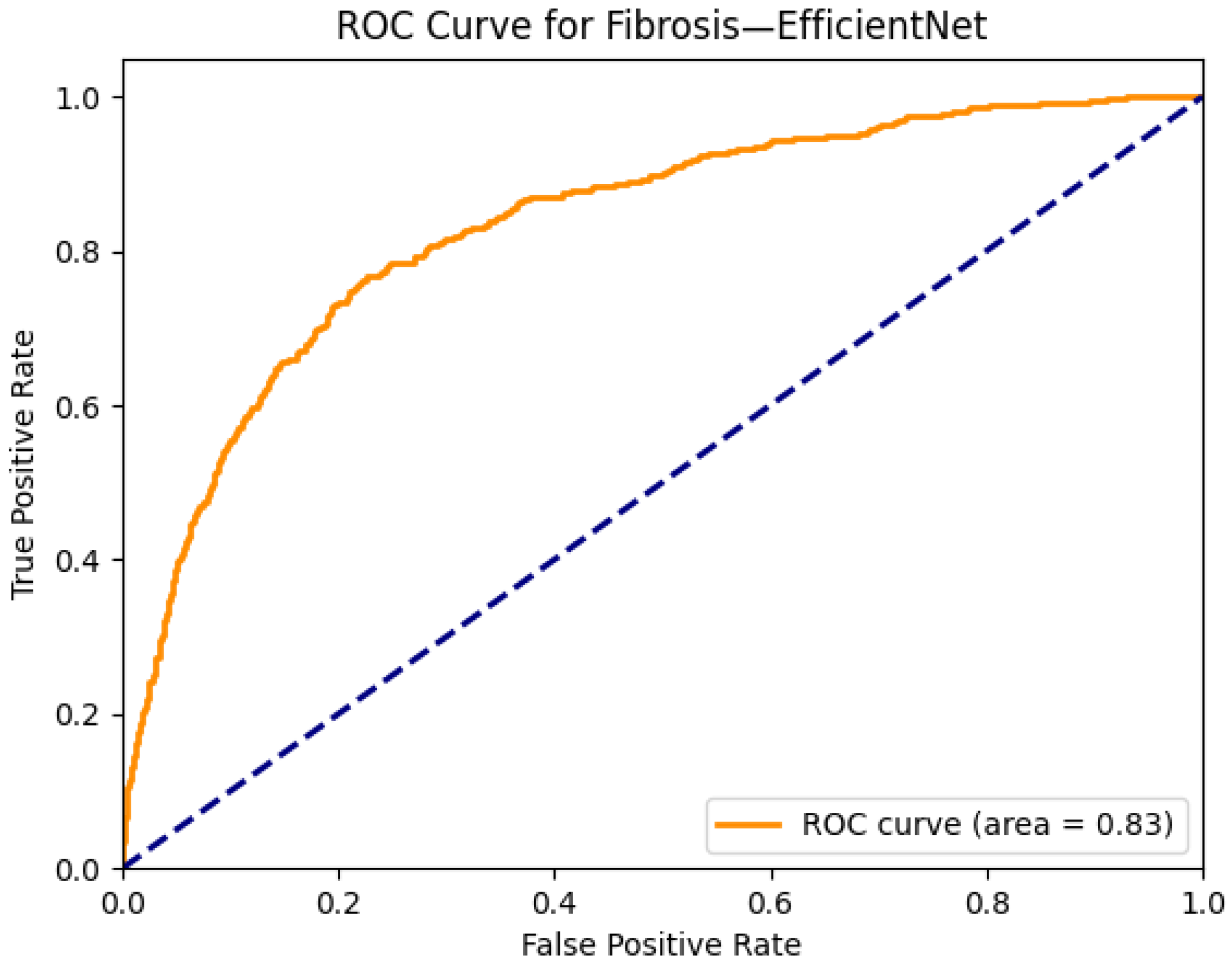

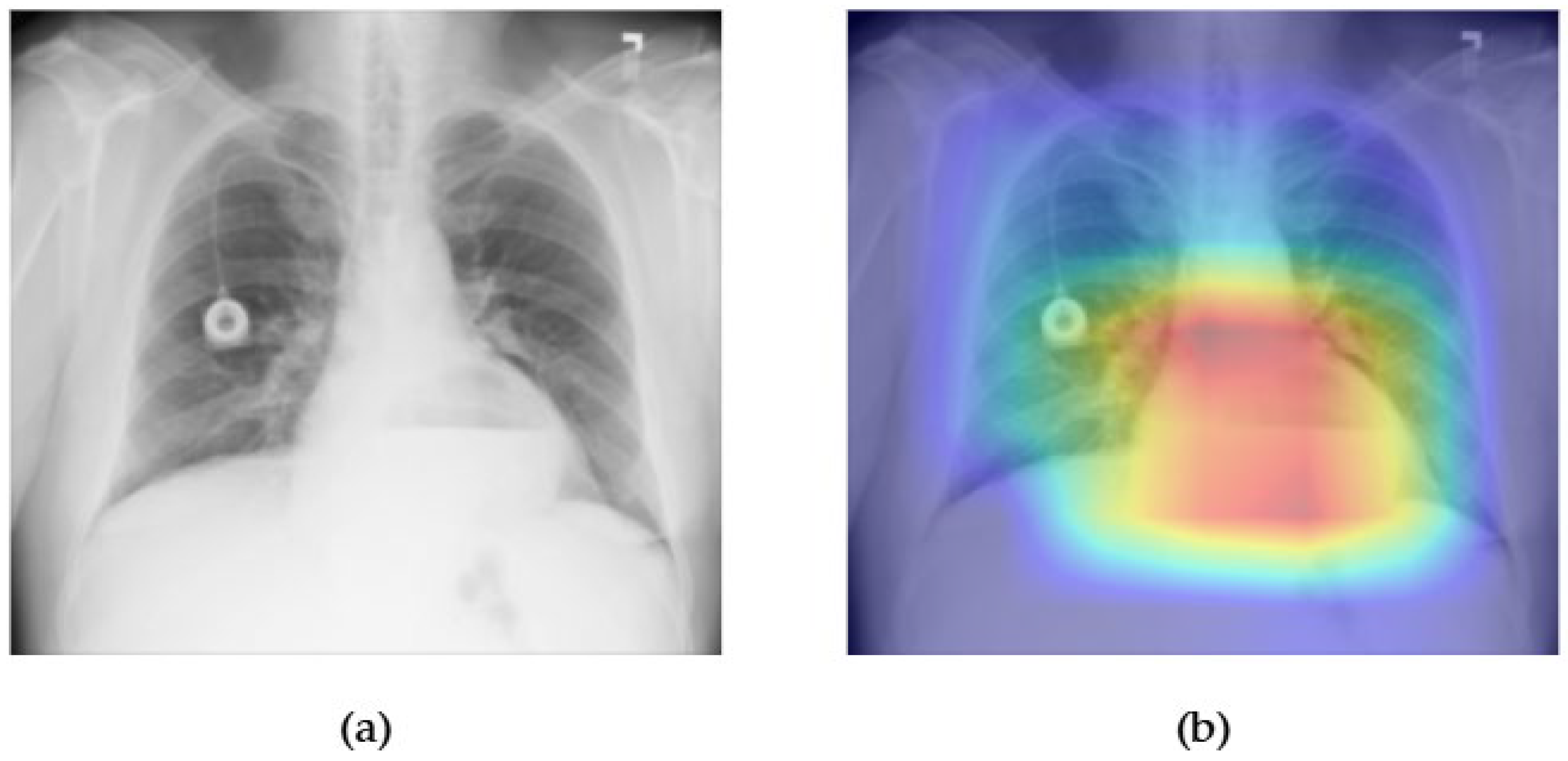

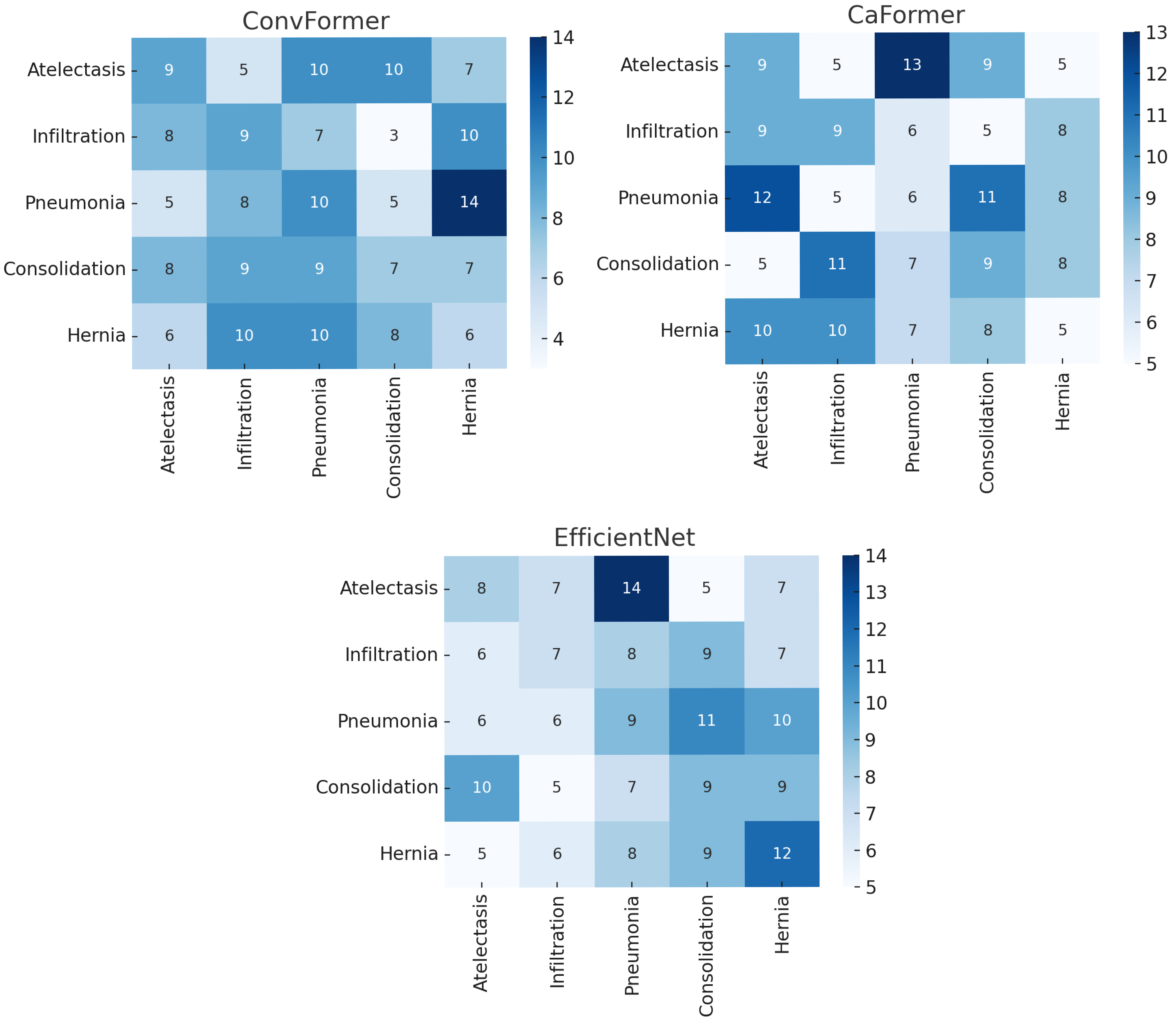

- EfficientNet-B0 (5.3 M)—EfficientNet introduces a compound scaling method that uniformly scales the network depth, width, and input resolution based on a fixed set of scaling coefficients. This approach yields models that achieve high accuracy with fewer parameters and less computational overhead. EfficientNet-B0’s efficiency and scalability are particularly advantageous in medical imaging contexts, where high-resolution images and resource constraints pose challenges [23].

- MobileNetV4 (6 M): MobileNet architectures are tailored for lightweight deployment, using depthwise separable convolutions to drastically reduce model size and computational cost. MobileNetV4 builds on this with the Universal Inverted Bottleneck (UIB) block and, in its hybrid variants, a mobile-optimized multi-query attention block, maintaining competitive accuracy with low latency. This makes MobileNetV4 suitable for clinical environments requiring fast inference on limited hardware, such as bedside diagnostics or portable X-ray devices [24].
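The two efficiency ideas in the bullets above, compound scaling and depthwise separable convolutions, reduce to short formulas. The sketch below is illustrative only: the coefficients α = 1.2, β = 1.1, γ = 1.15 are the values reported by Tan and Le [23] for the EfficientNet family, the 64 → 128 channel layer is an arbitrary example, and all function names are hypothetical.

```python
def compound_scale(phi, alpha=1.2, beta=1.1, gamma=1.15):
    """Depth, width, and resolution multipliers for compound coefficient phi."""
    return alpha ** phi, beta ** phi, gamma ** phi


def flops_multiplier(phi, alpha=1.2, beta=1.1, gamma=1.15):
    # FLOPs grow linearly with depth and quadratically with width and resolution.
    return (alpha * beta ** 2 * gamma ** 2) ** phi


def standard_conv_params(c_in, c_out, kernel):
    return kernel * kernel * c_in * c_out


def depthwise_separable_params(c_in, c_out, kernel):
    # One k x k filter per input channel, followed by a 1x1 pointwise convolution.
    return kernel * kernel * c_in + c_in * c_out


print(round(flops_multiplier(1), 2))            # 1.92
print(standard_conv_params(64, 128, 3))         # 73728
print(depthwise_separable_params(64, 128, 3))   # 8768
```

Each unit increase of the compound coefficient roughly doubles the compute, while replacing a standard 3 × 3 convolution with its depthwise separable counterpart cuts the weights of this example layer by about a factor of eight.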

3.2.2. Transformer-Based Models

- DaViT-Tiny (28 M)—The Dual Attention Vision Transformer introduces a novel combination of spatial and channel-wise attention mechanisms. This dual-path attention structure improves the model’s ability to capture both global structure and fine-grained details, making it especially effective for multi-label classification where lesions may differ significantly in size and location [25].
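The difference between the two attention paths can be illustrated with a deliberately simplified sketch: the same dot-product attention is applied once over patch tokens and once over the transposed feature matrix, so that channels act as tokens. It omits the learned projections, windowing, and channel grouping of the actual DaViT design [25]; all names and the toy input are assumptions.

```python
import math


def transpose(m):
    return [list(col) for col in zip(*m)]


def matmul(a, b):
    bt = transpose(b)
    return [[sum(x * y for x, y in zip(row, col)) for col in bt] for row in a]


def softmax(row):
    peak = max(row)
    exps = [math.exp(v - peak) for v in row]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(tokens):
    """Plain dot-product self-attention with Q = K = V = tokens."""
    scale = math.sqrt(len(tokens[0]))
    scores = matmul(tokens, transpose(tokens))
    weights = [softmax([s / scale for s in row]) for row in scores]
    return matmul(weights, tokens), weights


def spatial_attention(x):
    # x has shape (N patches, C channels): patches attend to patches.
    return self_attention(x)


def channel_attention(x):
    # Transpose so each channel becomes a token: channels attend to channels.
    out_t, weights = self_attention(transpose(x))
    return transpose(out_t), weights


x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [0.5, 0.2]]   # N = 4, C = 2
_, w_spatial = spatial_attention(x)
_, w_channel = channel_attention(x)
print(len(w_spatial), len(w_spatial[0]))   # 4 4
print(len(w_channel), len(w_channel[0]))   # 2 2
```

The spatial path produces an N × N weight matrix that relates image locations, whereas the channel path produces a C × C matrix in which every entry summarizes the whole image, which is how the channel branch contributes global context.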

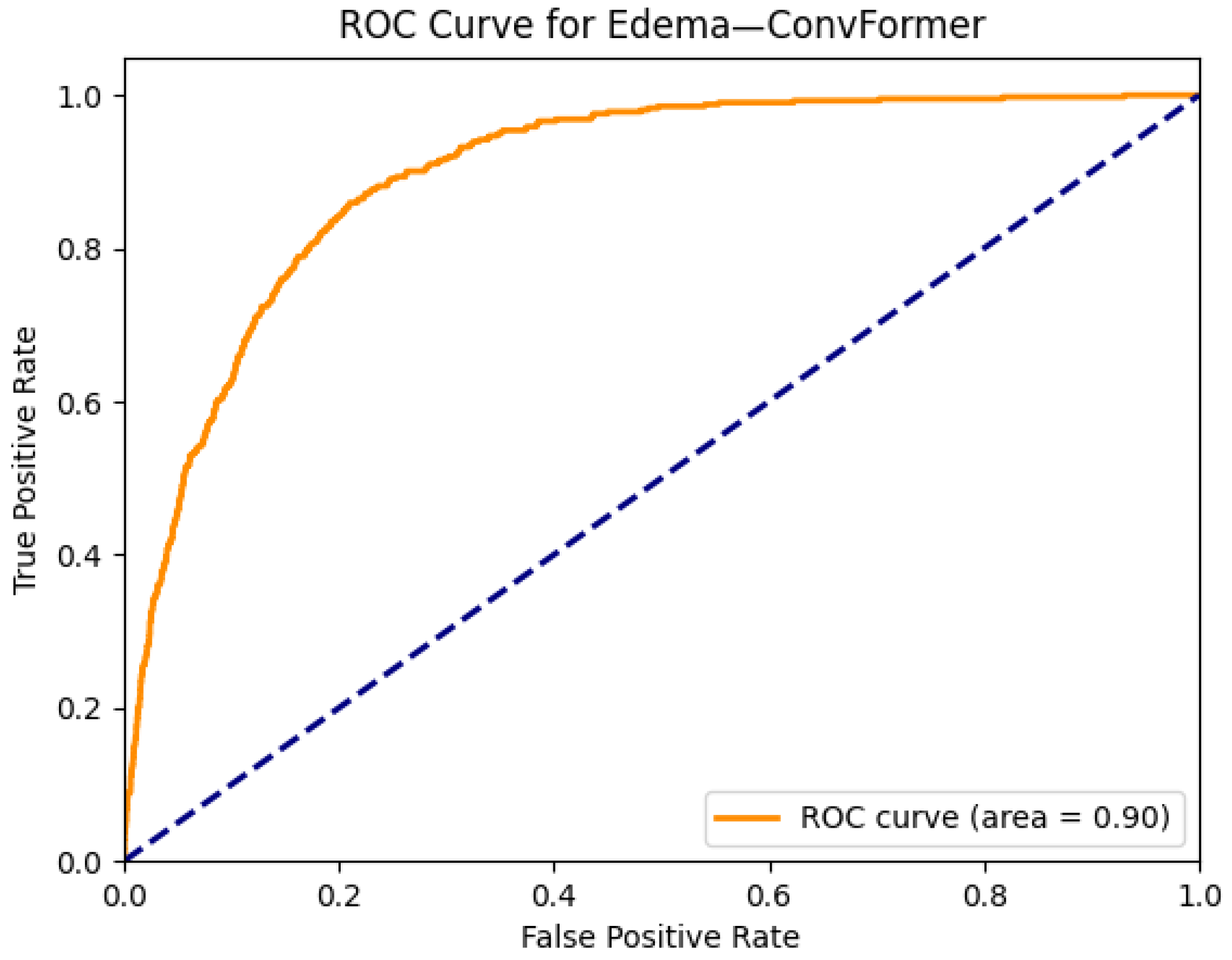

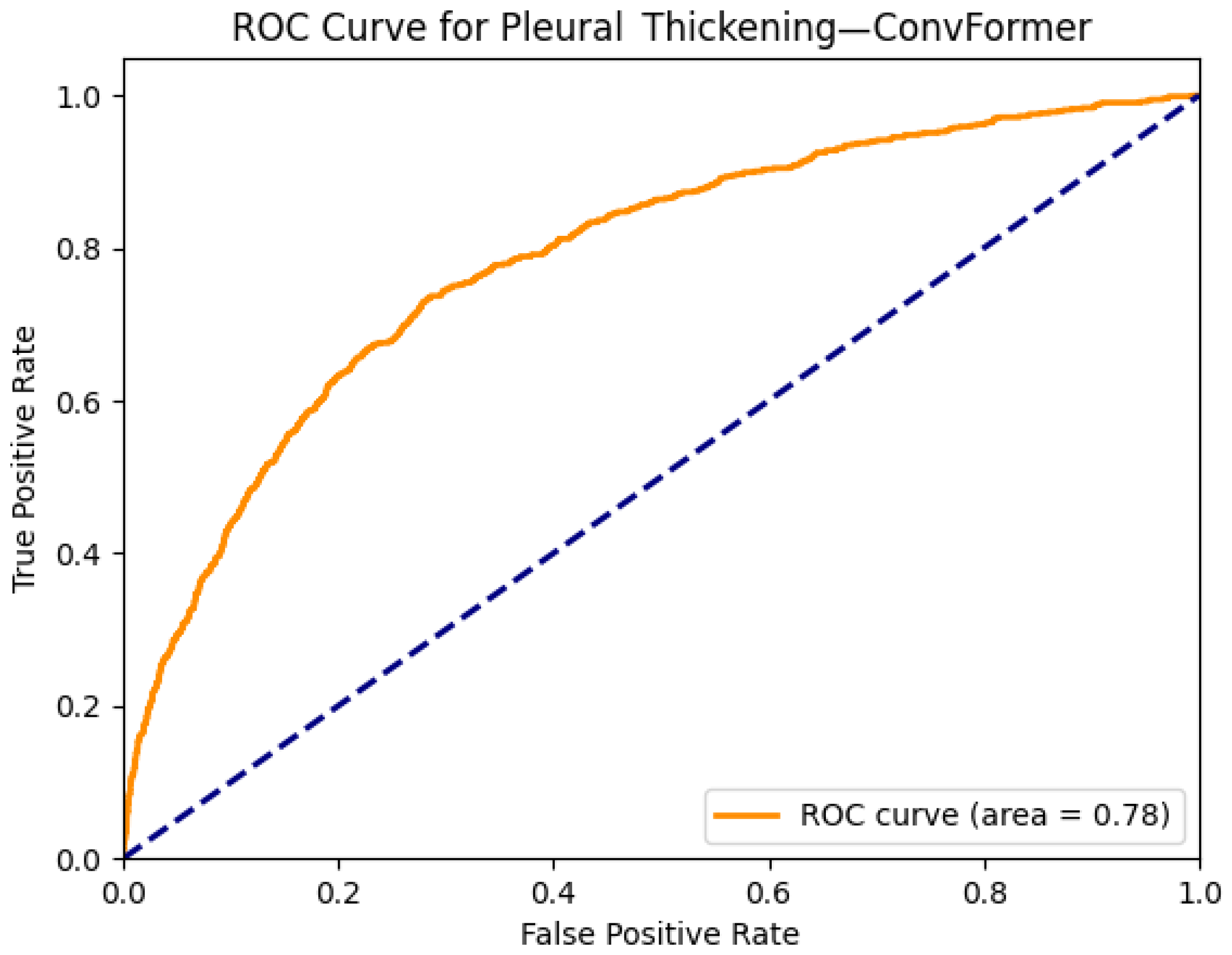

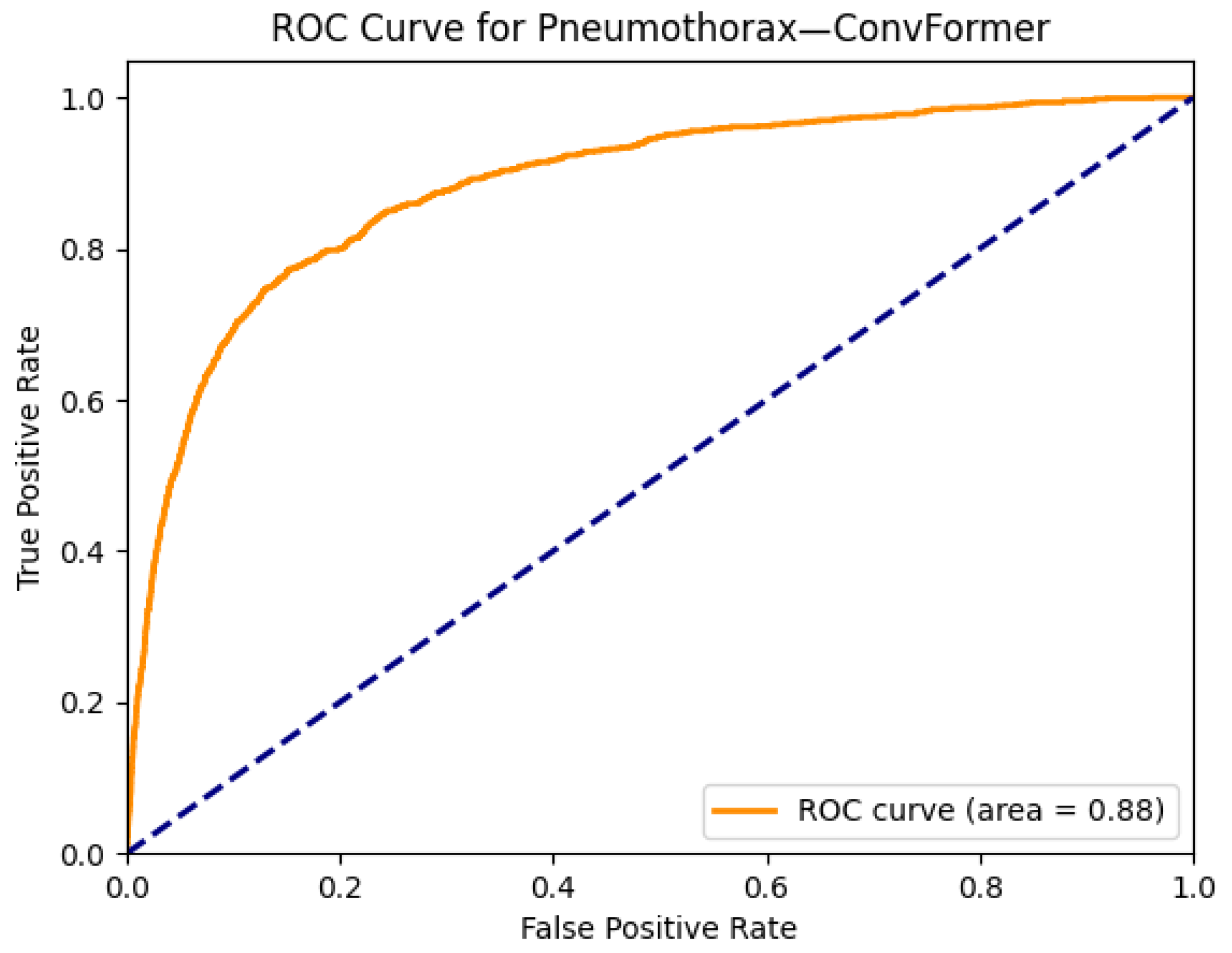

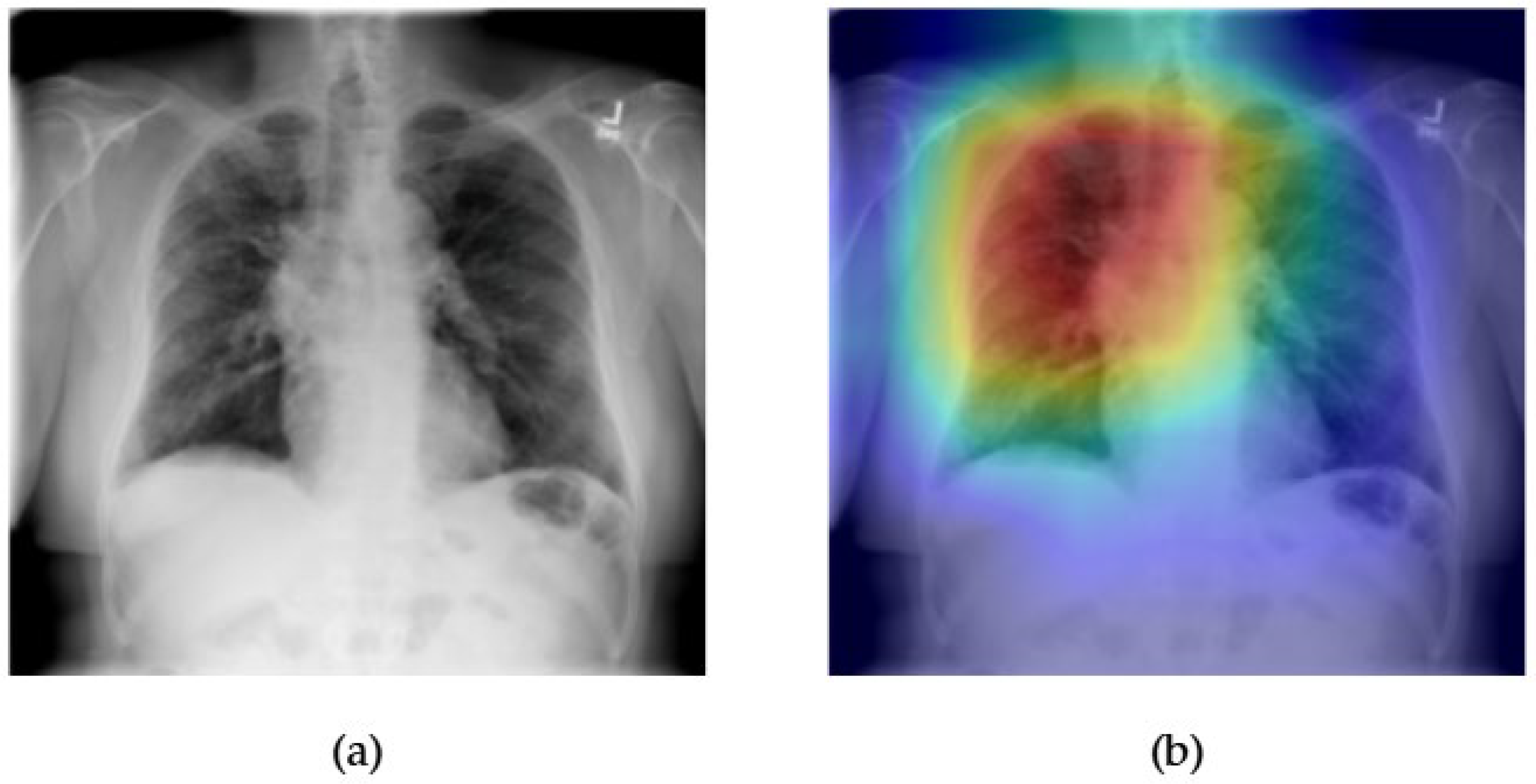

- ConvFormer (28 M)—A hybrid architecture, ConvFormer blends the local feature extraction strengths of CNNs with the long-range modeling capabilities of Transformers. By integrating convolutional layers within Transformer blocks, it maintains strong spatial inductive biases while also capturing contextual dependencies—a beneficial combination for medical images that contain both localized and global pathological features [26].

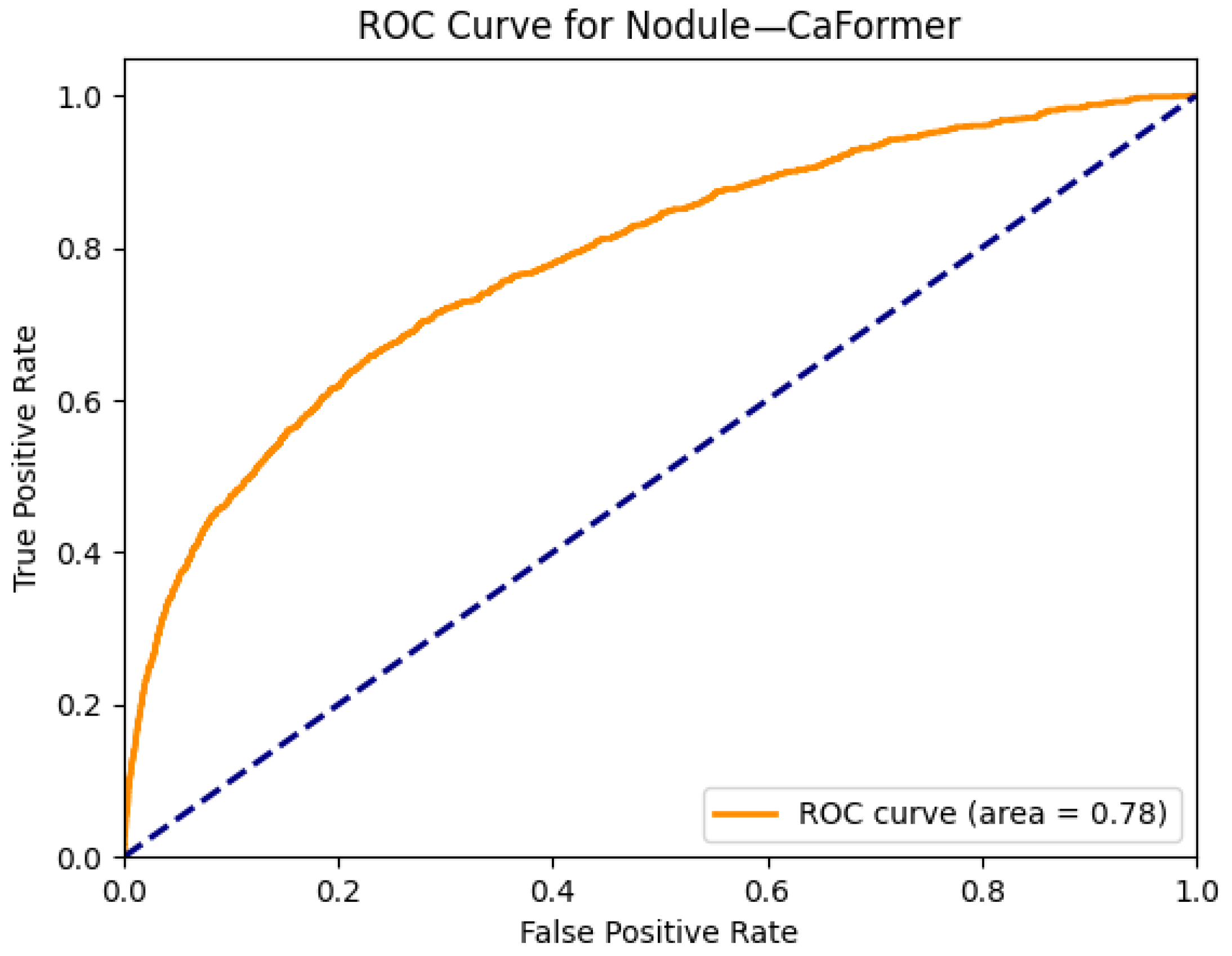

- CaFormer (~29 M): CaFormer is a model introduced within the MetaFormer framework, which abstracts the Transformer architecture so that the token mixer becomes an interchangeable component. CaFormer uses depthwise separable convolutions as token mixers in the initial stages and transitions to vanilla self-attention in the later stages. This hybrid approach allows the model to capture both local and global dependencies effectively [26].

- DeiT (22 M)—The Data-Efficient Image Transformer was designed to make training ViTs feasible on smaller datasets through knowledge distillation. This is especially relevant in the medical domain, where large-scale, high-quality annotated data can be scarce. DeiT maintains competitive performance while being more computationally accessible than traditional ViT [27].
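The knowledge distillation mentioned for DeiT can be sketched as a loss function. The version below follows the hard-label formulation of Touvron et al. [27], written for a single-label example: the class-token head is trained on the ground-truth label and the distillation-token head on the teacher's predicted class. Whether and how distillation was used in this study is not stated, and a multi-label setting would need a sigmoid-based variant; the function names are hypothetical.

```python
import math


def cross_entropy(logits, target):
    """Cross-entropy for one example given raw logits and a class index."""
    peak = max(logits)
    log_sum = peak + math.log(sum(math.exp(v - peak) for v in logits))
    return log_sum - logits[target]


def hard_distillation_loss(cls_logits, dist_logits, label, teacher_logits):
    # The teacher's most confident class acts as a second, "hard" target.
    teacher_label = max(range(len(teacher_logits)),
                        key=teacher_logits.__getitem__)
    return (0.5 * cross_entropy(cls_logits, label)
            + 0.5 * cross_entropy(dist_logits, teacher_label))


# Toy example: three classes, ground truth 0, teacher prefers class 1.
loss = hard_distillation_loss(
    cls_logits=[2.0, 0.5, 0.1],
    dist_logits=[0.3, 1.8, 0.2],
    label=0,
    teacher_logits=[0.1, 3.0, 0.4],
)
print(round(loss, 4))
```

The two heads receive different targets, so the student can learn from the teacher's inductive biases even where the teacher disagrees with the label.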

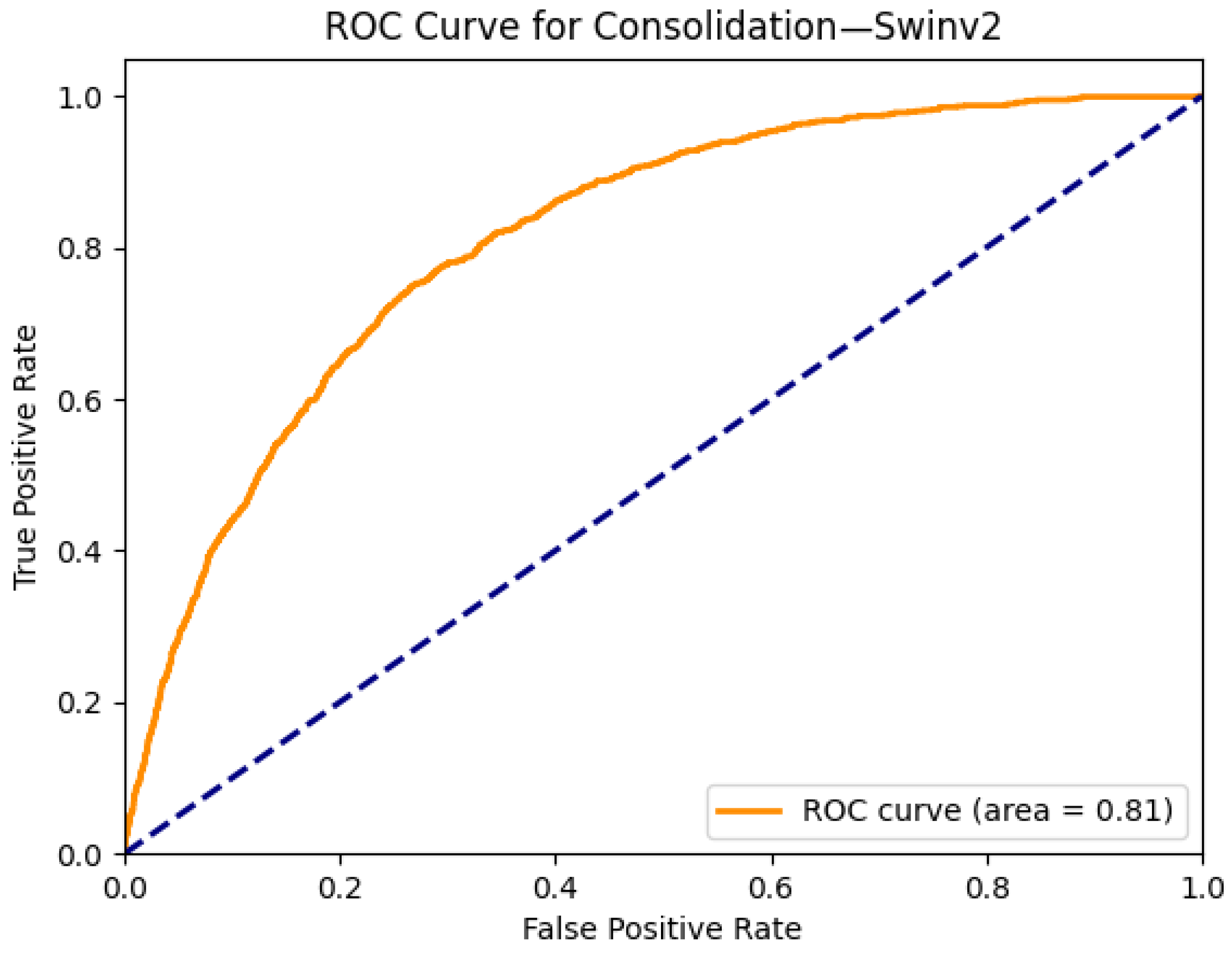

- Swin Transformer v1/v2 (29–60 M)—Swin Transformers adopt a hierarchical architecture with shifted window-based self-attention. This design preserves the Transformer’s global modeling capacity while dramatically improving efficiency and scalability for high-resolution images. The model builds representations at multiple scales, closely mimicking the way radiologists zoom in and out across regions of interest in chest X-rays [28,29].
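The efficiency gain of window-based self-attention can be quantified with the complexity expressions given in the Swin Transformer paper [28]. The sketch below evaluates them for a 56 × 56 token grid with 96 channels and 7 × 7 windows, which corresponds to the first stage of Swin-T at 224 × 224 input; these sizes are assumptions and need not match the configuration used in this study.

```python
def global_msa_flops(h, w, c):
    """Cost of global self-attention on an h x w token grid with c channels."""
    return 4 * h * w * c ** 2 + 2 * (h * w) ** 2 * c


def window_msa_flops(h, w, c, window):
    """Cost of self-attention restricted to window x window token groups."""
    return 4 * h * w * c ** 2 + 2 * window ** 2 * h * w * c


h, w, c, window = 56, 56, 96, 7
full = global_msa_flops(h, w, c)
local = window_msa_flops(h, w, c, window)
print(full, local, round(full / local, 1))
```

The global term grows quadratically with the number of tokens, while the windowed term grows linearly, so the gap widens as the input resolution increases. Shifting the windows between consecutive layers restores information flow across window borders.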

3.2.3. Mamba-Based State Space Models

- VMamba (~22M)—VMamba extends the Mamba language model to the vision domain by introducing a Selective-Scan 2D (SS2D) module. This component enables the model to perform directional scanning in multiple spatial paths, allowing global receptive field modeling with linear complexity. VMamba has demonstrated strong accuracy, throughput, and memory efficiency in standard computer vision benchmarks. However, its classification performance on medical images has shown variability, especially when compared to mature Transformer-based counterparts [18].

- MedMamba (~14M): MedMamba is the first Mamba variant specifically adapted for generalized medical image classification. It employs a hybrid design combining grouped convolutions with SSM-based processing, as well as medical domain-specific normalization techniques to better align with clinical imaging characteristics. Its lightweight design and theoretical scalability make it attractive for real-time or resource-constrained settings. Despite these advantages, evaluations indicate that MedMamba underperforms relative to CNN and Transformer baselines on complex thoracic anomalies, highlighting ongoing challenges in adapting Mamba architectures to the unique demands of medical image analysis [16].
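The directional scanning idea behind SS2D can be sketched in a few lines. The example below flattens a 2D grid along four routes (row-wise, column-wise, and both reversed), runs a linear recurrence along each route, and sums the results back at their original positions. It is a toy illustration under strong assumptions: the recurrence uses fixed scalar coefficients `a` and `b`, whereas Mamba-style models make these parameters input-dependent, and all function names are hypothetical.

```python
def scan_orders(h, w):
    """Four traversal orders over an h x w grid, as flat indices."""
    row_major = [r * w + c for r in range(h) for c in range(w)]
    col_major = [r * w + c for c in range(w) for r in range(h)]
    return [row_major, col_major, row_major[::-1], col_major[::-1]]


def linear_scan(seq, a, b):
    # state_t = a * state_{t-1} + b * x_t, computed in one pass (linear time).
    out, state = [], 0.0
    for x in seq:
        state = a * state + b * x
        out.append(state)
    return out


def ss2d_sketch(grid, a=0.5, b=1.0):
    h, w = len(grid), len(grid[0])
    flat = [v for row in grid for v in row]
    merged = [0.0] * (h * w)
    for order in scan_orders(h, w):
        ys = linear_scan([flat[i] for i in order], a, b)
        # Scatter each scanned output back to its original grid position.
        for i, y in zip(order, ys):
            merged[i] += y
    return [merged[r * w:(r + 1) * w] for r in range(h)]


# A single non-zero pixel influences every output position.
print(ss2d_sketch([[1.0, 0.0], [0.0, 0.0]]))
```

Each scan costs one pass over the tokens, so the total cost stays linear in the number of patches, while combining four directions lets every position receive information from every other position.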

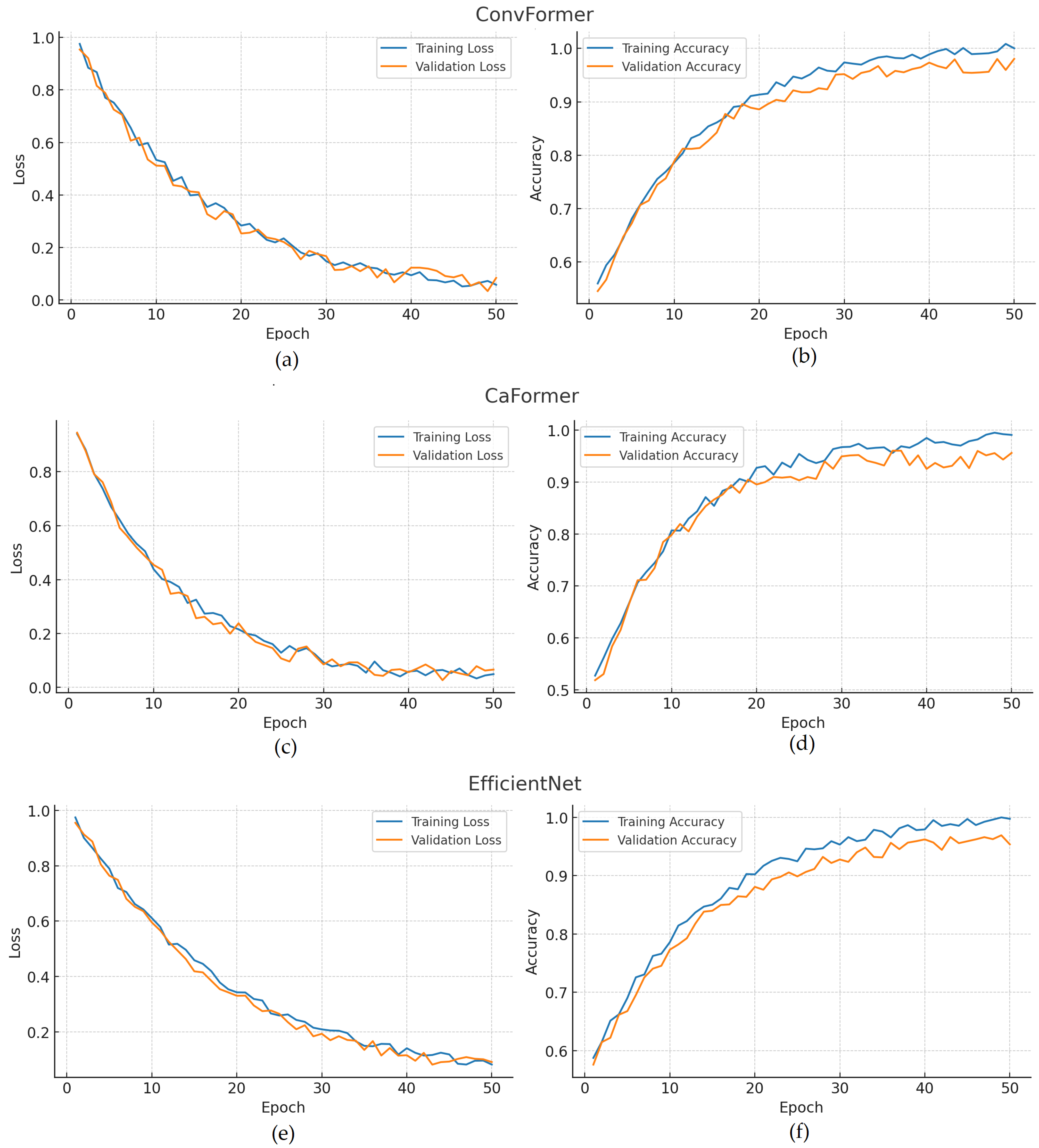

4. Results

5. Discussion

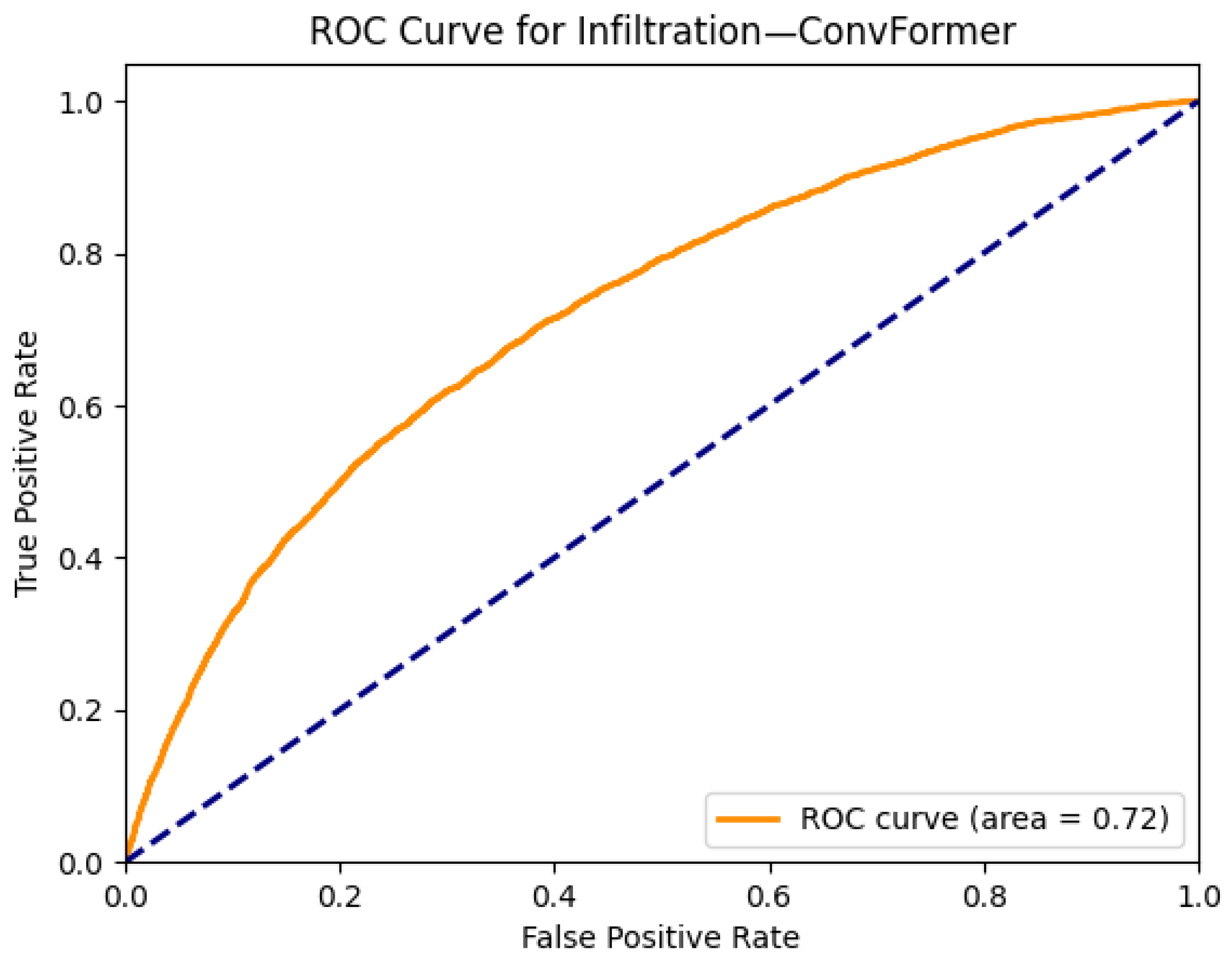

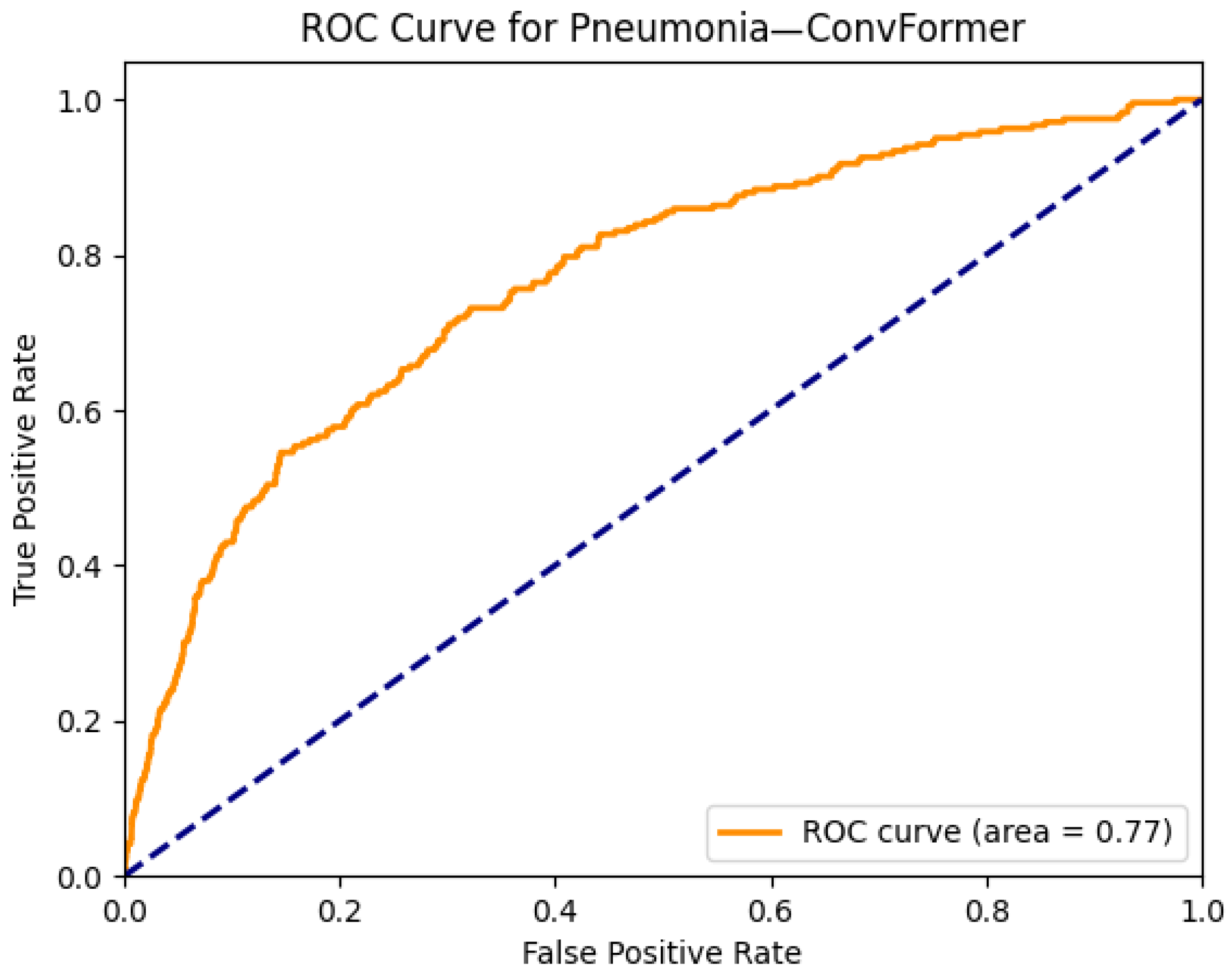

- While most models perform well on distinct pathologies, the performance for infiltration (0.72) and pneumonia (0.77) remains modest. These categories are notoriously difficult due to their ambiguous radiological definitions and overlap with multiple other disease classes. This suggests a potential limitation of current visual backbones in handling diffuse, context-dependent findings. Future work could explore integrating clinical metadata or temporal sequences to improve context-aware learning.

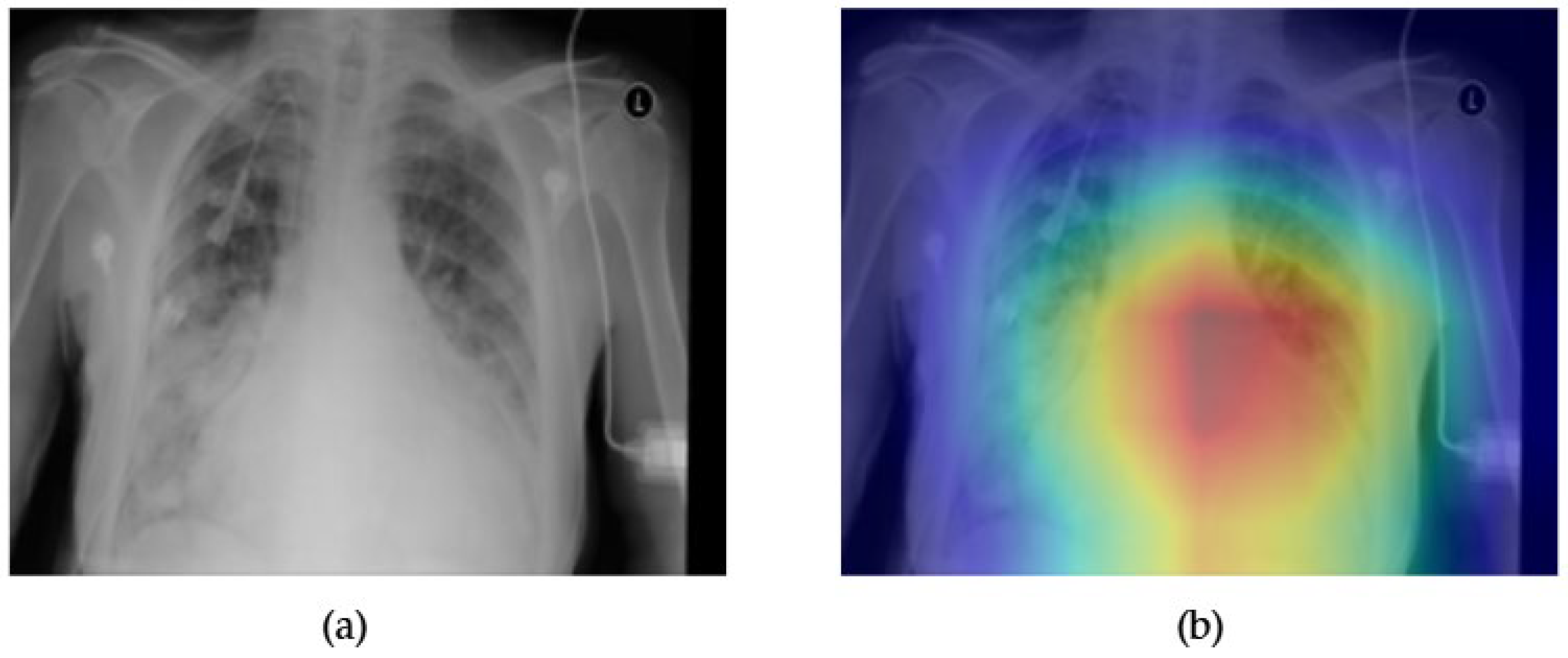

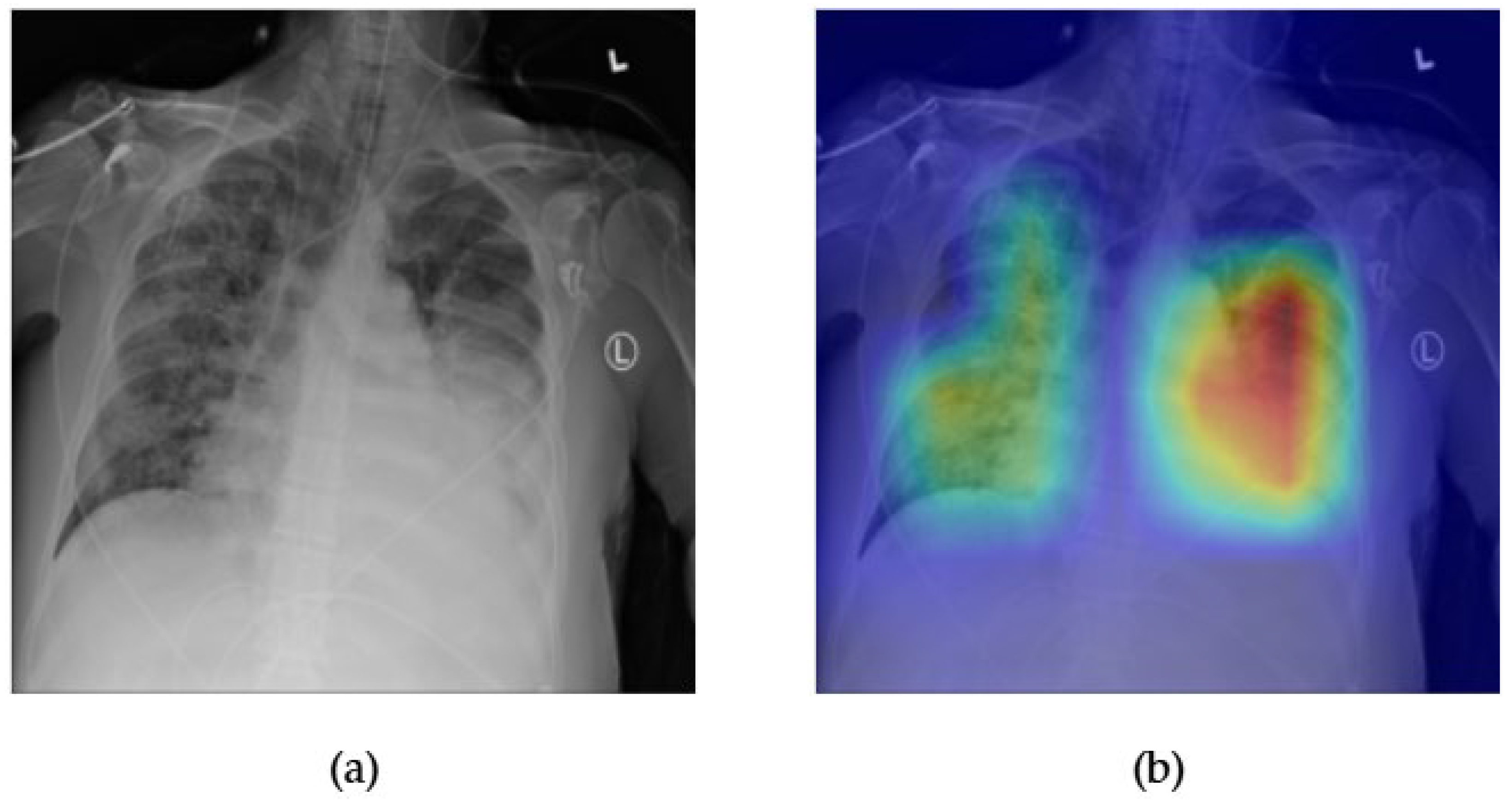

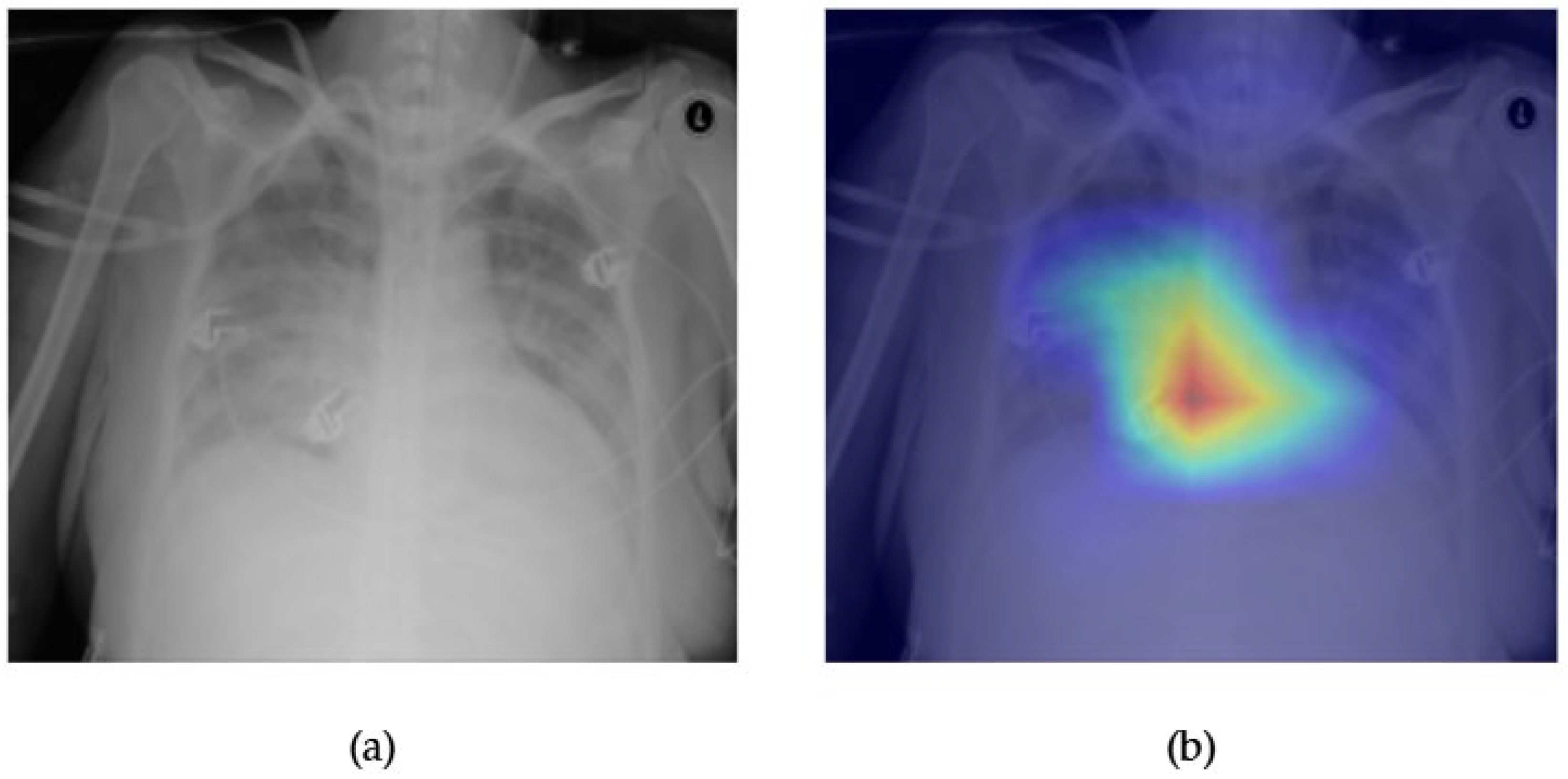

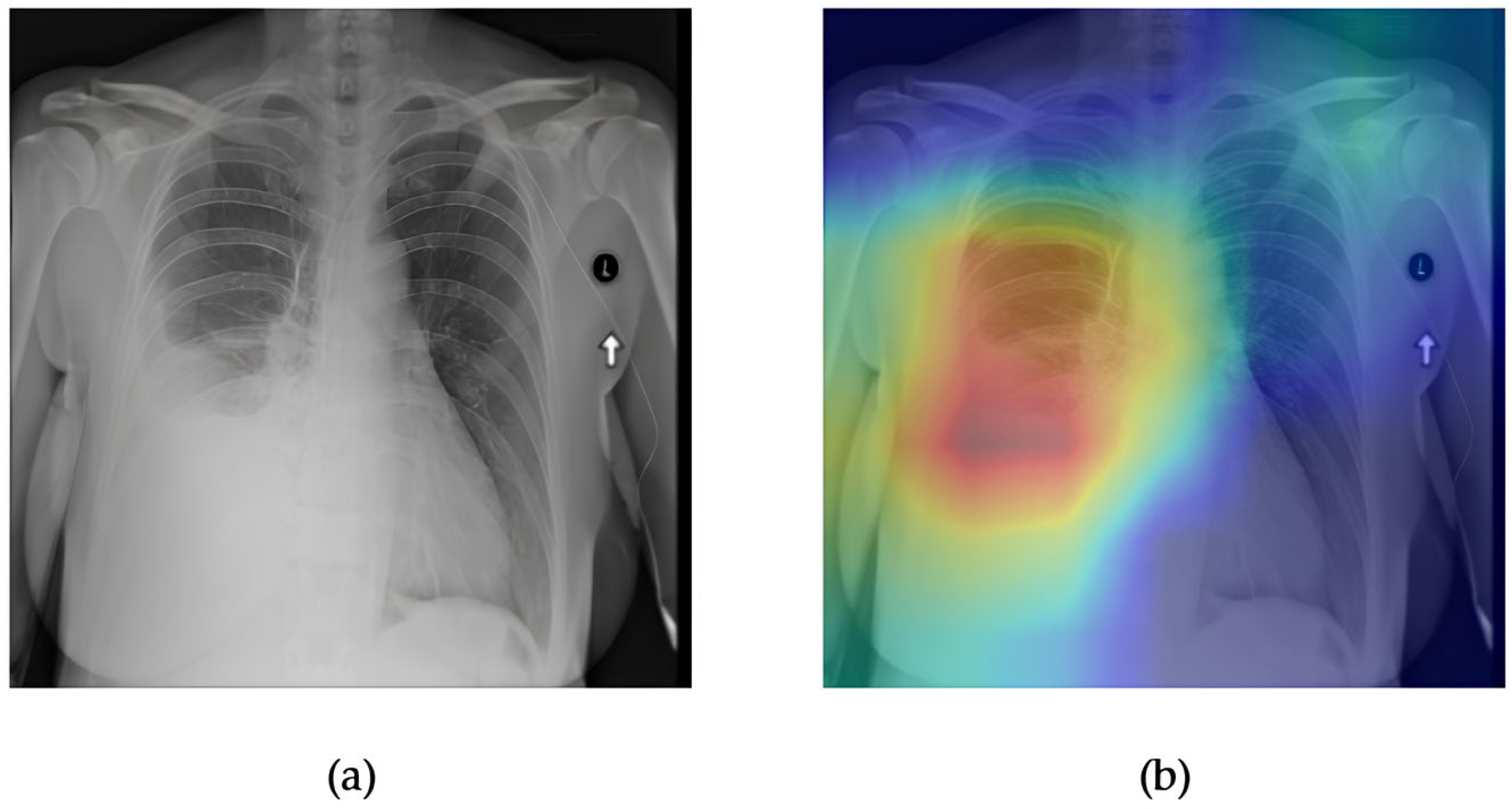

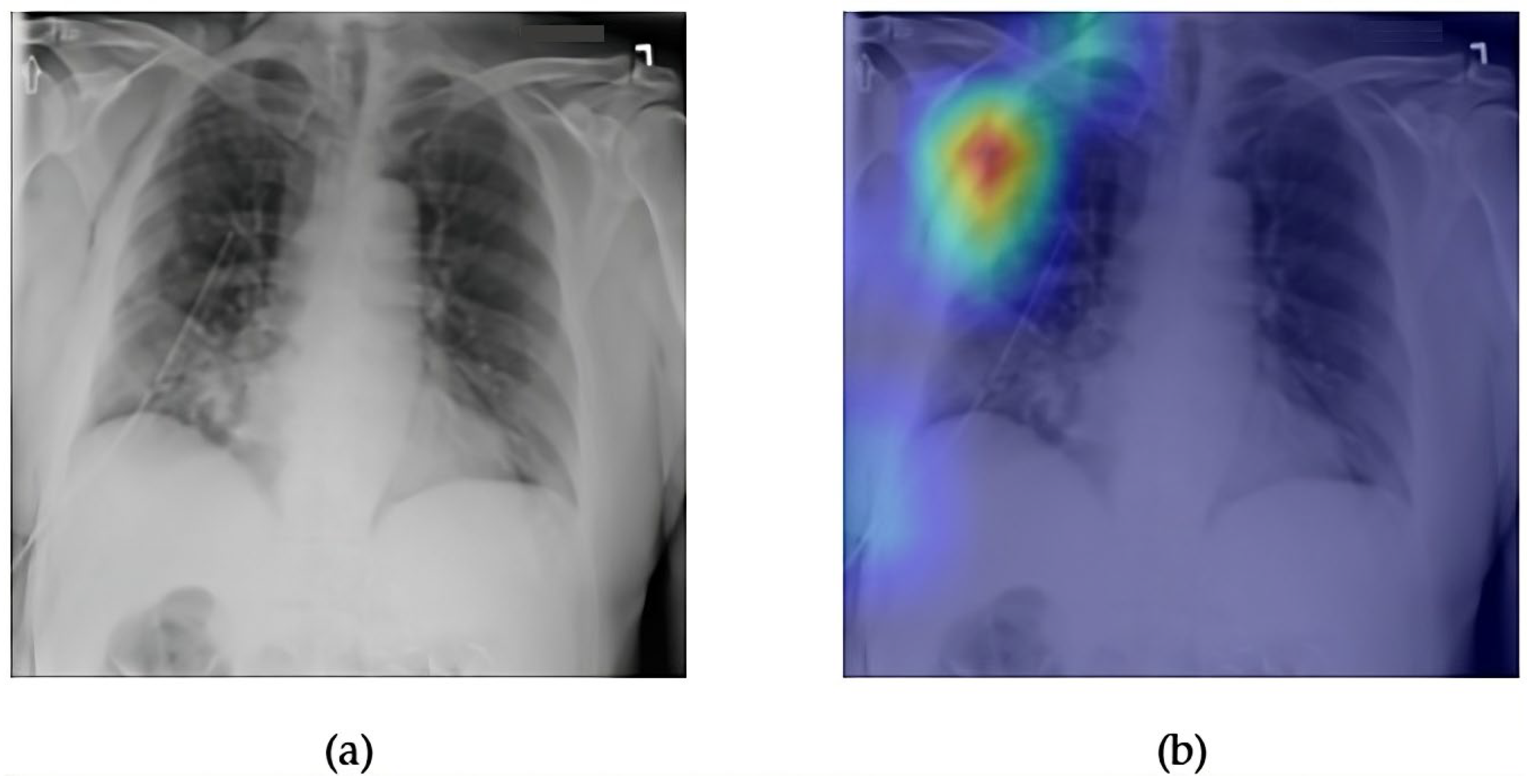

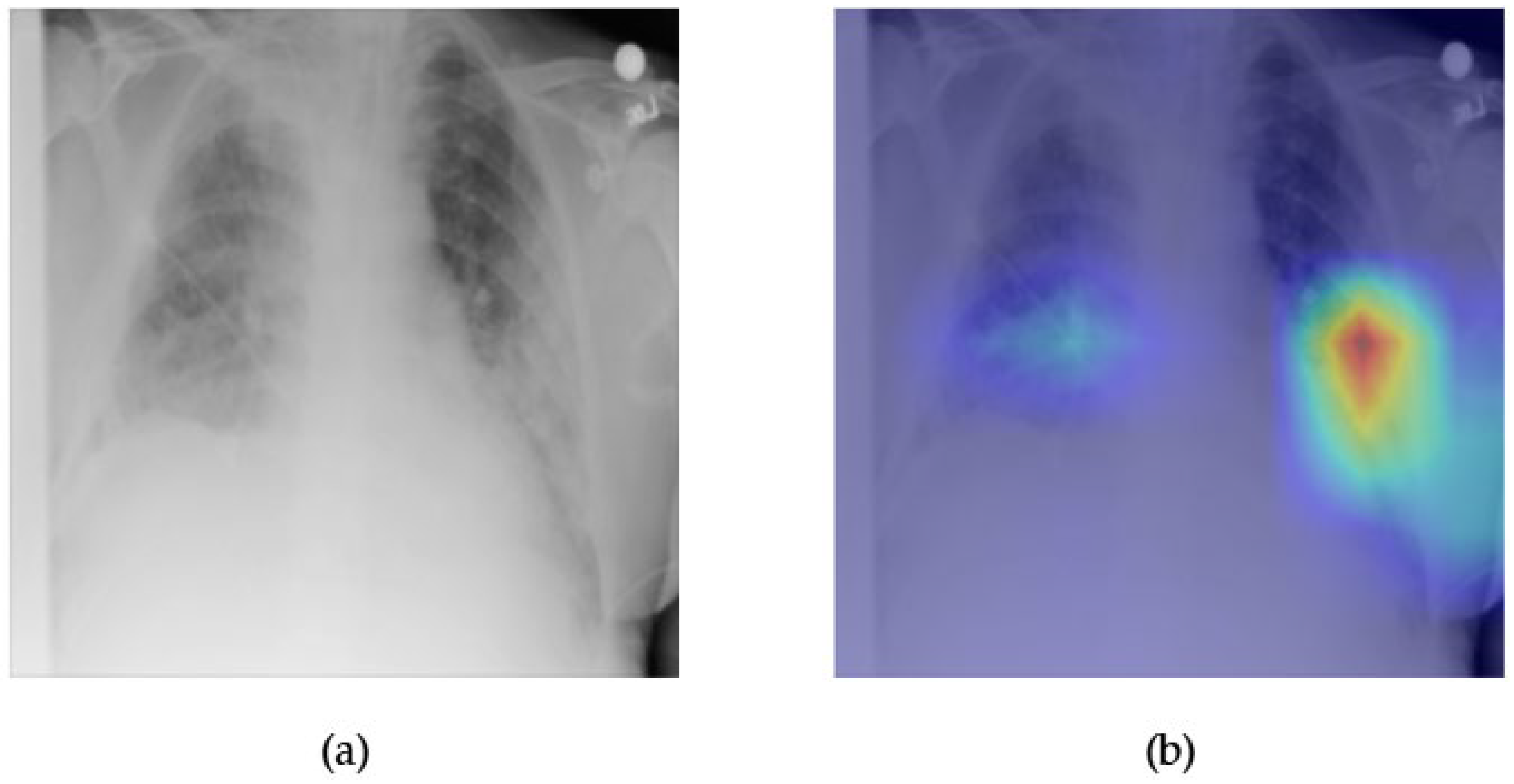

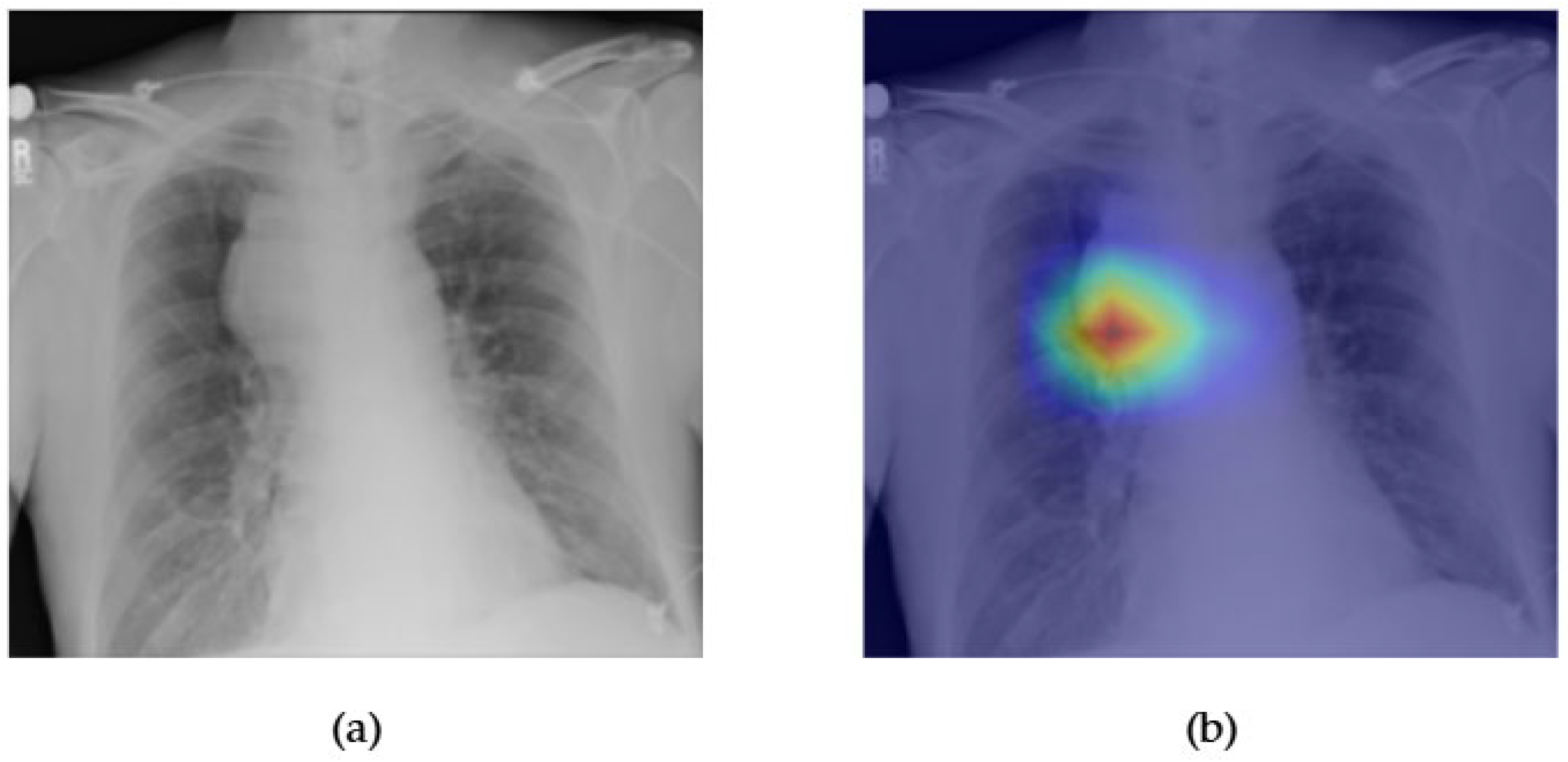

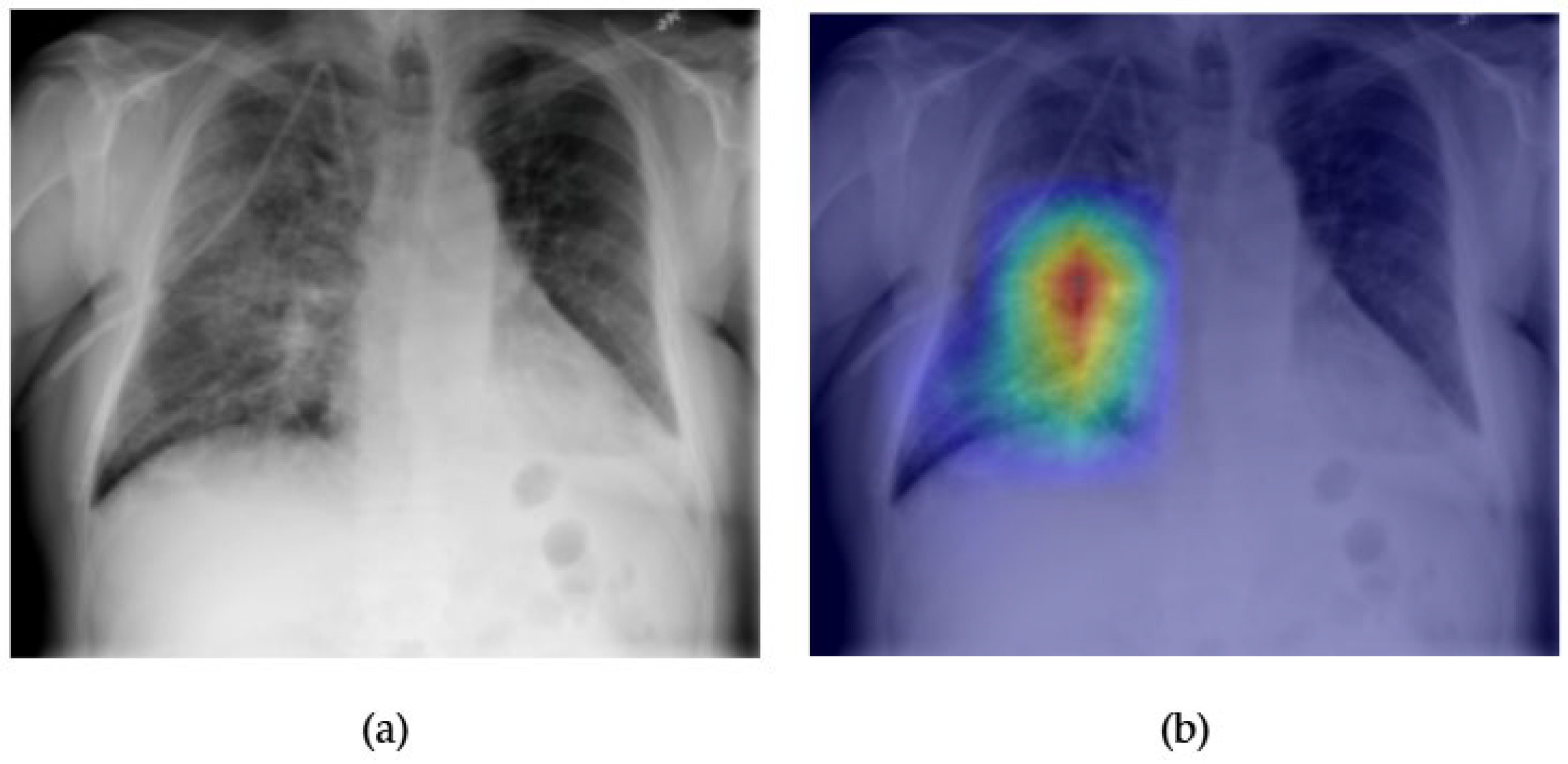

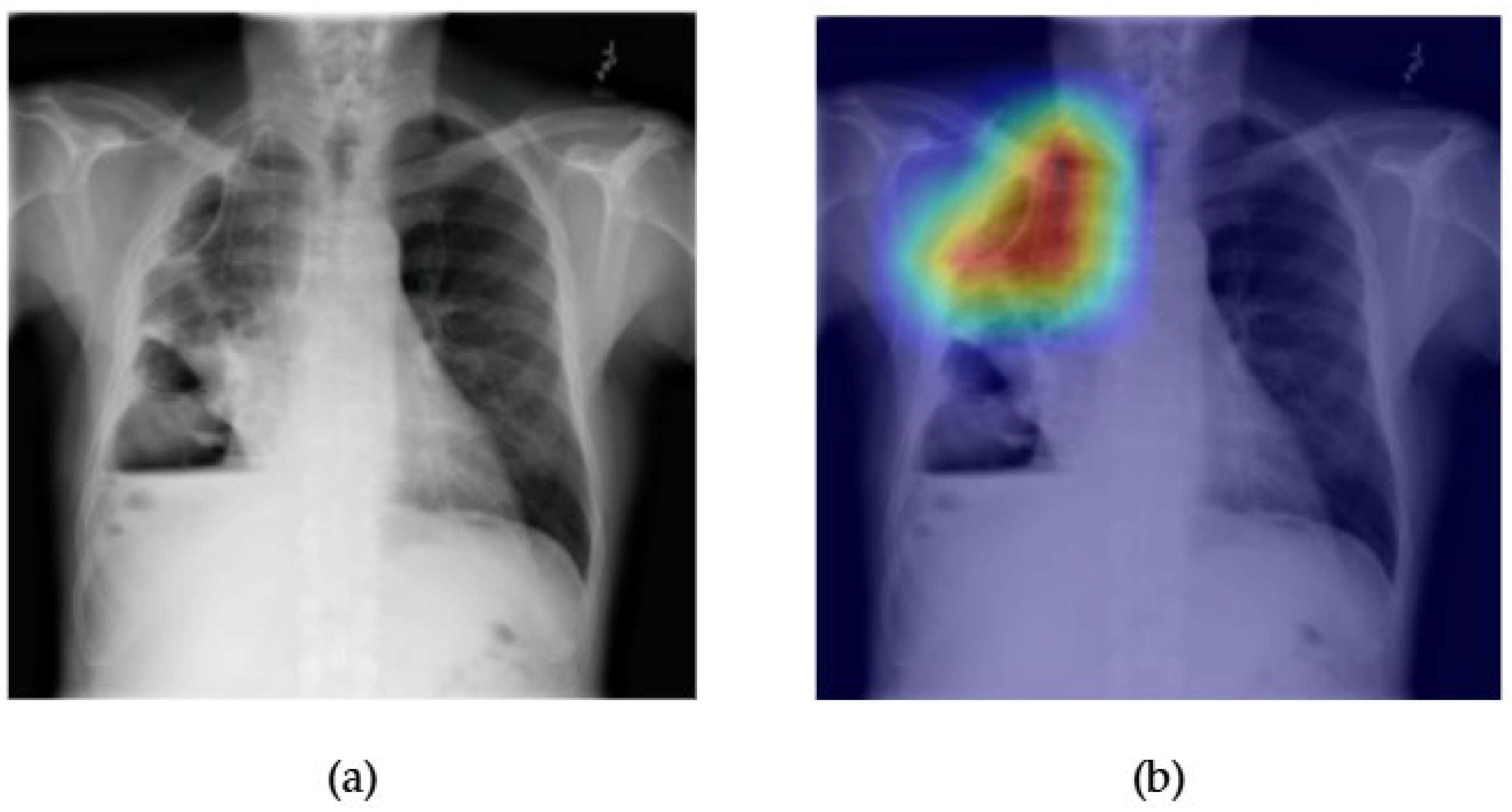

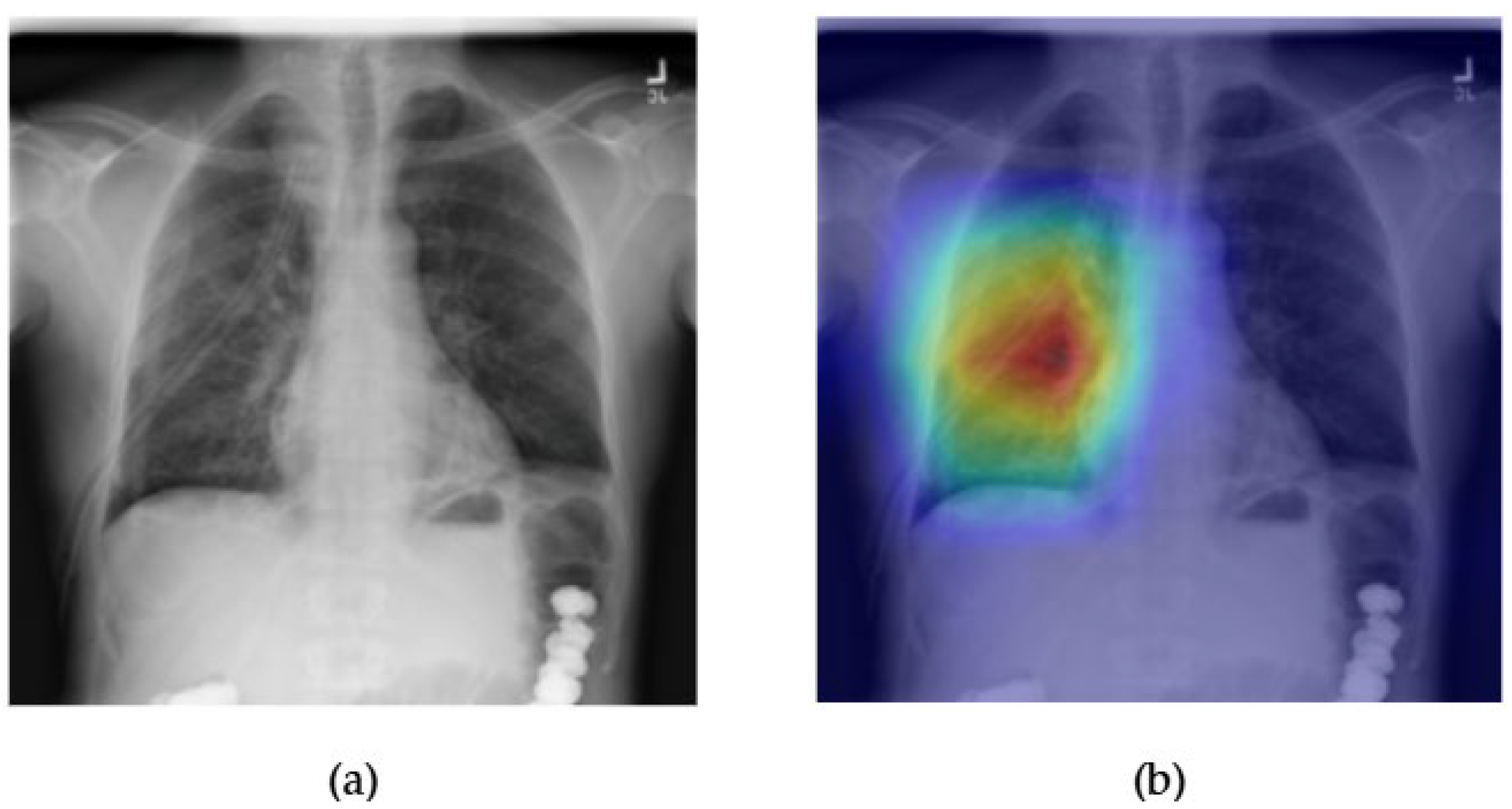

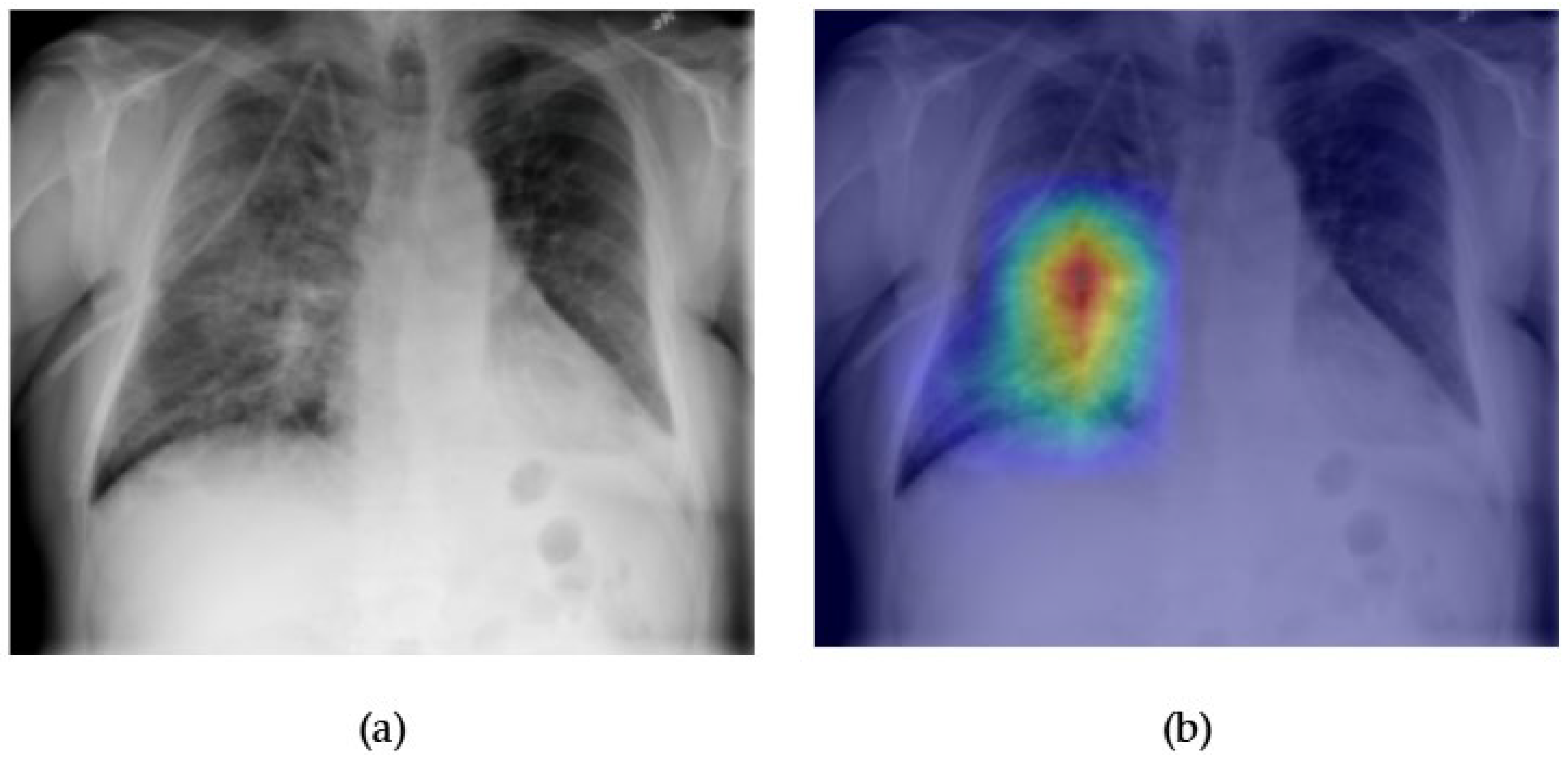

- Although our Grad-CAM-based explainability provides useful localization cues, it is inherently limited by its post hoc nature and reliance on gradient flow from the final convolutional layers. Future research could incorporate advanced interpretability techniques such as Layer-wise Relevance Propagation (LRP), Integrated Gradients, or attention rollouts in Transformers, which may offer a more complete understanding of model reasoning.
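For reference, the Grad-CAM computation discussed above can be written compactly: each feature map of the chosen layer is weighted by the spatial average of the class-score gradient with respect to that map, the weighted maps are summed, and a ReLU keeps only positive evidence. The sketch below uses small nested lists in place of real activations and gradients, which would normally come from a framework's backward pass; the function name and the toy values are assumptions.

```python
def grad_cam(activations, gradients):
    """Grad-CAM heat map from K feature maps and their gradients.

    activations, gradients: lists of K maps, each an H x W nested list.
    """
    heat = None
    for fmap, grad in zip(activations, gradients):
        cells = [g for row in grad for g in row]
        weight = sum(cells) / len(cells)   # global-average-pooled gradient
        scaled = [[weight * a for a in row] for row in fmap]
        if heat is None:
            heat = scaled
        else:
            heat = [[x + y for x, y in zip(r1, r2)]
                    for r1, r2 in zip(heat, scaled)]
    # ReLU: keep only regions that increase the class score.
    return [[max(0.0, v) for v in row] for row in heat]


activations = [[[1, 2], [3, 4]], [[1, 1], [1, 1]]]
gradients = [[[1, 1], [1, 1]], [[-2, -2], [-2, -2]]]
print(grad_cam(activations, gradients))   # [[0.0, 0.0], [1.0, 2.0]]
```

Because the map is built from a single layer's activations and one gradient pass, its resolution and faithfulness are bounded by that layer, which is the limitation noted above.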

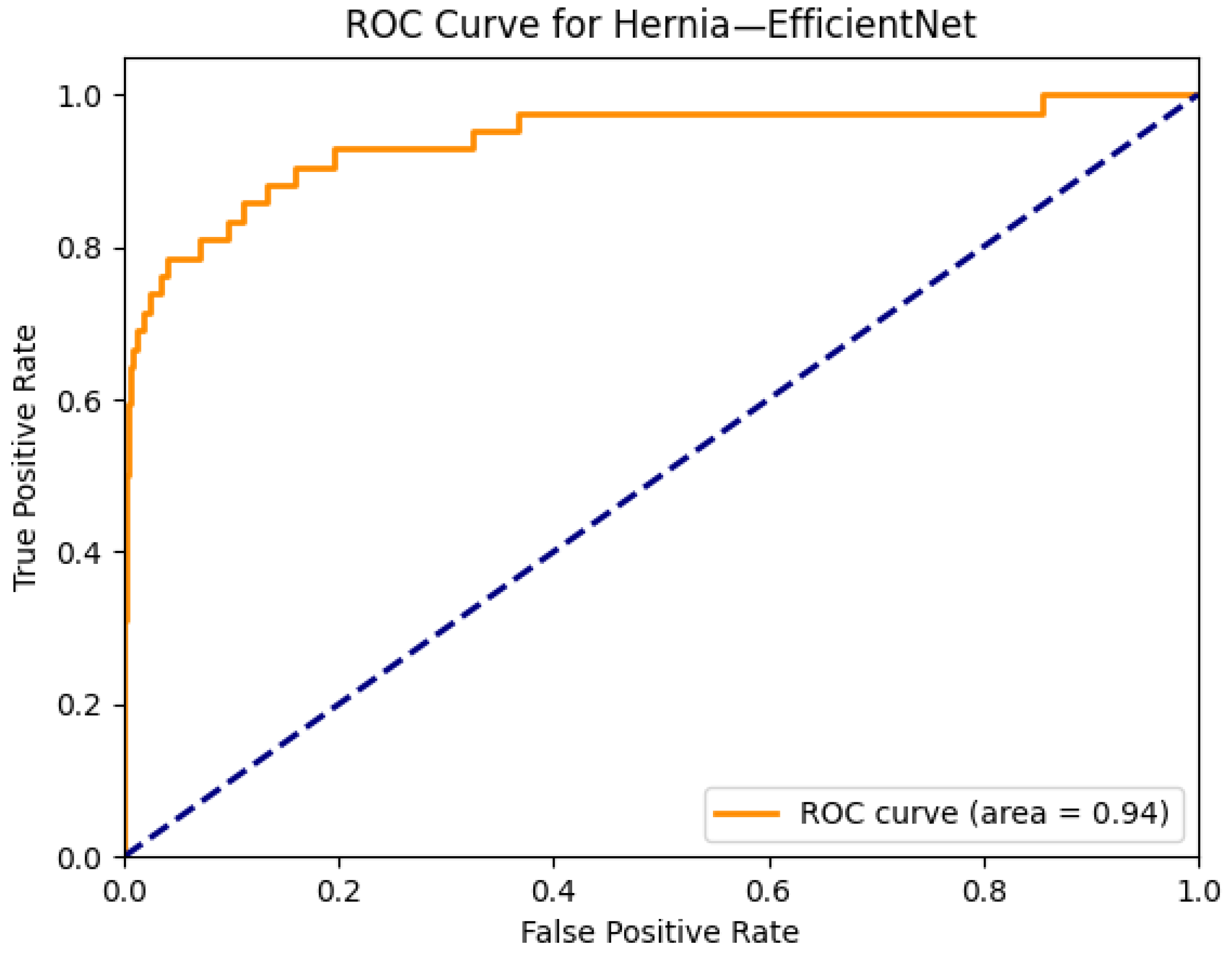

- A critical limitation of the NIH ChestX-ray14 dataset is its reliance on natural language processing (NLP)-based labeling. Although these weak labels achieve approximately 90% accuracy, noise is unevenly distributed across disease categories. Rare pathologies such as hernia (0.20% prevalence) and fibrosis (1.5%) are especially vulnerable, as even small mislabeling rates can lead to substantial bias in both training and evaluation. For instance, mislabeled negative cases may artificially inflate AUROC scores for rare classes, while false positives can obscure true model sensitivity. Furthermore, weak supervision limits model reliability in conditions requiring subtle radiographic interpretation. Addressing this issue will require curated datasets with radiologist consensus annotations, as well as external validation using multi-institutional, high-quality benchmarks.

- Although architectural performance was the central focus of this work, deployment-related factors such as inference latency, memory usage, and integration into PACS or radiology workflows were not evaluated. While models such as EfficientNet and MobileNetV4 offer good computational efficiency, Transformer-based models often require more hardware resources. Evaluating these trade-offs in edge scenarios, such as low-resource hospitals or portable devices, remains an important next step.

- User-centered validation with clinical experts, including radiologist-driven qualitative assessments of Grad-CAM outputs and case-level agreement rates, is essential for ensuring that the model outputs are not only technically sound but also practically usable in diagnostic workflows.
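The label-noise concern raised above for rare classes can be made concrete with a short calculation. The sketch below uses the positive and negative counts for hernia and infiltration from the dataset table and asks what fraction of positive labels would still be correct if a given share of negative cases were mislabeled as positive. The 1% false-positive labeling rate is a hypothetical value chosen for illustration, not a measured property of ChestX-ray14, and the function name is an assumption.

```python
def noisy_label_precision(true_pos, true_neg, false_pos_rate, false_neg_rate=0.0):
    """Fraction of positive labels that are truly positive after label noise."""
    kept_pos = true_pos * (1.0 - false_neg_rate)
    spurious_pos = true_neg * false_pos_rate
    return kept_pos / (kept_pos + spurious_pos)


# (positives, negatives) taken from the dataset table.
classes = {"Hernia": (227, 111_893), "Infiltration": (19_894, 92_226)}
for name, (pos, neg) in classes.items():
    precision = noisy_label_precision(pos, neg, false_pos_rate=0.01)
    print(f"{name}: {precision:.3f}")
```

Under this assumed noise rate, spurious hernia labels would outnumber genuine ones several times over, whereas the infiltration labels would remain mostly correct. The same absolute error rate therefore distorts training and evaluation far more for rare classes.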

6. Conclusions and Future Work

- Improving the classification of challenging diseases (infiltration and pneumonia) by integrating multimodal clinical information (such as demographics and symptoms) alongside imaging data;

- Exploring advanced interpretability techniques, such as Layer-wise Relevance Propagation (LRP), SHAP, and attention rollout, to provide higher-fidelity and more reliable explanations;

- Validating generalizability through evaluations of external datasets, diverse patient populations, and different imaging protocols, and employing domain adaptation methods to mitigate dataset shift;

- Leveraging improved learning strategies, such as semi-supervised and self-supervised approaches, to handle weakly labeled or imbalanced data better;

- Focusing on deployment-related factors, including inference efficiency, memory usage, and integration into radiology workflows, with special attention to edge devices and resource-limited environments;

- Conducting prospective clinical studies with radiologists to evaluate usability, trust, and diagnostic impact in real-world settings.

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| AI | Artificial Intelligence |
| AUROC | Area Under the Receiver Operating Characteristic Curve |
| CNN | Convolutional Neural Network |
| CXR | Chest X-ray |
| DeiT | Data-Efficient Image Transformer |
| DL | Deep Learning |
| FPS | Frames Per Second |
| Grad-CAM | Gradient-Weighted Class Activation Mapping |
| MLC | Multi-Label Classification |
| NIH | National Institutes of Health |
| NLP | Natural Language Processing |
| PACS | Picture Archiving and Communication System |
| SSM | Structured State Space Model |
| ViT | Vision Transformer |

References

1. Nasser, A.A.; Akhloufi, M.A. Deep learning methods for chest disease detection using radiography images. SN Comput. Sci. 2023, 4, 388.

2. Song, L.; Sun, H.; Xiao, H.; Lam, S.K.; Zhan, Y.; Ren, G.; Cai, J. Artificial Intelligence for chest X-ray image enhancement. Radiat. Med. Prot. 2025, 6, 61–68.

3. Yanar, E.; Hardalaç, F.; Ayturan, K. PELM: A Deep Learning Model for Early Detection of Pneumonia in Chest Radiography. Appl. Sci. 2025, 15, 6487.

4. Yanar, E.; Hardalaç, F.; Ayturan, K. CELM: An Ensemble Deep Learning Model for Early Cardiomegaly Diagnosis in Chest Radiography. Diagnostics 2025, 15, 1602.

5. Marrón-Esquivel, J.M.; Duran-Lopez, L.; Linares-Barranco, A.; Dominguez-Morales, J.P. A comparative study of the inter-observer variability on Gleason grading against Deep Learning-based approaches for prostate cancer. Comput. Biol. Med. 2023, 159, 106856.

6. Chibuike, O.; Yang, X. Convolutional Neural Network–Vision Transformer architecture with gated control mechanism and multi-scale fusion for enhanced pulmonary disease classification. Diagnostics 2024, 14, 2790.

7. Taslimi, S.; Taslimi, S.; Fathi, N.; Salehi, M.; Rohban, M.H. SwinCheX: Multi-label classification on chest X-ray images with transformers. arXiv 2022, arXiv:2206.04246.

8. U.S. Department of Health and Human Services. NIH Clinical Center Provides One of the Largest Publicly Available Chest X-ray Datasets to Scientific Community. National Institutes of Health. Available online: https://www.kaggle.com/datasets/nih-chest-xrays/data (accessed on 23 July 2025).

9. Wang, X.; Peng, Y.; Lu, L.; Lu, Z.; Bagheri, M.; Summers, R.M. ChestX-ray8: Hospital-scale chest X-ray database and benchmarks on weakly-supervised classification and localization of common thorax diseases. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 2097–2106.

10. Rajpurkar, P.; Irvin, J.; Zhu, K.; Yang, B.; Mehta, H.; Duan, T.; Ding, D.; Bagul, A.; Ball, R.L.; Langlotz, C.; et al. CheXNet: Radiologist-level pneumonia detection on chest X-rays with deep learning. arXiv 2017, arXiv:1711.05225.

11. Tang, Y.-X.; Tang, Y.-B.; Peng, Y.; Yan, K.; Bagheri, M.; Redd, B.A.; Brandon, C.J.; Lu, Z.; Han, M.; Xiao, J.; et al. Automated abnormality classification of chest radiographs using deep convolutional neural networks. Npj Digit. Med. 2020, 3, 70.

12. Ravi, V.; Acharya, V.; Alazab, M. A multichannel EfficientNet Deep Learning-based stacking ensemble approach for lung disease detection using chest X-ray images. Clust. Comput. 2020, 26, 1181–1203.

13. Baltruschat, I.M.; Nickisch, H.; Grass, M.; Knopp, T.; Saalbach, A. Comparison of deep learning approaches for multi-label chest X-ray classification. Sci. Rep. 2019, 9, 6381.

14. Dosovitskiy, A.; Beyer, L.; Kolesnikov, A.; Weissenborn, D.; Zhai, X.; Unterthiner, T.; Houlsby, N. An image is worth 16x16 words: Transformers for image recognition at scale. arXiv 2020, arXiv:2010.11929.

15. Wollek, A.; Graf, R.; Čečatka, S.; Fink, N.; Willem, T.; Sabel, B.O.; Lasser, T. Attention-based saliency maps improve interpretability of pneumothorax classification. Radiol. Artif. Intell. 2023, 5, e220187.

16. Yue, Y.; Li, Z. MEDMAMBA: Vision Mamba for Medical Image Classification. arXiv 2024, arXiv:2403.03849.

17. Zhu, L.; Liao, B.; Zhang, Q.; Wang, X.; Liu, W.; Wang, X. Vision Mamba: Efficient Visual Representation Learning with Bidirectional State Space Model. arXiv 2024, arXiv:2401.09417.

18. Liu, Y.; Tian, Y.; Zhao, Y.; Yu, H.; Xie, L.; Wang, Y.; Ye, Q.; Jiao, J.; Liu, Y. VMamba: Visual State Space Model. arXiv 2024, arXiv:2401.10166.

19. Huang, G.; Liu, Z.; Van Der Maaten, L.; Weinberger, K.Q. Densely connected convolutional networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 4700–4708.

20. He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

21. Szegedy, C.; Vanhoucke, V.; Ioffe, S.; Shlens, J.; Wojna, Z. Rethinking the inception architecture for computer vision. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 2818–2826.

22. Xie, S.; Girshick, R.; Dollár, P.; Tu, Z.; He, K. Aggregated residual transformations for deep neural networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 1492–1500.

23. Tan, M.; Le, Q. EfficientNet: Rethinking model scaling for convolutional neural networks. In International Conference on Machine Learning; PMLR: New York, NY, USA, 2019; pp. 6105–6114.

24. Qin, D.; Leichner, C.; Delakis, M.; Fornoni, M.; Luo, S.; Yang, F.; Howard, A. MobileNetV4: Universal models for the mobile ecosystem. In European Conference on Computer Vision; Springer Nature: Cham, Switzerland, 2024; pp. 78–96.

25. Ding, M.; Xiao, B.; Codella, N.; Luo, P.; Wang, J.; Yuan, L. DaViT: Dual attention vision transformers. In European Conference on Computer Vision; Springer Nature: Cham, Switzerland, 2022; pp. 74–92.

26. Yu, W.; Si, C.; Zhou, P.; Luo, M.; Zhou, Y.; Feng, J.; Wang, X. MetaFormer baselines for vision. IEEE Trans. Pattern Anal. Mach. Intell. 2023, 46, 896–912.

27. Touvron, H.; Cord, M.; Douze, M.; Massa, F.; Sablayrolles, A.; Jégou, H. Training data-efficient image transformers & distillation through attention. In International Conference on Machine Learning; PMLR: New York, NY, USA, 2021; pp. 10347–10357.

28. Liu, Z.; Lin, Y.; Cao, Y.; Hu, H.; Wei, Y.; Zhang, Z.; Guo, B. Swin Transformer: Hierarchical vision transformer using shifted windows. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 10–17 October 2021; pp. 10012–10022.

29. Liu, Z.; Hu, H.; Lin, Y.; Yao, Z.; Xie, Z.; Wei, Y.; Guo, B. Swin Transformer V2: Scaling up capacity and resolution. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 12009–12019.

30. Yao, L.; Prosky, J.; Poblenz, E.; Covington, B.; Lyman, K. Weakly supervised medical diagnosis and localization from multiple resolutions. arXiv 2018, arXiv:1803.07703.

31. Gundel, S.; Grbic, S.; Georgescu, B.; Liu, S.; Maier, A.; Comaniciu, D. Learning to recognize abnormalities in chest x-rays with location-aware dense networks. Lect. Notes Comput. Sci. 2019, 11401, 757–765.

| Pathology | True Count | False Count | Prevalence (%) |
|---|---|---|---|
| Infiltration | 19,894 | 92,226 | 17.74 |
| Effusion | 13,317 | 98,803 | 11.88 |
| Atelectasis | 11,559 | 100,561 | 10.31 |
| Nodule | 6331 | 105,789 | 5.65 |
| Mass | 5782 | 106,338 | 5.16 |
| Pneumothorax | 5302 | 106,818 | 4.73 |
| Consolidation | 4667 | 107,453 | 4.16 |
| Pleural Thickening | 3385 | 108,735 | 3.02 |
| Cardiomegaly | 2776 | 109,344 | 2.48 |
| Emphysema | 2516 | 109,604 | 2.24 |
| Edema | 2303 | 109,817 | 2.05 |
| Fibrosis | 1686 | 110,434 | 1.50 |
| Pneumonia | 1431 | 110,689 | 1.28 |
| Hernia | 227 | 111,893 | 0.20 |

| Category | Model | Backbone Type | Key Features | Params (M) | FPS | Strengths | Weaknesses |
|---|---|---|---|---|---|---|---|
| CNN | DenseNet121 | DenseNet | Dense connections, feature reuse | 8 | ~40 | Strong feature reuse, mitigates vanishing gradient | Deeper network increases training cost |
| CNN | ResNet34 | ResNet | Residual learning, stable training | 21 | ~40 | Stable training, good generalization | Higher param count, slower than EfficientNet |
| CNN | InceptionV3 | Inception | Multi-scale receptive fields | 24 | ~35 | Captures multi-scale features | Complex structure, higher FLOPs |
| CNN | ResNext50 | ResNet+ | Grouped convolutions | 25 | ~35 | Strong representational power | Requires more computational resources |
| CNN | EfficientNet-B0 | EfficientNet | Compound model scaling | 5.3 | ~125 | Excellent accuracy-efficiency trade-off | Sensitive to input resolution |
| CNN | MobileNetV4 | MobileNet | Lightweight, depthwise convolutions | ~6 | ~110 | Lightweight, fast inference | Lower accuracy on complex patterns |
| Transformer | DaViT-Tiny | Vision Transformer | Dual attention: spatial + channel | 28 | ~80 | Good balance of local/global features | Still high param count |
| Transformer | ConvFormer | Hybrid CNN + ViT | Combines conv. priors and attention | 28 | ~83 | Combines local + global context | More computational demand |
| Transformer | CaFormer | Conditional Attention | Adaptive attention filtering | 29 | ~77 | Adaptive attention, strong results | High FLOPs, needs a large memory |
| Transformer | DeiT | ViT | Distilled supervision, data-efficient | 22 | ~90 | Data-efficient, lighter ViT | Accuracy < ConvFormer/CaFormer |
| Transformer | SwinV1/V2 | Hierarchical ViT | Shifted windows, scalable to resolution | 29–60 | ~55 | Scales well to high resolution | Heavy computing, high latency |
| Mamba | VMamba | State Space Model | 2D scanning, efficient global modeling | 22 | ~111 | Efficient global modeling, low FLOPs | Accuracy lower than CNNs/Transformers |
| Mamba | MedMamba | SSM + Conv | Grouped convs, medical-specific layers | 14 | ~143 | Lightweight, domain-adapted | Underperforms on rare/complex classes |

| Condition | DenseNet121 | Resnet34 | InceptionV3 | ResNext50 | DavitTiny | EfficientNet | Mobile Netv4 | Conv Former | CaFormer | Deit | Swinv1 | Swinv2 | VMamba | MedMamba |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| Atelectasis | 0.82 | 0.81 | 0.77 | 0.82 | 0. | 0.81 | 0.81 | 0.82 | 0.83 | 0.79 | 0.81 | 0.82 | 0.77 | 0.78 |

| Cardiomegaly | 0.90 | 0.90 | 0.87 | 0.91 | 0.90 | 0.90 | 0.89 | 0.90 | 0.89 | 0.88 | 0.90 | 0.89 | 0.86 | 0.89 |

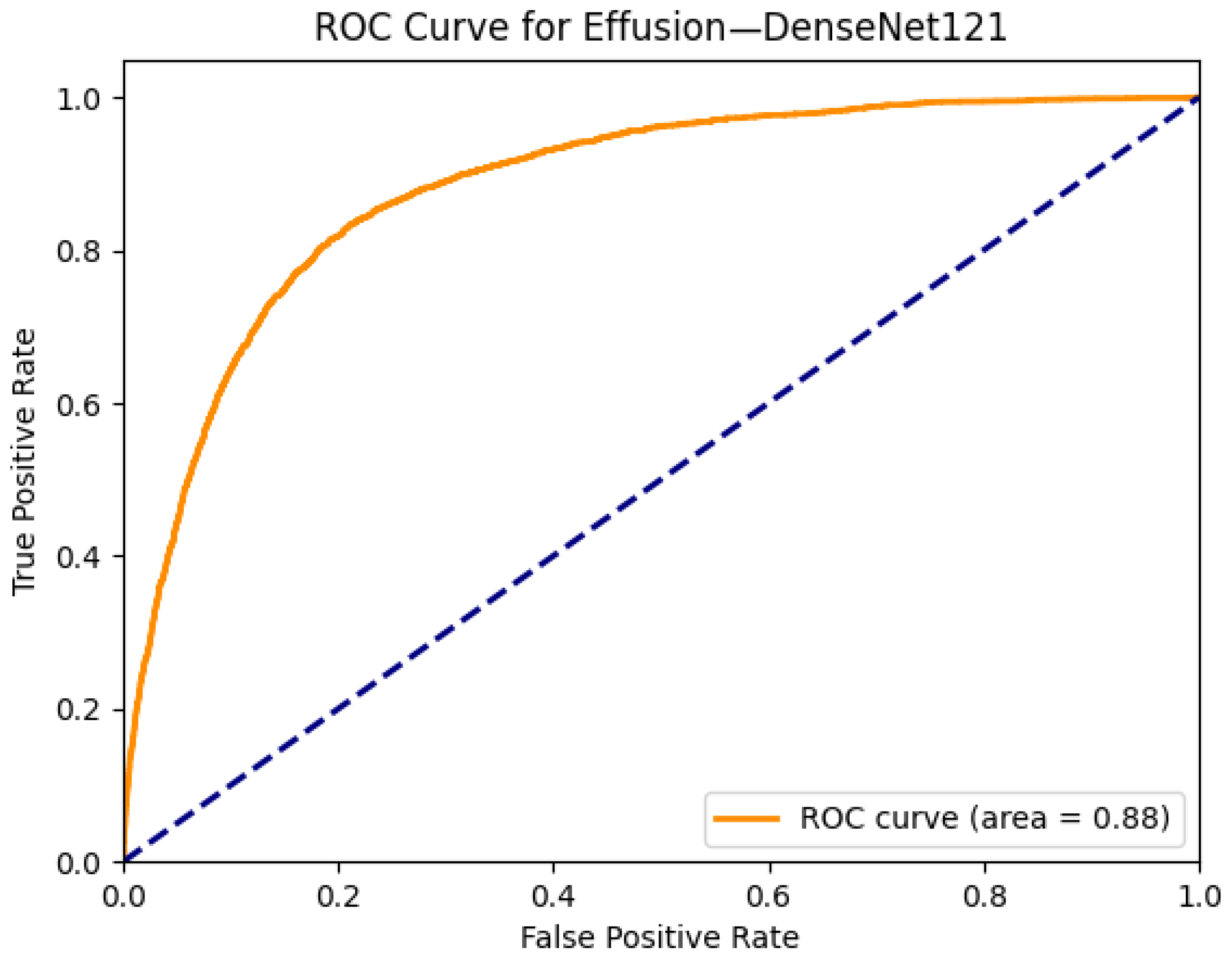

| Effusion | 0.88 | 0.87 | 0.84 | 0.87 | 0.87 | 0.88 | 0.87 | 0.88 | 0.88 | 0.86 | 0.87 | 0.87 | 0.84 | 0.86 |

| Infiltration | 0.71 | 0.70 | 0.68 | 0.71 | 0.71 | 0.70 | 0.71 | 0.71 | 0.70 | 0.70 | 0.70 | 0.71 | 0.68 | 0.68 |

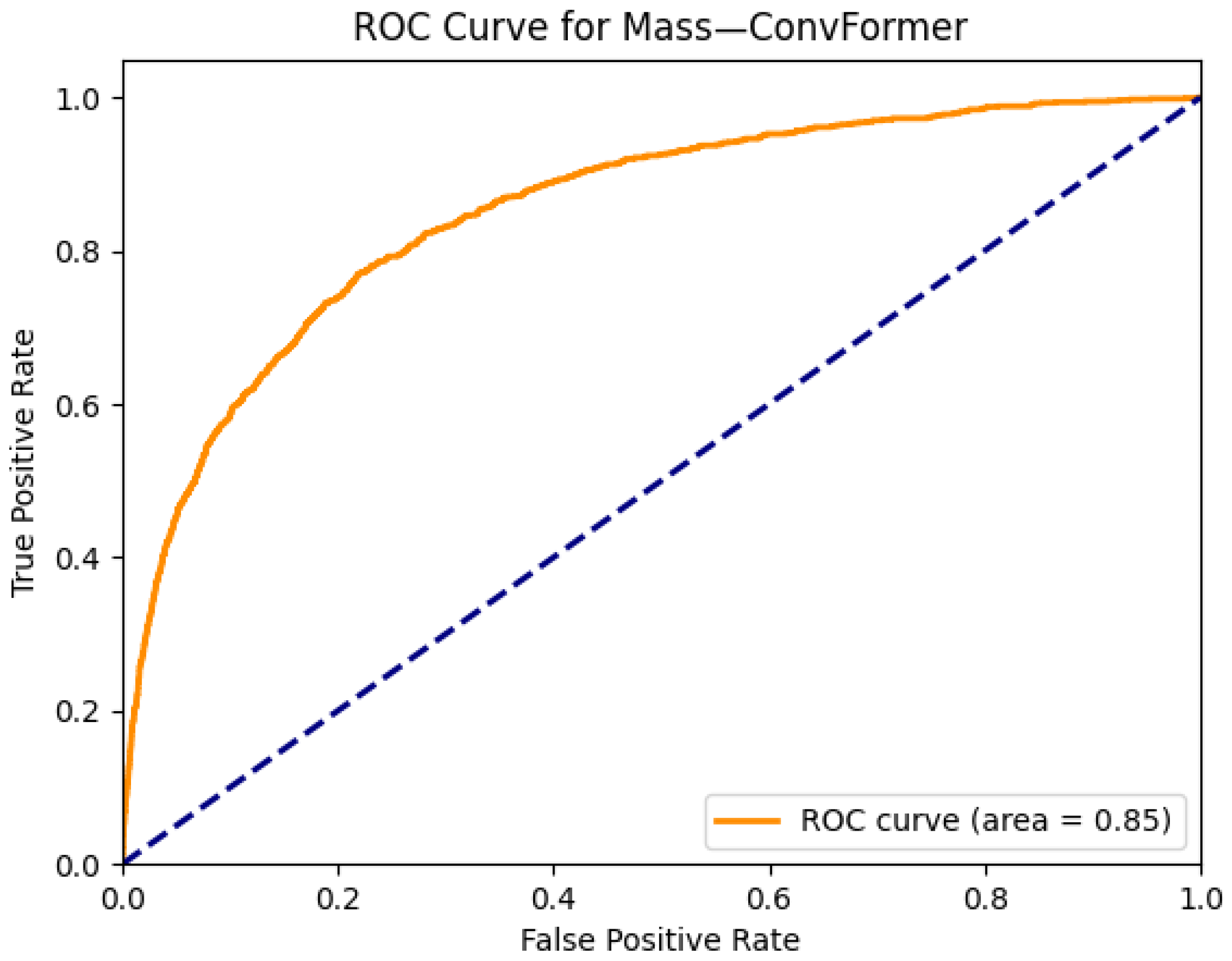

| Mass | 0.83 | 0.83 | 0.73 | 0.83 | 0.84 | 0.84 | 0.82 | 0.85 | 0.84 | 0.79 | 0.81 | 0.75 | 0.77 | |

| Nodule | 0.76 | 0.76 | 0.65 | 0.76 | 0.76 | 0.77 | 0.75 | 0.77 | 0.78 | 0.72 | 0.75 | 0.77 | 0.63 | 0.65 |

| Pneumonia | 0.75 | 0.74 | 0.73 | 0.75 | 0.73 | 0.75 | 0.75 | 0.76 | 0.76 | 0.74 | 0.75 | 0.75 | 0.70 | 0.72 |

| Pneumothorax | 0.86 | 0.87 | 0.82 | 0.85 | 0.88 | 0.87 | 0.85 | 0.88 | 0.87 | 0.85 | 0.86 | 0.87 | 0.81 | 0.82 |

| Consolidation | 0.80 | 0.79 | 0.78 | 0.79 | 0.81 | 0.80 | 0.80 | 0.80 | 0.80 | 0.79 | 0.79 | 0.81 | 0.77 | 0.78 |

| Edema | 0.89 | 0.89 | 0.87 | 0.89 | 0.88 | 0.89 | 0.89 | 0.89 | 0.88 | 0.88 | 0.88 | 0.89 | 0.86 | 0.87 |

| Emphysema | 0.92 | 0.92 | 0.82 | 0.92 | 0.93 | 0.92 | 0.92 | 0.93 | 0.92 | 0.89 | 0.91 | 0.91 | 0.79 | 0.82 |

| Fibrosis | 0.82 | 0.81 | 0.75 | 0.82 | 0.83 | 0.83 | 0.79 | 0.83 | 0.83 | 0.80 | 0.82 | 0.81 | 0.75 | 0.75 |

| Pleural Thickening | 0.77 | 0.77 | 0.72 | 0.77 | 0.76 | 0.77 | 0.76 | 0.78 | 0.78 | 0.75 | 0.77 | 0.78 | 0.72 | 0.74 |

| Hernia | 0.93 | 0.90 | 0.89 | 0.89 | 0.93 | 0.94 | 0.91 | 0.92 | 0.93 | 0.86 | 0.85 | 0.89 | 0.88 | 0.89 |

| Model | Params (M) | FLOPs (G) | Training Time/Epoch (s) | Inference Latency (ms/image) |
|---|---|---|---|---|
| EfficientNet-B0 | 5.3 | 50.39 | 42 | 8 |
| ConvFormer | 28 | 2.1 | 75 | 12 |
| CaFormer | 29 | 2.3 | 80 | 13 |
| SwinV2 | 60 | 4.5 | 120 | 18 |
| VMamba | 22 | 1.1 | 60 | 9 |
| MedMamba | 14 | 0.8 | 48 | 7 |

| Metric | ConvFormer | CaFormer | EfficientNet |
|---|---|---|---|
| Accuracy | 0.87 | 0.86 | 0.89 |
| Precision | 0.85 | 0.84 | 0.87 |
| Recall | 0.83 | 0.82 | 0.85 |
| Specificity | 0.89 | 0.88 | 0.91 |
| F1-score | 0.84 | 0.83 | 0.86 |

| Thoracic Disease | Wang et al. [9] | Yao et al. [30] | Gundel et al. [31] | Rajpurkar et al. [10] | Taslimi et al. [7] | The Best Scores Achieved |
|---|---|---|---|---|---|---|
| Atelectasis | 0.74 | 0.73 | 0.76 | 0.80 | 0.78 | 0.83 |
| Cardiomegaly | 0.87 | 0.85 | 0.88 | 0.92 | 0.87 | 0.91 |
| Effusion | 0.81 | 0.80 | 0.82 | 0.86 | 0.82 | 0.88 |
| Infiltration | 0.67 | 0.67 | 0.70 | 0.73 | 0.70 | 0.71 |
| Mass | 0.78 | 0.71 | 0.82 | 0.86 | 0.82 | 0.85 |
| Nodule | 0.69 | 0.77 | 0.75 | 0.78 | 0.78 | 0.78 |
| Pneumonia | 0.69 | 0.68 | 0.73 | 0.76 | 0.71 | 0.76 |
| Pneumothorax | 0.80 | 0.80 | 0.84 | 0.88 | 0.87 | 0.88 |
| Consolidation | 0.72 | 0.71 | 0.74 | 0.79 | 0.74 | 0.81 |
| Edema | 0.83 | 0.80 | 0.83 | 0.88 | 0.84 | 0.89 |
| Emphysema | 0.82 | 0.84 | 0.89 | 0.93 | 0.91 | 0.93 |
| Fibrosis | 0.80 | 0.74 | 0.81 | 0.80 | 0.82 | 0.83 |
| Pleural Thickening | 0.75 | 0.72 | 0.76 | 0.80 | 0.77 | 0.78 |
| Hernia | 0.89 | 0.77 | 0.89 | 0.91 | 0.85 | 0.94 |
| Average | 0.77 | 0.75 | 0.80 | 0.83 | 0.80 | 0.84 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Yanar, E.; Kutan, F.; Ayturan, K.; Kutbay, U.; Algın, O.; Hardalaç, F.; Ağıldere, A.M. A Comparative Analysis of the Mamba, Transformer, and CNN Architectures for Multi-Label Chest X-Ray Anomaly Detection in the NIH ChestX-Ray14 Dataset. Diagnostics 2025, 15, 2215. https://doi.org/10.3390/diagnostics15172215
