A Feature-Augmented Explainable Artificial Intelligence Model for Diagnosing Alzheimer’s Disease from Multimodal Clinical and Neuroimaging Data

Abstract

1. Introduction

2. Related Work

2.1. Background of Explainable Artificial Intelligence (XAI) in Alzheimer’s Diagnosis

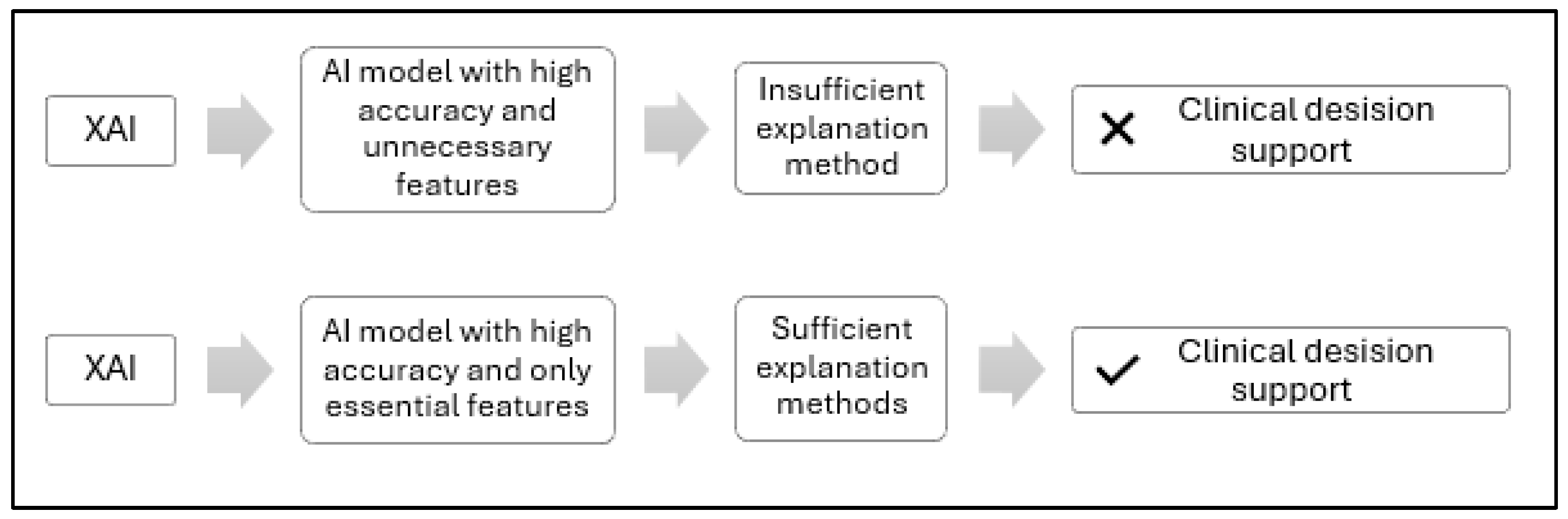

2.2. Problem Formulation

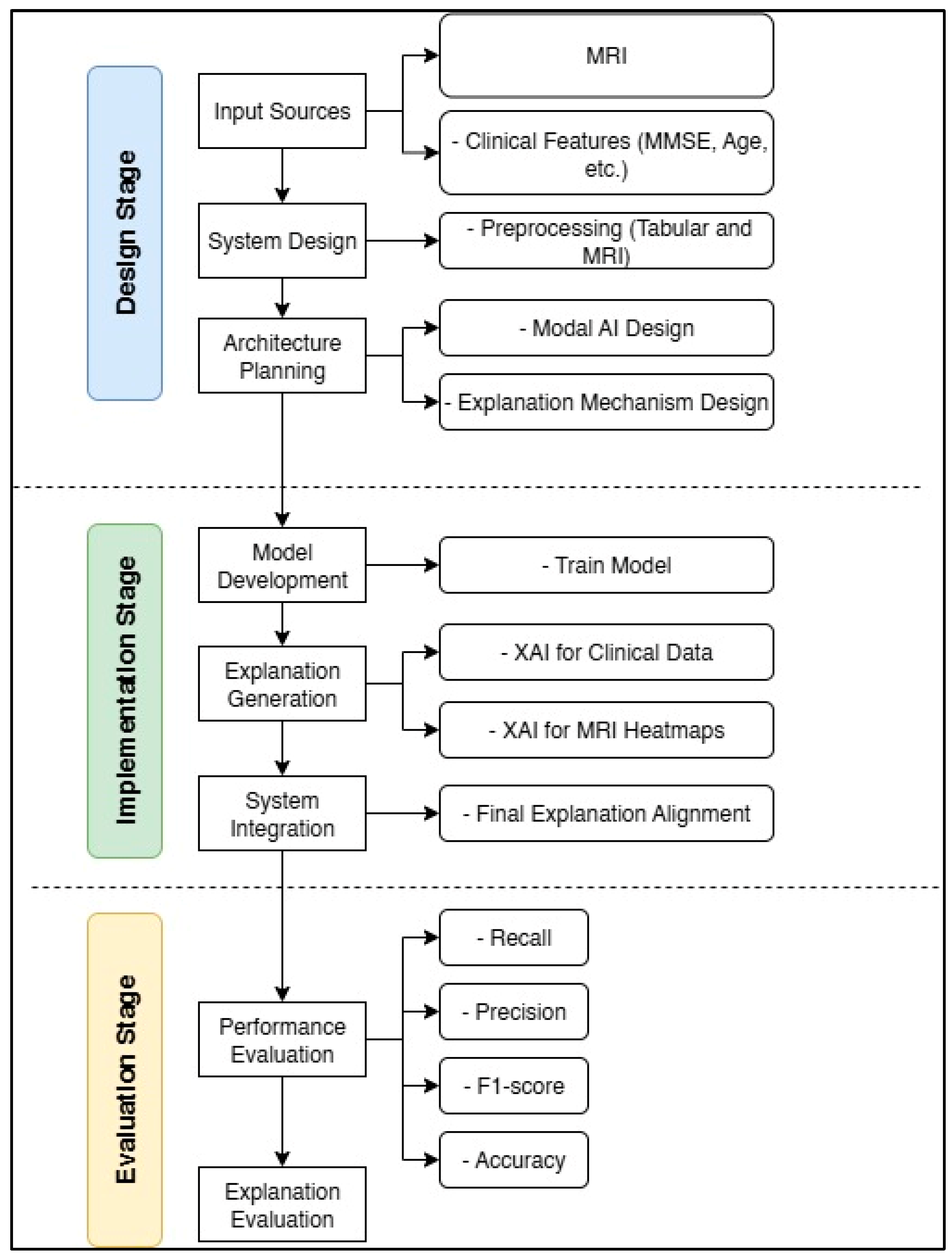

3. Methods

3.1. Objective and Theoretical Foundation of the Feature-Augmented Framework

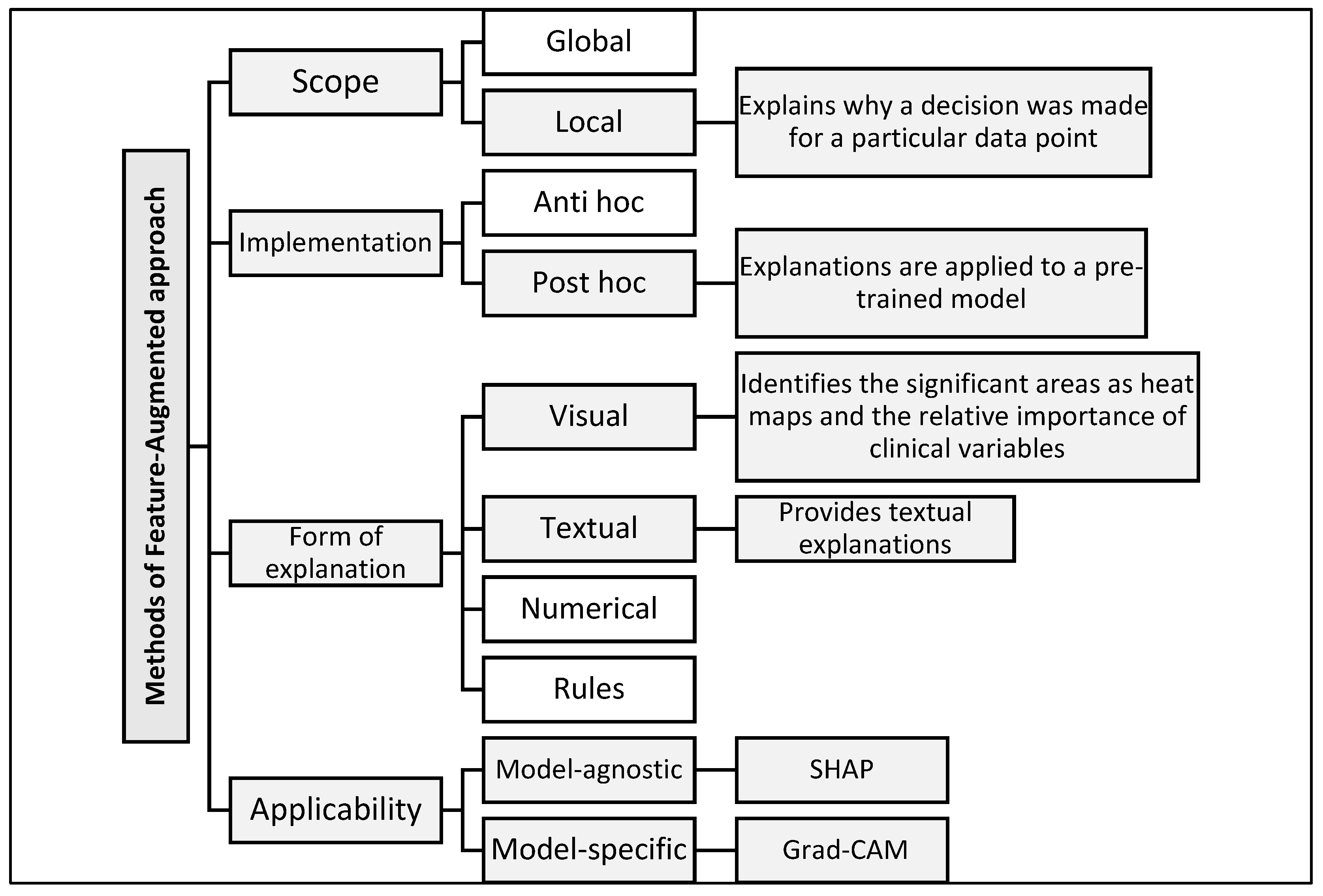

3.2. Feature-Augmented Explainable Artificial Intelligence

3.2.1. Model Architecture and Ensemble Design

3.2.2. Explainability Framework

4. Experimental Results and Discussion

4.1. Data Processing

4.2. Model Configuration and XAI Integration

- SHAP is applied to clinical data. It quantifies the contribution of each feature to the final prediction and presents the results in graphical and textual form, making them easier for clinicians to understand.

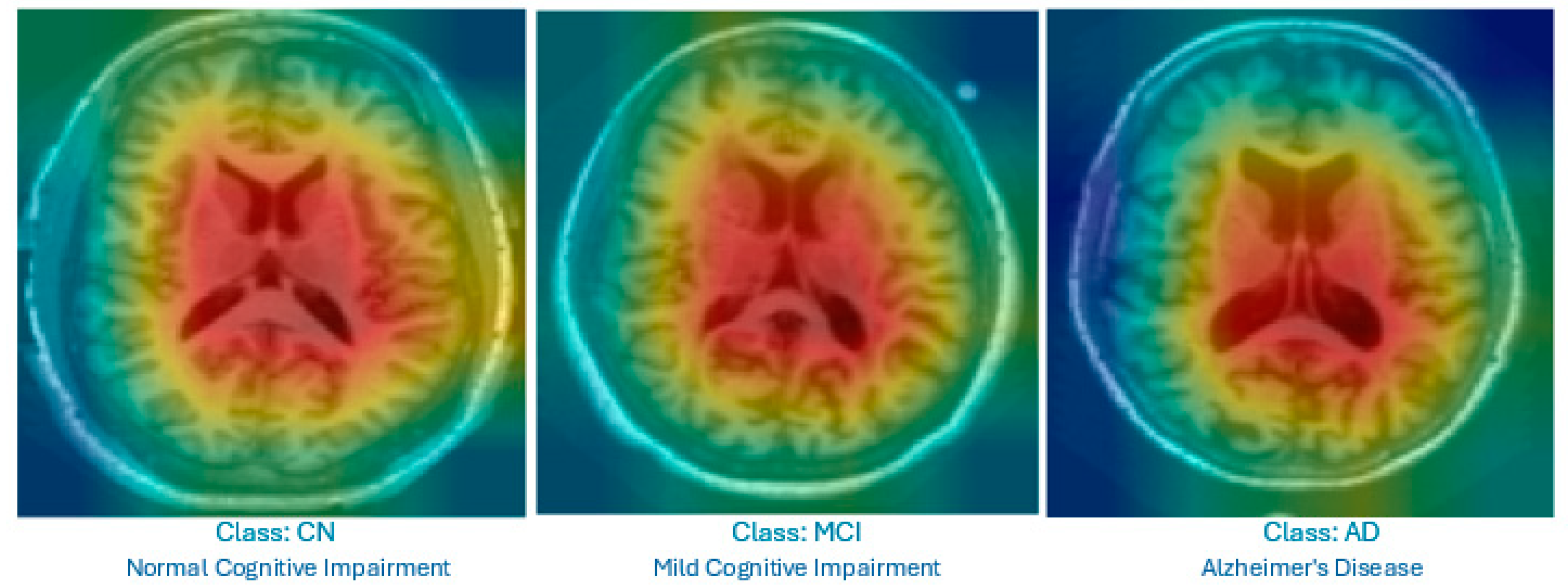

- Grad-CAM is used for MRI images. It produces heat maps superimposed on the original slices to highlight the brain regions that influenced the classification, helping non-radiologists visualize relevant anatomical patterns.
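To make the per-feature attribution performed by SHAP on the clinical data more concrete, the following is a minimal sketch of exact Shapley values for a tiny model. It is not the authors' implementation and does not use the `shap` library; the function `toy_risk`, its coefficients, and the instance and baseline values are hypothetical and chosen only for illustration. MMSE and age are used because they are among the clinical features named in this paper.

```python
from itertools import combinations
from math import factorial


def shapley_values(predict, instance, baseline):
    """Exact Shapley values of `predict` at `instance` relative to `baseline`.

    Features outside a coalition take their baseline value. The cost grows
    exponentially with the number of features, so this is only practical
    for very small feature sets.
    """
    names = list(instance)
    n = len(names)

    def value(coalition):
        mixed = {k: (instance[k] if k in coalition else baseline[k]) for k in names}
        return predict(mixed)

    phi = {}
    for name in names:
        others = [k for k in names if k != name]
        total = 0.0
        for size in range(n):
            # Shapley weight for coalitions of this size
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                coalition = set(subset)
                total += weight * (value(coalition | {name}) - value(coalition))
        phi[name] = total
    return phi


def toy_risk(x):
    """Hypothetical risk score (illustration only, not a clinical model)."""
    mmse_deficit = 30 - x["mmse"]
    age_excess = x["age"] - 60
    return 0.05 * mmse_deficit + 0.01 * age_excess + 0.001 * mmse_deficit * age_excess


patient = {"mmse": 20, "age": 80}     # hypothetical patient
reference = {"mmse": 28, "age": 70}   # hypothetical baseline
contributions = shapley_values(toy_risk, patient, reference)
for feature, phi in contributions.items():
    print(f"{feature}: {phi:+.3f}")
```

The contributions sum to the difference between the prediction for the patient and the prediction for the baseline, which is the property that lets a clinician read each value as that feature's share of the model output.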

4.3. Performance Evaluation

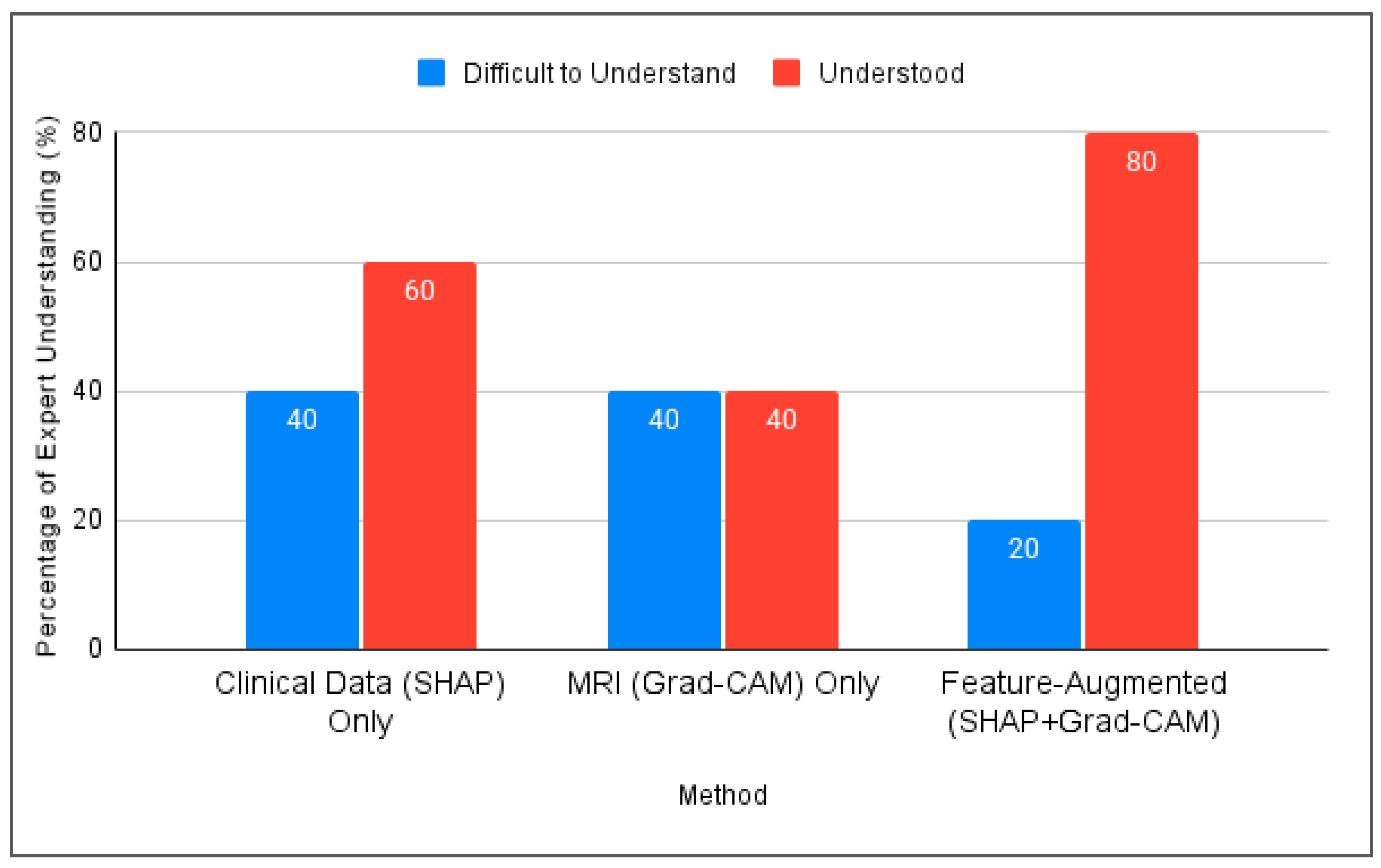

4.3.1. Questionnaire Design and Expert Involvement

- i. SHAP-based clinical explanation only: Highlighting key clinical features.

- ii. Grad-CAM heatmaps only: Showing important brain regions in the MRI.

- iii. Feature-Augmented XAI explanation: Combining SHAP-based features, Grad-CAM heatmaps, and textual explanations.

- i. Clarity: How easy the interpretations were to understand.

- ii. Clinical relevance: The extent to which interpretations align with known biomarkers of AD.

- iii. Decision support: The extent to which interpretations contribute to clinical decision-making.

- iv. Confidence in AI: Participants’ degree of confidence in AI-assisted diagnosis after reviewing the interpretations.

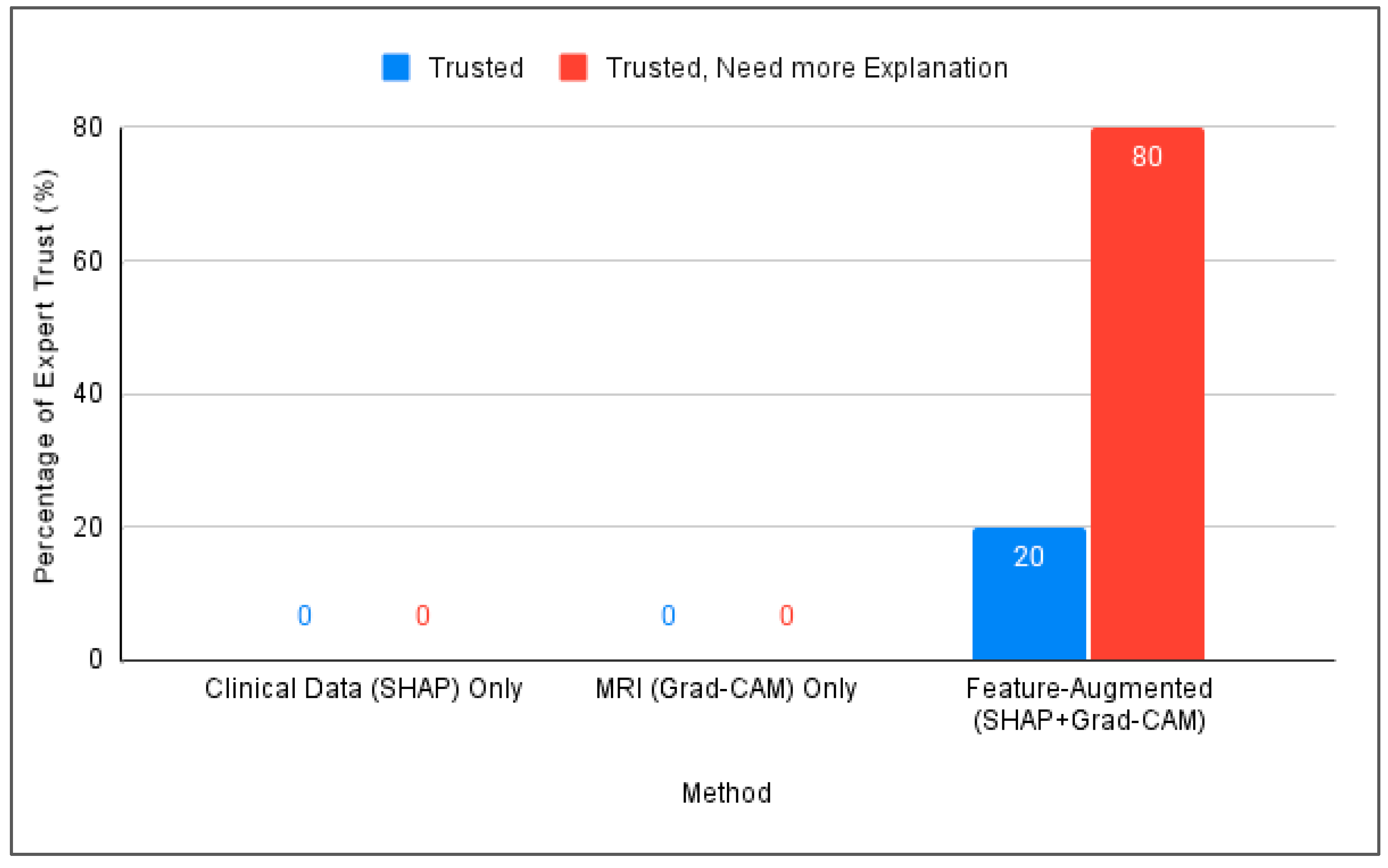

4.3.2. Expert Validation Results

- All five experts agreed that integrating clinical, MMSE, and MRI data improves diagnostic accuracy.

- Four out of five agreed that AI models like ours help detect subtle disease patterns that are not easily visible through human interpretation.

- All experts rated SHAP and Grad-CAM explanations as either “understandable” or “very understandable” and described them as useful for gaining insight into the model’s decisions.

- Three out of five experts suggested that the textual explanations could be made clearer, especially when intended for non-expert users.

- All five participants emphasized that such explainability tools should be used to support, not replace, human physicians and showed strong support for integrating these models into future clinical practice.

4.3.3. Expert Reflections on Explanation and Clinical Relevance

4.4. Discussion

Addressing XAI Challenges Identified in Prior Work

- Involvement of medical experts: Specialists with over 10 years of experience evaluated the outputs through a structured questionnaire. The model achieved a 100% trust score, confirming improved confidence in AI decisions.

- Integrating multiple modalities: SHAP was applied to clinical data and Grad-CAM to MRI slices, ensuring comprehensive modality-specific interpretations.

- Reducing ambiguity from multiple XAI tools: Using several explainability frameworks on the same data can lead to contradictory outputs [35]. Our model avoids this issue by assigning SHAP exclusively to clinical data and Grad-CAM to image data, preventing interpretive overlap and maintaining clarity.

- Focusing on specific MRI slices to enhance explainability: Our model restricts analysis to middle slices that show the lateral ventricles, which are well-known indicators of AD-related atrophy. This strategy enhances the explainability and clinical relevance of the visual output.

- Formulating tailored explanations: Textual interpretations were adapted for physicians and medical students to improve clarity and trust across user groups.
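For the imaging side of the modality split described above, the core Grad-CAM computation can be sketched in a few lines. This is a simplified illustration and not the authors' pipeline: it assumes the feature maps of the last convolutional layer and the gradients of the class score with respect to them are already available, uses plain nested lists instead of a deep learning framework, and omits upsampling the map to the slice resolution. The tiny 2 × 2 inputs are hypothetical.

```python
def grad_cam(feature_maps, gradients):
    """Compute a normalized Grad-CAM map.

    feature_maps: list of K activation maps, each an H x W nested list.
    gradients:    list of K maps with the gradient of the class score
                  with respect to each activation (same shape).
    """
    height = len(feature_maps[0])
    width = len(feature_maps[0][0])

    # Channel weights: global average of the gradients of each map
    weights = [sum(sum(row) for row in grad) / (height * width) for grad in gradients]

    # Weighted sum of the maps followed by ReLU
    cam = [
        [
            max(0.0, sum(w * fmap[i][j] for w, fmap in zip(weights, feature_maps)))
            for j in range(width)
        ]
        for i in range(height)
    ]

    # Scale to [0, 1] so the map can be overlaid on the slice as a heat map
    peak = max(max(row) for row in cam)
    if peak > 0:
        cam = [[v / peak for v in row] for row in cam]
    return cam


# Hypothetical activations: channel 0 fires top-left, channel 1 bottom-right
maps = [[[1.0, 0.0], [0.0, 0.0]], [[0.0, 0.0], [0.0, 1.0]]]
# Hypothetical gradients: channel 0 supports the class, channel 1 opposes it
grads = [[[0.5, 0.5], [0.5, 0.5]], [[-0.5, -0.5], [-0.5, -0.5]]]
heatmap = grad_cam(maps, grads)
print(heatmap)
```

Only regions whose activations push the class score upward survive the ReLU, which is why the resulting overlay highlights areas that supported the predicted class rather than all active regions.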

5. Conclusions

Future Work

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Fabrizio, C.; Termine, A.; Caltagirone, C.; Sancesario, G. Artificial Intelligence for Alzheimer’s Disease: Promise or Challenge? Diagnostics 2021, 11, 1473.

- Vrahatis, A.G.; Skolariki, K.; Krokidis, M.G.; Lazaros, K.; Exarchos, T.P.; Vlamos, P. Revolutionizing the Early Detection of Alzheimer’s Disease through Non-Invasive Biomarkers: The Role of Artificial Intelligence and Deep Learning. Sensors 2023, 23, 4184.

- Majd, S.; Power, J.; Majd, Z. Alzheimer’s Disease and Cancer: When Two Monsters Cannot Be Together. Front. Neurosci. 2019, 13, 155.

- Khan, P.; Kader, F.; Islam, S.M.R.; Rahman, A.B.; Kamal, S.; Toha, M.U.; Kwak, K.-S. Machine Learning and Deep Learning Approaches for Brain Disease Diagnosis: Principles and Recent Advances. IEEE Access 2021, 9, 37622–37655.

- Garcia, S.d.l.F.; Ritchie, C.W.; Luz, S. Artificial Intelligence, Speech, and Language Processing Approaches to Monitoring Alzheimer’s Disease: A Systematic Review. J. Alzheimer’s Dis. 2020, 78, 1547–1574.

- Ben Hassen, S.; Neji, M.; Hussain, Z.; Hussain, A.; Alimi, A.M.; Frikha, M. Deep learning methods for early detection of Alzheimer’s disease using structural MR images: A survey. Neurocomputing 2024, 576, 127325.

- Zakaria, M.M.A.; Doheir, M.; Akmaliah, N.; Yaacob, N.B.M. Infinite potential of AI chatbots: Enhancing user experiences and driving business transformation in e-commerce: Case of Palestinian e-commerce. J. Ecohumanism 2024, 3, 216–229.

- Villain, N.; Fouquet, M.; Baron, J.-C.; Mézenge, F.; Landeau, B.; de La Sayette, V.; Viader, F.; Eustache, F.; Desgranges, B.; Chételat, G. Sequential relationships between grey matter and white matter atrophy and brain metabolic abnormalities in early Alzheimer’s disease. Brain 2010, 133, 3301–3314.

- Battista, P.; Salvatore, C.; Berlingeri, M.; Cerasa, A.; Castiglioni, I. Artificial intelligence and neuropsychological measures: The case of Alzheimer’s disease. Neurosci. Biobehav. Rev. 2020, 114, 211–228.

- Al Olaimat, M.; Martinez, J.; Saeed, F.; Bozdag, S.; Alzheimer’s Disease Neuroimaging Initiative. PPAD: A deep learning architecture to predict progression of Alzheimer’s disease. Bioinformatics 2023, 39, i149–i157.

- Arafa, D.A.; Moustafa, H.E.-D.; Ali-Eldin, A.M.T.; Ali, H.A. Early detection of Alzheimer’s disease based on the state-of-the-art deep learning approach: A comprehensive survey. Multimedia Tools Appl. 2022, 81, 23735–23776.

- Martínez-Murcia, F.; Górriz, J.; Ramírez, J.; Puntonet, C.; Salas-González, D. Computer Aided Diagnosis tool for Alzheimer’s Disease based on Mann–Whitney–Wilcoxon U-Test. Expert Syst. Appl. 2012, 39, 9676–9685.

- Woodbright, M.D.; Morshed, A.; Browne, M.; Ray, B.; Moore, S. Toward Transparent AI for Neurological Disorders: A Feature Extraction and Relevance Analysis Framework. IEEE Access 2024, 12, 37731–37743.

- Saif, F.H.; Al-Andoli, M.N.; Bejuri, W.M.Y.W. Explainable AI for Alzheimer Detection: A Review of Current Methods and Applications. Appl. Sci. 2024, 14, 10121.

- Bazarbekov, I.; Razaque, A.; Ipalakova, M.; Yoo, J.; Assipova, Z.; Almisreb, A. A review of artificial intelligence methods for Alzheimer’s disease diagnosis: Insights from neuroimaging to sensor data analysis. Biomed. Signal Process. Control 2024, 92, 106023.

- Arya, A.D.; Verma, S.S.; Chakarabarti, P.; Chakrabarti, T.; Elngar, A.A.; Kamali, A.-M.; Nami, M. A systematic review on machine learning and deep learning techniques in the effective diagnosis of Alzheimer’s disease. Brain Inform. 2023, 10, 17.

- Abadir, P.; Oh, E.; Chellappa, R.; Choudhry, N.; Demiris, G.; Ganesan, D.; Karlawish, J.; Marlin, B.; Li, R.M.; Dehak, N.; et al. Artificial Intelligence and Technology Collaboratories: Innovating aging research and Alzheimer’s care. Alzheimer’s Dement. 2024, 20, 3074–3079.

- Fujita, K.; Katsuki, M.; Takasu, A.; Kitajima, A.; Shimazu, T.; Maruki, Y. Development of an artificial intelligence-based diagnostic model for Alzheimer’s disease. Aging Med. 2022, 5, 167–173.

- Bordin, V.; Coluzzi, D.; Rivolta, M.W.; Baselli, G. Explainable AI Points to White Matter Hyperintensities for Alzheimer’s Disease Identification: A Preliminary Study. In Proceedings of the 2022 44th Annual International Conference of the IEEE Engineering in Medicine & Biology Society (EMBC), Glasgow, UK, 11–15 July 2022.

- Angelov, P.P.; Soares, E.A.; Jiang, R.; Arnold, N.I.; Atkinson, P.M. Explainable artificial intelligence: An analytical review. Wiley Interdiscip. Rev. Data Min. Knowl. Discov. 2021, 11, e1424.

- Kamal, M.S.; Chowdhury, L.; Nimmy, S.F.; Rafi, T.H.H.; Chae, D.-K. An Interpretable Framework for Identifying Cerebral Microbleeds and Alzheimer’s Disease Severity using Multimodal Data. In Proceedings of the 2023 45th Annual International Conference of the IEEE Engineering in Medicine & Biology Society (EMBC), Sydney, Australia, 24–27 July 2023.

- Yousefzadeh, N.; Tran, C.; Ramirez-Zamora, A.; Chen, J.; Fang, R.; Thai, M.T. Neuron-level explainable AI for Alzheimer’s Disease assessment from fundus images. Sci. Rep. 2024, 14, 7710.

- Jahan, S.; Abu Taher, K.; Kaiser, M.S.; Mahmud, M.; Rahman, S.; Hosen, A.S.M.S.; Ra, I.-H.; Mridha, M.F. Explainable AI-based Alzheimer’s prediction and management using multimodal data. PLoS ONE 2023, 18, e0294253.

- Achilleos, K.G.; Leandrou, S.; Prentzas, N.; Kyriacou, P.A.; Kakas, A.C.; Pattichis, C.S. Extracting Explainable Assessments of Alzheimer’s disease via Machine Learning on brain MRI imaging data. In Proceedings of the 2020 IEEE 20th International Conference on Bioinformatics and Bioengineering (BIBE), Cincinnati, OH, USA, 26–28 October 2020.

- Guan, H.; Wang, C.; Cheng, J.; Jing, J.; Liu, T. A parallel attention-augmented bilinear network for early magnetic resonance imaging-based diagnosis of Alzheimer’s disease. Hum. Brain Mapp. 2022, 43, 760–772.

- Yilmaz, D. Development and Evaluation of an Explainable Diagnostic AI for Alzheimer’s Disease. In Proceedings of the 2023 International Conference on Artificial Intelligence Science and Applications in Industry and Society (CAISAIS), Galala, Egypt, 3–5 September 2023.

- Mansouri, D.; Echtioui, A.; Khemakhem, R.; Hamida, A.B. Explainable AI Framework for Alzheimer’s Diagnosis Using Convolutional Neural Networks. In Proceedings of the 2024 IEEE 7th International Conference on Advanced Technologies, Signal and Image Processing (ATSIP), Sousse, Tunisia, 11–13 July 2024.

- Shad, H.A.; Rahman, Q.A.; Asad, N.B.; Bakshi, A.Z.; Mursalin, S.; Reza, T.; Parvez, M.Z. Exploring Alzheimer’s Disease Prediction with XAI in various Neural Network Models. In Proceedings of the TENCON 2021-2021 IEEE Region 10 Conference (TENCON), Auckland, New Zealand, 7–10 December 2021.

- Tima, J.; Wiratkasem, C.; Chairuean, W.; Padongkit, P.; Pangkhiao, K.; Pikulkaew, K. Early Detection of Alzheimer’s Disease: A Deep Learning Approach for Accurate Diagnosis. In Proceedings of the 2024 21st International Joint Conference on Computer Science and Software Engineering (JCSSE), Phuket, Thailand, 19–22 June 2024.

- Haddada, K.; Khedher, M.I.; Jemai, O.; Khedher, S.I.; El-Yacoubi, M.A. Assessing the Interpretability of Machine Learning Models in Early Detection of Alzheimer’s Disease. In Proceedings of the 2024 16th International Conference on Human System Interaction (HSI), Paris, France, 8–11 July 2024.

- Alarjani, M. Alzheimer’s Disease Detection based on Brain Signals using Computational Modeling. In Proceedings of the 2024 Seventh International Women in Data Science Conference at Prince Sultan University (WiDS PSU), Riyadh, Saudi Arabia, 3–4 March 2024.

- Alvarado, M.; Gómez, D.; Nuñez, A.; Robles, A.; Marecos, H.; Ticona, W. Implementation of an Early Detection System for Neurodegenerative Diseases Through the use of Artificial Intelligence. In Proceedings of the 2023 IEEE XXX International Conference on Electronics, Electrical Engineering and Computing (INTERCON), Lima, Peru, 2–4 November 2023.

- Bloch, L.; Friedrich, C.M.; Alzheimer’s Disease Neuroimaging Initiative. Machine Learning Workflow to Explain Black-box Models for Early Alzheimer’s Disease Classification Evaluated for Multiple Datasets. SN Comput. Sci. 2022, 3, 509.

- Deshmukh, A.; Kallivalappil, N.; D’souza, K.; Kadam, C. AL-XAI-MERS: Unveiling Alzheimer’s Mysteries with Explainable AI. In Proceedings of the 2024 Second International Conference on Emerging Trends in Information Technology and Engineering (ICETITE), Vellore, India, 22–23 February 2024.

- Viswan, V.; Shaffi, N.; Mahmud, M.; Subramanian, K.; Hajamohideen, F. Explainable Artificial Intelligence in Alzheimer’s Disease Classification: A Systematic Review. Cogn. Comput. 2023, 16, 1–44.

- Umeda-Kameyama, Y.; Kameyama, M.; Tanaka, T.; Son, B.-K.; Kojima, T.; Fukasawa, M.; Iizuka, T.; Ogawa, S.; Iijima, K.; Akishita, M. Screening of Alzheimer’s disease by facial complexion using artificial intelligence. Aging 2021, 13, 1765–1772.

- Nguyen, K.; Nguyen, M.; Dang, K.; Pham, B.; Huynh, V.; Vo, T.; Ngo, L.; Ha, H. Early Alzheimer’s disease diagnosis using an XG-Boost model applied to MRI images. Biomed. Res. Ther. 2023, 10, 5896–5911.

- AlMohimeed, A.; Saad, R.M.A.; Mostafa, S.; El-Rashidy, N.M.; Farrag, S.; Gaballah, A.; Elaziz, M.A.; El-Sappagh, S.; Saleh, H. Explainable Artificial Intelligence of Multi-Level Stacking Ensemble for Detection of Alzheimer’s Disease Based on Particle Swarm Optimization and the Sub-Scores of Cognitive Biomarkers. IEEE Access 2023, 11, 123173–123193.

- Zhang, Y.; Liu, T.; Lanfranchi, V.; Yang, P. Explainable Tensor Multi-Task Ensemble Learning Based on Brain Structure Variation for Alzheimer’s Disease Dynamic Prediction. IEEE J. Transl. Eng. Heal. Med. 2022, 11, 1–12.

- Syed, M.R.; Kothari, N.; Joshi, Y.; Gawade, A. Eadda: Towards Novel and Explainable Deep Learning for Early Alzheimer’s Disease Diagnosis Using Autoencoders. Int. J. Intell. Syst. Appl. Eng. 2023, 11, 234–246.

- Qu, Y.; Wang, P.; Liu, B.; Song, C.; Wang, D.; Yang, H.; Zhang, Z.; Chen, P.; Kang, X.; Du, K.; et al. AI4AD: Artificial intelligence analysis for Alzheimer’s disease classification based on a multisite DTI database. Brain Disord. 2021, 1, 100005.

- Al-Bakri, F.H.; Bejuri, W.M.Y.W.; Al-Andoli, M.N.; Ikram, R.R.R.; Khor, H.M.; Tahir, Z.; The Alzheimer’s Disease Neuroimaging Initiative. A Meta-Learning-Based Ensemble Model for Explainable Alzheimer’s Disease Diagnosis. Diagnostics 2025, 15, 1642.

- Ganaie, M.; Hu, M.; Malik, A.; Tanveer, M.; Suganthan, P. Ensemble deep learning: A review. Eng. Appl. Artif. Intell. 2022, 115, 105151.

- Al-Andoli, M.; Cheah, W.P.; Tan, S.C. Deep learning-based community detection in complex networks with network partitioning and reduction of trainable parameters. J. Ambient. Intell. Humaniz. Comput. 2020, 12, 2527–2545.

- Rashmi, U.; Singh, T.; Ambesange, S. MRI image based Ensemble Voting Classifier for Alzheimer’s Disease Classification with Explainable AI Technique. In Proceedings of the 2023 IEEE 8th International Conference for Convergence in Technology (I2CT), Lonavla, India, 7–9 April 2023.

- Al-Andoli, M.N.; Tan, S.C.; Cheah, W.P. Distributed parallel deep learning with a hybrid backpropagation-particle swarm optimization for community detection in large complex networks. Inf. Sci. 2022, 600, 94–117.

- Al-Andoli, M.N.; Tan, S.C.; Sim, K.S.; Lim, C.P.; Goh, P.Y. Parallel Deep Learning with a hybrid BP-PSO framework for feature extraction and malware classification. Appl. Soft Comput. 2022, 131, 109756.

- Ravikiran, H.K.; Deepak, R.; Deepak, H.A.; Prapulla Kumar, M.S.; Sharath, S.; Yogeesh, G.H. A robust framework for Alzheimer’s disease detection and staging: Incorporating multi-feature integration, MRMR feature selection, and Random Forest classification. Multimed. Tools Appl. 2024, 84, 24903–24931.

- Uddin, K.M.M.; Alam, M.J.; Anawar, J.E.; Uddin, A.; Aryal, S. A Novel Approach Utilizing Machine Learning for the Early Diagnosis of Alzheimer’s Disease. Biomed. Mater. Devices 2023, 1, 882–898.

- Menagadevi, M.; Mangai, S.; Madian, N.; Thiyagarajan, D. Automated prediction system for Alzheimer detection based on deep residual autoencoder and support vector machine. Optik 2022, 272, 170212.

- van der Waa, J.; Nieuwburg, E.; Cremers, A.; Neerincx, M. Evaluating XAI: A comparison of rule-based and example-based explanations. Artif. Intell. 2021, 291, 103404.

- Rong, Y.; Castner, N.; Bozkir, E.; Kasneci, E. User Trust on an Explainable AI-based Medical Diagnosis Support System. arXiv 2022, arXiv:2204.12230.

- Vimbi, V.; Shaffi, N.; Mahmud, M. Interpreting artificial intelligence models: A systematic review on the application of LIME and SHAP in Alzheimer’s disease detection. Brain Inform. 2024, 11, 10.

- González-Alday, R.; García-Cuesta, E.; Kulikowski, C.A.; Maojo, V. A Scoping Review on the Progress, Applicability, and Future of Explainable Artificial Intelligence in Medicine. Appl. Sci. 2023, 13, 10778.

- Yang, K.; Mohammed, E.A. A Review of Artificial Intelligence Technologies for Early Prediction of Alzheimer’s Disease. arXiv 2020, arXiv:2101.01781.

| Phase | Subject Matter | Methodology | Measure of Performance | Result Validation |
|---|---|---|---|---|
| Phase 1 | Enhanced XAI (Feature-Augmented Design) | Develop an ensemble meta-model using mid-slice MRI and clinical data; apply SHAP and Grad-CAM for explainability | Accuracy, recall, precision, and F1-score | Compared against standard XAI models; validated using ADNI and OASIS datasets |
| Phase 2 | Integration of Tabular and Image Features | Extract predefined clinical features (e.g., MMSE, age); train a hybrid model with SHAP for interpretation | Explanation coverage and clinical explainability | Measured improvements in classification, accuracy, and explanation quality |
| Phase 3 | Limitations of Current XAI Techniques | Literature gap analysis; compare SHAP-only and Grad-CAM-only models; evaluate enhanced model | Usability score, explanation clarity, and time to understand | Theoretical and practical comparison; discuss model-agnostic vs. model-specific XAI limitations |
| Phase 4 | Evaluation of Explanation Quality and Performance | Collect user and expert feedback via surveys to assess clarity, usefulness, and trust in explanations | Trust, clarity, and usefulness | Validated through structured feedback from experts |
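The Phase 1 performance measures listed in the table (accuracy, recall, precision, and F1-score) follow directly from the confusion-matrix counts of a binary classifier. The sketch below shows the standard definitions; the counts passed in the usage example are hypothetical and are not results from this study.

```python
def classification_metrics(tp, fp, fn, tn):
    """Standard binary classification metrics from confusion-matrix counts."""
    total = tp + fp + fn + tn
    accuracy = (tp + tn) / total if total else 0.0
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    # F1 is the harmonic mean of precision and recall
    f1 = (
        2 * precision * recall / (precision + recall)
        if (precision + recall)
        else 0.0
    )
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}


# Hypothetical counts, for illustration only
metrics = classification_metrics(tp=40, fp=10, fn=5, tn=45)
for name, value in metrics.items():
    print(f"{name}: {value:.3f}")
```

In a diagnostic setting, recall is the share of true AD cases the model detects, while precision is the share of positive predictions that are correct, so the two are usually reported together.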

| Expert | Years of Experience | Familiarity with Alzheimer’s Diagnosis | Familiarity with XAI in Medicine | Confidence in XAI |
|---|---|---|---|---|
| Person 1 | More than 10 years | Excellent knowledge | Good | Strongly Agree |
| Person 2 | More than 10 years | Excellent knowledge | Moderate | Agree |
| Person 3 | More than 10 years | Excellent knowledge | Excellent | Strongly Agree |
| Person 4 | 6–10 years | Good knowledge | Moderate | Agree |
| Person 5 | 6–10 years | Good knowledge | Moderate | Neutral |
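Likert-style responses such as those in the “Confidence in XAI” column can be tallied with a few lines of code. The sketch below uses the five responses from the table above and summarizes only that column; the function name and the choice to group “Agree” with “Strongly Agree” are assumptions made for illustration, not a scoring procedure described by the authors.

```python
from collections import Counter

# Responses copied from the "Confidence in XAI" column of the expert table
confidence_in_xai = {
    "Person 1": "Strongly Agree",
    "Person 2": "Agree",
    "Person 3": "Strongly Agree",
    "Person 4": "Agree",
    "Person 5": "Neutral",
}


def summarize_likert(responses, positive=("Agree", "Strongly Agree")):
    """Return per-answer counts and the share of positive answers."""
    counts = Counter(responses.values())
    positive_share = sum(counts[label] for label in positive) / len(responses)
    return counts, positive_share


counts, positive_share = summarize_likert(confidence_in_xai)
print(dict(counts))
print(f"Agree or stronger: {positive_share:.0%}")
```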

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Al-bakri, F.H.; Bejuri, W.M.Y.W.; Al-Andoli, M.N.; Ikram, R.R.R.; Khor, H.M.; Sholva, Y.; Ariffin, U.K.; Yaacob, N.M.; Abas, Z.A.; Abidin, Z.Z.; et al. A Feature-Augmented Explainable Artificial Intelligence Model for Diagnosing Alzheimer’s Disease from Multimodal Clinical and Neuroimaging Data. Diagnostics 2025, 15, 2060. https://doi.org/10.3390/diagnostics15162060

Chicago/Turabian Style: Al-bakri, Fatima Hasan, Wan Mohd Yaakob Wan Bejuri, Mohamed Nasser Al-Andoli, Raja Rina Raja Ikram, Hui Min Khor, Yus Sholva, Umi Kalsom Ariffin, Noorayisahbe Mohd Yaacob, Zuraida Abal Abas, Zaheera Zainal Abidin, et al. 2025. "A Feature-Augmented Explainable Artificial Intelligence Model for Diagnosing Alzheimer’s Disease from Multimodal Clinical and Neuroimaging Data" Diagnostics 15, no. 16: 2060. https://doi.org/10.3390/diagnostics15162060

APA Style: Al-bakri, F. H., Bejuri, W. M. Y. W., Al-Andoli, M. N., Ikram, R. R. R., Khor, H. M., Sholva, Y., Ariffin, U. K., Yaacob, N. M., Abas, Z. A., Abidin, Z. Z., Asmai, S. A., Ahmad, A., Abdul Rahman, A. F. N., Rahmalan, H., & Abd Samad, M. F. (2025). A Feature-Augmented Explainable Artificial Intelligence Model for Diagnosing Alzheimer’s Disease from Multimodal Clinical and Neuroimaging Data. Diagnostics, 15(16), 2060. https://doi.org/10.3390/diagnostics15162060