1. Introduction

Dry eye disease (DED) is a multifactorial condition affecting the ocular surface, characterized by a loss of homeostasis of the tear film and accompanied by ocular symptoms, in which ocular surface damage plays an etiological role [

1]. The prevalence of DED ranges between 5% and 50% worldwide [

2]. To diagnose DED, careful assessment of ocular surface damage is essential. Recommended assessments include corneal, conjunctival, and lid-margin staining, with corneal staining being the most significant [

3]. Sodium fluorescein is a common dye for corneal staining, as it can enter sick cells and be transported across altered tight junctions and micro pool at the space left by a shed epithelial cell [

4].

Several subjective grading scales have been developed to evaluate corneal fluorescein staining (CFS), including the National Eye Institute (NEI)/Industry scale [

5], the Oxford scale [

6], and the Sjögren’s International Collaborative Clinical Alliance Ocular Staining Score (OSS) scale [

7]. The NEI scale divides the cornea into five zones (central, superior, inferior, nasal, and temporal) and assigns a grade from 0 to 3 to each zone. In contrast, the Oxford and OSS scales examine the entire cornea, using different criteria to evaluate staining regions. The Oxford scale employs illustrative drawings to grade corneal staining from 0 to 5, while the OSS scale counts the number of staining dots and assigns a score between 0 and 3, with an additional point for special types of dot distributions. However, there is little consensus on the features included in these scales, as they differ in their procedures and standards [

8]. The variety of grading scales can be confusing for ophthalmologists and time consuming in clinical practice, and subjectivity leads to interobserver and intraobserver variability.

Therefore, computer-aided diagnosis (CAD) has made progress in evaluating CFS in recent years. Rodriguez et al. [

9] developed a semi-automatic method by manually identifying staining regions using three seed points in a corneal area. Similarly, Pellegrini et al. [

10] manually traced the corneal area with the ImageJ 1.51s software for CFS grading. In contrast, Daugman’s operator was employed for locating the cornea, followed by an Otsu method for automatically identifying staining regions [

11]. Bagbaba et al. [

12] also developed a method that automatically segmented staining regions using connected component labeling. Kourukmas manually positioned the cornea and segmented the stained area with the help of ImageJ software [

13]. Rather than directly predicting the CFS result, all these methods finally attempted to explore the correlation between the corresponding grade and the image-based parameters of the staining regions, such as the number of corneal staining regions and the ratio of the staining area to the total corneal area. Recently, data-driven deep neural networks (DNNs) have been used to segment staining regions or to examine the grading scale directly from the images [

14,

15,

16,

17,

18], producing promising results in grading CFS.

The emergence of computer-aided solutions for evaluating CFS can provide objective measurements and facilitate the quantitative grading of dry eyes. However, previous studies have primarily focused on the correlation of CFS with the number or area of the staining regions [

9,

10,

11,

12,

18], neglecting the distribution and texture information from these regions. This approach is inadequate for complicated corneal staining images that involve confluent staining and filaments. Although data-driven solutions such as deep learning show promising classification accuracy, their lack of interpretability restricts their usage in clinics [

14,

15,

16,

17]. Moreover, previous research has ignored spatial position information, despite the variations in the closeness and distribution between staining regions in different grades’ images, indicating the potential use of the topological theory in CFS evaluation. To the best of our knowledge, the topological features have not been applied in corneal-staining evaluation.

Therefore, we considered whether topological attributes might uncover a more efficient underlying differentiating mechanism between different staining grades. We proposed an automated machine learning-based method for evaluating corneal fluorescein staining in dry-eye disease, which includes a set of multiscale topological features designed to capture spatial connectivity and distribution information between staining regions.

2. Materials and Methods

2.1. Data Set

The retrospective, single-center research was conducted at Beijing Tongren Hospital, Capital Medical University. Patients diagnosed with dry eye based on the Dry Eye Workshop II criteria between 2021 to 2022 were included [

19]. Patients with a history of ocular injury or infection, contact lens wear within the last 6 months, surgery within the previous 12 months, or systemic autoimmune diseases such as Sjogren’s syndrome and systemic lupus erythematosus were excluded. The study adhered to the principles of the Declaration of Helsinki. Written informed consent was obtained from all participants.

All corneal images were acquired by a single technician using a photo slit-lamp system (BX 900, Haag-Streit, Bern, Switzerland) with 10× magnification. The cornea was stained with fluorescein by introducing a wet fluorescein sodium ophthalmic strip (Tianjin Jingming New Technological Development Co., Tianjin, China) into the inferior fornix, and participants were instructed to blink [

19]. The camera settings were constant for all participants, and images of the entire cornea were obtained using the blue filter and the barrier yellow filter to observe corneal staining.

2.2. Investigator Grading System

Two experienced ophthalmologists assessed corneal staining images independently. The results obtained by the two ophthalmologists were compared and discrepancies were resolved with another ophthalmologist. In dry-eye disease, the inferior zone typically presents the most severe grade of corneal staining. Compared to other areas, the inferior zone has a higher density of staining and provides more features [

20]. We assumed that the center of the cornea was located at the middle of pupil, and the distance from the center to the bottom edge of the cornea was regarded as the radius. Therefore, the region of interest (ROI) was located at the lower sector areas, with an included angle of 90°. The ROI in the inferior cornea was finally graded according to the OSS scale. The OSS score was calculated as follows: 0, no staining; 1, one to five staining dots; 2, six to 30 staining dots; 3, more than 30 staining dots [

7]. An additional point was added if:(1) there were one or more patches of confluent staining, (2) there were one or more filaments, or (3) staining occurred in the central cornea. As the ROI was the inferior zone of the cornea, the maximum score for each ROI was 5 in our study. The final OSS score was regarded as the true label.

2.3. Staining Region Analysis

As shown in

Figure 1, the stage of staining region analysis was developed to extract the regions of staining. The extraction process consisted of three primary steps: image preprocessing, identification of the region of interest (ROI) in the cornea, and segmentation of staining regions within the ROI. The precise determination of both the ROI and staining regions was critical for achieving optimal performance of the automated grading system.

2.3.1. Image Preprocessing

To improve the clarity of corneal edges and eliminate reflective areas, we applied a series of image preprocessing operations before determining the ROI. In cases where low-contrast images were obtained, likely due to variations in the light environment during image capture, we employed image enhancement methods to increase the visibility of corneal edges. First, we extracted the red channel from the input color image to enhance the edges and minimize the possible impact of staining (

Figure 2a). We then utilized median filtering and contrast-limited adaptive histogram equalization (CLAHE) to decrease noise and improve image contrast [

11,

21], respectively. Additionally, to address potential reflective regions in the images, Otsu thresholding was applied to detect and eliminate the brightest areas (

Figure 2b) [

22].

2.3.2. Location of the ROI

Following the preprocessing procedures, we identified the ROI by first detecting the cornea and the pupil. We used Otsu thresholding to binarize and segment the sclera areas, which were outside and brighter than the cornea. We then determined the center and radius of the cornea by identifying the largest diameter in the inner edges of the binarized sclera, corresponding to the circular edges of the cornea. Daugman’s integrodifferential operator was subsequently used to detect the pupil within the cornea [

23]. Specifically, we searched for the largest outward radial gradient of the grey intensity of the circular ring under any proposed combination of the center (x0, y0) and radius r, consisting of the circle of the pupil. Daugman’s equation, as presented in Equation (1), was used to compute the pupil’s position. Once the cornea and the pupil were detected, the ROI’s location was determined as the lower sector area with an included angle of 90°.

Figure 2c shows the detected cornea, pupil, and ROI.

2.3.3. Segmentation of Staining Regions in the ROI

To segment the staining regions of the dots or patches in the located ROI image, we employed the top-hat by reconstruction operation in morphology [

24]. Specifically, we first extracted the green channel to highlight the green staining regions and then performed an open operation to eliminate small bright details. Next, the ROI image was reconstructed in grayscale using the result from the open operation as a template, which effectively removed the highlights while preserving the background. Finally, the staining regions were segmented by taking the difference between the ROI and the reconstructed result (

Figure 2d). Compared with the commonly used threshold segmentation methods, such as Otsu, the top-hat by reconstruction operation showed superior detection performance to staining regions under different contrasts.

2.4. Signature Construction

After segmenting the desired stained regions, we proceeded with signature construction, which involved feature extraction and selection to meet the requirements of the classification model. In this stage, we extracted conventional radiomic features, including textural and morphological features. Additionally, we developed a set of multiscale topological features to uncover spatial connectivity and distribution information between staining regions [

25]. These additional features aimed to capture the intricate relationships and patterns among the stained regions.

2.4.1. Feature Extraction

Radiomic features, encompassing both textural and morphological characteristics, were calculated, as the previous study mentioned [

26,

27]. A total of 837 texture features were extracted to analyze the background grayscale textural and pattern information of ROI images. Specifically, this included 18 first-order histogram statistical features and 75 second-order gray matrix features, such as autocorrelation and cluster prominence in the gray-level co-occurrence matrix (GLCM), the gray-level non-uniformity and gray-level non-uniformity normalized in gray-level size zone matrix (GLSZM), the gray-level variance and high gray-level run emphasis in gray-level run length matrix (GLRLM), and so on. These features were calculated in nine forms of images, respectively [

26]. In addition to the original image, three image transformation operations were performed to enrich the textural information of the ROI. These operations included Laplacian of Gaussian filtering (LoG) (σ = 1, 2, 3), four components of wavelet decomposition, and local binary patterns (LBPs). Furthermore, to avoid ignoring the information from staining regions, nine morphological features were extracted by calculating the shape characteristics, such as circularity and the area of each segmented staining region [

10].

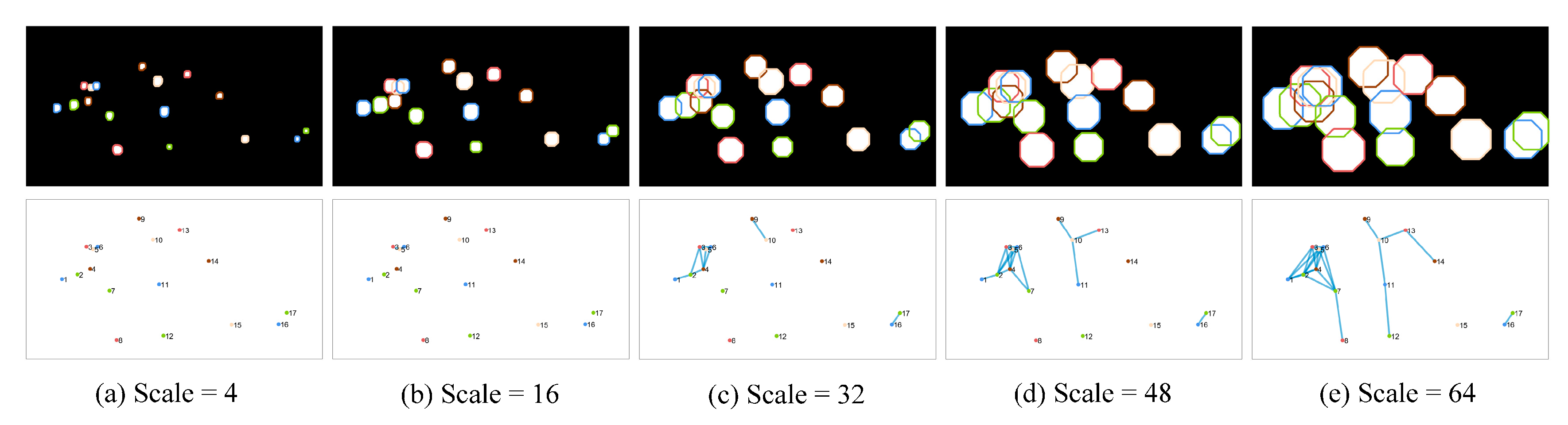

To capture the spatial connectivity and distribution information of staining regions, a set of multiscale graph-theoretic topological features were developed. These features aimed to address characteristics like regional clustered distribution resulting from confluent staining, which cannot be accurately described by textural and morphological features alone [

25]. The approach involved considering the centroid of each staining region as the vertex in a graph and estimating the connectivity between staining regions using dilation operations at multiple scales. When two staining regions overlapped after dilation, their nodes were connected by an edge, thus generating a topological graph that reflected the connectivity. As the scale of dilation increased from small to large, the number of edges and the connectivity of the graph exhibited a positive correlation. The construction process of the topological graph is shown in

Figure 3. Eight topological features, including vertex degree and eccentricity, were calculated at each scale. These calculations were performed using different disk dilation operators, ranging in size from 4 to 64. A total of 128 multiscale topological features were extracted in 16 scales. The texture features we extracted were the same as those in the literature, and the morphological and topological features extracted are shown in

Table 1 [

25].

2.4.2. Feature Selection

In order to handle the large number of features (974 normalized features) obtained after calculating all three kinds of features, a four-step feature selection method was employed for signature construction. This approach aimed to reduce the dimensionality of the features set and prevent overfitting of the model without compromising its performance. One-way analysis of variance (ANOVA) and a Pearson redundancy-based filter (PRBF) were applied to eliminate redundant terms in the feature vector [

28]. Subsequently, a backward feature selection method based on the linear regression model was used to remove non-significant features [

29]. The final selection for important features was determined using a decision-tree method [

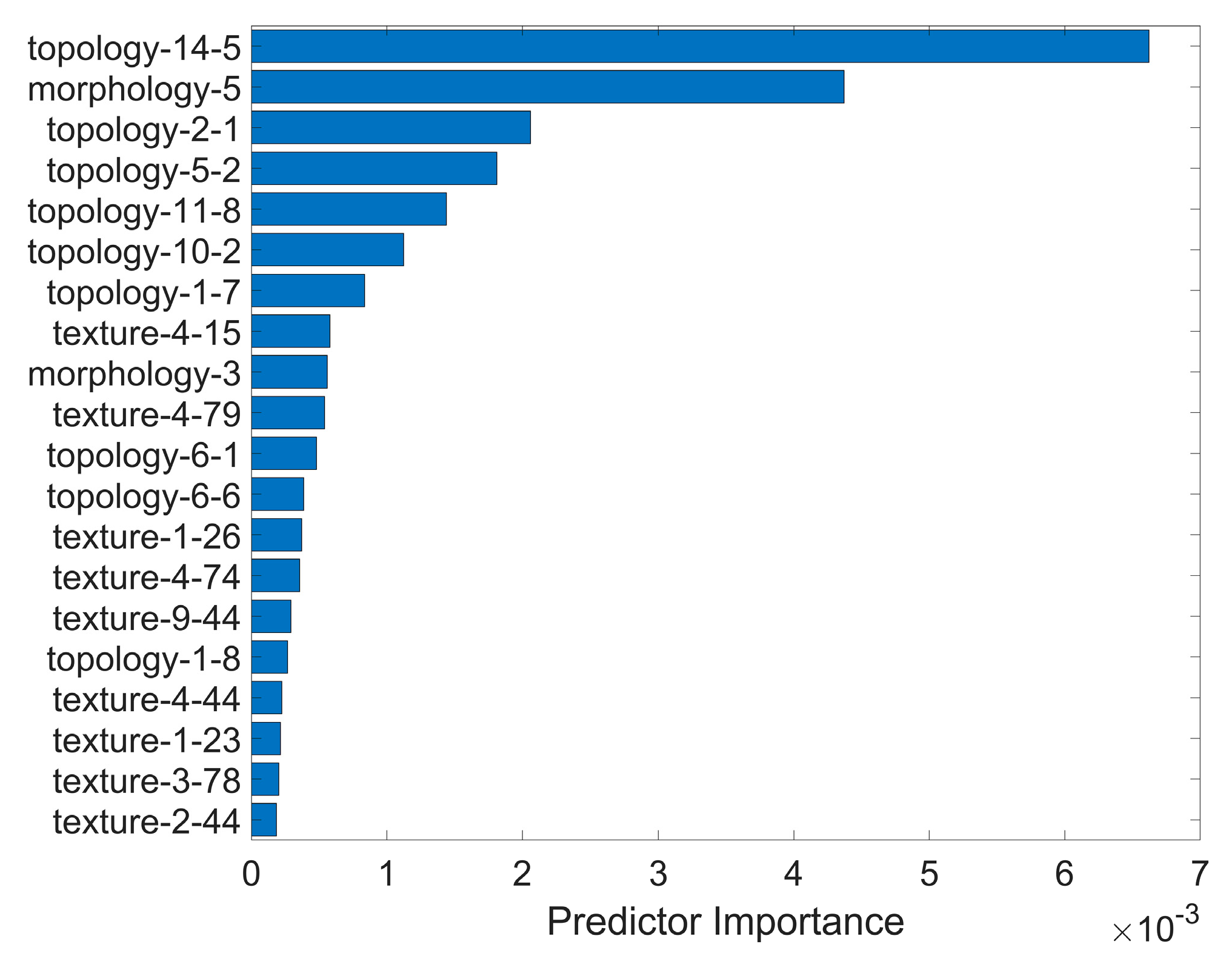

30], where the importance of each feature was measured by the gini impurity at each node. Higher importance indicated stronger separability of the feature across different classes of the model. Multiple alternative signatures were constructed, based on different combinations of the importance ranking and feature categories.

2.5. Classification and Evaluation

The developed machine learning-based model aimed to classify corneal staining images into different grades, based on selected features. To determine the optimal approach for our auto-grading method and evaluate the effect of different categories of features, we extensively explored the performance of different combinations of signatures and models. Specifically, four groups of features were applied in the same support vector machine (SVM) model in order to determine the one that was associated with best performance and accept it as the final signature. Additionally, different combinations of topological and non-topological features (textural and morphological) were investigated to evaluate the contributions of topological features in the classification process. This included the overall top-10 important features, the top-10 important non-topological features, and the top-5 important topological features and non-topological features, respectively. These resulting signatures were then accessed using five kinds of machine learning-based classification models, namely, SVM, Naive Bayes (NB), Decision Tree (DT), Boosting Tree (BT), K-nearest neighbors (KNN), and Random Forest (RF). In our experiments, the original dataset was divided into a training dataset (307 images) and a testing dataset (77 images), in an 8:2 ratio. A ten-fold cross-validation method was used to train and validate each model on the training dataset, while the classification performance of the model was evaluated with the testing dataset.

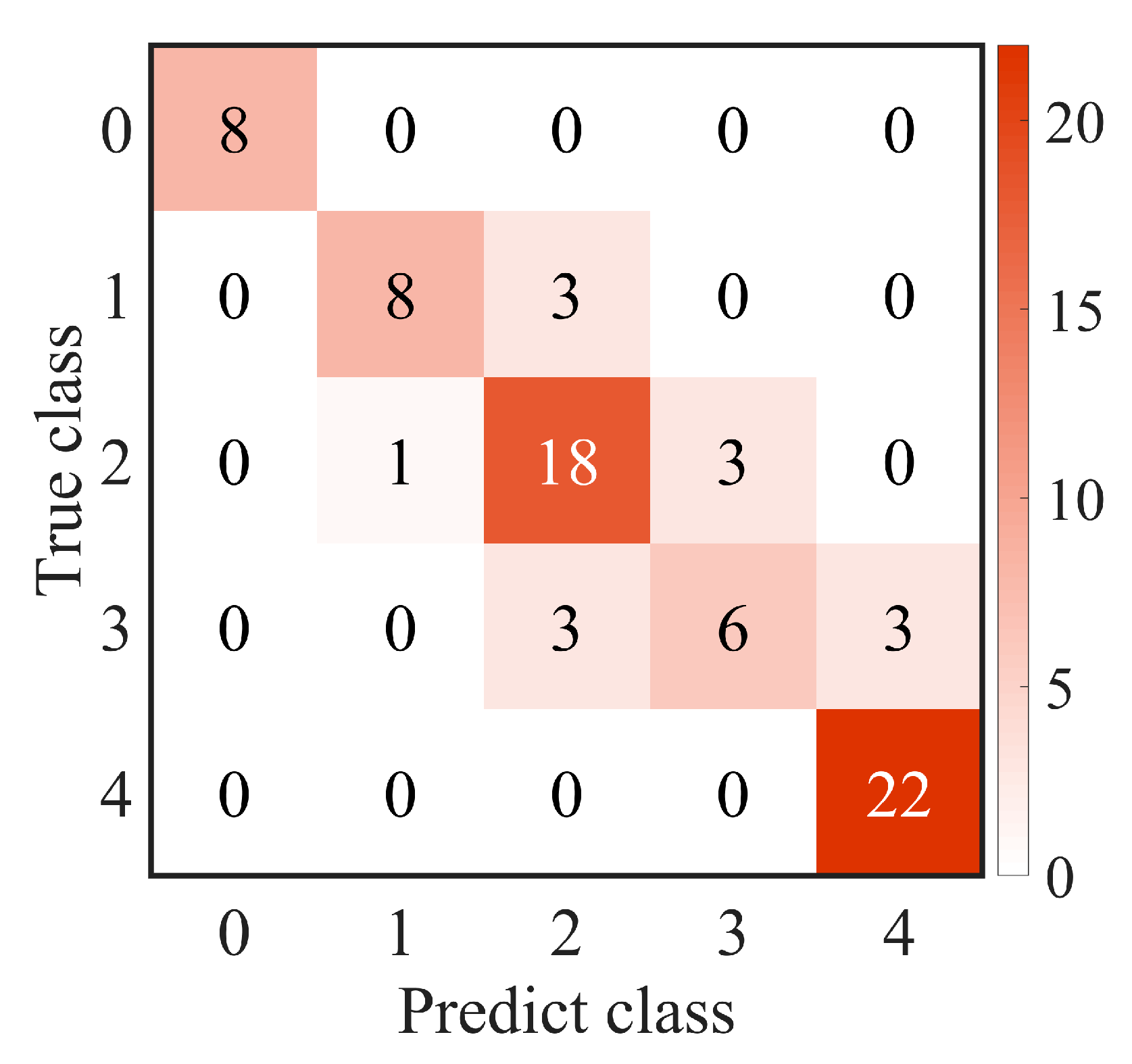

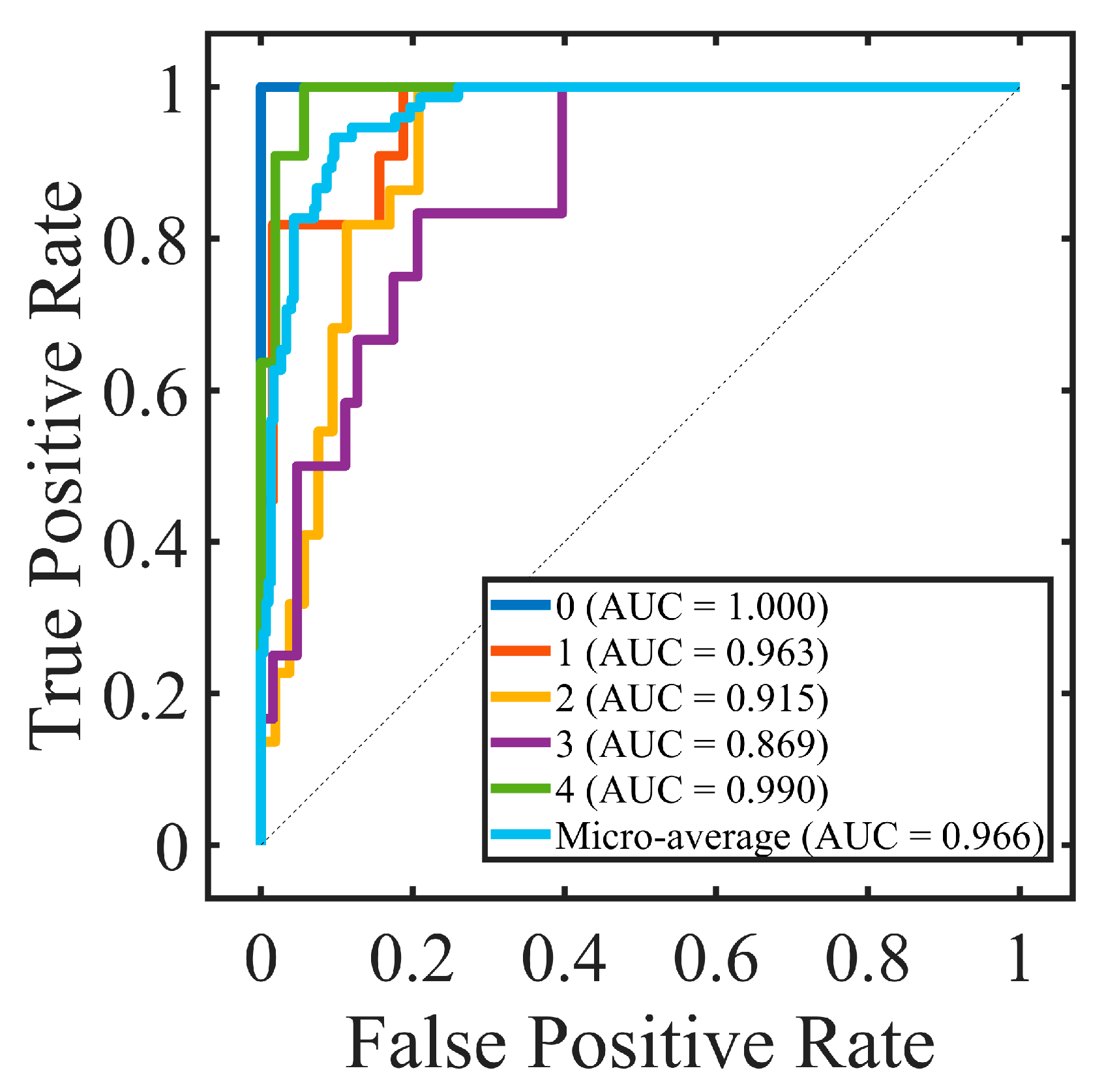

2.6. Statistical Analysis

An intraclass correlation coefficient (ICC) was calculated to assess the interobserver repeatability between two resident ophthalmologists; ICC ≥ 0.8 indicated good reliability. Accuracy (ACC), sensitivity (SEN), precision (PRE), specificity (SPE), F-measure of the confusion matrix, and the area under the receiver operating characteristic (ROC) curve (AUC) were calculated to evaluate the performance of the proposed method. The confusion matrix showed the predicted label and true label of each class, and the ACC was the ratio of the total number of correctly classified samples to the total number of samples. Due to the imbalance between different classes in the dataset, the F-measure was also used to evaluate the harmonic mean of precision and sensitivity. The ROC is a function of sensitivity and specificity, which was established by changing the threshold of the classifier. Generally, AUC was used to evaluate the accuracy of the classification model. The larger the AUC, the better the performance. Kendall’s tau-b correlation coefficient (rK) was used to evaluate the correlation between selected features and true labels. All experiments were two-sided tests; p < 0.05 was considered to be statistically significant and p < 0.01 was considered to be statistically extremely significant.

2.7. Implementation Details

The detection and classification experiments in this study were conducted on a personal computer with an Intel Core i9-9900K, 3.60 GHz, and 16 cores. The programming language used for the implemented algorithms was MATLAB software (version R2022a, RRID:SCR_001622). All input images were standardized to a size of 1944 × 2592, and the extracted ROI size was normalized to 596 × 1104. In the preprocessing phase, median filtering was applied with a neighborhood size of 21 × 21. For pupil detection using Daugman’s integrodifferential operator, the preselected range for the pupil center (x0, y0) was a rectangle with the corneal center as the center and 0.35 times the corneal radius as the length. The preselected range for the pupil radius (r) was from 0.15 times to 0.35 times the length of the corneal radius. The calculation angle range for the integrodifferential operator was from 0 degrees to 360 degrees. In the reconstruction of the top-hat operation, a circular structural element with a radius of 10 was used. For machine learning model training, the dataset was divided into a training set and a test set in an 8:2 ratio. Model parameters were optimized using the training set, and the model’s performance was evaluated using the test set. For the SVM model, the kernel function was a Gaussian kernel. The DT model had a maximum split criterion of 4 at each node. The boosting tree model underwent 30 training cycles. The KNN model had a neighborhood size of 10.

4. Discussion

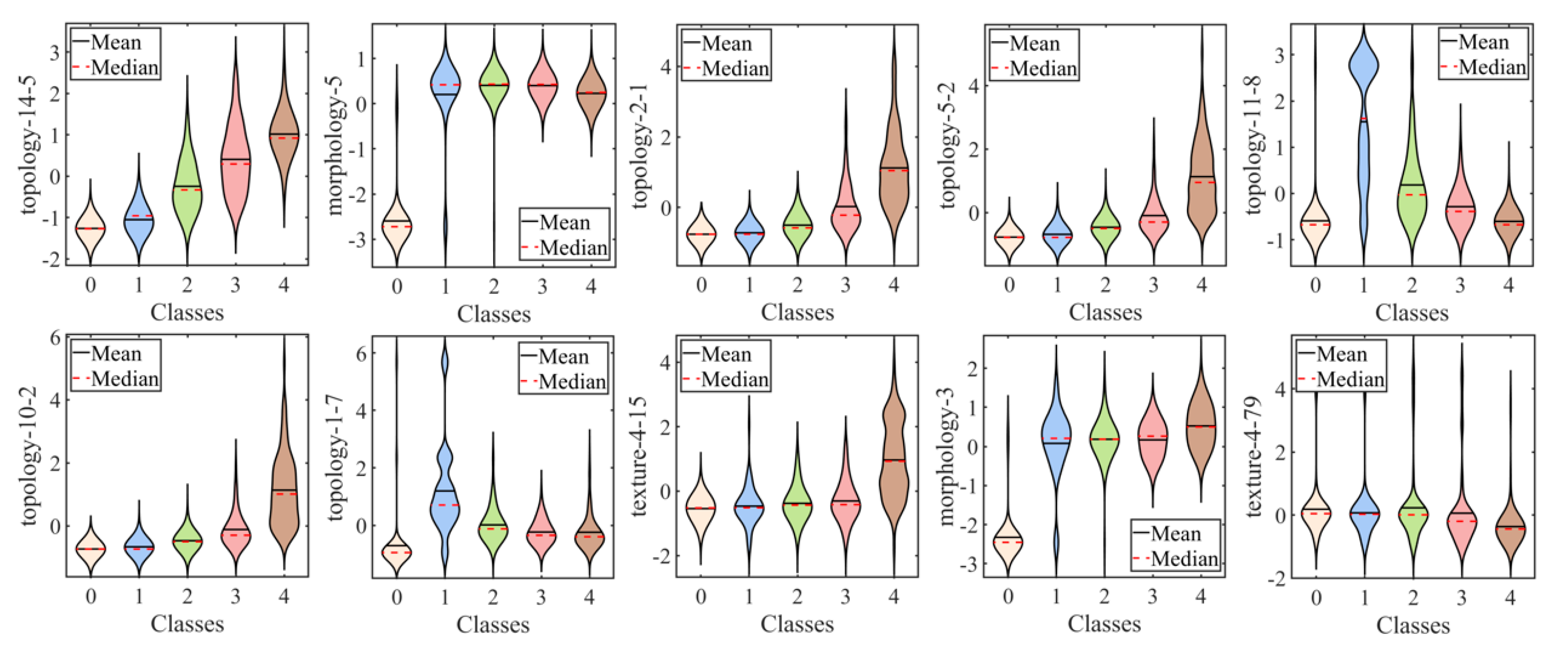

In this study, we developed an automatic machine learning-based method for corneal fluorescein staining evaluation. Our findings highlighted the significance of topological features as clinical characteristics in the evaluation of corneal fluorescein staining in dry-eye disease. This end-to-end model consisted of segmenting staining regions, extracting their radiomic characteristics, and differentiating them into the corresponding grades. The resulting radiomic signature was constructed from a combination of multiscale topological features, as well as textural and morphological features. The experimental results of our clinical dataset demonstrated the good performance of the proposed model with ACC 82.67% and AUC 95.36%. Moreover, we identified the 10 most important interpretable image-based features, with topological features playing a crucial role. These topological features captured the spatial connectivity and distribution information among staining regions, thereby holding promising in guiding clinical decision-making for the diagnosis and treatment of dry-eye diseases.

The key component in our proposed method was the usage of multiscale topological features that scrutinized the spatial connectivity and distribution among staining regions. These features significantly contributed to the discriminatory ability of the model. This is evident in

Figure 4, where the top-ranked radiomic features after selection include six topological attributes among the top seven characteristics. Additionally, the superior performance of topological features compared to non-topological features was consistently observed across different machine learning-based classification models, as shown in

Table 5. This indicated the effectiveness of topological features in distinguishing between different grades of corneal staining. Moreover,

Table 4 reveals a significant positive or negative correlation between topological characteristics and subjective gradings, which was not observed in other textural and morphological features. These findings underscore the unique contribution of topological features in capturing relevant spatial information related to corneal staining.

The observation of an increasing diameter of scale 56 (“topology-14-5”) with higher true labels suggested a linear distribution of fluorescein staining. In the context of clinical practice, we interpreted this linear distribution as being horizontal. The horizontal distribution is associated with incomplete blinking, which refers to a blink cycle where the upper lid turns to the upstroke before making contact with the lower lid [

31,

32]. Incomplete blinking is commonly observed in dry-eye patients [

33]. Incomplete blinks extend the period of horizontal strip area exposure to desiccating stress, leading to more corneal epithelium defects in that region [

34]. Therefore, the presence of a linear distribution of staining may serve as an indicator of incomplete blinking and the associated increased risk of corneal epithelial damage. Other topological features revealed the aggregation of corneal staining in the inferior zone, which has rarely been reported in previous studies. The number of subgraphs of scale 8 (“topology-2-1”) and the average vertex degree of scale 20 (“topology-5-2”) and 40 (“topology-10-2”) positively correlated with the true label, which indicated a tendency for staining to cluster. The aggregation phenomenon may be attributed to the main mechanism of corneal epithelium cell damage in DED, which is tear hyperosmolarity. It has been proven that hyperosmolarity can be localized [

3]. Additionally, tear film is more likely to break up in areas in damaged epithelium, leading to an increase in osmolarity in those regions. This creates a vicious cycle, resulting in more corneal epithelium defects in the same area. Furthermore, the more staining in the fixed area (the inferior zone of the cornea) inevitably affects the value of such topological features. These topological features highlight the crucial role they play in distinguishing the severity of corneal staining and provide valuable insights into the spatial connectivity and distribution of staining regions in a clinical setting.

We extensively explored different combinations of feature signatures and models to identify the approach that yielded the best performance for our computer-aided diagnosis method. The selection of the support vector machine (SVM) model as the best-performing classification model in our study was based on its high accuracy (ACC) of 82.67% and area under the receiver operating characteristic curve (AUC) of 96.59%. The high ACC and AUC values obtained with the SVM model indicated its effectiveness in accurately classifying corneal staining images into different grades.

There were some previous studies that reported similar results in staining-regions evaluation. While some studies focused on semi-automatic methods for locating the region of interest (ROI) in the cornea [

9,

10], our method was fully automated, providing accurate localization and segmentation of staining regions. Additionally, previous studies mainly relied on image processing or deep-learning techniques for corneal region segmentation, without specifically analyzing the spatial connectivity and distribution of staining regions [

11,

12,

18]. In contrast to approaches that only consider parameters such as the number and area of staining regions, we introduced topological features to capture the spatial characteristics and the confluence of staining regions. This provided a more comprehensive analysis of corneal staining patterns. By incorporating these topological features along with textural and morphological traits, our machine learning-based classification model demonstrated improved performance in grading corneal staining.

The effectiveness of the topological features identified in our study holds promise for clinicians in diagnosing dry-eye disease. The integration of topological features with other radiomic characteristics enhances the classification performance and provides valuable insights into the spatial characteristics of corneal staining. This information can, potentially, aid clinicians in diagnosing and managing dry-eye disease more effectively.

There were several limitations in our study that should be acknowledged. First, the segmentation accuracy of large staining patches could be improved. Due to the low contrast and ambiguous edges of the patches, the segmentation accuracy was compromised. Future studies could explore more efficient methods, such as deep learning, for accurately locating the ROI and segmenting staining regions. Second, the performance of the proposed method should be further validated on a larger dataset. Although our study included a substantial number of images, a larger dataset would provide more robust evidence of the effectiveness of the proposed method. Lastly, the classification performance for score 3 was relatively insufficient. It is possible that score 3 included two distinct conditions: true score 3 and score 2 with an additional score for confluent staining. This may have resulted in a misclassification as score 4 or score 2. Future research could focus on designing a more refined classification model to improve the differentiation of score 3 from other grades. Addressing these limitations in future studies will help to further enhance the accuracy and applicability of the proposed method in corneal fluorescein staining evaluation.