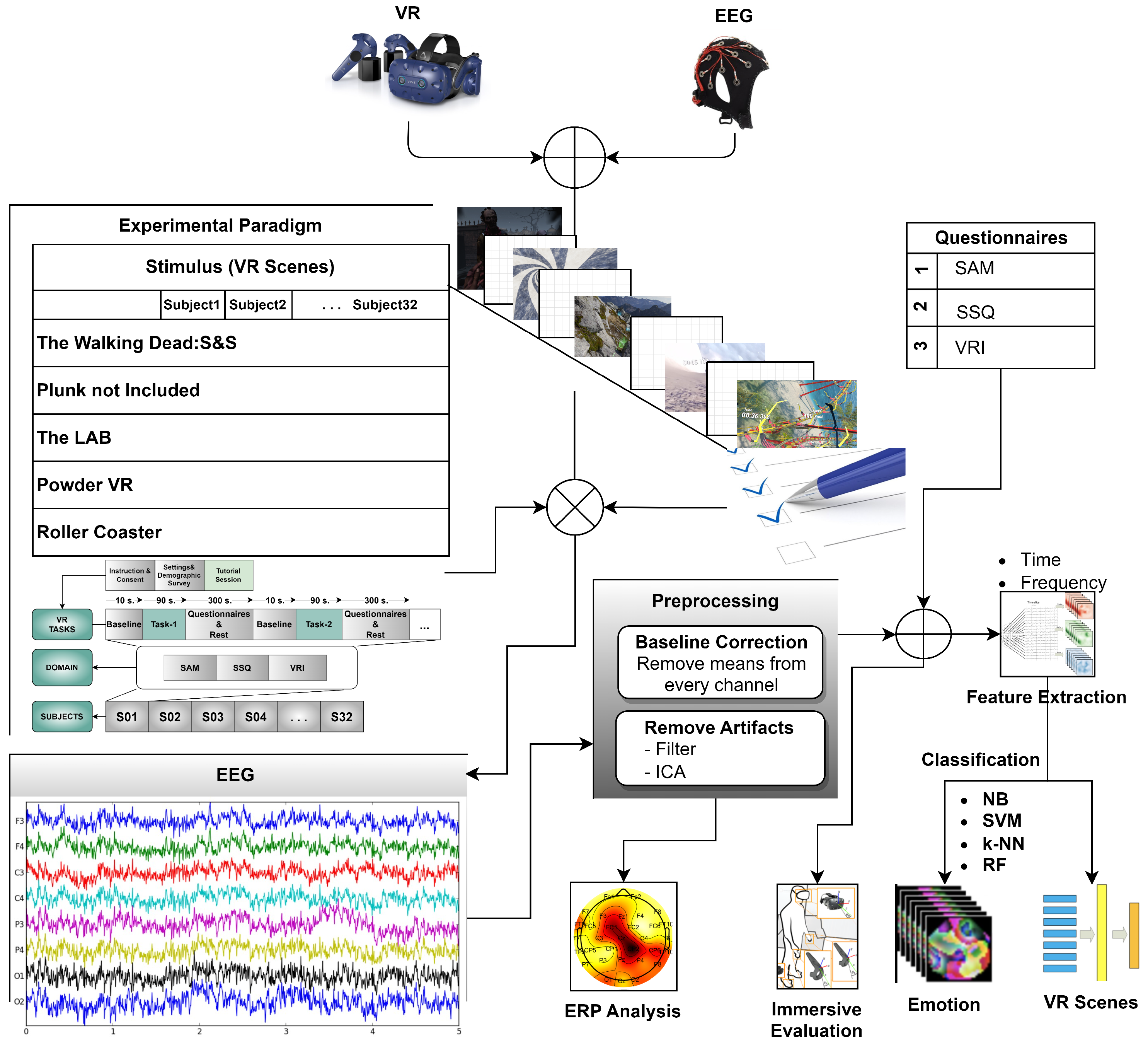

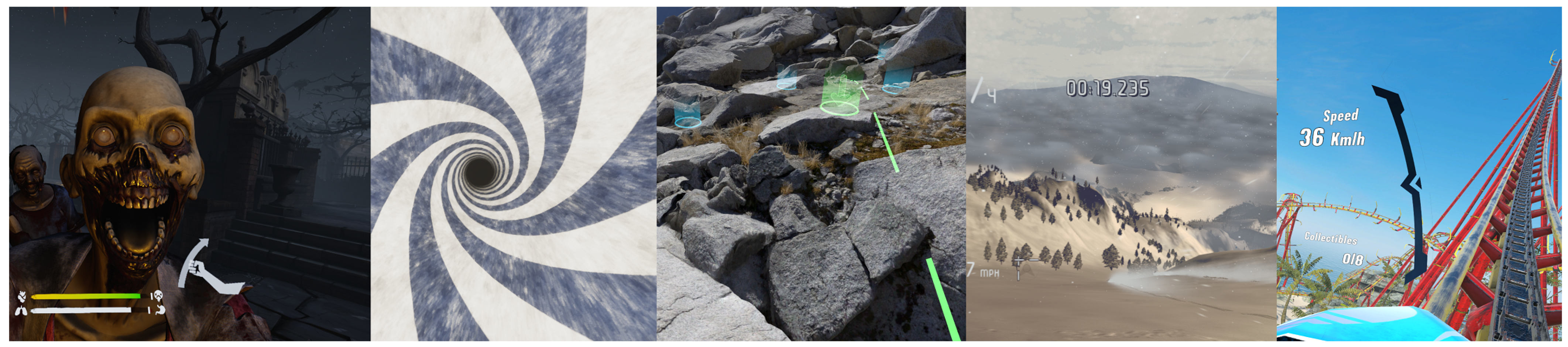

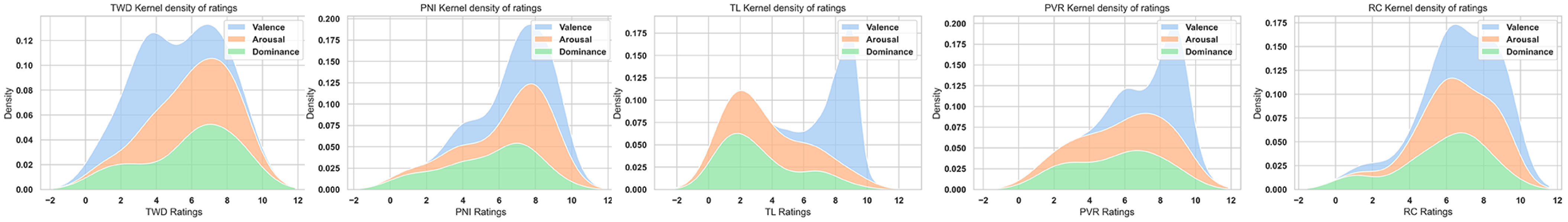

An experiment was designed to compare scene immersion factors, EEG classification performance, CS characteristics, and subjective ratings in a virtual reality environment under varied virtual task settings. EEG was used to explore the CS induction effect of the VR scenes at the neurophysiological level. In addition, subjective questionnaire ratings were collected to examine immersion and CS characteristics. These measurements were taken while the subjects performed the task in the virtual environment.

Figure 1 shows an overview of the VR experimental setting and flow of the experiment. This section describes the materials and methods used in the study.

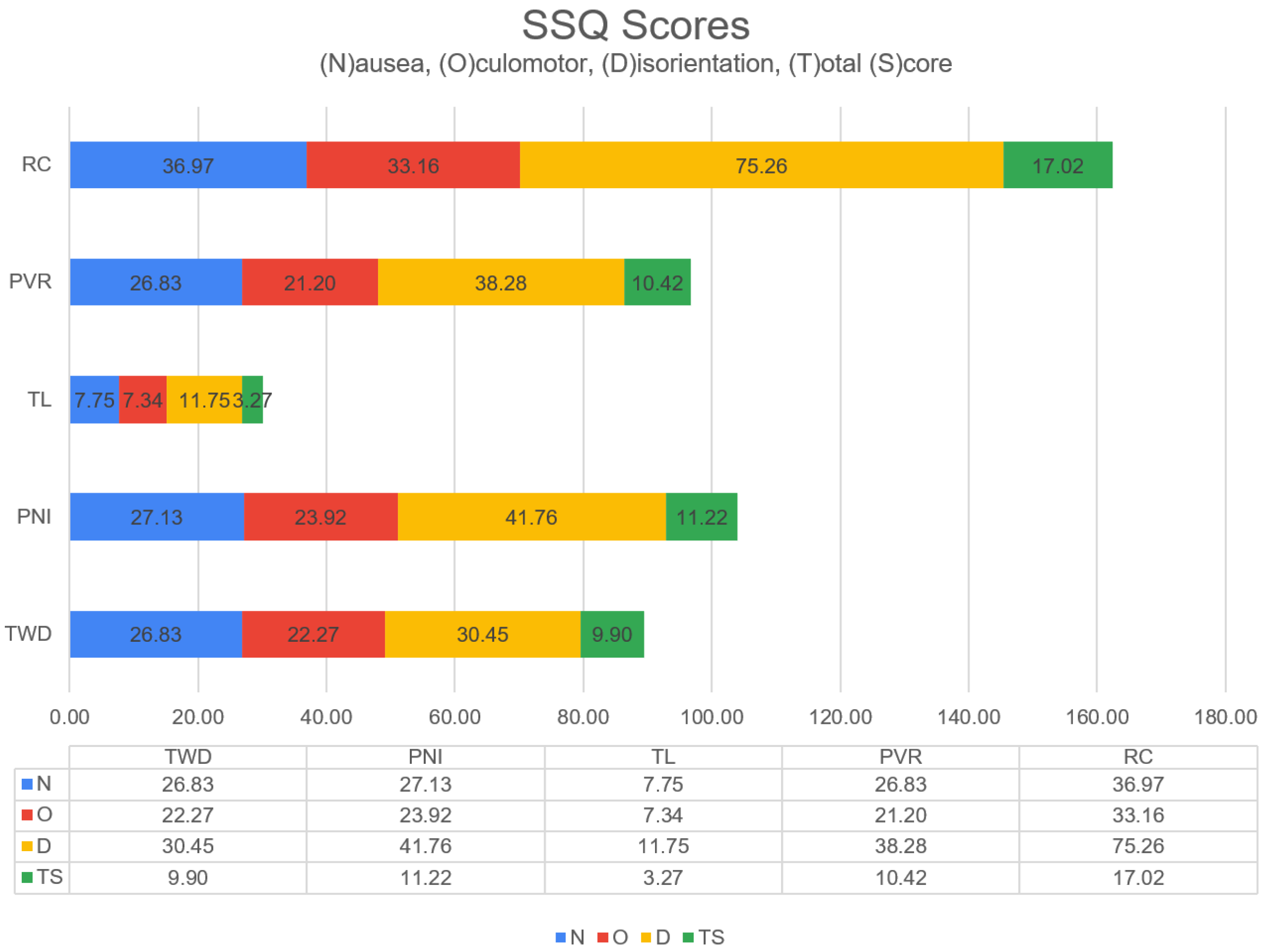

EEG is often considered an inclusive measurement method in neuroscience and psychology research. EEG records electrical activity in the brain by measuring the electrical potentials produced by neurons. It provides objective data about brain activity, such as the patterns of neural oscillations, event-related potentials (ERPs), and other neurophysiological markers. Therefore, participants cannot simulate these data in experiments. Exclusive measurements with questionnaires like the Self-Assessment Manikin (SAM) [50] and SSQ are based on self-report data in which individuals rate their experiences and symptoms of CS and discomfort. These questionnaires do not provide objective physiological measurements but instead capture the individual’s perception of symptoms. Objective measurements are combined with annotations of subjective questionnaire data collected from participants.

Extensive research has been conducted on the physiological changes (such as EEG) and subjective assessments (Simulator Sickness Questionnaire, SSQ) associated with CS [51]. Questionnaires, such as those used in our study, are an explicit evaluation method [52,53]. The SSQ, comprising 16 items on a 4-point Likert scale, is a commonly employed instrument in CS research [54]. Participants rate each item as 0 (none), 1 (slight), 2 (moderate), or 3 (severe). The SSQ is based on the following three sub-components:

3.3. Participants and Ethics

Before the experiment, participants were advised about safety precautions and the study procedure. Participants were informed (1) of the study purpose, (2) that they had the right to stop the experiment at any time without providing any reason, and (3) that they could stop the experiment if they felt sick or had any discomfort. All participants signed an informed consent form before undergoing the VR training. The Scientific Research and Publication Ethics Committee of Erzurum Technical University (11-2-20052021), Erzurum, TUR, reviewed and approved this study.

Within the project’s scope, 35 adults volunteered to participate in this study. However, one participant exercised their right to withdraw from the experiment, one participant’s data could not be used because of a fault in the hardware data collection system, and a third participant was unable to complete the experiment because of extreme dizziness and nausea. Therefore, the experiment was conducted with the remaining 32 (right-handed) volunteer participants (21 males, 11 females, age range 18–30). They had no visual or hearing impairments and had normal or corrected vision. Before the experiment, the participants completed a demographic survey that included their VR experience. Hygiene measures were taken with a Disposable VR Hygienic Mask compatible with HTC Vive. The necessary preparations were made for the contact quality of the EEG electrodes (Figure 5). On average, participants had little VR experience (on a 7-point Likert scale; 1: none, 7: a lot). While twenty-three participants (71.87%) had never experienced VR before, nine participants (28.13%) rated their VR experience close to the “none” end of the scale.

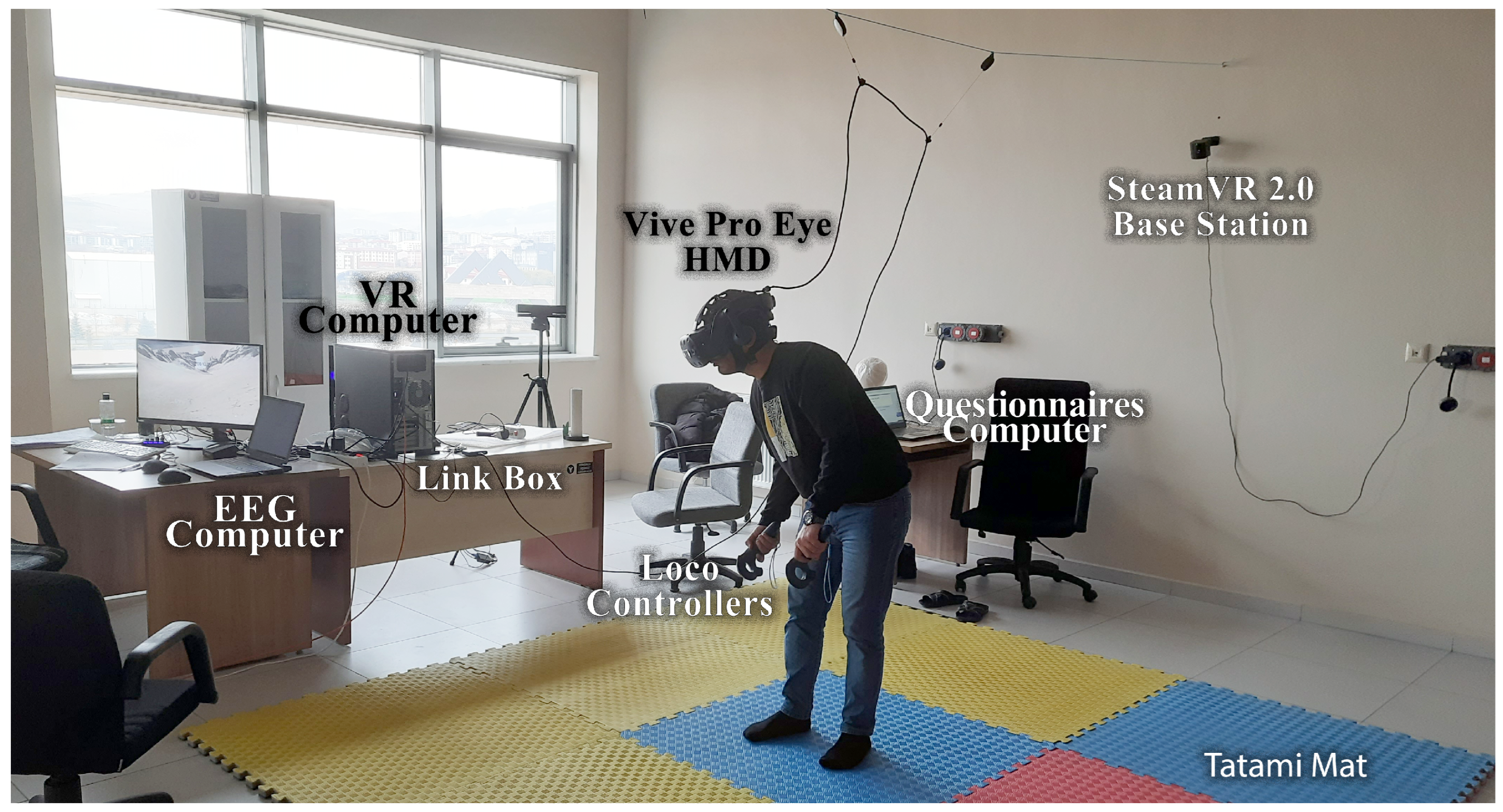

3.4. Experiment Apparatus

Our experimental equipment consisted of the following parts: three computers (VR computer, EEG computer, and questionnaire computer) and an HTC (High-Tech Computer) Vive Pro Eye (HTC Corporation, Taoyuan City, Taiwan) HMD for locomotion VR scenes. The VR device was connected to a desktop computer (VR computer) with 64 GB RAM and an Intel Core i7-9700K processor running at 3.60 GHz. The Emotiv Epoc Flex device was wirelessly connected to a laptop (EEG computer) with 32 GB RAM and an Intel Core i7-11800H processor running at 2.30 GHz. The questionnaires were completed on a laptop computer (questionnaire computer) with an i5 processor and 8 GB RAM. The desktop computer had an Nvidia GeForce RTX 2080 Super GPU (8 GB), whereas the laptop had an Nvidia GeForce RTX 3080 GPU (16 GB) (Figure 6). The Emotiv Cortex API software (version 2.0) was configured (in C#) to ensure computer synchronization. The Cortex API is built on JSON and WebSockets, making it easy to access from various programming languages and platforms.

EEG signals were collected with the Emotiv EPOC Flex equipment, which includes a cap with 32 channels and two reference electrodes. The EMOTIV EPOC Flex has been shown to capture data similar to research-grade EEG systems [55]. Electrode placement follows the 10–20 system. Additionally, to prevent the HMD from exerting pressure on the anterior central electrodes, three elastic bands were used to fix the HMD, while the upper elastic band of the HMD was left loose. The bands were placed between the electrodes. The EEG electrode configuration is shown in Figure 7. All electrodes were referenced to AFz (Driven-Right-Leg sensor, DRL) and FCz (Common-Mode Sensor, CMS) and grounded to the forehead. The electrodes on the cap were filled with saline solution to ensure the quality of the EEG signals.

3.5. Feature Extraction

Feature extraction is a preliminary step of classification algorithms. These features can be obtained from various domains [56]. Extracting the most dominant features from an EEG signal is essential for classification. By their nature, EEG signals have nonlinear and spatiotemporal structures. Therefore, extracting features in the time and frequency domains from the signal [57] is important for determining the most distinctive features for classification.

The participants’ raw EEG data were preprocessed to improve the signal-to-noise ratio. The preprocessing steps were re-referencing, resampling, filtering, artifact rejection, and epoching. This study used the AFz and FCz electrodes for re-referencing (Figure 7). No data are read from the references. EMOTIV products use a differential mount relative to CMS (after common-mode noise cancellation), so all signals reflect the potential difference between an EEG sensor and the CMS sensor. The differential mount makes it possible to obtain relative signals between channels by subtraction, because the common CMS voltage cancels. For example, (F3 – CMS) – (F4 – CMS) = F3 – CMS – F4 + CMS = F3 – F4 is the voltage observed at F3 when F4 is used as the reference level.

Signals from an EEG amplifier are often subsampled for further analysis. The Emotiv EPOC Flex device used in this study samples at 1024 Hz internally, and this rate was automatically downsampled to 128 Hz. This value covers the typical frequency band (<50 Hz) for EEG analysis and satisfies the Nyquist sampling theorem, since the corresponding Nyquist frequency is 64 Hz. The resampled EEG data were filtered to remove noise: a low-pass filter with a cut-off frequency of 45 Hz was applied. Baseline correction and selection of independent components were performed during preprocessing of the EEG signals. The baseline averages obtained before the experiment were subtracted from all 32 channels. Electrooculographic (EOG) activity is one of the most important artifacts in brain signals. Independent component analysis (ICA) was applied to the EEG data to eliminate this and similar artifacts (e.g., blinking and muscle movements) that degrade EEG quality. ICA was applied to all channels using the MATLAB toolbox EEGLAB. After artifact rejection, the continuous EEG data were epoched for feature extraction. This study set the time window to 90 s (Figure 2a).
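The re-referencing identity and the epoching step described above can be illustrated with a minimal NumPy sketch. It is not the authors' pipeline (which used EEGLAB); the function names `rereference` and `epoch` are hypothetical, and non-overlapping 90 s windows are an assumption, since the text does not state whether epochs overlap.

```python
import numpy as np

FS = 128        # Hz, sampling rate after downsampling (from the text)
EPOCH_S = 90    # s, epoch length (from the text)


def rereference(eeg, ref_idx):
    """Re-reference a (channels, samples) array to one channel.

    Every channel is recorded relative to CMS, so subtracting the
    reference channel cancels the common CMS term:
    (F3 - CMS) - (F4 - CMS) = F3 - F4.
    """
    return eeg - eeg[ref_idx]


def epoch(eeg, fs=FS, epoch_s=EPOCH_S):
    """Cut (channels, samples) into (epochs, channels, samples).

    Assumption: non-overlapping windows; trailing samples that do not
    fill a whole window are dropped.
    """
    win = fs * epoch_s
    n_epochs = eeg.shape[1] // win
    trimmed = eeg[:, : n_epochs * win]
    return trimmed.reshape(eeg.shape[0], n_epochs, win).transpose(1, 0, 2)
```

With a 128 Hz sampling rate, each 90 s epoch holds 128 × 90 = 11,520 samples per channel.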

The signals are analyzed in the time domain to illustrate how the values acquired from EEG signals change over time. Changes in EEG signals sometimes exhibit a wide variance [58]. Statistical methods have also been used to reduce the effects of temporal variations in the signals. Therefore, statistical features such as the mean, variance, standard deviation, and first/second differences of the EEG data were utilized (Table 2). The first difference shows both the intensity of the signal change in the time domain and the self-similarities of the EEG signal. Another method used to analyze EEG signals in the time domain is the Hjorth parameters [59]. The Hjorth parameters (activity, mobility, and complexity) were also chosen as a feature extraction method because they have the advantage of a low computational cost (Table 2).
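As a rough illustration of these time-domain features, the sketch below computes seven statistical measures and the three Hjorth parameters per channel. The exact formulas are given in Table 2 and may differ. In particular, taking the first and second differences as mean absolute differences and normalizing them by the standard deviation is an assumption, and the function names are hypothetical.

```python
import numpy as np


def hjorth(x):
    """Hjorth activity, mobility, and complexity of a 1-D signal."""
    dx = np.diff(x)
    ddx = np.diff(dx)
    activity = np.var(x)
    mobility = np.sqrt(np.var(dx) / activity)
    # Complexity is the mobility of the derivative over the mobility of x.
    complexity = np.sqrt(np.var(ddx) / np.var(dx)) / mobility
    return activity, mobility, complexity


def time_features(x):
    """Ten time-domain features for one channel (7 statistical + 3 Hjorth)."""
    sd = np.std(x)
    d1 = np.mean(np.abs(np.diff(x)))       # first difference (assumed form)
    d2 = np.mean(np.abs(x[2:] - x[:-2]))   # second difference (assumed form)
    stats = [np.mean(x), np.var(x), sd, d1, d1 / sd, d2, d2 / sd]
    return np.array(stats + list(hjorth(x)))


def epoch_features(epoch):
    """Concatenate per-channel features of a (channels, samples) epoch."""
    return np.concatenate([time_features(ch) for ch in epoch])
```

For a 32-channel epoch this yields 32 × 10 = 320 values.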

In the frequency domain, the relationship between the frequency and amplitude of a signal is established, and the frequency characteristics of the signal are evaluated analytically. Frequency domain information is commonly used for EEG signals, which have temporal and spatial dimensions. Power Spectral Density (PSD) and Spectral Entropy (SE), calculated using the Fast Fourier Transform (FFT), were employed to extract frequency domain features. PSD was calculated using Welch’s method. The signal was divided into eight segments with a 50% overlap, and each segment was windowed with a Hamming window (step size of 32). So, the length of each epoch was 12 s. The PSDs of the segments were then averaged. On our computer system, training the SVM with a Radial Basis Function (RBF) kernel took approximately 5 min. Training the Random Forest (RF) took approximately 20 min, with the number of trees set to 100 (for time domain features).
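A minimal NumPy sketch of Welch’s method with eight Hamming-windowed segments at 50% overlap follows. It also computes a spectral entropy, taken here as the Shannon entropy of the normalized PSD. This is an illustration under stated assumptions, not the authors' code: the segment length is derived from the signal length, per-segment mean removal is assumed, the "step size of 32" mentioned above is not modeled, and the function names are hypothetical.

```python
import numpy as np


def welch_psd(x, fs, n_segments=8):
    """One-sided Welch PSD using n_segments Hamming windows, 50% overlap."""
    x = np.asarray(x, dtype=float)
    # With 50% overlap, n_segments windows span (n_segments + 1) half-windows.
    seg_len = 2 * len(x) // (n_segments + 1)
    step = seg_len // 2
    window = np.hamming(seg_len)
    scale = fs * np.sum(window ** 2)
    psd = np.zeros(seg_len // 2 + 1)
    for k in range(n_segments):
        seg = x[k * step : k * step + seg_len]
        seg = (seg - seg.mean()) * window      # remove the mean, then window
        psd += np.abs(np.fft.rfft(seg)) ** 2 / scale
    psd /= n_segments                          # average the periodograms
    psd[1:] *= 2                               # fold negative frequencies
    if seg_len % 2 == 0:
        psd[-1] /= 2                           # the Nyquist bin is not doubled
    return np.fft.rfftfreq(seg_len, d=1 / fs), psd


def spectral_entropy(psd):
    """Shannon entropy (bits) of the PSD normalized to sum to one."""
    p = psd / psd.sum()
    p = p[p > 0]
    return float(-np.sum(p * np.log2(p)))
```

A narrow-band signal concentrates its power in a few bins and gives a low entropy, whereas broadband noise spreads power across bins and gives a high entropy.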

FFT can be used for various types of signal processing, such as audio, video, and EEG signals. FFT can reveal different types of frequency activity in different situations (Equation (1)). Given a finite sequence $x(n)$ of length $L$, Equation (1) transforms the signal into a frequency sequence of length $N$ that depends on the variable $k$ (with $i = \sqrt{-1}$).

$$X(k) = \sum_{n=0}^{L-1} x(n)\, e^{-i 2 \pi k n / N}, \qquad k = 0, 1, \ldots, N-1 \tag{1}$$

where $x(n)$ represents the time-domain signal, and $X(k)$ is its representation in the frequency domain.

Spectral Entropy (SE) provides information regarding the nonlinearity of an EEG signal. Therefore, it is used as a feature in signal processing applications [60]. The SE is the Shannon entropy of the PSD of the signal, as given in Equation (2).

$$SE = -\sum_{f=0}^{f_s/2} P(f) \log_2 P(f) \tag{2}$$

where $P$ is the normalized PSD and $f_s$ is the sampling frequency. EEG signals were transformed into frequency components using FFT to investigate brain activity. Subsequently, Power Spectral Density (PSD) was calculated. Using the normalized PSD, the distribution function was obtained using Equation (2).

Features were extracted from all 32 electrodes, yielding 320 features in the time domain and 1416 features in the frequency domain. These values represent the total computation results obtained from the EEG data acquired from 32 electrodes, as detailed in Table 2 and Equations (1) and (2). For example, in the time domain, the calculation is (32 channels × 3 Hjorth measurements) + (32 channels × 7 statistical measurements) = 320. Since normalized first/second difference values were also obtained from the statistical measurements, a total of 7 statistical measurements were acquired. In the frequency domain, there were 360 FFT measurements + 1056 Welch measurements, resulting in a total of 1416 measurements.

After feature extraction, the multiple-instance learning (MIL) method is applied to the feature vector [56]. Multiple-instance learning involves a set of bags, each marked as positive or negative. Each bag consists of numerous instances, each representing a point in the feature space. A bag is designated as positive if it contains at least one positive instance and labeled as negative if all instances within it are negative.
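The bag-labeling rule of multiple-instance learning can be written in a few lines. This sketch only illustrates the rule itself; the name `bag_label` and the 0/1 label encoding are assumptions made for illustration, not part of the study's implementation.

```python
from typing import Sequence


def bag_label(instance_labels: Sequence[int]) -> int:
    """Return 1 if any instance in the bag is positive (1), else 0."""
    return int(any(label == 1 for label in instance_labels))


# Hypothetical bags: each inner list holds the labels of one bag's instances.
bags = [[0, 0, 1], [0, 0, 0], [1, 1, 0]]
labels = [bag_label(bag) for bag in bags]
```

Here the first and third bags are positive because each contains at least one positive instance, while the second bag is negative.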

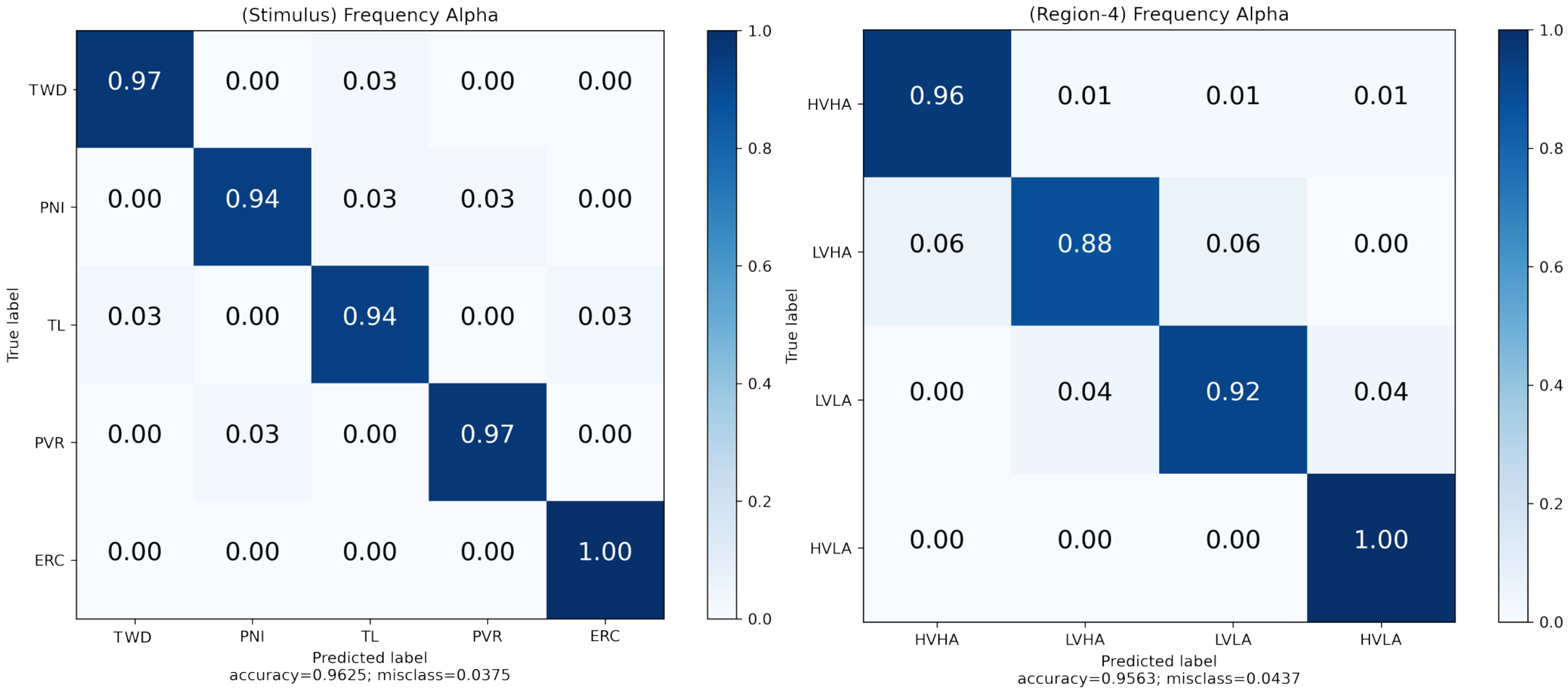

Measurements were obtained from participants’ survey-based assessments and ratings of their emotional states. Supervised machine learning methods were then applied to analyze the data. Naive Bayes (NB), Support Vector Machine (SVM), k-Nearest Neighbors (k-NN), and Random Forest (RF) classifiers were used to classify participants’ emotional states during the virtual reality scenes. SVM is a linear or non-linear classification algorithm that finds the best-separating boundary between classes. k-NN is a simple classification algorithm that assigns data points to classes based on the majority class among their nearest neighbors. RF is an ensemble learning method that combines multiple decision trees to improve classification accuracy and robustness. NB is a probabilistic classification algorithm based on Bayes’ theorem, often used for text and categorical data classification. In addition, these classifiers were used to classify virtual scenes with brain signals. These classifiers are commonly used in EEG-based studies [28]. The parameters of all the classifiers were the same for all the operations (Table 3).

After the classification algorithms were configured, a 10-fold cross-validation (CV) approach (k = 10) was used to ensure performance validity. The k-fold CV approach divides the dataset into k folds. In each iteration, one fold is held out as the test set to calculate performance measures, such as accuracy, and the model is trained on the remaining k−1 folds. This is repeated until each fold has served as the test set once, and the results are averaged.
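The k-fold procedure can be sketched with NumPy alone. This is an illustration rather than the study's implementation: a simple k-NN classifier stands in for the four classifiers, the choice of three neighbors and the shuffling seed are arbitrary assumptions (the actual parameters are in Table 3), and all function names are hypothetical.

```python
import numpy as np


def kfold_indices(n_samples, k=10, seed=0):
    """Yield (train, test) index arrays; each fold is the test set once."""
    idx = np.random.default_rng(seed).permutation(n_samples)
    folds = np.array_split(idx, k)
    for i in range(k):
        train = np.concatenate([folds[j] for j in range(k) if j != i])
        yield train, folds[i]


def knn_predict(X_train, y_train, X_test, n_neighbors=3):
    """Majority vote among the nearest training points (Euclidean distance).

    Labels must be non-negative integers.
    """
    dist = np.linalg.norm(X_test[:, None, :] - X_train[None, :, :], axis=2)
    nearest = np.argsort(dist, axis=1)[:, :n_neighbors]
    return np.array([np.bincount(votes).argmax() for votes in y_train[nearest]])


def cross_val_accuracy(X, y, k=10):
    """Mean test accuracy over the k folds."""
    scores = []
    for train, test in kfold_indices(len(y), k):
        pred = knn_predict(X[train], y[train], X[test])
        scores.append(np.mean(pred == y[test]))
    return float(np.mean(scores))
```

Because every sample appears in exactly one test fold, the averaged accuracy uses each sample for testing once and for training k−1 times.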