Abstract

Lung cancer is a prevalent malignancy that impacts individuals of all genders and is often diagnosed late due to delayed symptoms. To catch it early, researchers are developing algorithms to study lung cancer images. The primary objective of this work is to propose a novel approach for the detection of lung cancer using histopathological images. In this work, the histopathological images underwent preprocessing, followed by segmentation using a modified approach of KFCM-based segmentation and the segmented image intensity values were dimensionally reduced using Particle Swarm Optimization (PSO) and Grey Wolf Optimization (GWO). Algorithms such as KL Divergence and Invasive Weed Optimization (IWO) are used for feature selection. Seven different classifiers such as SVM, KNN, Random Forest, Decision Tree, Softmax Discriminant, Multilayer Perceptron, and BLDC were used to analyze and classify the images as benign or malignant. Results were compared using standard metrics, and kappa analysis assessed classifier agreement. The Decision Tree Classifier with GWO feature extraction achieved good accuracy of 85.01% without feature selection and hyperparameter tuning approaches. Furthermore, we present a methodology to enhance the accuracy of the classifiers by employing hyperparameter tuning algorithms based on Adam and RAdam. By combining features from GWO and IWO, and using the RAdam algorithm, the Decision Tree classifier achieves the commendable accuracy of 91.57%.

1. Introduction

Cancer is increasingly common, and doctors use blood tests, biopsies, and image analysis for its diagnosis. It originates from damaged cells and varies among individuals. Understanding its source helps us comprehend its condition [1]. Lung cancer, often tied to smoking or harmful exposures, is a prevalent cancer type causing rising death tolls globally [2]. It affects both genders and has a low survival rate. Early detection is crucial for better outcomes. The five-year survival rate is approximately 34% for surgically removable early-stage cancer, compared to less than 10% for inoperable cases. Lung cancer treatment depends on histological characteristics, categorized as small cell (SCLC) and non-small cell (NSCLC) types, of which 80% to 85% are NSCLC and the rest are SCLC [3]. NSCLC has subtypes such as benign, adenocarcinoma (ACA), and squamous cell carcinoma (SCC). SCC displays characteristics such as the presence of clusters of polyhedral cells, keratinization, and the formulation of keratin pearls. Once the tissue type is identified, suitable treatments can be selected: either surgery, chemotherapy, radiation, targeted therapy, or immunotherapy.

Early detection and treatment of cancer are vital for better patient outcomes. Traditional diagnostic methods involve clinical assessments, lab tests, imaging, and a procedure called biopsy [4], which is considered the gold standard. During biopsy, tissue samples are taken and examined under a microscope using techniques such as hematoxylin and eosin staining. This histopathological analysis helps identify abnormal tissue growth and cell characteristics. Accurate identification and classification of individual cell nuclei are of utmost importance when evaluating tissue samples for cancer diagnosis. Pathologists inspect these samples at different magnifications, looking for signs of malignancy such as irregular cell shape, dark nuclei, and increased mitotic figures [5], and count to generate reliable results [6]. Manual histopathological examination is a time-consuming process due to the frequent presence of numerous nuclei from diverse categories clustered together in histopathological images, which can result in disagreements among pathologists [7], prompting the development of automated systems. Researchers have utilized image processing, pattern recognition, and machine learning/deep learning techniques to create computer-aided diagnostic (CAD) systems. These systems aim to detect and classify carcinomas quickly and reliably [8]. Machine learning and deep learning algorithms improve CAD performance as they learn from more data. These approaches use either microscopic images or whole slide images (WSIs) and extract features to aid in diagnosis. The challenge is to create a novel, versatile, and fully automated CAD system that can handle both microscopic images and WSIs, regardless of any imaging artifacts. Automated analysis of microscopic images is vital for evaluating digitized specimens, reducing inter-observer variations, and improving objectivity and reproducibility, as emphasized by Foran et al. [9]. This advancement can enable comparative studies of diseases and potentially aid in diagnostic decision-making.

Different imaging techniques, such as ultrasounds, MRIs, CT scans, X-rays, and needle biopsies, are used to diagnose lung cancer. X-ray imaging, considered a fundamental technique for lung examination, possesses restricted resolution and the potential to overlook specific areas of interest [10]. CT scans are commonly used to detect early stages of lung cancer and locate tumors before surgery, but they expose patients to harmful radiation with repeated scans. MRI demonstrates notable sensitivity and specificity, valuable for identifying bone metastases, although it is not advisable for diagnosing lung cancer. Ultrasound, a non-invasive method, proves adept at identifying postoperative lung issues and surpasses X-rays in effectiveness [11]. While image examination aids in diagnosis, staging, treatment evaluation, and prognosis assessment, histopathological examination remains the most reliable method to determine tumor characteristics and clinical stages. Histopathological images offer an intricate view of cellular and tissue-level transformations linked in differentiating between various conditions and cancer types, empowering pathologists to deliver precise and reliable diagnoses. Moreover, they are invaluable for pinpointing distinct biomarkers linked to various cancer types and grades, facilitating tumor classification and subtyping. Histopathological images form a dependable diagnostic framework known for its consistency and reliability in cancer diagnosis [12]. By harnessing extensive datasets of annotated histopathological images, it becomes feasible to create highly dependable algorithms for automated cancer diagnosis. These algorithms effectively streamline the diagnostic process, reducing the necessity for extensive manual examination [13].

The objective of this study is to create a classification framework that can analyze histopathological images data related to lung cancer. The goal is to accurately classify individuals as either having cancer or not, using machine learning techniques and meta-heuristic algorithms for tasks such as feature extraction, feature selection, and classification. The following subsection analyzes various methods for cancer detection and classification using image processing and classification techniques.

Review of Previous Work

In recent times, the research community has shown significant interest in diagnosing Lung Cancer through histopathological images. Numerous methodologies have been explored, utilizing a range of machine learning and deep learning techniques, across diverse datasets to detect instances of lung cancer.

Various strategies have been proposed to identify irregularities in lung-related images, encompassing chest radiographs, CT scans, ultrasound images, histopathological images, and microarray data. Ozekes and Camurcu [14] utilized template matching, while Schilham et al. devised a computer-aided detection (CAD) system that encompasses preprocessing, the identification of candidate nodules, feature extraction, and cancer classification [15]. Wang et al. [16] executed the classification of pathology images concerning lung cancer using a convolutional neural network (CNN) methodology, incorporating cell segmentation. The final layer of the CNN model integrated the Softmax activation function to enhance the classification process. Through the application of the region of interest (ROI) technique as a preliminary step, they focused on cell areas containing relevant tumors. The achieved classification accuracy for the three-class image dataset reached 90.1%. Dehmeshki et al. [17] employed a genetic algorithm based on shapes for template matching, while Suarez-Cuenca et al. used an iris filter for CT image discrimination [18]. Murphy et al. used a K-nearest neighbor (KNN) classifier for nodule detection [19], and Giger et al. used geometric features in their CAD system for CT images.

Wei et al. [20] undertook the categorization of histopathological images depicting six classes of lung cancer utilizing CNNs. They specifically employed ResNet models for their investigation. The ResNet models were integrated with pre-trained approaches from ImageNet and COCO image databases. Prior to the model training phase, the input data underwent preprocessing, which included the application of augmentation techniques. The study’s achievement in terms of classification F-score reached a notable 90.4%. Mohammed Al-Jabber et al. [21] employed histopathological images from the LC25000 dataset, employing both ANN and the GoogLeNet and VGG-19 models. This combination yielded an impressive accuracy of 99.64%. Teramoto et al. [22] effectively distinguished histopathological images spanning three types of lung cancer through the application of a deep learning model. They implemented an augmentation approach that involved rotating, flipping, and applying filters to each image. Following this, they employed their developed deep CNN model to carry out the classification process. The outcomes of their classification efforts yielded an accuracy of around 70%. Shapcott et al. [23] conducted their model training by initially subjecting the input data to a preprocessing stage, integrating the augmentation technique. They employed a deep learning methodology for classifying histopathological images related to colon cancer. The dataset encompassed four distinct classes. To facilitate cell identification, a cell patches algorithm was employed on each image. The images were segmented into specific dimensions through segmentation procedures. The classification process was then conducted using the CNN model based on the defined cell patches. The obtained correlation accuracy rates ranged between 90% and 96.9%.

Barker et al. an automated system to classify brain tumors using digital pathology images [24]. Ojansivu et al. explored an automated method for categorizing breast cancer from tissue samples [25]. Ficsor et al. proposed an automated classification method for colon inflammation using digital microscopy images of histological sections [26]. The authors of a study, Mouelhi et al. [27], used various techniques such as Haralick’s textures, histogram of oriented gradients (HOG), and color-based statistical moments (CCSM) to extract features from biopsy images and classify cancerous cells. The features included energy, correlation, homogeneity, contrast, GLCM texture features, as well as RGB, gray level, and HSV color components. Huang and Lai [28] focused on histology image analysis, employing texture features and KNN, SVM for image classification and segmentation. Their approach achieved a classification accuracy of 90.07% and 92.8%. Gessert et al. [29] executed the classification procedure employing CNN models based on transfer learning, leveraging microscopic images of colon cancer. Their study employed a dataset that comprised both benign and malignant images. They trained the dataset using various models including Inception, VGG, and DenseNet. Among these, the DenseNet model yielded the most promising classification outcome, achieving a classification accuracy of 91.2%.

Sinha and Ramkrishan [30] studied small biopsy images, analyzing cell characteristics such as shape, size, color, and other properties. Four classification methods were compared: Bayesian, KNN, neural networks, and SVM. The last two methods achieved the highest accuracy rates of 94.1%, while the first two had lower rates of 82.3% and 70.6%. Kasmin et al. [31] examined microscopic biopsy images, considering characteristics such as cell/nuclei size, cell boundary length, minimum polygon area enclosing a cell, major axis length of an ellipse fitted to a cell, filled cell area, and average cytoplasmic intensity. They used neural networks and achieved classification accuracies of 86% and 92%. Chia-Hung Chen et al. [32] used a convolutional neural network to diagnose endobronchial ultrasound images, achieving an improved accuracy of 85.4% compared to traditional methods. Azka Khoirunnia et al. [33] developed a lung cancer detection system using a combination of CNN and RNN with Microarray data. In their research, CNN achieved 83% accuracy, RNN reached 71%, and the fusion of CNN and RNN (CRNN) attained the highest accuracy at 91%. Shahid Mehmood et al. [34] focused on classifying histopathological images of lung and colon cancers. By using AlexNet along with a technique called Class-Selective Contrast Enhancement, they achieved an impressive accuracy of 98.4%.

This paper is structured as follows: Section 2 focuses on the methodology employed for detecting lung cancer. Section 3 explores the feature extraction techniques including Particle Swarm Optimization and Grey Wolf Optimization whereas Section 4 explores the feature selection techniques, such as KL Divergence, and Invasive Weed Optimization. Section 5 explains the different classifiers used and hyper parameter updating method and its implementation. Section 6 presents the cumulative results, and Section 7 concludes the paper.

The following section deals with the methodology employed for identifying lung cancer through histopathological images.

2. Methodology for Lung Cancer Detection

This study employed lung histopathological images sourced from the LC25000 Dataset, which is available online. Andrew Borkowski and his colleagues from James Hospital Tampa, University of South Florida, and the Moffitt Cancer Center in Florida, USA, worked together to collectively assemble this dataset. The dataset encompasses histopathological images representing lung and colon cancer cases. Excluding colon cancer cases, the collection includes a total of 500 lung tissue images, divided equally between Benign Lung tissue and Lung Adenocarcinomas. These images were originally captured from pathology glass slides and were later resized to square dimensions of 768 × 768 pixels, down from their original size of 1024 × 768 pixels. The dataset underwent augmentation, resulting in an expansion to a comprehensive set of 10,000 lung histopathological color images which are categorized into two classes: Benign (N) and Adenocarcinoma (ACA), each consisting of 5000 images. These images are resized to a standard size of 256 × 256 followed by converting into a grey scale image. Notably, the images portray lung benign tissue characterized by abnormality but not indicative of cancer, while lung adenocarcinoma, the most prevalent form of lung cancer in the United States and notably linked to smoking, forms the second category.

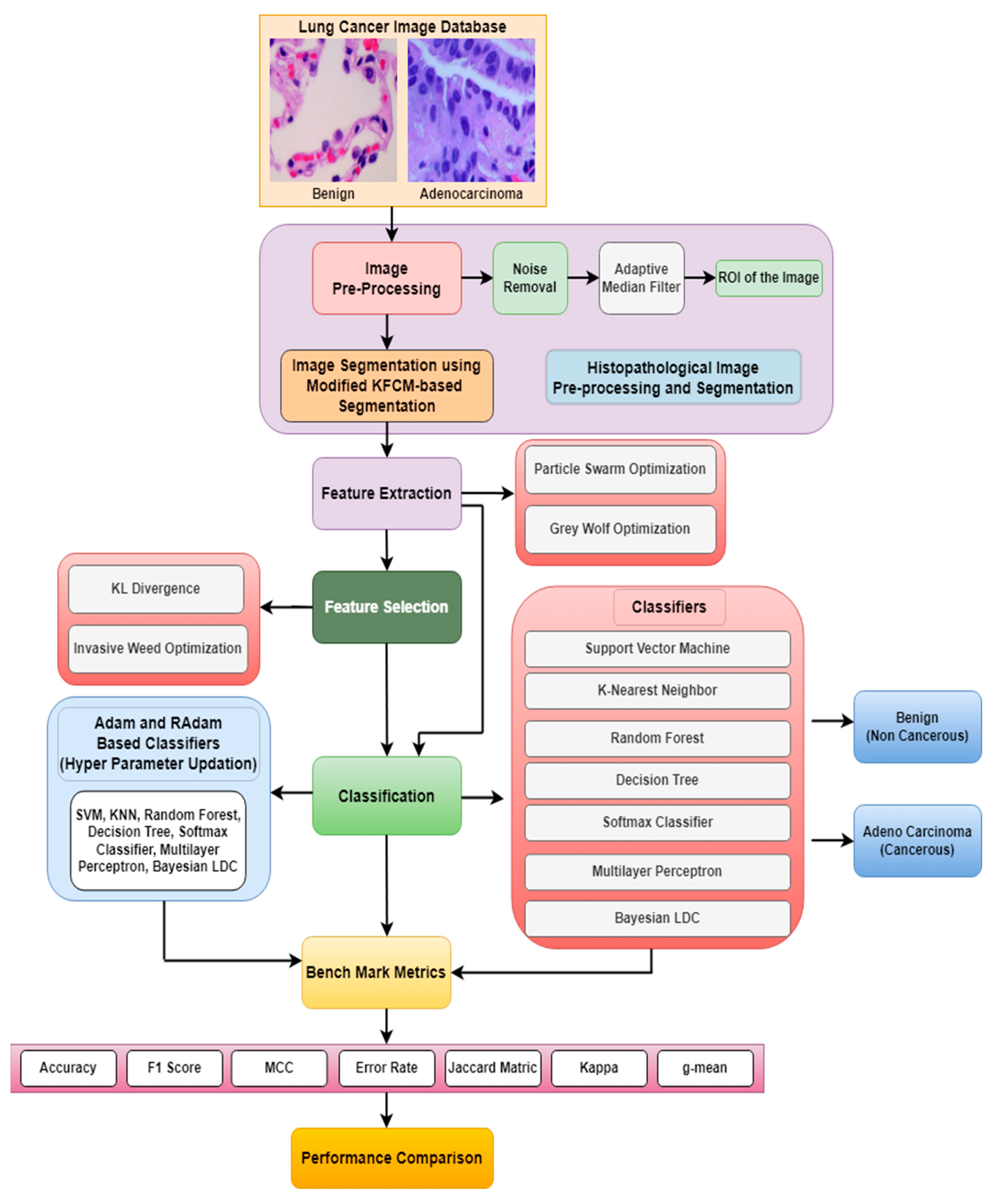

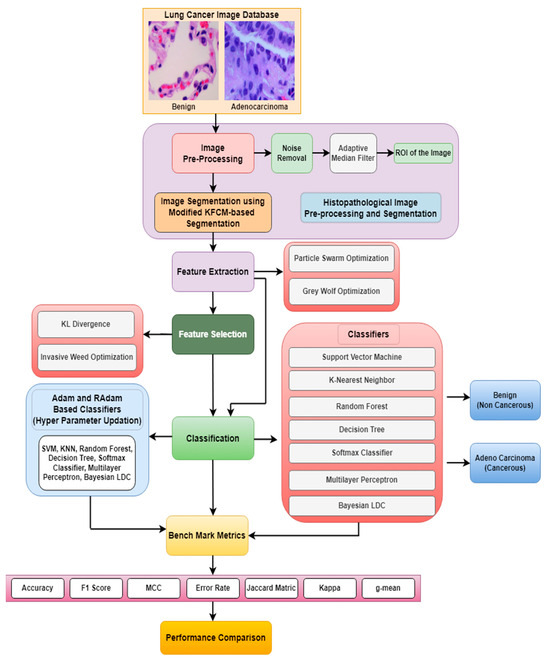

Figure 1 shows the general schematic diagram for identifying and categorizing lung cancer in histopathological images. The input histopathological image will undergo conversion into a linear vector comprising 65,536 elements (due to the image’s size of [256 × 256]). The procedure involves image pre-processing and a modified KFCM-based segmentation. During segmentation process approximately as [190 × 190] of the original image (i.e., nearly 36,100 intensity values) are segmented and used for further processing. These values will be directly employed to initialize the positions of birds in the Particle Swarm Optimization (PSO) and grey wolves in the Grey Wolf Optimization (GWO) algorithms. Optimization algorithms such as PSO and GWO are used to obtain a matrix of [512 × 10] dimensionally reduced intensity values from the segmented images. These dimensionally reduced features undergo feature selection techniques such as KL divergence and IWO. The selected features are then inputted into classifiers to evaluate their performance of the classifiers. Furthermore, an enhancement in the accuracy of lung cancer classification across various classifiers including SVM, KNN, Random Forest, Decision Tree, Softmax Discriminant, Multilayer Perceptron, and BLDC classifiers is achieved through the implementation of a Hyper Parameter Updation algorithm based on the RAdam technique.

Figure 1.

Schematic representation for detecting lung abnormalities from Histopathological Images.

2.1. Histopathological Image Preprocessing

Histopathological analysis serves as the definitive standard for evaluating the quality and clinical staging of tumors [35]. In the realm of diagnosing and treating medical conditions, healthcare professionals heavily rely on histopathological images. These images establish a crucial cornerstone for predicting patient survival rates [36].

As per available reports, histopathological images present several challenges:

- The images exhibit intricate geometric structures and complex textures that arise from the vast diversity in structural morphology [37].

- Notably, histopathological images are susceptible to color inconsistencies and noise due to external factors such as variations in illumination conditions [38].

- Variations in microscope magnification, equipment settings, and other variables contribute to inconsistencies in image sizes and resolutions within histopathological images [39].

- Elements of significance, such as local micro-vessels with distinctive textural characteristics, significantly influence disease diagnosis within histopathological images. Extracting these features is of paramount importance in supporting the classification and diagnosis of lung cancer [40].

Due to these factors, the histopathological images we encounter are frequently not perfect and these images show that image quality is affected by noise during acquisition and artifacts during sample preparation and slide digitization. Preprocessing methods are employed in histopathological images to enhance image quality, rectify anomalies, amplify pertinent characteristics, and establish uniformity, ultimately resulting in heightened precision and dependability of diagnostic outcomes. The study demonstrates that using an efficient adaptive median filter enhances image quality, reduces artifacts, and facilitates accurate diagnosis and analysis. However, when subjected to an adaptive median filter, these images tend to become smoother and exhibit reduced noise, rendering them suitable for our forthcoming investigations. After artifact removal, the filtered histopathological images are used for segmentation. Here, the size of the selected region of interest (ROI) is 256 × 256 which is the complete original image.

2.2. Histopathological Image Segmentation

A Modified Kernel Fuzzy C-Means methodology is employed to effectively segment normal and abnormal regions in histopathological images even though outliers are encountered. Image segmentation is the process of dividing an image into distinct regions based on certain image characteristics, with the goal of isolating and identifying specific regions within the image [41]. In this scenario, we have an input histopathological image denoted as H, which consists of a set of color images at pixel and these color images are represented as , in the k-dimensional space. The cluster centers within the histopathological images are represented as , where c is a positive integer , and represents the membership value for each pixel in the -th cluster . In the Kernel Fuzzy C-Means algorithm, clusters are formed in the image space by assigning distinct membership values to all pixels. The objective function or general equation for the Kernel Fuzzy C-Means algorithm is expressed as follows in the Equation (1):

where n represent an exponent used for regularization, with the condition that, n > 1, and denotes the squared grayscale Euclidean distance between and , which is given in Equation (2):

Using the membership function derived from the alternate optimization approach, the process of iteratively updating the cluster centers is carried out according to the Equations (3) and (4).

To reduce the impact of noise, the Equation (5) incorporates the spatial information of neighboring pixels,

Here, the spatial information is denoted by α, represents the set of pixels and its cardinality is defined as , the neighborhood function is substituted by , in place of , where, represents a color scale-filtered image, and the Euclidean distance is replaced with the correlation distance measure to avoid the neighborhood function. The updated equation is represented in Equation (6):

In this study, a modified version of KFCM computes the parameter for each cluster at every iteration to substitute for α [42]. The calculation of this parameter utilizes the correlation function, as outlined in the Equation (7):

Here C represents the correlation function or correlation distance measure. Here, determining the precise characteristics of C typically necessitates a large number of patterns and numerous cluster centers to identify optimal value for . To address this challenge, a solution is devised by integrating spatial context and scale information through the incorporation of fuzzy factor. The objective function of the KFCM, as presented in Equation (8), incorporates the inclusion of the fuzzy factor .

Then the altered fuzzy factor is derived using Equation (9).

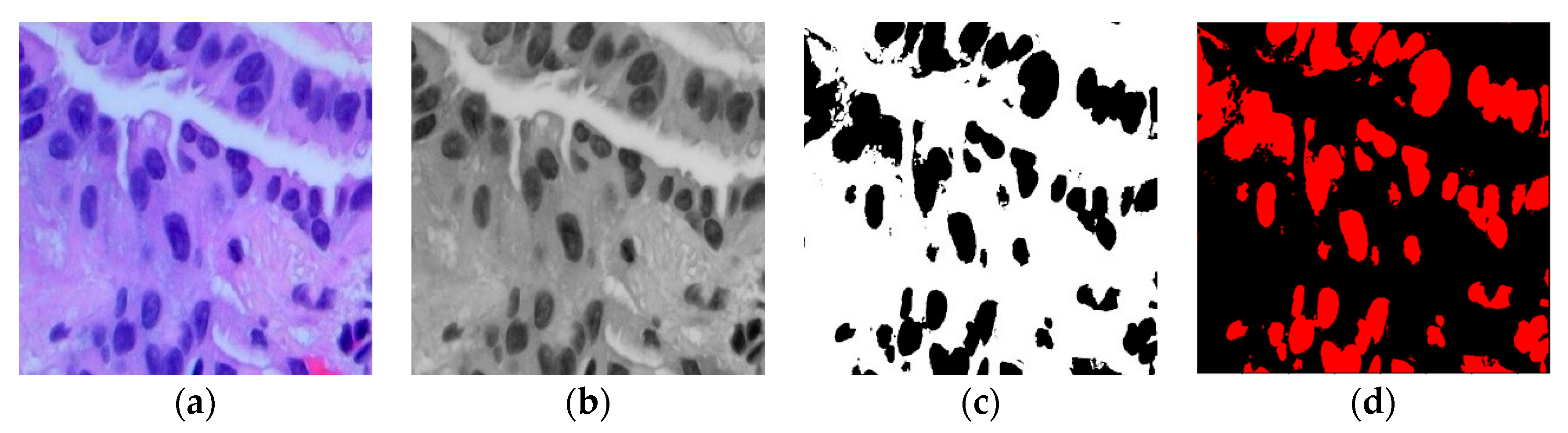

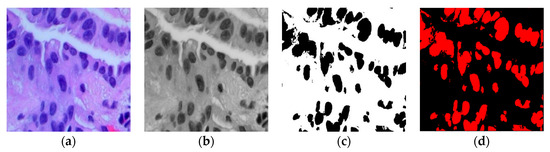

This adjusted fuzzy factor plays a crucial role in influencing local neighbor relationships and substituting the traditional distance metric with a correlation function. Here, represents the fuzzy factor for cluster , and signifies the correlation metric function. Since the histopathological images contain variation in intensities, gradients, and complex backgrounds, it becomes imperative to employ a modified KFCM-based segmentation method to distinguish between the region of interest (ROI) and the background in the image. Figure 2 illustrates the sequence of the original image, the filtered image, the identified ROI within the ACA image, and the segmented image generated using the modified KFCM for the Adenocarcinoma (ACA) class.

Figure 2.

(a) Original ACA image; (b) Filtered ACA image; (c) ROI of the ACA Image; (d) Segmented ACA image.

The following section focuses on the methods utilized for extracting dimensionally reduced image features, aimed at enhancing the classification and detection of lung cancer using histopathological images.

3. Feature Extraction

Feature extraction techniques condense essential information from images into compact feature vectors, enabling the effective classification of complex image datasets using linear algorithms [43]. As the abundant features within histopathological images serve as a fundamental resource for clinicians to conduct diagnoses, the proficient extraction of these image features stands as a pivotal factor in enhancing the precision of computer-aided diagnosis [44]. This study delves into the impact of two distinct feature extraction techniques such as PSO and GWO on the classification of histopathological images related to lung cancer.

3.1. Particle Swarm Optimization (PSO)

Kennedy and Eberhart introduced the PSO algorithm in 1995, which draws inspiration from the hunting behavior of birds. This optimization method relies on a population and leverages the social dynamics of bird flocks. It starts by creating particles and setting key parameters for the optimization process. [45].

Every particle has a unique position that is traced by the following equation:

The velocity is traced by the following equation:

Each particle’s velocity is updated as:

Here, and represent randomly selected values within the range of 0 to 1. The acceleration coefficients, denoted as and , play a role in analyzing the motion of particles. The weight function is expressed as:

The position of each particle is given by:

The particle that possesses the optimal position progresses to the next level. The best position for an individual particle is represented by the letters “p-best”, while the letters “g-best” represent the best position among all particles. The weight parameter “” is chosen between 0.45–0.9, maximum iteration values are 100–1000, both and are set to 0.85, cognitive component ( and Social Component ( are chosen between 1.0–2.0. The above values are determined based on the trial-and-error method.

3.2. Grey Wolf Optimization (GWO)

Grey wolves are known for living and hunting in groups called packs [46]. The process of searching and hunting involves plotting to track and approach a target efficiently. This optimization technique, inspired by the search and hunting patterns of gray wolves, employs symbols such as Alpha (), Beta (), and Gamma () to represent the best, next best, and third best solutions in mathematical modeling. Lambdas are presumed to be the remaining possible solutions and they guide the alpha, beta, and gamma wolves in searching and surrounding the prey. Three coefficients, A, B, and C are suggested to describe the encircling behavior. The equation of hunting strategy is formulated as follows:

where and denotes the adjusted distance variables from the alpha, beta, and delta positions to the other wolves, , and are coefficients that assist in adapting these distance variables, t signifies the ongoing iteration, indicates the position of the grey wolf and it follows as,

The parameters and can be mathematically expressed as follows:

The control parameter chases , which eventually drives the lambda wolves to flee from the dominant wolves such as , and . When there are multiple dominant wolves (|A| > 1), the grey wolves run away from them, allowing lambda wolves to search extensively and explore more during optimization. However, when there are fewer dominant wolves (|A| < 1), the grey wolves approach them and follow their guidance in hunting, which is called local search in optimization. During the iterations, the control parameter i is linearly decreased from 2 to 0, and is represented as,

where indicates the maximum iteration, and it is started from the beginning.

In the context of the classification problem, the introduction of randomness through variables and leads to heightened fluctuations in the wolves’ positions. Consequently, their ability to effectively converge towards the target (prey) becomes hindered. To address this issue, a decision has been made to treat the values of and in Equations (9) and (10) as control parameters within a confined range of [0, 1], rather than allowing them to remain purely random. Through empirical experimentation, it has been determined that the optimal performance of the Grey Wolf Optimization (GWO) algorithm is achieved when both and are set to 0.8. This adjustment enhances the accuracy of the GWO algorithm in tackling the classification problem.

3.3. Statistical Analysis

To enhance the accuracy of cancer prediction using dimensionally reduced features, it is advisable to calculate statistical parameters from the region of interest. The intensity values, which have been reduced in dimensionality through methods such as PSO (Particle Swarm Optimization) and GWO (Grey Wolf Optimization), are then examined using statistical measures such as Mean, Variance, Skewness, Kurtosis, Pearson Correlation Coefficient (PCC), and CCA (Canonical Correlation Analysis). These statistical parameters help determine whether the outcomes accurately reflect the inherent properties of lung cancer data within the subspace. These attributes were derived for both normal and malignant classes.

The statistical parameters of cancer data, extracted using the PSO and GWO methods, are shown in Table 1. Variance quantifies data spread. Notably, Table 1 reveals lower mean values for normal cases using both PSO and GWO, while higher mean values are evident for malignant cases using both methods. Furthermore, the Malignant group demonstrates greater data spread compared to the Normal group as indicated by Table 1. GWO shows a Pearson correlation coefficient of 1 for both cases, implying strong intra-class correlation. Skewness and kurtosis are highly skewed for both normal and malignant instances. When CCA values exceed 0.5, strong inter-class correlation is present. However, Table 1 indicates that PSO and GWO methods exhibit the lowest inter-class correlation. Consequently, the analysis of these extracted features emphasizes the need for improved classifiers.

Table 1.

Statistical Parameters in PSO and GWO for Feature Extraction in Malignant and Normal Data.

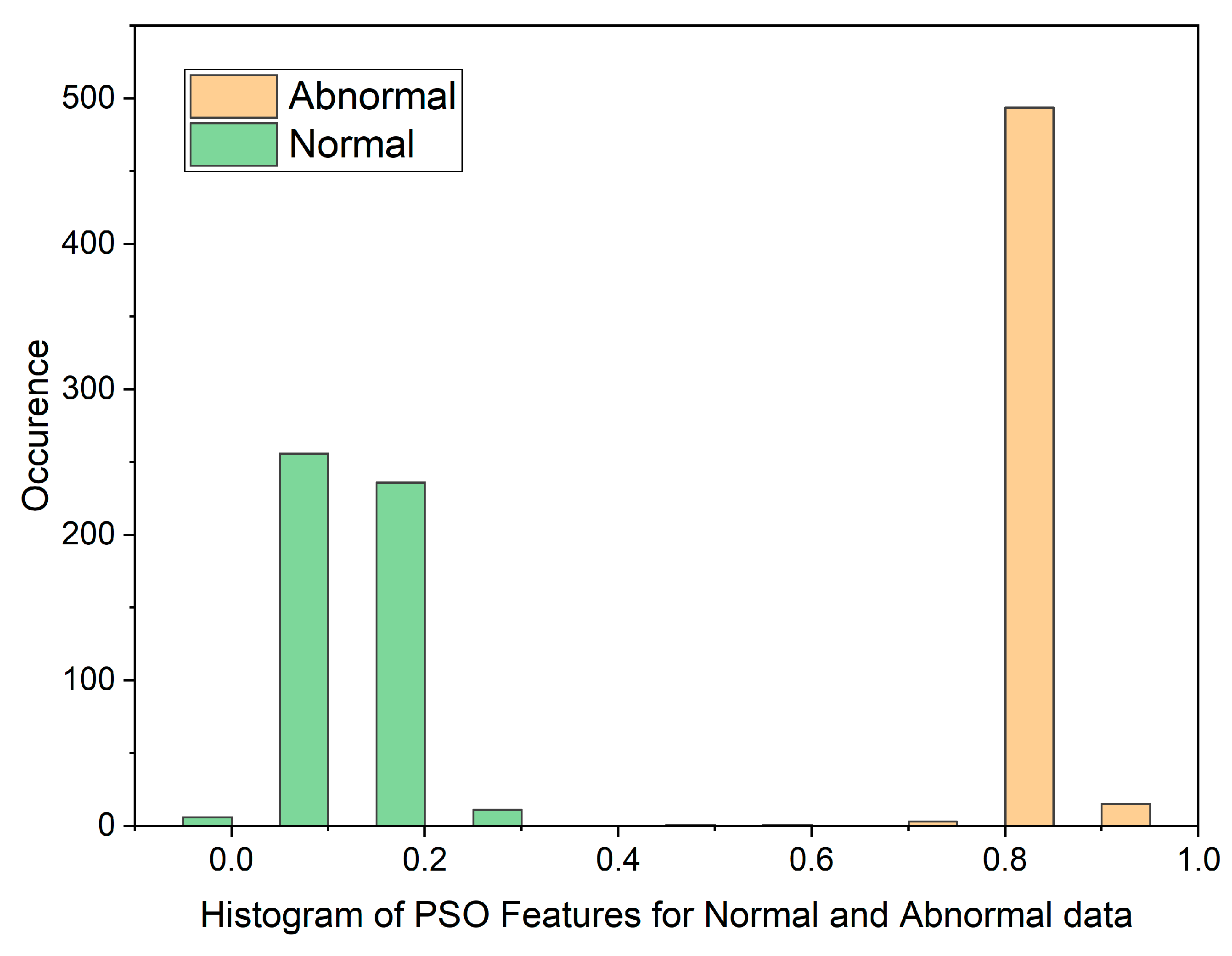

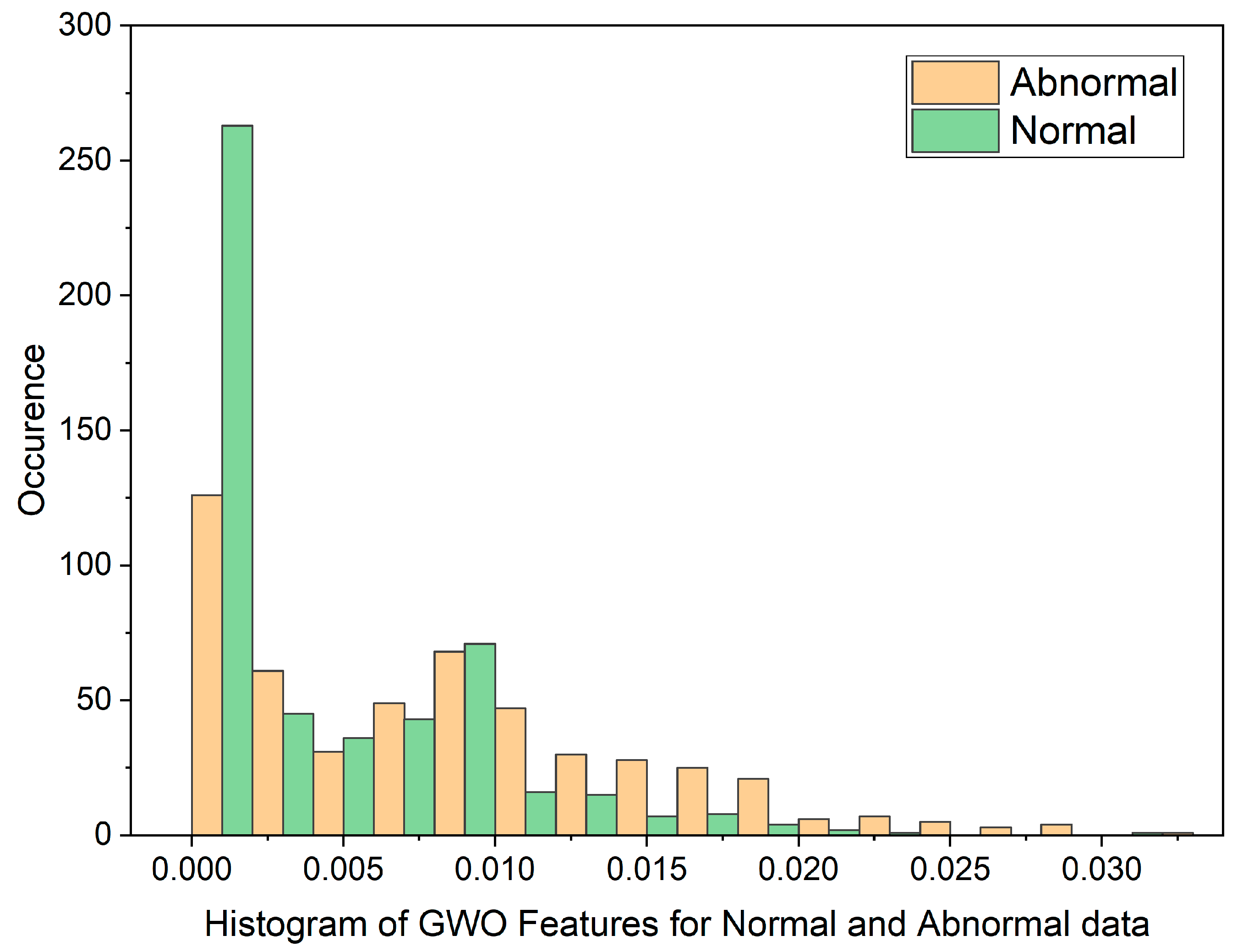

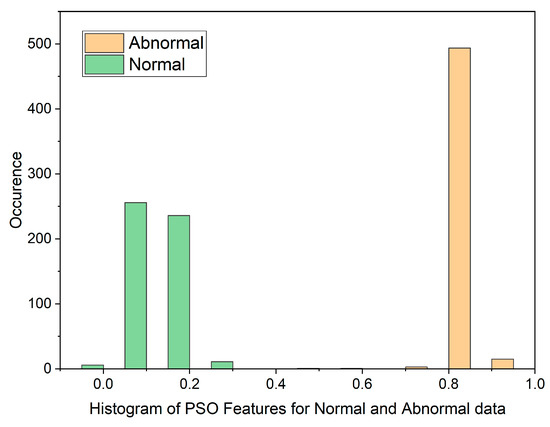

In cases where the features exhibit linear separability, a straightforward binary thresholding approach can be employed for the classification of Histopathological Lung images into two distinct classes: N and ACA. The characteristics of malignancy exhibit non-linear and non-Gaussian features that overlap with each other. To analyze these dimensionally reduced values which was obtained from PSO and GWO methods, histogram and scatterplot plots are used as illustrated in Figure 3 and Figure 4.

Figure 3.

Histogram of PSO Features for Normal and Malignant data.

Figure 4.

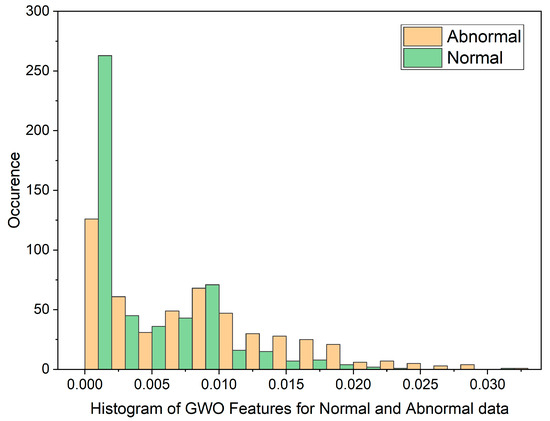

Histogram of GWO Features for Normal and Malignant data.

The histogram plot in Figure 3 illustrates the distribution of PSO feature data for normal and malignant cancer cases. The histogram illustrates PSO features characterized by outliers, substantial gaps, downward trends, and a non-Gaussian distribution. From Table 1, In the PSO-based extraction technique, the Canonical Correlation Coefficient (CCA) value is significantly low at 0.12309, suggesting a non-linear relationship between normal and malignant cases. Figure 4 showcases the histogram plot for GWO feature distribution, indicating skewed Poisson distributed data, and a non-linear nature.

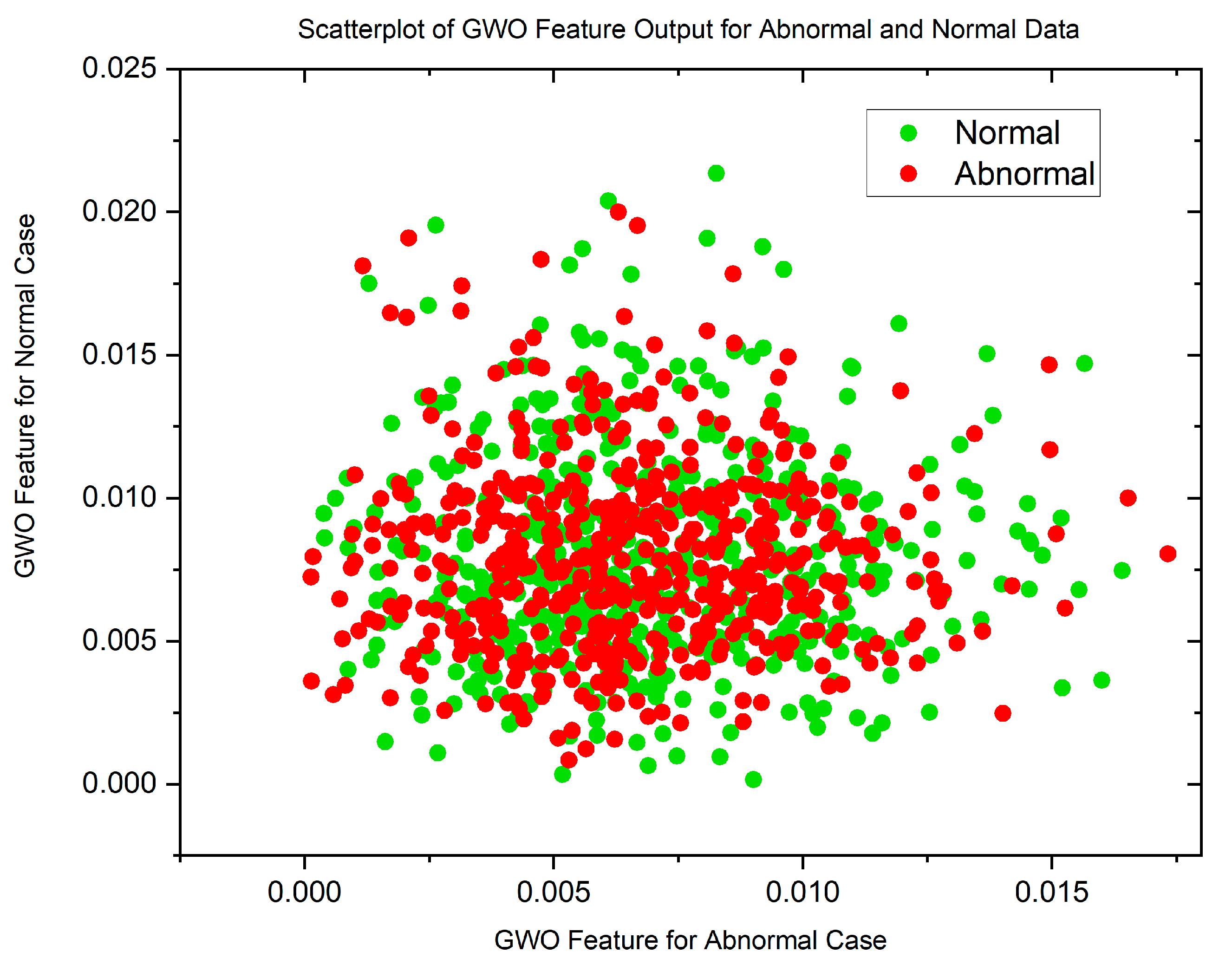

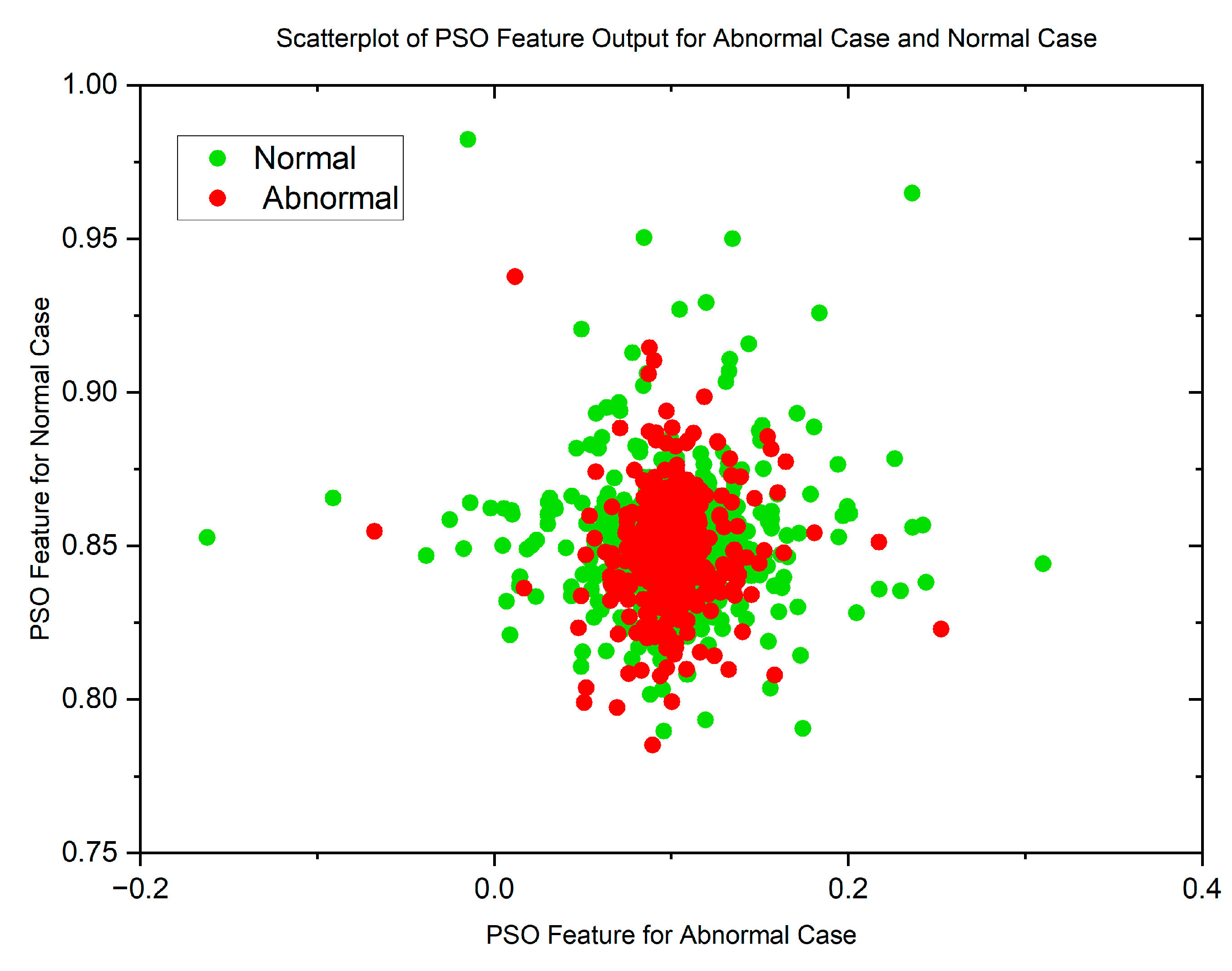

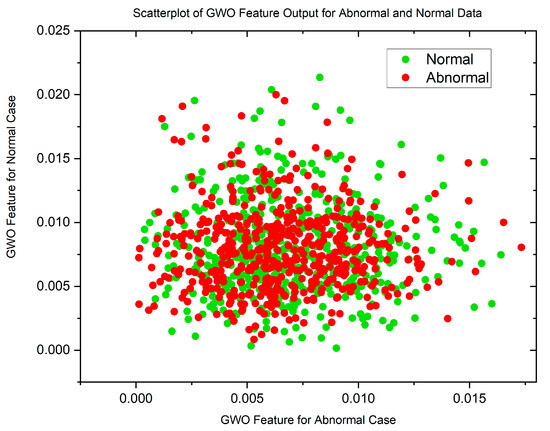

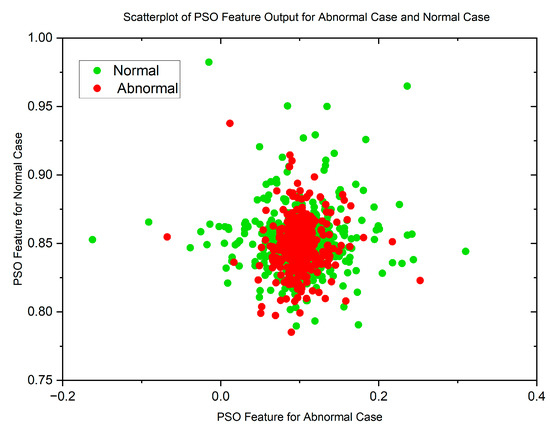

Figure 5 and Figure 6 display scatterplots demonstrating the feature output of normal and malignant cancer data utilizing the PSO and GWO methods. Scatter plots are useful for identifying data clustering, detecting nonlinearity, and overlapping. Both figures indicate the presence of nonlinearity and overlapping in the data. Therefore, from the histogram and scatterplot it is evident to employ accurate classifiers capable of distinguishing between normal and cancer cases in lung data using PSO and GWO features. The next section centers on the techniques applied to choose optimal image features, with the goal of improving the classification and identification of lung cancer in histopathological images.

Figure 5.

Scatterplot of PSO Features for Normal and Malignant Case.

Figure 6.

Scatterplot of GWO Features for Normal and Malignant Case.

4. Feature Selection

Feature selection aims to reduce input variables, excluding irrelevant characteristics for a more accurate, less complex, and unbiased model. Optimal feature selection is crucial for creating an effective, accurate machine learning model with high generalization ability [40]. In this paper, Feature Selection is performed using the KL Divergence and Invasive Weed Optimization (IWO) methods. Following the feature extraction procedures as described in Section 3, which involves Particle Swarm Optimization (PSO) and Grey Wolf Optimization (GWO). After feature extraction method [256 × 256] is dimensionally reduced to [512 × 10] per histopathological image. These [512 × 10] intensity values per image serve as the initial input for the Feature Selection techniques namely KL Divergence and IWO. However, the application of Feature Selection techniques is a further dimensionality reduction method only. After PSO and GWO feature extraction methods through KL Divergence and IWO process [512 × 10] intensity values per image is reduced to [100 × 12] which represents the most relevant intensity values of the images are retained as input given to the classifier for the subsequent classification process. The histopathological images are represented as a relevant intensity value in matrix form as described above.

4.1. KL Divergence

KL Divergence, also known as relative entropy, measures disparities between probability distributions, but in an asymmetric manner. The KL divergence between a probability distribution and another distribution is defined as,

The integral form of the KL divergence for continuous distributions is expressed as follows:

The KL divergence exhibits mutual convexity for both discrete and continuous distributions. The following are the properties of the KL divergence measure:

From the above equation, it can be observed that when the KL divergence is smaller, the two compared distributions are more similar.

4.2. Invasive Weed Optimization

The invasive weed optimization algorithm is a popular population-based metaheuristic approach [47]. The dynamic and versatile characteristics of weed colonies have sparked the creation of an optimization algorithm that imitates their behavior. By leveraging the qualities of weeds, a straightforward and efficient optimization technique can be developed. This method, called the IWO algorithm, incorporates phases such as seeding, growth, and competition. The following are the strategy for simulating weed habitat behavior:

- Primary Population Initialization: A few seeds are dispersed to start the search.

- Reproduction process: Seeds have the potential to grow into flowering plants, which then choose and spread the fittest seeds for survival and reproduction. The quantity of grass grain grains decreases in a linear fashion from to as follows:

- Spectral Spread Method: The group’s seeds are distributed normally with a mean planting position and standard deviation (SD) determined by the equation below.

- Competitive Deprivation: If the colony has more grasses than the maximum limit (Smax), the grass with the lowest fitness is eliminated to maintain a consistent number of herbs.

- The process continues until the maximum iteration is reached, keeping the lowest cost value of the grasses.

The upcoming next section revolves around the utilization of classification methods to categorize lung cancer images within histopathological images.

5. Classifiers for the Detection of Lung Cancer

Classifiers have a crucial role in categorizing data effectively. An optimal classifier is characterized by its ability to achieve high accuracy and low error rates while maintaining manageable computational complexity. Addressing the classification challenge involves constructing a model for the purpose of classifying images and assigning them appropriate class labels. The following sections of this paper delve into the classifiers that were used for this purpose.

5.1. Support Vector Machine

SVM is known for its scalability and classification performance [42]. It aims to create a hyperplane that maximizes class separation by minimizing the cost function. It is given by the following expression:

where and .

C represents the trade-off between the margin and the error. The training data’s size is represented by , and the class label for each sample is represented as . SVM is a flexible classifier suitable for linear and nonlinear cases. To handle nonlinear data, we employ Polynomial, RBF, and Sigmoid kernel functions. In this study, we exclusively enhance the classification accuracy by utilizing the SVM-RBF kernel.

5.2. K-Nearest Neighbor

KNN stands as a widely utilized and efficient non-parametric classification technique. In KNN, the symbol ‘k’ denotes the count of nearest neighbors involved in the voting process. To enhance prediction accuracy, employing an odd value for k is recommended. KNN determines the classification of a test sample by conducting a majority vote among neighboring training samples. Measuring distances between individuals is crucial, and the Euclidean distance is commonly used for this purpose [48]. For example, in the Euclidean space if and are the two points and it is assumed that and , then the Euclidean distance of line segment can be expressed as follows:

5.3. Random Forest

This tree-based ensemble learning algorithm is highly accurate and resilient in image classification [49]. It utilizes multiple decision trees that work independently. Two important parameters for the algorithm are the number of decision trees and the number of predictive variables used in each tree’s decision-making process. By combining the votes of multiple decision trees, a random forest can accurately predict binary tasks. For a training set × consisting of M samples, each containing N features and a classification label Y. The following steps are involved in the construction of Random Forest.

- Randomly select M samples from × using the Bootstrap method.

- Choose n random features (where n < N) to split a decision tree node. Determine the split criterion by selecting the feature with the lowest Gini value. Gini is computed using the formula:where represents the relative frequency of dataset features and represents the number of classes.

- Generate M decision trees by repeating steps 1 and 2, M times.

- Create a random forest by combining the decision trees and utilize voting to determine the classification outcome.

5.4. Decision Tree

It is a well-known machine learning algorithm that partitions input data recursively [50]. A decision tree starts with a root node and branches. This work utilizes CART, which splits the data based on its ability to distinguish between groups. The process continues until all data groups have the same label or match the training set. CART uses the Gini impurity measure at each node to determine the best split. The data at node ‘d’ are divided into two subsets, X-left and X-right, based on the splitting features and a threshold determined by CART and the amount of data X.

At node ‘d’ the input is computed through impurity measure Gini as with the proportion of class k observation in the node ‘d’. Construction time of a decision tree depends on the dataset’s size (samples and features). Overfitting can occur if the tree is built using CART and results in few samples per leaf. To prevent overfitting and improve accuracy, a pruning algorithm can be used to simplify the tree, reducing construction time while maintaining performance.

5.5. Softmax Discriminant Classifier

SDC’s main objective is to classify a given test specimen [51] by comparing its distance to the training sample within its category. The process entails gauging the distance between training and test samples belonging to the same class to derive the outcome. Supposing, the training set comes from q distinct classes. Indicates samples from the qth class where . Assuming represents the test sample, within the classifier, we employ samples from class q to recognize the test sample, aiming to minimize the reconstruction error. To uphold the principle of SDC, we can enhance the non-linear transformation linking the q class samples and the test sample. Therefore, the SDC can be defined as follows:

where defines the distance between the jth class and the test sample. The value of 𝜆 should be greater than zero, to provide a penalty cost. If w relates to the jth class, then w and would have likely same characteristics and so is progressing close towards zero and hence maximizing can achieve the maximum possible value in an asymptotic manner.

5.6. Multilayer Perceptron

MLP is often used to approximate functions such as regression [52]. It consists of an input layer with n nodes, a hidden layer, and an output layer. The given input and output pairs be denoted as where and are the input vector and the corresponding desired output value, respectively. Sigmoid function is commonly used for hidden and output nodes, producing values from 0 to 1.

The kth hidden node in the MLP calculates its output when the input is given. The output value is computed as

The output value of the output node is determined by the sigmoid function , along with bias ), and connection weight associated with the corresponding hidden node. Then the final output value is computed as,

The number of hidden nodes is denoted by , the bias to the output node is represented by 𝜃, and signifies the connection weight from the hidden node to the output node. This results in a total of synaptic connections. To train the Multilayer Perceptron (MLP), the following cost function can be utilized.

where t denotes the number of training patterns. In our study, we used a three-layer model, which is known to effectively approximate any continuous function with high accuracy [53].

5.7. Bayesian Linear Discriminant Classifier

The BLDC, or Bayesian Linear Discriminant Classifier, can distinguish between multiple classes. It uses the Fisher linear discriminant and applies the Bayes decision rule to estimate the error probability [54]. Bayesian regression assumes that the target variable y is a linear combination of vector k, and Gaussian noise m. This relationship is expressed as , where q represents the weight coefficients.

The given expression represents the likelihood function,

In the above equation, is the target values for regression, is a matrix made by combining the training feature vectors horizontally, and is the combination of . represents the noise’s inverse variance, and T is the total number of samples in the training set.

5.8. Methods for Updating Hyperparameters in Various Classifiers

The performance of a classifier greatly depends on the values assigned to its hyperparameters [55]. To find the best hyperparameter values, different methods such as Stochastic Gradient Descent (SGD), Grid Search (GS), and Adaptive Moment Estimation Method (ADAM) can be used. This study introduces a new approach called R-Adam, which aims to enhance lung cancer classification accuracy for the Decision Tree classifier and other classifiers. While Adam is a prevalent choice for hyperparameter selection in deep learning networks, this study introduces R-Adam, an adapted version proposed for hyperparameter selection across diverse classifiers. Utilizing controlled randomness, the envisioned R-Adam algorithm aims to discover hyperparameter values in proximity to the optimal values recommended by the Adam method. The investigation assesses the classification performance using both Adam and the newly introduced R-Adam technique.

5.8.1. Adam Approach

The Adam approach involves employing squared gradients and exponential moving averages. The validation of hyperparameters is achieved based on the expressions provided below [56]:

where represents the previous hyperparameters, denotes the updated hyperparameters, signifies the learning rate, and is a small constant used to avoid division by zero. The constants in the Adam method are and .

where signifies the derivative of the loss function with respect to x. Thus, the mathematical representation of the loss function is as follows:

where ER stands for the error rate, tr indicates the current iteration and tr − 1 denotes the previous iteration of in the Adam approach. Algorithm 1 outlines the process of utilizing the Adam optimizer to update hyperparameters in a Decision Tree model, aiming to minimize the error rate, which serves as the loss function. In Decision Tree, the key hyperparameters include maximum depth and criterion. In Decision tree, the hyperparameters are set as maximum depth = 20 and criterion = MSE. The Adam’s approach employs specific constants in this work: = 0.001, = 0.89, = 0.9 and . Through experimentation, the optimal number of iterations for the Adam approach was determined to be 40. This iterative process aims to uncover the lowest error rate, helping identify the best hyperparameters. Notably, similar approaches involving SVM, KNN, Random Forest, SDC, MLP, and BLDC models could also leverage the Adam optimizer to update hyperparameters in a comparable manner.

| Algorithm 1. Adam Approach |

|

5.8.2. RAdam’s Approach

The Randomized Adam (RAdam) technique is tailored to enhance the precision of the Decision Tree classifier. Algorithm 2 presents a methodology for implementing the Decision Tree using the RAdam approach. RAdam amalgamates two core components: the Adam method and controlled randomization. The controlled randomization process is pivotal in elevating classification performance. Within each iteration of the Adam method, hyperparameters are updated. The Adam process, which meticulously refines hyperparameter ranges, is nested within the iterative controlled randomization. This controlled randomization strategy integrates two control parameters—solution considering rate and solution adjusting rate—to fulfill its objective. Constants for R-Adam are defined as follows: bandwidth is set at 0.0098, the maximum number of iterations for randomization is 15, solution considering rate is 0.6, and solution adjusting rate is 0.92. In Algorithm 2, randomization 1, randomization 2, randomization 5, and randomization 6 indicate random values from the range [0, 1], while randomization 3 and randomization 4 correspond to random values within [0, 0.1]. Following this iterative process, the lowest error rate is found, leading to the identification of optimal hyperparameters. Significantly, analogous methodologies that pertain to SVM, KNN, Random Forest, SDC, MLP, and BLDC models could also make use of the Adam optimizer for adjusting hyperparameters in a similar fashion.

| Algorithm 2. RAdam’s Approach |

|

The following section pertains to the outcomes derived from employing diverse classification techniques for the categorization of lung cancer images within histopathological images.

6. Results and Discussion

This section explores the efficacy of different classifiers based on their benchmark parameters. A higher classification accuracy combined with a decreased error rate signifies robust performance of the classifier. As a result, the classifiers underwent training and testing using the extracted and chosen feature values within the Lung Histopathological Image Dataset.

6.1. Training and Testing of the Classifiers

The training and testing of the classifiers constitute crucial phases within classification procedures. Training facilitates the acquisition of patterns linked to the provided dimensionally reduced intensity values of the histopathological images by the classifier. In this study, the entire dataset, comprising histopathological image values related to lung cancer detection and classification, is divided into 10 equal folds. The analysis involves a series of iterations. During each iteration, one-fold is designated as the testing set, while the remaining nine folds are combined and used as the training set. In essence, 10% of the data is reserved for testing in each iteration, and the remaining 90% is utilized for training. Various performance metrics are computed for each iteration. The results obtained from all 10 iterations are collected and aggregated. This aggregation often involves calculating average values for the performance metrics. The conclusion of training and testing for the classifiers was established based on the mean square error (MSE) acting as the termination criterion. The mathematical expression for MSE is given below:

where signifies the value observed at a definite time; indicates the target value for model k, with “k” ranging from 1 to 15; and the value of M is assumed to be 5000 and indicates the total number of images.

6.2. Selection of the Optimal Parameters for the Classifiers

In this study, seven classifiers were used to categorize images into benign or adenocarcinoma based on the target selection. The target selection for the benign case is represented as follows:

The characteristics of the entire set of benign lung data were subjected to normalization, and their average is denoted as as outlined in Equation (38), applicable for classification purposes.

The average of the normalized features is denoted as . For benign images, a target value of 0.1 was selected, which falls within the lower end of the 0–1 scale.

The condition for choosing a target in a case of adenocarcinoma (aca) is:

The characteristics of the entire set of lung adenocarcinoma data were subjected to normalization, and their average is denoted as as outlined in Equation (39), applicable for classification purposes.

To enhance adenocarcinoma classification, the target selection should exceed the mean value , which represents the average of normalized features across N images. Improving classification requires a target value of 0.5 or higher, as specified by the condition:

Depending on the criteria described in Equation (40), the selected targets for this study were set at 0.1 for benign cases and 0.85 for adenocarcinoma cases. The classifiers underwent training using a 10-fold cross validation training and testing approach, with the stopping criterion being an MSE value of or a maximum operation of 1000, whichever was achieved first. The selection of optimal parameters for the classifiers during the training process is outlined in Table 2. In the case of SVM (RBF) classifier the parameters are selected through the trial-and-error method. The classifiers parameters are α, Kernel width parameter (σ), w, and b are selected with the constraint of minimum MSE. In the case of KNN, K value indicates the number of clusters and in this case, it is K = 5 is selected randomly. With Euclidean Distance measure as the cluster coefficient with weight w = 0.5 is selected with the constrain of minimum MSE. In the case of Random Forest, the parameters such as Number of trees, Maximum depth and Bootstrap sample are initialized with random selection. Similarly in the case of Decision Tree, the parameter maximum depth is initialized with random selection. Since it is a binary classification problem, the class weight value for Random Forest is settled at 0.45, whereas for Decision tree, the class weight is settled at 0.4. In the case of Softmax Discriminant Classifier, it is a binary classification problem, so the λ value is settled at 0.5 along with the mean of each class target values as 0.1 and 0.85. In the case of Multilayer Perceptron Classifier, the network is trained using LM (Levenberg-Marquardt) algorithm to minimize the square output error. This error back propagation algorithm is used to calculate the weights updates in each layer of the network. As the number of hidden units gradually increased from its initial value, then there will be a reduction in the minimum Mean Squared Error (MSE) on the testing set. The optimal number of hidden units is the one that results in the lowest MSE. If the number of hidden units is increased beyond this point, the model’s performance does not show any further improvement; instead, it often starts to decline. This decline occurs since the neural network becomes unnecessarily complex, exceeding the complexity necessary to solve the problem effectively. The choice of the learning rate as 0.3 is determined based on the distribution of training patterns and their associated MSE. In case of BLDC, the parameters such as prior probability p(x) − 0.5, Class mean = 0.8 and = 0.1 are selected with constrain of minimum MSE. The training process demonstrated that the MSE value was attained either as low as or after 1000 iterations.

Table 2.

Selection of the Optimal Parameters for the Classifiers.

6.3. Performance Metrics of the Classifiers

The primary objective of the classifier was to effectively distinguish between cancer cells and normal data samples in the dataset. As this research focuses on binary classification, it is essential to select appropriate performance metrics. In binary classification tasks, one of the key evaluation tools is the confusion matrix. This matrix provides a concise summary of the model’s predictions in relation to the actual labels of the dataset. The confusion matrix consists of four elements: True Positive (TP), True Negative (TN), False Positive (FP), and False Negative (FN). TP indicates the presence of lung cancer, while TN indicates its absence, both representing correct classification. FP and FN represent misclassification, where lung cancer is incorrectly predicted as present (FP), or lung cancer is present but wrongly classified as not present (FN).

Table 3 displays TP, TN, FP, FN values, and average MSE for PSO and GWO features along with seven classifiers without employing Feature Selection Methods. Achieving the lowest MSE serves as an indicator for improved classifier performance, while a higher MSE value results in inferior classifier performance, regardless of the employed feature selection methods. PSO features show Decision Tree Classifier with the lowest MSE (3.60 × 10−7) and Random Forest Classifier with the highest MSE (1.60 × 10−5). GWO features show Bayesian LDC Classifier with the minimum MSE (2.50 × 10−7) and KNN Classifier with the maximum MSE (1.44 × 10−5).

Table 3.

Confusion Matrix for Classifiers without Feature Selection.

The features extracted were given to seven classifiers for performance analysis, following feature selection methods. Table 4 shows the average MSE and confusion matrix for PSO Feature Extraction with KL Divergence and IWO feature selection. The Decision Tree had the lowest MSE (9.00 × 10−6) using PSO with KL Divergence, while the Bayesian LDC had the highest MSE (1.02 × 10−5). With PSO and IWO, the Decision Tree had the lowest MSE (7.84 × 10−6), while the Softmax Discriminant had the highest MSE (1.22 × 10−5).

Table 4.

Confusion Matrix for Classifiers for PSO with KL Divergence and IWO.

Table 5 displays the average MSE and confusion matrix for GWO Feature Extraction with KL Divergence and IWO feature selection methods. The results include SVM, KNN, Random Forest, Decision Tree, Softmax Discriminant, Multilayer Perceptron, and Bayesian LDC classifiers. In the GWO with KL Divergence approach, Bayesian LDC achieves the lowest MSE (1.00 × 10−8), while the Multilayer Perceptron Classifier has the highest MSE (2.03 × 10−5). Similarly, in the PSO with IWO approach, SVM achieves the minimum MSE (4.90 × 10−7), while the Random Forest Classifier has the maximum MSE (1.52 × 10−5).

Table 5.

Confusion Matrix for Classifiers for GWO with KL Divergence and IWO.

Table 6 presents the mean Mean Squared Error (MSE) and confusion matrix outcomes for PSO Feature Extraction using KL Divergence and IWO feature selection techniques in Adam Hyperparameter Tuning. Among these, Bayesian LDC achieved the smallest MSE (8.41 × 10−6) through PSO with KL Divergence, whereas Random Forest showed the highest MSE (2.72 × 10−4). When considering PSO and IWO, Random Forest demonstrated the lowest MSE (9.00 × 10−8), whereas Softmax Discriminant had the highest MSE (4.00 × 10−4).

Table 6.

Confusion Matrix for Classifiers: PSO with KL Divergence and IWO for Adam Hyperparameter Tuning.

Table 7 displays the average Mean Squared Error (MSE) and the results of the confusion matrices obtained from GWO Feature Extraction using KL Divergence and IWO feature selection techniques in Adam Hyperparameter Tuning. Among these approaches, Multilayer Perceptron achieved the smallest MSE of 6.40 × 10−7 using GWO with KL Divergence, while SVM exhibited the highest MSE of 1.23 × 10−5. Considering both GWO and IWO, Decision Tree showcased the lowest MSE of 6.40 × 10−7, whereas Softmax Discriminant had the highest MSE of 1.04 × 10−4.

Table 7.

Confusion Matrix for Classifiers: GWO with KL Divergence and IWO for Adam Hyperparameter Tuning.

Table 8 presents the average Mean Squared Error (MSE) and the results of confusion matrices obtained by using PSO Feature Extraction with KL Divergence and IWO feature selection techniques during R-Adam Hyperparameter Tuning. Among these methods, SVM achieved the smallest MSE of 6.56 × 10−5 when using GWO with KL Divergence, while Random Forest had the highest MSE of 1.09 × 10−5. Considering both PSO and IWO, Random Forest had the lowest MSE of 4.49 × 10−5, while Softmax Discriminant had the highest MSE of 1.10 × 10−4.

Table 8.

Confusion Matrix for Classifiers: PSO with KL Divergence and IWO for RAdam Hyperparameter Tuning.

Table 9 displays the average Mean Squared Error (MSE) and the outcomes of confusion matrices. These were derived using GWO Feature Extraction with KL Divergence and IWO feature selection methods within R-Adam Hyperparameter Tuning. Among the techniques, Bayesian LDC achieved the lowest MSE of 9.61 × 10−6 with GWO and KL Divergence. Conversely, Random Forest had the highest MSE of 1.02 × 10−5. When considering both GWO and IWO, KNN displayed the smallest MSE of 5.48 × 10−5, while Random Forest exhibited the highest MSE of 1.90 × 10−4.

Table 9.

Confusion Matrix for Classifiers: GWO with KL Divergence and IWO for RAdam Hyperparameter Tuning.

Table 10 presents the metrics used to evaluate the performance of classifiers, including Accuracy, Error Rate, F1 Score, MCC, Jaccard Index, g-Mean, and Kappa. The mathematical expressions for these metrics are also provided.

Table 10.

Standard Benchmark Parameters.

The lung cancer data are processed using PSO and GWO techniques to extract features from normal and malignant data. These features are then used as inputs for seven classification models. Table 11 shows the performance of the classifiers without Feature Selection. The Decision Tree Classifier stands out with the highest accuracy of 85.01% for GWO features. It also achieves the highest F1 score (85.77%), MCC value (0.70), Jaccard Index (75.08%), g-mean (85.33%), kappa score (0.70), and the lowest error rate (14.99%). In contrast, the Random Forest classifier performs poorly for PSO features, with an accuracy of 56.25%, F1 score of 55.17%, MCC value of 0.13, Jaccard Index of 38.09%, g-mean of 56.26%, kappa value of 0.13, and the highest error rate of 43.75%. Without feature selection, the Decision Tree Classifier with GWO feature extraction method achieves the best accuracy and outperforms other classifiers.

Table 11.

Performance Analysis of the Classifiers without Feature Selection.

The performance of a random forest model heavily relies on the quality of individual trees and the diversity among them. If a random forest includes subpar or correlated trees, it can result in reduced overall accuracy. Correlation among trees can introduce redundant information, hampering the model’s ability to generalize effectively to new data and causing a drop in accuracy.

In Table 11, the Random Forest Classifier, when paired with the feature extraction technique of Particle Swarm Optimization (PSO), achieves a lower accuracy of 56.25% compared to the Genetic Wolf Optimization (GWO) approach, which achieves an accuracy of 77.84%. This discrepancy is primarily due to PSO selecting suboptimal intensity values of the segmented image, including less informative or irrelevant intensity values in the random forest model. Effective selection of intensity values is crucial for any classifier to yield better results. If the process of selecting intensity values fails to filter out irrelevant ones, it can negatively impact the performance of the Random Forest model. And also, PSO selects intensity values that are highly specific to the training dataset, resulting in a model that performs well on the training data but struggles to generalize to new, unseen data. Furthermore, the computational demands of PSO can lead to longer tree construction times, affecting the overall classifier’s performance.

Table 12 compares the performance of seven classifiers with PSO, KL Divergence, and IWO Feature Selection. The Softmax Discriminant Classifier stands out with superior results for KL Divergence features, achieving an accuracy of 83.47%, the highest F1 score of 83.18%, MCC of 0.67, Jaccard Index of 71.21%, g-mean of 83.50%, Kappa score of 0.67, and the lowest error rate of 16.53%. Conversely, the Bayesian LDC classifier performs poorly with IWO features, obtaining an accuracy of 53.79%, F1 score of 53.71%, MCC of 0.08, Jaccard Index of 36.72%, g-mean of 53.79%, Kappa of 0.08, and the highest error rate of 46.21%. Overall, the Softmax Discriminant Classifier using PSO and KL Divergence Feature selection achieves the highest accuracy and outperforms other classifiers.

Table 12.

Performance Analysis of the Classifiers for PSO with KL Divergence and IWO.

Table 13 presents the performance of seven classifiers using GWO features, KL Divergence, and IWO Feature Selection. The KNN Classifier achieves the highest accuracy of 79.36% with KL Divergence features. It also obtains the highest F1 score (78.60%), MCC value (0.59), Jaccard Index (64.74%), g-mean (79.49%), kappa score (0.59), and lowest error rate (20.64%) among all classifiers. However, the Bayesian LDC classifier performs poorly with IWO features, achieving an accuracy of 59.76%, F1 score of 61.52%, MCC value of 0.20, Jaccard Index of 44.42%, g-mean of 59.84%, kappa value of 0.20, and the highest error rate (40.24%). The KNN Classifier with GWO and KL Divergence Feature selection method demonstrates the best accuracy and outperforms other classifiers.

Table 13.

Performance Analysis of the Classifiers for GWO with KL Divergence and IWO.

Table 14 presents a comprehensive performance analysis of various classifiers, utilizing PSO with KL Divergence and IWO in combination with Adam Hyperparameter Tuning. The findings highlight that the K-Nearest Neighbors (KNN) Classifier attains the highest accuracy at 86.70% when incorporating KL Divergence features. This classifier also excels in other evaluation metrics, boasting the highest F1 score (86.30%), MCC value (0.74), Jaccard Index (75.96%), geometric mean (g-mean) (86.81%), kappa score (0.73), and displaying the lowest error rate (13.30%) compared to all other classifiers. Conversely, the performance of the Bayesian Linear Discriminant Classifier (LDC) is notably subpar when employing IWO features, achieving an accuracy of 76.14%, an F1 score of 76.19%, an MCC value of 0.52, a Jaccard Index of 61.54%, a g-mean of 76.14%, a kappa value of 0.52, and the highest error rate (23.86%) among the classifiers considered. Overall, the KNN Classifier in conjunction with PSO and the KL Divergence Feature selection method emerges as the standout performer, showcasing superior accuracy and outclassing the other classifiers in the evaluation.

Table 14.

Performance Analysis of the Classifiers: PSO with KL Divergence and IWO for Adam Hyperparameter Tuning.

Table 15 provides a comprehensive analysis of classifier performance, utilizing a combination of PSO with KL Divergence and IWO along with R-Adam Hyperparameter Tuning. The results highlight that the K-Nearest Neighbors (KNN) Classifier achieves the highest accuracy of 87.45% when incorporating KL Divergence features. This classifier also excels across various evaluation metrics, including the highest F1 score (87.02%), MCC value (0.75), Jaccard Index (77.03%), geometric mean (g-mean) (87.58%), kappa score (0.75), and the lowest error rate (12.55%) compared to other classifiers. On the other hand, the Decision Tree’s performance is notably weaker when using KL Divergence features, with an accuracy of 78.09%, F1 score of 78.19%, MCC value of 0.55, Jaccard Index of 64.19%, g-mean of 78.10%, kappa value of 0.54, and the highest error rate (21.91%) among considered classifiers. In summary, the KNN Classifier, in combination with PSO and the KL Divergence Feature selection method, stands out as the top performer, showcasing exceptional accuracy and surpassing other classifiers in the evaluation.

Table 15.

Performance Analysis of the Classifiers: PSO with KL Divergence and IWO for RAdam Hyperparameter Tuning.

Table 16 provides a comprehensive analysis of classifier performance, utilizing GWO with KL Divergence and IWO along with Adam Hyperparameter Tuning. The results emphasize that the K-Nearest Neighbors (KNN) Classifier achieves the highest accuracy at 90.87% when incorporating KL Divergence features. This classifier also excels in various evaluation metrics, recording the highest F1 score (90.06%), MCC value (0.83), Jaccard Index (81.92%), geometric mean (g-mean) (91.71%), kappa score (0.82), and demonstrating the lowest error rate (9.14%) compared to alternative classifiers. In contrast, the performance of the Decision Tree is notably below par when utilizing KL Divergence features, attaining an accuracy of 76.20%, an F1 score of 75.33%, an MCC value of 0.53, a Jaccard Index of 60.43%, a g-mean of 76.30%, a kappa score of 0.52, and the highest error rate (23.81%) among the considered classifiers. In summary, the KNN Classifier, combined with GWO and the KL Divergence Feature selection approach, stands out as the top performer, showcasing remarkable accuracy and surpassing the other classifiers in the evaluation.

Table 16.

Performance Analysis of the Classifiers: GWO with KL Divergence and IWO for Adam Hyperparameter Tuning.

Table 17 presents a comprehensive analysis of classifier performance, utilizing GWO with KL Divergence, and IWO alongside R-Adam Hyperparameter Tuning. The outcomes underscore the Decision Tree Classifier’s exceptional performance, achieving the highest accuracy at 91.57% when integrating KL Divergence features. This classifier also outperforms others across various evaluation metrics, achieving the highest F1 score (91.71%), MCC value (0.83), Jaccard Index (84.70%), geometric mean (g-mean) (91.87%), kappa score (0.83), and demonstrating the lowest error rate (8.43%) compared to alternative classifiers. In contrast, the performance of the Decision Tree notably drops when utilizing IWO, with an accuracy of 77.35%, an F1 score of 76.80%, an MCC value of 0.55, a Jaccard Index of 62.34%, a g-mean of 77.39%, a kappa score of 0.55, and the highest error rate (22.66%) among the considered classifiers. In recap, the Decision Tree classifier, combined with GWO and the KL Divergence Feature selection approach, emerges as the leading performer, showcasing remarkable accuracy and surpassing the other classifiers in the evaluation.

Table 17.

Performance Analysis of the Classifiers: GWO with KL Divergence and IWO for RAdam Hyperparameter Tuning.

Table 18 presents a summary of the performance outcomes for each combination of feature extraction and feature selection using Adam and R-Adam Hyperparameter tuning methods across all seven classifiers. The highest accuracy of 91.57% in the Decision Tree classifier was attained by combining GWO and IWO techniques, utilizing the RAdam Hyperparameter tuning approach.

Table 18.

Performance Analysis of the classifiers for Maximum Accuracy.

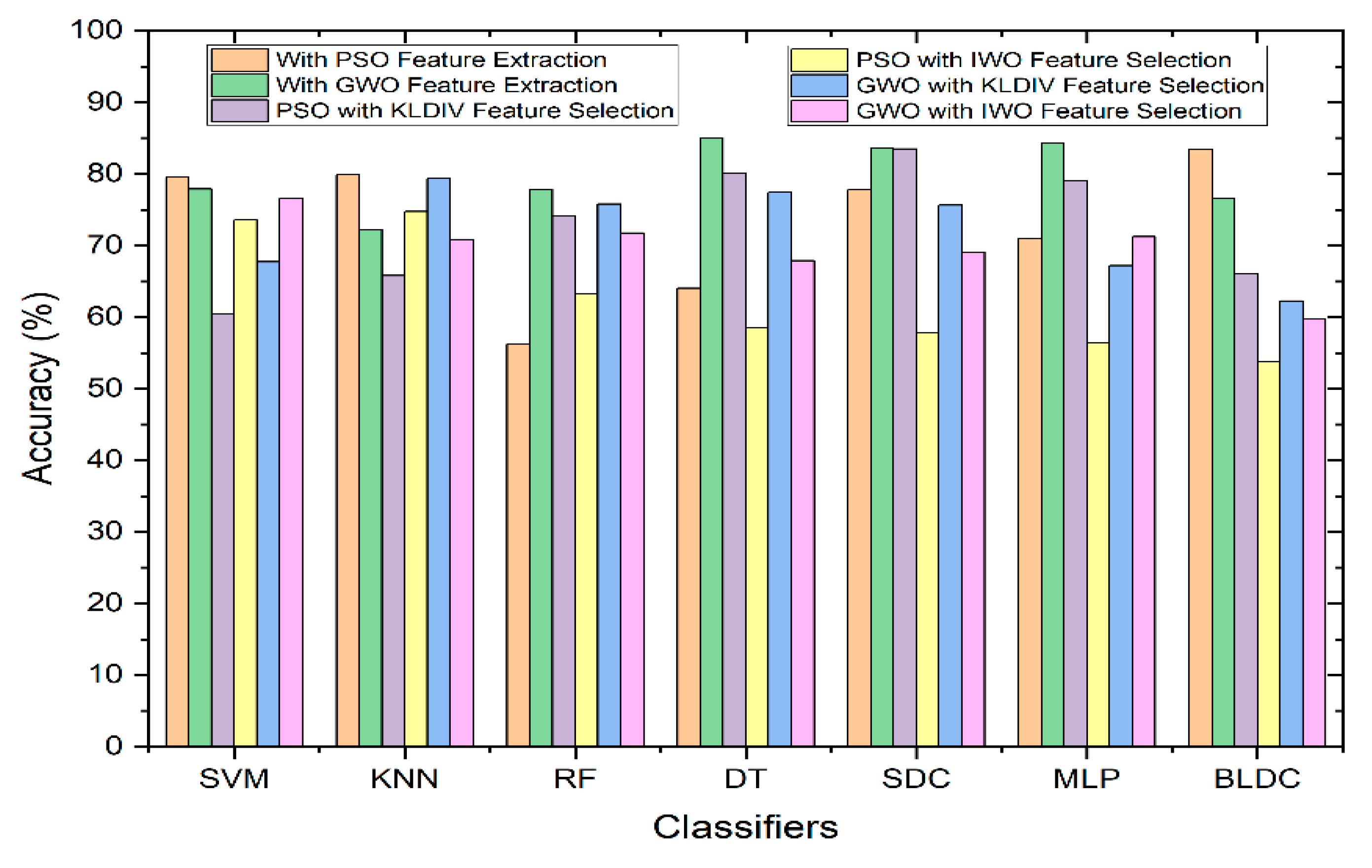

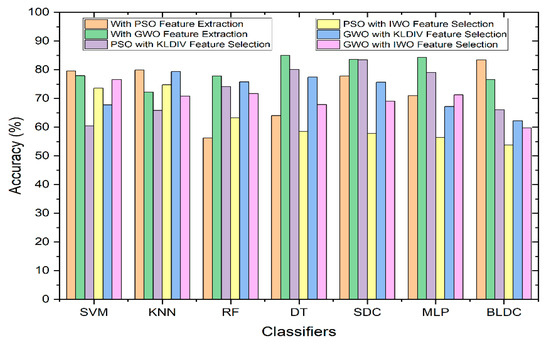

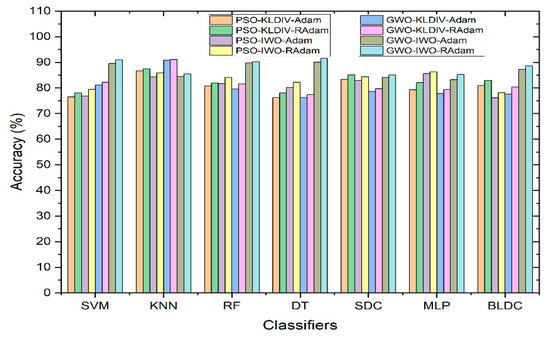

Figure 7 illustrates the comparative performance of classifiers in relation to Accuracy, both with and without the integration of feature selection. As depicted in the graph, among all of the classifier types, the Decision Tree classifier employing the GWO (Grey Wolf Optimization) feature extraction method outperformed the rest in terms of achieving the highest accuracy. When utilizing the KL Divergence feature selection technique, along with PSO feature extraction technique, the Softmax Discriminant Classifier demonstrated a commendable accuracy of 83.47%. Similarly, when employing the IWO (Invasive Weed Optimization) feature selection technique, along with GWO feature extraction technique, the SVM (Support Vector Machine) classifier exhibited a notable accuracy of 76.63%. In contrast, the Mathematical feature selection approaches yielded comparatively lower accuracy when compared to scenarios where feature selection was not applied.

Figure 7.

Performance of Classifiers with and without Feature Selection Methods in terms of Accuracy.

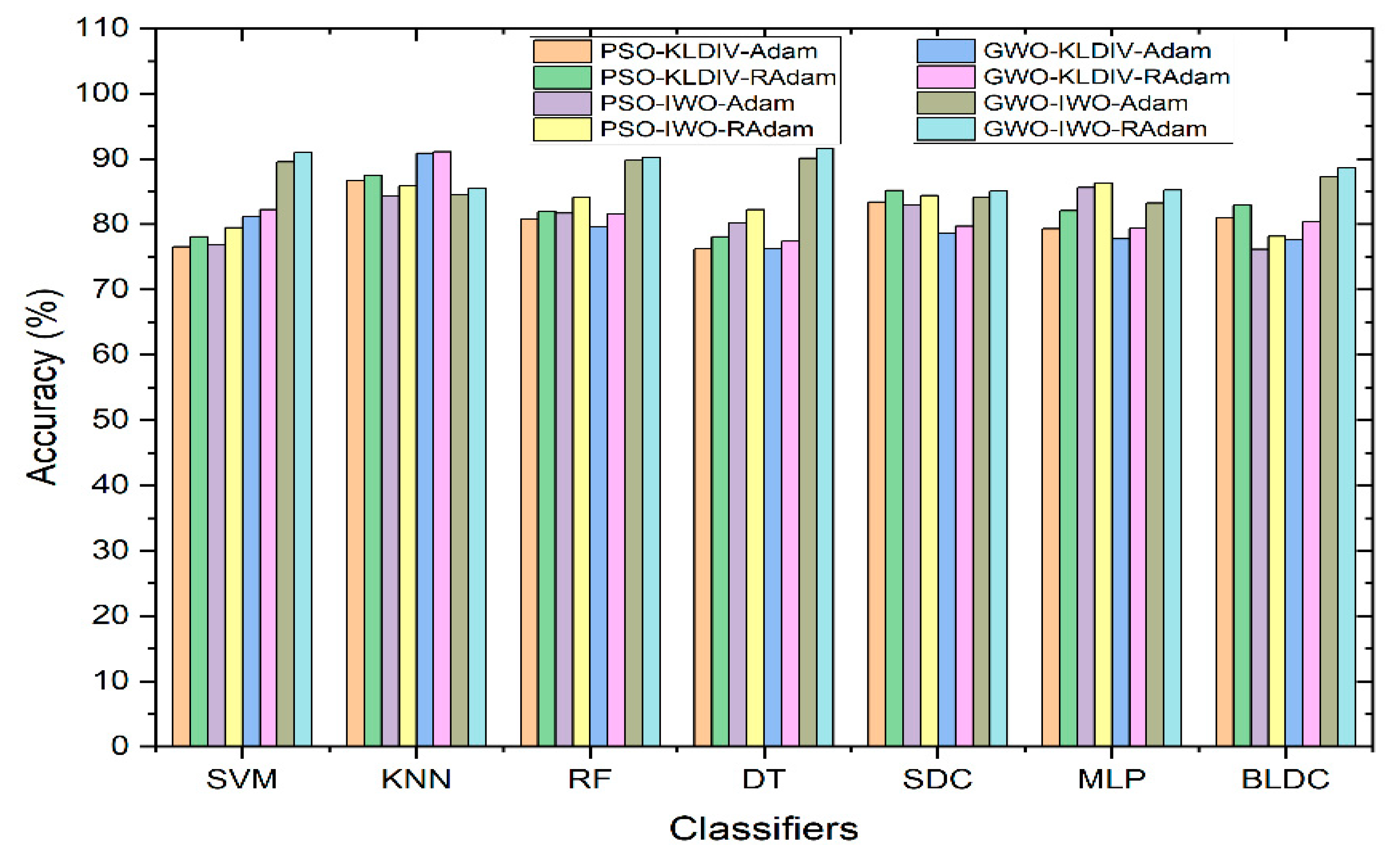

Figure 8 displays how classifiers perform when hyperparameter tuning methods such as Adam and RAdam are used to enhance accuracy. Even after applying feature selection techniques, there’s no significant improvement in classifier accuracy compared to using no feature selection. To address this, hyperparameter update algorithms are introduced. The accuracy achieved through the KL Divergence feature selection method is notably high across all classifiers. However, for the IWO feature selection technique, accuracy seems to be somewhat lower. This prompts the use of hyperparameter update algorithms specifically for the IWO feature selection. As a result of employing these algorithms, there’s a substantial accuracy improvement for all classifiers using the IWO feature selection. Notably, the Decision Tree classifier combined with GWO feature extraction and IWO feature selection, along with the RAdam hyperparameter update algorithm, achieves the highest accuracy at 91.57%.

Figure 8.

Performance of Classifiers with Hyperparameter Tuning Methods for Adam and RAdam in terms of Accuracy.

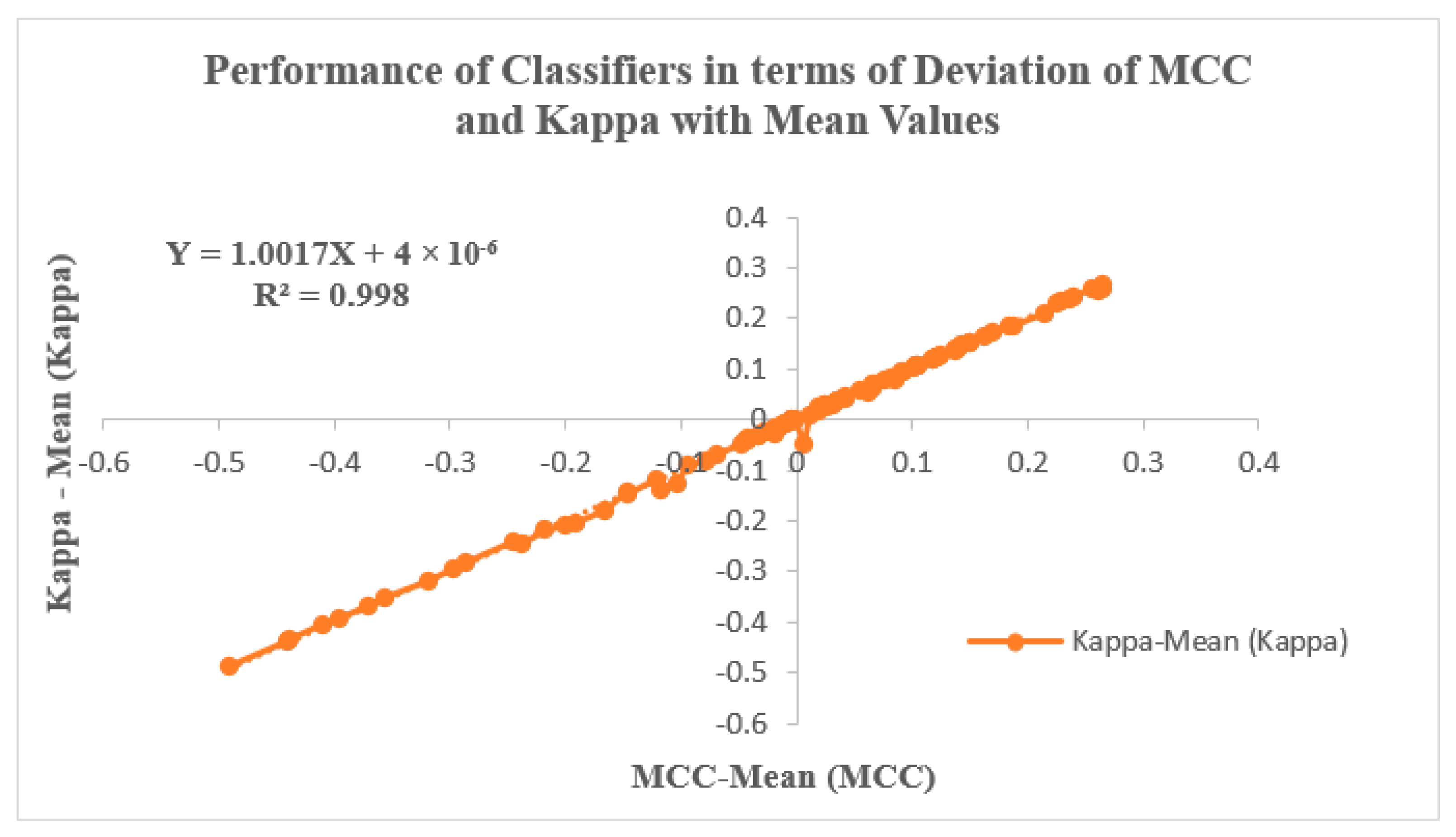

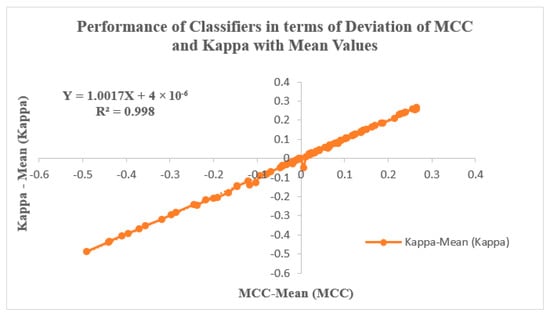

Figure 9 illustrates the classifiers’ performance by analyzing the Deviation of MCC and Kappa parameters in relation to their mean values. These parameters, MCC and Kappa, serve as benchmarks for evaluating how classifiers respond to different inputs. The study involves two input categories: features extracted using PSO and GWO, followed by feature selection through KL Divergence and IWO. The selected features are then inputted into the classifiers, and their effectiveness is evaluated through the resulting MCC and Kappa values. The average MCC and Kappa values attained from the classifiers are 0.56661 and 0.56256, respectively. A methodology is devised to assess classifier performance by examining the variability of MCC and Kappa values from their respective means. Notably, Figure 9 depicts a trend where MCC and Kappa values in the graph’s third quadrant correspond to non-linear outcomes with lower performance metrics. Conversely, values in the graph’s first quadrant indicate improved classifier performance, with MCC and Kappa values surpassing the average. This pattern suggests an enhancement in classifier performance for GWO inputs when coupled with IWO feature selection, particularly within the context of the RAdam hyperparameter tuning approach. Figure 9 is also characterized by a linear curve fitting described by the equation Y = 1.0017 X + 4 × 10−06, with an R2 value of 0.998.

Figure 9.

Performance of Classifiers in terms of Deviation of MCC and Kappa Parameters with mean values.

6.4. Computational Complexity Analysis of the Classifiers

Computational complexity also acts as a performance metric for classifiers, encompassing time and space complexities. This study utilizes the Big O notation to characterize the computational complexity of feature extraction, feature selection, and classification methods. The assessment of Computational Complexity involves an input size labelled as ‘n’. When the input size is , the computational complexity remains minimal. However, as the input size increases, so does the computational complexity. The relationship between input size and computational complexity is encapsulated by the Big O notation. Specifically, if the complexity grows logarithmically with the increase in ‘n’, it is represented as . The classifiers examined in this research integrate either feature extraction methods, feature selection techniques, or a combination of both. Hence, computational complexity becomes a blend of these hybrid methodologies. Table 19 offers an overview of the computational complexity associated with the classifiers across diverse Feature Extraction and Feature Selection Techniques.

Table 19.

Computational Complexity of the classifiers among Feature Extraction, Feature Selection and Hyperparameter Tuning approaches.

As evident from Table 19, when feature extraction techniques are not employed, classifiers such as SVM, KNN, Random Forest (RF), Decision Tree (DT), Softmax Discriminant classifier (SDC), Multilayer Perceptron (MLP), and Bayesian LDC (BLDC) exhibit lower levels of computational complexity. When utilizing the GWO feature extraction technique, the Decision Tree classifier stands out with a computational complexity of and achieves a high accuracy of 85.01%. However, the BLDC classifier, with a computational complexity of for GWO feature extraction, performs poorly when IWO feature selection methods are applied across the classifiers. The observed underperformance is linked to outlier problems present in the GWO features. To improve classifier performance, this study integrates hyperparameter tuning into the IWO feature selection method. Particularly, the Decision Tree classifier demonstrates remarkable performance with accuracies of 90.07% and 91.57% when utilizing GWO feature extraction in conjunction with IWO. These improvements come with a moderate computational complexity represented by for the Adam Hyperparameter tuning approach and for the RAdam Hyperparameter tuning approach.

6.5. Comparison of Previous Works

Comparison charts with different Machine Learning and Deep Learning models along with classifiers are shown in the Table 20 for the different datasets of lung cancer. As noted in the Table 20, that the classifier performance is analyzed for the four different datasets, namely CRAG, LIDC-IDRI, LUNA16 and LC25000. The following classifiers namely Ensemble, ResNet50, KNN, AlexNet and CNN were analyzed. As shown in the Table 20., for the CRAG database, the ResNet50 attains the maximum accuracy of 93.91% whereas for CT image database (LIDC-IDRI, LUNA16), the CNN-ALCDC model attains the maximum accuracy of 97.2%. This is due to the smaller number of CT images as the data set. Similarly, for LC25000 database, for a binary classification problem, 5000 images for each class are taken, which attains a commendable accuracy of 91.57%.

Table 20.

Comparison of classifier performance with different datasets.

7. Conclusions

Early diagnosis of lung cancer enhances patient life expectancy. This paper proposes machine learning techniques to enhance classifier accuracy and enable early identification using histopathological images. The primary aim is to achieve lung cancer classification with high accuracy, while minimizing false positives and false negatives. The study applies adaptive median filtering and a modified KFCM-based segmentation method to obtain the segmented images for better classification results. Feature extraction involves optimization techniques such as PSO and GWO which reduces the dimensionality of the segmented image to [512 × 10], followed by statistical analysis. Feature selection reduces the number of intensity values to [100 × 12] for lung cancer classification. Through the utilization of KL Divergence and Invasive Weed Optimization to evaluate the dimensionally reduced features, the datasets undergo classification with various classifiers to achieve better accuracy. The classification process entails seven classifiers, coupled with Hyperparameter selection using Adam and Radam methods, which are compared and analyzed. The Decision Tree Classifier for GWO features without feature selection achieves a better accuracy of 85.01%. Mathematical feature selection methods had lower accuracy compared to scenarios without feature selection. The results are further enhanced when the Hyperparameter Up-to-date methods are employed which reveal that the combination of GWO-IWO-Decision Tree classifier for RAdam outperforms all other classifiers, achieving an overall accuracy of 91.57% in classifying Benign and Adenocarcinoma classes. Future research directions will explore diverse feature selection techniques, optimization methodologies, and the inclusion of deep learning approaches such as CNN, DNN, and LSTM to further enhance lung cancer classification.

Author Contributions

Conceptualization, K.S.; Methodology, H.R.; Software, K.S.; Validation, H.R.; Formal analysis, K.S.; Investigation, H.R.; Resources, K.S.; Data curation, K.S.; Writing-original draft, K.S.; Writing-review and editing, H.R.; Visualization, H.R.; Supervision, H.R. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not Applicable.

Informed Consent Statement

Not Applicable.

Data Availability Statement

Not Applicable.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Prabhakar, S.K.; Lee, S.W. An Integrated Approach for Ovarian Cancer Classification with the Application of Stochastic Optimization. IEEE Access. 2020, 8, 127866–127882. [Google Scholar] [CrossRef]

- Jemal, A.; Ward, E.M.; Johnson, C.J.; Cronin, K.A.; Ma, J.; Ryerson, A.B.; Mariotto, A.; Lake, A.J.; Wilson, R.; Sherman, R.L.; et al. Annual Report to the Nation on the Status of Cancer, 1975–2014, Featuring Survival. J. Natl. Cancer Inst. 2017, 109, djx030. [Google Scholar] [CrossRef] [PubMed]

- Miki, T.; Yano, S.; Hanibuchi, M.; Sone, S. Bone Metastasis Model with Multiorgan Dissemination of Human Small-Cell Lung Cancer (SBC-5) Cells in Natural Killer Cell-Depleted SCID Mice. Oncol. Res. Featur. Preclin. Clin. Cancer Ther. 2001, 12, 209–217. [Google Scholar] [CrossRef] [PubMed]

- Mahmood, H.; Shaban, M.; Indave, B.I.; Santos-Silva, A.R.; Rajpoot, N.; Khurram, S.A. Use of artificial intelligence in diagnosis of head and neck precancerous and cancerous lesions: A systematic review. Oral Oncol. 2020, 110, 104885. [Google Scholar] [CrossRef] [PubMed]

- He, L.; Long, L.R.; Antani, S.; Thoma, G.R. Histology image analysis for carcinoma detection and grading. Comput. Methods Programs Biomed. 2012, 107, 538–556. [Google Scholar] [CrossRef] [PubMed]

- Komura, D.; Ishikawa, S. Machine Learning Methods for Histopathological Image Analysis. Comput. Struct. Biotechnol. J. 2018, 16, 34–42. [Google Scholar] [CrossRef]

- Andreadis, D.A.; Pavlou, A.M.; Panta, P. Biopsy and oral squamous cell carcinoma histopathology. In Oral Cancer Detection: Novel Strategies and Clinical Impact; Springer International Publishing: Cham, Switzerland, 2019; pp. 133–151. [Google Scholar]

- Gertych, A.; Swiderska-Chadaj, Z.; Ma, Z.; Ing, N.; Markiewicz, T.; Cierniak, S.; Salemi, H.; Guzman, S.; Walts, A.E.; Knudsen, B.S. Convolutional neural networks can accurately distinguish four histologic growth patterns of lung adenocarcinoma in digital slides. Sci. Rep. 2019, 9, 1483. [Google Scholar] [CrossRef]

- Zhang, X.; Xing, F.; Su, H.; Yang, L.; Zhang, S. High-throughput histopathological image analysis via robust cell segmentation and hashing. Med. Image Anal. 2015, 26, 306–315. [Google Scholar] [CrossRef]