The Importance of Artificial Intelligence in Upper Gastrointestinal Endoscopy

Abstract

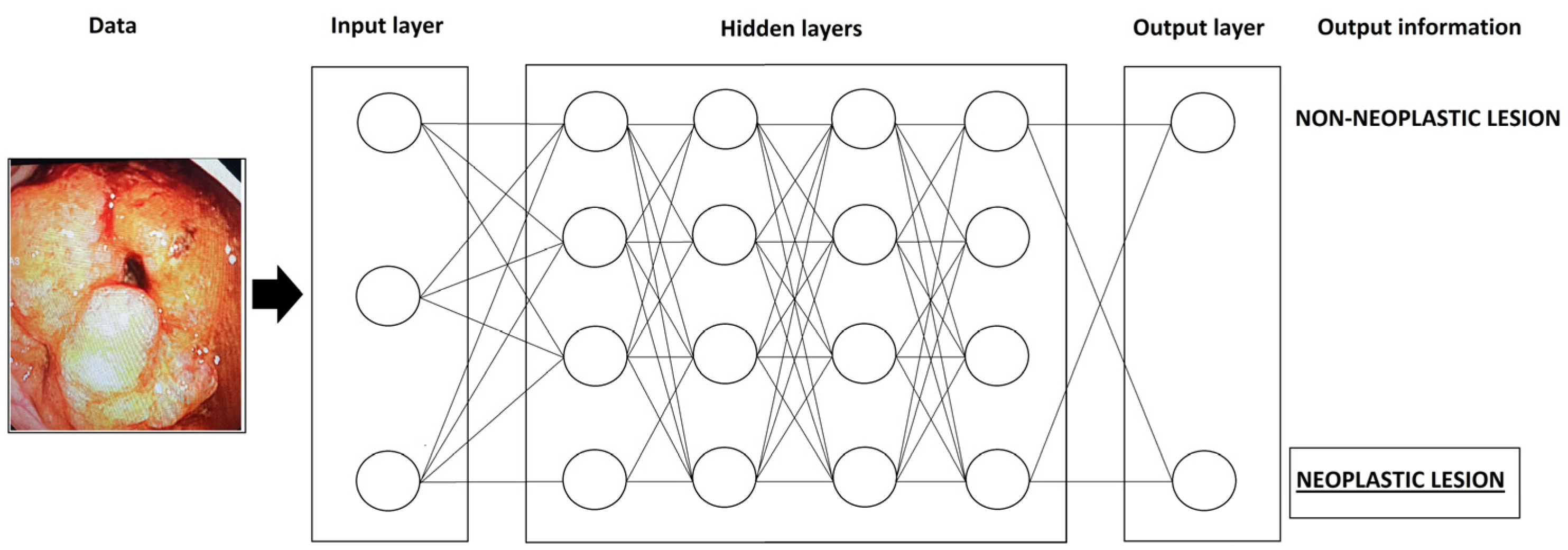

1. Introduction

- Quality assessment;

- Detection of lesions;

- Characterization of lesions.

2. Barrett’s Esophagus and Esophageal Adenocarcinoma

3. Esophageal Squamous Cell Carcinoma (ESCC)

4. Early Gastric Carcinoma

5. H. pylori Gastritis

6. Conclusions

Future Directions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Kaul, V.; Enslin, S.; Gross, S.A. History of artificial intelligence in medicine. Gastrointest. Endosc. 2020, 92, 807–812.

2. Tokat, M.; van Tilburg, L.; Koch, A.D.; Spaander, M.C. Artificial Intelligence in Upper Gastrointestinal Endoscopy. Dig. Dis. 2022, 40, 395–408.

3. Okagawa, Y.; Abe, S.; Yamada, M.; Oda, I.; Saito, Y. Artificial Intelligence in Endoscopy. Dig. Dis. Sci. 2022, 67, 1553–1572.

4. Ebigbo, A.; Palm, C.; Probst, A.; Mendel, R.; Manzeneder, J.; Prinz, F.; de Souza, L.A.; Papa, J.P.; Siersema, P.; Messmann, H. A technical review of artificial intelligence as applied to gastrointestinal endoscopy: Clarifying the terminology. Endosc. Int. Open 2019, 7, E1616–E1623.

5. Mori, Y.; Kudo, S.; Mohmed, H.E.N.; Misawa, M.; Ogata, N.; Itoh, H.; Oda, M.; Mori, K. Artificial intelligence and upper gastrointestinal endoscopy: Current status and future perspective. Dig. Endosc. 2019, 31, 378–388.

6. Hamade, N.; Sharma, P. Artificial intelligence in Barrett’s Esophagus. Ther. Adv. Gastrointest. Endosc. 2021, 14, 26317745211049964.

7. Renna, F.; Martins, M.; Neto, A.; Cunha, A.; Libânio, D.; Dinis-Ribeiro, M.; Coimbra, M. Artificial Intelligence for Upper Gastrointestinal Endoscopy: A Roadmap from Technology Development to Clinical Practice. Diagnostics 2022, 12, 1278.

8. Januszewicz, W.; Witczak, K.; Wieszczy, P.; Socha, M.; Turkot, M.H.; Wojciechowska, U.; Didkowska, J.; Kaminski, M.F.; Regula, J. Prevalence and risk factors of upper gastrointestinal cancers missed during endoscopy: A nationwide registry-based study. Endoscopy 2022, 54, 653–660.

9. De Groof, A.J.; Struyvenberg, M.R.; Fockens, K.N.; van der Putten, J.; van der Sommen, F.; Boers, T.G.; Zinger, S.; Bisschops, R.; de With, P.H.; Pouw, R.E.; et al. Deep learning algorithm detection of Barrett’s neoplasia with high accuracy during live endoscopic procedures: A pilot study (with video). Gastrointest. Endosc. 2020, 91, 1242–1250.

10. Ebigbo, A.; Mendel, R.; Probst, A.; Manzeneder, J.; Prinz, F.; de Souza, L.A., Jr.; Papa, J.; Palm, C.; Messmann, H. Real-time use of artificial intelligence in the evaluation of cancer in Barrett’s oesophagus. Gut 2020, 69, 615–616.

11. Peters, Y.; Al-Kaabi, A.; Shaheen, N.J.; Chak, A.; Blum, A.; Souza, R.F.; Di Pietro, M.; Iyer, P.G.; Pech, O.; Fitzgerald, R.C.; et al. Barrett oesophagus. Nat. Rev. Dis. Primers 2019, 5, 35.

12. De Groof, A.J.; Struyvenberg, M.R.; van der Putten, J.; van der Sommen, F.; Fockens, K.N.; Curvers, W.L.; Zinger, S.; Pouw, R.E.; Coron, E.; Baldaque-Silva, F.; et al. Deep-Learning System Detects Neoplasia in Patients with Barrett’s Esophagus with Higher Accuracy Than Endoscopists in a Multistep Training and Validation Study with Benchmarking. Gastroenterology 2020, 158, 915–929.e4.

13. Pennathur, A.; Gibson, M.K.; Jobe, B.A.; Luketich, J.D. Oesophageal carcinoma. Lancet 2013, 381, 400–412.

14. Weusten, B.; Bisschops, R.; Coron, E.; Dinis-Ribeiro, M.; Dumonceau, J.-M.; Esteban, J.-M.; Hassan, C.; Pech, O.; Repici, A.; Bergman, J.; et al. Endoscopic management of Barrett’s esophagus: European Society of Gastrointestinal Endoscopy (ESGE) Position Statement. Endoscopy 2017, 49, 191–198.

15. Sharaf, R.N.; Shergill, A.K.; Odze, R.D.; Krinsky, M.L.; Fukami, N.; Jain, R.; Appalaneni, V.; Anderson, M.A.; Ben-Menachem, T.; Chandrasekhara, V.; et al. Endoscopic mucosal tissue sampling. Gastrointest. Endosc. 2013, 78, 216–224.

16. Sharma, P.; Dent, J.; Armstrong, D.; Bergman, J.J.; Gossner, L.; Hoshihara, Y.; Jankowski, J.A.; Junghard, O.; Lundell, L.; Tytgat, G.N.; et al. The Development and Validation of an Endoscopic Grading System for Barrett’s Esophagus: The Prague C & M Criteria. Gastroenterology 2006, 131, 1392–1399.

17. Kusano, C.; Singh, R.; Lee, Y.Y.; Soh, Y.S.A.; Sharma, P.; Ho, K.; Gotoda, T. Global variations in diagnostic guidelines for Barrett’s esophagus. Dig. Endosc. 2022, 34, 1320–1328.

18. Milosavljevic, T.; Popovic, D.; Zec, S.; Krstic, M.; Mijac, D. Accuracy and Pitfalls in the Assessment of Early Gastrointestinal Lesions. Dig. Dis. 2019, 37, 364–373.

19. Nagao, S.; Tani, Y.; Shibata, J.; Tsuji, Y.; Tada, T.; Ishihara, R.; Fujishiro, M. Implementation of artificial intelligence in upper gastrointestinal endoscopy. DEN Open 2022, 2, e72.

20. Smyth, E.C.; Lagergren, J.; Fitzgerald, R.C.; Lordick, F.; Shah, M.A.; Lagergren, P.; Cunningham, D. Oesophageal cancer. Nat. Rev. Dis. Primers 2017, 3, 17048.

21. van der Sommen, F.; Zinger, S.; Curvers, W.L.; Bisschops, R.; Pech, O.; Weusten, B.L.A.M.; Bergman, J.J.G.H.M.; de With, P.H.N.; Schoon, E.J. Computer-aided detection of early neoplastic lesions in Barrett’s esophagus. Endoscopy 2016, 48, 617–624.

22. Lui, T.K.; Tsui, V.W.; Leung, W.K. Accuracy of artificial intelligence–assisted detection of upper GI lesions: A systematic review and meta-analysis. Gastrointest. Endosc. 2020, 92, 821–830.e9.

23. Fockens, K.N.; Jukema, J.B.; Boers, T.; Jong, M.R.; van der Putten, J.A.; Pouw, R.E.; Weusten, B.L.A.M.; Herrero, L.A.; Houben, M.H.M.G.; Nagengast, W.B.; et al. Towards a robust and compact deep learning system for primary detection of early Barrett’s neoplasia: Initial image-based results of training on a multi-center retrospectively collected data set. United Eur. Gastroenterol. J. 2023, 11, 324–336.

24. Abdelrahim, M.; Saiko, M.; Maeda, N.; Hossain, E.; Alkandari, A.; Subramaniam, S.; Parra-Blanco, A.; Sanchez-Yague, A.; Coron, E.; Repici, A.; et al. Development and validation of artificial neural networks model for detection of Barrett’s neoplasia: A multicenter pragmatic nonrandomized trial (with video). Gastrointest. Endosc. 2023, 97, 422–434.

25. Struyvenberg, M.R.; de Groof, A.J.; van der Putten, J.; van der Sommen, F.; Baldaque-Silva, F.; Omae, M.; Pouw, R.; Bisschops, R.; Vieth, M.; Schoon, E.J.; et al. A computer-assisted algorithm for narrow-band imaging-based tissue characterization in Barrett’s esophagus. Gastrointest. Endosc. 2021, 93, 89–98.

26. Swager, A.-F.; van der Sommen, F.; Klomp, S.R.; Zinger, S.; Meijer, S.L.; Schoon, E.J.; Bergman, J.J.; de With, P.H.; Curvers, W.L. Computer-aided detection of early Barrett’s neoplasia using volumetric laser endomicroscopy. Gastrointest. Endosc. 2017, 86, 839–846.

27. Visaggi, P.; Barberio, B.; Gregori, D.; Azzolina, D.; Martinato, M.; Hassan, C.; Sharma, P.; Savarino, E.; de Bortoli, N. Systematic review with meta-analysis: Artificial intelligence in the diagnosis of oesophageal diseases. Aliment. Pharmacol. Ther. 2022, 55, 528–540.

28. Hashimoto, R.; Requa, J.; Dao, T.; Ninh, A.; Tran, E.; Mai, D.; Lugo, M.; Chehade, N.E.-H.; Chang, K.J.; Karnes, W.E.; et al. Artificial intelligence using convolutional neural networks for real-time detection of early esophageal neoplasia in Barrett’s esophagus (with video). Gastrointest. Endosc. 2020, 91, 1264–1271.e1.

29. Abnet, C.C.; Arnold, M.; Wei, W.-Q. Epidemiology of Esophageal Squamous Cell Carcinoma. Gastroenterology 2018, 154, 360–373.

30. Meves, V.; Behrens, A.; Pohl, J. Diagnostics and Early Diagnosis of Esophageal Cancer. Visc. Med. 2015, 31, 315–318.

31. Morita, F.H.A.; Bernardo, W.M.; Ide, E.; Rocha, R.S.P.; Aquino, J.C.M.; Minata, M.K.; Yamazaki, K.; Marques, S.B.; Sakai, P.; de Moura, E.G.H. Narrow band imaging versus lugol chromoendoscopy to diagnose squamous cell carcinoma of the esophagus: A systematic review and meta-analysis. BMC Cancer 2017, 17, 54.

32. Codipilly, D.C.; Qin, Y.; Dawsey, S.M.; Kisiel, J.; Topazian, M.; Ahlquist, D.; Iyer, P.G. Screening for esophageal squamous cell carcinoma: Recent advances. Gastrointest. Endosc. 2018, 88, 413–426.

33. Kodashima, S.; Fujishiro, M.; Takubo, K.; Kammori, M.; Nomura, S.; Kakushima, N.; Muraki, Y.; Goto, O.; Ono, S.; Kaminishi, M.; et al. Ex vivo pilot study using computed analysis of endo-cytoscopic images to differentiate normal and malignant squamous cell epithelia in the oesophagus. Dig. Liver Dis. 2007, 39, 762–766.

34. Horie, Y.; Yoshio, T.; Aoyama, K.; Yoshimizu, S.; Horiuchi, Y.; Ishiyama, A.; Hirasawa, T.; Tsuchida, T.; Ozawa, T.; Ishihara, S.; et al. Diagnostic outcomes of esophageal cancer by artificial intelligence using convolutional neural networks. Gastrointest. Endosc. 2019, 89, 25–32.

35. Feng, Y.; Liang, Y.; Li, P.; Long, Q.; Song, J.; Li, M.; Wang, X.; Cheng, C.-E.; Zhao, K.; Ma, J.; et al. Artificial intelligence assisted detection of superficial esophageal squamous cell carcinoma in white-light endoscopic images by using a generalized system. Discov. Oncol. 2023, 14, 73.

36. Wang, S.X.; Ke, Y.; Liu, Y.M.; Liu, S.Y.; Song, S.B.; He, S.; Zhang, Y.M.; Dou, L.Z.; Liu, Y.; Liu, X.D.; et al. Establishment and clinical validation of an artificial intelligence YOLOv51 model for the detection of precancerous lesions and superficial esophageal cancer in endoscopic procedure. Chin. J. Oncol. 2022, 44, 395–401.

37. Shimamoto, Y.; Ishihara, R.; Kato, Y.; Shoji, A.; Inoue, T.; Matsueda, K.; Miyake, M.; Waki, K.; Kono, M.; Fukuda, H.; et al. Real-time assessment of video images for esophageal squamous cell carcinoma invasion depth using artificial intelligence. J. Gastroenterol. 2020, 55, 1037–1045.

38. Yuan, X.; Guo, L.; Liu, W.; Zeng, X.; Mou, Y.; Bai, S.; Pan, Z.; Zhang, T.; Pu, W.; Wen, C.; et al. Artificial intelligence for detecting superficial esophageal squamous cell carcinoma under multiple endoscopic imaging modalities: A multicenter study. J. Gastroenterol. Hepatol. 2022, 37, 169–178.

39. Ohmori, M.; Ishihara, R.; Aoyama, K.; Nakagawa, K.; Iwagami, H.; Matsuura, N.; Shichijo, S.; Yamamoto, K.; Nagaike, K.; Nakahara, M.; et al. Endoscopic detection and differentiation of esophageal lesions using a deep neural network. Gastrointest. Endosc. 2020, 91, 301–309.e1.

40. Guo, L.; Xiao, X.; Wu, C.; Zeng, X.; Zhang, Y.; Du, J.; Bai, S.; Xie, J.; Zhang, Z.; Li, Y.; et al. Real-time automated diagnosis of precancerous lesions and early esophageal squamous cell carcinoma using a deep learning model (with videos). Gastrointest. Endosc. 2020, 91, 41–51.

41. Nakagawa, K.; Ishihara, R.; Aoyama, K.; Ohmori, M.; Nakahira, H.; Matsuura, N.; Shichijo, S.; Nishida, T.; Yamada, T.; Yamaguchi, S.; et al. Classification for invasion depth of esophageal squamous cell carcinoma using a deep neural network compared with experienced endoscopists. Gastrointest. Endosc. 2019, 90, 407–414.

42. Milano, A.F. 20-Year Comparative Survival and Mortality of Cancer of the Stomach by Age, Sex, Race, Stage, Grade, Cohort Entry Time-Period, Disease Duration & Selected ICD-O-3 Oncologic Phenotypes: A Systematic Review of 157,258 Cases for Diagnosis Years 1973–2014: (SEER*Stat 8.3.4). J. Insur. Med. 2019, 48, 5–23.

43. Jin, P.; Ji, X.; Kang, W.; Li, Y.; Liu, H.; Ma, F.; Ma, S.; Hu, H.; Li, W.; Tian, Y. Artificial intelligence in gastric cancer: A systematic review. J. Cancer Res. Clin. Oncol. 2020, 146, 2339–2350.

44. Yang, K.; Lu, L.; Liu, H.; Wang, X.; Gao, Y.; Yang, L.; Li, Y.; Su, M.; Jin, M.; Khan, S. A comprehensive update on early gastric cancer: Defining terms, etiology, and alarming risk factors. Expert Rev. Gastroenterol. Hepatol. 2021, 15, 255–273.

45. Hartgrink, H.H.; Jansen, E.P.; van Grieken, N.C.; van de Velde, C.J. Gastric cancer. Lancet 2009, 374, 477–490.

46. Young, E.; Philpott, H.; Singh, R. Endoscopic diagnosis and treatment of gastric dysplasia and early cancer: Current evidence and what the future may hold. World J. Gastroenterol. 2021, 27, 5126–5151.

47. Yao, K.; Uedo, N.; Kamada, T.; Hirasawa, T.; Nagahama, T.; Yoshinaga, S.; Oka, M.; Inoue, K.; Mabe, K.; Yao, T.; et al. Guidelines for endoscopic diagnosis of early gastric cancer. Dig. Endosc. 2020, 32, 663–698.

48. Waddingham, W.; Nieuwenburg, S.A.V.; Carlson, S.; Rodriguez-Justo, M.; Spaander, M.; Kuipers, E.J.; Jansen, M.; Graham, D.G.; Banks, M. Recent advances in the detection and management of early gastric cancer and its precursors. Front. Gastroenterol. 2020, 12, 322–331.

49. Miyaki, R.; Yoshida, S.; Tanaka, S.; Kominami, Y.; Sanomura, Y.; Matsuo, T.; Oka, S.; Raytchev, B.; Tamaki, T.; Koide, T.; et al. Quantitative identification of mucosal gastric cancer under magnifying endoscopy with flexible spectral imaging color enhancement. J. Gastroenterol. Hepatol. 2013, 28, 841–847.

50. Luo, H.; Xu, G.; Li, C.; He, L.; Luo, L.; Wang, Z.; Jing, B.; Deng, Y.; Jin, Y.; Li, Y.; et al. Real-time artificial intelligence for detection of upper gastrointestinal cancer by endoscopy: A multicentre, case-control, diagnostic study. Lancet Oncol. 2019, 20, 1645–1654.

51. Kubota, K.; Kuroda, J.; Yoshida, M.; Ohta, K.; Kitajima, M. Medical image analysis: Computer-aided diagnosis of gastric cancer invasion on endoscopic images. Surg. Endosc. 2012, 26, 1485–1489.

52. Niikura, R.; Aoki, T.; Shichijo, S.; Yamada, A.; Kawahara, T.; Kato, Y.; Hirata, Y.; Hayakawa, Y.; Suzuki, N.; Ochi, M.; et al. Artificial intelligence versus expert endoscopists for diagnosis of gastric cancer in patients who have undergone upper gastrointestinal endoscopy. Endoscopy 2022, 54, 780–784.

53. Costa, L.C.d.S.; Santos, J.O.M.; Miyajima, N.T.; Montes, C.G.; Andreollo, N.A.; Lopes, L.R. Efficacy analysis of endoscopic submucosal dissection for the early gastric cancer and precancerous lesions. Arq. Gastroenterol. 2022, 59, 421–427.

54. Niu, P.-H.; Zhao, L.-L.; Wu, H.-L.; Zhao, D.-B.; Chen, Y.-T. Artificial intelligence in gastric cancer: Application and future perspectives. World J. Gastroenterol. 2020, 26, 5408–5419.

55. Tang, D.; Wang, L.; Ling, T.; Lv, Y.; Ni, M.; Zhan, Q.; Fu, Y.; Zhuang, D.; Guo, H.; Dou, X.; et al. Development and validation of a real-time artificial intelligence-assisted system for detecting early gastric cancer: A multicentre retrospective diagnostic study. EBioMedicine 2020, 62, 103146.

56. Ikenoyama, Y.; Hirasawa, T.; Ishioka, M.; Namikawa, K.; Yoshimizu, S.; Horiuchi, Y.; Ishiyama, A.; Yoshio, T.; Tsuchida, T.; Takeuchi, Y.; et al. Detecting early gastric cancer: Comparison between the diagnostic ability of convolutional neural networks and endoscopists. Dig. Endosc. 2021, 33, 141–150.

57. Nagao, S.; Tsuji, Y.; Sakaguchi, Y.; Takahashi, Y.; Minatsuki, C.; Niimi, K.; Yamashita, H.; Yamamichi, N.; Seto, Y.; Tada, T.; et al. Highly accurate artificial intelligence systems to predict the invasion depth of gastric cancer: Efficacy of conventional white-light imaging, nonmagnifying narrow-band imaging, and indigo-carmine dye contrast imaging. Gastrointest. Endosc. 2020, 92, 866–873.e1.

58. Kanesaka, T.; Lee, T.-C.; Uedo, N.; Lin, K.-P.; Chen, H.-Z.; Lee, J.-Y.; Wang, H.-P.; Chang, H.-T. Computer-aided diagnosis for identifying and delineating early gastric cancers in magnifying narrow-band imaging. Gastrointest. Endosc. 2018, 87, 1339–1344.

59. Zhu, Y.; Wang, Q.-C.; Xu, M.-D.; Zhang, Z.; Cheng, J.; Zhong, Y.-S.; Zhang, Y.-Q.; Chen, W.-F.; Yao, L.-Q.; Zhou, P.-H.; et al. Application of convolutional neural network in the diagnosis of the invasion depth of gastric cancer based on conventional endoscopy. Gastrointest. Endosc. 2019, 89, 806–815.e1.

60. Yang, H.; Hu, B. Diagnosis of Helicobacter pylori Infection and Recent Advances. Diagnostics 2021, 11, 1305.

61. Bang, C.S.; Lee, J.J.; Baik, G.H. Artificial Intelligence for the Prediction of Helicobacter Pylori Infection in Endoscopic Images: Systematic Review and Meta-Analysis of Diagnostic Test Accuracy. J. Med. Internet Res. 2020, 22, e21983.

62. Pannala, R.; Krishnan, K.; Melson, J.; Parsi, M.A.; Schulman, A.R.; Sullivan, S.; Trikudanathan, G.; Trindade, A.J.; Watson, R.R.; Maple, J.T.; et al. Artificial intelligence in gastrointestinal endoscopy. VideoGIE 2020, 5, 598–613.

63. Shichijo, S.; Nomura, S.; Aoyama, K.; Nishikawa, Y.; Miura, M.; Shinagawa, T.; Takiyama, H.; Tanimoto, T.; Ishihara, S.; Matsuo, K.; et al. Application of Convolutional Neural Networks in the Diagnosis of Helicobacter pylori Infection Based on Endoscopic Images. EBioMedicine 2017, 25, 106–111.

64. Zheng, W.; Zhang, X.; Kim, J.J.; Zhu, X.; Ye, G.; Ye, B.; Wang, J.; Luo, S.; Li, J.; Yu, T.; et al. High Accuracy of Convolutional Neural Network for Evaluation of Helicobacter pylori Infection Based on Endoscopic Images: Preliminary Experience. Clin. Transl. Gastroenterol. 2019, 10, e00109.

65. Bordin, D.S.; Voynovan, I.N.; Andreev, D.N.; Maev, I.V. Current Helicobacter pylori Diagnostics. Diagnostics 2021, 11, 1458.

66. Nakashima, H.; Kawahira, H.; Kawachi, H.; Sakaki, N. Artificial intelligence diagnosis of Helicobacter pylori infection using blue laser imaging-bright and linked color imaging: A single-center prospective study. Ann. Gastroenterol. 2018, 31, 462–468.

67. Nakashima, H.; Kawahira, H.; Sakaki, N. Endoscopic three-categorical diagnosis of Helicobacter pylori infection using linked color imaging and deep learning: A single-center prospective study (with video). Gastric Cancer 2020, 23, 1033–1040.

68. Seo, J.Y.; Hong, H.; Ryu, W.-S.; Kim, D.; Chun, J.; Kwak, M.-S. Development and validation of a convolutional neural network model for diagnosing Helicobacter pylori infections with endoscopic images: A multicenter study. Gastrointest. Endosc. 2023, 97, 880–888.e2.

69. Li, Y.-D.; Wang, H.-G.; Chen, S.-S.; Yu, J.-P.; Ruan, R.-W.; Jin, C.-H.; Chen, M.; Jin, J.-Y.; Wang, S. Assessment of Helicobacter pylori infection by deep learning based on endoscopic videos in real time. Dig. Liver Dis. 2023, 55, 649–654.

70. Yasuda, T.; Hiroyasu, T.; Hiwa, S.; Okada, Y.; Hayashi, S.; Nakahata, Y.; Yasuda, Y.; Omatsu, T.; Obora, A.; Kojima, T.; et al. Potential of automatic diagnosis system with linked color imaging for diagnosis of Helicobacter pylori infection. Dig. Endosc. 2020, 32, 373–381.

71. Itoh, T.; Kawahira, H.; Nakashima, H.; Yata, N. Deep learning analyzes Helicobacter pylori infection by upper gastrointestinal endoscopy images. Endosc. Int. Open 2018, 6, E139–E144.

| Authors (Reference) | Year | Country | Design | Dichotomous Variable | Endoscopic Methods | AI Method | Accuracy (%) | Sensitivity (%) | Specificity (%) |
|---|---|---|---|---|---|---|---|---|---|
| van der Sommen [21] | 2016 | Netherlands | Retrospective | neoplastic/non-neoplastic BE | WLI | SVM | N/A | 86.0 | 87.0 |
| de Groof [12] | 2020 | Netherlands | Retrospective | neoplastic/non-neoplastic BE | WLI | ResNet/UNet (CNN) | 89.0 | 90.0 | 88.0 |
| Fockens [23] | 2023 | Netherlands | Prospective (multicentric) | neoplastic/non-neoplastic BE | WLI | CNN | N/A | 100.0 | 66.0 |
| Abdelrahim [24] | 2023 | UK | Retrospective (multicentric) | neoplastic/non-neoplastic BE | WLI | CNN | 92.0 | 93.8 | 90.7 |
| Struyvenberg [25] | 2021 | Netherlands | Retrospective (multicentric) | neoplastic/non-neoplastic BE | NBI | ResNet/UNet (CNN) | 84.0 | 88.0 | 78.0 |
| de Groof [9] | 2020 | Netherlands | Prospective | neoplastic/non-neoplastic BE | WLI | ResNet/UNet (CNN) | 90.0 | 91.0 | 89.0 |
| Ebigbo [10] | 2020 | Germany | Prospective | neoplastic/non-neoplastic BE | WLI | CNN | 89.9 | 83.7 | 100 |
| Hashimoto [28] | 2019 | USA | Retrospective | neoplastic/non-neoplastic BE | WLI | CNN | N/A | 98.6 | 88.8 |
| | | | | | NBI | | N/A | 92.4 | 99.2 |
| Swager [26] | 2017 | Netherlands | Retrospective | neoplastic/non-neoplastic BE | VLE | CNN | N/A | | |
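
The Performance columns in these tables report accuracy, sensitivity, and specificity as percentages. As a reminder of how these standard metrics relate to one another, the following minimal Python sketch computes all three from the counts of a binary confusion matrix. The class and function names (`ConfusionCounts`, `sensitivity`, `specificity`, `accuracy`) are hypothetical illustrations, and the counts used below are invented, not drawn from any study cited here; the unit being counted (image, lesion, video, or patient) is also an assumption, since it may vary between studies.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ConfusionCounts:
    """Hypothetical counts for one binary task, e.g. neoplastic vs non-neoplastic BE.

    The counted unit (image, lesion, video, or patient) is an assumption;
    studies may report per-image or per-patient results.
    """
    tp: int  # true positives: condition present, model positive
    fn: int  # false negatives: condition present, model negative
    tn: int  # true negatives: condition absent, model negative
    fp: int  # false positives: condition absent, model positive


def sensitivity(c: ConfusionCounts) -> float:
    """Percentage of condition-positive cases that the model flags."""
    return 100.0 * c.tp / (c.tp + c.fn)


def specificity(c: ConfusionCounts) -> float:
    """Percentage of condition-negative cases that the model clears."""
    return 100.0 * c.tn / (c.tn + c.fp)


def accuracy(c: ConfusionCounts) -> float:
    """Percentage of all cases classified correctly."""
    total = c.tp + c.fn + c.tn + c.fp
    return 100.0 * (c.tp + c.tn) / total


if __name__ == "__main__":
    # Invented counts for illustration only.
    counts = ConfusionCounts(tp=45, fn=5, tn=80, fp=20)
    print(f"Accuracy:    {accuracy(counts):.1f}%")     # 83.3%
    print(f"Sensitivity: {sensitivity(counts):.1f}%")  # 90.0%
    print(f"Specificity: {specificity(counts):.1f}%")  # 80.0%
```

Sensitivity and specificity each depend only on one class, whereas accuracy also depends on the proportion of positive to negative cases in the test set. This is why a model can report high accuracy alongside modest sensitivity when negatives dominate, as in the WLI row for Shimamoto [37] (87.3% accuracy, 50.0% sensitivity).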

| Authors (Reference) | Year | Country | Design | Dichotomous Variable | Endoscopic Methods | AI Method | Accuracy (%) | Sensitivity (%) | Specificity (%) |
|---|---|---|---|---|---|---|---|---|---|
| Horie [34] | 2019 | Japan | Retrospective | cancer/non-cancer | WLI | CNN | N/A | 98.0 * | 79.0 ** |
| Feng [35] | 2023 | China | Retrospective | cancer/non-cancer | WLI | CNN | 88.3 | 90.1 | 94.3 |
| Wang [36] | 2023 | China | Retrospective | cancer/non-cancer | WLI | YOLOv5l | 96.9 | 87.9 | 98.3 |
| | | | | | NBI | | 98.6 | 89.3 | 99.5 |
| | | | | | LCE | | 93.0 | 77.5 | 98.0 |
| Shimamoto [37] | 2020 | Japan | Retrospective | depth of invasion | WLI | CNN | 87.3 | 50.0 | 98.7 |
| | | | | | ME | | 89.2 | 70.8 | 94.9 |
| Yuan [38] | 2022 | China | Retrospective (multicentric) | superficial carcinoma/non-carcinoma | WLI | CNN | 86.6 | 93.3 | 78.5 |
| | | | | | non-ME NBI | | 91.7 | 98.0 | 85.1 |
| | | | | | ME-NBI | | 96.5 | 99.4 | 89.0 |
| | | | | | Iodine staining | | 92.2 | 96.7 | 86.9 |
| Ohmori [39] | 2020 | Japan | Retrospective | cancer/non-cancer | WLI | CNN | 81.0 | 90.0 | 76.0 |
| | | | | | NBI/BLI | | 77.0 | 100.0 | 63.0 |
| | | | | | ME | | 77.0 | 98.0 | 56.0 |
| Guo [40] | 2019 | India | Retrospective | cancer/non-cancer | NBI | SegNet | N/A | 98.0 | 95.0 |
| Nakagawa [41] | 2019 | Japan | Retrospective | cancer/non-cancer | WLI | Single Shot MultiBox | 91.0 | 90.1 | 95.8 |

| Authors (Reference) | Year | Country | Design | Dichotomous Variable | Endoscopic Methods | AI Method | Accuracy (%) | Sensitivity (%) | Specificity (%) |
|---|---|---|---|---|---|---|---|---|---|
| Miyaki [49] | 2013 | Japan | Retrospective | cancer/non-cancer | ME-FICE | SVM | 85.9 | 84.8 | 87.0 |
| Luo [50] | 2019 | China | Retrospective (multicentric) | cancer/non-cancer | WLI | CNN | 92.7 | 94.6 | 91.3 |
| Tang [55] | 2020 | China | Retrospective | cancer/non-cancer | WLI | CNN | 85.1–91.2 | 85.9–95.5 | 81.7–90.3 |
| Ikenoyama [56] | 2021 | Japan | Retrospective | cancer/non-cancer | WLI, ICE, NBI | CNN | N/A | 58.4 | 87.3 |
| Nagao [57] | 2020 | Japan | Retrospective | depth of invasion | WLI | CNN | 94.4 | 84.4 | 99.3 |
| | | | | | NBI | | 94.3 | 75.0 | 100.0 |
| | | | | | ICE | | 95.5 | 87.5 | 100.0 |
| Kanesaka [58] | 2018 | Taiwan | Retrospective | cancer/non-cancer | ME-NBI | SVM | 96.3 | 96.7 | 95.0 |
| Zhu [59] | 2018 | USA | Retrospective | depth of invasion | WLI | CNN | 89.1 | 76.4 | 95.5 |

| Authors (Reference) | Year | Country | Design | Dichotomous Variable | Endoscopic Methods | AI Method | Accuracy (%) | Sensitivity (%) | Specificity (%) |
|---|---|---|---|---|---|---|---|---|---|
| Shichijo [63] | 2017 | Japan | Retrospective | Presence/absence H. pylori | WLI | CNN | 87.7 | 88.9 | 87.4 |
| Zheng [64] | 2019 | China | Retrospective | Presence/absence H. pylori | WLI | CNN (ResNet-50) | 93.8 * | 91.6 * | 98.6 * |
| Seo [68] | 2023 | Korea | Retrospective (multicentric) | Presence/absence H. pylori | WLI | CNN | 94.0 ** | 96.0 ** | 90.0 ** |
| | | | | | | | 88.0 *** | 92.0 *** | 79.0 *** |
| Nakashima [67] | 2020 | Japan | Prospective | Presence/absence H. pylori | WLI | CNN | 77.5 | 60.0 | 86.2 |
| | | | | | LCI | | 82.5 | 62.5 | 92.5 |
| Li [69] | 2023 | China | Retrospective | Presence/absence H. pylori | WLI | CNN | 84.0 | 82.0 | 86.0 |
| Yasuda [70] | 2019 | Japan | Retrospective | Presence/absence H. pylori | LCI | SVM | 87.6 | 90.5 | 85.7 |
| Itoh [71] | 2019 | Japan | Prospective | Presence/absence H. pylori | WLI | CNN | N/A | 86.7 | 86.7 |
| Nakashima [66] | 2018 | Japan | Prospective | Presence/absence H. pylori | WLI | CNN | N/A | 66.7 | 60.0 |
| | | | | | BLI-bright | | N/A | 96.7 | 86.7 |
| | | | | | LCI | | N/A | 96.7 | 83.3 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Popovic, D.; Glisic, T.; Milosavljevic, T.; Panic, N.; Marjanovic-Haljilji, M.; Mijac, D.; Stojkovic Lalosevic, M.; Nestorov, J.; Dragasevic, S.; Savic, P.; Filipovic, B. The Importance of Artificial Intelligence in Upper Gastrointestinal Endoscopy. Diagnostics 2023, 13, 2862. https://doi.org/10.3390/diagnostics13182862