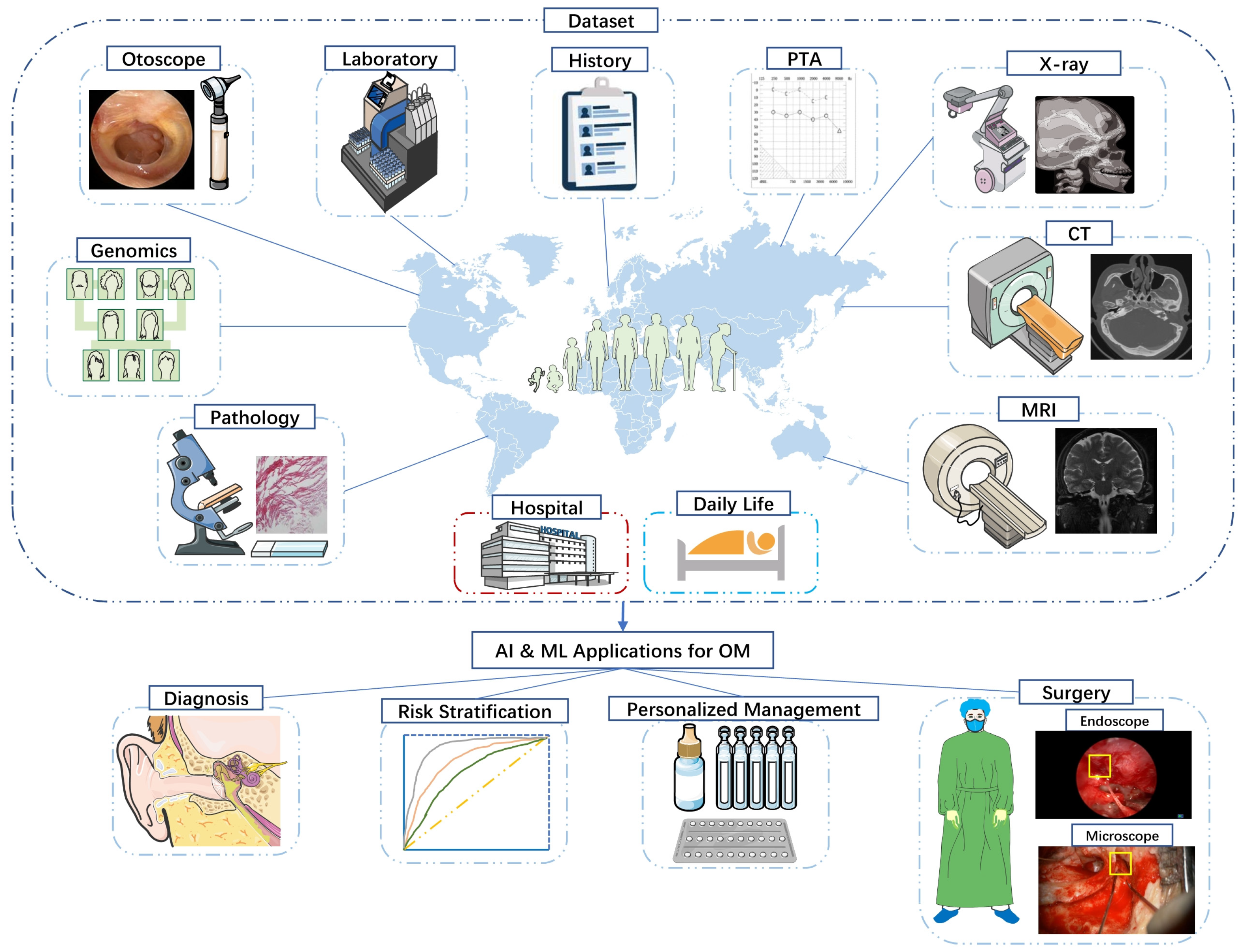

Diagnosis, Treatment, and Management of Otitis Media with Artificial Intelligence

Abstract

1. Introduction

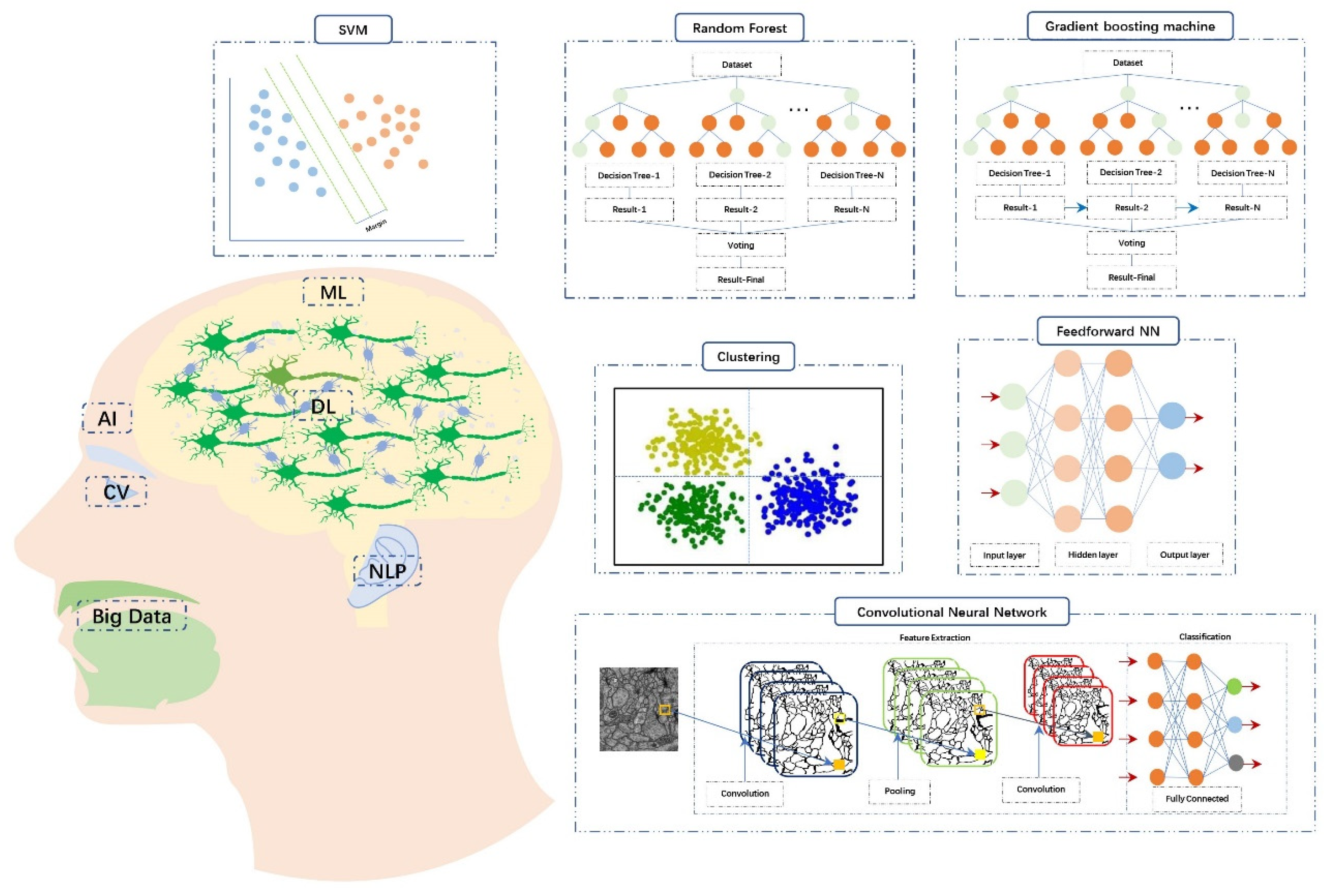

2. Artificial Intelligence & Machine Learning

2.1. What

2.2. How

2.3. Why

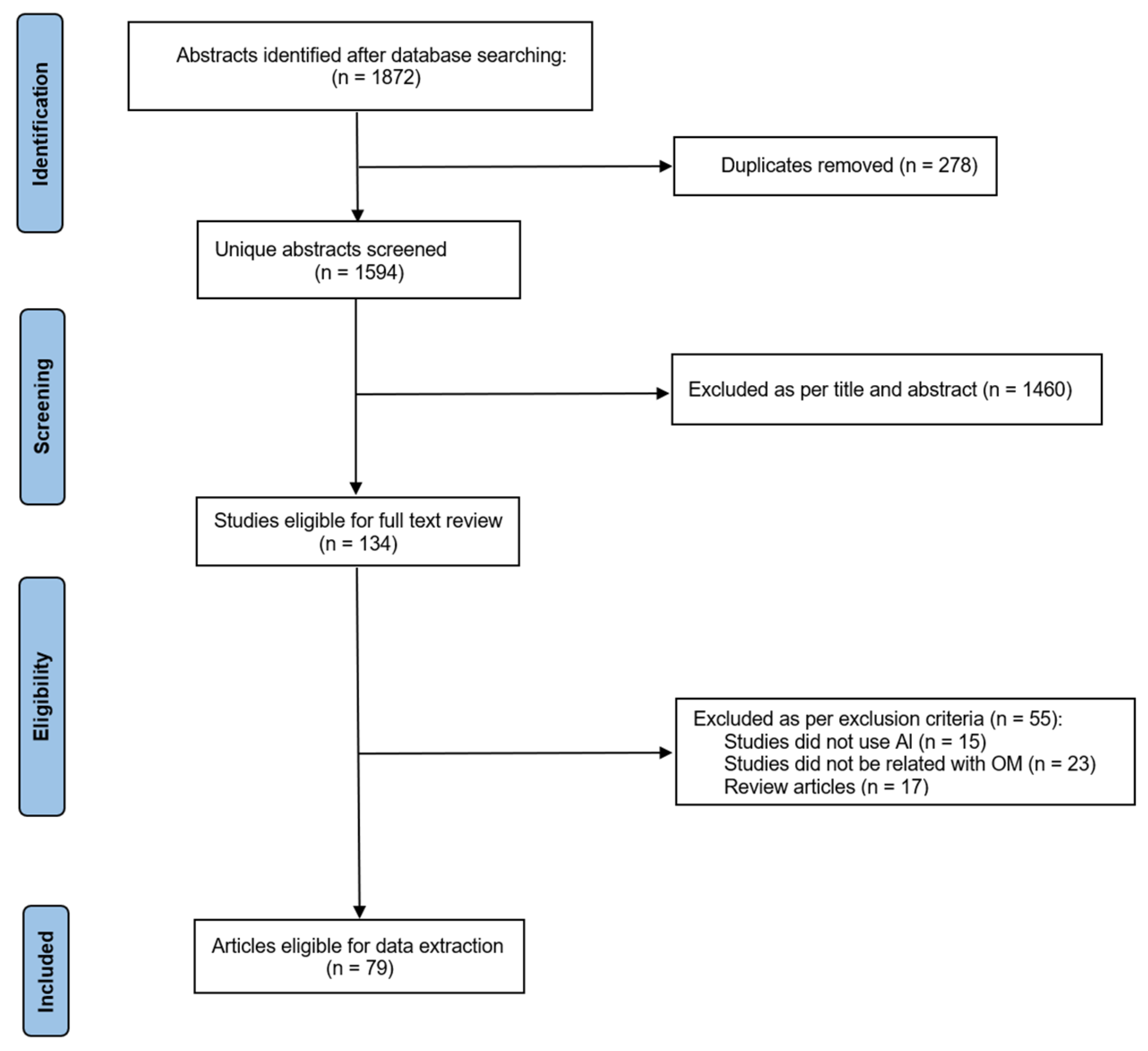

3. Materials and Methods

3.1. Literature Search

3.2. Selection Criteria

3.3. Data Extraction

4. Diagnosis

4.1. Computer Vision

4.1.1. Otoscopy

4.1.2. Radiology & Pathology

4.1.3. Tympanometry

4.2. Natural Language Processing

5. Treatment

5.1. Internal Medicine

5.2. Surgery

6. Risk Prediction & Postoperative Care

7. Challenges and Future Considerations

7.1. Data & Algorithm

7.2. Application

7.3. Privacy & Regulation

8. Conclusions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Full Term |
|---|---|
| ENT | Ear-nose-throat |
| OM | Otitis media |
| OME | Otitis media with effusion |
| AOM | Acute otitis media |
| COM | Chronic otitis media |
| CSOM | Chronic suppurative otitis media |
| PCD | Primary ciliary dyskinesia |
| TM | Tympanic membrane |
| ABG | Air-bone gap |
| FNDs | Fluorescent nanodiamonds |
| VPO | Video pneumatic otoscope |
| OCT | Optical coherence tomography |
| WBT | Wideband tympanometry |
| AI | Artificial intelligence |
| CNN | Convolutional neural networks |
| ML | Machine learning |
| DL | Deep learning |
| CV | Computer vision |
| NLP | Natural language processing |
| XAI | Explainable artificial intelligence |
| Grad-CAM | Gradient-weighted Class Activation Mapping |
| BOW | Bag-of-words model |
| NCA | Neighborhood component analysis |
| FNN | Feedforward neural networks |


| ML Algorithms | Definition |
|---|---|
| Supervised learning | The model is trained on labeled data to make predictions. It is mainly used for classification (discrete labels) and regression (continuous values) problems. |
| Ensemble learning | Multiple models are generated and then combined according to a chosen method. It is mainly applied to improve model performance or to reduce the risk of selecting an unsuitable model. |
| Bagging-Random forest (RF) | A type of ensemble learning based on decision trees. Each decision tree produces an independent, de-correlated output, and the final result is determined by majority vote. Each decision tree has equal weight. |
| Boosting-Gradient boosting machine (GBM) | The basic unit is the decision tree. The first decision tree is a weak learner that may perform little better than random guessing. Each later tree builds on the previous ones, adjusting to their errors so that accuracy gradually improves. The final result is determined by combining the predicted values of all decision trees. Base learners (decision trees) with better performance have higher weight. |
| Support vector machine (SVM) | For a given set of training samples, with the functional margin as the constraint and the geometric margin as the objective function, the maximum-margin hyperplane with the best generalization ability and the greatest robustness is determined, so as to achieve binary classification of the data. |
| Unsupervised learning | The training data are not labeled, and the goal is to find patterns in the data. It is mainly used to solve clustering and dimensionality reduction problems. |
| Clustering | The process of sorting data into different classes or clusters based on mathematical similarity. |
| Dimensionality reduction | Converting high-dimensional data into a lower-dimensional representation with fewer features. It is often used for data visualization or data preprocessing. |
| Reinforcement learning | Through interaction with its environment and trial and error, the model learns the mapping from environment states to actions that maximizes cumulative reward. |
| Transfer learning (TL) | Knowledge learned in one domain is transferred to another, saving considerable training time and computing resources. |
| Deep learning (DL) | Mimicking the hierarchical network of neurons in the brain and using multiple layers of data processing, the model can automatically detect the required features and predict the result. However, it requires larger quantities of data and greater computational capacity. |
| Feedforward neural networks (FNN) | Neurons are arranged in layers. Each neuron receives only the output of the previous layer and passes its own output to the next layer. There is no feedback between adjacent layers. |
| Convolutional neural networks (CNN) | Often used in image analysis. Through convolution layers, activation functions, and pooling layers, the input image is reduced to a compact approximation and redundant features are removed, which lowers computational complexity and helps prevent overfitting. The significant features are then combined through the fully connected layer to output a prediction or classification. |
| Natural language processing (NLP) | Algorithms are built to organize and interpret human language. The aim is to enable interactive communication between humans and machines. |
| Computer vision (CV) | Algorithms are built to enable the computer to perceive, observe, and understand its environment through images and vision, and ultimately to adapt to that environment. |
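The contrast the table draws between bagging (every tree has equal weight, majority vote) and boosting (better base learners have higher weight) can be illustrated with a minimal sketch. The function names, the class labels, and the weights below are hypothetical and chosen only for illustration. The weighted vote is a simplification: practical gradient boosting implementations typically sum the numeric outputs of the trees rather than vote on labels.

```python
from collections import Counter, defaultdict


def majority_vote(predictions):
    """Bagging-style combination: every tree's vote counts equally."""
    counts = Counter(predictions)
    # most_common(1) returns a one-element list of (label, count)
    return counts.most_common(1)[0][0]


def weighted_vote(predictions, weights):
    """Boosting-style combination: each vote is scaled by its learner's weight."""
    if len(predictions) != len(weights):
        raise ValueError("predictions and weights must have the same length")
    totals = defaultdict(float)
    for label, weight in zip(predictions, weights):
        totals[label] += weight
    # Return the label with the largest accumulated weight
    return max(totals, key=totals.get)


# Hypothetical outputs of five decision trees for a single otoscopic image
tree_votes = ["AOM", "OME", "AOM", "OME", "AOM"]
# Hypothetical learner weights (higher = better-performing tree)
tree_weights = [0.5, 2.0, 0.5, 1.5, 0.5]

print(majority_vote(tree_votes))
print(weighted_vote(tree_votes, tree_weights))
```

With equal weights the three "AOM" votes win. Once the two better-performing trees carry more weight, the combined result shifts to "OME", which is why the two ensemble strategies can disagree on the same set of base predictions.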

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Ding, X.; Huang, Y.; Tian, X.; Zhao, Y.; Feng, G.; Gao, Z. Diagnosis, Treatment, and Management of Otitis Media with Artificial Intelligence. Diagnostics 2023, 13, 2309. https://doi.org/10.3390/diagnostics13132309
