Breast Cancer Diagnosis Based on IoT and Deep Transfer Learning Enabled by Fog Computing

Abstract

1. Introduction

- Integration of Fog computing with Cloud computing and IoT for real-time analysis and for deploying an IoT monitoring system;

- Automatic, remote diagnosis of benign and malignant breast cancer in different people;

- A real-time breast cancer diagnosis model trained with DTL on mammogram images;

- Presentation and analysis of the predictive and network performance of the proposed system;

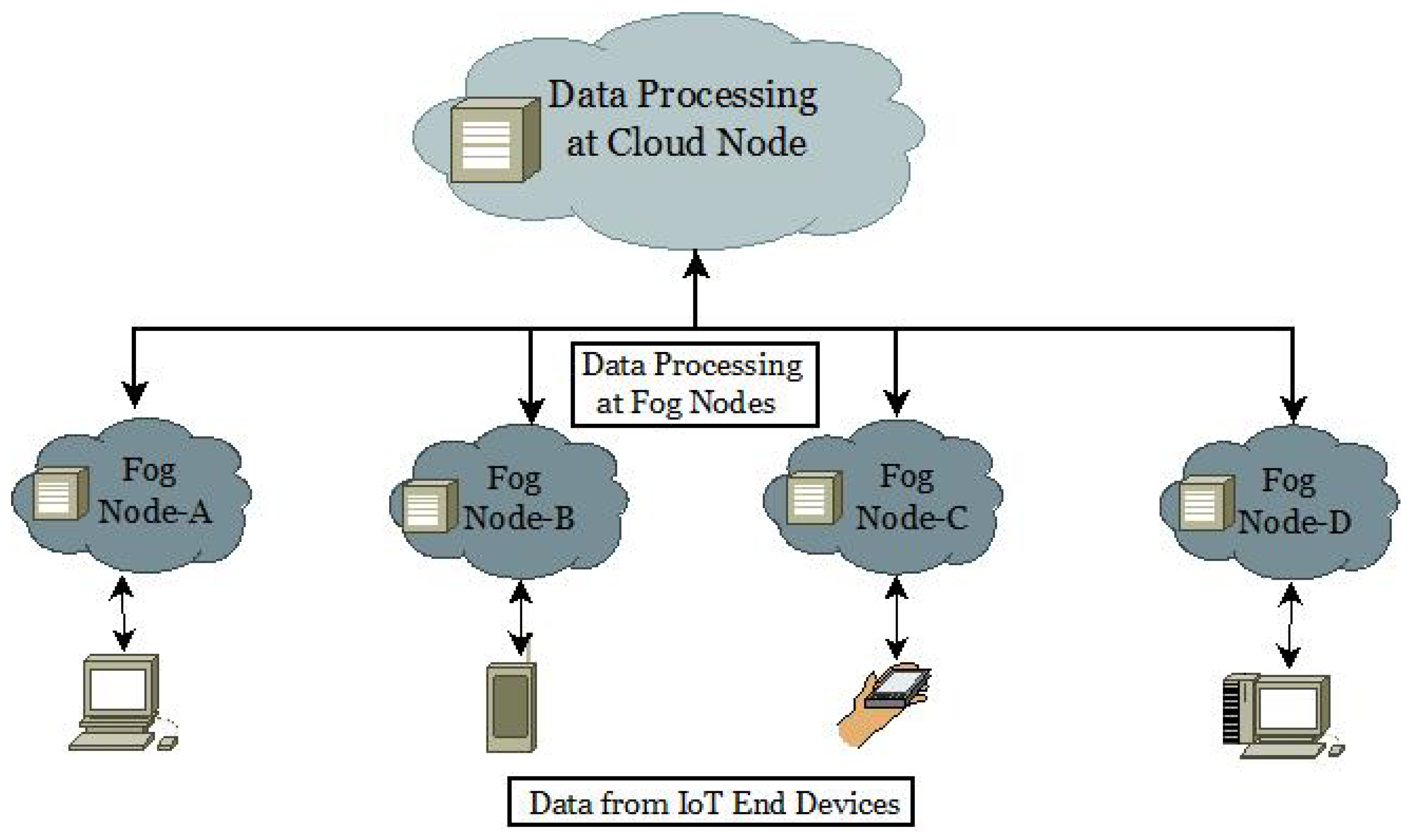

- Predictive analytics by modeling and simulating IoT–Fog–Cloud environments;

- Introducing the findings and comparing them with prior research to emphasize the unique contribution of the current study.

2. Literature Study

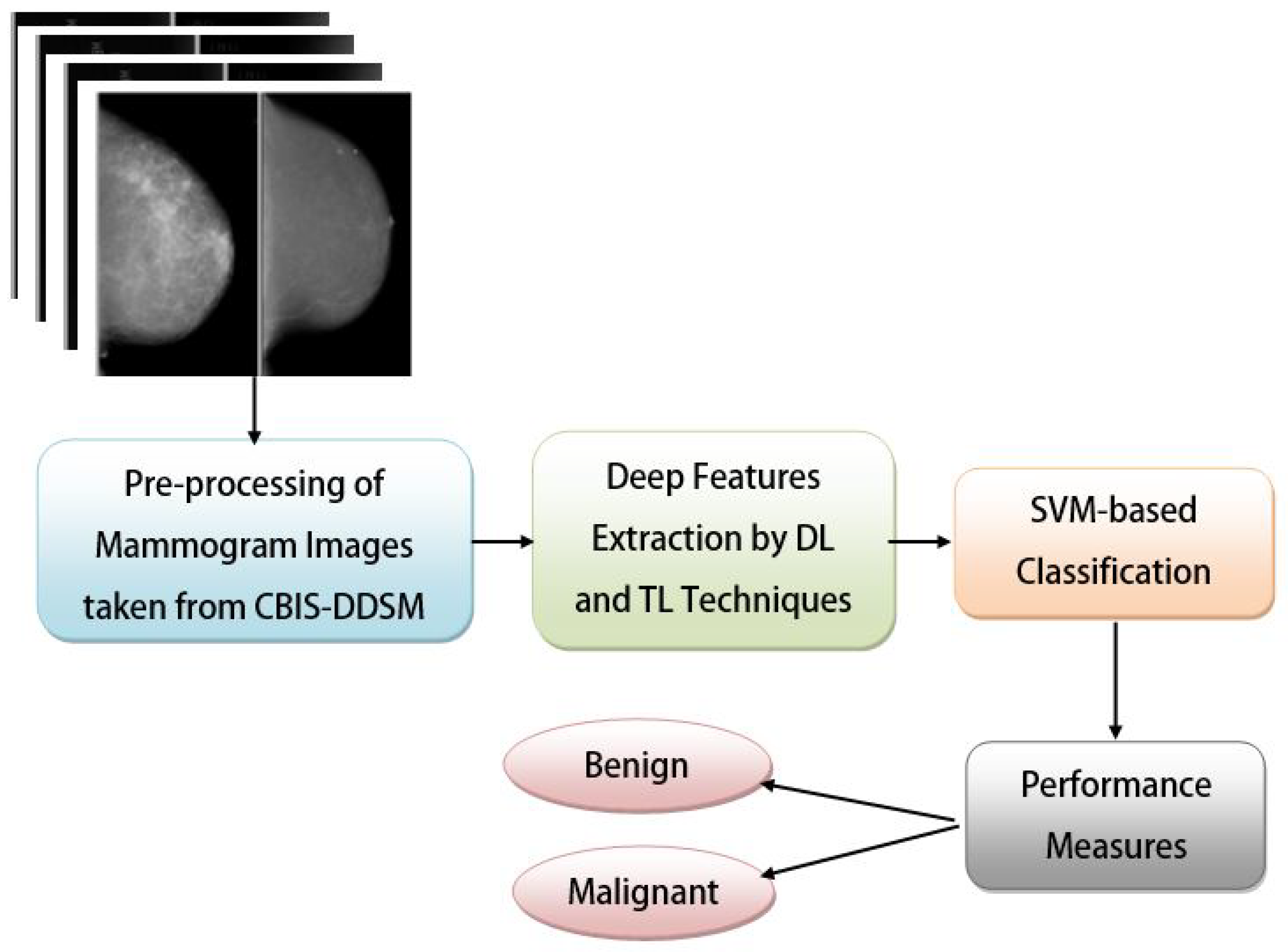

3. Materials and Methods

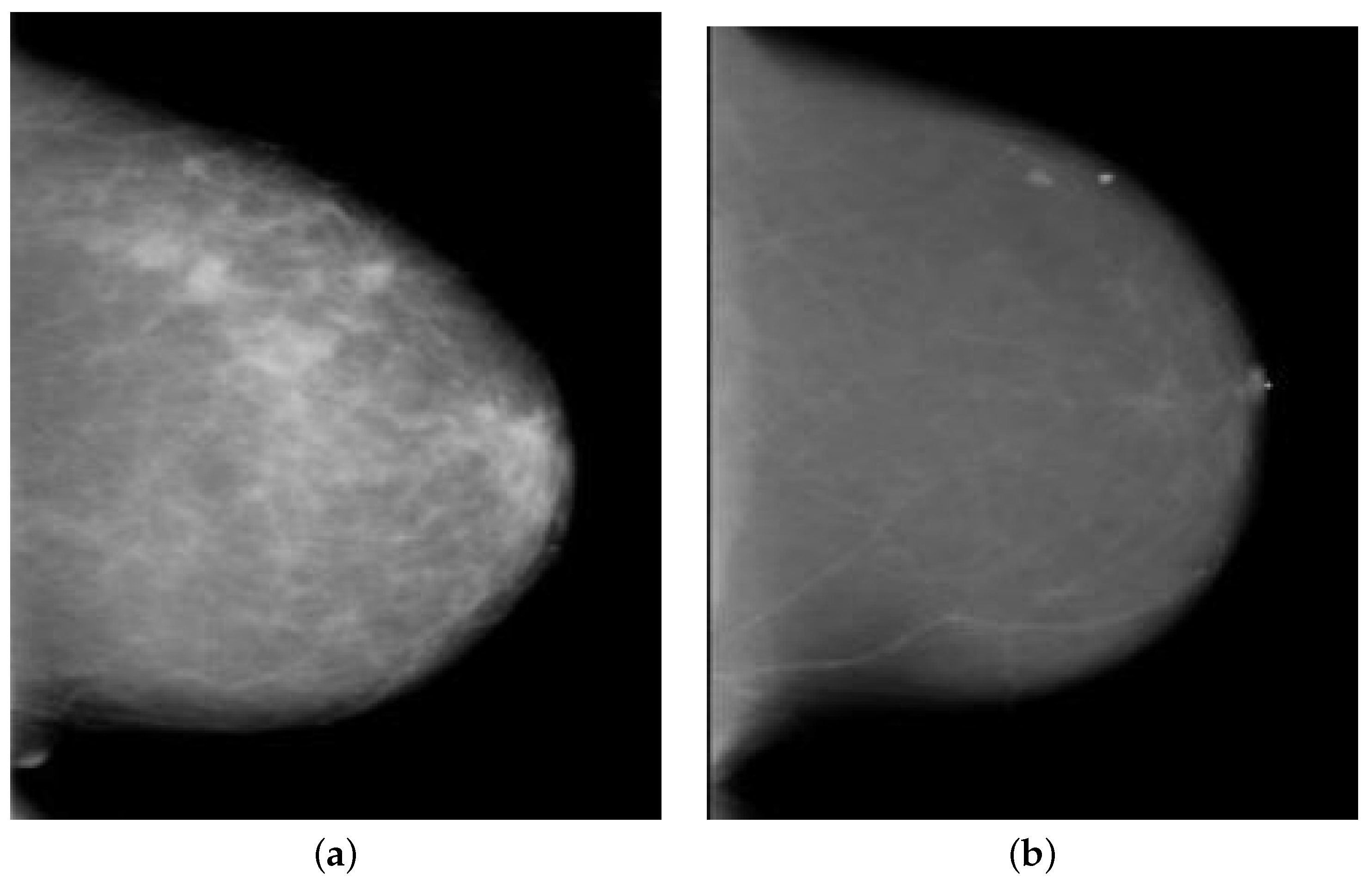

3.1. Dataset Description and Acquisition

3.2. Methodologies

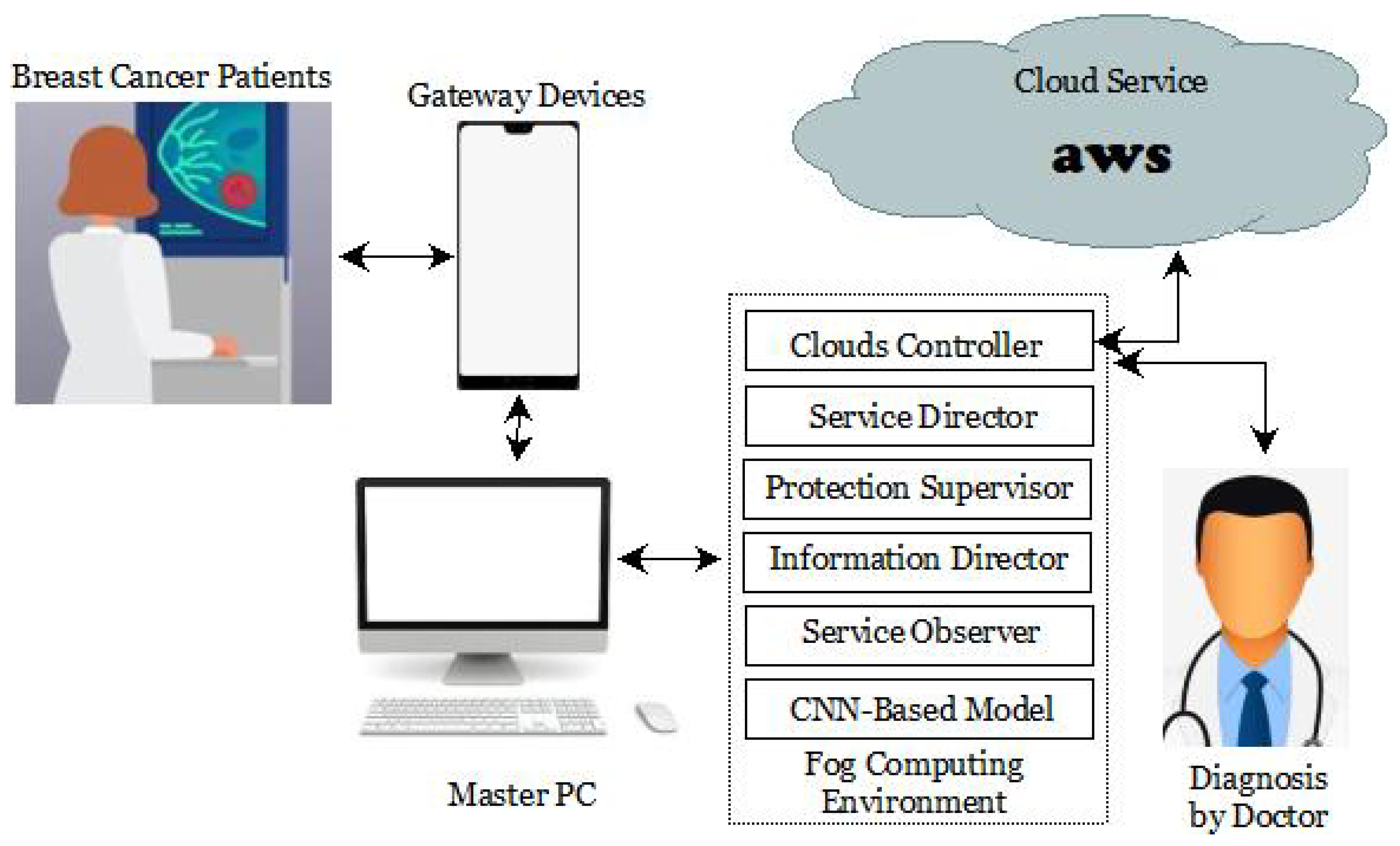

4. Proposed Work

4.1. Components Used

4.2. Framework Design and Implementation

4.3. Working Principle

Algorithm 1 Main Function of the Proposed Work

Require: Ensure: 1: For Active

Algorithm 2 Body of the Procedure Active Nodes

Require: Received via Ensure: Sent to

5. Simulation and Results

6. Conclusions and Future Scope

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

1. Palazzi, C.E.; Ferretti, S.; Cacciaguerra, S.; Roccetti, M. On maintaining interactivity in event delivery synchronization for mirrored game architectures. In Proceedings of the IEEE Global Telecommunications Conference Workshops, GlobeCom Workshops, Dallas, TX, USA, 29 November–3 December 2004; pp. 157–165.

2. Hanna, K.; Krzoska, E.; Shaaban, A.M.; Muirhead, D.; Abu-Eid, R.; Speirs, V. Raman spectroscopy: Current applications in breast cancer diagnosis, challenges and future prospects. Br. J. Cancer 2022, 126, 1125–1139.

3. Pati, A.; Parhi, M.; Pattanayak, B.K. IABCP: An Integrated Approach for Breast Cancer Prediction. In Proceedings of the 2022 2nd Odisha International Conference on Electrical Power Engineering, Communication and Computing Technology (ODICON), Bhubaneswar, India, 11–12 November 2022; pp. 1–5.

4. Narod, S.A.; Iqbal, J.; Miller, A.B. Why have breast cancer mortality rates declined? J. Cancer Policy 2015, 5, 8–17.

5. Heywang-Köbrunner, S.H.; Hacker, A.; Sedlacek, S. Advantages and disadvantages of mammography screening. Breast Care 2011, 6, 199–207.

6. Pati, A.; Parhi, M.; Pattanayak, B.K.; Sahu, B.; Khasim, S. CanDiag: Fog Empowered Transfer Deep Learning Based Approach for Cancer Diagnosis. Designs 2023, 7, 57.

7. Lakhan, A.; Mohammed, M.A.; Kozlov, S.; Rodrigues, J.J. Mobile-fog-cloud assisted deep reinforcement learning and blockchain-enable IoMT system for healthcare workflows. In Transactions on Emerging Telecommunications Technologies; Wiley: Hoboken, NJ, USA, 2021; p. e4363.

8. Pati, A.; Parhi, M.; Pattanayak, B.K. HeartFog: Fog Computing Enabled Ensemble Deep Learning Framework for Automatic Heart Disease Diagnosis. In Intelligent and Cloud Computing; Springer: Berlin/Heidelberg, Germany, 2022; pp. 39–53.

9. Mutlag, A.A.; Abd Ghani, M.K.; Mohammed, M.A.; Lakhan, A.; Mohd, O.; Abdulkareem, K.H.; Garcia-Zapirain, B. Multi-Agent Systems in Fog–Cloud Computing for Critical Healthcare Task Management Model (CHTM) Used for ECG Monitoring. Sensors 2021, 21, 6923.

10. Pati, A.; Parhi, M.; Pattanayak, B.K.; Singh, D.; Samanta, D.; Banerjee, A.; Biring, S.; Dalapati, G.K. Diagnose Diabetic Mellitus Illness Based on IoT Smart Architecture. Wirel. Commun. Mob. Comput. 2022, 2022, 7268571.

11. Shukla, S.; Thakur, S.; Hussain, S.; Breslin, J.G.; Jameel, S.M. Identification and authentication in healthcare internet-of-things using integrated fog computing based blockchain model. Internet Things 2021, 15, 100422.

12. Parhi, M.; Roul, A.; Ghosh, B.; Pati, A. IOATS: An Intelligent Online Attendance Tracking System based on Facial Recognition and Edge Computing. Int. J. Intell. Syst. Appl. Eng. 2022, 10, 252–259.

13. Pati, A.; Parhi, M.; Alnabhan, M.; Pattanayak, B.K.; Habboush, A.K.; Al Nawayseh, M.K. An IoT-Fog-Cloud Integrated Framework for Real-Time Remote Cardiovascular Disease Diagnosis. Informatics 2023, 10, 21.

14. McKinney, S.M.; Sieniek, M.; Godbole, V.; Godwin, J.; Antropova, N.; Ashrafian, H.; Back, T.; Chesus, M.; Corrado, G.S.; Darzi, A.; et al. International evaluation of an AI system for breast cancer screening. Nature 2020, 577, 89–94.

15. Alahe, M.A.; Maniruzzaman, M. Detection and Diagnosis of Breast Cancer Using Deep Learning. In Proceedings of the 2021 IEEE Region 10 Symposium (TENSYMP), Grand Hyatt Jeju, Republic of Korea, 23–25 August 2021; pp. 1–7.

16. Xu, J.; Liu, H.; Shao, W.; Deng, K. Quantitative 3-D shape features based tumor identification in the fog computing architecture. J. Ambient. Intell. Humaniz. Comput. 2019, 10, 2987–2997.

17. Zhu, G.; Fu, J.; Dong, J. Low Dose Mammography via Deep Learning. J. Phys. Conf. Ser. 2020, 1626, 012110.

18. Chougrad, H.; Zouaki, H.; Alheyane, O. Multi-label transfer learning for the early diagnosis of breast cancer. Neurocomputing 2020, 392, 168–180.

19. Allugunti, V.R. Breast cancer detection based on thermographic images using machine learning and deep learning algorithms. Int. J. Eng. Comput. Sci. 2022, 4, 49–56.

20. Goen, A.; Singhal, A. Classification of Breast Cancer Histopathology Image using Deep Learning Neural Network. Int. J. Eng. Res. Appl. 2021, 11, 59–65.

21. Canatalay, P.J.; Uçan, O.N.; Zontul, M. Diagnosis of breast cancer from X-ray images using deep learning methods. Ponte Int. J. Sci. Res. 2021, 77, 1.

22. Pourasad, Y.; Zarouri, E.; Salemizadeh Parizi, M.; Salih Mohammed, A. Presentation of novel architecture for diagnosis and identifying breast cancer location based on ultrasound images using machine learning. Diagnostics 2021, 11, 1870.

23. Kavitha, T.; Mathai, P.P.; Karthikeyan, C.; Ashok, M.; Kohar, R.; Avanija, J.; Neelakandan, S. Deep learning based capsule neural network model for breast cancer diagnosis using mammogram images. Interdiscip. Sci. Comput. Life Sci. 2022, 14, 113–129.

24. Jabeen, K.; Khan, M.A.; Alhaisoni, M.; Tariq, U.; Zhang, Y.D.; Hamza, A.; Mickus, A.; Damaševičius, R. Breast cancer classification from ultrasound images using probability-based optimal deep learning feature fusion. Sensors 2022, 22, 807.

25. Jasti, V.; Zamani, A.S.; Arumugam, K.; Naved, M.; Pallathadka, H.; Sammy, F.; Raghuvanshi, A.; Kaliyaperumal, K. Computational technique based on machine learning and image processing for medical image analysis of breast cancer diagnosis. Secur. Commun. Netw. 2022, 2022, 1918379.

26. Qi, X.; Yi, F.; Zhang, L.; Chen, Y.; Pi, Y.; Chen, Y.; Guo, J.; Wang, J.; Guo, Q.; Li, J.; et al. Computer-aided diagnosis of breast cancer in ultrasonography images by deep learning. Neurocomputing 2022, 472, 152–165.

27. Ragab, M.; Albukhari, A.; Alyami, J.; Mansour, R.F. Ensemble deep-learning-enabled clinical decision support system for breast cancer diagnosis and classification on ultrasound images. Biology 2022, 11, 439.

28. Clark, K.; Vendt, B.; Smith, K.; Freymann, J.; Kirby, J.; Koppel, P.; Moore, S.; Phillips, S.; Maffitt, D.; Pringle, M.; et al. The Cancer Imaging Archive (TCIA): Maintaining and operating a public information repository. J. Digit. Imaging 2013, 26, 1045–1057.

29. Digital Database for Screening Mammography the Cancer Imaging Archive (TCIA) Public Access. Available online: https://wiki.cancerimagingarchive.net/display/Public/CBIS-DDSM (accessed on 18 August 2021).

30. Guan, S.; Loew, M. Breast cancer detection using synthetic mammograms from generative adversarial networks in convolutional neural networks. J. Med. Imaging 2019, 6, 031411.

31. Roul, A.; Pati, A.; Parhi, M. COVIHunt: An Intelligent CNN-Based COVID-19 Detection Using CXR Imaging. In Electronic Systems and Intelligent Computing; Springer: Berlin/Heidelberg, Germany, 2022; pp. 313–327.

32. Al-Haija, Q.A.; Adebanjo, A. Breast cancer diagnosis in histopathological images using ResNet-50 convolutional neural network. In Proceedings of the 2020 IEEE International IOT, Electronics and Mechatronics Conference (IEMTRONICS), Vancouver, BC, Canada, 9–12 September 2020; pp. 1–7.

33. Al Husaini, M.A.S.; Habaebi, M.H.; Gunawan, T.S.; Islam, M.R.; Elsheikh, E.A.; Suliman, F. Thermal-based early breast cancer detection using inception V3, inception V4 and modified inception MV4. Neural Comput. Appl. 2022, 34, 333–348.

34. Omonigho, E.L.; David, M.; Adejo, A.; Aliyu, S. Breast cancer: Tumor detection in mammogram images using modified alexnet deep convolution neural network. In Proceedings of the 2020 International Conference in Mathematics, Computer Engineering and Computer Science (ICMCECS), Ayobo, Nigeria, 18–21 March 2020; pp. 1–6.

35. Jahangeer, G.S.B.; Rajkumar, T.D. Early detection of breast cancer using hybrid of series network and VGG-16. Multimed. Tools Appl. 2021, 80, 7853–7886.

36. Singh, R.; Ahmed, T.; Kumar, A.; Singh, A.K.; Pandey, A.K.; Singh, S.K. Imbalanced breast cancer classification using transfer learning. IEEE/ACM Trans. Comput. Biol. Bioinform. 2020, 18, 83–93.

37. Rehman, K.U.; Li, J.; Pei, Y.; Yasin, A.; Ali, S.; Mahmood, T. Computer vision-based microcalcification detection in digital mammograms using fully connected depthwise separable convolutional neural network. Sensors 2021, 21, 4854.

38. Qian, S.; Liu, H.; Liu, C.; Wu, S.; San Wong, H. Adaptive activation functions in convolutional neural networks. Neurocomputing 2018, 272, 204–212.

39. Gupta, H.; Vahid Dastjerdi, A.; Ghosh, S.K.; Buyya, R. iFogSim: A toolkit for modeling and simulation of resource management techniques in the Internet of Things, Edge and Fog computing environments. Softw. Pract. Exp. 2017, 47, 1275–1296.

40. Tuli, S.; Mahmud, R.; Tuli, S.; Buyya, R. Fogbus: A blockchain-based lightweight framework for edge and fog computing. J. Syst. Softw. 2019, 154, 22–36.

41. Narula, S.; Jain, A. Cloud computing security: Amazon web service. In Proceedings of the 2015 Fifth International Conference on Advanced Computing & Communication Technologies, Haryana, India, 21–22 February 2015; pp. 501–505.

42. Vecchiola, C.; Chu, X.; Buyya, R. Aneka: A software platform for NET-based cloud computing. High Speed Large Scale Sci. Comput. 2009, 18, 267–295.

43. Pati, A.; Parhi, M.; Pattanayak, B.K. IHDPM: An integrated heart disease prediction model for heart disease prediction. Int. J. Med. Eng. Inform. 2022, 14, 564–577.

44. Pati, A.; Parhi, M.; Pattanayak, B.K. A review on prediction of diabetes using machine learning and data mining classification techniques. Int. J. Biomed. Eng. Technol. 2023, 41, 83–109.

45. Sahu, B.; Panigrahi, A.; Rout, S.K.; Pati, A. Hybrid Multiple Filter Embedded Political Optimizer for Feature Selection. In Proceedings of the 2022 International Conference on Intelligent Controller and Computing for Smart Power (ICICCSP), Hyderabad, India, 21–23 July 2022; pp. 1–6.

46. Liu, H.; Yan, F.; Zhang, S.; Xiao, T.; Song, J. Source-level energy consumption estimation for cloud computing tasks. IEEE Access 2017, 6, 1321–1330.

| Class Labels | Training Samples | Test Samples | Validation Samples | Total Samples | Resolution |
|---|---|---|---|---|---|
| Malignant (Considered Binary Value: 1) | 640 | 91 | 183 | 914 | 320 × 240 |
| Benign (Considered Binary Value: 0) | 609 | 87 | 174 | 870 | 320 × 240 |
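The per-class counts in the table above are consistent with a roughly 70/10/20 train/test/validation partition (for example, 640 + 91 + 183 = 914). The following is a minimal Python sketch of such a split. The ratio is inferred from the counts rather than stated by the authors, and the function names `split_sizes` and `split_indices`, as well as the seeded shuffle, are illustrative assumptions.

```python
import random


def split_sizes(total, train_frac=0.70, test_frac=0.10):
    """Return (train, test, validation) sizes for one class.

    The 70/10/20 fractions are an assumption inferred from the table;
    validation takes whatever remains after train and test are rounded.
    """
    n_train = round(total * train_frac)
    n_test = round(total * test_frac)
    n_val = total - n_train - n_test
    return n_train, n_test, n_val


def split_indices(total, seed=0):
    """Shuffle sample indices 0..total-1 and slice them into three disjoint parts."""
    indices = list(range(total))
    random.Random(seed).shuffle(indices)  # seeded so the split is reproducible
    n_train, n_test, _ = split_sizes(total)
    train = indices[:n_train]
    test = indices[n_train:n_train + n_test]
    val = indices[n_train + n_test:]
    return train, test, val


if __name__ == "__main__":
    print(split_sizes(914))  # malignant class: (640, 91, 183)
    print(split_sizes(870))  # benign class: (609, 87, 174)
```

Splitting each class separately, as sketched here, keeps the malignant/benign proportion the same in all three partitions.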

| TL Approaches | Base Layer | Depth | Optimizer Used | Learning Rate | Epochs | Mini Batch Size | AF at Input Layers | AF at Hidden Layers | AF at Output Layers |
|---|---|---|---|---|---|---|---|---|---|
| ResNet50 | Without FC | 50 | Adam | 0.000001 | 50 | 24 | ReLU | ReLU | Softmax |
| InceptionV3 | Without FC | 48 | Adam | 0.000001 | 50 | 24 | ReLU | ReLU | Softmax |
| AlexNet | Without FC | 8 | Adam | 0.000001 | 50 | 24 | ReLU | ReLU | Softmax |
| VGG16 | Without FC | 16 | Adam | 0.000001 | 50 | 24 | ReLU | ReLU | Sigmoid |
| VGG19 | Without FC | 19 | Adam | 0.000001 | 50 | 24 | ReLU | ReLU | Sigmoid |
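The hyperparameters in the table above can be captured in a small configuration object and turned into a Keras model. What follows is a hedged sketch, not the authors' implementation: the `TLConfig` and `build_model` names, the frozen backbone, the global-average-pooling head, the loss functions, and the `(240, 320, 3)` input shape (reading 320 × 240 as width × height) are all assumptions. AlexNet is not shipped with `tf.keras.applications`, so the sketch raises an error for it rather than guessing an architecture.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TLConfig:
    backbone: str            # name of a tf.keras.applications model
    output_activation: str   # "softmax" or "sigmoid", as listed in the table
    learning_rate: float = 1e-6
    epochs: int = 50
    batch_size: int = 24


# One entry per row of the table; values are copied from it.
CONFIGS = {
    "ResNet50": TLConfig("ResNet50", "softmax"),
    "InceptionV3": TLConfig("InceptionV3", "softmax"),
    "AlexNet": TLConfig("AlexNet", "softmax"),
    "VGG16": TLConfig("VGG16", "sigmoid"),
    "VGG19": TLConfig("VGG19", "sigmoid"),
}


def build_model(cfg, weights="imagenet", input_shape=(240, 320, 3)):
    """Build a binary classifier on a pretrained base without its FC layers."""
    import tensorflow as tf  # imported lazily so the config is usable without TF

    backbone_cls = getattr(tf.keras.applications, cfg.backbone, None)
    if backbone_cls is None:
        raise ValueError(f"{cfg.backbone} is not available in tf.keras.applications")

    # include_top=False corresponds to the "Without FC" column.
    base = backbone_cls(include_top=False, weights=weights, input_shape=input_shape)
    base.trainable = False  # assumption: feature extraction with a frozen base

    if cfg.output_activation == "sigmoid":
        units, loss = 1, "binary_crossentropy"
    else:
        units, loss = 2, "sparse_categorical_crossentropy"

    model = tf.keras.Sequential([
        base,
        tf.keras.layers.GlobalAveragePooling2D(),
        tf.keras.layers.Dense(units, activation=cfg.output_activation),
    ])
    model.compile(
        optimizer=tf.keras.optimizers.Adam(learning_rate=cfg.learning_rate),
        loss=loss,
        metrics=["accuracy"],
    )
    return model
```

A model built this way would be trained with `model.fit(..., epochs=cfg.epochs, batch_size=cfg.batch_size)`. Passing `weights=None` skips the ImageNet download, at the cost of losing the transfer-learning benefit.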

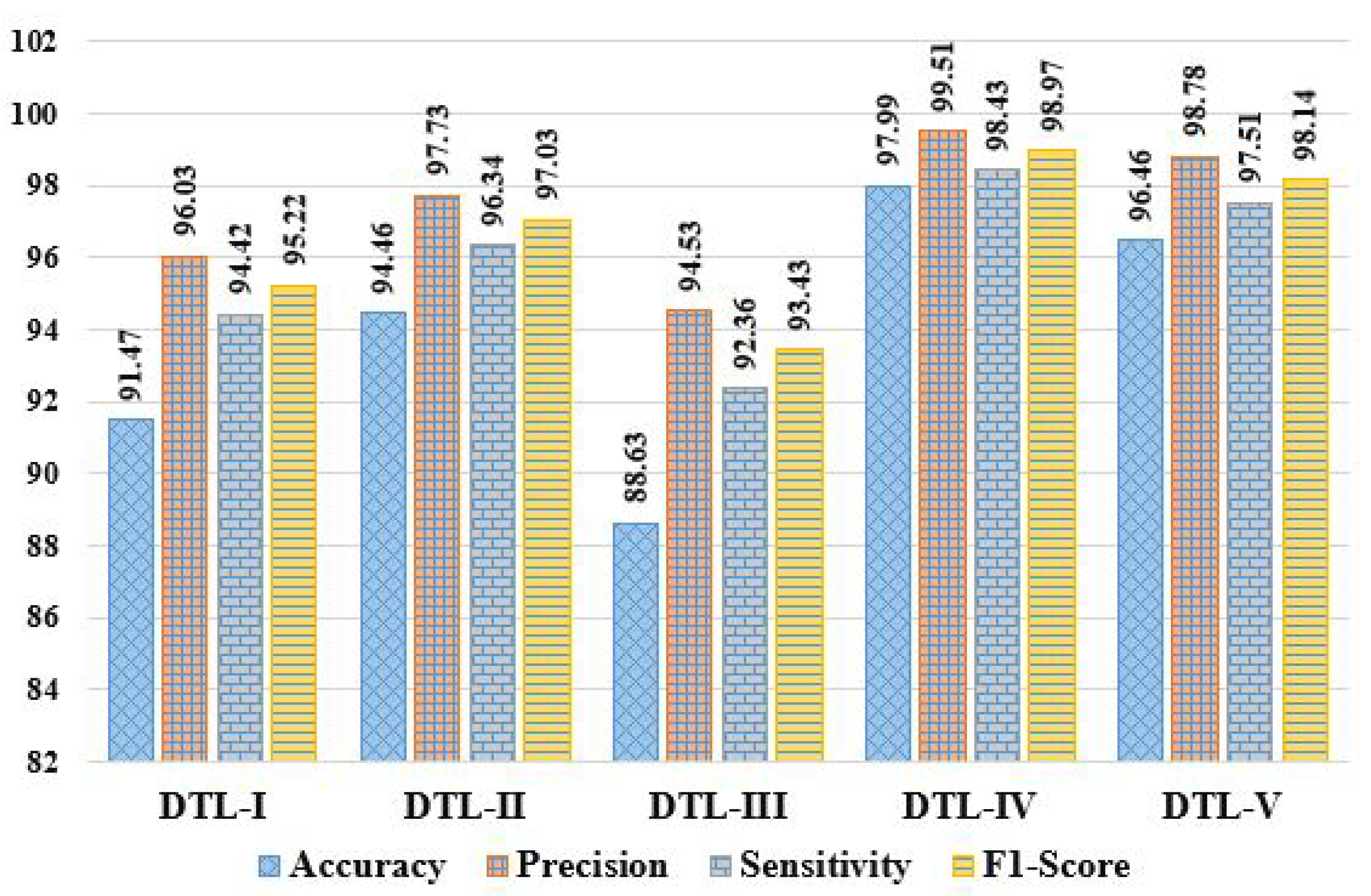

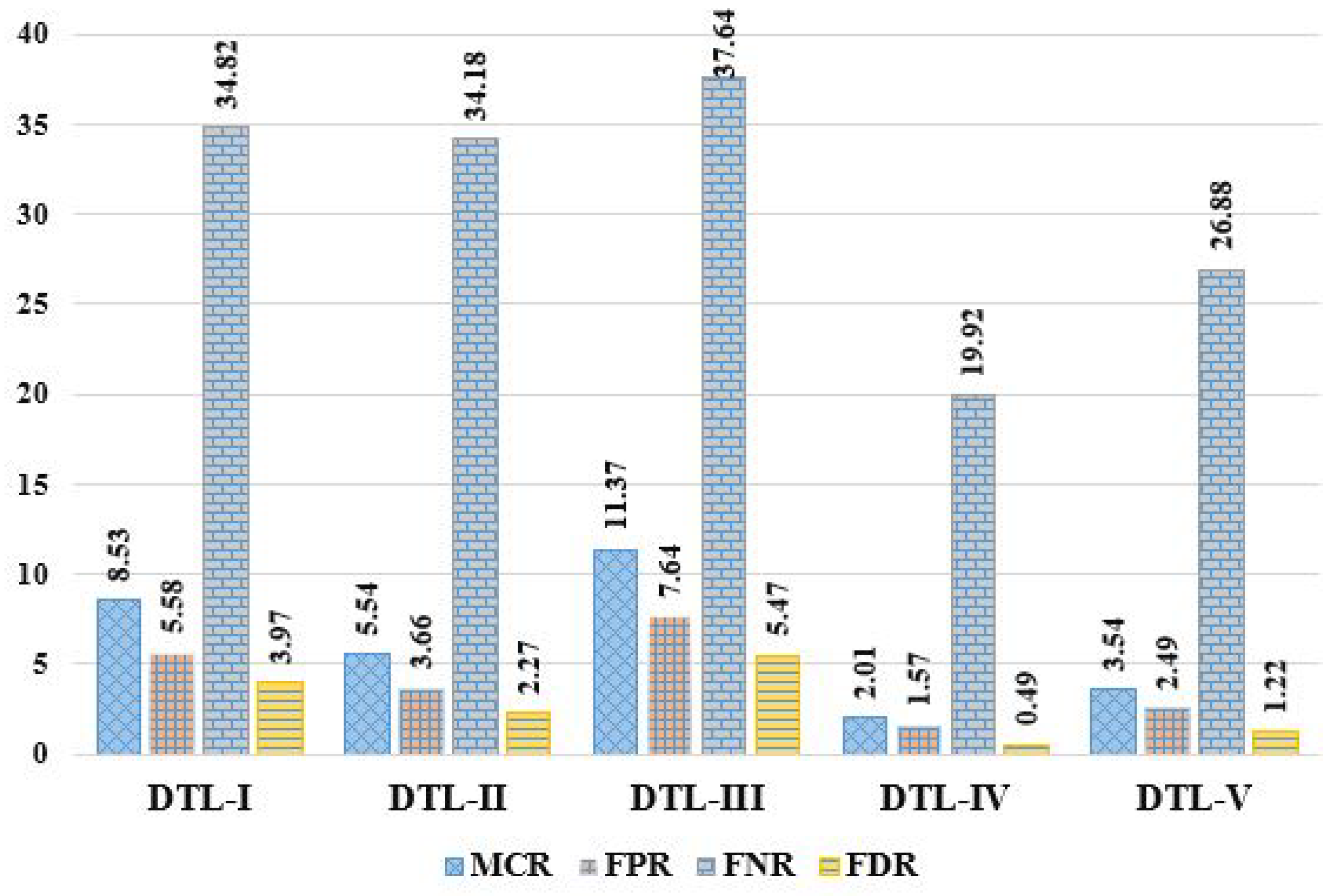

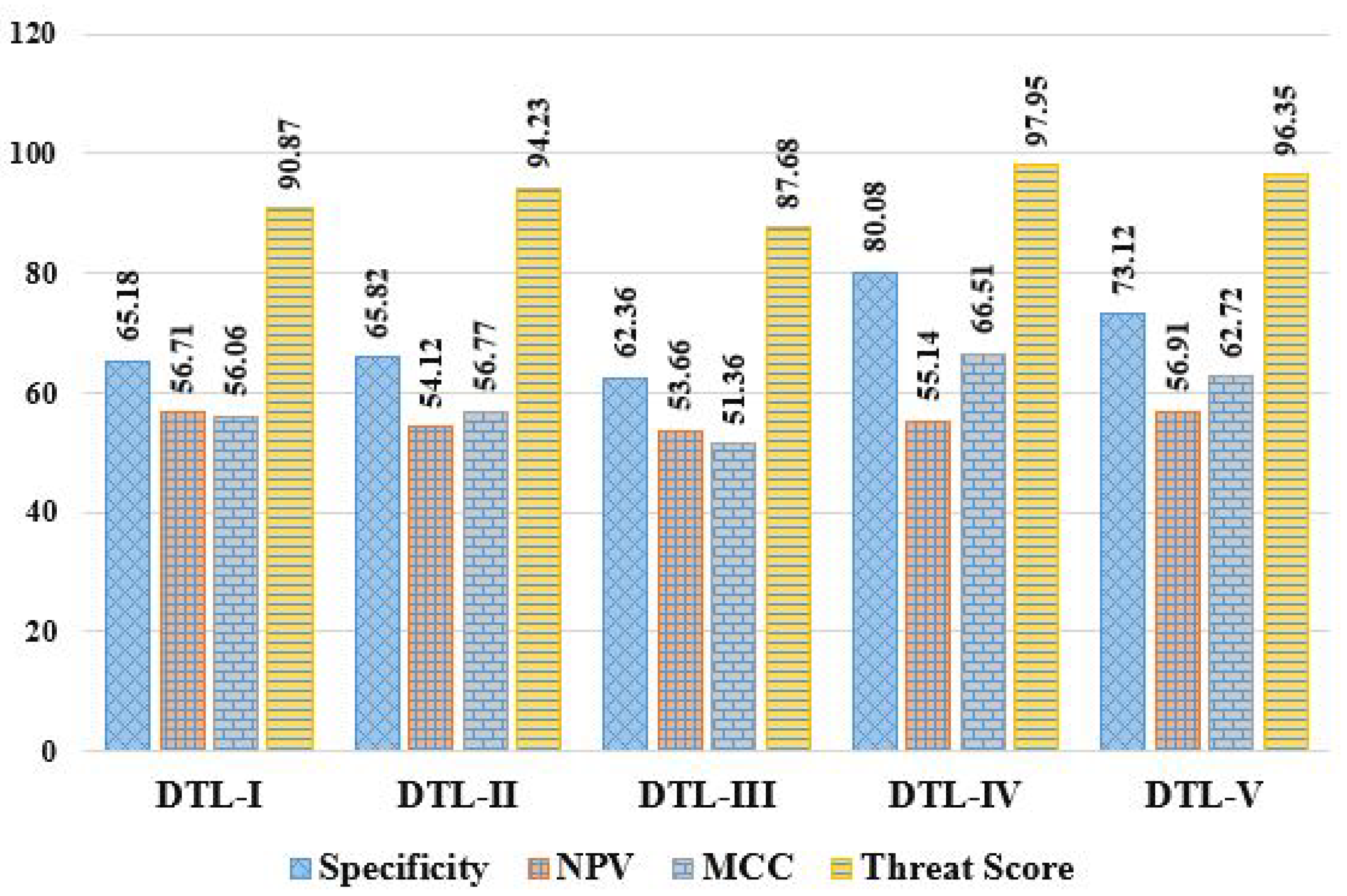

| DTL Methods | Acc (%) | MCR (%) | Pre (%) | Sen (%) | Spc (%) | F1S (%) | FPR (%) | FNR (%) | NPV (%) | FDR (%) | MCC (%) | TSc (%) |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| DTL-I | 91.47 | 8.53 | 96.03 | 94.42 | 65.18 | 95.22 | 5.58 | 34.82 | 56.71 | 3.97 | 56.06 | 90.87 |
| DTL-II | 94.46 | 5.54 | 97.73 | 96.34 | 65.82 | 97.03 | 3.66 | 34.18 | 54.12 | 2.27 | 56.77 | 94.23 |
| DTL-III | 88.63 | 11.37 | 94.53 | 92.36 | 62.36 | 93.43 | 7.64 | 37.64 | 53.66 | 5.47 | 51.35 | 87.68 |
| DTL-IV | 97.99 | 2.01 | 99.51 | 98.43 | 80.08 | 98.97 | 1.57 | 19.92 | 55.14 | 0.49 | 65.51 | 97.95 |
| DTL-V | 96.46 | 3.54 | 98.78 | 97.51 | 73.12 | 98.14 | 2.49 | 26.88 | 56.91 | 1.22 | 62.72 | 96.35 |
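The measures reported above are all functions of a binary confusion matrix. The sketch below shows how they could be computed in Python using the standard textbook definitions, with malignant (label 1) taken as the positive class. The function name `binary_metrics` is illustrative, the reading of TSc as the threat score TP / (TP + FN + FP) is an assumption, and these definitions may differ from the ones the authors used for individual columns.

```python
import math


def binary_metrics(tp, tn, fp, fn):
    """Return the twelve measures in percent, rounded to two decimals.

    Assumes every denominator is non-zero, i.e. both classes occur in the
    ground truth and in the predictions.
    """
    total = tp + tn + fp + fn
    acc = (tp + tn) / total
    pre = tp / (tp + fp)   # precision
    sen = tp / (tp + fn)   # sensitivity (recall)
    spc = tn / (tn + fp)   # specificity
    mcc_den = math.sqrt((tp + fp) * (tp + fn) * (tn + fp) * (tn + fn))
    measures = {
        "Acc": acc,
        "MCR": 1 - acc,                        # misclassification rate
        "Pre": pre,
        "Sen": sen,
        "Spc": spc,
        "F1S": 2 * pre * sen / (pre + sen),
        "FPR": fp / (fp + tn),                 # equals 1 - Spc
        "FNR": fn / (fn + tp),                 # equals 1 - Sen
        "NPV": tn / (tn + fn),
        "FDR": fp / (fp + tp),                 # equals 1 - Pre
        "MCC": (tp * tn - fp * fn) / mcc_den,
        "TSc": tp / (tp + fn + fp),            # threat score (assumed meaning)
    }
    return {name: round(100 * value, 2) for name, value in measures.items()}


if __name__ == "__main__":
    # Hypothetical counts, not taken from the paper.
    for name, value in binary_metrics(tp=90, tn=80, fp=20, fn=10).items():
        print(f"{name}: {value}")
```

Because accuracy and MCR are complementary, any row of the table can be sanity-checked by confirming that the two values sum to 100.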

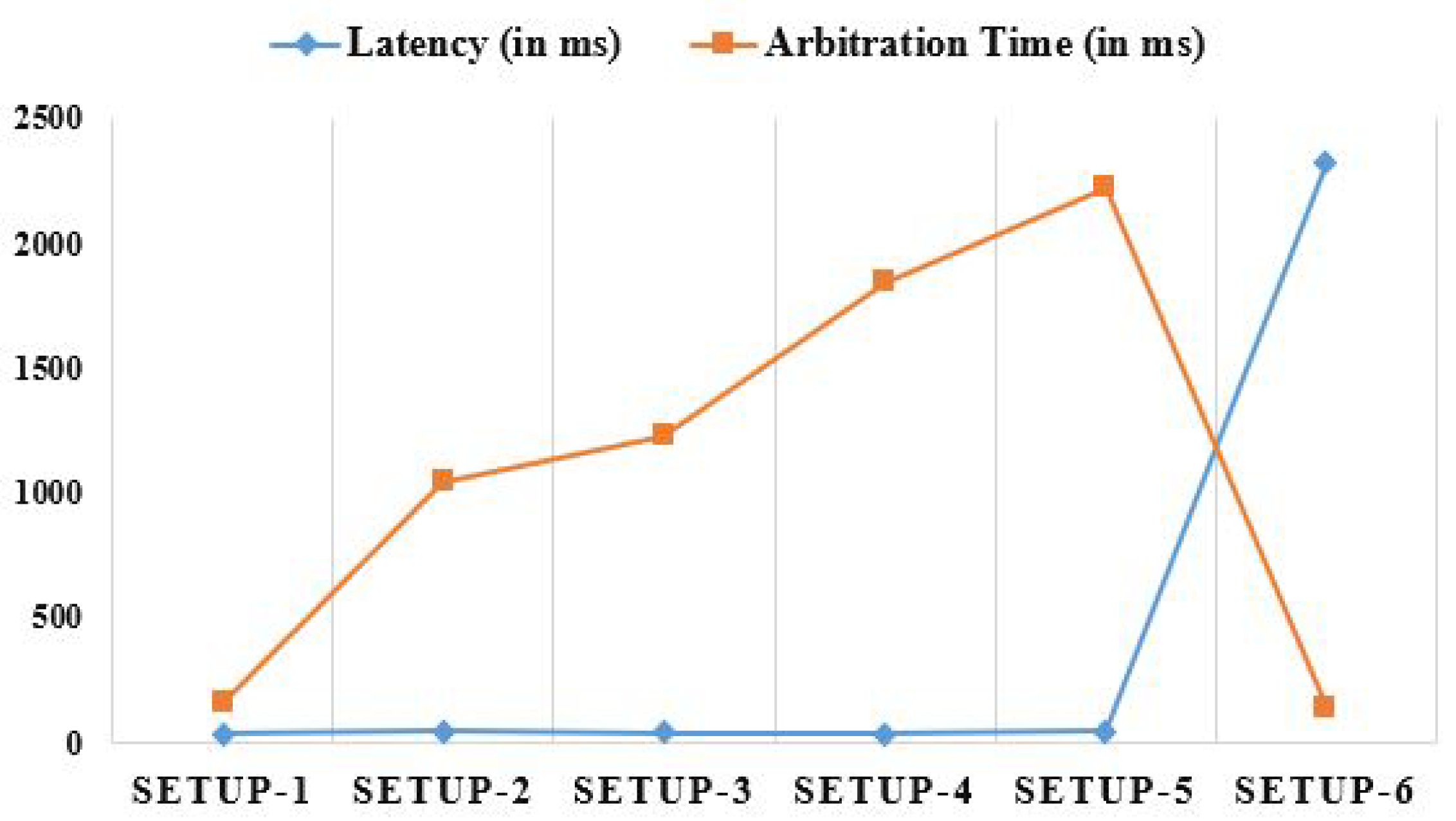

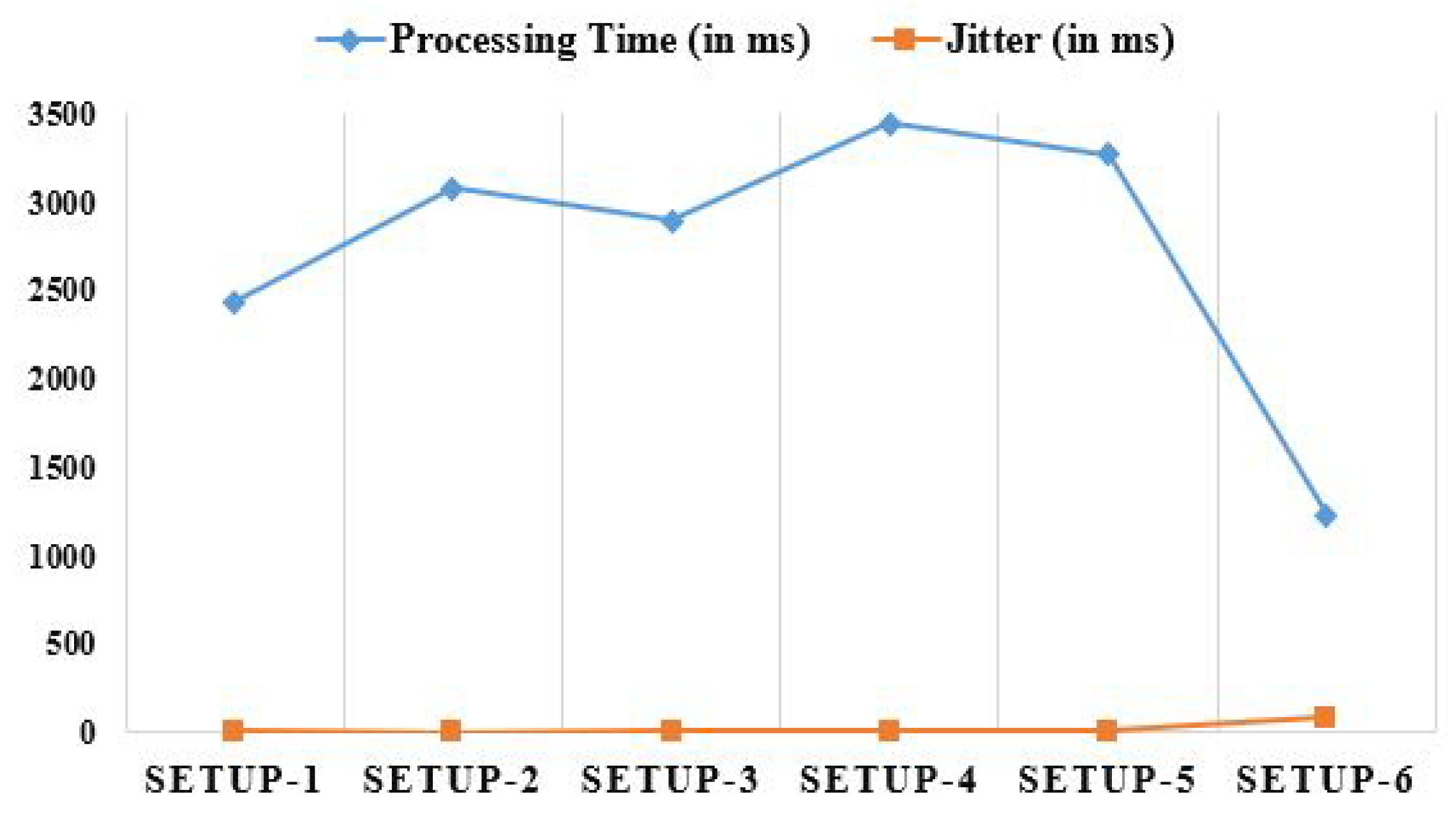

| Configurations | Latency (ms) | Arbitration Time (ms) | Processing Time (ms) | Jitter (ms) | Network Utilization (s) | Energy Consumption (W) |
|---|---|---|---|---|---|---|
| SETUP-1 | 31.7 | 156.7 | 2435.2 | 6.25 | 9.3 | 3.49 |
| SETUP-2 | 42.4 | 1046.5 | 3082.4 | 3.75 | 12.1 | 4.28 |
| SETUP-3 | 36.5 | 1228.7 | 2897.5 | 4.50 | 14.8 | 5.26 |
| SETUP-4 | 34.8 | 1847.5 | 3443.4 | 5.75 | 17.4 | 6.11 |
| SETUP-5 | 41.3 | 2223.4 | 3273.6 | 8.50 | 18.7 | 6.83 |
| SETUP-6 | 2318.9 | 142.3 | 1228.6 | 82.25 | 22.7 | 22.23 |

| Work | Methodologies | Dataset Used | Acc (%) | Pre (%) | Sen (%) | Spc (%) | F1S (%) |
|---|---|---|---|---|---|---|---|
| [15] | CNN | Breast Histopathology Images (BHIs) Dataset | 88.46 | 85.46 | 95.17 | 82.64 | 79.77 |
| [16] | Fuzzy c-means clustering algorithm | Medical CT scans | 94.6 | - | - | - | - |
| [17] | CNN | Dataset from TCIA | - | - | - | - | - |
| [18] | CNN | Dataset from TCIA | - | - | - | - | 94.2 |
| [19] | CNN, SM, and RF | Dataset from Kaggle | 99.67 | - | - | - | - |
| [20] | CNN and Deep CNN | Dataset from Kaggle | 84 | 74 | 71 | - | 70 |
| [21] | CNN and TL | Dataset from TCIA | 97.0 | 96.0 | 89.0 | 94.0 | 98.0 |
| [22] | CNN, SVM, DT, and NB | Dataset from Kaggle | 98.0 | - | 88.5 | - | - |
| [23] | OMLTS-DLCN | Mini-MIAS and DDSM dataset | 98.50 | - | 98.46 | 99.08 | 98.91 |
| [24] | CNN and TL | Breast Ultrasound Images (BUSIs) Dataset | 99.10 | 99.10 | 99.06 | - | 99.08 |
| [25] | SVM, KNN, RF, NB, and AlexNet | MIAS dataset | 97.5 | 94.5 | 94.5 | 96.5 | 94.5 |
| [26] | DCNN | Images from WCH, CMGH, and PHDY Hospitals | 87.0 | - | 86.0 | 88.0 | - |
| [27] | VGG-16, VGG-19, and SqueezeNet | Benchmark Breast Ultrasound Dataset | 97.09 | 87.90 | 84.95 | 90.20 | - |
| Proposed Work | CNN, VGG16, VGG19, ResNet50, AlexNet, and InceptionV3 | DDSM from TCIA | 97.99 | 99.51 | 98.43 | 80.08 | 98.97 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Pati, A.; Parhi, M.; Pattanayak, B.K.; Singh, D.; Singh, V.; Kadry, S.; Nam, Y.; Kang, B.-G. Breast Cancer Diagnosis Based on IoT and Deep Transfer Learning Enabled by Fog Computing. Diagnostics 2023, 13, 2191. https://doi.org/10.3390/diagnostics13132191
