Automatic Acne Object Detection and Acne Severity Grading Using Smartphone Images and Artificial Intelligence

Abstract

1. Introduction

2. Materials and Methods

2.1. Dataset

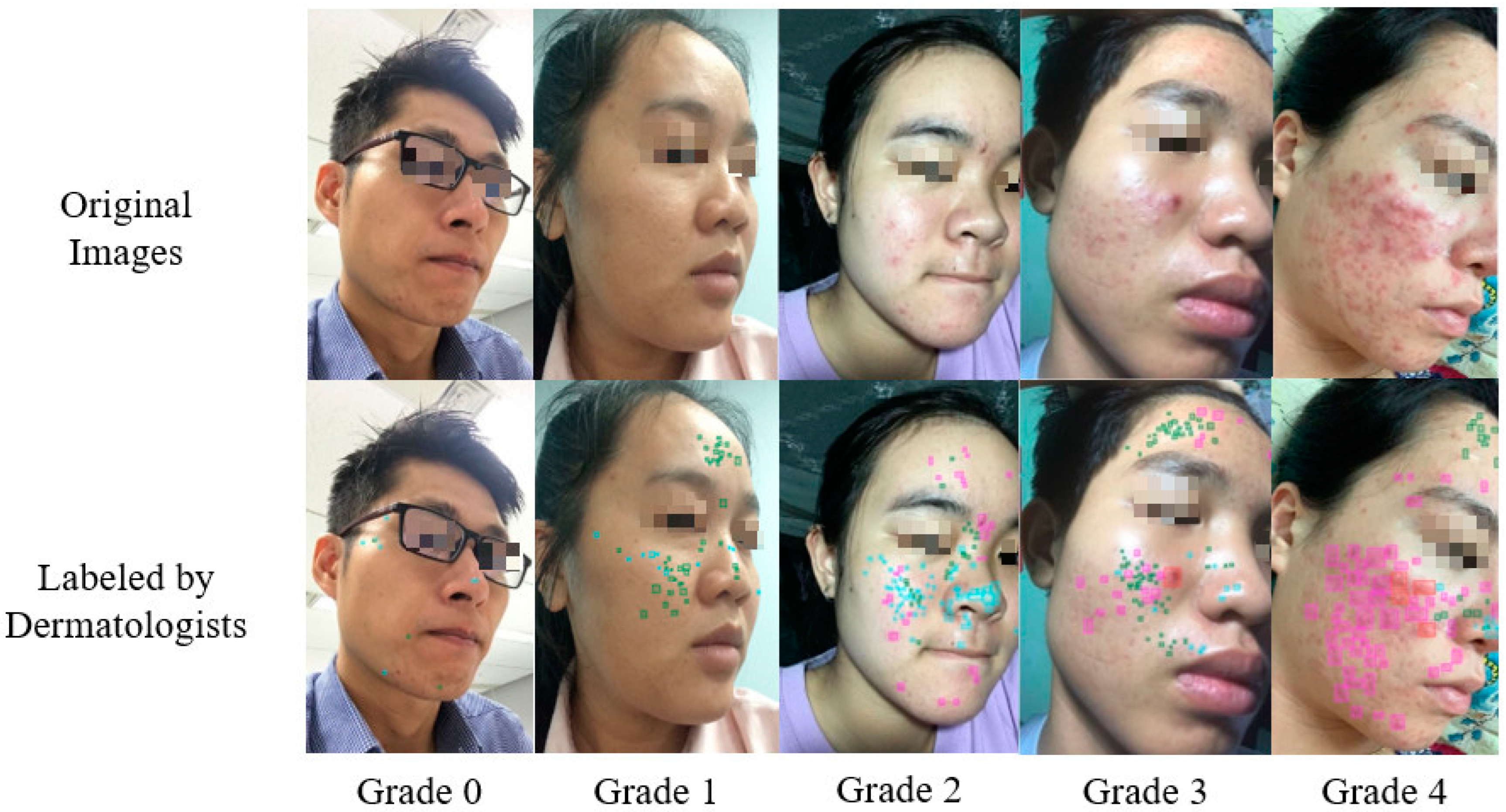

2.1.1. Data Collection and Labeling

2.1.2. Data Statistics

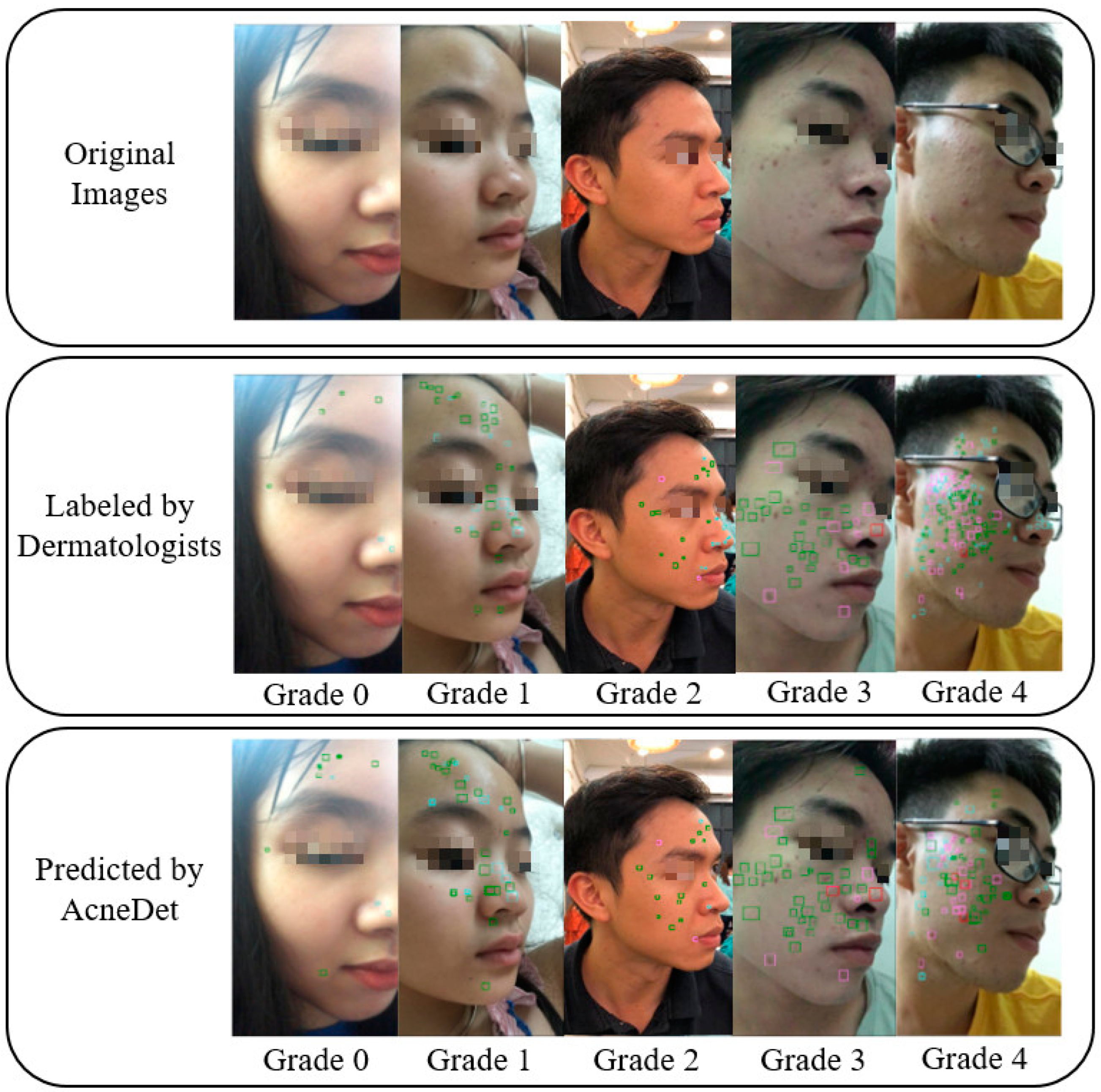

2.2. IGA Scale

2.3. Methods

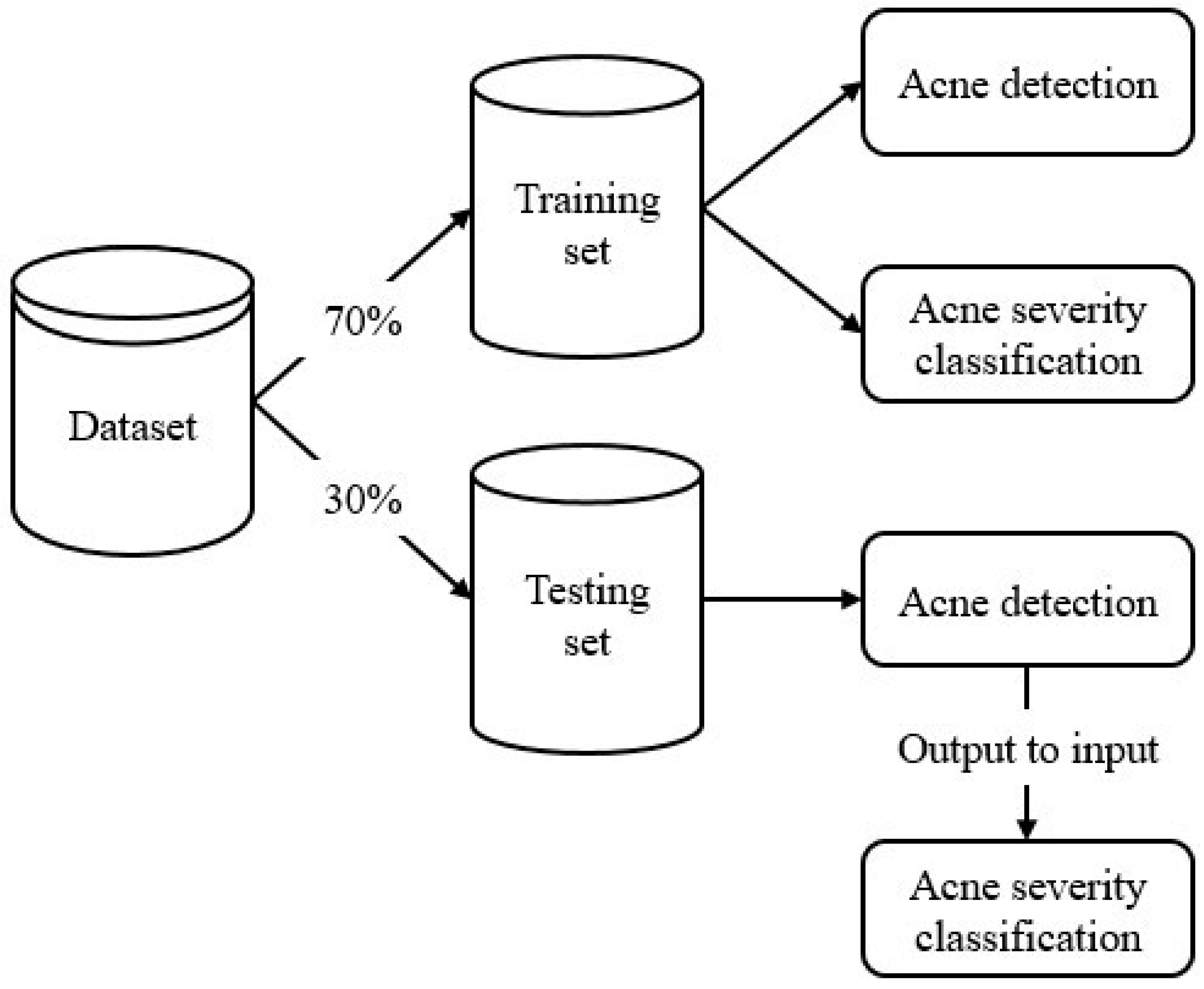

2.3.1. Overall Model Architecture

- Acne object detection model: a Faster R-CNN [20] detector that determines the location and type of each acne lesion.

- Acne severity grading model: a LightGBM [21] classifier that grades the overall acne severity of the input image on the IGA scale.

- Acne object detection model

- Acne severity grading model
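The two models form a pipeline: the detector's boxes must be summarised into a fixed-length input before an image-level grader can use them. The sketch below shows one plausible hand-off. It assumes the grader consumes per-type lesion counts, but the exact feature set is not given in this section. The `Detection` record, the label strings and the 0.5 confidence threshold are hypothetical. The resulting vectors could then be passed to a gradient-boosting classifier such as `lightgbm.LGBMClassifier`.

```python
from collections import Counter
from dataclasses import dataclass
from typing import List, Sequence, Tuple

# The four lesion types annotated in the dataset (hypothetical label strings).
ACNE_CLASSES = (
    "blackheads_whiteheads",
    "acne_scars",
    "papules_pustules",
    "nodules_cysts",
)


@dataclass
class Detection:
    """A single box from the detection stage (hypothetical structure)."""
    label: str
    score: float
    box: Tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max)


def lesion_count_features(
    detections: Sequence[Detection], score_threshold: float = 0.5
) -> List[int]:
    """Convert detector output into a fixed-length vector for the grader.

    Returns one count per lesion type, in ACNE_CLASSES order, followed by
    the total. The 0.5 score threshold is an illustrative assumption.
    """
    counts = Counter(
        d.label
        for d in detections
        if d.score >= score_threshold and d.label in ACNE_CLASSES
    )
    per_class = [counts[name] for name in ACNE_CLASSES]  # missing keys count as 0
    return per_class + [sum(per_class)]


if __name__ == "__main__":
    dets = [
        Detection("papules_pustules", 0.91, (120, 80, 140, 100)),
        Detection("blackheads_whiteheads", 0.72, (60, 200, 70, 210)),
        Detection("acne_scars", 0.31, (300, 150, 320, 170)),  # below threshold
    ]
    print(lesion_count_features(dets))  # [1, 0, 1, 0, 2]
```

Low-confidence boxes are dropped before counting, so the threshold directly trades missed lesions against false alarms in the grader's input.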

2.3.2. Training Model

- Evaluation metrics
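Detection quality in Section 3.1 is reported as per-class average precision (AP). The paper's exact matching protocol, including the IoU threshold and interpolation scheme, is not stated in this section. The sketch below therefore assumes a single IoU threshold of 0.5, greedy score-ordered matching against not-yet-matched ground-truth boxes, and all-point interpolation in the style of PASCAL VOC. It is an illustration of the metric, not the authors' evaluation code.

```python
from typing import List, Sequence, Tuple

Box = Tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max)


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    ix = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    iy = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = ix * iy
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def average_precision(
    detections: Sequence[Tuple[float, Box]],
    ground_truth: Sequence[Box],
    iou_threshold: float = 0.5,
) -> float:
    """AP for one lesion class (all-point interpolation).

    detections: (score, box) pairs predicted for this class.
    A detection is a true positive if it overlaps a not-yet-matched
    ground-truth box with IoU >= iou_threshold.
    """
    if not ground_truth:
        return 0.0
    matched = [False] * len(ground_truth)
    tp_flags: List[bool] = []
    for _score, box in sorted(detections, key=lambda d: d[0], reverse=True):
        best_iou, best_j = 0.0, -1
        for j, gt in enumerate(ground_truth):
            overlap = iou(box, gt)
            if not matched[j] and overlap > best_iou:
                best_iou, best_j = overlap, j
        if best_j >= 0 and best_iou >= iou_threshold:
            matched[best_j] = True
            tp_flags.append(True)
        else:
            tp_flags.append(False)

    # Precision and recall after each ranked detection.
    precisions, recalls = [], []
    tp = 0
    for k, is_tp in enumerate(tp_flags, start=1):
        tp += is_tp
        precisions.append(tp / k)
        recalls.append(tp / len(ground_truth))

    # Make precision non-increasing, then sum precision * recall increment.
    for i in range(len(precisions) - 2, -1, -1):
        precisions[i] = max(precisions[i], precisions[i + 1])
    ap, prev_recall = 0.0, 0.0
    for p, r in zip(precisions, recalls):
        ap += p * (r - prev_recall)
        prev_recall = r
    return ap
```

For example, with two ground-truth boxes and three ranked detections scored TP, FP, TP, the interpolated precision is 1.0 up to recall 0.5 and 2/3 up to recall 1.0, giving AP = 0.5 + 0.5 × 2/3 ≈ 0.83.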

3. Results

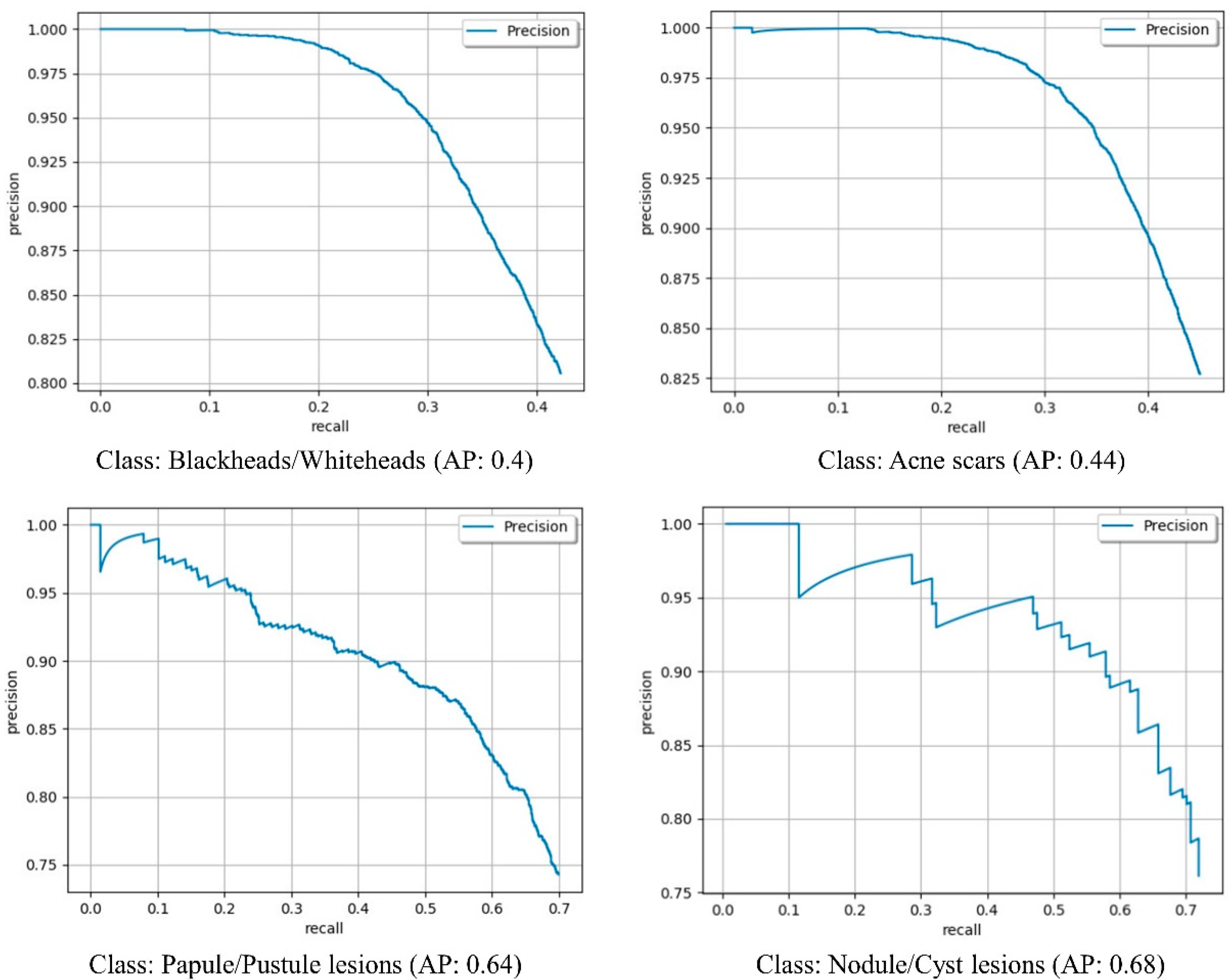

3.1. Acne Object Detection

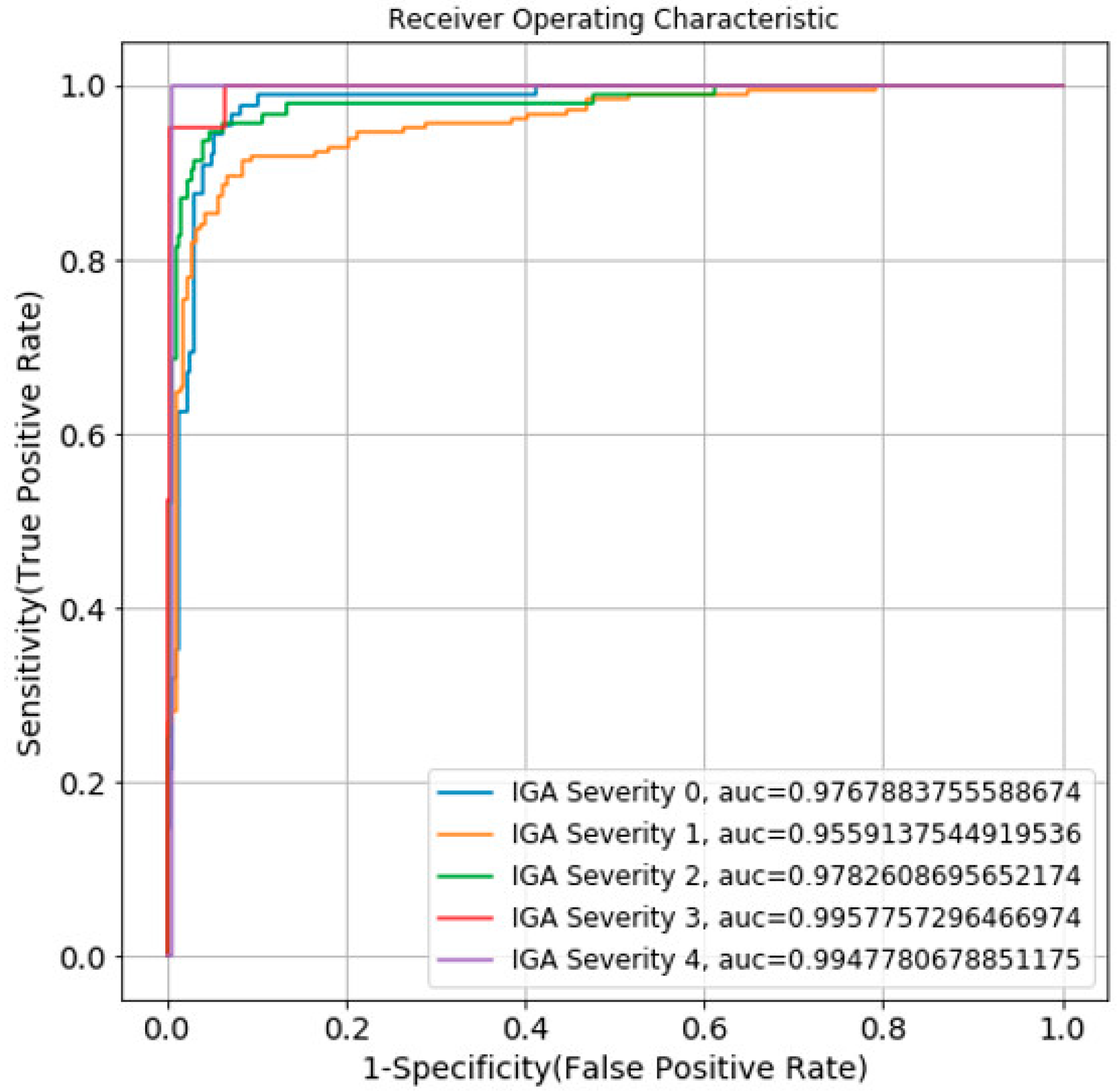

3.2. Acne Severity Grading

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Bernardis, E.; Shou, H.; Barbieri, J.S.; McMahon, P.J.; Perman, M.J.; Rola, L.A.; Streicher, J.L.; Treat, J.R.; Castelo-Soccio, L.; Yan, A.C. Development and Initial Validation of a Multidimensional Acne Global Grading System Integrating Primary Lesions and Secondary Changes. JAMA Dermatol. 2020, 156, 296–302.

2. Vos, T.; Flaxman, A.D.; Naghavi, M.; Lozano, R.; Michaud, C.; Ezzati, M.; Shibuya, K.; Salomon, J.A.; Abdalla, S.; Aboyans, V.; et al. Years lived with disability (YLDs) for 1160 sequelae of 289 diseases and injuries 1990–2010: A systematic analysis for the Global Burden of Disease Study 2010. Lancet 2012, 380, 2163–2196.

3. Malgina, E.; Kurochkina, M.-A. Development of the Mobile Application for Assessing Facial Acne Severity from Photos. In Proceedings of the IEEE Conference of Russian Young Researchers in Electrical and Electronic Engineering (ElConRus), St. Petersburg, Russia, 26–29 January 2021; pp. 1790–1793.

4. Zaenglein, A.L.; Pathy, A.L.; Schlosser, B.J.; Alikhan, A.; Baldwin, H.E.; Berson, D.S.; Bowe, W.P.; Graber, E.M.; Harper, J.C.; Kang, S.; et al. Guidelines of care for the management of acne vulgaris. J. Am. Acad. Dermatol. 2016, 74, 945–973.

5. Zhang, T.B.; Bai, Y.P. Progress in the treatment of acne vulgaris. Chin. J. Derm. Integr. Tradit. West. Med. 2019, 18, 180–182.

6. Sutaria, A.H.; Masood, S.; Schlessinger, J. Acne Vulgaris. [Updated 2020 Aug 8]. In StatPearls [Internet]; StatPearls Publishing: Treasure Island, FL, USA, January 2021. Available online: https://www.ncbi.nlm.nih.gov/books/NBK459173/ (accessed on 28 June 2022).

7. Bhate, K.; Williams, H.C. Epidemiology of acne vulgaris. Br. J. Dermatol. 2013, 168, 474–485.

8. Tassavor, M.; Payette, M.J. Estimated cost efficacy of U.S. Food and Drug Administration-approved treatments for acne. Dermatol. Ther. 2019, 32, e12765.

9. Shen, X.; Zhang, J.; Yan, C.; Zhou, H. An Automatic Diagnosis Method of Facial Acne Vulgaris Based on Convolutional Neural Network. Sci. Rep. 2018, 8, 5839.

10. Rashataprucksa, K.; Chuangchaichatchavarn, C.; Triukose, S.; Nitinawarat, S.; Pongprutthipan, M.; Piromsopa, K. Acne Detection with Deep Neural Networks. In Proceedings of the 2020 2nd International Conference on Image Processing and Machine Vision (IPMV 2020), Bangkok, Thailand, 5–7 August 2020; Association for Computing Machinery: New York, NY, USA, 2020; pp. 53–56.

11. Gu, T. Newzoo’s Global Mobile Market Report: Insights into the World’s 3.2 Billion Smartphone Users, the Devices They Use & the Mobile Games They Play. 2019. Available online: https://newzoo.com/insights/articles/newzoos-global-mobile-market-report-insights-into-the-worlds-3-2-billion-smartphone-users-the-devices-they-use-the-mobile-games-they-play/ (accessed on 6 June 2021).

12. Gordon, W.J.; Landman, A.; Zhang, H.; Bates, D.W. Beyond validation: Getting health apps into clinical practice. NPJ Digit. Med. 2020, 3, 14.

13. Alamdari, N.; Tavakolian, K.; Alhashim, M.; Fazel-Rezai, R. Detection and classification of acne lesions in acne patients: A mobile application. In Proceedings of the 2016 IEEE International Conference on Electro Information Technology (EIT), Grand Forks, ND, USA, 19–21 May 2016; pp. 739–743.

14. Maroni, G.; Ermidoro, M.; Previdi, F.; Bigini, G. Automated detection, extraction and counting of acne lesions for automatic evaluation and tracking of acne severity. In Proceedings of the 2017 IEEE Symposium Series on Computational Intelligence (SSCI), Honolulu, HI, USA, 27 November–1 December 2017; pp. 1–6.

15. Chantharaphaichit, T.; Uyyanonvara, B.; Sinthanayothin, C.; Nishihara, A. Automatic acne detection with featured Bayesian classifier for medical treatment. In Proceedings of the 3rd International Conference on Robotics Informatics and Intelligence Control Technology (RIIT2015), Bangkok, Thailand, 30 April 2015; pp. 10–16.

16. Junayed, M.S.; Jeny, A.A.; Atik, S.T.; Neehal, N.; Karim, A.; Azam, S.; Shanmugam, B. AcneNet—A Deep CNN Based Classification Approach for Acne Classes. In Proceedings of the 2019 12th International Conference on Information & Communication Technology and System (ICTS), Surabaya, Indonesia, 18 July 2019; pp. 203–208.

17. Seité, S.; Khammari, A.; Benzaquen, M.; Moyal, D.; Dréno, B. Development and accuracy of an artificial intelligence algorithm for acne grading from smartphone photographs. Exp. Dermatol. 2019, 28, 1252–1257.

18. Yang, Y.; Guo, L.; Wu, Q.; Zhang, M.; Zeng, R.; Ding, H.; Zheng, H.; Xie, J.; Li, Y.; Ge, Y.; et al. Construction and Evaluation of a Deep Learning Model for Assessing Acne Vulgaris Using Clinical Images. Dermatol. Ther. 2021, 11, 1239–1248.

19. Draft Guidance on Tretinoin. Available online: https://www.accessdata.fda.gov/drugsatfda_docs/psg/Tretinoin_Topical%20gel%200.4_NDA%20020475_RV%20Nov%202018.pdf (accessed on 30 July 2021).

20. Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards real-time object detection with region proposal networks. In Proceedings of Advances in Neural Information Processing Systems, Montreal, QC, Canada, 7–12 December 2015.

21. Ke, G.; Meng, Q.; Finley, T.; Wang, T.; Chen, W.; Ma, W.; Ye, Q.; Liu, T.-Y. LightGBM: A highly efficient gradient boosting decision tree. In Proceedings of the 31st International Conference on Neural Information Processing Systems (NIPS’17), Long Beach, CA, USA, 4–9 December 2017; Curran Associates Inc.: Red Hook, NY, USA, 2017; pp. 3149–3157.

22. Min, K.; Lee, G.; Lee, S. ACNet: Mask-Aware Attention with Dynamic Context Enhancement for Robust Acne Detection. arXiv 2021, arXiv:2105.14891.

23. Lim, Z.V.; Akram, F.; Ngo, C.P.; Winarto, A.A.; Lee, W.Q.; Liang, K.; Oon, H.H.; Thng, S.T.; Lee, H.K. Automated grading of acne vulgaris by deep learning with convolutional neural networks. Skin Res. Technol. 2020, 26, 187–192.

| Acne Type | Number of Lesions | Percentage (%) |
|---|---|---|
| Blackheads/Whiteheads | 15,686 | 37.47 |
| Acne scars | 23,214 | 55.46 |
| Papules/Pustules | 2677 | 6.40 |
| Nodular/Cyst lesions | 282 | 0.67 |
| Total | 41,859 | 100 |

| IGA Acne Severity Grade | Number of Images | Percentage (%) |
|---|---|---|
| 0 | 211 | 13.42 |
| 1 | 883 | 56.18 |
| 2 | 361 | 22.96 |
| 3 | 83 | 5.28 |
| 4 | 34 | 2.16 |
| Total | 1572 | 100 |
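The grade distribution is strongly imbalanced: Grade 1 covers 56.18% of the images and Grade 4 only 2.16%. One common remedy is to weight each class inversely to its frequency. This is shown here as an assumption, not as the authors' documented training procedure. The counts are taken from the table above.

```python
from typing import Dict

# Image counts per IGA grade, copied from the dataset statistics table.
IGA_COUNTS: Dict[int, int] = {0: 211, 1: 883, 2: 361, 3: 83, 4: 34}


def balanced_class_weights(counts: Dict[int, int]) -> Dict[int, float]:
    """Inverse-frequency weights: n_samples / (n_classes * count_c)."""
    n_samples = sum(counts.values())
    n_classes = len(counts)
    return {c: n_samples / (n_classes * n) for c, n in counts.items()}


if __name__ == "__main__":
    for grade, weight in balanced_class_weights(IGA_COUNTS).items():
        print(grade, round(weight, 2))
```

With these counts, a Grade 4 image receives a weight of about 9.25 and a Grade 1 image about 0.36. LightGBM's scikit-learn interface applies the same formula when `LGBMClassifier` is given `class_weight="balanced"`. Whether the authors reweighted classes is not stated here.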

| Grade | Description |
|---|---|
| 0 | Clear skin with no inflammatory or non-inflammatory lesions |
| 1 | Almost clear; rare non-inflammatory lesions with no more than one small inflammatory lesion |
| 2 | Mild severity; greater than Grade 1; some non-inflammatory lesions with no more than a few inflammatory lesions (papules/pustules only, no nodular lesions) |
| 3 | Moderate severity; greater than Grade 2; up to many non-inflammatory lesions and may have some inflammatory lesions, but no more than one small nodular lesion |
| 4 | Severe; greater than Grade 3; up to many non-inflammatory lesions and may have some inflammatory lesions, but no more than a few nodular lesions |
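The IGA grades are qualitative and cumulative, with each grade defined as "greater than" the one below it. A rule-based reading of the table helps show which lesion types drive each step. It treats blackheads/whiteheads as non-inflammatory, papules/pustules as inflammatory, and nodular/cyst lesions as nodular. This is only a sketch. The numeric cutoffs `rare_max` and `few_max` are hypothetical stand-ins for the words "rare" and "a few", which the scale does not quantify. Lesion size is ignored. The function is neither the grading model used in this study, which is learned with LightGBM, nor a validated clinical rule.

```python
def iga_grade_rule(
    non_inflammatory: int,
    inflammatory: int,
    nodular: int,
    rare_max: int = 5,   # HYPOTHETICAL cutoff for "rare"
    few_max: int = 10,   # HYPOTHETICAL cutoff for "a few"
) -> int:
    """Rough rule-based reading of the IGA descriptors (illustrative only).

    non_inflammatory: blackheads/whiteheads
    inflammatory:     papules/pustules
    nodular:          nodular/cyst lesions
    Acne scars are not part of the IGA descriptors and are not counted.
    """
    if non_inflammatory == 0 and inflammatory == 0 and nodular == 0:
        return 0  # clear skin
    if nodular >= 2:
        return 4  # more than one nodular lesion; 4 is the top of the scale
    if nodular == 1 or inflammatory > few_max:
        return 3  # one nodular lesion, or more than "a few" inflammatory ones
    if inflammatory > 1 or non_inflammatory > rare_max:
        return 2  # beyond "almost clear" but still no nodular lesions
    return 1  # rare non-inflammatory, at most one inflammatory lesion
```

The checks run from the most severe finding downwards, so a single nodular lesion sets a floor of Grade 3 regardless of how few other lesions are present.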

| Acne Type | AP |
|---|---|
| Blackheads/Whiteheads | 0.40 |
| Acne scars | 0.44 |
| Papules/Pustules | 0.64 |
| Nodular/Cyst lesions | 0.68 |
| mAP (all four acne types) | 0.54 |
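The mAP is the unweighted mean of the four per-class AP values. Rare classes such as nodular/cyst lesions therefore count as much as common ones:

```latex
\mathrm{mAP} = \frac{1}{4}\sum_{c=1}^{4} \mathrm{AP}_c
= \frac{0.40 + 0.44 + 0.64 + 0.68}{4}
= \frac{2.16}{4} = 0.54
```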

| IGA Grade | Precision | Recall | F1 |
|---|---|---|---|
| 0 | 0.77 | 0.63 | 0.70 |
| 1 | 0.92 | 0.90 | 0.91 |
| 2 | 0.72 | 0.77 | 0.75 |
| 3 | 0.60 | 0.61 | 0.60 |
| 4 | 0.65 | 0.87 | 0.74 |

Overall accuracy: 0.85
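The grading results combine per-grade precision, recall and F1 with a single overall accuracy (0.85). All four can be read off a confusion matrix. For Grade 1, for example, F1 = 2 × 0.92 × 0.90 / (0.92 + 0.90) ≈ 0.91. Other rows can differ in the last digit because the reported precision and recall are themselves rounded. The sketch below uses a small made-up 3-class confusion matrix, not the study's data.

```python
from typing import Dict, List


def per_class_metrics(confusion: List[List[int]]) -> Dict[int, Dict[str, float]]:
    """Precision, recall and F1 per class from a square confusion matrix.

    confusion[i][j] = number of images with true grade i predicted as grade j.
    """
    n = len(confusion)
    metrics: Dict[int, Dict[str, float]] = {}
    for c in range(n):
        tp = confusion[c][c]
        predicted = sum(confusion[r][c] for r in range(n))  # column sum
        actual = sum(confusion[c])                           # row sum
        precision = tp / predicted if predicted else 0.0
        recall = tp / actual if actual else 0.0
        f1 = (2 * precision * recall / (precision + recall)
              if precision + recall else 0.0)
        metrics[c] = {"precision": precision, "recall": recall, "f1": f1}
    return metrics


def accuracy(confusion: List[List[int]]) -> float:
    """Fraction of images on the diagonal (correctly graded)."""
    total = sum(sum(row) for row in confusion)
    correct = sum(confusion[i][i] for i in range(len(confusion)))
    return correct / total if total else 0.0


if __name__ == "__main__":
    toy = [[8, 2, 0], [1, 17, 2], [0, 3, 7]]  # made-up counts
    for grade, m in per_class_metrics(toy).items():
        print(grade, {k: round(v, 2) for k, v in m.items()})
    print("accuracy", accuracy(toy))
```

Accuracy is dominated by the majority class, which is why per-grade F1 is the more informative figure for the rare Grades 3 and 4.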

| Authors | Acne Types | Number of Lesions | Model | mAP |
|---|---|---|---|---|
| Rashataprucksa et al. [10] | Type I, Type III, post-inflammatory erythema, post-inflammatory hyperpigmentation | 15,917 | Faster R-CNN, R-FCN | Faster R-CNN: 0.233; R-FCN: 0.283 |
| Min et al. [22] | General acne (no type classification) | 18,983 | ACNet | 0.205 |
| Our method | Blackheads/Whiteheads, Papules/Pustules, Nodules/Cysts, and Acne scars | 41,859 | Faster R-CNN | 0.540 |

| Authors | Acne Severity Scale | Number of Images | Model | Accuracy |
|---|---|---|---|---|
| Seité et al. [17] | GEA scale | 5972 | – | 0.68 |
| Lim et al. [23] | IGA scale | 472 | Based on DenseNet, Inception-v4, and ResNet-18 | 0.67 |
| Yang et al. [18] | Chinese guidelines for the management of acne vulgaris (4 severity classes) | 5871 | Inception-v3 | 0.80 |
| Our method | IGA scale | 1572 | LightGBM | 0.85 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

How to Cite

Huynh, Q.T.; Nguyen, P.H.; Le, H.X.; Ngo, L.T.; Trinh, N.-T.; Tran, M.T.-T.; Nguyen, H.T.; Vu, N.T.; Nguyen, A.T.; Suda, K.; et al. Automatic Acne Object Detection and Acne Severity Grading Using Smartphone Images and Artificial Intelligence. Diagnostics 2022, 12, 1879. https://doi.org/10.3390/diagnostics12081879


Chicago/Turabian style: Huynh, Quan Thanh, Phuc Hoang Nguyen, Hieu Xuan Le, Lua Thi Ngo, Nhu-Thuy Trinh, Mai Thi-Thanh Tran, Hoan Tam Nguyen, Nga Thi Vu, Anh Tam Nguyen, Kazuma Suda, et al. 2022. "Automatic Acne Object Detection and Acne Severity Grading Using Smartphone Images and Artificial Intelligence." Diagnostics 12, no. 8: 1879. https://doi.org/10.3390/diagnostics12081879

APA style: Huynh, Q. T., Nguyen, P. H., Le, H. X., Ngo, L. T., Trinh, N.-T., Tran, M. T.-T., Nguyen, H. T., Vu, N. T., Nguyen, A. T., Suda, K., Tsuji, K., Ishii, T., Ngo, T. X., & Ngo, H. T. (2022). Automatic Acne Object Detection and Acne Severity Grading Using Smartphone Images and Artificial Intelligence. Diagnostics, 12(8), 1879. https://doi.org/10.3390/diagnostics12081879