Pulmonary Lesion Classification Framework Using the Weighted Ensemble Classification with Random Forest and CNN Models for EBUS Images

Abstract

1. Introduction

2. Materials

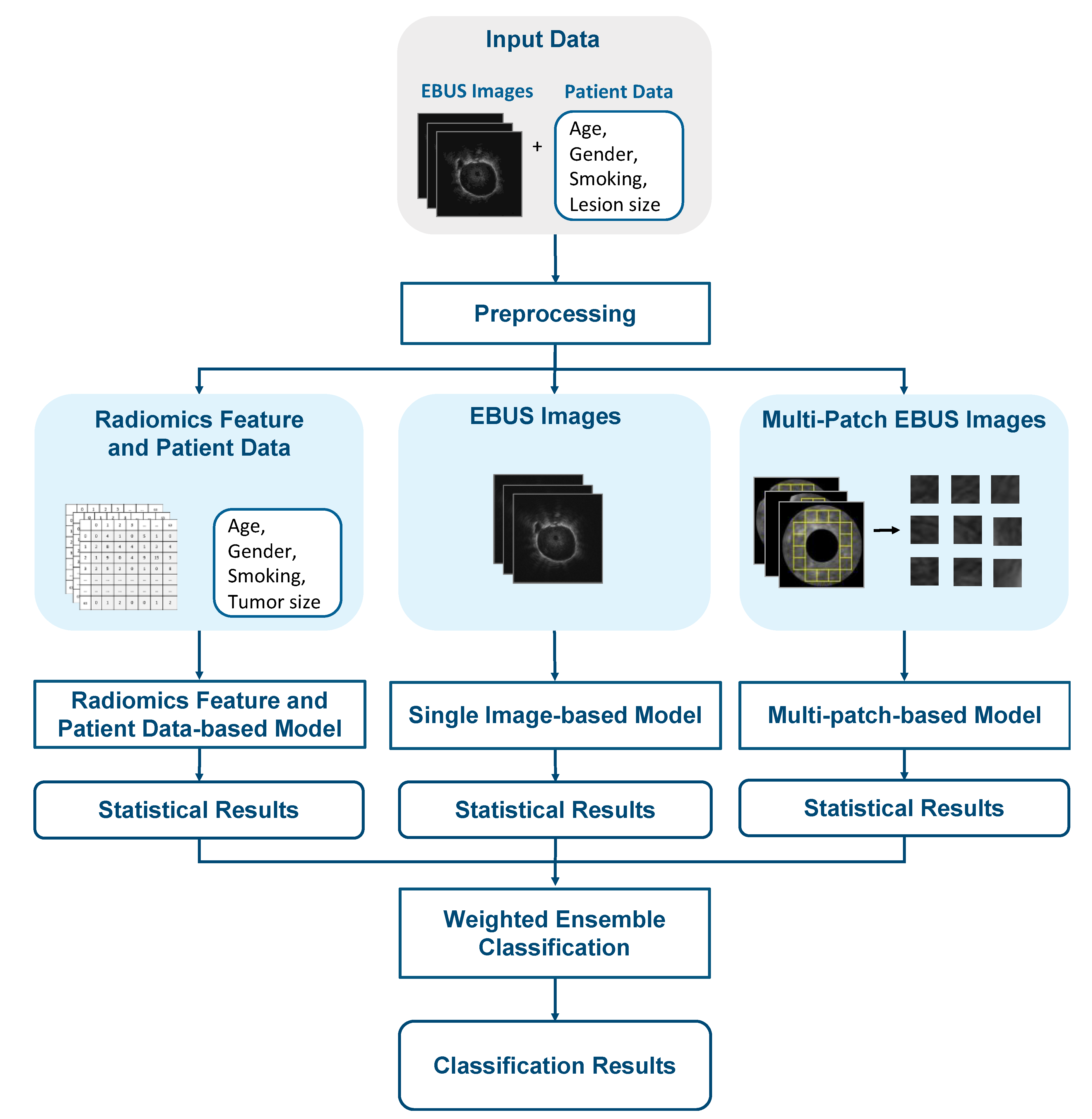

3. Methods

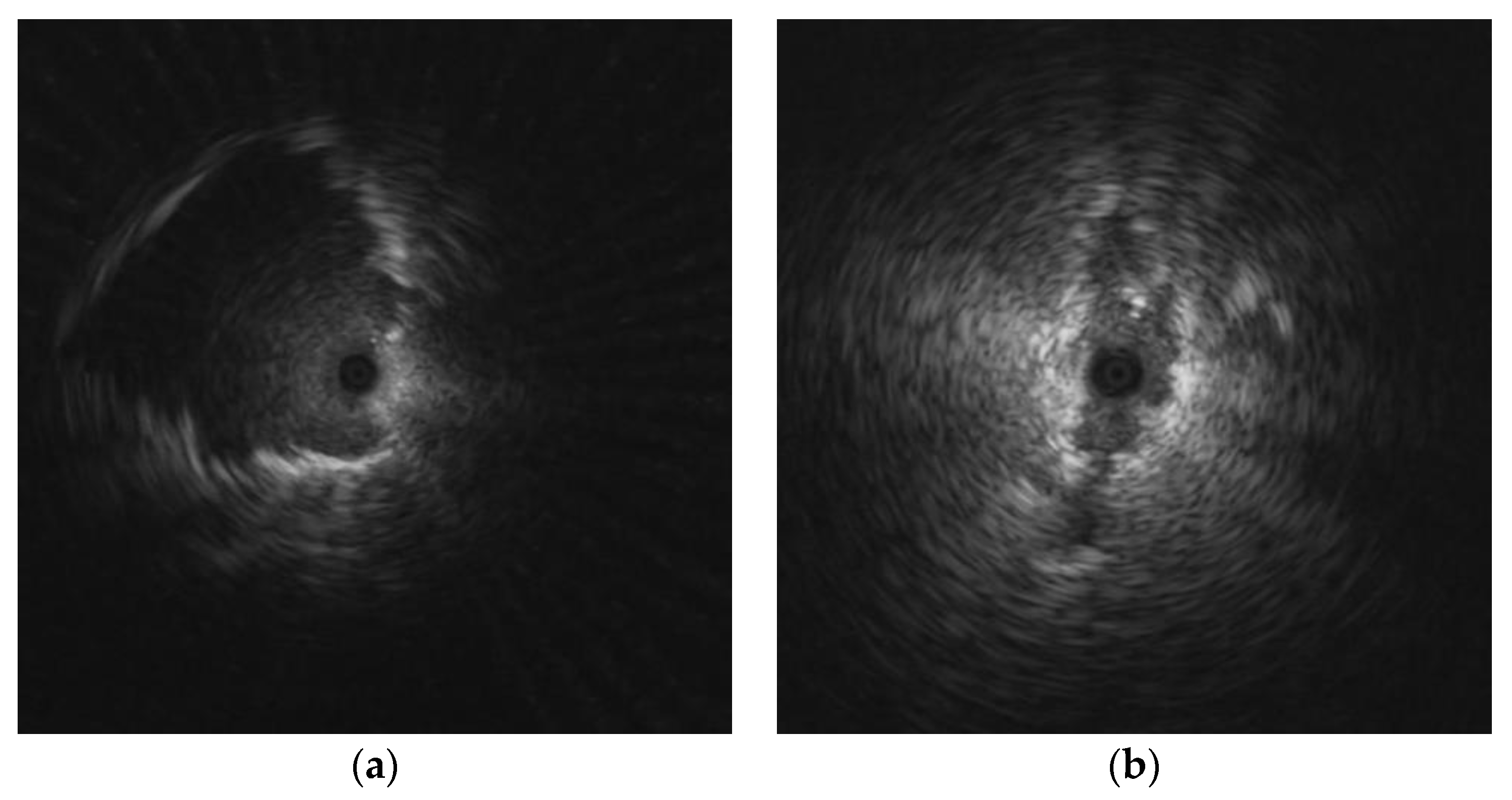

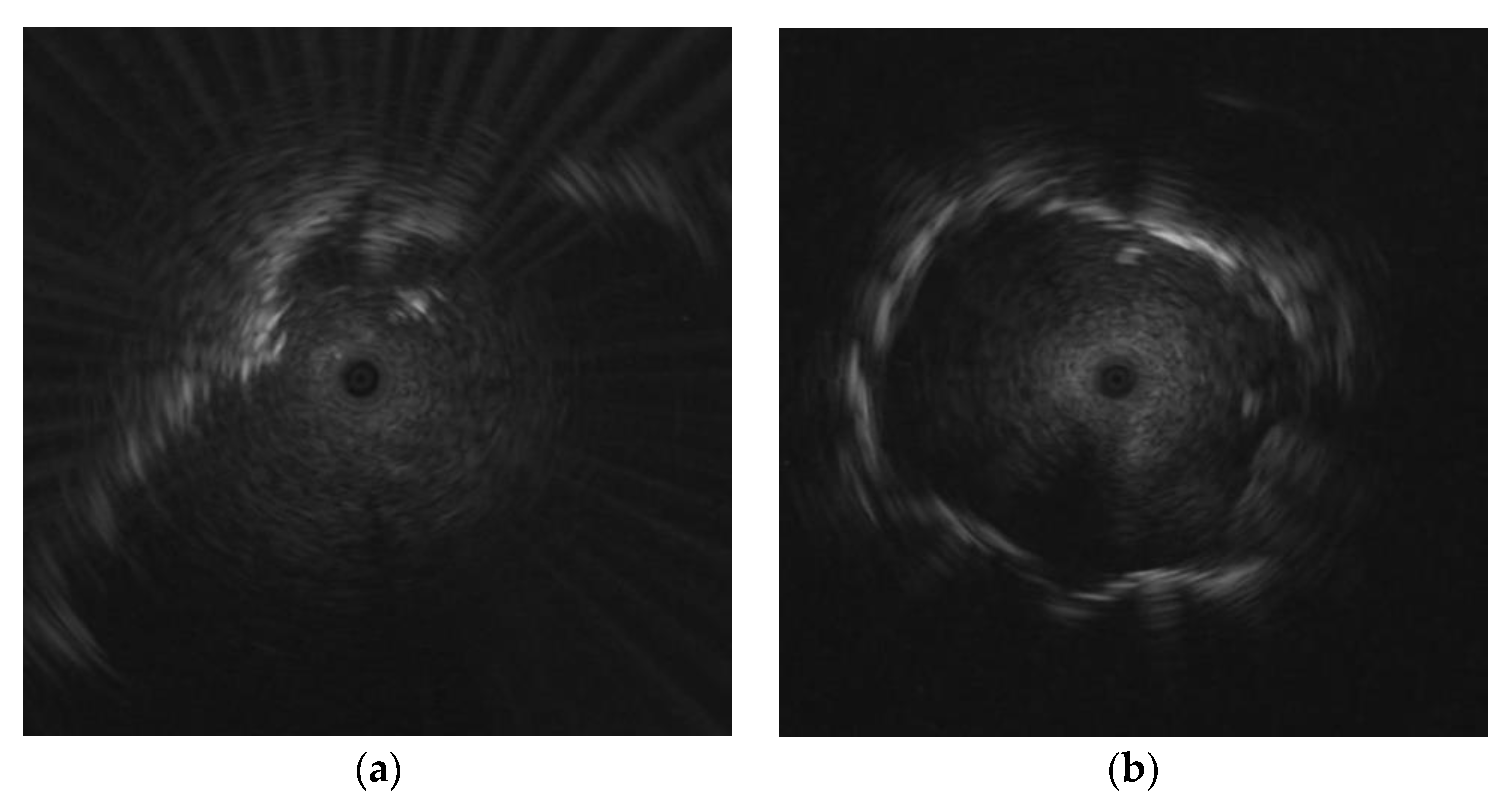

3.1. Preprocessing

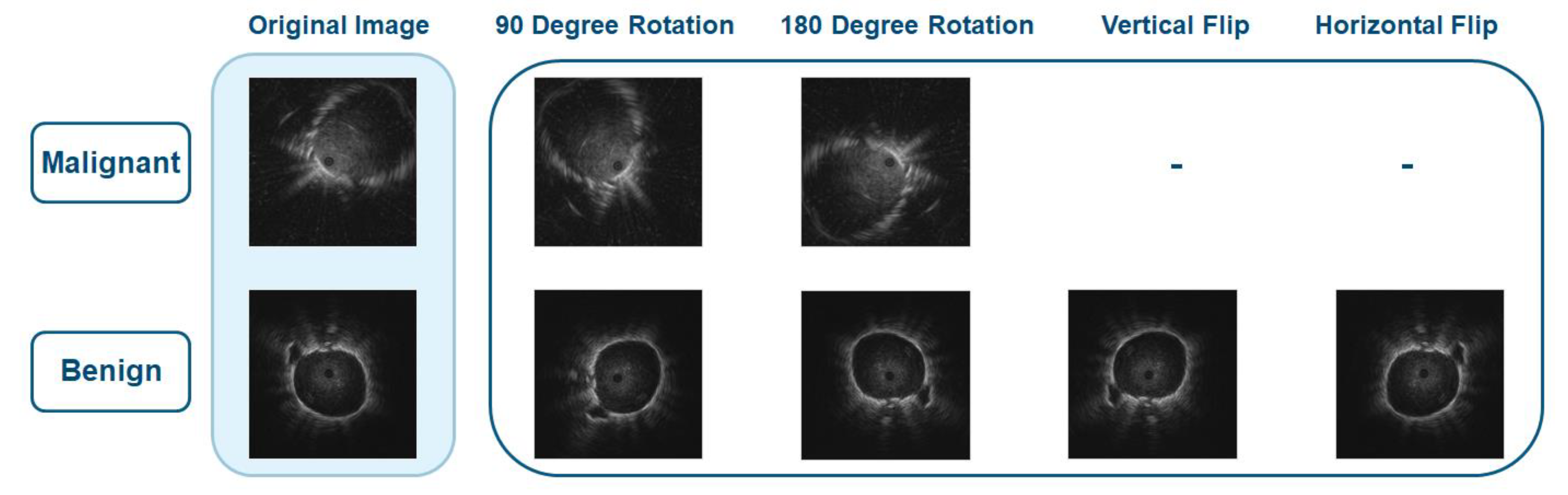

3.1.1. Class Balancing

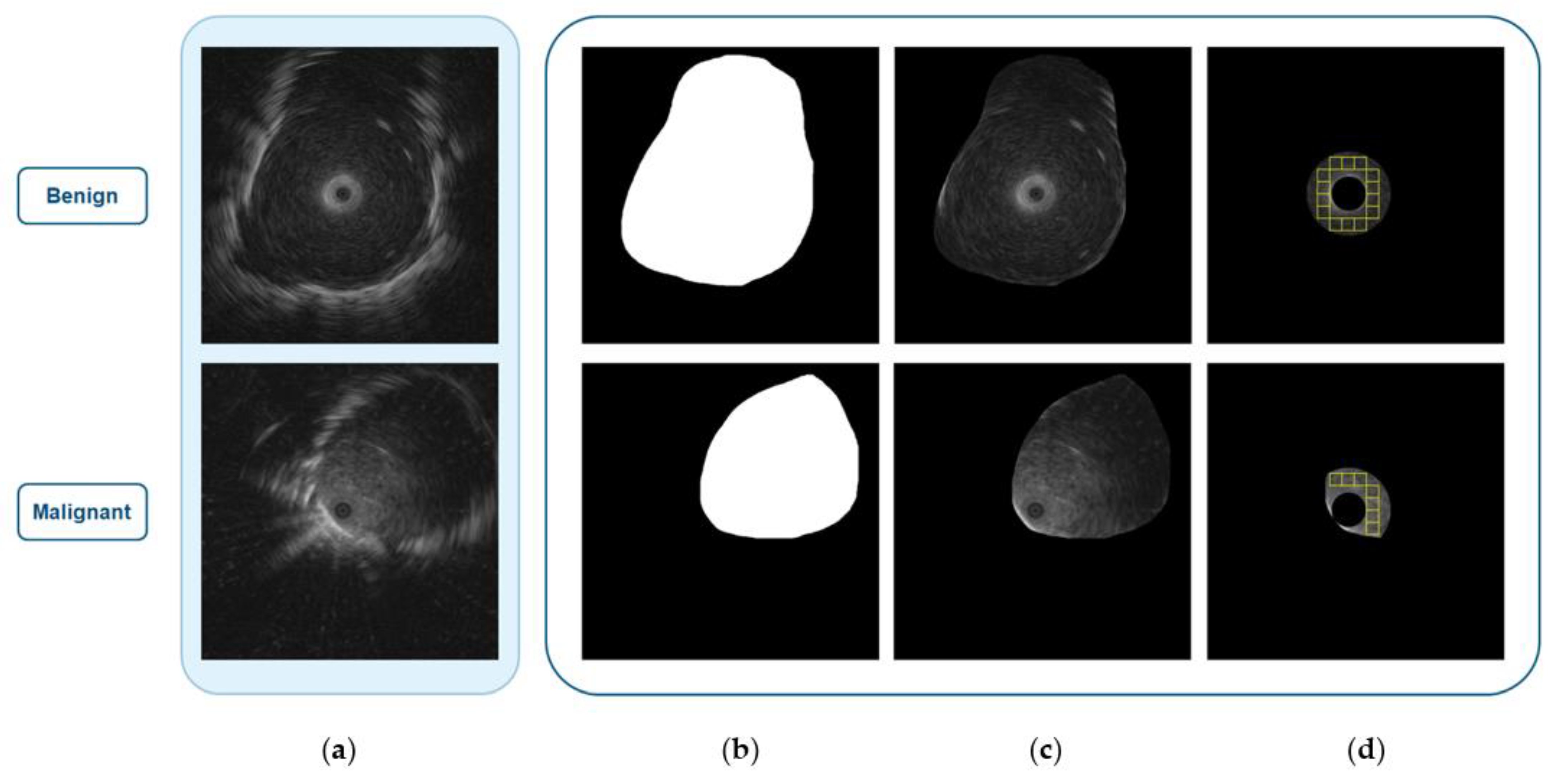

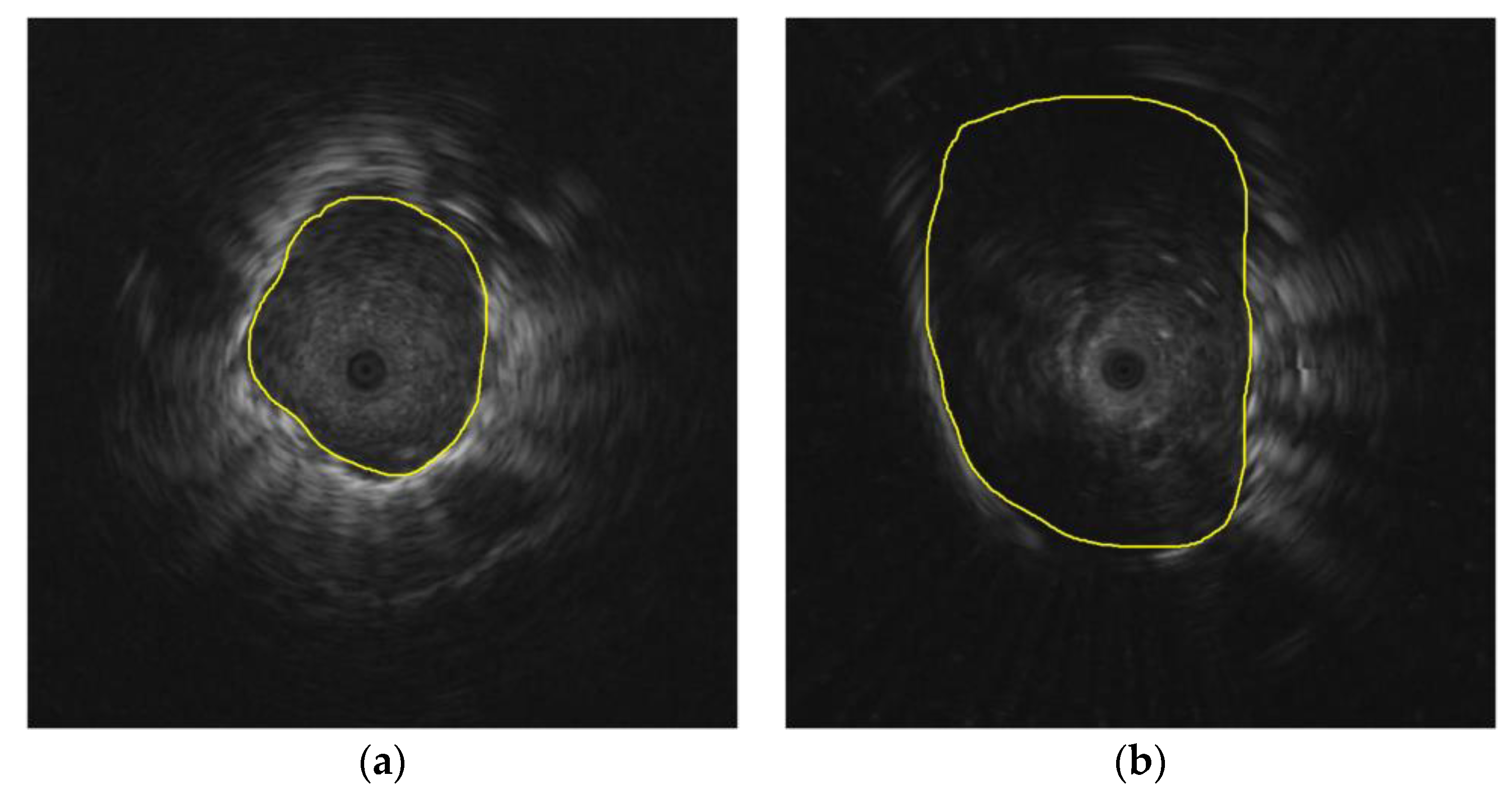

3.1.2. Mask Generation

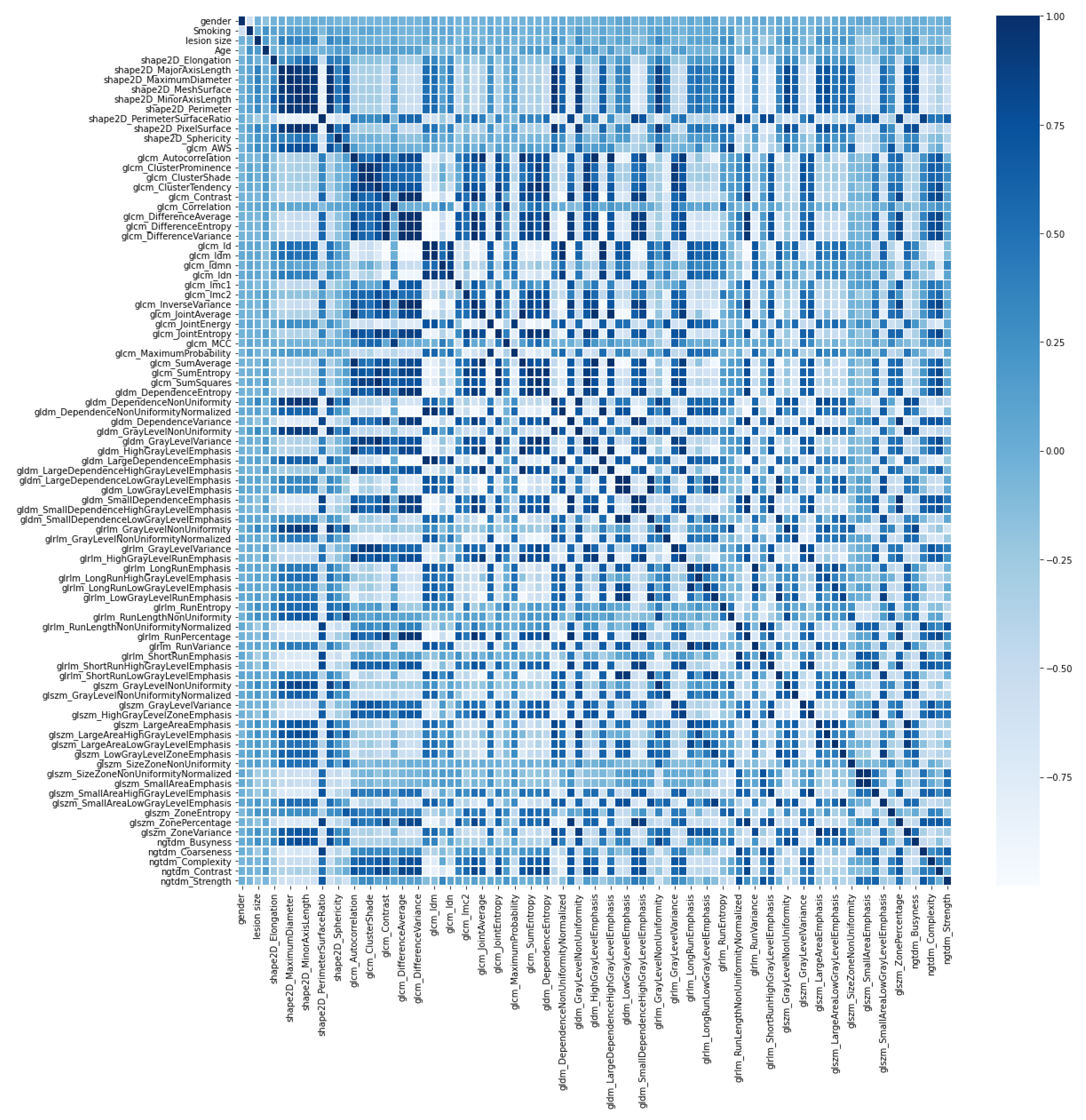

3.1.3. Feature Extraction

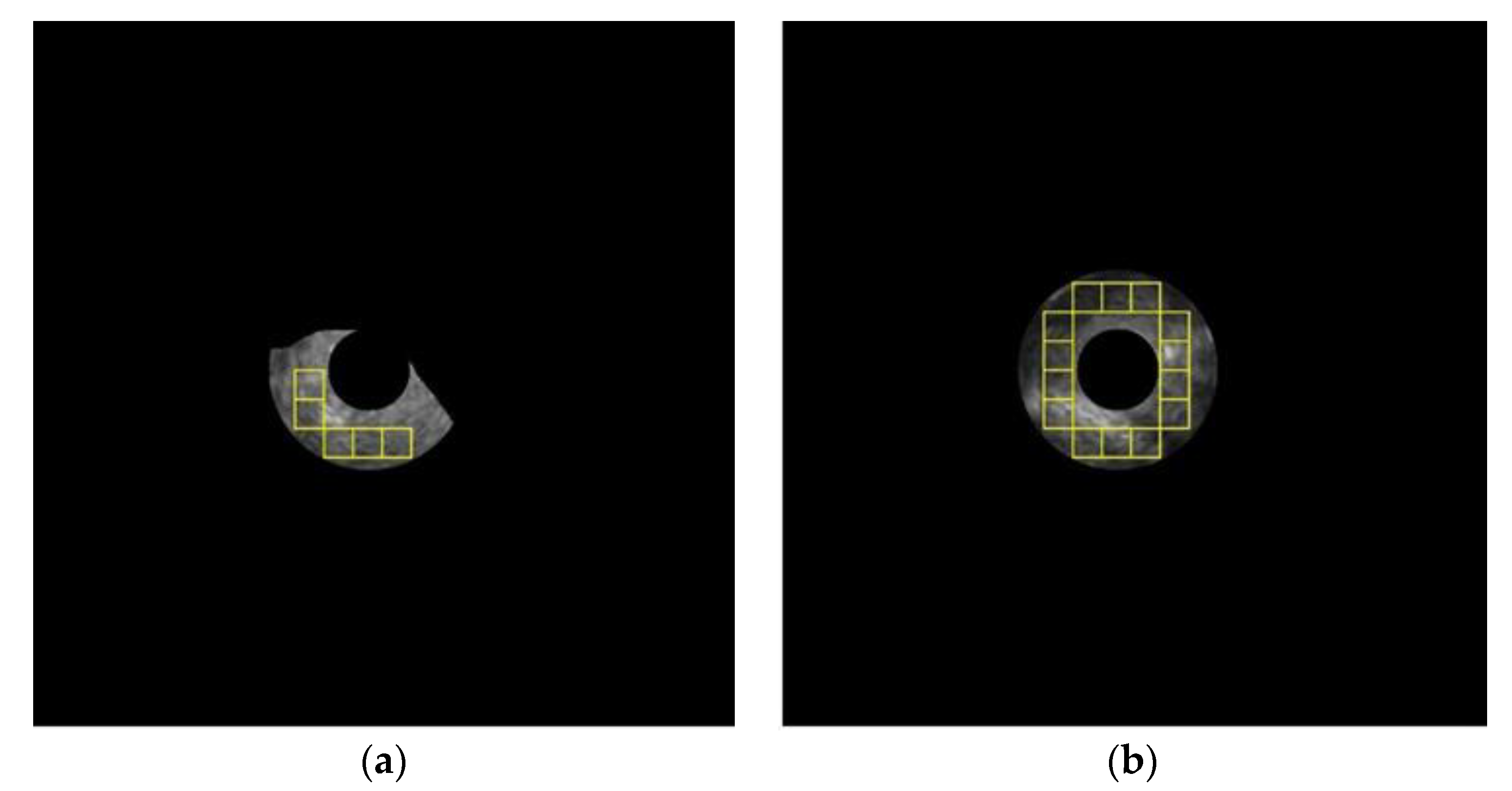

3.1.4. WOI Selection

3.2. The Proposed Framework

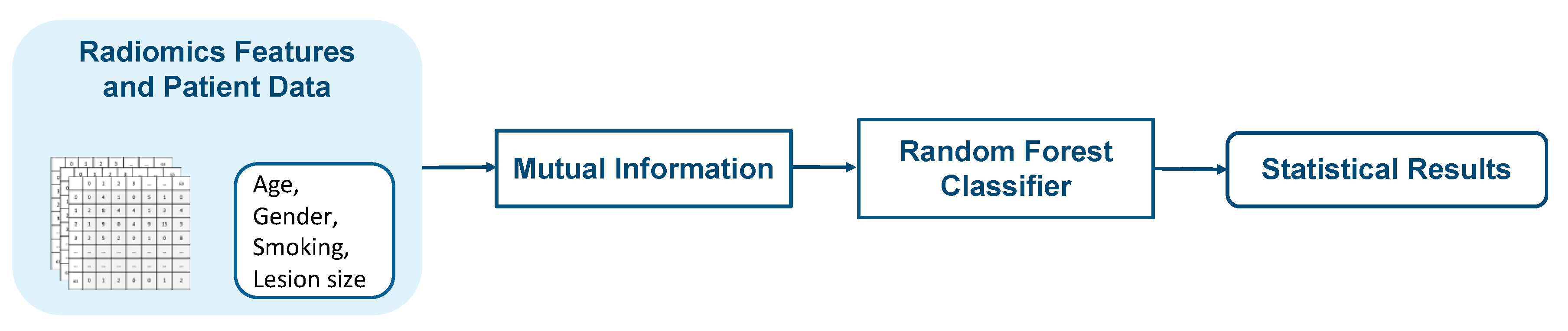

3.2.1. Radiomics Feature and Patient Data-Based Model

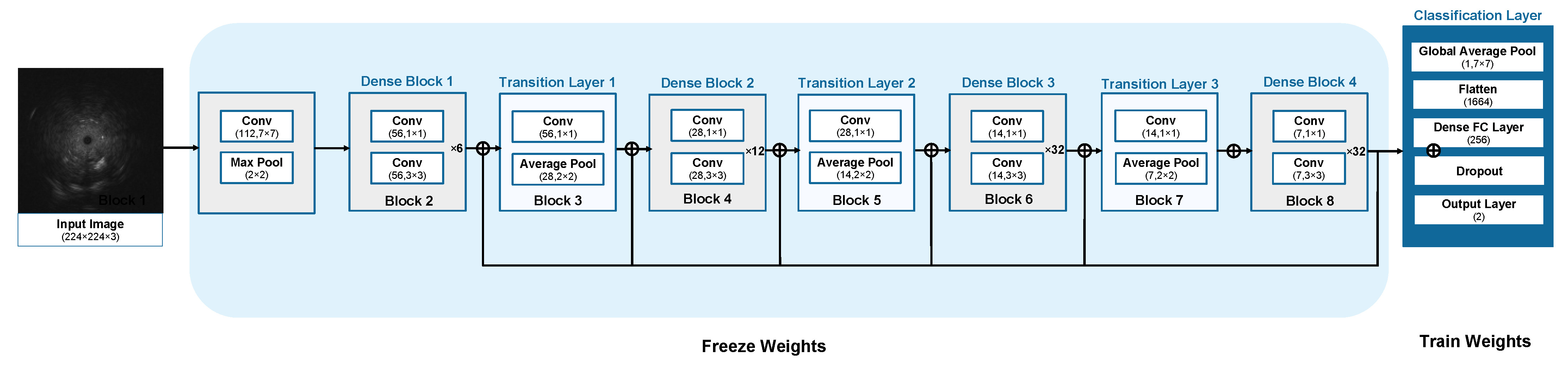

3.2.2. Single Image-Based Model

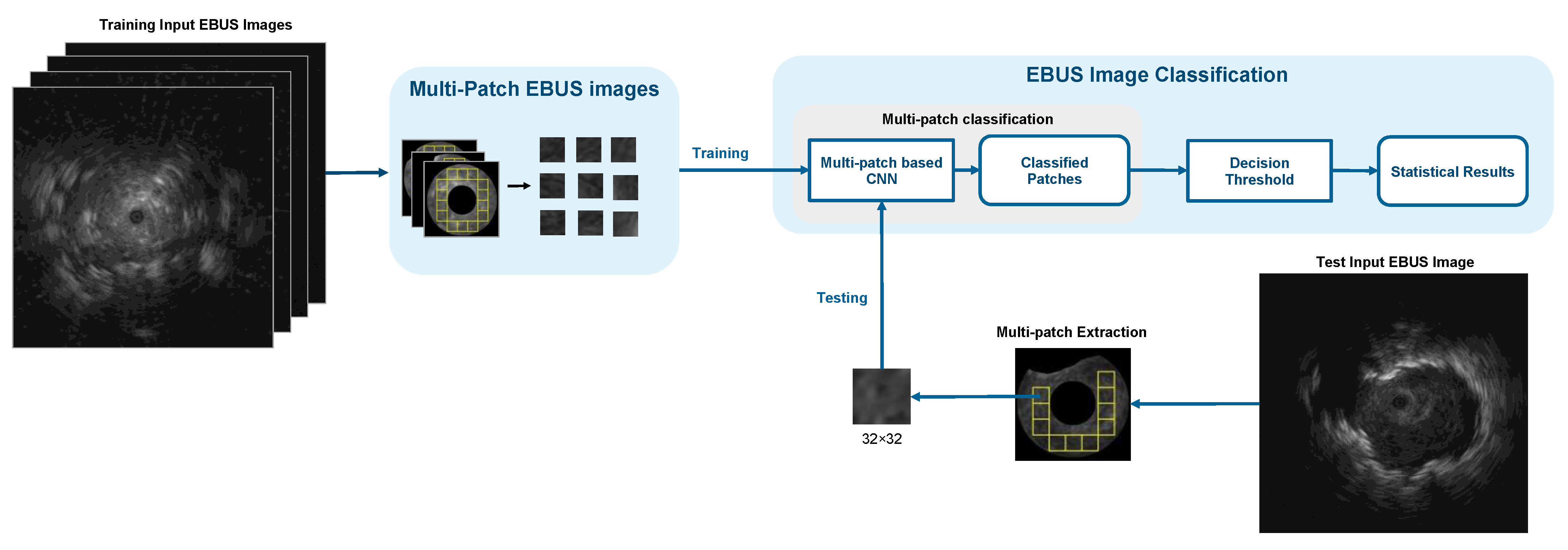

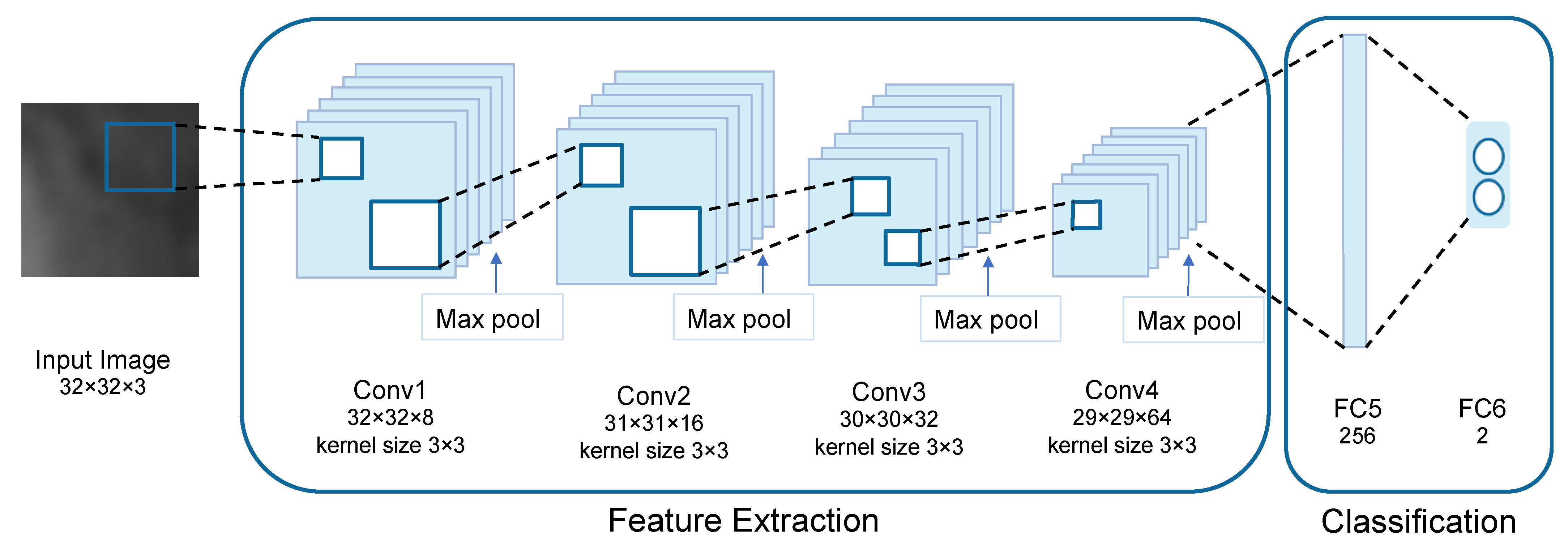

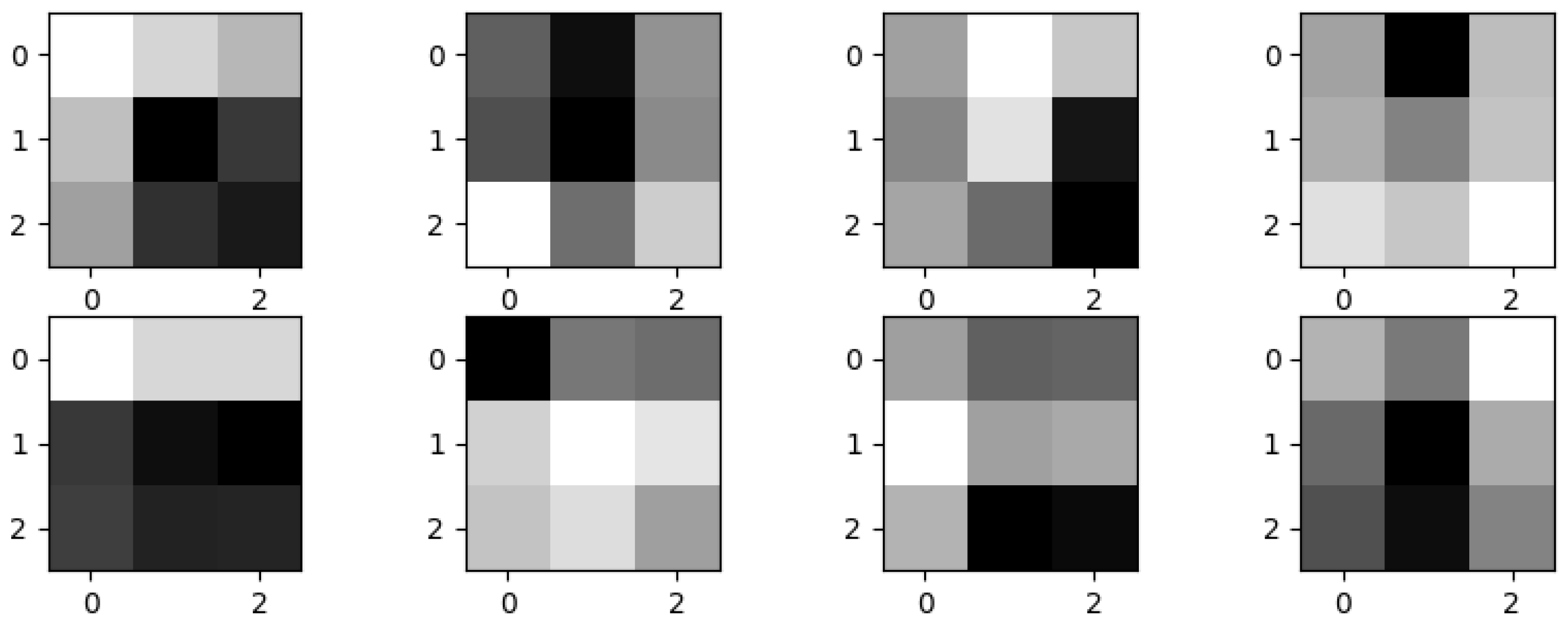

3.2.3. Multi-Patch-Based Model

3.3. Weighted Ensemble Classification

3.4. Performance Evaluation

4. Experimental Results and Discussion

4.1. Experimental Setup

4.2. Experimental Results

4.2.1. EBUS Image Enhancement

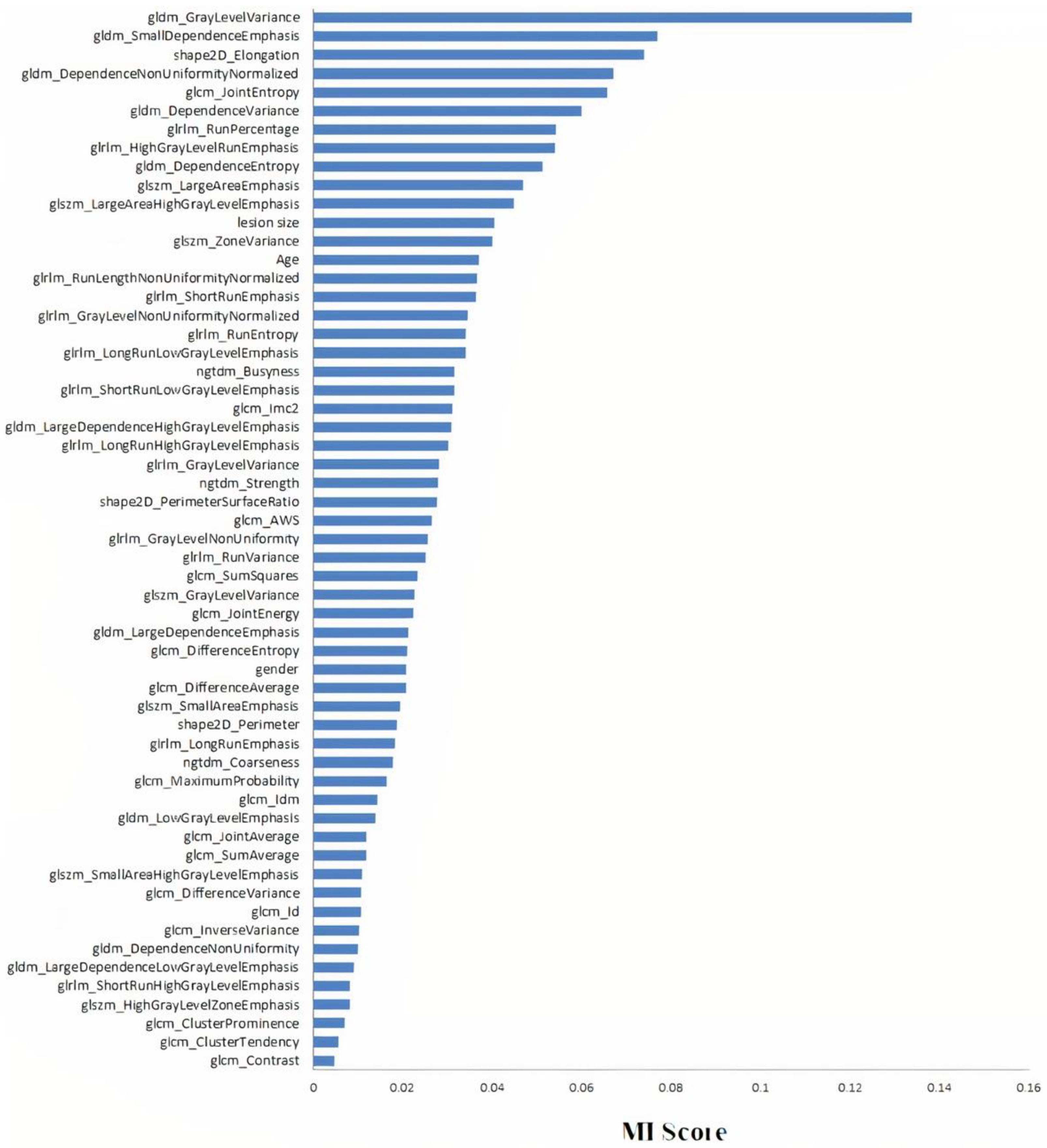

4.2.2. Feature Selection

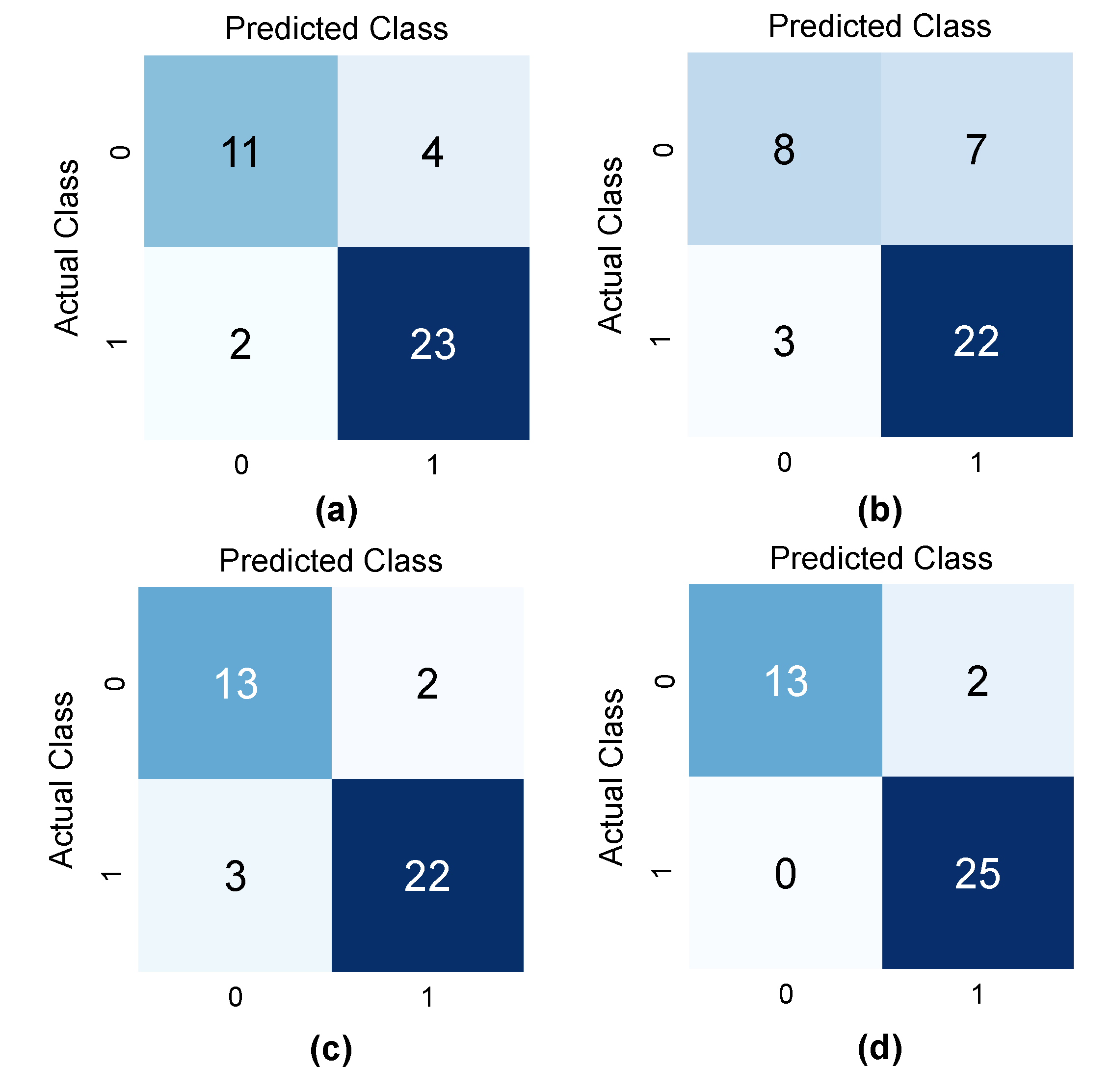

4.2.3. Classification Performance

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| | Malignant | Benign |
|---|---|---|
| Number of patients | 124 (74 male, 50 female) | 76 (29 male, 47 female) |
| Age, years (mean ± SD) | 64.32 ± 13.21 | 57.63 ± 15.51 |
| Lesion size | ≥3 cm (75), <3 cm (49) | ≥3 cm (38), <3 cm (38) |
| Smoking history | non-smoking (52), smoking (35), ex-smoking (37) | non-smoking (29), smoking (27), ex-smoking (20) |

| | Malignant | Benign | All |
|---|---|---|---|
| Original EBUS image data | 99 | 61 | 160 |
| Augmented image data | 198 | 244 | 442 |
| Total of training image data | 297 | 305 | 602 |
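The counts in the table above imply a fixed number of augmented copies per original image in each class. The following is a minimal sketch of that arithmetic, assuming every original image in a class receives the same number of copies; the table reports only totals, so the uniform factor and all names below are illustrative assumptions rather than the authors' procedure.

```python
# Counts copied from the training-data table above.
ORIGINAL = {"malignant": 99, "benign": 61}
AUGMENTED = {"malignant": 198, "benign": 244}


def copies_per_image(original, augmented):
    """Return the number of augmented copies per original image for each class.

    Assumes a uniform factor within each class (an assumption, not stated
    in the source); raises if the totals do not divide evenly.
    """
    factors = {}
    for label, n_original in original.items():
        factor, remainder = divmod(augmented[label], n_original)
        if remainder:
            raise ValueError(f"non-uniform augmentation for class {label!r}")
        factors[label] = factor
    return factors


factors = copies_per_image(ORIGINAL, AUGMENTED)
totals = {label: ORIGINAL[label] + AUGMENTED[label] for label in ORIGINAL}

print(factors)  # {'malignant': 2, 'benign': 4}
print(totals)   # {'malignant': 297, 'benign': 305}
```

Under this reading, the larger factor for the benign class brings the two classes close to parity (297 versus 305 training images).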

| Hyper-Parameter | Value |
|---|---|
| Optimizer | Stochastic Gradient Descent |
| Learning rate | 0.0001 |
| Loss function | Cross-entropy |
| Batch size | 32 |

| Layer | Type | Kernel Size | Stride | Output Size |
|---|---|---|---|---|
| Data | Input | | | 3 × 32 × 32 |
| Conv1 | Convolution | 3 × 3 | 1 | 8 × 32 × 32 |
| Conv2 | Convolution | 3 × 3 | 1 | 16 × 31 × 31 |
| Conv3 | Convolution | 3 × 3 | 1 | 32 × 30 × 30 |
| Conv4 | Convolution | 3 × 3 | 1 | 64 × 29 × 29 |
| FC5 | Fully connected | | | 256 × 1 × 1 |
| FC6 | Fully connected | | | 2 × 1 × 1 |

| Hyper-Parameter | Value |
|---|---|
| Optimizer | Adam |
| Learning rate | 0.001 |
| Loss function | Cross-entropy |
| Batch size | 128 |

| Model | Acc (%) | Sen (%) | Spec (%) | PPV (%) | NPV (%) | AUC |
|---|---|---|---|---|---|---|
| Radiomics feature and patient data-based model | 85.00 | 92.00 | 73.33 | 85.19 | 84.62 | 0.8267 |
| Single image-based model | 75.00 | 88.00 | 53.33 | 75.86 | 72.72 | 0.7067 |
| Multi-patch-based model | 87.50 | 88.00 | 86.67 | 91.67 | 81.25 | 0.8733 |
| Proposed framework | 95.00 | 100 | 86.67 | 92.59 | 100 | 0.9333 |
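The metrics in the table above follow the standard confusion-matrix definitions, with malignant as the positive class. The sketch below shows how each column is computed. The confusion-matrix counts are not given in the text. They are back-calculated here from the reported percentages, assuming a test set of 40 images (25 malignant, 15 benign), which is what remains after subtracting the 160 original training images from the 200 patients if each patient contributes one image. Treat these counts and the function name as assumptions.

```python
def binary_metrics(tp, fn, tn, fp):
    """Compute percentage metrics from confusion-matrix counts.

    tp/fn: malignant cases classified correctly/incorrectly.
    tn/fp: benign cases classified correctly/incorrectly.
    """
    total = tp + fn + tn + fp
    return {
        "acc": 100 * (tp + tn) / total,   # accuracy
        "sen": 100 * tp / (tp + fn),      # sensitivity (recall)
        "spec": 100 * tn / (tn + fp),     # specificity
        "ppv": 100 * tp / (tp + fp),      # positive predictive value
        "npv": 100 * tn / (tn + fn),      # negative predictive value
    }


# Back-calculated counts (assumed, not reported in the source).
radiomics = binary_metrics(tp=23, fn=2, tn=11, fp=4)
proposed = binary_metrics(tp=25, fn=0, tn=13, fp=2)

for name, metrics in [("radiomics", radiomics), ("proposed", proposed)]:
    print(name, {key: round(value, 2) for key, value in metrics.items()})
```

With these counts, the rounded values reproduce the first and last rows of the table, which suggests the reported percentages are consistent with a 40-image test set.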

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Khomkham, B.; Lipikorn, R. Pulmonary Lesion Classification Framework Using the Weighted Ensemble Classification with Random Forest and CNN Models for EBUS Images. Diagnostics 2022, 12, 1552. https://doi.org/10.3390/diagnostics12071552
