Performance of the Deep Neural Network Ciloctunet, Integrated with Open-Source Software for Ciliary Muscle Segmentation in Anterior Segment OCT Images, Is on Par with Experienced Examiners

Abstract

1. Introduction

2. Materials and Methods

2.1. Training, Validation, and Test Image Data

2.1.1. Imaging Protocol

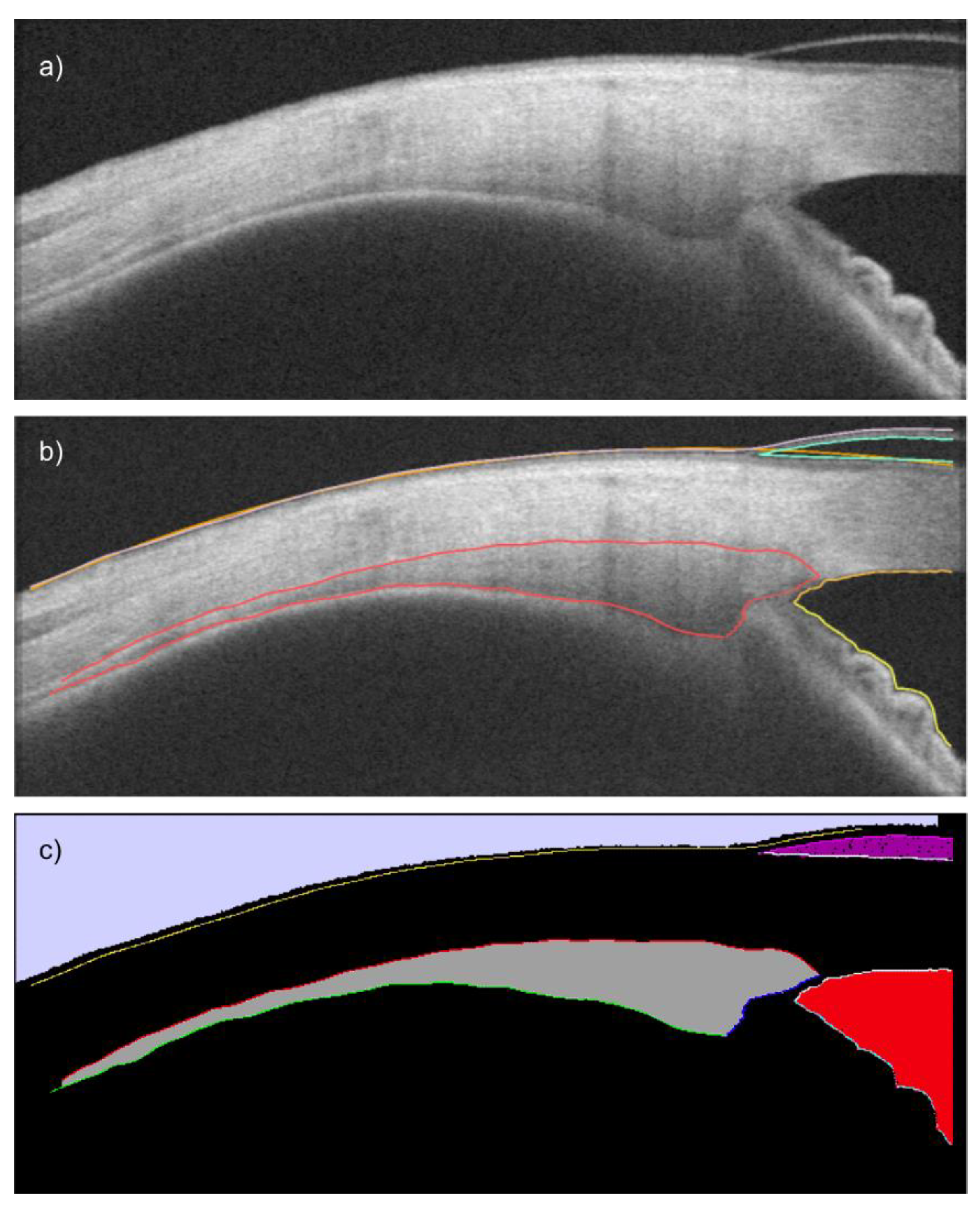

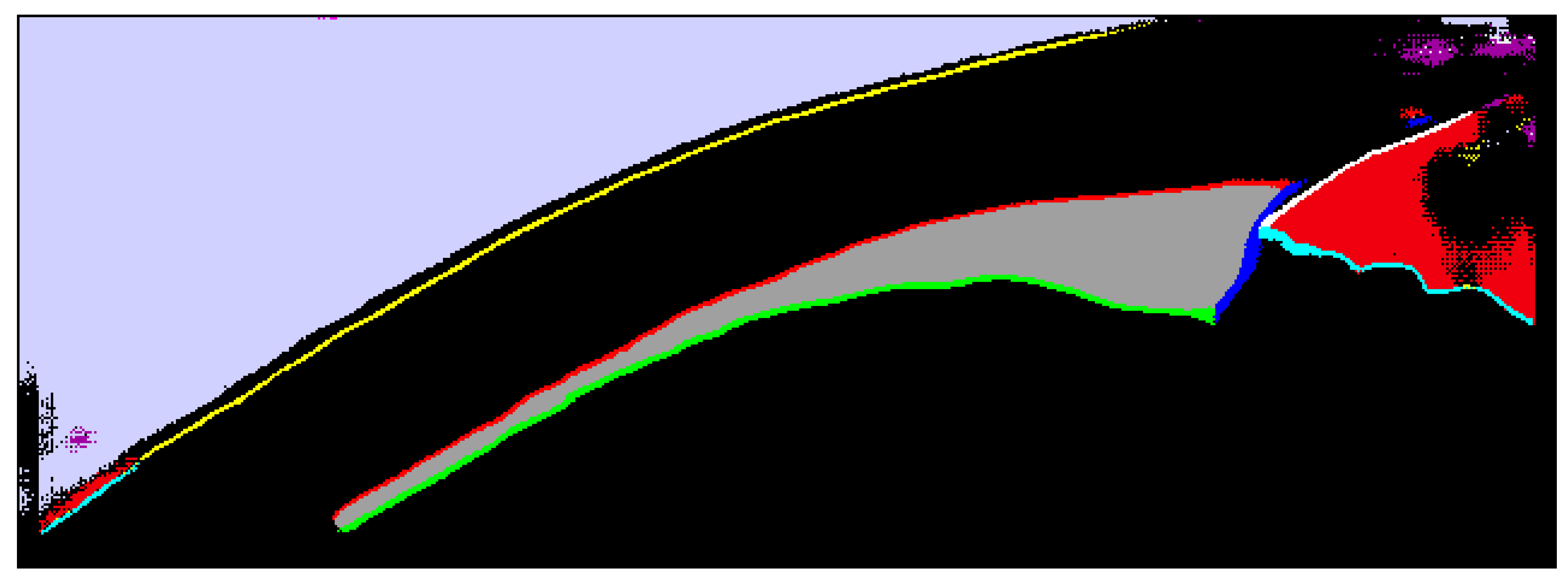

2.1.2. Image Preparation

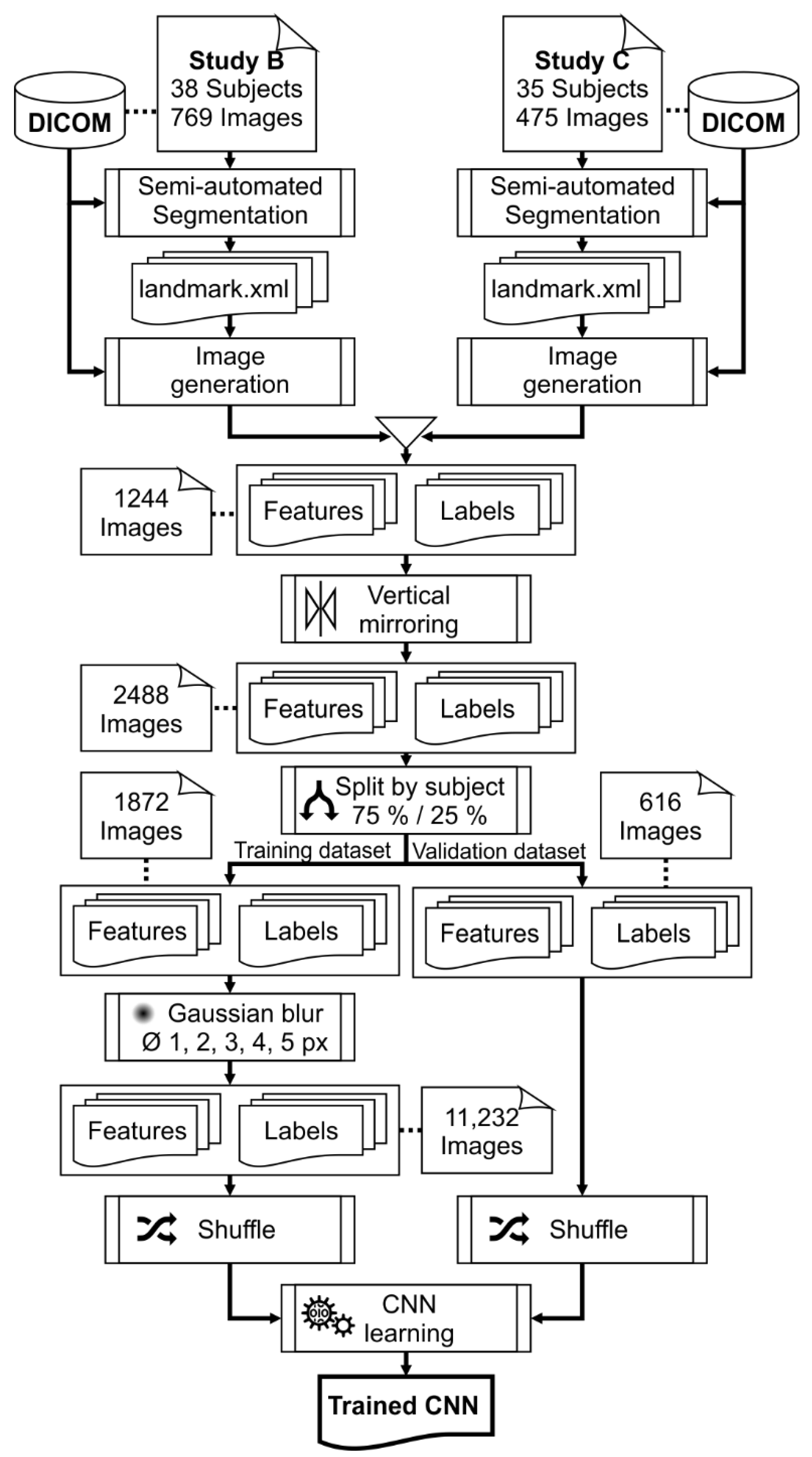

2.1.3. Training and Validation Data

2.2. Network Architecture

2.3. Network Training

2.4. Testing

2.5. Statistical Analysis

3. Results

3.1. Training Performance

3.2. Segmentation Accuracy

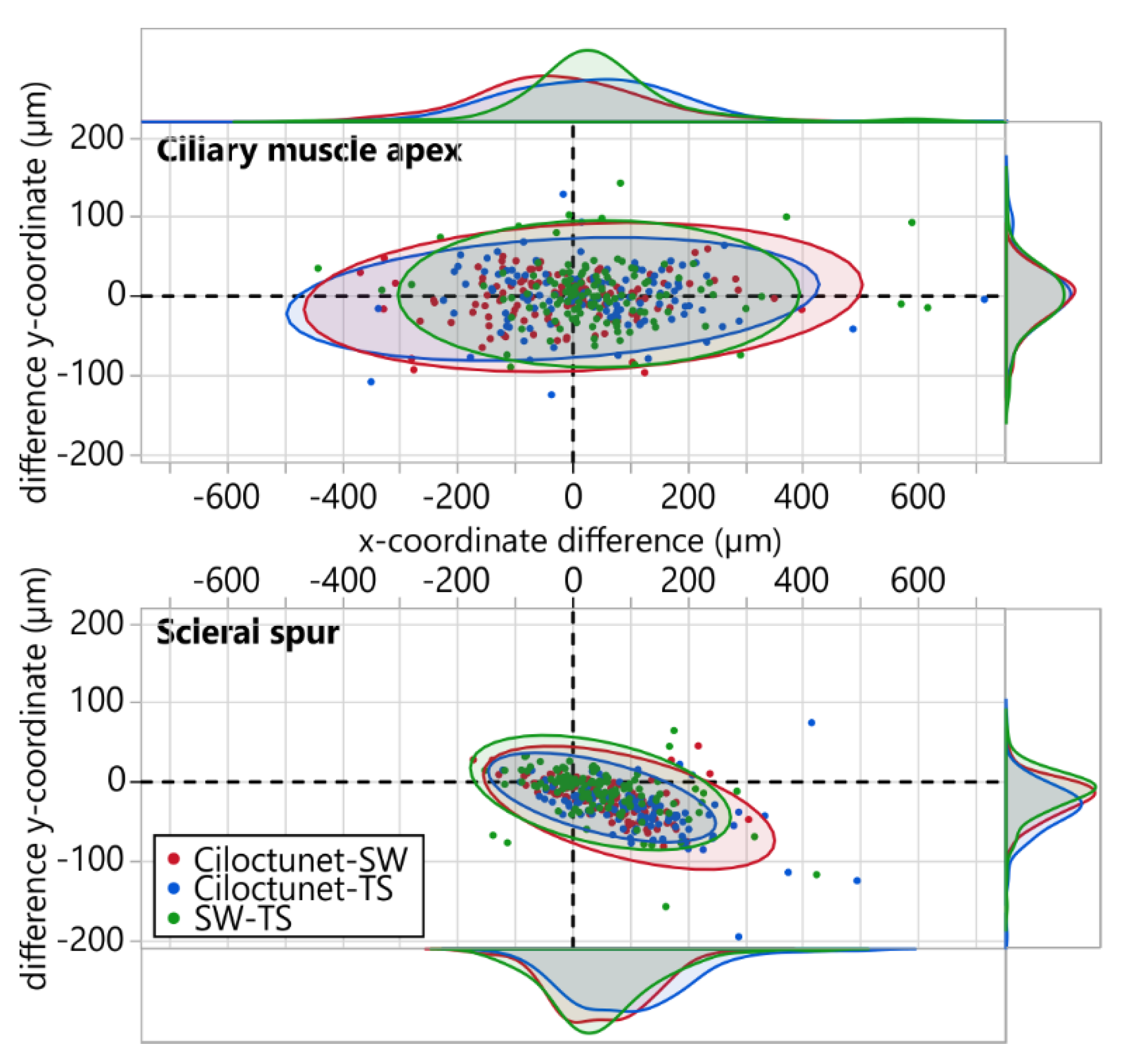

3.3. Ciliary Muscle Apex and Scleral Spur Coordinates

3.4. Effect of Segmenter on Biometric Parameters

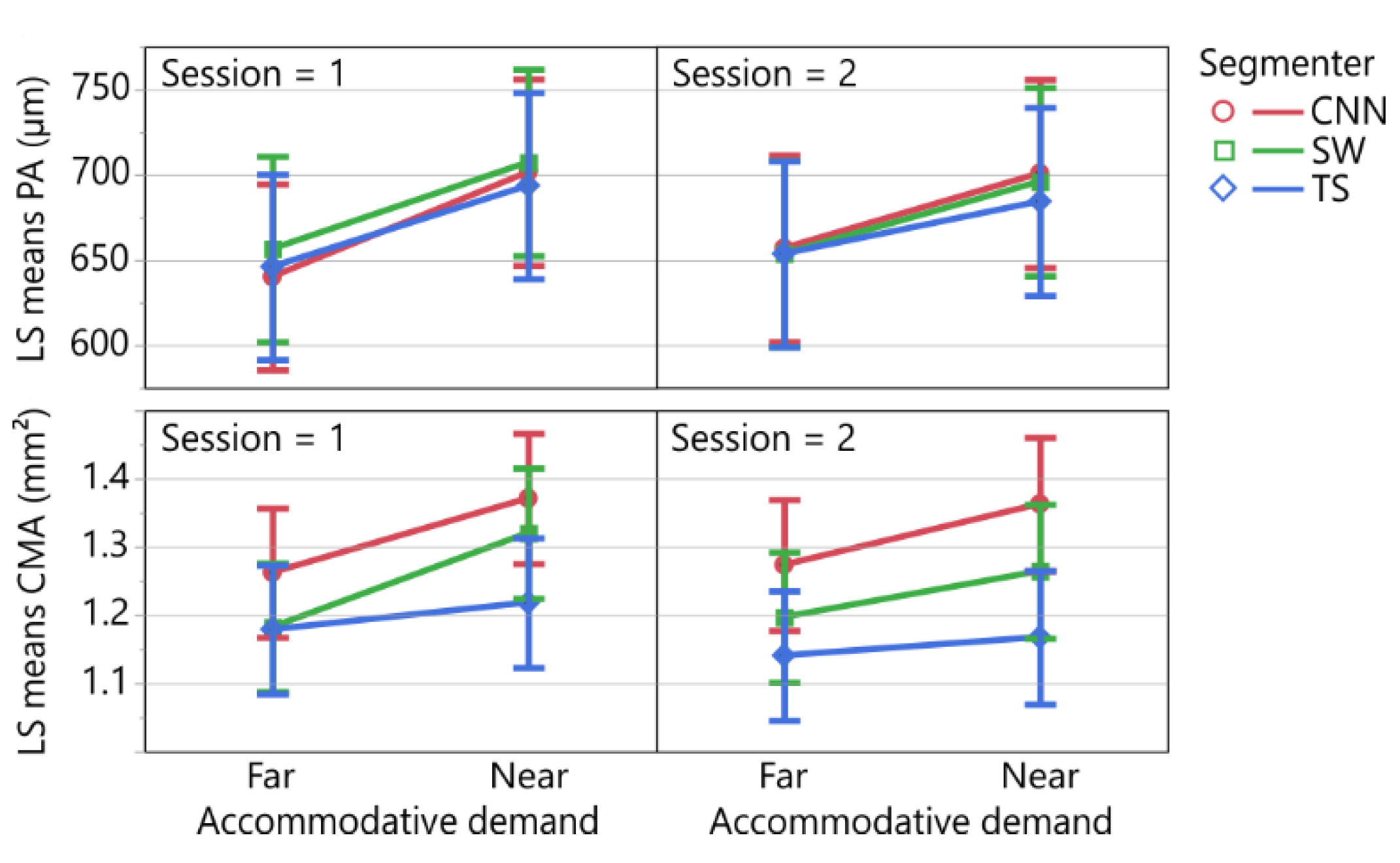

3.5. Repeatability Analysis of the Biometric Parameters

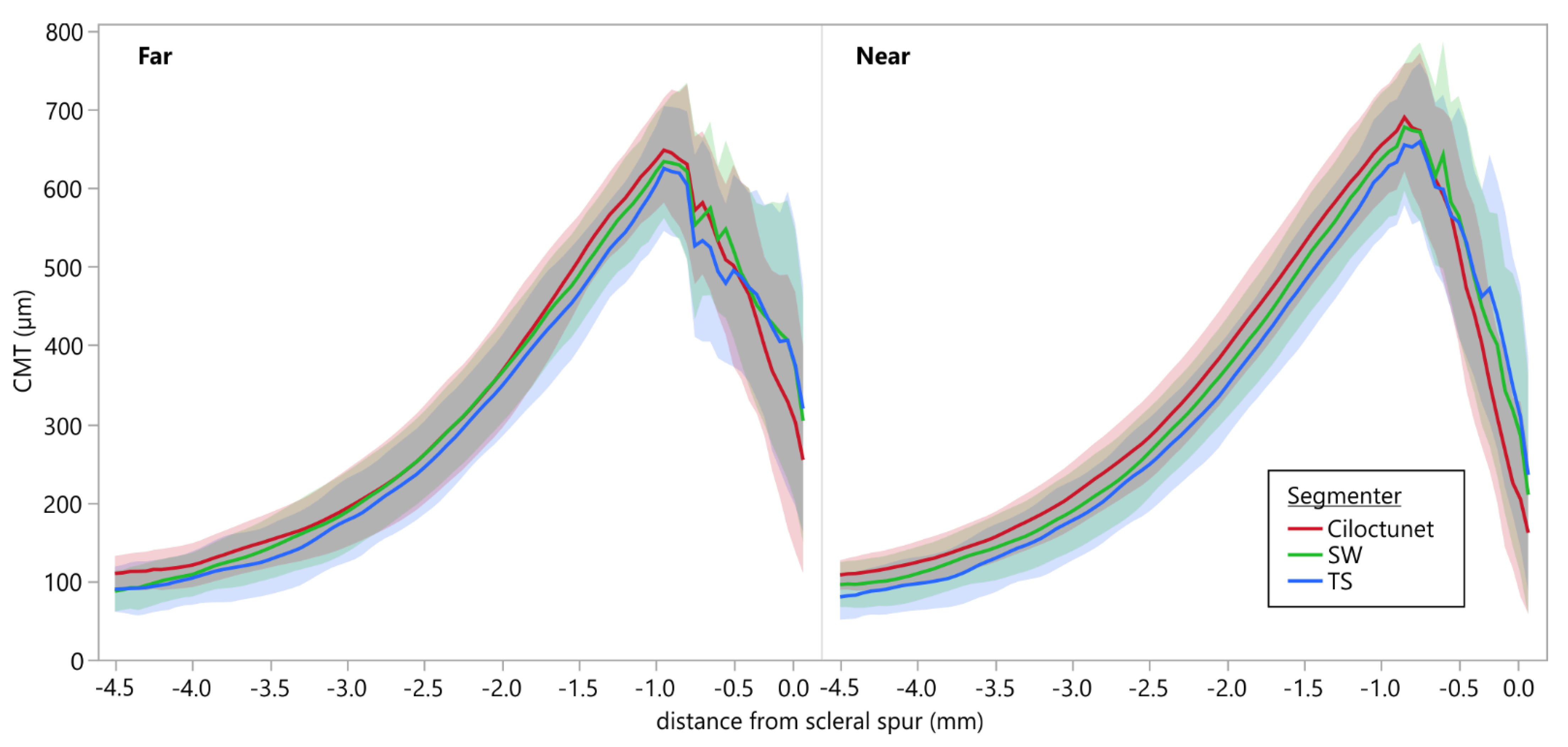

3.6. Comparison of Ciliary Muscle Thickness Profiles

3.7. Replication of the Results of Study C by Comparison of Biometric Parameters Derived from Ciloctunet Segmentations during Near and Far Accommodation

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Lee, R.; Ahmed, I.I.K. Anterior Segment Optical Coherence Tomography. Tech. Ophthalmol. 2006, 4, 120–127.

2. Ang, M.; Baskaran, M.; Werkmeister, R.M.; Chua, J.; Schmidl, D.; Aranha dos Santos, V.; Garhöfer, G.; Mehta, J.S.; Schmetterer, L. Anterior segment optical coherence tomography. Prog. Retin. Eye Res. 2018, 66, 132–156.

3. Richdale, K.; Bailey, M.D.; Sinnott, L.T.; Kao, C.-Y.; Zadnik, K.; Bullimore, M.A. The Effect of Phenylephrine on the Ciliary Muscle and Accommodation. Optom. Vis. Sci. 2012, 89, 1507–1511.

4. Lewis, H.A.; Kao, C.-Y.; Sinnott, L.T.; Bailey, M.D. Changes in Ciliary Muscle Thickness During Accommodation in Children. Optom. Vis. Sci. 2012, 89, 727–737.

5. Lossing, L.A.; Sinnott, L.T.; Kao, C.-Y.; Richdale, K.; Bailey, M.D. Measuring changes in ciliary muscle thickness with accommodation in young adults. Optom. Vis. Sci. 2012, 89, 719–726.

6. Richdale, K.; Sinnott, L.T.; Bullimore, M.A.; Wassenaar, P.A.; Schmalbrock, P.; Kao, C.-Y.; Patz, S.; Mutti, D.O.; Glasser, A.; Zadnik, K. Quantification of Age-Related and per Diopter Accommodative Changes of the Lens and Ciliary Muscle in the Emmetropic Human Eye. Investig. Ophthalmol. Vis. Sci. 2013, 54, 1095.

7. Ruggeri, M.; de Freitas, C.; Williams, S.; Hernandez, V.M.; Cabot, F.; Yesilirmak, N.; Alawa, K.; Chang, Y.-C.; Yoo, S.H.; Gregori, G.; et al. Quantification of the ciliary muscle and crystalline lens interaction during accommodation with synchronous OCT imaging. Biomed. Opt. Express 2016, 7, 1351–1364.

8. Ruggeri, M.; Hernandez, V.; de Freitas, C.; Manns, F.; Parel, J.-M. Biometry of the ciliary muscle during dynamic accommodation assessed with OCT. In Ophthalmic Technologies XXIV; Manns, F., Söderberg, P.G., Ho, A., Eds.; SPIE: Bellingham, WA, USA, 2014; Volume 8930, p. 89300W.

9. Shao, Y.; Tao, A.; Jiang, H.; Shen, M.; Zhong, J.; Lu, F.; Wang, J. Simultaneous real-time imaging of the ocular anterior segment including the ciliary muscle during accommodation. Biomed. Opt. Express 2013, 4, 466–480.

10. Wagner, S.; Zrenner, E.; Strasser, T. Ciliary muscle thickness profiles derived from optical coherence tomography images. Biomed. Opt. Express 2018, 9, 5100.

11. Buckhurst, H.; Gilmartin, B.; Cubbidge, R.P.; Nagra, M.; Logan, N.S. Ocular biometric correlates of ciliary muscle thickness in human myopia. Ophthalmic Physiol. Opt. 2013, 33, 294–304.

12. Wagner, S.; Zrenner, E.; Strasser, T. Emmetropes and myopes differ little in their accommodation dynamics but strongly in their ciliary muscle morphology. Vision Res. 2019, 163, 42–51.

13. Jeon, S.; Lee, W.K.; Lee, K.; Moon, N.J. Diminished ciliary muscle movement on accommodation in myopia. Exp. Eye Res. 2012, 105, 9–14.

14. Bailey, M.D.; Sinnott, L.T.; Mutti, D.O. Ciliary Body Thickness and Refractive Error in Children. Investig. Ophthalmol. Vis. Sci. 2008, 49, 4353.

15. Pucker, A.D.; Sinnott, L.T.; Kao, C.-Y.; Bailey, M.D. Region-Specific Relationships Between Refractive Error and Ciliary Muscle Thickness in Children. Investig. Ophthalmol. Vis. Sci. 2013, 54, 4710.

16. Sheppard, A.L.; Davies, L.N. The Effect of Ageing on In Vivo Human Ciliary Muscle Morphology and Contractility. Investig. Ophthalmol. Vis. Sci. 2011, 52, 1809.

17. Kuchem, M.K.; Sinnott, L.T.; Kao, C.-Y.; Bailey, M.D. Ciliary Muscle Thickness in Anisometropia. Optom. Vis. Sci. 2013, 90, 1312–1320.

18. Schultz, K.E.; Sinnott, L.T.; Mutti, D.O.; Bailey, M.D. Accommodative Fluctuations, Lens Tension, and Ciliary Body Thickness in Children. Optom. Vis. Sci. 2009, 86, 677–684.

19. Sheppard, A.L.; Davies, L.N. In vivo analysis of ciliary muscle morphologic changes with accommodation and axial ametropia. Investig. Ophthalmol. Vis. Sci. 2010, 51, 6882–6889.

20. Wagner, S.; Schaeffel, F.; Zrenner, E.; Straßer, T. Prolonged nearwork affects the ciliary muscle morphology. Exp. Eye Res. 2019, 186, 107741.

21. Muftuoglu, O.; Hosal, B.M.; Zilelioglu, G. Ciliary body thickness in unilateral high axial myopia. Eye 2009, 23, 1176–1181.

22. Kao, C.-Y.; Richdale, K.; Sinnott, L.T.; Grillott, L.E.; Bailey, M.D. Semiautomatic extraction algorithm for images of the ciliary muscle. Optom. Vis. Sci. 2011, 88, 275–289.

23. Laughton, D.S.; Coldrick, B.J.; Sheppard, A.L.; Davies, L.N. A program to analyse optical coherence tomography images of the ciliary muscle. Contact Lens Anterior Eye 2015, 38, 402–408.

24. Kaphle, D.; Schmid, K.L.; Davies, L.N.; Suheimat, M.; Atchison, D.A. Ciliary Muscle Dimension Changes With Accommodation Vary in Myopia and Emmetropia. Investig. Ophthalmol. Vis. Sci. 2022, 63, 24.

25. Monsálvez-Romín, D.; Domínguez-Vicent, A.; Esteve-Taboada, J.J.; Montés-Micó, R.; Ferrer-Blasco, T. Multisectorial changes in the ciliary muscle during accommodation measured with high-resolution optical coherence tomography. Arq. Bras. Oftalmol. 2019, 82, 207–213.

26. Domínguez-Vicent, A.; Monsálvez-Romín, D.; Esteve-Taboada, J.J.; Montés-Micó, R.; Ferrer-Blasco, T. Effect of age in the ciliary muscle during accommodation: Sectorial analysis. J. Optom. 2018, 12, 14–21.

27. Esteve-Taboada, J.J.; Domínguez-Vicent, A.; Monsálvez-Romín, D.; Del Águila-Carrasco, A.J.; Montés-Micó, R. Non-invasive measurements of the dynamic changes in the ciliary muscle, crystalline lens morphology, and anterior chamber during accommodation with a high-resolution OCT. Graefe’s Arch. Clin. Exp. Ophthalmol. 2017, 255, 1385–1394.

28. Fernández-Vigo, J.I.; Shi, H.; Kudsieh, B.; Arriola-Villalobos, P.; De-Pablo Gómez-de-Liaño, L.; García-Feijóo, J.; Fernández-Vigo, J.Á. Ciliary muscle dimensions by swept-source optical coherence tomography and correlation study in a large population. Acta Ophthalmol. 2020, 98, aos.14304.

29. Moulakaki, A.I.; Monsálvez-Romín, D.; Domínguez-Vicent, A.; Esteve-Taboada, J.J.; Montés-Micó, R. Semiautomatic procedure to assess changes in the eye accommodative system. Int. Ophthalmol. 2018, 38, 2451–2462.

30. Shi, J.; Zhao, J.; Zhao, F.; Naidu, R.; Zhou, X. Ciliary muscle morphology and accommodative lag in hyperopic anisometropic children. Int. Ophthalmol. 2020, 40, 917–924.

31. Bailey, M.D. How should we measure the ciliary muscle? Investig. Ophthalmol. Vis. Sci. 2011, 52, 1817–1818.

32. Straßer, T.; Wagner, S.; Zrenner, E. Review of the application of the open-source software CilOCT for semi-automatic segmentation and analysis of the ciliary muscle in OCT images. PLoS ONE 2020, 15, e0234330.

33. Ronneberger, O.; Fischer, P.; Brox, T. U-Net: Convolutional Networks for Biomedical Image Segmentation. In Medical Image Computing and Computer-Assisted Intervention—MICCAI 2015, Proceedings of the 18th International Conference, Munich, Germany, 5–9 October 2015; Navab, N., Hornegger, J., Wells, W.M., Frangi, A.F., Eds.; Lecture Notes in Computer Science; Springer International Publishing: Cham, Switzerland, 2015; Volume 9351, pp. 234–241. ISBN 978-3-319-24573-7.

34. Shelhamer, E.; Long, J.; Darrell, T. Fully Convolutional Networks for Semantic Segmentation. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 640–651.

35. Team, T.I.D. ImageMagick. Available online: https://imagemagick.org (accessed on 12 June 2020).

36. Ioffe, S.; Szegedy, C. Batch normalization: Accelerating deep network training by reducing internal covariate shift. In Proceedings of the ICML’15: 32nd International Conference on Machine Learning, Lille, France, 6–11 July 2015; Volume 37, pp. 448–456.

37. Yu, N.; Shen, X.; Lin, Z.; Mech, R.; Barnes, C. Learning to Detect Multiple Photographic Defects. In Proceedings of the 2018 IEEE Winter Conference on Applications of Computer Vision (WACV), Lake Tahoe, NV, USA, 12–15 March 2018; Volume 2018, pp. 1387–1396.

38. Jia, Y.; Shelhamer, E.; Donahue, J.; Karayev, S.; Long, J.; Girshick, R.; Guadarrama, S.; Darrell, T. Caffe. In Proceedings of the ACM International Conference on Multimedia—MM ’14, Orlando, FL, USA, 3–7 November 2014; ACM Press: New York, NY, USA, 2014; pp. 675–678.

39. He, K.; Zhang, X.; Ren, S.; Sun, J. Delving Deep into Rectifiers: Surpassing Human-Level Performance on ImageNet Classification. In Proceedings of the IEEE International Conference on Computer Vision (ICCV), Santiago, Chile, 7–13 December 2015; pp. 1026–1034.

40. Bradski, G. The OpenCV Library. Dr. Dobb’s J. Softw. Tools 2000, 25, 120–123.

41. Chen, Y.-S.; Hsu, W.-H. A modified fast parallel algorithm for thinning digital patterns. Pattern Recognit. Lett. 1988, 7, 99–106.

42. Reza, N. Zhang-Suen Thinning Algorithm, Java Implementation. Available online: https://nayefreza.wordpress.com/2013/05/11/zhang-suen-thinning-algorithm-java-implementation/ (accessed on 24 June 2020).

43. Ester, M.; Kriegel, H.-P.; Sander, J.; Xu, X. A density-based algorithm for discovering clusters in large spatial databases with noise. In Proceedings of the KDD’96: Second International Conference on Knowledge Discovery and Data Mining, Portland OR, USA, 2–4 August 1996; Association for the Advancement of Artificial Intelligence: Menlo Park, CA, USA, 1996; pp. 226–231.

44. Jaccard, P. Lois de distribution florale dans la zone alpine. Bull. la Société vaudoise des Sci. Nat. 1902, 38, 72.

45. Hair, J.F.J.; Anderson, R.E.; Tatham, R.L.; Black, W.C. Multivariate Data Analysis, 3rd ed.; Macmillan: New York, NY, USA, 1995.

46. Crosby, J.M.; Twohig, M.P.; Phelps, B.I.; Fornoff, A.; Boie, I.; Mazur-Mosiewicz, A.; Dean, R.S.; Mazur-Mosiewicz, A.; Dean, R.S.; Allen, R.L.; et al. Homoscedasticity. In Encyclopedia of Child Behavior and Development; Springer: Boston, MA, USA, 2011; p. 752.

47. Santos Nobre, J.; da Motta Singer, J. Residual Analysis for Linear Mixed Models. Biom. J. 2007, 49, 863–875.

48. Bland, J.M.; Altman, D.G. Statistical methods for assessing agreement between two methods of clinical measurement. Lancet 1986, 1, 307–310.

49. Koo, T.K.; Li, M.Y. A Guideline of Selecting and Reporting Intraclass Correlation Coefficients for Reliability Research. J. Chiropr. Med. 2016, 15, 155–163.

50. Willmott, C.; Matsuura, K. Advantages of the mean absolute error (MAE) over the root mean square error (RMSE) in assessing average model performance. Clim. Res. 2005, 30, 79–82.

51. Milletari, F.; Navab, N.; Ahmadi, S.-A. V-Net: Fully Convolutional Neural Networks for Volumetric Medical Image Segmentation. In Proceedings of the IEEE 2016 Fourth International Conference on 3D Vision (3DV), Stanford, CA, USA, 25–28 October 2016; pp. 565–571.

52. Lin, T.-Y.; Goyal, P.; Girshick, R.; He, K.; Dollár, P. Focal Loss for Dense Object Detection. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 42, 318–327.

53. Cabeza-Gil, I.; Ruggeri, M.; Chang, Y.-C.; Calvo, B.; Manns, F. Automated segmentation of the ciliary muscle in OCT images using fully convolutional networks. Biomed. Opt. Express 2022, 13, 2810.

54. Xie, S.; Tu, Z. Holistically-Nested Edge Detection. Int. J. Comput. Vis. 2015, 125, 3–18.

55. Hui, B.; Xu, Z.; Luo, H.; Chang, Z. Contour detection using an improved holistically-nested edge detection network. In Proceedings of the Global Intelligence Industry Conference (GIIC 2018), Beijing, China, 21–23 May 2018; Lv, Y., Ed.; SPIE: Bellingham, WA, USA, 2018; Volume 10835, p. 2.

56. Prechelt, L. Early Stopping—But When? In Neural Networks: Tricks of the Trade. Lecture Notes in Computer Science; Orr, G., Müller, K., Eds.; Springer: Berlin/Heidelberg, Germany, 1998; pp. 55–69. ISBN 978-3-540-65311-0.

57. Simard, P.Y.; Steinkraus, D.; Platt, J.C. Best practices for convolutional neural networks applied to visual document analysis. In Proceedings of the Seventh International Conference on Document Analysis and Recognition, Edinburgh, UK, 3–6 August 2003; IEEE Computer Society: Washington, DC, USA, 2003; Volume 1, pp. 958–963.

58. Wong, S.C.; Gatt, A.; Stamatescu, V.; McDonnell, M.D. Understanding Data Augmentation for Classification: When to Warp? In Proceedings of the IEEE 2016 International Conference on Digital Image Computing: Techniques and Applications (DICTA), Gold Coast, Australia, 30 November–2 December 2016; pp. 1–6.

59. Shorten, C.; Khoshgoftaar, T.M. A survey on Image Data Augmentation for Deep Learning. J. Big Data 2019, 6, 60.

60. Chang, Y.-C.; Liu, K.; Cabot, F.; Yoo, S.H.; Ruggeri, M.; Ho, A.; Parel, J.-M.; Manns, F. Variability of manual ciliary muscle segmentation in optical coherence tomography images. Biomed. Opt. Express 2018, 9, 791.

61. Bland, M. Estimating Mean and Standard Deviation from the Sample Size, Three Quartiles, Minimum, and Maximum. Int. J. Stat. Med. Res. 2015, 4, 57–64.

62. Wan, X.; Wang, W.; Liu, J.; Tong, T. Estimating the sample mean and standard deviation from the sample size, median, range and/or interquartile range. BMC Med. Res. Methodol. 2014, 14, 135.

63. Xu, B.Y.; Chiang, M.; Pardeshi, A.A.; Moghimi, S.; Varma, R. Deep Neural Network for Scleral Spur Detection in Anterior Segment OCT Images: The Chinese American Eye Study. Transl. Vis. Sci. Technol. 2020, 9, 18.

64. Buckhurst, H.D.; Gilmartin, B.; Cubbidge, R.P.; Logan, N.S. Measurement of Scleral Thickness in Humans Using Anterior Segment Optical Coherent Tomography. PLoS ONE 2015, 10, e0132902.

65. Choi, H.J.; Lee, S.-M.; Lee, J.Y.; Lee, S.Y.; Kim, M.K.; Wee, W.R. Measurement of anterior scleral curvature using anterior segment OCT. Optom. Vis. Sci. 2014, 91, 793–802.

66. Fu, H.; Baskaran, M.; Xu, Y.; Lin, S.; Wong, D.W.K.; Liu, J.; Tun, T.A.; Mahesh, M.; Perera, S.A.; Aung, T. A Deep Learning System for Automated Angle-Closure Detection in Anterior Segment Optical Coherence Tomography Images. Am. J. Ophthalmol. 2019, 203, 37–45.

67. Xu, B.Y.; Chiang, M.; Chaudhary, S.; Kulkarni, S.; Pardeshi, A.A.; Varma, R. Deep Learning Classifiers for Automated Detection of Gonioscopic Angle Closure Based on Anterior Segment OCT Images. Am. J. Ophthalmol. 2019, 208, 273–280.

| # | Segmentation Class | Color | RGB Value |
|---|---|---|---|
| 1 | Background | ● Black | 0, 0, 0 |
| 2 | Inner scleral border | ○ White | 255, 255, 255 |
| 3 | Upper iris border | ● Cyan | 0, 255, 255 |
| 4 | Outer conjunctiva border | ● Yellow | 255, 255, 0 |
| 5 | Outer ciliary muscle border | ● Red | 255, 0, 0 |
| 6 | Inner ciliary muscle border | ● Green | 0, 255, 0 |
| 7 | Vertical ciliary muscle border | ● Blue | 0, 0, 255 |
| 8 | Ciliary muscle area | ● Dark gray | 160, 160, 160 |
| 9 | Upper contact lens border ¹ | ● Magenta | 240, 0, 240 |
| 10 | Lower contact lens border ¹ | ● Light gray | 240, 240, 240 |
| 11 | Contact lens area ¹ | ● Purple | 160, 0, 160 |
| 12 | Anterior chamber area | ● Sienna | 240, 0, 15 |
| 13 | Air area | ● Lavender | 209, 209, 255 |
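
The color coding in the table above can be used as a lookup when converting a color-coded label image into class numbers. The following is a minimal sketch, assuming NumPy and label images stored as H × W × 3 `uint8` arrays in RGB order; the function name `rgb_to_class_index` and the use of 0 for unlisted colors are illustrative assumptions, not part of the published software.

```python
import numpy as np

# RGB values copied from the segmentation class table; keys are the table's row numbers.
CLASS_COLORS = {
    1: (0, 0, 0),         # Background
    2: (255, 255, 255),   # Inner scleral border
    3: (0, 255, 255),     # Upper iris border
    4: (255, 255, 0),     # Outer conjunctiva border
    5: (255, 0, 0),       # Outer ciliary muscle border
    6: (0, 255, 0),       # Inner ciliary muscle border
    7: (0, 0, 255),       # Vertical ciliary muscle border
    8: (160, 160, 160),   # Ciliary muscle area
    9: (240, 0, 240),     # Upper contact lens border
    10: (240, 240, 240),  # Lower contact lens border
    11: (160, 0, 160),    # Contact lens area
    12: (240, 0, 15),     # Anterior chamber area
    13: (209, 209, 255),  # Air area
}


def rgb_to_class_index(label_rgb: np.ndarray) -> np.ndarray:
    """Map an H x W x 3 RGB label image to an H x W array of class numbers.

    Pixels whose color is not listed in CLASS_COLORS are set to 0
    (a hypothetical "unknown" marker chosen for this sketch).
    """
    class_map = np.zeros(label_rgb.shape[:2], dtype=np.uint8)
    for class_id, rgb in CLASS_COLORS.items():
        matches = np.all(label_rgb == np.array(rgb, dtype=label_rgb.dtype), axis=-1)
        class_map[matches] = class_id
    return class_map
```

An exact color match is used here on purpose: lossy image formats or resampling would alter the RGB values, so label images would need to be stored losslessly for this lookup to work.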

| | Ciloctunet–SW | Ciloctunet–TS | TS–SW |
|---|---|---|---|
| Ciliary muscle apex (n = 130) | | | |
| Difference x, mean ± SD (µm) | −21.82 ± 139.27 | 30.89 ± 148.86 | 46.15 ± 142.43 |
| Difference y, mean ± SD (µm) | −3.66 ± 31.08 | −1.03 ± 37.26 | 2.27 ± 38.00 |
| Absolute difference x, median [Q25, Q75] (µm) | 85.16 [44.53, 147.66] | 101.17 [55.66, 153.71] | 60.16 [24.61, 110.94] |
| Absolute difference y, median [Q25, Q75] (µm) | 18.75 [9.37, 33.40] | 21.68 [11.72, 36.91] | 20.51 [8.79, 38.38] |
| Euclidean distance, median [Q25, Q75] (µm) | 89.38 [54.72, 152.11] | 111.17 [65.69, 156.24] | 71.44 [34.62, 127.54] |
| Scleral spur (n = 131) | | | |
| Difference x, mean ± SD (µm) | 51.68 ± 80.61 | 98.63 ± 103.32 | 48.99 ± 92.32 |
| Difference y, mean ± SD (µm) | −20.16 ± 23.10 | −33.13 ± 31.87 | −14.11 ± 29.62 |
| Absolute difference x, median [Q25, Q75] (µm) | 65.23 [27.34, 114.06] | 94.53 [35.16, 157.03] | 58.59 [25.39, 112.50] |
| Absolute difference y, median [Q25, Q75] (µm) | 19.92 [8.20, 36.62] | 31.05 [15.82, 48.05] | 15.23 [4.98, 30.47] |
| Euclidean distance, median [Q25, Q75] (µm) | 67.44 [29.61, 117.26] | 99.92 [45.94, 173.03] | 60.94 [31.83, 115.23] |
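
The landmark statistics in the table above (signed differences, absolute differences, and Euclidean distances with median and quartiles) can be computed from paired coordinates along the following lines. This is a sketch under stated assumptions: coordinates are given in µm as N × 2 arrays of (x, y) pairs, the function name `landmark_distance_summary` is hypothetical, and `np.percentile` with its default linear interpolation may not reproduce the quartile definition used by the authors' statistics software.

```python
import numpy as np


def landmark_distance_summary(coords_a, coords_b):
    """Summarize the agreement of two sets of paired (x, y) landmark coordinates.

    coords_a, coords_b: array-likes of shape (N, 2), same units (e.g., µm).
    Returns mean signed differences and the median and quartiles of the
    Euclidean distance between paired landmarks.
    """
    a = np.asarray(coords_a, dtype=float)
    b = np.asarray(coords_b, dtype=float)
    diff = a - b                                   # signed differences in x and y
    distances = np.hypot(diff[:, 0], diff[:, 1])   # Euclidean distance per pair
    q25, median, q75 = np.percentile(distances, [25, 50, 75])
    return {
        "mean_dx": float(diff[:, 0].mean()),
        "mean_dy": float(diff[:, 1].mean()),
        "median_distance": float(median),
        "q25_distance": float(q25),
        "q75_distance": float(q75),
    }
```

The median and quartiles are reported for the distances because a distance cannot be negative, so its distribution is skewed and a mean ± SD summary would be less informative.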

| Fixed Effect | PA (n = 380, R²adj = 0.73): F-Statistic | PA: p-Value | CMA (n = 380, R²adj = 0.54): F-Statistic | CMA: p-Value |
|---|---|---|---|---|
| Distance | F (1, 356.17) = 71.2319 | <0.0001 | F (1, 356.47) = 27.6147 | <0.0001 |
| Segmenter | F (2, 356.00) = 0.9343 | 0.3938 | F (2, 355.99) = 30.6360 | <0.0001 |
| Session | F (1, 356.28) = 0.0003 | 0.9852 | F (1, 356.77) = 2.0181 | 0.1563 |
| Distance × Segmenter | F (2, 356.00) = 0.5168 | 0.5969 | F (2, 356.00) = 2.3787 | 0.0941 |
| Distance × Session | F (1, 356.27) = 1.6976 | 0.1934 | F (1, 356.75) = 1.3051 | 0.2541 |
| Segmenter × Session | F (2, 356.00) = 0.6444 | 0.5256 | F (2, 355.99) = 0.8143 | 0.4438 |
| Distance × Segmenter × Session | F (2, 356.00) = 0.0712 | 0.9313 | F (2, 356.01) = 0.3828 | 0.6823 |

| Comparison | Mean Diff. ± SD | df ᵃ | t-Value ᵃ | p-Value ᵃ | Limits of Agreement | ICC ᵇ,ᵈ [95% CI] | ICC ᵇ,ᶜ,ᵈ [95% CI] |
|---|---|---|---|---|---|---|---|
| Perpendicular axis (µm) | | | | | | | |
| Ciloctunet–SW | 5.35 ± 67.64 | 119 | 0.8668 | 0.3878 | [−127.21, 137.92] | 0.85 [0.79, 0.90] | 0.93 [0.90, 0.95] |
| Ciloctunet–TS | −3.80 ± 77.10 | 122 | −0.5473 | 0.0585 | [−154.93, 147.32] | 0.82 [0.74, 0.87] | 0.90 [0.86, 0.93] |
| SW–TS | −9.60 ± 57.11 | 124 | −1.8793 | 0.0626 | [−121.54, 102.34] | 0.91 [0.88, 0.94] | 0.91 [0.88, 0.94] |
| Ciloctunet, Session 1–2 | 13.23 ± 84.07 | 49 | 1.1132 | 0.2711 | [−151.54, 178.01] | 0.70 [0.47, 0.83] | 0.93 [0.87, 0.96] |
| Ciliary muscle area (mm²) | | | | | | | |
| Ciloctunet–SW | −0.08 ± 0.19 | 119 | −4.8045 | <0.0001 | [−0.45, 0.29] | 0.65 [0.45, 0.77] | 0.71 [0.44, 0.83] |
| Ciloctunet–TS | −0.14 ± 0.19 | 122 | −7.8459 | <0.0001 | [−0.51, 0.24] | 0.58 [0.19, 0.76] | 0.58 [0.19, 0.76] |
| SW–TS | −0.06 ± 0.21 | 124 | −3.2455 | 0.0015 | [−0.47, 0.35] | 0.63 [0.47, 0.74] | 0.67 [0.53, 0.77] |
| Ciloctunet, Session 1–2 | 0.00 ± 0.17 | 49 | −0.1058 | 0.9162 | [−0.34, 0.33] | 0.75 [0.57, 0.86] | 0.75 [0.57, 0.86] |
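
The limits of agreement in the table above appear consistent with the usual Bland–Altman definition, mean difference ± 1.96 × SD of the differences. The sketch below recomputes them from the tabulated summary values. The function names are hypothetical, and the t-statistic helper assumes a paired t-test on the differences with n = df + 1, which the table does not state explicitly.

```python
import math


def limits_of_agreement(mean_diff, sd_diff, z=1.96):
    """Bland-Altman 95% limits of agreement: mean difference +/- z * SD."""
    half_width = z * sd_diff
    return mean_diff - half_width, mean_diff + half_width


def paired_t_statistic(mean_diff, sd_diff, n):
    """t-statistic of a paired t-test (assumed here): mean / (SD / sqrt(n))."""
    return mean_diff / (sd_diff / math.sqrt(n))


# Ciloctunet-SW, perpendicular axis (values taken from the table, in µm)
lower, upper = limits_of_agreement(5.35, 67.64)
t_value = paired_t_statistic(5.35, 67.64, n=119 + 1)
```

For the Ciloctunet–SW row this gives limits of roughly −127.22 and 137.92 µm and a t-value of about 0.866, close to the tabulated [−127.21, 137.92] and 0.8668. The small deviations are expected because the inputs used here are already rounded.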

Euclidean distance between absolute scleral spur coordinates (µm)

| Study | Inter-Examiner Difference: Mean/Median | Inter-Examiner Difference: SD | Inter-Examiner Difference: IQR | CNN–Examiner Difference: Mean/Median | CNN–Examiner Difference: SD | CNN–Examiner Difference: IQR |
|---|---|---|---|---|---|---|
| Current study | 60.94 | | 83.40 | SW: 67.44; TS: 99.92 | | SW: 87.65; TS: 83.40 |
| Chang et al. (2018) [60] | 122 | | | | | |
| Xu et al. (2020) [63] | 97.34 | 73.29 | 98.87 ¹ | 73.08 | 52.06 | 70.23 ¹ |

Pointwise ciliary muscle thickness difference: CMTMAX/PA mean (µm)

| Study | Inter-Examiner Difference: Mean | Inter-Examiner Difference: SD | CNN–Examiner Difference: Mean | CNN–Examiner Difference: SD |
|---|---|---|---|---|
| Current study | −9.60 | 57.11 | SW: 5.35; TS: −3.80 | SW: 67.64; TS: 77.10 |
| Chang et al. (2018) [60] | Relaxed: 20; Accommodated: 25 | Relaxed: 69.57 ²,³; Accommodated: 34.79 ²,³ | | |
| Cabeza-Gil et al. (2022) [53] | | | 1.2 | 23.72 ⁴ |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Straßer, T.; Wagner, S. Performance of the Deep Neural Network Ciloctunet, Integrated with Open-Source Software for Ciliary Muscle Segmentation in Anterior Segment OCT Images, Is on Par with Experienced Examiners. Diagnostics 2022, 12, 3055. https://doi.org/10.3390/diagnostics12123055
