Abstract

Thoracic diseases refer to disorders that affect the lungs, heart, and other parts of the rib cage, such as pneumonia, novel coronavirus disease (COVID-19), tuberculosis, cardiomegaly, and fracture. Millions of people die every year from thoracic diseases. Therefore, early detection of these diseases is essential and can save many lives. Earlier, only highly experienced radiologists examined thoracic diseases, but recent developments in image processing and deep learning techniques are opening the door for the automated detection of these diseases. In this paper, we present a comprehensive review including: types of thoracic diseases; examination types of thoracic images; image pre-processing; models of deep learning applied to the detection of thoracic diseases (e.g., pneumonia, COVID-19, edema, fibrosis, tuberculosis, chronic obstructive pulmonary disease (COPD), and lung cancer); transfer learning background knowledge; ensemble learning; and future initiatives for improving the efficacy of deep learning models in applications that detect thoracic diseases. Through this survey paper, researchers may be able to gain an overall and systematic knowledge of deep learning applications in medical thoracic images. The review investigates a performance comparison of various models and a comparison of various datasets.

1. Introduction

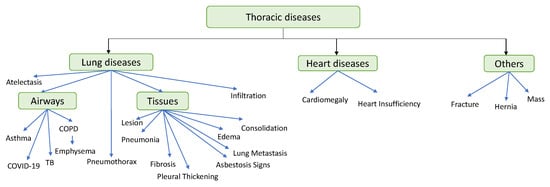

Thoracic diseases are diseases of the organs within the rib cage, including heart and lung diseases. Lung diseases result in hypoxia and dyspnea. Furthermore, some diseases may cause the failure of the entire respiratory system and thus lead to death [1], such as the novel coronavirus disease (COVID-19), which emerged recently and became a pandemic that poses a threat to the entire world. There are several types of thoracic diseases [2,3], which can be grouped as follows: (i) diseases that affect the airways or lungs, such as asthma, COVID-19, tuberculosis (TB), and chronic obstructive pulmonary disease (COPD); (ii) diseases that affect the heart, such as cardiomegaly and heart failure; (iii) other diseases affecting bones and muscles in the chest, such as fracture and lung metastasis. The World Health Organization (WHO) has classified pneumonia as the third-deadliest disease in the world after heart disease and cerebral palsy. In 2019, pneumonia caused 2.5 million deaths around the world [4] and accounted for 14% of all deaths of children under five years old, killing 672,000 children [5]. In addition, COVID-19 has caused more than 6.5 million deaths around the world since its emergence in 2019 [6]. Tuberculosis (TB) resulted in the death of approximately 1.5 million people in 2020.

Early detection refers to detecting symptomatic patients as early as possible; detection refers to discovering something that was hidden or disguised; and diagnosis refers to the identification of the nature and cause of an illness. Human health is at serious risk due to thoracic diseases, and early detection of these diseases improves recovery and reduces mortality. A patient’s diagnosis rests solely on the consultation provided by a thoracic expert or radiologist. However, there may be emergency situations where radiology professionals are too busy, unavailable, or unable to diagnose a large number of thoracic images rapidly [7,8]. Artificial intelligence (AI) systems can be extremely useful in this regard [9].

AI is used to analyze, display, and understand complex medical and health data. Artificial intelligence is the ability of computer algorithms to approximate conclusions based solely on input data. The major goal of health-related AI applications is to figure out how clinical procedures affect patient outcomes. AI systems are employed in diagnostics, treatment protocol creation, drug research, personalized medicine, patient monitoring, and care. What distinguishes AI technology from traditional healthcare solutions is its ability to collect and process data and deliver a specific and fast result [10]. Artificial intelligence does this through the use of machine learning (ML) and deep learning (DL) algorithms. These algorithms are able to recognize patterns of behavior and develop their own logic.

ML allows software applications to become more accurate at predicting outcomes without being explicitly programmed to do so. Machine learning algorithms use historical data as input to predict new output values. There are four basic approaches to ML: supervised learning, unsupervised learning, semi-supervised learning, and reinforcement learning [11]. The type of algorithm that data scientists choose depends on the type of data they want to predict. The learning techniques can be summarized as follows:

- Supervised learning: data scientists supply algorithms with labeled training data and define the variables they want the algorithm to assess for correlations. Both the input and the output of the algorithm are specified. Some of the most common algorithms in supervised learning include Support Vector Machines (SVM), Decision Trees, and Random Forest;

- Unsupervised learning: involves algorithms that train on unlabeled data. The algorithm scans through datasets looking for any meaningful connection. The data that the algorithms train on as well as the predictions or recommendations they output are predetermined;

- Semi-supervised learning: occurs when part of the given input data has been labeled. Unsupervised and semi-supervised learning can be more appealing alternatives as it can be time-consuming and costly to rely on domain expertise to label data appropriately for supervised learning;

- Reinforcement learning: data scientists typically use reinforcement learning to teach a machine to complete a multi-step process for which there are clearly defined rules. Data scientists program an algorithm to complete a task and give it positive or negative cues as it works out how to complete a task. However, for the most part, the algorithm decides on its own what steps to take along the way.
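The difference between the first two approaches can be illustrated with a minimal NumPy sketch. It is not taken from any of the surveyed works: the two-dimensional synthetic points stand in for features extracted from images, and the nearest-centroid classifier and the small k-means routine (`fit_nearest_centroid`, `fit_kmeans`) are illustrative choices. The supervised model uses the labels to place one centroid per class, whereas the unsupervised model has to discover the two groups without labels.

```python
import numpy as np

rng = np.random.default_rng(0)

# Two synthetic, well-separated 2-D clusters standing in for extracted image features.
class0 = rng.normal(loc=[0.0, 0.0], scale=0.5, size=(50, 2))
class1 = rng.normal(loc=[4.0, 4.0], scale=0.5, size=(50, 2))
X = np.vstack([class0, class1])
y = np.array([0] * 50 + [1] * 50)  # labels, used only by the supervised model


def fit_nearest_centroid(X, y):
    """Supervised: use the labels to compute one centroid per class."""
    return np.stack([X[y == c].mean(axis=0) for c in np.unique(y)])


def assign_to_nearest(X, centroids):
    """Assign each sample to the index of its closest centroid."""
    distances = np.linalg.norm(X[:, None, :] - centroids[None, :, :], axis=2)
    return distances.argmin(axis=1)


def fit_kmeans(X, k, n_iter=20, seed=0):
    """Unsupervised: find k centroids without ever seeing the labels."""
    local_rng = np.random.default_rng(seed)
    centroids = X[local_rng.choice(len(X), size=k, replace=False)]
    for _ in range(n_iter):
        assignment = assign_to_nearest(X, centroids)
        # Keep the old centroid if a cluster happens to be empty.
        centroids = np.stack([
            X[assignment == j].mean(axis=0) if np.any(assignment == j) else centroids[j]
            for j in range(k)
        ])
    return centroids


supervised_centroids = fit_nearest_centroid(X, y)
supervised_accuracy = (assign_to_nearest(X, supervised_centroids) == y).mean()

clusters = assign_to_nearest(X, fit_kmeans(X, k=2))
# Cluster ids are arbitrary, so score the better of the two possible matchings.
cluster_agreement = max((clusters == y).mean(), (clusters != y).mean())
```

On data this cleanly separated, both approaches recover the same grouping; on real thoracic images the gap between them depends heavily on the quality of the features and labels.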

Table 1 illustrates a simplified summary of the four types of ML approaches.

Table 1.

ML approaches summary.

Machine learning models are trained using large amounts of input data in order to provide relevant insights and predictions. Currently, several datasets of thoracic images for different thoracic diseases are publicly available. The doctor’s efficiency can be improved by AI systems, especially through DL.

AI is now widely applied in a variety of sectors, including medicine and the rapid detection of diseases. AI made a key contribution to developing a Coronavirus vaccine in record time [12]. In South Korea, artificial intelligence assisted doctors in learning the statistics of affected persons, allowing them to predict the Coronavirus outbreak at the start of the crisis. At a time when many governments around the world were still considering the idea of imposing a blanket closure owing to the pandemic, a business in Seoul (Seegene) employed artificial intelligence to develop tests to detect the Coronavirus in weeks, whereas it would have taken months without it [13]. “People thought the delta mutant would spread across the African continent, clogging hospitals with patients, but with AI, we can control it,” explains a South African AI expert. Artificial intelligence has aided scientists in gaining a better understanding of how quickly the virus can change, as well as in developing and testing vaccines against the Coronavirus. As indicated in this paper, many studies have demonstrated the value of AI algorithms in detecting specific types of diseases with great accuracy. The initial use of AI in the face of a health catastrophe is almost certainly to assist researchers in developing a vaccine that will protect caretakers while also containing the pandemic. Biomedicine and research rely on a wide range of methodologies, many of which have long been aided by computer science and statistics applications. As a result, AI is a component of this continuity [14]. Scientists have already avoided months of experimentation by using AI to forecast the virus structure. Even if it is constrained due to so-called “continuous” rules and infinite combinatorics for the study of protein folding, AI appears to have provided important support in this regard.
Moderna, an American start-up, has made a name for itself by mastering biotechnology based on messenger ribonucleic acid (mRNA), which necessitates the study of protein folding. With the use of bioinformatics, of which AI is a key part, it was able to dramatically cut the time it took to build a prototype vaccine that could be tested on humans. In February 2020, Baidu, a Chinese technological powerhouse, released its Linearfold prediction algorithm in collaboration with Oregon State University and the University of Rochester to research the same protein folding. This method predicts the secondary structure of a virus’ ribonucleic acid (RNA) significantly faster than standard algorithms, giving scientists more knowledge on how viruses spread. Linearfold could have predicted the secondary structure of COVID-19’s RNA sequence in 27 s instead of 55 min. DeepMind, a subsidiary of Alphabet, the parent company of Google, has also shared its coronavirus protein structure predictions with its AlphaFold AI system. IBM, Amazon, Google, and Microsoft have also offered their servers’ computing power to the US government for the processing of very big datasets in epidemiology, bioinformatics, and molecular modeling.

Thus, an intelligent and automatic system is required to diagnose the hidden patterns in clinical data. Using image classification and detection techniques, DL models have provided advanced digital imaging applications for faster disease detection [15]. DL is based on extracting features from raw data using multiple layers to recognize different parts of the input data [16]. DL provides guidance to doctors and other researchers on how to automatically detect diseases [17]. However, DL requires a large amount of data to perform better. Therefore, images will be crucial data for detecting diseases [18].

Deep learning use cases in healthcare include medical imaging, data analytics, personalized medical treatments, drug discovery, genomics analysis, and mental health research. Among the recent work based on deep learning are the detection of acute lymphoblastic leukemia [19,20,21,22,23,24], chest disease [25], and Alzheimer’s disease [26,27,28].

Although AI has made an effective contribution to the medical field, it has some limitations [29], such as:

- Cost (analyzing data will be very costly both in terms of energy and hardware use);

- These technologies are still developing very rapidly, so experts in this field are needed to work with them;

- These technologies are not widely available in the healthcare sector;

- Security needs to be integral in the AI process.

The primary contributions of this article are as follows:

- It provides a comprehensive overview of the use of AI in detecting thoracic diseases, including COVID-19;

- It presents the different types of AI models that are used to detect thoracic diseases and the databases that include those diseases, as well as the progress of the work and the direction in which researchers have been moving in this domain in recent years;

- It shows that CNNs have penetrated the field of medical image understanding with high accuracy;

- It collects many different databases for thoracic diseases with descriptions;

- It also presents the issues of thoracic disease detection using deep learning found in the literature.

2. Methodology

The methodology used to conduct the survey of recent thoracic disease detection using DL/ML models is as follows. First, an appropriate dataset containing a large number of images that includes related diseases must be selected. Second, the DL/ML algorithms that are applied to detect related diseases must be identified. Both steps are described in detail in the next section. In the last stage, the performance of the model used in the detection of the disease is determined.
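As an illustration of the last stage, the following minimal sketch computes several measures that are commonly reported for binary disease detection from a confusion matrix. The choice of metrics, the function name `binary_metrics`, and the toy labels are assumptions made for illustration; they are not results from any surveyed study.

```python
import numpy as np


def binary_metrics(y_true, y_pred):
    """Compute confusion-matrix metrics for labels where 1 = disease, 0 = normal."""
    y_true = np.asarray(y_true).astype(bool)
    y_pred = np.asarray(y_pred).astype(bool)
    tp = int(np.sum(y_true & y_pred))    # diseased, predicted diseased
    tn = int(np.sum(~y_true & ~y_pred))  # normal, predicted normal
    fp = int(np.sum(~y_true & y_pred))   # normal, predicted diseased
    fn = int(np.sum(y_true & ~y_pred))   # diseased, predicted normal

    def safe_div(numerator, denominator):
        # Return 0.0 instead of failing when a class is absent.
        return numerator / denominator if denominator else 0.0

    return {
        "accuracy": safe_div(tp + tn, tp + tn + fp + fn),
        "sensitivity": safe_div(tp, tp + fn),  # also called recall
        "specificity": safe_div(tn, tn + fp),
        "precision": safe_div(tp, tp + fp),
    }


# Toy example: 4 diseased and 6 normal cases (hypothetical labels).
y_true = [1, 1, 1, 1, 0, 0, 0, 0, 0, 0]
y_pred = [1, 1, 1, 0, 0, 0, 0, 0, 1, 1]
metrics = binary_metrics(y_true, y_pred)
```

Reporting sensitivity and specificity alongside accuracy matters for medical datasets, because accuracy alone can look high when one class dominates.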

3. The Taxonomy of State-of-the-Art Work on Thoracic Diseases Detection Using DL/ML

In this section, a taxonomy of the recent work on thoracic diseases detection using DL/ML is presented, which is the first contribution of this paper.

The taxonomy consists of 9 traits that are common in the surveyed articles: image type, dataset description, image pre-processing, deep learning models, ways to train deep learning, the ensemble of classifiers, pre-trained models, type of disease, and evaluation criteria.

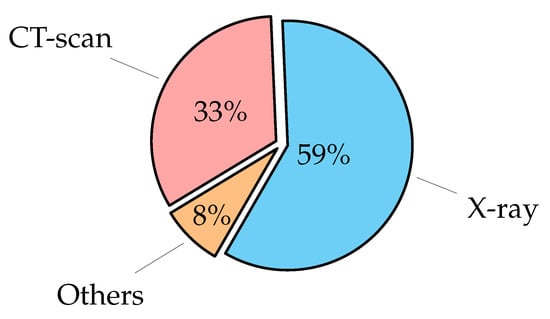

3.1. Imaging Thoracic Exams

Medical and health protocols recommend thoracic imaging because it is a rapid and painless technique. Infected patients, including children, adults, and the elderly, are now being assessed using image scans. Imaging systems that rely on AI technologies are provided with thousands of medical images so that these systems can identify abnormal masses that could indicate the onset of disease. These systems are then able to identify a specific area on the radiograph for the doctor to examine with greater accuracy, thus integrating AI techniques with the doctors’ efforts [30]. Some thoracic diseases require radiological images (such as X-ray or CT scans) to be detected. Others require examination of the tissues themselves to confirm the presence of the disease. The most common examination types used to diagnose thoracic diseases [31] are:

- Chest X-ray (CXR): can be used to check for diseases such as pneumonia [32] and a lung infection that causes fluid buildup [33]. It can also be used to detect cancer or pulmonary fibrosis, which is a scar tissue buildup in the lungs. CXR scans are commonly used in clinical practice since they are inexpensive, simple to perform, give a quick scan for the patient as two-dimensional (2D) images, and can be widely used for diagnosis and treatment of lung and cardiovascular diseases [34,35]. Although X-rays are frequently used, they have side effects such as exposure to ionizing radiation harmful to the human body and relatively low information when compared to other imaging methods;

- Computerized Tomography (CT): is a more advanced imaging test that can be used to detect disorders such as cancer that an X-ray could miss [36,37,38,39]. A CT scan is a series of X-rays taken from various angles that are patched together to create a complete image. While CT scans are more reliable in diagnosing COVID-19, they are less accurate in diagnosing non-viral pneumonia-like consolidation [40]. CT scans are quick and provide very accurate spatial information, but their disadvantages are a high risk of radiation exposure and the need for expensive equipment, so they are not always accessible to all patients;

- Histopathology: often known as histology, is the microscopic examination of organic tissues in order to observe the appearance of diseased cells [41]. The tissue that was sent for testing, as well as the characteristics of the tumor under the microscope is described in a histopathology report [42]. A biopsy report or a pathology report are both terms used to describe a histopathology report. It can identify features of what cancer looks like under the microscope, or detect cardiomegaly disease [43]. Histology examination is low cost and allows an evaluation of infection distribution in various tissues. However, it needs 2–7 days of preparation time, might not detect low-level infection, and it depends on the expertise of pathologists;

- Sputum Smear Microscopy: refers to the microscopic investigation of sputum [44]. This has been proved to be one of the most effective ways of detecting tuberculosis infection in patients so that treatment can begin [45]. In some cases, both a chest X-ray and a sputum sample are needed to find out if a person has tuberculosis [46]. In poor and middle-income countries, sputum smear microscopy has been the major approach for diagnosing pulmonary tuberculosis [47]. Sputum smear microscopy is a long-established and inexpensive examination and is used for the follow-up of patients on treatment. However, it is cumbersome for laboratory staff and patients and needs two samples;

- Magnetic Resonance Imaging (MRI): is a type of scan that uses powerful magnetic fields and radio waves to provide detailed images of the inside of the body. An MRI scanner is a huge tube with powerful magnets within. During the scan, the patient lies inside the tube. MRI scans can be used to investigate practically any region of the body, including the brain, breast, and heart [48]. MRI has the advantages of being a 3D technique and of being safer (no ionizing radiation), and it offers excellent soft-tissue contrast. However, it has long total scan times (30–75 min), is not as readily accessible, and can be claustrophobic (enclosed space).

3.2. Dataset Description

Neural network models have a large number of trainable parameters, so training them necessitates very large datasets. Several publicly available open-source datasets of thoracic images are reported in Table 2. Some of the datasets contain images of multiple thoracic diseases, such as the National Institute of Health (NIH) dataset [49], ChestX-ray8 [49], Chowdhury’s Kaggle [50], COVID-19 Image Data Collection [51], PadChest [52], CheXpert [53], COVIDx Dataset [54], COVID-19 Radiography Database [55], and the MIMIC dataset [56].

Other datasets contain only images of one disease, such as Andrew’s Kaggle dataset, JSRT [57], Optical Coherence Tomography (OCT) and Chest X-ray Images [58], RSNA Pneumonia Detection Challenge Dataset [59], RIH-CXR [60], NCI Genomic Data Commons [61], COVID Chest X-ray Dataset, ImageCLEF [62], ChestX-ray images (Pneumonia) [58], Montgomery and Shenzhen datasets [63], COVID-CT Dataset [64], Autofocus database [65], Sajid’s Kaggle dataset, LDOCTCXR [66], Sunnybrook Cardiac MRI dataset [67], CPTAC-LUAD dataset [68], and the LIDC-IDRI dataset [69].

Together, these datasets cover about 32 different disease labels, which can be listed as follows: Pneumonia, Viral Pneumonia, Bacterial Pneumonia, Atypical Pneumonia, COVID-19, Edema, Consolidation, Atelectasis, Lesion, Asbestosis Signs, Cardiomegaly, Enlarged Cardiomediastinum, Heart Insufficiency, Pleural Thickening, Pneumothorax, Fracture, Lung Metastasis, Mass, Hernia, Effusion, Nodule, Emphysema, Fibrosis, COPD signs, Tuberculosis, Tuberculosis Sequelae, Post Radiotherapy Changes, Pulmonary Hypertension, Respiratory Distress, Lymphangitis Carcinomatosa, Infiltration, and Lepidic Adenocarcinoma. The majority of the available datasets are CXR images, as shown in Table 2.

Table 2.

Public datasets used by contributions to deep learning applications in pulmonary medical imaging analysis.

| Name of Dataset/Ref. & Download Link | Dataset Classes | Image Type | Dataset Description |
|---|---|---|---|
| ChestX-ray8 [49,70] | 8 thoracic diseases and a normal case. Disease labels are Atelectasis, Cardiomegaly, Effusion, Infiltration, Mass, Nodule, Pneumonia, and Pneumothorax. | X-ray | 108,948 frontal images in PNG format with a resolution of 1024 × 1024, from 30,805 patients. |
| ChestX-ray14 [49,70] | 14 thoracic diseases and a normal case. Disease labels are Edema, Cardiomegaly, Effusion, Infiltration, Mass, Nodule, Pneumonia, Pneumothorax, Atelectasis, Hernia, Pleural thickening, Emphysema, Fibrosis, and Consolidation. | X-ray | 112,120 total images in PNG format from 32,717 patients. Image resolution 1024 × 1024. |
| ImageCLEF 2019 [39,71] | Tuberculosis | CT | 335 images in PNG format for 218 patients, with a set of clinically relevant metadata. Image size 512 × 512 pixels. |
| ImageCLEF 2020 [62,72] | Tuberculosis | CT | 403 images in PNG format, 512 × 512 pixels. |
| JSRT dataset [57,73] | Normal and Lung Nodules | CT and X-ray | 93 normal and 154 nodule images in PNG format, with metadata. Image size 2048 × 2048 pixels. |
| Montgomery dataset [63,74] | Tuberculosis and Normal | X-ray | 138 TB images and 80 normal images in PNG format with metadata. Image size is either 4020 × 4892 or 4892 × 4020 pixels. |
| Autofocus database [65,75] | Tuberculosis | Sputum Smear Microscopy | 1200 images with a resolution of 2816 × 2112 pixels. |
| Andrew’s Kaggle Database [76] | COVID-19 | CT and X-ray | 16 CT images and 79 X-ray images in JPEG format with different image sizes. |
| Chowdhury’s Kaggle dataset [50,77] | COVID-19, Pneumonia, and Normal | X-ray | 1341 Normal, 219 COVID-19, and 1345 Pneumonia images in PNG format. |
| Optical Coherence Tomography (OCT) and Chest X-ray Images [58,78] | Normal and Pneumonia | X-ray and CT | 5856 images, 1583 normal and 4273 pneumonia images in JPEG format with different image sizes. Bacterial Pneumonia, Viral Pneumonia, and COVID-19 are all represented in the Pneumonia class. |
| Shenzhen dataset [63,79] | Tuberculosis and normal | X-ray | 662 frontal images; 326 Normal and 336 TB. Images are in PNG format with different sizes of about 3000 × 3000. |
| CheXpert [53,80] | 18 different disease labels: Atelectasis, Consolidation, Infiltration, Pneumothorax, Edema, Emphysema, Fibrosis, Effusion, Pneumonia, Pleural Thickening, Cardiomegaly, Nodule, Mass, Hernia, Lung Lesion, Fracture, Lung Opacity, and Enlarged Cardiomediastinum | X-ray | 224,316 images in PNG and JPG format from 65,240 patients with both frontal and lateral views, with different image sizes. |
| RSNA Dataset [59] | Pneumonia and Normal | X-ray | 5863 images in JPEG format with different image sizes. |
| PadChest [52,81] | 16 different disease labels: Pulmonary Fibrosis, COPD signs, Pulmonary Hypertension, Pneumonia, Heart Insufficiency, Pulmonary Edema, Emphysema, Tuberculosis, Tuberculosis Sequelae, Lung Metastasis, Post Radiotherapy Changes, Atypical Pneumonia, Respiratory Distress, Asbestosis Signs, Lymphangitis Carcinomatosa, and Lepidic Adenocarcinoma | X-ray | 160,868 images in PNG format with different image sizes from 67,625 patients and 206,222 reports. |
| NCI Genomic Data Commons [61,82] | Lung Cancer | Histopathology | More than 575,000 images with size 512 × 512. |
| Covid Chest X-ray database [83] | COVID-19 | X-ray | 231 COVID-19 images in JPEG format with different image sizes; contains metadata. |
| RIH-CXR [60] | Normal and Abnormal | X-ray | 17,202 frontal images; 9030 Normal and 8172 abnormal images from 14,471 patients. It also contains metadata. |
| Sajid’s Kaggle database [84] | Normal and COVID-19 | X-ray | 28 Normal and 70 COVID-19 images in JPEG, JPG, and PNG format with different image sizes. |
| Covid-19 Radiography Database [55,77] | Normal, COVID-19, Lung Opacity, and Viral Pneumonia | X-ray | 10,200 Normal, 3616 COVID-19, 6012 Lung Opacity, and 1345 Viral Pneumonia. 299 × 299 pixel images in PNG format. The dataset contains metadata. |
| ChestX-ray images (Pneumonia) [58,85] | Normal and Pneumonia | X-ray | 5232 chest X-ray images from children. 3883 pneumonia (2538 bacterial and 1345 Viral) and 1349 normal, from a total of 5856 patients to train a model and then tested with 234 normal and 390 Pneumonia from 624 patients. The images are in JPEG format with different sizes. |
| COVID-CT database [64,86] | Normal and COVID-19 | CT | 15,589 images for normal and 48,260 images for COVID-19 in DICOM format with 512 × 512 pixels. |
| COVID-19 Image Data Collection [51,87] | 4 classes: COVID-19, Viral Pneumonia, Bacterial Pneumonia, and Normal | X-ray | It contains 306 images, 79 images for normal, 69 images for COVID-19, 79 images for Bacterial Pneumonia, and 79 images for Viral Pneumonia in JPG format with different sizes. It also contains metadata. |
| LIDC-IDRI [69,88] | Lung Cancer | CT | It contains 1018 images from 1010 patients. It also contains metadata. |
| LDOCTCXR [66,78] | Normal and Pneumonia | X-ray | 3883 Pneumonia and 1349 Normal images. |
| COVIDx Dataset [54,89] | Pneumonia, Normal, and COVID-19 | X-ray | 5559 Pneumonia, 8066 Normal, and 573 COVID-19 images. |
| CPTAC-LUAD Dataset [68,90] | Lung Cancer | MRI, CT, and X-ray | 43,420 images in DICOM format. |
| Sunnybrook Cardiac MRI [67,91] | Heart Disease | MRI | The SCD has 45 MRI images from a combination of patients in the following classes: healthy, hypertrophy, heart failure with infarction, and heart failure without infarction. The image resolution is 255 × 255. |
| MIMIC-CXR Dataset [56,92] | Chest radiograph | X-ray | 377,110 chest radiographs with 227,835 radiology reports in DICOM format. The size of the chest radiographs varies and is around 3000 × 3000 pixels. |

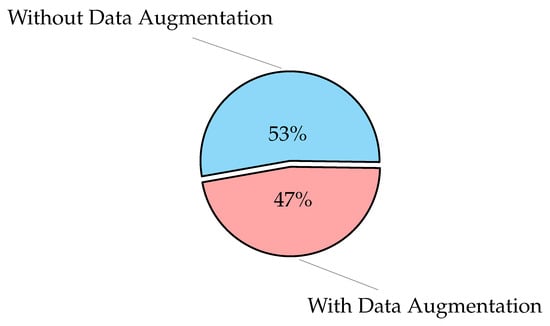

3.3. Image Pre-Processing

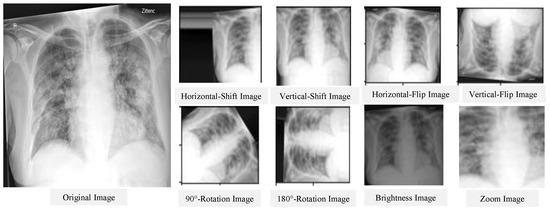

The main goal of image pre-processing and segmentation is to enhance the quality of the input image and reduce the amount of noise. Images must match the network’s input size in order to train a network model and make predictions on new data; images of a different size can be re-scaled or cropped to the desired size. Applying random augmentation to the data effectively increases the amount of training data [93]. Image augmentation creates many variations from the original images. The augmentation process may include cropping, flipping, brightness, saturation, and contrast adjustment, rotation, scaling, translation, zooming, and/or adding noise, as shown in Figure 1. The figure illustrates different variations of the input X-ray image, including horizontal and vertical shift, horizontal and vertical flip, rotation, brightness, and zoom, produced using the Keras ImageDataGenerator method. As an example of data augmentation pre-processing, in [94], the authors used data augmentation to diagnose pneumonia and achieved an accuracy of 96.61%. For image classification tasks, in terms of training loss, accuracy, and validation loss, a deep learning model with image augmentation typically outperforms a deep learning model without it. Augmentation can also mitigate the problem of imbalanced classes in binary classification [95]: when training a binary classification model, the resulting model will be biased if one class has more samples than the other. Other, more advanced methods are used to handle imbalanced datasets, such as the synthetic minority oversampling technique (SMOTE) [96], generative adversarial networks (GANs) [97,98], and the adaptive synthetic sampling method (ADASYN) [99]. In [98], the authors used a GAN-based method to detect lung cancer and achieved an accuracy of 99.86%.

Image segmentation is the process of splitting a digital image into several regions or objects, each of which is made up of sets of pixels with similar features or attributes that are labeled differently to represent distinct regions or objects. It is used to detect patterns in images and retrieve information from them. The purpose of segmentation is to make an image more understandable and easier to evaluate by simplifying and/or changing its representation, as in [100], which achieved an AUC of 97.7% for segmentation. In [101], the authors achieved an accuracy of 96.47% without segmentation and 98.6% with segmentation.
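The use of augmentation to balance classes can be sketched as follows. This is a NumPy-only illustration rather than the Keras ImageDataGenerator pipeline used for Figure 1: the helper names `augment` and `balance_by_augmentation`, the transform ranges, and the random arrays standing in for grayscale X-ray images are all assumptions. Each minority-class image is randomly flipped, shifted, brightness-scaled, and perturbed with noise until every class has as many samples as the largest one.

```python
import numpy as np


def augment(image, rng):
    """Return one randomly transformed copy of a 2-D grayscale image in [0, 1]."""
    out = image.copy()
    if rng.random() < 0.5:
        out = np.fliplr(out)                             # horizontal flip
    shift = int(rng.integers(-4, 5))
    out = np.roll(out, shift, axis=1)                    # horizontal shift (wraps around)
    out = out * rng.uniform(0.8, 1.2)                    # brightness change
    out = out + rng.normal(0.0, 0.02, size=out.shape)    # additive noise
    return np.clip(out, 0.0, 1.0)


def balance_by_augmentation(images, labels, seed=0):
    """Add augmented copies of under-represented classes until all classes match."""
    rng = np.random.default_rng(seed)
    images = np.asarray(images, dtype=float)
    labels = np.asarray(labels)
    classes, counts = np.unique(labels, return_counts=True)
    target = counts.max()
    all_images, all_labels = [images], [labels]
    for cls, count in zip(classes, counts):
        pool = images[labels == cls]
        extra = [augment(pool[rng.integers(len(pool))], rng)
                 for _ in range(target - count)]
        if extra:
            all_images.append(np.stack(extra))
            all_labels.append(np.full(len(extra), cls))
    return np.concatenate(all_images), np.concatenate(all_labels)


# Hypothetical imbalanced set: 10 "normal" images and 2 "disease" images.
data_rng = np.random.default_rng(42)
images = data_rng.random((12, 32, 32))
labels = np.array([0] * 10 + [1] * 2)
balanced_images, balanced_labels = balance_by_augmentation(images, labels)
```

Augmented copies remain derived from the same few originals, so this kind of balancing reduces class bias but does not replace collecting more minority-class images.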

Figure 1.

Results of the data augmentation procedure on a certain CXR image.

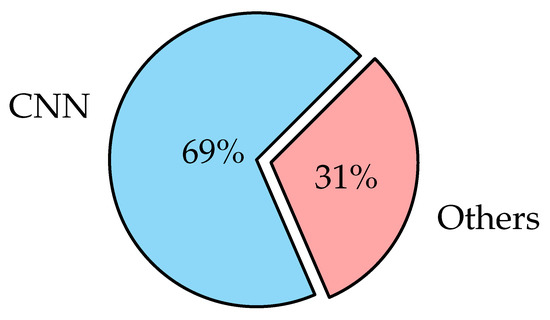

3.4. Deep Learning Models

Deep learning has become very popular in the field of scientific computing because its algorithms are widely used to solve complex problems in medical applications. Deep learning algorithms employ several types of neural networks to perform specific tasks such as speech recognition, image recognition, data compression, machine translation, data visualization, and image classification. Deep learning supports the classification, quantification, and identification of medical images. DL is a type of neural network learning characterized by the number of layers [102], and it refers to systems that learn from experience on large datasets. Deep learning is predicated on the concept of extracting features from input data using many layers to find different elements that are important to the input data. Data classification is very important in the medical field to generate statistics about the causes of illness and death. Many varieties of deep learning algorithms are used in different applications, as the nature of the data determines which deep learning algorithms are appropriate. The most widely used deep learning algorithms are as follows:

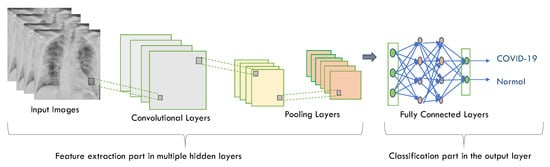

3.4.1. Convolutional Neural Networks (CNNs)

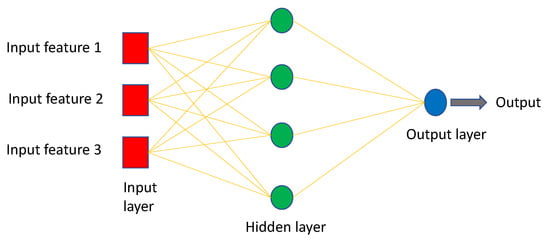

For image classification, the CNN is one of the most commonly used deep neural network types [103]. Unlike a conventional artificial neural network (ANN), where the input is a vector, here the input is a multi-channel image. A CNN operates by extracting features from images directly [104]. The essential features are not predefined; they are learned while the network trains on a set of images. This automated feature extraction makes deep learning models more accurate for computer vision tasks such as object classification [105]. A CNN learns to detect distinct aspects of an image using many hidden layers. It is formed by three main types of layers (convolutional layer, pooling layer, and fully connected layer) [106,107], as shown in Figure 2. The description of these layer types is as follows:

Figure 2.

CNN architecture using chest X-ray images as an input.

- Convolutional layer has a set of filters (or kernels). A kernel or a filter is a small collection of weights that is slid across the input, so each output value depends only on a local patch of the input rather than on every neuron in the previous layer. It performs a convolution operation, a linear operation in which the weights are multiplied element-wise with the patch of the input they cover (a dot product) [108], and the products are summed to obtain a single value of the output feature map;

- Pooling layer is applied to the feature maps produced by a convolutional layer. It downsamples feature maps by summarizing the presence of features in patches of the feature map, which reduces the number of parameters and calculations in the network and thus helps to prevent overfitting while still allowing complex objects in the image to be recognized. Average pooling and max pooling are two common pooling algorithms that summarize the average presence and the most activated presence of a feature, respectively;

- Fully connected layer connects all of the neurons from the previous layer and assigns each connection a weight. Each output node in the output layer represents a class’s score. Multiple convolutional-pooling layers are merged to generate a deep architecture of nonlinear transformations, which helps to create a hierarchical representation of an image, facilitating the learning of complex relationships.
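The three layer types described above can be sketched with plain NumPy as a single forward pass. This is a simplified illustration, not the architecture of any surveyed model: it uses one single-channel input, one hand-chosen 3 × 3 kernel, a ReLU non-linearity (an assumed choice), 2 × 2 max pooling, and randomly initialized fully connected weights for two hypothetical classes.

```python
import numpy as np


def conv2d(image, kernel):
    """Slide one kernel over a 2-D image (no padding, stride 1).

    As in most deep learning frameworks, the kernel is not flipped,
    so this is strictly a cross-correlation.
    """
    kh, kw = kernel.shape
    out_h = image.shape[0] - kh + 1
    out_w = image.shape[1] - kw + 1
    out = np.empty((out_h, out_w))
    for i in range(out_h):
        for j in range(out_w):
            # Dot product between the kernel and the patch it currently covers.
            out[i, j] = np.sum(image[i:i + kh, j:j + kw] * kernel)
    return out


def max_pool(feature_map, size=2):
    """Downsample by keeping the maximum of each non-overlapping size x size patch."""
    h = feature_map.shape[0] // size * size
    w = feature_map.shape[1] // size * size
    blocks = feature_map[:h, :w].reshape(h // size, size, w // size, size)
    return blocks.max(axis=(1, 3))


def fully_connected(features, weights, bias):
    """Connect every input feature to every output node (one score per class)."""
    return weights @ features + bias


rng = np.random.default_rng(0)
image = rng.random((8, 8))                       # stand-in for a grayscale image
kernel = np.array([[1.0, 0.0, -1.0]] * 3)        # simple vertical-edge filter
feature_map = np.maximum(conv2d(image, kernel), 0.0)  # convolution followed by ReLU
pooled = max_pool(feature_map)                   # 6 x 6 feature map -> 3 x 3
weights = rng.normal(size=(2, pooled.size))      # untrained weights, 2 classes
scores = fully_connected(pooled.ravel(), weights, np.zeros(2))
```

In a trained network the kernel values and fully connected weights are learned from data, and many kernels and convolution-pooling stages are stacked.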

CNN is widely used in image classification because it achieves high accuracy with a low error rate. However, it has some disadvantages: because a CNN has multiple layers, the training process takes a lot of time, and a large dataset is required to train the network [109].

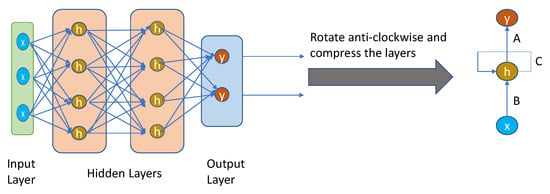

3.4.2. Recurrent Neural Networks (RNNs)

RNNs are widely employed in image labels, speech recognition, time series analysis, machine translation, and natural language processing (NLP). RNNs use some types of feedback, in which the output is fed back into the input as shown in Figure 3.

Figure 3.

Simple recurrent neural network architecture.

An RNN contains a loop that passes data back into the network from the output to the input. The nodes in different layers of the neural network are compressed to form a single recurrent layer. ‘x’ is the input layer, ‘h’ is the hidden layer, and ‘y’ is the output layer. A, B, and C are the network parameters that are adjusted to improve the output of the model. At any given time t, the hidden state is computed from the current input x(t) together with the state carried over from time t − 1, which summarizes the earlier inputs. The output at any given time is fed back into the network to improve the output. The previous elements of a sequence therefore influence the output of the RNN, which is able to remember previous data and use that information in its prediction [110].
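The recurrence can be written as a short NumPy sketch. The assignment of A, B, and C to the input-to-hidden, hidden-to-hidden, and hidden-to-output weights is an assumption made here for illustration, as are the tanh activation, the omission of bias terms, and the function name `rnn_forward`.

```python
import numpy as np


def rnn_forward(inputs, A, B, C, h0=None):
    """Run a simple recurrent network over a sequence.

    Assumed roles: A maps input -> hidden, B maps previous hidden -> hidden,
    C maps hidden -> output. Biases are omitted for brevity.
    """
    h = np.zeros(B.shape[0]) if h0 is None else h0
    hidden_states, outputs = [], []
    for x_t in inputs:
        # The new state mixes the current input with the previous state.
        h = np.tanh(A @ x_t + B @ h)
        hidden_states.append(h)
        outputs.append(C @ h)
    return np.stack(hidden_states), np.stack(outputs)


rng = np.random.default_rng(0)
input_size, hidden_size, output_size, steps = 3, 5, 2, 4
A = rng.normal(scale=0.5, size=(hidden_size, input_size))
B = rng.normal(scale=0.5, size=(hidden_size, hidden_size))
C = rng.normal(scale=0.5, size=(output_size, hidden_size))
sequence = rng.normal(size=(steps, input_size))

hidden_states, outputs = rnn_forward(sequence, A, B, C)

# Changing only the first input also changes later outputs, because the
# hidden state carries information forward through the sequence.
perturbed = sequence.copy()
perturbed[0] += 1.0
_, perturbed_outputs = rnn_forward(perturbed, A, B, C)
```

The same three weight matrices are reused at every time step, which is what distinguishes the recurrent layer from a stack of independent feedforward layers.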

An RNN acts as a form of memory: through its hidden state it retains information from earlier elements of a sequence and uses this prior knowledge to anticipate what comes next. However, there are some drawbacks: computation is slow, training can be difficult, and very long sequences cannot be processed if ReLU is used as the activation function. In addition, RNNs offer less feature compatibility than CNNs [111].

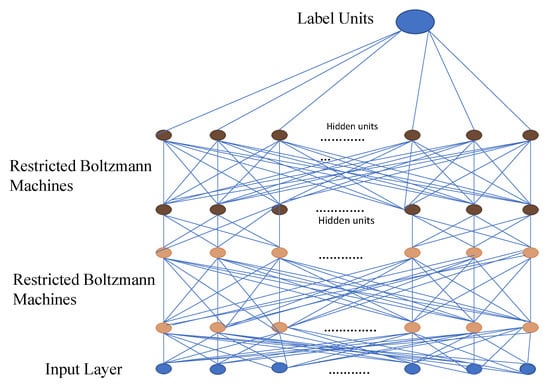

3.4.3. Deep Belief Networks (DBNs)

DBN is a type of deep neural network that comprises a large number of hidden units connected between layers but not between units within each layer as shown in Figure 4.

Figure 4. Simple deep belief network architecture.

A DBN is built by stacking Restricted Boltzmann Machines (RBMs), which are a binary variant of factor analysis: instead of having multiple continuous factors, the network output is determined by binary variables. A DBN can be used to extract in-depth features of the original data. Applications of DBNs include object recognition and the processing of video sequences and motion capture data [112,113].

A deep belief network is especially useful when limited labeled training data are available. A DBN is robust in classification to changes in size, position, color, and viewing angle (rotation). The same DBN approach can be applied to various applications and data types. However, there are some drawbacks: it requires a large amount of data to outperform techniques such as CNNs, it has demanding hardware requirements, and it requires classifiers to interpret its output [114].

3.4.4. Multilayer Perceptron (MLP)

MLP is a type of feedforward neural network made up of multiple layers of perceptrons with activation functions. It is a fully connected class of Artificial Neural Network (ANN), where ANN refers to models inspired by human neural networks that are designed to help computers learn. It consists of a large number of highly interconnected processing elements called neurons. An MLP is made up of at least three fully connected layers: an input layer, one or more hidden layers, and an output layer, as shown in Figure 5.

Figure 5. Simple MLP architecture.

An MLP may have several hidden layers. MLPs are employed in machine translation software, complex signal processing, speech recognition, and image recognition [115].

The MLP model is one of the best and simplest types of artificial neural networks, and it works well with both small and large input data. However, one of its drawbacks is that the calculation process is difficult and takes a long time [116].
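A forward pass through an MLP with one hidden layer can be sketched as below. The layer sizes, weights, and the choice of ReLU and Softmax are illustrative assumptions and are not taken from [115,116].

```python
import math


def dense(inputs, weights, biases):
    """Fully connected layer: one weighted sum plus bias per output neuron."""
    return [sum(w * x for w, x in zip(row, inputs)) + b
            for row, b in zip(weights, biases)]


def relu(values):
    return [max(0.0, v) for v in values]


def softmax(values):
    shift = max(values)  # subtract the maximum for numerical stability
    exps = [math.exp(v - shift) for v in values]
    total = sum(exps)
    return [e / total for e in exps]


def mlp_forward(x, hidden_w, hidden_b, out_w, out_b):
    """Input layer -> hidden layer (ReLU) -> output layer (Softmax)."""
    hidden = relu(dense(x, hidden_w, hidden_b))
    return softmax(dense(hidden, out_w, out_b))


# Hypothetical weights: 3 inputs, 2 hidden neurons, 2 output classes.
hidden_w = [[0.2, -0.4, 0.1], [0.7, 0.3, -0.5]]
hidden_b = [0.0, 0.1]
out_w = [[1.0, -1.0], [-1.0, 1.0]]
out_b = [0.0, 0.0]

probs = mlp_forward([1.0, 0.5, 0.2], hidden_w, hidden_b, out_w, out_b)
```

The result `probs` holds one score per class and sums to 1; with these weights the second class receives the higher score.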

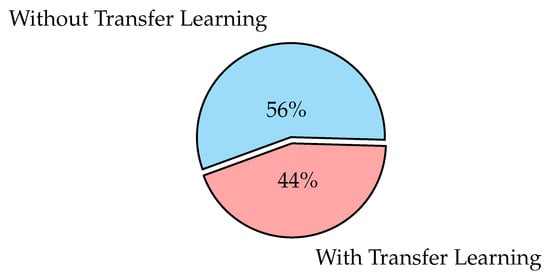

3.5. Ways to Train Deep Learning Models

A pre-trained model is one that has been trained on a large dataset to handle a problem similar to the one we are working on. There are three ways to train a deep learning model: learning from scratch, transfer learning, and fine-tuning.

- Learning from scratch requires collecting a large labeled dataset and designing a network architecture to learn the features that may then be used as input to a model (i.e., a feature extractor). Image features may be extracted automatically by a model, as in the CNN model, or manually using hand-crafted methods such as Histogram of Oriented Gradients (HOG), Intensity Histograms (IH), Scale Invariant Feature Transform (SIFT), Local Binary Patterns (LBP), and Edge Histogram Descriptor (EHD) [117]. This strategy is useful for applications with a large number of output classes, but it needs more time to train a model [118];

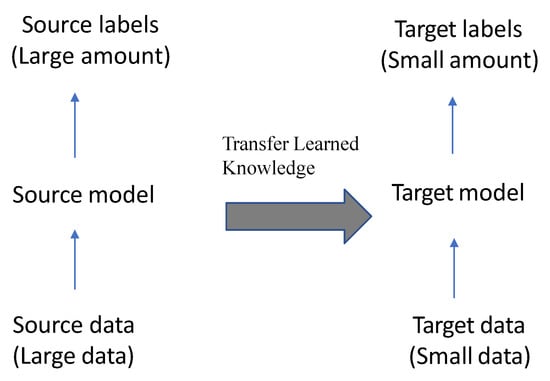

- Transfer learning is the process of transferring information from one model to the next, allowing for more accurate model creation with less training data as shown in Figure 6. Instead of starting the learning process from scratch, transfer learning begins with patterns learned while solving a previous problem, allowing for faster progress and improved performance while tackling the second problem [119]. Many studies use transfer learning to enhance their model performance, such as the ones in [94,101,120,121,122];

Figure 6. The concept of transfer learning.

- Fine-tuning is a common technique for transfer learning. It makes minor changes to a pre-trained neural network in order to obtain the desired result or performance, using the weights of the pre-trained model as the initialization for a new model trained on data from the same domain. Except for the output layer, the target model copies all model designs and their parameters from the source model and fine-tunes them on the target dataset. The target model’s output layer, on the other hand, must be trained from scratch. Fine-tuning thus reuses the weights of a previously trained deep learning model for another, similar task, as in [32,123,124]. Because the model already holds crucial knowledge from the previous task, this procedure dramatically reduces the time required to develop and train a new deep learning model. Fine-tuning can be used when the amount of data available for the new model is limited, but only if the datasets of the source model and the new model are similar [125].
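The initialization step of fine-tuning described in the last item can be sketched as follows. This is a simplified illustration in plain Python, not the procedure of any cited work: the parameter dictionary, the layer names `conv1` and `fc_out`, and the small Gaussian initialization are all hypothetical.

```python
import copy
import random


def init_target_model(source_params, output_layer, num_target_classes, seed=0):
    """Copy all source parameters, then re-initialize only the output layer."""
    rng = random.Random(seed)
    target_params = copy.deepcopy(source_params)  # duplicate every layer
    num_features = len(source_params[output_layer]["weights"][0])
    # The output layer is replaced and must be trained from scratch.
    target_params[output_layer] = {
        "weights": [[rng.gauss(0.0, 0.01) for _ in range(num_features)]
                    for _ in range(num_target_classes)],
        "biases": [0.0] * num_target_classes,
    }
    return target_params


# Hypothetical source model with a 1000-class output layer.
source_params = {
    "conv1": {"weights": [[0.1, -0.2], [0.3, 0.4]], "biases": [0.0, 0.0]},
    "fc_out": {"weights": [[0.5, 0.5] for _ in range(1000)],
               "biases": [0.0] * 1000},
}

# Target task with two classes (e.g., pneumonia and normal).
target_params = init_target_model(source_params, "fc_out", num_target_classes=2)
```

After this step, all layers of `target_params` would be trained further on the target dataset, with the copied layers starting from the source weights.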

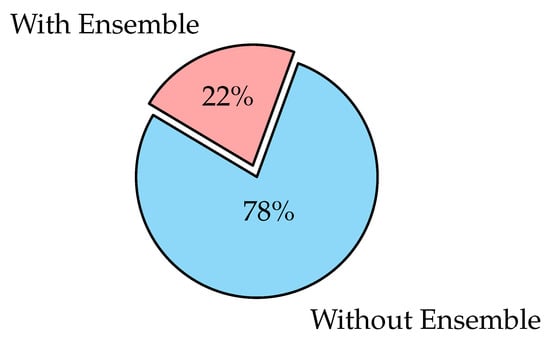

3.6. Ensemble Learning

Ensemble learning is the process of strategically generating and combining several models, such as classifiers, to solve a specific problem [126]. It is largely used to improve a model’s performance (classification, prediction, function approximation, etc.) or to lower the chance of a poor model selection. It can also be used for assigning a confidence level to the model’s decision, data fusion, incremental learning, non-stationary learning, selecting optimal (or near-optimal) features, and error correction. The classifiers may be Support Vector Machine (SVM), SoftMax, Decision Trees, or Naïve Bayes classifiers. Voting schemes [127,128], bagging [129], boosting [130,131], and stacking [132,133] are the most commonly used ensemble learning algorithms.
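As an illustration of the simplest of these algorithms, the following sketch implements hard (majority) voting over the predictions of several classifiers. The three prediction lists are made-up values, and the sketch does not reproduce the voting schemes of [127,128].

```python
from collections import Counter


def majority_vote(predictions_per_model):
    """Combine per-model label predictions by majority vote, sample by sample.

    On a tie, the label that was encountered first among the tied labels wins.
    """
    num_samples = len(predictions_per_model[0])
    combined = []
    for i in range(num_samples):
        votes = Counter(model_preds[i] for model_preds in predictions_per_model)
        combined.append(votes.most_common(1)[0][0])
    return combined


# Hypothetical predictions of three classifiers on four images.
model_a = ["pneumonia", "normal", "pneumonia", "normal"]
model_b = ["pneumonia", "pneumonia", "normal", "normal"]
model_c = ["normal", "pneumonia", "pneumonia", "normal"]

ensemble = majority_vote([model_a, model_b, model_c])
# ['pneumonia', 'pneumonia', 'pneumonia', 'normal']
```

Each individual model makes one prediction that the other two outvote, which is how voting can lower the impact of a single poor model.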

3.7. Pre-Trained Models

As mentioned before, transfer learning is a machine learning method where we reuse a pre-trained model as the starting point for a model on a new task as shown in Figure 6.

The following are some of the pre-trained models used for image classification and segmentation:

- Visual Geometry Group (VGG) is the most familiar model for image classification. It is a standard CNN with multiple layers [134]. The VGG models are VGG-16 and VGG-19, which have 16 and 19 weight layers (convolutional plus fully connected), respectively, and are trained on ImageNet (ImageNet is a database with over 14 million images divided into 1000 categories). VGG-16 takes a long time to train compared to other models, which can be a disadvantage when using large datasets. The main feature of this architecture is that, rather than relying on a large number of hyper-parameters, it uses basic 3 × 3 kernels in the convolutional layers (a kernel is a matrix of weights that is multiplied with the input to transform the output in a preferred manner) and 2 × 2 max-pooling layers. Finally, it has two fully connected (FC) layers, followed by a Softmax classifier for output. The VGG weight configuration is publicly available and has been utilized as a baseline feature extractor in a variety of other applications and challenges. VGG-19 differs from VGG-16 in that each of the last three convolutional blocks has an extra layer [135]. The work in [136] used VGG-16 for the classification of 14 different thoracic diseases, and the work in [137] used the same model for COVID-19 detection. The work in [138] used VGG-19 for the detection of tuberculosis, and the work in [139] used VGG-19 for the detection of pneumonia;

- Inception-V3 belongs to the Inception family of CNNs, which was introduced by Szegedy et al. in 2014 [140]. Inception-V3 is an image recognition model that has been shown to attain greater than 78.1% accuracy on the ImageNet dataset [141]. Inception models differ from typical CNNs in that they are made up of inception blocks, which concatenate the results of many filters applied to the same input tensor. The model itself is made up of symmetric and asymmetric building blocks, including convolutions, average pooling, max pooling, concatenations, dropouts, and fully connected layers. Batch normalization is used extensively throughout the model and applied to activation inputs. Loss is computed using Softmax. Inception-V3 is a newer version of the Inception model and was first released in 2015. It has three different filter sizes in a block of parallel convolutional layers (1 × 1, 3 × 3, and 5 × 5). Moreover, a 3 × 3 max pooling is performed. The outputs are passed to the next unit in consecutive order. It accepts an input image size of 299 × 299 pixels [142]. In [119], the authors used this model for the detection of lung nodule disease;

- ResNet50 is a deep CNN used to classify images. It is a variant of the ResNet model that has 48 convolutional layers along with one max-pooling layer and one average-pooling layer [143]. Its major innovation is the use of residual layers to create a new in-network architecture. ResNet50 is organized into five convolutional stages built from residual blocks, each block having three convolutional layers. ResNet50 accepts images with a resolution of 224 × 224 pixels and is 50 layers deep [144]. The works in [120,145] used this model in the classification of 14 different thoracic diseases;

- Inception-ResNet-V2 is an ImageNet-trained CNN. The network is 164 layers deep and can classify images into 1000 object categories [141]. It is a hybrid approach that combines the Inception structure with residual connections. It accepts 299 × 299 pixel images and generates a list of estimated class probabilities. The conversion of inception modules into residual inception blocks, the addition of more inception modules, and the creation of a new type of inception module (Inception-A) following the Stem module are among the advantages of Inception-ResNet-V2 [146];

- DenseNet201 is a 201-layer CNN that receives a 224 × 224 pixel input image. DenseNet201 is a ResNet upgrade that adds dense layer connections: it connects each layer to every subsequent layer in a feed-forward fashion. A DenseNet with L layers has L(L + 1)/2 direct connections, while a standard convolutional network with L layers has L connections. In DenseNet, each layer obtains additional inputs from all preceding layers and passes on its own feature maps to all subsequent layers. The feature maps are combined by concatenation, so each layer receives a “collective knowledge” from all preceding layers. Since each layer in DenseNet receives all preceding layers as input, it has more diversified features and tends to have richer patterns [147]. By decreasing the amount of computing required, encouraging feature reuse, minimizing the number of parameters, and reinforcing feature propagation, DenseNet can enhance the model’s performance [148];

- MobileNet-V2 is an improved version of MobileNet-V1 that is trained on the ImageNet database. It contains only 54 layers and accepts a 224 × 224 pixel input image. MobileNet-V2 contains an initial full convolution layer with 32 filters, followed by 19 residual bottleneck layers [149]. Its key distinctive feature is that it uses depthwise separable convolutions instead of a single standard 2D convolution. That is, a depthwise convolution that filters each channel separately is followed by a 1 × 1 pointwise convolution that combines the channels. As a result, the model requires fewer parameters and training takes up less memory, resulting in a small and efficient model. There are two types of blocks: a residual block with a stride of 1 and a downsizing block with a stride of 2. Each block has three layers: a 1 × 1 convolution with ReLU6, a depthwise 3 × 3 convolution with ReLU6, and another 1 × 1 convolution without any nonlinearity. MobileNet-V2 is a mobile-oriented model that can be used to solve a variety of visual recognition tasks (classification, segmentation, or detection) [150]. The work in [151] used MobileNet-V2 in the classification of 14 different thoracic diseases, and the work in [101] used this model for the detection of tuberculosis disease;

- Xception is a 71-layer CNN presented by Chollet [152]. It is a more advanced version of the Inception architecture in which the traditional Inception modules are replaced by depthwise separable convolutions. It outperforms VGG16, ResNet, and Inception on conventional classification problems. It uses a 299 × 299 pixel input image [152];

- NASNet is a type of convolutional neural network discovered through neural architecture search. It has been trained on over a million images from ImageNet, from which the network learned rich feature representations for a wide variety of images. Normal and reduction cells are its basic building blocks [153]. The network accepts 331 × 331 pixel images as input [154]. The work in [135] used this model in lung cancer detection;

- U-Net is a convolutional network architecture for fast and precise semantic segmentation of images. It is used for biomedical image segmentation [155]. In the U-Net model, the input images go through several stages of convolution and pooling in the down-sampling path, which reduce the height and width of the image while the depth grows after each convolution, followed by fully convolutional layers and several stages of up-sampling to produce the image mask [156]. The segmentation image size is 512 × 512 pixels [157,158]. In [159], the authors used this model for the segmentation of thoracic fractures, and in [100], the authors used U-Net for the segmentation of cardiomegaly.
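Two of the architectural claims in the list above can be checked with simple arithmetic. The sketch below counts (i) the weights of a standard convolution versus a depthwise separable convolution, taken here to mean a depthwise convolution followed by a 1 × 1 pointwise convolution, and (ii) the number of direct connections in a densely connected block of L layers, L(L + 1)/2. Biases are ignored, and the kernel size and channel counts are illustrative assumptions rather than values from the cited models.

```python
def standard_conv_params(kernel, channels_in, channels_out):
    """Weights of a standard kernel x kernel convolution (biases ignored)."""
    return kernel * kernel * channels_in * channels_out


def depthwise_separable_params(kernel, channels_in, channels_out):
    """Weights of a depthwise convolution plus a 1 x 1 pointwise convolution."""
    depthwise = kernel * kernel * channels_in  # one filter per input channel
    pointwise = channels_in * channels_out     # 1 x 1 channel mixing
    return depthwise + pointwise


def dense_connections(num_layers):
    """Direct connections when every layer feeds all subsequent layers."""
    return num_layers * (num_layers + 1) // 2


# Illustrative layer: 3 x 3 kernel, 32 input channels, 64 output channels.
standard = standard_conv_params(3, 32, 64)         # 18432
separable = depthwise_separable_params(3, 32, 64)  # 2336
connections = dense_connections(5)                 # 15
```

For this illustrative layer, the separable form needs roughly one eighth of the weights of the standard convolution, which is consistent with the smaller memory footprint attributed to MobileNet-V2 above.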

3.8. Evaluation Criteria

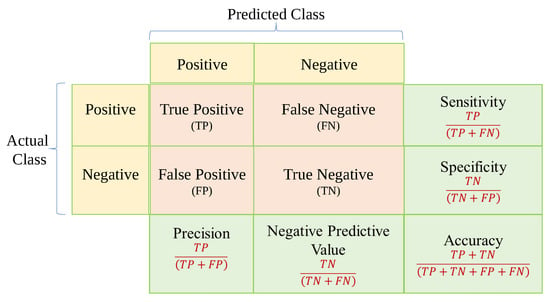

The final step is using a loss function or confusion matrix to determine the number of observations that were categorized correctly or incorrectly. The loss function measures the difference between the predicted output and the expected output. From the loss function, we can derive the gradients that are used to update the weights. For a data point Yi and its predicted value Yj, where n is the total number of data points in the dataset, the mean squared error (MSE) is defined as in Equation (7). The observed i and projected j outcome values are compared as shown in Figure 7. The confusion matrix shows the number of correct and incorrect predictions categorized by type of outcome [160]. Recall, Precision, Specificity, Accuracy, Area Under the Curve (AUC), and the Receiver Operating Characteristics (ROC) curve can be measured using a confusion matrix. The benchmark metrics are:

Figure 7. Confusion matrix.
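The metrics named above can be computed from the four counts of a binary confusion matrix, as in the following sketch. It uses the standard textbook definitions and includes the F1-score because it is reported by several of the reviewed studies; the counts and the MSE inputs are made-up values, and the sketch does not guard against division by zero.

```python
def classification_metrics(tp, fp, tn, fn):
    """Standard metrics from true/false positive and negative counts."""
    recall = tp / (tp + fn)     # also called sensitivity
    precision = tp / (tp + fp)
    return {
        "accuracy": (tp + tn) / (tp + fp + tn + fn),
        "recall": recall,
        "specificity": tn / (tn + fp),
        "precision": precision,
        "f1_score": 2 * precision * recall / (precision + recall),
    }


def mean_squared_error(targets, predictions):
    """Average of the squared differences between targets and predictions."""
    n = len(targets)
    return sum((t - p) ** 2 for t, p in zip(targets, predictions)) / n


# Hypothetical confusion matrix: 100 diseased and 100 healthy cases.
metrics = classification_metrics(tp=80, fp=10, tn=90, fn=20)
# accuracy 0.85, recall 0.80, specificity 0.90, precision about 0.889

mse = mean_squared_error([1.0, 0.0, 1.0], [0.9, 0.2, 0.6])  # about 0.07
```

AUC and the ROC curve are not covered here because they require the model's scores at several decision thresholds rather than a single confusion matrix.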

3.9. Type of Disease

In this paper, we classify thoracic diseases into three classes: lung diseases or respiratory system diseases, heart diseases, and others, as shown in Figure 8. For each class, we discuss the research that classifies these diseases, whether the classification is binary or multi-class, the type of image for each disease, the type of AI model used to detect the disease, the dataset used, and the performance of each model. Lung diseases include pneumonia [139], COVID-19 [137,161,162,163,164,165], edema [166], lesion [135], asbestosis signs [167], consolidation [168], atelectasis [169], COPD [170], pleural thickening [171], fibrosis [172], asthma [173], lung metastasis [98], pneumothorax [174], emphysema [175], tuberculosis (TB) [176], and infiltration [177]. Heart diseases include cardiomegaly [128] and heart insufficiency disease [178]. Other diseases include fracture [159], hernia [179], and mass [180].

Figure 8. Thoracic diseases classification.

3.9.1. Lung Diseases

These chest diseases affect the structure of the lung tissue, airways, or any part of the respiratory system, causing it to become scarred or inflamed, which makes the lungs unable to fully expand [181]. These diseases, such as pneumonia, MERS-CoV, edema, and consolidation, appear as opacities on chest radiographs [182]. Table 3, Table 4 and Table 5 summarize the studies on using deep learning to diagnose lung diseases.

Lung Diseases That Affect Tissues

Table 3 includes the following diseases: Pneumonia, Fibrosis, Lesion, Pleural Thickening, Asbestosis Signs, Edema, Lung Metastasis, and Consolidation.

Table 3. Summary of studies on the detection of lung diseases that affect tissues.

| Ref. (Year) | Name of Disease | Input Image Type | Dataset Used | Data Preparation Type | Model Type | Ensemble Technique | Target | Results | Open Issues |
|---|---|---|---|---|---|---|---|---|---|
| [127] (2019) | Pneumonia | X-ray | RSNA Pneumonia Detection Challenge dataset | Data Augmentation including flipping, rotation, brightness, gamma transforms, random Gaussian noise, and blur. | RetinaNet and Mask R-CNN | Voting scheme | Localization and Classification | Recall 79.3% | A lateral chest X-ray or/and CT images should be augmented to the chest X-ray. Metadata such as age, gender, and view position can be useful in later investigations. |
| [94] (2021) | Pneumonia | X-ray | Covid Chest X-ray and optical coherence tomography datasets. | Intensity normalization. Contrast Limited Adaptive Histogram Equalization (CLAHE). Data Augmentation. | CNN pre-trained on Inception-V3, VGG16, VGG19, DenseNet201, Inception-ResNet-V2, ResNet50, MobileNet-V2, and Xception | | Detection and Classification | Accuracy 96.61%, Sensitivity 94.92%, F1-Score 96.67%, Specificity 98.43%, Precision 98.49% | Create a complete system that can detect, segment, and classify pneumonia. Furthermore, performance could be improved by using larger datasets and more advanced feature extraction techniques including color, texture, and shape. |
| [139] (2021) | Pneumonia | X-ray | ChestX-ray8 | Image resizing | VGG19 | Voting Classifier | Classification and Detection | Accuracy 97.94% | Using texture and shape feature extraction techniques to improve the handcrafted feature vector. Using a suitable classifier system to replace the SoftMax layer. To improve classification accuracy, the fully-connected layer and the dropout layer were modified. |
| [166] (2020) | Edema | X-ray | MIMIC-CXR | Data Augmentation including translation and rotation | BERT model | | Classification and Prediction of the Edema severity level | Overall accuracy 89% | Suggest utilizing text to semantically explain the image model. |
| [183] (2019) | Edema | X-ray | MIMIC-CXR | Data Augmentation including rotation, transformation, and cropping. | Bayesian | | Predicting pulmonary edema severity | RMS 0.66 | To improve the pulmonary edema severity prediction accuracy, researchers suggest using an alternative machine learning approach. |
| [184] (2018) | Fibrosis | Histopathology | Cardiac histological images dataset | Data Augmentation including rotation, flipping, warping, and transformation. | CNN | | Segmentation and Detection | Mean DSC is 0.947 | Learning data should include proportions of each class and color variations in particular structures, as well as an approximate representation of the attributes in the whole image collection. |
| [172] (2019) | Fibrosis | CT | LTRC-DB, MD-ILD, INSEL-DB | | CNN | | Segmentation, Classification, and Diagnosis | Accuracy 81% and F1-score 80% | Use Histopathology or X-ray in the diagnosis. |
| [168] (2020) | Consolidation | X-ray | Pediatric Chest X-ray | Data Augmentation including cropping, Histogram matching transformation, and Contrast Limited Adaptive Histogram Equalization (CLAHE) | DCNN | | Detection and Perturbation visualization (Heatmap) | Accuracy 94.67% | Test DCNN model in multi-classification. |
| [185] (2021) | Consolidation and (Pneumonia, SARS-CoV-2) | X-ray | COVIDx Dataset | Data Augmentation including flipping, rotation, and scaling. | CNN pre-trained on VGG-19 | | Classification and Visualization (GradCam) | Accuracy 89.58% for binary classification and 64.58% for multi-classification | Enhance accuracy by using a large amount of data in multi-classification. |
| [135] (2019) | Lung Lesion/Lung Cancer | CT | LIDC-IDRI | | DCNN pre-trained on VGG-19, VGG-16, ResNet50, DenseNet121, MobileNet, Xception, and NASNet | | Segmentation and Classification | DenseNet: Accuracy 87.88%, Sensitivity is 80.93%, Specificity is 92.38%, Precision is 87.88%, and AUC is 93.79%. Xception: Accuracy 87.03%, Sensitivity 82.73%, Specificity 89.92%, Precision 84.97%, and AUC 93.24%. | Focusing on the application of deep learning models to small datasets. Using CNNs to synthesize artificial datasets, such as generative adversarial networks. |
| [186] (2020) | Lung Nodule | CT | Japanese Society of Radiological Technology database | Data Augmentation including horizontal flipping and angle rotation. | CNN | | Nodule enhancement, nodule segmentation, and nodule detection. | Sensitivity 91.4% | To improve CAD performance, the ROI image can be transformed to an RGB image and combined with additional nodule enhancement images. |
| [119] (2019) | Lung Nodules | CT | JSRT | Data Augmentation including rotation, flip, and shift. | DCNN pre-trained on Inception-v3 | | Classification | Sensitivity 95.41%, Specificity 80.09% | Using ensemble learning to overcome the problem of the deep learning model’s large gap between specificity and sensitivity. |
| [167] (2021) | Asbestosis Sign | CT | Private dataset | | LRCN | | Classification and Visualization | Accuracy 83.3% | The LRCN model can be used to diagnose a wide range of lung diseases. |
| [187] (2022) | Asbestosis | CT | Private dataset | Data Augmentation including zoom, flipping, rotation, and shift. Random sampling. | LRCN (CNN and RNN) | | Segmentation and Diagnosis | Sensitivity 96.2%, Specificity 97.5%, Accuracy 97%, AUROC of 96.8%, and F1 score 96.1% | To supplement the limitations of a short dataset, more data should be obtained, and external validation should be done through a multicenter study involving additional hospitals. |
| [171] (2018) | Pleural Thickening and another 13 different diseases | X-ray | ChestX-ray14 | Data Augmentation including cropping and flipping | AG-CNN | | Localization and classification | AUC 86.8% | Look into a more accurate localization of the lesion areas. Take on the challenges of sample collecting and annotation (with the help of a semi-supervised learning system). |
| [145] (2021) | Pleural Thickening and another 13 different diseases | X-ray | ChestX-ray14 and CheXpert | | CNN pre-trained on ResNet50 | | Localization and classification | AUC (Pleural Thickening) 79% of ChestX-ray14 & average AUC 83.5% | Invite a group of top radiologists to work on mask level annotation for the NIH and CheXpert datasets. |
| [98] (2021) | Lung Metastasis/Lung Cancer | CT | SPIE-AAPM Lung CT Challenge Data Set | Data Augmentation using GAN network | CNN pre-trained on AlexNet | | Classification | Accuracy 99.86% | Adjusting the parameters of each layer to obtain the best parameter combination or implement the optimizer in different network architectures. |
| [121] (2021) | Lung Cancer | CT | LIDC-IDRI | | CNN pre-trained on GoogleNet | | Classification | Accuracy 94.53%, Specificity 99.06%, Sensitivity 65.67%, and AUC 86.84% | To increase the classification accuracy of lung lesions in CT images, more study on the GoogleNet network is required. |

Pneumonia

Pneumonia is an infection that causes breathing difficulties by inflaming the air sacs in one or both lungs.

Idri et al. [94] compared current deep CNN architectures used for transfer learning (VGG-16, VGG-19, ResNet-50, DenseNet-201, Inception-ResNet-V2, Inception-V3, MobileNet-V2, and Xception) with a retrained baseline CNN in order to establish the best-performing architecture for 2-class categorization (pneumonia and normal) based on X-ray images. The OCT and COVID Chest X-ray datasets were used. They determined that the fine-tuned version of ResNet50 performs exceptionally well, with rapid increases in training and testing accuracy (more than 96%).

Dey et al. [139] presented a Deep-Learning System (DLS) to diagnose lung diseases based on X-ray images. The study makes use of traditional chest radiographs as well as chest radiographs that have been processed with a threshold filter. In the first experimental evaluation, standard DL models with a SoftMax classifier, including AlexNet, VGG16, VGG19, and ResNet50, were applied to the ChestX-ray8 dataset. The results showed that VGG-19 had a higher classification accuracy (86.97%) than the other approaches. They then used the Ensemble Feature Scheme to modify the VGG19 network to identify pneumonia. VGG19 with an RF classifier achieved a higher accuracy of 95.70%. When the same experiment was conducted with chest radiographs that had been processed with a threshold filter, the classification accuracy of VGG19 with the RF classifier was 97.94%.

An automated model for detecting and localizing pneumonia on chest X-ray images was proposed by Sirazitdinov et al. [127]. For pneumonia identification and localization, they suggest an ensemble of two convolutional neural networks, Mask R-CNN and RetinaNet. RetinaNet is a one-stage object detection model that utilizes a focal loss function to address class imbalance during training. The RetinaNet backbone uses ResNet and Feature Pyramid Network (FPN) structures. Based on the FPN structure, a top-down path and horizontal connections are added. Each level of the FPN is connected to fully convolutional networks, which include two independent subnets that are used for classification and regression. Mask R-CNN is a convolutional neural network that is state-of-the-art in image segmentation. This variant of a deep neural network detects objects in an image and generates a high-quality segmentation mask for each instance.

For the detection of pneumonia, the Faster R-CNN-based technique was used. They used the Kaggle Pneumonia Detection Challenge dataset, which contains 26,684 X-ray images of pneumonia. The recall score was 79.3%.

Fibrosis

Pulmonary fibrosis is characterized by scarred and damaged lung tissue. These thick, rough tissues make it difficult for the lungs to function properly, and as pulmonary fibrosis worsens, patients become increasingly short of breath.

Christe et al. [172] presented a CNN model for the classification and diagnosis of pulmonary fibrosis using CT images. They used three datasets: the Lung Tissue Research Consortium Database (LTRC-DB), the Multimedia Database of Interstitial Lung Diseases (MD-ILD), and the Inselspital Interstitial Lung Diseases Database (INSEL-DB). They used a random forest (RF) classifier that was able to recommend a radiological diagnosis. The output accuracy is 81%, and the F1-score is 80%.

Fu et al. [184] developed and tested an elegant convolutional neural network (CNN) for histological image segmentation, particularly those containing Masson’s trichrome stain. There are 11 convolutional layers in the network. The CNN model was trained and tested on a 72-image dataset of cardiac histology pictures (labeled fibrosis, myocytes, and background). The segmentation performance of the model was excellent, with a test mean dice similarity coefficient (DSC) of 0.947.
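For reference, the Dice similarity coefficient for a single binary mask can be computed as in the following sketch. It uses the standard definition, DSC = 2|P ∩ T| / (|P| + |T|), for a predicted mask P and a reference mask T; the two small masks are made-up examples, and the sketch is not the evaluation code of [184], which reports a mean over images and classes.

```python
def dice_coefficient(pred_mask, true_mask):
    """Dice similarity coefficient between two binary masks of equal shape."""
    pred = [p for row in pred_mask for p in row]
    true = [t for row in true_mask for t in row]
    intersection = sum(1 for p, t in zip(pred, true) if p and t)
    total = sum(1 for p in pred if p) + sum(1 for t in true if t)
    if total == 0:
        return 1.0  # both masks empty: treated here as perfect agreement
    return 2.0 * intersection / total


# Hypothetical 2 x 3 masks (1 = pixel labeled as fibrosis).
predicted = [[1, 1, 0],
             [0, 1, 0]]
reference = [[1, 0, 0],
             [0, 1, 1]]

dsc = dice_coefficient(predicted, reference)  # 2 * 2 / (3 + 3), about 0.667
```

A DSC of 1 indicates perfect overlap, so a mean value of 0.947 corresponds to segmentations that overlap the reference almost completely.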

Lesion

Pulmonary lesions, pulmonary nodules, lung nodules, white spots, and lesions are various words for the same thing: an abnormality in the lungs. They are distinct, well-defined spherical opacities with a diameter of less than or equal to 3 cm (about 1.2 in) that are entirely surrounded by lung tissue, do not touch the lung root or mediastinum, and are not associated with enlarged lymph nodes, collapsed lung, or pleural effusion. A pulmonary nodule might be malignant or benign.

Zhang et al. [135] presented a DCNN model pre-trained on VGG-19, VGG-16, ResNet50, DenseNet121, MobileNet, Xception, and NASNet. They showed that DenseNet121 and Xception achieved better results for lung nodule diagnosis. They used CT images from the Lung Image Database Consortium and Image Database Resource Initiative (LIDC-IDRI). The output accuracy for the DenseNet model is 87.77%, sensitivity is 80.93%, specificity is 92.38%, precision is 87.88%, and AUC is 93.79%. Xception output performance is as follows: 87.03% accuracy, 82.73% sensitivity, 89.92% specificity, 84.97% precision, and 93.24% AUC.

Chen et al. [186] introduced a faster region convolutional neural network (Faster R-CNN) that has been effectively used for computed tomography nodule candidate detection. Before performing nodule detection, they performed nodule enhancement and segmentation. They used the database of the Japanese Society of Radiological Technology. The model performed well, with sensitivities of 91.4% and 97.1% at 2.0 and 5.0 false positives per image (FPs/image), respectively.

To categorize pulmonary images, Wang et al. [119] employed a DCNN model pre-trained on Inception-v3 to create a viable and practicable computer-aided diagnostic model. The computer-aided diagnostic approach could help clinicians diagnose thoracic disorders more accurately and quickly. They employed the fine-tuned Inception-v3 model based on transfer learning and a variety of classifiers (Softmax, Logistic, and SVM). They used the JSRT dataset. The sensitivity of the model was 95.41%, and the specificity was 80.09%.

Pleural Thickening

Pleurisy is a disease that causes thickening of the lung lining, or pleura, which may cause chest pain and difficulty breathing.

Guan et al. [171] proposed an attention-guided convolutional neural network (AG-CNN) that avoids noise and improves alignment by learning from disease-specific regions. AG-CNN is divided into three branches: global, local, and fusion. Five convolutional blocks with batch normalisation and ReLU make up each of the global and local branches. A max pooling layer, a fully connected (FC) layer, and a sigmoid layer are then connected to each of them. Unlike the global branch, the local branch’s input is a local lesion patch that is cropped by the mask formed by the global branch. The fusion branch is then created by concatenating the max pooling layers of these two branches. The global CNN branch is first trained on the global (whole) images. Then, the attention heat map obtained by the global branch is used to infer a mask to crop a discriminative region from the image.
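The idea of inferring a mask from a heat map and cropping the corresponding region can be sketched as follows. This is a simplified illustration rather than the implementation of [171]: the threshold value is an assumption, and the sketch takes the bounding box of all pixels above the threshold instead of selecting a particular connected region.

```python
def mask_bounding_box(heat_map, threshold):
    """Bounding box (top, bottom, left, right) of values >= threshold."""
    rows = [r for r, row in enumerate(heat_map)
            if any(v >= threshold for v in row)]
    cols = [c for row in heat_map
            for c, v in enumerate(row) if v >= threshold]
    if not rows:
        return None  # nothing exceeds the threshold
    return min(rows), max(rows), min(cols), max(cols)


def crop(image, box):
    """Crop the image to the given inclusive bounding box."""
    top, bottom, left, right = box
    return [row[left:right + 1] for row in image[top:bottom + 1]]


# Hypothetical 4 x 4 attention heat map from a global branch.
heat_map = [
    [0.1, 0.2, 0.1, 0.0],
    [0.1, 0.8, 0.9, 0.1],
    [0.0, 0.7, 0.6, 0.2],
    [0.0, 0.1, 0.1, 0.0],
]
# Toy image whose pixel values equal their flat index (0..15).
image = [[r * 4 + c for c in range(4)] for r in range(4)]

box = mask_bounding_box(heat_map, threshold=0.5)  # (1, 2, 1, 2)
local_patch = crop(image, box)                    # [[5, 6], [9, 10]]
```

The cropped patch plays the role of the local lesion patch that is passed to the local branch.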

The ChestX-ray14 dataset was used to train and test the model. The average AUC for AG-CNN was 86.8%. The average AUC was 87.1% when DenseNet-121 was utilized.

For clinical applications, abnormalities must be localized as well as categorized. Further training of these models to locate abnormalities could be employed to address this problem. However, doing so accurately will necessitate a significant number of disease localisation annotations by clinical experts.

Ouyang et al. [145] employed a hierarchical attention mining framework that unites activation-based and gradient-based visual attention in a holistic manner, as well as an attention-driven weakly supervised algorithm. The three levels of attention mechanisms in the hierarchical attention mining framework are foreground attention, positive attention, and abnormality attention. The ChestX-ray14 and CheXpert datasets were used in their investigation. The average AUC for the ChestX-ray14 dataset is 83.5%. The AUC of ResNet50 and ResNet152 increased to 88.8% and 89.5%, respectively, when transfer learning was used.

Asbestosis Signs

Asbestosis is a long-term lung illness caused by inhaling asbestos fibers. Long-term exposure to these fibers causes lung tissue scarring and shortness of breath. The disease’s symptoms can range from minor to severe, and they normally do not show up for several years following persistent exposure.

Using medical CT data, Myong et al. [167] presented a Long-term Recurrent Convolutional Network (LRCN) model capable of recognizing the presence and severity of asbestosis. The LRCN combines CNN and RNN models: a CNN processes the variable-length visual input, and its outputs are fed into a stack of recurrent sequence models, here long short-term memory (LSTM) networks. The final output of the sequence models is a variable-length prediction. The CNN component uses DenseNet161 with transfer learning. They used private data from 469 patients who had been screened for asbestosis at Seoul St. Mary’s Hospital in Korea. The aim of the study was to apply LSTM, a special type of RNN, to the image classification problem with CT data. The model achieved an accuracy of 83.3%, with a true positive rate of 81.578% and a true negative rate of 86%. In addition, Grad-CAM visualizations of the model’s decisions were provided so that an expert can check their validity.

Kim et al. [187] developed a lung segmentation and deep learning approach for recognizing patients with asbestosis in segmented computed tomography (CT) images, which could be used as a clinical decision support system (CDSS). They also proposed using the LRCN model to categorize lungs as normal or asbestosis (the CNN extracts image features, and the RNN learns the extracted sequence information). They used a private dataset from Seoul St. Mary’s Hospital, which is part of the Catholic University of Korea’s College of Medicine (IRB no. KC17ENSI0379). There were a total of 447 patients, of whom 275 were healthy and 172 had asbestosis. Of the 172 patients with asbestosis, 87 were diagnosed in the early stages and 85 in the advanced stages. The algorithm built with the pre-trained DenseNet201 model performed exceptionally well, with a sensitivity of 96.2%, specificity of 97.5%, accuracy of 97%, AUROC of 96.8%, and F1 score of 96.1%.
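The sensitivity, specificity, accuracy, and F1 score reported throughout this section all derive from the same four confusion-matrix counts. The sketch below shows the standard definitions; the function name `classification_metrics` and the counts in the usage lines are hypothetical and are not taken from any of the cited studies.

```python
def classification_metrics(tp, fp, tn, fn):
    """Standard binary-classification metrics from confusion-matrix counts."""
    sensitivity = tp / (tp + fn)          # recall / true positive rate
    specificity = tn / (tn + fp)          # true negative rate
    precision = tp / (tp + fp)            # positive predictive value
    accuracy = (tp + tn) / (tp + fp + tn + fn)
    f1 = 2 * precision * sensitivity / (precision + sensitivity)
    return {
        "sensitivity": sensitivity,
        "specificity": specificity,
        "precision": precision,
        "accuracy": accuracy,
        "f1": f1,
    }


# Hypothetical, imbalanced test set: 10 diseased and 90 healthy cases.
metrics = classification_metrics(tp=8, fp=5, tn=85, fn=2)
```

With imbalanced classes, as in this made-up example, accuracy can stay high while precision and F1 are much lower, which is one reason the reviewed studies report several metrics side by side.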

Pulmonary Edema

Pulmonary edema is caused by excess fluid in the lungs. This fluid collects in the lungs’ many air sacs, making breathing harder.

Chauhan et al. [166] used the Medical Information Mart for Intensive Care CXR dataset (MIMIC-CXR) to present a Bidirectional Encoder Representations from Transformers (BERT) neural network model that learns from images and text to assess pulmonary edema severity from chest radiographs. BERT is a deep learning model in which every output element is connected to every input element, with the weightings between them calculated dynamically based on their connection. The overall accuracy is 89%.

Liao et al. [183] also measured the severity level of pulmonary edema in CXR images, but used a Bayesian model trained and tested on the MIMIC-CXR dataset. The root mean squared (RMS) error is 0.66, and the Pearson correlation coefficient (CC) is 0.52.
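For readers unfamiliar with the two regression metrics quoted here, the following sketch shows how RMS error and the Pearson correlation coefficient are computed from paired true and predicted severity values. Encoding severity as the ordinal numbers 0 to 3 is an assumption made for illustration, and the sample values are invented; neither comes from the cited study.

```python
import math


def rmse(y_true, y_pred):
    """Root mean squared error between paired values."""
    squared = [(t - p) ** 2 for t, p in zip(y_true, y_pred)]
    return math.sqrt(sum(squared) / len(squared))


def pearson_cc(x, y):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    norm_x = math.sqrt(sum((a - mean_x) ** 2 for a in x))
    norm_y = math.sqrt(sum((b - mean_y) ** 2 for b in y))
    return cov / (norm_x * norm_y)


# Hypothetical severity grades (0 = none ... 3 = severe) and model outputs.
true_severity = [0, 1, 2, 3, 1, 2]
predicted = [0.4, 0.9, 1.5, 2.2, 1.8, 2.1]
error = rmse(true_severity, predicted)
correlation = pearson_cc(true_severity, predicted)
```

RMS error measures how far predictions are from the true grades on average, while the correlation coefficient measures whether predictions rise and fall with the true grades, so the two can disagree.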

Lung Metastasis

Lung metastasis, or metastatic lung disease, is cancer that develops elsewhere in the body and spreads to the lungs through the circulation. Breast cancer, colon cancer, prostate cancer, sarcoma, bladder cancer, neuroblastoma, and Wilms’ tumor are all common malignancies that metastasize to the lungs. Any malignancy, however, has the potential to spread to the lungs.

To overcome the difficulty of sparse data, Lin et al. [98] used a Generative Adversarial Network (GAN) to generate computed tomography images of lung cancer. A GAN generates new data automatically by training generator and discriminator networks simultaneously: the former generates new images, and the latter learns to distinguish the generated (fake) images from real ones. The AlexNet model is applied to classify lung cancer as benign or malignant. They used the SPIE-AAPM Lung CT Challenge Data Set, which contains 22,489 lung CT images, with 11,407 images of malignant tumors and 11,082 images of benign tumors. The image size is 512 × 512 pixels. The model achieved an accuracy of 99.86%.
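The opposing objectives of the generator and discriminator can be made concrete with a small sketch of the two losses. The source does not state which GAN variant or loss Lin et al. used, so this assumes the standard binary cross-entropy formulation with the commonly used non-saturating generator loss. The function names and the probability values are hypothetical.

```python
import math


def discriminator_loss(d_real, d_fake):
    """Binary cross-entropy: real images are labelled 1, generated images 0.
    d_real / d_fake are the discriminator's 'is real' probabilities."""
    real_term = -sum(math.log(p) for p in d_real) / len(d_real)
    fake_term = -sum(math.log(1.0 - p) for p in d_fake) / len(d_fake)
    return real_term + fake_term


def generator_loss(d_fake):
    """Non-saturating generator loss: low when fakes are scored as real."""
    return -sum(math.log(p) for p in d_fake) / len(d_fake)


# Made-up discriminator outputs at two moments of training.
confident_d = discriminator_loss(d_real=[0.9, 0.95], d_fake=[0.1, 0.05])
fooled_d = discriminator_loss(d_real=[0.6, 0.5], d_fake=[0.5, 0.6])
g_when_caught = generator_loss([0.1, 0.05])  # fakes are easily spotted
g_when_fooling = generator_loss([0.5, 0.6])  # fakes pass more often
```

During training the two networks are updated in alternation, each minimising its own loss, so an improvement for one raises the loss of the other.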

Using CT images from the LIDC-IDRI dataset, Ashhar et al. [121] evaluated five convolutional neural network architectures (ShuffleNet, GoogleNet, SqueezeNet, DenseNet, and MobileNetV2) for categorizing lung tumors as malignant or benign. They reported that GoogleNet performed best for CT lung tumor classification, with a specificity of 99.06%, an accuracy of 94.53%, a sensitivity of 65.67%, and an AUC of 86.84%.

Consolidation

Pulmonary consolidation is a region of normally compressible lung tissue that has filled with fluid instead of air.

To detect lung consolidation, Rostami et al. [168] deployed two deep convolutional neural networks (DCNNs), VGG16 and DenseNet121, pre-trained on the ImageNet dataset. They used the Pediatric Chest X-ray dataset, which contains two classes: normal and pneumonia/consolidation. The model identified consolidation with an accuracy of 94.67%.

Bhatt et al. [185] developed a CNN classification model based on a pre-trained VGG-19 to detect COVID-19 pulmonary consolidations in chest X-rays. They first considered binary classification to detect consolidation, followed by multi-class prediction (normal, pneumonia, and SARS-CoV-2). They used the COVIDx dataset, which includes 66 COVID-19 images among its 16,756 chest radiography images. The accuracy was 89.58% for binary classification and 64.58% for multi-class classification.

Lung Diseases That Affect Airways

Table 4 includes the following diseases: Asthma, COPD, TB, and COVID-19.

Table 4.

Summary of detection studies for lung diseases that affect the airways.

| Ref. (Year) | Name of Disease | Input Image Type | Dataset Used | Data Preparation Type | Model Type | Ensemble Technique | Target | Results | Open Issues |
|---|---|---|---|---|---|---|---|---|---|
| [188] (2021) | COPD | CT | KNUH and JNUH |  | 3D-CNN |  | Extraction, visualization, and classification | Accuracy 89.3% and Sensitivity 88.3% | Apply a 3D-model using a wide range of datasets. |
| [170] (2021) | COPD | CT | RFAI | data augmentation processes: random rotation, random translation, random Gaussian blur, and subsampling | MV-DCNN |  | Classification | Accuracy 97.7% | Applied MC-DCNN to diagnose a variety of lung diseases. |
| [175] (2019) | Emphysema | CT | private dataset |  | DCNN |  | Classification and Detection | Accuracy 92.68% | Use transfer learning to achieve high accuracy. |
| [189] (2019) | Emphysema and another different 13 diseases | X-ray | ChestX-ray14 |  | CNN |  | Classification | overall Accuracy 89.77% | Ensemble approaches were used to improve the model’s performance. |
| [173] (2018) | Asthma | Reports only | Private dataset |  | DNN |  | Diagnosis | Accuracy 98% | Apply a different classifier to outperform the DNN algorithm in terms of accuracy. |
| [190] (2018) | Asthma | Reports only | Private dataset |  | Bayesian Logistic Regression |  | Prediction for Asthma disease | Accuracy 86.3673%, Sensitivity 87.25% | Check if there is an increase in the accuracy when including more patients in the dataset, using the posteriors from this study as priors for the new dataset. |
| [137] (2020) | COVID-19 | CT | Private dataset | Histogram equalization features extraction Intensity transformation | CNN pre-trained on VGG-16 |  | Classification | Precision 92%, Sensitivity 90%, Specificity 91%, F1-Score 91%, Accuracy 90% | It is possible to use deep learning networks with more complex backbone architecture. GANs can be developed to increase the number of suitable images for network training and hence improve the model’s performance. |
| [131] (2020) | COVID-19 | CT | Private dataset | Volume features based on segmented infected lung regions, Histogram distribution, Radiomics features. | CNN | Boosting | Segmentation and Classification | Accuracy 91.79%, Sensitivity 93.05%, Specificity 89.95%, AUC 96.35%, Precision 93.10%, and F1-score 93.07% | Plan to collect more data from patients with different diseases and apply the AFSDF approach to further COVID-19 classification tasks (e.g., COVID-19 vs. Influenza-A viral pneumonia and CAP, severe patients vs. non-severe patients). |
| [122] (2021) | COVID-19, Pneumonia, Tuberculosis | X-ray | Pediatric CXRs, IEEE COVID-19 CXRs, and Shenzhen datasets. | Data Augmentation including rotation, shift, and adding noise. | DenResCov-19 |  | Classification | Precision 82.90%, AUC 95%, and F1-Score 75.75% | Increase the number of classes to address more lung illnesses. The number of COVID-19 patients should be raised. |
| [191] (2021) | COVID-19 | X-ray | RSNA dataset | Data Augmentation including zoom, flipping, rotation, translation, and shift. | COVID-Net CXR-S |  | Detection and Classification | Sensitivity (level1) 92.3%, Sensitivity (level2) 92.85%, PPV (level1) 87.27%, PPV (level2) 95.78%, Accuracy 92.66% | Build innovative clinical decision support technologies to aid clinicians all throughout the world in dealing with the pandemic. |
| [133] (2022) | COVID-19 | X-ray | COVID-19 dataset, chest-X-ray, COVID-19 pneumonia dataset, private dataset collected from MGM Medical College and hospital |  | DCNN | Stacking | Classification and Detection | Accuracy 88.98% for three classifications and 98.58% for binary classification. | Using more public datasets will improve the model’s accuracy. |
| [192] (2022) | COVID-19 | CT | COVID-19 CT Images Segmentation | segmentation | DRL |  | Image segmentation | Precision 97.12%, a sensitivity of 79.97%, and a specificity of 99.48% | The mask extraction stage could be improved. In addition, more complex algorithms, approaches, and datasets appear promising to improve system performance. |
| [193] (2019) | Tuberculosis | Sputum Smear Microscopy | ZNSM-iDB dataset | Data Augmentation including rotation and translation | RCNN pre-trained on VGG-16 |  | localization and classification | Recall 98.3%, Precision 82.6%, F1-Score 89.7% | Planning to expand the amount of data used in a deep network. |
| [101] (2020) | Tuberculosis | X-ray | NIAID TB dataset and RSNA dataset | Data Augmentation including cropping. | CNN pre-trained on (Inception-V3, ResNet-18, DenseNet-201, ResNet-50, ResNet-101, ChexNet, SqueezeNet, VGG-19, and MobileNet-V2) and UNet for segmentation. |  | Lung Segmentation and TB Classification | Without Segmentation: Accuracy 96.47%, Precision 96.62%, and Recall 96.47%. With Segmentation: Accuracy 98.6%, Precision 98.57%, and Recall 98.56% | Split lungs into patches that can be fed into a CNN model, perhaps improving performance even more. |
| [176] (2021) | Tuberculosis | X-ray | Montgomery County (MC) CXR dataset, Shenzhen dataset, RSNA Pneumonia Detection Challenge dataset, Belarus dataset, and COVID-19 radiography database |  | EfficientNet and Vision Transformer | Boosting | Classification & Detection | Accuracy 97.72%, AUC 100% | Planning to add new baselines to compare to the tool that has been developed. Planning to release a mobile app that can run on small devices like smartphones and tablets. |
| [138] (2021) | Tuberculosis | X-ray | Montgomery County dataset (MC) and Shenzhen dataset (SZ) | Histogram Equalization & Contrast Limited Adaptive Histogram Equalization (CLAHE). | CNN pre-trained on VGG19 | Stacking | Segmentation and Detection TB disease | AUC 99.00 ± 0.28/98.00 ± 0.16 for MC/SZ. Accuracy 99.26 ± 0.40/99.22 ± 0.32 for MC/SZ. | Propose scalability testing for the proposed approach on large datasets. Use big data technologies like distributed processing and/or Map-Reduced based approaches for complex network building and feature extraction. |

Tuberculosis

Tuberculosis (TB) is a bacterial infection caused by Mycobacterium tuberculosis. The bacteria most commonly attack the lungs, but they can also harm other regions of the body. TB spreads through the air when a person with the disease coughs, sneezes, or talks.

Rahman et al. [101] detected tuberculosis from chest X-ray images using data augmentation, image segmentation, and deep-learning classification approaches. They employed nine distinct deep CNNs for transfer learning (ResNet18, ResNet50, ResNet101, ChexNet, InceptionV3, VGG19, DenseNet201, SqueezeNet, and MobileNet). They used the NIAID TB dataset as well as the RSNA dataset. Without segmentation, the classification accuracy, precision, and recall for tuberculosis detection were 96.47%, 96.62%, and 96.47%, respectively; with segmentation, they were 98.6%, 98.57%, and 98.56%.

Duong et al. [176] presented a practical method for detecting tuberculosis from chest X-ray images. A hybrid EfficientNet with Vision Transformer model was trained and evaluated on the Montgomery County (MC) CXR dataset, the Shenzhen dataset, the Belarus dataset, the COVID-19 dataset, the COVID-19 Radiography Database, and the RSNA Pneumonia Detection Challenge dataset. The model achieved an accuracy of 97.72% and an AUC of 100%.

Khatibi et al. [138] introduced a new Multi-Instance Learning (MIL) strategy that combines CNNs, clustering, complex network analysis, and stacked ensemble classifiers for TB diagnosis from CXR images. To detect tuberculosis, they employed a multi-instance classification based on a CNN model. They used two datasets of TB scans: the Shenzhen dataset (SZ) and the Montgomery County dataset (MC). The accuracy on MC/SZ is 99.26 ± 0.40/99.22 ± 0.32, and the AUC is 99.00 ± 0.28/98.00 ± 0.16. The classifiers used included Support Vector Machine (SVM), Logistic Regression (LR), Decision Tree (DT), Random Forest (RF), and AdaBoost.

El-Melegy et al. [193] presented a Faster Region-based Convolutional Neural Network (Faster R-CNN) to detect tuberculosis bacilli in sputum smear microscopy images. They employed the ZNSM-iDB public database, which includes auto-focused data, overlapping objects, single or few bacilli, views without bacilli, occluded bacilli, over-stained views with bacilli, and artifacts. The model achieved an F1-score of 89.7%, a recall of 98.3%, and a precision of 82.6%.

COVID-19