From Bedside to Bot-Side: Artificial Intelligence in Emergency Appendicitis Management

Abstract

1. Introduction

2. Methods

2.1. Patient Characteristics and Study Criteria

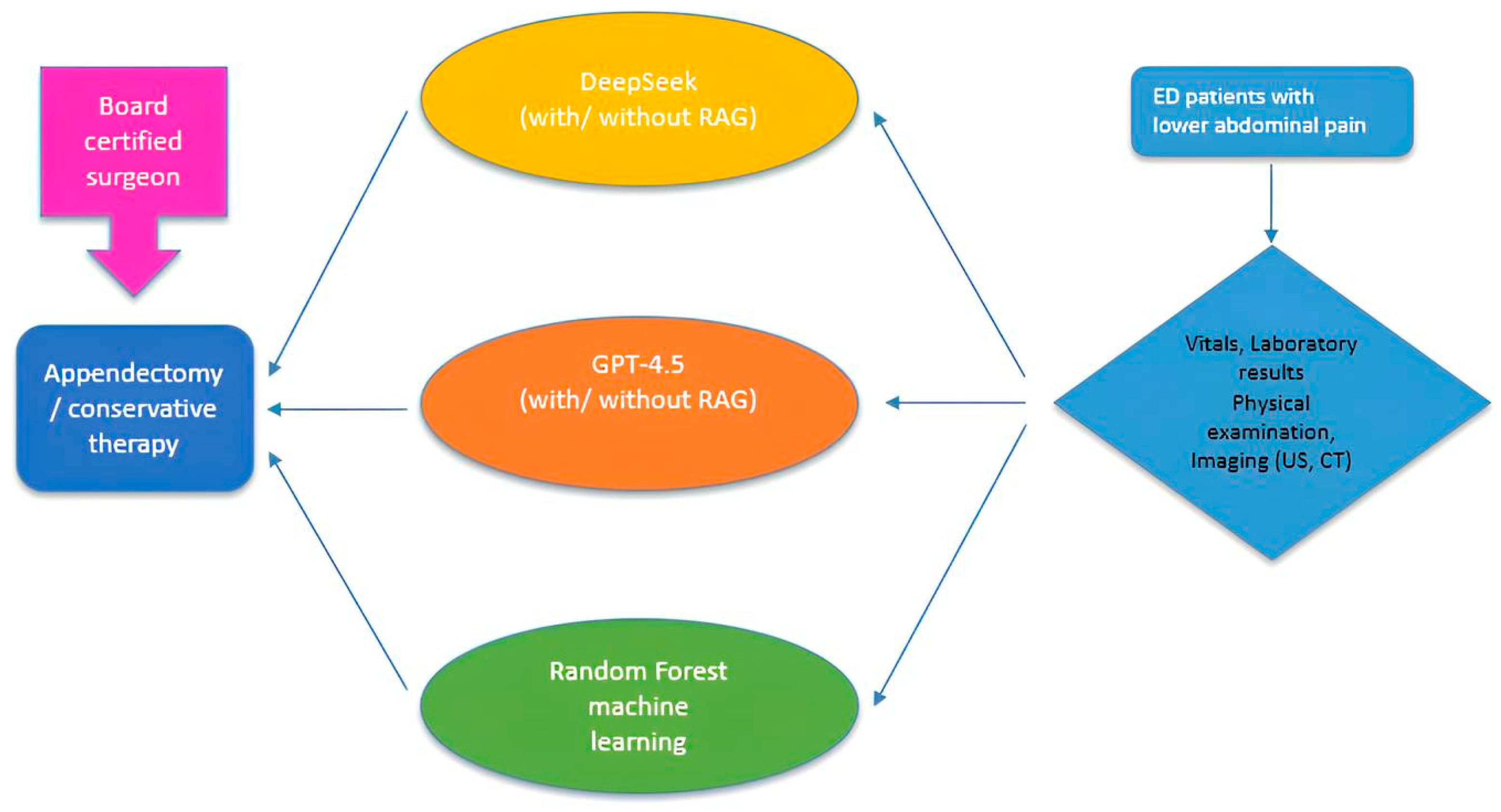

2.2. Study Design

2.3. Statistical Analysis

2.4. Machine-Learning Classifier

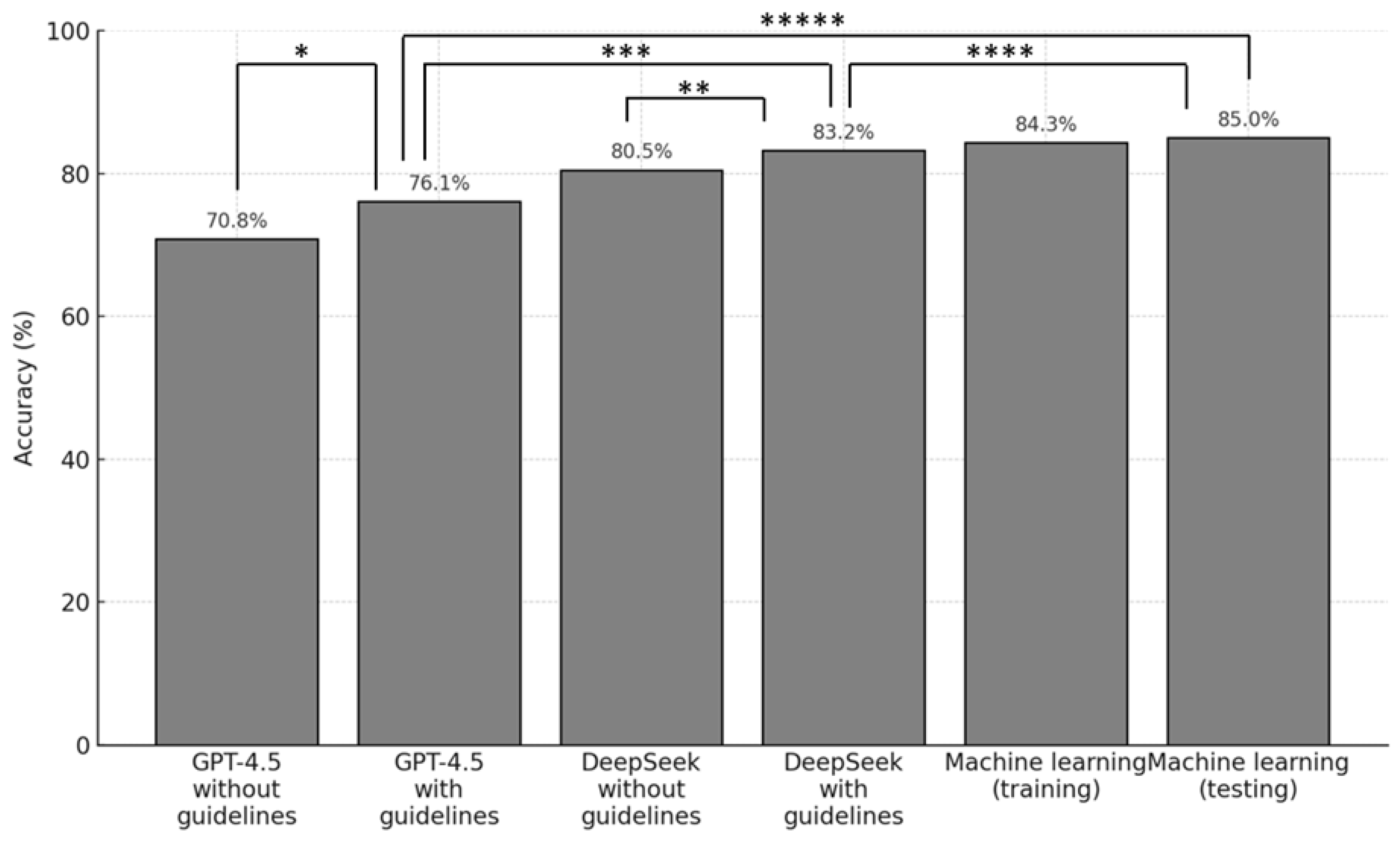

3. Results

4. Discussion

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Characteristic | GFO-Troisdorf Cohort (n = 100) | UKK Cologne Cohort (n = 13) | Training ML (n = 90) | Testing ML (n = 23) | Total (n = 113) |
|---|---|---|---|---|---|
| Board-certified specialist decision | | | | | |
| Appendectomy (n) | 50 | 13 | 50 | 13 | 63 |
| Conservative (n) | 50 | 0 | 40 | 10 | 50 |
| Total (n) | 100 | 13 | 90 | 23 | 113 |
| Median age (years) | 35 | 22 | 35 | 23 | 34 |
| Gender | | | | | |
| Male (n) | 43 | 8 | 41 | 10 | 51 |
| Female (n) | 57 | 5 | 49 | 13 | 62 |
| Imaging upon ER admission | | | | | |
| Ultrasound (%) | 100 | 100 | 100 | 100 | 100 |
| Computed tomography (%) | 29 | 31 | 29 | 39 | 29 |

| Model Pair | Sample Size (n) | Cohen’s Kappa (κ) | Agreement Interpretation |
|---|---|---|---|
| ML Testing vs. DeepSeek (with RAG) | 23 | 0.52 | Moderate |
| ML Testing vs. GPT-4.5 (with RAG) | 23 | 0.64 | Substantial |
| DeepSeek (with RAG) vs. GPT-4.5 (with RAG) | 113 | 0.75 | Substantial |
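The agreement values in the table above are Cohen's kappa statistics, κ = (p_o − p_e) / (1 − p_e). Here p_o is the observed proportion of cases on which two raters make the same decision. p_e is the agreement expected by chance, computed from each rater's marginal label frequencies. The interpretation column is consistent with the widely used Landis and Koch bands (0.41 to 0.60 moderate, 0.61 to 0.80 substantial).

The following is a minimal Python sketch, not the authors' analysis code. The helper names `cohens_kappa` and `landis_koch` are hypothetical, and so are the toy decisions; none of them come from the study data.

```python
from collections import Counter


def cohens_kappa(rater_a, rater_b):
    """Cohen's kappa for two raters labelling the same cases (hypothetical helper)."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("ratings must be non-empty lists of equal length")
    n = len(rater_a)
    # Observed agreement p_o: share of cases where both raters chose the same label
    p_o = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    # Chance agreement p_e: sum over labels of the product of both raters' marginal frequencies
    count_a, count_b = Counter(rater_a), Counter(rater_b)
    p_e = sum(count_a[k] * count_b[k] for k in count_a.keys() | count_b.keys()) / n**2
    if p_e == 1.0:
        # Both raters used one identical label for every case: kappa is undefined
        return float("nan")
    return (p_o - p_e) / (1 - p_e)


def landis_koch(kappa):
    """Map a kappa value to the Landis and Koch (1977) agreement bands."""
    if kappa < 0:
        return "Poor"
    if kappa <= 0.20:
        return "Slight"
    if kappa <= 0.40:
        return "Fair"
    if kappa <= 0.60:
        return "Moderate"
    if kappa <= 0.80:
        return "Substantial"
    return "Almost perfect"


# Toy decisions (hypothetical, not study data): "A" = appendectomy, "C" = conservative
ml_model = ["A", "A", "C", "C", "A", "C"]
llm_model = ["A", "C", "C", "C", "A", "A"]

k = cohens_kappa(ml_model, llm_model)
print(f"kappa = {k:.3f} ({landis_koch(k)})")  # kappa = 0.333 (Fair)

# The kappa values reported in the table map to the same interpretation labels
for value in (0.52, 0.64, 0.75):
    print(value, landis_koch(value))
```

In the toy example, the two raters agree on 4 of 6 cases, and each chooses each decision three times. That gives p_o = 4/6 and p_e = 0.5, so κ = 1/3 (fair agreement). For real analyses, `sklearn.metrics.cohen_kappa_score` computes the same unweighted statistic.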

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Ersahin, K.; Sanduleanu, S.; Thulasi Seetha, S.; Bremm, J.; Abbasli, C.; Zimmer, C.; Damer, T.; Kottlors, J.; Goertz, L.; Bruns, C.; et al. From Bedside to Bot-Side: Artificial Intelligence in Emergency Appendicitis Management. Life 2025, 15, 1387. https://doi.org/10.3390/life15091387

Ersahin K, Sanduleanu S, Thulasi Seetha S, Bremm J, Abbasli C, Zimmer C, Damer T, Kottlors J, Goertz L, Bruns C, et al. From Bedside to Bot-Side: Artificial Intelligence in Emergency Appendicitis Management. Life. 2025; 15(9):1387. https://doi.org/10.3390/life15091387

Chicago/Turabian Style: Ersahin, Koray, Sebastian Sanduleanu, Sithin Thulasi Seetha, Johannes Bremm, Cavid Abbasli, Chantal Zimmer, Tim Damer, Jonathan Kottlors, Lukas Goertz, Christiane Bruns, et al. 2025. "From Bedside to Bot-Side: Artificial Intelligence in Emergency Appendicitis Management." Life 15, no. 9: 1387. https://doi.org/10.3390/life15091387

APA Style: Ersahin, K., Sanduleanu, S., Thulasi Seetha, S., Bremm, J., Abbasli, C., Zimmer, C., Damer, T., Kottlors, J., Goertz, L., Bruns, C., Maintz, D., & Abdullayev, N. (2025). From Bedside to Bot-Side: Artificial Intelligence in Emergency Appendicitis Management. Life, 15(9), 1387. https://doi.org/10.3390/life15091387