Abstract

Hallux valgus, a common foot deformity, requires early detection to prevent it from becoming more severe. Because it is also a medical economic problem, a means of identifying it quickly would be helpful. We designed an early version of a machine learning tool for screening hallux valgus and investigated its accuracy. The tool determines whether a person has hallux valgus by analyzing pictures of their feet. In this study, 507 foot images were used for machine learning. Image preprocessing followed either the comparatively simple pattern A (rescaling, angle adjustment, and trimming) or the slightly more complex pattern B (the same steps plus vertical flipping, grayscale conversion, and edge enhancement). The VGG16 convolutional neural network was used. The model was more accurate with pattern B than with pattern A. In our early model, pattern A achieved an accuracy of 0.62, precision of 0.56, recall of 0.94, and F1 score of 0.71; for pattern B, the corresponding scores were 0.79, 0.77, 0.96, and 0.86. Machine learning was sufficiently accurate to distinguish images of feet with hallux valgus from images of normal feet. With further refinement, this tool could be used for easy screening of hallux valgus.

1. Introduction

Hallux valgus (HV) is a common foot deformity characterized by static subluxation of the first metatarsophalangeal joint with lateral deviation of the hallux and medial deviation of the first metatarsal [1]. HV is also highly prevalent: a reported 23% of adults aged 18–65 years are affected, and prevalence increases with age and is higher in women than in men [2]. Greater HV severity is associated with poorer health-related quality of life, physical function, and balance, and with an increased risk of falls [3,4,5,6,7]. Because HV progresses over time, early intervention should be sought to prevent severe HV. A large HV angle has been reported to be a risk factor for progression of the deformity [8].

HV is generally diagnosed based on the first-second intermetatarsal angle and the HV angle, both of which are usually measured on radiographs [9]. Radiography is typically performed in hospitals, and patients with HV who present to hospital are likely to already have advanced deformity, pain, and other symptoms. In addition, radiography involves some radiation exposure. Early intervention for HV is important to maintain physical function and to prevent progression to disability. HV also imposes a considerable medical economic burden; general practitioners in Australia, for example, have an estimated 60,000 encounters involving bunions each year [10]. A simple method for community-dwelling adults to assess HV at home, without a hospital visit, could therefore be useful. Home blood pressure monitoring, for example, has helped to reduce cardiovascular disease events [11]; similarly, home screening tools for HV may help to prevent HV from becoming severe.

The Manchester scale (MS), a relative nonmetric measure of HV severity, could be used within a tool that allows community-dwelling adults to screen their own HV severity [12]. The MS visually classifies HV into four grades (no deformity, mild deformity, moderate deformity, and severe deformity). MS grades correlate significantly with the American Orthopaedic Foot and Ankle Society score [13]. The MS can be applied in clinical settings, and it has been reported to correlate moderately well with radiographic measurements [14]. However, early detection and prevention of severe disease call for a tool that can assess HV even more easily. Image classification using machine learning could provide such a tool. It is currently being actively studied in the medical field, and it can serve as a supplementary tool for diagnosis based on radiography and computed tomography images [15].

Image recognition of photographs taken with digital cameras has been used successfully to screen for sarcopenic dysphagia [16]. However, no similar tool for HV screening has been reported. We therefore developed a non-invasive tool that uses a machine learning framework to screen for HV from foot images, and we examined its accuracy. Based on the MS, the tool classifies foot images as ‘normal’ (no or mild deformity) or ‘HV’ (moderate or severe deformity). This grouping follows the finding that people with moderate or severe deformity have reduced foot function, general foot health, and social competence [17].

2. Materials and Methods

2.1. Study Materials

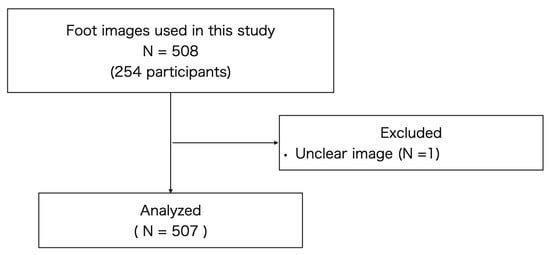

We used 508 foot images obtained from 254 participants in a local government-supported health check-up in Kaizuka City, Osaka, Japan. One image was excluded from the analysis because it was unclear, leaving 507 images (Figure 1). All participants lived at home and were independent in activities of daily living.

Figure 1.

Flowchart illustrating the selection of study materials.

2.2. Severity of Hallux Valgus

The severity of HV was graded with the MS using the foot images. Participants stood in a full-weight-bearing position, and each foot was graded as no deformity, mild deformity, moderate deformity, or severe deformity. Grading was performed visually by two physiotherapists, each with more than 20 years of experience. A total of 243 images were graded as no deformity and 61 as mild deformity, forming the normal group (n = 304); 122 images were graded as moderate deformity and 81 as severe deformity, forming the HV group (n = 203) (Table 1).

Table 1.

Classification of foot images used in this study based on the MS.
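The grouping of the four MS grades into the two classes used for classification can be written as a simple mapping. The sketch below applies it to the counts reported above to confirm the group sizes (304 normal and 203 HV images) that appear later in the data-augmentation step; the dictionary and function names are illustrative only.

```python
from collections import Counter

# MS grade counts reported in Section 2.2
MS_COUNTS = {
    "no deformity": 243,
    "mild deformity": 61,
    "moderate deformity": 122,
    "severe deformity": 81,
}

# Binary grouping used in this study: no/mild -> normal, moderate/severe -> HV
MS_TO_GROUP = {
    "no deformity": "normal",
    "mild deformity": "normal",
    "moderate deformity": "HV",
    "severe deformity": "HV",
}


def group_counts(ms_counts):
    """Sum the MS grade counts into the two classification groups."""
    totals = Counter()
    for grade, n in ms_counts.items():
        totals[MS_TO_GROUP[grade]] += n
    return dict(totals)


print(group_counts(MS_COUNTS))  # → {'normal': 304, 'HV': 203}
```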

2.3. Foot Imaging

Foot images were taken at three health and welfare centers by trained research staff. To standardize imaging conditions, participants stepped onto a movable box and were asked to stand with their weight evenly distributed on both feet. The box was 90 cm high, and its top was covered with a black sheet. A digital camera (RX-0, SONY, Tokyo, Japan) was positioned at the height of the participant’s tibial tuberosity, and the feet were photographed from above (Figure 2). The camera has 20.3 megapixels, a focal length of 4.3 mm (wide) to 172.0 mm, and a resolution of 5184 × 2912 pixels; one pixel in a captured image corresponds to 0.005 square centimeters.

Figure 2.

Example of a foot image.

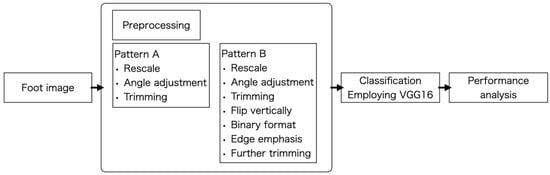

Images were preprocessed as follows (Figure 3). Two preprocessing patterns were used to examine whether accuracy varies with the extent of preprocessing.

Figure 3.

Comprehensive framework for HV classification.

- Pattern A (Figure 4A)

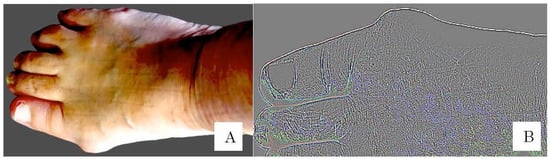

Figure 4. Image preprocessing. (A) Pattern A: the background was removed, and the image was corrected to a horizontal orientation and cropped to include only the area from the toes to the metatarsus. (B) Pattern B: the image was vertically flipped, and the edges were enhanced.


- (1) The image was resized to a width of 640 pixels, and the background was removed digitally. The foot image was rotated to a horizontal orientation and cropped to include only the area from the toes to the midfoot.

- Pattern B (Figure 4B)

Pattern B was preprocessed in the same way as pattern A, with the following additions:

- (2) Right-foot images were vertically flipped so that left and right feet had the same orientation.

- (3) The image was converted to grayscale, the edges were enhanced, and the image was cropped to include only the area from the tip of the hallux to the midfoot.

- (4) The normal group (n = 304) and the HV group (n = 203) contained different numbers of images, and this class imbalance could reduce accuracy. Data augmentation was therefore applied to the HV group by changing the contrast and saturation of 101 randomly selected HV images. Color jittering, such as varying saturation and contrast, is a common data augmentation technique in machine learning research [18].
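In the study, these steps were performed manually with a picture tool and PhotoScape X. The following Pillow sketch shows how comparable steps could be automated; it is an illustration under stated assumptions, not the authors' procedure. The rotation angle and both crop boxes are hypothetical per-image inputs, background removal is omitted, `ImageFilter.EDGE_ENHANCE` stands in for the unspecified edge-enhancement method, the jitter range of 0.7 to 1.3 is assumed, and the 101 jittered images are assumed to be added as extra copies (203 + 101 = 304, matching the normal group).

```python
import random

from PIL import Image, ImageEnhance, ImageFilter, ImageOps

TARGET_WIDTH = 640  # width used in step (1)


def resize_to_width(img, width=TARGET_WIDTH):
    """Resize to a fixed width while keeping the aspect ratio."""
    height = round(img.height * width / img.width)
    return img.resize((width, height))


def pattern_a(img, angle_deg, crop_box):
    """Step (1): resize, rotate to horizontal, crop toes-to-midfoot.

    angle_deg (counterclockwise) and crop_box (left, upper, right, lower) are
    hypothetical manual inputs. Background removal is not implemented here.
    """
    img = resize_to_width(img)
    img = img.rotate(angle_deg, expand=True)
    return img.crop(crop_box)


def pattern_b(img, angle_deg, crop_box, hallux_crop_box, is_right_foot):
    """Pattern A followed by steps (2) and (3)."""
    img = pattern_a(img, angle_deg, crop_box)
    if is_right_foot:
        img = ImageOps.flip(img)  # step (2): top-to-bottom flip to match left feet
    img = ImageOps.grayscale(img)  # step (3): grayscale conversion
    img = img.filter(ImageFilter.EDGE_ENHANCE)  # step (3): edge enhancement
    return img.crop(hallux_crop_box)  # step (3): hallux tip to midfoot


def color_jitter(img, rng, low=0.7, high=1.3):
    """Step (4): random contrast and saturation factors (range is assumed)."""
    img = ImageEnhance.Contrast(img).enhance(rng.uniform(low, high))
    # Saturation change; this leaves a grayscale ("L") image unchanged.
    return ImageEnhance.Color(img).enhance(rng.uniform(low, high))


def balance_hv(hv_images, n_extra=101, seed=0):
    """Append jittered copies of n_extra randomly chosen HV images."""
    rng = random.Random(seed)
    chosen = rng.sample(hv_images, n_extra)
    return hv_images + [color_jitter(im, rng) for im in chosen]
```

As the comment notes, saturation jitter has no effect once an image is grayscale, so in an automated pipeline the order of steps (3) and (4) would need to be chosen deliberately.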

Comparing accuracy between patterns A and B may inform decisions in future applications. If subjects judge their own HV (in future, possibly lay people rather than specialists), it may be necessary to restrict the imaging conditions, and such restrictions may also affect the complexity of programming. Image preprocessing was performed manually using the picture tool on a personal computer (iMac, Apple M1, 16 GB memory) and a photo editor application, PhotoScape X (MOOII Tech, Seoul, Republic of Korea).

2.4. Classification by Machine Learning

Machine learning was performed with the following hardware and software: central processing unit, Apple M1; memory, 16 GB; operating system, macOS 13.2.1; frameworks, TensorFlow and Keras 2.11.0 (Python). This study used the VGG16 convolutional neural network, which consists of 13 convolutional layers and three fully connected layers (16 weight layers in total) and is widely used in image classification research [19,20,21,22]. The model was trained for 20 epochs with a batch size of 16, and input images were resized to 224 × 224 pixels. The optimizer was Adam, and the loss function was cross-entropy.
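A minimal Keras sketch of this configuration follows. Only the use of VGG16, the 20 epochs, batch size of 16, 224 × 224 input, Adam optimizer, and 80/20 split come from the text; the ImageNet weights, frozen convolutional base, global-average-pooling head with a single sigmoid output, binary cross-entropy (the text says only "cross-entropy"), random seed, and the `data_dir/<class_name>/` folder layout are assumptions. Grayscale (pattern B) files are loaded as three channels because VGG16 expects RGB input. TensorFlow is imported inside the functions so that the configuration constants can be inspected without it; `subset="both"` requires TensorFlow 2.10 or later.

```python
# Hyperparameters stated in Sections 2.4 and 2.5
EPOCHS = 20
BATCH_SIZE = 16
IMAGE_SIZE = (224, 224)
VALIDATION_SPLIT = 0.2  # 80% training / 20% validation
SEED = 42  # hypothetical; no seed is reported


def build_model(weights="imagenet"):
    """VGG16 convolutional base plus a small binary head (head design assumed)."""
    import tensorflow as tf  # lazy import: constants above load without TensorFlow

    base = tf.keras.applications.VGG16(
        include_top=False, weights=weights, input_shape=IMAGE_SIZE + (3,)
    )
    base.trainable = False  # assumption: freeze the pretrained convolutional layers

    inputs = tf.keras.Input(shape=IMAGE_SIZE + (3,))
    x = tf.keras.applications.vgg16.preprocess_input(inputs)
    x = base(x, training=False)
    x = tf.keras.layers.GlobalAveragePooling2D()(x)
    outputs = tf.keras.layers.Dense(1, activation="sigmoid")(x)

    model = tf.keras.Model(inputs, outputs)
    model.compile(
        optimizer=tf.keras.optimizers.Adam(),
        loss="binary_crossentropy",
        metrics=["accuracy"],
    )
    return model


def train(data_dir):
    """Train on images stored as data_dir/<class_name>/*.png (hypothetical layout)."""
    import tensorflow as tf

    train_ds, val_ds = tf.keras.utils.image_dataset_from_directory(
        data_dir,
        validation_split=VALIDATION_SPLIT,
        subset="both",
        seed=SEED,
        image_size=IMAGE_SIZE,
        batch_size=BATCH_SIZE,
        label_mode="binary",
        color_mode="rgb",  # grayscale files are expanded to 3 channels
    )
    model = build_model()
    model.fit(train_ds, validation_data=val_ds, epochs=EPOCHS)
    return model, val_ds
```

For example, `model, val_ds = train("feet")` would train on a hypothetical folder `feet/` containing `HV/` and `normal/` subfolders; Keras assigns class indices in alphabetical order of the subfolder names.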

2.5. Statistical Analysis

Foot images were randomly assigned to training (80%) and validation (20%) data sets. A confusion matrix was used to evaluate model performance on the validation data. The performance of the image classification model for HV identification was evaluated in terms of accuracy, precision, recall, and F1 score. Accuracy, the proportion of all predictions that are correct, is the most common evaluation metric in machine learning and is easy to interpret. Precision is the proportion of predicted positive cases that are actually positive. Recall (sensitivity) is the proportion of actual positive cases that the model correctly identifies. The F1 score is the harmonic mean of precision and recall and was introduced to balance the trade-off between them [23]. Confusion matrices and performance metrics were compared between image preprocessing patterns A and B.
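For reference, the four metrics can be written in terms of the confusion-matrix counts, where TP, FP, FN, and TN are the true positives, false positives, false negatives, and true negatives for whichever class is designated positive:

```latex
\begin{aligned}
\text{Accuracy}  &= \frac{TP + TN}{TP + TN + FP + FN} \\
\text{Precision} &= \frac{TP}{TP + FP} \\
\text{Recall}    &= \frac{TP}{TP + FN} \\
F_1 &= 2 \cdot \frac{\text{Precision} \cdot \text{Recall}}{\text{Precision} + \text{Recall}}
     = \frac{2\,TP}{2\,TP + FP + FN}
\end{aligned}
```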

3. Results

Table 2 shows the confusion matrices of the HV image classification models used in this study.

Table 2.

Confusion matrix of machine learning models to identify HV.

With pattern A, 72 HV images were correctly classified as HV and 157 were misclassified as Normal; of the Normal images, 13 were misclassified as HV and 206 were correctly classified as Normal.

With pattern B, 102 HV images were correctly classified as HV and 128 were misclassified as Normal; of the Normal images, 28 were misclassified as HV and 423 were correctly classified as Normal.

With pattern A, the model achieved an accuracy of 0.62, precision of 0.56, recall of 0.94, and F1 score of 0.71. With pattern B, it achieved an accuracy of 0.79, precision of 0.77, recall of 0.96, and F1 score of 0.86 (Table 3).

Table 3.

Accuracy of Machine Learning Models with Different Preprocessing.
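The scores can be recomputed from the confusion-matrix counts reported above. The sketch below treats Normal as the positive class; this is an inference from the counts rather than something the text states, but with that choice the pattern A counts reproduce the reported accuracy (0.62), recall (0.94), and F1 score (0.71), and give a precision of 0.567 (reported as 0.56).

```python
def metrics(tp, fp, fn, tn):
    """Accuracy, precision, recall and F1 from confusion-matrix counts."""
    accuracy = (tp + tn) / (tp + fp + fn + tn)
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    f1 = 2 * precision * recall / (precision + recall)
    return accuracy, precision, recall, f1


# Pattern A counts with Normal as the positive class:
# Normal->Normal = TP, HV->Normal = FP, Normal->HV = FN, HV->HV = TN
pattern_a = metrics(tp=206, fp=157, fn=13, tn=72)
print([round(v, 3) for v in pattern_a])  # → [0.621, 0.567, 0.941, 0.708]
```

Applied to the pattern B counts (`tp=423, fp=128, fn=28, tn=102`), the same function gives approximately 0.771, 0.768, 0.938, and 0.844, which differ somewhat from the pattern B scores reported above.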

4. Discussion

Few studies in medical research have applied machine learning to photographs; examples include the classification of acromegaly, skin cancer, and dental caries [24,25,26]. To our knowledge, this study is the first to demonstrate that a machine learning framework can discriminate a foot deformity in humans.

We applied an existing machine learning framework to determine whether HV can be identified from digital images. The results suggest that HV can be identified by deep learning with appropriate image preprocessing. The pattern B model for identifying HV achieved an accuracy of 0.79, precision of 0.77, recall of 0.96, and F1 score of 0.86. Because HV has not previously been identified using machine learning, it is difficult to compare the accuracy of our models with earlier work. However, machine learning has been applied to image analysis in orthopedics [27,28]; the accuracy of these models in detecting arthritis and trauma from magnetic resonance imaging (MRI) and radiography was 75–92.8%. Compared with these orthopedic studies, the accuracy of the model constructed in this study appears acceptable.

The pattern A model was less accurate than the pattern B model, perhaps because of its simpler image preprocessing. To identify HV using machine learning, we suggest that vertical flipping, edge enhancement, and trimming are necessary. However, the pattern B confusion matrix showed that although most normal foot images were correctly classified as normal, fewer than half of the HV images (102 of 230) were correctly classified as HV. Further improvement of image preprocessing may achieve better accuracy. Machine learning has previously been used to diagnose hip osteoarthritis with an accuracy of 92.8% [29]; that study used magnified images focusing on osteophytes. The diagnosis of knee osteoarthritis using a deep Siamese convolutional neural network achieved an average accuracy of 66.7% and a kappa coefficient of 0.83 [30]. Elsewhere, feature extraction with machine learning has been used to detect cancer and COVID-19 from MRI images and radiographs [31,32]. Because the purpose of this study was to develop a screening tool for HV, we did not use radiography or MRI images. However, we suggest that the accuracy of identifying MS-based HV might be improved by additional preprocessing, feature extraction, and greater emphasis on the first metatarsophalangeal joint.

The main limitation of this study is that images could not be classified into all four MS grades. Collecting more images may overcome this limitation, and improved image preprocessing is needed to raise the current accuracy. In addition, the model does not account for the complexity of the deformity or associated conditions such as pes planus. Nonetheless, this study showed that machine learning models can, to some extent, identify HV from digital camera images without radiography. Such models could be incorporated into tools that allow community-dwelling adults to easily check whether they have HV without consulting a medical professional, which could help alleviate the health–economic burden of HV.

5. Conclusions

Machine learning tools may be able to detect whether a foot image shows HV. Accuracy may be improved by refining image preprocessing. After further development, a similar tool could be used to screen for hallux valgus severity.

Author Contributions

Conceptualization, M.H. and H.N.; methodology, M.H., C.W., S.E. and K.K.; investigation, M.H., M.I., R.I., M.N., T.I., J.O., F.T., T.K., K.S., A.H. and K.Y.; data curation, M.H. and S.E.; supervision, M.T. and H.N. All authors have read and agreed to the published version of the manuscript.

Funding

This research was supported by a Grant-in-Aid for Scientific Research (C) (KAKENHI 22K11386).

Institutional Review Board Statement

This study was approved by the Osaka Kawasaki Rehabilitation University Ethics Committee (reference number OKRU30-A016) and was conducted in accordance with the Declaration of Helsinki.

Informed Consent Statement

Informed consent was obtained from all subjects involved in the study. Written informed consent has been obtained from the subjects to publish this paper.

Data Availability Statement

Not applicable.

Acknowledgments

We thank the participants in Kaizuka City and the volunteer staff for their contributions to data collection. We acknowledge proofreading and editing by Benjamin Phillis.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Mann, R.A.; Coughlin, M.J. Hallux valgus—Etiology, anatomy, treatment and surgical considerations. Clin. Orthop. Relat. Res. 1981, 157, 31–41.

- Nix, S.; Smith, M.; Vicenzino, B. Prevalence of hallux valgus in the general population: A systematic review and meta-analysis. J. Foot Ankle Res. 2010, 3, 21.

- Cho, N.H.; Kim, S.; Kwon, D.J.; Kim, H.A. The prevalence of hallux valgus and its association with foot pain and function in a rural Korean community. J. Bone Jt. Surg. Br. 2009, 91, 494–498.

- Menz, H.B.; Roddy, E.; Thomas, E.; Croft, P.R. Impact of hallux valgus severity on general and foot-specific health-related quality of life. Arthritis Care Res. 2011, 63, 396–404.

- Abhishek, A.; Roddy, E.; Zhang, W.; Doherty, M. Are hallux valgus and big toe pain associated with impaired quality of life? A cross-sectional study. Osteoarthr. Cartil. 2010, 18, 923–926.

- Nix, S.E.; Vicenzino, B.T.; Smith, M.D. Foot pain and functional limitation in healthy adults with hallux valgus: A cross-sectional study. BMC Musculoskelet. Disord. 2012, 13, 197.

- Menz, H.B.; Morris, M.E.; Lord, S.R. Foot and ankle risk factors for falls in older people: A prospective study. J. Gerontol. A Biol. Sci. Med. Sci. 2006, 61, 866–870.

- Shinohara, M.; Yamaguchi, S.; Ono, Y.; Kimura, S.; Kawasaki, Y.; Sugiyama, H.; Akagi, R.; Sasho, T.; Ohtori, S. Anatomical factors associated with progression of hallux valgus. Foot Ankle Surg. 2022, 28, 240–244.

- Coughlin, M.J.; Saltzman, C.L.; Nunley, J.A., 2nd. Angular measurements in the evaluation of hallux valgus deformities: A report of the ad hoc committee of the American Orthopaedic Foot & Ankle Society on angular measurements. Foot Ankle Int. 2002, 23, 68–74.

- Menz, H.B.; Harrison, C.; Britt, H.; Whittaker, G.A.; Landorf, K.B.; Munteanu, S.E. Management of Hallux Valgus in General Practice in Australia. Arthritis Care Res. 2020, 72, 1536–1542.

- Kario, K. Home Blood Pressure Monitoring: Current Status and New Developments. Am. J. Hypertens. 2021, 34, 783–794.

- Garrow, A.P.; Papageorgiou, A.; Silman, A.J.; Thomas, E.; Jayson, M.I.; Macfarlane, G.J. The grading of hallux valgus. The Manchester Scale. J. Am. Podiatr. Med. Assoc. 2001, 91, 74–78.

- Iliou, K.; Paraskevas, G.; Kanavaros, P.; Barbouti, A.; Vrettakos, A.; Gekas, C.; Kitsoulis, P. Correlation between Manchester Grading Scale and American Orthopaedic Foot and Ankle Society Score in Patients with Hallux Valgus. Med. Princ. Pract. 2016, 25, 21–24.

- Menz, H.B.; Fotoohabadi, M.R.; Wee, E.; Spink, M.J. Validity of self-assessment of hallux valgus using the Manchester scale. BMC Musculoskelet. Disord. 2010, 11, 215.

- Chen, L.; Han, Z.; Wang, J.; Yang, C. The emerging roles of machine learning in cardiovascular diseases: A narrative review. Ann. Transl. Med. 2022, 10, 611.

- Sakai, K.; Gilmour, S.; Hoshino, E.; Nakayama, E.; Momosaki, R.; Sakata, N.; Yoneoka, D. A Machine Learning-Based Screening Test for Sarcopenic Dysphagia Using Image Recognition. Nutrients 2021, 13, 4009.

- Lopez, D.L.; Callejo Gonzalez, L.; Losa Iglesias, M.E.; Canosa, J.L.; Sanz, D.R.; Lobo, C.C.; Becerro de Bengoa Vallejo, R. Quality of Life Impact Related to Foot Health in a Sample of Older People with Hallux Valgus. Aging Dis. 2016, 7, 45–52.

- Shorten, C.; Khoshgoftaar, T.M. A survey on Image Data Augmentation for Deep Learning. J. Big Data 2019, 6, 60.

- Alshammari, A. Construction of VGG16 Convolution Neural Network (VGG16_CNN) Classifier with NestNet-Based Segmentation Paradigm for Brain Metastasis Classification. Sensors 2022, 22, 8076.

- Ding, I.J.; Zheng, N.W. CNN Deep Learning with Wavelet Image Fusion of CCD RGB-IR and Depth-Grayscale Sensor Data for Hand Gesture Intention Recognition. Sensors 2022, 22, 803.

- Khandakar, A.; Chowdhury, M.E.H.; Reaz, M.B.I.; Ali, S.H.M.; Abbas, T.O.; Alam, T.; Ayari, M.A.; Mahbub, Z.B.; Habib, R.; Rahman, T.; et al. Thermal Change Index-Based Diabetic Foot Thermogram Image Classification Using Machine Learning Techniques. Sensors 2022, 22, 1793.

- Özkaraca, O.; Bağrıaçık, O.İ.; Gürüler, H.; Khan, F.; Hussain, J.; Khan, J.; Laila, U.E. Multiple Brain Tumor Classification with Dense CNN Architecture Using Brain MRI Images. Life 2023, 13, 349.

- Qin, Y.; Wu, J.; Xiao, W.; Wang, K.; Huang, A.; Liu, B.; Yu, J.; Li, C.; Yu, F.; Ren, Z. Machine Learning Models for Data-Driven Prediction of Diabetes by Lifestyle Type. Int. J. Environ. Res. Public Health 2022, 19, 15027.

- Kong, Y.; Kong, X.; He, C.; Liu, C.; Wang, L.; Su, L.; Gao, J.; Guo, Q.; Cheng, R. Constructing an automatic diagnosis and severity-classification model for acromegaly using facial photographs by deep learning. J. Hematol. Oncol. 2020, 13, 88.

- Esteva, A.; Kuprel, B.; Novoa, R.A.; Ko, J.; Swetter, S.M.; Blau, H.M.; Thrun, S. Dermatologist-level classification of skin cancer with deep neural networks. Nature 2017, 542, 115–118.

- Duong, D.L.; Kabir, M.H.; Kuo, R.F. Automated caries detection with smartphone color photography using machine learning. Health Inform. 2021, 27, 14604582211007530.

- Lalehzarian, S.P.; Gowd, A.K.; Liu, J.N. Machine learning in orthopaedic surgery. World J. Orthop. 2021, 12, 685–699.

- Lau, L.C.M.; Chui, E.C.S.; Man, G.C.W.; Xin, Y.; Ho, K.K.W.; Mak, K.K.K.; Ong, M.T.Y.; Law, S.W.; Cheung, W.H.; Yung, P.S.H. A novel image-based machine learning model with superior accuracy and predictability for knee arthroplasty loosening detection and clinical decision making. J. Orthop. Translat. 2022, 36, 177–183.

- Xue, Y.; Zhang, R.; Deng, Y.; Chen, K.; Jiang, T. A preliminary examination of the diagnostic value of deep learning in hip osteoarthritis. PLoS ONE 2017, 12, e0178992.

- Yunus, U.; Amin, J.; Sharif, M.; Yasmin, M.; Kadry, S.; Krishnamoorthy, S. Recognition of Knee Osteoarthritis (KOA) Using YOLOv2 and Classification Based on Convolutional Neural Network. Life 2022, 12, 1126.

- Goyal, S.; Singh, R. Detection and classification of lung diseases for pneumonia and Covid-19 using machine and deep learning techniques. J. Ambient. Intell. Humaniz. Comput. 2021, 14, 3239–3259.

- Yedjou, C.G.; Tchounwou, S.S.; Aló, R.A.; Elhag, R.; Mochona, B.; Latinwo, L. Application of Machine Learning Algorithms in Breast Cancer Diagnosis and Classification. Int. J. Sci. Acad. Res. 2021, 2, 3081–3086.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).