AI-Based Pipeline for Classifying Pediatric Medulloblastoma Using Histopathological and Textural Images

Abstract

1. Introduction

2. Materials and Methods

2.1. Convolutional Neural Networks

2.2. Texture Analysis Methods

2.3. Data Collection

2.4. Proposed Pipeline

2.4.1. Image Preprocessing

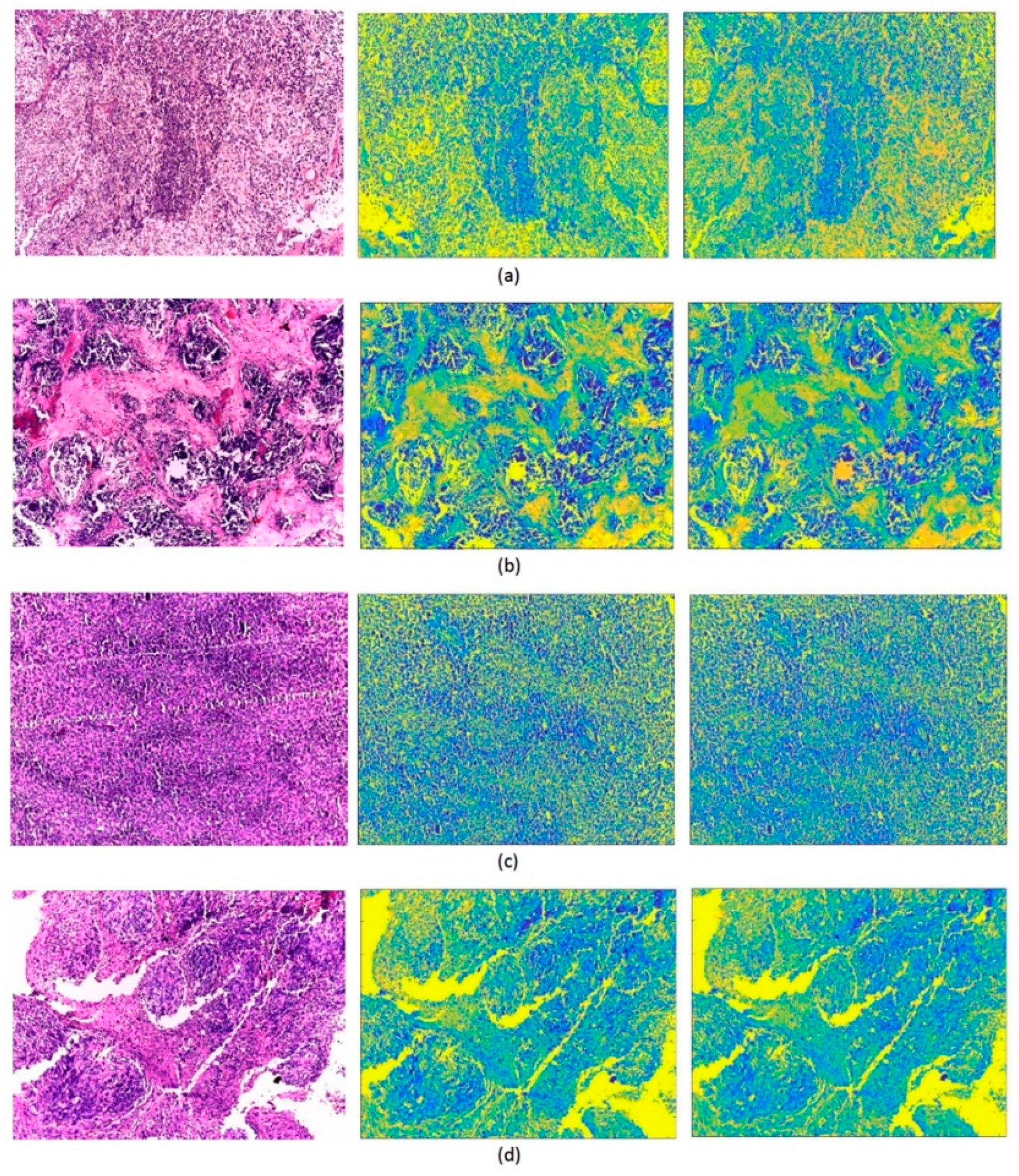

2.4.2. Textural Image Generation and CNN Training

2.5. Feature Extraction and Fusion

2.6. Classification

3. Performance Evaluation Metrics

- True Positives (TP): the number of scans for which the model correctly predicts the positive class.

- False Positives (FP): the number of scans for which the model incorrectly predicts the positive class.

- True Negatives (TN): the number of scans for which the model correctly predicts the negative class.

- False Negatives (FN): the number of scans for which the model incorrectly predicts the negative class.
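These four counts are the building blocks of the metrics reported in the results tables: sensitivity, specificity, precision, F1-score, MCC and accuracy. The pure-Python sketch below assumes the standard textbook formulas and computes these metrics from the counts. Medulloblastoma subtype classification is multiclass, so the sketch also treats each class in turn as the positive class (one-vs-rest) and macro-averages the results. That averaging scheme is an assumption, not one stated in this section. The function names and class labels are hypothetical.

```python
import math
from typing import Dict, Hashable, Sequence, Tuple


def binary_metrics(tp: int, fp: int, tn: int, fn: int) -> Dict[str, float]:
    """Standard metrics from confusion-matrix counts (0.0 where a ratio is undefined)."""
    sensitivity = tp / (tp + fn) if tp + fn else 0.0  # recall, true positive rate
    specificity = tn / (tn + fp) if tn + fp else 0.0  # true negative rate
    precision = tp / (tp + fp) if tp + fp else 0.0    # positive predictive value
    total = tp + fp + tn + fn
    accuracy = (tp + tn) / total if total else 0.0
    f1 = (2 * precision * sensitivity / (precision + sensitivity)
          if precision + sensitivity else 0.0)
    # Matthews correlation coefficient; set to 0.0 when any marginal sum is zero
    denom = math.sqrt((tp + fp) * (tp + fn) * (tn + fp) * (tn + fn))
    mcc = (tp * tn - fp * fn) / denom if denom else 0.0
    return {"sensitivity": sensitivity, "specificity": specificity,
            "precision": precision, "accuracy": accuracy, "f1": f1, "mcc": mcc}


def one_vs_rest_counts(y_true: Sequence[Hashable], y_pred: Sequence[Hashable],
                       positive: Hashable) -> Tuple[int, int, int, int]:
    """TP, FP, TN, FN when `positive` is the positive class and all others are negative."""
    tp = fp = tn = fn = 0
    for t, p in zip(y_true, y_pred):
        if p == positive:
            if t == positive:
                tp += 1
            else:
                fp += 1
        elif t == positive:
            fn += 1
        else:
            tn += 1
    return tp, fp, tn, fn


def macro_metrics(y_true: Sequence[Hashable], y_pred: Sequence[Hashable]) -> Dict[str, float]:
    """Macro-average of the one-vs-rest metrics; 'accuracy' is overall accuracy (non-empty input)."""
    classes = sorted(set(y_true) | set(y_pred), key=str)
    per_class = [binary_metrics(*one_vs_rest_counts(y_true, y_pred, c)) for c in classes]
    macro = {k: sum(m[k] for m in per_class) / len(per_class) for k in per_class[0]}
    # Overall accuracy: fraction of all predictions that are correct
    macro["accuracy"] = sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)
    return macro


if __name__ == "__main__":
    y_true = ["A", "A", "B", "C", "C", "B"]  # hypothetical subtype labels
    y_pred = ["A", "B", "B", "C", "C", "B"]
    print(binary_metrics(tp=8, fp=2, tn=9, fn=1))
    print(macro_metrics(y_true, y_pred))
```

Macro-averaging weights every class equally regardless of how many images it contains. A weighted average would instead favor the larger subtypes.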

4. Results and Discussion

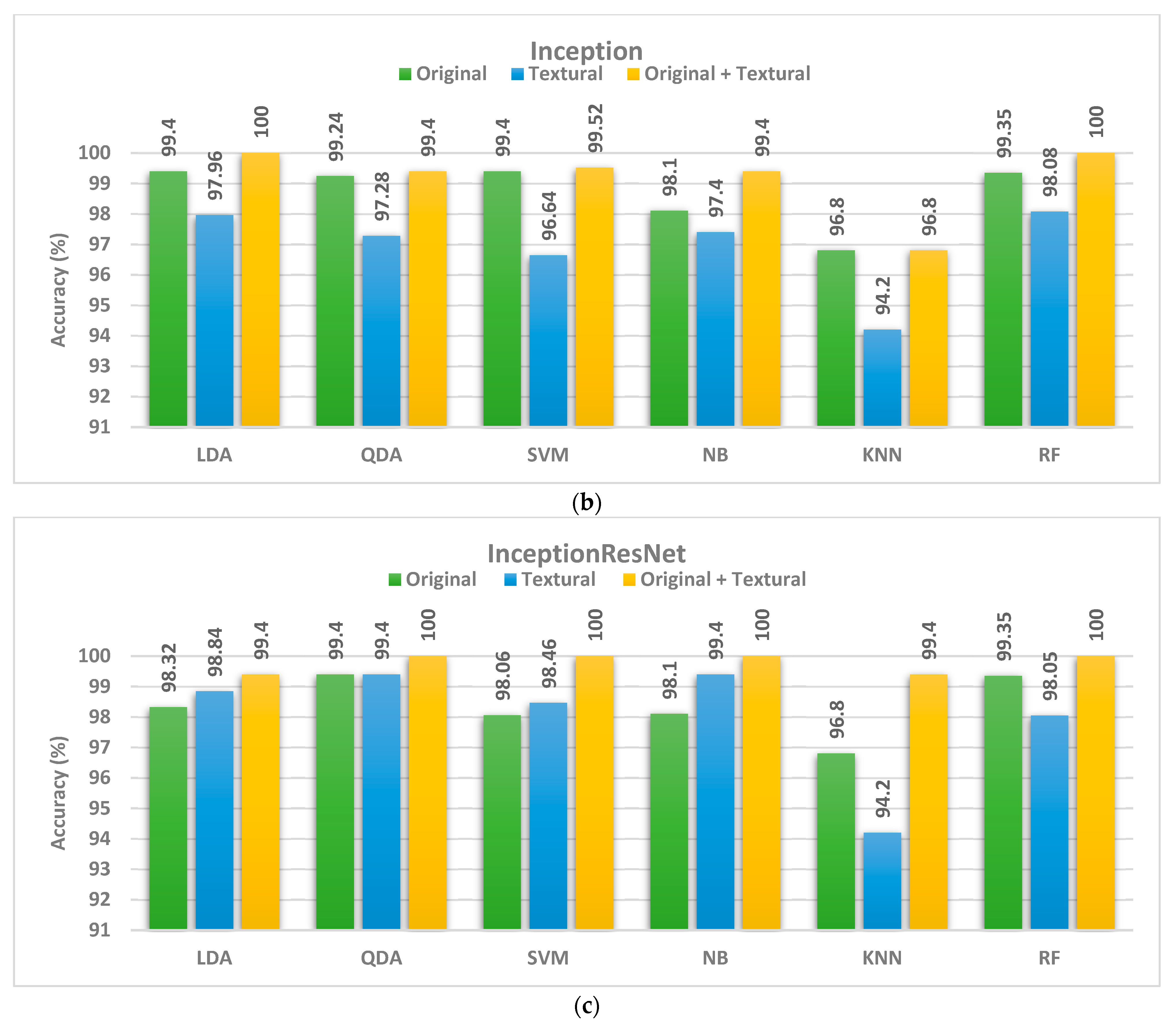

4.1. First Fusion Step

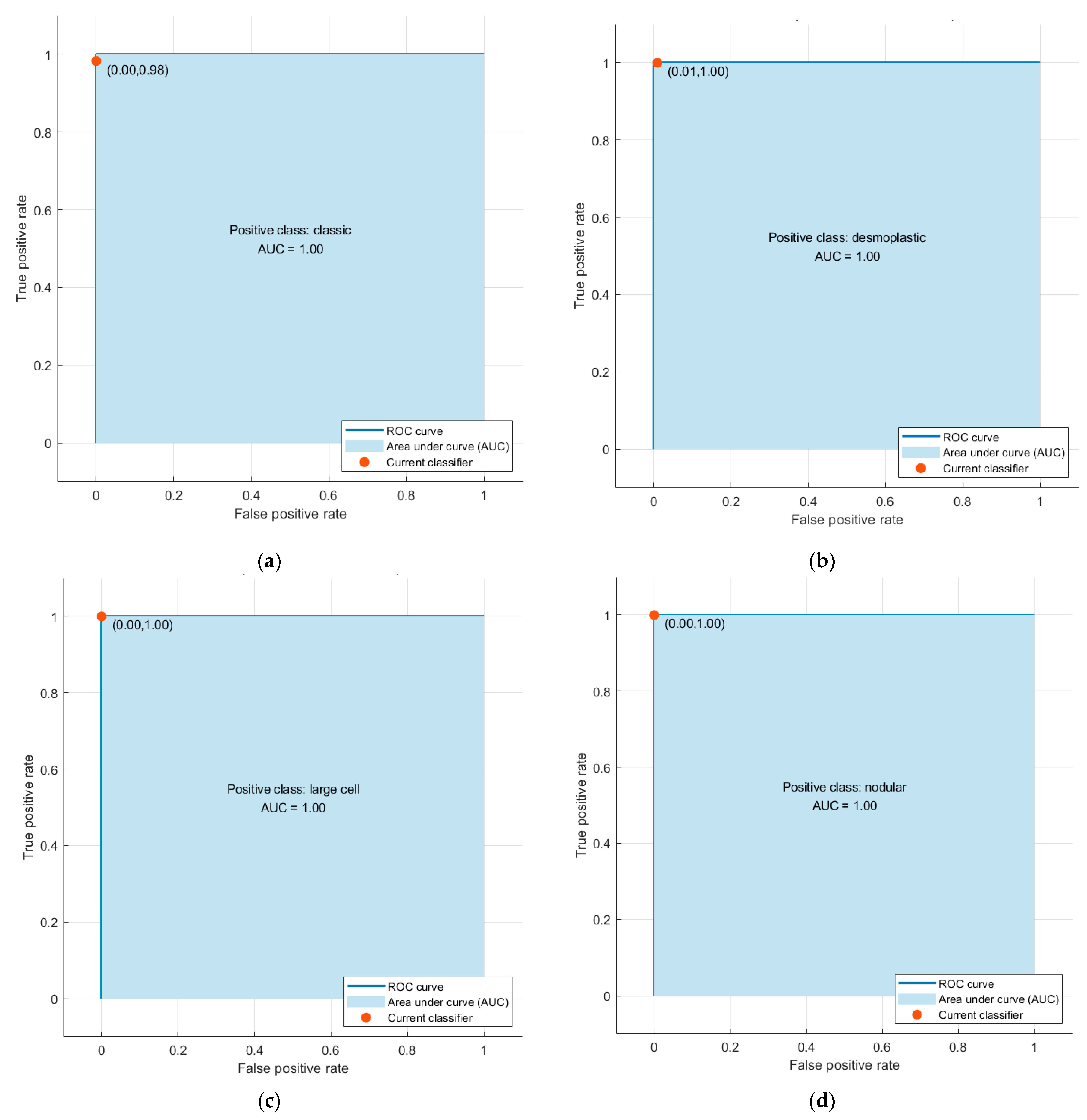

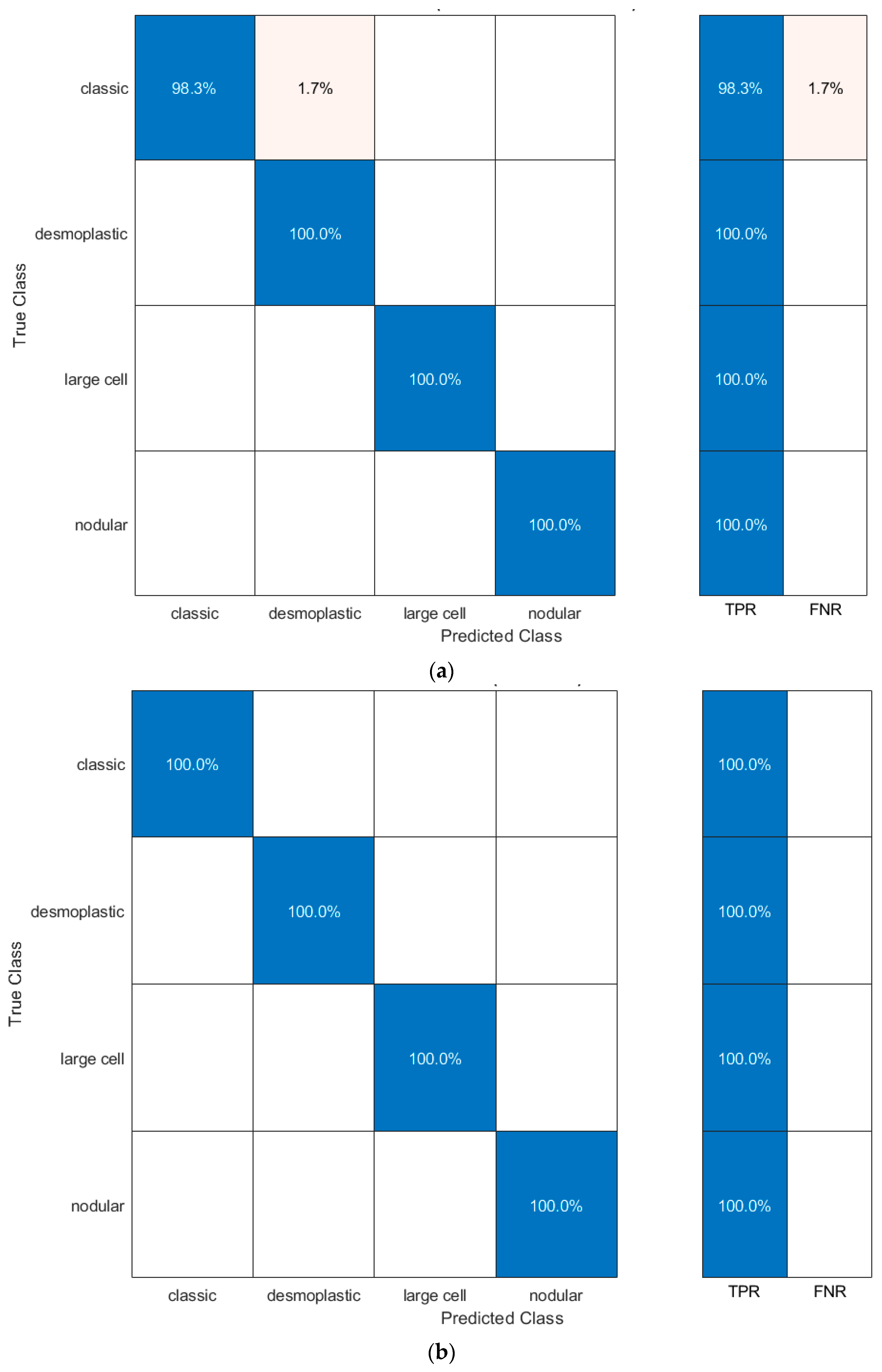

4.2. Second Fusion Step

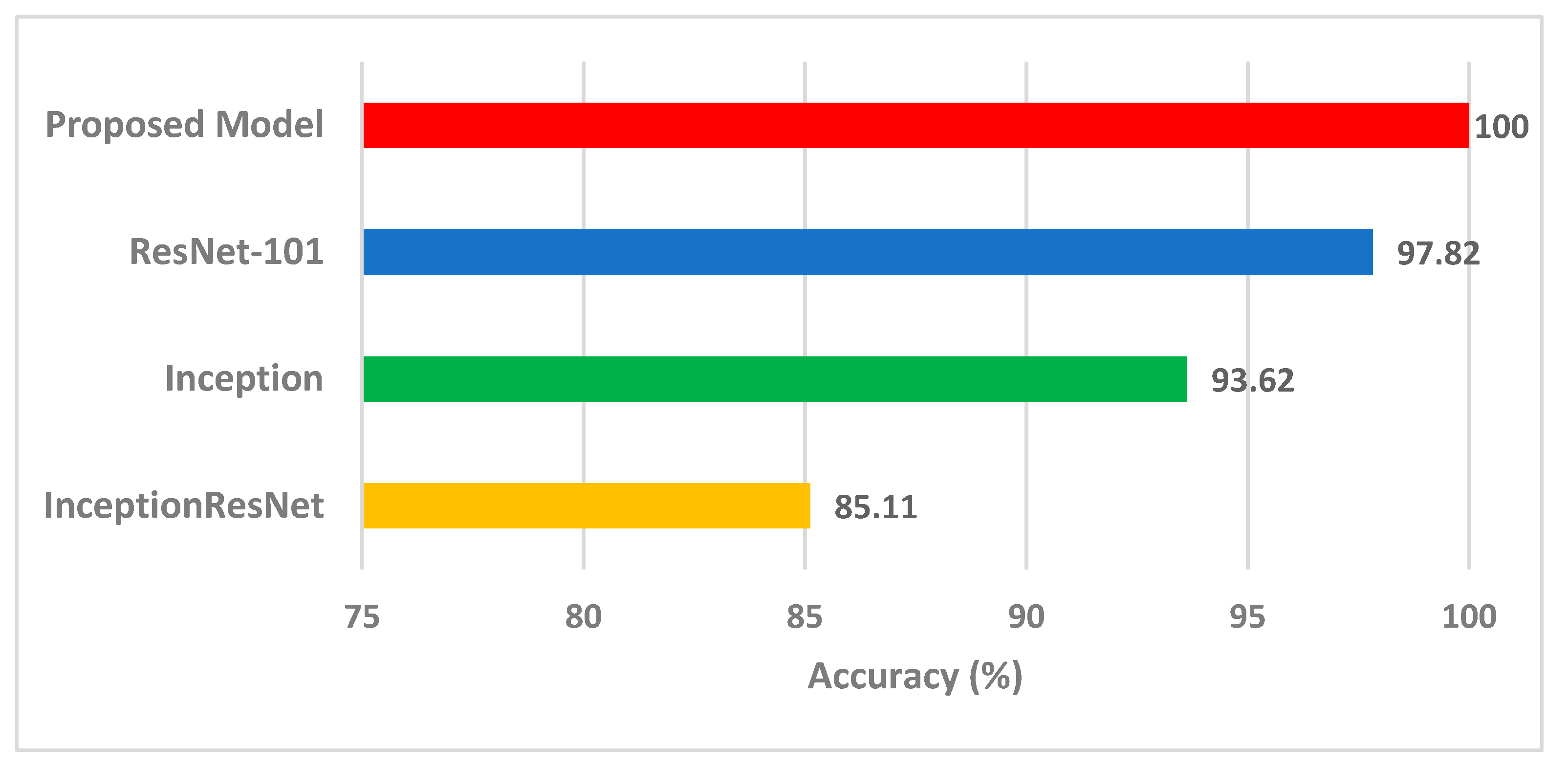

4.3. Comparison with Other Methods and Studies

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Iv, M.; Zhou, M.; Shpanskaya, K.; Perreault, S.; Wang, Z.; Tranvinh, E.; Lanzman, B.; Vajapeyam, S.; Vitanza, N.A.; Fisher, P.G.; et al. MR Imaging-Based Radiomic Signatures of Distinct Molecular Subgroups of Medulloblastoma. Am. J. Neuroradiol. 2019, 40, 154–161.

2. Ostrom, Q.T.; Cioffi, G.; Waite, K.; Kruchko, C.; Barnholtz-Sloan, J.S. CBTRUS Statistical Report: Primary Brain and Other Central Nervous System Tumors Diagnosed in the United States in 2014–2018. Neuro. Oncol. 2021, 23, iii1–iii105.

3. Hovestadt, V.; Ayrault, O.; Swartling, F.J.; Robinson, G.W.; Pfister, S.M.; Northcott, P.A. Medulloblastomics revisited: Biological and clinical insights from thousands of patients. Nat. Rev. Cancer 2020, 20, 42–56.

4. Pollack, I.F.; Agnihotri, S.; Broniscer, A. Childhood brain tumors: Current management, biological insights, and future directions. J. Neurosurg. Pediatr. 2019, 23, 261–273.

5. Curtin, S.C.; Minino, A.M.; Anderson, R.N. Declines in Cancer Death Rates Among Children and Adolescents in the United States, 1999–2014. NCHS Data Brief. 2016, 257, 1–8.

6. Ailion, A.S.; Hortman, K.; King, T.Z. Childhood Brain Tumors: A Systematic Review of the Structural Neuroimaging Literature. Neuropsychol. Rev. 2017, 27, 220–244.

7. Arseni, C.; Ciurea, A.V. Statistical survey of 276 cases of medulloblastoma (1935–1978). Acta Neurochir. 1981, 57, 159–162.

8. Polednak, A.P.; Flannery, J.T. Brain, other central nervous system, and eye cancer. Cancer 1995, 75, 330–337.

9. Taylor, M.D.; Northcott, P.A.; Korshunov, A.; Remke, M.; Cho, Y.J.; Clifford, S.C.; Eberhart, C.G.; Parsons, D.W.; Rutkowski, S.; Gajjar, A.; et al. Molecular subgroups of medulloblastoma: The current consensus. Acta Neuropathol. 2012, 123, 465–472.

10. Manias, K.A.; Gill, S.K.; MacPherson, L.; Foster, K.; Oates, A.; Peet, A.C. Magnetic resonance imaging based functional imaging in paediatric oncology. Eur. J. Cancer 2017, 72, 251–265.

11. Iqbal, S.; Khan, M.U.G.; Saba, T.; Rehman, A. Computer-assisted brain tumor type discrimination using magnetic resonance imaging features. Biomed. Eng. Lett. 2018, 8, 5–28.

12. Tahmassebi, A.; Karbaschi, G.; Meyer-Baese, U.; Meyer-Baese, A. Large-Scale Dynamical Graph Networks Applied to Brain Cancer Image Data Processing. In Proceedings of the Computational Imaging VI, Florida, FL, USA, 12–17 April 2021; p. 1173104.

13. Rehman, M.U.; Cho, S.; Kim, J.; Chong, K.T. BrainSeg-Net: Brain Tumor MR Image Segmentation via Enhanced Encoder–Decoder Network. Diagnostics 2021, 11, 169.

14. Tai, Y.-L.; Huang, S.-J.; Chen, C.-C.; Lu, H.H.-S. Computational complexity reduction of neural networks of brain tumor image segmentation by introducing fermi–dirac correction functions. Entropy 2021, 23, 223.

15. Wang, Y.; Peng, J.; Jia, Z. Brain tumor segmentation via C-dense convolutional neural network. Prog. Artif. Intell. 2021, 10, 147–156.

16. Rehman, M.U.; Cho, S.; Kim, J.H.; Chong, K.T. BU-Net: Brain Tumor Segmentation Using Modified U-Net Architecture. Electronics 2020, 9, 2203.

17. Fan, Y.; Feng, M.; Wang, R. Application of Radiomics in Central Nervous System Diseases: A Systematic literature review. Clin. Neurol. Neurosurg. 2019, 187, 105565.

18. Attallah, O.; Gadelkarim, H.; Sharkas, M.A. Detecting and Classifying Fetal Brain Abnormalities Using Machine Learning Techniques. In Proceedings of the 2018 17th IEEE International Conference on Machine Learning and Applications (ICMLA), Orlando, FL, USA, 17–20 December 2018; pp. 1371–1376.

19. Grist, J.T.; Withey, S.; MacPherson, L.; Oates, A.; Powell, S.; Novak, J.; Abernethy, L.; Pizer, B.; Grundy, R.; Bailey, S. Distinguishing between paediatric brain tumour types using multi-parametric magnetic resonance imaging and machine learning: A multi-site study. NeuroImage: Clin. 2020, 25, 102172.

20. Fetit, A.E.; Novak, J.; Rodriguez, D.; Auer, D.P.; Clark, C.A.; Grundy, R.G.; Peet, A.C.; Arvanitis, T.N. Radiomics in paediatric neuro-oncology: A multicentre study on MRI texture analysis. NMR Biomed. 2018, 31, e3781.

21. Zarinabad, N.; Abernethy, L.J.; Avula, S.; Davies, N.P.; Rodriguez Gutierrez, D.; Jaspan, T.; MacPherson, L.; Mitra, D.; Rose, H.E.; Wilson, M. Application of pattern recognition techniques for classification of pediatric brain tumors by in vivo 3T 1H-MR spectroscopy—A multi-center study. Magn. Reson. Med. 2018, 79, 2359–2366.

22. Louis, D.N.; Perry, A.; Reifenberger, G.; von Deimling, A.; Figarella-Branger, D.; Cavenee, W.K.; Ohgaki, H.; Wiestler, O.D.; Kleihues, P.; Ellison, D.W. The 2016 World Health Organization Classification of Tumors of the Central Nervous System: A summary. Acta Neuropathol. 2016, 131, 803–820.

23. Kourou, K.; Exarchos, T.P.; Exarchos, K.P.; Karamouzis, M.V.; Fotiadis, D.I. Machine learning applications in cancer prognosis and prediction. Comput. Struct. Biotechnol. J. 2015, 13, 8–17.

24. Ragab, D.A.; Sharkas, M.; Attallah, O. Breast cancer diagnosis using an efficient CAD system based on multiple classifiers. Diagnostics 2019, 9, 165.

25. Nadeem, M.W.; Ghamdi, M.A.A.; Hussain, M.; Khan, M.A.; Khan, K.M.; Almotiri, S.H.; Butt, S.A. Brain Tumor Analysis Empowered with Deep Learning: A Review, Taxonomy, and Future Challenges. Brain Sci. 2020, 10, 118.

26. Attallah, O.; Karthikesalingam, A.; Holt, P.J.; Thompson, M.M.; Sayers, R.; Bown, M.J.; Choke, E.C.; Ma, X. Using multiple classifiers for predicting the risk of endovascular aortic aneurysm repair re-intervention through hybrid feature selection. Proc. Inst. Mech. Eng. Part H J. Eng. Med. 2017, 231, 1048–1063.

27. Kleppe, A.; Skrede, O.J.; De Raedt, S.; Liestol, K.; Kerr, D.J.; Danielsen, H.E. Designing deep learning studies in cancer diagnostics. Nat. Rev. Cancer 2021, 21, 199–211.

28. Attallah, O. An effective mental stress state detection and evaluation system using minimum number of frontal brain electrodes. Diagnostics 2020, 10, 292.

29. Dasgupta, A.; Gupta, T. MRI-based prediction of molecular subgrouping in medulloblastoma: Images speak louder than words. Oncotarget 2019, 10, 4805–4807.

30. Das, D.; Mahanta, L.B. A Comparative Assessment of Different Approaches of Segmentation and Classification Methods on Childhood Medulloblastoma Images. J. Med. Biol. Eng. 2021, 41, 379–392.

31. Lai, Y.; Viswanath, S.; Baccon, J.; Ellison, D.; Judkins, A.R.; Madabhushi, A. A Texture-based Classifier to Discriminate Anaplastic from Non-Anaplastic Medulloblastoma. In Proceedings of the 2011 IEEE 37th Annual Northeast Bioengineering Conference (NEBEC), Troy, NY, USA, 1–3 April 2011.

32. Galaro, J.; Judkins, A.R.; Ellison, D.; Baccon, J.; Madabhushi, A. An integrated texton and bag of words classifier for identifying anaplastic medulloblastomas. In Proceedings of the 2011 Annual International Conference of the IEEE Engineering in Medicine and Biology Society, Boston, MA, USA, 30 August–3 September 2011.

33. Cruz-Roa, A.; Arevalo, J.; Basavanhally, A.; Madabhushi, A.; Gonzalez, F. A comparative evaluation of supervised and unsupervised representation learning approaches for anaplastic medulloblastoma differentiation. Proc. SPIE 2015, 9287.

34. Cruz-Roa, A.; Gonzalez, F.; Galaro, J.; Judkins, A.R.; Ellison, D.; Baccon, J.; Madabhushi, A.; Romero, E. A visual latent semantic approach for automatic analysis and interpretation of anaplastic medulloblastoma virtual slides. Med. Image Comput. Comput. Assist. Interv. 2012, 15, 157–164.

35. Otalora, S.; Cruz-Roa, A.; Arevalo, J.; Atzori, M.; Madabhushi, A.; Judkins, A.R.; Gonzalez, F.; Muller, H.; Depeursinge, A. Combining Unsupervised Feature Learning and Riesz Wavelets for Histopathology Image Representation: Application to Identifying Anaplastic Medulloblastoma. Lect. Notes Comput. Sc. 2015, 9349, 581–588.

36. Humeau-Heurtier, A. Texture Feature Extraction Methods: A Survey. IEEE Access 2019, 7, 8975–9000.

37. Zhang, J.; Liang, J.M.; Zhao, H. Local Energy Pattern for Texture Classification Using Self-Adaptive Quantization Thresholds. IEEE T Image Process. 2013, 22, 31–42.

38. Hira, Z.M.; Gillies, D.F. A Review of Feature Selection and Feature Extraction Methods Applied on Microarray Data. Adv. Bioinform. 2015, 2015, 198363.

39. Babu, J.; Rangu, S.; Manogna, P. A survey on different feature extraction and classification techniques used in image steganalysis. J. Inf. Secur. 2017, 8.

40. Das, D.; Mahanta, L.B.; Ahmed, S.; Baishya, B.K.; Haque, I. Study on Contribution of Biological Interpretable and Computer-Aided Features Towards the Classification of Childhood Medulloblastoma Cells. J. Med. Syst. 2018, 42, 151.

41. Afifi, W.A. Image Retrieval Based on Content Using Color Feature. Int. Sch. Res. Not. 2012, 2012, 248285.

42. Park, S.; Yu, S.; Kim, J.; Kim, S.; Lee, S. 3D hand tracking using Kalman filter in depth space. EURASIP J. Adv. Signal Process. 2012, 2012, 46.

43. Das, D.; Mahanta, L.B.; Ahmed, S.; Baishya, B.K. Classification of childhood medulloblastoma into WHO-defined multiple subtypes based on textural analysis. J. Microsc-Oxford 2020, 279, 26–38.

44. Das, L.B.M.; Baishya, B.K.; Ahmed, S. Classification of childhood medulloblastoma and its subtypes using transfer learning features- a comparative study of deep convolutional neural networks. In Proceedings of the International Conference on Computer, Electrical & Communication Engineering (ICCECE), Kolkata, India, 17–18 January 2020.

45. Attallah, O. MB-AI-His: Histopathological Diagnosis of Pediatric Medulloblastoma and its Subtypes via AI. Diagnostics 2021, 11, 359.

46. Sarvamangala, D.; Kulkarni, R.V. Convolutional neural networks in medical image understanding: A survey. Evol. Intell. 2021, 1–22, Online ahead of print.

47. Anwar, S.M.; Majid, M.; Qayyum, A.; Awais, M.; Alnowami, M.; Khan, M.K. Medical image analysis using convolutional neural networks: A review. J. Med. Syst. 2018, 42, 226.

48. Lu, L.; Wang, X.; Carneiro, G.; Yang, L. Deep Learning and Convolutional Neural Networks for Medical Imaging and Clinical Informatics; Springer: Berlin/Heidelberg, Germany, 2019.

49. Attallah, O. ECG-BiCoNet: An ECG-based pipeline for COVID-19 diagnosis using Bi-Layers of deep features integration. Comput. Biol. Med. 2022, 142, 105210.

50. He, K.; Zhang, X.; Ren, S.; Sun, J. Deep Residual Learning for Image Recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

51. Szegedy, C.; Vanhoucke, V.; Ioffe, S.; Shlens, J.; Wojna, Z. Rethinking the Inception Architecture for Computer Vision. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 2818–2826.

52. Szegedy, C.; Liu, W.; Jia, Y.; Sermanet, P.; Reed, S.; Anguelov, D.; Erhan, D.; Vanhoucke, V.; Rabinovich, A. Going Deeper with Convolutions. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 1–9.

53. Szegedy, C.; Ioffe, S.; Vanhoucke, V.; Alemi, A.A. Inception-v4, Inception-ResNet and the Impact of Residual Connections on Learning. In Proceedings of the Thirty-first AAAI Conference on Artificial Intelligence, San Francisco, CA, USA, 4–9 February 2017.

54. Castellano, G.; Bonilha, L.; Li, L.; Cendes, F. Texture analysis of medical images. Clin. Radiol. 2004, 59, 1061–1069.

55. Garg, M.; Dhiman, G. A novel content-based image retrieval approach for classification using GLCM features and texture fused LBP variants. Neural Comput. Appl. 2021, 33, 1311–1328.

56. Jabber, B.; Rajesh, K.; Haritha, D.; Basha, C.Z.; Parveen, S.N. An Intelligent System for Classification of Brain Tumours With GLCM and Back Propagation Neural Network. In Proceedings of the 2020 4th International Conference on Electronics, Communication and Aerospace Technology (ICECA), Tamil Nadu, India, 5–7 November 2020; pp. 21–25.

57. Gupta, Y.; Lama, R.K.; Lee, S.-W.; Kwon, G.-R. An MRI brain disease classification system using PDFB-CT and GLCM with kernel-SVM for medical decision support. Multimed. Tools Appl. 2020, 79, 32195–32224.

58. Durgamahanthi, V.; Christaline, J.A.; Edward, A.S. GLCM and GLRLM based texture analysis: Application to brain cancer diagnosis using histopathology images. In Intelligent Computing and Applications; Springer: Berlin/Heidelberg, Germany, 2021; pp. 691–706.

59. Attallah, O.; Sharkas, M.A.; Gadelkarim, H. Fetal brain abnormality classification from MRI images of different gestational age. Brain Sci. 2019, 9, 231.

60. Ragab, D.A.; Attallah, O. FUSI-CAD: Coronavirus (COVID-19) diagnosis based on the fusion of CNNs and handcrafted features. PeerJ Comput. Sci. 2020, 6, e306.

61. Hasan, A.M.; Meziane, F. Automated screening of MRI brain scanning using grey level statistics. Comput. Electr. Eng. 2016, 53, 276–291.

62. Rehman, M.U.; Akhtar, S.; Zakwan, M.; Mahmood, M.H. Novel architecture with selected feature vector for effective classification of mitotic and non-mitotic cells in breast cancer histology images. Biomed. Signal Process. Control 2022, 71, 103212.

63. Trivizakis, E.; Ioannidis, G.S.; Souglakos, I.; Karantanas, A.H.; Tzardi, M.; Marias, K. A neural pathomics framework for classifying colorectal cancer histopathology images based on wavelet multi-scale texture analysis. Sci. Rep. 2021, 11, 613–620.

64. Mishra, S.; Majhi, B.; Sa, P.K. GLRLM-based feature extraction for acute lymphoblastic leukemia (ALL) detection. In Recent Findings in Intelligent Computing Techniques; Springer: Berlin/Heidelberg, Germany, 2018; pp. 399–407.

65. Das, D.; Mahanta, L.B.; Ahmed, S.; Baishya, B.K.; Haque, I. Automated classification of childhood brain tumours based on texture feature. Songklanakarin J. Sci. Technol. 2019, 41, 1014–1020.

66. Shorten, C.; Khoshgoftaar, T.M. A survey on Image Data Augmentation for Deep Learning. J. Big Data 2019, 6, 60.

67. Attallah, O. DIAROP: Automated Deep Learning-Based Diagnostic Tool for Retinopathy of Prematurity. Diagnostics 2021, 11, 2034.

68. Attallah, O.; Sharkas, M.A.; Gadelkarim, H. Deep Learning Techniques for Automatic Detection of Embryonic Neurodevelopmental Disorders. Diagnostics 2020, 10, 27.

69. Raghu, M.; Zhang, C.Y.; Kleinberg, J.; Bengio, S. Transfusion: Understanding Transfer Learning for Medical Imaging. arXiv 2019, arXiv:1902.07208.

70. Zemouri, R.; Zerhouni, N.; Racoceanu, D. Deep learning in the biomedical applications: Recent and future status. Appl. Sci. 2019, 9, 1526.

71. Cortes, C.; Vapnik, V. Support-Vector Networks. Mach. Learn. 1995, 20, 273–297.

72. Tharwat, A. Linear vs. quadratic discriminant analysis classifier: A tutorial. Int. J. Appl. Pattern Recognit. 2016, 3, 145–180.

73. Das, D.; Mahanta, L.B.; Ahmed, S.; Baishya, B.K. A study on MANOVA as effective feature reduction technique in classification of childhood medulloblastoma and its subtypes. Netw. Model. Anal. Health Inform. Bioinform. 2020, 9, 141–151.

74. Attallah, O. CoMB-Deep: Composite Deep Learning-Based Pipeline for Classifying Childhood Medulloblastoma and Its Classes. Front. Neuroinform. 2021, 15, 663592.

| Classifier | Original Images | GLCM | GLRM | GLCM + GLRM |
|---|---|---|---|---|
| ResNet-101 | | | | |
| LDA | 98.70 | 94.68 | 95.98 | 98.34 |
| QDA | 99.12 | 94.18 | 97.16 | 98.70 |
| SVM | 98.46 | 92.88 | 96.26 | 97.04 |
| NB | 99.40 | 96.80 | 97.40 | 98.70 |
| KNN | 98.70 | 95.50 | 96.10 | 98.10 |
| RF | 98.05 | 94.80 | 96.10 | 96.70 |
| Inception | | | | |
| LDA | 99.40 | 92.60 | 97.82 | 97.96 |
| QDA | 99.24 | 92.86 | 96.64 | 97.28 |
| SVM | 99.40 | 93.28 | 96.38 | 96.64 |
| NB | 98.10 | 92.22 | 96.88 | 97.40 |
| KNN | 96.80 | 89.60 | 93.50 | 94.20 |
| RF | 99.35 | 88.96 | 95.45 | 98.05 |
| InceptionResNet | | | | |
| LDA | 98.32 | 96.38 | 98.58 | 98.84 |
| QDA | 99.40 | 95.48 | 96.78 | 99.40 |
| SVM | 98.06 | 95.74 | 97.44 | 98.46 |
| NB | 98.10 | 96.10 | 98.70 | 99.40 |
| KNN | 96.10 | 96.10 | 97.40 | 97.40 |
| RF | 96.10 | 94.80 | 98.70 | 98.70 |

| Classifier | Sensitivity | Specificity | Precision | F1-Score | MCC |
|---|---|---|---|---|---|
| ResNet-101 | 0.9958 | 0.9977 | 0.9942 | 0.9974 | 0.9925 |
| Inception | 1.0000 | 1.0000 | 1.0000 | 1.0000 | 1.0000 |
| InceptionResNet | 0.9958 | 0.9981 | 0.9895 | 0.9918 | 0.9904 |

| Model Description | Precision (%) | Accuracy (%) | Sensitivity (%) | Specificity (%) | Number of Features | Reference |
|---|---|---|---|---|---|---|
| Proposed Model | 100 | 100 | 100 | 100 | 12 | This study |
| Shape + Color features; PCA; SVM | - | 84.9 | - | - | 19 | [40] |
| HOG, GLCM, Tamura, LBP features; GRLN; MANOVA; SVM | 66.6 | 65.2 | 72.0 | - | 34 | [73] |
| AlexNet | - | 79.3 | - | - | - | [44] |
| VGG-16 | - | 65.4 | - | - | - | [44] |
| AlexNet; SVM | - | 93.2 | - | - | 4096 | [44] |
| VGG-16; SVM | - | 93.4 | - | - | 4096 | [44] |
| GLCM, Tamura, LBP, GRLN features; SVM | 91.3 | 91.3 | 91.3 | 97 | 83 | [43] |
| GLCM, Tamura, LBP, GRLN features; PCA; SVM | - | 96.7 | - | - | 20 | [43] |
| MobileNet; DenseNet; ResNet merging using PCA; LDA classifier | 99.6 | 99.4 | 99.5 | 99.6 | 95 | [45] |
| Deep features from DenseNet-201, ShuffleNet; Relief-F; Bi-LSTM | 98.1 | 98.1 | 98.1 | 99.3 | 448 | [74] |
| Deep features from DenseNet-201, Inception, ResNet-50, Darknet-53, MobileNet, ShuffleNet, SqueezeNet, NasNetMobile; Relief-F; Bi-LSTM | 99.4 | 99.4 | 99.8 | 99.4 | 739 | [74] |
| FractalNet; GLCM, Tamura, LBP, GRLN; SVM | - | 91.3 | - | - | - | [30] |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Attallah, O.; Zaghlool, S. AI-Based Pipeline for Classifying Pediatric Medulloblastoma Using Histopathological and Textural Images. Life 2022, 12, 232. https://doi.org/10.3390/life12020232

Attallah O, Zaghlool S. AI-Based Pipeline for Classifying Pediatric Medulloblastoma Using Histopathological and Textural Images. Life. 2022; 12(2):232. https://doi.org/10.3390/life12020232

Chicago/Turabian Style: Attallah, Omneya, and Shaza Zaghlool. 2022. "AI-Based Pipeline for Classifying Pediatric Medulloblastoma Using Histopathological and Textural Images" Life 12, no. 2: 232. https://doi.org/10.3390/life12020232

APA Style: Attallah, O., & Zaghlool, S. (2022). AI-Based Pipeline for Classifying Pediatric Medulloblastoma Using Histopathological and Textural Images. Life, 12(2), 232. https://doi.org/10.3390/life12020232