Minimum Mapping from EMG Signals at Human Elbow and Shoulder Movements into Two DoF Upper-Limb Robot with Machine Learning

Abstract

1. Introduction

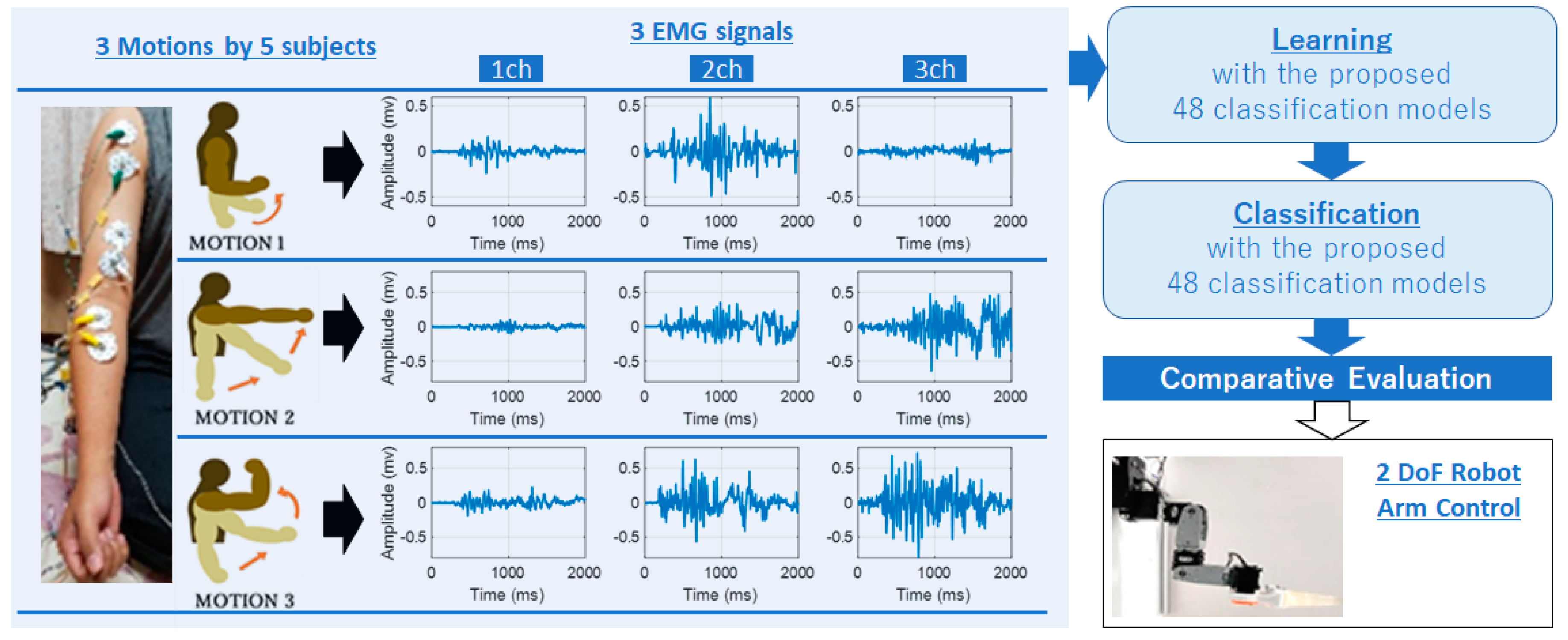

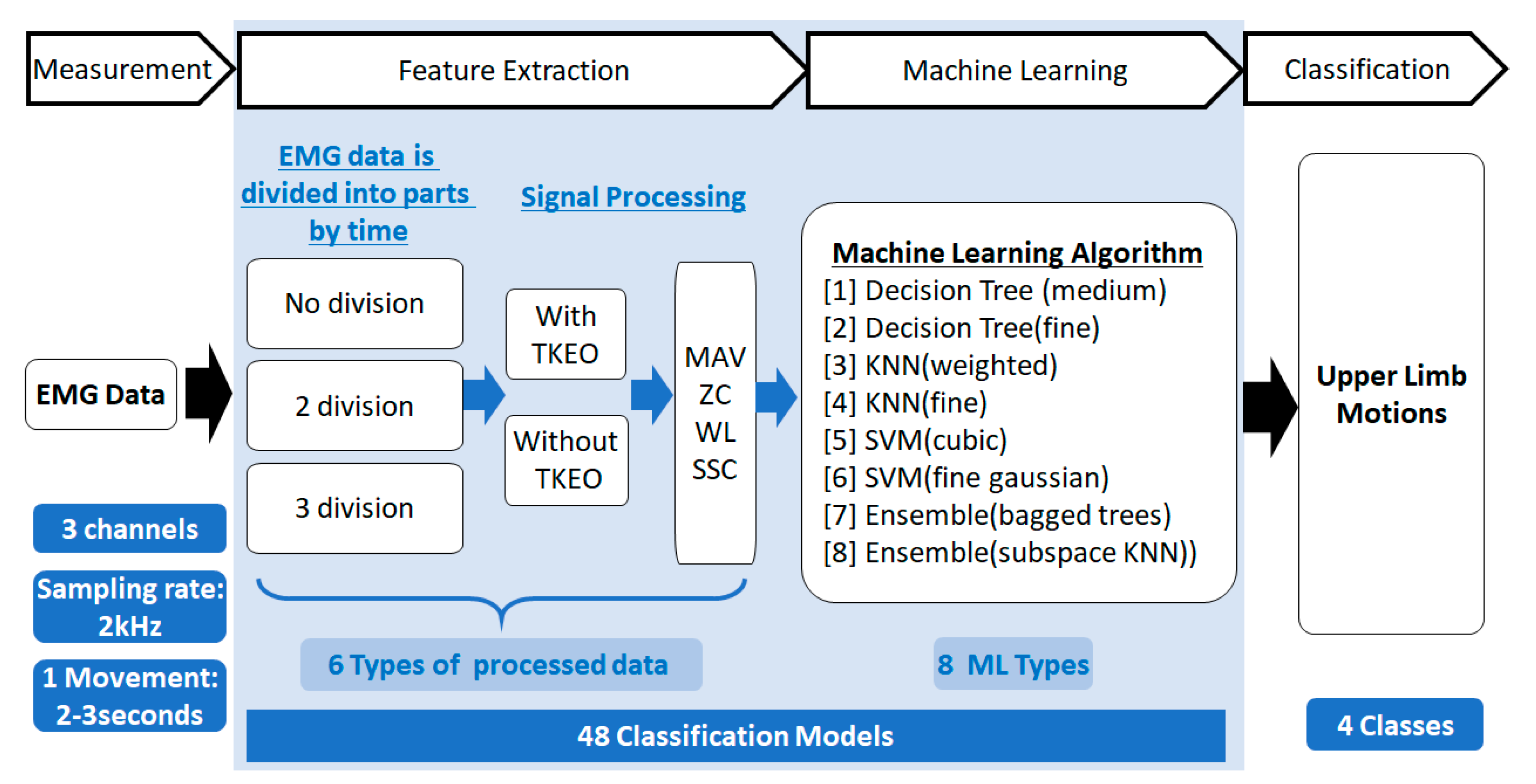

2. Materials and Methodology

2.1. Feature Extraction Stage

2.2. Machine Learning (ML) Stage

2.3. Performance Analysis

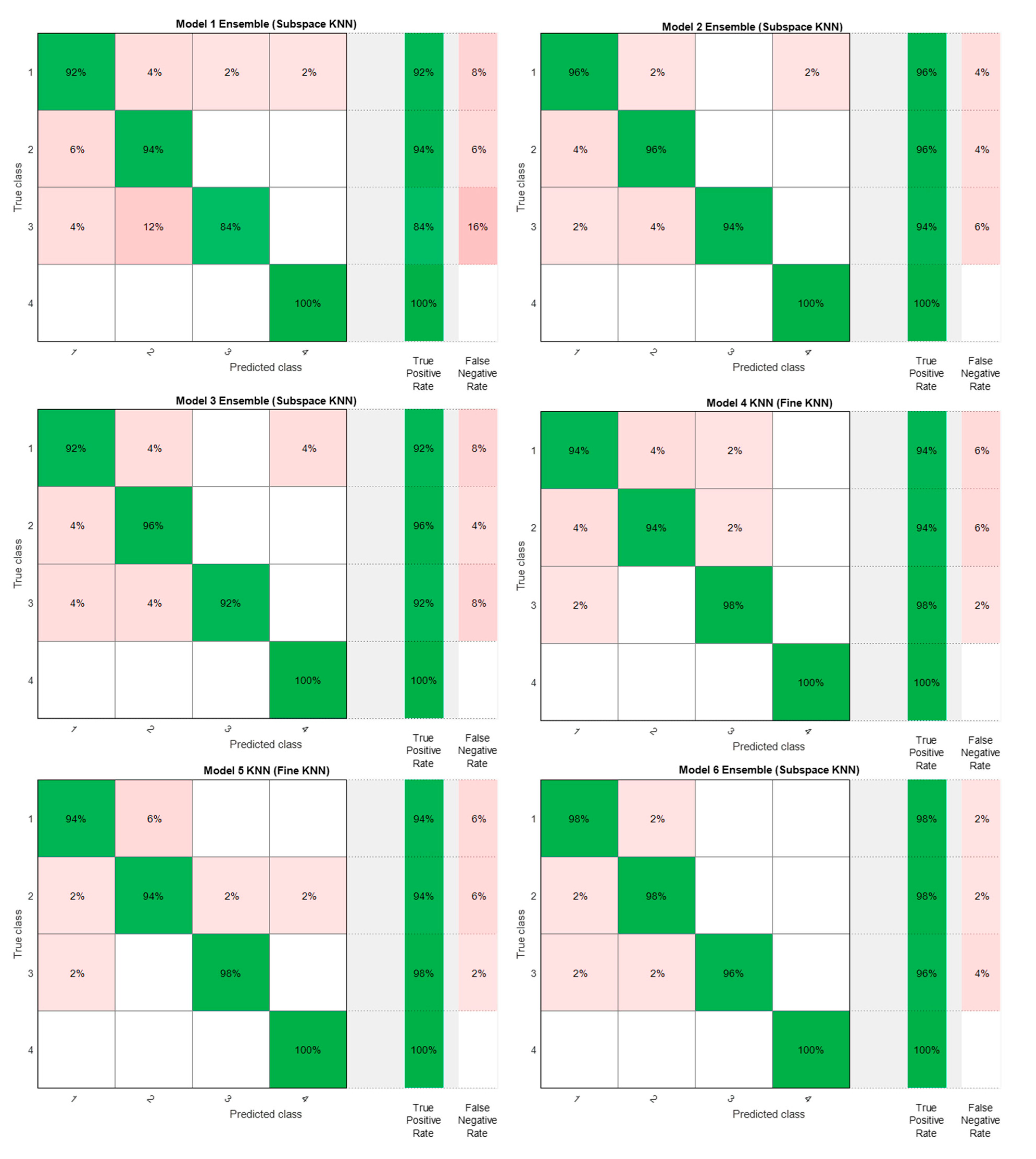

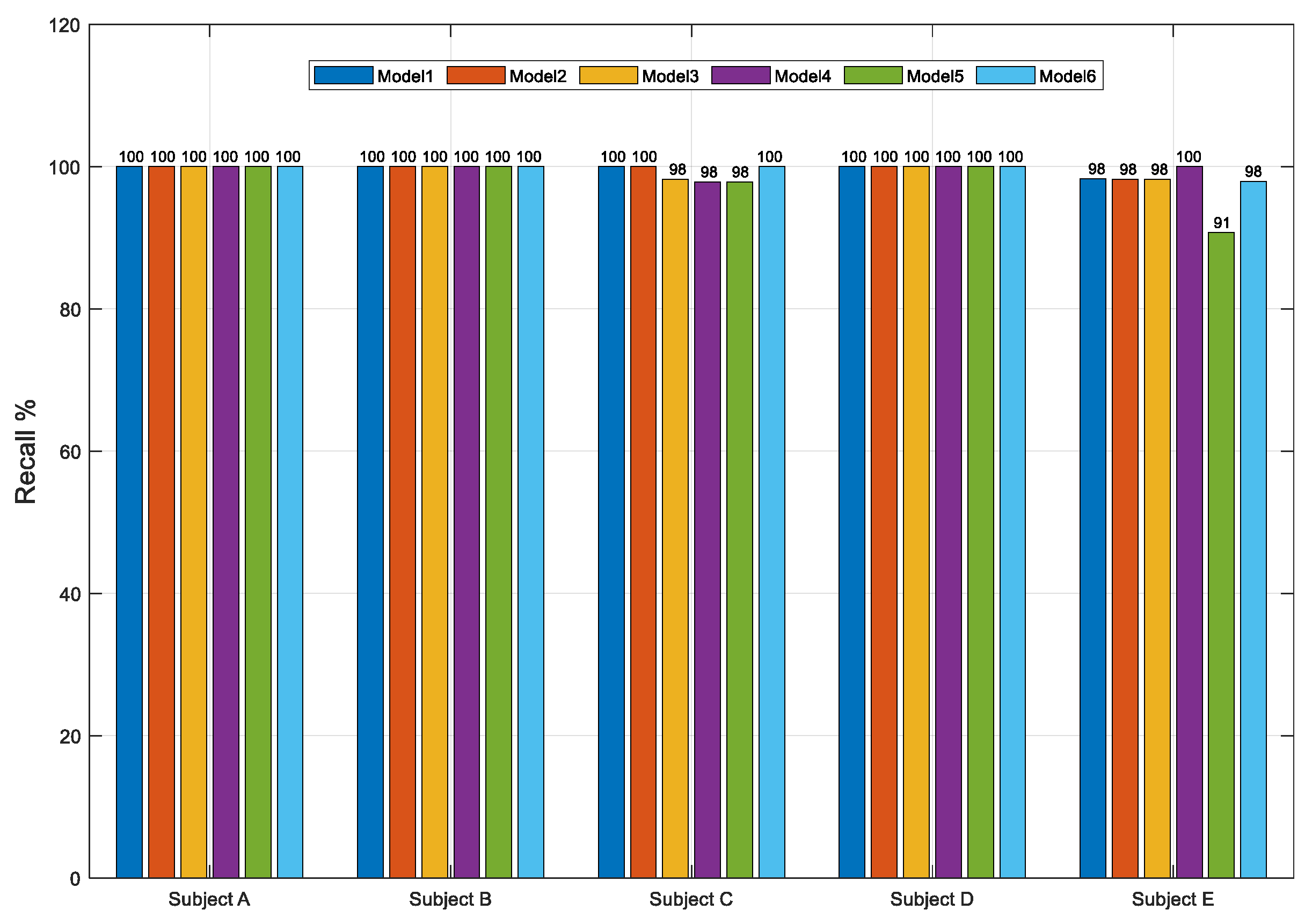

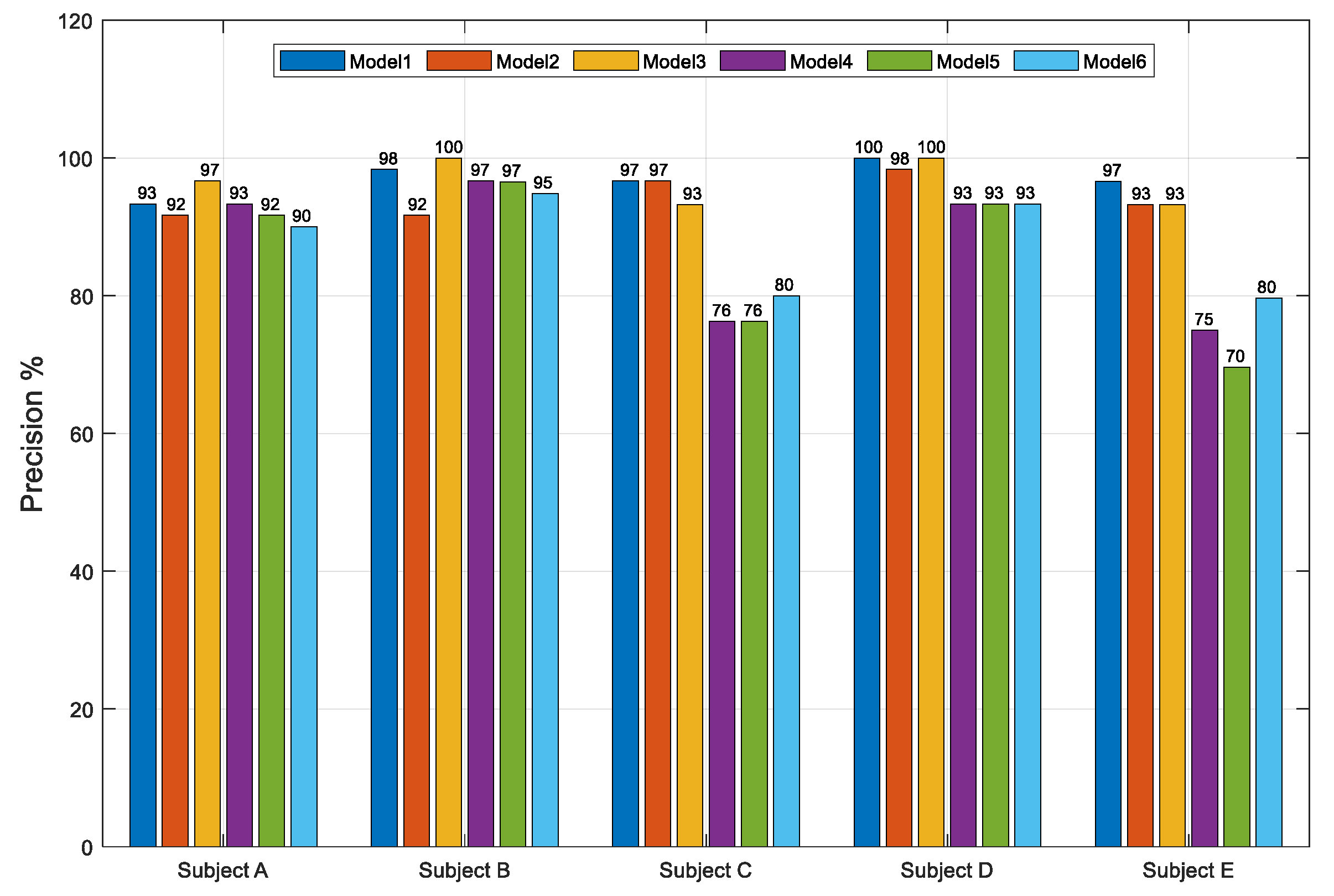

3. Results and Discussion

4. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

| Model | Decision Tree (Medium) | Decision Tree (Fine) | KNN (Weighted) | KNN (Fine) | SVM (Cubic) | SVM (Fine Gaussian) | Ensemble (Bagged Trees) | Ensemble (Subspace KNN) |
|---|---|---|---|---|---|---|---|---|
| 1 | 80.5% | 80.5% | 89% | 89% | 89% | 82% | 84.5% | 92.5% |
| 2 | 74.5% | 74.5% | 92% | 95.5% | 92% | 75.5% | 89.5% | 96% |
| 3 | 77% | 77% | 93.5% | 94% | 93% | 74% | 86% | 95% |
| 4 | 90% | 90% | 94.5% | 96.5% | 95% | 95.5% | 91.5% | 96% |
| 5 | 82% | 82% | 93% | 96.5% | 93.5% | 92% | 90% | 96% |
| 6 | 85% | 85% | 93.5% | 97.5% | 95.5% | 94% | 93.5% | 98% |

| Model | Accuracy | Recall | Precision |
|---|---|---|---|
| 1 | 96.67% | 99.66% | 96.99% |
| 2 | 94% | 99.64% | 94.31% |
| 3 | 96% | 99.29% | 96.62% |
| 4 | 86.67% | 99.57% | 86.92% |
| 5 | 83.67% | 97.7% | 85.49% |
| 6 | 86.33% | 96.97% | 89.31% |
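
For readers who want to reproduce figures of the kind reported in the accuracy, recall and precision table, the following is a minimal sketch of the standard definitions of the three metrics. It is not the authors' evaluation code: the `Counts` class, the function names and the example counts are hypothetical, and the source does not state how per-class values were aggregated across motion classes.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Counts:
    """Confusion counts for one class (hypothetical container)."""
    tp: int  # true positives
    fp: int  # false positives
    fn: int  # false negatives
    tn: int  # true negatives


def accuracy(c: Counts) -> float:
    """Fraction of all predictions that are correct."""
    total = c.tp + c.fp + c.fn + c.tn
    return (c.tp + c.tn) / total if total else 0.0


def recall(c: Counts) -> float:
    """Fraction of actual positives that were detected."""
    positives = c.tp + c.fn
    return c.tp / positives if positives else 0.0


def precision(c: Counts) -> float:
    """Fraction of predicted positives that are correct."""
    predicted = c.tp + c.fp
    return c.tp / predicted if predicted else 0.0


# Made-up counts for illustration only; they are not taken from the article.
example = Counts(tp=90, fp=10, fn=5, tn=95)

if __name__ == "__main__":
    print(f"accuracy  = {accuracy(example):.2%}")
    print(f"recall    = {recall(example):.2%}")
    print(f"precision = {precision(example):.2%}")
```

Under these definitions a model can show high recall together with lower precision, which is the pattern seen for every model in the table: few true motions are missed, while a larger share of the predicted motions are false alarms.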

| Model | Average Time (s) | SD (s) |
|---|---|---|
| 1 | 0.0314 | 0.0019 |
| 2 | 0.0345 | 0.0022 |
| 3 | 0.0365 | 0.0033 |
| 4 | 0.0027 | 0.0005 |
| 5 | 0.0031 | 0.0005 |
| 6 | 0.0020 | 0.0020 |
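
An average time and standard deviation such as those in the timing table could be obtained by timing each prediction call individually. The sketch below shows one way to do this under stated assumptions: `time_predictions` and `dummy_predict` are hypothetical names, the dummy predictor merely stands in for a trained classifier, and the source does not say whether the sample or the population standard deviation was reported (the sample form is used here).

```python
import statistics
import time
from typing import Callable, Sequence, Tuple


def time_predictions(
    predict: Callable[[Sequence[float]], object],
    samples: Sequence[Sequence[float]],
) -> Tuple[float, float]:
    """Return (mean, sample SD) of per-call prediction time in seconds.

    Requires at least two samples, because the sample standard
    deviation is undefined for a single measurement.
    """
    if len(samples) < 2:
        raise ValueError("need at least two samples to compute an SD")
    durations = []
    for sample in samples:
        start = time.perf_counter()  # high-resolution monotonic clock
        predict(sample)
        durations.append(time.perf_counter() - start)
    return statistics.mean(durations), statistics.stdev(durations)


def dummy_predict(features: Sequence[float]) -> int:
    """Placeholder classifier (assumption): thresholds the feature sum."""
    return 1 if sum(features) > 1.0 else 0


if __name__ == "__main__":
    # Synthetic three-value feature vectors, for illustration only.
    feature_vectors = [[0.1 * i, 0.2, 0.3] for i in range(100)]
    mean_s, sd_s = time_predictions(dummy_predict, feature_vectors)
    print(f"average time = {mean_s:.6f} s, SD = {sd_s:.6f} s")
```

Timing each call separately, rather than timing a whole batch and dividing, is what makes a per-prediction standard deviation available.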

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Laksono, P.W.; Kitamura, T.; Muguro, J.; Matsushita, K.; Sasaki, M.; Amri bin Suhaimi, M.S. Minimum Mapping from EMG Signals at Human Elbow and Shoulder Movements into Two DoF Upper-Limb Robot with Machine Learning. Machines 2021, 9, 56. https://doi.org/10.3390/machines9030056
