Abstract

Localization of mobile robots is crucial for deploying robots in real-world applications such as search and rescue missions. This work aims to develop an accurate localization system applicable to swarm robots equipped only with low-cost monocular vision sensors and visual markers. The system is designed to operate in fully open spaces, without landmarks or support from positioning infrastructures. To achieve this, we propose a localization method based on equilateral triangular formations. By leveraging the geometric properties of equilateral triangles, the accurate two-dimensional position of each participating robot is estimated using one-dimensional lateral distance information between robots, which can be reliably and accurately obtained with a low-cost monocular vision sensor. Experimental and simulation results demonstrate that, as travel time increases, the positioning error of the proposed method becomes significantly smaller than that of a conventional dead-reckoning system, another low-cost localization approach applicable to open environments.

1. Introduction

Localization is a fundamental research area for enabling the autonomy of mobile robots [1]. Even with a high-performance controller, a robot cannot reach its intended destination if its position estimate is inaccurate [2]. Accurate and reliable localization systems are therefore essential for autonomous robotic operation.

Various positioning systems that use infrastructure and onboard sensors have been employed to determine the location of mobile robots. Infrastructure-based systems—such as the Global Positioning System (GPS) [3,4,5,6,7,8,9,10] and indoor positioning systems [11,12,13,14,15,16,17,18]—estimate position based on time-of-flight (TOF) or received signal strength (RSS) measurements of radio signals, along with the known locations of infrastructure components such as satellites or beacons. These systems typically offer high accuracy but become ineffective when the infrastructure is unavailable—for example, during disasters, radio frequency interference, or emergency construction. In particular, GPS is susceptible to ionospheric anomalies [19,20,21,22,23] and radio frequency interference [24,25,26,27,28,29,30,31].

Positioning systems based on onboard sensors, such as dead-reckoning systems that utilize a wheel encoder and an inertial measurement unit [32,33,34,35], have also been employed by mobile robots. In these systems, robots acquire their position information independently of external positioning infrastructures. However, such systems suffer from low positioning accuracy due to accumulated and diverging errors over time. Map-based positioning algorithms have also been investigated to improve localization accuracy using onboard sensors [36,37,38]. Given a predefined obstacle map of the environment, a mobile robot estimates its position by matching detected obstacles to the map. Onboard obstacle detection sensors, such as laser range finders and TOF cameras, are typically used for this purpose. However, the positioning accuracy of map-based systems significantly degrades in large open spaces without obstacles (e.g., lobbies or long straight hallways). Moreover, these methods cannot be applied in unknown environments lacking an existing map.

To overcome the dependency on an existing map, simultaneous localization and mapping (SLAM) algorithms have been developed, in which map building and localization processes are performed simultaneously [39,40,41,42,43]. With SLAM, localization is possible even in unknown environments without positioning infrastructures or a preexisting map; consequently, SLAM has become one of the most prominent research topics in addressing the localization problem. However, to acquire accurate obstacle information necessary for map building, SLAM typically relies on high-performance distance-measuring sensors, such as high-cost light detection and ranging (lidar) devices [44,45,46,47,48,49]. Moreover, the environment must contain sufficiently distinctive features or patterns to construct a high-quality map. To compensate for accumulated localization errors in SLAM, a mobile robot’s trajectory should include loops that introduce additional travel distance; scan matching through loop closure can then improve position accuracy [50,51,52]. In environments such as straight passages without loop closures, however, the robot may produce a curved map, resulting in significant inaccuracy—even in a long, straight corridor.

Cooperative positioning systems, which are applicable to swarm robots, have been proposed to reduce positioning errors in uncharted environments [53,54]. However, these systems typically assume that the robots are equipped with high-cost ranging sensors capable of providing accurate inter-robot distance measurements. In other words, if the robots lack such high-precision distance-measuring sensors, the cooperative positioning methods in [53,54] cannot achieve high localization accuracy.

In this work, our objective is to achieve high localization accuracy for swarm robots operating in wide open spaces without landmarks. The robots are equipped only with low-cost monocular vision sensors and visual markers. It is assumed that there is no infrastructure support, no existing map, and no distance-measuring sensors available. Furthermore, sophisticated vision processing is avoided to minimize computational cost.

To achieve this goal, we propose a cooperative localization system based on equilateral triangular formations. A minimum of four robots cooperate in the system, although it can be easily extended to accommodate more robots. In the proposed approach, three robots act as beacons and form an equilateral triangle. The fourth robot, referred to as the moving robot, approaches the target vertex of the next equilateral triangle—closer to the destination—with the assistance of the three beacon robots. The key challenge in implementing this system is guiding the moving robot to the target vertex with high accuracy using only low-cost monocular vision sensors and the visual markers on the beacon robots. We address this challenge by relying solely on the lateral distance information between the beacon robots, which can be reliably obtained with high accuracy even using low-cost monocular vision sensors. The geometric properties of equilateral triangles are also leveraged in the implementation.

Assuming that the initial positions of the robots are known (an assumption commonly made in previous studies on dead-reckoning and Kalman-filter-based SLAM, e.g., [55,56,57,58]), the triangular formation advances repeatedly toward the destination, and the robots estimate their positions in succession. A simple path planning algorithm is also developed to avoid collisions. The detailed implementation of the system, along with its performance evaluated through experiments and simulations, is discussed in this paper.

The contributions of this study are summarized as follows:

- Unlike infrastructure-based localization systems, which are inoperable in environments lacking positioning infrastructures, the proposed triangular formation-based approach operates independently of any preconstructed infrastructure.

- Unlike map-based localization systems that require existing obstacle maps, high-cost distance-measuring sensors, and significant computational resources, the proposed algorithm does not rely on obstacle maps or map matching and incurs low computational cost. It remains operational even in open spaces without any landmarks.

- Unlike dead-reckoning systems, where positioning errors accumulate continuously over time, in the proposed method, errors accumulate only when a new triangular formation is generated. As a result, the positioning error of the proposed system becomes significantly smaller than that of a dead-reckoning system as travel time increases.

The remainder of this paper is organized as follows: the movement pattern of the robots maintaining the equilateral triangular formation is first introduced in Section 2. Next, the collision avoidance algorithm based on the proposed formation is described in Section 3, followed by experimental results from partial movement steps using four mobile robots in Section 4. To demonstrate the performance of the proposed algorithm in wide open spaces, simulation results based on realistic error models derived from the experiments are presented in Section 5. Finally, the conclusions of this study are provided in Section 6.

2. Proposed Equilateral Triangular Formation for Swarm Robot Localization

Although we conceived the initial idea of triangular formation-based robot localization early on [54], implementing such a system without relying on distance-measuring sensors proved challenging. If accurate distance-measuring sensors, such as laser range finders, are available, a moving robot can easily localize itself using distance information from two beacon robots at known locations. This constitutes a straightforward trilateration problem. However, our goal is to develop a highly accurate localization system based solely on low-cost monocular vision sensors and visual markers, in order to reduce system cost and ensure applicability to low-cost swarm robots. Estimating distances to beacon robots through vision processing is not well-suited for our purpose, as it incurs high computational cost and offers relatively low accuracy compared to distance- measuring sensors.

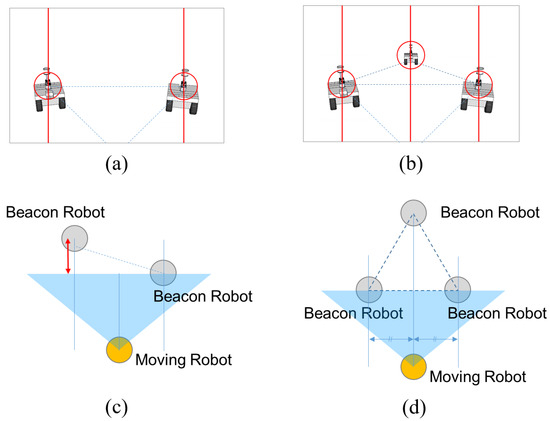

To maintain the position of each robot at the vertices of a triangle with high accuracy while marching toward the destination, we propose an equilateral triangular formation consisting of four robots. This approach substantially simplifies the process of accurately positioning each robot at its designated vertex. The moving robot determines its target vertex by checking the lateral distances to the three beacon robots, as shown in Figure 1d, and depth information is not required in our approach (i.e., a monocular camera is sufficient).

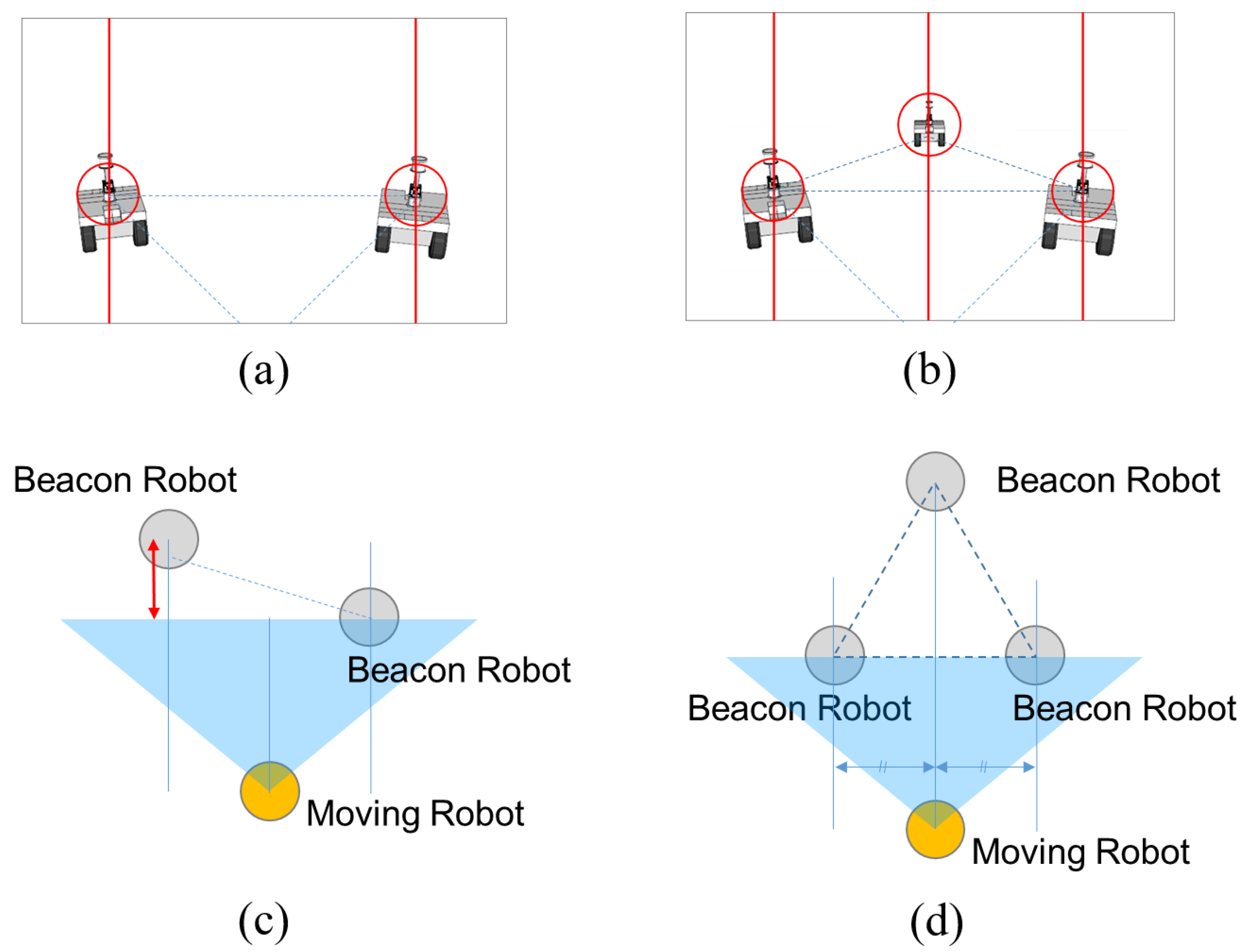

Figure 1.

Triangular formation for localization with three robots vs. four robots. (a) View from the moving robot’s perspective corresponding to the three-robot formation shown in (c). (b) View from the moving robot’s perspective corresponding to the four-robot formation shown in (d). (c) Arbitrary triangular formation based on three robots. (d) Equilateral triangular formation based on four robots.

Accurate depth information is more difficult to obtain than accurate lateral distance information when using only a low-cost monocular vision sensor and visual markers. In contrast, lateral distance information can be acquired with high accuracy through simple pixel counting in the vision image. Moreover, the use of an equilateral triangular formation facilitates straightforward generalization of the method to systems with more than four robots (details are discussed in Section 3).

Theoretically, an equilateral triangle can be formed using three robots. In this case, however, the depth error indicated by the red arrow in Figure 1c must be mitigated through vision processing. The lateral distance information obtained via simple pixel counting cannot compensate for this error. If depth information is not available, the moving robot may mistakenly assume that it is positioned at the right vertex of the equilateral triangle based on its vision image in Figure 1a. Even when vision processing is applied to extract depth information, the resulting accuracy remains low when using only a monocular vision camera and visual markers, and the localization performance of the moving robot cannot be reliably maintained.

Therefore, we propose a four-robot system, as illustrated in Figure 1d. The middle robot in the moving robot’s field of view (FOV) (Figure 1b) acts as an anchor, allowing the moving robot to reach the correct vertex location by checking only the lateral distances between the beacon robots. Since depth information is not required in this approach, the localization accuracy of the moving robot can be higher than that of the three-robot system. Given that our application targets swarm robots, which typically consist of more than four units, the use of one additional robot to improve localization accuracy is not a limitation of the proposed approach.

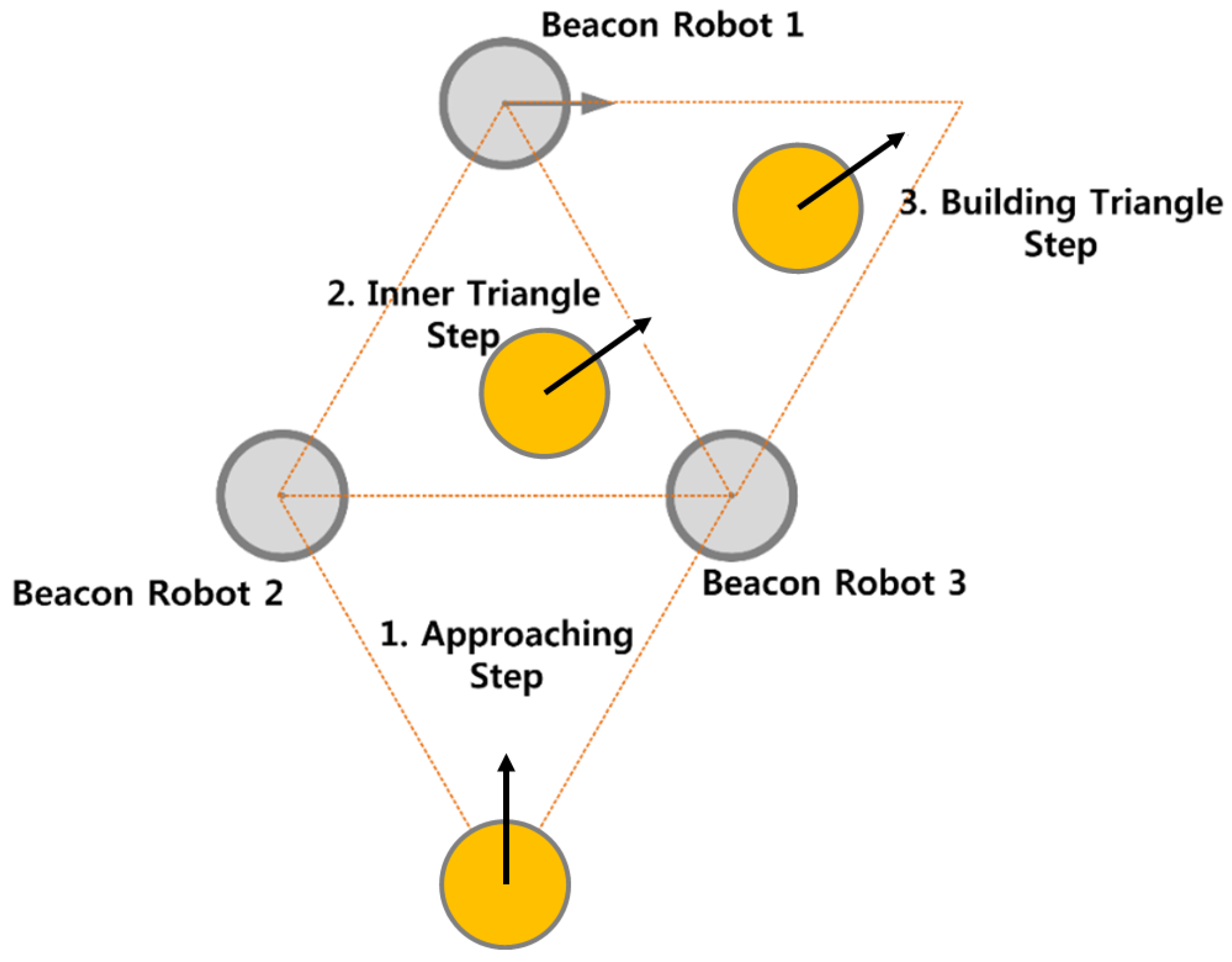

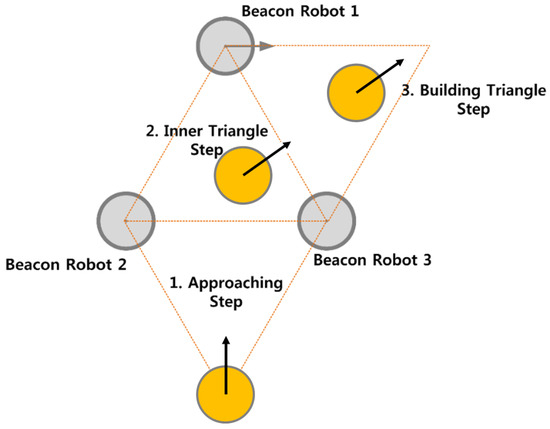

Figure 2 illustrates the maneuver of the moving robot relative to three beacon robots, which remain temporarily stationary at the vertices of the equilateral triangle until the moving robot reaches the target vertex to form the next triangle. The procedure for the moving robot to reach the target vertex consists of three steps: an approaching step, an inner triangle step, and a building triangle step.

Figure 2.

Movement of a robot to the target vertex to form the next equilateral triangular formation. The movement consists of three steps: the approaching step, the inner triangle step, and the building triangle step.

In the approaching step, the moving robot advances toward the triangular formation of the beacon robots using its front-view monocular camera. In the inner triangle step, the moving robot maneuvers toward the target vertex. In the building triangle step, the moving robot locates and moves to the correct vertex position to form a new equilateral triangle using its rear-view monocular camera. As shown in Figure 1b, the moving robot operates independently of odometry or ranging sensors when determining the correct vertex position. Once the moving robot reaches the target vertex, its role changes to that of a beacon robot, one of the previous beacon robots becomes the new moving robot, and the formation continues marching toward the destination.

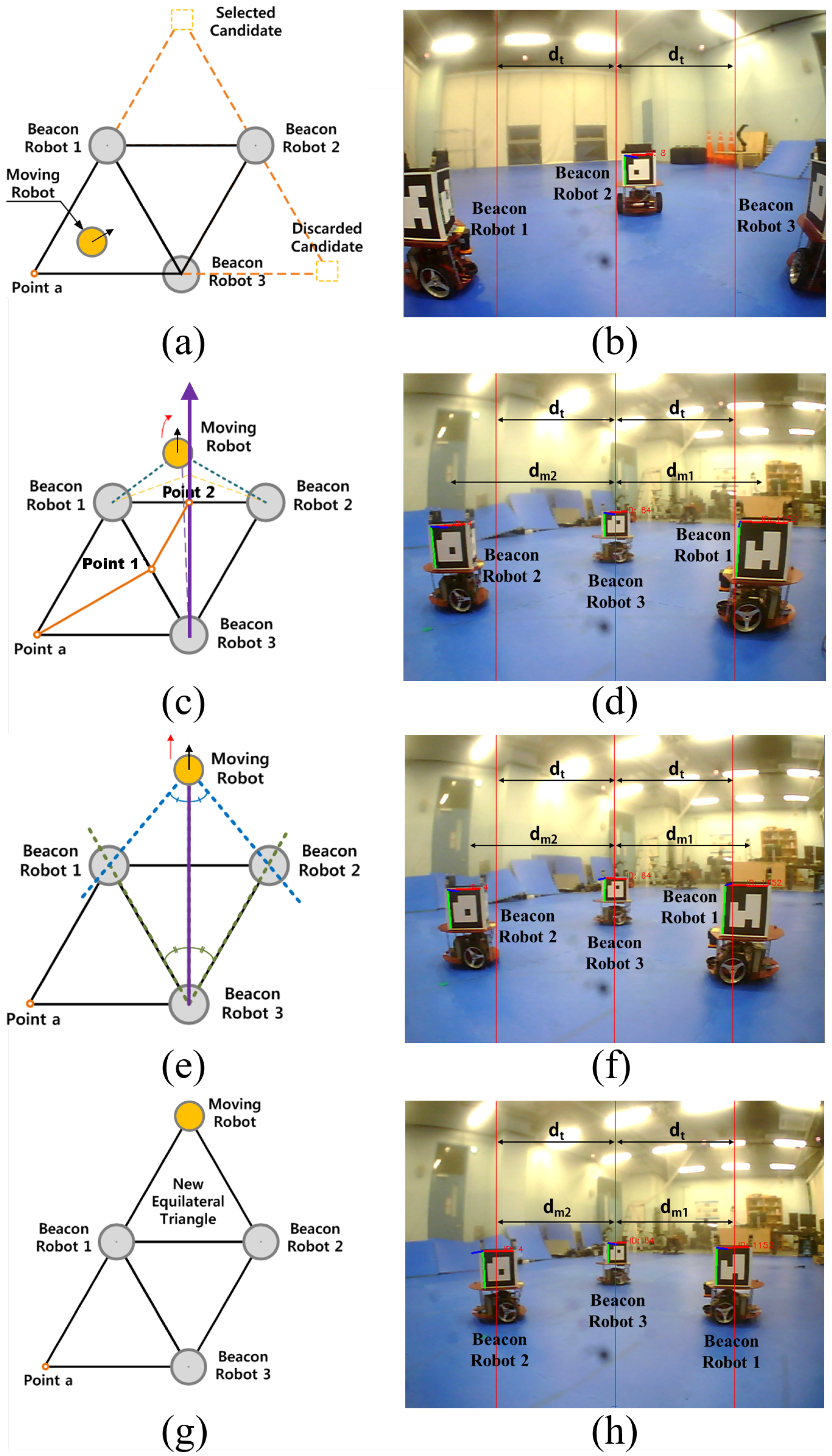

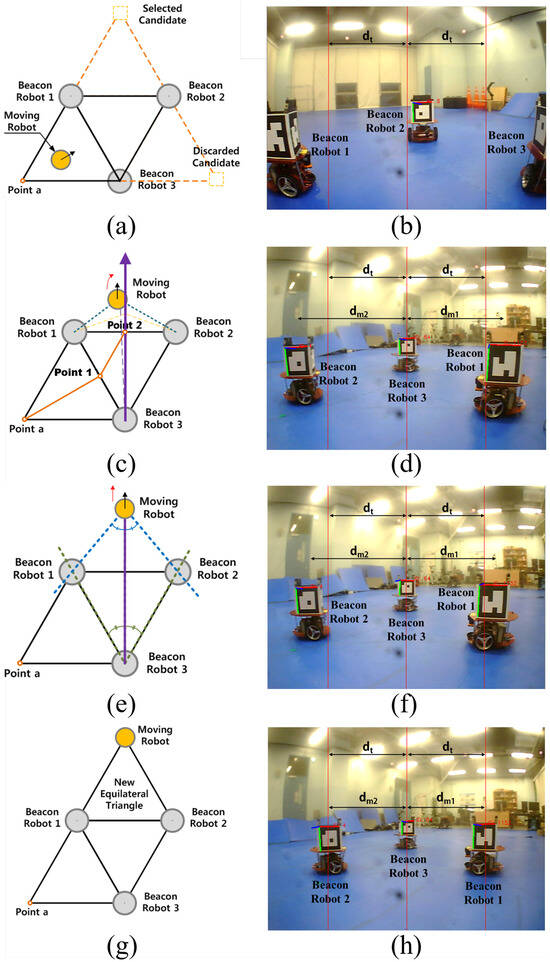

The detailed procedure for the moving robot to reach the target vertex in the building triangle step is presented in Algorithm 1 and Figure 3. After the moving robot passes between beacon robots 1 and 3, as shown in Figure 3a, it continues moving between beacon robots 1 and 2, as shown in Figure 3c. Up to this point, the robot uses its front-view camera. It then follows the steps in Algorithm 1 using its rear-view camera. In Figure 3b,d,f,h, the center red vertical line indicates the robot’s center of sight. The left and right red vertical lines are equidistant from the center line, with a spacing of dt. Here, dt represents the desired lateral pixel distance between the two side markers in the robot’s field of view. The value of dt is selected based on the constraints of the field-of-view angle of the monocular camera used in the experiment. In this study, it was set to 280 pixels. This parameter can be adjusted depending on the camera specifications and the desired scale of the swarm robot localization system. This desired spacing is ultimately determined by the side length of the equilateral triangle.

Figure 3.

Algorithm used to create the next equilateral triangle in the desired moving direction. (a) The moving robot selects the target vertex and begins its maneuver. (b) Front-view image during the approaching step. (c) The orange line indicates the path of the moving robot. The purple arrow represents the extended height of the triangle. After passing point 2, the moving robot is controlled to maintain equal lateral distances to beacon robots 1 and 2 with respect to beacon robot 3. (d) The moving robot uses its rear-view camera to estimate the lateral distances between the beacon robots and is controlled to make dm1 and dm2 equal. (e) The moving robot moves forward until the lateral distances between beacon robot 3 and the two nearby beacon robots (i.e., distances dm1 and dm2) match the pre-established value (i.e., dt). (f) The lateral distances to the two nearby beacon robots (i.e., dm1 and dm2) are equal, but they do not yet match the desired value dt, which is determined by the size of the equilateral triangle. (g) The moving robot settles at the target vertex to complete the new equilateral triangular formation. (h) Rear-view image after the moving robot has settled at the vertex. At this point, dm1, dm2, and dt are all equal.

Note that the depths of the robots in the image are not estimated through vision processing due to their relatively low estimation accuracy. Instead, only lateral distances in the image are utilized in Algorithm 1, which provides significantly higher accuracy. Our method places the moving robot at the desired two-dimensional location based solely on one-dimensional lateral distance information, which can be extracted with high accuracy even using a low-cost monocular vision sensor and minimal computational power.

| Algorithm 1 The detailed procedure for the moving robot to reach the target vertex in the building triangle step |

|

3. Path Planning Algorithm for the Proposed Localization Method

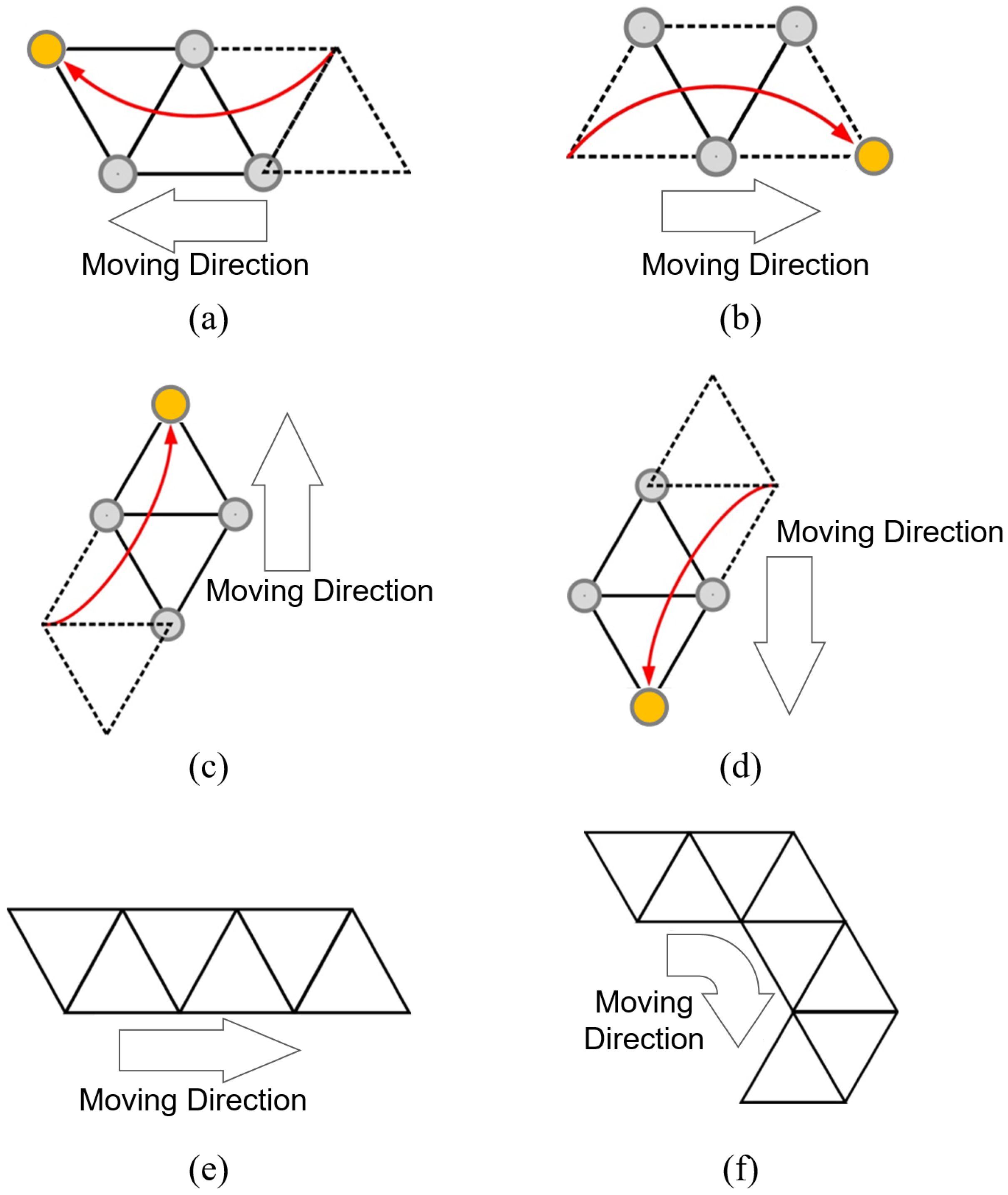

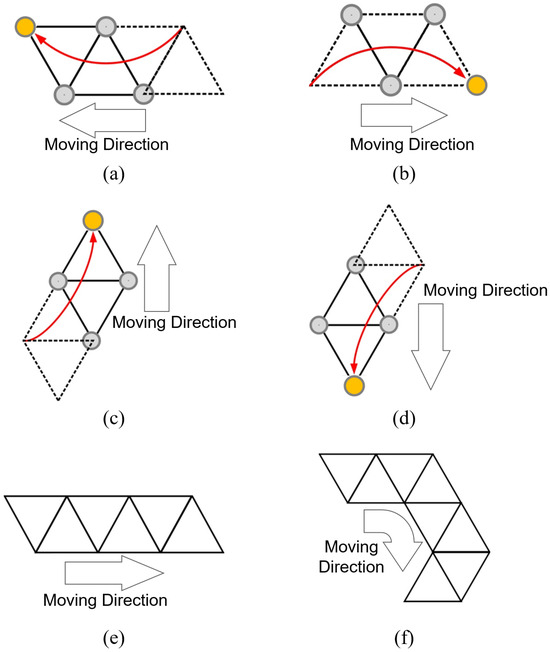

The proposed system selects one moving robot and its local destination (i.e., target vertex) in each step to advance the entire formation toward the destination. The triangular formation can move in four directions by selecting an appropriate robot within the formation as the moving robot. Once the desired moving direction is determined, the corresponding moving robot is selected. For example, if the formation moves left or upward, the robot located to the right or below is chosen as the moving robot, as shown in Figure 4a,c, respectively, while the others serve as beacon robots. Figure 4 illustrates the various movement patterns of the triangular formation. In Figure 4a–d, the gray circles represent beacon robots and the orange circle represents the moving robot. Figure 4e,f show combined movements composed of the basic steps shown in Figure 4a–d. As demonstrated in Figure 4, the triangular formation is capable of moving in any direction.

Figure 4.

Movement patterns of the triangular formation. (a) A single leftward movement step. (b) A single rightward movement step. (c) A single upward movement step. (d) A single downward movement step. (e) Straight-line movement through multiple rightward steps. (f) Turning motion achieved through a sequence of rightward, downward, and leftward steps.

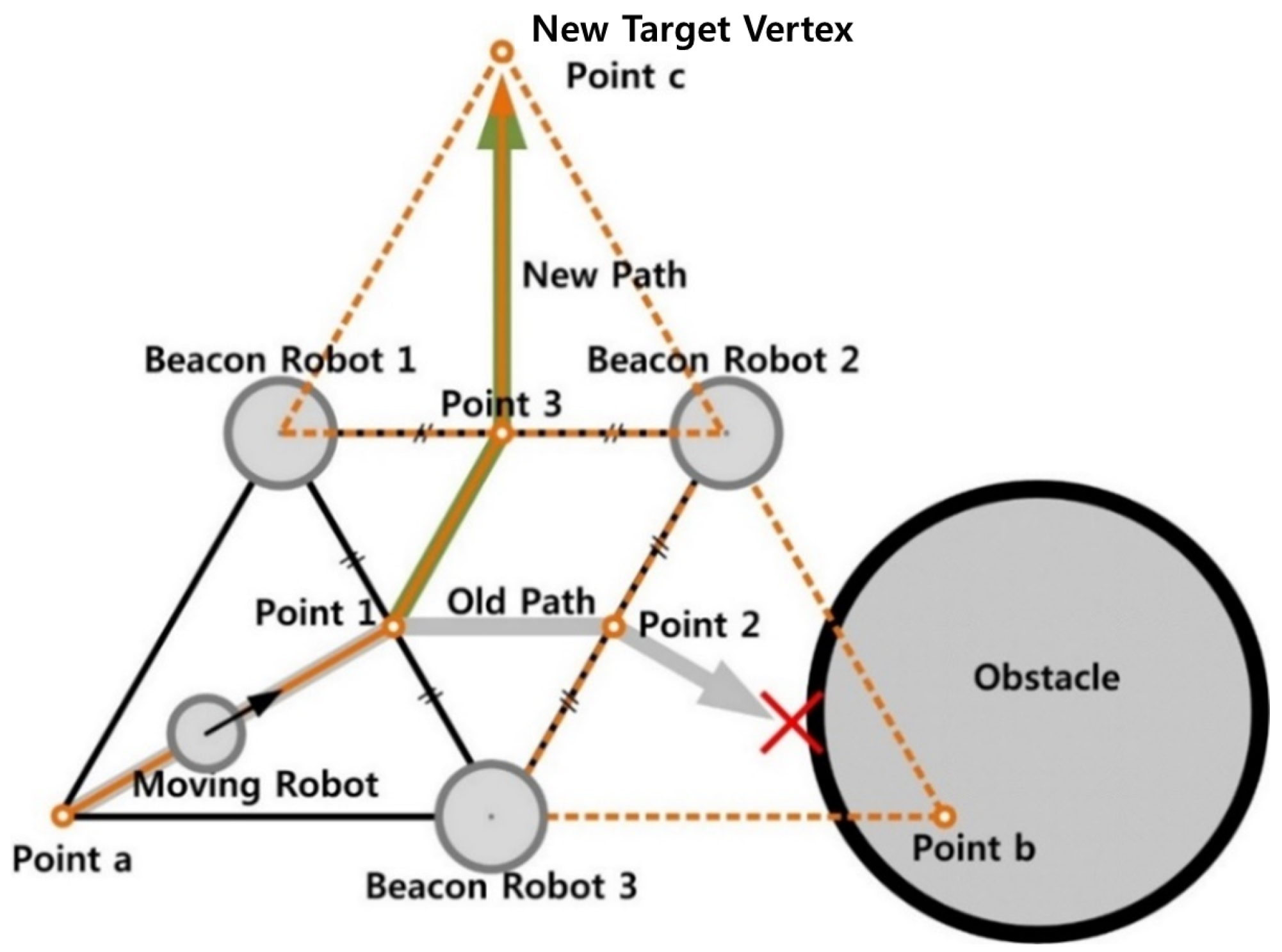

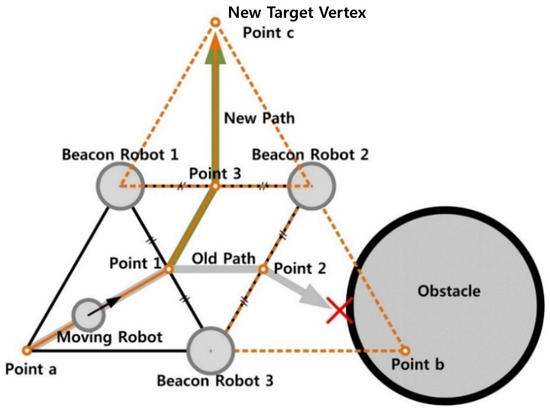

During the movement of the formation, collisions between robots and obstacles must be avoided. Collisions are categorized into two types: inner collisions, which occur between robots within the formation, and outer collisions, which occur between robots and external obstacles in the environment. First, inner collisions are avoided using Algorithm 2. The set P = {point 1, point 3, point c}, obtained by Algorithm 2, correctly represents the desired path for the moving robot, as shown in Figure 5.

| Algorithm 2 How to avoid inner collisions |

|

Figure 5.

Path for collision avoidance with an obstacle in a candidate path. Both paths P1 = {point 1, point 3, point c} and P2 = {point 1, point 2, point b} can avoid inner collisions, but only path P1 avoids outer collisions.

After inner collision avoidance is achieved, the robot formation must also avoid external obstacles (i.e., outer collision avoidance). To this end, we assume that the robots can detect obstacles using low-cost detection sensors, such as ultrasonic sensors [59,60], and that obstacle information is shared among the robots via any communication channel (e.g., WiFi in our testbed). Since the obstacle locations are not used for localization purposes in our system, high-cost distance-measuring sensors are unnecessary, and approximate information about the presence of obstacles is sufficient.

Let us assume that point b in Figure 5 is the closest vertex to the final destination of the robot formation. In this case, it would be reasonable for the moving robot to proceed toward point b. However, since there is an obstacle along the path to point b in Figure 5, the robot should instead select a different vertex to avoid a collision. If the next closest vertex to the destination is point c, the robot selects point c as its new target. In summary, the priority of candidate vertices in our algorithm is determined by their Euclidean distance to the destination.

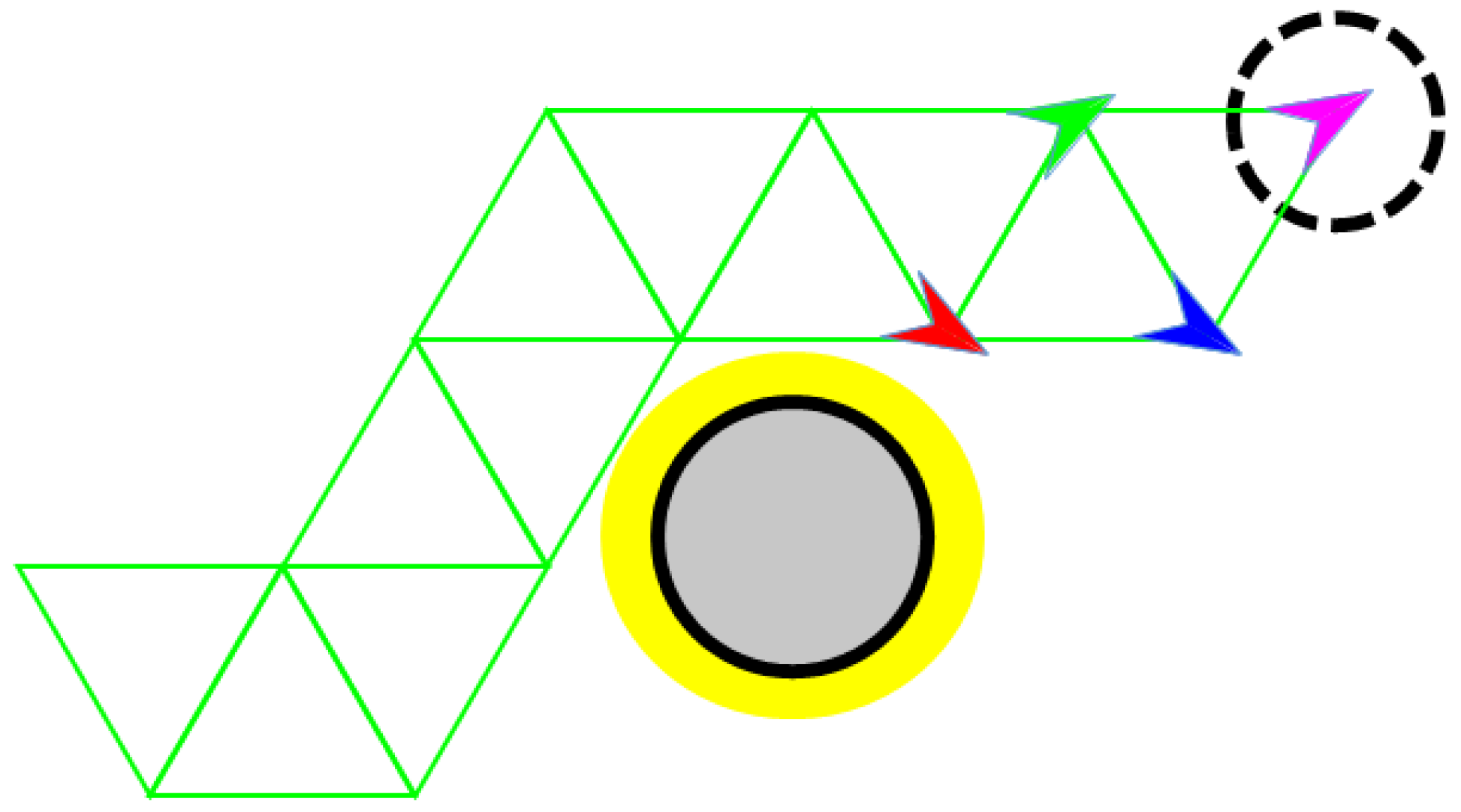

Figure 6 shows an example in which the robot formation detours around an obstacle and reaches the destination using the “closest vertex” algorithm. The yellow circle in Figure 6 represents the safety zone. If the path to an initially selected vertex intersects this safety zone, the moving robot should instead aim for the next closest vertex to ensure safety. If the robot formation is in a more complex situation—where obstacles are located near both points b and c in Figure 5—the system should select a different robot as the moving robot and choose the next closest vertex, other than points b and c, as the new target. While the current implementation effectively handles simple obstacle avoidance by selecting the next closest vertex, its performance in more complex environments with multiple closely spaced obstacles (e.g., near both points b and c in Figure 5) depends on the ability to dynamically reassign the moving robot and replan the target vertex. For even more intricate scenarios, the integration of higher-level global path planning algorithms, such as A* or rapidly exploring random tree (RRT), could further enhance the system’s ability to navigate safely and efficiently through cluttered environments.

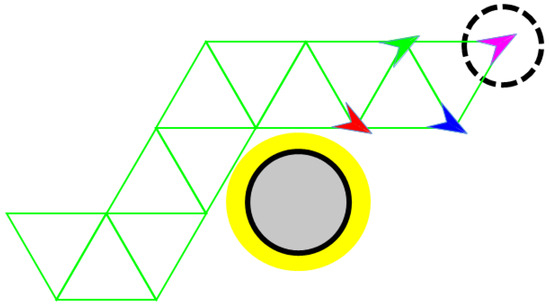

Figure 6.

Example path of the robot formation to the destination. The yellow region around the obstacle represents the safety zone, and the formation detours around this zone to avoid potential collisions. The four colored arrows represent individual robots.

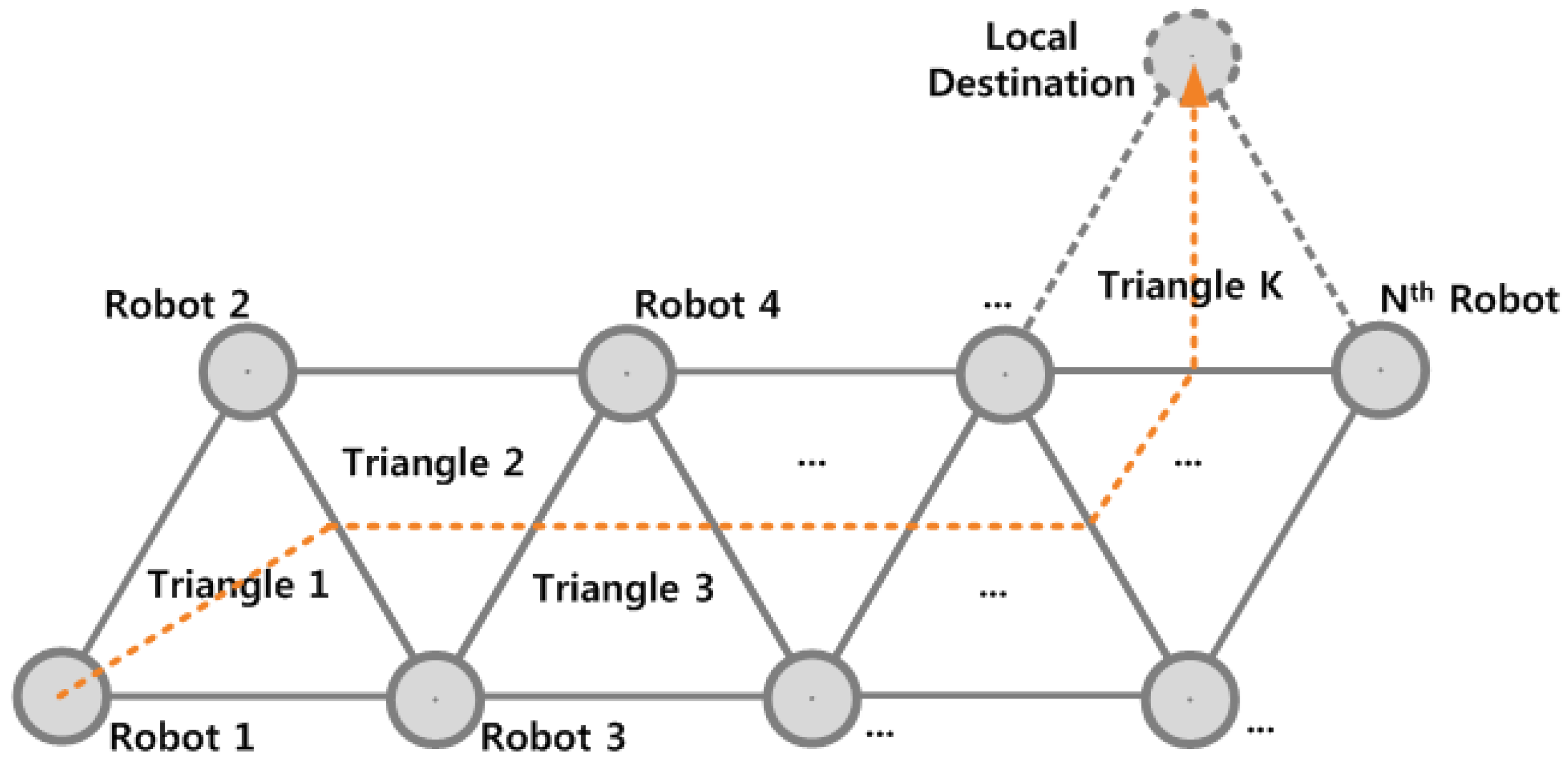

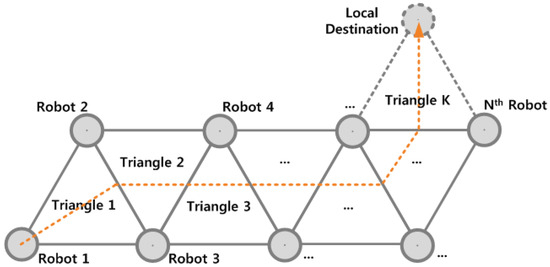

The proposed robot formation can be readily extended to an N-robot system, as illustrated in Figure 7. In such a system, each robot participates in at least one equilateral triangular formation. In the basic implementation, the farthest robot from the destination is selected as the moving robot, and it moves to the target vertex (i.e., local destination), which is the closest possible vertex to the global destination. This algorithm generates a “snake-like” movement of the formation toward the destination and is applicable to swarm robot systems [61].

Figure 7.

Movement of an N-robot system. This configuration is a direct extension of the proposed four-robot system.

4. Localization Performance and Discussion

The proposed localization method, based on equilateral triangular formations, was tested using four mobile robots. Localization error models obtained in a controlled laboratory environment, as explained in Section 4.2, were then used to perform realistic performance simulations in larger open spaces.

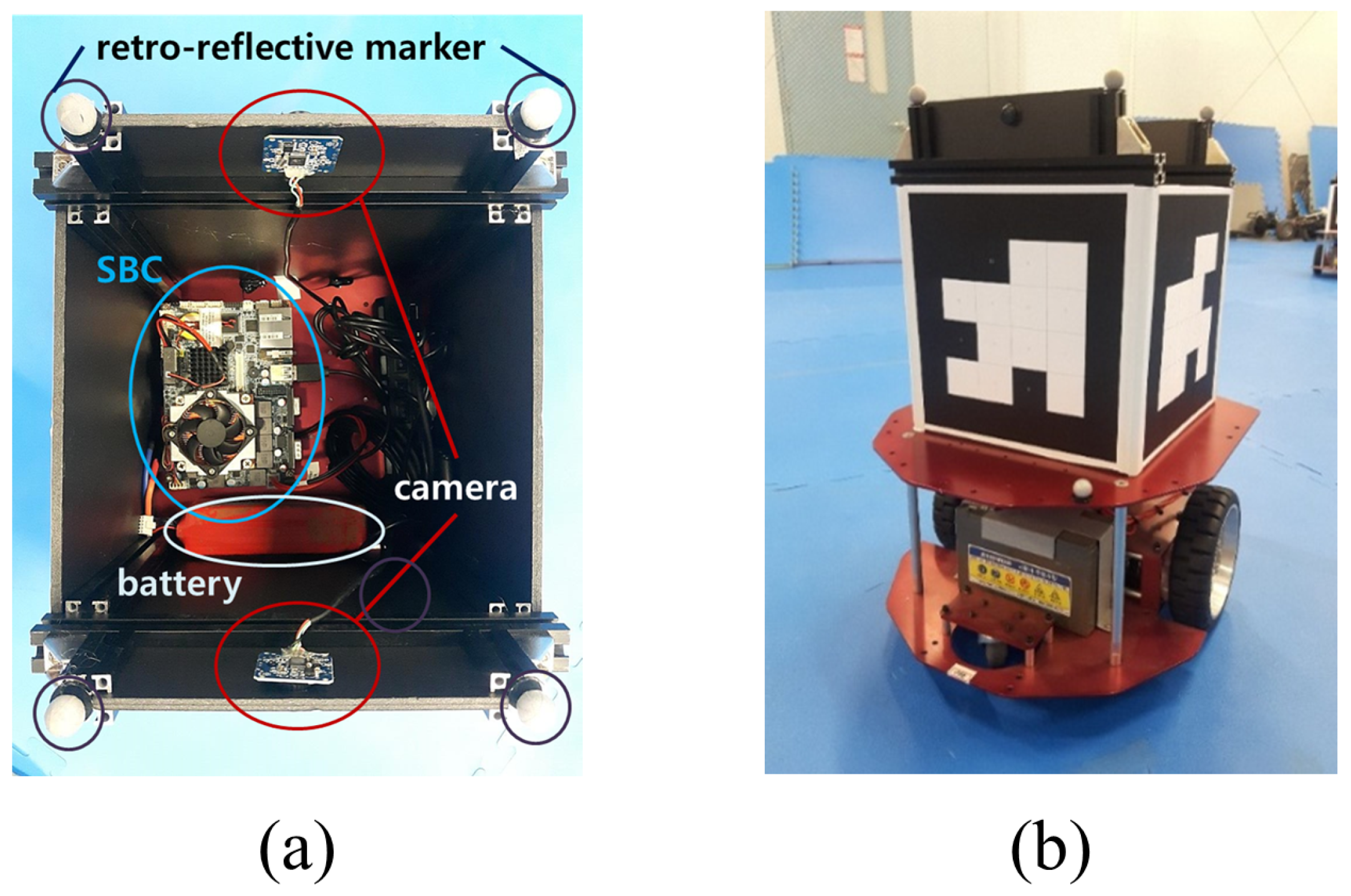

4.1. Experimental Setup

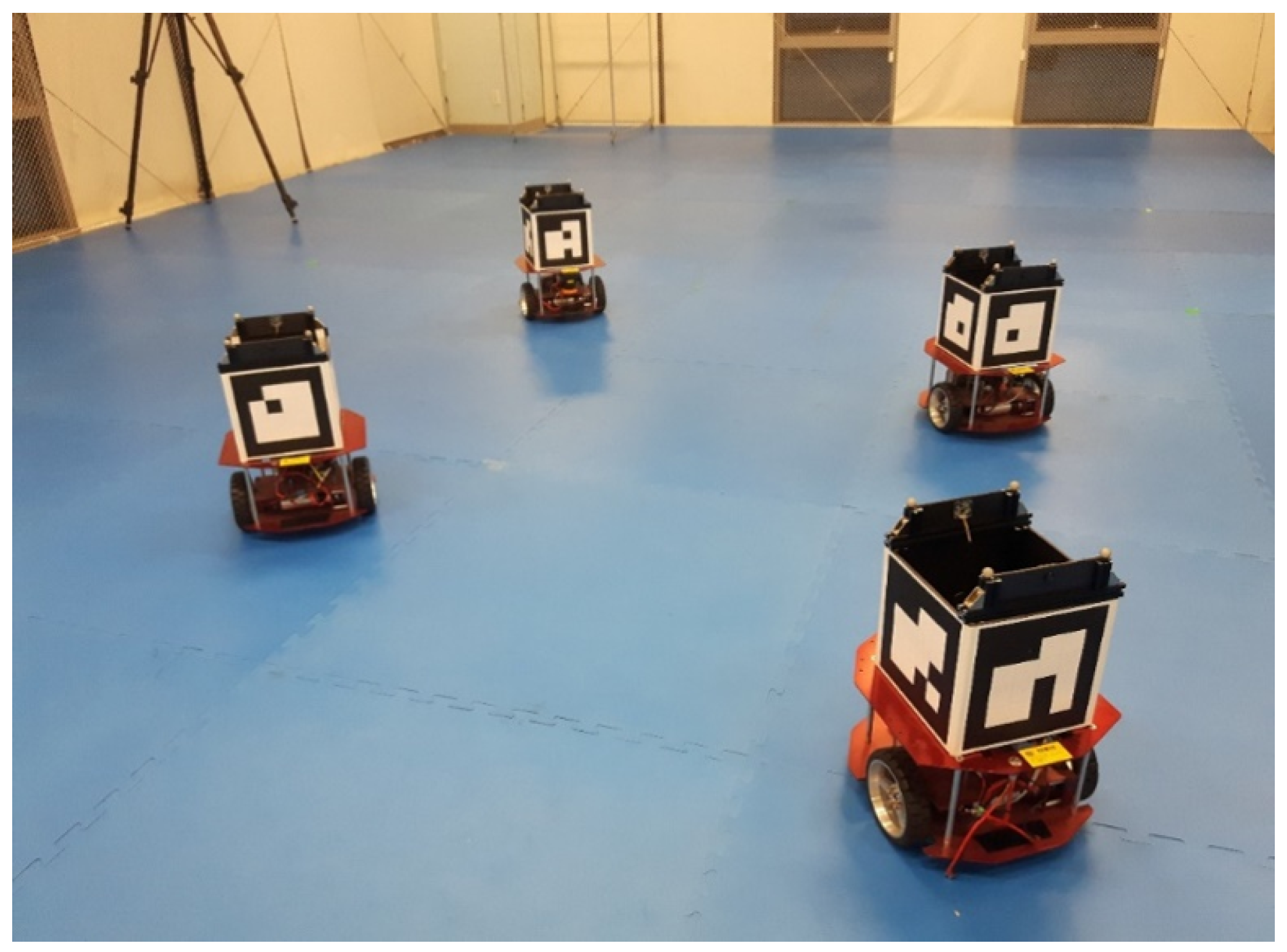

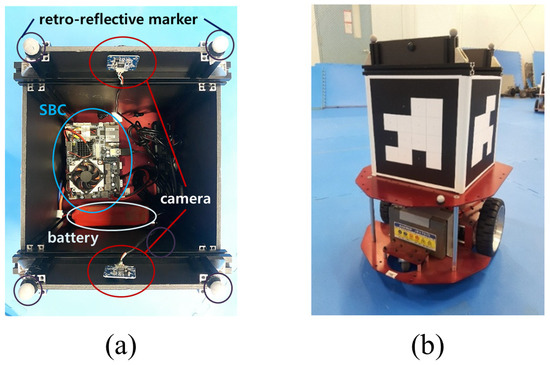

A commercial mobile robot named Stella (Figure 8) was used for the experiments. Each robot was equipped with a single-board computer (SBC), two cameras, and four visual markers. The SBC handled basic image processing and pixel counting to maintain the robot formation, as well as steering and velocity control. One camera faced forward and the other faced backward. In general, two additional cameras can be mounted on the left and right sides of the robot to enable omnidirectional exploration.

Figure 8.

Mobile robot platform used for the experiments. (a) System components. Two cameras are mounted to view the front and back, and a single-board computer (SBC) controls the entire system. Retro-reflective markers are attached to enable ground truth motion capture using a commercial system. (b) Exterior of the mobile robot. Visual markers are placed on all sides.

Visual markers were installed on all four sides of the robots. Using simple image processing based on these visual markers, the moving robot extracts the center point of each beacon robot. The AprilTag marker detection system [62] was employed to determine the position of each robot. Once the center points are identified, accurate lateral distances between the robots are obtained through simple pixel counting. A WiFi communication module in the SBC was used to share path planning information, including obstacle data, among the robots. It is not necessary for the robots to share any visual images for localization purposes; thus, the required communication bandwidth of the system remains very low.

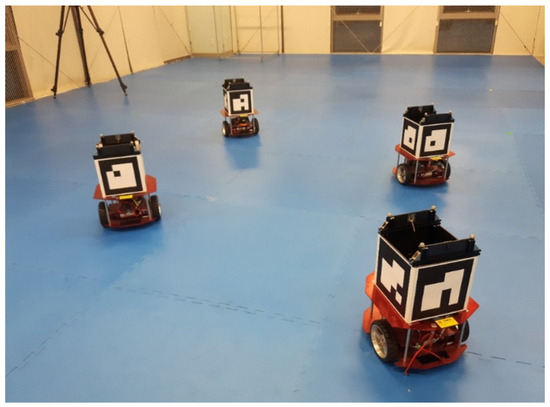

In order to evaluate the localization errors, ground truth data of the robots’ actual movements must be obtained. To this end, a 10-camera Vicon MX motion capture system (Vicon Motion Systems Ltd., Oxford, UK) was used. This system provides the positions of retro-reflective markers attached to the robots, as shown in Figure 8a, with sub-centimeter accuracy. The accuracy for stationary markers can be further improved by averaging the measured positions over a longer duration. The experimental setup with four robots in the motion capture studio is shown in Figure 9. This controlled laboratory environment enables accurate evaluation of localization errors, although the space covered by the ten cameras is limited. Accordingly, realistic error models of the proposed system were obtained in this environment, and performance in larger open spaces was evaluated through simulations based on these models.

Figure 9.

Four-robot system in a controlled laboratory environment with a commercial motion capture system. Ten motion capture cameras provided ground truth data for the movement of each robot during the experiment.

4.2. Error Models

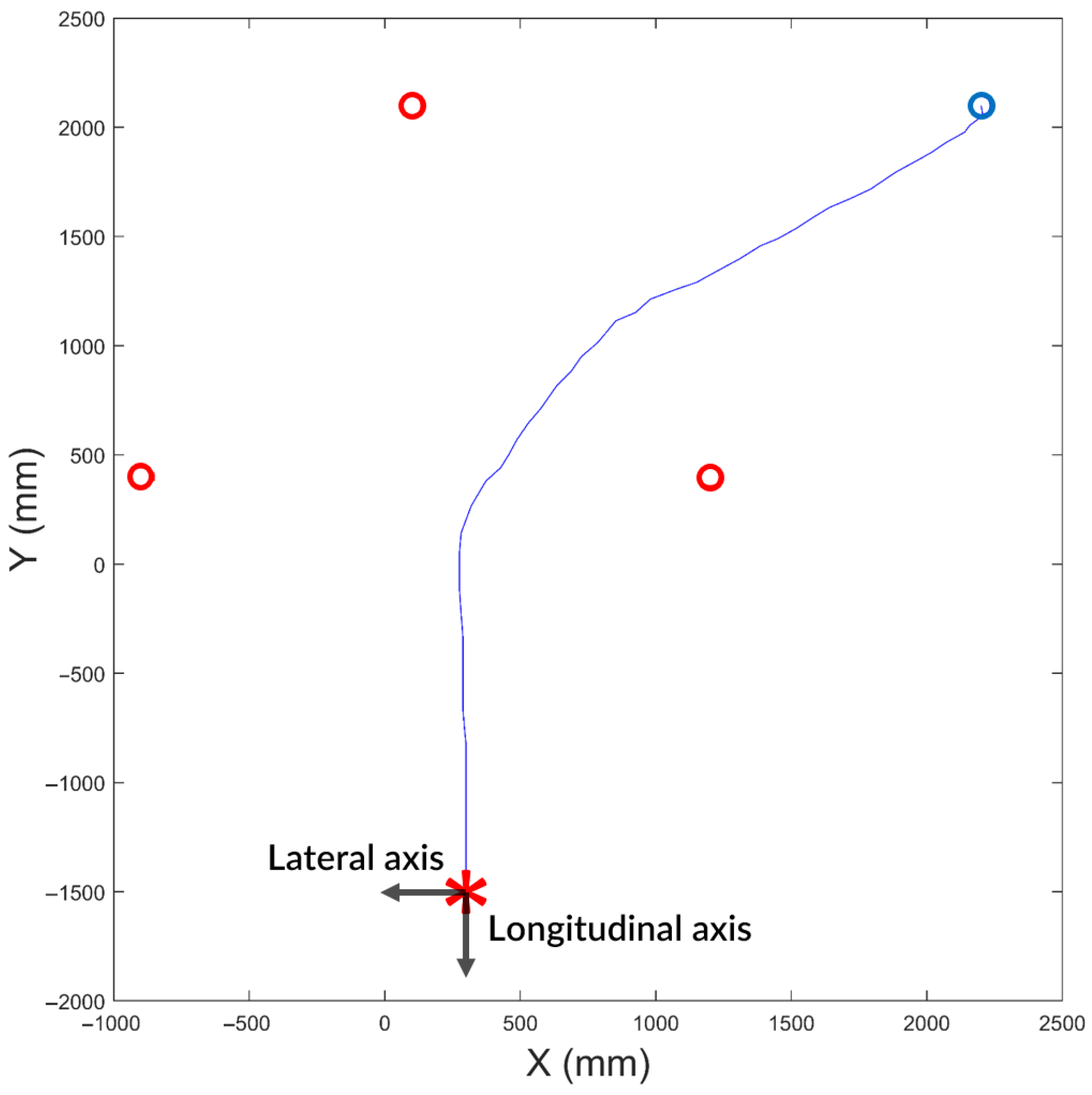

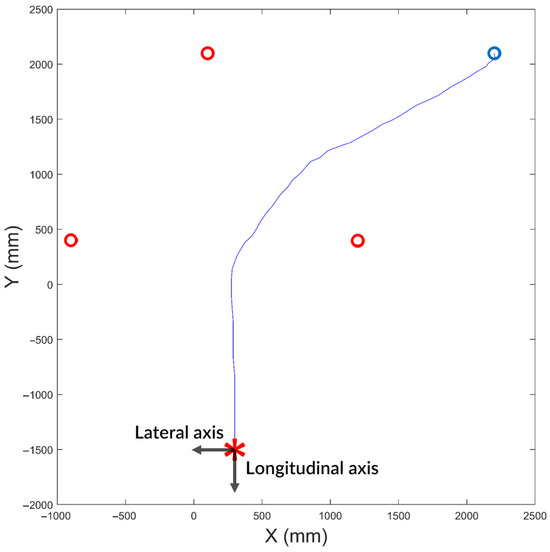

During the experiments, the moving robot approached the target vertex by following the steps described in Algorithm 1 and illustrated in Figure 3. An example trajectory of the moving robot while forming a new equilateral triangle is shown in Figure 10. The red circles in Figure 10 represent the positions of the three beacon robots. The blue circle and the red asterisk indicate the initial position and the local destination (i.e., the target vertex) of the moving robot, respectively. The blue line shows the trajectory taken by the moving robot.

Figure 10.

Example trajectory of a moving robot passing through the previous triangular formation of three beacon robots to create a new triangular formation. The robot moved from the blue circle to the red asterisk, which represents a vertex of the new equilateral triangle. This ground truth trajectory was obtained using a commercial motion capture system in a controlled laboratory environment.

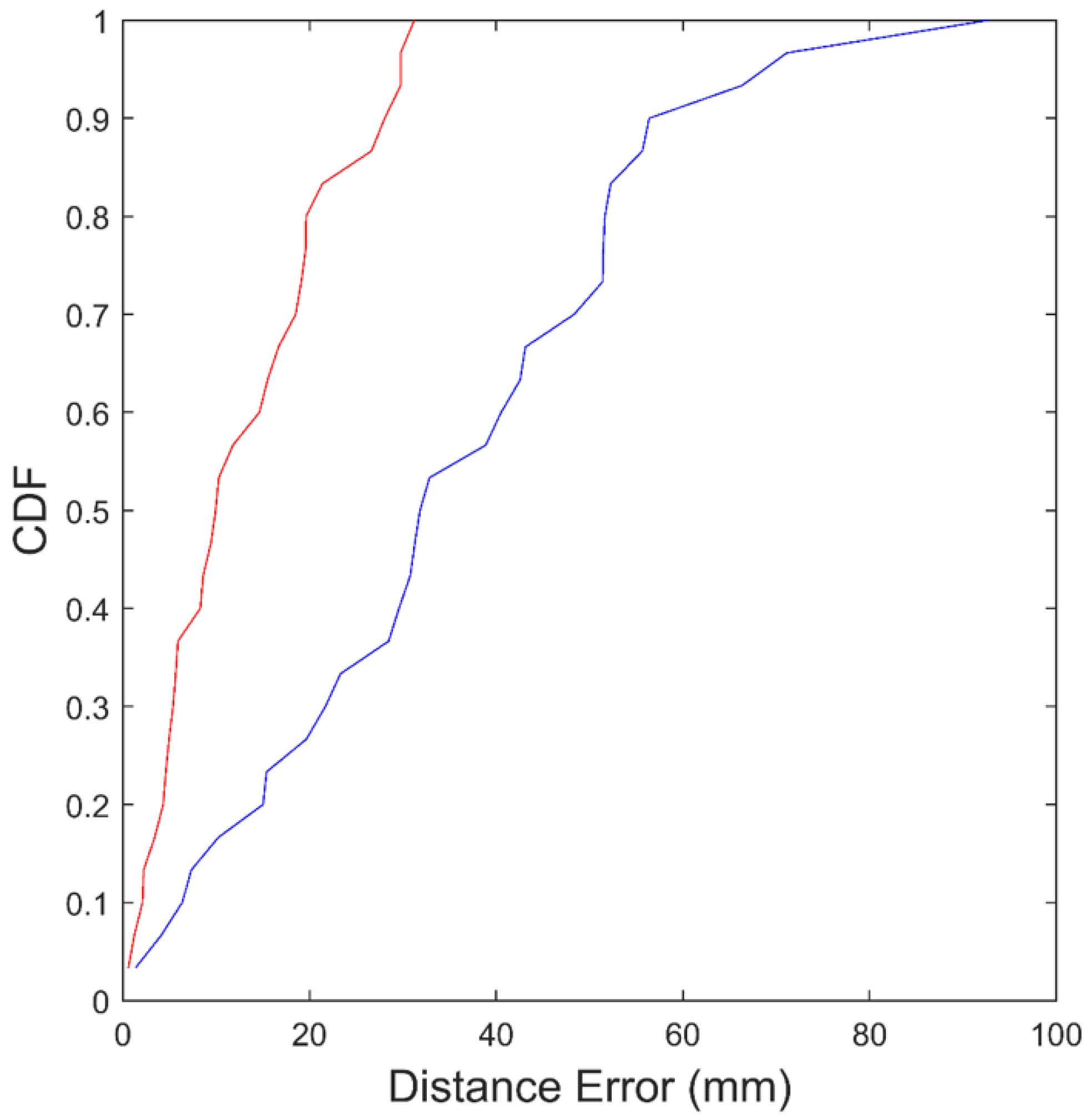

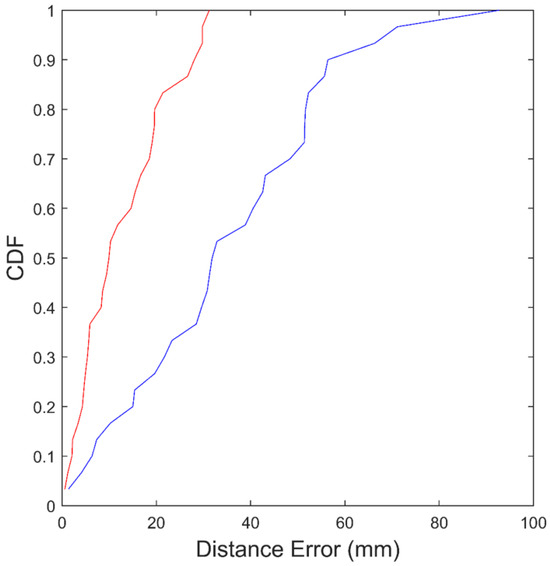

The experiment in which a new equilateral triangle is formed was repeated 60 times in a controlled laboratory environment, and statistics of the localization error were collected. The empirical cumulative distribution functions (CDFs) of the localization errors along the lateral and longitudinal axes, based on the 60 trials, are shown in Figure 11. The lateral axis is defined as parallel to the base of the new triangle, and the longitudinal axis as perpendicular to the base, as illustrated in Figure 10.

Figure 11.

Empirical cumulative distribution functions (CDFs) of the distance errors for the proposed system along the lateral axis (blue) and the longitudinal axis (red).

The mean localization error along the lateral and longitudinal axes was calculated as

where and represent the lateral and longitudinal error samples, respectively, and N is the total number of measurements. The corresponding standard deviations were computed as

The mean localization error along the lateral and longitudinal axes was 36 mm and 13 mm, respectively. The standard deviation of the localization error along the lateral and longitudinal axes was 21 mm and 9 mm, respectively. The lateral axis error is generally greater than the longitudinal axis error because the robot used in the experiment has a nonholonomic constraint. This constraint makes it difficult for the robot to move precisely in the lateral direction.

The performance of the proposed method was compared with that of a conventional dead-reckoning method, which represents a typical low-cost localization approach. This provides a fair comparison between two low-cost localization methods: the proposed method and the dead-reckoning method.

The error models of conventional dead-reckoning systems have been studied in [63,64]. A widely used approach to implementing a low-cost dead-reckoning system involves measuring the heading angle using a compass or magnetometer and estimating speed using an odometer or speed sensor. Microelectromechanical systems (MEMS) gyros or accelerometers can also be used to estimate the heading angle and speed. Thus, the performance of such a dead-reckoning system depends on the estimation errors of heading and speed derived from the applied sensors.

In [63], the heading angle error was modeled as a Gauss–Markov process with a correlation time of 120 s and a standard deviation of 2.5 degrees. These values were obtained based on actual measurements of estimation errors from a low-cost inertial navigation system (i.e., MEMS gyros and accelerometers) and a set of magnetometers. An additive white noise component with a standard deviation of 0.8 degrees was also included in the Gauss–Markov model.

The measurement model of a wheel speed sensor (WSS), which is typically used for dead reckoning, was proposed by [64] as follows:

where is the linear velocity of the vehicle measured by the wheel speed sensor, R is the nominal radius of the wheel, is the bias on the nominal wheel radius, is a scale factor, is the intended wheel rotational speed, and is the white Gaussian measurement noise.

The parameters of this model for our mobile robot platform, shown in Figure 8, were experimentally estimated. The obtained values were m, , and the standard deviation of was 0.045 m/s. The value is negligible because our mobile robot platform uses relatively small and rigid wheels.

We utilized the heading angle error model and the wheel speed sensor measurement model developed in [63,64] to simulate the performance of a typical dead-reckoning system. The performance of the simulated dead-reckoning system was subsequently compared with that of our proposed localization system, as presented in Section 5.

4.3. Discussion

Although camera distortion—particularly non-radial or asymmetric distortion—can affect the accuracy of marker detection, the symmetric arrangement and precise initial positioning of the beacon robots ensured that the initial triangular formation was accurately established. As a result, the system maintained good localization accuracy without requiring additional dewarping or sub-pixel marker detection algorithms to compensate for center detection uncertainties. For longer trajectories or more distortion-sensitive applications, integrating sub-pixel detection techniques could further enhance precision.

The parameters determining the size of the equilateral triangle formation—specifically dm1, dm2, and dt—were selected based on practical constraints imposed by the field-of-view angle of the commonly available monocular camera used in the experiments. These values were chosen to ensure that all beacon robots remain visible within the camera’s viewing angle during operation. The triangle size directly influences controllability and planning flexibility: a smaller triangle can offer better maneuverability, especially in tight or cluttered environments, while a larger triangle may reduce the number of formation steps required to reach a destination, thereby improving mission efficiency. The system is designed to be adaptable, allowing the triangle size to be tuned according to the camera’s viewing angle, resolution, and optical characteristics, as well as the performance requirements of the task. This adaptability makes the system suitable for a wide range of cost-sensitive and mission-specific deployment scenarios. It also highlights the potential for identifying an optimal triangle size tailored to specific mission profiles, balancing localization accuracy, path efficiency, and hardware limitations.

The parameters dm1, dm2, and dt, when properly matched in pixel units, help preserve the equilateral triangle geometry, resulting in localization errors that accumulate at a sub-pixel level during successive robot movements. As demonstrated in our experiments, the proposed low-cost localization method achieves sufficiently high localization accuracy using a commercial monocular camera and a simple marker detection algorithm. In scenarios involving longer trajectories without periodic error correction, higher localization performance can be achieved by employing more advanced hardware or vision systems.

5. Simulation Results

Based on the realistic error models of the proposed system—obtained in a controlled laboratory environment using the motion capture system—the performance of the proposed localization system in wide open spaces was evaluated through simulations. As the proposed system is a low-cost solution that does not rely on accurate distance-measuring sensors or high computational power, it is appropriate to compare its performance with that of another low-cost solution, namely a dead-reckoning system. The proposed system uses only monocular cameras, while the dead-reckoning system relies on wheel speed sensors and MEMS gyros and accelerometers.

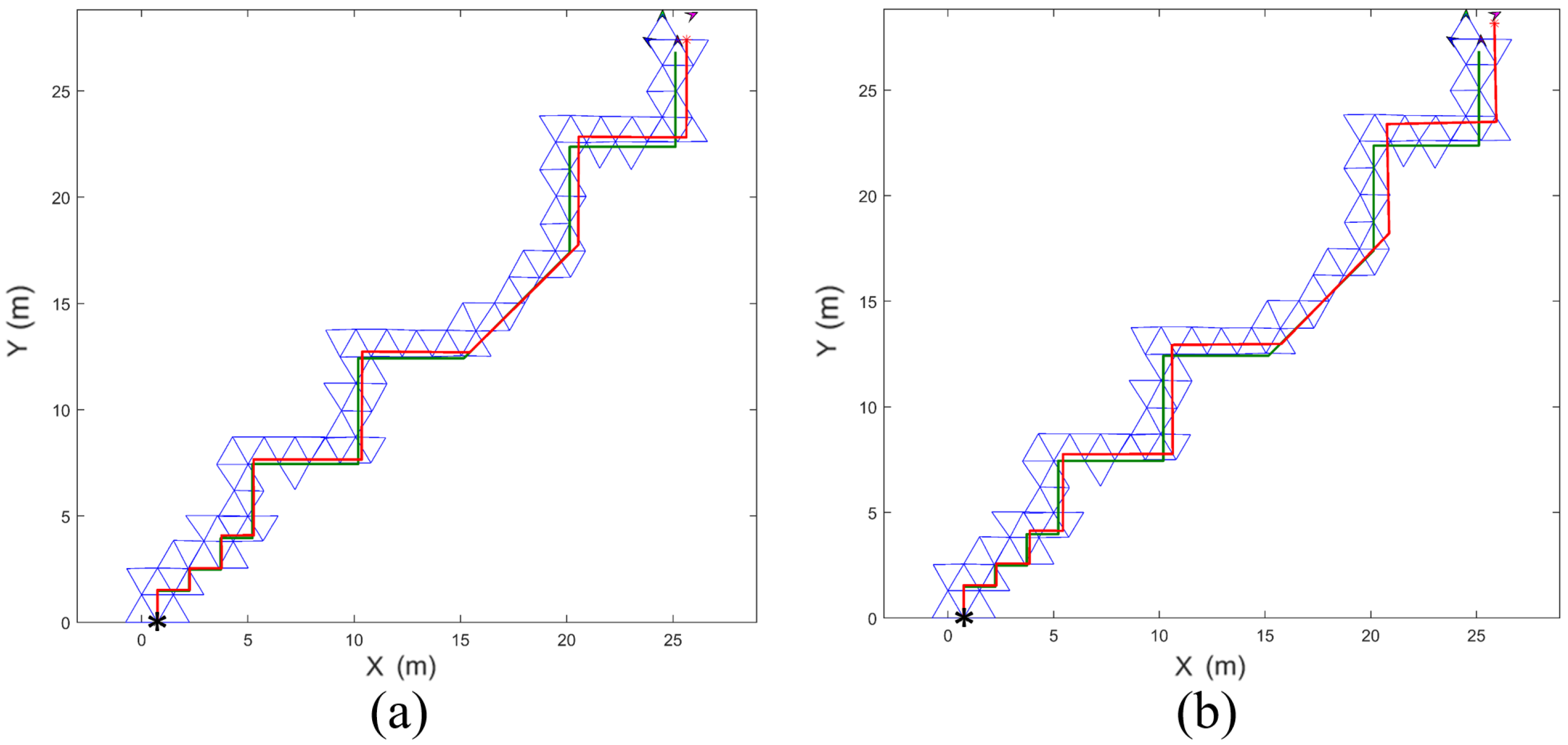

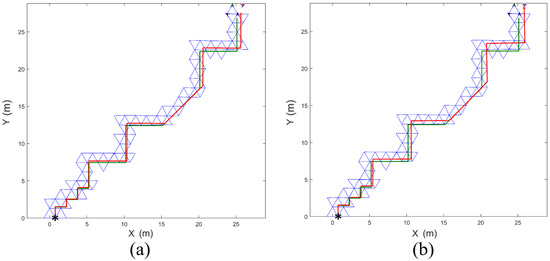

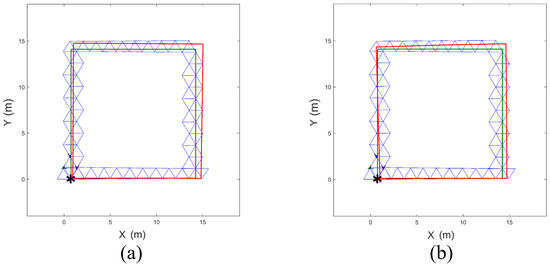

The basic four-robot formation was evaluated through simulation. The simulations were conducted along two trajectories: one with many turns (Figure 12) and another representing a robot patrol path (Figure 13). The initial positions of the robots were set as (0.75 m, 0), (0, 1.30 m), (2.25 m, 0), and (1.5 m, 1.30 m). The length of each side of the equilateral triangle was 1.5 m, and the initial heading angles of all robots were set to zero (i.e., facing east).

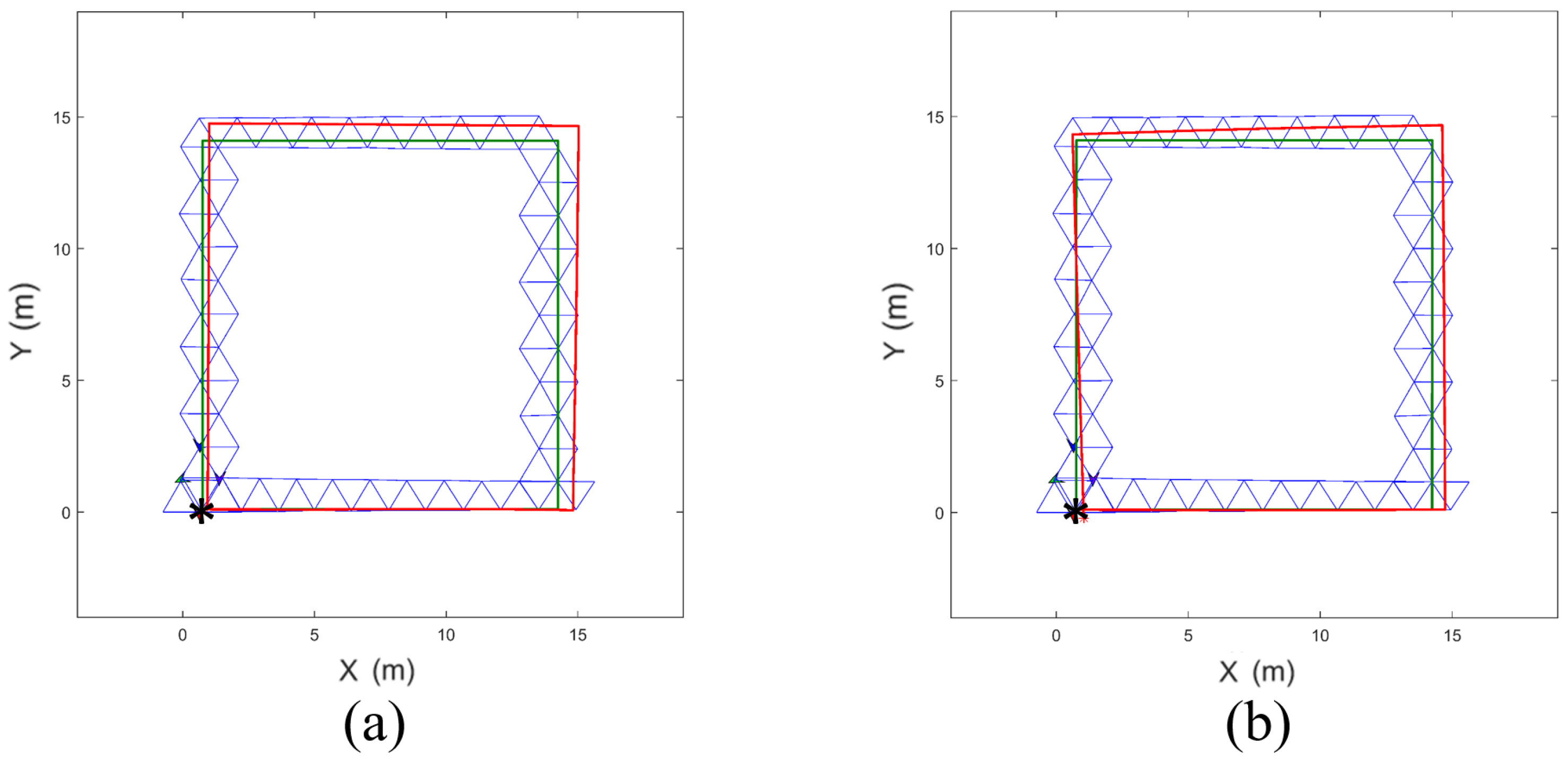

Figure 12.

Comparison of the movement paths generated by the dead-reckoning method (red) and the formations of the proposed method (blue), following the given trajectory (green) with many turns. The asterisk indicates the initial position of the robot that reaches the final destination. The in Equation (3) was set to (a) 5.8 rad/s and (b) 2.9 rad/s. The localization error of the dead-reckoning method significantly increased when was reduced.

Figure 13.

Comparison of the movement paths generated by the dead-reckoning method (red) and the formations of the proposed method (blue), following the given trajectory (green) representing a robot patrol case. The asterisk indicates the initial position of the robot that reaches the final destination. The in Equation (3) was set to (a) 5.8 rad/s and (b) 2.9 rad/s. The localization error of the dead-reckoning method significantly increased when was reduced.

When the destination of the formation was specified, the farthest robot from the destination was selected as the moving robot, and the others served as beacon robots. The selection of the moving robot is similar to the leader selection process in multi-robot systems, as described in Kwon et al. [65]. They proposed the multiple leader candidate (MLC) structure, in which the leader is competitively selected from among leader candidates (LCs) and guides the formation along the planned trajectory. If the current leader experiences a fault or failure, the remaining LCs immediately compete to select a new leader, allowing the followers to maintain formation stability by tracking their vertices relative to the nearest LC until a new leader is designated. The MLC structure offers scalability advantages, as LCs share information through a virtual-leader framework, enabling the system to accommodate additional robots by adjusting the number of LCs according to the capabilities of the communication devices.

While the goal is to form an ideal equilateral triangle geometry at each robot’s movement step, in practice, each robot reaches a slightly erroneous position, as characterized by the experimentally derived error model presented in Figure 11. This model shows the empirical error distributions along the lateral and longitudinal axes. These position errors accumulate with each successive movement, and this error characteristic is taken into account in the simulation.

The green lines in Figure 12 and Figure 13 indicate the given trajectories, and the black asterisk marks the starting point. The proposed formations of robots from the starting point to the destination are shown in blue, while the path of a robot using the dead-reckoning system is shown in red in both figures. The intended wheel rotational speed of the robots, i.e., in Equation (3), was set to 5.8 rad/s for Figure 12a and 2.9 rad/s for Figure 12b.

The localization errors of the dead-reckoning and proposed methods along the given trajectories in Figure 12 and Figure 13 are summarized in Table 1. These errors represent average values based on 100 simulation runs. Since a vertex of a triangle cannot be placed arbitrarily, the localization error of the proposed system is defined as the difference between the actual location of the moving robot when it arrives at the final target vertex near the destination and the ideal vertex location, assuming all equilateral triangles are perfectly constructed from the starting point to the destination.

When the intended wheel rotational speed of the robots was reduced from 5.8 rad/s to 2.9 rad/s, the localization errors of the dead-reckoning system along the trajectories in Figure 12 and Figure 13 increased significantly. In contrast, the localization errors of the proposed system did not change meaningfully.

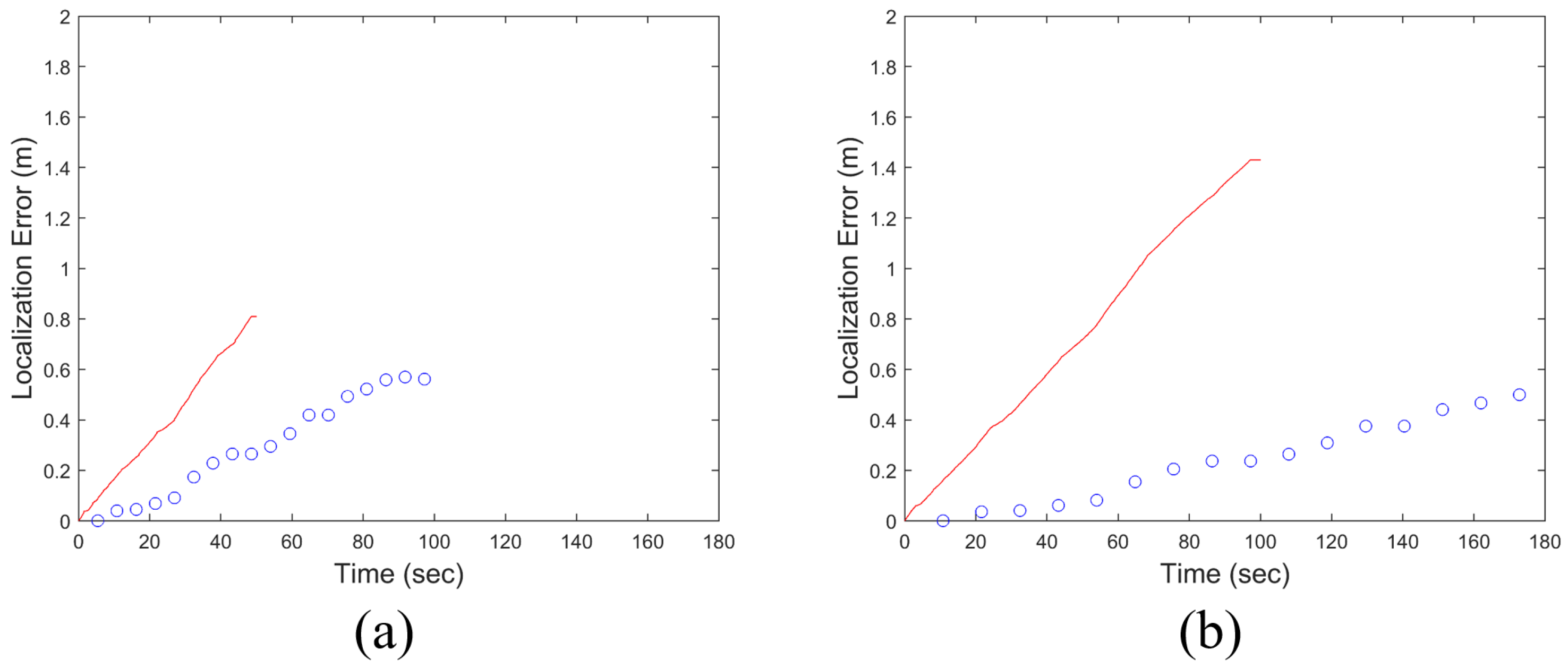

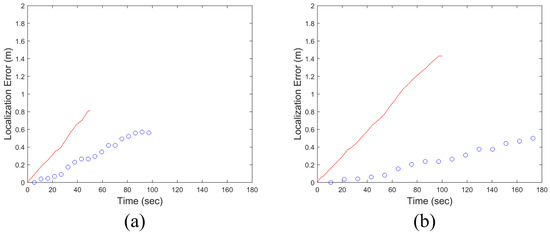

It is interesting to note that the error accumulation behaviors of the two methods are markedly different. Figure 14 compares these behaviors under the trajectory given in Figure 12. Figure 14a shows the case where rad/s, while Figure 14b illustrates the case with reduced by half.

Because gyros measure the angular rate of rotation, the sensor measurements must be integrated over time to obtain the heading angle. During this process, errors in the heading angle accumulate because sensor noise is also integrated. As a result, the localization error of a dead-reckoning system using gyros increases over time. When was reduced from 5.8 rad/s in Figure 14a to 2.9 rad/s in Figure 14b, the travel time to the destination approximately doubled, which also doubled the duration of error accumulation in the dead-reckoning system. Consequently, the red curve (i.e., the dead-reckoning system) in Figure 14b shows approximately twice the localization error compared to the red curve in Figure 14a. In other words, slower movement increases travel time and thereby increases localization error in a dead-reckoning system when following the same trajectory.

On the other hand, the localization error of the proposed system depends on the number of generated triangles en route to the destination and is independent of travel time. The localization error can be evaluated only when the moving robot in Figure 2 reaches the target vertex and a new triangle is formed. Therefore, the localization errors of the proposed system over time are plotted as discrete points rather than a continuous curve in Figure 14.

Recall that the moving robot finds the target vertex using the algorithm in Figure 3, and this algorithm is independent of the robot’s speed. Once a new triangle is formulated, the localization errors along the longitudinal and lateral axes—shown in Figure 10 and following the empirical CDFs in Figure 11—are evaluated. The localization error in the longitudinal axis is caused by the finite pixel size, which limits the resolution of lateral distance measurements (i.e., dm1 and dm2 in Figure 3), while the error in the lateral direction is primarily due to the nonholonomic constraint of the robots. The number of generated triangles to reach the destination remains unchanged for a given trajectory, even if the robot speed varies. Therefore, the localization errors of the proposed system at the destination in Figure 14a,b are nearly identical.

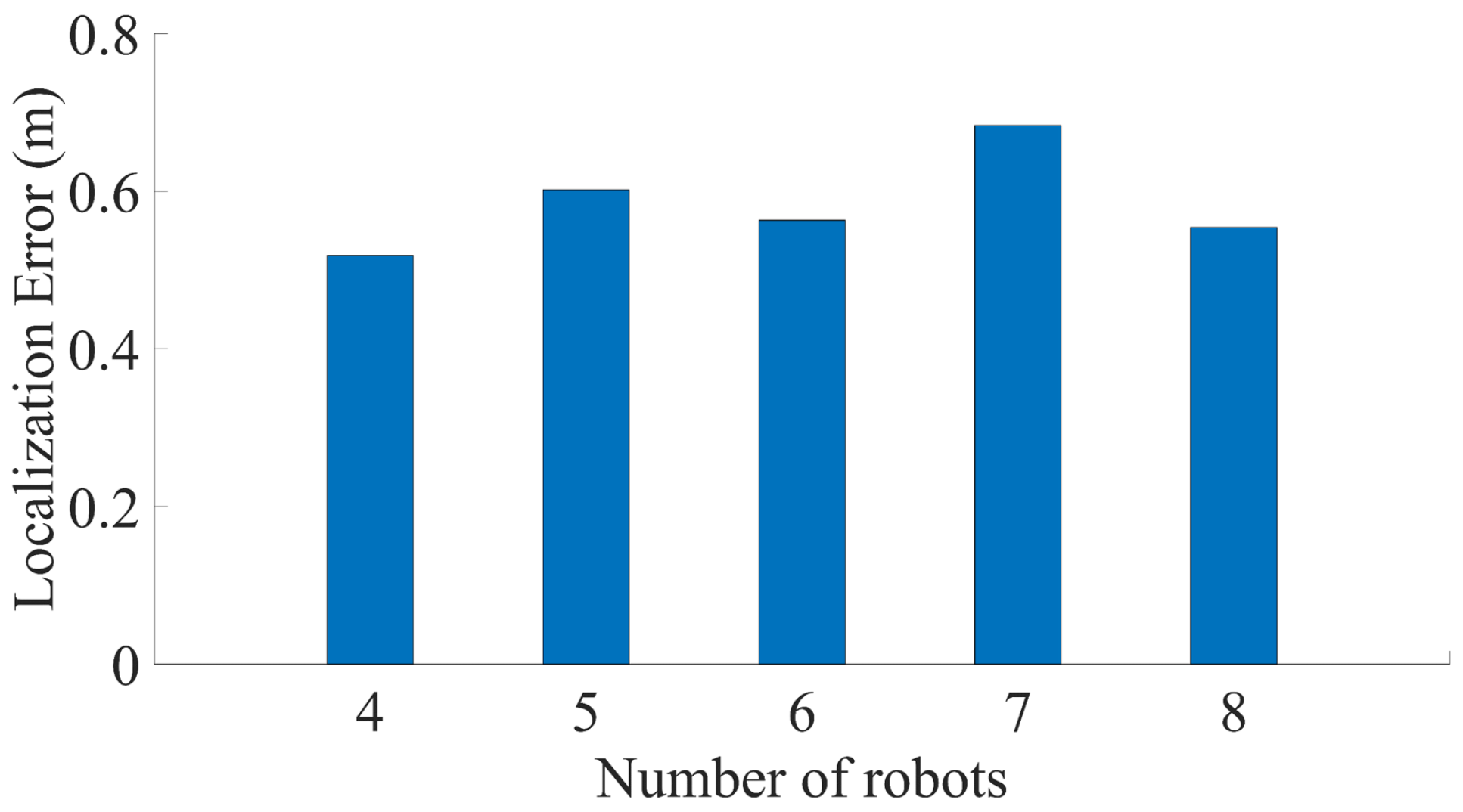

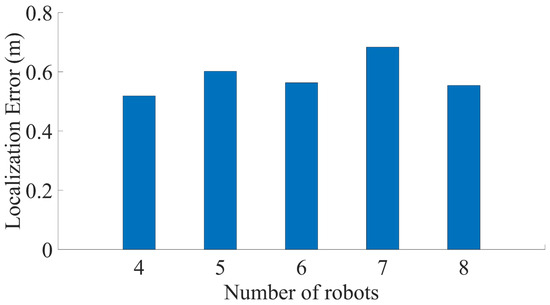

Figure 15 shows the localization error when the trajectory used in Figure 12 is executed with different numbers of robots for scalability analysis. (The reported errors represent average values based on 100 simulation runs.) The initial positions of the robots and the selection of the moving robot follow the configuration shown in Figure 7. As the results indicate, the localization error remains consistent despite an increase in the number of robots, demonstrating good scalability even when extended to an N-robot system. This is reasonable because the localization error in the proposed system depends on the number of generated triangles required to reach the destination. If the distance to the destination is significantly greater than the size of the N-robot system itself—as is the case in Figure 12—the number of required triangles remains nearly constant, regardless of the number of robots.

Figure 15.

Localization error corresponding to the trajectory in Figure 12, evaluated for different numbers of robots to assess system scalability.

6. Conclusions

This paper proposed a low-cost localization method suitable for multiple robots operating in wide open spaces. In the proposed method, a minimum of four robots create and maintain equilateral triangular formations while progressing toward the destination. Although the system relies only on low-cost monocular vision sensors and standard visual markers, a moving robot in the formation can accurately reach the target vertex with the assistance of beacon robots. Since the depth information obtained from low-cost monocular vision sensors is typically not very accurate, the proposed system relies solely on lateral distance measurements and the geometric properties of equilateral triangles to achieve high localization accuracy.

We conducted experiments in a controlled laboratory environment using a motion capture system that provided ground truth trajectories of the robots. Based on the experimental data, realistic error models of the proposed system were obtained. The performance of the proposed system in wide open spaces—where SLAM is not particularly effective—was then compared with that of a dead-reckoning system through simulations. The results confirm that the proposed system achieves noticeably higher accuracy than the dead-reckoning system, especially when travel time is extended.

Moreover, the error characteristics of the proposed system differ from those of the dead-reckoning system. Unlike the dead-reckoning system, the localization error of the proposed system at the destination depends on the number of generated triangles along the path and is independent of travel time. This property can be particularly advantageous for missions involving long travel durations.

Author Contributions

Conceptualization, T.K., J.-W.K. and J.H.K.; methodology, T.K. and J.H.K.; software, T.K. and J.H.K.; validation, T.K., I.B. and J.H.K.; resources, J.H.K.; data curation, T.K., I.B. and J.H.K.; writing—original draft preparation, T.K.; writing—review and editing, J.H.K.; supervision, J.-W.K. and J.H.K. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported in part by Grant RS-2024-00407003 from the “Development of Advanced Technology for Terrestrial Radionavigation System” project, funded by the Ministry of Oceans and Fisheries, Republic of Korea; in part by the National Research Foundation of Korea (NRF), funded by the Korean government (Ministry of Science and ICT, MSIT), under Grant RS-2024-00358298; and in part by the Institute of Information & Communications Technology Planning & Evaluation (IITP) through the Information Technology Research Center (ITRC) program, funded by the MSIT, under Grant IITP-2025-RS-2024-00437494.

Data Availability Statement

The data are contained within the article.

Acknowledgments

Generative AI (ChatGPT, OpenAI) was used solely to assist with grammar and language improvements during the manuscript preparation process. No content, ideas, data, or citations were generated by AI. All technical content, methodology, analysis, and conclusions were written and verified solely by the authors.

Conflicts of Interest

Author Jin Hyo Kim was employed by the company DOGU, Inc. The remaining authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

References

- Pahlavan, K.; Krishnamurthy, P.; Geng, Y. Localization challenges for the emergence of the smart world. IEEE Access 2015, 3, 3058–3067. [Google Scholar] [CrossRef]

- Fink, J.; Ribeiro, A.; Kumar, V. Robust control of mobility and communications in autonomous robot teams. IEEE Access 2013, 1, 290–309. [Google Scholar] [CrossRef]

- Misra, P.; Enge, P. Global Positioning System: Signals, Measurements, and Performance; Ganga-Jamuna Press: Nanded, India, 2011. [Google Scholar]

- Tamazin, M.; Noureldin, A.; Korenberg, M.; Massoud, A. Robust fine acquisition algorithm for GPS receiver with limited resources. GPS Solut. 2016, 20, 77–88. [Google Scholar] [CrossRef]

- Park, K.; Seo, J. Single-antenna-based GPS antijamming method exploiting polarization diversity. IEEE Trans. Aerosp. Electron. Syst. 2021, 57, 919–934. [Google Scholar] [CrossRef]

- Jia, M.; Lee, H.; Khalife, J.; Kassas, Z.M.; Seo, J. Ground vehicle navigation integrity monitoring for multi-constellation GNSS fused with cellular signals of opportunity. In Proceedings of the IEEE ITSC, Indianapolis, IN, USA, 19–22 September 2021; pp. 3978–3983. [Google Scholar] [CrossRef]

- Lee, H.; Seo, J.; Kassas, Z. Urban road safety prediction: A satellite navigation perspective. IEEE Intell. Transp. Syst. Mag. 2022, 14, 94–106. [Google Scholar] [CrossRef]

- Kim, S.; Park, S.; Seo, J. Single antenna based GPS signal reception condition classification using machine learning approaches. J. Position. Navig. Timing 2023, 12, 149–155. [Google Scholar] [CrossRef]

- Lee, Y.; Hwang, Y.; Ahn, J.Y.; Seo, J.; Park, B. Seamless accurate positioning in deep urban area based on mode switching between DGNSS and multipath mitigation positioning. IEEE Trans. Intell. Transp. Syst. 2023, 24, 5856–5870. [Google Scholar] [CrossRef]

- Kim, S.; Seo, J. Machine-learning-based classification of GPS signal reception conditions using a dual-polarized antenna in urban areas. In Proceedings of the IEEE/ION PLANS, Monterey, CA, USA, 24–28 April 2023; pp. 113–118. [Google Scholar] [CrossRef]

- Liu, H.; Darabi, H.; Banerjee, P.; Liu, J. Survey of wireless indoor positioning techniques and systems. IEEE Trans. Syst. Man Cybern. Part C Appl. Rev. 2007, 37, 1067–1080. [Google Scholar] [CrossRef]

- Lee, H.; Abdallah, A.; Park, J.; Seo, J.; Kassas, Z. Neural network-based ranging with LTE channel impulse response for localization in indoor environments. In Proceedings of the ICCAS, Busan, Republic of Korea, 13–16 October 2020; pp. 939–944. [Google Scholar] [CrossRef]

- Lee, H.; Kang, T.; Jeong, S.; Seo, J. Evaluation of RF fingerprinting-aided RSS-based target localization for emergency response. In Proceedings of the IEEE VTC, Helsinki, Finland, 19–22 June 2022; pp. 1–7. [Google Scholar] [CrossRef]

- Chen, G.; Meng, X.; Wang, Y.; Zhang, Y.; Tian, P.; Yang, H. Integrated WiFi/PDR/smartphone using an unscented Kalman filter algorithm for 3D indoor localization. Sensors 2015, 15, 24595–24614. [Google Scholar] [CrossRef]

- Guerra, E.; Munguia, R.; Bolea, Y.; Grau, A. Human collaborative localization and mapping in indoor environments with non-continuous stereo. Sensors 2016, 16, 275. [Google Scholar] [CrossRef]

- Kang, T.; Seo, J. Practical simplified indoor multiwall path-loss model. In Proceedings of the ICCAS, Busan, Republic of Korea, 13–16 October 2020; pp. 774–777. [Google Scholar] [CrossRef]

- Kim, W.; Seo, J. Low-cost GNSS simulators with wireless clock synchronization for indoor positioning. IEEE Access 2023, 11, 55861–55874. [Google Scholar] [CrossRef]

- Moon, H.; Park, H.; Seo, J. HELPS for emergency location service: Hyper-enhanced local positioning system. IEEE Wirel. Commun. 2024, 31, 276–282. [Google Scholar] [CrossRef]

- Lee, H.; Pullen, S.; Lee, J.; Park, B.; Yoon, M.; Seo, J. Optimal parameter inflation to enhance the availability of single-frequency GBAS for intelligent air transportation. IEEE Trans. Intell. Transp. Syst. 2022, 23, 17801–17808. [Google Scholar] [CrossRef]

- Lee, J.; Morton, Y.; Lee, J.; Moon, H.S.; Seo, J. Monitoring and mitigation of ionospheric anomalies for GNSS-based safety critical systems. IEEE Signal Process. Mag. 2017, 34, 96–110. [Google Scholar] [CrossRef]

- Sun, K.; Chang, H.; Lee, J.; Seo, J.; Jade Morton, Y.; Pullen, S. Performance benefit from dual-frequency GNSS-based aviation applications under Ionospheric Scintillation: A new approach to fading process modeling. In Proceedings of the ION ITM, San Diego, CA, USA, 21–24 January 2020; pp. 889–899. [Google Scholar] [CrossRef]

- Sun, A.K.; Chang, H.; Pullen, S.; Kil, H.; Seo, J.; Morton, Y.J.; Lee, J. Markov chain-based stochastic modeling of deep signal fading: Availability assessment of dual-frequency GNSS-based aviation under ionospheric scintillation. Space Weather 2021, 19, 1–19. [Google Scholar] [CrossRef]

- Ahmed, N.; Seo, J. Statistical evaluation of the multi-frequency GPS ionospheric scintillation observation data. In Proceedings of the ICCAS, Jeju, Republic of Korea, 18–21 October 2017; pp. 1792–1797. [Google Scholar] [CrossRef]

- Grant, A.; Williams, P.; Ward, N.; Basker, S. GPS jamming and the impact on maritime navigation. J. Navig. 2009, 62, 173–187. [Google Scholar] [CrossRef]

- Park, K.; Lee, D.; Seo, J. Dual-polarized GPS antenna array algorithm to adaptively mitigate a large number of interference signals. Aerosp. Sci. Technol. 2018, 78, 387–396. [Google Scholar] [CrossRef]

- Chen, Y.H.; Juang, J.C.; Seo, J.; Lo, S.; Akos, D.; De Lorenzo, D.; Enge, P. Design and implementation of real-time software radio for anti-interference GPS/WAAS sensors. Sensors 2012, 12, 13417–13440. [Google Scholar] [CrossRef]

- Lee, S.; Kim, E.; Seo, J. SFOL DME pulse shaping through digital predistortion for high-accuracy DME. IEEE Trans. Aerosp. Electron. Syst. 2022, 58, 2616–2620. [Google Scholar] [CrossRef]

- Kim, S.; Park, K.; Seo, J. Mitigation of GPS chirp jammer using a transversal FIR filter and LMS algorithm. In Proceedings of the ITC-CSCC, JeJu, Republic of Korea, 23–26 June 2019; pp. 1–4. [Google Scholar] [CrossRef]

- Chen, Y.H.; Juang, J.C.; De Lorenzo, D.; Seo, J.; Lo, S.; Enge, P.; Akos, D. Real-time software receiver for GPS controlled reception pattern antenna array processing. In Proceedings of the ION GNSS, Portland, OR, USA, 21–24 September 2010; pp. 1932–1941. [Google Scholar]

- Jubaer Alam, M.; Ahamed, E.; Faruque, M.; Islam, M.; Tamim, A. Left-handed metamaterial bandpass filter for GPS, Earth exploration-satellite and WiMAX frequency sensing applications. PLoS ONE 2019, 14, e0224478. [Google Scholar] [CrossRef]

- Kim, W.; Son, P.W.; Park, S.G.; Park, S.H.; Seo, J. First demonstration of the Korean eLoran accuracy in a narrow waterway using improved ASF maps. IEEE Trans. Aerosp. Electron. Syst. 2022, 58, 1492–1496. [Google Scholar] [CrossRef]

- Jirawimut, R.; Ptasinski, P.; Garaj, V.; Cecelja, F.; Balachandran, W. A method for dead reckoning parameter correction in pedestrian navigation system. IEEE Trans. Instrum. Meas. 2003, 52, 209–215. [Google Scholar] [CrossRef]

- Cho, B.S.; Moon, W.; Seo, W.J.; Baek, K.R. A dead reckoning localization system for mobile robots using inertial sensors and wheel revolution encoding. J. Mech. Sci. Technol. 2011, 25, 2907–2917. [Google Scholar] [CrossRef]

- Byun, S.; Lee, H.; Han, J.; Kim, J.; Choi, E.; Kim, K. Walking-speed estimation using a single inertial measurement unit for the older adults. PLoS ONE 2019, 14, e0227075. [Google Scholar] [CrossRef] [PubMed]

- Kim, Y.; Kwon, S. Balancing-prioritized anti-slip control of a two-wheeled inverted pendulum robot vehicle on low-frictional surfaces with an acceleration slip indicator. Machines 2023, 11, 553. [Google Scholar] [CrossRef]

- Filliat, D.; Meyer, J.A. Map-based navigation in mobile robots: I. A review of localization strategies. Cogn. Sys. Res. 2003, 4, 243–282. [Google Scholar] [CrossRef]

- Gamini Dissanayake, M.; Newman, P.; Clark, S.; Durrant-Whyte, H.; Csorba, M. A solution to the simultaneous localization and map building (SLAM) problem. IEEE Trans. Rob. Autom. 2001, 17, 229–241. [Google Scholar] [CrossRef]

- Tang, J.; Zhang, S.; Zou, Y.; Liu, F. An adaptive map-matching algorithm based on hierarchical fuzzy system from vehicular GPS data. PLoS ONE 2017, 12, 1–11. [Google Scholar] [CrossRef]

- Thrun, S. Probabilistic robotics. Commun. ACM 2002, 45, 52–57. [Google Scholar] [CrossRef]

- Durrant-Whyte, H.; Bailey, T. Simultaneous localization and mapping: Part I. IEEE Rob. Autom. Mag. 2006, 13, 99–108. [Google Scholar] [CrossRef]

- Bresson, G.; Feraud, T.; Aufrere, R.; Checchin, P.; Chapuis, R. Real-time monocular SLAM with low memory requirements. IEEE Trans. Intell. Transp. Syst. 2015, 16, 1827–1839. [Google Scholar] [CrossRef]

- Munguia, R.; Urzua, S.; Grau, A. Delayed monocular SLAM approach applied to unmanned aerial vehicles. PLoS ONE 2016, 11, e0167197. [Google Scholar] [CrossRef] [PubMed]

- Xu, H.; Chen, C.; Yin, Q.; Ma, C.; Guo, F. Virtual and real occlusion processing method of monocular visual assembly scene based on ORB-SLAM3. Machines 2025, 13, 212. [Google Scholar] [CrossRef]

- Forster, C.; Zhang, Z.; Gassner, M.; Werlberger, M.; Scaramuzza, D. SVO: Semidirect visual odometry for monocular and multicamera systems. IEEE Trans. Rob. 2017, 33, 249–265. [Google Scholar] [CrossRef]

- Mu, L.; Yao, P.; Zheng, Y.; Chen, K.; Wang, F.; Qi, N. Research on SLAM algorithm of mobile robot based on the fusion of 2D LiDAR and depth camera. IEEE Access 2020, 8, 157628–157642. [Google Scholar] [CrossRef]

- Daoud, H.; Sabri, A.; Loo, C.; Mansoor, A. SLAMM: Visual monocular SLAM with continuous mapping using multiple maps. PLoS ONE 2018, 13, e0195878. [Google Scholar] [CrossRef]

- Aznar, F.; Pujol, F.; Pujol, M.; Rizo, R.; Pujol, M.J. Learning probabilistic features for robotic navigation using laser sensors. PLoS ONE 2014, 9, e112507. [Google Scholar] [CrossRef]

- Chen, W.; Wang, X.; Gao, S.; Shang, G.; Zhou, C.; Li, Z.; Xu, C.; Hu, K. Overview of multi-robot collaborative SLAM from the perspective of data fusion. Machines 2023, 11, 653. [Google Scholar] [CrossRef]

- Shao, H.; Zhao, Q.; Chen, H.; Yang, W.; Chen, B.; Feng, Z.; Zhang, J.; Teng, H. Advancing simultaneous localization and mapping with multi-sensor fusion and point cloud de-distortion. Machines 2023, 11, 588. [Google Scholar] [CrossRef]

- Williams, B.; Cummins, M.; Neira, J.; Newman, P.; Reid, I.; Tardós, J. A comparison of loop closing techniques in monocular SLAM. Rob. Autom. Syst. 2009, 57, 1188–1197. [Google Scholar] [CrossRef]

- Newman, P.; Ho, K. SLAM–Loop closing with visually salient features. In Proceedings of the IEEE ICRA, Barcelona, Spain, 18–22 April 2005; pp. 635–642. [Google Scholar] [CrossRef]

- Wang, G.; Gao, S.; Ding, H.; Zhang, H.; Cai, H. LIO-CSI: LiDAR inertial odometry with loop closure combined with semantic information. PLoS ONE 2021, 16, e0261053. [Google Scholar] [CrossRef]

- Kurazume, R.; Nagata, S.; Hirose, S. Cooperative positioning with multiple robots. In Proceedings of the IEEE ICRA, San Diego, CA, USA, 8–13 May 1994; pp. 1250–1256. [Google Scholar] [CrossRef]

- Kim, J.; Kwon, J.W.; Seo, J. Simulation study on a method to localize four mobile robots based on triangular formation. In Proceedings of the ION Pacific PNT, Oahu, HI, USA, 1–4 May 2017; pp. 348–361. [Google Scholar] [CrossRef]

- Anousaki, G.; Kyriakopoulos, K. Simultaneous localization and map building for mobile robot navigation. IEEE Rob. Autom. Mag. 1999, 6, 42–53. [Google Scholar] [CrossRef]

- Guivant, J.; Nebot, E. Optimization of the simultaneous localization and map-building algorithm for real-time implementation. IEEE Trans. Rob. Autom. 2001, 17, 242–257. [Google Scholar] [CrossRef]

- Mourikis, A.; Roumeliotis, S. Predicting the performance of cooperative simultaneous localization and mapping (C-SLAM). Int. J. Rob. Res. 2006, 25, 1273–1286. [Google Scholar] [CrossRef]

- Poulose, A.; Han, D. Hybrid indoor localization using IMU sensors and smartphone camera. Sensors 2019, 19, 5084. [Google Scholar] [CrossRef] [PubMed]

- Rhee, J.; Seo, J. Ground reflection elimination algorithms for enhanced distance measurement to the curbs using ultrasonic sensors. In Proceedings of the ION ITM, Reston, VA, USA, 29 January–1 February 2018; pp. 224–231. [Google Scholar] [CrossRef]

- Rhee, J.; Seo, J. Low-cost curb detection and localization system using multiple ultrasonic sensors. Sensors 2019, 19, 1389. [Google Scholar] [CrossRef] [PubMed]

- Kim, J.; Kwon, J.W.; Seo, J. Mapping and path planning using communication graph of unlocalized and randomly deployed robotic swarm. In Proceedings of the ICCAS, Gyeongju, Republic of Korea, 16–19 October 2016; pp. 865–868. [Google Scholar] [CrossRef]

- Olson, E. AprilTag: A robust and flexible visual fiducial system. In Proceedings of the IEEE International Conference on Robotics and Automation, Shanghai, China, 9–13 May 2011; pp. 3400–3407. [Google Scholar] [CrossRef]

- Gebre-Egziabher, D. Design and Performance Analysis of a Low-Cost Aided Dead Reckoning Navigator. Ph.D. Dissertation, Stanford University, Stanford, CA, USA, 2004. [Google Scholar]

- Vezinet, J. Study of Future on-Board GNSS/INS Hybridization Architectures. Ph.D. Dissertation, University of Toulouse, Toulouse, France, 2014. [Google Scholar]

- Kwon, J.W.; Kim, J.; Seo, J. Multiple leader candidate and competitive position allocation for robust formation against member robot faults. Sensors 2015, 15, 10771–10790. [Google Scholar] [CrossRef]

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).