Abstract

This study presents the development of a software solution for processing, analyzing, and visualizing sensor data collected by an educational mobile robot, with a focus on statistical analysis and on identifying correlations between diverse datasets. The research utilized the PlatypOUs mobile robot platform, equipped with odometry and inertial measurement units (IMUs), to gather comprehensive motion data. To enhance the reliability and interpretability of the data, advanced data processing techniques such as moving averages, correlation analysis, and exponential smoothing were employed. Python, together with the Matplotlib library and the Visual Studio Code editor, was used for data visualization and analysis. The analysis provided key insights into the robot’s motion dynamics, specifically its stability during linear movements and its variability during turns. Moving average filtering and exponential smoothing significantly reduced the noise in the sensor data, enabling clearer identification of motion patterns. Correlation analysis revealed meaningful relationships between velocity and acceleration in different motion states. These findings underscore the value of advanced data processing techniques in improving the performance and reliability of educational mobile robots. The insights gained in this pilot project contribute to the optimization of navigation algorithms and motion control systems, enhancing the robot’s future potential in STEM education applications.

1. Introduction

Modern engineering education must respond to the new needs of industry that follow from the Industry 4.0 revolution. All sectors and professions are striving to benefit from new technologies such as cloud computing, the Internet of Things, and artificial intelligence (AI) [1,2]. The combination of machine learning (ML)/AI, robotics, and big data technologies is giving rise to new methods and fostering innovation. The OECD’s definition of an AI system emphasizes human-defined objectives, the ability to make predictions and recommendations, and the capability to influence real or virtual environments [3], while Haidegger defines a robot as a complex mechatronic system, enabled by electronics, sensors, actuators, and software, that executes tasks with a certain degree of autonomy [4]. Big data and data science, in turn, concern the analysis of huge amounts of structured, semi-structured, and unstructured data using advanced technologies [5,6].

Mobile robots serve as excellent tools for educators across various engineering fields, facilitating the implementation of skill development tasks [5,7]. The complexity of these systems provides valuable insights into how new technologies can be integrated to enhance system efficiency, reduce energy consumption, and improve materials and techniques. Numerous studies in the literature highlight the use of mobile robotic platforms in educational settings, demonstrating how they improve student learning and training in robotics by providing hands-on experience with physical platforms [8,9].

Recent advancements in educational mobile robots have significantly improved their capabilities, leading to increased adoption in educational institutions, including schools and universities. These robots can perform a wide range of tasks, from teaching basic programming to tackling more advanced engineering challenges. Notable examples include LEGO Mindstorms, which enables students and teachers to build and program their own robots while learning about mechanics, electronics, and software development. Dash and Dot, designed for younger learners, teach programming concepts in a playful and engaging manner. Additionally, TurtleBot serves as a popular educational tool, helping students grasp the fundamentals of robotics and artificial intelligence. The widespread use and versatility of mobile robots open up new educational opportunities and help students develop critical technology and engineering skills [8,10].

Despite their growing popularity, educational mobile robots still face challenges in sensor data analysis, motion control optimization, and navigation accuracy. Sensor data often contain noise and inconsistencies that impede accurate interpretation. Moreover, the lack of standardized tools for processing and visualizing sensor data limits the ability to identify patterns and trends effectively. Statistical analysis techniques, including moving averages, variance analysis, correlation studies, and exponential smoothing, are commonly used in mobile robotics to reduce noise, identify trends, and enhance data reliability. However, these methods often require manual implementation and lack integration into user-friendly software solutions tailored to educational purposes.

This study addresses these challenges by developing a modular software solution for processing, analyzing, and visualizing sensor data collected by the PlatypOUs mobile robot platform. The proposed system integrates statistical methods such as moving averages, correlation analysis, and exponential smoothing to improve data reliability and interpretability. By bridging the gap between raw sensor data and actionable insights, this research aims to optimize robot performance and support STEM education.

RVIZ is a comprehensive 3D visualization tool for robots using ROS [11,12]. It effectively displays sensor data and robot state information by combining and presenting it all in a single image overlaid on a simulated environment. However, a notable limitation of RVIZ is its lack of access to raw data, making it difficult for developers to interpret the underlying information directly. One potential solution is to enhance RVIZ with markers. Markers are simulated objects within the visualization that can be programmatically sized and positioned. For example, they can be used to represent radial lines from a laser scan or place cubes whose height corresponds to the robot’s current speed [13,14,15].

Alternatively, augmented reality offers another approach, as demonstrated by Collet and MacDonald [16]. Their work focuses on representing data within a real-world environment, using examples like laser and sonar scans.

In the domain of live programming, several languages feature their own visualizations. The concept of live programming dates back to Tanimoto’s work on VIVA [17], a programming language for image processing that uses a graphical representation of programs, similar to electronic circuits, with data flowing along wires to processing components. A similar data-flow-based approach is seen in Hancock’s Flogo I [18], a live programming language designed for teaching robotics, where sensor readings are displayed as numerical or Boolean data that are easy to interpret without the need for additional visualizations.

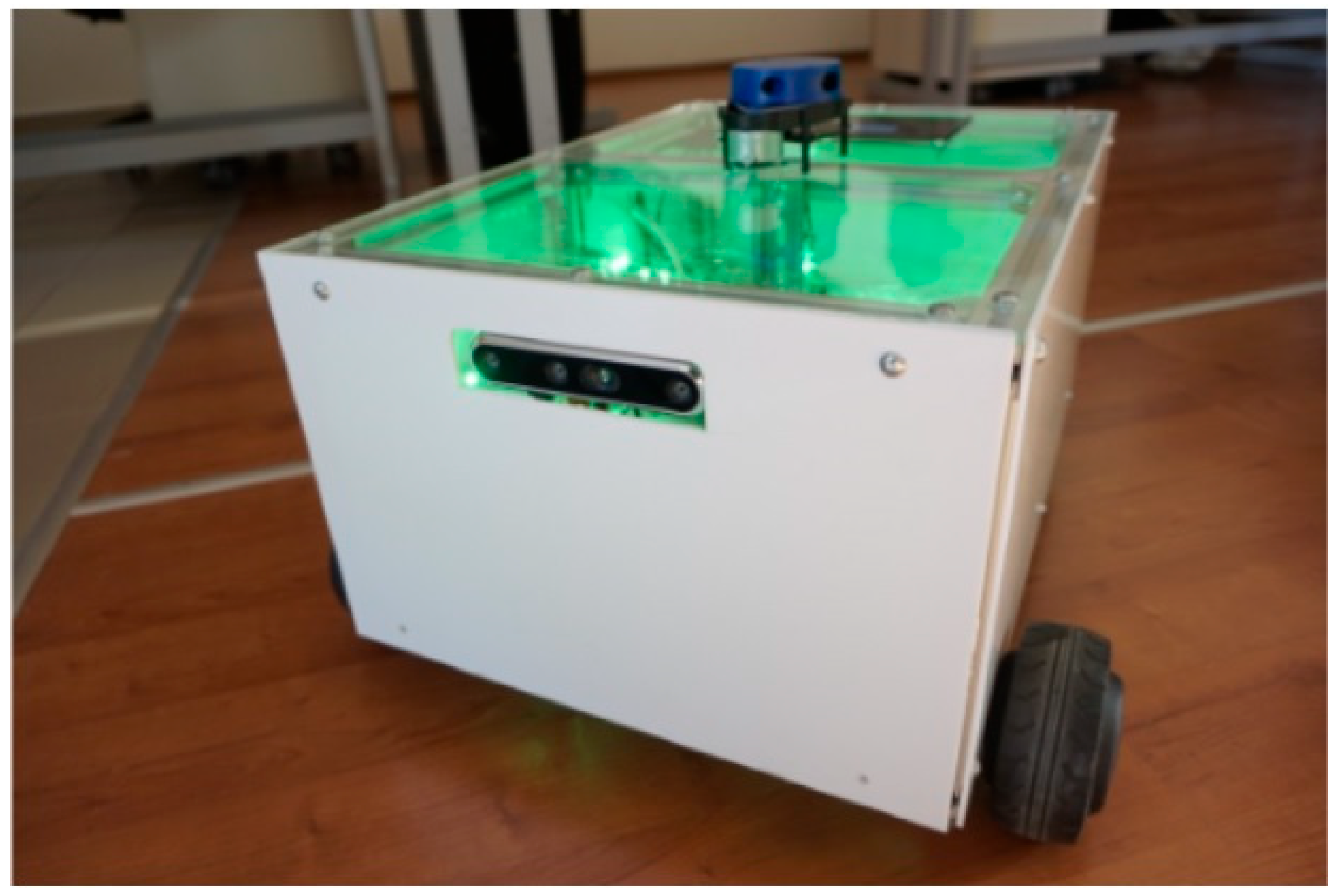

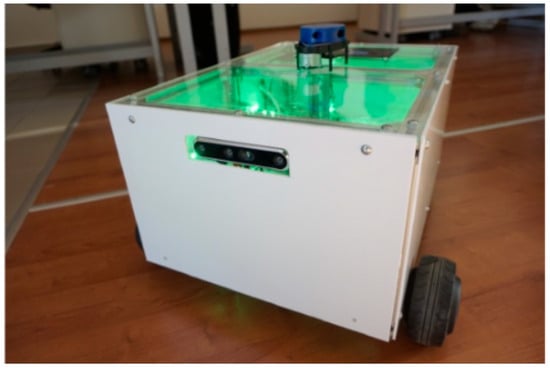

The PlatypOUs platform (0.2.0) (Figure 1) and its simulator offer students and self-study participants hands-on experience and teach them to tackle complex engineering problems in a professional yet engaging way [19]. The open-source mobile robot platform can be remotely controlled with an electroencephalography (EEG) device, the MindRove Arc 2 6-channel brain–computer interface headset (https://mindrove.com/product/eeg-headset/ accessed on 2 July 2025). The collected bio-signals are classified using a support vector machine, and the classification results provide motion instructions to the mobile platform. In addition to the real robot, a virtual environment has been created in Gazebo, offering the same capabilities as the physical device. The PlatypOUs project aims to support STEM education and extracurricular activities, particularly laboratory exercises and demonstrations. It spans several disciplines, including systems design, control engineering, mobile robotics, and machine learning, with applications in all these fields. The PlatypOUs platform was developed to serve as both a research and an educational tool for sensor-based robot control tasks [20]. It also offers a platform for experimenting with other emerging control modalities, such as augmented reality headsets and eye-gaze tracking [21].

Figure 1.

The PlatypOUs mobile robot in the lab environment of the Antal Bejczy Center for Intelligent Robotics at Obuda University.

The main contributions of our work include the development of a modular system that demonstrates robot sensorics and control functions across various education levels, along with the prototyping and testing activities conducted during the research. The features of our system related to sensory data collection and aggregation are summarized in Table 1.

Table 1.

Comparison of types of data that can be obtained from PlatypOUs and ROS-RVIZ-Gazebo.

2. Materials and Methods

2.1. Robotics and Automation

In educating the next generation of digital natives, a key question is how robotics and AI tools can play an effective role in education and what positive effects they have on educational processes, given the constant change that characterizes the current era of digitalization [22,23]. Robotic megatrends outline the key research and educational aspects of our time, from the most critical applications, such as surgery, to robot toys [24,25,26]. Computer science, programming, and robotics education play an important role in technical higher education. Developing programming skills is a priority for all students, with algorithmic thinking and problem-solving skills being particularly important. Tools used to pursue these objectives include the Webots development environment and Parallax’s Boe-bot programmable robot [27].

2.2. Mobile Robots in Education

C/C++ programming languages are commonly used to teach the fundamentals of robot programming, after which students can expand their knowledge to languages such as C#, Java, PHP, and others. In educational settings, programmable robots like Boe-bot are valuable tools for students of varying ages and skill levels. These robots provide an engaging and interactive way to learn programming, offering students the opportunity to apply theoretical knowledge in real-world scenarios. Programming in real-life contexts allows for the testing of different programming languages and environments. Furthermore, these robots can serve as teaching aids in a variety of subjects, including communication, artificial intelligence, image processing, algorithm theory, shape recognition, game theory, and swarm intelligence. Their versatility in education makes mobile robots an essential component of modern learning tools.

The PlatypOUs robot is a differential drive mobile platform, equipped with two driven wheels powered by brushless DC hub motors. These motors are controlled by an ODrive BLDC driver board, an open-source motor control solution with freely available schematics and firmware. The driver board communicates with the robot’s main computer, an Intel NUC8i5BEH mini PC, via a USB-Serial interface (https://intelnuc.co.in/intel-nuc-kit-with-8th-generation/intel-nuc-kit-nuc8i5beh/ accessed on 2 July 2025).

The robot is powered by two 24 V, 15 Ah Li-ion battery packs connected in parallel. While the motor driver board receives full battery voltage, the main computer and sensors are powered at 12 V or 5 V through voltage regulators. Safety features include fuses on all power lines, a main power switch, and an emergency switch. The emergency switch disconnects only the motor driver, preventing movement while ensuring the computer remains operational.

Our system is equipped with a range of sensors, including a YDLIDAR X4 laser scanner (https://www.ydlidar.com/product/ydlidar-x4-pro accessed on 2 July 2025), an Intel RealSense D455 depth camera (https://www.intelrealsense.com/depth-camera-d455/ accessed on 2 July 2025), and wheel encoders. These sensors collect environmental data, allowing the robot to map its surroundings, determine its precise location, detect obstacles, and navigate autonomously to target destinations.

2.3. Limitations of Prior Art

RViz is a standard visualization tool in ROS-based robotics education [11]. It focuses primarily on the live 3D visualization of robot states and does not provide access to raw sensor data for detailed analysis. Simulation environments like Gazebo are focused on replicating physical environments and robot dynamics but offer limited built-in tools for time-series data analysis and correlation inspection of sensor signals. Additionally, while platforms like LEGO Mindstorms and TurtleBot are effective for introductory robotics, they often provide black-box abstractions, limiting students’ exposure to the details of sensor data interpretation and filtering.

Visual programming environments such as VIVA [17] and Flogo I [18] support flow-based or block-based learning but are not designed for analyzing physical sensor data from real robots. We therefore took a different approach: integrating live coding in Python (3.9) with direct manipulation of the data pipeline, which provides an authentic data science experience within robotics education and is a key differentiator from visual-only programming tools.

3. Data Processing and Visualization

Data processing and visualization are critical components of mobile robotics applications, as the data collected by sensors can provide valuable insights [19]. Various methods, such as filtering, noise reduction, normalization, and transformation, can be used to process these data. Data visualization—the graphical representation and interpretation of data—has become an essential tool in natural sciences, social sciences, and engineering. The evolution of data visualization closely follows the development of application domains. While new types of diagrams, often minor variations of existing ones, continue to emerge in the field, more challenging areas involve the efficient and accurate visualization of large datasets, commonly referred to as ‘big data’ problems. Ultimately, data visualization aids in understanding data and facilitating communication, playing a pivotal role in scientific research, business decision-making, and other fields where data need to be interpreted and visualized [28,29].

As the volume, variety, and sources of data within organizations continue to grow, so does the importance of leveraging these data in analytics, data science, and machine learning initiatives to generate actionable business insights. This increasing demand is placing significant pressure on data engineering teams, as transforming raw, unstructured data into clean, reliable datasets is a critical step in enabling these systems to deliver valuable results. The ETL process—Extract, Transform, and Load—is used by data engineers to extract data from various sources, transform them into a usable and reliable format, and then load them into systems that end users can access to solve business problems [11,13,16,30].
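For the robot logs used in this study, the three ETL steps can be illustrated with pandas. The sketch below is a hypothetical example rather than the study’s pipeline: the function name `etl_odometry` and the cleaning rules (dropping incomplete rows, sorting by the timestamp `t`, keeping one row per timestamp) are assumptions chosen for illustration, and the column layout follows the wheel_odom.csv format described in Section 3.4.

```python
import io

import pandas as pd


def etl_odometry(source, destination=None) -> pd.DataFrame:
    """Hypothetical ETL step for an odometry log such as wheel_odom.csv."""
    # Extract: read the raw CSV from a path or any file-like object
    df = pd.read_csv(source)
    # Transform: drop incomplete rows, order by time, keep one row per timestamp
    df = (
        df.dropna()
        .sort_values("t", kind="stable")
        .drop_duplicates(subset="t", keep="first")
        .reset_index(drop=True)
    )
    # Load: write the cleaned table where downstream tools can read it
    if destination is not None:
        df.to_csv(destination, index=False)
    return df


# Small in-memory example: one duplicated row and one row with a missing value
raw = io.StringIO(
    "t,x,y,theta,v,omega\n"
    "0.02,0.010,0,0,0.5,0\n"
    "0.01,0.005,0,0,0.5,0\n"
    "0.02,0.010,0,0,0.5,0\n"
    "0.03,,0,0,0.5,0\n"
)
clean = etl_odometry(raw)
print(clean)
```

Passing a file path such as `"wheel_odom.csv"` as `source` and an output path as `destination` would apply the same steps to a real log.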

3.1. Visual Studio Code

Visual Studio Code (1.86) is a free code editor that makes it quick to get started with coding. It supports a wide range of languages, including Python, Java, C++, and JavaScript. While coding, it suggests completions for lines of code and quick fixes for common errors, and its debugger can step through code line by line to show what is happening. The editor provides all the standard tools expected of a modern code editor, including syntax highlighting, customizable keyboard shortcuts, automatic bracket insertion, and snippets. It runs on Windows, Linux, and macOS [31].

3.2. Microsoft Power BI

Power BI (2024) is a business analytics service developed by Microsoft. It provides interactive visualizations and business intelligence capabilities through an easy-to-use interface for creating reports and dashboards. It can connect to multiple data sources, clean and transform data, create customized calculations, and visualize data using charts, graphs, and tables. Power BI can be accessed through a web browser, on a mobile device, or as a desktop application, and it integrates with other Microsoft tools such as Excel and SharePoint. Its advanced data modeling and analysis capabilities help organizations make data-driven decisions and gain insight into their business performance [31].

3.3. Matplotlib Library

Matplotlib (3.9) is a versatile data visualization library for Python, which makes it possible to create different types of graphs (histograms, scatter plots, bar charts) in cooperation with NumPy. Developers can use Matplotlib’s APIs (Application Programming Interfaces) to incorporate graphs into GUI (Graphical User Interface) applications. A typical Python Matplotlib script usually requires only a few lines of code to visualize the data. Matplotlib includes two main APIs for graphical representation:

- The pyplot API, a state-based interface provided by the matplotlib.pyplot module, in which plotting functions act on an implicitly tracked current figure and axes.

- An object-oriented (OO) API offering a collection of objects, providing more flexibility than pyplot. This API has direct access to the Matplotlib backend system [32].
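The difference between the two interfaces is easiest to see side by side. The following sketch draws the same synthetic angular-velocity signal (not data from the robot) once with pyplot and once with the object-oriented API; writing the figures to in-memory PNG buffers instead of files or a window is an arbitrary choice made to keep the example self-contained.

```python
import io

import matplotlib
matplotlib.use("Agg")  # off-screen backend so the sketch runs without a display
import matplotlib.pyplot as plt
import numpy as np

t = np.linspace(0.0, 10.0, 1001)   # synthetic time axis (illustrative only)
omega = 0.5 * np.sin(0.6 * t)      # synthetic angular-velocity signal

# pyplot (state-based) API: each call acts on the implicit "current" figure and axes
plt.figure()
plt.plot(t, omega, label="omega")
plt.xlabel("time [s]")
plt.ylabel("omega")
plt.legend()
pyplot_png = io.BytesIO()
plt.savefig(pyplot_png, format="png")
plt.close()

# Object-oriented API: the Figure and Axes objects are created and used explicitly
fig, ax = plt.subplots()
ax.plot(t, omega, label="omega")
ax.set_xlabel("time [s]")
ax.set_ylabel("omega")
ax.legend()
oo_png = io.BytesIO()
fig.savefig(oo_png, format="png")
plt.close(fig)
```

The object-oriented form is usually preferable when a script builds several subplots, because each call names the Axes it modifies instead of relying on the implicit current axes.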

We chose VS Code because it is user-friendly and well suited to both sensor data analysis and visualization. As mentioned above, although it supports several programming languages, Python was our preferred option. The editor copes well with large data analysis projects, and its built-in version control integration makes it easier to organize and manage them. VS Code also stands out for its extensive documentation, which provides support and guidance to users, and for its powerful debugging tools, which are essential for quickly identifying and solving problems that arise during data analysis.

3.4. Methods

The data were collected with the sensors of the PlatypOUs robot and saved in separate CSV files. The wheel odometry data were calculated from the encoder readings and saved in the file wheel_odom.csv at a frequency of 100 Hz, in the following format:

- t: UNIX timestamp

- x: position x coordinate

- y: position y coordinate

- theta: orientation angle

- v: linear velocity

- omega: angular velocity

Brushless DC (BLDC) hub wheels with integrated 12-bit wheel encoders are advanced components used in differential drive platforms to provide precise wheel odometry measurements. These hub wheels incorporate BLDC motors, which offer high efficiency, low maintenance, and smooth operation. Additionally, the integrated encoders provide a resolution of 4096 counts per revolution (12 bits), allowing for fine-grained measurement of wheel rotations. The wheel encoders play a crucial role in determining the position and speed of each wheel by measuring the rotation in small increments.

To calculate the movement of a differential drive platform using encoder values, the control system reads the encoder ticks from each wheel. The distance traveled by each wheel is determined by multiplying the number of encoder ticks by the wheel circumference and dividing by the encoder resolution. In a differential drive system, the robot’s linear and angular movements are derived from the distances traveled by the left and right wheels. The forward movement is the average of the distances traveled by both wheels, calculated as the sum of the left and right distances divided by two. The change in orientation is determined by the difference in distances divided by the wheelbase width.
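The encoder-to-pose computation described above can be sketched in Python as follows. Only the 4096-count encoder resolution comes from the text; the wheel radius and track (wheelbase) width are hypothetical parameters, and the midpoint integration of the heading is one common choice that the text does not specify.

```python
import math
from dataclasses import dataclass

TICKS_PER_REV = 4096  # 12-bit wheel encoders, as stated in the text


@dataclass
class Pose:
    x: float = 0.0      # position [m]
    y: float = 0.0      # position [m]
    theta: float = 0.0  # heading [rad], counter-clockwise positive


def wheel_distance(delta_ticks: int, wheel_radius: float) -> float:
    """Distance rolled by one wheel: ticks * circumference / encoder resolution."""
    return delta_ticks * (2.0 * math.pi * wheel_radius) / TICKS_PER_REV


def update_pose(pose: Pose, d_ticks_left: int, d_ticks_right: int,
                wheel_radius: float, track_width: float) -> Pose:
    """Advance the pose by one encoder reading (differential drive kinematics)."""
    d_left = wheel_distance(d_ticks_left, wheel_radius)
    d_right = wheel_distance(d_ticks_right, wheel_radius)
    d_center = (d_left + d_right) / 2.0         # forward movement
    d_theta = (d_right - d_left) / track_width  # heading change, left turn positive
    mid_heading = pose.theta + d_theta / 2.0    # midpoint integration (an assumption)
    new_theta = pose.theta + d_theta
    return Pose(
        x=pose.x + d_center * math.cos(mid_heading),
        y=pose.y + d_center * math.sin(mid_heading),
        theta=math.atan2(math.sin(new_theta), math.cos(new_theta)),  # wrap to (-pi, pi]
    )


# Hypothetical geometry: 0.1 m wheel radius, 0.5 m track width
pose = update_pose(Pose(), 4096, 4096, wheel_radius=0.1, track_width=0.5)
print(pose)  # one full revolution of both wheels: x is about 0.628 m, heading unchanged
```

Calling `update_pose` once per encoder sample, feeding each result back in as `pose`, accumulates the trajectory in the same way the x, y, and theta columns of wheel_odom.csv evolve over time.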

These calculations enable precise control of the robot’s trajectory by continuously updating its position and heading based on the encoder data. The integration of BLDC hub wheels with high-resolution encoders significantly enhances the accuracy and reliability of autonomous navigation in differential drive platforms.

The IMU measurements were saved in the file imu.csv, at a frequency of 200 Hz, in the following format:

- t: UNIX timestamp (nanosec)

- gyro_x: x-axis angular velocity

- gyro_y: y-axis angular velocity

- gyro_z: z-axis angular velocity

- acc_x: x-axis linear acceleration

- acc_y: y-axis linear acceleration

- acc_z: z-axis linear acceleration
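Before analysis, it can be worth checking that the logs really have the documented layout and sampling rates (100 Hz for wheel_odom.csv, 200 Hz for imu.csv). The sketch below is a hypothetical helper, not part of the study’s code: it converts the `t` column to pandas datetimes and estimates the rate from the median timestamp spacing. The IMU timestamps are documented in nanoseconds; the unit of the odometry timestamp is not stated, so seconds are only an assumption there.

```python
import io

import numpy as np
import pandas as pd


def load_log(source, time_unit: str) -> pd.DataFrame:
    """Read a sensor log with a UNIX timestamp column 't' and add a datetime column."""
    df = pd.read_csv(source)
    if "t" not in df.columns:
        raise ValueError("expected a timestamp column named 't'")
    df["time"] = pd.to_datetime(df["t"], unit=time_unit)
    return df.sort_values("time").reset_index(drop=True)


def estimated_rate_hz(df: pd.DataFrame) -> float:
    """Sampling rate from the median timestamp spacing (robust to dropped samples)."""
    dt = df["time"].diff().dt.total_seconds().median()
    return 1.0 / dt


# Synthetic stand-in for imu.csv: 200 samples spaced 5 ms apart (nanosecond timestamps)
t_ns = np.arange(0, 1_000_000_000, 5_000_000)
demo_csv = pd.DataFrame({"t": t_ns, "gyro_z": 0.0}).to_csv(index=False)
imu = load_log(io.StringIO(demo_csv), time_unit="ns")
print(round(estimated_rate_hz(imu)))  # 200
```

For the real files this would be `load_log("imu.csv", time_unit="ns")` and, if the odometry timestamps are indeed in seconds, `load_log("wheel_odom.csv", time_unit="s")`.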

Bosch BMI055 is a high-performance inertial measurement unit (IMU) designed for precise motion tracking and orientation sensing applications. It integrates a 3-axis accelerometer and a 3-axis gyroscope into a compact 3.0 × 4.5 × 0.95 mm LGA package, making it suitable for a wide range of applications, including mobile devices, gaming, and industrial control. The accelerometer in BMI055 features selectable measurement ranges of ±2 g, ±4 g, ±8 g, and ±16 g, with a sensitivity of 1024 LSB/g in the ±2 g range and a noise density of 150 μg/√Hz. The gyroscope offers measurement ranges of ±125°/s, ±250°/s, ±500°/s, ±1000°/s, and ±2000°/s, with a sensitivity of 262.4 LSB/°/s in the ±125°/s range and a noise density of 0.014°/s/√Hz. The device operates over a supply voltage range of 2.4 to 3.6 V and supports both I2C and SPI digital interfaces, ensuring versatile integration into various systems. Additionally, BMI055 has low power consumption, with typical values of 0.14 mA for the accelerometer and 5.9 mA for the gyroscope, making it an efficient choice for battery-powered applications. With its high precision, low noise, and robust design, the Bosch BMI055 IMU is well suited for demanding applications requiring accurate and reliable inertial sensing.

4. Results

The data support several kinds of analysis, including:

- Position and orientation tracking: determining the robot’s current position and orientation from the odometry data.

- Velocity and acceleration analysis: tracking the robot’s linear and angular velocity and its linear acceleration to identify motion patterns, turns, and accelerations.

- Rotational speed and direction: using IMU gyroscope data to determine the robot’s rotational speed and direction. IMU linear acceleration data can also help the robot navigate terrain and avoid collisions with obstacles.

The analyses performed on the data include:

- Moving averages: a statistical method that averages the values of a dataset within a sliding time interval or window. It is often used to smooth time series and to determine trends, as it reduces the variability of the data and reveals longer-term patterns.

- Scattering (dispersion): measures how far the data spread from the mean, for example as variance or standard deviation. This is particularly important in assessing the stability and reliability of sensor data, and it is also useful for checking sensor quality, for example by determining how the values measured by a sensor vary over time.

- Correlation: correlation analysis identifies relationships between different sensor data, which also helps to optimize data collection processes.

The importance of two key data sources generated by the mobile robot—odometry and IMU data—will be discussed in this section. The odometry data, stored in the ‘wheel_odom.csv’ file, record changes in the robot’s position and orientation over time. The IMU data in the ‘imu.csv’ file include the angular velocities and linear accelerations measured by the sensors. Variance analysis will provide a deeper understanding of the consistency and variability of the data, particularly regarding the accuracy of the encoders and sensors, as well as the evaluation of the robot’s dynamic behavior. The Python code used for the analysis is presented in Appendix A.

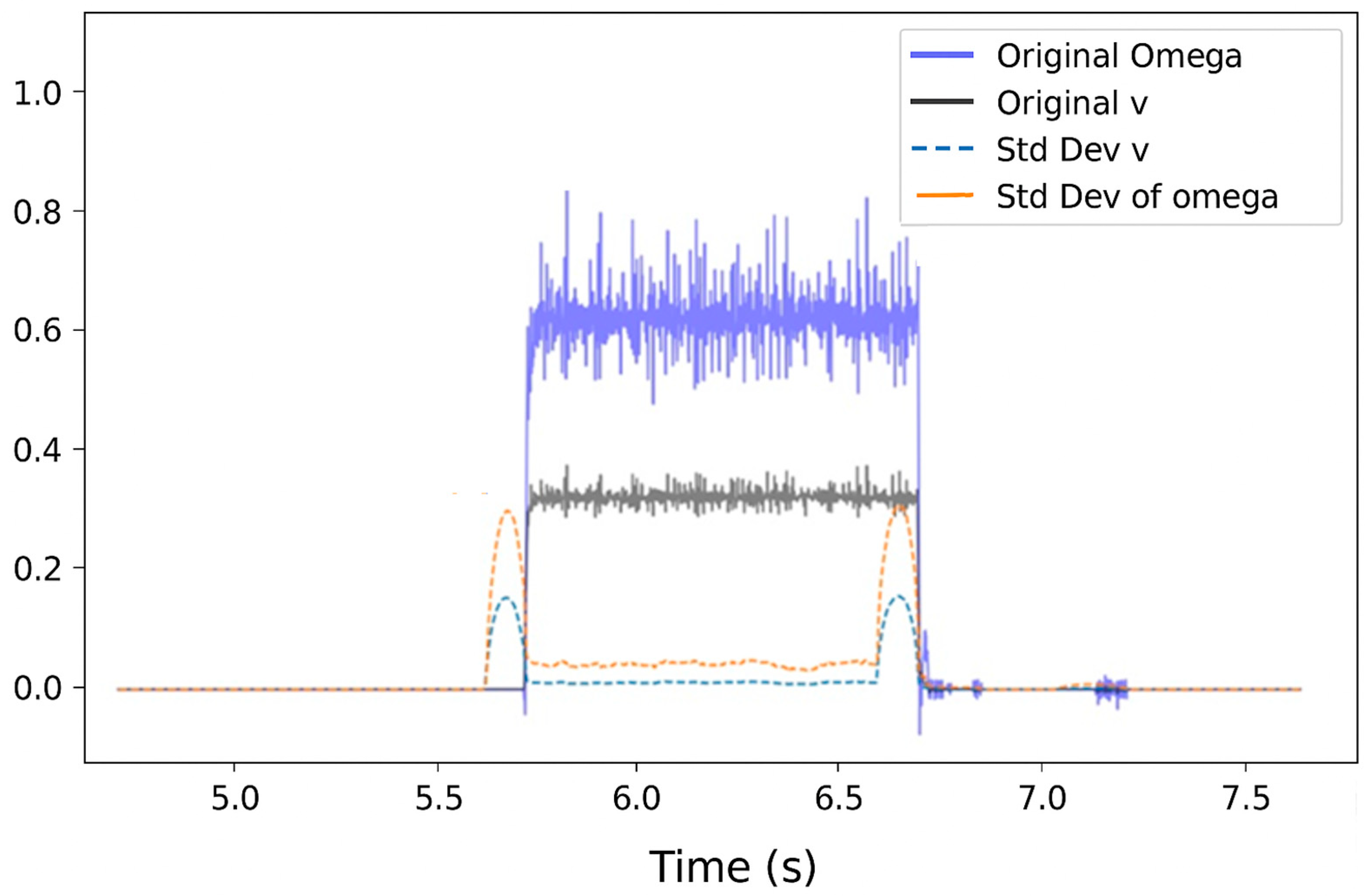

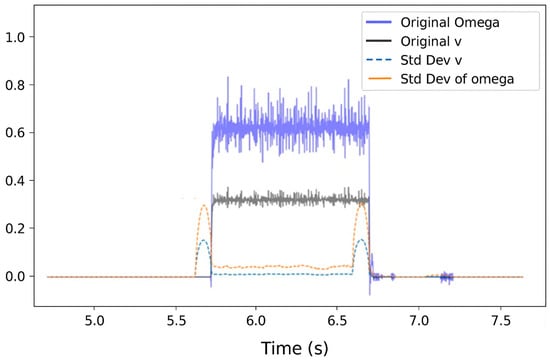

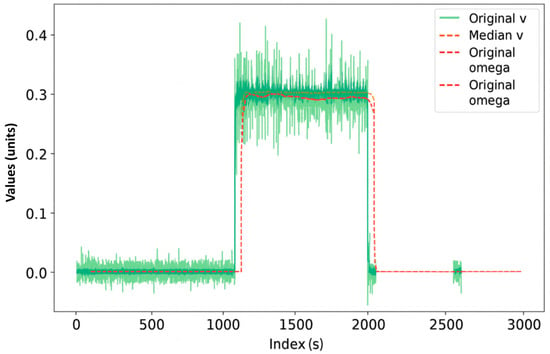

First, the odometry data are read from the ‘wheel_odom.csv’ file, loaded from the user’s desktop, using the pandas library. From this file we select the robot’s linear velocity (‘v’) and angular velocity (‘omega’) and apply a 100-sample moving window to calculate the standard deviation of the data. The code iterates through the dataset, calculating the standard deviation at each point from the current and the next 99 data points, so the result reflects the temporal variability of the data. When fewer than 100 data points remain at the end of the dataset, the code repeats the last calculated standard deviation, so that every data point is assigned a value. Figure 2 shows the standard deviation of the odometry data.

Figure 2.

Original and Standard Deviation of Omega over Time.

The calculated standard deviations are then appended to the original dataset, creating a new, extended dataset. This new dataset is saved in a separate CSV file.
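The forward-looking window and the tail handling described above can be reproduced with a short function. This is a sketch, not the code from Appendix A: the function names are hypothetical, and the use of the sample standard deviation (`ddof=1`, the pandas default) is an assumption, since the text does not say which estimator was used.

```python
import numpy as np
import pandas as pd


def forward_rolling_std(series: pd.Series, window: int = 100) -> pd.Series:
    """Std of each sample and the next window-1 samples; the tail repeats the last full value."""
    values = series.to_numpy(dtype=float)
    out = np.full(len(values), np.nan)
    last = np.nan
    for i in range(len(values)):
        if i + window <= len(values):
            last = values[i:i + window].std(ddof=1)  # sample standard deviation
        out[i] = last  # near the end, reuse the last full-window value
    return pd.Series(out, index=series.index, name=f"{series.name}_std")


def add_std_columns(df: pd.DataFrame, columns=("v", "omega"), window: int = 100) -> pd.DataFrame:
    """Return a copy of df with one '<column>_std' column appended per input column."""
    extended = df.copy()
    for col in columns:
        extended[f"{col}_std"] = forward_rolling_std(extended[col], window)
    return extended


# Usage on the real log (file names are placeholders):
# odom = pd.read_csv("wheel_odom.csv")
# add_std_columns(odom).to_csv("wheel_odom_std.csv", index=False)
```

The explicit loop mirrors the description in the text; for long logs, a vectorized equivalent is to reverse the series, apply `rolling(window).std()`, reverse it back, and forward-fill the trailing gap.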

- “Original Omega” shows the angular velocity of the robot as a function of time. When the robot turns left, omega is positive, as indicated by the blue line in the graph. Changes in angular velocity are critical for the robot’s navigation system because they determine the robot’s orientation changes, including their direction and rate.

- “Std Dev of Omega” (standard deviation) represents the variability of angular velocity over time. A low standard deviation indicates that the angular velocity is relatively consistent, while higher values suggest variability or instability. The orange dashed line on the graph highlights periods when the robot’s turning speed exhibited greater fluctuations.

- “Original v” shows the robot’s linear speed as a function of time. Linear speed is important for positioning the robot during straight-line movements. Maintaining a constant velocity is essential for predictable and accurate navigation, as shown in Figure 3.

- “Std Dev of v” (standard deviation) reflects the variability of linear velocity. If the robot is moving smoothly at a predefined speed, the standard deviation will remain low. Higher values indicate that the robot’s speed is variable, making precise tracking and stable operation more challenging.

Figure 3. Data representation from the sensory system.

To summarize, the standard deviations of omega and v capture two distinct aspects of the robot’s motion. The standard deviation of omega reflects the stability and predictability of the robot’s turns, while that of v reflects the smoothness and reliability of its linear motion. Both measures are crucial for fine-tuning robot motion and improving navigation algorithms, and a thorough analysis of these data can help system designers and operators optimize motion models and enhance overall robot performance.

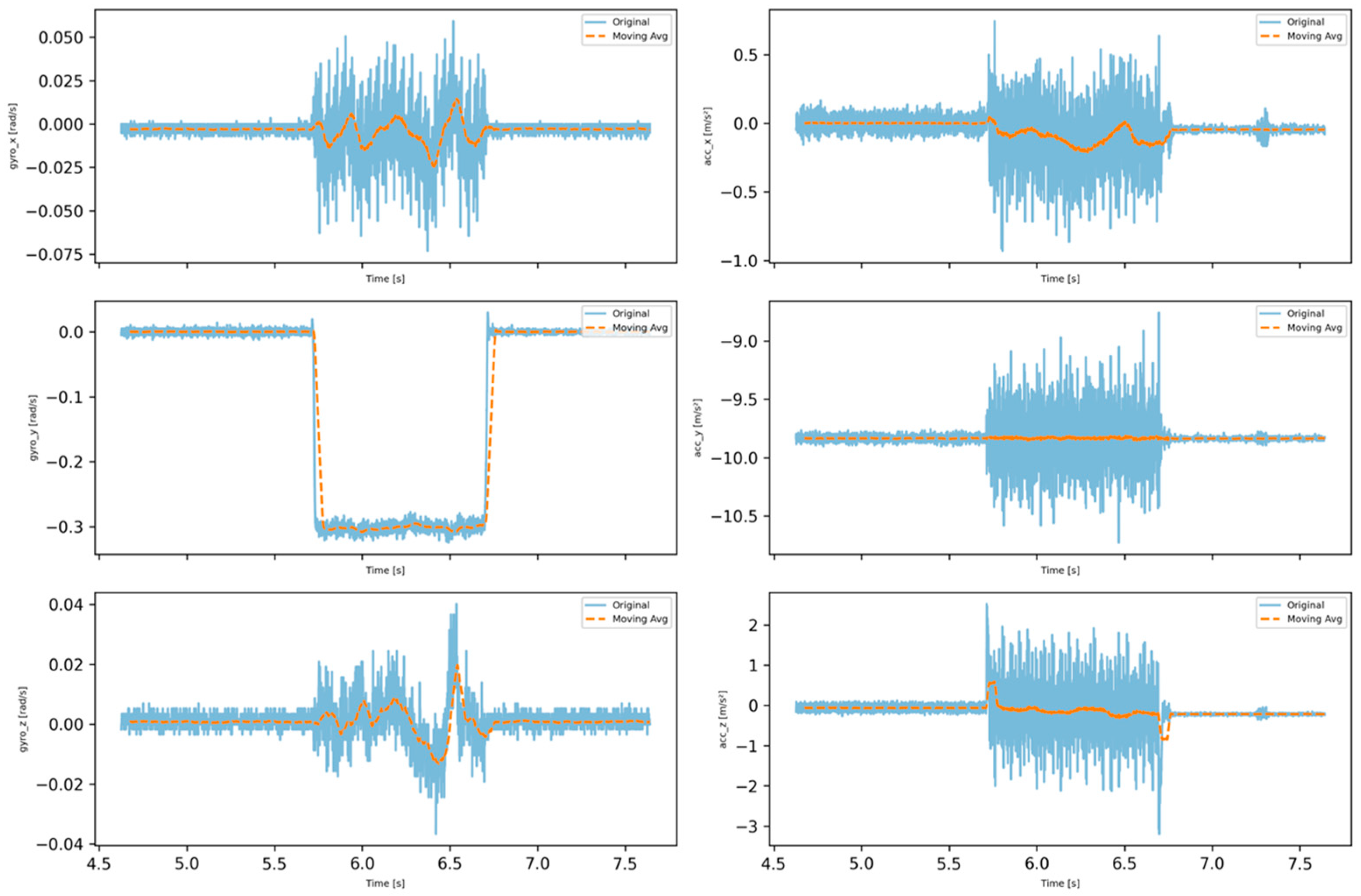

Overall, the low variance observed in Gyro_x and Acc_x indicates that the robot maintained a stable direction and velocity along the x-axis, which is a positive sign during the turning maneuver. In contrast, the high variance in Gyro_y and Acc_y shows that the robot’s motion along the y-axis was more variable. These changes during the left turn were expected, as they are directly linked to the turning motion; however, variability beyond what the turn itself explains could imply inconsistent turning behavior and pose a potential issue from both a stability and a motion control perspective. Additionally, the variation in the Gyro_z and Acc_z data indicates that the robot’s tilt changed during the maneuver.
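Per-axis variances of this kind can be computed directly from imu.csv. The helper below is a hypothetical sketch: the channel names are passed in by the caller (in the usage comment they follow the Gyro_x/Acc_x labels used in this paragraph), and the maneuver boundaries `t_start` and `t_end` must be chosen by the analyst, since the text does not give them.

```python
import pandas as pd


def axis_variances(imu: pd.DataFrame, columns, t_start=None, t_end=None) -> pd.Series:
    """Sample variance of each IMU channel, optionally restricted to t_start <= t <= t_end."""
    mask = pd.Series(True, index=imu.index)
    if t_start is not None:
        mask &= imu["t"] >= t_start
    if t_end is not None:
        mask &= imu["t"] <= t_end
    # Sorted ascending, so the most stable channels come first
    return imu.loc[mask, list(columns)].var(ddof=1).sort_values()


# Usage (turn_start and turn_end are hypothetical, in the units of the 't' column):
# imu = pd.read_csv("imu.csv")
# channels = ["gyro_x", "gyro_y", "gyro_z", "acc_x", "acc_y", "acc_z"]
# print(axis_variances(imu, channels, t_start=turn_start, t_end=turn_end))
```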

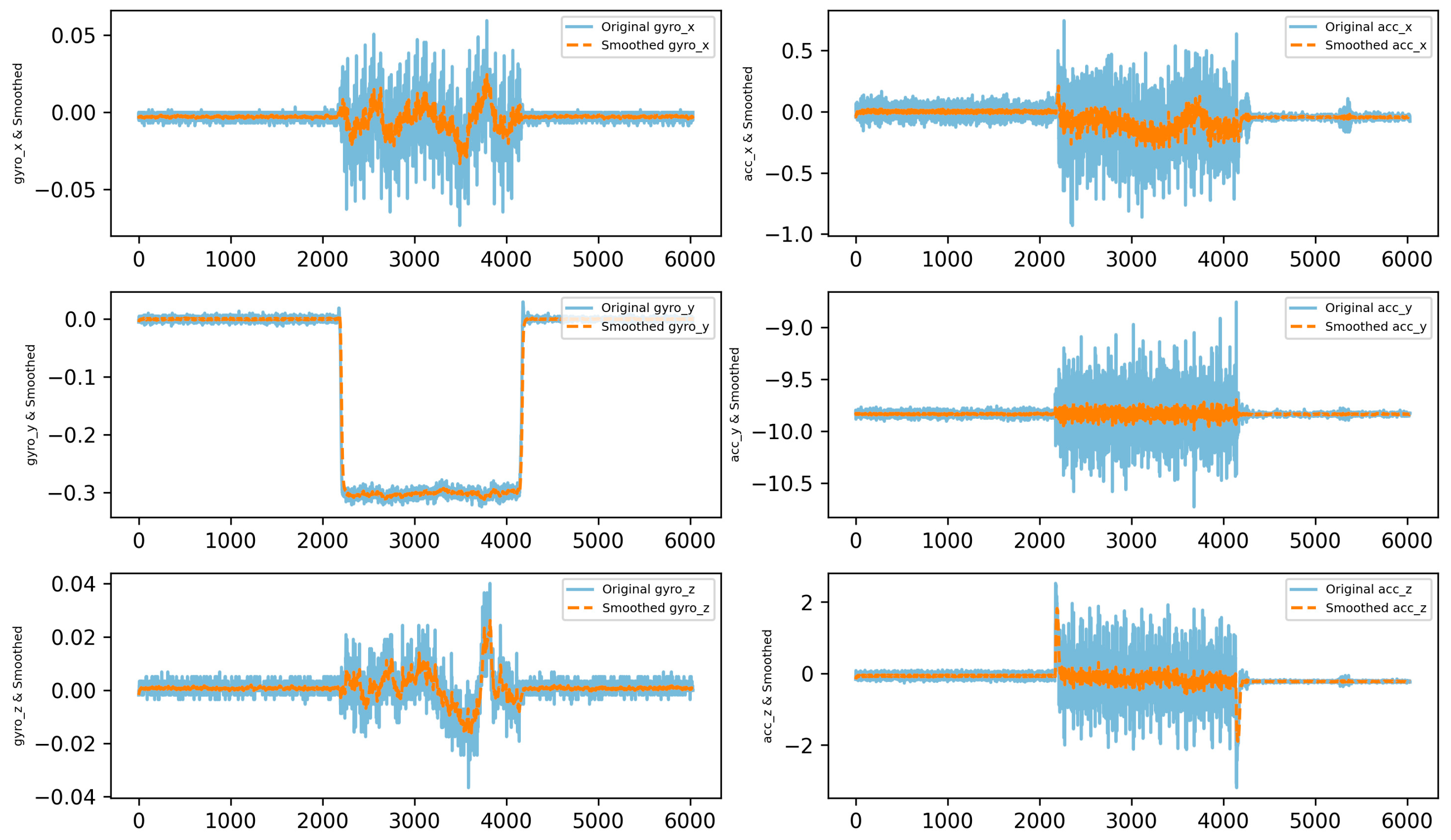

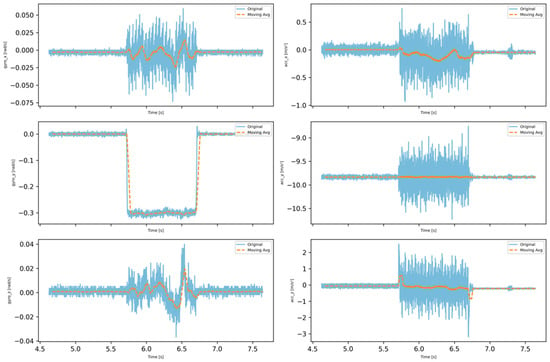

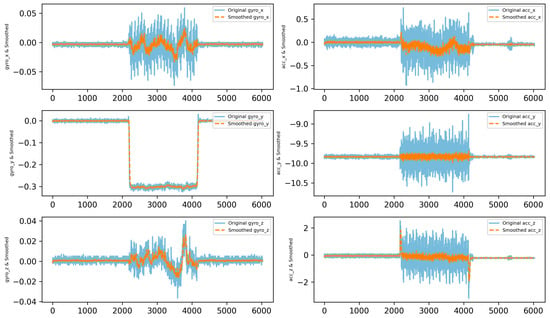

Applying a moving average filter to the IMU and wheel odometry data (Figure 4) offers significant benefits. This filter is an effective tool for reducing noise and minimizing short-term fluctuations while preserving the underlying trends in the data. It works by averaging the most recent measurements, which smooths out short-term changes and yields a more consistent data series. This is particularly useful in robotics, where sensor data often contain random noise or sudden changes caused by the robot’s movements or by environmental factors.

Figure 4.

Moving average (window size 100) of the odometry data over 1000 iterations.

When the robot is navigating, the moving average filter helps smooth the data, providing a clearer picture of the true trends in its movements. This allows navigation algorithms to make more reliable decisions. By filtering out random fluctuations that could distort the evaluation of the robot’s motion, the moving average provides a more stable foundation for motion planning and fine-tuning motion control systems. The use of this filter enhances the robustness of the robot system against external disturbances, promotes more accurate and smoother movements, and ultimately contributes to improved robot performance and reliability.

In the robot’s IMU data, the left turn appears mainly on the gyroscope’s ‘gyro_y’ axis. A spike in the ‘gyro_y’ graph, where the angular velocity changes sharply, indicates the left turn. Since the gyroscope’s measurement axes are defined in the robot’s own coordinate frame, the sign of the value along a given axis indicates the direction of rotation: a large positive or negative deflection on the ‘gyro_y’ axis may indicate that the robot has turned counter-clockwise (left) or clockwise (right) (Figure 5).

Figure 5.

Moving average chart of odometry data (3D speed and acceleration).

The usefulness of the moving average in this case is twofold: it highlights the turning event by smoothing the data, and it helps to distinguish real motion from short-term noise. This smoothing is particularly useful for correctly identifying and describing the turning motion in time, as it allows the analysis to focus on the real motion rather than on the noise.
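Using the moving average to highlight turning events can be sketched as a simple threshold detector on the smoothed `gyro_y` channel. The window size, the threshold, and the sign convention (`left_positive`) are hypothetical parameters; as noted above, which sign corresponds to a left turn depends on how the gyroscope axes are oriented on the robot.

```python
import pandas as pd


def detect_turns(gyro: pd.Series, window: int = 100, threshold: float = 0.3,
                 left_positive: bool = True) -> pd.Series:
    """Label each sample 'left', 'right' or 'none' from a smoothed gyro channel."""
    smoothed = gyro.rolling(window, min_periods=1).mean()  # trailing moving average
    labels = pd.Series("none", index=gyro.index)
    positive = smoothed > threshold
    negative = smoothed < -threshold
    labels[positive] = "left" if left_positive else "right"
    labels[negative] = "right" if left_positive else "left"
    return labels


# Usage (threshold in the units of the gyro_y column; values are illustrative):
# imu = pd.read_csv("imu.csv")
# imu["turn"] = detect_turns(imu["gyro_y"], window=100, threshold=0.3)
```

With `window=1` no smoothing is applied, so an isolated spike in the raw signal would be flagged as a turn; a wider window averages such spikes away while a sustained turn still crosses the threshold.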

The moving average of a time series $Y_t$ over a window of size $k$ is calculated as:

$$MA_t = \frac{1}{k} \sum_{i=0}^{k-1} Y_{t-i}$$

where $MA_t$ is the moving average at time $t$, $Y_t$ is the value of the time series at time $t$, $k$ is the size of the moving average window, and $i$ is the index within the window.
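The trailing moving average, in which each output value is the mean of the current sample and the previous k − 1 samples, maps directly onto a convolution with a uniform kernel. The sketch below is illustrative; `moving_average` is a hypothetical helper name.

```python
import numpy as np
import pandas as pd


def moving_average(y, k: int) -> np.ndarray:
    """Trailing moving average: mean of y[t-k+1], ..., y[t] for every t >= k-1."""
    y = np.asarray(y, dtype=float)
    if not 1 <= k <= len(y):
        raise ValueError("window size k must be between 1 and len(y)")
    kernel = np.ones(k) / k
    return np.convolve(y, kernel, mode="valid")


y = [1.0, 2.0, 3.0, 4.0, 5.0]
print(moving_average(y, 3))                            # [2. 3. 4.]
print(pd.Series(y).rolling(window=3).mean().tolist())  # same values, NaN-padded at the start
```

The pandas form `Series.rolling(window=k).mean()` keeps the result aligned with the original index (with NaN for the first k − 1 positions), which is convenient when the smoothed column is appended to the original dataset.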

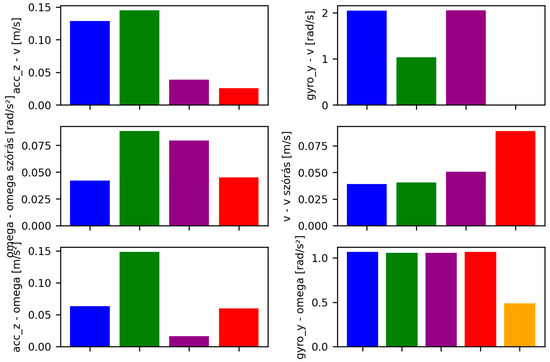

4.1. Correlation

Correlation in the context of mobile robots can help identify the extent to which data from the robot’s sensors are interdependent. For instance, when measuring the robot’s motion and the changes in its environment, correlation can shed light on how data from individual sensors influence each other. This method is particularly useful in the development of a robot navigation system, as it helps to understand the relationship between data from various sensors and the robot’s movements and interactions with its environment. By using correlation, developers can better optimize the robot’s sensor system, thereby improving navigation accuracy and efficiency.

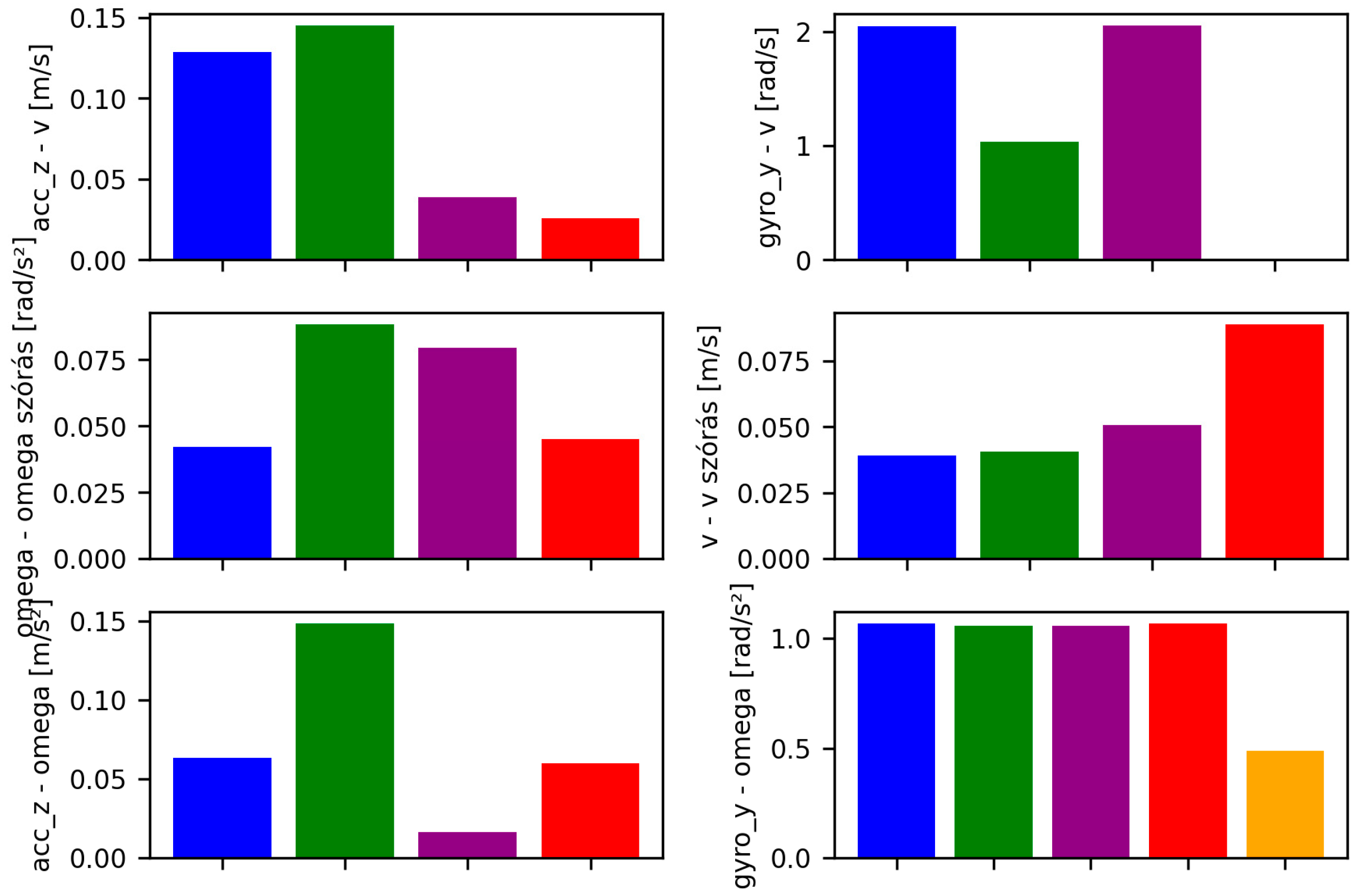

Correlation plays a crucial role in analyzing the PlatypOUs robot’s sensor data. Odometry data from the wheel encoders, which provide information on position and velocity, and IMU data, which measure acceleration and angular velocity, offer different types of motion-related information. Correlation can be used to explore how these datasets are interconnected, aiding in the creation of a more accurate model of the robot’s motion and navigation. For example, if the velocity measured by the wheel encoders does not correlate well with the accelerations measured by the IMU, this may signal sensor errors or calibration issues (Figure 6).

Figure 6.

Correlation between acceleration and velocity of the robot.

The low correlation between acceleration and velocity during straight-line motion indicates that changes in velocity during this phase are less influenced by acceleration. This suggests that the robot’s velocity remains relatively stable during straight motion, with acceleration playing a smaller role in velocity changes. The observed low correlation could also be due to issues with sensor data, which are still under investigation.

In contrast, the higher correlation observed during turns suggests that acceleration has a greater impact on velocity changes in this phase. Notably, this relationship is even more pronounced during right turns, indicating that acceleration has a stronger influence on velocity in right turns compared to left turns.

These findings imply that the robot’s physical characteristics or sensor sensitivity may vary depending on the type of motion. The insights gained from data analysis can help identify and address potential sensor failures, ultimately improving the robot’s performance and reliability under different conditions.

The data analysis reveals minimal correlation between linear velocity and Z-axis acceleration during straight-line travel, suggesting that acceleration has little effect on velocity in this state. However, in turns, particularly right turns, the correlation between acceleration and velocity becomes stronger. A similar pattern is observed with angular velocity along the Y-axis and linear velocity. The variability of angular velocity is larger in right turns, which could reflect differences in motion dynamics or sensor accuracy. Additionally, the variance in linear velocity is greatest during straight travel and significantly smaller during stationary turns. In both cases, the highest correlation is observed during left turns in place, suggesting that the relationship between acceleration and angular velocity is strongest in this specific motion state.
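Because the analysis compares correlations across motion states, the computation can be done per state. The pandas sketch below assumes the odometry and IMU streams have already been time-aligned into one table and that each row is labeled with its motion state. The column names `v`, `acc_z` and `state`, the state labels, the helper `correlation_by_state` and the synthetic values are all hypothetical.

```python
import numpy as np
import pandas as pd


def correlation_by_state(df: pd.DataFrame, x: str, y: str, state_col: str = "state") -> pd.Series:
    """Pearson correlation between columns x and y, computed separately per motion state."""
    return df.groupby(state_col)[[x, y]].apply(lambda g: g[x].corr(g[y]))


# Synthetic, illustrative log: in "straight" acc_z is unrelated to v,
# in "right_turn" it closely follows v
rng = np.random.default_rng(7)
n = 200
v_straight = rng.normal(0.3, 0.02, n)
v_turn = rng.normal(0.2, 0.05, n)
log = pd.DataFrame({
    "state": ["straight"] * n + ["right_turn"] * n,
    "v": np.concatenate([v_straight, v_turn]),
    "acc_z": np.concatenate([
        rng.normal(9.81, 0.05, n),                                # independent of v
        9.81 + 2.0 * (v_turn - 0.2) + rng.normal(0.0, 0.01, n),   # tracks v
    ]),
})
print(correlation_by_state(log, "v", "acc_z"))
```

Grouping before correlating matters: pooling all states into one series can mask, or even invert, the relationship that holds within each individual motion state.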

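In practice, the theoretical (population) correlation is not available, so the empirical correlation is calculated from the $n$ paired samples $(x_i, y_i)$; for the Pearson correlation coefficient, this is:

$$
r = \frac{\sum_{i=1}^{n}(x_i-\bar{x})(y_i-\bar{y})}{\sqrt{\sum_{i=1}^{n}(x_i-\bar{x})^2}\,\sqrt{\sum_{i=1}^{n}(y_i-\bar{y})^2}}
$$

where $\bar{x}$ and $\bar{y}$ are the sample means of the two series.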

Correlation can be calculated using statistical software; in most packages, it is found under the Statistics or Analyze menu. The software reports descriptive statistics, the value of the correlation coefficient (r), the significance level (p, often labeled “Sig.”), and sometimes also a confidence interval.
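The coefficient reported by such software can be reproduced in a few lines. The NumPy sketch below assumes the Pearson coefficient, $r = \sum_i (x_i-\bar{x})(y_i-\bar{y}) \big/ \sqrt{\sum_i (x_i-\bar{x})^2 \sum_i (y_i-\bar{y})^2}$, together with the usual test statistic $t = r\sqrt{(n-2)/(1-r^2)}$ for the null hypothesis of zero correlation. The helper names and the example values are illustrative.

```python
import numpy as np


def pearson_r(x, y) -> float:
    """Sample Pearson correlation coefficient of two equal-length series.

    Returns nan (with a NumPy warning) if either series is constant.
    """
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    if x.shape != y.shape or x.size < 2:
        raise ValueError("x and y must have the same shape and at least 2 values")
    dx = x - x.mean()  # deviations from the sample means
    dy = y - y.mean()
    return float(np.sum(dx * dy) / np.sqrt(np.sum(dx ** 2) * np.sum(dy ** 2)))


def t_statistic(r: float, n: int) -> float:
    """Test statistic for H0: no correlation; Student's t with n - 2 degrees of freedom."""
    if abs(r) >= 1.0:
        return float(np.sign(r) * np.inf)
    return float(r * np.sqrt((n - 2) / (1.0 - r ** 2)))


# Made-up example values: encoder velocity (m/s) and IMU acceleration (m/s^2)
v = np.array([0.10, 0.12, 0.15, 0.14, 0.18, 0.20, 0.21, 0.19])
acc = np.array([0.00, 0.02, 0.03, 0.01, 0.04, 0.02, 0.01, -0.02])
r = pearson_r(v, acc)
print(f"r = {r:.3f} (np.corrcoef: {np.corrcoef(v, acc)[0, 1]:.3f}), t = {t_statistic(r, v.size):.2f}")
```

`np.corrcoef` yields the same r, and `scipy.stats.pearsonr(x, y)` additionally returns a two-sided p-value, which corresponds to the significance level reported by statistical packages.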

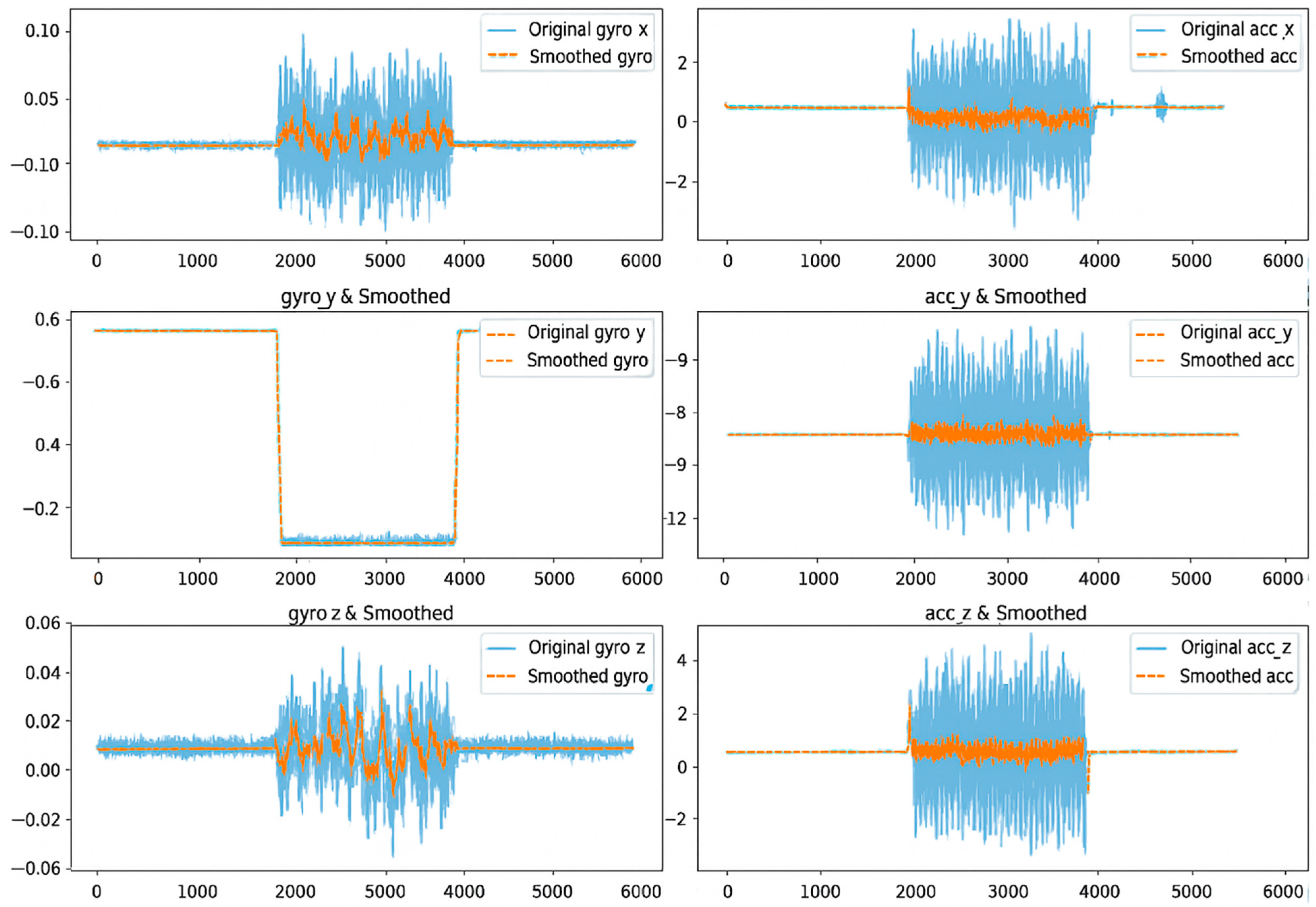

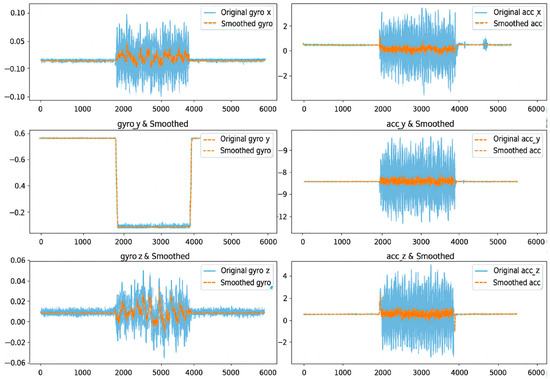

4.2. Exponential Smoothing

The application of exponential filtering in the analysis of sensor data from mobile robots is highly effective for reducing noise and adapting to rapid environmental changes. This filter type is particularly well-suited for mobile robots with limited resources, as it is computationally efficient and easy to implement. Exponential filters help suppress sensor-generated noise, supporting stable robot navigation and effective interaction with the environment.

By assigning more weight to recent measurements, exponential filters allow robots to respond quickly to environmental changes, which is crucial for efficient operation in dynamic environments. Additionally, they help robots track long-term trends while filtering out short-term fluctuations.

Setting the α value correctly is crucial, as it determines the speed at which the filter reacts to new data relative to older data. The optimal value for α depends on factors such as the robot’s speed, environmental conditions, and the specific task at hand. This parameter is typically set through experimental or adaptive methods.

Exponential smoothing effectively reduces the noise in the gyroscope and accelerometer data, allowing the robot’s real-world motion to be observed more accurately. The rotation about the X-axis shows a stable trend, although with some outliers. The Y-axis rotation was relatively stable, with little noise. The angular velocity and acceleration along the Z-axis were initially noisy but became more stable after smoothing, reflecting the dynamics of the robot’s motion. The smoothed data make the underlying trends, and any changes in them, easier to interpret, which will facilitate more accurate navigation and environmental interactions (Figure 7).

Figure 7.

Sensor values with exponential smoothing.

Exponential smoothing effectively reduced noise in the gyroscope and accelerometer data, allowing the robot to detect and react more accurately to changes such as a left turn. The smoothed data show smoother and more easily interpretable trends, which helps the robot navigate stably and interact with its environment more accurately. Filtering also helps the robot’s control system make more accurate decisions, as smoothed signals contain fewer random fluctuations.

However, it is important to carefully adjust the smoothing factor α to determine the optimal balance between noise reduction and real-time responsiveness, especially for dynamic maneuvers, such as turns.
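This trade-off can be quantified with a short sketch. Assuming the recursion $S_t = \alpha Y_t + (1-\alpha) S_{t-1}$ initialised with $S_0 = Y_0$, it measures, for a few candidate values of α, (i) the residual standard deviation after smoothing pure white noise, standing in for a stationary gyroscope channel, and (ii) the number of samples the filter needs to reach 90% of a unit step, standing in for a sudden turn. The candidate α values, the noise level and the helper names are illustrative assumptions.

```python
import numpy as np


def exp_smooth(y, alpha: float) -> np.ndarray:
    """S_t = alpha * Y_t + (1 - alpha) * S_{t-1}, initialised with S_0 = Y_0."""
    y = np.asarray(y, dtype=float)
    s = np.empty_like(y)
    s[0] = y[0]
    for t in range(1, y.size):
        s[t] = alpha * y[t] + (1.0 - alpha) * s[t - 1]
    return s


def rise_samples(alpha: float, level: float = 0.9) -> int:
    """Samples needed for the smoothed response to a unit step (from 0) to reach `level`."""
    if not 0.0 < alpha <= 1.0:
        raise ValueError("alpha must lie in (0, 1]")
    s, n = 0.0, 0
    while s < level:
        s = alpha * 1.0 + (1.0 - alpha) * s
        n += 1
    return n


# Illustrative only: white noise standing in for a stationary gyroscope channel
rng = np.random.default_rng(42)
noise = rng.normal(0.0, 0.2, 20_000)

for alpha in (0.05, 0.2, 0.5):
    residual = exp_smooth(noise, alpha).std()
    print(f"alpha={alpha:<4}  residual noise std={residual:.3f}  "
          f"samples to 90% of a step={rise_samples(alpha)}")
```

For white-noise input, the steady-state output standard deviation is $\sqrt{\alpha/(2-\alpha)}$ times that of the input, while the 90% step response needs the smallest $n$ with $(1-\alpha)^n \le 0.1$; lowering α improves the first figure and worsens the second.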

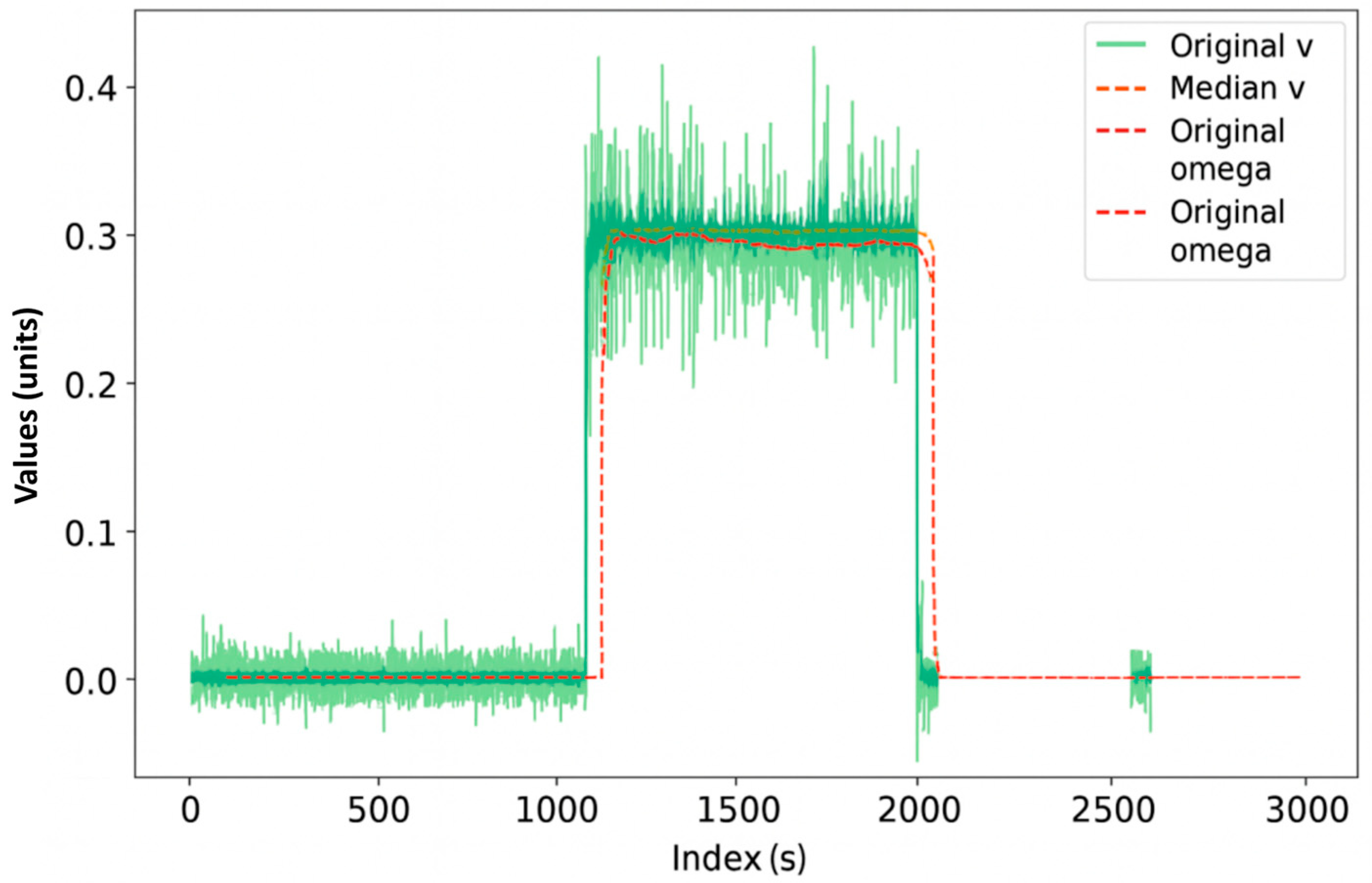

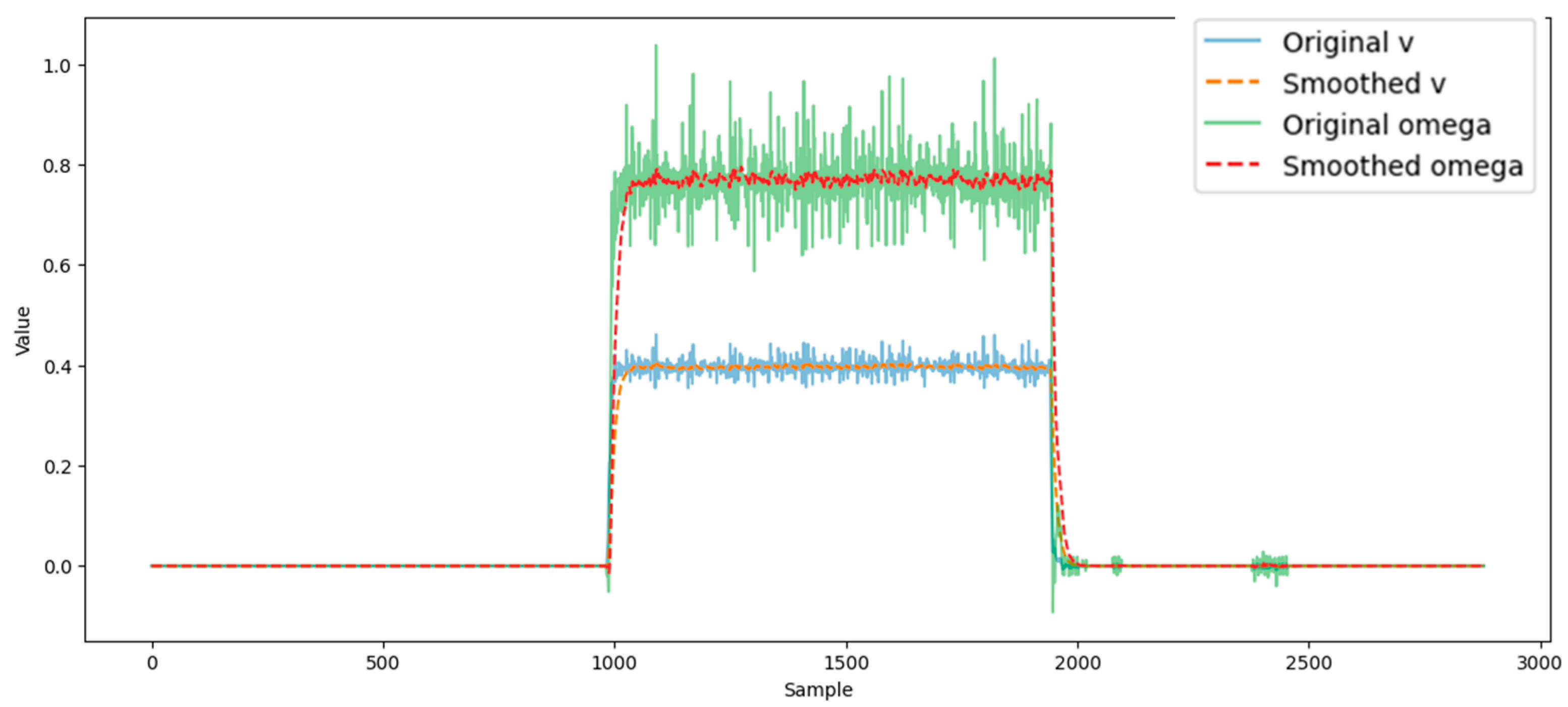

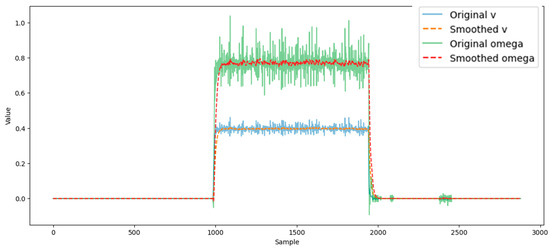

The “Original v” data show slight noise but are stable, indicating steady progress. The smoothed linear velocity curve further reduces the noise while preserving the overall trend of the original data. Its consistent level indicates a steady speed, suggesting stability during the turning maneuver (Figure 8).

Figure 8.

Wheel sensor values with exponential smoothing.

The “Original omega” data show significant noise and fluctuation, probably due to wheel encoder or measurement inaccuracies. The smoothed angular velocity curve shows a sudden change, marking the time of the left turn. After the turn, omega returns to near zero, indicating that the robot has completed the turn and is moving in a straight line again.
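Reading the turn timing off the smoothed omega curve can be automated. The sketch below smooths omega exponentially and reports the longest interval in which the absolute smoothed value exceeds a threshold. The smoothing factor (0.1), the 0.2 rad/s threshold, the helper `detect_turn` and the synthetic trace are hypothetical choices, and uniform sampling is assumed.

```python
import numpy as np


def exp_smooth(y, alpha: float) -> np.ndarray:
    """S_t = alpha * Y_t + (1 - alpha) * S_{t-1}, initialised with S_0 = Y_0."""
    y = np.asarray(y, dtype=float)
    s = np.empty_like(y)
    s[0] = y[0]
    for t in range(1, y.size):
        s[t] = alpha * y[t] + (1.0 - alpha) * s[t - 1]
    return s


def detect_turn(omega, alpha=0.1, threshold=0.2):
    """Return (start, end) of the longest run where |smoothed omega| > threshold.

    `end` is exclusive; returns None if the threshold is never exceeded.
    """
    active = np.abs(exp_smooth(omega, alpha)) > threshold
    # Pad with False so every run has a rising (+1) and a falling (-1) edge
    edges = np.diff(np.concatenate(([False], active, [False])).astype(int))
    starts = np.flatnonzero(edges == 1)
    ends = np.flatnonzero(edges == -1)
    if starts.size == 0:
        return None
    longest = np.argmax(ends - starts)
    return int(starts[longest]), int(ends[longest])


# Synthetic wheel-odometry omega (rad/s): straight, left turn, straight, plus noise
rng = np.random.default_rng(3)
omega_true = np.concatenate([np.zeros(100), np.full(100, 0.8), np.zeros(100)])
omega_meas = omega_true + rng.normal(0.0, 0.1, omega_true.size)
print(detect_turn(omega_meas))  # the true turn occupies samples 100-199
```

Because the smoothed signal lags the raw one, the detected interval starts and, especially, ends a few samples late; a larger α shortens this lag at the cost of more residual noise.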

The stability of the linear velocity suggests that the robot was able to maintain its forward velocity throughout the turning maneuver, which may be a good indication of the efficiency of its propulsion and control system. The sharp change in the smoothed omega curve shows that the turn was dynamic, and because exponential smoothing filters out the noise, the time and duration of the actual turn can be easily identified. Taken together, these data indicate that the robot performed a controlled and smooth left turn. Processing the odometry data in this way allows for a more accurate analysis and a better understanding of the robot’s motion.

The formula for exponential smoothing can be expressed as follows:

$$
S_t = \alpha Y_t + (1-\alpha)\, S_{t-1}
$$

where $S_t$ is the current smoothed value, $Y_t$ is the current observed value, $S_{t-1}$ is the previous smoothed value, and $\alpha$ is the smoothing factor (a value between 0 and 1) that determines how much weight is given to the current observation relative to the previous smoothed value. The closer $\alpha$ is to 1, the more weight is placed on the current observation and the less smoothing is applied.
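Unrolling the recursion $S_t = \alpha Y_t + (1-\alpha) S_{t-1}$ makes the weighting of past samples explicit. Assuming the common initialisation $S_0 = Y_0$ (the initial value is not specified here), repeated substitution gives:

$$
S_t = \alpha \sum_{j=0}^{t-1} (1-\alpha)^{j}\, Y_{t-j} + (1-\alpha)^{t}\, Y_0,
\qquad
\alpha \sum_{j=0}^{t-1} (1-\alpha)^{j} + (1-\alpha)^{t} = 1 .
$$

The weights decay geometrically with the age $j$ of a sample and always sum to one, which is why recent measurements dominate the smoothed value. For example, with $\alpha = 0.5$ the current sample, the previous one and the one before that receive weights 0.5, 0.25 and 0.125.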

5. Discussion

The stability or low variance in Gyro_x and Acc_x indicates that the robot maintained its direction and velocity along the x-axis, which is a positive sign during turning maneuvers. In contrast, the high variance in Gyro_y and Acc_y suggests that the robot’s motion along the y-axis was more variable, which may indicate inconsistent turning behavior. This variability could pose a problem from a stability and motion control perspective. The changes in Gyro_y and Acc_y during the left turn were expected, as these data are directly related to the turning motion. Additionally, changes in Gyro_z and Acc_z data show that the robot’s tilt also changed during the maneuver.

In summary, calculating the standard deviations of omega and v characterizes two distinct aspects of robot motion. The standard deviation of omega assesses the stability and predictability of the robot’s turns, while that of v examines the smoothness and reliability of its linear motion. Both measures are essential for fine-tuning robot motion and developing navigation algorithms.

The moving average filter serves two key purposes in this context: first, it helps highlight the turning event by smoothing the data, and second, it helps distinguish real movement from short-term noise. This smoothing is particularly useful for accurately identifying and describing the turning motion over time, as it allows us to focus on the true motion rather than on noisy data.

The acceleration values along the acc_x and acc_y axes may also vary during the turn, but these variations are generally less characteristic than the gyroscope data. On the acc_z axis, the reading is dominated by gravity, so the turn is unlikely to be evident unless the robot tilts or moves vertically during the maneuver.

The moving average filter makes it easier to identify and analyze dynamic behaviors associated with a left turn, providing a clearer picture of the robot’s directional change. By using the moving average, we can see that the robot’s linear velocity remained relatively constant for most of the measurement period, while the angular velocity graph clearly indicates the time and duration of the turn. The smoothed moving average makes the robot’s motion easier to interpret, especially during turning maneuvers, where the original data may be distorted by significant noise and fluctuations.

Much of the system development originates from the experience of robotics and STEM education practices at Obuda University. Unlike standard robot visualization tools such as RViz, which focus on spatial representations without exposing raw sensor streams for analytical exploration, our proposed platform directly integrates data processing and visualization into the educational workflow. This allows learners to engage with statistical methods like moving average filtering, exponential smoothing, and correlation analysis, fostering a deeper understanding of robot dynamics and the influence of sensor noise. Compared to simulation tools like Gazebo or educational kits like LEGO Mindstorms, which abstract away sensor-level complexities, PlatypOUs emphasizes data transparency and equips students with practical skills in both robotics and data analysis. This dual focus on real-time data-driven decision-making and system debugging addresses a gap in current robotics education tools, aiming to enhance the learning experience in STEM.

6. Future Work

The work is still ongoing, with significant potential for future development. As a first step, we plan to extend our data pool with further representative motion patterns, such as straight-line traversal and an approximation of a slalom-like path, each repeated over numerous iterations.

Further developments could extend the research in several directions to gain a deeper understanding of robot motion and enable the application of adaptive non-linear control algorithms [33]. It is crucial to utilize various types and amounts of data for a more detailed analysis, which will help better understand different aspects of the robot’s movement and ultimately allow for the application of deep learning methods to the dataset [34].

Additionally, the introduction of new sensors, sensor fusion, and advanced filtering techniques—such as the integration of laser scanners and the latest versions of depth cameras (e.g., the Intel RealSense D555)—could enhance the robot’s ability to detect motion more accurately and improve its navigation capabilities [35]. Advances in material development, particularly in additive manufacturing, could lead to the creation of lightweight yet durable structures for the robot’s base, enabling the integration of more sensors [36].

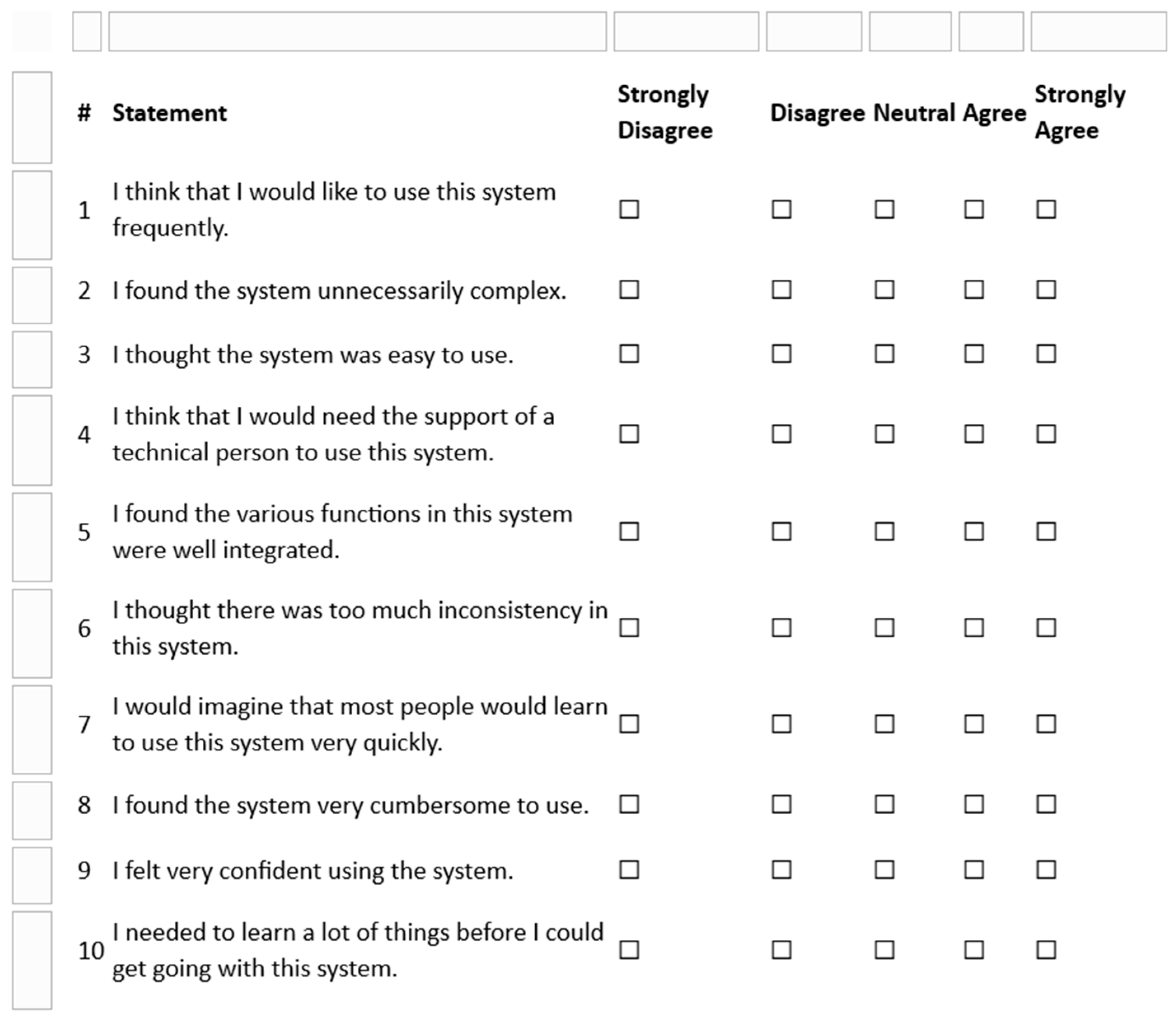

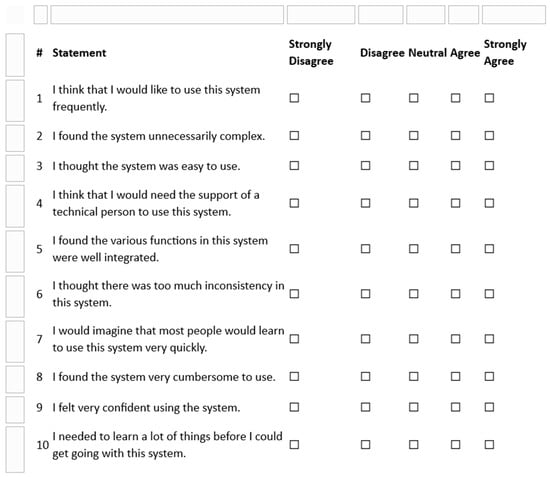

Expanding the modalities and scope of the collected data would also allow the data analysis window size to be revisited, which is an interesting avenue for identifying its optimal setting. Furthermore, exploring different motion parameter configurations could offer new perspectives on navigational capabilities and stability. A comprehensive revision aimed at scalability and future integration into educational systems is also essential. In the proposed future assessment session, students will indicate how much they agree with the statements of a System Usability Scale (SUS) questionnaire about their experience using the PlatypOUs robot and data analysis platform (including data processing and visualization tools) to analyze their own robot-collected data (Figure 9). Modern robot system development frameworks, such as the IEEE’s Ethically Aligned Design principle (published as standard: IEEE 7007-2022) [37,38], could be applied to ensure ethical development and alignment with industry best practices. Overall, these approaches could enable robots to operate more efficiently and accurately, offering new opportunities and advancements in the field of robotics.

Figure 9.

System Usability Scale (SUS) Questionnaire for the PlatypOUs Data Analysis Platform.
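Figure 9 frames the assessment as a System Usability Scale questionnaire. Assuming it keeps the standard ten SUS items, with odd-numbered items worded positively and even-numbered items negatively, each set of 1-5 responses can be converted to a 0-100 score with Brooke’s standard scoring rule, sketched below in plain Python. The helper name and the response set in the example are hypothetical.

```python
def sus_score(responses):
    """Standard SUS score (0-100) from ten Likert responses (1-5), item 1 first."""
    if len(responses) != 10 or any(r not in (1, 2, 3, 4, 5) for r in responses):
        raise ValueError("SUS expects exactly ten responses on a 1-5 scale")
    total = 0
    for item, r in enumerate(responses, start=1):
        # Odd (positively worded) items contribute r - 1; even (negatively worded) items 5 - r
        total += (r - 1) if item % 2 == 1 else (5 - r)
    return total * 2.5


# Hypothetical answers from one student
print(sus_score([4, 2, 4, 1, 5, 2, 4, 2, 5, 1]))  # 85.0
```

Each item contributes 0 to 4 points, so the summed raw score (0-40) is multiplied by 2.5 to span 0-100; scores are then typically averaged across respondents.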

7. Conclusions

In summary, the data analysis demonstrates that the robot maintained stability and consistent direction along the x-axis during the turning maneuver, reflecting effective control. However, variability in the Gyro_y and Acc_y data suggests some inconsistency in the robot’s motion along the y-axis, which could affect stability during left turns. Despite this, the variations in Gyro_y and Acc_y were expected, as they are directly related to the turning motion. Changes in the Gyro_z and Acc_z data indicate adjustments in the robot’s tilt during the maneuver.

The standard deviations of omega and v provide valuable insights into turn stability and the smoothness of linear motion, which are crucial for refining motion control and developing navigation algorithms. The moving average filter serves a dual purpose: it highlights the turning event while filtering out short-term noise, facilitating the accurate identification of turning patterns. While acceleration data along the acc_x and acc_y axes exhibit some variation, this is less pronounced than in the gyroscope data, and the acc_z signal is dominated by gravity.

Overall, this analysis effectively describes the robot’s performance during a left turn, underscoring the importance of stability and predictability in turns, as well as smooth linear motion. The moving average filter helps differentiate real motion from noise, contributing to a deeper understanding that supports the optimization of motion control and navigation algorithms. Ultimately, these improvements enhance the robot’s performance and reliability.

Author Contributions

Conceptualization, D.P., T.H. and E.N.; Methodology, B.D. and E.N.; Software, D.P. and B.D.; Validation, D.P. and B.D.; Formal analysis, B.D.; Investigation, D.P. and T.H.; Writing—original draft, D.P.; Writing—review & editing, T.H. and E.N.; Supervision, T.H. and E.N.; Project administration, E.N.; Funding acquisition, E.N. All authors have read and agreed to the published version of the manuscript.

Funding

T. Haidegger is a Consolidator Researcher, supported by the Distinguished Researcher program of Obuda University.

Data Availability Statement

Data are contained within the article.

Conflicts of Interest

The authors declare no conflict of interest.

Appendix A

The Python code used to compute sliding-window standard deviations of the wheel odometry data is shown below:

```python
import pandas as pd

# Wheel odometry log of the left-circle run
df = pd.read_csv('c:/users/ploka/Desktop/circleft0408_1/wheel_odom.csv')

# All columns from 'v' (linear velocity) through 'omega' (angular velocity), inclusive
selected_columns = df.loc[:, 'v':'omega']

window_size = 100  # samples per sliding window

std_devs_df = pd.DataFrame(index=df.index)

for column in selected_columns.columns:
    std_devs = []
    # Sample standard deviation of each full window, stored at the window's first row
    for start in range(len(df) - window_size + 1):
        end = start + window_size
        window = df[column].iloc[start:end]
        std_devs.append(window.std())
    # Pad the last window_size - 1 rows with the final value so that
    # the new column has the same length as df
    std_devs.extend([std_devs[-1]] * (window_size - 1))
    std_devs_df[f'std_dev_{column}'] = std_devs

result_df = pd.concat([df, std_devs_df], axis=1)
result_df.to_csv('c:/users/ploka/Desktop/circleft0408_szoras-wheel.csv')
```

References

- Haidegger, T.; Mai, V.; Mörch, C.; Boesl, D.; Jacobs, A.; Rao, R.B.; Khamis, A.; Lach, L.; Vanderborght, B. Robotics: Enabler and inhibitor of the Sustainable Development Goals. Sustain. Prod. Consum. 2023, 43, 422–434.

- Szilágyi, Z.; Hajdu, C.; Csapó, Á.; Széll, K.; Galambos, P. Sustainability Indicators in Industrial Robotic Systems. Chem. Eng. Trans. 2023, 107, 79–84.

- Zhao, J.; Fariñas, B.G. Artificial intelligence and sustainable decisions. Eur. Bus. Organ. Law Rev. 2023, 24, 1–39.

- Ang, M.H.; Khatib, O.; Siciliano, B. (Eds.) Encyclopedia of Robotics; Springer Nature: Berlin/Heidelberg, Germany, 2018; ISBN 978-3-662-43769-8.

- Mubin, O.; Stevens, C.J.; Shahid, S.; Al Mahmud, A.; Dong, J.-J. A review of the applicability of robots in education. J. Technol. Educ. Learn. 2013, 1, 13.

- Haidegger, T.P.; Galambos, P.; Tar, J.K.; Kovács, L.A.; Kozlovszky, M.; Zrubka, Z.; Eigner, G.; Drexler, D.A.; Szakál, A.; Reicher, V.; et al. Strategies and Outcomes of Building a Successful University Research and Innovation Ecosystem. Acta Polytech. Hung. 2024, 21, 13–35.

- Demetriou, G.A. Mobile robotics in education and research. In Mobile Robots-Current Trends; IntechOpen: London, UK, 2011; pp. 27–48.

- Takacs, A.; Eigner, G.; Kovacs, L.; Rudas, I.J.; Haidegger, T. Teacher’s kit: Development, usability, and communities of modular robotic kits for classroom education. IEEE Robot. Autom. Mag. 2016, 23, 30–39.

- Filippini, C.; Perpetuini, D.; Cardone, D.; Merla, A. Improving human–robot interaction by enhancing nao robot awareness of human facial expression. Sensors 2021, 21, 6438.

- Alatise, M.B.; Hancke, G.P. A review on challenges of autonomous mobile robot and sensor fusion methods. IEEE Access 2020, 8, 398.

- Quigley, M.; Conley, K.; Gerkey, B.; Faust, J.; Foote, T.; Leibs, J.; Wheeler, R.; Ng, A.Y. ROS: An open-source Robot Operating System. ICRA Workshop Open Source Softw. 2009, 3, 5.

- Chikurtev, D. Mobile robot simulation and navigation in ROS and Gazebo. In Proceedings of the 2020 International Conference Automatics and Informatics (ICAI), Varna, Bulgaria, 1–3 October 2020; pp. 1–6.

- Campusano, M.; Fabry, J. From robots to humans: Visualizations for robot sensor data. In Proceedings of the 2015 IEEE 3rd Working Conference on Software Visualization (VISSOFT), Bremen, Germany, 27–28 September 2015; pp. 135–139.

- Krushnan, J.; Thangaraj, H.; Thiruvalluvan, K.; Balasubramanian, S.; Uppalapati, V.P.; Schrödel, F. MicroROS Based Controller and RViz Visualization for Robot Manipulation as an Educational Module. In Proceedings of the 2024 32nd Mediterranean Conference on Control and Automation (MED), Chania-Crete, Greece, 11–14 June 2024; pp. 406–411.

- Kolhatkar, C.; Wagle, K. Review of SLAM algorithms for indoor mobile robot with LIDAR and RGB-D camera technology. In Proceedings of the Innovations in Electrical and Electronic Engineering; Springer: Singapore, 2020; pp. 397–409.

- Collett, T.; MacDonald, B.A. Developer oriented visualisation of a robot program. In Proceedings of the 1st ACM SIGCHI/SIGART Conference on Human-Robot Interaction, Salt Lake City, UT, USA, 2 March 2006; pp. 49–56.

- Tanimoto, S.L. Viva: A visual language for image processing. J. Vis. Lang. Comput. 1990, 1, 127–139.

- Hancock, C.M. Real-Time Programming and the Big Ideas of Computational Literacy. Ph.D. Thesis, Massachusetts Institute of Technology, Cambridge, MA, USA, 2003.

- Rácz, M.; Noboa, E.; Détár, B.; Nemes, Á.; Galambos, P.; Szűcs, L.; Márton, G.; Eigner, G.; Haidegger, T. PlatypOUs—A Mobile Robot Platform and Demonstration Tool Supporting STEM Education. Sensors 2022, 22, 2284.

- Noboa, E.; Rácz, M.; Szűcs, L.; Galambos, P.; Márton, G.; Eigner, G. Development of an EMG based SVM supported control solution for the PlatypOUs education mobile robot using MindRove headset. IFAC-Pap. 2021, 54, 304–309.

- Takács, K.; Haidegger, T. Eye Gaze Tracking in Robot-Assisted Minimally Invasive Surgery: A Systematic Review of Recent Advances and Applications. Acta Polytech. Hung. 2024, 21, 393–411.

- Pedro, F.; Subosa, M.; Rivas, A.; Valverde, P. Artificial Intelligence in Education: Challenges and Opportunities for Sustainable Development; The United Nations Educational, Scientific and Cultural Organization: Paris, France, 2019.

- Bennett, S.; Maton, K.; Kervin, L. The ‘digital natives’ debate: A critical review of the evidence. Br. J. Educ. Technol. 2008, 39, 775–786.

- Haruna, A.I.; Sankar, R.; Samaila, A. Design and development of an instructional mobile robot for effective learning of material handling in mechanical workshops in universities. Mater. Today Proc. 2023; in press.

- Fichtinger, G.; Troccaz, J.; Haidegger, T. Image-guided interventional robotics: Lost in translation? Proc. IEEE 2022, 110, 932–950.

- D’Ettorre, C.; Mariani, A.; Stilli, A.; y Baena, F.R.; Valdastri, P.; Deguet, A.; Kazanzides, P.; Taylor, R.H.; Fischer, G.S.; DiMaio, S.P. Accelerating surgical robotics research: A review of 10 years with the da vinci research kit. IEEE Robot. Autom. Mag. 2021, 28, 56–78.

- Chiang, C.H.; Liu, J.-S.; Chou, Y.-S. Comparing Path Length by Boundary Following Fast Matching Method and Bug Algorithms for Path Planning in Opportunities and Challenges for Next-Generation Applied Intelligence; Springer: Berlin/Heidelberg, Germany, 2009; pp. 303–309.

- Calders, T.; Custers, B. What is data mining and how does it work? In Discrimination and Privacy in the Information Society: Data Mining and Profiling in Large Databases; Springer: Berlin/Heidelberg, Germany, 2013; pp. 27–42.

- Kotu, V.; Deshpande, B. Data Science: Concepts and Practice, 2nd ed.; Morgan Kaufmann: Cambridge, MA, USA, 2018.

- Lardinois, F. Microsoft Launches Visual Studio Code, a Free Cross-Platform Code. 2015. Available online: https://techcrunch.com/2015/04/29/microsoft-shocks-the-world-with-visual-studio-code-a-free-code-editor-for-os-x-linux-and-windows/ (accessed on 14 January 2025).

- Apache Kafka Vs. Flume | Top 5 Awesome Comparison to Know. 2018. Available online: https://www.educba.com/apache-kafka-vs-flume/ (accessed on 14 January 2025).

- Apache Flink. Available online: https://sourceforge.net/software/product/Flink/ (accessed on 2 July 2025).

- Károly, A.I.; Tirczka, S.; Gao, H.; Rudas, I.J.; Galambos, P. Increasing the Robustness of Deep Learning Models for Object Segmentation: A Framework for Blending Automatically Annotated Real and Synthetic Data. IEEE Trans. Cybern. 2024, 54, 25–38.

- Kuti, J.; Galambos, P.; Györök, G. Adaptive odometry and IMU sensor fusion for KUKA youBot mobile robot using analytical time update. In Proceedings of the 2019 IEEE 23rd International Conference on Intelligent Engineering Systems (INES), Godollo, Hungary, 25–27 April 2019; pp. 257–262.

- Jaksa, L.; Azamatov, B.; Nazenova, G.; Alontseva, D.; Haidegger, T. State of the art in Medical Additive Manufacturing. Acta Polytech. Hung. 2024, 21, 553–579.

- Houghtaling, M.A.; Fiorini, S.R.; Fabiano, N.; Gonçalves, P.J.S.; Ulgen, O.; Haidegger, T.; Carbonera, J.L.; Olszewska, J.I.; Page, B.; Murahwi, Z.; et al. Standardizing an Ontology for Ethically Aligned Robotic and Autonomous Systems. IEEE Trans. Syst. Man Cybern. Syst. 2024, 54, 1791–1804.

- Demir, F.; Haidegger, T.; Falk, S.; Mohamadi, P.H.A.; Garcia, P.L.; Verhelst, M.; Sun, J.; Terryn, S.; Brancart, J.; Canamero, L.; et al. Towards an Eco-Label for Sustainable Robotics. Sustain. Prod. Consum. 2025, 45. in press. Available online: https://drive.google.com/file/d/1zLRrdCDbcyD5HvwqrokLeXaTSdd8aram/view?usp=sharing (accessed on 2 July 2025).

- IEEE 7007–2022; Ontological Standard for Ethically Driven Robotics and Automation Systems. IEEE: Piscataway, NJ, USA, 2022.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).