The Application of Reinforcement Learning to Pumps—A Systematic Literature Review

Abstract

1. Introduction

- We conducted a comprehensive review of 100 peer-reviewed articles on RL applications in pump systems, based on bibliometric and content analyses. The systematic literature review identified the methods currently used to apply reinforcement learning to pumps, the main assumptions, the challenges encountered, and the prospects for applying reinforcement learning to pump control.

- We classified RL algorithms, such as Q-learning, DDPG, PPO, and SAC, according to their applicability to operational objectives and different pump types.

- We identified critical challenges in the RL-based control of pump systems, including real-time deployment, data limitations, and algorithm convergence. The work highlights how reinforcement learning, a subset of machine learning, has revolutionized the decision-making and control of industrial pumping systems.

- We explored and suggested future directions, such as deploying multi-agent RL for cases where the energy price depends on demand, because multiple agents can learn policies more effectively and help mitigate the curse of dimensionality.
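As an illustration of the simplest algorithm named above, the following minimal sketch applies tabular Q-learning to a toy on/off pump that fills a tank under a two-level tariff. The tank model, tariff, dry-tank penalty, and hyperparameters are all hypothetical and are not taken from any reviewed study; the sketch only shows the shape of the update rule.

```python
import random

# All constants below are hypothetical and chosen only for illustration.
N_LEVELS = 6                      # discretized tank levels 0..5
PERIOD = 4                        # steps in one tariff cycle
PRICES = [1.0, 1.0, 4.0, 4.0]     # energy price per step (cheap, cheap, expensive, expensive)
ACTIONS = (0, 1)                  # 0 = pump off, 1 = pump on


def step(level, t, action):
    """Toy tank dynamics: demand drains 1 level, a running pump adds 2."""
    new_level = level - 1 + 2 * action
    reward = -PRICES[t] * action              # energy cost of running the pump
    if new_level < 0:                         # tank ran dry: large penalty
        reward -= 20.0
        new_level = 0
    new_level = min(new_level, N_LEVELS - 1)  # overflow is simply clipped
    return new_level, (t + 1) % PERIOD, reward


def train(episodes=3000, horizon=24, alpha=0.1, gamma=0.95, eps=0.1, seed=0):
    """Tabular Q-learning with an epsilon-greedy behavior policy."""
    rng = random.Random(seed)
    q = {(l, t, a): 0.0
         for l in range(N_LEVELS) for t in range(PERIOD) for a in ACTIONS}
    for _ in range(episodes):
        level, t = rng.randrange(N_LEVELS), rng.randrange(PERIOD)
        for _ in range(horizon):
            if rng.random() < eps:
                action = rng.choice(ACTIONS)                            # explore
            else:
                action = max(ACTIONS, key=lambda a: q[(level, t, a)])   # exploit
            next_level, next_t, reward = step(level, t, action)
            best_next = max(q[(next_level, next_t, a)] for a in ACTIONS)
            # Q-learning update: move the estimate toward the bootstrapped target.
            q[(level, t, action)] += alpha * (
                reward + gamma * best_next - q[(level, t, action)]
            )
            level, t = next_level, next_t
    return q


def greedy_action(q, level, t):
    """Action with the highest learned value in state (level, t)."""
    return max(ACTIONS, key=lambda a: q[(level, t, a)])


if __name__ == "__main__":
    q_table = train()
    for t in range(PERIOD):
        print(t, [greedy_action(q_table, level, t) for level in range(N_LEVELS)])
```

The state here is the pair (tank level, position in the tariff cycle). Continuous-action algorithms such as DDPG, PPO, or SAC would replace the lookup table with function approximators, which this sketch does not attempt.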

2. Methodology

- Q1: What methods of RL are used with pumps?

- Q2: What are the deficiencies of the methods listed in Q1?

- Q3: What are the challenges faced in using RL as related to pumps?

- Q4: What are the challenges and possible solutions involved in optimizing the energy consumption of pumps using RL techniques?

- Q5: What are the future trends in the application of RL to pumps?

- Bibliometric analysis: This is a quantitative method that describes published articles and helps academics evaluate studies on a given topic [23]. Our work follows the structure used by Cancino et al. [24], who applied graphical analysis methods such as bibliographic coupling, co-citation, co-authorship, and keyword co-occurrence in VOSviewer 1.6.20 to map the bibliographic material. The maps generated in our analysis provide insight into the field of RL applied to pumps. The functions and advantages of bibliometric analysis are described in detail by Börner et al. [25]. The analysis reflects the status quo and context of research on RL applied to pumps and identifies significant institutions, journals, and references in the area, which helps scholars find relevant journals, authors, and publications. The criteria for the bibliometric analysis are highlighted in Table 1, whereas Table 2 lists the documents selected for the analysis.

- Content analysis: In this analysis, selected papers were critically discussed to show the development trend in the research area and to help researchers understand its evolution and recognize new directions. The structure adopted for the content analysis is based on the work of da Silva et al. [120]. From the content analysis, we drew inferences and answered the research questions highlighted in the methodology.

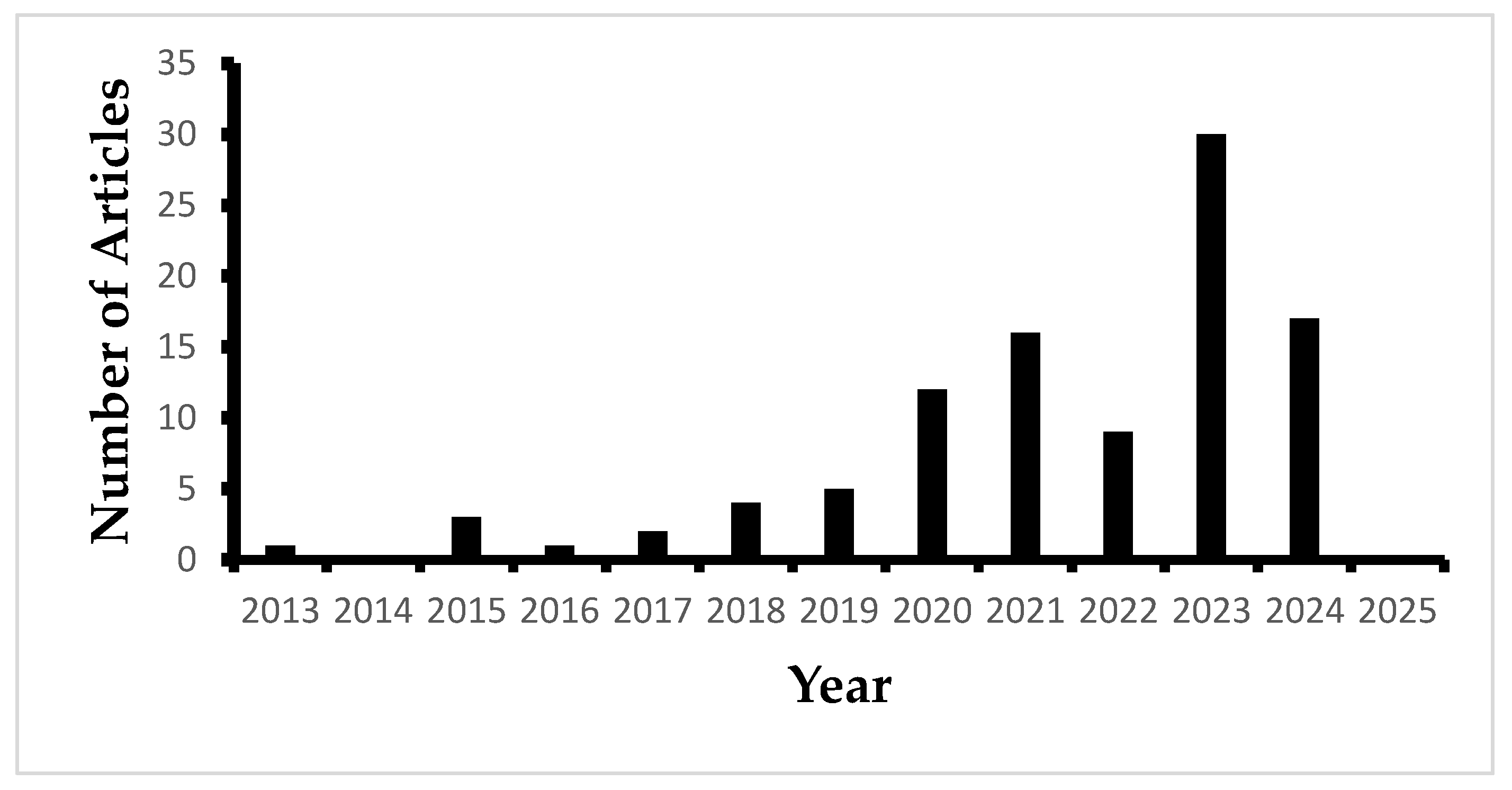

3. Bibliometric Analysis

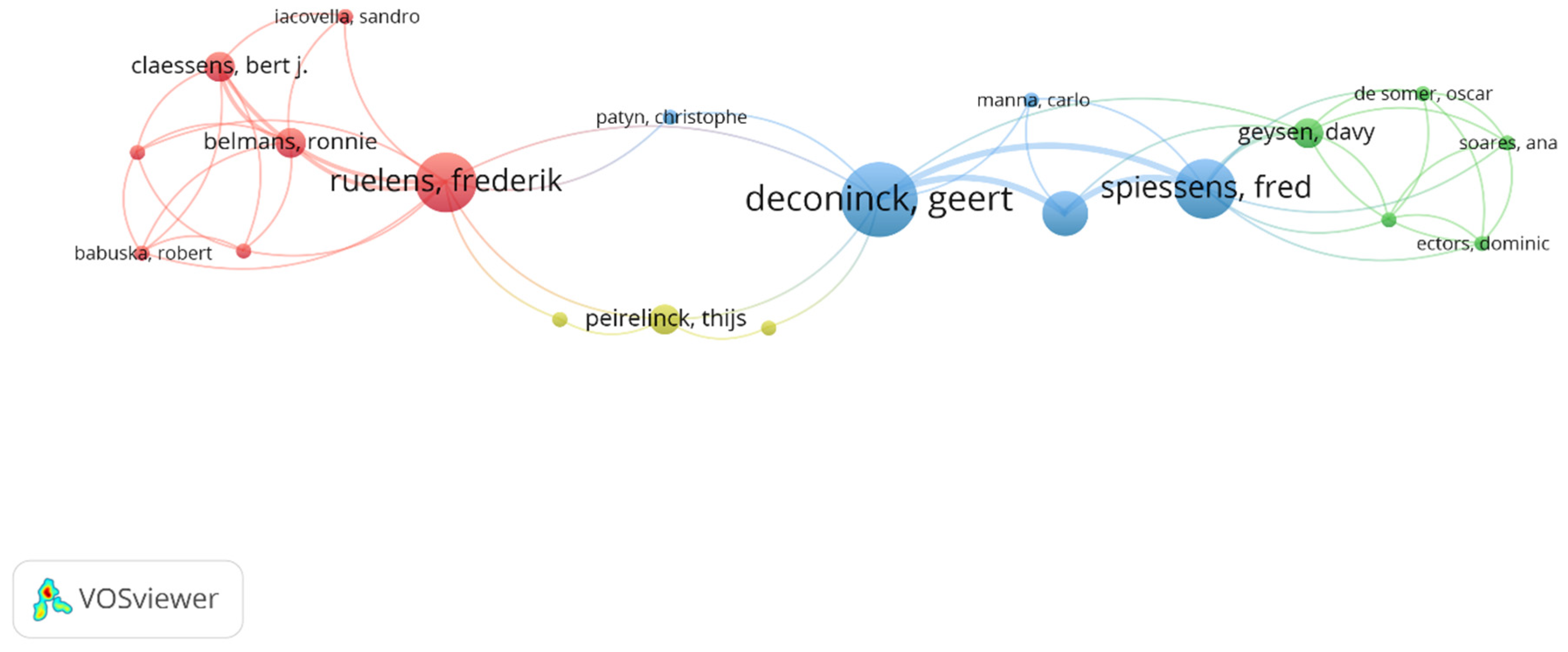

3.1. Co-Authorship Analysis

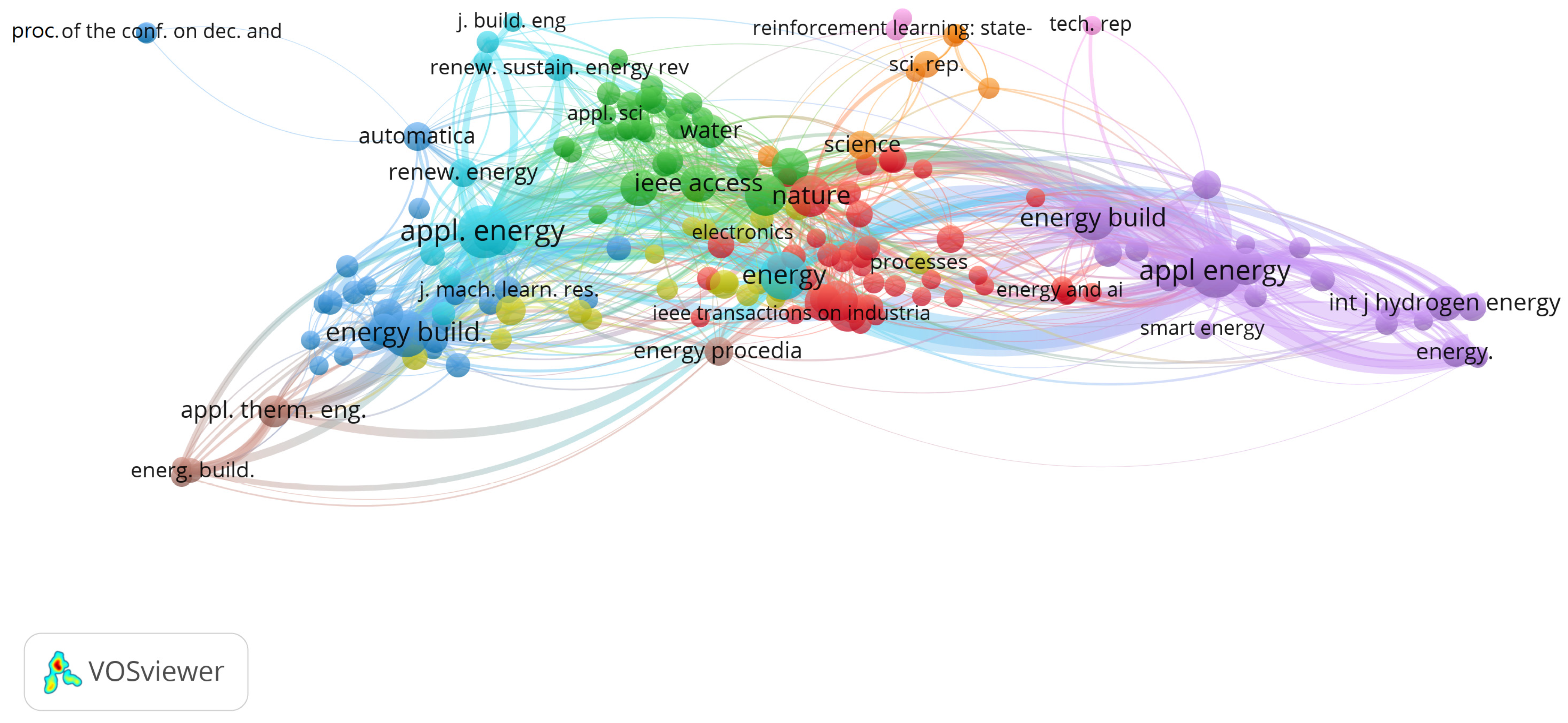

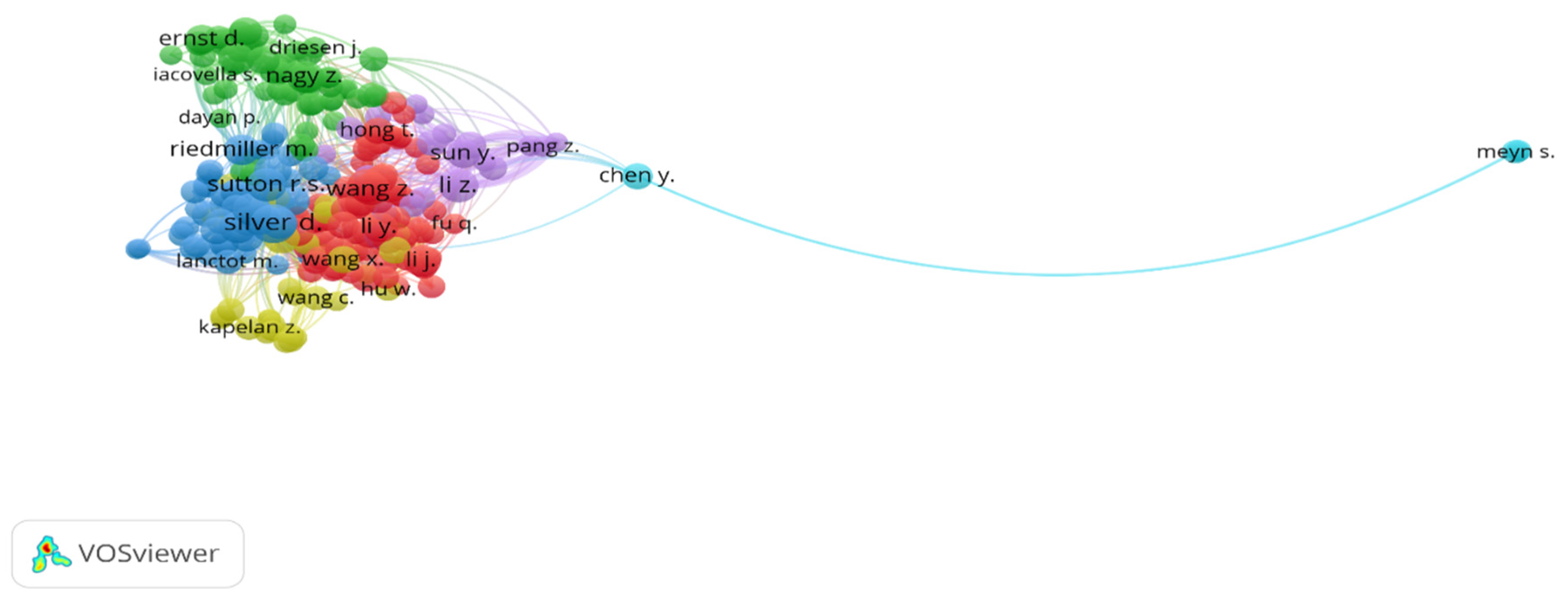

3.2. Co-Citation Analysis

- Type of analysis: co-citation.

- Counting method: full counting [124].

| | Cited Sources (unit of analysis) | Cited Authors (unit of analysis) |
|---|---|---|
| Min. number of citations | 3 | 7 |
| Threshold | 176 | 245 |
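The settings above can be illustrated with a minimal sketch of co-citation counting under full counting, where every co-cited pair found in one citing document adds exactly 1 to the link strength. The function name, the toy reference lists, and the simplification that an item's citation count is the number of citing documents are assumptions made for illustration; this is not VOSviewer's implementation.

```python
from collections import Counter
from itertools import combinations


def cocitation_links(reference_lists, min_citations):
    """Count co-citation links between cited items using full counting.

    reference_lists: one list of cited items (sources or authors) per citing document.
    min_citations:   items cited by fewer documents than this are dropped.
    """
    # Simplification: citation count = number of citing documents that list the item.
    citations = Counter(item for refs in reference_lists for item in set(refs))
    kept = {item for item, n in citations.items() if n >= min_citations}

    links = Counter()
    for refs in reference_lists:
        present = sorted(set(refs) & kept)
        # Full counting: each co-cited pair in a document contributes exactly 1.
        for a, b in combinations(present, 2):
            links[(a, b)] += 1
    return citations, links


# Hypothetical reference lists of four citing documents (cited sources only).
example_docs = [
    ["Appl. Energy", "Energy Build.", "Water"],
    ["Appl. Energy", "Energy Build."],
    ["Appl. Energy", "Water"],
    ["Energies"],
]

if __name__ == "__main__":
    counts, strengths = cocitation_links(example_docs, min_citations=2)
    for pair, strength in sorted(strengths.items()):
        print(pair, strength)
```

With a minimum of two citations, the source cited only once is excluded before any links are counted, which mirrors how a citation threshold reduces the set of items shown on the map.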

3.2.1. Co-Citation Analysis of Cited Sources

3.2.2. Co-Citation Analysis of Cited Authors

3.3. Bibliographic Coupling

3.4. Co-Occurrence Analysis of Keywords

4. Content Analysis

4.1. Early Applications (2013–2015)

4.2. Expanding Applications and Addressing Challenges (2015–2018)

4.3. Deep Reinforcement Learning and Addressing Data Issues (2018–2020)

4.4. Multi-Agent Reinforcement Learning and Continuous Action Spaces (2020–2021)

4.5. Continuous Control and Real-World Implementation (2022–2025)

4.6. Case Studies of RL Applications in Pump Systems

- Case Study 1:

- Case Study 2:

- Case Study 3:

5. Main Findings

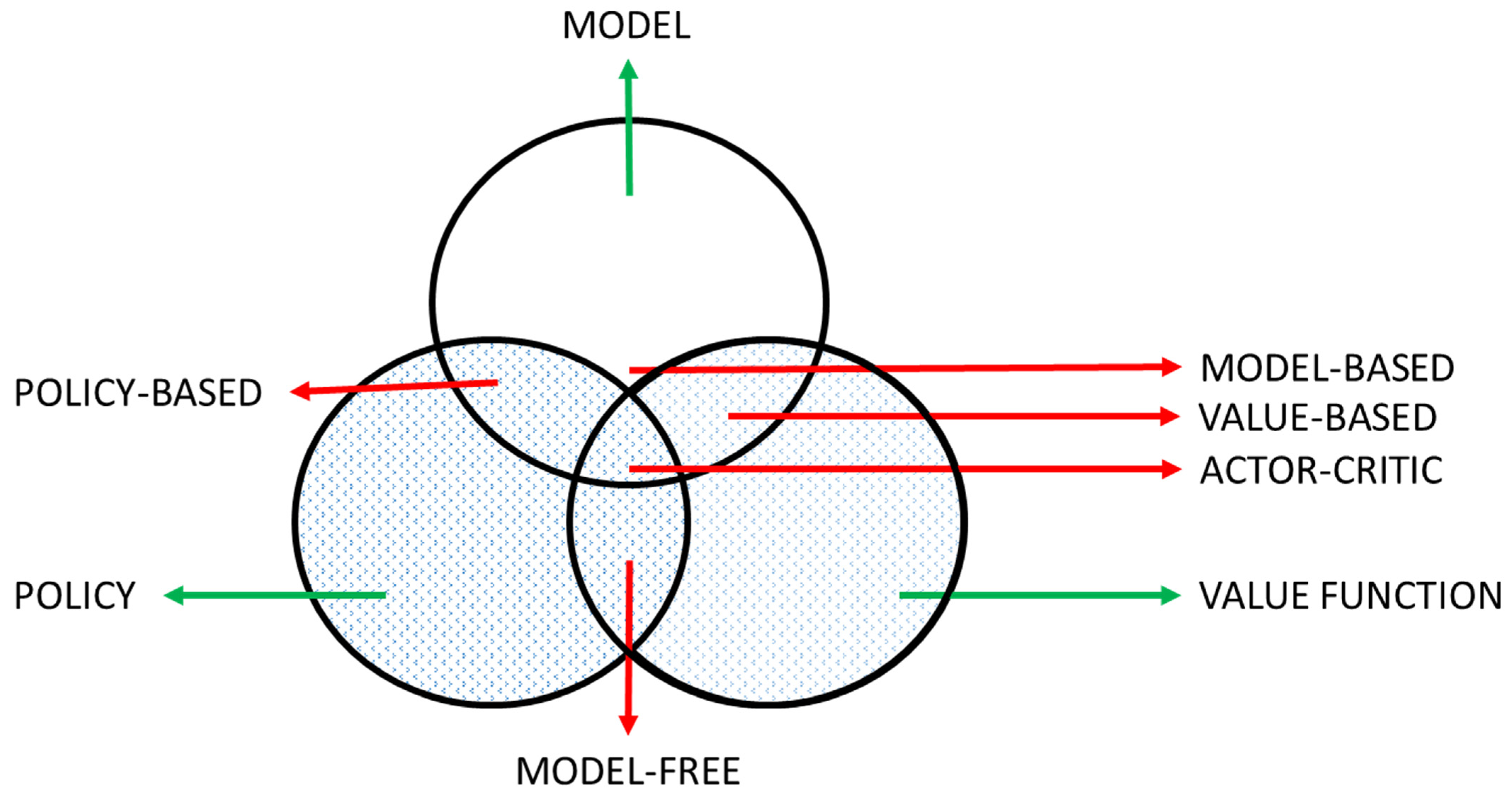

- Research question 1: What methods of reinforcement learning are used with pumps?

- Research question 2: What are the deficiencies of these methods?

- Research question 3: What are the challenges faced in using reinforcement learning as related to pumps?

- Research question 4: What are the challenges and possible solutions involved in optimizing the energy consumption of pumps using RL techniques?

- Research question 5: What are the future trends in the application of reinforcement learning to pumps?

6. Conclusions

- Despite the promise of reinforcement learning (RL) techniques, their application has primarily been limited to theoretical or simulated environments. A significant hurdle to industrial adoption is the lack of readily deployable software solutions. Deploying RL models through user-friendly software could significantly incentivize real-world implementation. Imagine a one-click solution that tackles complex control problems without requiring specialized expertise in RL algorithms. Such an interface would significantly reduce the barrier to entry for industries seeking to leverage the power of RL for pump optimization.

- Most RL control systems are designed with a single input variable for the state; extending the state to include more features as covariates could produce a more robust RL model that makes better-informed control decisions.

- To improve an RL agent's ability to reduce energy consumption, we suggest further research into deploying multi-agent RL for the case in which the energy price depends on demand, because multiple agents can learn policies more effectively and help mitigate the curse of dimensionality.

- RL algorithms require large datasets; further work on behavioral cloning (BC), transfer learning (TL), and parallel learning (PL) agents, which use less data and still achieve high performance with less training time, would therefore be valuable.
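To make the data-efficiency argument concrete, the sketch below shows behavioral cloning in its simplest tabular form: a policy is fitted to demonstrations from an existing controller by majority vote per state, with no reward signal or environment interaction. The rule-based expert, the state definition (tank level bin and a high-price flag), and all function names are hypothetical; a practical agent would typically use a neural network classifier or regressor instead of a lookup table.

```python
import random
from collections import Counter, defaultdict


def expert_policy(level, price_high):
    """Hypothetical rule-based operator: refill late when energy is expensive."""
    threshold = 3 if price_high else 7
    return 1 if level < threshold else 0          # 1 = pump on, 0 = pump off


def collect_demonstrations(n, seed=0):
    """Sample n states and record the expert's action in each of them."""
    rng = random.Random(seed)
    demos = []
    for _ in range(n):
        state = (rng.randrange(10), rng.random() < 0.5)   # (level bin, price flag)
        demos.append((state, expert_policy(*state)))
    return demos


def clone_policy(demos, default_action=0):
    """Behavioral cloning by majority vote over the demonstrated actions."""
    votes = defaultdict(Counter)
    for state, action in demos:
        votes[state][action] += 1
    table = {state: counts.most_common(1)[0][0] for state, counts in votes.items()}
    # States never seen in the demonstrations fall back to default_action.
    return lambda level, price_high: table.get((level, price_high), default_action)


if __name__ == "__main__":
    demonstrations = collect_demonstrations(500)
    cloned = clone_policy(demonstrations)
    print([cloned(level, True) for level in range(10)])
    print([cloned(level, False) for level in range(10)])
```

A cloned policy of this kind could serve as a starting point that an RL algorithm then refines, which is one way to reduce the amount of interaction data needed.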

Author Contributions

Funding

Conflicts of Interest

Abbreviations

| Abbreviation | Full Term | Description |
|---|---|---|
| RL | reinforcement learning | A machine learning technique for decision-making via interaction with the environment. |
| DRL | deep reinforcement learning | The extension of RL using deep neural networks. |
| HVAC | heating, ventilation, and air conditioning | Systems related to thermal comfort and air quality in buildings. |
| DDPG | deep deterministic policy gradient | An RL algorithm for continuous action spaces. |
| DQN | deep Q-network | Model-free RL using deep learning for Q-value approximation. |
| PPO | proximal policy optimization | A policy optimization RL algorithm with stability improvements. |
| FQI | fitted Q-iteration | A batch-mode RL technique for policy learning using previously collected data. |
| SAC | soft actor–critic | An off-policy RL algorithm combining value and policy-based learning. |
| TL | transfer learning | A technique for leveraging a pre-trained model or policies. |
| PL | parallel learning | A method for speeding up training by concurrent agent learning. |
| BC | behavioral cloning | Learning policies by mimicking expert demonstrations. |
| MIMO | multi-input, multi-output | Control systems with multiple inputs and outputs. |
| MARL | multi-agent reinforcement learning | Multiple agents learn to make decisions through interaction with a shared environment and, often, with each other. |

References

- Fu, Q.; Han, Z.; Chen, J.; Lu, Y.; Wu, H.; Wang, Y. Applications of reinforcement learning for building energy efficiency control: A review. J. Build. Eng. 2022, 50, 104165.

- Wang, Z.; Hong, T. Reinforcement learning for building controls: The opportunities and challenges. Appl. Energy 2020, 269, 115036.

- Kobbacy, K.A.H.; Vadera, S. A survey of AI in operations management from 2005 to 2009. J. Manuf. Technol. Manag. 2011, 22, 706–733.

- Moharm, K. State of the art in big data applications in microgrid: A review. Adv. Eng. Inform. 2019, 42, 100945.

- Tang, S.; Zhu, Y.; Yuan, S. An improved convolutional neural network with an adaptable learning rate towards multi-signal fault diagnosis of hydraulic piston pump. Adv. Eng. Inform. 2021, 50, 101406.

- Ahmed, A.; Korres, N.E.; Ploennigs, J.; Elhadi, H.; Menzel, K. Mining building performance data for energy-efficient operation. Adv. Eng. Inform. 2011, 25, 341–354.

- Singaravel, S.; Suykens, J.; Geyer, P. Deep convolutional learning for general early design stage prediction models. Adv. Eng. Inform. 2019, 42, 100982.

- Tse, Y.L.; Cholette, M.E.; Tse, P.W. A multi-sensor approach to remaining useful life estimation for a slurry pump. Measurement 2019, 139, 140–151.

- Guo, R.; Li, Y.; Zhao, L.; Zhao, J.; Gao, D. Remaining Useful Life Prediction Based on the Bayesian Regularized Radial Basis Function Neural Network for an External Gear Pump. IEEE Access 2020, 8, 107498–107509.

- Kimera, D.; Nangolo, F.N. Predictive maintenance for ballast pumps on ship repair yards via machine learning. Transp. Eng. 2020, 2, 100020.

- Azadeh, A.; Saberi, M.; Kazem, A.; Ebrahimipour, V.; Nourmohammadzadeh, A.; Saberi, Z. A flexible algorithm for fault diagnosis in a centrifugal pump with corrupted data and noise based on ANN and support vector machine with hyper-parameters optimization. Appl. Soft Comput. 2013, 13, 1478–1485.

- Li, X.; Jiang, H.; Xie, M.; Wang, T.; Wang, R.; Wu, Z. A reinforcement ensemble deep transfer learning network for rolling bearing fault diagnosis with Multi-source domains. Adv. Eng. Inform. 2022, 51, 101480.

- Wang, Z.; Xuan, J. Intelligent fault recognition framework by using deep reinforcement learning with one dimension convolution and improved actor-critic algorithm. Adv. Eng. Inform. 2021, 49, 101315.

- Ding, Y.; Ma, L.; Ma, J.; Suo, M.; Tao, L.; Cheng, Y.; Lu, C. Intelligent fault diagnosis for rotating machinery using deep Q-network based health state classification: A deep reinforcement learning approach. Adv. Eng. Inform. 2019, 42, 100977.

- Sutton, R.S.; Barto, A.G. Reinforcement Learning: An Introduction, 2nd ed.; MIT Press: Cambridge, MA, USA, 2018.

- François-Lavet, V.; Henderson, P.; Islam, R.; Bellemare, M.G.; Pineau, J. An Introduction to Deep Reinforcement Learning. Found. Trends Mach. Learn. 2018, 11, 219–354.

- Han, G.; Joo, H.-J.; Lim, H.-W.; An, Y.-S.; Lee, W.-J.; Lee, K.-H. Data-driven heat pump operation strategy using rainbow deep reinforcement learning for significant reduction of electricity cost. Energy 2023, 270, 126913.

- Bachseitz, M.; Sheryar, M.; Schmitt, D.; Summ, T.; Trinkl, C.; Zörner, W. PV-Optimized Heat Pump Control in Multi-Family Buildings Using a Reinforcement Learning Approach. Energies 2024, 17, 1908.

- Xu, Y.; Li, Y.; Gao, W. Comparative Analysis of Reinforcement Learning Approaches for Multi-Objective Optimization in Residential Hybrid Energy Systems. Buildings 2024, 14, 2645.

- Fu, Q.; Chen, X.; Ma, S.; Fang, N.; Xing, B.; Chen, J. Optimal control method of HVAC based on multi-agent deep reinforcement learning. Energy Build. 2022, 270, 112284.

- Wu, Q.; Zhu, D.; Liu, Y.; Du, A.; Chen, D.; Ye, Z. Comprehensive Control System for Gathering Pipe Network Operation Based on Reinforcement Learning. In Proceedings of the 2018 VII International Conference on Network, Communication and Computing, Taipei, Taiwan, 14–16 December 2018; ACM: New York, NY, USA, 2018; pp. 34–39.

- Kitchenham, B. Procedures for Performing Systematic Reviews, Version 1.0. Empir. Softw. Eng. 2004, 33, 1–26.

- Small, H. Co-citation in the scientific literature: A new measure of the relationship between two documents. J. Am. Soc. Inf. Sci. 1973, 24, 265–269.

- Cancino, C.; Merigó, J.M.; Coronado, F.; Dessouky, Y.; Dessouky, M. Forty years of Computers & Industrial Engineering: A bibliometric analysis. Comput. Ind. Eng. 2017, 113, 614–629.

- Börner, K.; Chen, C.; Boyack, K.W. Visualizing knowledge domains. Annu. Rev. Inf. Sci. Technol. 2003, 37, 179–255.

- Wang, M.; Lin, B.; Yang, Z. Energy-Efficient HVAC Control based on Reinforcement Learning and Transfer Learning in a Residential Building. In Proceedings of the 2024 IEEE International Conference on Industrial Technology (ICIT), Bristol, UK, 25–27 March 2024; IEEE: Piscataway, NJ, USA, 2024; pp. 1–7.

- Ludolfinger, U.; Perić, V.S.; Hamacher, T.; Hauke, S.; Martens, M. Transformer Model Based Soft Actor-Critic Learning for HEMS. In Proceedings of the 2023 International Conference on Power System Technology (PowerCon), Jinan, China, 21–22 September 2023; IEEE: Piscataway, NJ, USA, 2023; pp. 1–6.

- Gan, T.; Jiang, Y.; Zhao, H.; He, J.; Duan, H. Research on low-energy consumption automatic real-time regulation of cascade gates and pumps in open-canal based on reinforcement learning. J. Hydroinformatics 2024, 26, 1673–1691.

- Croll, H.C.; Ikuma, K.; Ong, S.K.; Sarkar, S. Systematic Performance Evaluation of Reinforcement Learning Algorithms Applied to Wastewater Treatment Control Optimization. Environ. Sci. Technol. 2023, 57, 18382–18390.

- Kokhanovskiy, A.Y.; Kuprikov, E.; Serebrennikov, K.; Mkrtchyan, A.; Davletkhanov, A.; Gladush, Y. Manipulating the harmonic mode-locked regimes inside a fiber cavity by a reinforcement learning algorithm. In Quantum and Nonlinear Optics X; He, Q., Li, C.-F., Kim, D.-S., Eds.; SPIE: Beijing, China, 2023; p. 20.

- Kaspar, K.; Nweye, K.; Buscemi, G.; Capozzoli, A.; Nagy, Z.; Pinto, G.; Eicker, U.; Ouf, M.M. Effects of occupant thermostat preferences and override behavior on residential demand response in CityLearn. Energy Build. 2024, 324, 114830.

- Li, J.; Wang, X.; Liu, B. Event-Based Deep Reinforcement Learning for Smoothing Ramp Events in Combined Wind-Storage Energy Systems. IEEE Trans. Ind. Inform. 2024, 20, 7871–7882.

- Masdoua, Y.; Boukhnifer, M.; Adjallah, K.H. Fault Tolerant Control of HVAC System Based on Reinforcement Learning Approach. In Proceedings of the 2023 9th International Conference on Control, Decision and Information Technologies (CoDIT), Rome, Italy, 3–6 July 2023; IEEE: Piscataway, NJ, USA, 2023; pp. 555–560.

- Bex, L.; Peirelinck, T.; Deconinck, G. Seasonal Performance of Fitted Q-iteration for Space Heating and Cooling with Heat Pumps. In Proceedings of the 2023 IEEE PES Innovative Smart Grid Technologies Europe (ISGT EUROPE), Grenoble, France, 23–26 October 2023; IEEE: Piscataway, NJ, USA, 2023; pp. 1–5.

- Meyn, S.; Lu, F.; Mathias, J. Balancing the Power Grid with Cheap Assets. In Proceedings of the 2023 62nd IEEE Conference on Decision and Control (CDC), Singapore, 13–15 December 2023; IEEE: Piscataway, NJ, USA, 2023; pp. 4012–4017.

- Zhang, C. Intelligent Optimization Algorithm Based on Reinforcement Learning in the Process of Wax Removal and Prevention of Screw Pump. In Proceedings of the 2024 International Conference on Telecommunications and Power Electronics (TELEPE), Frankfurt, Germany, 29–31 May 2024; IEEE: Piscataway, NJ, USA, 2024; pp. 15–20.

- Zhang, X.; Wang, X.; Zhang, H.; Ma, Y.; Chen, S.; Wang, C.; Chen, Q.; Xiao, X. Hybrid model-free control based on deep reinforcement learning: An energy-efficient operation strategy for HVAC systems. J. Build. Eng. 2024, 96, 110410.

- Schmitz, S.; Brucke, K.; Kasturi, P.; Ansari, E.; Klement, P. Forecast-based and data-driven reinforcement learning for residential heat pump operation. Appl. Energy 2024, 371, 123688.

- Cai, W.; Sawant, S.; Reinhardt, D.; Rastegarpour, S.; Gros, S. A Learning-Based Model Predictive Control Strategy for Home Energy Management Systems. IEEE Access 2023, 11, 145264–145280.

- Li, Z.; Bai, L.; Tian, W.; Yan, H.; Hu, W.; Xin, K.; Tao, T. Online Control of the Raw Water System of a High-Sediment River Based on Deep Reinforcement Learning. Water 2023, 15, 1131.

- Zhou, J.; Chen, W.; Cao, H.; He, Q.; Liu, Y. Antagonistic Pump with Multiple Pumping Modes for On-Demand Soft Robot Actuation and Control. IEEE/ASME Trans. Mechatron. 2024, 29, 3252–3264.

- Tubeuf, C.; Birkelbach, F.; Maly, A.; Hofmann, R. Increasing the Flexibility of Hydropower with Reinforcement Learning on a Digital Twin Platform. Energies 2023, 16, 1796.

- Joo, J.-G.; Jeong, I.-S.; Kang, S.-H. Deep Reinforcement Learning for Multi-Objective Real-Time Pump Operation in Rainwater Pumping Stations. Water 2024, 16, 3398.

- Ludolfinger, U.; Zinsmeister, D.; Perić, V.S.; Hamacher, T.; Hauke, S.; Martens, M. Recurrent Soft Actor Critic Reinforcement Learning for Demand Response Problems. In Proceedings of the 2023 IEEE Belgrade PowerTech, Belgrade, Serbia, 25–29 June 2023; IEEE: Piscataway, NJ, USA, 2023; pp. 1–6.

- Kar, I.; Mukhopadhyay, S.; Chatterjee, A.S. Application of Deep Reinforcement Learning to an extreme contextual and collective rare event with multivariate unsupervised reward generator for detecting leaks in large-scale water pipelines. In Proceedings of the 2023 Fifth International Conference on Electrical, Computer and Communication Technologies (ICECCT), Erode, India, 22–24 February 2023; IEEE: Piscataway, NJ, USA, 2023; pp. 1–7.

- Zhao, H.; Pan, S.; Ma, L.; Wu, Y.; Guo, X.; Liu, J. Research on joint control of water pump and radiator of PEMFC based on TCO-DDPG. Int. J. Hydrogen Energy 2023, 48, 38569–38583.

- Moreira, T.M.; De Faria, J.G.; Vaz-de-Melo, P.O.S.; Medeiros-Ribeiro, G. Development and validation of an AI-Driven model for the La Rance tidal barrage: A generalisable case study. Appl. Energy 2023, 332, 120506.

- Li, Z.; Tang, C.; Zhou, G.; Guo, X.; Lu, J.; Shu, X. Discontinuous Rod Spanning Motion Control for Snake Robot based on Reinforcement Learning. In Proceedings of the 2023 42nd Chinese Control Conference (CCC), Tianjin, China, 24–26 July 2023; IEEE: Piscataway, NJ, USA, 2023; pp. 4437–4442.

- Wu, G.; Yang, Y.; An, W. Multi-scenario operation optimization of electric-thermal coupling renewable energy system based on deep reinforcement learning. In Proceedings of the Ninth International Conference on Energy Materials and Electrical Engineering (ICEMEE 2023), Guilin, China, 6 February 2024; Zhou, J., Aris, I.B., Eds.; SPIE: Bellingham, WA, USA, 2024; p. 4.

- Tubeuf, C.; Aus Der Schmitten, J.; Hofmann, R.; Heitzinger, C.; Birkelbach, F. Improving Control of Energy Systems With Reinforcement Learning: Application to a Reversible Pump Turbine. In Proceedings of the ASME 2024 18th International Conference on Energy Sustainability, Anaheim, CA, USA, 15–17 July 2024; American Society of Mechanical Engineers: New York, NY, USA, 2024; p. V001T01A001.

- Ma, H.; Wang, X.; Wang, D. Pump Scheduling Optimization in Urban Water Supply Stations: A Physics-Informed Multiagent Deep Reinforcement Learning Approach. Int. J. Energy Res. 2024, 2024, 9557596.

- Klingebiel, J.; Salamon, M.; Bogdanov, P.; Venzik, V.; Vering, C.; Müller, D. Towards maximum efficiency in heat pump operation: Self-optimizing defrost initiation control using deep reinforcement learning. Energy Build. 2023, 297, 113397.

- Li, J.; Zhou, T. Active fault-tolerant coordination energy management for a proton exchange membrane fuel cell using curriculum-based multiagent deep meta-reinforcement learning. Renew. Sustain. Energy Rev. 2023, 185, 113581.

- Song, R.; Zinsmeister, D.; Hamacher, T.; Zhao, H.; Terzija, V.; Peric, V. Adaptive Control of Practical Heat Pump Systems for Power System Flexibility Based on Reinforcement Learning. In Proceedings of the 2023 International Conference on Power System Technology (PowerCon), Jinan, China, 21–22 September 2023; IEEE: Piscataway, NJ, USA, 2023; pp. 1–5.

- Tang, Z.; Chai, B.; Li, J.; Wang, Y.; Liu, S.; Shi, X. Research on Capacity Configuration Optimization of Multi-Energy Complementary System Using Deep Reinforce Learning. In Proceedings of the 2023 13th International Conference on Power and Energy Systems (ICPES), Chengdu, China, 8–10 December 2023; IEEE: Piscataway, NJ, USA, 2023; pp. 213–218.

- Qin, H.; Yu, Z.; Li, T.; Liu, X.; Li, L. Energy-efficient heating control for nearly zero energy residential buildings with deep reinforcement learning. Energy 2023, 264, 126209.

- Athanasiadis, C.L.; Pippi, K.D.; Papadopoulos, T.A.; Korkas, C.; Tsaknakis, C.; Alexopoulou, V.; Nikolaidis, V.; Kosmatopoulos, E. Energy Management for Building-Integrated Microgrids Using Reinforcement Learning. In Proceedings of the 2023 58th International Universities Power Engineering Conference (UPEC), Dublin, Ireland, 30 August–1 September 2023; IEEE: Piscataway, NJ, USA, 2023; pp. 1–6.

- Kokhanovskiy, A.; Kuprikov, E.; Serebrennikov, K.; Mkrtchyan, A.; Davletkhanov, A.; Bunkov, A.; Krasnikov, D.; Shashkov, M.; Nasibulin, A.; Gladush, Y. Multistability manipulation by reinforcement learning algorithm inside mode-locked fiber laser. Nanophotonics 2024, 13, 2891–2901.

- Wang, D.; Zheng, W.; Wang, Z.; Wang, Y.; Pang, X.; Wang, W. Comparison of reinforcement learning and model predictive control for building energy system optimization. Appl. Therm. Eng. 2023, 228, 120430.

- Zhou, S.; Qin, L.; Yang, Y.; Wei, Z.; Wang, J.; Wang, J.; Ruan, J.; Tang, X.; Wang, X.; Liu, K. A Novel Ensemble Fault Diagnosis Model for Main Circulation Pumps of Converter Valves in VSC-HVDC Transmission Systems. Sensors 2023, 23, 5082.

- Hu, S.; Gao, J.; Zhong, D.; Wu, R.; Liu, L. Real-Time Scheduling of Pumps in Water Distribution Systems Based on Exploration-Enhanced Deep Reinforcement Learning. Systems 2023, 11, 56.

- Zhang, Z.; Tian, W.; Liao, Z. Towards coordinated and robust real-time control: A decentralized approach for combined sewer overflow and urban flooding reduction based on multi-agent reinforcement learning. Water Res. 2023, 229, 119498.

- Xiong, Z.; Chen, F.; Guo, H.; Ren, X.; Zhang, J.; Wang, B. Multi-objective combined dispatching optimization of pumped storage and thermal power. J. Phys. Conf. Ser. 2023, 2503, 012004.

- Zaman, M.; Tantawy, A.; Abdelwahed, S. Optimizing Smart City Water Distribution Systems Using Deep Reinforcement Learning. In Proceedings of the 2023 IEEE 20th International Conference on Smart Communities: Improving Quality of Life using AI, Robotics and IoT (HONET), Boca Raton, FL, USA, 4–6 December 2023; IEEE: Piscataway, NJ, USA, 2023; pp. 228–233.

- He, L.; Xin, Y.; Zhang, Y.; Li, P.; Yang, Y. Study on Waste Heat Recovery of Fuel-Cell Thermal Management System Based on Reinforcement Learning. Energy Technol. 2024, 12, 2400438.

- Hu, S.; Gao, J.; Zhong, D. Multi-agent reinforcement learning framework for real-time scheduling of pump and valve in water distribution networks. Water Supply 2023, 23, 2833–2846.

- Zhang, Y.; Chen, X.; Fu, Q.; Chen, J.; Wang, Y.; Lu, Y.; Liu, L. Priori knowledge-based deep reinforcement learning control for fan coil unit system. J. Build. Eng. 2024, 82, 108157.

- Emamjomehzadeh, O.; Kerachian, R.; Emami-Skardi, M.J.; Momeni, M. Combining urban metabolism and reinforcement learning concepts for sustainable water resources management: A nexus approach. J. Environ. Manag. 2023, 329, 117046.

- Chen, M.; Xie, Z.; Sun, Y.; Zheng, S. The predictive management in campus heating system based on deep reinforcement learning and probabilistic heat demands forecasting. Appl. Energy 2023, 350, 121710.

- Huang, Q.; Hu, W.; Zhang, G.; Cao, D.; Liu, Z.; Huang, Q.; Chen, Z. A novel deep reinforcement learning enabled agent for pumped storage hydro-wind-solar systems voltage control. IET Renew. Power Gener. 2021, 15, 3941–3956.

- Wu, T.; Zhao, H.; Gao, B.; Meng, F. Energy-Saving for a Velocity Control System of a Pipe Isolation Tool Based on a Reinforcement Learning Method. Int. J. Precis. Eng. Manuf.-Green Technol. 2022, 9, 225–240.

- Christensen, M.H.; Ernewein, C.; Pinson, P. Demand Response through Price-setting Multi-agent Reinforcement Learning. In Proceedings of the 1st International Workshop on Reinforcement Learning for Energy Management in Buildings & Cities, Virtual Event, 17 November 2020; ACM: New York, NY, USA, 2020; pp. 1–5.

- Lee, Z.E.; Zhang, K.M. Generalized reinforcement learning for building control using Behavioral Cloning. Appl. Energy 2021, 304, 117602.

- Zhu, J.; Elbel, S. Implementation of Reinforcement Learning on Air Source Heat Pump Defrost Control for Full Electric Vehicles. In Proceedings of the WCX World Congress Experience, Detroit, MI, USA, 14–16 April 2018; 2018-01-1193. pp. 1–7.

- Saliba, S.M.; Bowes, B.D.; Adams, S.; Beling, P.A.; Goodall, J.L. Deep Reinforcement Learning with Uncertain Data for Real-Time Stormwater System Control and Flood Mitigation. Water 2020, 12, 3222.

- Soares, A.; Geysen, D.; Spiessens, F.; Ectors, D.; De Somer, O.; Vanthournout, K. Using reinforcement learning for maximizing residential self-consumption—Results from a field test. Energy Build. 2020, 207, 109608.

- Chong, J.; Kelly, D.; Agrawal, S.; Nguyen, N.; Monzon, M. Reinforcement Learning Control Scheme for Electrical Submersible Pumps. In Proceedings of the SPE Gulf Coast Section Electric Submersible Pumps Symposium, Virtual and The Woodlands, TX, USA, 5 October 2021; SPE: Richardson, TX, USA, 2021; p. D021S001R002.

- Vazquez-Canteli, J.R.; Henze, G.; Nagy, Z. MARLISA: Multi-Agent Reinforcement Learning with Iterative Sequential Action Selection for Load Shaping of Grid-Interactive Connected Buildings. In Proceedings of the 7th ACM International Conference on Systems for Energy-Efficient Buildings, Cities, and Transportation, Virtual Event, Japan, 18–20 November 2020; ACM: New York, NY, USA, 2020; pp. 170–179.

- Ghane, S.; Jacobs, S.; Casteels, W.; Brembilla, C.; Mercelis, S.; Latre, S.; Verhaert, I.; Hellinckx, P. Supply temperature control of a heating network with reinforcement learning. In Proceedings of the 2021 IEEE International Smart Cities Conference (ISC2), Manchester, UK, 7–10 September 2021; IEEE: Piscataway, NJ, USA, 2021; pp. 1–7.

- Mullapudi, A.; Lewis, M.J.; Gruden, C.L.; Kerkez, B. Deep reinforcement learning for the real time control of stormwater systems. Adv. Water Resour. 2020, 140, 103600.

- Filipe, J.; Bessa, R.J.; Reis, M.; Alves, R.; Póvoa, P. Data-driven predictive energy optimization in a wastewater pumping station. Appl. Energy 2019, 252, 113423.

- Blad, C.; Bøgh, S.; Kallesøe, C. A Multi-Agent Reinforcement Learning Approach to Price and Comfort Optimization in HVAC-Systems. Energies 2021, 14, 7491.

- He, Y.; Liu, P.; Zhou, L.; Zhang, Y.; Liu, Y. Competitive model of pumped storage power plants participating in electricity spot Market—In case of China. Renew. Energy 2021, 173, 164–176.

- Liu, T.; Xu, C.; Guo, Y.; Chen, H. A novel deep reinforcement learning based methodology for short-term HVAC system energy consumption prediction. Int. J. Refrig. 2019, 107, 39–51.

- Pinto, G.; Deltetto, D.; Capozzoli, A. Data-driven district energy management with surrogate models and deep reinforcement learning. Appl. Energy 2021, 304, 117642.

- Li, J.; Li, Y.; Yu, T. Distributed deep reinforcement learning-based multi-objective integrated heat management method for water-cooling proton exchange membrane fuel cell. Case Stud. Therm. Eng. 2021, 27, 101284.

- Lissa, P.; Schukat, M.; Keane, M.; Barrett, E. Transfer learning applied to DRL-Based heat pump control to leverage microgrid energy efficiency. Smart Energy 2021, 3, 100044.

- Mbuwir, B.V.; Spiessens, F.; Deconinck, G. Benchmarking regression methods for function approximation in reinforcement learning: Heat pump control. In Proceedings of the 2019 IEEE PES Innovative Smart Grid Technologies Europe (ISGT-Europe), Bucharest, Romania, 29 September–2 October 2019; IEEE: Piscataway, NJ, USA, 2019; pp. 1–5.

- Zhang, J.; Wang, Q.; Wang, T. A Two-stage Optimal Controller For Hydraulic Loading System with Mechanical Compensation. In Proceedings of the 2019 IEEE International Conference on Cybernetics and Intelligent Systems (CIS) and IEEE Conference on Robotics, Automation and Mechatronics (RAM), Bangkok, Thailand, 18–20 November 2019; IEEE: Piscataway, NJ, USA, 2019; pp. 304–309.

- Patyn, C.; Ruelens, F.; Deconinck, G. Comparing neural architectures for demand response through model-free reinforcement learning for heat pump control. In Proceedings of the 2018 IEEE International Energy Conference (ENERGYCON), Limassol, Cyprus, 3–7 June 2018; IEEE: Piscataway, NJ, USA, 2018; pp. 1–6.

- Cho, S.; Park, C.S. Rule reduction for control of a building cooling system using explainable AI. J. Build. Perform. Simul. 2022, 15, 832–847.

- Ding, H.; Xu, Y.; Chew Si Hao, B.; Li, Q.; Lentzakis, A. A safe reinforcement learning approach for multi-energy management of smart home. Electr. Power Syst. Res. 2022, 210, 108120.

- Mbuwir, B.V.; Geysen, D.; Spiessens, F.; Deconinck, G. Reinforcement learning for control of flexibility providers in a residential microgrid. IET Smart Grid 2020, 3, 98–107.

- Ahn, K.U.; Park, C.S. Application of deep Q-networks for model-free optimal control balancing between different HVAC systems. Sci. Technol. Built Environ. 2020, 26, 61–74.

- Abe, T.; Oh-hara, S.; Ukita, Y. Adoption of reinforcement learning for the intelligent control of a microfluidic peristaltic pump. Biomicrofluidics 2021, 15, 034101.

- Abe, T.; Oh-hara, S.; Ukita, Y. Integration of reinforcement learning to realize functional variability of microfluidic systems. Biomicrofluidics 2022, 16, 024106.

- Seo, G.; Yoon, S.; Kim, M.; Mun, C.; Hwang, E. Deep Reinforcement Learning-Based Smart Joint Control Scheme for On/Off Pumping Systems in Wastewater Treatment Plants. IEEE Access 2021, 9, 95360–95371.

- Langer, L.; Volling, T. A reinforcement learning approach to home energy management for modulating heat pumps and photovoltaic systems. Appl. Energy 2022, 327, 120020.

- Li, J.; Li, Y.; Yu, T. An optimal coordinated proton exchange membrane fuel cell heat management method based on large-scale multi-agent deep reinforcement learning. Energy Rep. 2021, 7, 6054–6068.

- Zhang, Y.; Zhao, Q. Energy Saving Algorithm of HVAC System Based on Deep Reinforcement Learning with Modelica Model. In Proceedings of the 2022 41st Chinese Control Conference (CCC), Hefei, China, 25–27 July 2022; IEEE: Piscataway, NJ, USA, 2022; pp. 5277–5282.

- Mbuwir, B.V.; Manna, C.; Spiessens, F.; Deconinck, G. Benchmarking reinforcement learning algorithms for demand response applications. In Proceedings of the 2020 IEEE PES Innovative Smart Grid Technologies Europe (ISGT-Europe), The Hague, The Netherlands, 26–28 October 2020; IEEE: Piscataway, NJ, USA, 2020; pp. 289–293.

- Hajgató, G.; Paál, G.; Gyires-Tóth, B. Deep Reinforcement Learning for Real-Time Optimization of Pumps in Water Distribution Systems. J. Water Resour. Plan. Manag. 2020, 146, 04020079.

- Blad, C.; Bøgh, S.; Kallesøe, C.S. Data-driven Offline Reinforcement Learning for HVAC-systems. Energy 2022, 261, 125290.

- Shao, Z.; Si, F.; Kudenko, D.; Wang, P.; Tong, X. Predictive scheduling of wet flue gas desulfurization system based on reinforcement learning. Comput. Chem. Eng. 2020, 141, 107000.

- Qiu, S.; Li, Z.; Li, Z.; Li, J.; Long, S.; Li, X. Model-free control method based on reinforcement learning for building cooling water systems: Validation by measured data-based simulation. Energy Build. 2020, 218, 110055. [Google Scholar] [CrossRef]

- Anderson, E.R.; Steward, B.L. Reinforcement Learning for Active Noise Control in a Hydraulic System. J. Dyn. Syst. Meas. Control 2021, 143, 061006. [Google Scholar] [CrossRef]

- Xu, J.; Wang, H.; Rao, J.; Wang, J. Zone scheduling optimization of pumps in water distribution networks with deep reinforcement learning and knowledge-assisted learning. Soft Comput. 2021, 25, 14757–14767. [Google Scholar] [CrossRef]

- Campoverde, L.M.S.; Tropea, M.; De Rango, F. An IoT based Smart Irrigation Management System using Reinforcement Learning modeled through a Markov Decision Process. In Proceedings of the 2021 IEEE/ACM 25th International Symposium on Distributed Simulation and Real Time Applications (DS-RT), Valencia, Spain, 27–29 September 2021; IEEE: Piscataway, NJ, USA, 2021; pp. 1–4. [Google Scholar] [CrossRef]

- Ruelens, F.; Iacovella, S.; Claessens, B.; Belmans, R. Learning Agent for a Heat-Pump Thermostat with a Set-Back Strategy Using Model-Free Reinforcement Learning. Energies 2015, 8, 8300–8318. [Google Scholar] [CrossRef]

- John, D.; Kaltschmitt, M. Control of a PVT-Heat-Pump-System Based on Reinforcement Learning–Operating Cost Reduction through Flow Rate Variation. Energies 2022, 15, 2607. [Google Scholar] [CrossRef]

- Oroojeni Mohammad Javad, M.; Agboola, S.; Jethwani, K.; Zeid, I.; Kamarthi, S. Reinforcement Learning Algorithm for Blood Glucose Control in Diabetic Patients. In Proceedings of the Volume 14: Emerging Technologies; Safety Engineering and Risk Analysis; Materials: Genetics to Structures, Houston, TX, USA, 13–19 November 2015; American Society of Mechanical Engineers: New York, NY, USA, 2015; p. V014T06A009. [Google Scholar] [CrossRef]

- Yang, L.; Nagy, Z.; Goffin, P.; Schlueter, A. Reinforcement learning for optimal control of low exergy buildings. Appl. Energy 2015, 156, 577–586. [Google Scholar] [CrossRef]

- Ruelens, F.; Claessens, B.J.; Vandael, S.; De Schutter, B.; Babuska, R.; Belmans, R. Residential Demand Response of Thermostatically Controlled Loads Using Batch Reinforcement Learning. IEEE Trans. Smart Grid 2017, 8, 2149–2159. [Google Scholar] [CrossRef]

- Vázquez-Canteli, J.; Kämpf, J.; Nagy, Z. Balancing comfort and energy consumption of a heat pump using batch reinforcement learning with fitted Q-iteration. Energy Procedia 2017, 122, 415–420. [Google Scholar] [CrossRef]

- Kazmi, H.; D’Oca, S. Demonstrating model-based reinforcement learning for energy efficiency and demand response using hot water vessels in net-zero energy buildings. In Proceedings of the 2016 IEEE PES Innovative Smart Grid Technologies Conference Europe (ISGT-Europe), Ljubljana, Slovenia, 9–12 October 2016; IEEE: Piscataway, NJ, USA, 2016; pp. 1–6. [Google Scholar] [CrossRef]

- Peirelinck, T.; Ruelens, F.; Deconinck, G. Using reinforcement learning for optimizing heat pump control in a building model in Modelica. In Proceedings of the 2018 IEEE International Energy Conference (ENERGYCON), Limassol, Cyprus, 3–7 June 2018; IEEE: Piscataway, NJ, USA, 2018; pp. 1–6. [Google Scholar] [CrossRef]

- Heidari, A.; Marechal, F.; Khovalyg, D. An adaptive control framework based on Reinforcement learning to balance energy, comfort and hygiene in heat pump water heating systems. J. Phys. Conf. Ser. 2021, 2042, 012006. [Google Scholar] [CrossRef]

- Urieli, D.; Stone, P. A learning agent for heat-pump thermostat control. In Proceedings of the 12th International Conference on Autonomous Agents and Multiagent Systems, Saint Paul, MN, USA, 6–10 May 2013; pp. 1093–1100. Available online: https://www.scopus.com/inward/record.uri?eid=2-s2.0-84899440153&partnerID=40&md5=42dc765943588e8b391dce1c4a79b654 (accessed on 1 June 2024).

- Wang, C.; Bowes, B.; Tavakoli, A.; Adams, S.; Goodall, J.; Beling, P. Smart stormwater control systems: A reinforcement learning approach. In Proceedings of the 17th International Conference on Information Systems for Crisis Response and Management (ISCRAM 2020), Blacksburg, VA, USA, 11 May 2020; Hughes, A.L., McNeill, F., Zobel, C.W., Eds.; pp. 2–13. Available online: https://www.researchgate.net/profile/Cheng-Wang-109/publication/343426754_Smart_Stormwater_Control_Systems_A_Reinforcement_Learning_Approach/links/645d0691f43b8a29ba44dfad/Smart-Stormwater-Control-Systems-A-Reinforcement-Learning-Approach.pdf (accessed on 1 May 2023).

- Da Silva, L.B.L.; Alencar, M.H.; De Almeida, A.T. Multidimensional flood risk management under climate changes: Bibliometric analysis, trends and strategic guidelines for decision-making in urban dynamics. Int. J. Disaster Risk Reduct. 2020, 50, 101865. [Google Scholar] [CrossRef]

- Beaver, D. Reflections on scientific collaboration (and its study): Past, present, and future. Scientometrics 2001, 52, 365–377. [Google Scholar] [CrossRef]

- Laudel, G. Collaboration and reward. Res. Eval. 2002, 11, 3–15. [Google Scholar] [CrossRef]

- Fonseca, B.D.P.F.E.; Sampaio, R.B.; Fonseca, M.V.D.A.; Zicker, F. Co-authorship network analysis in health research: Method and potential use. Health Res. Policy Syst. 2016, 14, 34. [Google Scholar] [CrossRef]

- van Eck, N.J.; Waltman, L. VOSviewer Manual; Universiteit Leiden: Leiden, The Netherlands, 2013. [Google Scholar]

- Ferreira, F.A.F. Mapping the field of arts-based management: Bibliographic coupling and co-citation analyses. J. Bus. Res. 2018, 85, 348–357. [Google Scholar] [CrossRef]

- White, H.D.; McCain, K.W. Visualizing a discipline: An author co-citation analysis of information science, 1972–1995. J. Am. Soc. Inf. Sci. 1998, 49, 327–355. [Google Scholar]

- Hsu, C.-L.; Westland, J.C.; Chiang, C.-H. Editorial: Electronic Commerce Research in seven maps. Electron. Commer. Res. 2015, 15, 147–158. [Google Scholar] [CrossRef]

- Surwase, G.; Sagar, A.; Kademani, B.S.; Bhanumurthy, K. Co-citation Analysis: An Overview. In Proceedings of the BOSLA National Conference, CDAC, Mumbai, India, 16–17 September 2011; p. 9. [Google Scholar]

- Mas-Tur, A.; Roig-Tierno, N.; Sarin, S.; Haon, C.; Sego, T.; Belkhouja, M.; Porter, A.; Merigó, J.M. Co-citation, bibliographic coupling and leading authors, institutions and countries in the 50 years of Technological Forecasting and Social Change. Technol. Forecast. Soc. Change 2021, 165, 120487. [Google Scholar] [CrossRef]

- Deisenroth, M.P.; Rasmussen, C.E. PILCO: A model-based and data-efficient approach to policy search. In Proceedings of the 28th International Conference on Machine Learning, ICML, Bellevue, WA, USA, 28 June–2 July 2011; pp. 465–472. [Google Scholar]

- Candelieri, A.; Perego, R.; Archetti, F. Intelligent Pump Scheduling Optimization in Water Distribution Networks; Springer International Publishing: Berlin/Heidelberg, Germany, 2019; Volume 11353 LNCS. [Google Scholar]

- Wang, Z.; Schaul, T.; Van Hasselt, H.; De Freitas, N.; Lanctot, M.; Hessel, M. Dueling Network Architectures for Deep Reinforcement Learning. In Proceedings of the 33rd International Conference on Machine Learning, ICML, New York, NY, USA, 19–24 June 2016; pp. 2939–2947. [Google Scholar]

- Bratko, I.; Urbančič, T.; Sammut, C. Behavioural Cloning: Phenomena, Results and Problems. In Automated Systems Based on Human Skill; Pergamon Press: Oxford, UK, 1995; pp. 143–150. [Google Scholar]

- Odonkor, P.; Lewis, K. Control of Shared Energy Storage Assets Within Building Clusters Using Reinforcement Learning. In Proceedings of the Volume 2A: 44th Design Automation Conference, Quebec City, QC, Canada, 26–29 August 2018; American Society of Mechanical Engineers: New York, NY, USA, 2018; p. V02AT03A028. [Google Scholar] [CrossRef]

- van Hasselt, H.; Guez, A.; Silver, D. Deep Reinforcement Learning with Double Q-Learning. In Proceedings of the Thirtieth AAAI Conference on Artificial Intelligence (AAAI-16), Phoenix, AZ, USA, 12–17 February 2016; pp. 2094–2100. [Google Scholar] [CrossRef]

- Wiering, M.; van Otterlo, M. (Eds.) Reinforcement Learning: State-of-the-Art. In Adaptation, Learning, and Optimization; Springer: Berlin/Heidelberg, Germany, 2012; Volume 12. [Google Scholar] [CrossRef]

- Ernst, D.; Geurts, P.; Wehenkel, L. Tree-based batch mode reinforcement learning. J. Mach. Learn. Res. 2005, 6, 503–556. [Google Scholar]

- Ernst, D.; Capitanescu, F.; Glavic, M.; Wehenkel, L. Reinforcement Learning Versus Model Predictive Control: A Comparison on a Power System Problem. IEEE Trans. Syst. Man Cybern. Part B 2009, 39, 517–529. [Google Scholar] [CrossRef]

- Guo, Y.; Feng, S.; Roux, N.L.; Chi, E.; Lee, H.; Chen, M. Batch Reinforcement Learning Through Continuation Method. In Proceedings of the ICLR, Vienna, Austria, 4 May 2021; pp. 1–11. [Google Scholar]

- Zhan, Y.; Li, P.; Guo, S. Experience-Driven Computational Resource Allocation of Federated Learning by Deep Reinforcement Learning. In Proceedings of the 2020 IEEE International Parallel and Distributed Processing Symposium (IPDPS), New Orleans, LA, USA, 18–22 May 2020; IEEE: Piscataway, NJ, USA, 2020; pp. 234–243. [Google Scholar] [CrossRef]

- Sewak, M. Deep Reinforcement Learning: Frontiers of Artificial Intelligence; Springer: Singapore, 2019. [Google Scholar]

- Torabi, F.; Warnell, G.; Stone, P. Behavioral Cloning from Observation. In Proceedings of the 27th International Joint Conference on Artificial Intelligence, Stockholm, Sweden, 13–19 July 2018; pp. 1–9. [Google Scholar]

- Bojarski, M.; Del Testa, D.; Dworakowski, D.; Firner, B.; Flepp, B.; Goyal, P.; Jackel, L.D.; Monfort, M.; Muller, U.; Zhang, J.; et al. End to End Learning for Self-Driving Cars. arXiv 2016, arXiv:1604.07316. [Google Scholar]

- Weiss, K.; Khoshgoftaar, T.M.; Wang, D. A survey of transfer learning. J. Big Data 2016, 3, 9. [Google Scholar] [CrossRef]

- Dong, H.; Ding, Z.; Zhang, S. (Eds.) Deep Reinforcement Learning: Fundamentals, Research and Applications; Springer: Singapore, 2020; ISBN 9789811540943. [Google Scholar] [CrossRef]

- Hussein, A.; Gaber, M.M.; Elyan, E.; Jayne, C. Imitation Learning: A Survey of Learning Methods. ACM Comput. Surv. 2018, 50, 1–35. [Google Scholar] [CrossRef]

- Achiam, J.; Sastry, S. Surprise-Based Intrinsic Motivation for Deep Reinforcement Learning. arXiv 2017, arXiv:1703.01732. [Google Scholar]

- Barto, A.G.; Mahadevan, S. Recent Advances in Hierarchical Reinforcement Learning. Discrete Event Dyn. Syst. 2003, 13, 41–77. [Google Scholar]

- Ben Slama, S.; Mahmoud, M. A deep learning model for intelligent home energy management system using renewable energy. Eng. Appl. Artif. Intell. 2023, 123, 106388. [Google Scholar] [CrossRef]

- Heidari, A.; Khovalyg, D. DeepValve: Development and experimental testing of a Reinforcement Learning control framework for occupant-centric heating in offices. Eng. Appl. Artif. Intell. 2023, 123, 106310. [Google Scholar] [CrossRef]

- Dalamagkidis, K.; Kolokotsa, D.; Kalaitzakis, K.; Stavrakakis, G. Reinforcement learning for energy conservation and comfort in building. Build. Environ. 2007, 42, 2668–2698. [Google Scholar] [CrossRef]

- Kou, P.; Liang, D.; Wang, C.; Wu, Z.; Gao, L. Safe deep reinforcement learning-based constrained optimal control scheme for active distribution networks. Appl. Energy 2020, 264, 114772. [Google Scholar] [CrossRef]

- Vázquez-Canteli, J.R.; Nagy, Z. Reinforcement learning for demand response: A review of algorithms and modeling techniques. Appl. Energy 2019, 235, 1072–1089. [Google Scholar] [CrossRef]

- Park, J.Y.; Dougherty, T.; Fritz, H.; Nagy, Z. LightLearn: An adaptive and occupant centered controller for lighting based on reinforcement learning. Build. Environ. 2019, 147, 397–414. [Google Scholar] [CrossRef]

- Raju, L.; Sankar, S.; Milton, R.S. Distributed Optimization of Solar Micro-grid Using Multi Agent Reinforcement Learning. Procedia Comput. Sci. 2015, 46, 231–239. [Google Scholar] [CrossRef]

- Fant, S. Predictive Maintenance is a Key to Saving Future Resources. 2021. Available online: https://www.greenbiz.com/article/predictive-maintenance-key-saving-future-resources (accessed on 1 May 2023).

- Ghobakhloo, M. The future of manufacturing industry: A strategic roadmap toward Industry 4.0. J. Manuf. Technol. Manag. 2018, 29, 910–936. [Google Scholar] [CrossRef]

- Fausing Olesen, J.; Shaker, H.R. Predictive Maintenance for Pump Systems and Thermal Power Plants: State-of-the-Art Review, Trends and Challenges. Sensors 2020, 20, 2425. [Google Scholar] [CrossRef]

| Source | Scopus |
|---|---|
| Criterion 1 | Pump* in the article title only |
| Criterion 2 | Reinforcement learning in the article title, abstract, or keywords |
| Research field | Engineering, computer science, energy, mathematics, environmental science, medicine, materials science, neuroscience, physics and astronomy, decision sciences |
| Source type | Journals and conference proceedings |

| Documents selected for the analysis |
|---|
| [14], [26], [27], [28], [29], [17], [30], [31], [32], [33], [34], [35], [36], [37], [38], [39], [40], [41], [18], [42], [43], [44], [45], [46], [47], [48], [49], [50], [51], [52], [53], [19], [54], [55], [56], [57], [58], [59], [60], [61], [62], [63], [64], [65], [66], [67], [68], [69], [70], [71], [72], [73], [74], [75], [76], [77], [78], [79], [80], [81], [82], [83], [20], [84], [85], [86], [87], [88], [89], [90], [91], [92], [93], [94], [95], [96], [97], [98], [99], [100], [101], [102], [103], [104], [105], [21], [106], [107], [108], [109], [110], [111], [112], [113], [114], [115], [116], [117], [118], [119] |

| Type of analysis | Co-authorship |
|---|---|
| Unit of analysis | Authors |
| Counting method | Full counting |
| Min. number of documents per author | 1 |
| Min. number of citations | 0 |
| Threshold | 381 |

| Rank | Author | Documents | Citations | Total Link Strength |
|---|---|---|---|---|
| 1 | Ruelens, Frederik | 4 | 360 | 12 |
| 2 | Belmans, Ronnie | 2 | 314 | 8 |
| 3 | Claessens, Bert J. | 2 | 314 | 8 |
| 4 | Babuska, Robert | 1 | 258 | 5 |
| 5 | De Schutter, Bart | 1 | 258 | 5 |
| 6 | Vandael, Stijn | 1 | 258 | 5 |
| 7 | Nagy, Zoltan | 3 | 202 | 12 |
| 8 | Goffin, Philippe | 1 | 150 | 3 |
| 9 | Schlueter, Arno | 1 | 150 | 3 |
| 10 | Yang, Lei | 1 | 150 | 3 |
| 11 | Cheng, Yujie | 1 | 143 | 6 |
| 12 | Ding, Yu | 1 | 143 | 6 |
| 13 | Lu, Chen | 1 | 143 | 6 |
| 14 | Ma, Jian | 1 | 143 | 6 |
| 15 | Ma, Liang | 1 | 143 | 6 |
| 16 | Suo, Mingliang | 1 | 143 | 6 |
| 17 | Tao, Laifa | 1 | 143 | 6 |
| 18 | Gruden, Cyndee L. | 1 | 81 | 3 |
| 19 | Kerkez, Branko | 1 | 81 | 3 |
| 20 | Lewis, Matthew J. | 1 | 81 | 3 |

| Rank | Author | Citations | Total Link Strength |
|---|---|---|---|
| 1 | Silver, D. | 80 | 4161 |
| 2 | Kavukcuoglu, K. | 41 | 2211 |
| 3 | Antonoglou, I. | 38 | 2159 |
| 4 | Li, I. | 48 | 2150 |
| 5 | Mnih, V. | 39 | 2105 |
| 6 | Wang, Z. | 44 | 1965 |
| 7 | Sutton, R.S. | 45 | 1903 |
| 8 | Wang, Y. | 45 | 1899 |
| 9 | Wierstra, D. | 34 | 1864 |
| 10 | Riedmiller, M. | 36 | 1775 |

| Type of analysis | Bibliographic coupling |
|---|---|
| Unit of analysis | Documents |
| Counting method | Full counting |
| Min. number of citations | 1 |
| Threshold | 77 |

| Rank | Documents | Citations | Total Link Strength |
|---|---|---|---|
| 1 | Ruelens (2017) [113] | 258 | 39 |
| 2 | Yang (2015) [112] | 150 | 22 |
| 3 | Ding (2019) [14] | 143 | 29 |
| 4 | Mullapudi (2020) [80] | 81 | 43 |
| 5 | Filipe (2019) [81] | 78 | 12 |
| 6 | Liu (2019) [84] | 72 | 17 |
| 7 | Pinto (2021) [85] | 71 | 49 |
| 8 | Ahn (2020) [94] | 70 | 46 |
| 9 | Ruelens (2015) [109] | 56 | 36 |
| 10 | Qiu (2020) [105] | 54 | 33 |

| Extraction fields | Title and abstract |
|---|---|
| Counting method | Full counting |
| Min. number of occurrences | 5 |
| Threshold | 62 |

| Rank | Keyword | Occurrence |
|---|---|---|
| 1 | Reinforcement Learning | 97 |
| 2 | Reinforcement Learnings | 44 |
| 3 | Learning Systems | 34 |
| 4 | Deep Learning | 33 |
| 5 | Energy Utilization | 24 |

| Reinforcement Learning Method | References |
|---|---|
| Q-learning | [29,35,39,71,74,96,105,111,112] |
| Proximal policy optimization (PPO) | [18,38,40,48,49,61,66,79,81,92,97,104,107] |
| Fitted Q-iteration (FQI) | [34,76,93,109,113,114,116] |
| Deep deterministic policy gradient (DDPG) | [19,26,46,54,55,57,59,66,69,72,75,84,86,98,99,100,119] |
| Deep Q-networks (DQN) | [8,9,18,64,68,77,80,83,91,96,120] |
| Double deep Q-networks | [43,70,117] |
| Soft actor–critic (SAC) | [18,27,30,31,32,44,58,59,78,85,106] |
| Integral reinforcement learning (IRL) | [89] |
| Dueling deep Q-networks | [17,56,59,102] |
| Parallel learning | [20] |
| Transfer learning | [17,26,32,42,87] |
| Behavioral cloning | [73] |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Aribisala, A.A.; Ghori, U.A.S.; Cavalcante, C.A.V. The Application of Reinforcement Learning to Pumps—A Systematic Literature Review. Machines 2025, 13, 480. https://doi.org/10.3390/machines13060480
