Abstract

In the past decade, although correlation filter (CF) methods have developed rapidly in the field of unmanned aerial vehicle (UAV) tracking, their ability to discriminate between target and background still needs further investigation because of boundary effects. Moreover, the target may be lost when it is occluded or leaves the field of view. To address these limitations, this work proposes an improved CF tracking algorithm that builds on existing ones. Firstly, to handle scale changes of the tracked target, an adaptive scale box is proposed to adjust the scale of the target box. Secondly, to address boundary effects caused by fast maneuvering, a spatio-temporal search strategy is presented, which utilizes spatial context from the target region in the current frame and temporal information from preceding frames. Thirdly, to address tracking loss due to occlusion or out-of-view situations, this work proposes a fusion strategy based on the YOLOv5s_MSES target detection algorithm. Finally, the experimental results show that, compared to the baseline algorithm on the UAV123 dataset, the distance precision (DP) and area under curve (AUC) of the proposed algorithm increase by 14.07% and 14.39%, respectively, and the frames per second (FPS) amounts to . Additionally, on the OTB100 dataset, the proposed algorithm demonstrates significant improvements in DP across four challenging attributes compared to the baseline algorithm, with increases of 12.85% for scale variation (SV), 16.45% for fast motion (FM), 18.66% for occlusion (OCC), and 17.09% for out-of-view (OV) scenarios. To sum up, the proposed algorithm meets the real-time requirement while achieving higher precision than the baseline, and its comprehensive performance is superior to some existing methods.

1. Introduction

Currently, as one of the crucial tasks in computer vision, target tracking plays an important role in various related fields such as radar tracking [1,2], video surveillance [3,4,5], and UAV detection [6,7,8,9]. The attainment of precise, stable, and efficient target tracking remains a formidable challenge owing to the intricate nature of UAV application scenarios and the constrained computational resources onboard the platform [10]. The challenges faced by UAV target tracking mainly include the following aspects. (1) There are a large number of ground interference targets in the wide field of view in the air, especially in bad weather conditions (such as fog, rain, strong light) and complex terrain environments (such as urban tall buildings, mountain terrain), resulting in reduced target feature prominence and increased confusion between target and background. (2) Limited shooting altitude and oblique viewing angles typically result in small pixel proportions of tracked targets in ground imaging, making them vulnerable to background noise interference. (3) The UAV is easily affected by wind disturbance during flight, resulting in blurred imaging and target displacement, which seriously affects the tracking continuity. (4) Due to limited computing resources of a UAV, it is challenging to maintain high accuracy while using target detection and a tracking algorithm with low complexity. Despite great advancements in UAV target detection and tracking algorithms, there still exists significant potential for improvement in terms of both high precision and real-time performance due to the aforementioned challenges [11,12].

Normally, CF tracking algorithms determine target positions by generating response graphs via online learning CF, thereby transforming time domain computation into frequency domain computation and significantly enhancing tracking speed. The classical CF trackers encompass MOSSE [13], KCF [14], spatial and temporal regularization [15], adaptive scale estimation [16] and the fusion of complementary learners [17]. In 2010, the MOSSE algorithm [13] was proposed, the core idea of which is to realize real-time target positioning based on filtering and convolution operation. In 2014, the target tracking algorithm based on correlation filtering made a breakthrough. Henriques et al. [14] presented the KCF algorithm, which introduced a kernel technique to optimize the correlation filter in the frequency domain, thus greatly improving the speed and accuracy of target tracking. In the same year, Danelljan et al. [18] first considered the role of color features in single-target tracking, comprehensively evaluated the effects of features extracted from various color spaces in target tracking, and finally proposed a single-target tracking method based on multi-channel color features. In 2017, the CSRDCF algorithm proposed by Lukezic et al. [19] used the color model to construct a spatial-restricted mask, which enhanced the robustness and accuracy of tracking and suppressed the boundary effect. Building upon these algorithms, researchers have made many great improvements [20,21,22]. However, the majority of existing studies primarily focused on how to enhance the detection accuracy of tracking algorithms, while the real-time requirements always received less attention. For instance, the FPS of the algorithm [20] was only , which makes it difficult to deploy for real-time UAV target tracking tasks. 
Among these algorithms, the KCF algorithm, which represents a target tracking algorithm based on CF, not only exhibits high tracking accuracy and robustness, but also possesses fast tracking speed to meet the real-time requirements. However, the KCF algorithm may lose target features in case of serious occlusion, target deformation, and background confusion, which leads to a reduction in tracking accuracy and even the failure of tracking. Additionally, the fixed tracking target box in the KCF algorithm does not account for changes in scale of the target tracking, which can result in a reduction in tracking accuracy.

Motivated by the above discussions, this work proposes a real-time target detection and tracking algorithm for the UAV. Firstly, an adaptive scale box is introduced so that the target box can dynamically adjust its scale in response to changes in the target size. Secondly, because boundary effects make the tracker prone to drifting when the target is occluded or rotated, a spatio-temporal search strategy is introduced. It tracks the target by utilizing the spatial information surrounding the target in the current frame, along with the temporal information from the previous frame, and thereby mitigates boundary effects. Finally, the average peak correlation energy (APCE) [23] is used to determine whether the target is occluded or lost, and the YOLOv5s_MSES [24] target detection algorithm is employed to redetect and retrack the lost target. In summary, the innovations of this work are listed as follows:

- Based on some existent search strategies, this work initially proposes a new spatio-temporal search strategy (STS), which can comprehensively integrate the information of time and space to dynamically capture the target changes by incorporating historical data while retaining spatial information. Then, different from the traditional ones, the proposed STS can more effectively preserve the valuable feature information of the target, mitigate the target drift issues induced by the boundary effect, and efficiently enhance the search accuracy.

- This work innovatively puts forward an anti-loss strategy for target retracking (TR) based on the YOLOv5s_MSES algorithm. Such a strategy firstly utilizes the APCE to decide whether the tracking target will be obscured or out of the view field, and if so, the YOLOv5s_MSES is exploited to redetect all similar targets. Then, the APCE is further used to determine the real tracking target and track it again by resorting to the KCF algorithm. Thus, our TR strategy not only solves the problem that the current CF algorithm cannot retrack the lost target, but also facilitates the enhancement of tracking accuracy.

- In order to solve the issue of the fixed scale induced by the KCF algorithm, this work introduces an adaptive scale box method (ASB), enabling the dynamic adjustment of the scale of the target tracking box, which can improve the accuracy and stability for our derived algorithm, particularly in the case of large size variation.

The remainder of this work is organized as follows. The related works are reviewed in Section 2. The details of the improvement of the KCF algorithm are presented in Section 3. Section 4 shows the experimental results. Finally, we summarize the work in Section 5.

Notation 1.

The notations of this work are standard. ⊙ represents the element-wise multiplication, $\hat{x}$ denotes the discrete Fourier transform of the term $x$, and the kernel function $\kappa(x, x') = \langle \varphi(x), \varphi(x') \rangle$ is defined as the dot product of the vectors $\varphi(x)$ and $\varphi(x')$.

2. Related Work

2.1. Boundary Effects

Even though it is possible to prevent the tracker from overfitting by increasing training samples through the cyclic shift operation, it inevitably leads to the boundary effects of the CF tracker. Recently, many methods have been proposed to solve these negative effects [25,26,27,28]. Liu et al. [26] designed a context pyramid to effectively employ the target and its contextual information in the DCF learning process. Moorthy et al. [28] added wraparound patches to the tracker for suppressing the target drift caused by the boundary effects. However, these above studies only considered the spatial information of the current frame of the tracking target. Based on Ref. [28], this work proposes an improved spatio-temporal search strategy, which fully considers the position information of previous frames tracking the target and the contextual information around the current frame tracking the target, so that it can capture the dynamic changes of the target by combining historical data while retaining spatial information. In particular, the negative impacts of the boundary effects can be effectively eliminated.

2.2. Tracking Loss

Normally, the loss of the tracking target falls into two primary categories: (1) the tracking target is obscured by other objects or disturbed by the background; (2) the tracking target leaves the field of view of the tracker. However, most studies only considered background interference and object occlusion in trackers [29,30,31,32].

In recent years, together with the rapid developments of deep learning, researchers have combined target detection algorithms with the CF ones, and achieved some elegant results [33,34,35]. Cai et al. [33] first detected all moving targets using the YOLO target detection algorithm, and then combined the KCF algorithm with the Kalman filter to track multiple targets. Chen et al. [34] employed an SSD-FT target detection algorithm to redetect the tracking target after every fixed number of frames to eliminate the accumulated error. Although these algorithms combine target detection algorithms with tracking ones, the detectors are not used efficiently and the combined trackers struggle to meet the real-time requirements of tracking. Moreover, whether the target is occluded or out of the field of view, the final result is the loss of the target. Therefore, this work introduces the small target detection algorithm YOLOv5s_MSES on the basis of the KCF algorithm to solve the problem of target loss. In contrast to other detection algorithms, YOLOv5s_MSES has a smaller parameter scale and higher tracking accuracy. It not only effectively detects the target for tracking, but also preserves the real-time performance of the tracking algorithm. Ref. [34] invoked the SSD-FT target detection algorithm at a fixed frame interval. However, since target loss may not occur at all during the tracking procedure, excessive use of the detection algorithm reduces the FPS, affects the real-time performance, and wastes the limited computing resources. Moreover, the FPS of YOLOv5s_MSES is as high as 51, which more than meets the real-time requirement, while SSD-FT [34] runs at less than 1 FPS. Therefore, inspired by Ref. [34], this work first utilizes the APCE to determine whether the tracked target appears to be obscured or out of the tracker's field of view, and if so, YOLOv5s_MSES is employed to redetect all similar targets. Then, the APCE is further utilized to select the tracking target, which is tracked again via the proposed algorithm.

3. Methods

3.1. YOLOv5s_MSES Target Detection Algorithm

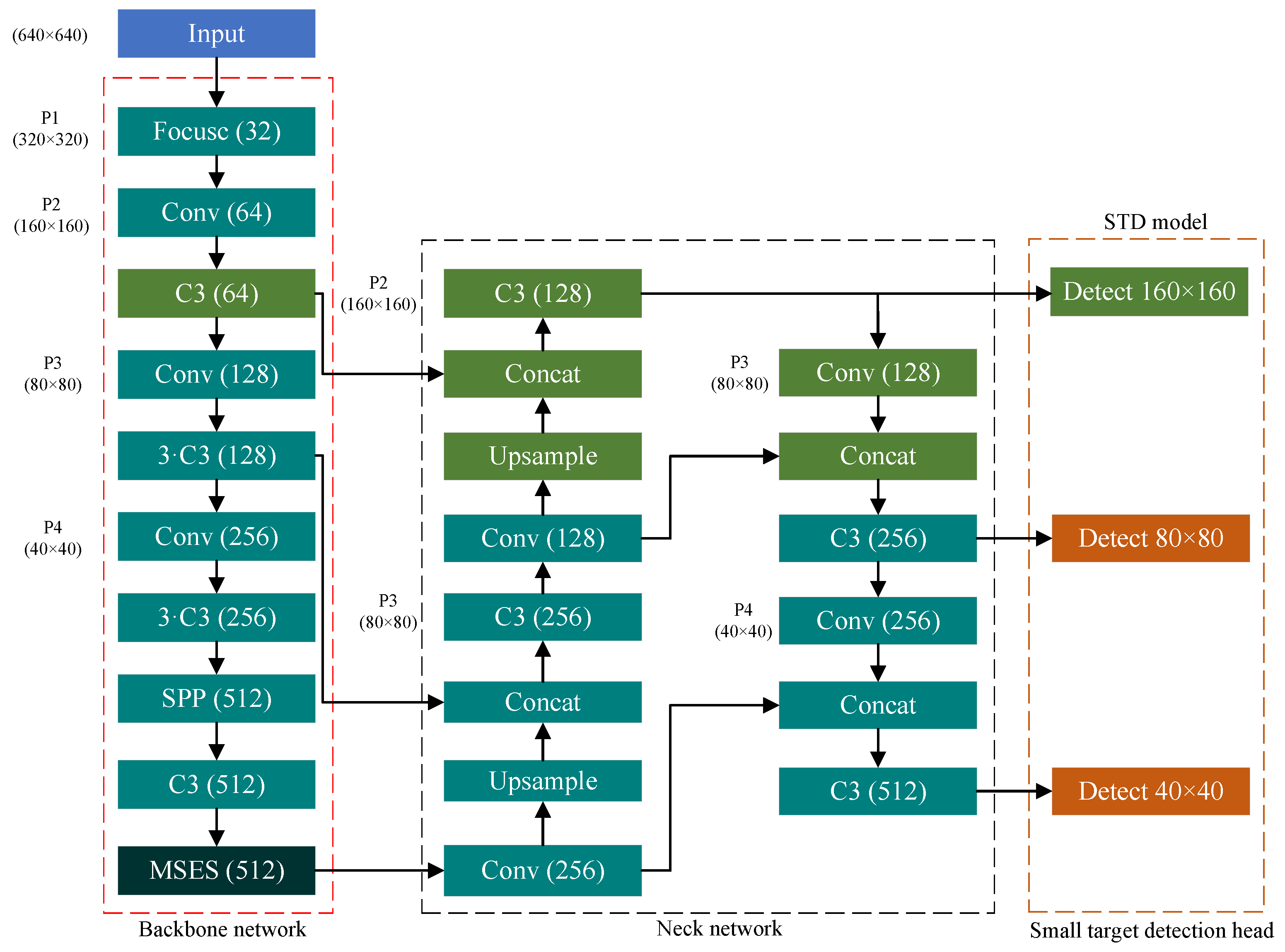

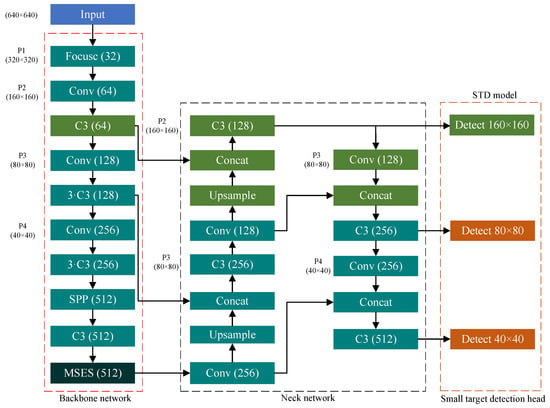

In order to improve the detection accuracy of the UAV target tracking algorithm, this work introduces an improved YOLOv5s_MSES algorithm, whose overall architecture is shown in Figure 1 and includes the following four key components. Firstly, in the data preprocessing stage, the Mosaic data augmentation method is used to enhance the diversity of the training data, and the input image is uniformly resized to the preset size with adaptive image scaling. Secondly, the backbone network is composed of several modules. The Focus module uses feature slicing and replication operations to achieve efficient feature extraction; the newly introduced MSES attention module enhances the ability of the network to capture features of small-sized targets; the spatial pyramid pooling (SPP) module extends the receptive field of the network through the combination of multi-scale pooling operations and skip connections. Thirdly, in the feature fusion part, the algorithm integrates the bidirectional feature transfer mechanism of FPN and PAN. Finally, the detection head adopts a multi-scale detection strategy with three detection layers of 40 × 40, 80 × 80 and 160 × 160. In addition, experimental results show that, compared with the existing advanced algorithms [36,37], YOLOv5s_MSES exhibits superior performance on the VisDrone2019 dataset and is well suited for small object detection tasks.

Figure 1.

The YOLOv5s_MSES model structure.

3.2. KCF Target Tracking Algorithm

The KCF algorithm acquires a filled box by filling the target box given in the initial frame of the tracking. In the next frame of the image, the filled box is cyclically shifted to obtain a circulant matrix, which collects a large number of positive and negative samples. The region containing the target is designated as the positive samples, while the remainder is considered as the negative ones. These collected samples are used to train the target detector. Subsequently, the trained target detector is employed to ascertain whether the predicted location is the location where the target appears, and the filled box of the previous frame is utilized to continue the cyclic shift. The images in the obtained sample box are divided into positive and negative samples again, and the sample with the largest response is selected as the filled box of the target in the current frame. Finally, the samples obtained from the current frame are used to update the target detection classifier. During this process, the computation is accelerated by utilizing the diagonalization property of the circulant matrix in Fourier space and the fast Fourier transform. The process is represented by the following formulas. (1) Let a set of training samples be $\{(x_i, y_i)\}_{i=1}^{n}$, where $x_i$ denotes input sample $i$ and $y_i$ represents the response of the $i$th sample. (2) The regression function is $f(x) = w^{T}x$, where $w$ is the column vector of weight coefficients, which can be solved by using the least squares method. Equation (1) is given as follows:

$$\min_{w} \sum_{i=1}^{n} \left( f(x_i) - y_i \right)^{2} + \lambda \lVert w \rVert^{2} \qquad (1)$$

where $\lambda$ is the regularization coefficient to prevent the tracker from overfitting, and $\lVert w \rVert$ denotes the L2-norm of $w$.

By introducing the nonlinear mapping function $\varphi(x)$, the low-dimensional nonlinear inseparable problem is mapped to a high-dimensional space, where it can be solved linearly. The solution is a weighted linear combination of the mapped samples, expressed as follows:

$$w = \sum_{i=1}^{n} \alpha_i \varphi(x_i) \qquad (2)$$

$$f(z) = w^{T} \varphi(z) = \sum_{i=1}^{n} \alpha_i \kappa(z, x_i) \qquad (3)$$

where $z$ represents the single test sample.

In Equations (2) and (3), the optimization problem of $w$ is transformed into the optimization one for $\alpha$. The final result is:

$$\hat{\alpha} = \frac{\hat{y}}{\hat{k}^{xx} + \lambda} \qquad (4)$$

where $k^{xx}$ means the first row of the kernel function matrix. Here, according to Notation 1, $\hat{y}$, $\hat{k}^{xx}$ and $\hat{\alpha}$ denote the discrete Fourier transforms of $y$, $k^{xx}$ and $\alpha$, respectively.

In the detection stage, firstly, the template obtained by training the target in the previous frame is used, and the kernelized correlation filter is circularly shifted over the original target position region in the current frame to obtain the candidate region $z$. Then, $z$ is correlated with the filter template to calculate its response value. The specific formula is given as follows:

$$f(z) = \left( K^{z} \right)^{T} \alpha \qquad (5)$$

where the asymmetric matrix $K^{z}$ denotes the kernel matrix between the training sample and the candidate one. Each element of $K^{z}$ is defined as $K^{z}_{ij} = \kappa(P^{i-1}z, P^{j-1}x)$, where $P$ is the permutation matrix used to realize displacement. Here, $\alpha$ is a vector composed of each coefficient $\alpha_i$. Furthermore, in order to compute Equation (5), this work diagonalizes it and further obtains it as:

$$\hat{f}(z) = \hat{k}^{xz} \odot \hat{\alpha} \qquad (6)$$

The value of $f(z)$ is the response value of all the shifted candidate regions. The candidate sample corresponding to the maximum value is the one most likely to be the target in this detection.
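The training and detection steps above can be illustrated with a minimal NumPy sketch. It assumes a single-channel patch and a Gaussian kernel; the function names, the label generator `gaussian_labels`, and the parameter values `sigma` and `lam` are illustrative choices, not the settings used in this work.

```python
import numpy as np


def gaussian_labels(shape, sigma=2.0):
    """Regression target y: a Gaussian peaked at index (0, 0), cyclic in both axes."""
    h, w = shape
    ys = np.minimum(np.arange(h), h - np.arange(h))  # cyclic distance to row 0
    xs = np.minimum(np.arange(w), w - np.arange(w))  # cyclic distance to column 0
    return np.exp(-(ys[:, None] ** 2 + xs[None, :] ** 2) / (2.0 * sigma ** 2))


def gaussian_correlation(x, z, sigma=0.5):
    """Kernel values between x and every cyclic shift of z (k^{xz})."""
    xf, zf = np.fft.fft2(x), np.fft.fft2(z)
    # Cross-correlation for all cyclic shifts, computed in the Fourier domain.
    cross = np.real(np.fft.ifft2(np.conj(xf) * zf))
    dist = (np.sum(x ** 2) + np.sum(z ** 2) - 2.0 * cross) / x.size
    return np.exp(-np.maximum(dist, 0.0) / (sigma ** 2))


def train(x, y, lam=1e-4, sigma=0.5):
    """Closed-form training: alpha_hat = y_hat / (k_hat^{xx} + lambda)."""
    kxx = gaussian_correlation(x, x, sigma)
    return np.fft.fft2(y) / (np.fft.fft2(kxx) + lam)


def detect(alpha_hat, x, z, sigma=0.5):
    """Detection: f_hat(z) = k_hat^{xz} * alpha_hat, returned in the spatial domain."""
    kxz = gaussian_correlation(x, z, sigma)
    return np.real(np.fft.ifft2(np.fft.fft2(kxz) * alpha_hat))


# Usage: a patch that is cyclically shifted by (3, 5) should peak at (3, 5).
rng = np.random.default_rng(0)
template = rng.standard_normal((32, 32))
alpha_hat = train(template, gaussian_labels(template.shape))
candidate = np.roll(template, (3, 5), axis=(0, 1))
response = detect(alpha_hat, template, candidate)
peak = np.unravel_index(np.argmax(response), response.shape)
```

Because every step is an element-wise operation on Fourier coefficients, the cost per frame is dominated by a few FFTs, which is the source of the speed advantage of CF trackers.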

3.3. Spatio-Temporal Search Strategy

Due to the boundary effects, the tracker easily tends to drift when the target undergoes occlusion and rotation. Inspired by Ref. [28], this work utilizes the appearance features and contextual information of the target for joint modeling, to better distinguish between the target and the background. Modify Equation (6) to the following formula:

where denotes the range size of the surrounding search with a size of . Moreover, N represents the number of surrounding search boxes, and since the algorithm searches in four directions, up, down, left, and right of the target, the value of N is 4 to make full use of the spatial context information around the target.

However, the tracking target may have different motion patterns in different time periods. A fast-moving target may also leave the search field in the next frame. Relying only on spatial information cannot capture this change or accurately predict the future position of the target. Therefore, in order to improve the accuracy and robustness of the tracker, this work considers temporal information in addition to spatial information. By modeling the historical trajectory of the target, the future position of the target can be predicted. In this way, even if the target experiences occlusion or rotation, its possible position can be inferred from its motion pattern, thereby reducing the error caused by the boundary effects. Thus, we modify Equation (7) to the following formulas:

where represents the predicted position of the target, and means an input sample whose center position coordinate corresponds to . What is more, t implies the current frame, X denotes the horizontal coordinate of the target position center, and Y means the vertical coordinate of the target position center.

According to Equations (9) and (10), the spatial–temporal search strategy first predicts the position of the current frame of the target according to the historical information of the target tracking, and then carries out spatial search near the predicted position via the time information to obtain the feature information of the target for determining the final predicted position and tracking the target effectively.
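The two-stage idea described above (temporal prediction followed by a spatial search with N = 4 neighbors) can be sketched as follows. This is only an illustration under stated assumptions: the constant-velocity extrapolation in `predict_center`, the inclusion of the predicted center itself as a candidate, and the callback `peak_response` (which stands for the peak of the CF response computed on a patch centered at a given point) are hypothetical choices, not the exact formulas of this work.

```python
from typing import Callable, List, Tuple

Point = Tuple[float, float]


def predict_center(history: List[Point]) -> Point:
    """Assumed motion model: constant-velocity extrapolation from the last two centers."""
    if len(history) < 2:
        return history[-1]
    (x1, y1), (x2, y2) = history[-2], history[-1]
    return (2 * x2 - x1, 2 * y2 - y1)


def search_centers(center: Point, box_wh: Tuple[float, float],
                   ratio: float = 0.25) -> List[Point]:
    """Predicted center plus four neighbors: up, down, left, right."""
    cx, cy = center
    dx, dy = ratio * box_wh[0], ratio * box_wh[1]
    return [(cx, cy), (cx, cy - dy), (cx, cy + dy), (cx - dx, cy), (cx + dx, cy)]


def spatio_temporal_search(history: List[Point], box_wh: Tuple[float, float],
                           peak_response: Callable[[Point], float],
                           ratio: float = 0.25) -> Point:
    """Pick the candidate center whose response peak is the largest."""
    candidates = search_centers(predict_center(history), box_wh, ratio)
    return max(candidates, key=peak_response)


# Usage with a toy response that is highest near (28, 14).
history = [(10, 10), (14, 12)]
best = spatio_temporal_search(
    history, (40, 20), lambda p: -abs(p[0] - 28) - abs(p[1] - 14)
)
```

In a real tracker, `peak_response` would crop the patch at each candidate center and evaluate the correlation filter on it, so the search costs a small constant number of extra detections per frame.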

3.4. Retracking Strategy for Target Loss

The KCF algorithm can always track the specified target under normal tracking conditions. However, when the target is obscured by other objects or out of the field of tracker’s view, it is easy to lose the target. Furthermore, although YOLOv5s_MSES can detect UAV small targets well, it cannot complete the tracking task of a single target due to the limitations of the target detection algorithm. Therefore, combining two above algorithms is a good solution to the problem of tracking target loss.

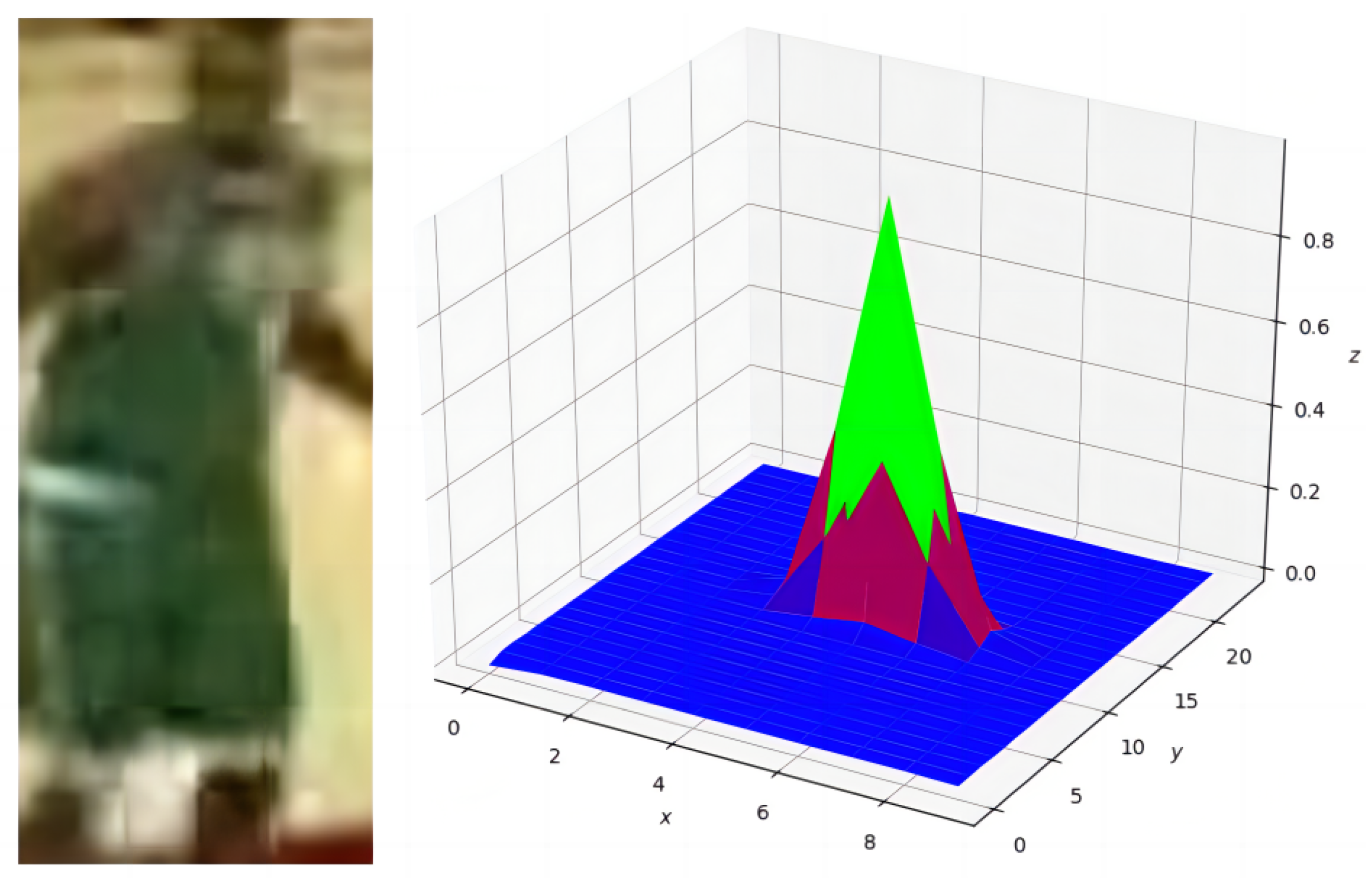

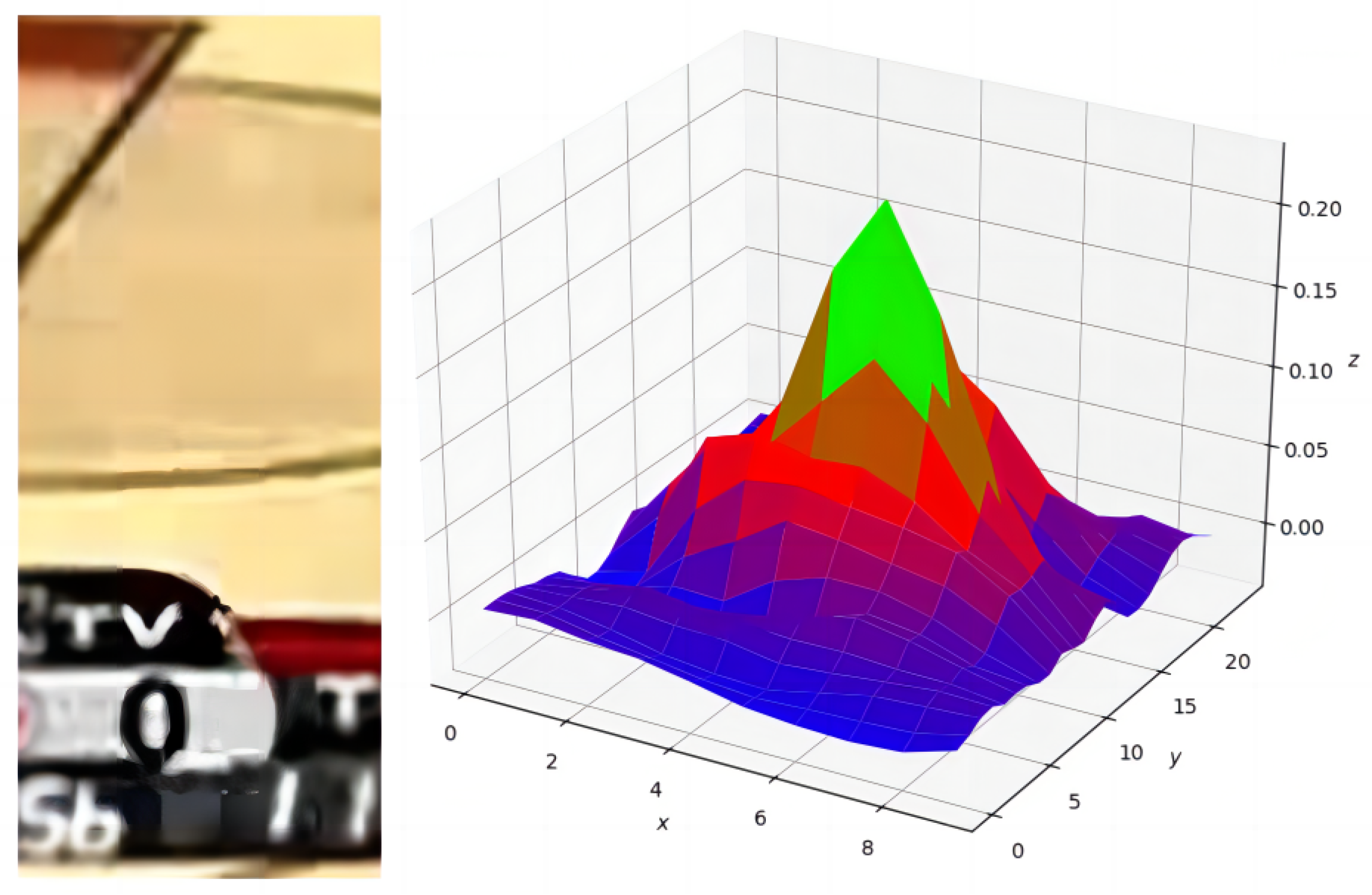

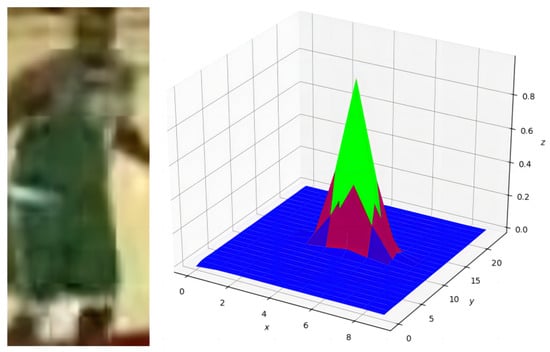

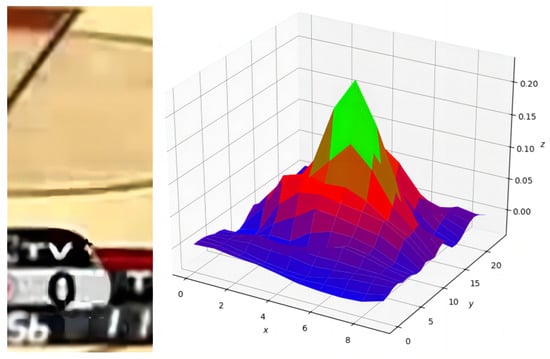

The retracking strategy for target loss first utilizes the APCE to determine whether the target is lost. When the cumulative number of APCE values exceeding the threshold reaches 3, the target is considered to be lost, where the threshold is set to times the initial APCE value. Subsequently, YOLOv5s_MSES is employed to detect the target. The YOLOv5s_MSES small target detection algorithm based on UAV can extract more small target feature information by improving the network structure of the YOLOv5s algorithm, and then use an attention mechanism to highlight small target feature information to suppress background information, which greatly improves the detection accuracy of small targets. Finally, APCE is used to compare the correlation filter response graphs of all candidate targets detected by YOLOv5s_MSES with the response graph of the initial tracking target, and the candidate target with the highest response pattern matching degree is selected as the follow-up tracking target. As shown in Figure 2 and Figure 3, when the target is lost, there is a significant difference between the maximum value and fluctuation of the response graph. Therefore, the APCE can be utilized to determine whether the target is lost. The calculation formula for the APCE is shown as follows:

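$$\mathrm{APCE} = \frac{\left| R_{\max} - R_{\min} \right|^{2}}{\operatorname{mean}\left( \sum_{w,h} \left( R_{w,h} - R_{\min} \right)^{2} \right)}$$

where $R$ denotes the response matrix, $R_{\min}$ means the minimum value in the response matrix, $R_{\max}$ is the maximum value in the response matrix, and $R_{w,h}$ represents the response value at $(w, h)$ in the response matrix.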
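A minimal sketch of the APCE computation and of the loss decision is given below. The APCE function follows its standard definition. The `LossMonitor` class is hypothetical: it assumes that a frame counts toward loss when its APCE drops below `ratio` times the initial APCE (the usual APCE convention), and `ratio` is left as a required argument because its value is not reproduced here.

```python
import numpy as np


def apce(response: np.ndarray, eps: float = 1e-12) -> float:
    """Average peak correlation energy of a response map."""
    r_max, r_min = response.max(), response.min()
    energy = np.mean((response - r_min) ** 2)
    return float((r_max - r_min) ** 2 / max(energy, eps))


class LossMonitor:
    """Declares the target lost after `max_count` low-confidence frames (cumulative)."""

    def __init__(self, initial_apce: float, ratio: float, max_count: int = 3):
        self.threshold = ratio * initial_apce  # assumed form of the threshold
        self.max_count = max_count
        self.count = 0

    def update(self, value: float) -> bool:
        # Assumption: a low APCE (flat or multi-peak response) signals a bad frame.
        if value < self.threshold:
            self.count += 1
        return self.count >= self.max_count

    def reset(self) -> None:
        """Call after the detector has re-acquired the target."""
        self.count = 0


# Usage: a single sharp peak yields a high APCE, a noisy map a much lower one.
sharp = np.zeros((16, 16))
sharp[8, 8] = 1.0
noisy = np.random.default_rng(0).random((16, 16))
monitor = LossMonitor(initial_apce=apce(sharp), ratio=0.5)  # ratio is illustrative
flags = [monitor.update(v) for v in (200.0, 100.0, 90.0, 300.0, 50.0)]
```

Only when `update` returns `True` would the detector be invoked, which keeps the detection cost out of the normal tracking loop.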

Figure 2.

The response plot of the target image.

Figure 3.

The response plot of the interference image.

3.5. Adaptive Scale Box

The KCF algorithm utilizes a fixed scale box for the target. When the size of the tracking target changes, the target box cannot adapt to the size of the target, thereby affecting tracking accuracy. In Ref. [38], the adaptive multi-scale pyramid (AMP) only used multiple fixed scale factors to resize the scale box, which may not yield the optimal result for the current target. In order to solve this problem, this work proposes an effective method to dynamically adjust the scale factors according to the differences between the maximum response value of different-sized scale boxes and the maximum response value of the current scale box, through which the scale box can be more suitable for the size of the current target. Specifically, the bidirectional scale sampling of the current target region is carried out first, and the peak response values and are extracted by calculating the reduced and enlarged correlation filter response graphs of the current target region. Then, the peak or is used to determine whether the next frame should be scaled down or enlarged. Finally, the scale factor and the difference between the current frame response peak and the corresponding peak after reduction or amplification are used to determine the actual scaled frame scale size for the next frame. The specific formula is given as follows:

where B represents the scale box, and denote the width and height, respectively. Then, R means the maximum response value of the current scale box, denotes the maximum response value of the small scale box, and represents the maximum response value of the large scale box. Moreover, and are the scale factors for the size of the scale box where the size of is chosen as and the size of is selected as .
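The decision logic described above can be sketched as follows. This is one plausible form only, written under explicit assumptions: the multiplicative update rule, the function name `adapt_scale`, and the argument names `shrink_factor` and `enlarge_factor` are hypothetical and are not taken from the formula of this work. The sketch only shows how the peak differences can drive the amount of rescaling.

```python
from typing import Tuple


def adapt_scale(box_wh: Tuple[float, float], r_cur: float, r_small: float,
                r_large: float, shrink_factor: float,
                enlarge_factor: float) -> Tuple[float, float]:
    """Rescale the box toward whichever sampled scale responds more strongly.

    r_cur, r_small, r_large are the peak responses of the current, reduced,
    and enlarged scale boxes. The update rule below is an assumed form.
    """
    w, h = box_wh
    if r_small > r_cur and r_small >= r_large:
        # Reduced box matches better: shrink in proportion to the peak gain.
        s = 1.0 - shrink_factor * (r_small - r_cur)
    elif r_large > r_cur:
        # Enlarged box matches better: grow in proportion to the peak gain.
        s = 1.0 + enlarge_factor * (r_large - r_cur)
    else:
        s = 1.0  # current scale already gives the strongest peak
    return (w * s, h * s)


# Usage: the enlarged box responds best, so the box grows slightly.
grown = adapt_scale((100.0, 50.0), r_cur=0.5, r_small=0.4, r_large=0.7,
                    shrink_factor=0.45, enlarge_factor=0.40)
```

Compared with a fixed set of scale factors, a rule of this kind changes the box size continuously, so small peak differences cause small adjustments.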

4. Experiment

4.1. Experimental Data and Parameter Setting

(1) Implementation details: The experiments are run on the Windows 11 operating system with an Nvidia RTX3060 GPU and an Intel i7-12700 2.70 GHz CPU. The code is developed in PyCharm 2021.3.3 and written in Python 3.8.5, and the parameter settings are consistent with the open-source KCF code.

(2) OTB100 Dataset: The experimental data environment consists of 98 videos and 100 test scenarios in the OTB100 [39] dataset. Among them, the video sequences Skating2 and Jogging have two test scenes for each case. The dataset covers 11 complex scene attributes, namely lighting changes (IV), SV, OCC, deformation (DEF), motion blur (MB), FM, in-plane rotation (IPR), out-of-plane rotation (OPR), OV, background clutter (BC), and low resolution (LR).

(3) UAV123 Dataset: UAV123 is about 14G in size and contains 123 video sequences [40]. These video sequences cover a variety of complex scenes, including indoor, outdoor, sky, urban and rural, etc. The moving targets in the dataset include people, cars, animals, etc., and they move at different speeds and in different directions in the different environments. Having a high dynamic range and high definition while containing a challenging variety of tracking scenarios makes the UAV123 data ensemble one of the ideal datasets for the evaluation of target tracking algorithms.

(4) Evaluation indicators: In order to quantitatively evaluate the performance of the target tracking algorithms in the following experiments, the tracking results of different trackers are assessed by using precision and success rates [39]. This work employs two evaluation metrics in one-pass evaluation (OPE). One is the average distance precision (DP), which is the percentage of frames in which the distance between the predicted and ground-truth target centers is within a given threshold, set to 20 pixels. The other metric is the area under curve (AUC), which represents the proportion of frames where the bounding box overlap exceeds a specified threshold, determined as 0.50 [41]. Moreover, the FPS indicates the number of frames processed per second.
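For concreteness, the metrics above can be computed as in the following sketch. It assumes boxes in `(x, y, w, h)` format with `(x, y)` the top-left corner, and it also includes the area under the success plot averaged over 21 evenly spaced overlap thresholds, which is the usual OTB convention and is an assumption here; all function names are illustrative.

```python
import numpy as np


def center_errors(pred: np.ndarray, gt: np.ndarray) -> np.ndarray:
    """Euclidean distance between predicted and ground-truth box centers."""
    pc = pred[:, :2] + pred[:, 2:] / 2.0
    gc = gt[:, :2] + gt[:, 2:] / 2.0
    return np.linalg.norm(pc - gc, axis=1)


def ious(pred: np.ndarray, gt: np.ndarray) -> np.ndarray:
    """Intersection over union for each frame."""
    x1 = np.maximum(pred[:, 0], gt[:, 0])
    y1 = np.maximum(pred[:, 1], gt[:, 1])
    x2 = np.minimum(pred[:, 0] + pred[:, 2], gt[:, 0] + gt[:, 2])
    y2 = np.minimum(pred[:, 1] + pred[:, 3], gt[:, 1] + gt[:, 3])
    inter = np.clip(x2 - x1, 0, None) * np.clip(y2 - y1, 0, None)
    union = pred[:, 2] * pred[:, 3] + gt[:, 2] * gt[:, 3] - inter
    return inter / union


def distance_precision(pred, gt, thresh=20.0) -> float:
    """Fraction of frames whose center error is within `thresh` pixels."""
    return float(np.mean(center_errors(pred, gt) <= thresh))


def success_rate(pred, gt, thresh=0.5) -> float:
    """Fraction of frames whose overlap exceeds `thresh`."""
    return float(np.mean(ious(pred, gt) > thresh))


def success_auc(pred, gt, thresholds=np.linspace(0.0, 1.0, 21)) -> float:
    """Mean success rate over the overlap thresholds (area under the success plot)."""
    overlaps = ious(pred, gt)
    return float(np.mean([np.mean(overlaps > t) for t in thresholds]))


# Usage: one perfect frame and one complete miss.
gt = np.array([[0, 0, 10, 10], [0, 0, 10, 10]], dtype=float)
pred = np.array([[0, 0, 10, 10], [30, 0, 10, 10]], dtype=float)
dp, sr, auc = distance_precision(pred, gt), success_rate(pred, gt), success_auc(pred, gt)
```

The FPS is simply the number of processed frames divided by the elapsed wall-clock time of the tracking loop.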

4.2. The Ablation Experiments on OTB100 Dataset

In order to verify each component in our proposed algorithm, the effectiveness of each module is tested on the OTB100 dataset. The ablation results are shown in Table 1.

Table 1.

The ablation experiments on the OTB100 dataset.

The ablation results show that the addition of different modules into the KCF algorithm significantly improves the DP and AUC. According to these data, it can be observed that the improved STS module has enhanced the DP by 1.73% and AUC by 1.64% in contrast to the basic module SI [28], which indicates that incorporating STS effectively mitigates boundary effects, occlusion drift, and other impacts of the algorithm. Since the YOLOv5s_MSES target detection algorithm is trained on the VisDrone2019 dataset, it can only detect a portion of the OTB100 video sequence, as shown in Table 2. After adding the TR module, although only a portion of the video sequence is detected, there exists an improvement of DP by 4.88% and AUC by 3.65%. It demonstrates that combining the target detection algorithm with the CF target tracking one is highly feasible. In comparison with the baseline algorithm, our proposed algorithm has increased the DP by 13.25% and the AUC by 11.98%, indicating its effectiveness and advantage in improving performance. In summary, the proposed algorithm of this work can effectively guide the tracking task of the UAV image target.

Table 2.

The video sequences detected by TR on the OTB100 dataset.

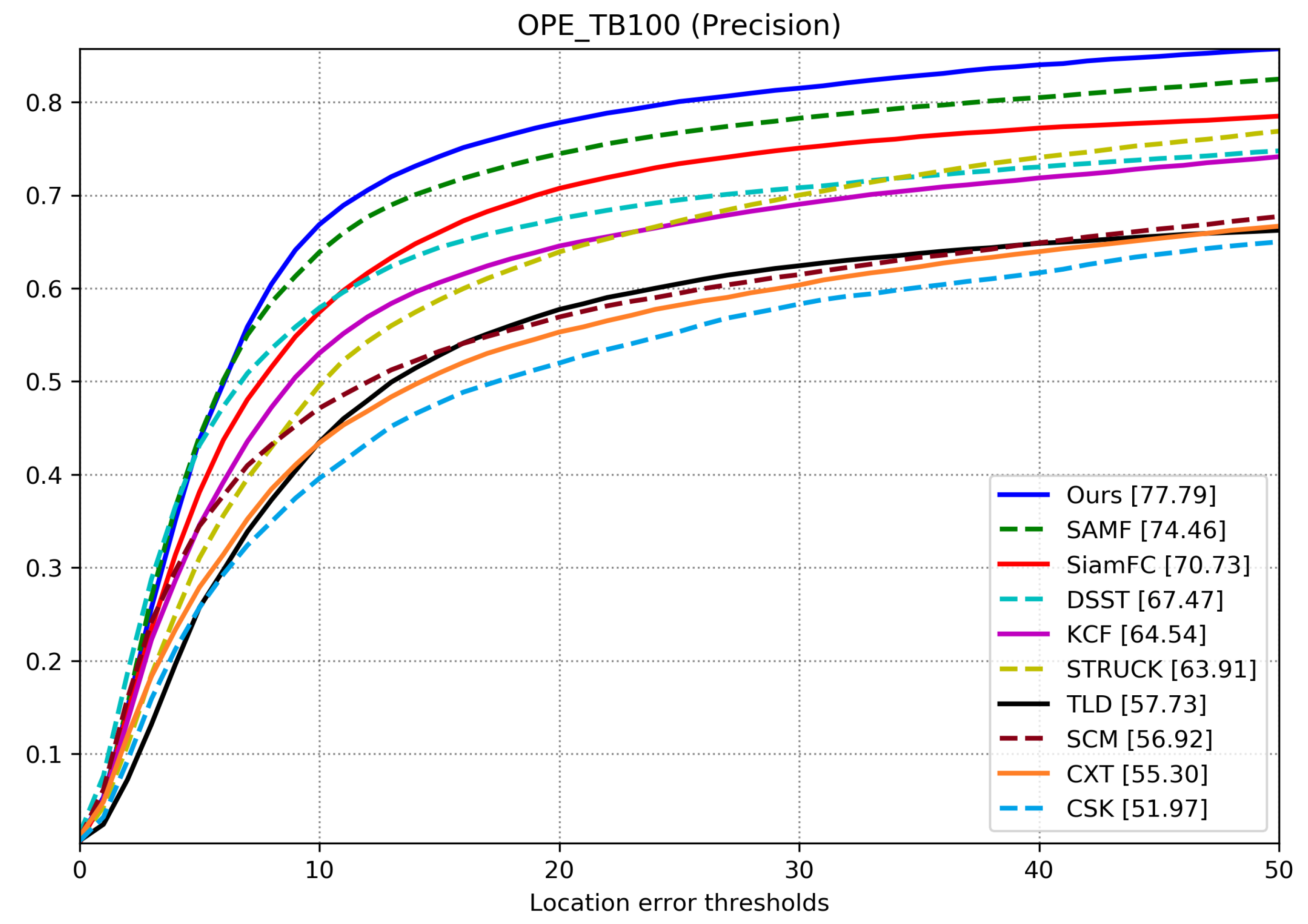

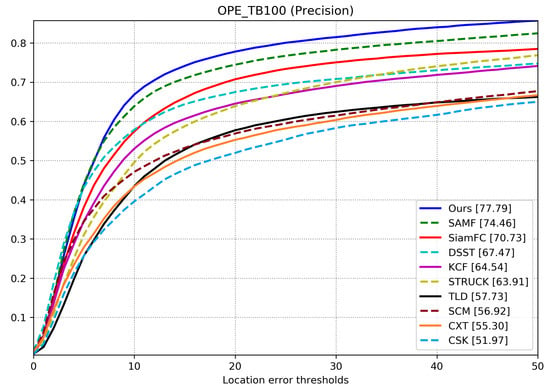

4.3. Contrast Experiments on the OTB100 Dataset

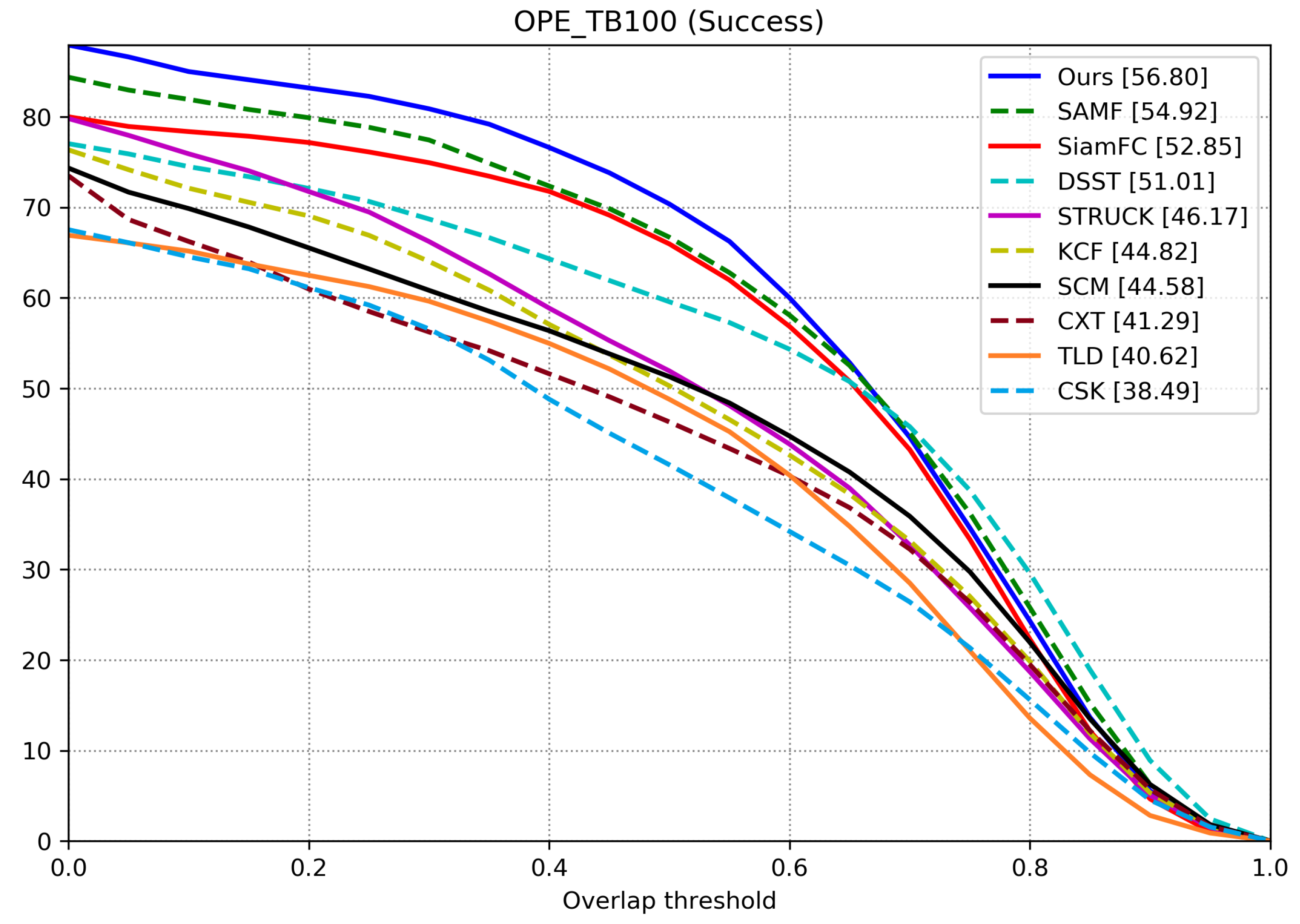

Firstly, this work utilizes 100 video sequences from the OTB100 test set to analyze the DP and AUC of the ten algorithms. The experimental results are shown in Figure 4 and Figure 5. From these results, the DP of our algorithm amounts to 77.79%, an improvement of 13.25% over the original KCF algorithm. The AUC of our proposed algorithm reaches 56.80%, which is 11.98% higher than that of the original KCF algorithm. In contrast with the second-ranked SAMF method, our proposed algorithm improves the DP by 3.33% and the AUC by 1.88%. In addition, our algorithm achieves 53.9 FPS, indicating that the proposed method can track in real time.

Figure 4.

The DP performance on the OTB100 dataset.

Figure 5.

The AUC performance on the OTB100 dataset.

Secondly, to analyze the tracking performance of our algorithm in various scenarios, this work assesses the DP and AUC of the algorithm across 11 distinct video attributes. Table 3 and Table 4 present the scores of the various algorithms under the different video attributes. From these experiments, it can be discerned that our proposed algorithm ranks first in eight attributes in the DP metric. Under the attributes of IPR, LR and MB, although our algorithm ranks second, it is not obviously different from the top-ranked algorithm. In the AUC metric, the proposed algorithm ranks the first in ten attributes. Moreover, under the attributes of MB and LR, it falls slightly short of the top-ranked algorithm. Compared with the original KCF algorithm, our proposed method achieves an improvement on all 11 attributes. In addition, in the four attributes of OCC, SV, FM and OV, the values of DP and AUC are much higher than those of the second-ranked algorithm. Thus, it is concluded that the proposed modules of this work can effectively help to achieve more accurate target tracking.

Table 3.

The DP values under 11 attributes on the OTB100 dataset.

Table 4.

The AUC values under 11 attributes on the OTB100 dataset.

Finally, Table 5 and Table 6 clearly show the improvement of the addition of several modules on scaling, target loss, and other issues. It is noteworthy that the inclusion of the target detection algorithm has significantly enhanced both DP and AUC metrics in terms of the OCC and OV attributes, which indicates that the combination of KCF and YOLOv5s_MSES can effectively solve the problem of losing track of the target.

Table 5.

The comparisons of DP indicators based on four attributes of OTB100 dataset.

Table 6.

The comparisons of AUC indicators based on four attributes of OTB100 dataset.

4.4. Contrast Experiments on the Scale Factor Parameters

In the experiments of scale factor selection, the benchmark is the KCF algorithm. In order to facilitate selection, the mean values of AUC and DP are used as evaluation criteria. As can be seen from Table 7 and Table 8, when the two scale factors are set to 0.45 and 0.40, respectively, the comprehensive performance of the ASB method is the best.

Table 7.

The comparison experiments of scale factor in ASB method based on OTB100 dataset.

Table 8.

The comparison experiments of scale factor in ASB method based on OTB100 dataset.

4.5. Contrast Experiments on the Search Range Parameter

In the comparative experiment on the size of the surrounding search range in the STS method, the benchmark is the KCF algorithm combined with the ASB method. In order to facilitate the selection, the average value of AUC and DP is used as the evaluation standard. As can be seen from Table 9, when the size of the surrounding search range is 0.25, the comprehensive performance of the STS method is the best.

Table 9.

The comparison experiments of the search range size in STS method based on OTB100 dataset.

4.6. Experiments on UAV123 Dataset

Since the UAV123 dataset is taken from the perspective of the UAV, in order to verify the effectiveness of our proposed algorithm, the ablation experiments are carried out on UAV123. The ablation results are shown in Table 10, which shows that when different modules are added into the KCF algorithm, the DP and AUC of our proposed algorithm are significantly increased. Then, in comparison with the baseline algorithm, the DP of our proposed algorithm can be improved by 14.07%, and the AUC is improved by 14.39%, indicating that our algorithm effectively promotes the performance of the basic KCF algorithm.

Table 10.

The ablation experiments on the UAV123 dataset.

To demonstrate the effectiveness of combining YOLOv5s_MSES with KCF, Table 11 compares the DP after the target detection algorithm is added to part of the sequences. The data show that our proposed method effectively improves the performance of the target tracking algorithm.

Table 11.

The comparisons of DP indicators on the UAV123 dataset (The wakeboard is abbreviated as wb.).

4.7. Comprehensive Performance Comparison

As shown in Table 12 and Table 13, DP, AUC, and FPS are selected as the evaluation criteria in the experiment. On the OTB100 and UAV123 datasets, although the DP and AUC of some algorithms (such as ASTSAELT, STCDFF, MixFormer-1k, NeighborTrack, and EFSCF) are higher than ours, their FPS is too low to meet the real-time requirement of tracking. In a UAV target tracking task, an algorithm that cannot run in real time makes it difficult for the UAV to complete the task in time. Thus, on both datasets, our proposed algorithm better satisfies the real-time requirement. Moreover, compared with [20,42], our algorithm achieves higher DP, AUC, and FPS. In summary, our algorithm achieves better overall performance.

Table 12.

The comprehensive performance comparison experiments on the OTB100 dataset.

Table 13.

The comprehensive performance comparison experiments on the UAV123 dataset.
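For reference, the three criteria compared above can be computed along the following lines. This is a sketch under assumptions, not the evaluation code used in the paper: it assumes the common OTB-style protocol, in which DP is the fraction of frames whose centre location error is at most 20 pixels and AUC is the area under the success plot over overlap thresholds from 0 to 1. The function names and the `(x, y, w, h)` box convention are illustrative choices.

```python
import math
from typing import Sequence, Tuple

Box = Tuple[float, float, float, float]  # (x, y, w, h): top-left corner and size


def center_error(pred: Box, gt: Box) -> float:
    """Euclidean distance in pixels between the centres of two boxes."""
    px, py = pred[0] + pred[2] / 2.0, pred[1] + pred[3] / 2.0
    gx, gy = gt[0] + gt[2] / 2.0, gt[1] + gt[3] / 2.0
    return math.hypot(px - gx, py - gy)


def iou(pred: Box, gt: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    iw = min(pred[0] + pred[2], gt[0] + gt[2]) - max(pred[0], gt[0])
    ih = min(pred[1] + pred[3], gt[1] + gt[3]) - max(pred[1], gt[1])
    inter = max(iw, 0.0) * max(ih, 0.0)
    union = pred[2] * pred[3] + gt[2] * gt[3] - inter
    return inter / union if union > 0 else 0.0


def distance_precision(preds: Sequence[Box], gts: Sequence[Box],
                       threshold: float = 20.0) -> float:
    """Fraction of frames with centre error <= threshold (assumed 20 px)."""
    hits = sum(center_error(p, g) <= threshold for p, g in zip(preds, gts))
    return hits / len(gts)


def success_auc(preds: Sequence[Box], gts: Sequence[Box],
                num_thresholds: int = 21) -> float:
    """Mean success rate over evenly spaced overlap thresholds in [0, 1]."""
    overlaps = [iou(p, g) for p, g in zip(preds, gts)]
    rates = []
    for i in range(num_thresholds):
        t = i / (num_thresholds - 1)
        rates.append(sum(o > t for o in overlaps) / len(overlaps))
    return sum(rates) / len(rates)


def frames_per_second(num_frames: int, elapsed_seconds: float) -> float:
    """Average processing speed over a sequence."""
    return num_frames / elapsed_seconds
```

Because success is counted with a strict inequality, even a perfect tracker scores slightly below 1 on AUC with this discretisation; benchmark toolkits may differ in such details.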

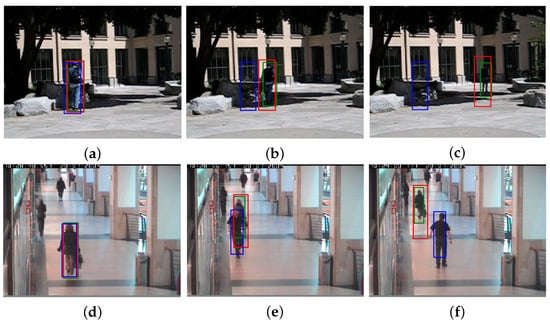

4.8. Experimental Effect and Analysis

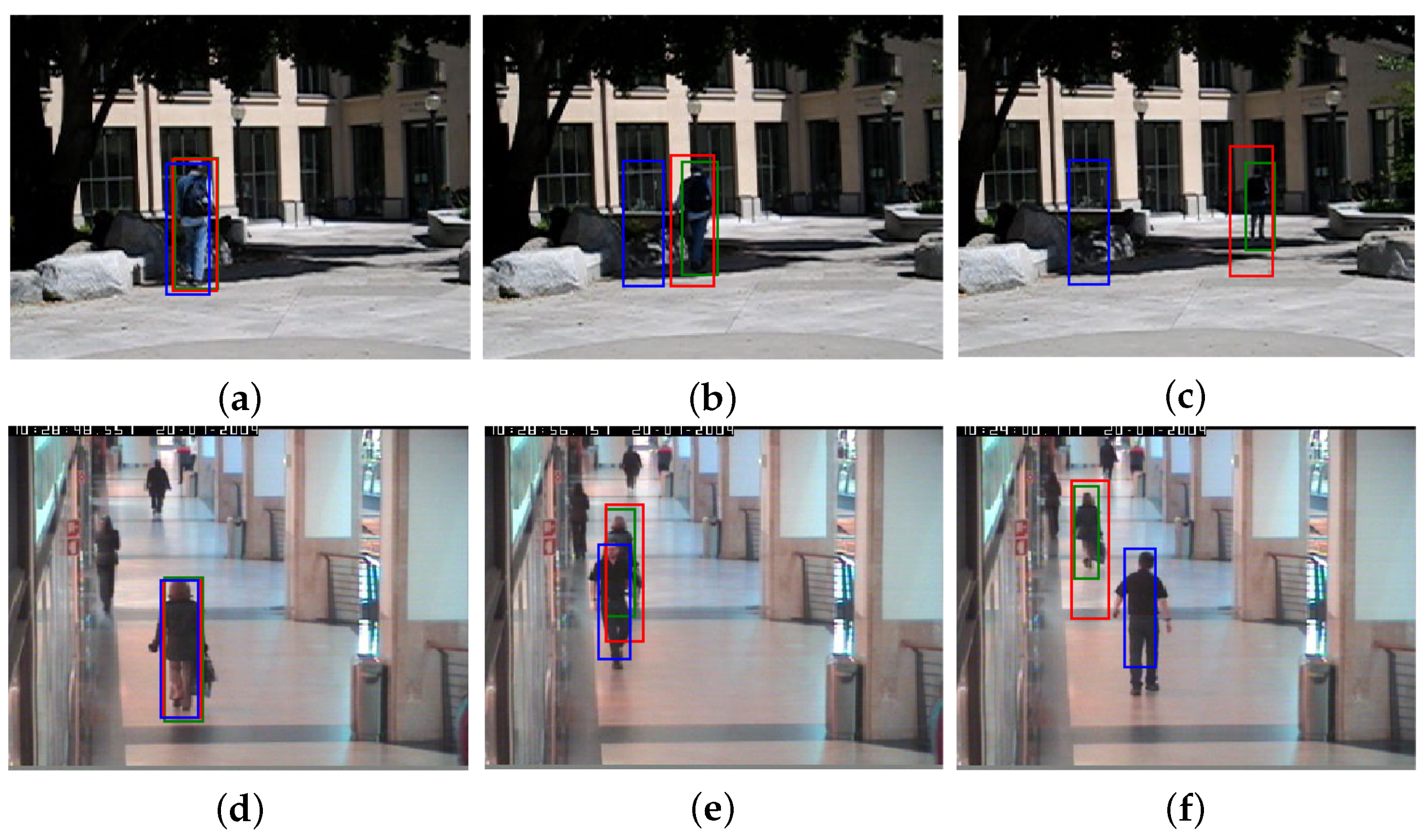

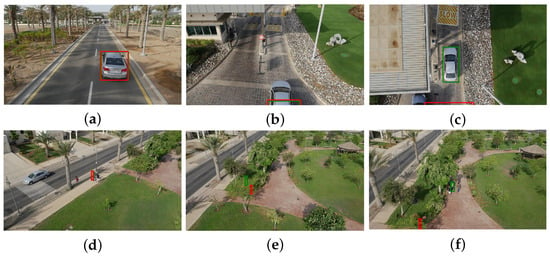

Four kinds of key frames are selected for the tracking experiments by using some existing algorithms. Firstly, Figure 6 shows the tracking results on the OTB100 dataset, where the green boxes represent the results of our algorithm, the red boxes those of the KCF algorithm, and the blue boxes those of the SiamFC algorithm. In Figure 6a–c, when the light and shadow on the tracking target change, the SiamFC algorithm cannot adapt and thus loses the target. KCF can continue to track, but its scale box cannot change adaptively, whereas our proposed algorithm adapts effectively. In Figure 6d–f, when the target is occluded by similar targets, the tracking error of the SiamFC algorithm is large, while our algorithm tracks the target normally. In addition, Figure 6 shows that the proposed algorithm also handles scale change well.

Figure 6.

The OTB100 video sequences. (a) Human8: Frame 6; (b) Human8: Frame 24; (c) Human8: Frame 94; (d) Walking2: Frame 9; (e) Walking2: Frame 200; (f) Walking2: Frame 314.

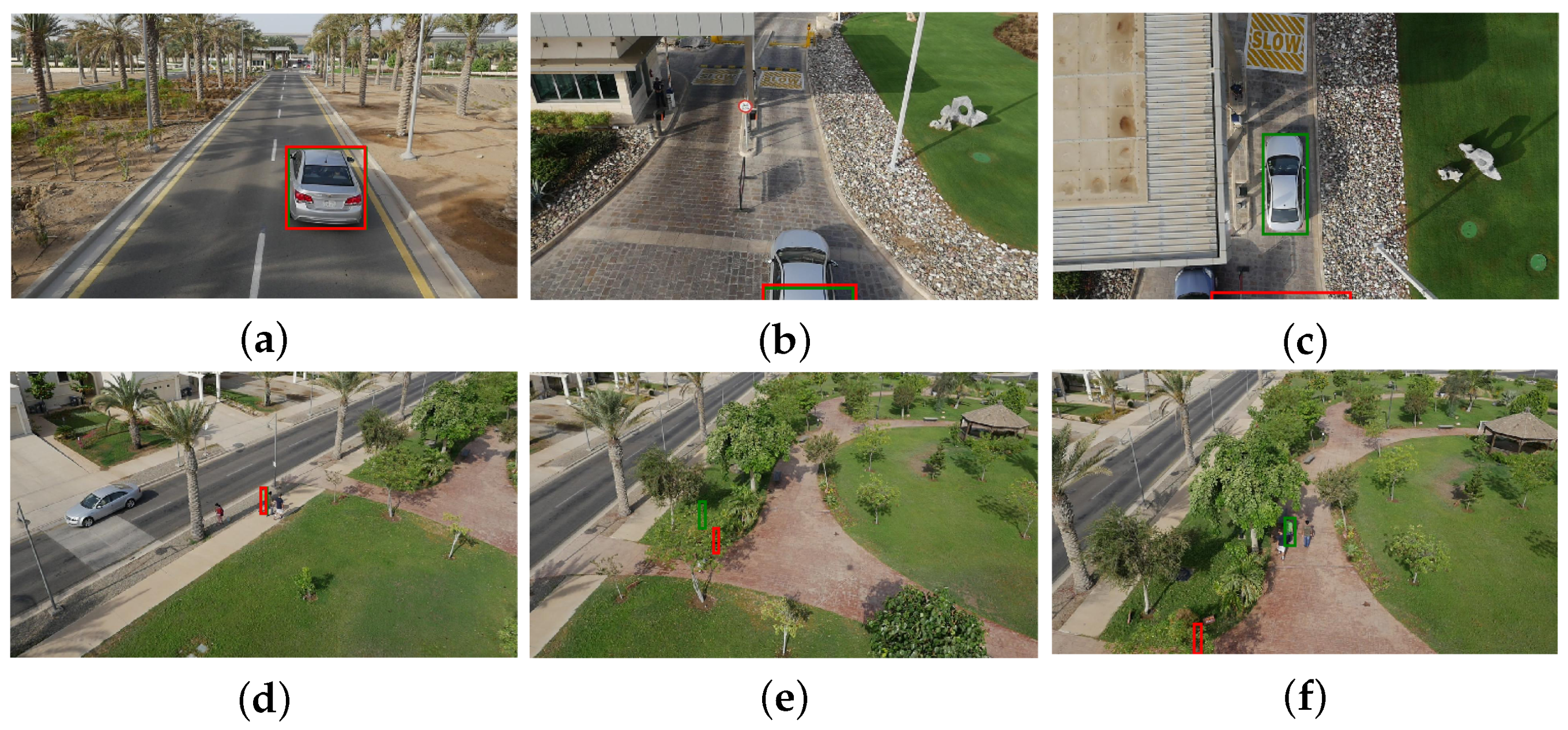

Figure 7 shows the tracking results on the UAV123 dataset, where the green boxes denote the results of our algorithm and the red boxes those of the KCF + ASB + STS algorithm. As can be seen from Figure 7a–c, the UAV first tracks the rear of the car. When the rear of the car leaves the view range of the UAV, KCF + ASB + STS cannot continue tracking, while our algorithm can exploit YOLOv5s_MSES to redetect the target and continue to track. As can be seen from Figure 7d–f, the target tracked by the UAV is the person in green. When the group is occluded by trees, neither KCF + ASB + STS nor our algorithm can continue tracking. Yet, when the target reappears in view, our algorithm can still use YOLOv5s_MSES to accurately redetect the target and continue tracking. The experimental results show that our algorithm is more feasible and effective for solving the problem of retracking a lost target.

Figure 7.

The UAV123 video sequences. (a) Car6_2: Frame 29; (b) Car6_2: Frame 703; (c) Car6_2: Frame 958; (d) Group2_3: Frame 29; (e) Group2_3: Frame 340; (f) Group2_3: Frame 622.
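The retracking behaviour illustrated in Figure 7 relies on deciding when the correlation response is no longer trustworthy, so that the detector can be invoked. The sketch below shows one common way to make that decision with the average peak-to-correlation energy (APCE), using its usual definition: the squared difference between the maximum and minimum of the response map, divided by the mean squared deviation of the map from its minimum. The decision rule `needs_redetection` and its `ratio` parameter are hypothetical and may differ from the criterion actually used in this work.

```python
from typing import Sequence


def apce(response: Sequence[Sequence[float]]) -> float:
    """Average peak-to-correlation energy of a 2-D response map.

    A sharp single peak gives a high value; a flat or multi-peak map,
    typical under occlusion, gives a low value.
    """
    values = [v for row in response for v in row]
    f_max, f_min = max(values), min(values)
    energy = sum((v - f_min) ** 2 for v in values) / len(values)
    if energy == 0.0:
        return 0.0  # a completely flat map carries no peak information
    return (f_max - f_min) ** 2 / energy


def needs_redetection(response: Sequence[Sequence[float]],
                      apce_history: Sequence[float],
                      ratio: float = 0.5) -> bool:
    """Hypothetical rule: flag the frame when the current APCE falls below
    `ratio` times the mean APCE of earlier, reliable frames."""
    if not apce_history:
        return False  # nothing to compare against yet
    mean_apce = sum(apce_history) / len(apce_history)
    return apce(response) < ratio * mean_apce


# Toy response maps for illustration only.
sharp_peak = [[0.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 0.0]]
ambiguous = [[1.0, 1.0], [1.0, 0.0]]
```

In a full tracker, a flagged frame would hand control to the detector (YOLOv5s_MSES in this work), and the correlation filter would be reinitialised from the redetected box.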

Finally, the dynamic display of the complete video sequence tracking trajectory is presented, especially focusing on stable tracking after successful retracking. The link to the video is https://github.com/csh265/Complete_video_sequence (accessed on 26 April 2025).

5. Conclusions

This work has proposed an improved KCF tracking algorithm that incorporates a target detection algorithm to address two problems: the difficulty of retracking a lost target and the tracking drift caused by boundary effects. First, an adaptive scale box was proposed to change the scale of the target box according to the changing scale of the target. Then, based on the spatial information around the target in the current frame and the temporal information of the previous frames, a spatio-temporal search strategy was introduced to suppress the influence of boundary effects. Additionally, the KCF algorithm was integrated with the YOLOv5s_MSES target detection algorithm and the APCE to enable the UAV to retrack the target when it is lost. Finally, the experimental results on the UAV123 dataset showed that, although the YOLOv5s_MSES algorithm could only detect part of the videos, the DP and the AUC increased by 8.99% and 6.62%, respectively, over the KCF + ASB + STS results. This verifies the feasibility of combining the CF target tracking algorithm with a target detection algorithm. Therefore, our proposed algorithm can better handle target loss, scale change, and boundary effects, meet the real-time requirement with high precision, and demonstrate superior comprehensive performance over some existing algorithms.

Author Contributions

Conceptualization, S.C. and T.W.; methodology, S.C.; software, S.C.; validation, S.C., T.W. and T.L.; formal analysis, T.W.; investigation, T.W. and S.F.; writing—original draft preparation, S.C.; writing—review and editing, T.L. and S.F. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by the National Natural Science Foundation of China (Nos. 62073164, 61922042) and the Aeronautical Science Foundation of China (No. 2023Z032052001).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Data are contained within the article.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Yan, B.; Paolini, E.; Xu, L.; Lu, H. A target detection and tracking method for multiple radar systems. IEEE Trans. Geosci. Remote Sens. 2022, 60, 1–21.

- Fang, H.; Liao, G.; Liu, Y.; Zeng, C. Shadow-assisted moving target tracking based on multi-discriminant correlation filters network in video SAR. IEEE Geosci. Remote Sens. Lett. 2023, 20, 1–5.

- Lee, M.; Lin, C. Object tracking for an autonomous unmanned surface vehicle. Machines 2022, 10, 378.

- Hayat, M.; Ali, A.; Khan, B.; Mehmood, K.; Ullah, K.; Amir, M. An improved spatial–temporal regularization method for visual object tracking. Signal Image Video Process. 2024, 18, 2065–2077.

- Song, P.; Li, P.; Dai, L.; Wang, T.; Chen, Z. Boosting R-CNN: Reweighting R-CNN samples by RPN’s error for underwater object detection. Neurocomputing 2023, 530, 150–164.

- Zhang, R.; Shao, Z.; Huang, X.; Wang, J.; Wang, Y.; Li, D. Adaptive dense pyramid network for object detection in UAV imagery. Neurocomputing 2022, 489, 377–389.

- Wang, J.; Meng, C.; Deng, C.; Wang, Y. Learning convolutional self-attention module for unmanned aerial vehicle tracking. Signal Image Video Process. 2023, 17, 2323–2331.

- Wang, H.; Qi, L.; Qu, H.; Ma, W.; Yuan, W.; Hao, W. End-to-end wavelet block feature purification network for efficient and effective UAV object tracking. J. Vis. Commun. Image Represent. 2023, 97, 103950.

- Daramouskas, I.; Meimetis, D.; Patrinopoulou, N.; Lappas, V.; Kostopoulos, V. Camera-based local and global target detection, tracking, and localization techniques for UAVs. Machines 2023, 11, 315.

- Fu, C.; Li, B.; Ding, F.; Lin, F.; Lu, G. Correlation filters for unmanned aerial vehicle-based aerial tracking: A review and experimental evaluation. IEEE Geosci. Remote Sens. Mag. 2021, 10, 125–160.

- Zhang, F.; Ma, S.; Qiu, Z.; Qi, T. Learning target-aware background-suppressed correlation filters with dual regression for real-time UAV tracking. Signal Process. 2022, 191, 108352.

- Li, S.; Liu, Y.; Zhao, Q.; Feng, Z. Learning residue-aware correlation filters and refining scale for real-time UAV tracking. Pattern Recognit. 2022, 127, 108614.

- Bolme, D.; Beveridge, J.; Draper, B.; Lui, Y. Visual object tracking using adaptive correlation filters. In Proceedings of the 2010 IEEE Computer Society Conference on Computer Vision and Pattern Recognition, San Francisco, CA, USA, 13–18 June 2010; pp. 2544–2550.

- Henriques, J.; Caseiro, R.; Martins, P.; Batista, J. High-speed tracking with kernelized correlation filters. IEEE Trans. Pattern Anal. Mach. Intell. 2014, 37, 583–596.

- Danelljan, M.; Hager, G.; Shahbaz Khan, F.; Felsberg, M. Learning spatially regularized correlation filters for visual tracking. In Proceedings of the IEEE International Conference on Computer Vision, Santiago, Chile, 7–13 December 2015; pp. 4310–4318.

- Danelljan, M.; Hager, G.; Khan, F.; Felsberg, M. Accurate scale estimation for robust visual tracking. In Proceedings of the British Machine Vision Conference, Nottingham, UK, 1–5 September 2014.

- Bertinetto, L.; Valmadre, J.; Golodetz, S.; Miksik, O.; Torr, P.H. Staple: Complementary learners for real-time tracking. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 1401–1409.

- Danelljan, M.; Shahbaz Khan, F.; Felsberg, M.; Van de Weijer, J. Adaptive color attributes for real-time visual tracking. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 23–28 June 2014; pp. 1090–1097.

- Lukezic, A.; Vojir, T.; Cehovin Zajc, L.; Matas, J.; Kristan, M. Discriminative correlation filter with channel and spatial reliability. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 6309–6318.

- Tai, Y.; Tan, Y.; Xiong, S.; Tian, J. Subspace reconstruction based correlation filter for object tracking. Comput. Vis. Image Underst. 2021, 212, 103272.

- Wen, J.; Chu, H.; Lai, Z.; Xu, T.; Shen, L. Enhanced robust spatial feature selection and correlation filter learning for UAV tracking. Neural Netw. 2023, 161, 39–54.

- Moorthy, S.; Joo, Y. Learning dynamic spatial-temporal regularized correlation filter tracking with response deviation suppression via multi-feature fusion. Neural Netw. 2023, 167, 360–379.

- Ma, H.; Acton, S.; Lin, Z. Situp: Scale invariant tracking using average peak-to-correlation energy. IEEE Trans. Image Process. 2020, 29, 3546–3557.

- Cao, S.; Wang, T.; Li, T.; Mao, Z. UAV small target detection algorithm based on an improved YOLOv5s model. J. Vis. Commun. Image Represent. 2023, 97, 103936.

- Wang, H.; Wang, Z.; Fang, B.; Bu, Y. Temporal–spatial consistency of self-adaptive target response for long-term correlation filter tracking. Signal Image Video Process. 2020, 14, 639–644.

- Liu, P.; Liu, C.; Zhao, W.; Tang, X. Multi-level context-adaptive correlation tracking. Pattern Recognit. 2019, 87, 216–225.

- Elayaperumal, D.; Joo, Y. Robust visual object tracking using context-based spatial variation via multi-feature fusion. Inf. Sci. 2021, 577, 467–482.

- Moorthy, S.; Joo, Y. Adaptive spatial-temporal surrounding-aware correlation filter tracking via ensemble learning. Pattern Recognit. 2023, 139, 109457.

- Li, S.; Wu, O.; Zhu, C.; Chang, H. Visual object tracking using spatial context information and global tracking skills. Comput. Vis. Image Underst. 2014, 125, 1–15.

- Yan, P.; Yao, S.; Zhu, Q.; Zhang, T.; Cui, W. Real-time detection and tracking of infrared small targets based on grid fast density peaks searching and improved KCF. Infrared Phys. Technol. 2022, 123, 104181.

- Yang, X.; Li, S.; Yu, J.; Zhang, K.; Yang, J.; Yan, J. GF-KCF: Aerial infrared target tracking algorithm based on kernel correlation filters under complex interference environment. Infrared Phys. Technol. 2021, 119, 103958.

- Jia, Y.; Zhang, Y.; Zhou, C.; Yang, Y. Helop: Multi-target tracking based on heuristic empirical learning algorithm and occlusion processing. Displays 2023, 79, 102488.

- Cai, D.; Guan, Z.; Bamisile, O.; Zhang, W.; Li, J.; Zhang, Z.; Wu, J.; Chang, Z.; Huang, Q. Multi-objective tracking for smart substation onsite surveillance based on YOLO approach and AKCF. Energy Rep. 2023, 9, 1429–1438.

- Chen, R.; Wu, J.; Peng, Y.; Li, Z.; Shang, H. Detection and tracking of floating objects based on spatial-temporal information fusion. Expert Syst. Appl. 2023, 225, 120185.

- Kinasih, F.; Machbub, C.; Yulianti, L.; Rohman, A. Two-stage multiple object detection using CNN and correlative filter for accuracy improvement. Heliyon 2023, 9, e12716.

- Wang, M.; Yang, W.; Wang, L.; Chen, D.; Wei, F.; KeZiErBieKe, H.; Liao, Y. FE-YOLOv5: Feature enhancement network based on YOLOv5 for small object detection. J. Vis. Commun. Image Represent. 2023, 90, 103752.

- Liu, C.; Yang, D.; Tang, L.; Zhou, X.; Deng, Y. A lightweight object detector based on spatial coordinate self-attention for UAV aerial images. Remote Sens. 2022, 15, 83.

- Zheng, K.; Zhang, Z.; Qiu, C. A fast adaptive multi-scale kernel correlation filter tracker for rigid object. Sensors 2022, 22, 7812.

- Wu, Y.; Lim, J.; Yang, M. Online object tracking: A benchmark. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Portland, OR, USA, 23–28 June 2013; pp. 2411–2418.

- Mueller, M.; Smith, N.; Ghanem, B. A benchmark and simulator for UAV tracking. In Computer Vision–ECCV 2016, Proceedings of the 14th European Conference, Amsterdam, The Netherlands, 11–14 October 2016; Proceedings, Part I 14; Springer: Berlin/Heidelberg, Germany, 2016; pp. 445–461.

- Babenko, B.; Yang, M.; Belongie, S. Robust object tracking with online multiple instance learning. IEEE Trans. Pattern Anal. Mach. Intell. 2010, 33, 1619–1632.

- Zhang, Y.; Zheng, Y. Object tracking in UAV videos by multi-feature correlation filters with saliency proposals. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2023, 16, 5538–5548.

- Nai, K.; Li, Z.; Wang, H. Dynamic feature fusion with spatial-temporal context for robust object tracking. Pattern Recognit. 2022, 130, 108775.

- Cui, Y.; Jiang, C.; Wang, L.; Wu, G. Mixformer: End-to-end tracking with iterative mixed attention. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 13608–13618.

- Chen, Y.; Wang, C.; Yang, C.; Chang, H.; Lin, Y.; Chuang, Y.; Liao, H. Neighbortrack: Improving single object tracking by bipartite matching with neighbor tracklets. arXiv 2022, arXiv:2211.06663.

- Lin, B.; Bai, Y.; Bai, B.; Li, Y. Robust correlation tracking for UAV with feature integration and response map enhancement. Remote Sens. 2022, 14, 4073.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).