Towards Robust Physical Adversarial Attacks on UAV Object Detection: A Multi-Dimensional Feature Optimization Approach

Abstract

1. Introduction

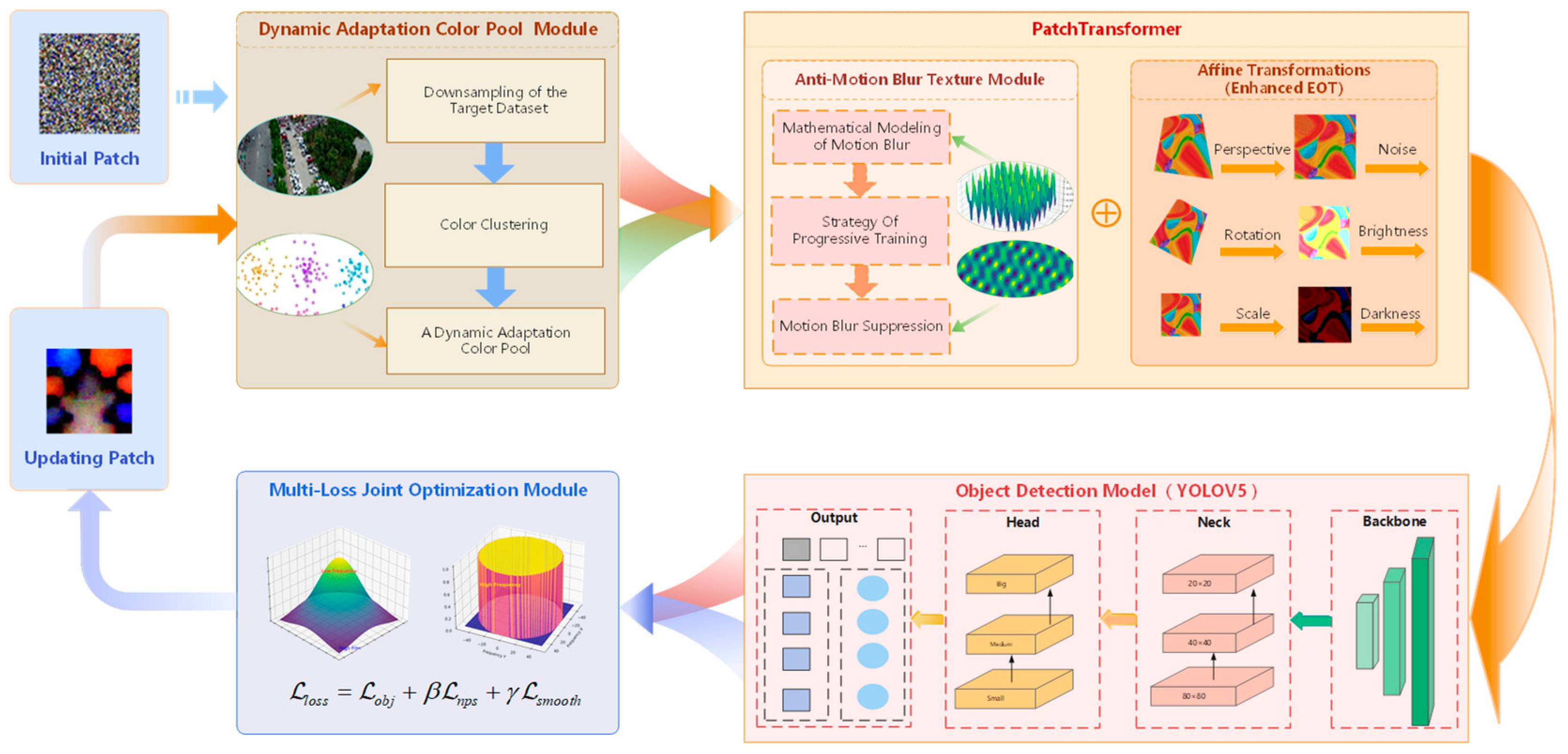

- An environment-adaptive color pool extraction method for adversarial patches is designed. The method enables patch colors to blend better with the surrounding environment.

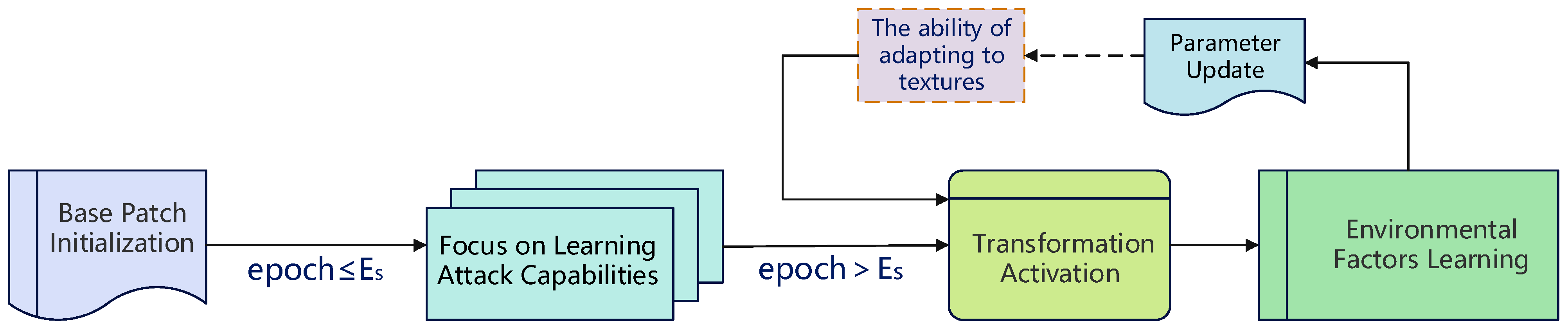

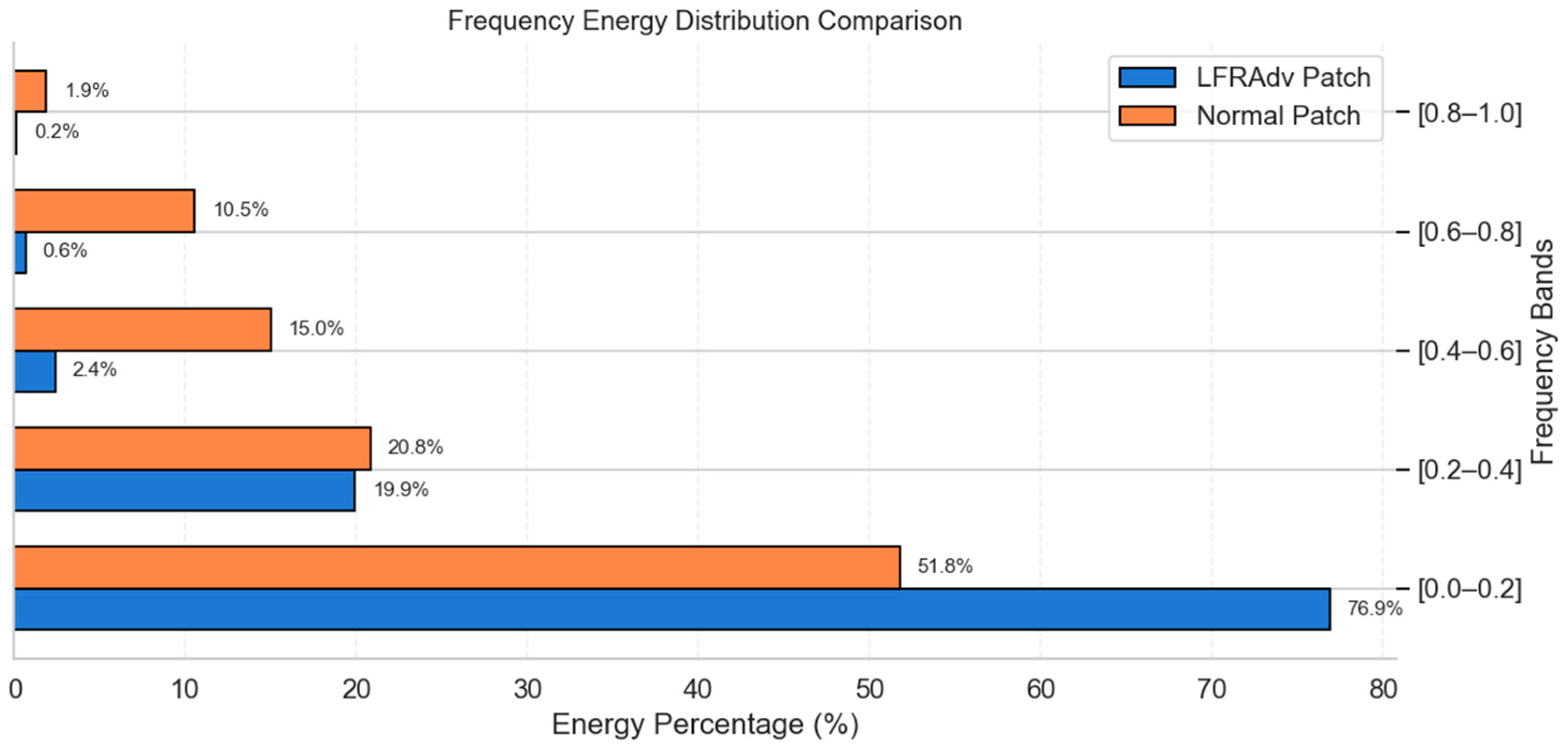

- A texture-based anti-blur method for patches is proposed. The blurring caused by the high-speed movement of UAVs is modeled mathematically, and a progressive transformation module based on data augmentation is built on that model. The module generates specific textures that suppress the motion-blur effect, thereby reducing the degradation of adversarial patches during high-speed UAV photography.

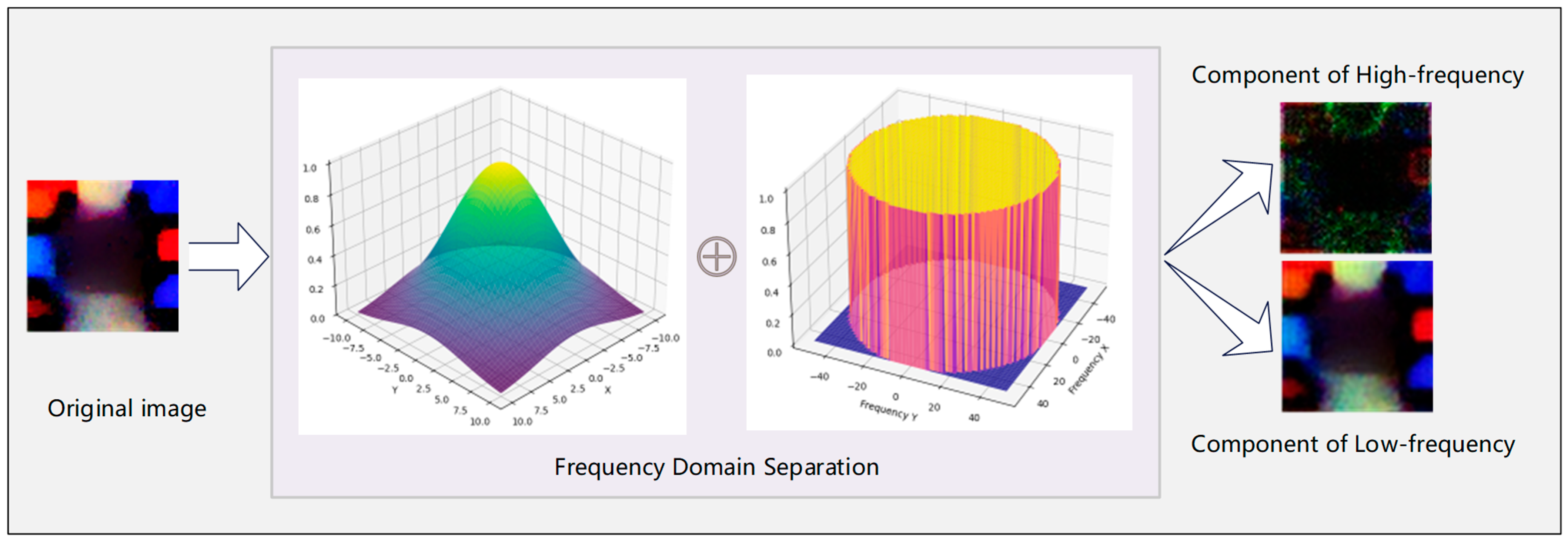

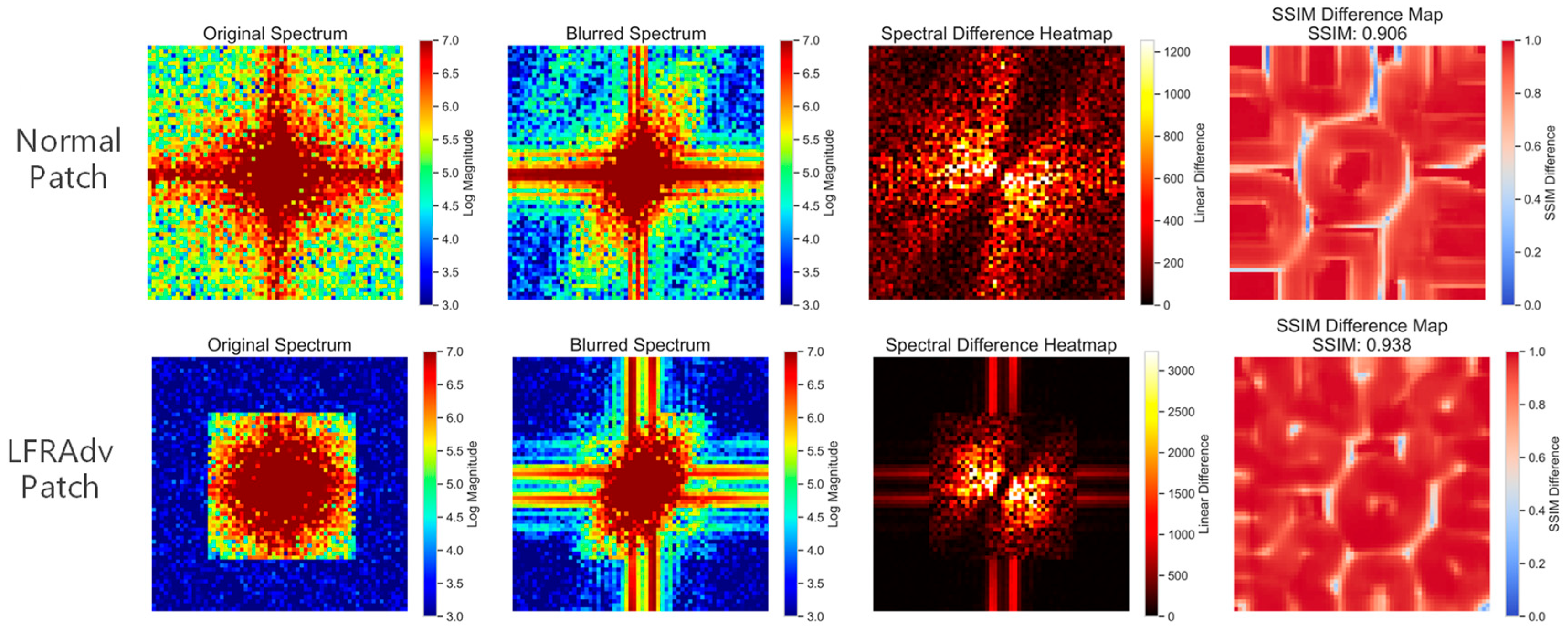

- Frequency-domain computation is introduced into the adversarial patch generation process. This both reduces the information loss that adversarial patches suffer after printing and secondary capture and improves the patch generation speed.

2. Related Works

2.1. Object Detection on Unmanned Aerial Vehicle

2.2. Physical Adversarial Attacks for Object Detection

- Insufficient modeling of cross-modal distortion during digital-to-physical domain conversion. Traditional approaches rely on Total Variation Loss (TV Loss) and Non-Printability Score Loss (NPS Loss) to constrain patch smoothness and printability. However, TV Loss enforces spatial smoothness without explicitly protecting against frequency-domain distortions such as motion blur. Moreover, at the physical deployment stage, existing methods lack both a patch generation mechanism that matches the environmental color scheme and an environment-adaptive control of high-frequency information loss, so stealthiness and attack success rate degrade together in practical applications.

- Inadequate consideration of the secondary degradation of patch features during dynamic capture by UAVs. Deployed adversarial patches face multiple kinds of interference under high-speed UAV imaging conditions, including environmental factors and motion-induced artifacts. Although some works have attempted to enhance robustness through physical augmentation, they have not yet resolved the coupled effects of motion blur and frequency-domain shift during capture, which leads to spatiotemporal degradation of patch features.

3. Methods

- (a)

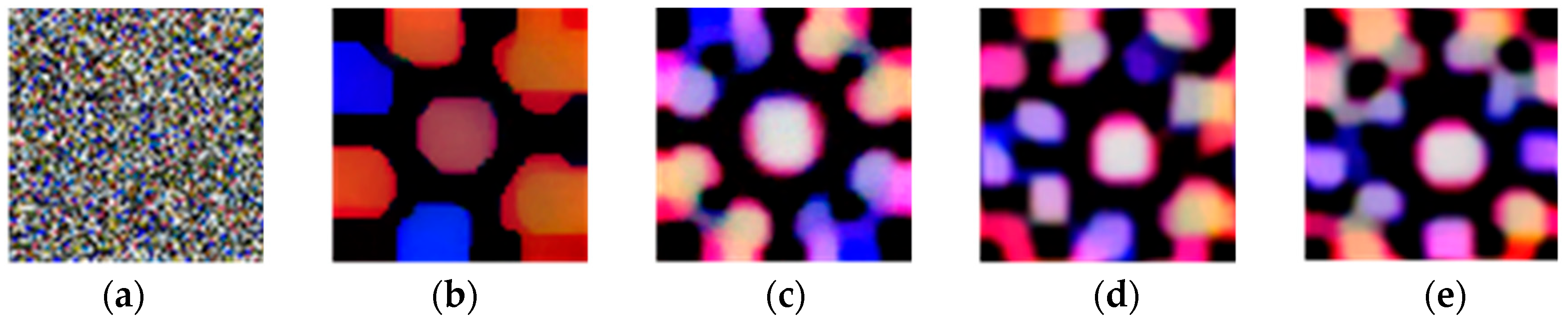

- Anti Motion Blur Texture (AMBT): This part directly integrates the mathematical modeling of motion blur into the patch training process. By applying simulated motion blur to the patch, the optimization process is compelled to generate textures that are inherently resistant to such blur, thus significantly enhancing the patch’s effectiveness in scenarios where the UAV is moving at high speed.

- (b) Affine Transformation: This part simulates the geometric and photometric changes that the patch may encounter in real environments, such as multi-angle perspectives, illumination fluctuations, and random noise. Training under these transformations helps the patch remain adversarial in complex and variable environments.
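The two augmentations described above can be sketched in a few lines of plain Python. This is only an illustrative sketch under stated assumptions, not the authors' implementation: the blur is modeled as a horizontal box kernel, the patch is a grayscale grid of floats in [0, 1], and the names `blur_rows`, `progressive_max_length`, and `photometric_jitter`, as well as the linear schedule and the jitter ranges, are hypothetical.

```python
import random


def motion_blur_kernel(length):
    """Horizontal linear motion-blur kernel: `length` equal taps that sum to 1."""
    if length < 1:
        raise ValueError("length must be >= 1")
    return [1.0 / length] * length


def blur_rows(patch, length):
    """Convolve every row with the kernel, clamping indices at the patch edges."""
    kernel = motion_blur_kernel(length)
    half = length // 2  # for even lengths the window is shifted by half a pixel
    width = len(patch[0])
    blurred = []
    for row in patch:
        out = []
        for x in range(width):
            acc = 0.0
            for k, weight in enumerate(kernel):
                src = min(max(x + k - half, 0), width - 1)
                acc += weight * row[src]
            out.append(acc)
        blurred.append(out)
    return blurred


def progressive_max_length(step, total_steps, final_length):
    """Hypothetical schedule: the blur cap grows linearly from 1 to final_length."""
    frac = min(max(step / total_steps, 0.0), 1.0)
    return 1 + int(round(frac * (final_length - 1)))


def random_blur(patch, max_length, rng):
    """Draw a blur length for one training step, as an augmentation would."""
    return blur_rows(patch, rng.randint(1, max_length))


def photometric_jitter(patch, rng, max_gain=0.2, noise_std=0.02):
    """Random brightness gain plus Gaussian noise, clipped back to [0, 1]."""
    gain = 1.0 + rng.uniform(-max_gain, max_gain)
    return [
        [min(max(v * gain + rng.gauss(0.0, noise_std), 0.0), 1.0) for v in row]
        for row in patch
    ]
```

In a training loop, one would presumably apply `random_blur` and `photometric_jitter` to the patch before pasting it into each training image, so that the loss is averaged over many blurred and re-lit versions of the same patch.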

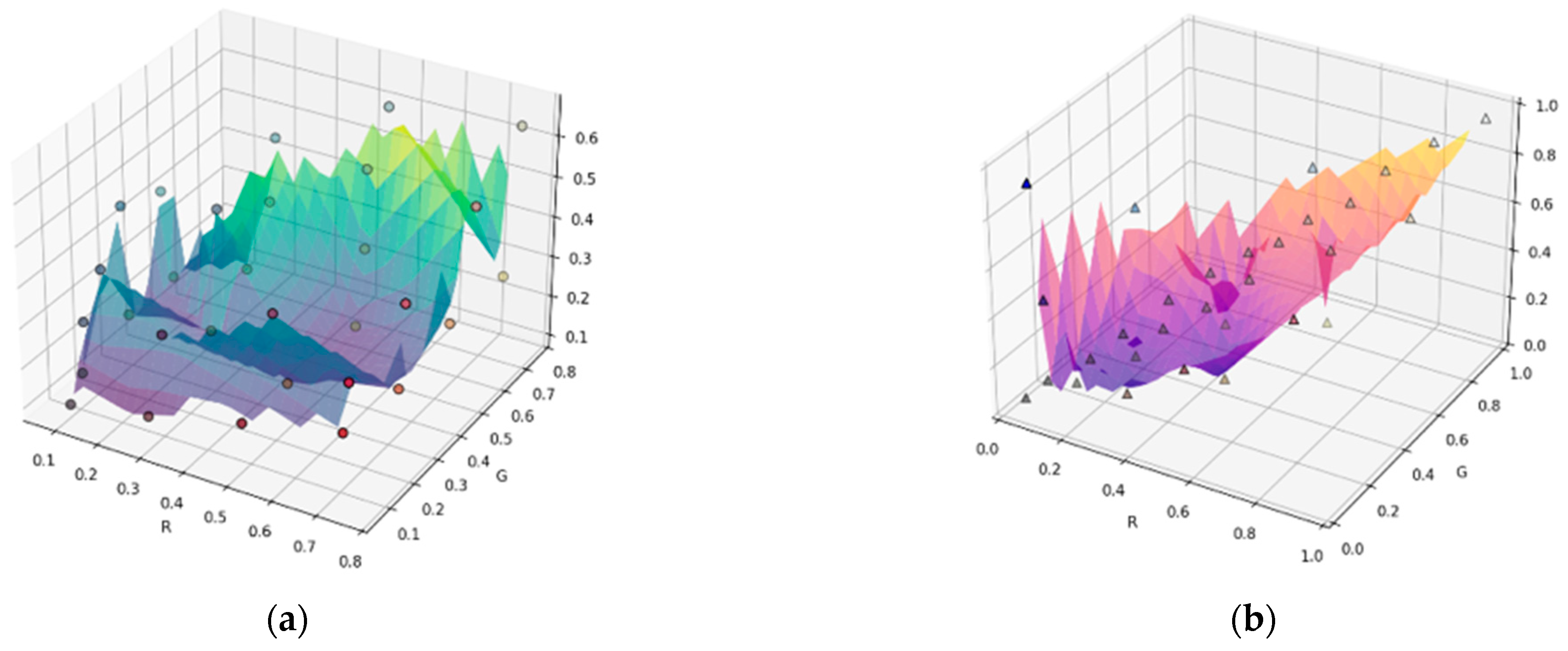

3.1. Construction of Dynamic Adaptation Color Pool

- Data sampling. To capture the color distribution comprehensively while controlling computational complexity, we employ a two-stage sampling algorithm to extract colors from the target environment for patch generation. In the first stage, images are randomly selected from the input dataset and each image is randomly down-sampled; when an image has fewer than 1000 pixels after down-sampling, all of its available pixels are retained. In the second stage, the overall pixel pool is adjusted to maintain a balanced distribution, and all pixels are then merged for a second round of random sampling that generates the candidate pixel matrix.

- Color clustering. The K-means algorithm is used to extract the main colors from all the sampled auxiliary colors, in order to obtain the most representative colors in the target environment. The clustering objective is

  $$J = \sum_{i=1}^{K} \sum_{x \in C_i} \lVert x - \mu_i \rVert^2,$$

  where $\mu_i$ represents the center of cluster $C_i$ and $x$ is the RGB pixel vector. The clustering process is initialized multiple times to avoid local optima, and the Elkan algorithm [27] is used to make the distance calculations more efficient.

- Cross-space filtering and screening. Starting from the colors obtained in the two preceding steps, we further apply a three-level color filtering criterion to enhance the usability of the colors.
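The sampling and clustering steps above can be sketched as follows. This is a minimal, dependency-free sketch under several assumptions: each image is represented as a flat list of RGB tuples, the per-image budget `per_image` stands in for the 1000-pixel rule, and `two_stage_sample` and `kmeans` are hypothetical names. The clustering shown is plain Lloyd iteration with random restarts; the Elkan variant [27] reaches the same assignments while skipping distance computations through the triangle inequality, and it is not implemented here. The three-level filtering step is omitted.

```python
import random


def two_stage_sample(images, n_images, per_image, pool_size, rng):
    """Stage 1: sample pixels from each chosen image; stage 2: resample the merged pool."""
    chosen = rng.sample(images, min(n_images, len(images)))
    merged = []
    for pixels in chosen:
        if len(pixels) <= per_image:
            merged.extend(pixels)  # small image: keep every available pixel
        else:
            merged.extend(rng.sample(pixels, per_image))
    return rng.sample(merged, min(pool_size, len(merged)))


def sq_dist(a, b):
    """Squared Euclidean distance between two RGB vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def kmeans(points, k, rng, n_init=5, n_iter=20):
    """Lloyd's k-means with random restarts; returns (centers, inertia) of the best run."""
    best = None
    for _ in range(n_init):
        centers = [list(p) for p in rng.sample(points, k)]
        for _ in range(n_iter):
            groups = [[] for _ in range(k)]
            for p in points:
                idx = min(range(k), key=lambda i: sq_dist(p, centers[i]))
                groups[idx].append(p)
            for i, group in enumerate(groups):
                if group:  # an empty cluster keeps its previous center
                    centers[i] = [sum(c) / len(group) for c in zip(*group)]
        inertia = sum(min(sq_dist(p, c) for c in centers) for p in points)
        if best is None or inertia < best[1]:
            best = (centers, inertia)
    return best
```

The returned inertia is the value of the clustering objective for the best restart, so comparing it across restarts is what guards against a poor local optimum.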

3.2. Design of Anti-Motion Blur Texture

3.3. High-Frequency Separation Strategy Based on Fourier Transform
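As a rough illustration of what separating high-frequency content with a Fourier transform involves, the sketch below splits a small grayscale grid into a low-frequency part and a high-frequency residual. It is not the authors' procedure: the circular cutoff, the function names `dft2` and `split_frequencies`, and the naive transform are all assumptions. The transform is written out directly to keep the sketch dependency-free; a real pipeline would presumably use an FFT routine from a numerical library.

```python
import cmath
import math


def dft2(img, inverse=False):
    """Naive 2-D discrete Fourier transform (or its inverse) of a small grid."""
    h, w = len(img), len(img[0])
    sign = 1 if inverse else -1
    out = [[0j] * w for _ in range(h)]
    for u in range(h):
        for v in range(w):
            acc = 0j
            for y in range(h):
                for x in range(w):
                    angle = sign * 2 * math.pi * (u * y / h + v * x / w)
                    acc += img[y][x] * cmath.exp(1j * angle)
            out[u][v] = acc / (h * w) if inverse else acc
    return out


def split_frequencies(img, cutoff):
    """Return (low, high) grids whose element-wise sum reconstructs `img`."""
    h, w = len(img), len(img[0])
    spectrum = dft2(img)
    low_spectrum = [[0j] * w for _ in range(h)]
    for u in range(h):
        for v in range(w):
            # Indices wrap around, so u also stands for the frequency u - h.
            fu, fv = min(u, h - u), min(v, w - v)
            if math.hypot(fu, fv) <= cutoff:
                low_spectrum[u][v] = spectrum[u][v]
    # The mask is symmetric, so the inverse is real up to rounding error.
    low = [[c.real for c in row] for row in dft2(low_spectrum, inverse=True)]
    high = [[img[y][x] - low[y][x] for x in range(w)] for y in range(h)]
    return low, high
```

For a checkerboard, which contains only the mean and the highest spatial frequency, a small cutoff leaves a flat `low` grid and pushes the whole pattern into `high`. This is the kind of fine detail that printing and recapture tend to destroy.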

3.4. Loss Functions

- a. Non-printability score (NPS) loss:

- b. Smooth loss:

- c. Objectness loss:
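The three loss terms listed above can be illustrated with commonly used definitions from the adversarial patch literature (for example, the person-detection patches of Thys et al. in the reference list). The sketch below is written under that assumption and is not the formulation used in this paper: the patch is a grid of RGB tuples in [0, 1], the NPS is averaged over pixels, the smooth loss is an absolute-difference total variation, and the weights `w_nps` and `w_tv` are hypothetical placeholders.

```python
import math


def nps_loss(patch, printable_colors):
    """Mean distance from each patch pixel to its nearest printable color."""
    pixels = [p for row in patch for p in row]
    total = sum(min(math.dist(p, c) for c in printable_colors) for p in pixels)
    return total / len(pixels)


def smooth_loss(patch):
    """Total variation: summed absolute differences between neighbouring pixels."""
    h, w = len(patch), len(patch[0])
    total = 0.0
    for y in range(h):
        for x in range(w):
            if x + 1 < w:  # right-hand neighbour
                total += sum(abs(a - b) for a, b in zip(patch[y][x], patch[y][x + 1]))
            if y + 1 < h:  # neighbour below
                total += sum(abs(a - b) for a, b in zip(patch[y][x], patch[y + 1][x]))
    return total


def objectness_loss(objectness_scores):
    """Highest objectness the detector reports; lowering it suppresses detection."""
    return max(objectness_scores)


def total_loss(patch, printable_colors, objectness_scores, w_nps=0.01, w_tv=2.5):
    """Weighted sum of the three terms; the weights are illustrative assumptions."""
    return (
        objectness_loss(objectness_scores)
        + w_nps * nps_loss(patch, printable_colors)
        + w_tv * smooth_loss(patch)
    )
```

Plain Python is used here only to make each term explicit. For actual patch optimization the same expressions would have to be written in an automatic-differentiation framework so that gradients can flow back to the patch pixels.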

4. Experiments & Results

4.1. Experimental Settings

4.2. Results and Evaluation

4.2.1. Effectiveness of the Dynamic Color Pool Construction

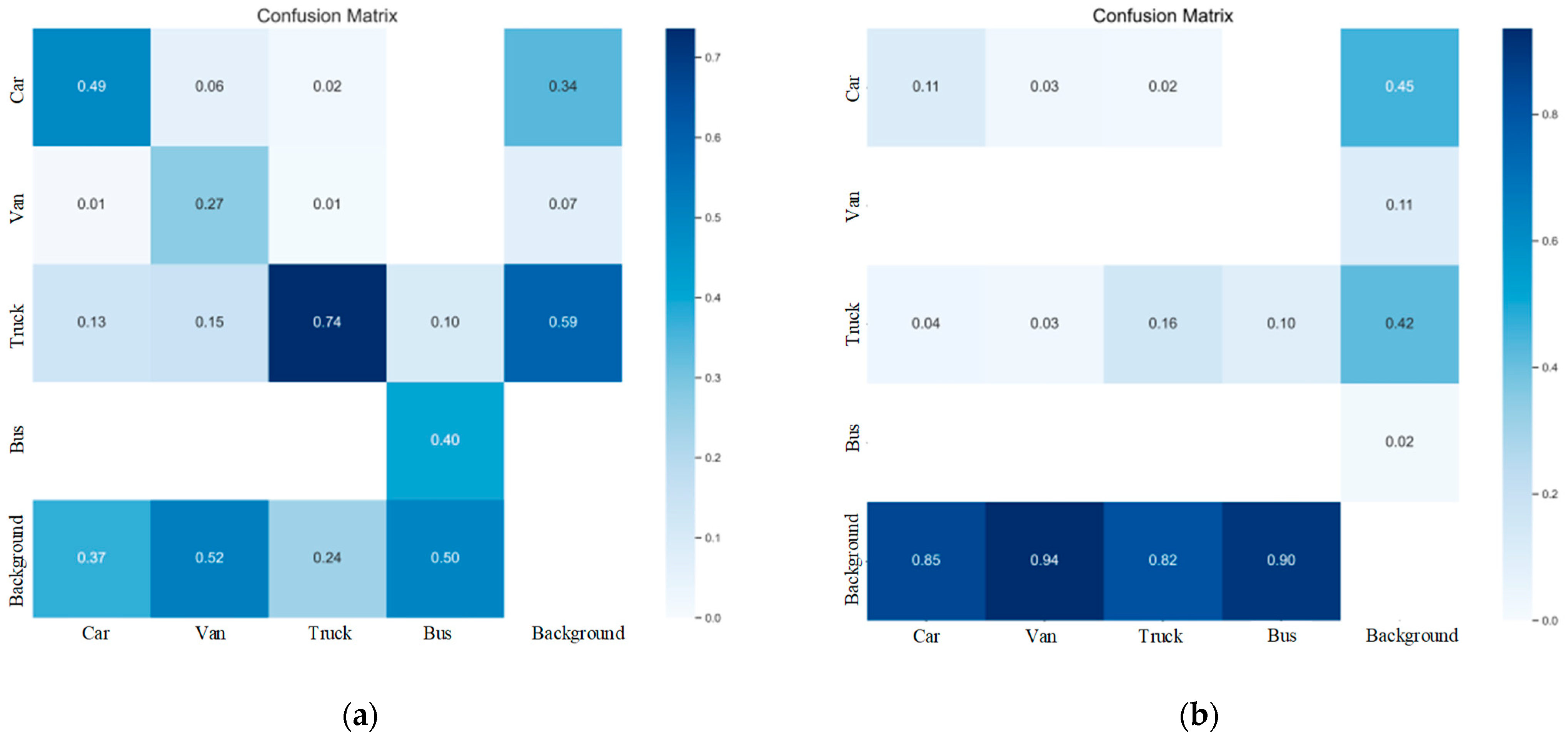

4.2.2. Verification for the Effectiveness of the Adversarial Patches

- (a)

- Compare the attack impacts of random patches and the generated adversarial patches. This was to demonstrate the effectiveness of the adversarial patches.

- (b)

- Conduct ablation experiments on the two strategies proposed in Section 3.2 and Section 3.3.

- (c)

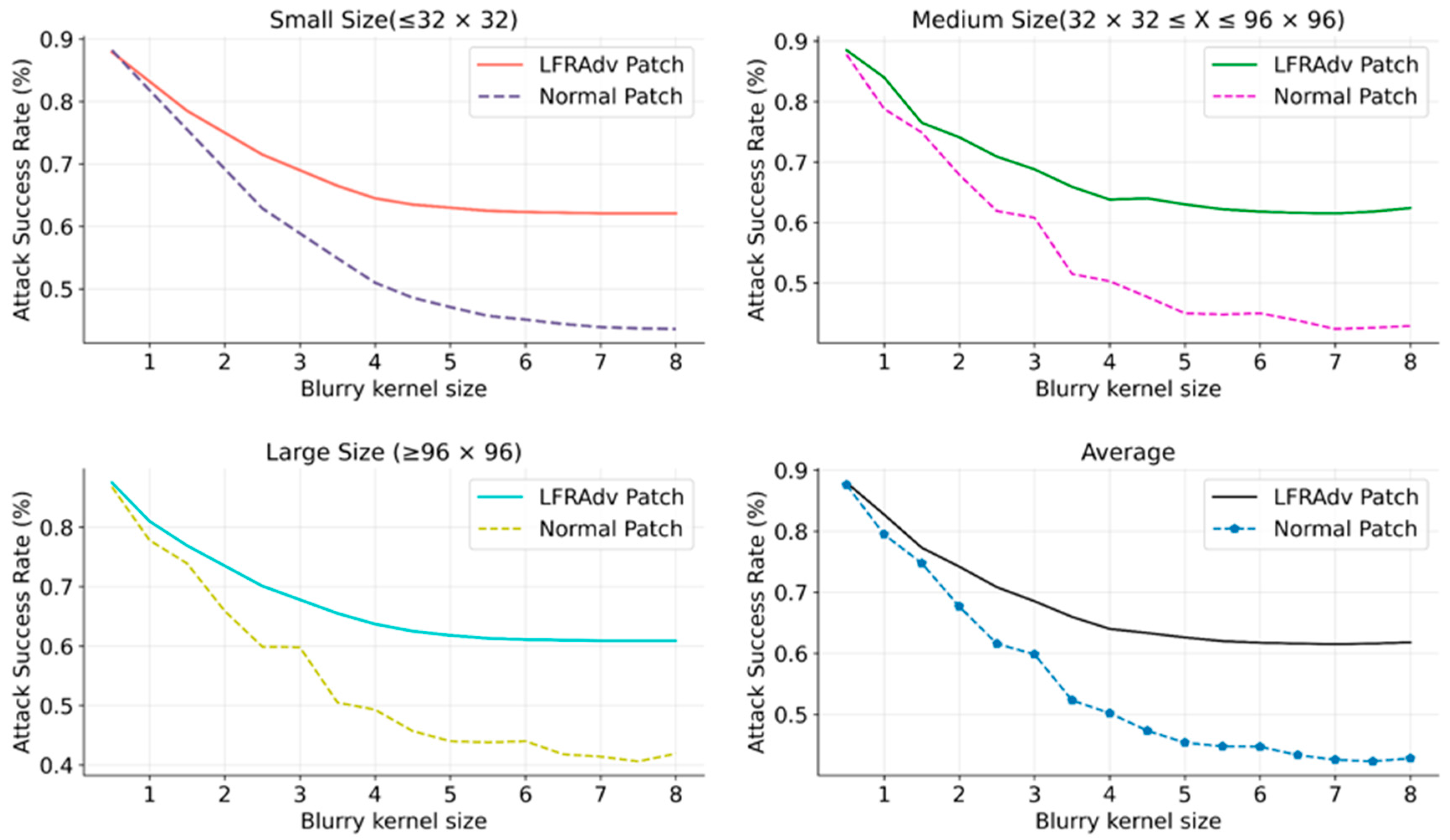

- Examine the information loss disparity between anti-blur patches and ordinary patches when both are subjected to the same blur interference.

- (d)

- Evaluate the ASR of anti-blur patches and ordinary patches after they are affixed to the target surface and subsequently influenced by blur interference.

5. Discussion

5.1. Summary of Key Results

5.2. Comparison with Existing Works

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| DNNs | Deep Neural Networks |
| UAVs | Unmanned Aerial Vehicles |
| ASR | Attack Success Rate |
| LFRAP | Low-Frequency Robust Adversarial Patch |
| YOLO | You Only Look Once |
| DACP | Dynamic Adaptation Color Pool |
| AMBT | Anti-Motion Blur Texture |
| MLJO | Multi-Loss Joint Optimization |
| CMYK | Cyan Magenta Yellow Black |
| EOT | Expectation Over Transformation |
| NPS | Non-Printability Score |
| IoU | Intersection over Union |
| SSIM | Structural Similarity Index |

References

- [1] Yang, X.; Smith, A.M.; Bourchier, R.S.; Hodge, K.; Ostrander, D.; Houston, B. Mapping Flowering Leafy Spurge Infestations in a Heterogeneous Landscape Using Unmanned Aerial Vehicle Red-Green-Blue Images and a Hybrid Classification Method. Int. J. Remote Sens. 2021, 42, 8930–8951.

- [2] Alsamhi, S.H.; Shvetsov, A.V.; Kumar, S.; Shvetsova, S.V.; Alhartomi, M.A.; Hawbani, A.; Rajput, N.S.; Srivastava, S.; Saif, A.; Nyangaresi, V.O. UAV Computing-Assisted Search and Rescue Mission Framework for Disaster and Harsh Environment Mitigation. Drones 2022, 6, 154.

- [3] Feng, J.; Yi, C. Lightweight Detection Network for Arbitrary-Oriented Vehicles in UAV Imagery via Global Attentive Relation and Multi-Path Fusion. Drones 2022, 6, 108.

- [4] Jun, M.; Lilian, Z.; Xiaofeng, H.; Hao, Q.; Xiaoping, H. A 2D Georeferenced Map Aided Visual-Inertial System for Precise UAV Localization. In Proceedings of the 2022 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Kyoto, Japan, 23–27 October 2022; pp. 4455–4462.

- [5] Nex, F.; Armenakis, C.; Cramer, M.; Cucci, D.A.; Gerke, M.; Honkavaara, E.; Kukko, A.; Persello, C.; Skaloud, J. UAV in the Advent of the Twenties: Where We Stand and What Is Next. ISPRS J. Photogramm. Remote Sens. 2022, 184, 215–242.

- [6] Cao, Z.; Kooistra, L.; Wang, W.; Guo, L.; Valente, J. Real-Time Object Detection Based on UAV Remote Sensing: A Systematic Literature Review. Drones 2023, 7, 620.

- [7] Shrestha, S.; Pathak, S.; Viegas, K. Towards a Robust Adversarial Patch Attack against Unmanned Aerial Vehicles Object Detection. In Proceedings of the IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Detroit, MI, USA, 1–5 October 2023; pp. 3256–3263.

- [8] Tian, J.; Wang, B.; Guo, R.; Wang, Z.; Cao, K.; Wang, X. Adversarial Attacks and Defenses for Deep-Learning-Based Unmanned Aerial Vehicles. IEEE Internet Things J. 2022, 9, 22399–22409.

- [9] Xi, H.; Ru, L.; Tian, J.; Lu, B.; Hu, S.; Wang, W.; Luan, X. URAdv: A Novel Framework for Generating Ultra-Robust Adversarial Patches against UAV Object Detection. Mathematics 2025, 13, 591.

- [10] Mittal, P.; Singh, R.; Sharma, A. Deep Learning-Based Object Detection in Low-Altitude UAV Datasets: A Survey. Image Vis. Comput. 2020, 104, 104046.

- [11] Bouguettaya, A.; Zarzour, H.; Kechida, A.; Taberkit, A.M. Vehicle Detection From UAV Imagery With Deep Learning: A Review. IEEE Trans. Neural Netw. Learn. Syst. 2022, 33, 6047–6067.

- [12] Mei, S.; Chen, X.; Zhang, Y.; Li, J.; Plaza, A. Accelerating Convolutional Neural Network-Based Hyperspectral Image Classification by Step Activation Quantization. IEEE Trans. Geosci. Remote Sens. 2021, 60, 550212.

- [13] Athalye, A.; Engstrom, L.; Ilyas, A.; Kwok, K. Synthesizing Robust Adversarial Examples. In Proceedings of the International Conference on Machine Learning, PMLR, Stockholm, Sweden, 10–15 July 2018; pp. 284–293.

- [14] Sharif, M.; Bhagavatula, S.; Bauer, L. Accessorize to a Crime: Real and Stealthy Attacks on State-of-the-Art Face Recognition. In Proceedings of the 2016 ACM SIGSAC Conference on Computer and Communications Security, Vienna, Austria, 24–28 October 2016; ACM Press: New York, NY, USA, 2016.

- [15] Song, D.; Eykholt, K.; Evtimov, I.; Fernandes, E.; Li, B.; Rahmati, A.; Tramer, F.; Prakash, A.; Kohno, T. Physical Adversarial Examples for Object Detectors. In Proceedings of the 12th USENIX Workshop on Offensive Technologies (WOOT 18), Baltimore, MD, USA, 13–14 August 2018.

- [16] Maesumi, A.; Zhu, M.; Wang, Y.; Chen, T.; Wang, Z.; Bajaj, C. Learning Transferable 3D Adversarial Cloaks for Deep Trained Detectors. arXiv 2021, arXiv:2104.11101.

- [17] Guesmi, A.; Hanif, M.A.; Ouni, B.; Shafique, M. Physical Adversarial Attacks for Camera-Based Smart Systems: Current Trends, Categorization, Applications, Research Challenges, and Future Outlook. IEEE Access 2023, 11, 109617–109668.

- [18] Duan, R.; Mao, X.; Qin, A.K.; Chen, Y.; Ye, S.; He, Y.; Yang, Y. Adversarial Laser Beam: Effective Physical-World Attack to DNNs in a Blink. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Denver, CO, USA, 3–7 June 2021; pp. 16062–16071.

- [19] Jing, P.; Tang, Q.; Du, Y.; Xue, L.; Luo, X.; Wang, T.; Nie, S.; Wu, S. Too Good to Be Safe: Tricking Lane Detection in Autonomous Driving with Crafted Perturbations. In Proceedings of the 30th USENIX Security Symposium (USENIX Security 21), Vancouver, BC, Canada, 11–13 August 2021; pp. 3237–3254.

- [20] Zhong, Y.; Liu, X.; Zhai, D.; Jiang, J.; Ji, X. Shadows Can Be Dangerous: Stealthy and Effective Physical-World Adversarial Attack by Natural Phenomenon. arXiv 2022, arXiv:2203.03818.

- [21] Guesmi, A.; Abdullah Hanif, M.; Shafique, M. AdvRain: Adversarial Raindrops to Attack Camera-Based Smart Vision Systems. Information 2023, 14, 634.

- [22] Lian, J.; Mei, S.; Zhang, S.; Ma, M. Benchmarking Adversarial Patch against Aerial Detection. IEEE Trans. Geosci. Remote Sens. 2022, 60, 5634616.

- [23] Du, A.; Chen, B.; Chin, T.-J.; Law, Y.W.; Sasdelli, M.; Rajasegaran, R.; Campbell, D. Physical Adversarial Attacks on an Aerial Imagery Object Detector. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision, Waikoloa, HI, USA, 3–8 January 2022; pp. 1796–1806.

- [24] Cui, J.; Guo, W.; Huang, H.; Lv, X.; Cao, H.; Li, H. Adversarial Examples for Vehicle Detection with Projection Transformation. IEEE Trans. Geosci. Remote Sens. 2024, 62, 5632418.

- [25] Thys, S.; Van Ranst, W.; Goedemé, T. Fooling Automated Surveillance Cameras: Adversarial Patches to Attack Person Detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops, Long Beach, CA, USA, 16–17 June 2019.

- [26] Komkov, S.; Petiushko, A. AdvHat: Real-World Adversarial Attack on ArcFace Face ID System. In Proceedings of the 2020 25th International Conference on Pattern Recognition (ICPR), Milan, Italy, 10–15 January 2021; pp. 819–826.

- [27] Elkan, C. Using the Triangle Inequality to Accelerate K-Means. In Proceedings of the 20th International Conference on Machine Learning (ICML-03), Washington, DC, USA, 21–24 August 2003; pp. 147–153.

- [28] Kim, D.-H.; Cho, E.K.; Kim, J.P. Evaluation of CIELAB-Based Colour-Difference Formulae Using a New Dataset. Color Res. Appl. 2001, 26, 369–375.

- [29] Sieberth, T.; Wackrow, R.; Chandler, J. UAV Image Blur–Its Influence and Ways to Correct It. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2015, 40, 33–39.

- [30] Kupyn, O.; Budzan, V.; Mykhailych, M.; Mishkin, D.; Matas, J. DeblurGAN: Blind Motion Deblurring Using Conditional Adversarial Networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 8183–8192.

- [31] Wang, W.; Su, C. An Optimization Method for Motion Blur Image Restoration and Ringing Suppression via Texture Mapping. ISA Trans. 2022, 131, 650–661.

- [32] Yadav, O.; Ghosal, K.; Lutz, S.; Smolic, A. Frequency-Domain Loss Function for Deep Exposure Correction of Dark Images. Signal Image Video Process. 2021, 15, 1829–1836.

- [33] Chen, Y.-Y.; Tai, S.-C. Enhancing Ultrasound Images by Morphology Filter and Eliminating Ringing Effect. Eur. J. Radiol. 2005, 53, 293–305.

- [34] Zhu, P.; Wen, L.; Du, D.; Bian, X.; Fan, H.; Hu, Q.; Ling, H. Detection and Tracking Meet Drones Challenge. IEEE Trans. Pattern Anal. Mach. Intell. 2022, 44, 7380–7399.

- [35] Wang, Z.; Bovik, A.C.; Sheikh, H.R.; Simoncelli, E.P. Image Quality Assessment: From Error Visibility to Structural Similarity. IEEE Trans. Image Process. 2004, 13, 600–612.

| Filtering Level | Criteria for Judgement | Parameter Settings |
|---|---|---|
| Saturation | | |
| Print compatibility | Use the US Web Uncoated ICC profile | |
| Color difference constraint | Dynamic threshold δ = 0.12 (color difference) [28] | |

| Number of Samples | Random Color Pool (Mean ± Standard Deviation) | DCPAM (Mean ± Standard Deviation) | Improvement Range |
|---|---|---|---|
| 50 | 0.812 ± 0.032 | 0.887 ± 0.021 | 9.2% |
| 100 | 0.798 ± 0.038 | 0.901 ± 0.018 | 12.9% |
| 150 | 0.785 ± 0.041 | 0.914 ± 0.015 | 16.4% |
| 200 | 0.776 ± 0.045 | 0.923 ± 0.012 | 18.9% |
| 1580 | 0.781 ± 0.052 | 0.917 ± 0.023 | 18.4% |

| Types | ASR (%), Small Size | ASR (%), Medium Size | ASR (%), Large Size | ASR (%), Average |
|---|---|---|---|---|
| Normal Patch (Baseline) | 47.1 | 45.5 | 44.0 | 45.5 |
| +Anti-Motion Blur | 59.5 | 57.2 | 58.3 | 58.3 |
| +Frequency Separation | 58.9 | 58.2 | 62.4 | 59.8 |
| LFRAP (Ours) | 65.2 | 64.1 | 64.8 | 64.7 |

| Models | L = 0 | L = 1 | L = 2 | L = 3 | ASR Decline Rate (L = 0 → L = 3) |
|---|---|---|---|---|---|
| YOLOv3 | 85.6 | 80.1 | 73.4 | 65.9 | 19.7% |
| YOLOv5s | 89.2 | 85.3 | 78.7 | 71.5 | 17.7% |
| YOLOv5m | 87.8 | 83.9 | 77.2 | 69.1 | 18.7% |
| YOLOv5l | 84.5 | 82.1 | 75.8 | 67.3 | 19.2% |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Xi, H.; Ru, L.; Tian, J.; Wang, W.; Zhu, R.; Li, S.; Zhang, Z.; Liu, L.; Luan, X. Towards Robust Physical Adversarial Attacks on UAV Object Detection: A Multi-Dimensional Feature Optimization Approach. Machines 2025, 13, 1060. https://doi.org/10.3390/machines13111060
