Implementation of Intelligent Indoor Service Robot Based on ROS and Deep Learning

Abstract

1. Introduction

- Design of a specialized indoor service robot: This research develops an indoor service robot tailored to office environments. It addresses challenges such as accurate target recognition, path planning, and obstacle avoidance. The robot is designed to identify small objects accurately and to plan paths autonomously while avoiding obstacles.

- Indoor SLAM algorithm analysis: This paper analyzes three commonly used SLAM algorithms (GMapping, Hector-SLAM, and Cartographer) to assess how suitable each is for indoor environments and how their performance differs there [9]. The algorithms are first evaluated in SLAM simulation experiments. Their outputs are then compared in experimental settings, and the algorithm best suited to the robot's application scenario is selected.

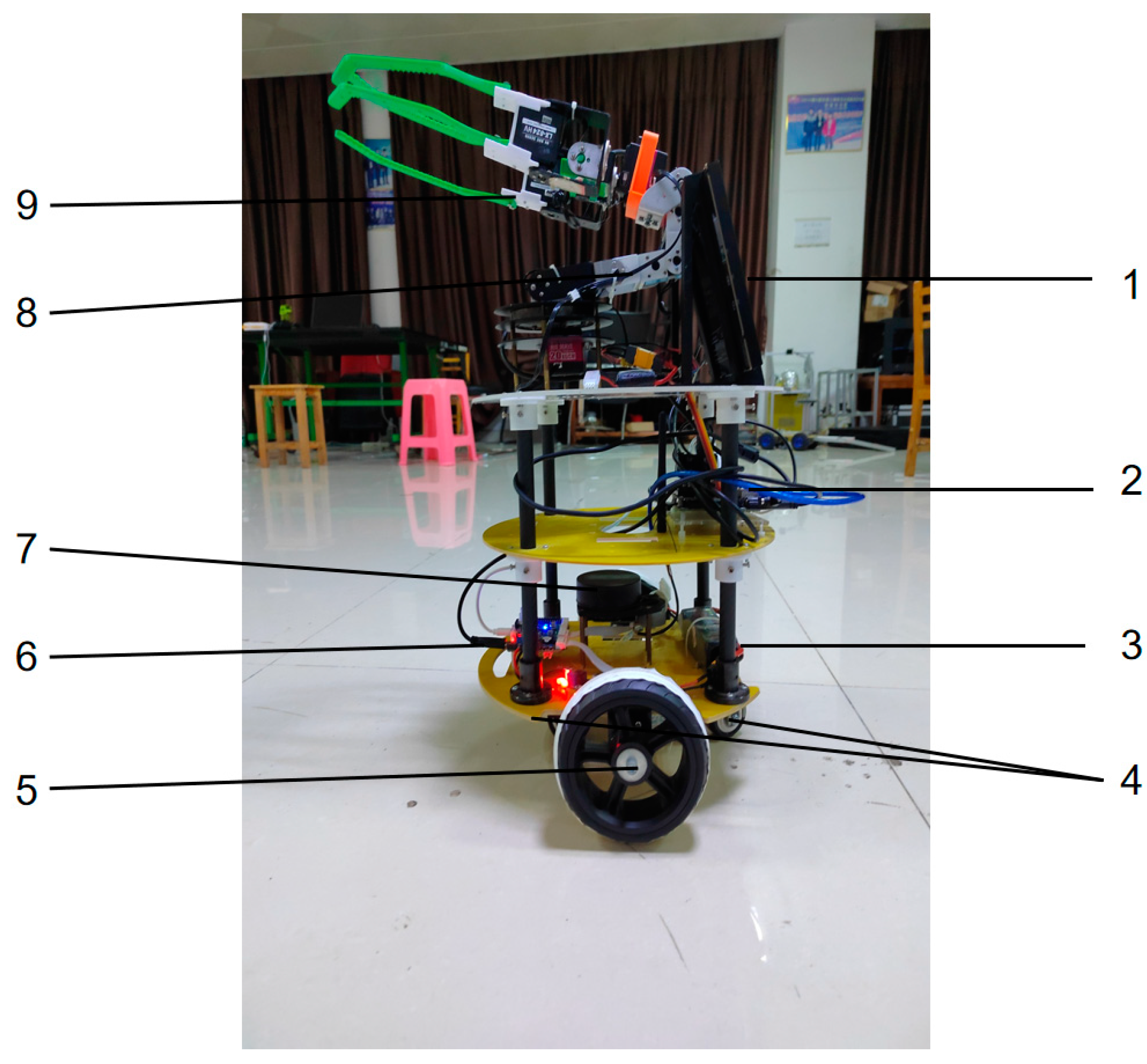

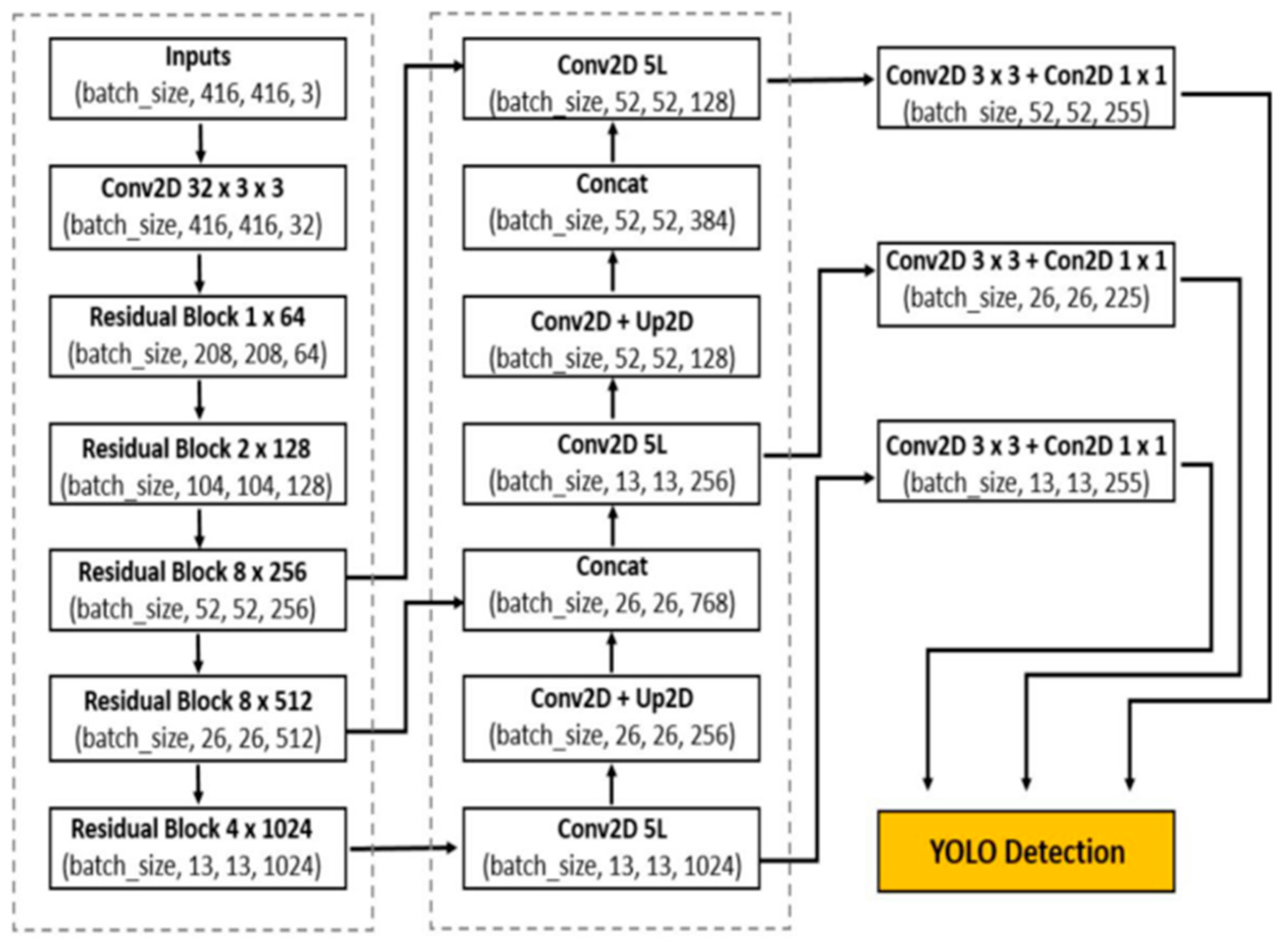

- Hardware and software implementation: This paper uses the STM32F407VET6 and the Nvidia Jetson Nano B01 as the main control units. The STM32 firmware is built on the FreeRTOS real-time operating system, while the Jetson Nano runs ROS (Robot Operating System). The hardware was selected to make full use of the STM32F407VET6's resources, and the navigation and vision algorithms were chosen with the limited computational capacity of the Jetson Nano B01 in mind. Dijkstra's algorithm is used for global path planning and the teb_local_planner algorithm for local path planning. For visual recognition, the lightweight YOLOv3 algorithm is used for object detection. This configuration enables the robot to plan paths and navigate around obstacles autonomously while detecting objects accurately with machine vision, so that it can provide efficient service to users.
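
Dijkstra's algorithm, used here for global planning, expands cells in order of accumulated cost from the start until the goal is reached, so the first time the goal is removed from the priority queue its cost is minimal. The following is a minimal sketch on a 4-connected occupancy grid, assuming a binary grid (0 = free, 1 = occupied) and unit cost per move; the function name `dijkstra_grid` and the grid encoding are hypothetical illustrations, not the robot's actual planner, which in a ROS setup would typically search a costmap whose cells carry graded traversal costs.

```python
import heapq
from typing import Dict, List, Optional, Tuple

Cell = Tuple[int, int]  # (row, col) index into the occupancy grid


def dijkstra_grid(grid: List[List[int]], start: Cell, goal: Cell) -> Optional[List[Cell]]:
    """Return a shortest 4-connected path from start to goal, or None if unreachable.

    Hypothetical helper: grid cells are 0 (free) or 1 (occupied); every move costs 1.
    """
    rows, cols = len(grid), len(grid[0])
    dist: Dict[Cell, float] = {start: 0.0}
    parent: Dict[Cell, Cell] = {}
    frontier: List[Tuple[float, Cell]] = [(0.0, start)]

    while frontier:
        cost, cell = heapq.heappop(frontier)
        if cell == goal:
            break  # the first time the goal is popped, its cost is optimal
        if cost > dist[cell]:
            continue  # stale entry: a cheaper route to this cell was already found
        r, c = cell
        for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
            nxt = (r + dr, c + dc)
            if 0 <= nxt[0] < rows and 0 <= nxt[1] < cols and grid[nxt[0]][nxt[1]] == 0:
                new_cost = cost + 1.0  # uniform step cost (a costmap would vary this)
                if new_cost < dist.get(nxt, float("inf")):
                    dist[nxt] = new_cost
                    parent[nxt] = cell
                    heapq.heappush(frontier, (new_cost, nxt))

    if goal not in dist:
        return None
    # Walk the parent links back from the goal to recover the path.
    path = [goal]
    while path[-1] != start:
        path.append(parent[path[-1]])
    return path[::-1]


if __name__ == "__main__":
    office = [
        [0, 0, 0, 0],
        [1, 1, 1, 0],  # a wall with a single gap on the right
        [0, 0, 0, 0],
    ]
    print(dijkstra_grid(office, (0, 0), (2, 0)))
```

With uniform step costs, Dijkstra's algorithm behaves like breadth-first search; its advantage over BFS appears once each step is weighted by the traversal cost of the cell being entered, as in an inflated costmap around obstacles.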

2. Robot Design

2.1. Robot Chassis Design and Kinematics Analysis

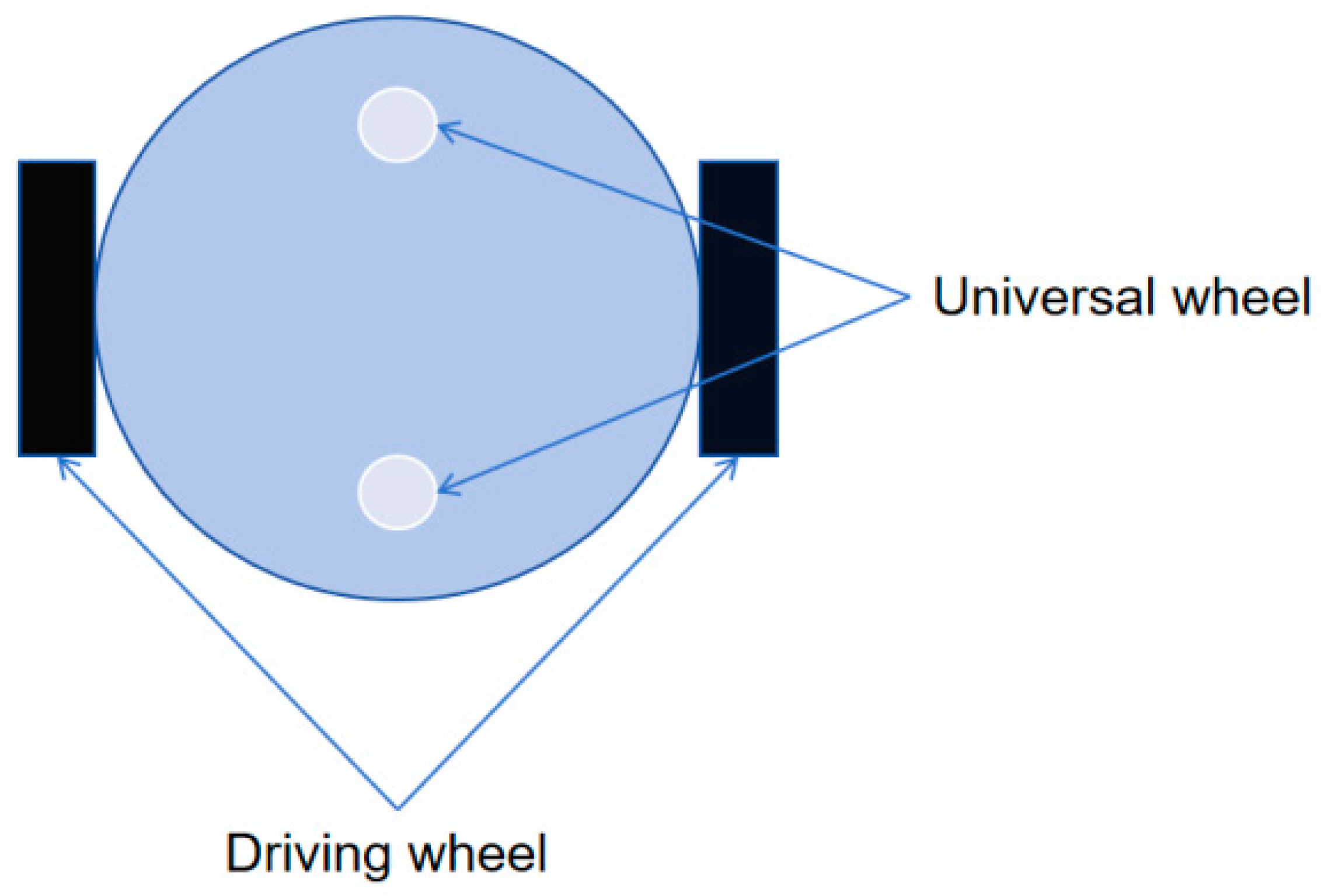

2.1.1. Differential Wheel Chassis Design

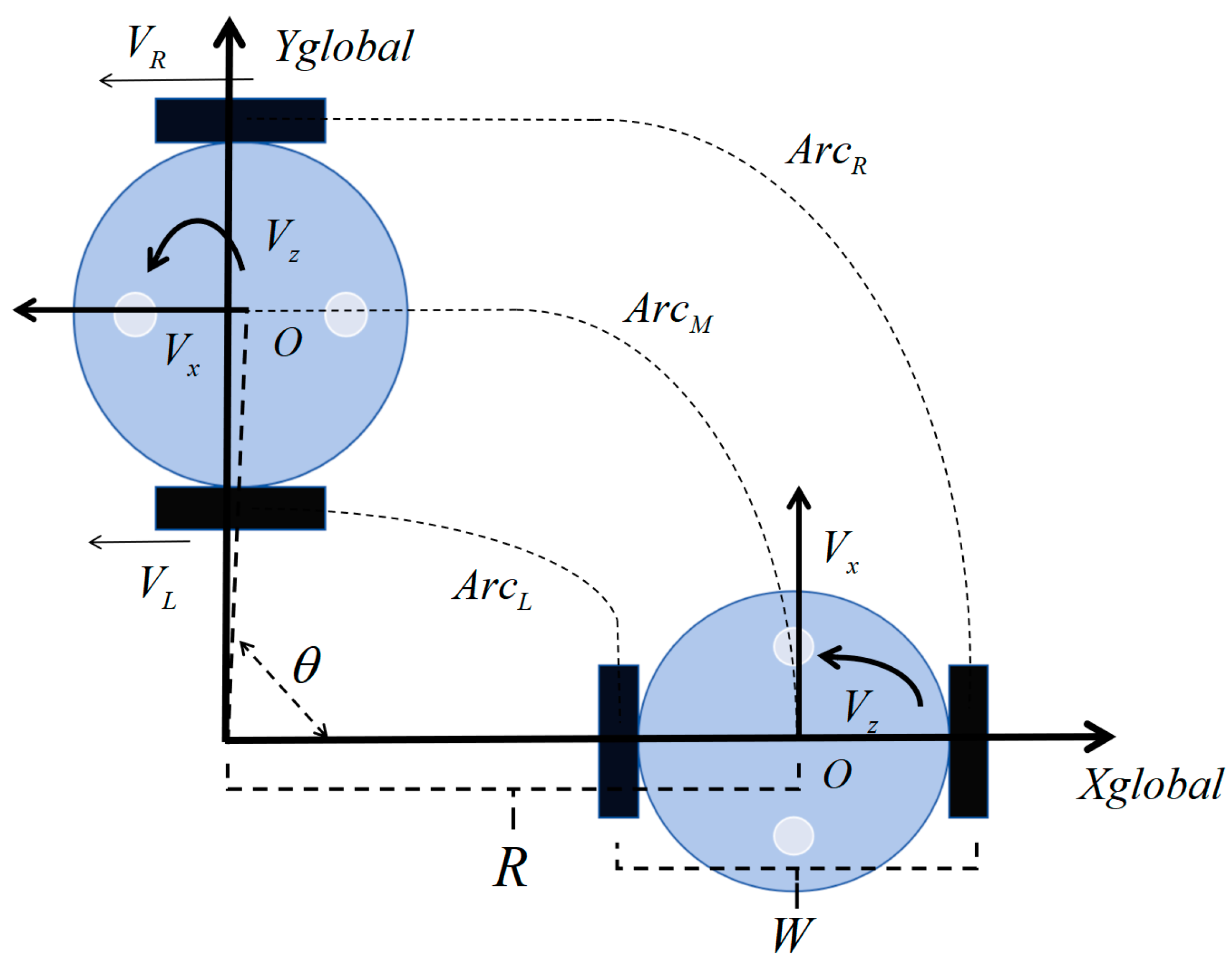

2.1.2. Kinematic Analysis
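
For a two-wheel differential-drive chassis, the standard kinematic model relates the chassis linear velocity $v$ and angular velocity $\omega$ to the left and right wheel rim speeds $v_L$, $v_R$ through the wheel track $L$: $v = (v_R + v_L)/2$ and $\omega = (v_R - v_L)/L$, so that $v_L = v - \omega L/2$ and $v_R = v + \omega L/2$. The sketch below assumes this textbook model and converts a chassis velocity command, of the kind a ROS navigation stack publishes, into wheel angular speeds and back. The function names, the track width, and the wheel radius in the example are hypothetical values, not the dimensions of this robot.

```python
from typing import Tuple


def inverse_kinematics(v: float, omega: float, track: float, wheel_radius: float) -> Tuple[float, float]:
    """Chassis (v [m/s], omega [rad/s]) -> wheel angular speeds (left, right) in rad/s.

    Positive omega is a counterclockwise (left) turn, so the right wheel runs faster.
    """
    v_left = v - omega * track / 2.0   # rim speed of the left wheel, m/s
    v_right = v + omega * track / 2.0  # rim speed of the right wheel, m/s
    return v_left / wheel_radius, v_right / wheel_radius


def forward_kinematics(w_left: float, w_right: float, track: float, wheel_radius: float) -> Tuple[float, float]:
    """Wheel angular speeds (rad/s) -> chassis (v [m/s], omega [rad/s])."""
    v_left, v_right = w_left * wheel_radius, w_right * wheel_radius
    return (v_right + v_left) / 2.0, (v_right - v_left) / track


if __name__ == "__main__":
    TRACK = 0.30         # hypothetical distance between the wheel centers, m
    WHEEL_RADIUS = 0.05  # hypothetical wheel radius, m
    w_l, w_r = inverse_kinematics(0.2, 0.5, TRACK, WHEEL_RADIUS)
    print(f"left {w_l:.2f} rad/s, right {w_r:.2f} rad/s")
    print(forward_kinematics(w_l, w_r, TRACK, WHEEL_RADIUS))  # recovers (v, omega)
```

The inverse mapping is what a lower-level motor controller would need to turn a velocity command into wheel set points; the forward mapping is the basis of wheel-odometry estimation.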

2.2. Control System Design

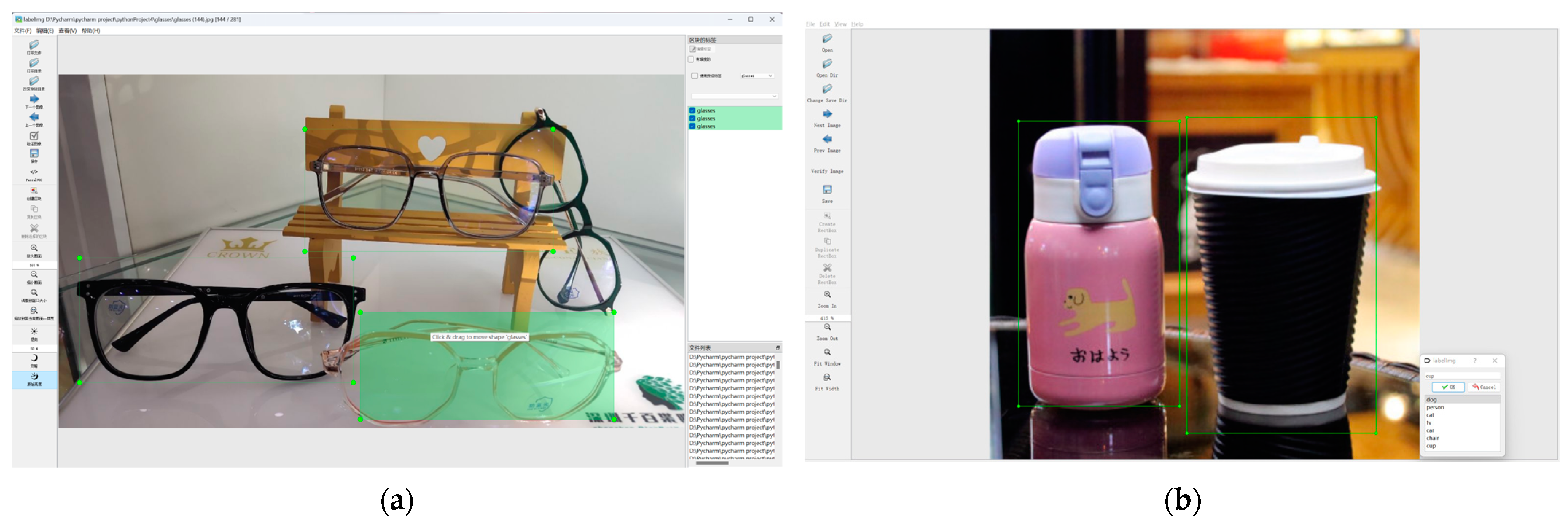

2.3. Robot Vision Part Design

- The Adam optimizer achieves a higher mAP than SGD (86.10% vs. 79.00%), indicating that Adam is better suited to this object detection task.

- Precision, recall, and F1 score were also considered to assess model performance comprehensively, helping to balance accuracy against completeness of detection.

- After parameter tuning and comparative experiments, Adam was confirmed to perform better for object detection, achieving a higher AP than SGD in every one of the five object categories.

- The experimental results support the choice of object detection model, show how strongly the optimization algorithm affects performance, and offer guidance for practical applications.
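
The metrics above can be made concrete with a short sketch. Precision is $TP/(TP+FP)$, recall is $TP/(TP+FN)$, and F1 is their harmonic mean; the AP of one class is the area under its precision-recall curve, and mAP averages AP over the classes. The code assumes detections have already been matched to ground-truth boxes (for example at an IoU threshold of 0.5) and uses all-point interpolation, as in the PASCAL VOC protocol from 2010 onward. The paper's exact evaluation protocol is not given here, so this is an illustrative sketch rather than the authors' evaluation script, and the function names are hypothetical.

```python
from typing import List, Tuple


def precision_recall_f1(tp: int, fp: int, fn: int) -> Tuple[float, float, float]:
    """Precision, recall and F1 from true-positive, false-positive and false-negative counts."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1


def average_precision(is_tp: List[bool], num_gt: int) -> float:
    """All-point interpolated AP for one class.

    is_tp: detections sorted by descending confidence; True if the detection was
    matched to a not-yet-matched ground-truth box (e.g. IoU >= 0.5), else False.
    num_gt: number of ground-truth objects of this class.
    """
    if num_gt == 0:
        return 0.0
    precisions: List[float] = []
    recalls: List[float] = []
    tp = fp = 0
    for hit in is_tp:
        if hit:
            tp += 1
        else:
            fp += 1
        precisions.append(tp / (tp + fp))
        recalls.append(tp / num_gt)
    # Interpolate: each precision becomes the best precision at the same or higher recall.
    for k in range(len(precisions) - 2, -1, -1):
        precisions[k] = max(precisions[k], precisions[k + 1])
    # Sum rectangle areas wherever recall increases (false positives add zero width).
    ap, prev_recall = 0.0, 0.0
    for p, r in zip(precisions, recalls):
        ap += (r - prev_recall) * p
        prev_recall = r
    return ap


if __name__ == "__main__":
    print(precision_recall_f1(tp=3, fp=1, fn=1))
    print(average_precision([True, True, False, True], num_gt=4))
```

Because F1 is a harmonic mean, it stays low unless precision and recall are both high, which is why it complements mAP when comparing the two optimizers.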

3. Experimental Design and Results

3.1. Experimental Design

3.2. SLAM Simulation Experiment

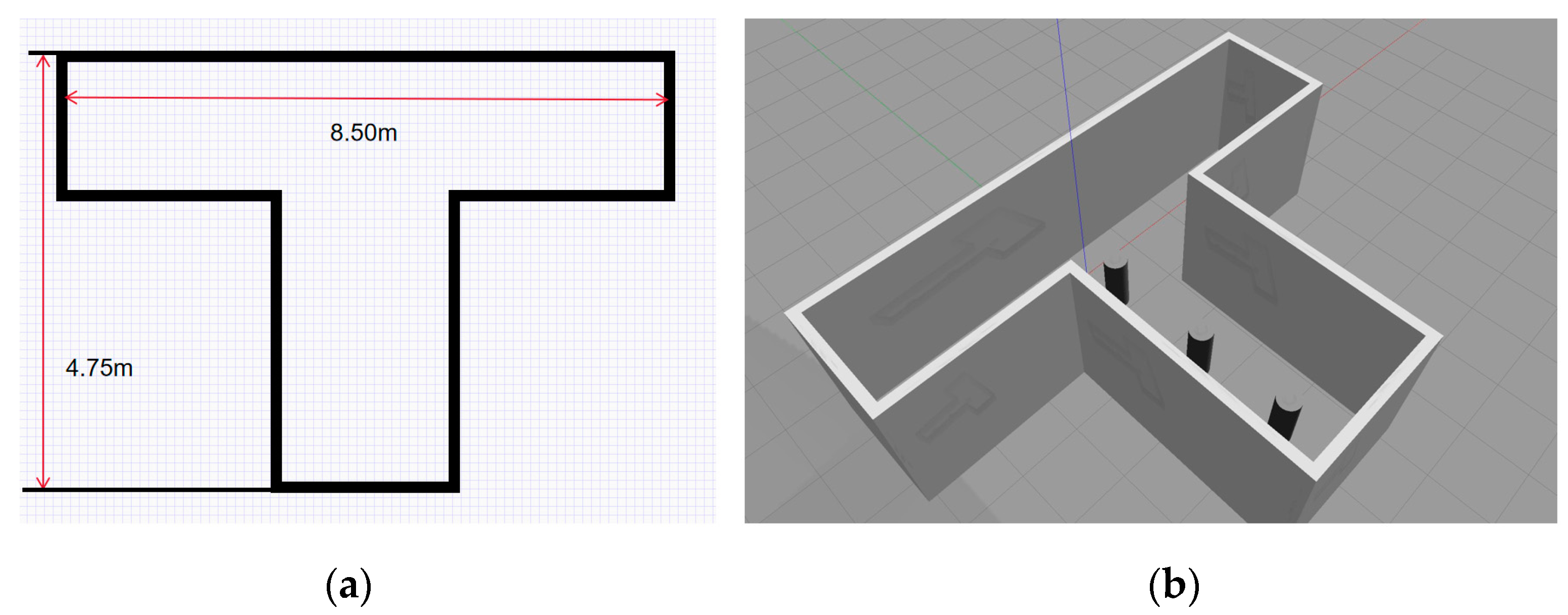

3.2.1. Virtual Simulation Environment

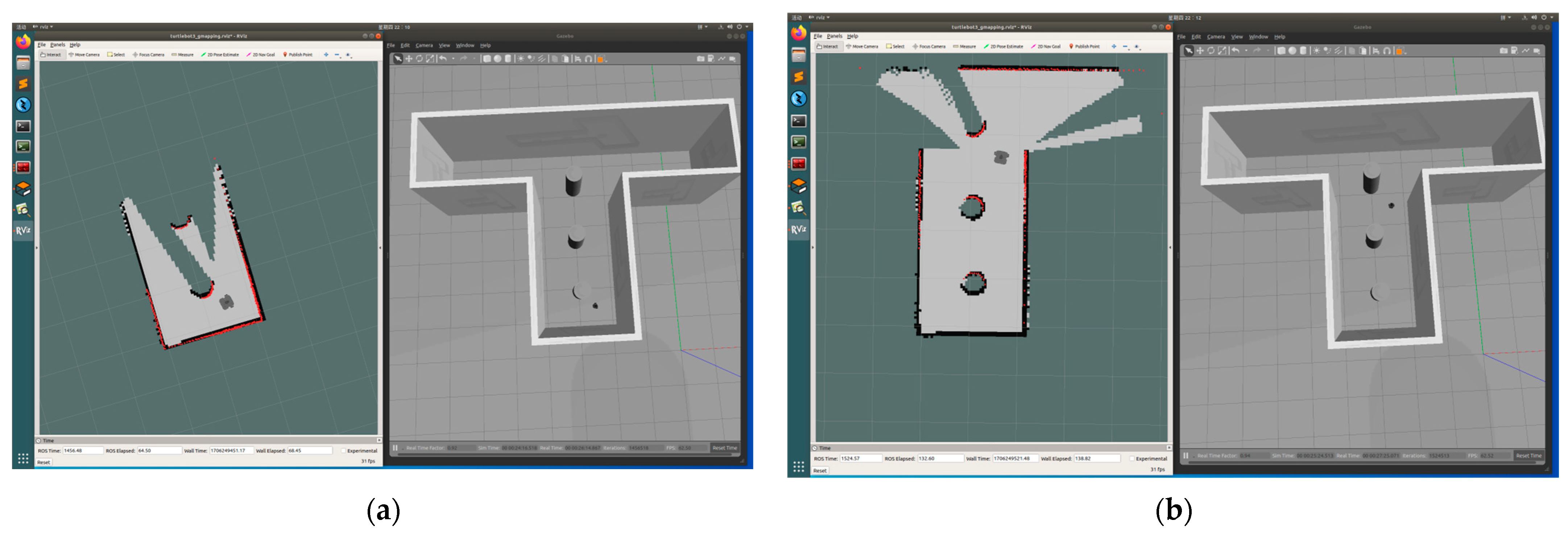

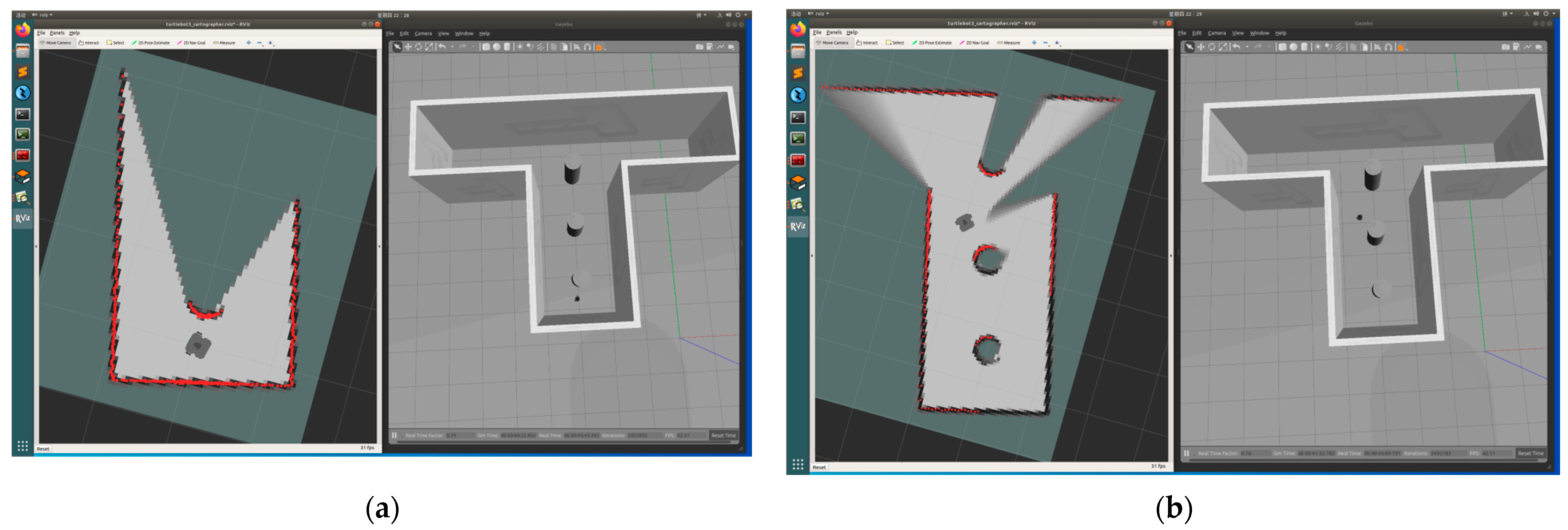

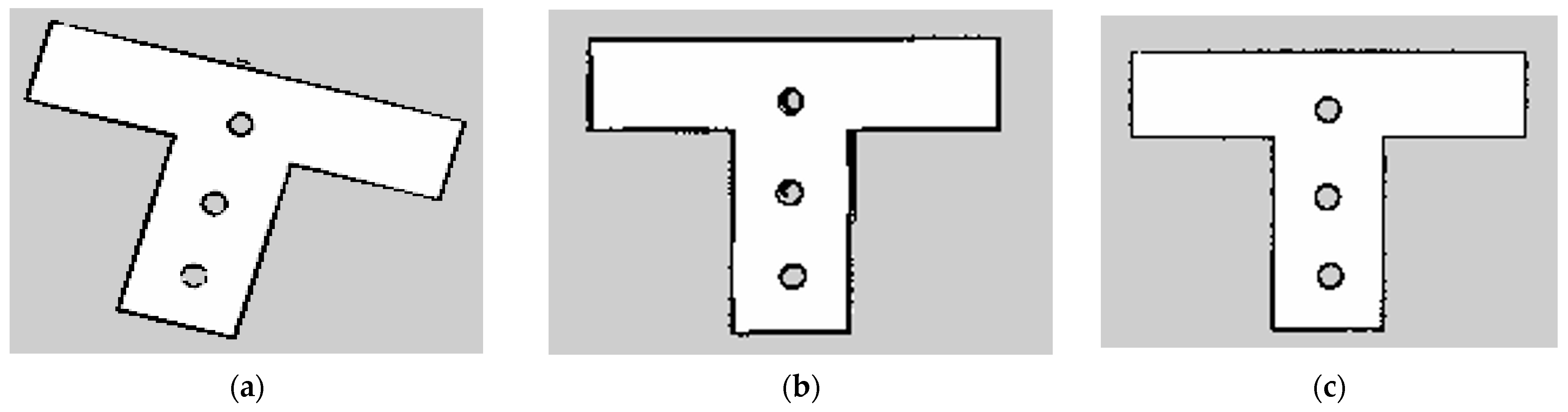

3.2.2. GMapping

- Sampling: According to the motion model, each particle $x_{t-1}^{(i)}$ of the previous generation is propagated with the most recent motion (odometry) data, yielding a new particle $x_t^{(i)}$ that predicts the robot's current pose.

- Weight calculation: Within the particle filter, each new particle is assigned an importance weight computed from the sensor measurements and the motion model. Particles whose predicted poses agree better with the observations receive higher weights, so the weighted set gives a more precise representation of the robot's actual position in its environment.

- Resampling: Particles with higher weights are retained, whereas those with lower weights are eliminated. This concentrates the particle distribution and improves the efficiency of the filter.

- Map estimation: With Rao-Blackwellization, the joint posterior over the robot trajectory and the map is factored so that each particle maintains its own map, estimated from that particle's trajectory and the observations. The conditional probability of the observation data given this map measures how well the data agree with the map and is used to update the particle's weight.

- Improvements based on RBPF: GMapping improves the proposal distribution and introduces selective resampling. These enhancements improve the algorithm's performance in practical applications, enabling the robot to build and update environment maps more reliably and making the mapping more robust.

- Selective resampling: Because resampling at every step can discard good particles (particle depletion), resampling is performed only when the effective sample size $N_{\mathrm{eff}} = 1/\sum_{i}(\tilde{w}^{(i)})^{2}$, computed from the normalized weights $\tilde{w}^{(i)}$, falls below a threshold, typically $N/2$ for $N$ particles. This keeps the particle set diverse while it continues to reflect both the robot's state and the map information.

- Improved proposal distribution: Instead of drawing new particles from the odometry motion model alone, the proposal distribution also incorporates the most recent laser observation, so that new particles are concentrated closer to the true state and fewer particles are needed to represent it accurately.
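
The sampling, weighting, and selective-resampling loop described above can be illustrated with a deliberately simplified particle filter. This is not GMapping: to stay short, the sketch tracks only a 2D position (no heading), replaces each particle's occupancy-grid map with a single known landmark observed by a range sensor, and samples from the plain odometry motion model rather than GMapping's observation-based improved proposal. All helper names and noise values are hypothetical; only the rule "resample when $N_{\mathrm{eff}} < N/2$" mirrors the selective-resampling idea.

```python
import math
import random
from typing import List, Tuple

Pose = Tuple[float, float]  # (x, y) position; heading omitted for brevity


def sample_motion(pose: Pose, u: Pose, noise: float, rng: random.Random) -> Pose:
    """Sampling: propagate a particle by an odometry increment plus Gaussian noise."""
    return (pose[0] + u[0] + rng.gauss(0.0, noise), pose[1] + u[1] + rng.gauss(0.0, noise))


def likelihood(pose: Pose, z: float, landmark: Pose, sigma: float) -> float:
    """Weighting: Gaussian likelihood of a range reading z to a known landmark."""
    error = z - math.dist(pose, landmark)
    return math.exp(-0.5 * (error / sigma) ** 2)


def effective_sample_size(weights: List[float]) -> float:
    """N_eff = 1 / sum(w_i^2) for normalized weights."""
    return 1.0 / sum(w * w for w in weights)


def low_variance_resample(particles: List[Pose], weights: List[float], rng: random.Random) -> List[Pose]:
    """Resampling: draw N particles with probability proportional to their weights."""
    n = len(particles)
    step = 1.0 / n
    pointer = rng.uniform(0.0, step)
    cumulative, i, chosen = weights[0], 0, []
    for _ in range(n):
        while pointer >= cumulative and i < n - 1:  # skip past low-weight particles
            i += 1
            cumulative += weights[i]
        chosen.append(particles[i])
        pointer += step
    return chosen


def filter_step(particles: List[Pose], weights: List[float], u: Pose, z: float,
                landmark: Pose, rng: random.Random,
                motion_noise: float = 0.05, meas_sigma: float = 0.1):
    """One predict/update cycle with selective resampling."""
    particles = [sample_motion(p, u, motion_noise, rng) for p in particles]
    weights = [w * likelihood(p, z, landmark, meas_sigma) for p, w in zip(particles, weights)]
    total, n = sum(weights), len(particles)
    weights = [w / total for w in weights] if total > 0 else [1.0 / n] * n
    if effective_sample_size(weights) < n / 2:  # resample only when weights degenerate
        particles = low_variance_resample(particles, weights, rng)
        weights = [1.0 / n] * n
    return particles, weights


if __name__ == "__main__":
    rng = random.Random(0)
    landmark = (5.0, 0.0)
    particles = [(0.0, 0.0)] * 200
    weights = [1.0 / 200] * 200
    for k in range(1, 4):  # the true robot moves +1 m in x per step
        z = math.dist((float(k), 0.0), landmark)  # noise-free reading for the demo
        particles, weights = filter_step(particles, weights, (1.0, 0.0), z, landmark, rng)
    x_est = sum(w * p[0] for p, w in zip(particles, weights))
    print(f"estimated x = {x_est:.2f} (true x = 3.00)")
```

Between resampling events the weights are multiplied over time rather than reset, which is what lets the filter skip unnecessary resampling without losing information.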

3.2.3. Hector-SLAM

3.2.4. Cartographer

3.2.5. Results of SLAM Simulation Mapping Experiment

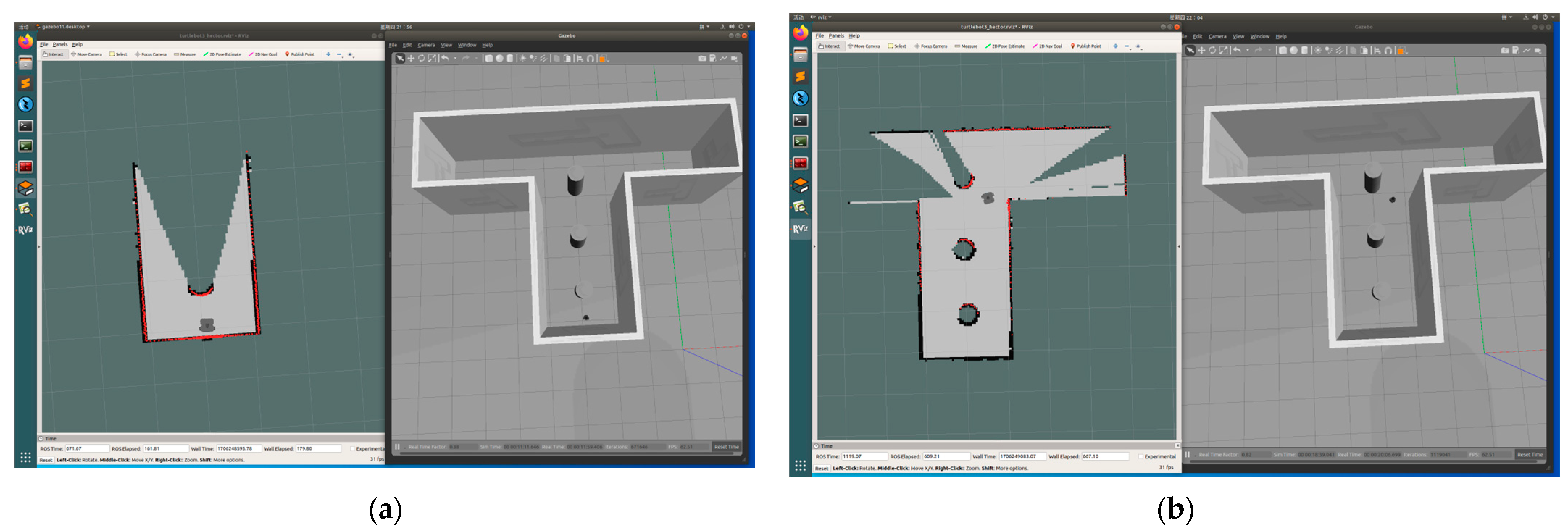

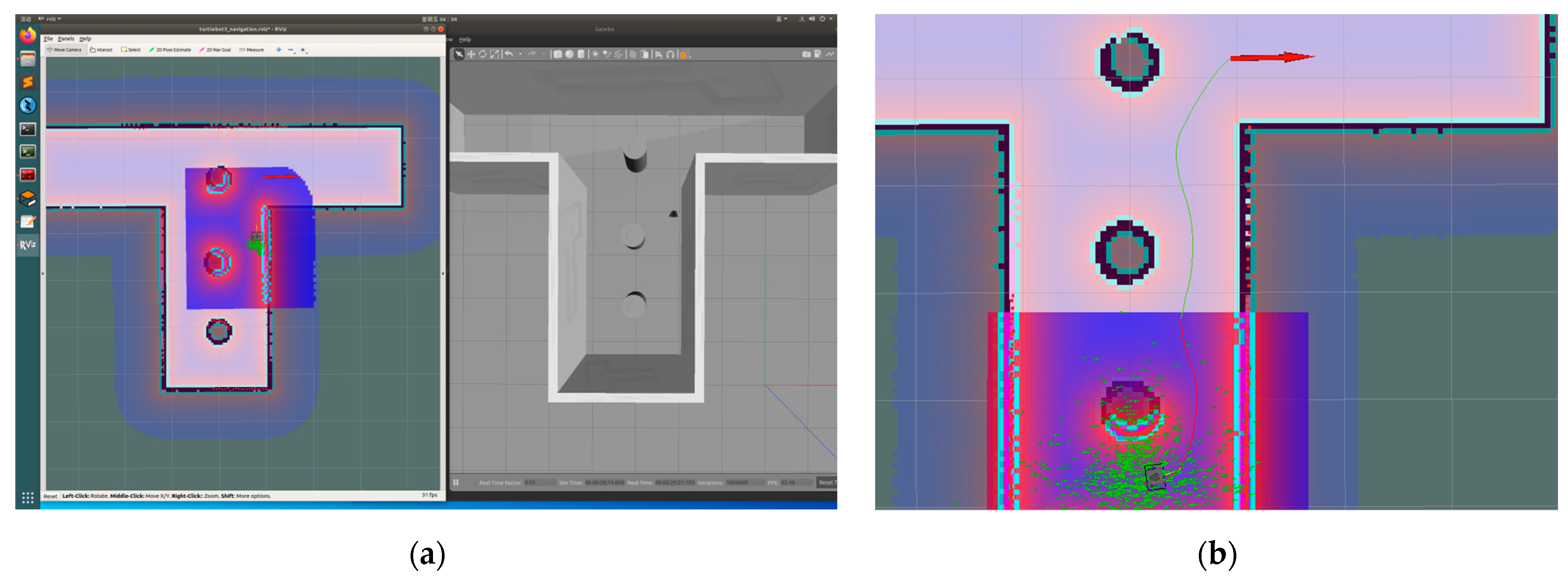

3.3. Path Planning and Navigation Simulation Experiments

3.3.1. Global Path Planning Using Dijkstra’s Pathfinding Algorithm

3.3.2. Local Path Planning teb_local_planner

3.3.3. Path Planning Simulation Experiment

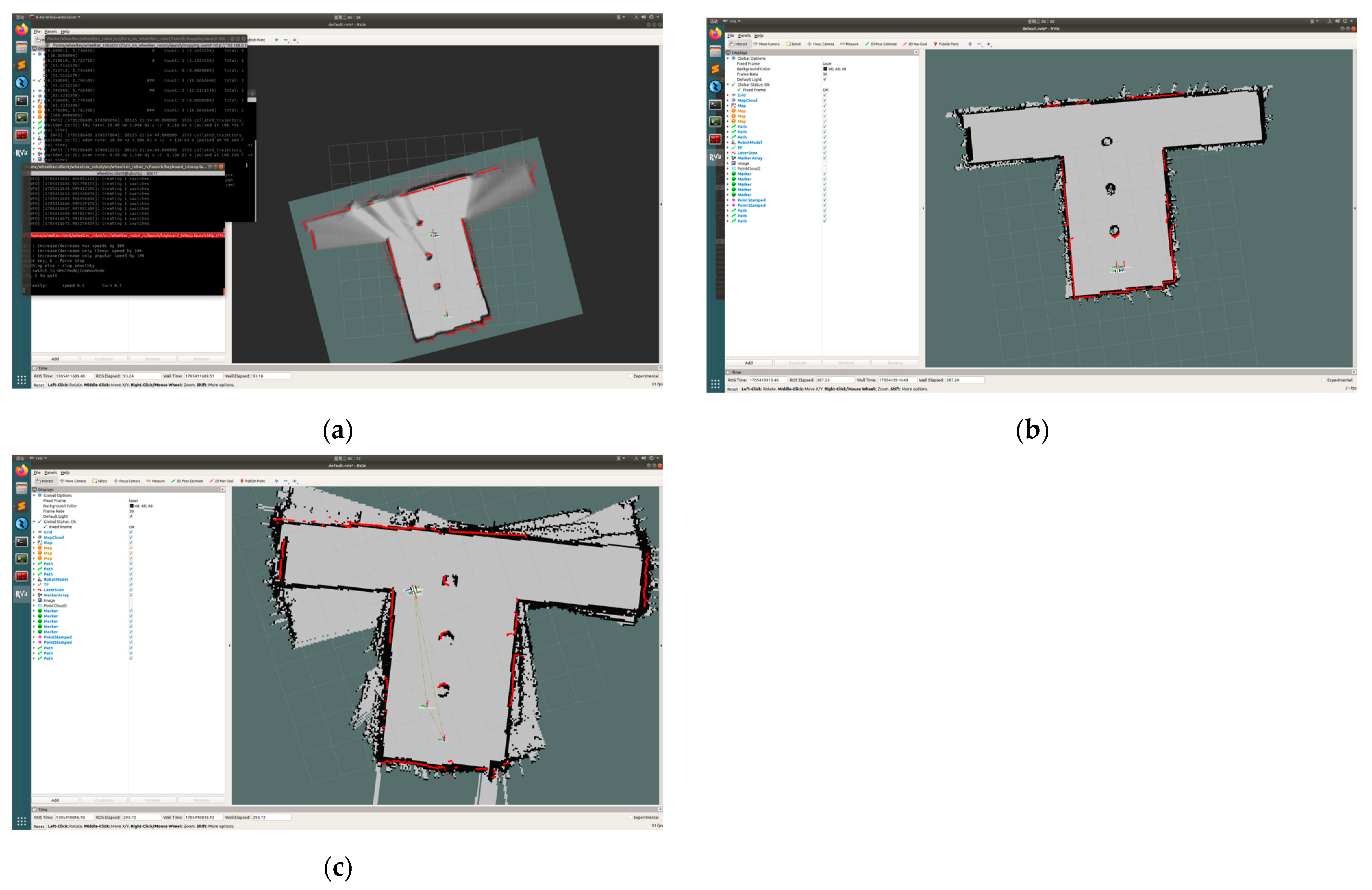

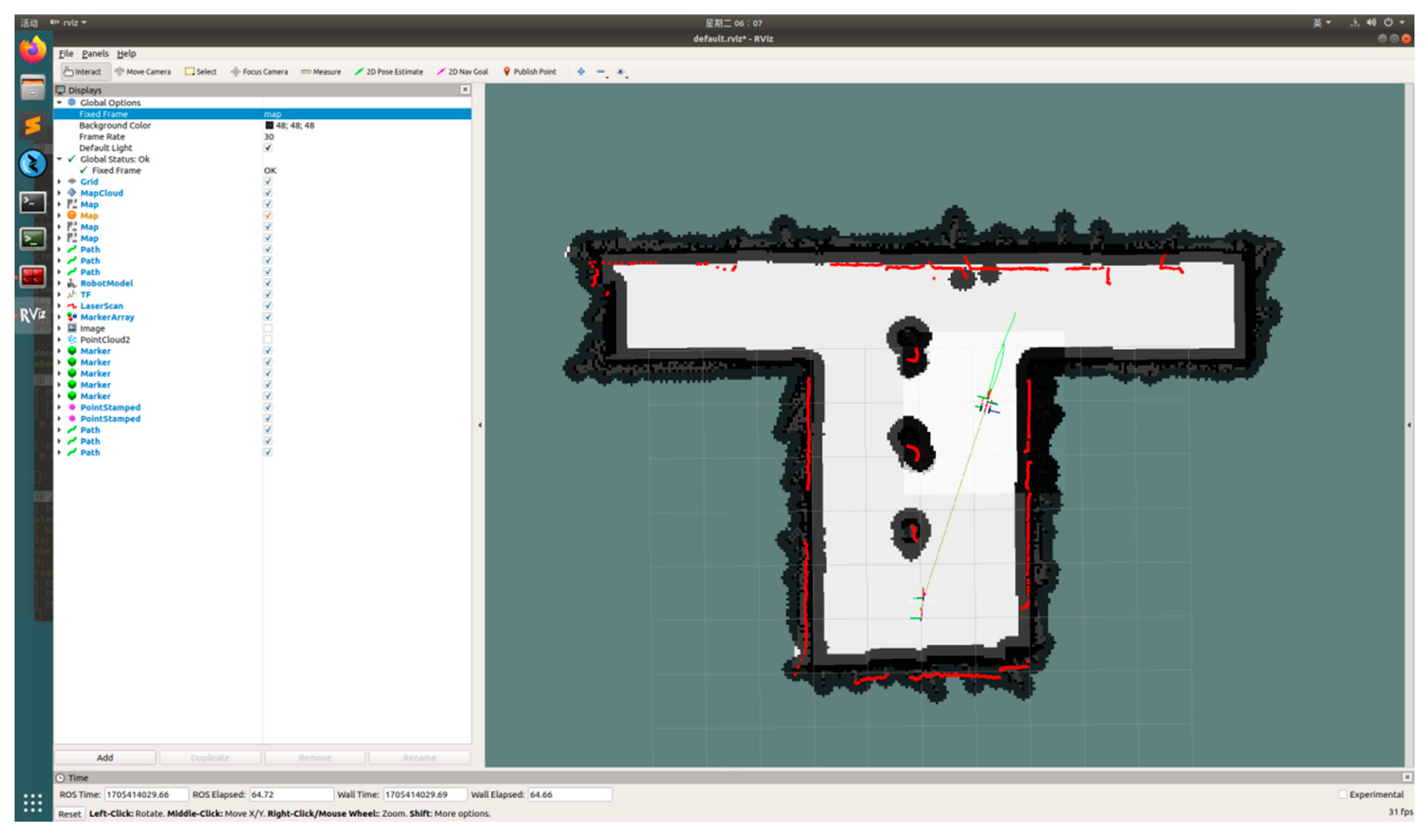

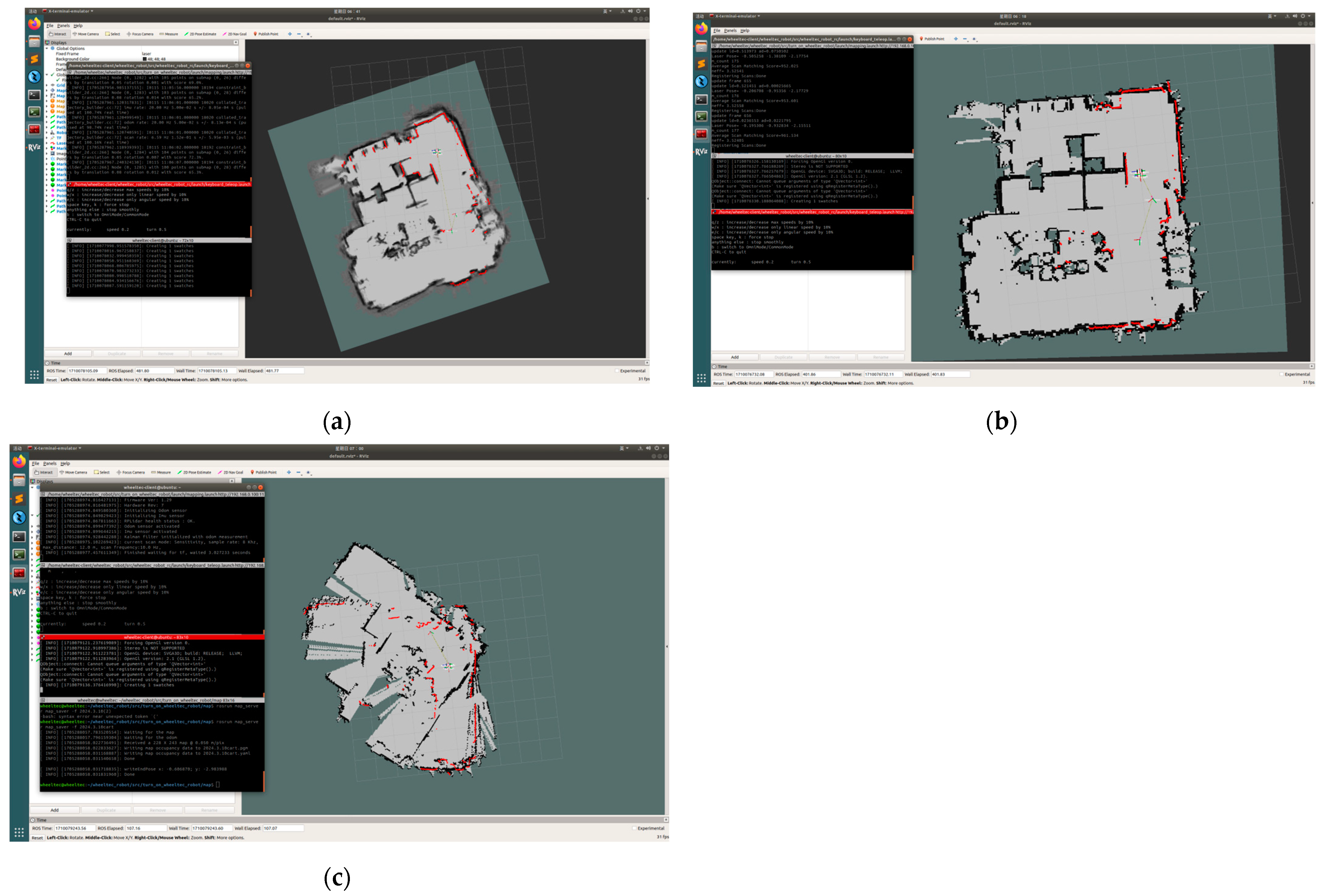

3.4. SLAM Live Field Experiment

3.4.1. SLAM Actual Site Construction Process

3.4.2. SLAM Actual Site Construction Results

3.4.3. Practical Path Planning and Navigation Experiments

3.4.4. Real Scene Target Detection

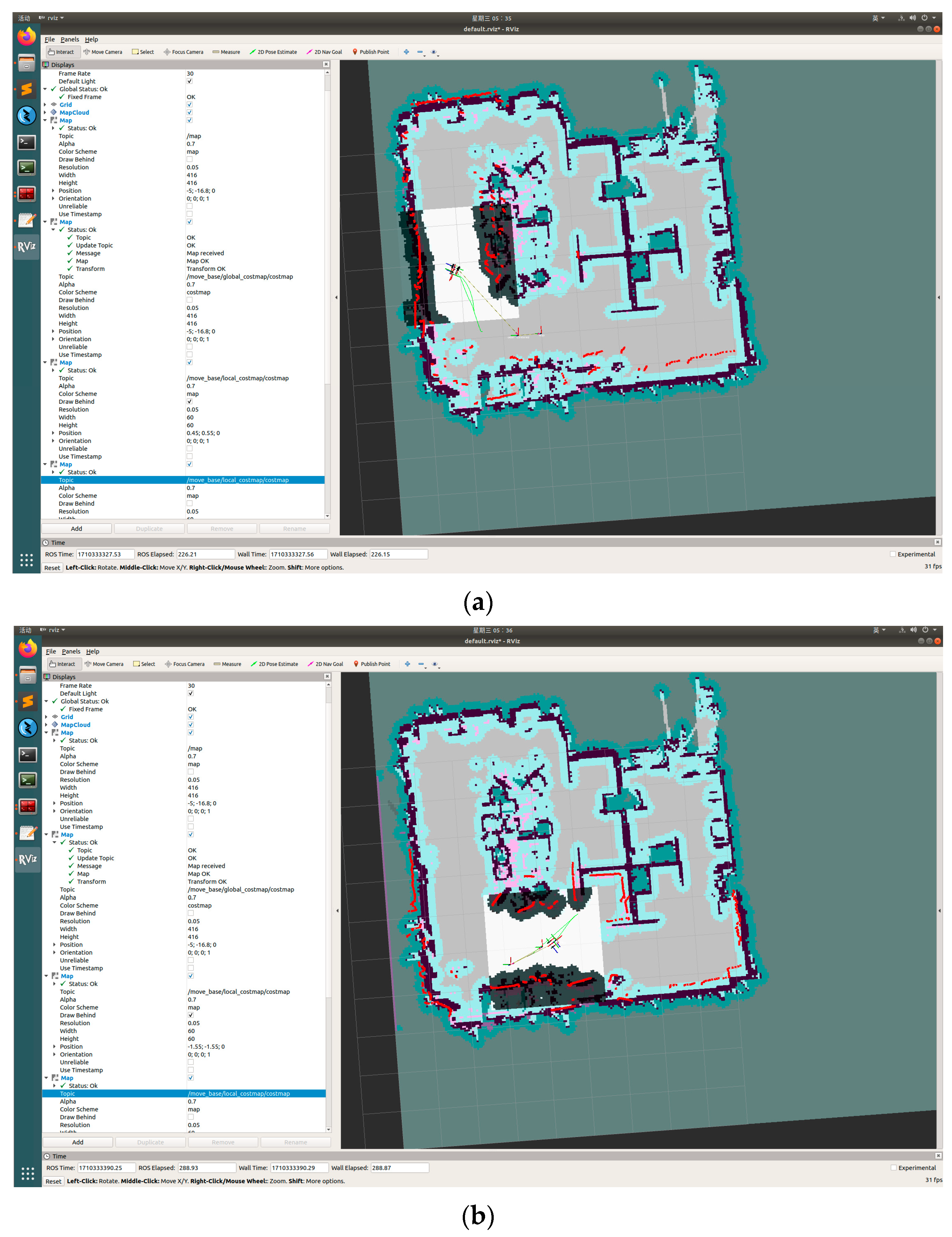

3.5. Office Real Site Experiment

3.5.1. SLAM Office Site Construction Process

3.5.2. SLAM Office Site Construction Results

3.5.3. Experiment on Route Planning and Navigation in Office Environment

3.5.4. Office Site Target Detection

3.6. Analysis and Discussion of Experimental Results

3.6.1. Analysis of SLAM and Navigation Experiment Results

3.6.2. Analysis of Experimental Results of Target Detection

3.6.3. Discussion

- The applicability and performance of SLAM algorithms vary: In the simulation environment, the Hector-SLAM algorithm excels, but in real-world settings its performance suffers from the limited accuracy of the LiDAR and from measurement noise, to which it is particularly sensitive because it relies on LiDAR scan matching alone, without odometry. In contrast, GMapping is more stable and reliable in real-world environments and achieves higher localization accuracy. This difference may stem from the gap between the simulation environment and real-world conditions, as well as from the algorithms' differing sensitivity to sensor accuracy and environmental noise.

- Stability and practicality of SLAM algorithms: The stability and practicality of a SLAM algorithm are essential to the integrity and accuracy of the map, which in turn determines the precision of navigation and localization; significant errors during map construction can cause localization failures and inaccurate navigation. When selecting a SLAM algorithm, it is therefore important to consider its autonomy, its reliance on sensor data, and its robustness in handling noise and uncertainty while maintaining accuracy over time. Performance should also be evaluated under varied environmental conditions: some algorithms excel in structured indoor environments but perform poorly outdoors, where lighting changes dynamically and the terrain varies. In short, stability, autonomy, robustness to sensor data, and adaptability to different environments should be prioritized so that the robot can build maps, navigate, and localize accurately and reliably.

- Performance and robustness of object-detection algorithms: The YOLOv3 model performs well in the experiments, with fast processing and high recognition accuracy. Although it may struggle with objects whose appearance varies significantly, the model still shows good robustness and generalization.

- Directions for future work: SLAM algorithms can be further optimized to improve their applicability and stability in complex environments, for example by fusing additional sensor data or refining their optimization strategies. For object detection, continued refinement of the dataset can raise recognition accuracy, especially for objects with significant appearance variations. In practical applications, integrating different algorithms and technologies could yield more accurate and reliable indoor service robot systems.

4. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

1. Niloy, M.A.K.; Shama, A.; Chakrabortty, R.K.; Ryan, M.J.; Badal, F.R.; Tasneem, Z.; Ahamed, H.; Moyeen, S.I.; Das, S.K.; Ali, F.; et al. Critical design and control issues of indoor autonomous mobile robots: A review. IEEE Access 2021, 9, 35338–35370.

2. Fragapane, G.; De Koster, R.; Sgarbossa, F.; Strandhagen, J.O. Planning and control of autonomous mobile robots for intralogistics: Literature review and research agenda. Eur. J. Oper. Res. 2021, 294, 405–426.

3. Cheong, A.; Lau, M.W.S.; Foo, E.; Hedley, J.; Bo, J.W. Development of a robotic waiter system. IFAC-PapersOnLine 2016, 49, 681–686.

4. Guo, P.; Shi, H.; Wang, S.; Tang, L.; Wang, Z. An ROS Architecture for Autonomous Mobile Robots with UCAR Platforms in Smart Restaurants. Machines 2022, 10, 844.

5. Fang, G.; Cook, B. A Service Baxter Robot in an Office Environment. In Robotics and Mechatronics, Proceedings of the Fifth IFToMM International Symposium on Robotics & Mechatronics (ISRM 2017), Taipei, Taiwan, 28–30 October 2019; Springer International Publishing: Cham, Switzerland, 2019; pp. 47–56.

6. Zhang, X.; Li, J. Research and Innovation in Predictive Remote Control Technology for Mobile Service Robots. Adv. Comput. Signals Syst. 2023, 7, 1–6.

7. Kumar, S.J. Application and use of telepresence robots in libraries and information center services: Prospect and challenges. Libr. Hi Tech News 2023, 40, 9–13.

8. Ye, Y.; Ma, X.; Zhou, X.; Bao, G.; Wan, W.; Cai, S. Dynamic and Real-Time Object Detection Based on Deep Learning for Home Service Robots. Sensors 2023, 23, 9482.

9. Kolhatkar, C.; Wagle, K. Review of SLAM algorithms for indoor mobile robot with LIDAR and RGB-D camera technology. Innov. Electr. Electron. Eng. Proc. ICEEE 2020, 2021, 397–409.

10. Zhou, Y.; Shi, F.; Chen, J. Design and application of pocket experiment system based on STM32F4. In Proceedings of the 2020 8th International Conference on Information Technology: IoT and Smart City, Xi’an, China, 25–27 December 2020; pp. 40–45.

11. Moshayedi, A.J.; Roy, A.S.; Liao, L.; Khan, A.S.; Kolahdooz, A.; Eftekhari, A. Design and Development of Foodiebot Robot: From Simulation to Design. IEEE Access 2024, 12, 36148–36172.

12. Pebrianto, W.; Mudjirahardjo, P.; Pramono, S.H.; Setyawan, R.A. YOLOv3 with Spatial Pyramid Pooling for Object Detection with Unmanned Aerial Vehicles. arXiv 2023, arXiv:2305.12344.

13. Al-Owais, A.; Sharif, M.E.; Ghali, S.; Abu Serdaneh, M.; Belal, O.; Fernini, I. Meteor detection and localization using YOLOv3 and YOLOv4. Neural Comput. Appl. 2023, 35, 15709–15720.

14. Li, H.; Liu, L.; Du, J.; Jiang, F.; Guo, F.; Hu, Q.; Fan, L. An improved YOLOv3 for foreign objects detection of transmission lines. IEEE Access 2022, 10, 45620–45628.

15. Lawal, M.O. Tomato detection based on modified YOLOv3 framework. Sci. Rep. 2021, 11, 1447.

16. Kim, K.; Kim, J.; Lee, H.-G.; Choi, J.; Fan, J.; Joung, J. UAV Chasing Based on YOLOv3 and Object Tracker for Counter UAV Systems. IEEE Access 2023, 11, 34659–34673.

17. Wang, H.; Zhang, F.; Liu, X.; Li, Q. Fruit image recognition based on DarkNet-53 and YOLOv3. J. Northeast Norm. Univ. (Nat. Sci. Ed.) 2020, 52, 60–65.

18. Tian, C.; Liu, H.; Liu, Z.; Li, H.; Wang, Y. Research on multi-sensor fusion SLAM algorithm based on improved gmapping. IEEE Access 2023, 11, 13690–13703.

19. Zhang, L.; Wei, L.; Shen, P.; Wei, W.; Zhu, G.; Song, J. Semantic SLAM based on object detection and improved octomap. IEEE Access 2018, 6, 75545–75559.

20. Zhang, Y.; Li, R.; Wang, F.; Zhao, W.; Chen, Q.; Zhi, D.; Chen, X.; Jiang, S. An autonomous navigation strategy based on improved hector slam with dynamic weighted a* algorithm. IEEE Access 2023, 11, 79553–79571.

21. Xu, J.; Wang, D.; Liao, M.; Shen, W. Research of cartographer graph optimization algorithm based on indoor mobile robot. J. Phys. Conf. Ser. 2020, 1651, 012120.

22. Zhang, X.; Lai, J.; Xu, D.; Li, H.; Fu, M. 2d lidar-based slam and path planning for indoor rescue using mobile robots. J. Adv. Transp. 2020, 2020, 8867937.

23. Hess, W.; Kohler, D.; Rapp, H.; Andor, D. Real-time loop closure in 2D LIDAR SLAM. In Proceedings of the 2016 IEEE International Conference on Robotics and Automation (ICRA), IEEE, Stockholm, Sweden, 16–21 May 2016; Volume 20, pp. 1271–1278.

24. Mi, Z.; Xiao, H.; Huang, C. Path planning of indoor mobile robot based on improved A* algorithm incorporating RRT and JPS. AIP Adv. 2023, 13, 045313.

25. Rösmann, C.; Feiten, W.; Wösch, T.; Hoffmann, F.; Bertram, T. Trajectory modification considering dynamic constraints of autonomous robots. In Proceedings of the ROBOTIK 2012; 7th German Conference on Robotics, Munich, Germany, 21–22 May 2012; VDE: Offenbach, Germany, 2012; pp. 1–6. ISBN 978-3-8007-3418-4.

26. Macenski, S.; Martín, F.; White, R.; Clavero, J.G. The marathon 2: A navigation system. In Proceedings of the 2020 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), IEEE, Las Vegas, NV, USA, 24 October 2020–24 January 2021; pp. 2718–2725.

27. Guo, J. Research and Optimization of Local Path Planning for Navigation Robots Based on ROS Platform. Mod. Inf. Technol. 2022, 6, 144–148. (In Chinese)

28. Zhang, B.; Li, S.; Qiu, J.; You, G.; Qu, L. Application and Research on Improved Adaptive Monte Carlo Localization Algorithm for Automatic Guided Vehicle Fusion with QR Code Navigation. Appl. Sci. 2023, 13, 11913.

29. Yong, T.; Shan, J.; Fan, R.; Tianmiao, W.; He, G. An improved Gmapping algorithm based map construction method for indoor mobile robot. High Technol. Lett. 2021, 27, 227–237.

30. Qu, P.; Su, C.; Wu, H.; Xu, X.; Gao, S.; Zhao, X. Mapping performance comparison of 2D SLAM algorithms based on different sensor combinations. J. Phys. Conf. Ser. 2021, 2024, 012056.

| Backbone | Top-1 (%) | Top-5 (%) | Bn Ops | BFLOP/s | FPS |
|---|---|---|---|---|---|
| DarkNet-19 | 74.1 | 91.8 | 7.29 | 1246 | 171 |
| ResNet-101 | 77.1 | 93.7 | 19.7 | 1039 | 53 |
| ResNet-152 | 77.6 | 93.8 | 29.4 | 1090 | 37 |
| DarkNet-53 | 77.2 | 93.8 | 18.7 | 1457 | 78 |
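
The BFLOP/s column appears to be the achieved throughput, that is, the operations per image (Bn Ops, in billions) multiplied by the frame rate (FPS). The quick check below reproduces each reported value to within about 0.5%; this reading is an inference from the numbers, not something the table states, and the names `BACKBONES` and `implied_throughput` are hypothetical.

```python
# (Bn Ops, reported BFLOP/s, FPS) per backbone, copied from the table above
BACKBONES = {
    "DarkNet-19": (7.29, 1246, 171),
    "ResNet-101": (19.7, 1039, 53),
    "ResNet-152": (29.4, 1090, 37),
    "DarkNet-53": (18.7, 1457, 78),
}


def implied_throughput(bn_ops: float, fps: float) -> float:
    """Billions of operations per image times images per second = BFLOP/s."""
    return bn_ops * fps


if __name__ == "__main__":
    for name, (bn_ops, reported, fps) in BACKBONES.items():
        implied = implied_throughput(bn_ops, fps)
        deviation = 100 * (implied - reported) / reported
        print(f"{name}: implied {implied:.0f} vs reported {reported} BFLOP/s ({deviation:+.2f}%)")
```

Read this way, DarkNet-53 makes the best use of the hardware (highest BFLOP/s) and matches ResNet-152's Top-5 accuracy at roughly twice the frame rate (78 vs. 37 FPS).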

| Optimizer | Category | AP (%) | mAP (%) |
|---|---|---|---|
| SGD | Chair | 94.03 | 79.00 |
| | Cup | 82.29 | |
| | Flash drive | 89.93 | |
| | Glasses | 86.25 | |
| | Pen | 42.53 | |
| Adam | Chair | 98.02 | 86.10 |
| | Cup | 88.72 | |
| | Flash drive | 93.96 | |
| | Glasses | 95.91 | |
| | Pen | 53.88 | |
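
The mAP column is consistent with the unweighted mean of the five per-class AP values. Recomputing it from the table gives 86.10% for Adam and about 79.01% for SGD, against the reported 79.00%; the 0.01-point gap is plausibly a rounding effect. The recomputation also shows that Pen is the weakest category for both optimizers. The names `AP` and `mean_average_precision` are hypothetical.

```python
from statistics import mean
from typing import Dict

# Per-class AP (%) copied from the table above
AP: Dict[str, Dict[str, float]] = {
    "SGD": {"Chair": 94.03, "Cup": 82.29, "Flash drive": 89.93, "Glasses": 86.25, "Pen": 42.53},
    "Adam": {"Chair": 98.02, "Cup": 88.72, "Flash drive": 93.96, "Glasses": 95.91, "Pen": 53.88},
}


def mean_average_precision(per_class_ap: Dict[str, float]) -> float:
    """mAP = unweighted mean of the per-class AP values."""
    return mean(per_class_ap.values())


if __name__ == "__main__":
    for optimizer, per_class in AP.items():
        weakest = min(per_class, key=per_class.get)
        print(f"{optimizer}: mAP = {mean_average_precision(per_class):.2f}%, weakest class = {weakest}")
```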

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Liu, M.; Chen, M.; Wu, Z.; Zhong, B.; Deng, W. Implementation of Intelligent Indoor Service Robot Based on ROS and Deep Learning. Machines 2024, 12, 256. https://doi.org/10.3390/machines12040256

Liu M, Chen M, Wu Z, Zhong B, Deng W. Implementation of Intelligent Indoor Service Robot Based on ROS and Deep Learning. Machines. 2024; 12(4):256. https://doi.org/10.3390/machines12040256

Chicago/Turabian Style: Liu, Mingyang, Min Chen, Zhigang Wu, Bin Zhong, and Wangfen Deng. 2024. "Implementation of Intelligent Indoor Service Robot Based on ROS and Deep Learning" Machines 12, no. 4: 256. https://doi.org/10.3390/machines12040256

APA Style: Liu, M., Chen, M., Wu, Z., Zhong, B., & Deng, W. (2024). Implementation of Intelligent Indoor Service Robot Based on ROS and Deep Learning. Machines, 12(4), 256. https://doi.org/10.3390/machines12040256