1. Introduction

Autism spectrum disorder (ASD) is a multifaceted neurodevelopmental condition characterized by varying degrees of difficulty with social interaction and communication and is often accompanied by repetitive behaviors [1]. Currently affecting 1 in 68 children [2], it remains a prevailing concern that, to date, lacks any definitive treatment [3,4]. While the exact etiology of this disorder remains elusive, various therapies and interventions have been developed to support children with ASD in achieving their full potential and leading fulfilling lives [5,6,7,8,9,10,11].

In an era of widespread digital presence, children with ASD are often observed to exhibit a strong affinity for technological devices such as tablets and smartphones. While these devices can provide an engaging medium for learning and entertainment, excessive dependence on them may inadvertently intensify social isolation [12]. However, it has been found that when these devices adopt human-like characteristics, they can help bridge the social gap and bolster social skills. This has prompted the exploration of humanoid robots, such as Kasper [13], NAO [14,15], FACE [16], Bandit [17], ZECA [18], Zeno R25 [19], Puffy [20], Ifbot [21], Ichiro [22], and Pepper [23], as interactive tools for children with ASD.

Simultaneously, there has been an increasing interest in robots that mimic animals or fictional characters, such as Probo [24], CuDDler [24], Romibo [25], Sphero [26], COLOLO [27], Aibo [24], Paro [28], Pleo [29], BLISS [30], Iromec [31], and Jibo [32]. The intriguing shapes and forms of these robots, coupled with their interactive capabilities, have demonstrated their potential to engage and captivate children’s attention.

The literature abounds with studies examining the role of robots in enhancing the social and communicative abilities of children with ASD [33,34,35,36,37,38,39,40]. These studies consistently reveal positive outcomes, such as improved social skills and increased eye contact. Among the multitude of robots employed for this purpose, the NAO robot, produced by Softbank Robotics [41], has emerged as a particularly popular choice, accounting for over 30% of the studies conducted in the last decade.

The extensive utilization of robots in assisting children with ASD represents an exciting intersection between robotics and educational therapy, providing a platform for the exploration of new pedagogical approaches. This paper aims to contribute to this growing body of literature by presenting our findings from a recent study.

The remainder of this paper is structured as follows: Section 2 discusses materials and methods, followed by Section 3 on results. The discussion is presented in Section 4, and conclusions are provided in Section 5.

2. Materials and Methods

This paper proposes a socially assistive robot (SAR) augmented with a mobile application for autism education (AE) in preschool and kindergarten settings. The research question is as follows: Will the SAR augmented with the mobile application influence the frequency of eye contact among autistic students during AE? An instance of eye contact is recorded when the child’s gaze meets the teacher’s gaze during a working session. The teacher counts and records these instances during each session.

To achieve our goal, an effective SAR suitable for AE in preschool or kindergarten must meet the following requirements:

- Children must have the ability to communicate with the robot.

- The robot must be capable of endlessly repeating commands.

- The robot must be easily portable.

- Children with autism should comprehend the robot’s dialogues and queries.

- The robot must accurately recognize each child’s face.

- The robot should accurately receive answers to questions from the children.

- The program must organize all lessons for autistic children into fields.

- The application must ensure that children with autism complete and master each field.

- The program must generate an individual profile for each child.

- The program must save progress charts in a database to track the child’s learning and social skills advancement.

- The program must be simple to comprehend and learn for new users.

2.1. Key Performance Indicators and Engineering Requirements

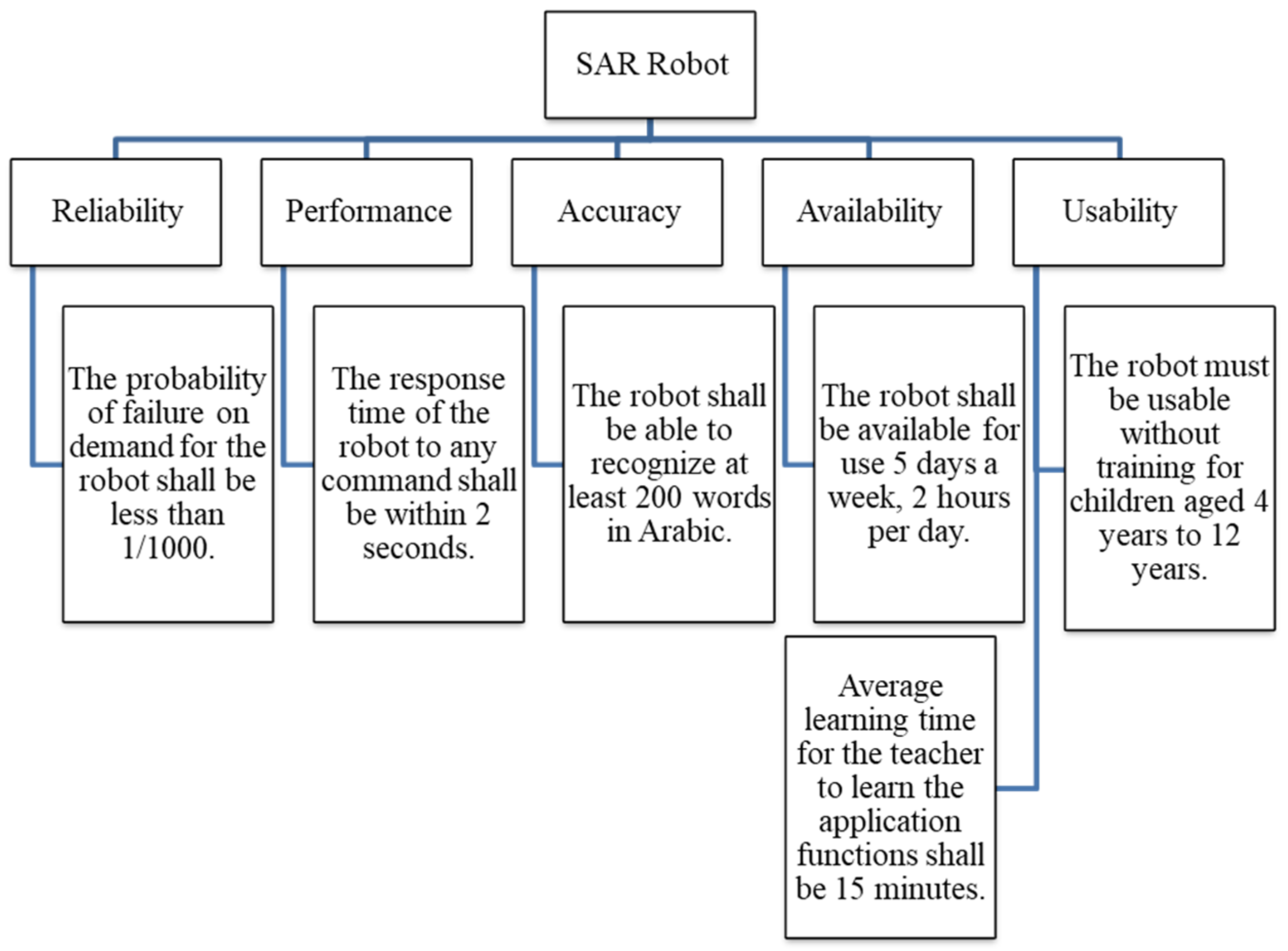

The SAR must meet the five key performance indicators: reliability, performance, accuracy, availability, and usability.

Reliability refers to the robot’s ability to consistently perform its tasks without breakdowns, malfunctions, or excessive maintenance. It is often measured by the mean time between failures (MTBF) or the probability of failure on demand (PFOD). A more reliable robot experiences fewer interruptions due to repairs or modifications. We aim for a PFOD of less than 0.001.
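As an illustration of how these two reliability measures could be estimated from an operating log, the following minimal sketch computes both and compares the PFOD estimate with the 0.001 target. The function names and the sample counts are hypothetical and are not taken from the study.

```python
def pfod(failed_demands: int, total_demands: int) -> float:
    """Estimate the probability of failure on demand as failures / demands."""
    if total_demands <= 0:
        raise ValueError("total_demands must be positive")
    return failed_demands / total_demands


def mtbf(operating_hours: float, failures: int) -> float:
    """Mean time between failures: operating time divided by failure count."""
    if failures <= 0:
        raise ValueError("MTBF is undefined without at least one failure")
    return operating_hours / failures


PFOD_TARGET = 0.001  # target stated in the text

# Hypothetical log: 2 failed responses in 5000 commands over 120 operating hours.
estimate = pfod(2, 5000)
meets_target = estimate < PFOD_TARGET
hours_between_failures = mtbf(120.0, 2)
```

With these illustrative numbers the estimate falls below the target; a real assessment would need a demand count large enough to support a claim at the 0.001 level.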

Performance measures the robot’s speed, effectiveness, and throughput. These metrics can be quantified by the number of jobs completed per unit of time, energy utilization, or the ratio of work accomplished to the robot’s capability. They also consider the robot’s ability to handle complex tasks or varying workloads. For our SAR, an appropriate response time would be no more than two seconds to reply to any command.

Accuracy measures how well the robot’s actions or outputs align with the intended goals. For example, in a factory setting, this could mean that a robot always puts parts in the right place, within minimal tolerances. It is essential to be accurate when performing precise work, like robotic surgery or micro-assembly. In our SAR, accuracy entails correct recognition of localized Arabic words. Thus, we set our accuracy measure to correctly acknowledge at least 200 Arabic words in the kindergarten setting.

Availability indicates the robot’s readiness and capability to perform tasks over time. It generally excludes time spent on maintenance, repairs, or program updates. High availability means that the robot operates most of the time, with minimal interruptions due to technical problems or software changes. Our SAR needs to be available during kindergarten hours, at least two hours a day, five days a week.

Usability refers to how easily individuals can interact with, understand, and operate the robot. It considers factors such as the control interface, programming flexibility, communication of status or issues, and safety for human interaction. The SAR should be user-friendly for children aged four to twelve to use, requiring no training. Additionally, teachers should be able to learn how the mobile app works and how to use it within 15 min.

The five key performance indicators of SAR robots for ADT that are suitable for preschool or kindergarten are summarized in Figure 1.

After a thorough evaluation, we have determined that the NAO robot produced by Softbank Robotics [41] is better suited for our application since it supports Arabic language localization. With its 25 degrees of freedom (DOFs), the NAO robot offers exceptional flexibility and mobility capabilities. Standing at 57 cm in height, it is designed to encourage interaction with children, either sitting or at table level. The robot is equipped with two cameras: one on its head for facial recognition and another on its chin for navigation and environmental recognition. Furthermore, NAO includes voice recognition capabilities, a speech synthesizer, and various sensors such as an accelerometer, gyroscope, and force-sensitive resistors. Its eyes, adorned with changeable LED lights, add a further dimension to its interactive capability, enhancing its appeal to users. Additionally, it may link to an external server or mobile phone to expand its capabilities.

Table 1 maps the engineering requirements to the selected NAO specifications to justify our selection from an engineering perspective.

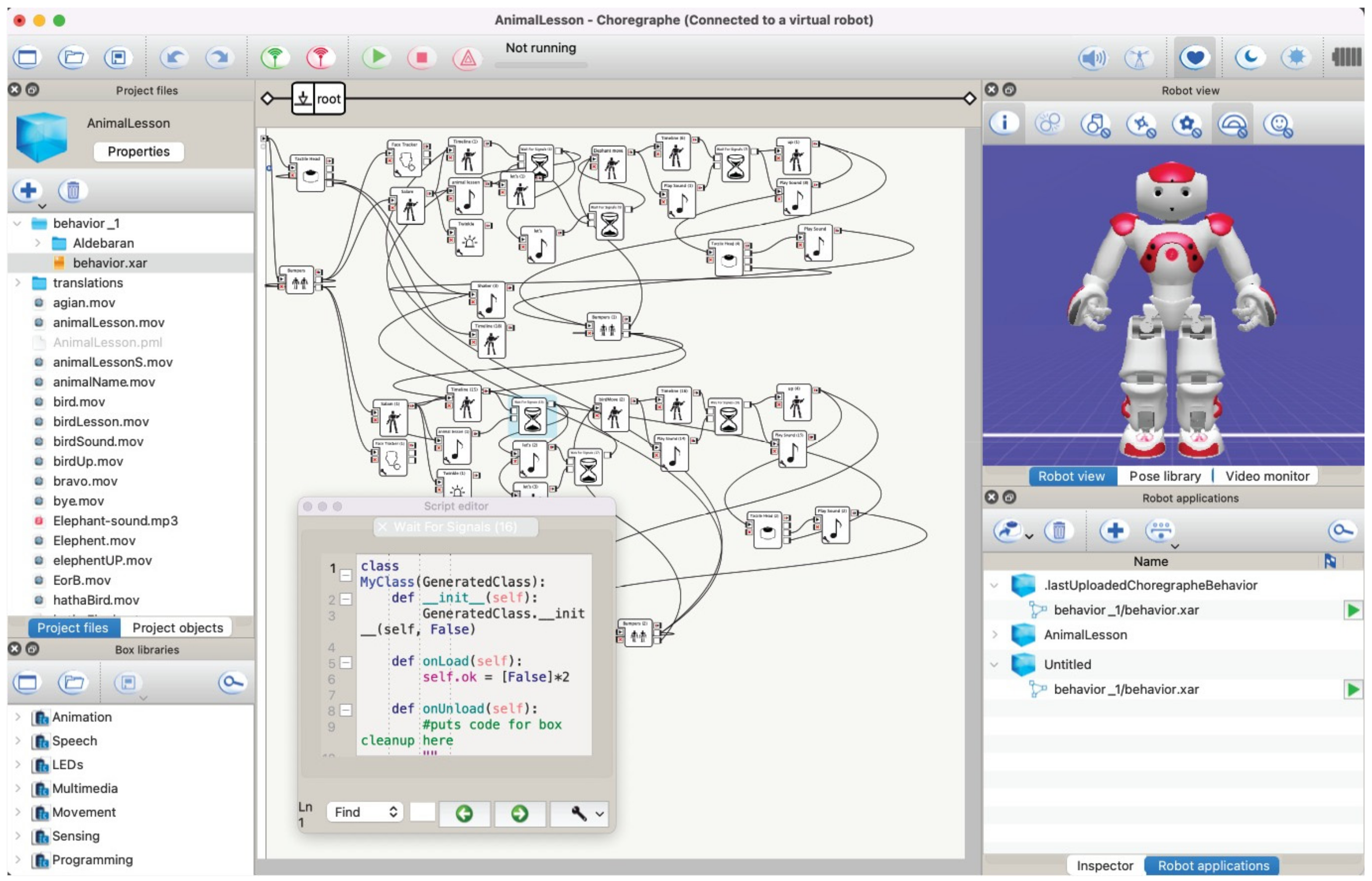

2.2. The Robot Program Structure

The front-end programming of the NAO robot involved the use of Python, C++, and Choregraphe modular programming, as shown in Figure 2. The backend smartphone application was developed using Java. The smartphone application serves as an interface between the user and the NAO robot, facilitating class management, monitoring student progress, and assigning new users to classes. Firebase is utilized to construct system databases that collect, organize, and analyze data.

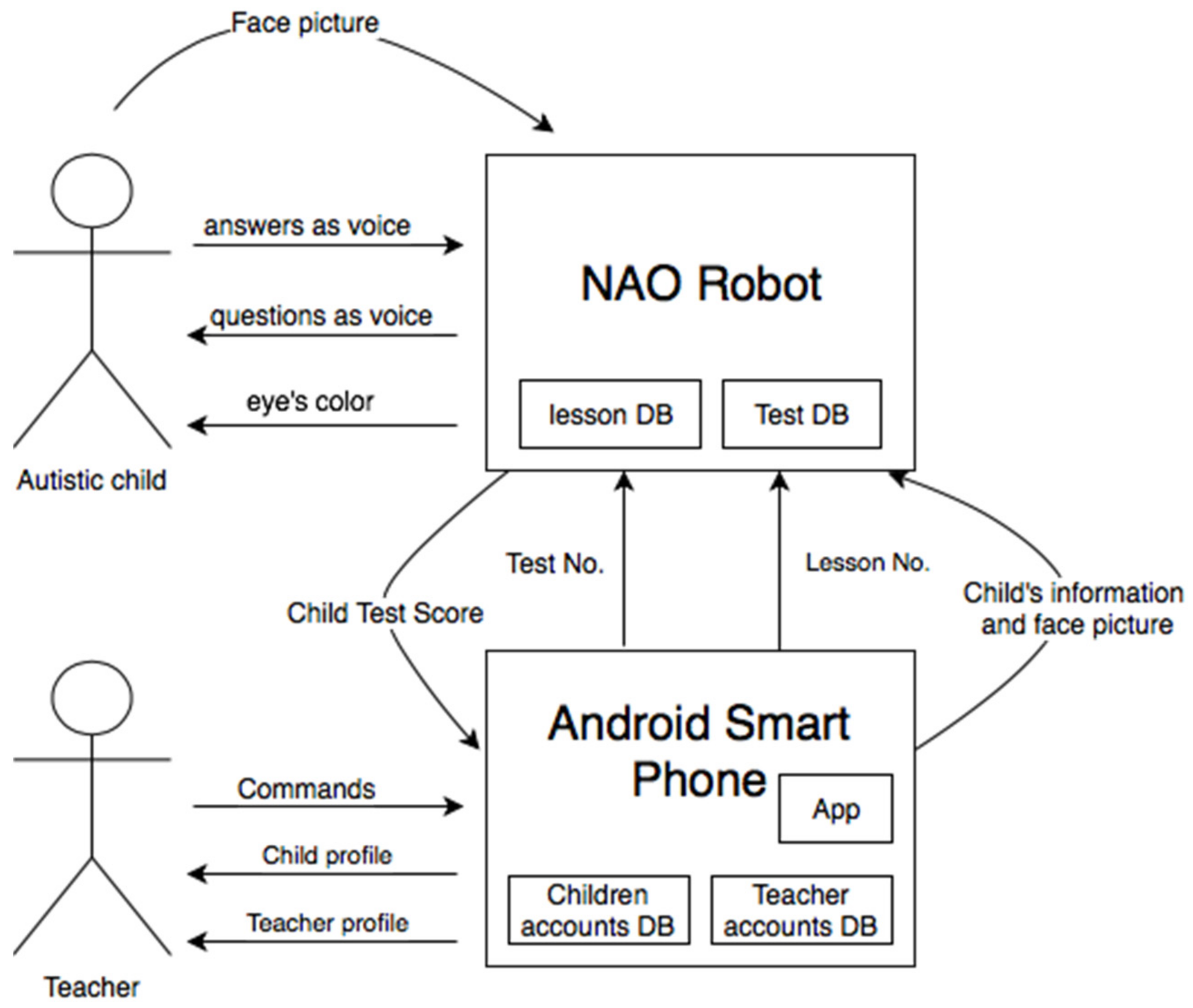

The system was designed to retain the progress of each individual child and to foster a stronger connection by recognizing their strengths and weaknesses in each lesson. To achieve this, we augmented the NAO robot with an external smartphone application that maintains a database of personalized profiles for each user, enabling personalized education and enhancing the interaction with the end user. More individualized training requires monitoring the lessons each student has completed and having more control over the child’s development. This provides control elements that let a student advance to the next session only if they achieve satisfactory scores on the exam. The robot should save this information and be able to access it once it recognizes the child’s face, at which point it should engage with them appropriately.

Based on the recommendations of the social consultant, each student is allocated a class with individualized instructions and assessments. The robot manages voice recognition, speech synthesis, and picture identification, making crucial decisions. The smartphone, on the other hand, manages the user accounts and keeps track of exam results and completed courses. The relationship between NAO and the smartphone application is shown in Figure 3. Further details on the structure of the smartphone application are given in the next section.

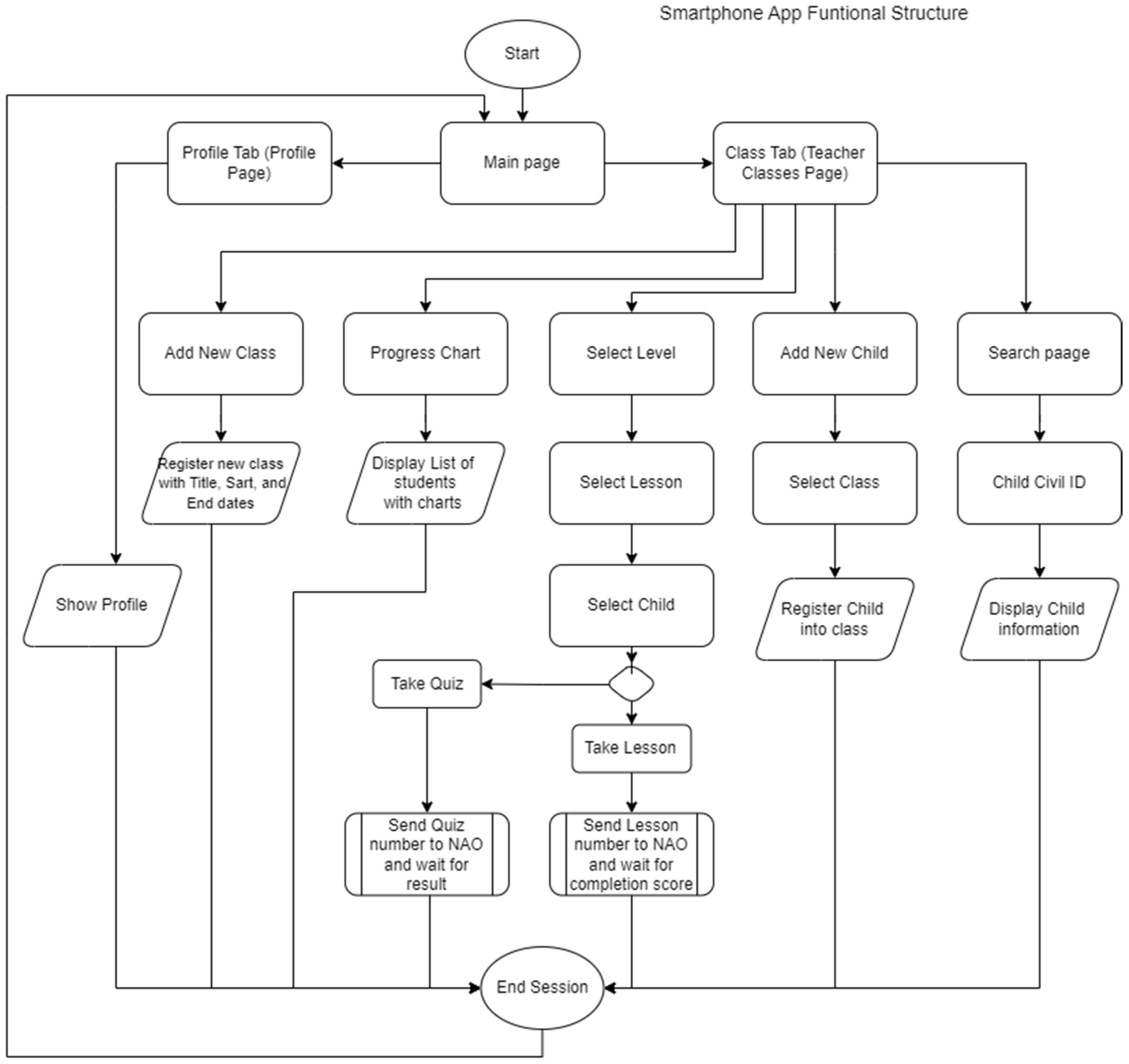

2.3. Mobile Application Structure

The mobile application acts as a backend, connecting the instructor to the child through the NAO robot, as shown in Figure 3. On first launch, the mobile application allows the user to sign up for a new account, log in, or connect with an existing Google account. Once logged in, the user can access their profile to update personal information such as their name, password, and contact information. They can also start using the application by navigating to the Class Tab. The complete functional structure of the smartphone application is depicted in Figure 4. Within the Class Tab, the instructor can create a new class, add students to a class, search for a student using their civil ID, check the progress chart of any student in their classes, or initiate a new lesson at a specific level.

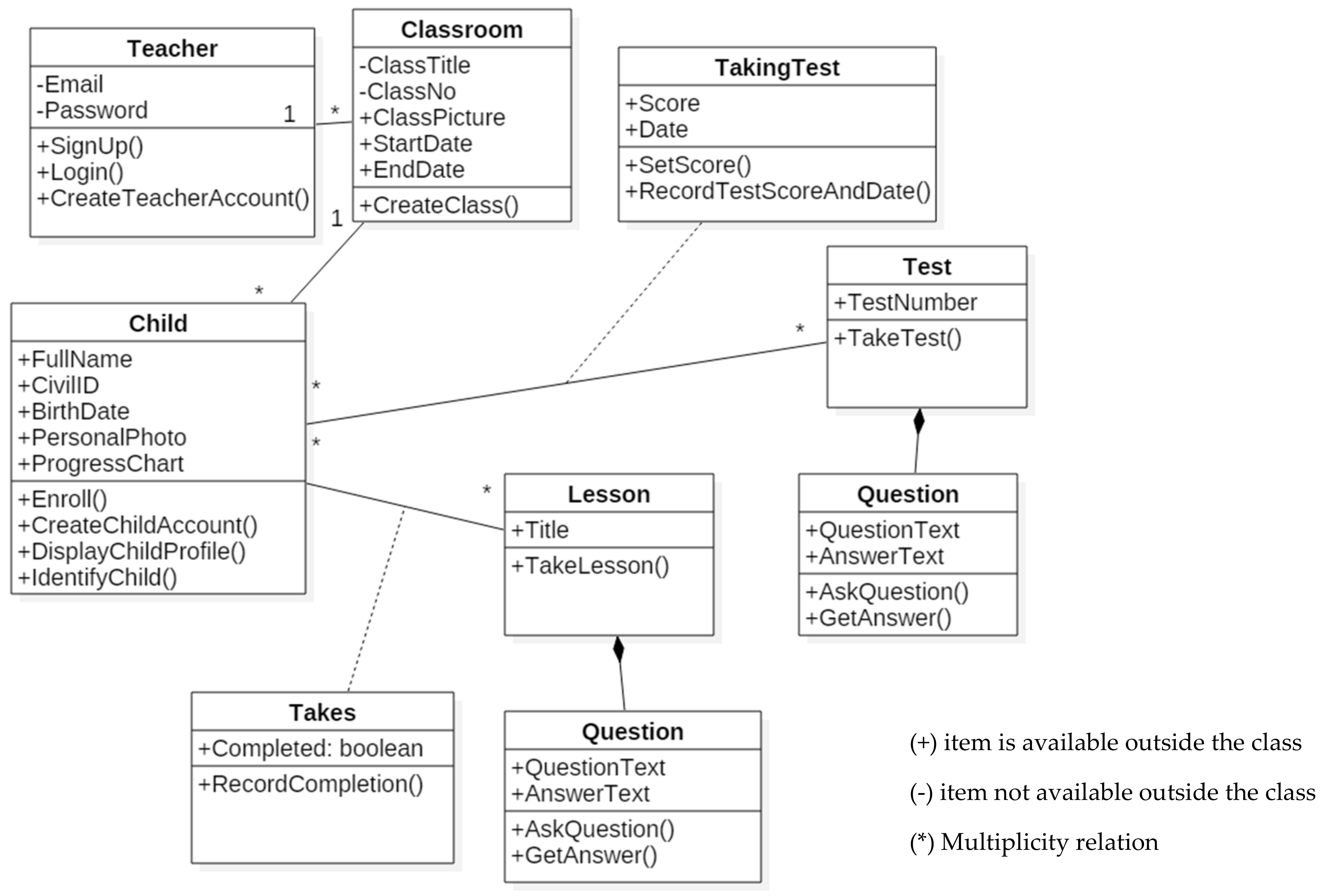

2.4. The System Classes’ Structure

The proposed approach relies on object-oriented classes and methods [42,43,44,45]. A system class diagram provides a visual representation of the system’s components, their interactions, and relationships. Figure 5 displays the classes, their methods, and the interconnections between them. Each block is divided into an upper part and a lower part. The upper part represents the class’s variables, while the lower part represents the class’s member functions. The system has three major components: Teacher, Classroom, and Child. A teacher may be allocated to more than one classroom, which is shown by a line connecting Teacher and Classroom with an asterisk on the Classroom side. Conversely, since each child can be assigned to only one classroom, the line connecting Child and Classroom has a number 1 on the Classroom side and an asterisk on the Child side.

The relationship between the Child component and the Lesson component is many-to-many, which implies that each student may have several lessons, and a lesson can be assigned to multiple children. Therefore, asterisks are displayed on both sides of the edge that connects Child to Lesson. The same applies to the Child class and the Test class. The dotted line represents a class function that takes an instance of both connected classes as arguments to record a global variable in the database. In this case, we have the Takes class, which records which lesson is taken by which child, and the Test class, which records which test is taken by which child.

The Teacher class holds credentials for teachers. The Child class, by contrast, omits credentials. Instead, the child is identified automatically by searching for the child’s profile based on the image recognition supplied by the robot through the identify Child method and comparing it to the stored child picture. The Child class also stores additional child-specific information. The Classroom class stores the class’s title, number, photo, start and finish dates, and duration, and has one method for creating a class. The Taking Test class stores the score and the test date and has two methods to set and retrieve the scores. The Test class keeps track of which child completed which test number. The Lesson class stores the lesson title and has one method to initiate the lesson by sending a unique code for the robot to start the corresponding routine. The Question class stores questions and answers and has two methods: one for asking the question and another for checking the answer. The Takes class acts as a control flag to track which student took which lesson.
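To make the multiplicities described above concrete, the following is a minimal sketch of the class model. The class names follow the text, but the field names, the sample values, and the helper `lessons_taken` are assumptions introduced for illustration; the actual application was written in Java and its schema is not reproduced here.

```python
from dataclasses import dataclass, field
from datetime import date


@dataclass
class Child:
    civil_id: str          # the text mentions searching for students by civil ID
    name: str
    classroom_number: int  # each child belongs to exactly one classroom


@dataclass
class Classroom:
    number: int
    title: str
    children: list[Child] = field(default_factory=list)  # one classroom, many children


@dataclass
class Teacher:
    email: str
    classrooms: list[Classroom] = field(default_factory=list)  # one teacher, many classrooms


@dataclass
class Lesson:
    title: str
    start_code: int  # hypothetical code sent to the robot to start the routine


@dataclass
class Takes:
    """Association record: which child took which lesson (many-to-many)."""
    civil_id: str
    lesson_title: str


@dataclass
class TakingTest:
    """Association record holding a child's score on one test."""
    civil_id: str
    test_number: int
    score: float
    taken_on: date


def lessons_taken(records: list[Takes], civil_id: str) -> list[str]:
    """Return the lesson titles recorded for one child, in recorded order."""
    return [r.lesson_title for r in records if r.civil_id == civil_id]


# Illustrative data only.
child = Child(civil_id="C-001", name="Sample", classroom_number=1)
room = Classroom(number=1, title="Morning group", children=[child])
teacher = Teacher(email="teacher@example.org", classrooms=[room])
records = [Takes("C-001", "Colors"), Takes("C-001", "Animals"), Takes("C-002", "Colors")]
```

Keeping Takes and Taking Test as separate association records, rather than lists nested inside Child, mirrors the many-to-many relations in the class diagram.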

2.5. Teacher User-Interface

Figure 6 visually represents the mobile application’s user interface. Figure 6a displays the landing page, which offers teachers three options: to log in, create a new account, or connect using an existing Google account. Figure 6b provides a snapshot of the variety of accessible fields within the application, with a vertical scrolling feature for a comprehensive overview of all fields. Figure 6c showcases the available lessons, and a vertical scrolling feature is used to allow users to view all lessons. Lastly, the child’s progress chart is available from the Class Tab. This feature provides teachers with a quick overview of the child’s previous lesson, highlighting their strengths and weaknesses. This valuable information aids in tailoring the current lesson for a personalized educational approach.

2.6. Robot Identification Protocol

The auto-identification feature identifies the child once they appear in front of the robot, matching their image with the photo stored in the database. Upon successful identification, the robot initiates a personalized greeting, addressing the child by name to establish a social bond and set the stage for the upcoming lesson. Figure 7 provides a detailed illustration of this identification sequence protocol.

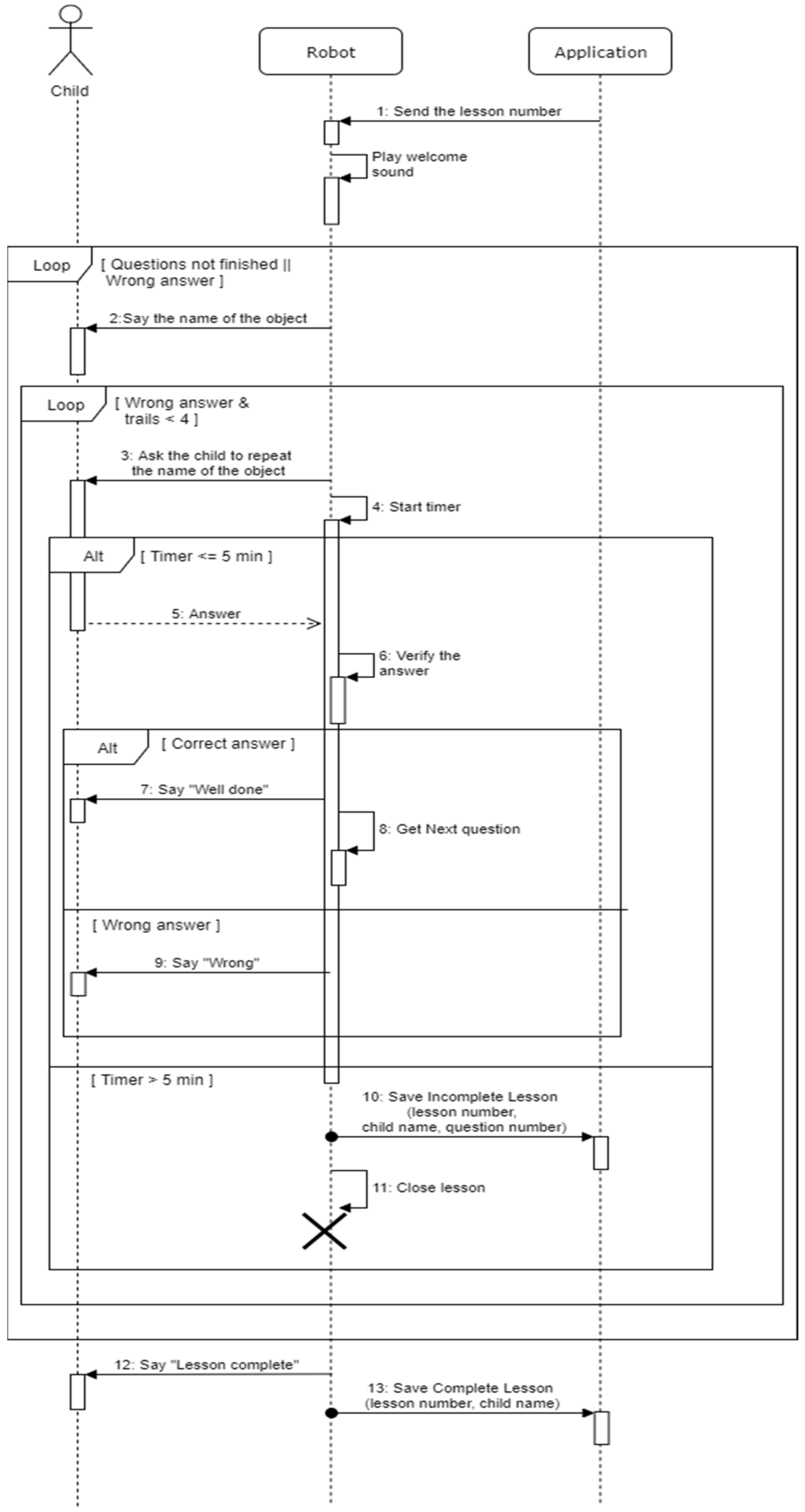
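The identification step can be sketched as a best-match search over the stored photos followed by a personalized greeting. This is a minimal sketch under stated assumptions: the `similarity` function, the 0.8 threshold, and the tuple "photos" are placeholders for illustration and do not represent the NAO face-recognition interface or the authors’ implementation.

```python
from typing import Callable, Optional


def identify_child(captured: object,
                   stored_photos: dict[str, object],
                   similarity: Callable[[object, object], float],
                   threshold: float = 0.8) -> Optional[str]:
    """Return the name whose stored photo best matches, or None if below threshold."""
    best_name, best_score = None, threshold
    for name, photo in stored_photos.items():
        score = similarity(captured, photo)
        if score >= best_score:
            best_name, best_score = name, score
    return best_name


def greeting(name: Optional[str]) -> str:
    """Greet a recognized child by name; fall back to a generic greeting."""
    return f"Hello, {name}!" if name else "Hello! I do not know you yet."


def toy_similarity(a, b) -> float:
    """Stand-in similarity: fraction of positions where two tuples agree."""
    return sum(x == y for x, y in zip(a, b)) / len(a)


# Illustrative "photos" encoded as short tuples.
stored = {"Sara": (1, 0, 1, 1), "Omar": (0, 0, 1, 0)}
known = identify_child((1, 0, 1, 1), stored, toy_similarity)
unknown = identify_child((0, 1, 0, 0), stored, toy_similarity)
```

Returning `None` below the threshold keeps the robot from greeting a child by the wrong name, which matters when the greeting is meant to establish a social bond.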

2.7. Lesson Protocol

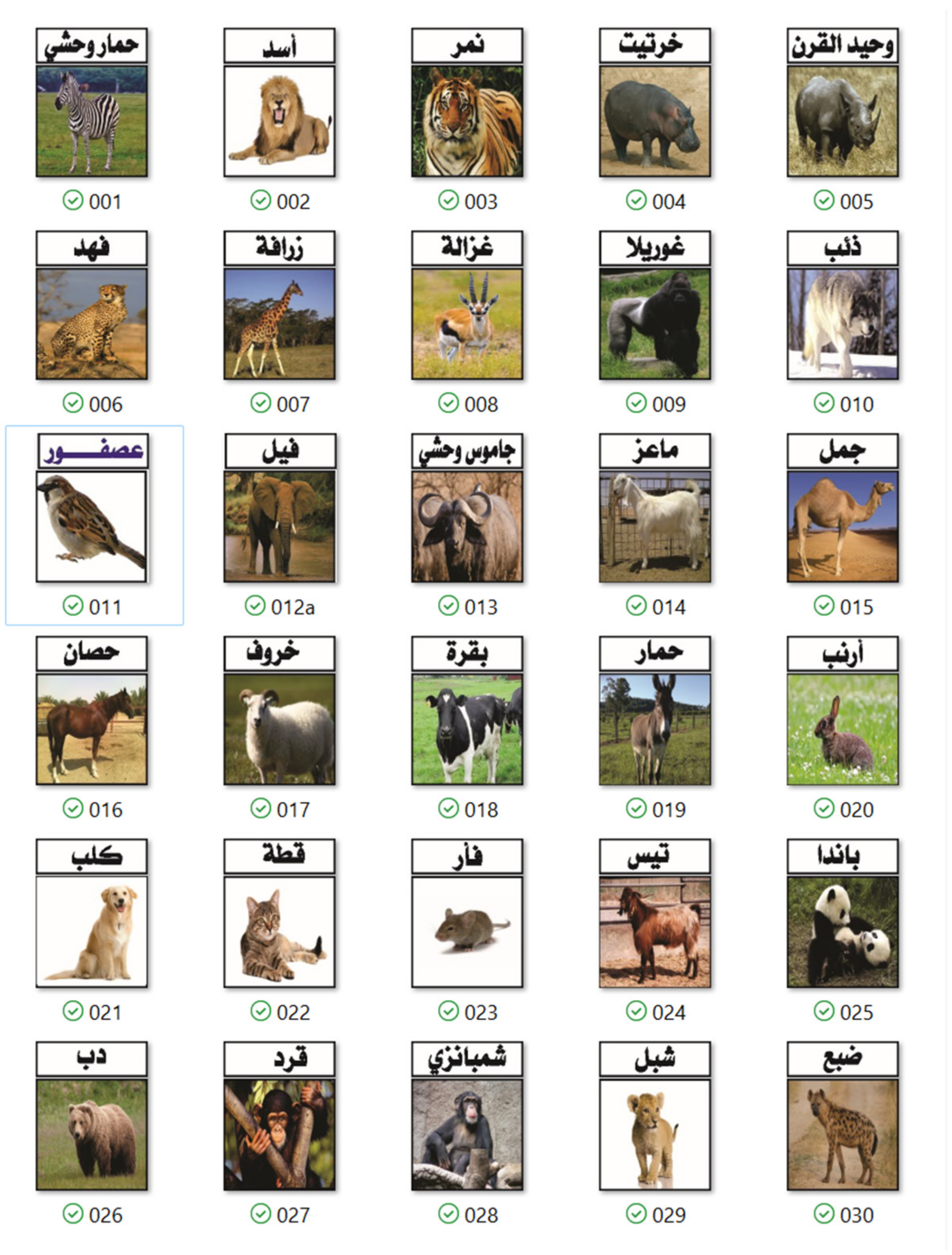

The authors successfully implemented a range of lessons, encompassing topics such as color, food, body parts, personal hygiene tools, transportation, movement, and animals. Each lesson adheres to a standard protocol, as demonstrated in Figure 8, which outlines the sequence for a “repeat the object name” lesson. Upon welcoming the child, the robot prompts them to name a presented object chosen by the teacher. The robot then pauses to listen for a response. If the child answers incorrectly within a specified timeframe, the robot offers three additional attempts. If these attempts are unsuccessful, the lesson is recorded as incomplete, and the robot moves on to the next topic. The child’s progress chart records these incomplete lessons for future review and revision.
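The retry logic of this protocol can be sketched as a short loop: one initial attempt plus three additional ones, after which the lesson is marked incomplete. The function names and the `listen` callback, which returns `None` on a timeout, are assumptions for illustration; speech recognition on the robot is not modeled.

```python
from typing import Callable, Iterable, Optional


def run_repeat_lesson(target: str,
                      listen: Callable[[], Optional[str]],
                      extra_attempts: int = 3) -> str:
    """Return 'complete' if the child names the object, otherwise 'incomplete'."""
    for _ in range(1 + extra_attempts):  # initial attempt plus the additional ones
        answer = listen()                # None models a timeout with no response
        if answer is not None and answer.strip().lower() == target.lower():
            return "complete"
    return "incomplete"


def scripted(answers: Iterable[Optional[str]]) -> Callable[[], Optional[str]]:
    """Build a listen() stand-in that replays a fixed sequence of answers."""
    it = iter(answers)
    return lambda: next(it, None)


# The child succeeds on the third attempt.
status_ok = run_repeat_lesson("apple", scripted(["banana", None, "Apple"]))
# Four wrong answers exhaust the attempts; the fifth answer is never heard.
status_fail = run_repeat_lesson("apple", scripted(["banana", "pear", "kiwi", "plum", "apple"]))
```

The returned status is what would be written to the child’s progress chart, so that incomplete lessons can be revisited later.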

The interaction between each lesson and the child varies based on the child’s level and profile. Figure 9a shows that some lessons will require the child to emulate the robot, while other lessons will ask the child to select the correct card from a stack of cards, as shown in Figure 10 (an example for the animal lesson). The child will be instructed to hold the correct animal card in front of the robot to check the answer, as depicted in Figure 9b. In this case, the child is holding a bird card (card number 11 in Figure 10) in front of the robot, and the robot will perform image processing and check if the image matches the question asked. Depending on the difficulty level, the robot could imitate the animal’s sound, mention the animal’s name, or describe its features. See Supplementary Materials for more information at https://openmylink.in/NAOMedia.

3. Results

3.1. Technical Description

The front-end solution was deployed on the NAO robot using Choregraphe 2.1.4. The backend software was developed using object-oriented programming (OOP) with the Java programming language, implemented using Android Studio 3.3 and Arduino IDE 1.8.8. These were deployed on an Android smartphone running version 7, equipped with 2 MB RAM and 32 MB storage capacity. The database was stored using a Firebase server [46] as a backend-as-a-service (BaaS) hosted with Google Cloud and integrated with the Bayt Alatfal cloud structure [47]. Firebase operates as a NoSQL database [48,49], employing JSON-like documents [50].
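To illustrate what a JSON-like document for a child’s profile might look like, the sketch below builds one as a nested dictionary and round-trips it through JSON. The field names and values are hypothetical and are not taken from the study’s database; no Firebase client calls are shown.

```python
import json

# Hypothetical profile document; keys and values are illustrative only.
child_profile = {
    "civilId": "C-001",
    "name": "Sample",
    "classroom": 1,
    "lessons": {
        "colors": {"status": "complete", "score": 8},
        "animals": {"status": "incomplete", "score": 3},
    },
}


def incomplete_lessons(profile: dict) -> list[str]:
    """List the lessons that still need review, in alphabetical order."""
    return sorted(
        title
        for title, record in profile["lessons"].items()
        if record["status"] == "incomplete"
    )


serialized = json.dumps(child_profile)  # text form, as a document store would hold it
restored = json.loads(serialized)       # back to nested dicts
to_review = incomplete_lessons(restored)
```

Nesting lesson records under the child keeps everything needed for a progress chart in one document, at the cost of duplicating lesson titles across children.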

Lessons and quizzes were designed in consultation with the teachers and the specialists from Bayt Alatfal special needs preschool for autism. The lessons are compatible with the Bexly curriculum.

3.2. Experiment Description

Each lesson required approximately eight to ten minutes for completion. The learning sessions were conducted under the supervision of one specialist and one IT technical support worker. Each session lasted between 20 and 30 min and covered three to four children. The robot was charged between sessions, and the IT support worker set up the program. On average, a working day consisted of six to eight sessions. The sessions covered various fields, including communication, cognition, social skills, and mobility.

3.3. Population Description

The study involved two distinct groups, each comprising 12 children aged between three and six years old, exhibiting mild to moderate ASD symptoms, as shown in Table 2 and Table 3. Table 4 presents the normality test, executed using both the Kolmogorov–Smirnov and Shapiro–Wilk methods. The control group followed the standard class curriculum, establishing a baseline for comparison. The experimental group interacted with the NAO robot as an integrated part of their classroom activities.

3.4. Results from Eye Contact Data Analysis

To investigate the research question, we have composed the following null hypotheses:

Hypothesis 0a (H0a): The distribution of the number of eye contacts is the same across categories of autism levels.

Hypothesis 0b (H0b): The distribution of the number of eye contacts is the same across different age groups.

Hypothesis 0c (H0c): The distribution of the number of eye contacts is the same across the control group and experimental group.

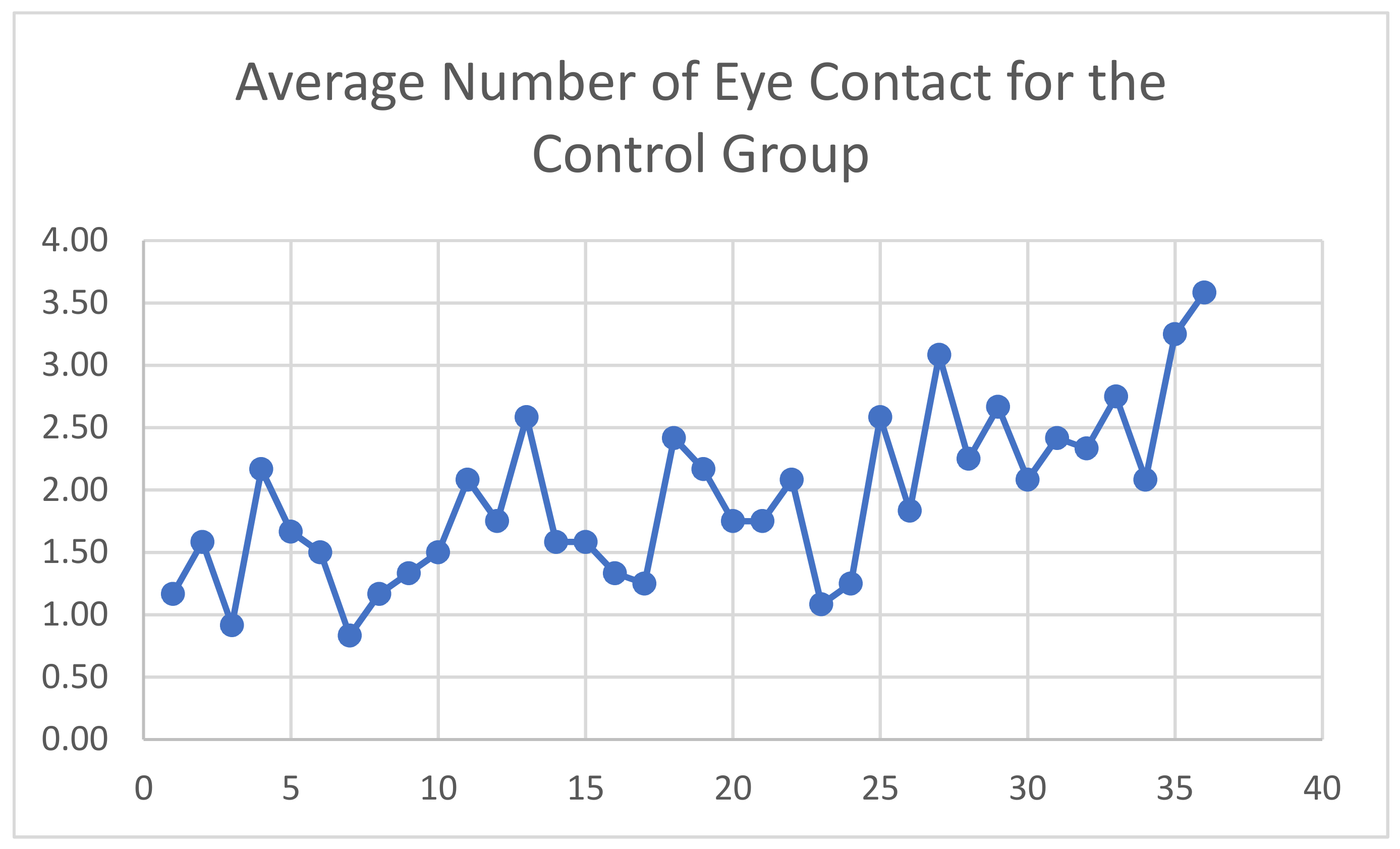

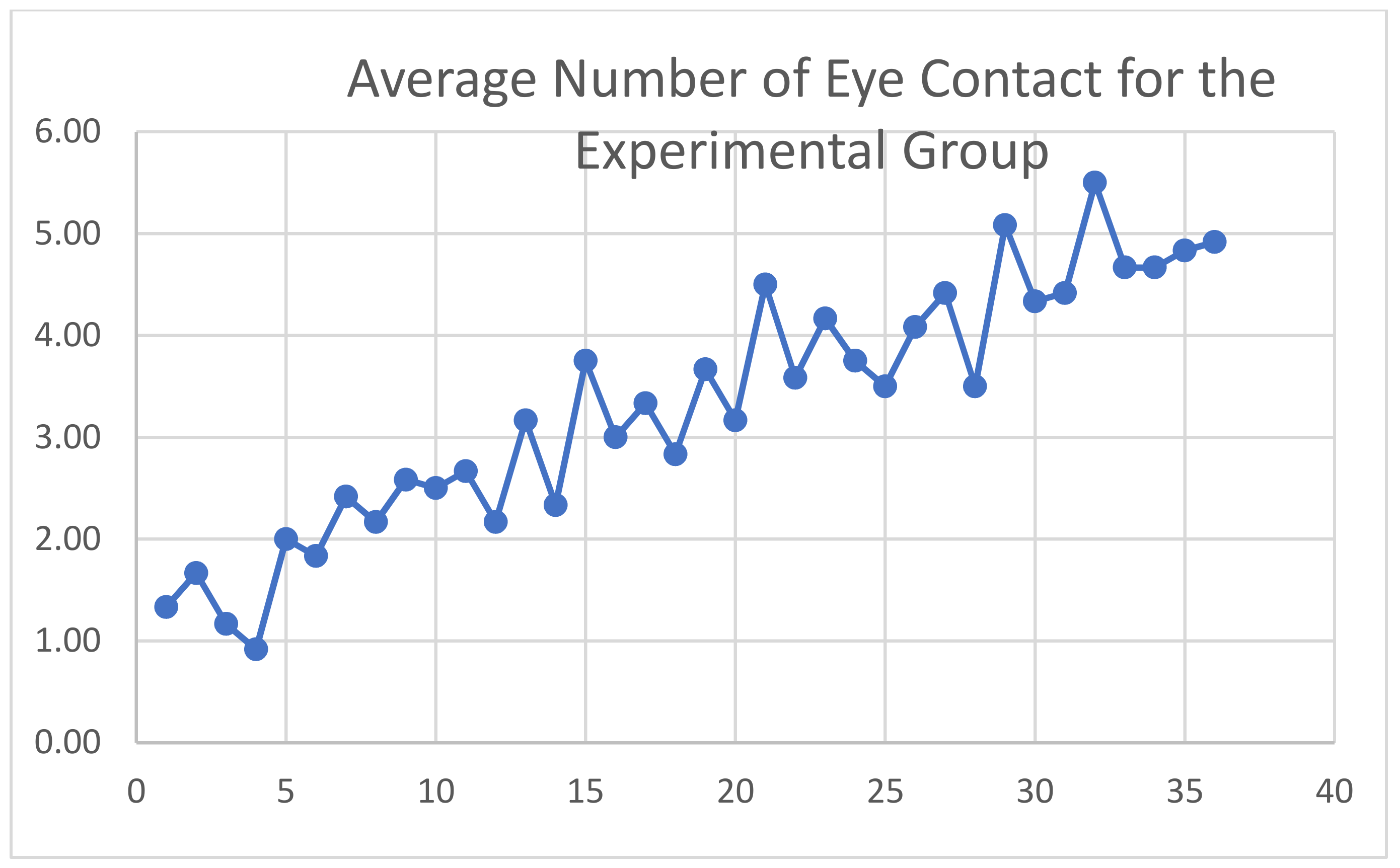

Figure 11 and Figure 12 plot the average number of eye contacts per student per working day for the control group and the experimental group, respectively. During a working session, when a child’s gaze aligns with that of the teacher, it is considered eye contact. The teacher recorded this during each session.

The Shapiro–Wilk test and the Kolmogorov–Smirnov test are two of the most commonly used methods to test for normality. As shown in Table 4 above, the p-values (Sig.) for both age and autism level, as indicated by both the Kolmogorov–Smirnov and Shapiro–Wilk tests, are less than 0.05 (in fact, they are less than 0.001), which means neither age nor autism level is normally distributed. Consequently, non-parametric test methodologies will be applied to the data.

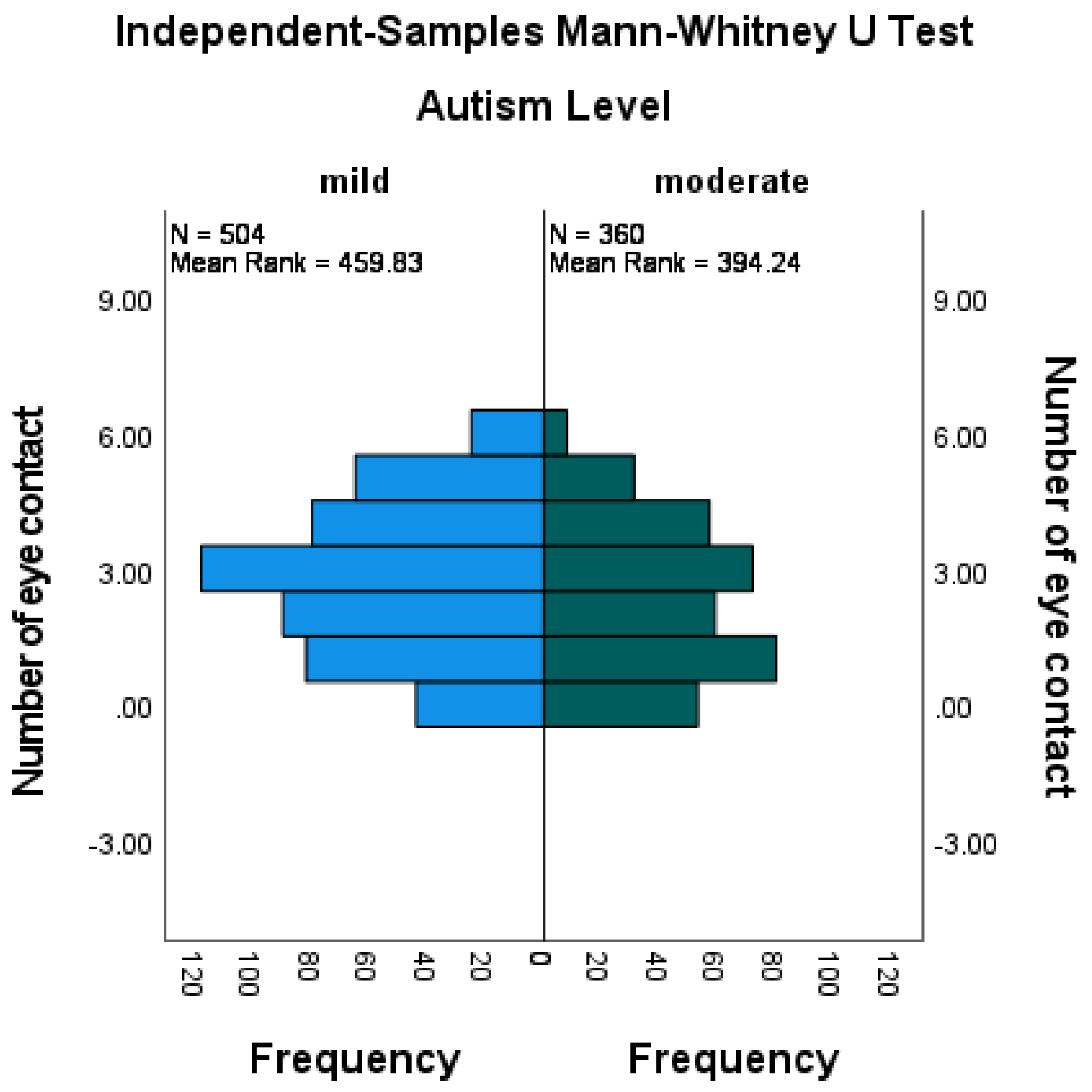

Table 5 presents a summary of the results from the independent-sample Mann–Whitney U Test, analyzing the number of eye contacts across different autism levels. Similarly, Figure 13 illustrates the independent-sample Mann–Whitney U Test for the number of eye contacts across various autism levels.
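For readers unfamiliar with the test, the following sketch shows how a Mann–Whitney U statistic and its standardized value can be computed from two samples using midranks and the normal approximation with a tie correction. It is a minimal illustration written from the textbook formulas, not a reproduction of the SPSS procedure used in this study, and the sample data are invented.

```python
import math


def midranks(values):
    """Rank values from 1..n, giving tied values the mean of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        mean_rank = (i + j) / 2 + 1  # sorted positions i..j are 0-based
        for k in range(i, j + 1):
            ranks[order[k]] = mean_rank
        i = j + 1
    return ranks


def mann_whitney_u(x, y):
    """Return (U for sample x, z, two-sided p) via the normal approximation."""
    n1, n2 = len(x), len(y)
    n = n1 + n2
    pooled = list(x) + list(y)
    ranks = midranks(pooled)
    rank_sum_x = sum(ranks[:n1])
    u = rank_sum_x - n1 * (n1 + 1) / 2
    # Tie correction: sum of (t^3 - t) over groups of tied values.
    counts = {}
    for v in pooled:
        counts[v] = counts.get(v, 0) + 1
    tie_term = sum(t ** 3 - t for t in counts.values())
    variance = n1 * n2 / 12 * ((n + 1) - tie_term / (n * (n - 1)))
    z = (u - n1 * n2 / 2) / math.sqrt(variance)
    p = math.erfc(abs(z) / math.sqrt(2))  # two-sided p-value from the normal curve
    return u, z, p


# Invented data: every value in the first sample is below the second.
u_sep, z_sep, p_sep = mann_whitney_u([1, 2, 3], [4, 5, 6])
# Invented data with ties across the samples.
u_tie, z_tie, p_tie = mann_whitney_u([1, 2, 2], [2, 3, 4])
```

A negative standardized value indicates that the first sample’s rank sum is smaller than expected under the null hypothesis, which is how the negative values in the discussion should be read.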

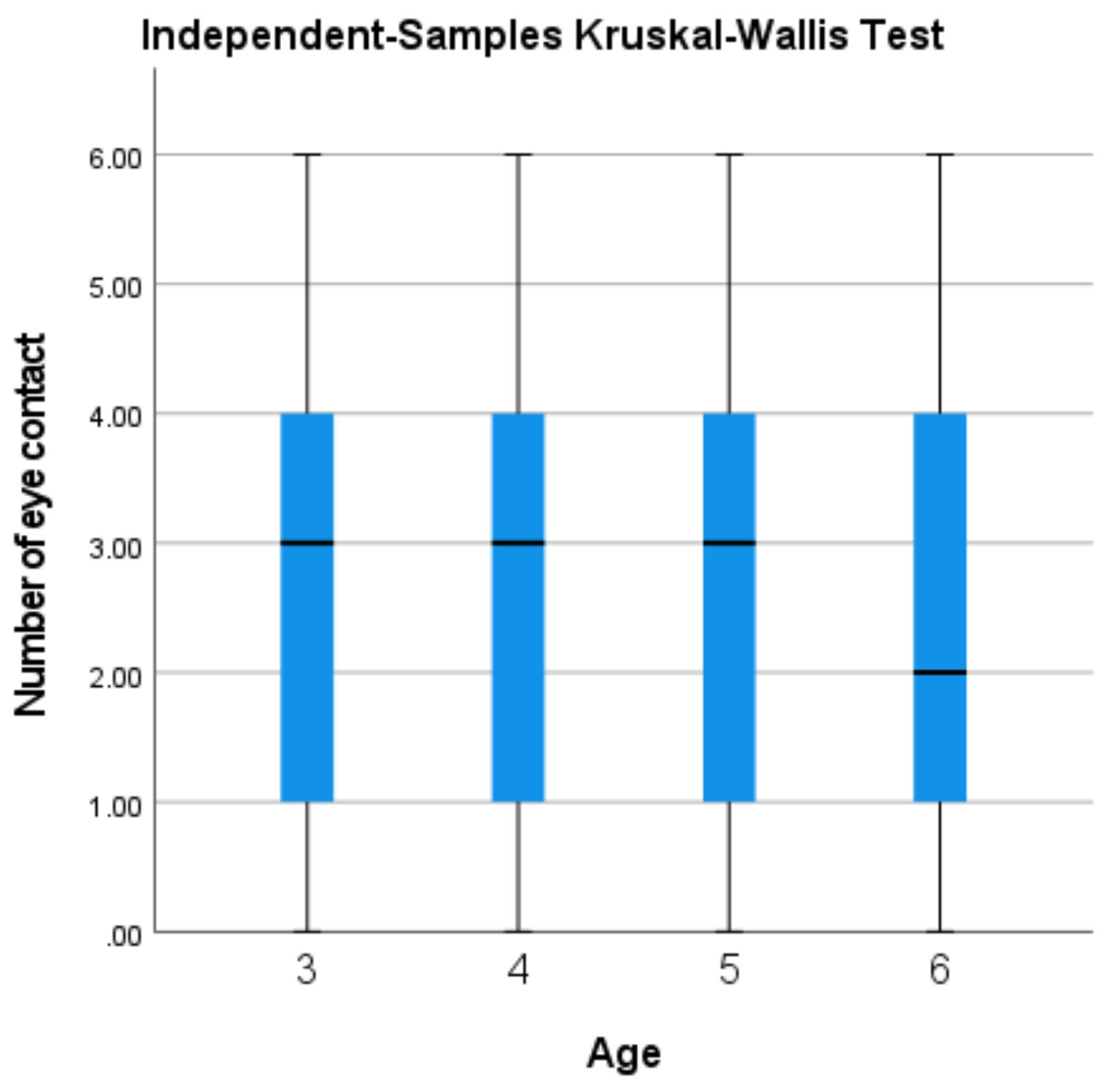

Given that the age variable encompasses more than two age groups and exhibits a non-normal distribution across all samples, we opted for the Kruskal–Wallis test as an alternative to the one-way ANOVA. Table 6 summarizes the outcomes of the independent-sample Kruskal–Wallis test, investigating the variation in the number of eye contacts across distinct age groups. Figure 14 presents the findings of the independent-sample Kruskal–Wallis test graphically.

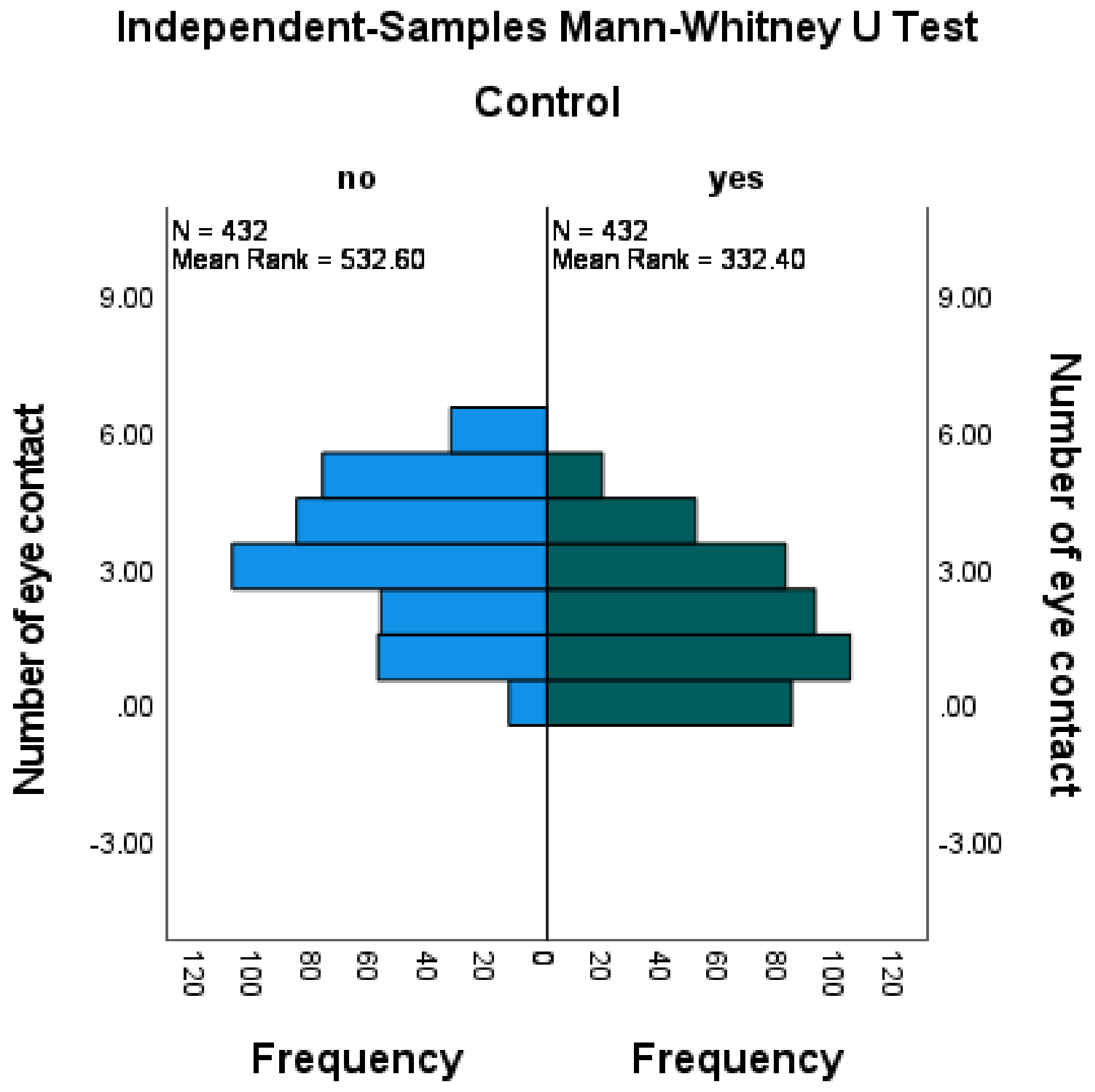

Table 7 provides a summary of the independent-sample Mann–Whitney U test results, examining the number of eye contacts between the control (Control = 1) and experimental (Control = 0) samples. Figure 15 depicts the independent-sample Mann–Whitney U test, focusing on the number of eye contacts within these same samples.

4. Discussion

The primary objective of our study was to investigate the impact of age, autism level, and the use of NAO robot intervention (denoted as “Control = 0” for the experimental group in the data) on the number of eye contacts demonstrated by the subjects. We sought to understand these relationships by using SPSS version 28.

In our study, the dependent variable is the “Number of Eye Contact”, while the independent variables comprise “Age”, “Autism Level”, and “Control” (the latter designates whether the subject belongs to the control (Control = 1) or experimental (Control = 0) group).

As indicated in Table 5, the results reveal a statistically significant difference in the number of eye contacts between different autism levels. This conclusion is drawn from a p-value of less than 0.001, making the result significant at the 0.05 level, leading to the rejection of the null hypothesis H0a. In other words, this implies a meaningful difference in the number of eye contacts observed among various categories of autism levels.

Additionally, the “Standardized Test Statistic” value of −3.865, representing the z-score, tells us how many standard deviations the observed test statistic lies from its expected value under the null hypothesis. The negative value specifically suggests that the observed rank sum is less than what would typically be expected.

Turning to Table 6, the Kruskal–Wallis test yields a relatively high p-value (Asymptotic Sig. (2-sided test) = 0.635). Since this p-value exceeds the conventional threshold of 0.05, we do not have grounds to reject the null hypothesis H0b. In essence, these test outcomes suggest that there is not a statistically significant variation in the distribution of the dependent variable across different age groups. In simpler terms, age does not appear to exert a substantial impact on the frequency of eye contacts, according to these test findings.

The independent-sample Mann–Whitney U test was utilized to investigate the distribution of the number of eye contacts across the categories of the control variable within the dataset (N = 864). The null hypothesis for this non-parametric test posits that the distribution of eye contacts is identical across the control and experimental categories.

The test results revealed a statistically significant difference in the distribution of the number of eye contacts across the control categories (Mann–Whitney U = 50,070.500, Wilcoxon W = 143,598.500, Standardized Test Statistic = −11.966, and Asymptotic Significance (2-sided) < 0.001, reported by the software as 0.000). With a significance level of 0.050, the null hypothesis H0c is consequently rejected.
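The reported statistics can be checked for internal consistency with a few lines of arithmetic. The sketch below assumes that the 864 observations are split evenly between the two groups (432 each); this split is inferred rather than stated in the text. Under that assumption, the identity W = U + n(n + 1)/2 reproduces the reported Wilcoxon W, and the standardized statistic without a tie correction comes out slightly smaller in magnitude than the reported value.

```python
import math

N = 864
n1 = n2 = N // 2  # assumption: equal group sizes, not stated explicitly in the text
U = 50070.5       # reported Mann-Whitney U
W = 143598.5      # reported Wilcoxon W

# Rank-sum identity linking W and U for a group of size n1.
w_from_u = U + n1 * (n1 + 1) / 2

# Normal approximation without a tie correction.
mean_u = n1 * n2 / 2
sd_u_no_ties = math.sqrt(n1 * n2 * (N + 1) / 12)
z_no_ties = (U - mean_u) / sd_u_no_ties
```

Here `w_from_u` equals the reported W exactly, and `z_no_ties` is approximately −11.79. A tie correction reduces the standard deviation and therefore increases the magnitude of z, which is consistent with the reported −11.966 given that eye-contact counts contain many ties.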

This finding indicates that the number of eye contacts is not uniformly distributed across the control and experimental subjects, indicating a discernible distinction between the groups. Therefore, the incorporation of SAR augmented with a mobile application does impact the frequency of eye contacts within the examined population, which answers our research question. Further exploration and contextualization of the specific categories and features of the subjects, along with the methodology used and its influence on eye contact, could offer deeper insights into the underlying mechanisms and implications of this finding within the context of autism research.

We also found that maintaining a child’s attention throughout the sessions was challenging. A substantial portion of each session was devoted to capturing and sustaining the child’s attention. We observed that the NAO robot was effective at capturing the child’s attention. The robot’s capacity to personalize content, such as acknowledging the child’s presence by saying their name when they entered the robot’s field of view, proved to be highly beneficial. This individualized contact was further enhanced when the robot retained prior information about the child, fostering a sense of connection.

However, we encountered difficulties when the information did not correspond to the child’s interests. To address this, we worked closely with the preschool’s behavior modification specialist and psychotherapist (BMSP) to customize themes based on the child’s interests. For example, we incorporated the child’s favorite foods into food lessons and placed a mirror behind the robot during motion lessons to allow the child to observe and replicate the robot’s motions. These alterations, implemented in both the control and experimental groups, significantly improved the children’s attention.

In lessons focused on color, the NAO robot’s ability to change its eye color added an engaging and interactive element. The child was tasked with identifying the robot’s eye color by selecting a corresponding color card or verbally stating the color, depending on the lesson’s level. This exercise necessitated direct eye contact and notably enhanced this skill compared to traditional lessons involving color identification from pictures.

Many challenges were faced while conducting these experiments, mainly in engaging the child and retaining their attention. There are also technical challenges and limitations that need to be addressed in any future work or extensions. We outline them as follows:

- The NAO robot can interact concurrently with a maximum of five children.

- The NAO robot is proficient in the official Arabic language but does not comprehend the Kuwaiti dialect.

- The NAO robot has an operational battery life of about 90 min and requires regular recharging to prevent automatic shutdown due to a depleted battery.

- Internet connectivity is essential for the NAO robot to access cloud services and acquire accurate system data.

- The mobile application is exclusive to Android users.

- Teachers need a valid email address for application usage.

- The user must connect to the internet to use the application.

5. Conclusions

In the last decade, robots have advanced significantly, transitioning from factory machines to sophisticated companions and assistants in many areas of human life, thanks to breakthroughs in artificial intelligence, including machine learning, image recognition, voice recognition, and speech synthesis. One such application is education, where robots have played a key role in engaging autistic children and reducing their isolation. Autistic children often find devices more appealing than people. The NAO robot was selected for its humanoid form and augmented with a backend application that extends the robot’s capabilities, allowing it to better recognize the child’s profile and direct their learning path, and providing the teacher with statistical charts to track the learner’s progress and tailor future lessons based on historical data.

This study was implemented in an autism-specific private preschool. With the aid of a school consultant, all lessons were created and conducted using linguistic and cultural references specific to the learners. Twelve children were given the solution and showed improvement in their eye contact skills.

This research effectively demonstrated the potential of combining humanoid robots, specifically the NAO robot, with a smartphone application to improve autistic children’s educational experiences. The implemented solution has increased eye contact and engagement in AE by providing a customized and individualized learning approach. Collaboration with specialists and educators ensured that the content was tailored to the specific requirements and cultural context of the children.

However, the study also revealed a number of limitations and obstacles, including the limited number of children with whom the NAO robot can interact simultaneously, language barriers, battery life, and internet connectivity requirements. Future research should strive to resolve these issues to enhance the efficacy and accessibility of these educational aids for autistic children.

Continued innovation and refinement in robotics and AI will contribute to bridging the divide between autistic children and their educational requirements by building upon the findings of this study. This technology has the potential to become a valuable resource for educators and specialists, promoting inclusivity and individualized learning for children with autism.

Author Contributions

Conceptualization, A.M.M. and H.M.A.M.; methodology, A.M.M.; software, A.A.-H. and N.A.-K.; validation, R.A.-O., A.A.-H. and H.M.A.M.; formal analysis, A.M.M.; investigation, N.A.-K. and A.A.-A.; resources, A.M.M.; writing—original draft preparation, A.M.M.; writing—review and editing, A.M.M. and H.M.A.M.; visualization, R.A.-O.; supervision, A.M.M. and H.M.A.M.; project administration, A.M.M. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

The questionnaire and methodology of this study were approved by the office of the Vice Dean for Academic Affairs for Research and Graduate Studies at the College of Engineering and Petroleum, Kuwait University (REF#737).

Informed Consent Statement

Informed consent was obtained from the parents of all subjects involved in the study.

Data Availability Statement

The original contributions presented in the study are included in the article/Supplementary Material. Further inquiries can be directed to the corresponding author(s). The video and media for this study can be found in the OneDrive link at https://bit.ly/includeMe (accessed on 18 July 2023).

Acknowledgments

The authors extend their gratitude to Bayet Alatfal Special Need Nursery for their support, dedication, and collaboration in designing, testing, and validating this work, as well as for allowing their students to interact with the robot. Special thanks are also extended to the Robotics lab at Kuwait University for providing access to the NAO robot for this study.

Conflicts of Interest

The authors declare no conflict of interest.

References

1. Claudia, S.; Lucia, L. Social Skills, Autonomy and Communication in Children with Autism. Technium Soc. Sci. J. 2022, 30, 442.

2. Azeem, M.W.; Imran, N.; Khawaja, I.S. Autism spectrum disorder: An update. Psychiatr. Ann. 2016, 46, 58–62.

3. Theoharides, T.C.; Kavalioti, M.; Tsilioni, I. Mast cells, stress, fear and autism spectrum disorder. Int. J. Mol. Sci. 2019, 20, 3611.

4. Ng, Q.X.; Loke, W.; Venkatanarayanan, N.; Lim, D.Y.; Soh, A.Y.S.; Yeo, W.S. A systematic review of the role of prebiotics and probiotics in autism spectrum disorders. Medicina 2019, 55, 129.

5. Jouaiti, M.; Henaff, P. Robot-based motor rehabilitation in autism: A systematic review. Int. J. Soc. Robot. 2019, 11, 753–764.

6. Sharma, S.R.; Gonda, X.; Tarazi, F.L. Autism spectrum disorder: Classification, diagnosis and therapy. Pharmacol. Ther. 2018, 190, 91–104.

7. Medavarapu, S.; Marella, L.L.; Sangem, A.; Kairam, R. Where is the evidence? A narrative literature review of the treatment modalities for autism spectrum disorders. Cureus 2019, 11, e3901.

8. Gengoux, G.W.; Abrams, D.A.; Schuck, R.; Millan, M.E.; Libove, R.; Ardel, C.M.; Phillips, J.M.; Fox, M.; Frazier, T.W.; Hardan, A.Y. A pivotal response treatment package for children with autism spectrum disorder: An RCT. Pediatrics 2019, 144, e20190178.

9. Hao, Y.; Franco, J.H.; Sundarrajan, M.; Chen, Y. A pilot study comparing tele-therapy and in-person therapy: Perspectives from parent-mediated intervention for children with autism spectrum disorders. J. Autism Dev. Disord. 2021, 51, 129–143.

10. Will, M.N.; Currans, K.; Smith, J.; Weber, S.; Duncan, A.; Burton, J.; Kroeger-Geoppinger, K.; Miller, V.; Stone, M.; Mays, L.; et al. Evidenced-based interventions for children with autism spectrum disorder. Curr. Probl. Pediatr. Adolesc. Health Care 2018, 48, 234–249.

11. DiPietro, J.; Kelemen, A.; Liang, Y.; Sik-Lanyi, C. Computer- and robot-assisted therapies to aid social and intellectual functioning of children with autism spectrum disorder. Medicina 2019, 55, 440.

12. Kumazaki, H.; Muramatsu, T.; Yoshikawa, Y.; Matsumoto, Y.; Ishiguro, H.; Kikuchi, M.; Sumiyoshi, T.; Mimura, M. Optimal robot for intervention for individuals with autism spectrum disorders. Psychiatry Clin. Neurosci. 2020, 74, 581–586.

13. Wood, L.J.; Zaraki, A.; Robins, B.; Dautenhahn, K. Developing kaspar: A humanoid robot for children with autism. Int. J. Soc. Robot. 2021, 13, 491–508.

14. Cao, H.L.; Simut, R.E.; Desmet, N.; De Beir, A.; Van De Perre, G.; Vanderborght, B.; Vanderfaeillie, J. Robot-assisted joint attention: A comparative study between children with autism spectrum disorder and typically developing children in interaction with NAO. IEEE Access 2020, 8, 223325–223334.

15. Cao, H.L.; Vanderborght, R.S.; Krepel, N.; Vanderborght, B.; Vanderfaeillie, J. Could NAO robot function as model demonstrating joint attention skills for children with autism spectrum disorder? An exploratory study. Int. J. Hum. Robot. 2022, 19, 2240006.

16. Puglisi, A.; Caprì, T.; Pignolo, L.; Gismondo, S.; Chilà, P.; Minutoli, R.; Marino, F.; Failla, C.; Arnao, A.A.; Tartarisco, G.; et al. Social Humanoid Robots for Children with Autism Spectrum Disorders: A Review of Modalities, Indications, and Pitfalls. Children 2022, 9, 953.

17. Taheri, A.; Shariati, A.; Heidari, R.; Shahab, M.; Alemi, M.; Meghdari, A. Impacts of using a social robot to teach music to children with low-functioning autism. Paladyn J. Behav. Robot. 2021, 12, 256–275.

18. Costa, S.; Soares, F.; Pereira, A.P.; Santos, C.; Hiolle, A. A pilot study using imitation and storytelling scenarios as activities for labelling emotions by children with autism using a humanoid robot. In Proceedings of the 4th ICDL-EpiRob, Genoa, Italy, 13–16 October 2014; pp. 299–304.

19. Silva, V.; Soares, F.; Esteves, J.S.; Santos, C.P.; Pereira, A.P. Fostering emotion recognition in children with autism spectrum disorder. Multimodal Technol. Interact. 2021, 5, 57.

20. Ubaldi, A.; Gelsomini, M.; Degiorgi, M.; Leonardi, G.; Penati, S.; Ramuzat, N.; Silvestri, J.; Garzotto, F. Puffy, a Friendly Inflatable Social Robot. In Proceedings of the CHI EA '18, Montreal, QC, Canada, 21–26 April 2018; p. VS03.

21. Huang, X.; Fang, Y. Assistive Robot Design for Handwriting Skill Therapy of Children with Autism. In Proceedings of the ICIRA, Harbin, China, 1–3 August 2022; Springer: Berlin/Heidelberg, Germany; pp. 413–423.

22. Attamimi, M.; Muhtadin, M. Implementation of Ichiro Teen-Size Humanoid Robots for Supporting Autism Therapy. JAREE 2019, 3.

23. Lang, N.; Goes, N.; Struck, M.; Wittenberg, T.; Goes, N.; Seßner, J.; Franke, J.; Wittenberg, T.; Dziobek, I.; Kirst, S.; et al. Evaluation of an algorithm for optical pulse detection in children for application to the Pepper robot. Curr. Dir. Biomed. Eng. 2021, 7, 484–487.

24. Bharatharaj, J.; Huang, L.; Krägeloh, C.; Elara, M.R.; Al-Jumaily, A. Social engagement of children with autism spectrum disorder in interaction with a parrot-inspired therapeutic robot. Procedia Comput. Sci. 2018, 133, 368–376.

25. Ishak, N.I.; Yusof, H.M.; Na’im Sidek, S.; Rusli, N. Robot selection in robotic intervention for ASD children. In Proceedings of the IEEE-EMBS (IECBES), Sarawak, Malaysia, 3–6 December 2018; pp. 156–160.

26. Soleiman, P.; Moradi, H.; Mehralizadeh, B.; Azizi, N.; Anjidani, F.; Pouretemad, H.R.; Arriaga, R.I. Robotic Social Environments: A Promising Platform for Autism Therapy. In Proceedings of the ICSR, Golden, CO, USA, 14–18 November 2020; Springer: Berlin/Heidelberg, Germany; pp. 232–245.

27. Nunez, E.; Matsuda, S.; Hirokawa, M.; Yamamoto, J.; Suzuki, K. Effect of sensory feedback on turn-taking using paired devices for children with ASD. Multimodal Technol. Interact. 2018, 2, 61.

28. Lane, G.W.; Noronha, D.; Rivera, A.; Craig, K.; Yee, C.; Mills, B.; Villanueva, E. Effectiveness of a social robot, Paro, in a VA long-term care setting. Psychol. Serv. 2016, 13, 292.

29. Ramírez-Duque, A.A.; Aycardi, L.F.; Villa, A.; Munera, M.; Bastos, T.; Belpaeme, T.; Frizera-Neto, A.; Cifuentes, C.A. Collaborative and inclusive process with the autism community: A case study in Colombia about social robot design. Int. J. Soc. Robot. 2021, 13, 153–167.

30. Attawibulkul, S.; Sornsuwonrangsee, N.; Jutharee, W.; Kaewkamnerdpong, B. Using storytelling robot for supporting autistic children in theory of mind. Int. J. Biosci. Biochem. Bioinform. 2019, 9, 100–108.

31. Robins, B.; Ferrari, E.; Dautenhahn, K. Developing scenarios for robot assisted play. In Proceedings of the RO-MAN 2008—The 17th IEEE RO-MAN, Munich, Germany, 1–3 August 2008; pp. 180–186.

32. Mavadati, S.M.; Feng, H.; Salvador, M.; Silver, S.; Gutierrez, A.; Mahoor, M.H. Robot-based therapeutic protocol for training children with Autism. In Proceedings of the 2016 25th IEEE RO-MAN, New York, NY, USA, 26–31 August 2016; pp. 855–860.

33. Saleh, M.A.; Hanapiah, F.A.; Hashim, H. Robot applications for autism: A comprehensive review. Disabil. Rehabil. Assist. Technol. 2021, 16, 580–602.

34. Saleh, M.A.; Hashim, H.; Mohamed, N.N.; Abd Almisreb, A.; Durakovic, B. Robots and autistic children: A review. PEN 2020, 8, 1247–1262.

35. Ismail, L.I.; Verhoeven, T.; Dambre, J.; Wyffels, F. Leveraging robotics research for children with autism: A review. Int. J. Soc. Robot. 2019, 11, 389–410.

36. Osório, A.A.C.; Brunoni, A.R. Transcranial direct current stimulation in children with autism spectrum disorder: A systematic scoping review. Dev. Med. Child. Neurol. 2019, 61, 298–304.

37. Alabdulkareem, A.; Alhakbani, N.; Al-Nafjan, A. A Systematic Review of Research on Robot-Assisted Therapy for Children with Autism. Sensors 2022, 22, 944.

38. Szymona, B.; Maciejewski, M.; Karpiński, R.; Jonak, K.; Radzikowska-Büchner, E.; Niderla, K.; Prokopiak, A. Robot-Assisted Autism Therapy (RAAT). Criteria and types of experiments using anthropomorphic and zoomorphic robots. Review of the research. Sensors 2021, 21, 3720.

39. Baratgin, J.; Jamet, F. Le paradigme de “l’enfant mentor d’un robot ignorant et naif” comme révélateur de compétences cognitives et sociales précoces chez le jeune enfant. In Proceedings of the WACAI 2021, CNRS, Saint Pierre d’Oléron, France, 21 October 2021.

40. Dubois-Sage, M.; Jacquet, B.; Jamet, F.; Baratgin, J. The mentor-child paradigm for individuals with autism spectrum disorders. In Proceedings of the International Conference on HRI, Stockholm, Sweden, 17 March 2023.

41. SoftBank Robotics. Available online: https://www.softbankrobotics.com/ (accessed on 25 March 2022).

42. Kanaki, K.; Kalogiannakis, M. Introducing fundamental object-oriented programming concepts in preschool education within the context of physical science courses. Educ. Inf. Technol. 2018, 23, 2673–2698.

43. Fülöp, M.T.; Udvaros, J.; Gubán, Á.; Sándor, Á. Development of Computational Thinking Using Microcontrollers Integrated into OOP (Object-Oriented Programming). Sustainability 2022, 14, 7218.

44. Dageförde, J.C.; Kuchen, A. A compiler and virtual machine for constraint-logic object-oriented programming with Muli. J. Comput. Lang. 2019, 53, 63–78.

45. Mahoney, M.S. The roots of software engineering. CWI Q. 1990, 3, 325–334.

46. Mokar, M.A.; Fageeri, S.O.; Fattoh, S.E. Using firebase cloud messaging to control mobile applications. In Proceedings of the ICCCEEE, Khartoum, Sudan, 21–23 September 2019.

47. Bayt Alatfal Special Needs Preschool. Bayt Alatfal. Available online: https://ba.specialneedsnurserykw.com/en/ (accessed on 18 July 2023).

48. Guo, D.; Onstein, E. State-of-the-art geospatial information processing in NoSQL databases. ISPRS Int. J. Geo-Inf. 2020, 9, 331.

49. Diogo, M.; Cabral, B.; Bernardino, J. Consistency models of NoSQL databases. Future Internet 2019, 11, 43.

50. Bourhis, P.; Reutter, J.L.; Vrgoč, D. JSON: Data model and query languages. Inf. Syst. 2020, 89, 101478.

Figure 1. The five key performance indicators of SAR robots for ADT education in preschool or kindergarten.

Figure 2. Choregraphe modular program used as the front end of the application.

Figure 3. NAO and smartphone interaction structure.

Figure 4. Smartphone application functional structure after login.

Figure 5. System class diagram.

Figure 6. Smartphone application: (a) main page; (b) topic page; (c) lesson pages.

Figure 7. Child auto-identification protocol.

Figure 8. Protocol sequence of lesson “Say the object name”.

Figure 9. The robot performing at the preschool with autistic children during lesson sessions.

Figure 10. Animal cards that are presented to the child during the lessons; each card has the Arabic name on the top and the animal picture at the bottom.

Figure 11. Average number of eye contacts per student per working day for the control group.

Figure 12. Average number of eye contacts per student per working day for the experimental group.

Figure 13. Independent-sample Mann–Whitney U test graph for number of eye contacts across autism levels.

Figure 14. Independent-sample Kruskal–Wallis test graph for number of eye contacts across age.

Figure 15. Independent-sample Mann–Whitney U test for number of eye contacts across control.

Table 1. Requirement justification with its engineering reflection.

| Requirement # | Engineering Requirements | Justification |
|---|---|---|
| 1, 4, 5 | The robot should be able to move, hear, speak, see, and think. | The robot looks like a human, so when autistic children interact with it, it helps them interact with humans easily. |
| 2 | The robot shall be available five days a week, two hours daily. | The teaching sessions are very tiring for humans, so using a robot is very helpful. |
| 3 | The robot weighs 5.4 kg. | The robot should be easy to handle and transport. |
| 1, 4, 6 | The robot should be able to speak at least 200 words in Arabic. | Autistic children only understand their native language. |
| 5, 9, 10 | The robot should have face recognition ability, and the system should differentiate each child’s face. | Each child has their own field, score, and progress. This will help teachers to track each child’s progress separately. |
| 6 | The robot’s probability of failure on demand should be less than 1/1000. | The system should calculate the accurate score for the child. |
| 7, 8, 11 | The application shall not proceed to the following field unless the child completes several tests of the previous field with a score higher than 75/100. | This percentage will confirm that the information provided in the lesson has been fully understood by the child. |
| 10 | The application should have children’s scores and a progress analysis database. | This can also be used to promote good behavior, reward children for their progress and behavior, and show their progress to the teacher. |
| 11 | The application should have a simple interface and be learnable within 15 min. | People of different age groups should be able to use it easily. |
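The progression rule in Table 1 (requirements 7, 8, 11) can be illustrated with a minimal sketch. The function name `can_advance` and the `min_tests` parameter are hypothetical; the table says only "several tests", so the count of three and the reading that every counted test must exceed 75/100 are assumptions, not the authors' implementation.

```python
from typing import Sequence

PASS_THRESHOLD = 75  # from Table 1: score must be higher than 75/100
MIN_TESTS = 3        # assumption: Table 1 says "several tests" without a count


def can_advance(scores: Sequence[float],
                min_tests: int = MIN_TESTS,
                threshold: float = PASS_THRESHOLD) -> bool:
    """Return True when the child may move on to the next field.

    Assumption: each of the most recent `min_tests` scores must be
    strictly higher than the threshold.
    """
    if min_tests < 1 or len(scores) < min_tests:
        return False
    recent = scores[len(scores) - min_tests:]
    return all(score > threshold for score in recent)


if __name__ == "__main__":
    print(can_advance([80, 90, 76]))  # all three recent tests above 75
    print(can_advance([80, 75, 90]))  # 75 is not higher than 75
```

A stricter or looser rule (for example, an average above the threshold) would need only a change to the final line of the function.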

Table 2. Age distribution of the population.

| Age | N | % |
|---|---|---|
| 3 | 2 | 8.3% |
| 4 | 8 | 33.3% |
| 5 | 11 | 45.8% |
| 6 | 3 | 12.5% |

Table 3. Autism level of the population.

| Autism Level | N | % |
|---|---|---|
| mild | 13 | 54.2% |
| moderate | 11 | 45.8% |

Table 4. Tests of normality using Kolmogorov–Smirnov and Shapiro–Wilk methods.

| Variable | Kolmogorov–Smirnov ᵃ Statistic | Kolmogorov–Smirnov ᵃ df | Kolmogorov–Smirnov ᵃ Sig. | Shapiro–Wilk Statistic | Shapiro–Wilk df | Shapiro–Wilk Sig. |
|---|---|---|---|---|---|---|
| Age | 0.243 | 864 | <0.001 | 0.873 | 864 | <0.001 |
| Autism Level | 0.384 | 864 | <0.001 | 0.626 | 864 | <0.001 |

Table 5. Independent-sample Mann–Whitney U test summary for number of eye contacts across autism levels.

| Statistic | Value |
|---|---|
| Total N | 864 |
| Mann–Whitney U | 76,946.500 |
| Wilcoxon W | 141,926.500 |
| Test Statistic | 76,946.500 |
| Standard Error | 3563.302 |
| Standardized Test Statistic | −3.865 |
| Asymptotic Sig. (2-sided test) | <0.001 |

Table 6. Independent-sample Kruskal–Wallis test summary for number of eye contacts across age.

| Statistic | Value |
|---|---|
| Total N | 864 |
| Test Statistic | 1.739 ᵃ,ᵇ |
| Degrees of Freedom | 3 |
| Asymptotic Sig. (2-sided test) | 0.628 |
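As a rough cross-check of Table 6, the asymptotic significance of a Kruskal–Wallis statistic is usually taken from a chi-square distribution with (number of groups − 1) degrees of freedom. The sketch below assumes that convention and uses the closed-form chi-square survival function for 3 degrees of freedom, so it needs only the standard library. The function name `chi2_sf_df3` is hypothetical.

```python
import math


def chi2_sf_df3(x: float) -> float:
    """Survival function P(X > x) of a chi-square variable with 3 df.

    Closed form: erfc(sqrt(x/2)) + sqrt(2x/pi) * exp(-x/2), valid for x >= 0.
    """
    if x < 0:
        raise ValueError("x must be non-negative")
    return math.erfc(math.sqrt(x / 2)) + math.sqrt(2 * x / math.pi) * math.exp(-x / 2)


# Test statistic reported in Table 6 (four age groups, hence 3 df).
h_statistic = 1.739
p_value = chi2_sf_df3(h_statistic)
print(f"p = {p_value:.3f}")
```

Under this assumption the recomputed value agrees with the 0.628 reported in Table 6 to three decimals.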

Table 7. Independent-sample Mann–Whitney U test summary for number of eye contacts across control.

| Statistic | Value |
|---|---|
| Total N | 864 |
| Mann–Whitney U | 50,070.500 |
| Wilcoxon W | 143,598.500 |
| Test Statistic | 50,070.500 |
| Standard Error | 3613.848 |
| Standardized Test Statistic | −11.966 |
| Asymptotic Sig. (2-sided test) | <0.001 |
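The Mann–Whitney summaries in Tables 5 and 7 can be checked for internal consistency with a short sketch. It assumes the usual relations W = U + n(n + 1)/2 (with n the size of the group whose rank sum is W) and z = (U − n₁n₂/2) / SE. The group sizes are inferred from the reported numbers, not stated in the tables, and the function name `mann_whitney_summary` is hypothetical.

```python
import math


def mann_whitney_summary(u: float, w: float, total_n: int, std_error: float) -> dict:
    """Recover group sizes and the standardized statistic from reported values."""
    # Assumed relation: W = U + n(n + 1)/2, so solve n(n + 1)/2 = W - U for n.
    rank_offset = w - u
    n_w = round((-1 + math.sqrt(1 + 8 * rank_offset)) / 2)
    n_other = total_n - n_w
    mean_u = n_w * n_other / 2  # expected U under the null hypothesis
    z = (u - mean_u) / std_error
    return {"n_w": n_w, "n_other": n_other, "mean_u": mean_u, "z": z}


# Values copied from Table 5 (autism level) and Table 7 (control vs. experimental).
table5 = mann_whitney_summary(76_946.5, 141_926.5, 864, 3563.302)
table7 = mann_whitney_summary(50_070.5, 143_598.5, 864, 3613.848)
print(table5)
print(table7)
```

Under these assumptions the reported values imply groups of 360 and 504 observations in Table 5 and 432 observations each in Table 7, and the recomputed standardized statistics match the tabulated ones up to rounding of the standard error.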

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).