Slice-Aided Defect Detection in Ultra High-Resolution Wind Turbine Blade Images

Abstract

1. Introduction

2. Related Work

- We propose a defect detection framework that is capable of incorporating a realistic slice-aided inference strategy for object detection in ultra high-resolution images.

- We present a benchmark comparison of our framework on several state-of-the-art deep learning detection baselines and slicing strategies for WTB inspection.

- We provide an extensive evaluation on an ultra high-resolution drone image dataset, demonstrating significant improvements in the detection of small- and medium-size WTB defects.

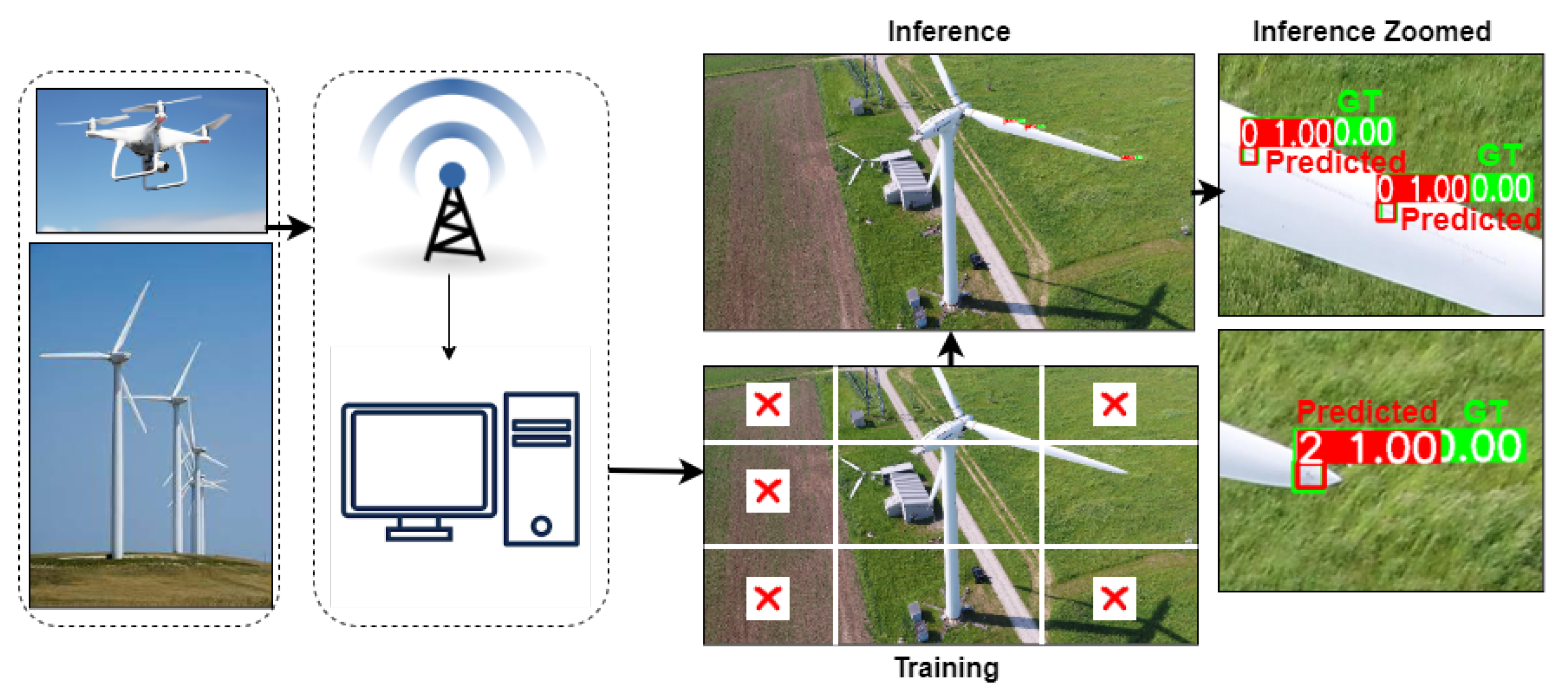

3. Proposed Framework

3.1. Dataset

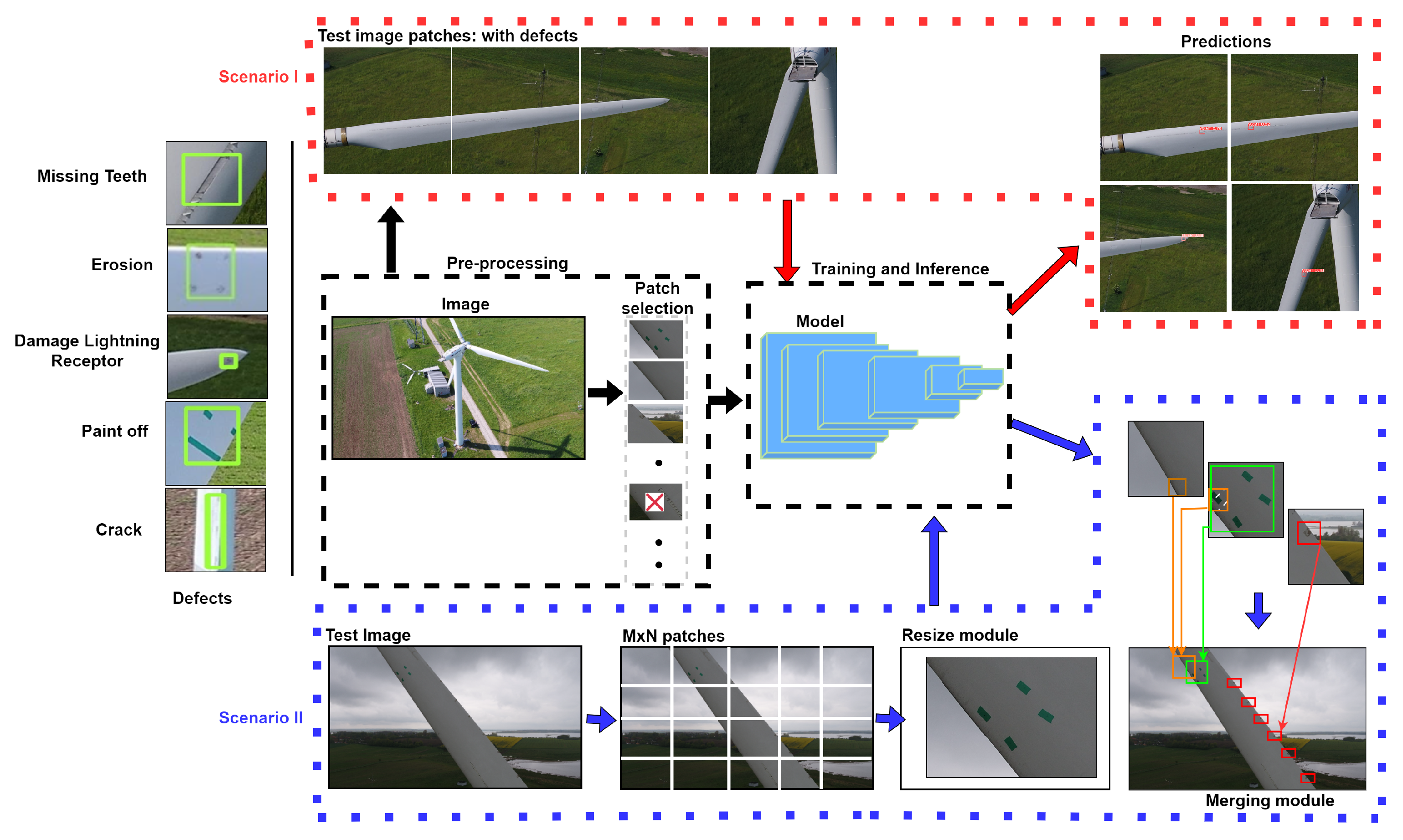

3.2. Wind Turbine Blade Surface Defects

- Missing Teeth (MT): this surface defect refers to the absence of teeth in the vortex generator panel, which is a crucial component of the wind turbine blade. Detecting missing teeth is essential for ensuring optimal aerodynamic performance.

- Erosion (ER): erosion represents a type of surface defect in which the surface of the wind turbine blade undergoes gradual deterioration due to environmental factors or prolonged exposure to natural elements. Although erosion does not pose immediate problems, it necessitates regular maintenance.

- Damage Lightning Receptor (DA): the lightning receptor plays a vital role in safeguarding the wind turbine blade against lightning strikes. Identifying any surface damage to the lightning receptor is crucial for assessing its functionality and ensuring effective protection.

- Crack (CR): surface cracks in wind turbine blades are considered critical defects, as they can lead to structural instability and potentially result in catastrophic failure. Detecting and localizing surface cracks is essential for prompt maintenance and preventing further damage.

- Paint-Off (PO): paint-off refers to the loss or peeling of the protective paint layer on the wind turbine blade’s surface. While not directly problematic, it signifies the need for maintenance to preserve the blade’s integrity.
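For annotation or training configuration, the five defect classes above can be captured as a small lookup table. The sketch below is only an illustration: the abbreviations and names come from the list above, while the names `DEFECT_CLASSES` and `class_id` and the integer ids (which follow the list order) are assumptions, not taken from the dataset's label files.

```python
# Hypothetical label map for the five defect classes listed above.
# Abbreviations and names are from the text; the ordering, and therefore
# the integer ids, is an assumption made for illustration.
DEFECT_CLASSES = {
    "MT": "Missing Teeth",
    "ER": "Erosion",
    "DA": "Damage Lightning Receptor",
    "CR": "Crack",
    "PO": "Paint-Off",
}


def class_id(abbreviation: str) -> int:
    """Return a zero-based id following the insertion order of DEFECT_CLASSES."""
    return list(DEFECT_CLASSES).index(abbreviation)
```

A detector's training config would typically need such a mapping to translate annotation labels into class indices.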

3.3. Dataset Annotation

3.4. Pre-Processing

3.5. Detection Framework

3.6. Inference Strategies

3.6.1. Scenario I: Patch-Based Inference

3.6.2. Scenario II: Slice-Aided Inference
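As a rough illustration of the slicing idea named in this heading, the sketch below tiles a large image into overlapping windows and maps a box predicted inside one window back to full-image coordinates. It is a generic sketch, not the authors' implementation: the function names, the default overlap ratio of 0.2, and the image size used in the example are all assumptions.

```python
from typing import List, Tuple

Box = Tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max)
Window = Tuple[int, int, int, int]       # same layout, in full-image pixels


def slice_origins(length: int, slice_size: int, overlap: float) -> List[int]:
    """Start offsets of overlapping windows that cover [0, length)."""
    if length <= slice_size:
        return [0]
    step = max(1, int(slice_size * (1.0 - overlap)))
    origins = list(range(0, length - slice_size, step))
    origins.append(length - slice_size)  # last window sits flush with the border
    return origins


def slice_windows(width: int, height: int, slice_size: int,
                  overlap: float = 0.2) -> List[Window]:
    """Square windows, row by row, covering a width x height image."""
    return [
        (x, y, min(x + slice_size, width), min(y + slice_size, height))
        for y in slice_origins(height, slice_size, overlap)
        for x in slice_origins(width, slice_size, overlap)
    ]


def to_image_coords(box: Box, window: Window) -> Box:
    """Shift a box predicted inside `window` into full-image coordinates."""
    x0, y0 = window[0], window[1]
    return (box[0] + x0, box[1] + y0, box[2] + x0, box[3] + y0)


# Example with a hypothetical 2048 x 1024 image and non-overlapping slices.
windows = slice_windows(2048, 1024, slice_size=1024, overlap=0.0)
shifted = to_image_coords((10, 20, 30, 40), windows[1])
```

In a full pipeline, the detector would run on each window and the shifted boxes from overlapping windows would then be merged, for instance with non-maximum suppression; that step is left out here.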

4. Experiments

4.1. Evaluation Details

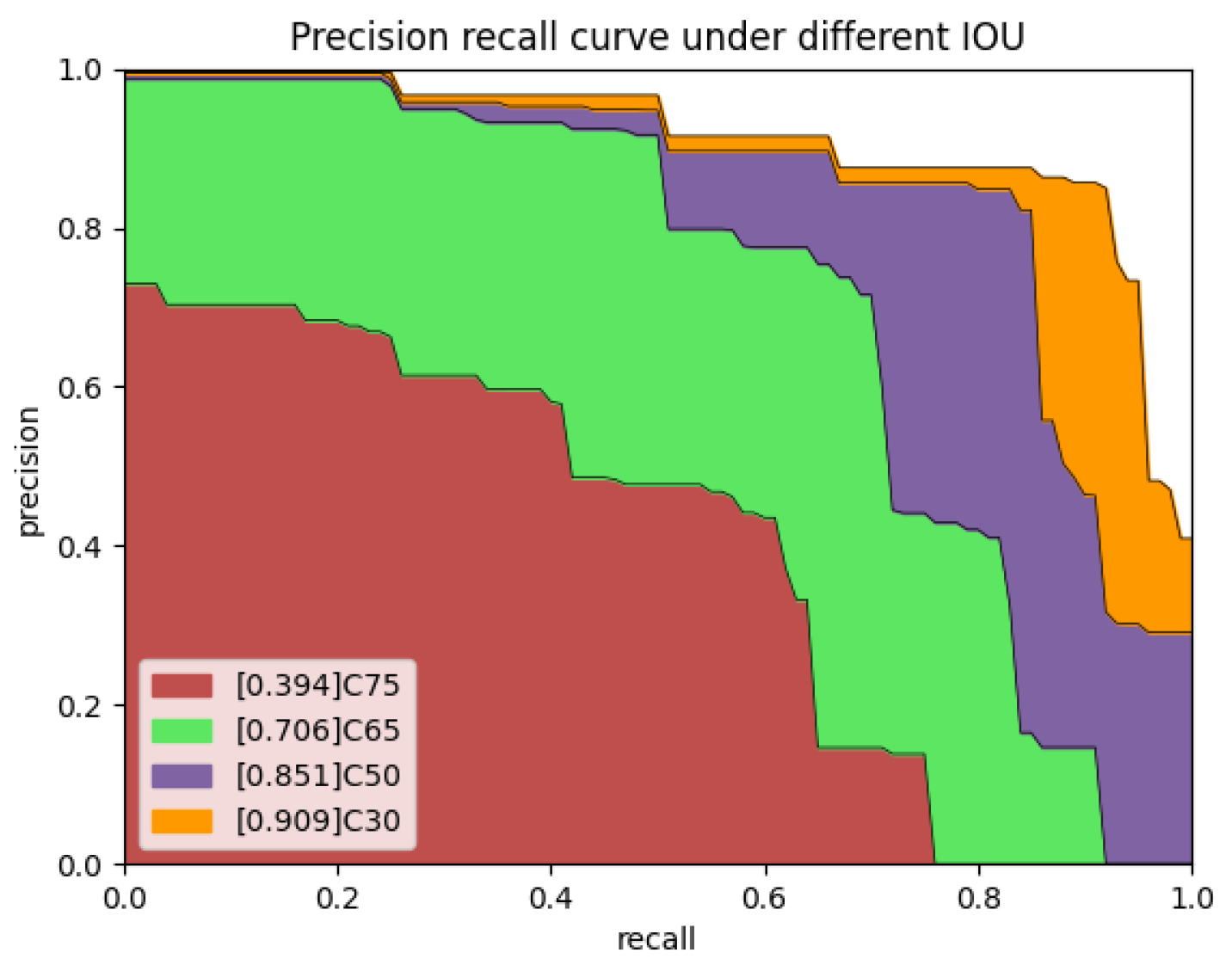

4.2. Performance Metric
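The result tables report mAP@.5 and mAP@.5-.95. Assuming the usual COCO-style convention, a prediction counts as correct when its intersection over union (IoU) with a ground-truth box reaches a threshold: 0.5 for mAP@.5, and an average over thresholds from 0.5 to 0.95 in steps of 0.05 for mAP@.5-.95. The sketch below shows only the IoU computation and the threshold grid; the names `iou` and `IOU_THRESHOLDS` are illustrative.

```python
from typing import Tuple

Box = Tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max)


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


# Threshold grid assumed for mAP@.5-.95 (COCO-style): 0.50, 0.55, ..., 0.95.
IOU_THRESHOLDS = [round(0.5 + 0.05 * i, 2) for i in range(10)]
```

Computing the full mAP additionally requires ranking predictions by confidence and integrating the precision-recall curve per class, which is not shown.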

4.3. Training Configurations

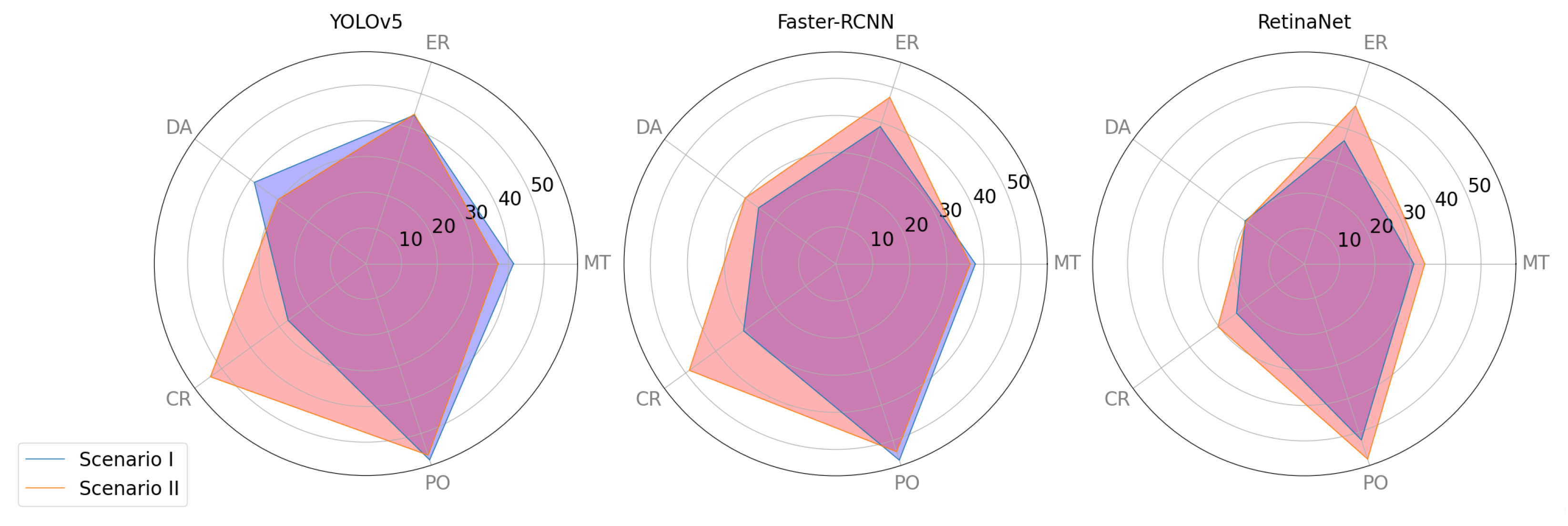

4.4. Results and Discussion

4.4.1. Overall Results

4.4.2. Class-Wise Results

4.4.3. Visual Comparisons

4.4.4. Efficiency

5. Discussion

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| DTU | Technical University of Denmark |
| WTB | Wind Turbine Blade |
| CNN | Convolutional Neural Network |
| DNN | Deep Neural Network |
| UAV | Unmanned Aerial Vehicle |
| HD | High Definition |
| YOLO | You Only Look Once |
| mAP | Mean Average Precision |


| (a) Size | (a) Images | (a) Labels | (a) mAP@.5 | (a) mAP@.5-.95 | (b) Size | (b) Patches/Image | (b) mAP@.5 | (b) mAP@.5-.95 |
|---|---|---|---|---|---|---|---|---|
| 640 | 888 | 1055 | 79.7 | 45.2 | 512 | 84 | 82.4 | 45.4 |
| 800 | 766 | 953 | 80.4 | 43.5 | 800 | 40 | 83.1 | 45.7 |
| 1024 | 598 | 796 | 85.5 | 46.9 | 1024 | 24 | 82.9 | 45.6 |
| 2048 | 362 | 666 | 85.4 | 48.3 | 2048 | 6 | 81.6 | 44.8 |

| Models | Scenario-I (small) | Scenario-I (medium) | Scenario-I (large) | Scenario-II (small) | Scenario-II (medium) | Scenario-II (large) | Difference (S) | Difference (M) | Difference (L) |
|---|---|---|---|---|---|---|---|---|---|
| YOLOv5 | 27.3 | 47.5 | 51.4 | 25.2 | 48.1 | 53.1 | −2.1 | 0.6 | 1.7 |
| Faster-RCNN | 16 | 42.3 | 63.8 | 29.2 | 48.5 | 50.5 | 13.2 | 6.2 | −13.3 |
| RetinaNet | 13.3 | 36.7 | 65.6 | 18.1 | 43.5 | 50.1 | 4.8 | 6.8 | −15.5 |
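The Difference columns in the table above are the Scenario-II score minus the Scenario-I score for each object size. The short check below reproduces them from the tabulated values; the variable and function names are illustrative.

```python
# (small, medium, large) scores copied from the table above.
scenario_1 = {
    "YOLOv5": (27.3, 47.5, 51.4),
    "Faster-RCNN": (16.0, 42.3, 63.8),
    "RetinaNet": (13.3, 36.7, 65.6),
}
scenario_2 = {
    "YOLOv5": (25.2, 48.1, 53.1),
    "Faster-RCNN": (29.2, 48.5, 50.5),
    "RetinaNet": (18.1, 43.5, 50.1),
}


def differences(before, after):
    """Per-model (S, M, L) change, computed as after - before, to one decimal."""
    return {
        model: tuple(round(a - b, 1) for b, a in zip(before[model], after[model]))
        for model in before
    }


diff = differences(scenario_1, scenario_2)
```

Positive values indicate that Scenario-II scored higher than Scenario-I for that object size; negative values indicate the reverse.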

| Models | mAP@.50 (Scenario-I) | mAP@.50 (Scenario-II) | mAP@.5-.95 (Scenario-I) | mAP@.5-.95 (Scenario-II) |
|---|---|---|---|---|
| YOLOv5 | 81.3 | 85.1 | 41.7 | 44.2 |
| Faster-RCNN | 73.2 | 83.4 | 37.8 | 43.1 |
| RetinaNet | 70.6 | 70.4 | 32.9 | 37.9 |

| Classes | YOLOv5 (Scenario-I) | YOLOv5 (Scenario-II) | Faster-RCNN (Scenario-I) | Faster-RCNN (Scenario-II) | RetinaNet (Scenario-I) | RetinaNet (Scenario-II) |
|---|---|---|---|---|---|---|
| MT | 41.4 | 37.1 | 37.7 | 36.3 | 31 | 34.1 |
| ER | 43.7 | 44 | 38.9 | 47.2 | 36.6 | 46.9 |
| DA | 38.7 | 30.5 | 25.7 | 30.2 | 20.7 | 20.4 |
| CR | 27 | 53.9 | 30.7 | 48.8 | 23.7 | 30.2 |
| PO | 57.8 | 56.4 | 55.6 | 53.2 | 52.3 | 58 |


© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Gohar, I.; Halimi, A.; See, J.; Yew, W.K.; Yang, C. Slice-Aided Defect Detection in Ultra High-Resolution Wind Turbine Blade Images. Machines 2023, 11, 953. https://doi.org/10.3390/machines11100953
