Virtual and Real Bidirectional Driving System for the Synchronization of Manipulations in Robotic Joint Surgeries

Abstract

1. Introduction

1.1. Background and Significance

1.2. Aims and Contributions

2. Materials and Methods

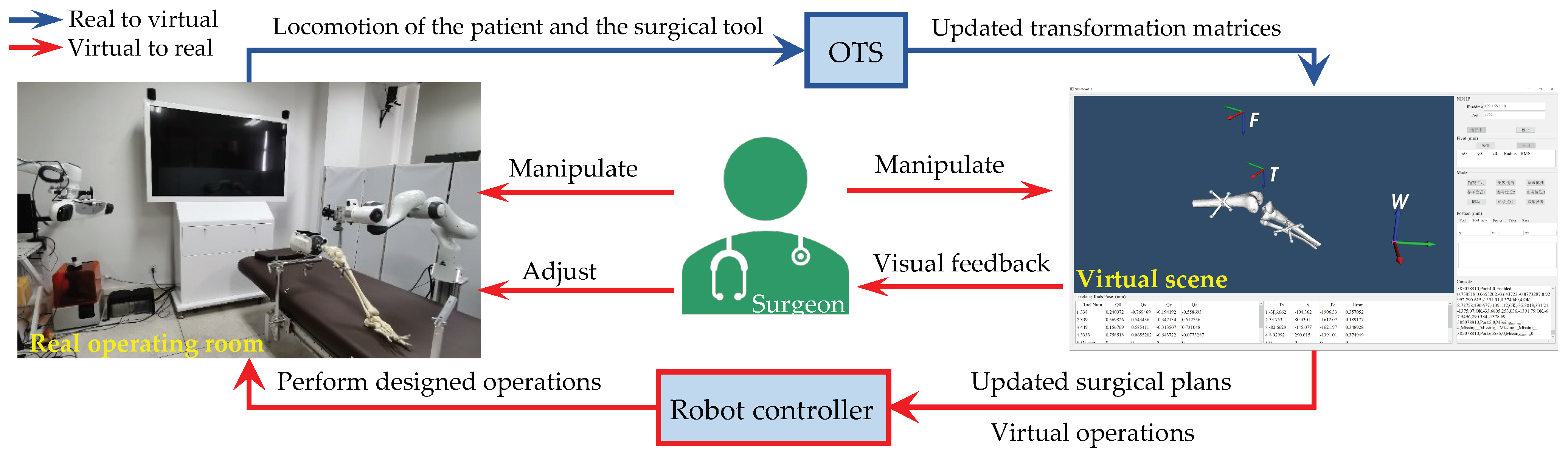

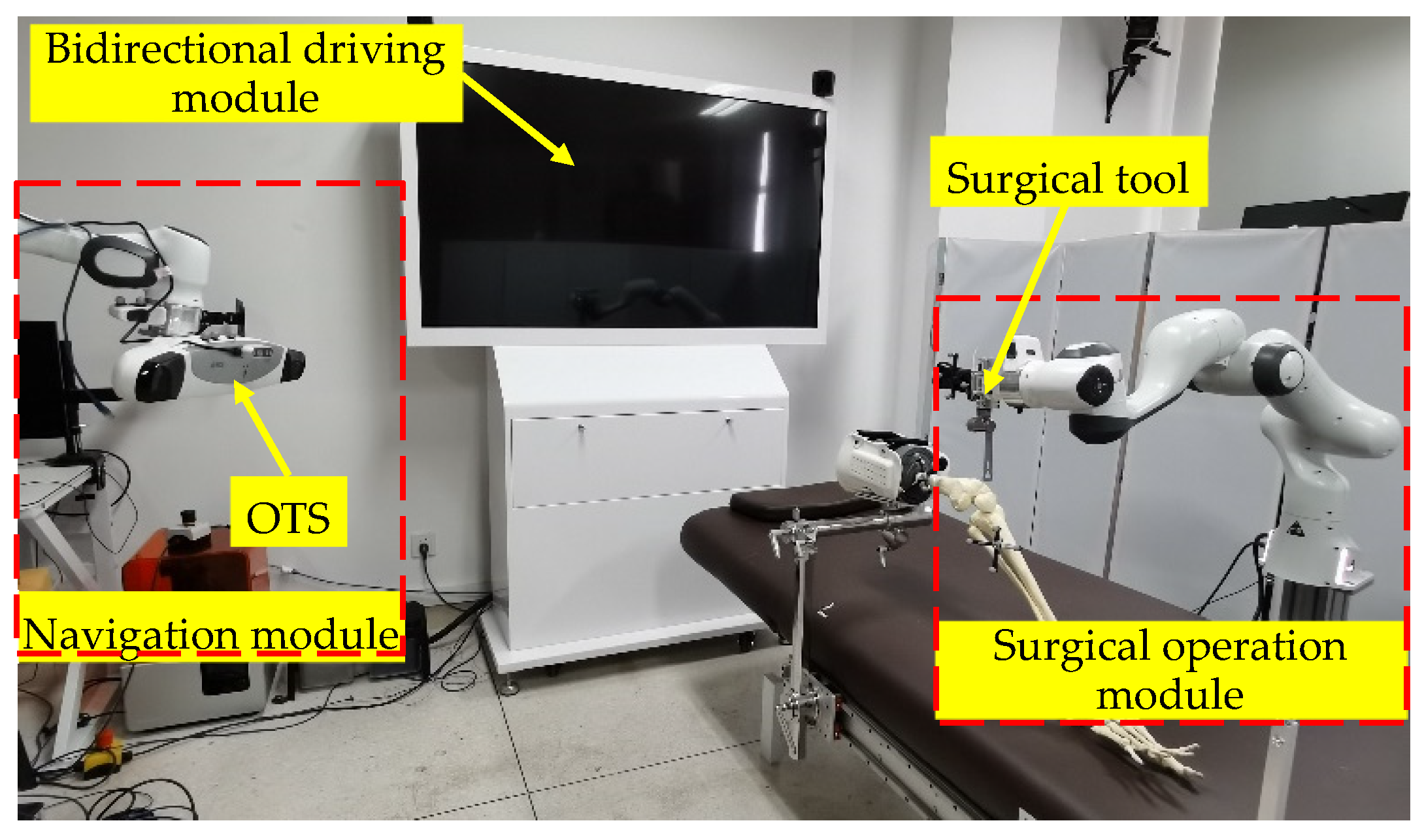

2.1. Universal Robotic Surgery System for Orthopedic Surgeries

2.2. Bidirectional Driving Method

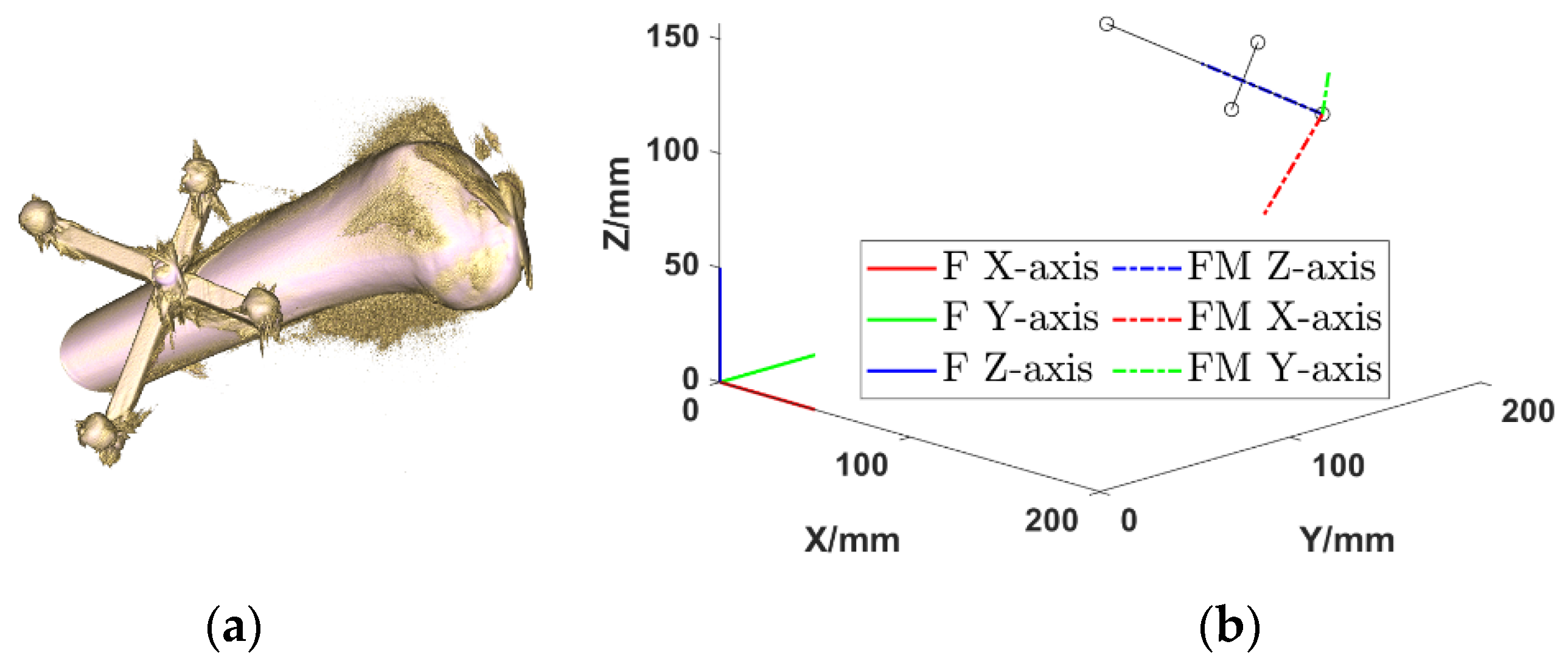

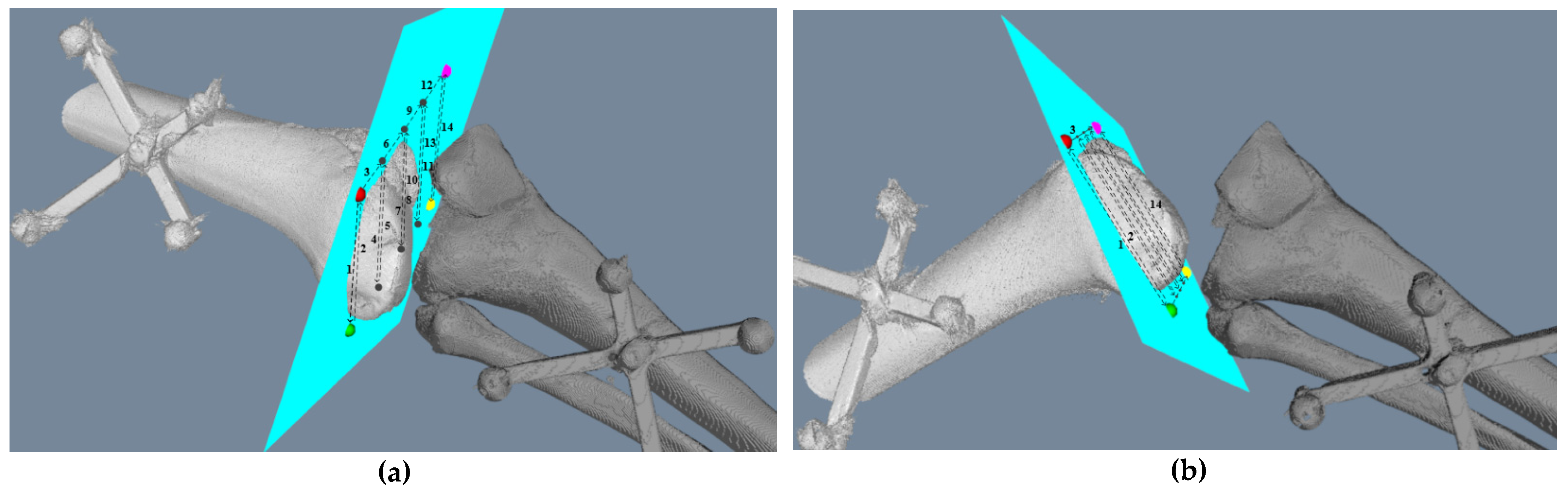

2.2.1. Intraoperative Registration

2.2.2. Real to Virtual Mapping

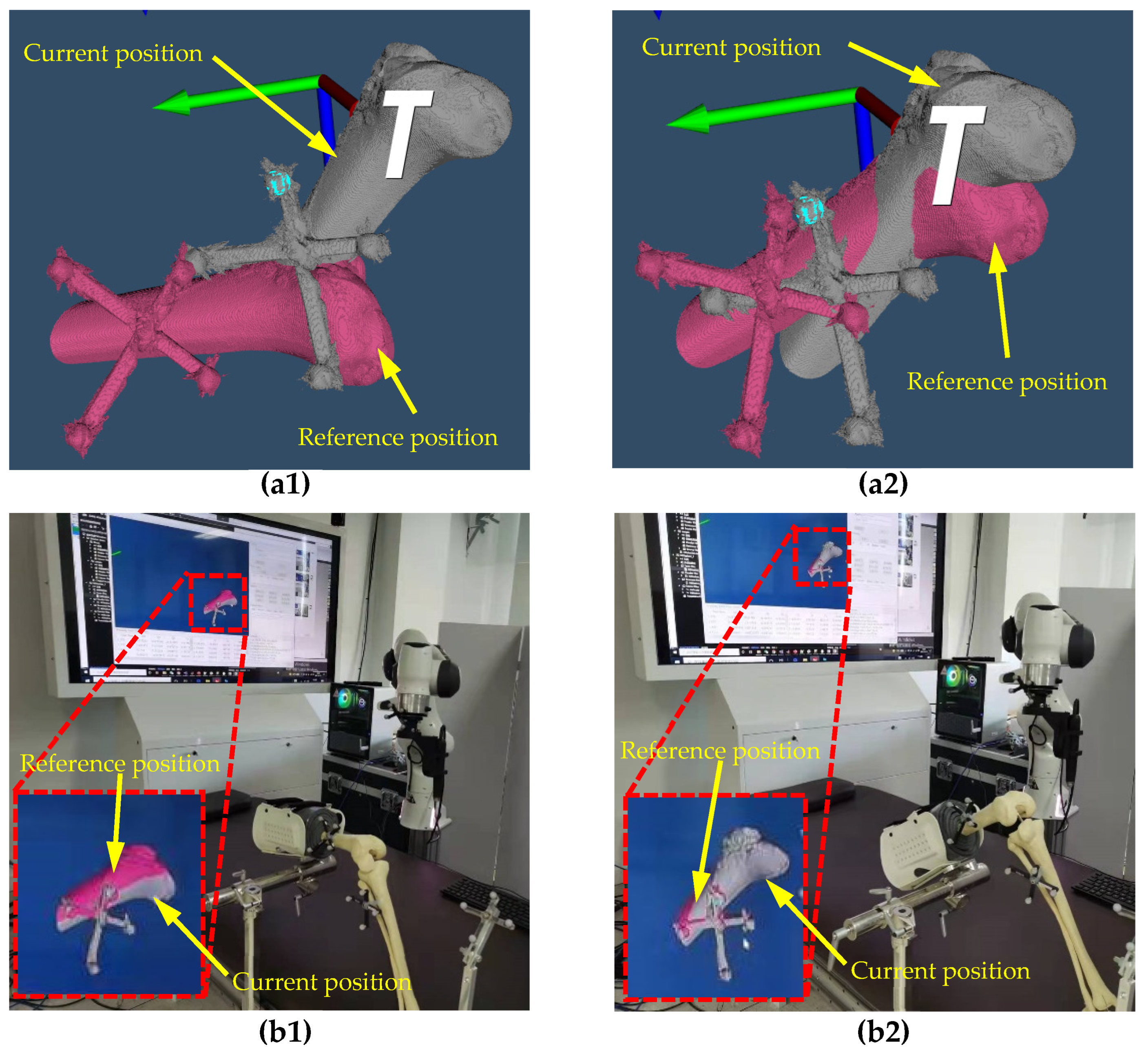

2.2.3. Virtual to Real Mapping

3. Results

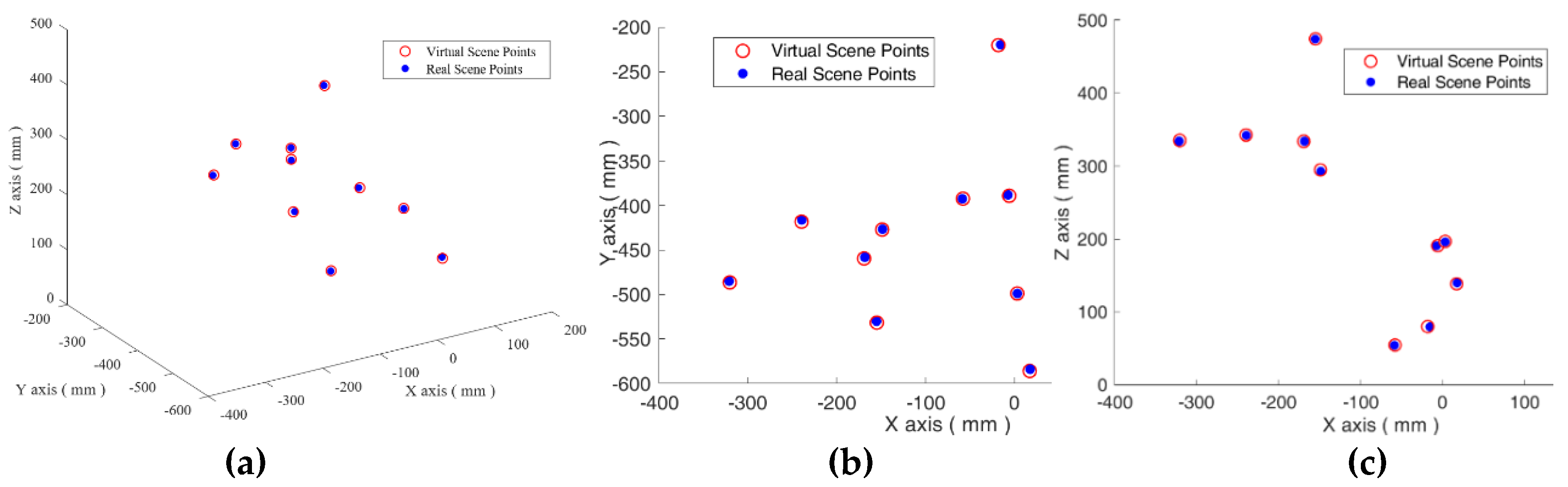

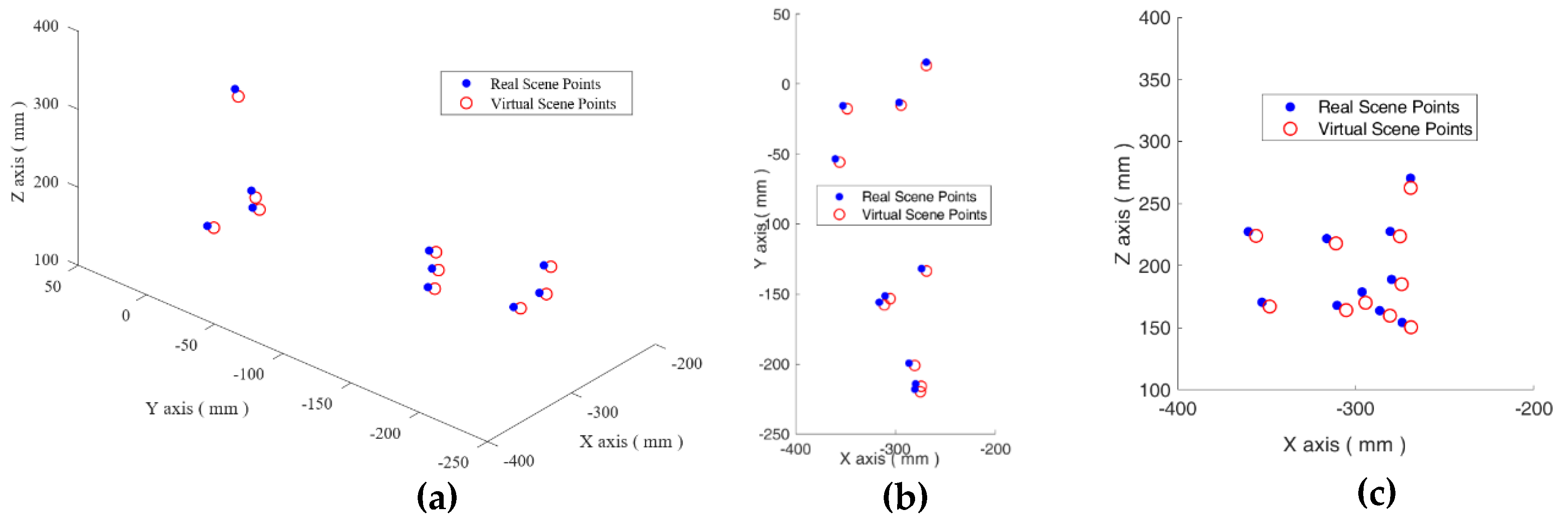

3.1. The Real to Virtual Mapping

3.2. The Virtual to Real Mapping

4. Discussion

5. Conclusions

Author Contributions

Funding

Informed Consent Statement

Conflicts of Interest


| Distance | Ground Truth | Calculated | Error |
|---|---|---|---|
| A-C | 88.00 | 87.99 | 0.01 |
| A-B | 50.00 | 50.07 | 0.07 |
| A-D | 60.00 | 59.96 | 0.04 |
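The Error column of the distance table appears to be the absolute difference between the ground-truth and calculated distances. The short Python sketch below assumes exactly that (the table itself states neither the formula nor the units) and recomputes each error together with the mean absolute error. The names `DISTANCES` and `distance_errors` are hypothetical illustration names.

```python
from statistics import mean

# (ground truth, calculated) pairs transcribed from the distance table above.
# Assumption: Error = |ground truth - calculated|; units are not stated.
DISTANCES = {
    "A-C": (88.00, 87.99),
    "A-B": (50.00, 50.07),
    "A-D": (60.00, 59.96),
}


def distance_errors(rows):
    """Return the absolute error |ground truth - calculated| per distance."""
    return {name: abs(gt - calc) for name, (gt, calc) in rows.items()}


if __name__ == "__main__":
    errors = distance_errors(DISTANCES)
    for name, err in errors.items():
        print(f"{name}: {err:.2f}")
    print(f"mean absolute error: {mean(errors.values()):.2f}")
```

Under this assumption the recomputed values match the table (0.01, 0.07, 0.04), and the mean absolute error over the three distances is 0.04.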

| Group ID | x | y | z | Error |
|---|---|---|---|---|
| 1 | −239.613 | −418.146 | 342.410 | 2.074 |
|  | −239.347 | −416.181 | 341.804 |  |
| 2 | −57.909 | −392.237 | 54.354 | 0.796 |
|  | −58.599 | −392.486 | 54.045 |  |
| 3 | −148.851 | −427.016 | 294.940 | 1.967 |
|  | −148.436 | −426.605 | 293.062 |  |
| 4 | −18.036 | −220.221 | 79.7501 | 2.660 |
|  | −15.481 | −219.647 | 79.284 |  |
| 5 | −320.424 | −486.398 | 335.061 | 1.799 |
|  | −321.172 | −485.076 | 334.097 |  |
| 6 | −169.222 | −459.504 | 333.856 | 1.556 |
|  | −168.376 | −458.199 | 333.909 |  |
| 7 | −154.900 | −531.637 | 474.282 | 1.653 |
|  | −155.192 | −530.049 | 473.930 |  |
| 8 | 3.228 | −498.840 | 196.662 | 0.638 |
|  | 3.384 | −498.817 | 196.044 |  |
| 9 | 17.274 | −585.962 | 138.410 | 2.383 |
|  | 17.820 | −584.000 | 139.648 |  |
| 10 | −5.848 | −389.018 | 190.731 | 1.707 |
|  | −7.243 | −388.035 | 190.715 |  |

| Group ID | x | y | z | Error |
|---|---|---|---|---|
| 1 | −348.460 | −17.688 | 166.969 | 5.951 |
|  | −352.806 | −15.579 | 170.445 |  |
| 2 | −305.445 | −153.240 | 164.060 | 6.677 |
|  | −310.590 | −151.312 | 167.853 |  |
| 3 | −311.214 | −157.672 | 217.823 | 6.728 |
|  | −316.372 | −155.790 | 221.712 |  |
| 4 | −275.199 | −219.73 | 223.532 | 6.955 |
|  | −280.684 | −217.969 | 227.429 |  |
| 5 | −274.236 | −215.848 | 184.939 | 7.119 |
|  | −279.947 | −214.171 | 188.845 |  |
| 6 | −280.836 | −201.004 | 159.612 | 7.203 |
|  | −286.582 | −199.388 | 163.643 |  |
| 7 | −268.969 | −133.468 | 150.278 | 6.518 |
|  | −273.956 | −131.776 | 154.119 |  |
| 8 | −356.130 | −55.900 | 223.897 | 6.018 |
|  | −360.498 | −53.494 | 227.266 |  |
| 9 | −294.561 | −15.104 | 170.007 | 9.143 |
|  | −296.504 | −13.204 | 178.737 |  |
| 10 | −269.176 | 13.186 | 262.541 | 8.202 |
|  | −269.280 | 15.594 | 270.381 |  |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Qin, Y.; Ma, M.; Shen, L.; Wang, H.; Han, J. Virtual and Real Bidirectional Driving System for the Synchronization of Manipulations in Robotic Joint Surgeries. Machines 2022, 10, 530. https://doi.org/10.3390/machines10070530
