Autonomous Visual Navigation for a Flower Pollination Drone

Abstract

1. Introduction

- We demonstrated the real-time deployment of deep-learning-based computer vision on board a resource-restricted embedded processing platform.

- We developed a methodology to train the necessary neural networks on a partially real, partially synthesized dataset.

- We successfully demonstrated a two-stage visual servoing procedure for approaching a flower.

2. Related Work

2.1. Drone Navigation

2.2. Visual Servoing

2.3. PID Control Loops

2.4. Related Work on Artificial Pollination

2.5. Hardware Platforms for On-Board Computer Vision Based Navigation

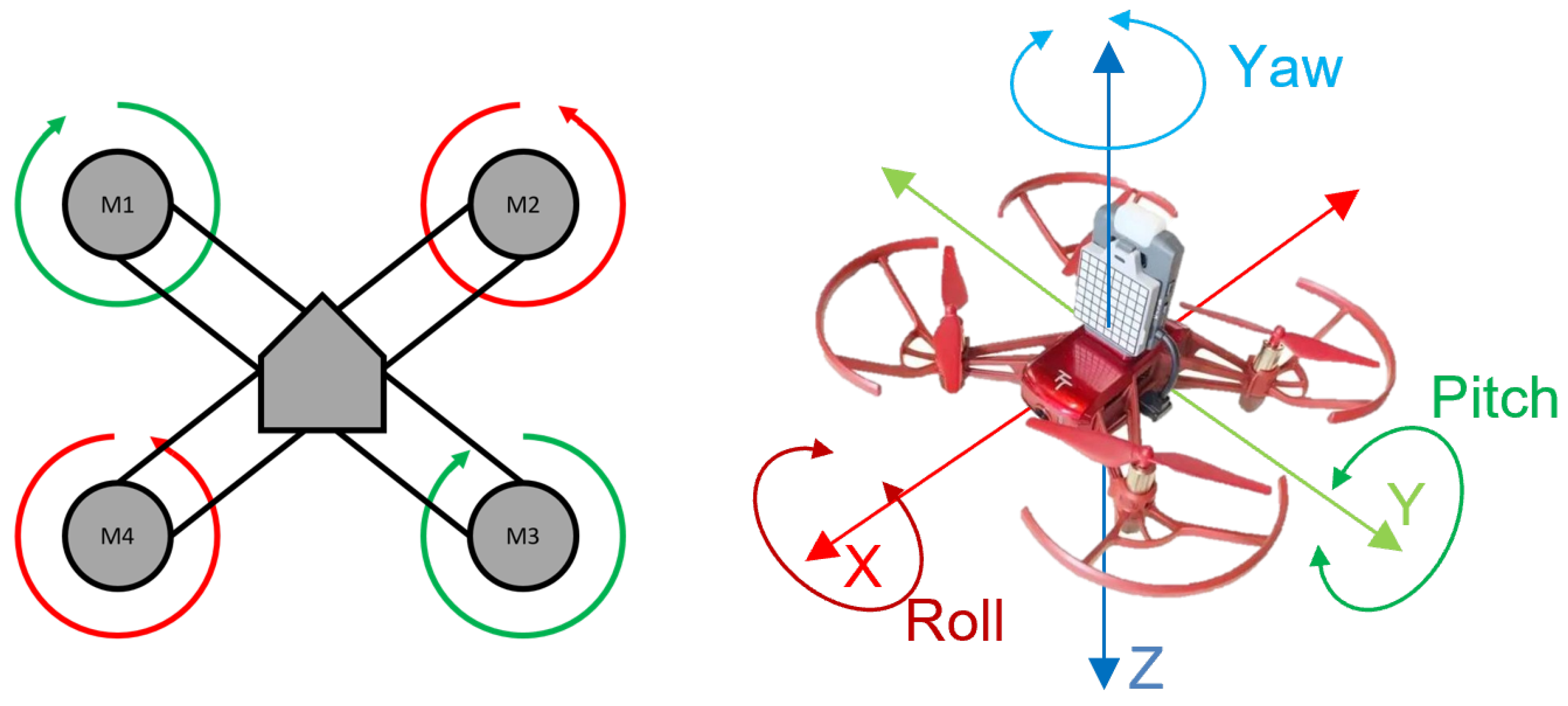

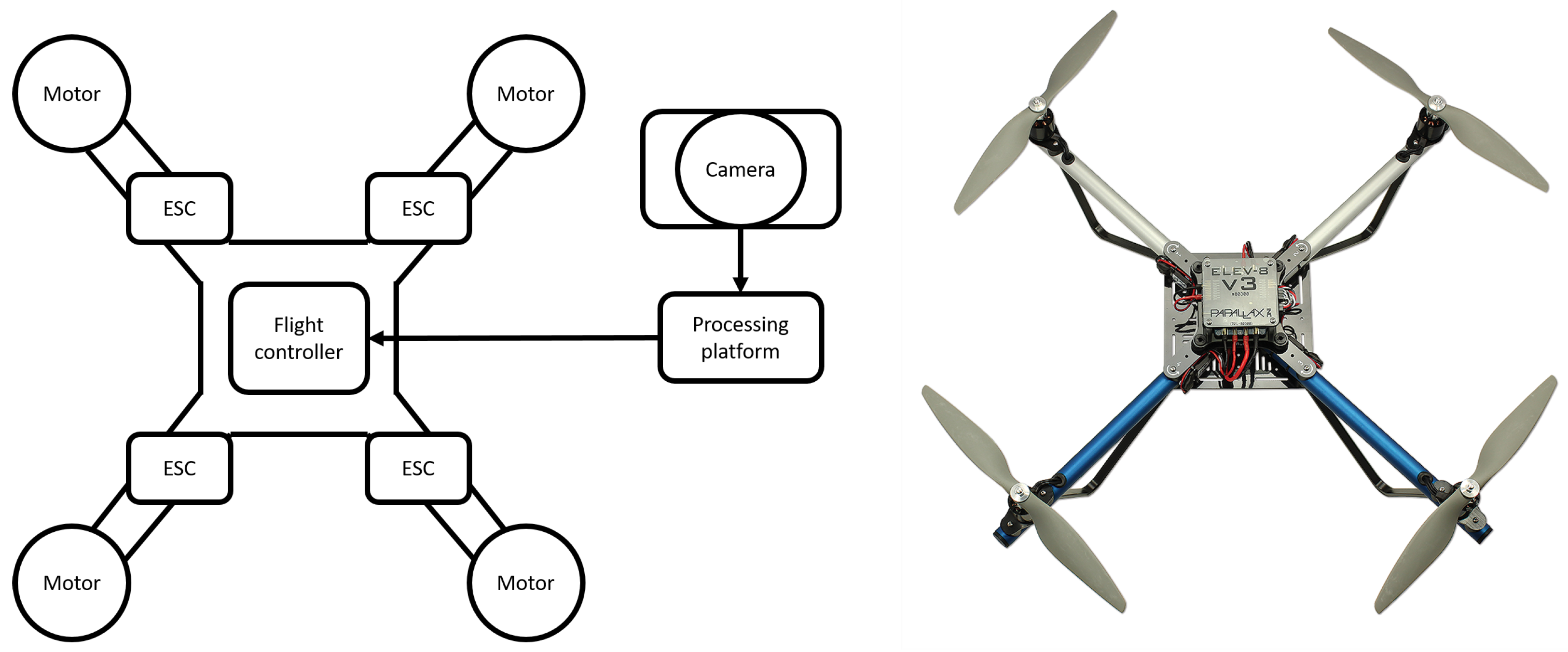

3. Autonomous Drone Navigation

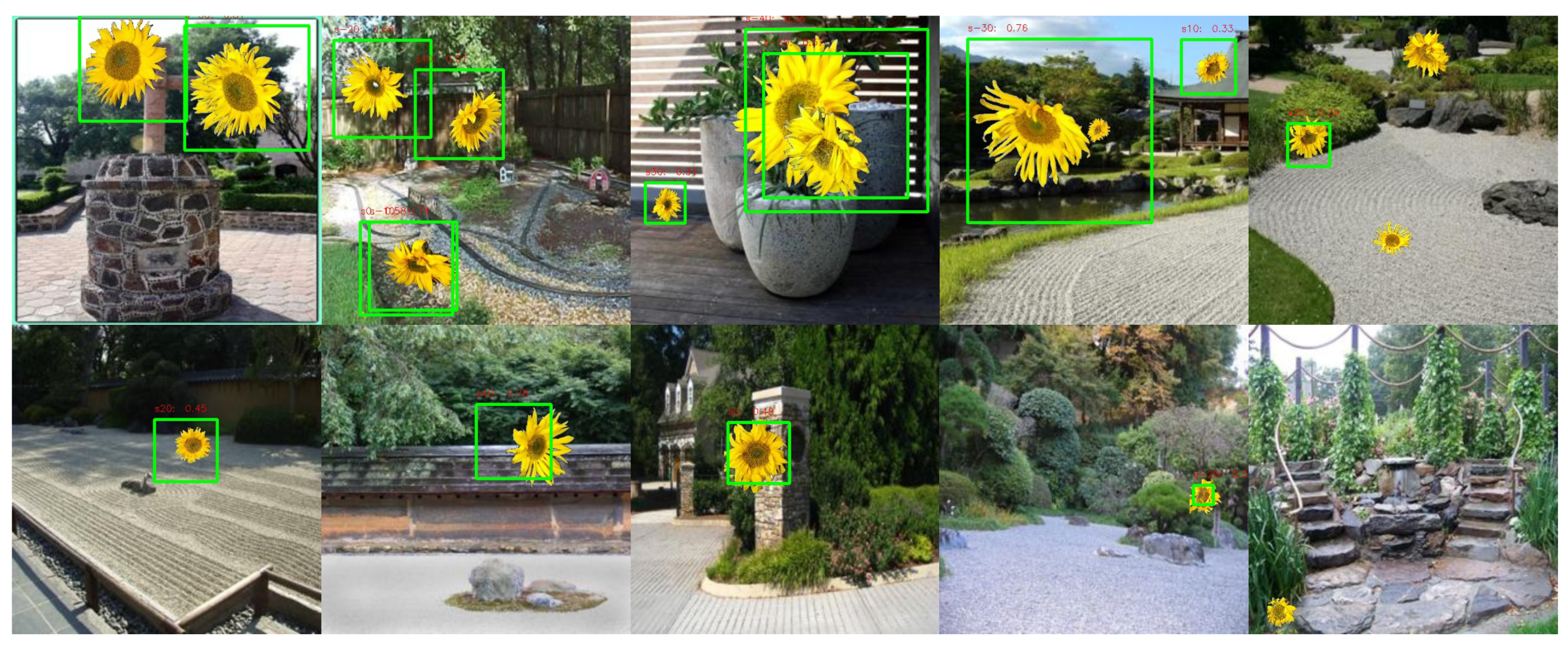

3.1. Flower Dataset

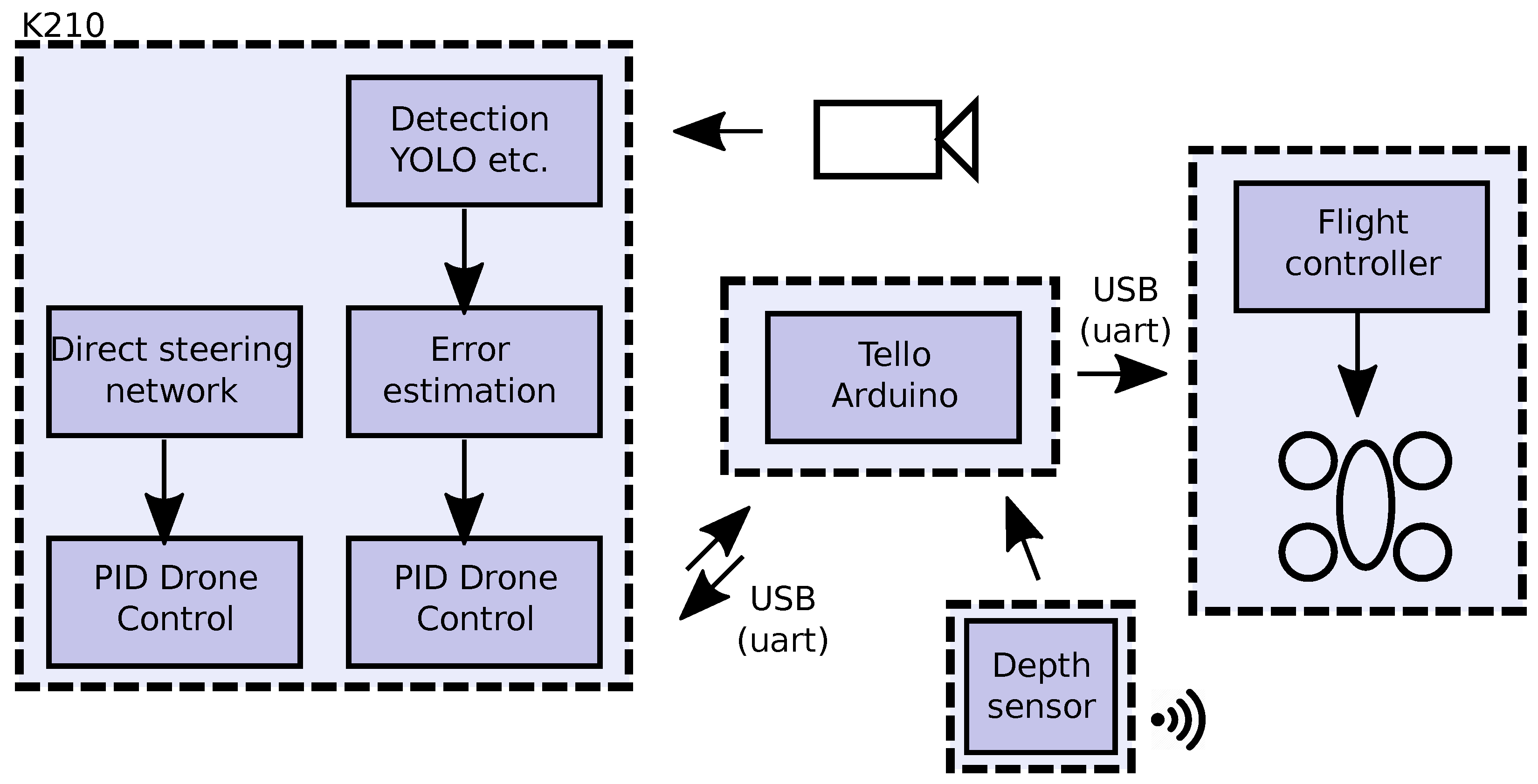

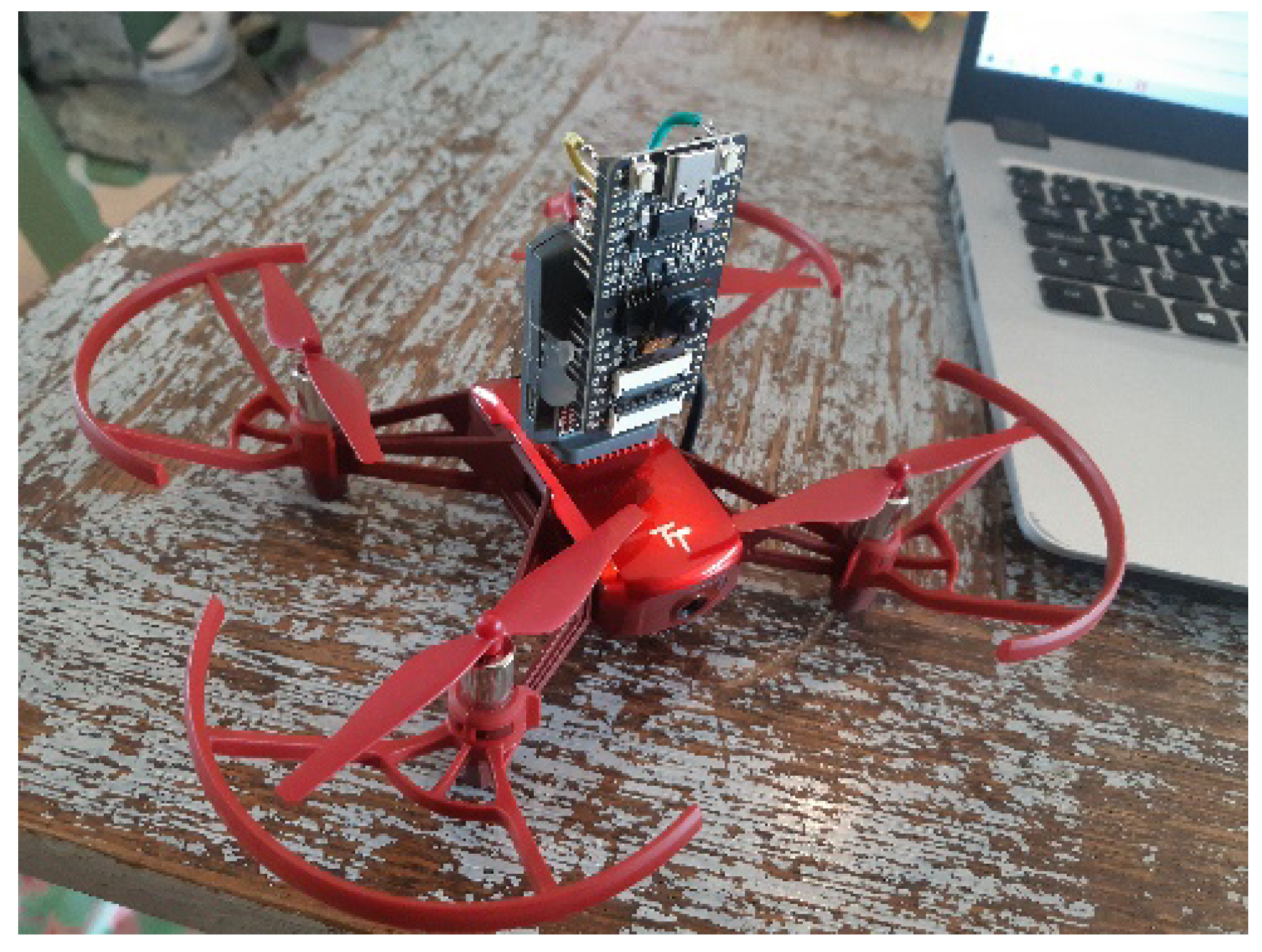
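The training data is described as partially real and partially synthesized. One common way to synthesize detection data, given here only as a hypothetical sketch (this section does not describe the authors' actual pipeline), is to paste a cut-out flower with an alpha mask onto a background image and record the pasted region as the bounding-box label. The function name `composite_flower`, the RGBA input format and the use of NumPy are assumptions.

```python
import numpy as np


def composite_flower(background, flower_rgba, rng):
    """Hypothetical compositing step: paste an RGBA flower crop onto an RGB background.

    Returns the composited uint8 image and the bounding box of the pasted crop
    as (x_min, y_min, x_max, y_max). The box is the crop extent, so it is only
    tight if the crop is cut closely around the flower (an assumption).
    """
    bh, bw = background.shape[:2]
    fh, fw = flower_rgba.shape[:2]
    if fh > bh or fw > bw:
        raise ValueError("flower crop must fit inside the background")
    # Random top-left corner chosen so the crop stays fully inside the frame.
    y0 = int(rng.integers(0, bh - fh + 1))
    x0 = int(rng.integers(0, bw - fw + 1))
    out = background.astype(np.float32).copy()
    # Per-pixel alpha blending: alpha = 1 shows the flower, alpha = 0 the background.
    alpha = flower_rgba[..., 3:4].astype(np.float32) / 255.0
    region = out[y0:y0 + fh, x0:x0 + fw]
    out[y0:y0 + fh, x0:x0 + fw] = alpha * flower_rgba[..., :3] + (1.0 - alpha) * region
    return out.astype(np.uint8), (x0, y0, x0 + fw, y0 + fh)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    bg = np.zeros((240, 320, 3), dtype=np.uint8)        # placeholder background
    flower = np.full((40, 40, 4), 255, dtype=np.uint8)  # placeholder opaque "flower"
    img, box = composite_flower(bg, flower, rng)
    print(img.shape, box)
```

Repeating this over many backgrounds and flower crops (with random scaling or colour jitter, if desired) yields labelled images without manual annotation. Whether such synthetic samples help depends on how closely they match real footage, which is why mixing them with real images is a sensible choice.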

3.2. Hardware Platform

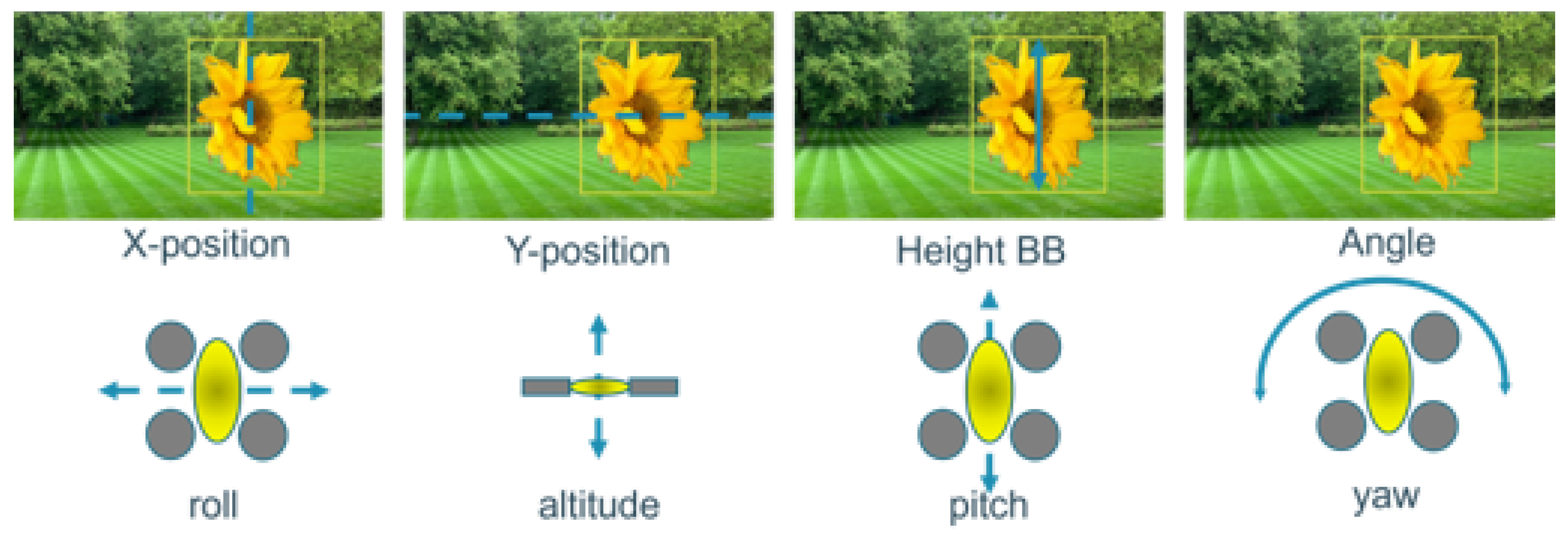

3.3. Hybrid End-to-End and Detection Approach
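The hybrid approach combines a detection stage with a direct (end-to-end) visual servoing stage. The switching logic between the two is not given in this section, so the following is a hypothetical sketch: it assumes the drone starts in the detection stage and hands over to direct servoing once the detected flower fills a sufficiently large fraction of the frame. The `Stage` names, `next_stage` and the `switch_area` threshold of 0.15 are illustrative assumptions, not values from the paper.

```python
from enum import Enum


class Stage(Enum):
    DETECTION = "detection"        # far from the flower: steer on detector boxes
    DIRECT_SERVO = "direct_servo"  # close to the flower: steer with the end-to-end model


def next_stage(stage, box, frame_w, frame_h, switch_area=0.15):
    """Hypothetical stage-switching rule for a two-stage flower approach.

    box is (x_min, y_min, x_max, y_max) in pixels, or None if no flower is found.
    switch_area is an assumed threshold on the fraction of the frame covered
    by the flower box.
    """
    if stage is Stage.DIRECT_SERVO:
        # Simplifying assumption: once close, stay in the direct servoing stage.
        return stage
    if box is None:
        return Stage.DETECTION
    x0, y0, x1, y1 = box
    area_fraction = (x1 - x0) * (y1 - y0) / float(frame_w * frame_h)
    return Stage.DIRECT_SERVO if area_fraction >= switch_area else Stage.DETECTION
```

Latching in the second stage avoids chattering between the two modes near the threshold; a real system would also need a fallback if the flower is lost at close range.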

3.3.1. Detection Stage

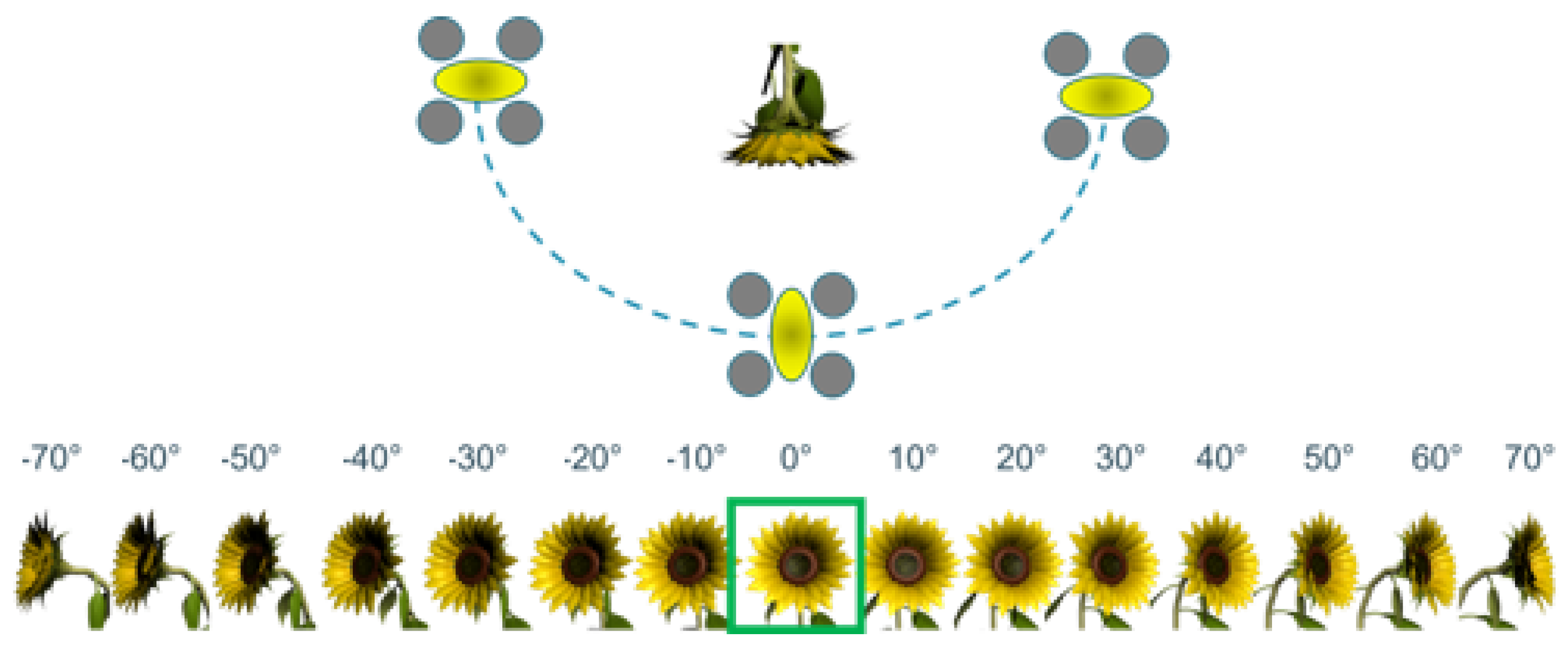
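In the detection stage, a detected bounding box has to be converted into error signals for the control loops. A minimal sketch, assuming the horizontal and vertical offsets of the box centre from the image centre drive lateral/yaw and altitude corrections, and the relative box area serves as a rough distance proxy; the function name `box_to_errors` and this particular normalization are hypothetical.

```python
def box_to_errors(box, frame_w, frame_h):
    """Hypothetical conversion of a detection box into normalized steering errors.

    Returns (ex, ey, size): ex and ey are the offsets of the box centre from
    the image centre scaled to [-1, 1] (positive means right / down), and
    size is the box area as a fraction of the whole frame.
    """
    x0, y0, x1, y1 = box
    cx = (x0 + x1) / 2.0
    cy = (y0 + y1) / 2.0
    ex = (cx - frame_w / 2.0) / (frame_w / 2.0)
    ey = (cy - frame_h / 2.0) / (frame_h / 2.0)
    size = (x1 - x0) * (y1 - y0) / float(frame_w * frame_h)
    return ex, ey, size


if __name__ == "__main__":
    # A flower box centred in a 320 x 240 frame gives zero lateral and vertical error.
    print(box_to_errors((140, 100, 180, 140), 320, 240))
```

Normalizing by the half-width and half-height keeps the errors independent of the camera resolution, so controller gains do not need retuning if the input size changes.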

3.3.2. Direct Visual Servoing

3.3.3. Optimization of the Neural Networks

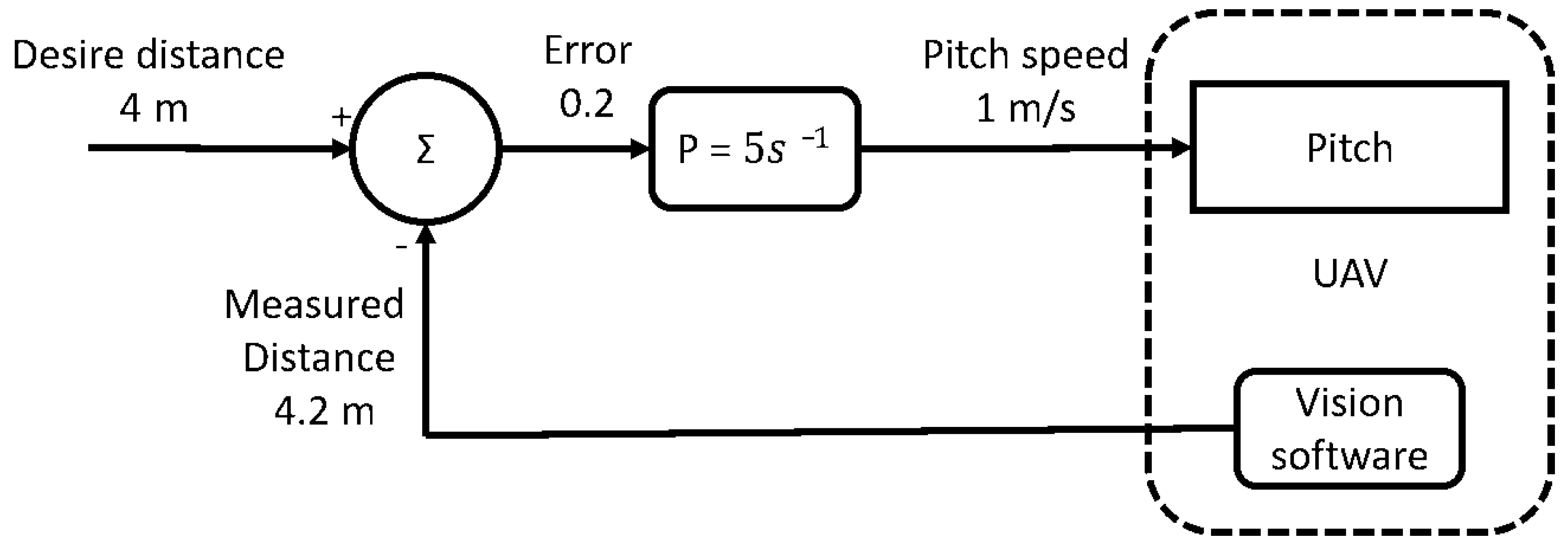

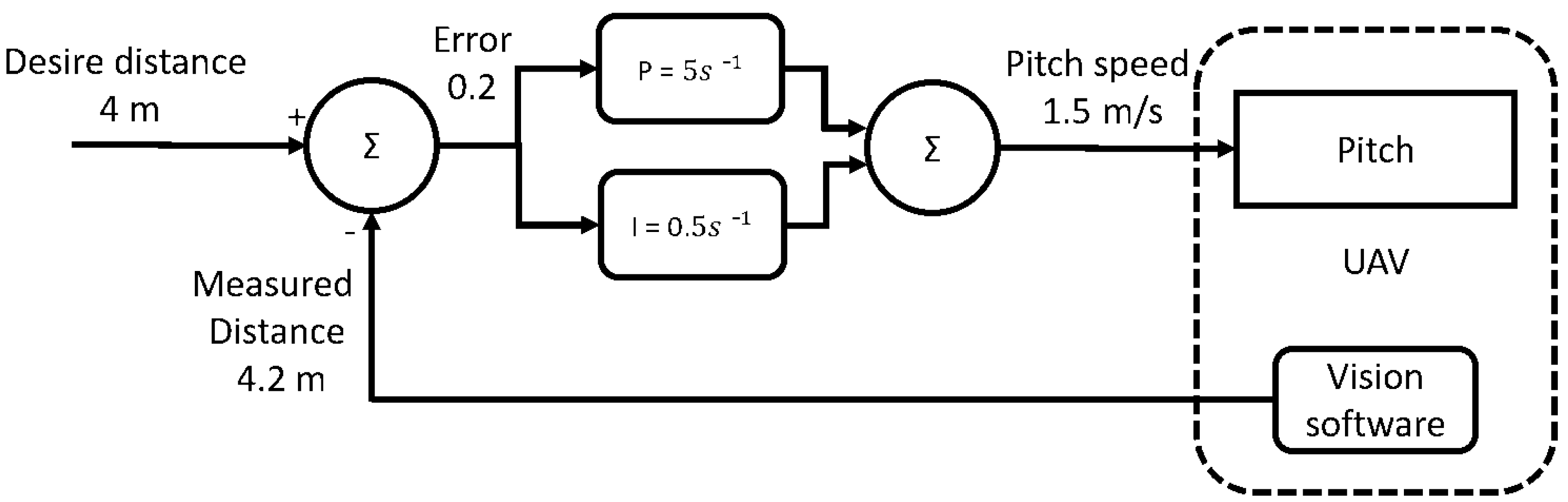

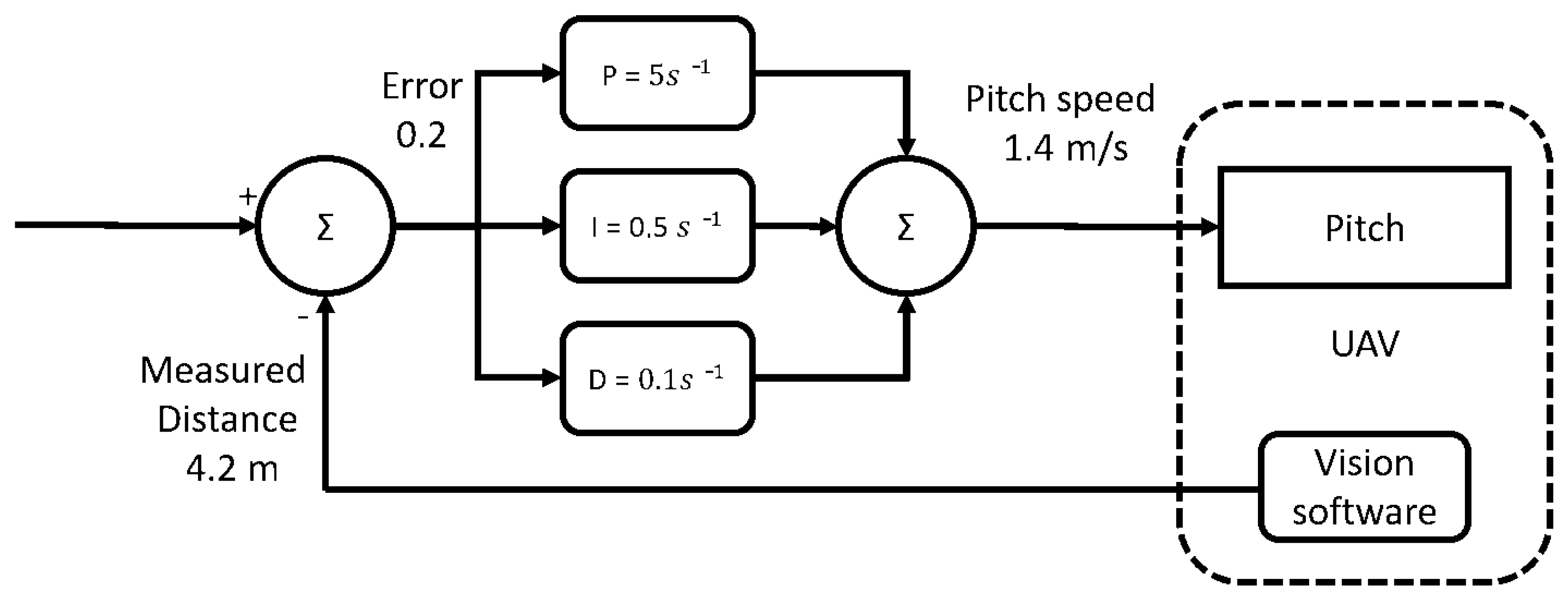

3.4. PID Control Loop
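A discrete PID controller of the kind referred to here can be sketched as follows. The gains, time step, output limit and the simple anti-windup rule (undoing the last integration step while the output is saturated) are assumptions for illustration, not the authors' tuned values or implementation.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class PID:
    """Minimal discrete PID controller (illustrative sketch)."""
    kp: float
    ki: float
    kd: float
    out_limit: float = 1.0            # assumed symmetric command saturation
    integral: float = 0.0
    prev_error: Optional[float] = None

    def update(self, error: float, dt: float) -> float:
        self.integral += error * dt
        # No derivative kick on the very first sample.
        derivative = 0.0 if self.prev_error is None else (error - self.prev_error) / dt
        self.prev_error = error
        u = self.kp * error + self.ki * self.integral + self.kd * derivative
        if abs(u) > self.out_limit:
            # Anti-windup: do not accumulate integral while saturated.
            self.integral -= error * dt
            u = max(-self.out_limit, min(self.out_limit, u))
        return u


if __name__ == "__main__":
    yaw_pid = PID(kp=0.8, ki=0.1, kd=0.05)  # hypothetical gains
    print(yaw_pid.update(error=0.3, dt=0.05))
```

In a setup like this, one controller instance would typically run per controlled axis (for example yaw from the horizontal image error and altitude from the vertical one), each with its own gains.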

3.4.1. Tuning of the PID Loops

4. Experiments and Results

4.1. Flower Detection

4.2. Direct Steering Model

4.3. Visual Navigation

4.4. Discussion

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


| Model | Alpha Factor | Resolution | Detection AP |
|---|---|---|---|
| SSD + MobileNetV1 | 1.0 | 320 × 240 | 0.68 |
| SSD + MobileNetV1 | 0.5 | 320 × 240 | 0.61 |
| SSD + MobileNetV1 | 0.25 | 320 × 240 | 0.51 |
| SSD + MobileNetV2 | 1.0 | 320 × 240 | 0.77 |
| SSD + MobileNetV2 | 0.5 | 320 × 240 | 0.66 |
| SSD + MobileNetV2 | 0.35 | 320 × 240 | 0.62 |
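The alpha factor in the table above is the MobileNet width multiplier, which thins every layer; according to the MobileNet authors it reduces the backbone's multiply-adds and parameters roughly by a factor of α². The sketch below applies that rule of thumb to the AP values from the table to show the accuracy/compute trade-off. It is an approximation: the SSD head is not scaled the same way, and real on-board latency also depends on the hardware and runtime.

```python
# (backbone, alpha, detection AP) rows copied from the table above.
ROWS = [
    ("MobileNetV1", 1.0, 0.68),
    ("MobileNetV1", 0.5, 0.61),
    ("MobileNetV1", 0.25, 0.51),
    ("MobileNetV2", 1.0, 0.77),
    ("MobileNetV2", 0.5, 0.66),
    ("MobileNetV2", 0.35, 0.62),
]


def tradeoff(rows):
    """Return (backbone, alpha, relative backbone cost, fraction of full-width AP)."""
    full_ap = {name: ap for name, alpha, ap in rows if alpha == 1.0}
    result = []
    for name, alpha, ap in rows:
        rel_cost = alpha ** 2        # width-multiplier rule of thumb (approximate)
        rel_ap = ap / full_ap[name]  # AP relative to the alpha = 1.0 model
        result.append((name, alpha, rel_cost, rel_ap))
    return result


if __name__ == "__main__":
    for name, alpha, cost, ap in tradeoff(ROWS):
        print(f"{name} alpha={alpha}: ~{cost:.2f}x backbone compute, {ap:.0%} of full-width AP")
```

For example, SSD + MobileNetV1 at α = 0.25 retains 0.51/0.68 = 75% of the full-width AP at roughly 1/16 of the backbone multiply-adds.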

| Sequence No. | Conditions | Remarks | Video URL (accessed 9 May 2022) |
|---|---|---|---|
| 1 | indoor | cluttered environment | https://youtu.be/quX5HhVyR3g |
| 2 | indoor | demo at Dubai World Expo | https://youtu.be/u13j3sPgDlE |
| 3 | outdoor | low wind conditions | https://youtu.be/ixOCjHggUw4 |
| 4 | indoor | difficult light conditions | https://youtu.be/DZh7zHVQJqM |
| 5 | indoor | initial heading away from flower | https://youtu.be/Lq7TR70cJJk |
| 6 | indoor | long search for flower | https://youtu.be/AhhI29ofmr0 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Hulens, D.; Van Ranst, W.; Cao, Y.; Goedemé, T. Autonomous Visual Navigation for a Flower Pollination Drone. Machines 2022, 10, 364. https://doi.org/10.3390/machines10050364
