1. Introduction

Conventionally, industrial robots were equipped with only nominal sensing and intelligence, and the tasks assigned to them were normally repetitive in nature [1]. However, recent developments in industry have spawned a wide range of robots. Such advances are common in services [2,3], exploration and rescue [4,5], and therapy operations [6,7]. The growing interaction between humans and robots in these fields has given rise to the specialized field of human–robot interaction (HRI) [8]. HRI is now evolving into social HRI, which is of particular importance; the interactions in this field include cognitive, social, and emotional activities with robots [9]. In this context, the term human–machine collaboration (HMC) has emerged, together with human–robot collaboration (HRC) specifically for robots. Chandrasekaran et al. [10] described HRC as a means of improving the robot’s task performance while reducing the tasks performed by humans. Humans and robots share a workspace while performing collaborative tasks, and they are expected to attain a common objective while conforming to the rules of social interaction. These robots perform joint actions, obey rules of social interaction (such as proxemics), and still act efficiently and legibly [11]. Since these interactions take place continuously during collaborative human and robot tasks, they place strong demands on the collaborators’ personal and environmental safety. Beyond handling physical contact, the control system of an HRC system must cater to uncertainties and ensure stability. Mead et al. [12] identified three types of requirements for HRI commonly used by computer models of proxemics:

Physical requirements, based on collaborators’ distance and orientation;

Psychological requirements, based on the collaborators’ relationship;

Psychophysiological requirements, based on the sensory experience of social stimuli.

The broad categories of safety for a collaborative robotic system in the social domain are physical and psychological safety. The former protects against physical hazards, whereas the latter protects against psychological discomfort, such as close interaction with machines, monotonous operations, or deviations from the task.

Several authors have presented physical safety protocols for HRC-based CPS [13,14,15,16] whose activation depends on the proximity between the cobot and the human operator. Collision avoidance is a common way to provide physical safety; it involves avoiding unwanted contact with people or environmental obstacles [17]. These techniques depend on measuring the distance between the robot and the obstacle [16,18]. Motion planning is one of the key strategies for estimating collision-free trajectories, using methods such as the configuration × time–space method [19], collision-free vertices [20,21], the representation of objects as spheres to explore collision-free paths [22], the potential field method [23], and virtual spring and damping methods [24]. Unfortunately, collision avoidance can fail because of sensor and robot motion limitations, as human actions are sometimes quicker than robotic actions. It is nevertheless feasible to sense a physical collision and counteract it [25,26,27], allowing the robot to be withdrawn from the contact area. In such circumstances, robots with variable stiffness actuation [28,29] under effective control [30,31], as well as lightweight robots with compliant joints [32], may be used to lessen the impact forces on contact. Recently, a multi-layered neural network method involving the dynamics of the manipulator joints (joint torques measured by sensors and intrinsic joint positions obtained through kinematics) has been used [33] to locate the collision on the robot (the collided link). While accidents due to sudden contact between humans and robots may be limited by designing lightweight/compliant mechanical manipulators [30] and collision detection/response strategies [27], collision avoidance in complex, unpredictable, and dynamic environments still depends mainly on exteroceptive sensors.

From the perspective of HRI, psychological safety means ensuring interactions that do not cause undue stress and discomfort in the long run. Therefore, a robot that can sense the fatigue of the worker it works with can take the necessary safeguards to avoid accidents. An illustration was demonstrated in [34], a human–computer integration in which biosensors were mounted on the operator, and physiological signals (such as those indicating anxiety) were measured and transmitted to the robot. Researchers need to build robots that respond to emotional information received from the operator, an approach known as “affective computing” [35]. In [36,37,38], measurements of cardiac activity, skin conductivity, respiration, and eye muscle activity were used in conjunction with fuzzy inference tools to change the robot’s trajectory for safe HRC. Recently, Chadalavada et al. [39] demonstrated how a robot (an automated forklift) could show its intentions using spatial augmented reality (SAR), so that humans can visually understand the robot’s navigational intent and feel safe next to it.

It now seems essential to develop methods that ensure both physical and psychological safety for HRC. Lasota et al. [40] introduced a distinct concept combining physical and psychological safety for safe HRC: by measuring the distance between the robot and the human in real time, they precisely controlled the speed of the robot at low separation distances for collision avoidance and stress relief. In another paper [14], the same authors established through quantitative metrics that human-conscious motion planning leads to more effective HRC. More recently, Dragan et al. [41] showed that legibility supports psychological safety: the operator may feel more comfortable if they can judge the robot’s intention from its motion.

Humans, robots, and machines increasingly work within cyber-physical systems (CPS) under the umbrella of Industry 4.0. CPS are smart systems that contain both physical and computational elements, and the concept involves decision-making through the real-time evaluation of data collected from interconnected sensors [42]. Monostori [43] proposed the overall automation of these layers for production systems, interconnecting the necessary physical elements such as machines, robots, sensors, and conveyors through computer science, and termed it a cyber-physical production system (CPPS). Green et al. [44] highlighted two important components of an active system of collaboration between humans and robots. First, the adjustable autonomy of the robotic system is an essential part of an effective collaboration that enhances productivity. Second, situation awareness, or knowing what is happening in the robot workspace, is also essential for collaboration. The effectiveness of HRC therefore depends on the effective monitoring of human and environmental actions and on the use of AI to predict the actions and states of mind of the humans contributing to the task. Lemaignan et al. [11] focused on the architecture of the decision layer of social robots. Their paper characterizes the challenges and presents a series of critical decision problems that must be solved for cognitive robots to successfully share space and tasks with humans. In this context, the authors identified logical reasoning, perception-based situation assessment, affordance analysis, representation and acquisition of knowledge-based models for the involved actors (humans, robots, etc.), multimodal dialogue, human-conscious mission planning, and HRC task planning.

As industry transforms from automation to intelligence, there is a need to extend the concept of psychological safety from humans to CPSs. Psychological safety is as essential for an intelligent CPS as physical safety is perceived to be [45]. Although interactions among human operators and the physical and computational layers of a CPS can be quite demanding in terms of cognitive resources, existing systems do not extensively take the psychological aspects of safety into account. Moreover, no connective framework exists that assesses and counters both the physical and psychological issues of a CPPS in a social domain. A flexible CPPS is therefore required that counters physical and psychological uncertainties by defining contingencies. This indicates the need for an efficient and reliable framework for next-generation CPPS:

A framework that can quantify both the physical and psychological safety of the CPPS;

A framework that can assess the CPPS’s current state and accordingly provide a basis for reasoning that makes it flexible and safe;

A framework that can decide, optimize and control based on the situational assessment.

Our research focused on addressing both the physical and psychological issues faced by a CPPS. We proposed a layered framework for knowledge-based decision-making in a CPS. At the core, the CPS performs the desired operations through the interactions between its physical component (PC), computer component (CC), and human component (HC). A situational assessment layer above this central layer assesses the anxiety of the situations faced. For each resource, a matching score is calculated that expresses the relevance of each situation’s anxiety to that resource; we named this score the “anxiety factor”. The third layer, the resource optimization layer, sits above the situational assessment layer and optimizes the allocation of available resources through an optimization algorithm using the evaluated matching scores, the objective function, and the defined constraints. The last layer is the logic-based decision-making layer, which embeds predefined logic to decide on complex situations, tasking different resources using the experts’ knowledge, the evaluated optimization, and the calculated anxieties. The logic is specific to each case/situation embedded in a CPS scenario. The proposed framework is validated through experimental case studies in which several situations are encountered.

2. Methodology

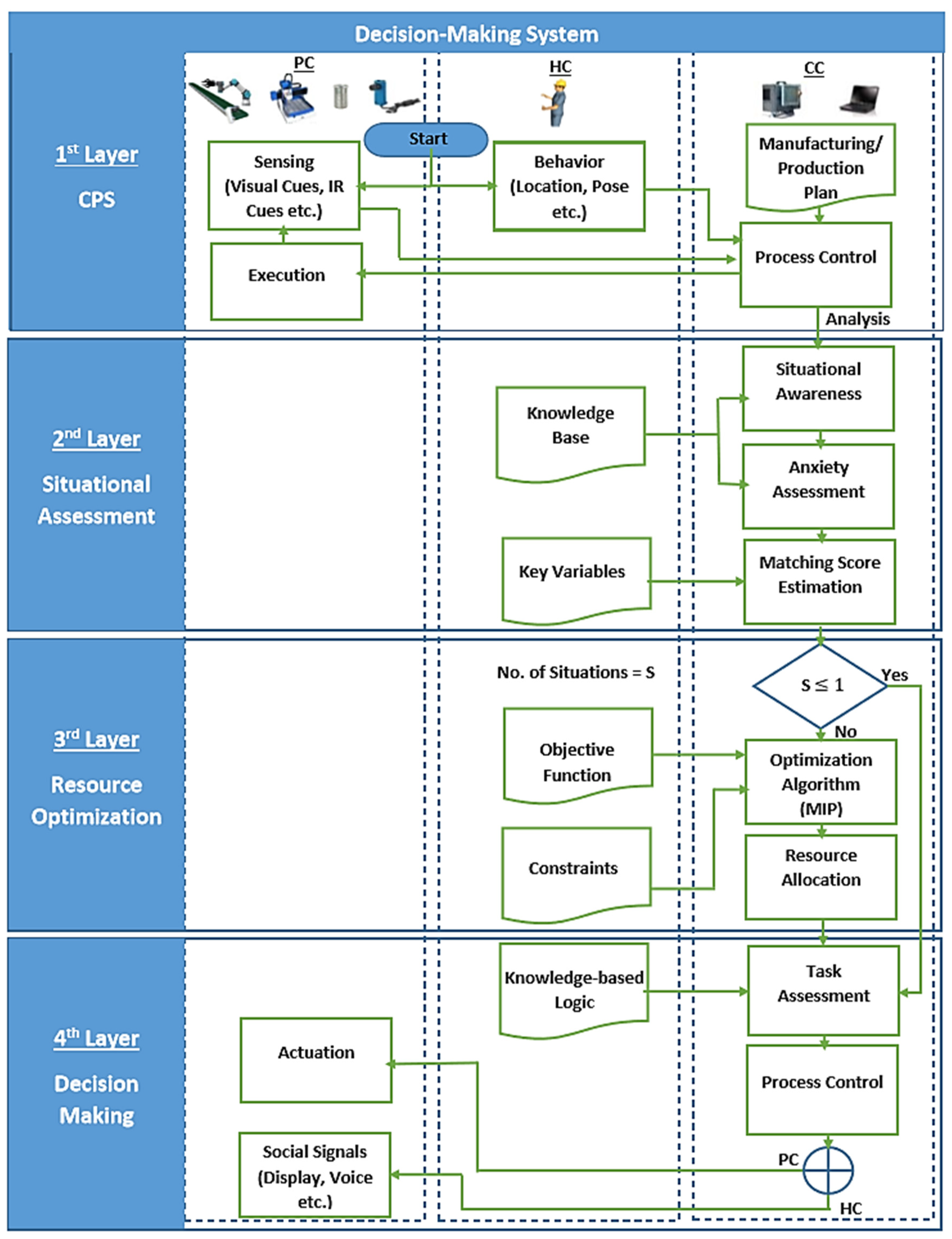

The connective framework and an overview of its execution are shown in Figure 1. We propose a knowledge-based modular software system in which different modules represent different layers of the proposed framework. The number of modules is not fixed, however; it may vary as required by a particular case. The data of all layers are stored in a central database.

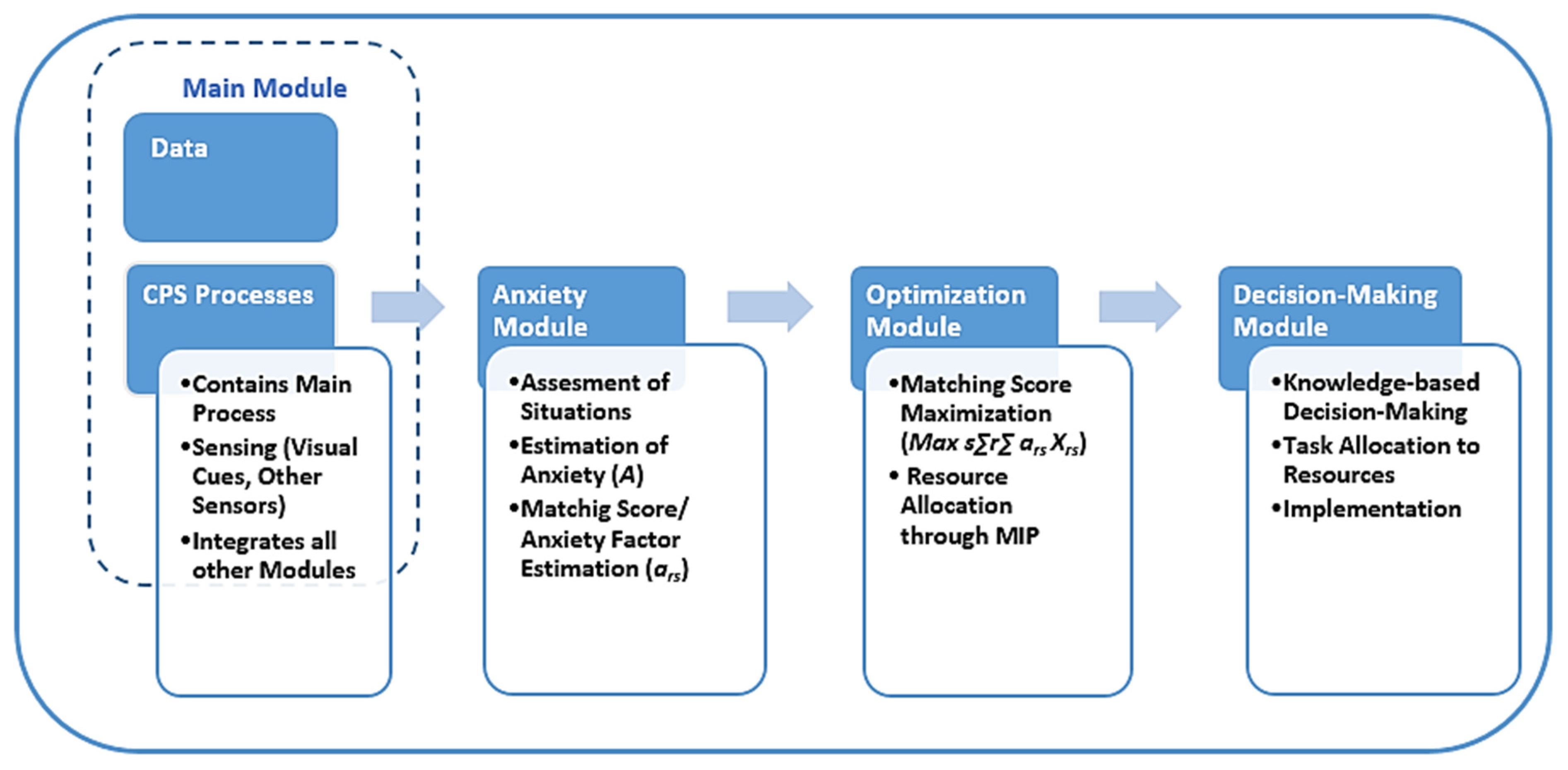

The basic modules are the main module for the sensing and process control layer of the CPS, the anxiety module for the situational assessment layer, the optimization module for the resource optimization layer, and the decision-making module for the decision-making layer. The general connectivity of the modules is shown in Figure 2.

If multiple situations arise, the matching score is ascertained for each situation through the anxiety module. The situations with the higher matching score (anxiety factor) are then assigned the best possible resources through the optimization module. The contingencies for handling the encountered situations are managed by the decision-making module. To this end, the outputs of the anxiety and optimization modules are passed to the decision-making module, which decides on the assignment of resources to tasks based on the defined logic. The terms anxiety and anxiety factor, and the method for estimating them, are defined below.

2.1. Anxiety of Cyber-Physical Systems

Anxiety is the human body’s natural response to stress. It is a feeling of fear or apprehension about what is to come, also recognized as the unpleasant state that arises when an expectation is not achieved due to a stressful, dangerous, or unfamiliar situation. Quite generally, the central task of the overall system in the brain is to compare actual with expected stimuli. The term should not be confused with risk: risk is based on hazard, whereas “anxiety can be defined as an urge to perform any job either to avoid a hazard or to do a righteous job”. When multiple situations are confronted, all of them generate anxiety, but only limited resources are available to handle them. We therefore defined anxiety so that it scales to CPSs. Situations may generate different levels of anxiety. The module is initialized with the results of an Ishikawa analysis, which assigns an index to each anticipated situation. After this initial indexing, a novel and intelligent technique based on medical knowledge categorizes the situations into different anxiety types, each related to a particular level of severity.

2.1.1. Categorization of Anxiety

The criterion for categorizing and indexing the anxiety of different situations faced by the CPS is defined in Table 1. The categories are named after the medical anxieties whose characteristics they match. Individual severity depends on the category and on the repeatability of the situation; in this sense, psychological safety for the CPS is being “quantified”. The anxiety of a situation is the sum of the lowest severity limit of its category and the index I estimated through Ishikawa analysis. The value of I ranges from 0 to 100; the detailed procedure for calculating I is explained in [46].

A total severity is also calculated for each instance, and an alarm is raised if it crosses a certain limit. The total severity is the sum of the estimated severities of all situations emerging in a single iteration.
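The anxiety rule and the total-severity alarm described above amount to simple arithmetic, sketched below. The per-category lower severity limits and the alarm limit are hypothetical placeholders (the real values are in Table 1 and are not given in the text), and severities are taken as already-estimated inputs.

```python
from typing import Dict, Iterable, Tuple

# Hypothetical lower severity limits per anxiety category; the actual values
# are defined in Table 1 and are NOT reproduced here.
CATEGORY_LOWER_LIMIT: Dict[str, float] = {"G": 0, "P": 20, "O": 40}

def anxiety(category: str, index_i: float) -> float:
    """Anxiety of a situation: lowest severity limit of its category plus
    the Ishikawa index I (which lies in 0..100)."""
    if not 0 <= index_i <= 100:
        raise ValueError("Ishikawa index I must lie in [0, 100]")
    return CATEGORY_LOWER_LIMIT[category] + index_i

def total_severity_alarm(severities: Iterable[float],
                         limit: float) -> Tuple[float, bool]:
    """Sum the severities of all situations emerging in one iteration and
    report whether the (hypothetical) alarm limit is exceeded."""
    total = sum(severities)
    return total, total > limit
```

For example, `anxiety("P", 35)` yields 55 under these placeholder limits, and two situations with severities 55 and 40 would trip an alarm limit of 90.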

2.1.2. Anxiety Factor Calculation

The anxiety factor, i.e., the matching score, is then calculated through a mathematical model dependent on the category and the variables related to tasks/resources. The procedure is repeated at each iteration and the situations faced are analyzed. The anxiety factor for a corresponding resource and a situation is calculated as:

Anxiety “A” is the parameter defined by the category of the particular situation (G, P, O, etc.) and is calculated as explained in Table 1; its value ranges from 0 to 100. “p” is the preference variable, i.e., the rank of the situation when the anxiety levels of the different situations are sorted in ascending order; it can also be referred to as the priority index number. “t” is the task variable; it defines which resource can best perform which task. The preferred resource is assigned the lowest value, and resources of lower priority are assigned successively higher values. The value of t depends on the number of resources; e.g., with two resources, t ∈ {0, 1}. “Q” is the resource suitability variable; it has the value “1” if a resource is suitable and “0” if not. To simplify the notation of the model formulation, we defined the indices “r” for resources and “s” for situations.

The range of “a” can be written as:

$$a_{rs} \in [0,\ 100 + |S|] \quad \forall\, r \in R,\ s \in S,$$

where r ∈ R is the index and set of resources, s ∈ S is the index and set of situations, and |S| is the total number of situations. Since p ranges from 1 to |S|, the anxiety factor ranges from 0 to 100 + |S|.

The anxiety factor “a” is thus the value of anxiety, calculated through the above variables, for a particular situation tackled by a specific resource.
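The preference and task variables can be derived mechanically from quantities the text already defines. The sketch below does only that and does not attempt the equation that combines A, p, t, and Q into a; the function names and example values are hypothetical. Ties in anxiety keep their input order, which is an assumption, since the text does not say how ties are ranked.

```python
from typing import Dict, List

def preference_variables(anxiety: Dict[str, float]) -> Dict[str, int]:
    """p: position of each situation when anxieties are sorted in ascending
    order, so p = 1 for the lowest anxiety and p = S for the highest."""
    ordered = sorted(anxiety, key=anxiety.get)  # stable sort: ties keep input order
    return {s: p for p, s in enumerate(ordered, start=1)}

def task_variables(preferred_order: List[str]) -> Dict[str, int]:
    """t for one situation: 0 for the preferred resource, then 1, 2, ...
    for resources of successively lower priority."""
    return {r: t for t, r in enumerate(preferred_order)}

def anxiety_factor_upper_bound(num_situations: int) -> int:
    """Upper end of the stated range of a_rs, i.e., 100 + |S|."""
    return 100 + num_situations
```

With two resources where the cobot is preferred, `task_variables(["cobot", "human"])` gives t = 0 for the cobot and t = 1 for the human, matching the t ∈ {0, 1} example above.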

2.2. Layers of the Decision-Making System

The framework is explained layer by layer. The CPS layer is the main layer and controls all physical and human elements. The physical component involves machines, robots, conveyors, sensors, display/output devices, input devices, etc. The main role of this layer is to execute the intended process for which the production plan is uploaded. It also looks for changes at every cycle of the operation; for this, various sensing techniques, such as proxemics and visual, physiological, or social cues, may be used. Situations that are expected to affect the desired output are registered.

The situational assessment layer assesses the confronted situations for their priority index (anxiety) by making use of the HC’s knowledge base. First, the index of the expected situations is calculated with the aid of Ishikawa analysis, as proposed in Section 2.1. The category of each situation is then identified. Based on the category, the matching score (anxiety factor) is calculated for all expected situations; it defines the suitability of each resource for a particular situation. On initialization of the process, the layer first becomes aware of the situations detected by the CPS layer. It then links the matching scores to the related situations and re-estimates them if the HC changes the category of a situation during operation.

The resource optimization layer optimizes the allocation of resources through an optimization algorithm. The module checks the number of situations: if there is a single situation, the program moves directly to the decision-making layer; if there are multiple situations, it moves to the optimization algorithm. The algorithm employs a mixed-integer programming (MIP) technique that makes use of the matching scores, the objective function, and the defined constraints. The MIP model of the stated problem is implemented in the Gurobi Optimizer [47], a state-of-the-art solver for mathematical optimization problems. The overall technique addresses all situations sequentially in order of priority.
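The single-versus-multiple branching of the resource optimization layer can be sketched as follows. This is a minimal illustration, not the authors’ implementation: `route_iteration`, `optimise`, and `decide` are hypothetical names, and the optimizer and decision logic are passed in as plain callables so that the control flow can be read on its own.

```python
from typing import Callable, Dict, List, Optional, Tuple

Scores = Dict[Tuple[str, str], float]  # a_rs keyed by (resource, situation)

def route_iteration(situations: List[str], scores: Scores, resources: List[str],
                    optimise: Callable[[Scores, List[str], List[str]], dict],
                    decide: Callable[[List[str], Optional[dict]], object]):
    """One iteration of the layered flow (hypothetical helper)."""
    if not situations:
        return None  # nothing detected: the standard procedure continues
    if len(situations) == 1:
        # A single situation skips the optimization layer entirely.
        return decide(situations, None)
    # Multiple situations: allocate resources first, then let the
    # decision-making layer apply its expert logic to the allocation.
    allocation = optimise(scores, resources, situations)
    return decide(situations, allocation)
```

Keeping the optimizer behind a callable means a MIP solver, or any other allocation method, can be swapped in without changing the routing.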

The decision-making module encompasses the logic defined by experts to handle the encountered situations and decides on the assignment of resources to tackle them. The main basis for assigning resources is the allocation recommended by the optimization module; however, the implementation is carried out by the decision-making module. Commands are then given to the physical resources, which could be a human operator, a cobot, a machine, etc., depending on the ascertained tasks. The human component is also cautioned through social signals regarding the observance of social norms and obsessions.

The implementation of the proposed method includes two types of actions: pre-process actions, taken before the system is activated, and in-process actions, which happen in real time during the process cycle. The pre-process and in-process steps for each layer are shown in Table 2.

2.3. Resource Optimization

We introduced a binary decision variable $X_{rs} \in \{0, 1\}$ for each possible assignment of a resource to a situation: $X_{rs} = 1$ if resource r ∈ R is assigned to situation s ∈ S, and $X_{rs} = 0$ otherwise.

2.3.1. Situation Constraints

We now discuss the constraints associated with situations. These constraints ensure that each situation is handled by at most one resource:

$$\sum_{r \in R} X_{rs} \le 1 \quad \forall\, s \in S \qquad (3)$$

The inequality (≤ 1 rather than = 1) allows for the null case in which no resource is assigned to a situation in an iteration.

2.3.2. Resource Constraints

The resource constraints ensure that each resource is assigned at most one situation. Not all resources need to be assigned: for example, if the CPS encounters two situations and only one resource is suitable to handle both, the situations are handled sequentially by that resource in order of anxiety. This constraint can be written as follows:

$$\sum_{s \in S} X_{rs} \le 1 \quad \forall\, r \in R \qquad (4)$$

The inequality (≤ 1) allows for the possibility that a resource is not assigned to any situation.

2.3.3. Objective Function

The objective is to maximize the total matching score (anxiety factor) of the assignments that satisfy both the situation and resource constraints. The objective function can be written concisely as follows:

$$\max \sum_{r \in R} \sum_{s \in S} a_{rs} X_{rs} \qquad (5)$$
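For illustration only, the assignment model of Equations (3)–(5) can be checked on small instances by exhaustive search. This is a swapped-in technique, not the Gurobi MIP the framework uses: it enumerates every feasible assignment, which is only practical for a handful of resources and situations, such as the two resources of the case study. Treating a zero or missing score as an unsuitable pairing (Q = 0) is an assumption, and the function name `assign` is hypothetical.

```python
from itertools import permutations
from typing import Dict, List, Optional, Tuple

def assign(scores: Dict[Tuple[str, str], float],
           resources: List[str],
           situations: List[str]) -> Tuple[float, Dict[str, str]]:
    """Exhaustively maximise sum(a_rs * X_rs) subject to: each situation gets
    at most one resource (Eq. 3) and each resource takes at most one
    situation (Eq. 4)."""
    best_value, best_plan = 0.0, {}
    # Pad with None so that any resource may stay idle (the "<= 1" slack).
    slots: List[Optional[str]] = list(situations) + [None] * len(resources)
    # Each permutation gives every resource a distinct slot, so no situation
    # is assigned twice.
    for choice in permutations(slots, len(resources)):
        plan, value = {}, 0.0
        for r, s in zip(resources, choice):
            # Assumption: a missing or zero score means Q = 0 (unsuitable).
            if s is None or scores.get((r, s), 0) <= 0:
                continue
            plan[r] = s
            value += scores[(r, s)]
        if value > best_value:
            best_value, best_plan = value, plan
    return best_value, best_plan
```

If only the cobot is suitable for two situations, the sketch assigns it the higher-scoring one and leaves the other for a later iteration, mirroring the sequential handling described for the resource constraints. In gurobipy, the same model would typically be built with `Model.addVars(..., vtype=GRB.BINARY)` for the X variables, `Model.addConstrs` for Equations (3) and (4), and `Model.setObjective(..., GRB.MAXIMIZE)` for Equation (5).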

3. Experimental Validation

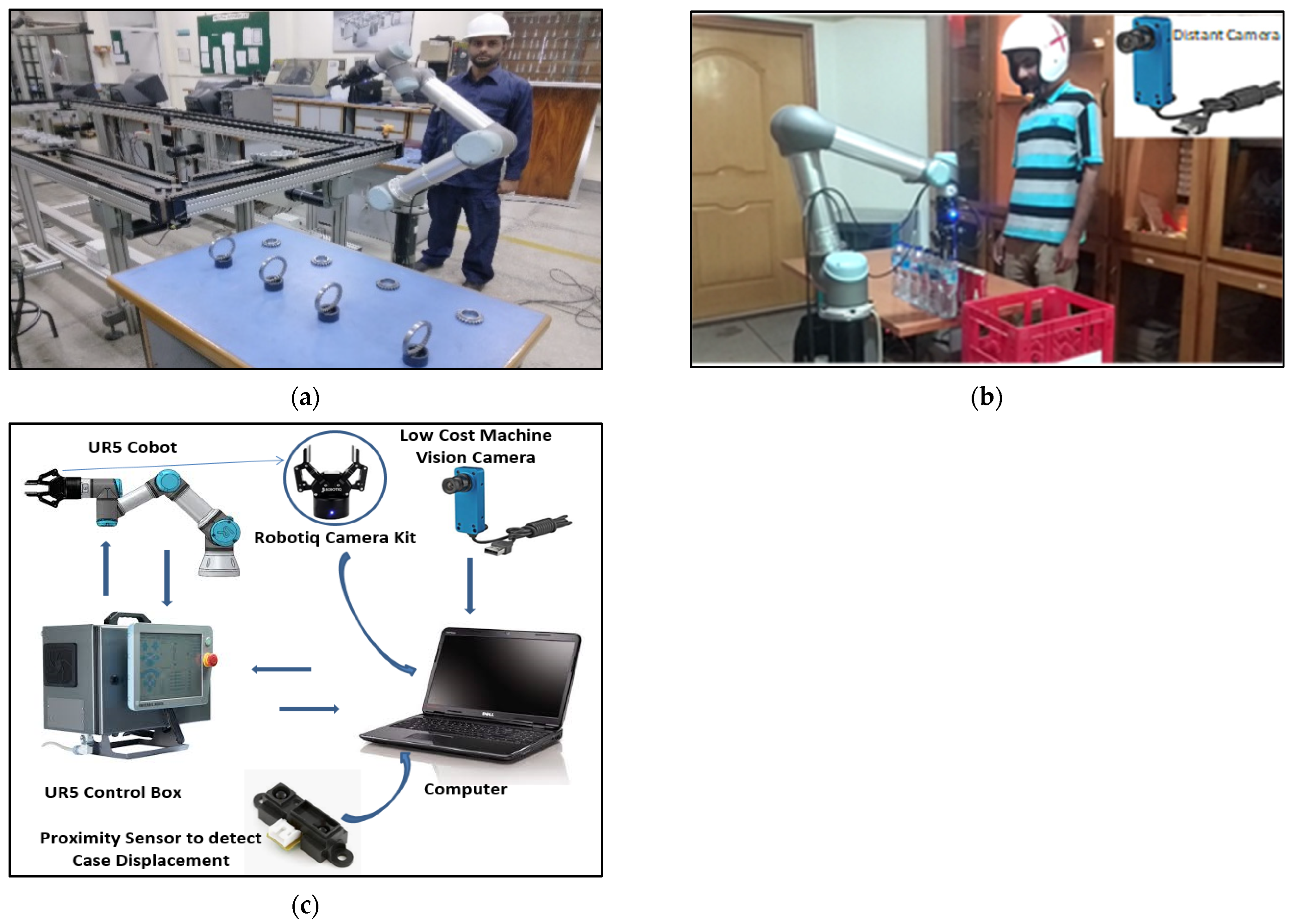

Examples of automotive parts assembly and beverage packaging were considered, as shown in Figure 3. The scenario involves a cobot and a human operator performing tasks based on information received from multiple sensors integrated with a collaborative CPPS. Different items arrive at the workstations and are then assembled or packaged in sequence by a cobot. A human supervisor assists in completing the process by performing several tasks. In addition to these dedicated tasks, the supervisor also monitors operations for anomalies and erroneous activities; i.e., they hold both collaborative and supervisory roles. When the assembly/packaging process completes, the supervisor gives a command for the next one, and the cobot moves accordingly. The cobot performs specific operations in collaboration with the operator to complete the task: in the case of packaging, the robot picks bottles and cans in sequence from specific locations and drops them into specific slots in the crate, whereas in the case of assembly, the robot picks two types of gears in sequence from specific locations and places them on a case for assembly. In the case of packaging, the operator replaces the crate on completion of packaging; in the case of assembly, the operator tightens the top plate with screws and presses a button for the conveyor to deliver the next case. The human supervisor is also responsible for corrective actions when a wrong item arrives, the sequence is wrong, or an item is absent from its location. We call this whole process the standard procedure.

The process may not always proceed as intended; it may face various unwanted and unforeseen situations. To cater to this, different situations that can emerge during a cycle were anticipated for both cases, including the intended situation as well as unwanted situations. The considered situations are as follows:

Right item: the main task/intended situation, i.e., the right items (beverages/gears) are in place for pick-and-place operations/assembly;

Wrong item: the item at the work location is either not in the list or wrong in sequence;

No item: no item appears at the work location;

Human interference: the operator interferes in a task at any location because they find that the robot may not be able to perform the task or they find an anomaly;

Displaced case/crate: the packaging/assembly operation cannot be completed because the crate/case is displaced from its designated location;

Unidentified person: any unknown person in the workspace, who is a hazard to the system and to themselves;

Foreign object: any object not required in the workspace, which is also a hazard to the system;

Obsession: any situation that does not actually affect the process but disturbs the outcome of the system; it is generally established after a few iterations, when the operator realizes that the situation is a false alarm, e.g., in both case studies, the object detection algorithm detects the table on which the items are placed as a foreign object;

Time delay: variance in the completion time of the intended operation;

Threshold distance: breach of the minimum distance established to be safe for collaboration between the human and the robot;

Cobot power failure: the cobot stops working due to a power failure;

Cobot collision: the cobot collides with the human operator.

Different situational awareness techniques were used to detect the listed situations.
The object detection technique is used to detect a right item, a wrong item, no item, an unidentified person, and foreign objects. YOLOv3 [48] is trained to detect gears, bottles, and cans along with general everyday objects. An RFID sensor in the helmet is used to identify the authorized operator, an IR proximity sensor to detect a displaced case/crate, impact sensors in the cobot to detect a cobot collision, a power sensor to detect a cobot power failure, and the clock to gauge the time delay. Human interventions are detected through a pose estimation algorithm that takes its feed from a camera installed above the workspace; OpenPose [49] is used to detect different human poses. The separation distance between the cobot and the operator is used to evaluate the threshold distance; this was performed by calculating the distance between the center of the cobot and the operator’s detection bounding box. The question is how to map these situations to the anxiety index and anxiety factor, and then analyze them and decide on the requisite actions. As soon as any of the listed situations is detected through visual sensing or the other detection techniques, a detection input is given to the main program. The situation’s severity, anxiety index, resource-related variables, and anxiety factor, all evaluated before the operation commenced, are then taken into account. The severity index I for each situation was assessed by experts, who assigned weights to each situation against all other situations in the Ishikawa diagram, as shown in Figure 4. A weight of “1” was assigned to another situation if it was judged to have a lower priority than the main situation, and “0” if it had a higher priority; the weights were assigned based on the votes of the experts. The ranking of a situation (index I) was the total of the weights assigned under it. The anxiety A was then calculated for each situation by substituting the category values and severity, as stated in Table 1. There were two resources, a human and a cobot; the matching score ($a_{rs}$) was then estimated as explained in Section 2 for both resources against all situations and fed into the central database. The severity, categories, anxiety values evaluated for the cases, and anxiety factors are shown in Table 3.
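The pairwise weighting used to build the Ishikawa index can be sketched as follows. Two points are assumptions: each pair’s weight is taken as the experts’ majority vote (a tie counts as 0), and the raw count is not rescaled to the 0–100 range of I, since that procedure is given in [46] rather than here. The situation names and votes are hypothetical.

```python
from typing import Dict, List, Tuple

def ishikawa_index(situations: List[str],
                   votes: Dict[Tuple[str, str], List[int]]) -> Dict[str, int]:
    """votes[(main, other)] holds each expert's vote: 1 if `other` has lower
    priority than `main`, else 0. A pair's weight is the majority vote, and
    the index of `main` is the sum of the weights under it."""
    index = {}
    for main in situations:
        total = 0
        for other in situations:
            if other == main:
                continue
            ballots = votes[(main, other)]
            # Strict majority of 1-votes gives weight 1; a tie gives 0.
            total += 1 if 2 * sum(ballots) > len(ballots) else 0
        index[main] = total
    return index

# Hypothetical votes from three experts for three situations.
SITUATIONS = ["cobot_collision", "wrong_item", "obsession"]
VOTES = {
    ("cobot_collision", "wrong_item"): [1, 1, 1],
    ("cobot_collision", "obsession"): [1, 1, 0],
    ("wrong_item", "cobot_collision"): [0, 0, 0],
    ("wrong_item", "obsession"): [1, 1, 1],
    ("obsession", "cobot_collision"): [0, 0, 0],
    ("obsession", "wrong_item"): [0, 1, 0],
}
```

With these votes, the cobot collision outranks both other situations and receives the highest raw index, while the obsession outranks neither.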

The main program was divided into five modules to implement the approach in the case studies: the main module, the item-in-place module, the anxiety module, the optimization module, and the decision-making module. The main module holds the other modules. It keeps count of the operations in the cycle and performs the intended task. It looks for situations during each iteration and, if multiple situations emerge, ascertains the matching score through the anxiety module. The item-in-place module is specific to each case and checks, through object detection and item count, whether the right or a wrong item is in place; a machine vision camera was placed to detect the objects. If the right item is not in place, the module adopts the contingency plan through the decision-making module. The optimization module assigns resources to situations by identifying the highest matching score through MIP using Equations (3)–(5). Table 3 shows that the first basis for resource assignment is the resource suitability variable Q: if it is zero, the resource cannot be assigned to the situation. The second basis is the task variable t; in our case, 0 was assigned to the preferred resource. Hence, the resource with t = 0 was assigned if it was not already committed and if its resource suitability variable was not “0”. The third basis is the preference variable p: the situation with the higher value was addressed first by the two resources, and the remaining situations were addressed after the initial ones had been disposed of. The cumulative effect of all these variables is the anxiety factor. The table clearly depicts, for any situation that appears, which of the two resources can be assigned, which is the preferred resource, and which situation is addressed first. The decision-making module then decides on the contingencies for the identified situations through actions performed by the resources. In our scenario, the actions are performed either by the cobot or by the human supervisor, depending on the specific role assigned to the resource for a particular case. The roles assigned to the situations are as follows:

Right item: the robot picks up the item and places it at the designated location; the human can do so, but the robot is given preference;

Wrong item: the robot picks up the item and places it at the spot dedicated to wrong items, without increasing the count, and the human has to place the right item at the location;

No item: the robot moves to the next location, and the human places the item at the drop point;

Human interference: if the human operator interferes due to an anomaly in the process or a defective item, they may drop the item at the drop point or the wrong-item spot; the count is then updated by checking the human pose while the action is performed;

Displaced case/crate: the robot adjusts it by pushing it into the fixed enclosure; if the robot is not available, the human does so;

Unidentified person: the human has to remove the person from the area, and a caution is displayed on the screen prompting the human to do so;

Foreign object: a caution is raised for the human to remove the object from the workspace; in this and the previous case, the robot stops until the human presses the button to resume operation;

Obsession: neither resource takes any corrective action, and the task is performed as intended;

Time delay and threshold distance: if either is breached, a caution is given to the human operator to analyze the situation and adjust accordingly;

Cobot power failure: the human checks the cause, rectifies it, and resumes the operation, while the whole system remains at a standstill;

Cobot collision: the whole system comes to a stop, and the human checks and resolves the issue and then resumes the operation.

On analysis, all of these actions are interlinked with the variables assigned in Table 3. The experts decide the values of the variables based on experience and consultation.
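The role assignments above form a lookup table, which could be encoded as sketched below. The situation keys, the `Rule` fields, and the `decide` helper are hypothetical; the actions paraphrase the text, and only the two fallbacks the text mentions (the human handling the right item or the displaced case when the cobot is unavailable) are modelled.

```python
from dataclasses import dataclass
from typing import Optional

@dataclass(frozen=True)
class Rule:
    cobot: Optional[str]   # action for the cobot, or None
    human: Optional[str]   # action or caution for the human, or None
    stop: str = "none"     # "none", "cobot" (until resume button) or "system"

RULES = {
    "right_item": Rule("pick and place item", None),
    "wrong_item": Rule("move item to wrong-item spot (no count)", "place right item"),
    "no_item": Rule("move to next location", "place item at drop point"),
    "human_interference": Rule(None, "drop item; count updated from pose"),
    "displaced_case": Rule("push case into enclosure", None),
    "unidentified_person": Rule(None, "caution: remove person", "cobot"),
    "foreign_object": Rule(None, "caution: remove object", "cobot"),
    "obsession": Rule(None, None),
    "time_delay": Rule(None, "caution: analyse and adjust"),
    "threshold_distance": Rule(None, "caution: analyse and adjust"),
    "cobot_power_failure": Rule(None, "check, rectify and resume", "system"),
    "cobot_collision": Rule(None, "check, resolve and resume", "system"),
}

# Situations where the text lets the human take over the cobot's action.
HUMAN_FALLBACK = {"right_item", "displaced_case"}

def decide(situation: str, cobot_available: bool = True) -> Rule:
    """Look up the expert rule, handing the cobot's action to the human
    only where the text allows it."""
    rule = RULES[situation]
    if not cobot_available and rule.cobot is not None and situation in HUMAN_FALLBACK:
        return Rule(None, rule.cobot, rule.stop)
    return rule
```

A table-driven encoding keeps the expert logic in data, so the values decided by the experts can be revised without touching the control code.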

3.1. Survey

We carried out a survey with participants drawn from university students and faculty. The ages of the participants ranged from 19 to 40 years (M = 28.7, SD = 7.73).

Before the experiment, the participants were instructed to monitor the screen continuously for instructions during the operation. We devised our own metrics, similar to the work in [43]. The subjects had nominal knowledge of the specifics of the system and were briefed only on the assigned task. A total of 20 subjects participated; a repeated-measures design was used for the experiment, and the subjects were randomly divided into two groups. Two conditions were assigned: the first group comprised those who first worked with the decision-making design (n = 11), and the second group those who first worked with the standard design without a decision-making system (n = 9). The subjects were not informed before the experiment which condition had been assigned or which metrics were being measured. We measured both quantitative and subjective measures. The quantitative measures include the time taken to decide on each situation and the accuracy of the process. The subjective measures include perceived safety, comfort, and legibility based on the questionnaire responses.

First, both groups executed a training round, during which the participants performed the complete experiment by themselves, without an assistant or the robot, to familiarize themselves with the task. Next, all participants were provided with a human assistant to perform the task collaboratively, as they would later be required to do with a robot in the third phase. Finally, two task executions were conducted, first in one condition and then in the switched condition, with the order differing between the two groups. A questionnaire was given to participants after each task execution. Each participant performed two training sessions with the robot before each task execution to build mental compatibility. To prevent any involuntary bias, the first task execution was conducted without participants knowing which of the two conditions they had been assigned to. Before the alternate condition, participants were told that the system would behave differently during the second phase; they were briefed that the robot could take some automated measures and that they were required to monitor the instructions on the screen.

The questions shown in Table 4 were intended to determine each participant’s satisfaction with the robot as a teammate, as well as their perceived safety, comfort, and legibility. Both questionnaires used a 5-point Likert scale, ranging from “strongly disagree” to “strongly agree” for the first questionnaire and from “much less” to “much more” for the second. Based on the dependent measures, the two main hypotheses of this experiment were as follows:

Hypothesis 1.

Using an anxiety-based decision-making framework will lead to more fluent human–robot collaboration, owing to timely automated decisions and the accuracy of the approach.

Hypothesis 2.

Participants will be more satisfied with the performance of the decision-making framework while collaborating with the robot, and will find the collaboration more comfortable, safe, and legible than with a CPPS that uses standard task planning.
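For analysis, Likert responses are usually converted to numeric scores. The sketch below is an assumption on two counts: only the end points of each scale are given in the text, so the intermediate labels are hypothetical, and the reverse-scoring helper shows a common convention for negatively worded items (such as “The robot kept disturbing during the task”) that the authors do not state they used.

```python
# Only the end points of each scale are given in the text; the
# intermediate labels below are hypothetical.
AGREEMENT_SCALE = ["strongly disagree", "disagree", "neutral", "agree", "strongly agree"]
COMPARISON_SCALE = ["much less", "less", "about the same", "more", "much more"]


def encode(response, scale):
    """Map a Likert label to a score from 1 (first label) to 5 (last label)."""
    return scale.index(response.strip().lower()) + 1


def reverse(score, points=5):
    """Reverse-score a negatively worded item (1 <-> 5, 2 <-> 4, 3 stays 3)."""
    return points + 1 - score


print(encode("Agree", AGREEMENT_SCALE))               # 4
print(reverse(encode("much more", COMPARISON_SCALE)))  # 1
```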

The automated decision time could be calculated only for the decision-making condition, because the standard approach does not handle unprecedented situations; in that case, the operator has to stop the system and apply countermeasures. Accuracy is derived from the number of errors (situations that could not be handled automatically) observed during one cycle divided by the total number of iterations, so that fewer errors yield higher accuracy. At least two situations were intentionally generated in each trial to test the system response.
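One reading of this definition is that the error count normalized by the number of iterations is an error rate, and accuracy is its complement. The helper below encodes that assumed interpretation (`accuracy` and `percentage_point_gap` are hypothetical names) and also shows that the reported gap between 89.98% and 73.125% is a difference in percentage points.

```python
def accuracy(errors, iterations):
    """Assumed reading: accuracy = 1 - errors / iterations, where an error is a
    situation that could not be handled automatically."""
    if iterations <= 0:
        raise ValueError("iterations must be positive")
    if not 0 <= errors <= iterations:
        raise ValueError("errors must lie between 0 and iterations")
    return 1.0 - errors / iterations


def percentage_point_gap(acc_a, acc_b):
    """Absolute difference between two accuracies, in percentage points."""
    return (acc_a - acc_b) * 100.0


print(accuracy(errors=1, iterations=4))                 # 0.75
print(round(percentage_point_gap(0.8998, 0.73125), 3))  # 16.855
```

Expressed as a relative improvement instead, the same two accuracies correspond to roughly 23% (0.8998 / 0.73125 ≈ 1.23), which is why the unit matters when comparing the systems.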

3.2. Results

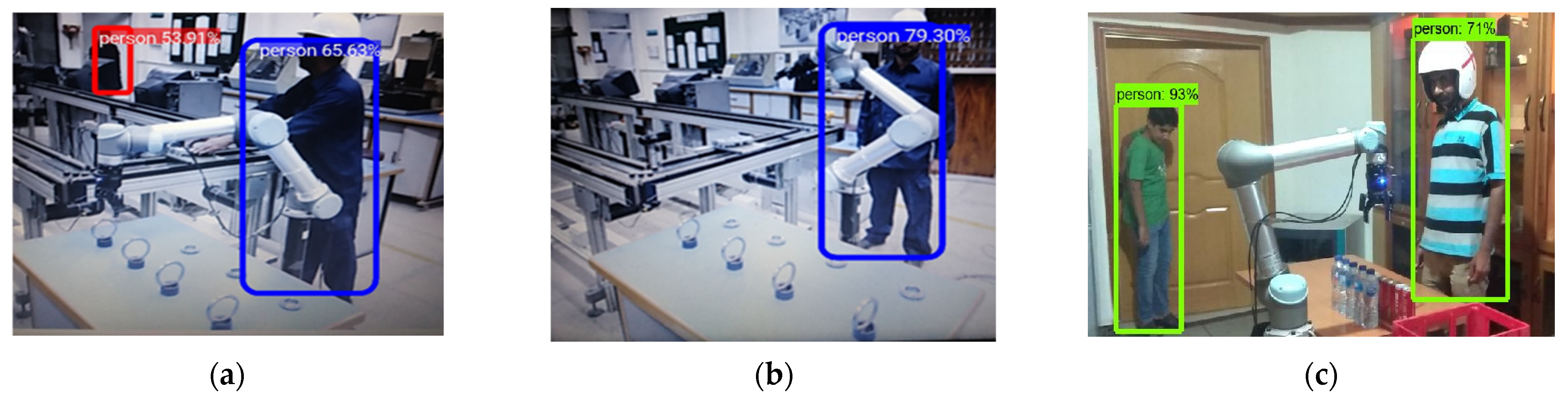

Figure 5 shows the objects detected using the object detection technique.

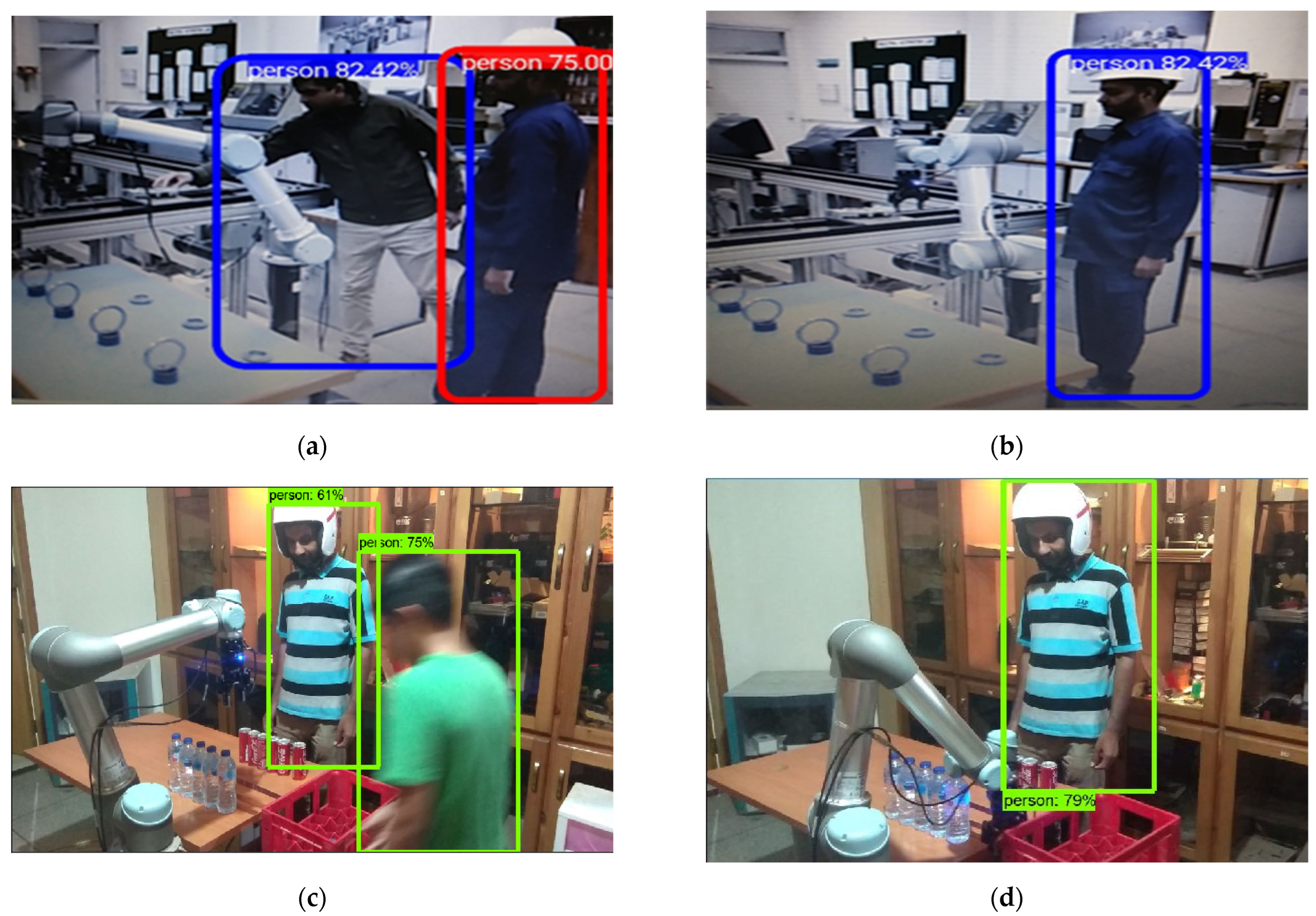

Human detection was likewise carried out with the object detection technique; Figure 6 shows the detection of an authorized operator and of an unidentified person. Identification of the authorized operator within the workspace through object detection was complemented by the RFID sensor in the helmet.

Figure 7 shows the detection of specific operator poses: the first is the pose of the operator interfering in the assembly, and the other two are poses of the operator placing objects himself. Contingencies were triggered only when a detected pose coincided with the robot being at the same location, because during parallel operations the same pose could be detected while the cobot was operating elsewhere.

Our approach can detect and handle multiple situations simultaneously. As an example, two situations were considered at a time: a displaced case/crate and an unidentified person. In the first scenario, an inspector entered the workspace and displaced the outer case; in the second, the crate entered the workspace during inspection (see Figure 8).

Figure 9 shows the decision time for handling situations in two cycles of one test run, together with the individual anxieties, the total anxiety, and the maximum-anxiety situation for each iteration. The maximum time taken to decide on a single situation was 0.03 s.

A quantitative analysis was carried out: the overall mean automated decision time (MADT) of the proposed method and the accuracy of both approaches were calculated with a minimum of two situations in each cycle. The mean automated decision time for a contingency was 0.021 s (Table 5). The decision-making system (accuracy of 89.98%) was 16.85 percentage points more accurate than the standard system (accuracy of 73.125%). A t-test was conducted to assess the significance of the results at a significance level of 0.05 (95% confidence level), and the resulting p-value was below 0.01. The standard error of the mean (SEM) for the MADT was 9.36 × 10⁻⁵ s, with upper and lower limits of 0.0211 s and 0.0208 s, respectively.
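Summary statistics of this kind can be computed from raw decision times with the Python standard library. The sketch assumes the reported upper and lower limits form a 95% confidence interval around the mean and uses a normal approximation (z = 1.96); any input values are illustrative, not the study’s data, and the text does not state which variant of the t-test was used.

```python
import math
import statistics


def summarize(samples, z=1.96):
    """Return (mean, standard error of the mean, (lower, upper)).

    The interval uses a normal approximation (z = 1.96 for ~95%); with few
    samples, a Student-t critical value would give a wider, more accurate interval.
    """
    n = len(samples)
    if n < 2:
        raise ValueError("need at least two samples")
    mean = statistics.mean(samples)
    sem = statistics.stdev(samples) / math.sqrt(n)
    return mean, sem, (mean - z * sem, mean + z * sem)


decision_times = [0.0205, 0.0212, 0.0209, 0.0210]  # illustrative values only
mean, sem, (lower, upper) = summarize(decision_times)
print(f"MADT={mean:.4f} s, SEM={sem:.2e} s, limits=({lower:.4f}, {upper:.4f}) s")
```

The SEM shrinks with the square root of the number of samples, which is why a large number of automated decisions yields such a narrow interval.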

Significant differences (p < 0.05) were found for the questions on fluency of collaboration with the robot: participants exposed to the decision-making system agreed more strongly with “I trusted the robot to do the right thing at right time” (p < 0.01) and “The robot and I worked together for better task performance” (p < 0.01), and disagreed more strongly with “The robot did not understand how I desired the task to be executed” (p < 0.01) and “The robot kept disturbing during the task” (p < 0.01).

Similarly, significant differences (p < 0.05) were found for the questions on perceived safety, comfort, and legibility: participants exposed to the decision-making system agreed much more with “I felt safe while working with the robot” (p < 0.01), “I trusted the robot would not harm me” (p < 0.01), and “I understand what robot will be doing ahead” (p < 0.01), and much less with “The robot moved too drastic for my comfort” (p < 0.01) and “The robot endangered safety of unknown persons in workspace” (p < 0.01).

The results support both hypotheses in favor of the decision-making system: the proposed approach leads to more fluent HRC (Hypothesis 1) and improves perceived safety, comfort, and legibility (Hypothesis 2).

3.3. Discussion

Previous works address a limited set of situations and focus solely on a single aspect, e.g., collision avoidance, motion planning, or psychological safety. In contrast, the proposed method collectively addresses all the issues identified by the experts that a CPPS may face. We also compared the current method with the previous work [46]. The previous method can handle only the single highest-priority situation, i.e., the one with maximum anxiety, at a time, whereas the current approach is optimized to employ all available resources to relieve the current state of anxiety. Any number of resources can be included by modifying the equations of the optimization algorithm. When resources were limited, situations with higher anxiety were addressed first, followed by those with lower anxiety. The current method combines human knowledge and intelligence with AI techniques to minimize the decision time; the maximum time recorded to decide on a scenario is 0.03 s, which shows that the method is not time-intensive. In the previous method, anxiety was indexed through a more generic approach that lacked a rationale for scaling the different levels. The current method partly builds on that technique but introduces a logical approach with a biomimetic connection, which makes it easier to differentiate and prioritize situations.
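The contrast between handling only the maximum-anxiety situation and using all available resources can be illustrated with a tiny assignment problem. The optimization equations are not reproduced in this section, so the sketch below swaps in a brute-force search that maximizes the total anxiety relieved; the objective, the one-unit-per-situation rule, and all names are assumptions for illustration only.

```python
from itertools import product


def allocate(situations, resources):
    """Exhaustively choose the assignment that relieves the most total anxiety.

    situations: dict name -> (anxiety, set of resource types able to resolve it)
    resources:  dict resource type -> number of available units
    Returns (relieved_anxiety, {situation: resource type}).
    Brute force is only practical for a handful of situations.
    """
    names = list(situations)
    # Each situation is either left unserved (None) or served by one capable resource type.
    options = [[None, *sorted(situations[n][1])] for n in names]
    best_score, best_plan = 0.0, {}
    for choice in product(*options):
        used = {}
        for resource in choice:
            if resource is not None:
                used[resource] = used.get(resource, 0) + 1
        if any(count > resources.get(r, 0) for r, count in used.items()):
            continue  # infeasible: more units requested than available
        score = sum(situations[n][0] for n, r in zip(names, choice) if r is not None)
        if score > best_score:
            best_score = score
            best_plan = {n: r for n, r in zip(names, choice) if r is not None}
    return best_score, best_plan


situations = {
    "unidentified person": (0.75, {"operator", "robot"}),
    "displaced crate": (0.5, {"operator"}),
}
print(allocate(situations, {"operator": 1}))  # (0.75, {'unidentified person': 'operator'})
```

With both an operator and a robot available, the search serves both situations; with only the operator available, it serves the higher-anxiety situation first, mirroring the prioritization described above. A real system would replace the exhaustive loop with a proper optimizer.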

The main contribution of this work is a CPPS that is more intelligent, safe, and resilient. The improved decision-making gives the production system the flexibility to tackle multiple situations at once in an optimal manner, and the approach can be adapted to a range of industrial scenarios such as manufacturing, assembly, and packaging. The integration of the four layers highlights the contribution of AI to industry, increasing the overall productivity of the system. The work is a practical realization of computer integration in Industry 4.0, implemented in every layer of the proposed system, i.e., the functioning of the CPS, situational assessment, optimization, and decision-making, through software, algorithms, AI, and the interface.

In future work, the technique could be reinforced with machine learning to make it more intelligent. The CPPS could learn from the environment in real time and continuously improve its knowledge base. Because the existing system depends on predefined solutions, it is proposed that the system learn from repetitive patterns and from the solutions applied to previously encountered situations, and automatically reuse those solutions. Cloud-based and semantic systems are emerging trends that may be incorporated to enhance the capability and customization of these systems. In this way, situations could be added to, updated in, or removed from the system automatically, although human supervision is still recommended. We used machine-learning-based image processing techniques as a tool; these are subject to uncertainty because of the statistical methods and probability distributions they rely on, and their probabilistic nature leads to errors that cannot be ignored. In the future, the detection confidence could be included in the calculation of the anxiety factor. The accuracy of the method could be increased either by selecting a detection threshold or by adding a variable for the detection confidence that modifies the anxiety factor of the confronted situation.
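The two options proposed above for incorporating detection confidence can be sketched as follows. Multiplying the anxiety factor by the confidence is only one possible weighting and is an assumption here, as are the function name and the example values.

```python
def effective_anxiety(base_anxiety, detection_confidence, threshold=None):
    """Adjust a situation's anxiety factor by the detector's confidence.

    Option 1 (threshold given): ignore detections below the threshold.
    Option 2 (no threshold): scale the anxiety by the confidence (assumed weighting).
    """
    if not 0.0 <= detection_confidence <= 1.0:
        raise ValueError("confidence must be in [0, 1]")
    if threshold is not None:
        return base_anxiety if detection_confidence >= threshold else 0.0
    return base_anxiety * detection_confidence


print(effective_anxiety(0.8, 0.5))                 # 0.4
print(effective_anxiety(0.8, 0.6, threshold=0.7))  # 0.0
```

The threshold variant is simpler but discards low-confidence detections entirely, which may be undesirable for safety-critical situations such as an unidentified person; the scaled variant keeps every detection but lowers its priority.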