A General Inertial Projection-Type Algorithm for Solving Equilibrium Problem in Hilbert Spaces with Applications in Fixed-Point Problems

Abstract

1. Introduction

- (i) Choose where a sequence satisfies the following conditions:

- (ii) Choose satisfying and

- (iii) Compute

2. Preliminaries

- (1) γ-strongly monotone if

- (2) monotone if

- (3) γ-strongly pseudomonotone if

- (4) pseudomonotone if
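A standard way to write these four notions is sketched below for a bifunction $f : H \times H \to \mathbb{R}$ on a nonempty closed convex set $C \subset H$ with a constant $\gamma > 0$. The symbols $f$, $C$, $x$, $y$ are notation assumed here for illustration and may differ from the symbols used elsewhere in the article.

```latex
\begin{align*}
\text{(1) $\gamma$-strongly monotone:} \quad
  & f(x,y) + f(y,x) \le -\gamma \|x - y\|^{2}
  && \forall\, x, y \in C; \\
\text{(2) monotone:} \quad
  & f(x,y) + f(y,x) \le 0
  && \forall\, x, y \in C; \\
\text{(3) $\gamma$-strongly pseudomonotone:} \quad
  & f(x,y) \ge 0 \;\Longrightarrow\; f(y,x) \le -\gamma \|x - y\|^{2}
  && \forall\, x, y \in C; \\
\text{(4) pseudomonotone:} \quad
  & f(x,y) \ge 0 \;\Longrightarrow\; f(y,x) \le 0
  && \forall\, x, y \in C.
\end{align*}
```

Under these formulations, (1) implies both (2) and (3), and each of (2) and (3) implies (4), so pseudomonotonicity is the weakest of the four conditions.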

- (i) Let and we have

- (ii) if and only if

- (iii) For any and

- (i) for every , the limit exists;

- (ii) each weak sequential cluster point of the sequence belongs to .

- (f1) f is pseudomonotone on and for every ;

- (f2) f satisfies the Lipschitz-type condition on with constants and

- (f3) for every and satisfying ;

- (f4) needs to be convex and subdifferentiable on for all
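The Lipschitz-type condition in (f2) is commonly written as below. As a worked illustration, the bifunction $f(x,y) = \langle G(x), y - x \rangle$ built from an operator $G$ that is Lipschitz continuous with constant $L$ satisfies it with $c_1 = c_2 = L/2$. The symbols $c_1$, $c_2$, $G$, $C$ and $x, y, z$ are assumed notation for this sketch.

```latex
% Lipschitz-type condition with constants c_1, c_2 > 0 (standard form):
\[
  f(x,z) \le f(x,y) + f(y,z) + c_1 \|x - y\|^{2} + c_2 \|y - z\|^{2}
  \qquad \forall\, x, y, z \in C .
\]
% Check for f(x,y) = <G(x), y - x> with G Lipschitz continuous (constant L):
\begin{align*}
  f(x,z) - f(x,y) - f(y,z)
    &= \langle G(x), z - x \rangle - \langle G(x), y - x \rangle
       - \langle G(y), z - y \rangle \\
    &= \langle G(x) - G(y),\, z - y \rangle \\
    &\le L \,\|x - y\| \,\|y - z\| \\
    &\le \tfrac{L}{2} \|x - y\|^{2} + \tfrac{L}{2} \|y - z\|^{2} .
\end{align*}
```

The last step uses the elementary inequality $ab \le \tfrac{1}{2}(a^{2} + b^{2})$.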

3. The Modified Extragradient Algorithm for the Problem (1) and Its Convergence Analysis

Algorithm 1 (Modified Extragradient Algorithm for the Problem (1))

4. Applications to Solve Fixed Point Problems

- (i) sequentially weakly continuous on if

- (ii)

- (i) Choose and satisfying the following condition:

- (ii) Choose satisfying , such that

- (iii) Compute , where

- (iv) Revise the stepsize in the following way:

5. Application to Solve Variational Inequality Problems

- (i) L-Lipschitz continuous on if

- (ii) monotone on if

- (iii) pseudomonotone on if
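For an operator on a set $C \subset H$, these three properties are usually stated as follows. To keep the operator apart from its Lipschitz constant $L$, this sketch writes the operator as $G$; that symbol, along with $C$, $x$ and $y$, is an assumption made for illustration.

```latex
\begin{align*}
\text{(i) $L$-Lipschitz continuous:} \quad
  & \|G(x) - G(y)\| \le L \,\|x - y\|
  && \forall\, x, y \in C; \\
\text{(ii) monotone:} \quad
  & \langle G(x) - G(y),\, x - y \rangle \ge 0
  && \forall\, x, y \in C; \\
\text{(iii) pseudomonotone:} \quad
  & \langle G(x),\, y - x \rangle \ge 0 \;\Longrightarrow\;
    \langle G(y),\, x - y \rangle \le 0
  && \forall\, x, y \in C.
\end{align*}
```

Every monotone operator is pseudomonotone: if $\langle G(x), y - x \rangle \ge 0$, then monotonicity gives $\langle G(y), y - x \rangle \ge \langle G(x), y - x \rangle \ge 0$, that is, $\langle G(y), x - y \rangle \le 0$.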

- (L1) L is monotone on with ;

- (L2) L is L-Lipschitz continuous on with ;

- (L3) L is pseudomonotone on with ; and,

- (L4) and satisfying

- (i) Choose and , such that

- (ii) Let satisfy and

- (iii) Compute where

- (iv) Revise the stepsize in the following way:

- (i) Choose and , such that

- (ii) Choose satisfying , such that

- (iii) Compute where

- (iv) The stepsize is updated in the following way:
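The four steps above (initialization, inertial extrapolation, two projection steps, stepsize update) can be sketched in Python for a variational inequality over a set with an easy projection. This is a generic inertial extragradient iteration with a non-increasing adaptive stepsize, not necessarily the authors' exact scheme. The function name `inertial_extragradient`, the parameters `lam0`, `mu`, `theta`, the fixed inertial weight and the stopping rule are all assumptions chosen for illustration.

```python
import numpy as np


def inertial_extragradient(G, project, x0, lam0=0.5, mu=0.4, theta=0.3,
                           tol=1e-8, max_iter=5000):
    """Illustrative inertial extragradient iteration for VI(G, C).

    G        : operator, maps an array to an array (assumed Lipschitz
               and pseudomonotone)
    project  : metric projection onto the feasible set C
    lam0, mu : initial stepsize and stepsize-control parameter (assumed)
    theta    : fixed inertial weight (assumed; it may vary with n)
    """
    x_prev = np.asarray(x0, dtype=float).copy()
    x = x_prev.copy()
    lam = lam0
    for _ in range(max_iter):
        # Inertial extrapolation from the two most recent iterates.
        w = x + theta * (x - x_prev)
        Gw = G(w)
        # First projection step.
        y = project(w - lam * Gw)
        # w == y means w is a fixed point of the projection map,
        # i.e. a solution of the variational inequality.
        if np.linalg.norm(w - y) < tol:
            return y
        Gy = G(y)
        # Second projection step, reusing the base point w.
        z = project(w - lam * Gy)
        # Non-increasing stepsize update; no Lipschitz constant is needed.
        denom = np.linalg.norm(Gw - Gy)
        if denom > 0.0:
            lam = min(lam, mu * np.linalg.norm(w - y) / denom)
        x_prev, x = x, z
    return x


if __name__ == "__main__":
    # Toy problem: G(x) = x - c on the box [0, 1]^3, whose solution is
    # the projection of c onto the box.
    c = np.array([2.0, -1.0, 0.5])
    sol = inertial_extragradient(lambda v: v - c,
                                 lambda v: np.clip(v, 0.0, 1.0),
                                 np.zeros(3))
    print(sol)
```

The stepsize rule only compares `G(w)` and `G(y)`, so the Lipschitz constant of the operator does not have to be known in advance; this is the usual motivation for self-adaptive stepsizes of this kind.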

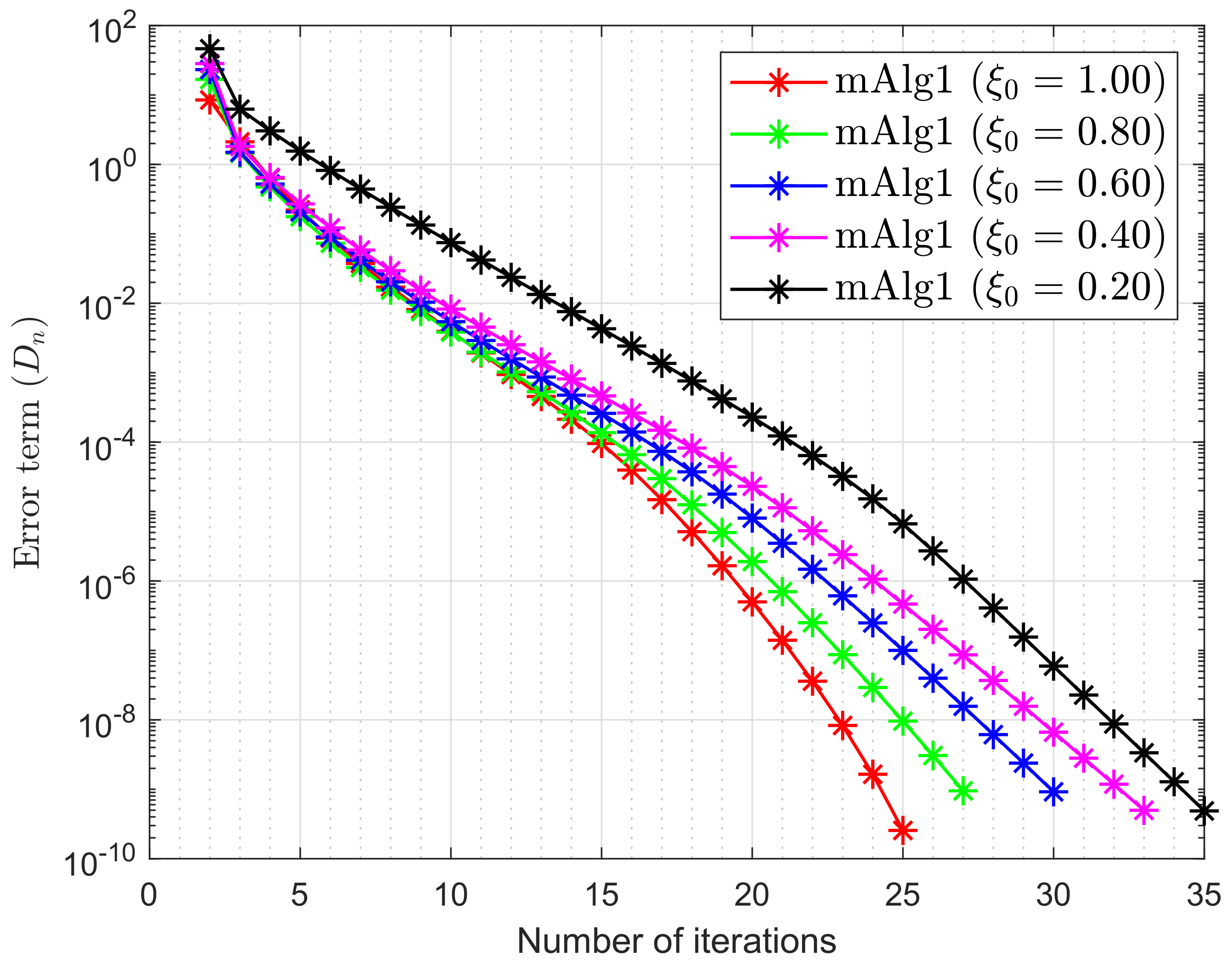

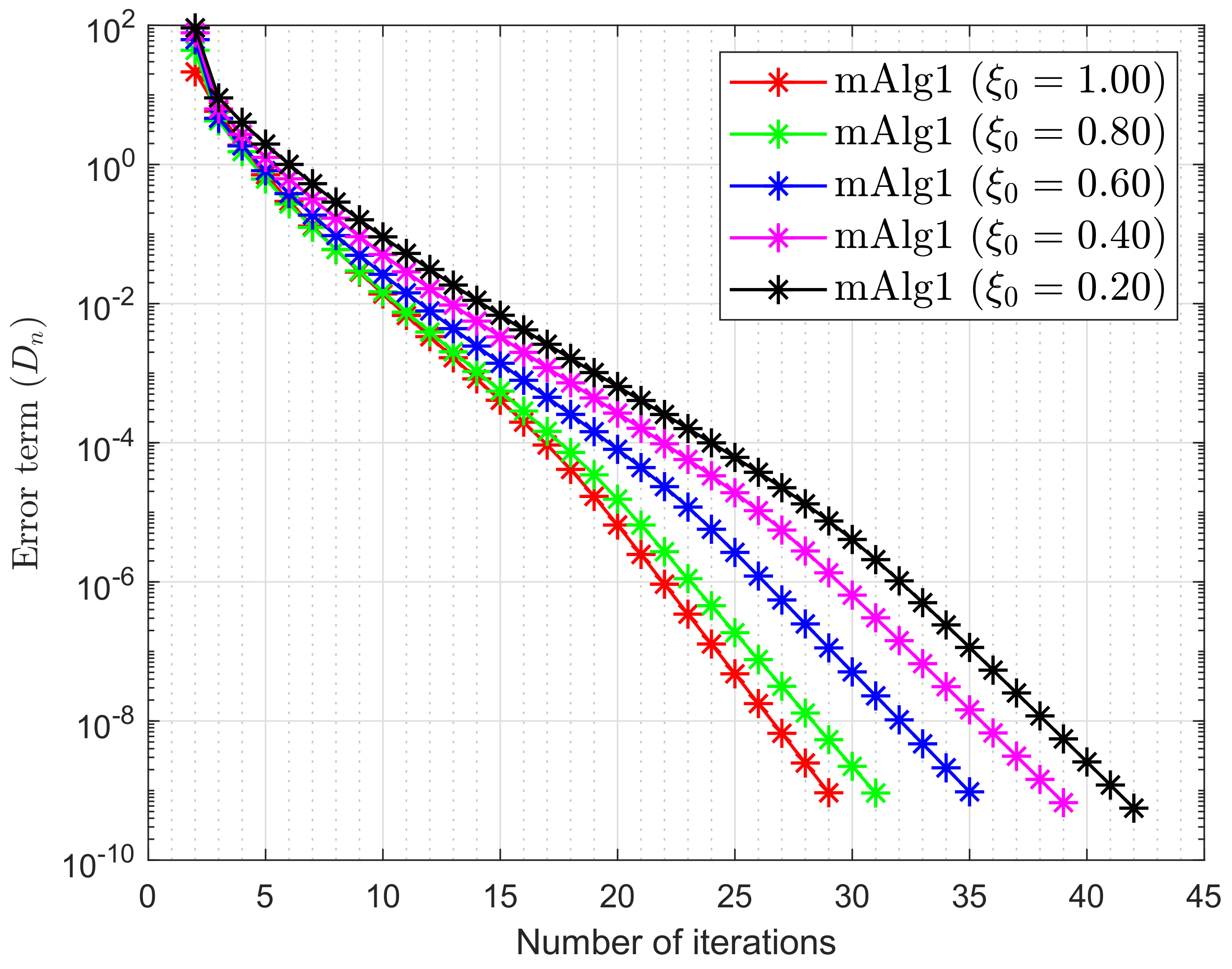

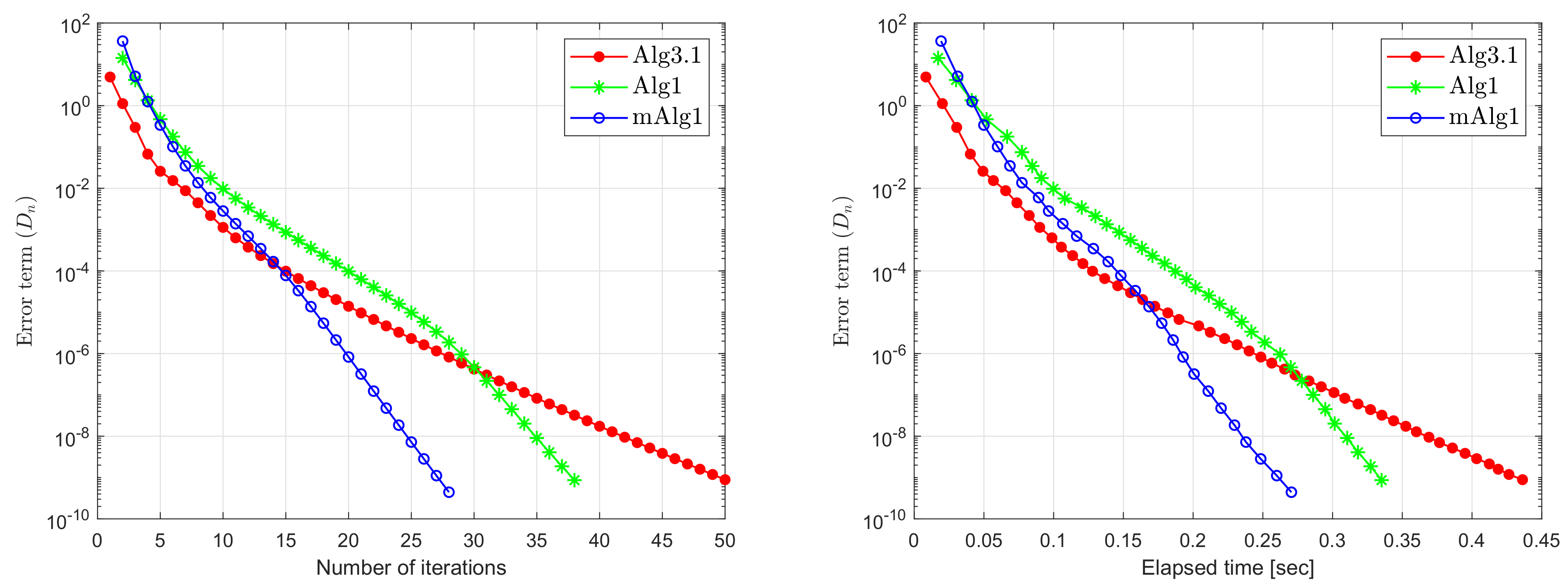

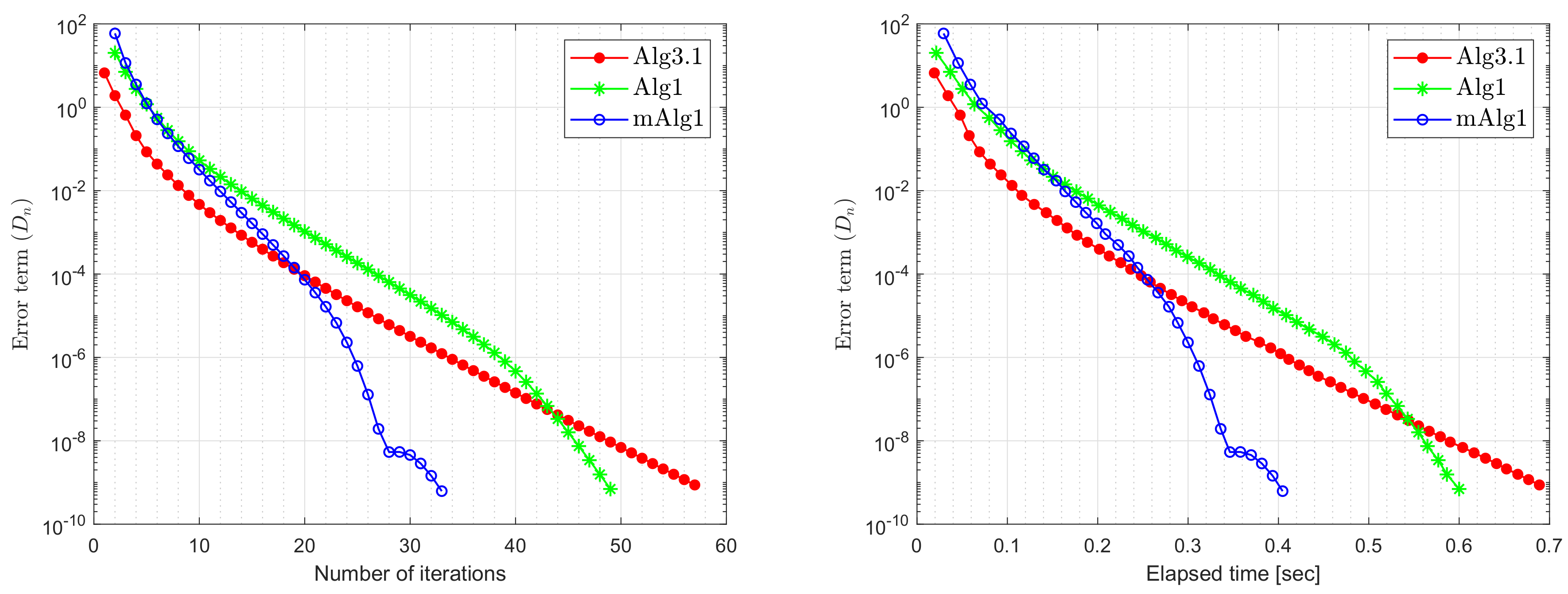

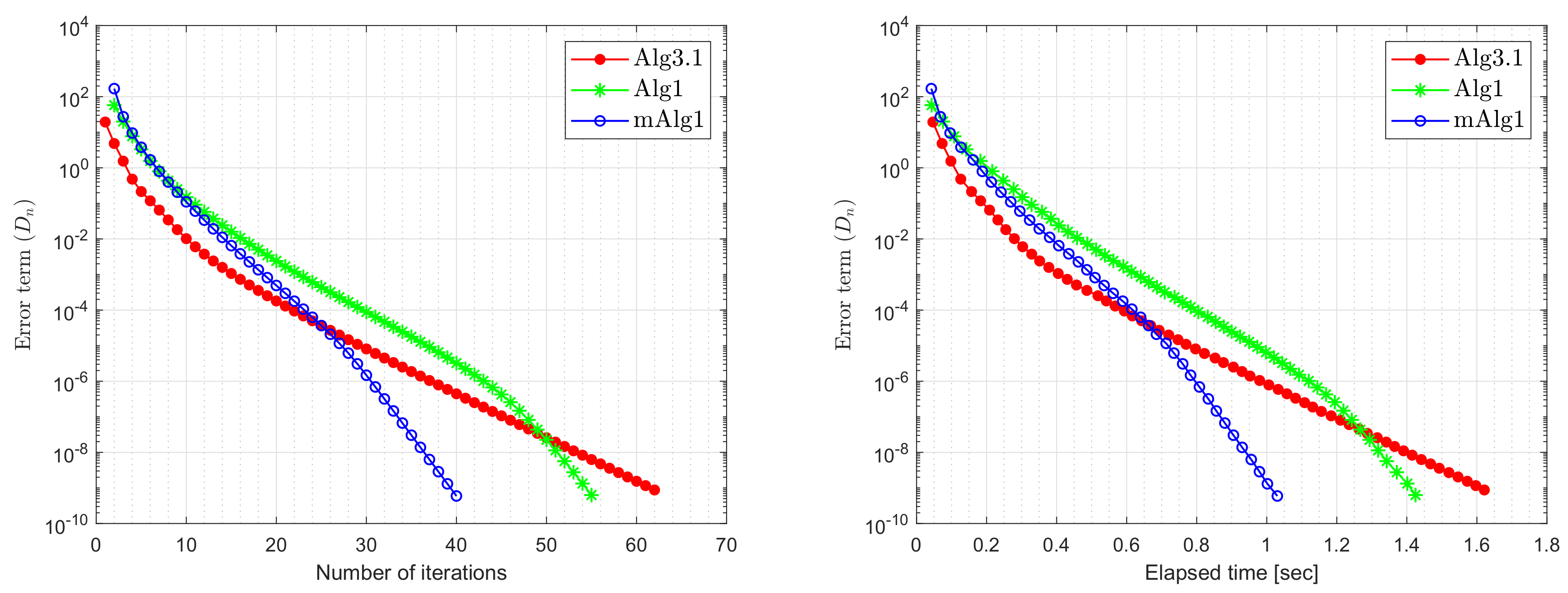

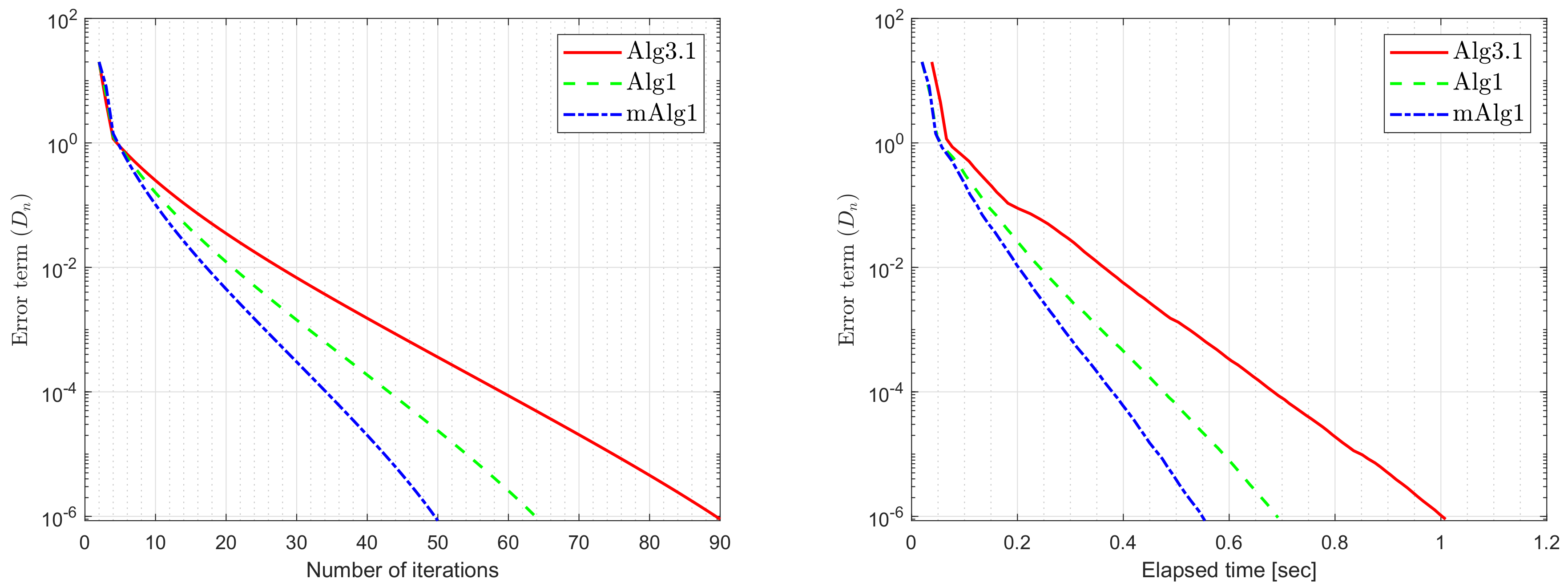

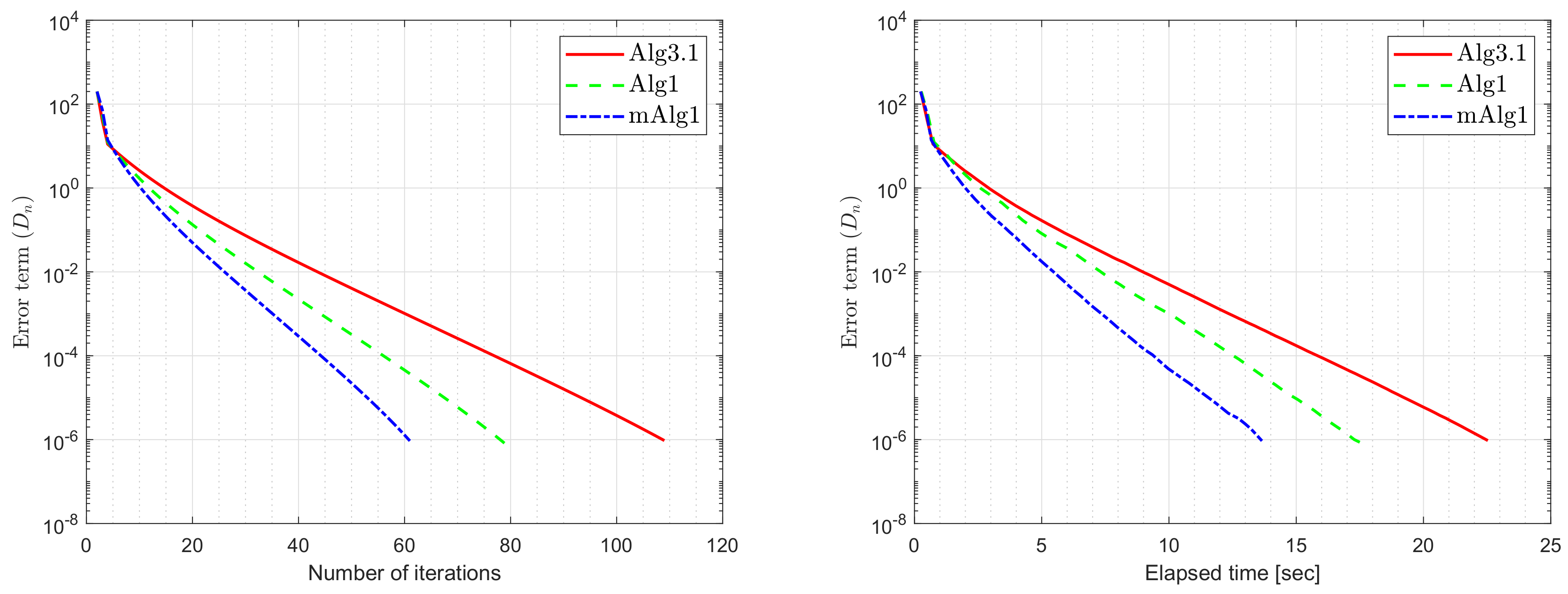

6. Numerical Experiments

- (i) The choice of is crucial; the method performs best when it is nearer to

- (ii) The choice of ϑ is also often significant; roughly, the value performs better than most other values.

7. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest


| | m = 10 | | m = 20 | | m = 50 | | m = 100 | |
|---|---|---|---|---|---|---|---|---|
| | iter. | time | iter. | time | iter. | time | iter. | time |
| 1.00 | 20 | 0.1701 | 25 | 0.2153 | 29 | 0.2726 | 40 | 0.5570 |
| 0.80 | 23 | 0.1945 | 27 | 0.2326 | 31 | 0.2788 | 47 | 0.5469 |
| 0.60 | 25 | 0.1995 | 30 | 0.2634 | 35 | 0.3285 | 52 | 0.6228 |
| 0.40 | 29 | 0.1467 | 33 | 0.2979 | 39 | 0.3549 | 55 | 0.6542 |
| 0.20 | 30 | 0.2632 | 35 | 0.2868 | 42 | 0.3849 | 57 | 0.6662 |

| | Number of Iterations | | | Execution Time in Seconds | | |
|---|---|---|---|---|---|---|
| | Alg3.1 | Alg1 | mAlg1 | Alg3.1 | Alg1 | mAlg1 |
| 60 | 50 | 38 | 28 | 0.4362 | 0.3352 | 0.2705 |
| 120 | 57 | 49 | 33 | 0.6888 | 0.6000 | 0.4047 |
| 200 | 66 | 57 | 39 | 1.4708 | 1.0881 | 0.6794 |
| 300 | 62 | 55 | 40 | 1.6213 | 1.4251 | 1.0303 |

| | Number of Iterations | | | Execution Time in Seconds | | |
|---|---|---|---|---|---|---|
| | Alg3.1 | Alg1 | mAlg1 | Alg3.1 | Alg1 | mAlg1 |
| 0.90 | 67 | 56 | 47 | 2.8674 | 2.5324 | 1.6734 |
| 0.70 | 63 | 53 | 45 | 2.7813 | 2.6423 | 1.5026 |
| 0.50 | 57 | 47 | 41 | 2.0912 | 2.4212 | 1.4991 |
| 0.30 | 61 | 48 | 44 | 2.4115 | 2.3567 | 1.5092 |
| 0.10 | 69 | 60 | 47 | 2.9229 | 2.2881 | 1.5098 |

| | Number of Iterations | | | Execution Time in Seconds | | |
|---|---|---|---|---|---|---|
| | Alg3.1 | Alg1 | mAlg1 | Alg3.1 | Alg1 | mAlg1 |
| | 33 | 28 | 19 | 4.7654 | 3.9782 | 2.9342 |
| | 38 | 31 | 20 | 5.2598 | 4.1458 | 3.0987 |
| | 41 | 33 | 22 | 5.9876 | 5.3976 | 4.4298 |
| | 47 | 39 | 22 | 6.9921 | 5.4765 | 4.4611 |
| | 58 | 43 | 31 | 8.4691 | 5.8329 | 5.0321 |

| | Number of Iterations | | | Execution Time in Seconds | | |
|---|---|---|---|---|---|---|
| | Alg3.1 | Alg1 | mAlg1 | Alg3.1 | Alg1 | mAlg1 |
| 20 | 90 | 64 | 50 | 1.0089 | 0.6923 | 0.5541 |
| 50 | 98 | 70 | 52 | 1.6089 | 1.9092 | 0.8464 |
| 100 | 104 | 74 | 58 | 2.9231 | 2.1456 | 1.6970 |
| 200 | 109 | 79 | 61 | 22.5299 | 17.6267 | 13.6542 |
| 300 | 112 | 81 | 63 | 52.6776 | 39.0018 | 36.6305 |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Wairojjana, N.; Rehman, H.u.; De la Sen, M.; Pakkaranang, N. A General Inertial Projection-Type Algorithm for Solving Equilibrium Problem in Hilbert Spaces with Applications in Fixed-Point Problems. Axioms 2020, 9, 101. https://doi.org/10.3390/axioms9030101
