Abstract

We propose two new iterative algorithms for solving K-pseudomonotone variational inequality problems in the framework of real Hilbert spaces. These methods are obtained by combining the viscosity approximation algorithm, the Picard–Mann algorithm and the inertial subgradient extragradient method. We establish strong convergence theorems for the proposed methods under certain restrictions. Our results extend and improve several recently announced results. Furthermore, we present several numerical experiments showing that the proposed algorithms perform better than several existing methods.

Keywords:

K-pseudomonotone; inertial iterative algorithms; variational inequality problems; Hilbert spaces; strong convergence

MSC:

47H10; 47H05; 68W10; 65K15; 65Y05

1. Introduction

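In this paper, the set C denotes a nonempty closed convex subset of a real Hilbert space $H$. The inner product of H is denoted by $\langle \cdot, \cdot \rangle$ and the induced norm by $\|\cdot\|$. Suppose $A: H \to H$ is an operator. The variational inequality problem (VIP) for the operator A on C is to find a point $x^* \in C$ such that

$$\langle A x^*, x - x^* \rangle \ge 0 \quad \text{for all } x \in C. \qquad (1)$$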

In this study, we denote the solution set of (VIP) (1) by $VI(C,A)$. The theory of variational inequalities was introduced by Stampacchia [1]. It is known that the (VIP) arises in models from many fields of study, such as mathematics, physics, the natural and social sciences, management science and engineering. The ideas and methods of variational inequalities have been applied innovatively in diverse areas of science and engineering and have proved very effective in solving certain problems. The theory of (VIP) provides a natural, simple and unified setting for a comprehensive treatment of otherwise unrelated problems (see, e.g., [2]). Several authors have developed efficient numerical methods for solving the (VIP). These methods include the projection method and its variants (see, e.g., [3,4,5,6,7,8,9,10,11,12,13]). The fundamental idea is to extend the well-known projected gradient algorithm, which is useful in solving the minimization problem $\min f(x)$ subject to $x \in C$. This method is given as follows:

$$x_{n+1} = P_C\big(x_n - \lambda_n \nabla f(x_n)\big), \quad n \ge 0, \qquad (2)$$

where the real sequence $\{\lambda_n\}$ satisfies certain conditions and $P_C$ is the well-known metric projection onto C. The interested reader may consult [14] for a convergence analysis of this algorithm in the special case in which the mapping $f: H \to \mathbb{R}$ is convex and differentiable. Algorithm (2) has been extended to the (VIP), where it is known as the projected gradient method. This is done by replacing the gradient $\nabla f$ with the operator A, thereby generating a sequence $\{x_n\}$ as follows:

$$x_{n+1} = P_C(x_n - \lambda_n A x_n), \quad n \ge 0. \qquad (3)$$

However, the major drawback of this method is that the operator A must be strongly monotone or inverse strongly monotone (see, e.g., [15]) to guarantee convergence. In 1976, Korpelevich [16] removed this strong condition by introducing the extragradient method for solving saddle point problems. The extragradient method was later extended to variational inequality problems in both Euclidean and Hilbert spaces. The only restriction required for the extragradient algorithm to converge is that the operator A is monotone and L-Lipschitz continuous. The extragradient method is given as follows:

$$y_n = P_C(x_n - \lambda A x_n), \qquad x_{n+1} = P_C(x_n - \lambda A y_n), \qquad (4)$$

where $\lambda \in (0, 1/L)$ and the metric projection from H onto C is denoted by $P_C$. If the solution set $VI(C,A)$ of the (VIP) is nonempty, then the sequence $\{x_n\}$ generated by iterative algorithm (4) converges weakly to an element of $VI(C,A)$.
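To illustrate the drawback described above, here is a minimal sketch, assuming $H = \mathbb{R}^2$, $C = [-1,1]^2$ and the hypothetical rotation operator $A(x_1, x_2) = (x_2, -x_1)$, which is monotone and 1-Lipschitz but not strongly monotone; the origin is the unique solution of the (VIP) on this C. From the chosen start, the projected gradient iteration (3) never comes closer than distance 1 to the origin, while the extragradient iteration (4) with $\lambda = 0.5 < 1/L$ converges to it. The operator, box and step size are illustrative assumptions, not taken from the paper.

```python
import math

def proj_box(x, lo=-1.0, hi=1.0):
    """Metric projection P_C onto the box C = [lo, hi]^n (componentwise clipping)."""
    return [min(max(xi, lo), hi) for xi in x]

def A_rot(x):
    """Hypothetical test operator A(x1, x2) = (x2, -x1): monotone, 1-Lipschitz, not strongly monotone."""
    return [x[1], -x[0]]

def step(x, v, lam):
    """Return x - lam * v componentwise."""
    return [xi - lam * vi for xi, vi in zip(x, v)]

def projected_gradient(x0, lam, iters):
    # Iteration (3): x_{n+1} = P_C(x_n - lam * A x_n)
    x = list(x0)
    for _ in range(iters):
        x = proj_box(step(x, A_rot(x), lam))
    return x

def extragradient(x0, lam, iters):
    # Iteration (4): y_n = P_C(x_n - lam A x_n), x_{n+1} = P_C(x_n - lam A y_n)
    x = list(x0)
    for _ in range(iters):
        y = proj_box(step(x, A_rot(x), lam))
        x = proj_box(step(x, A_rot(y), lam))
    return x

x0 = [1.0, 0.5]
lam = 0.5  # lam < 1/L with L = 1
pg = projected_gradient(x0, lam, 300)
eg = extragradient(x0, lam, 300)
print(math.hypot(*pg))  # distance of the projected gradient iterate from the solution 0
print(math.hypot(*eg))  # distance of the extragradient iterate from the solution 0
```

For this operator an unprojected gradient step multiplies the norm by $\sqrt{1+\lambda^2} > 1$, and a projected point lies on the boundary of the box, so the projected gradient iterates cannot approach the origin; the extra evaluation $A y_n$ in (4) is what restores convergence.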

Observe that the extragradient method requires two projections onto the set C in every iteration. Computing a projection onto a closed convex set amounts to solving a minimum distance problem, so when C is a general closed convex set, this method may require a prohibitive amount of computation time. In view of this drawback, in 2011 Censor et al. [5] introduced the subgradient extragradient method by modifying iterative algorithm (4). They replaced the two projections onto C in (4) by one projection onto C and one projection onto a half-space, which has an explicit closed form and is therefore much easier to calculate. The subgradient extragradient method of Censor et al. [5] is given as follows:

$$y_n = P_C(x_n - \lambda A x_n),$$
$$T_n = \{ w \in H : \langle x_n - \lambda A x_n - y_n, w - y_n \rangle \le 0 \},$$
$$x_{n+1} = P_{T_n}(x_n - \lambda A y_n), \qquad (5)$$

where $\lambda \in (0, 1/L)$. Several authors have studied the subgradient extragradient method and obtained interesting and applicable results (see, e.g., [11] and the references therein).
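The only structural change in the subgradient extragradient method is that the second projection is onto the half-space $T_n = \{w : \langle a, w - y_n\rangle \le 0\}$ with $a = x_n - \lambda A x_n - y_n$, which has the closed form $P_{T_n}(z) = z - \frac{\max\{0, \langle a, z - y_n\rangle\}}{\|a\|^2}\, a$ (and $T_n = H$ when $a = 0$). The sketch below assumes the same hypothetical box $C = [-1,1]^2$, rotation operator and step size $\lambda = 0.5$ as a toy setting; it is an illustration of the update, not the authors' code.

```python
import math

def proj_box(x, lo=-1.0, hi=1.0):
    """Metric projection onto C = [lo, hi]^n."""
    return [min(max(xi, lo), hi) for xi in x]

def proj_halfspace(z, a, y):
    """Project z onto T = {w : <a, w - y> <= 0}; T is the whole space when a = 0."""
    aa = sum(ai * ai for ai in a)
    viol = sum(ai * (zi - yi) for ai, zi, yi in zip(a, z, y))
    if aa == 0.0 or viol <= 0.0:
        return list(z)  # z already lies in T
    return [zi - (viol / aa) * ai for zi, ai in zip(z, a)]

def A_rot(x):
    """Hypothetical monotone, 1-Lipschitz test operator A(x1, x2) = (x2, -x1)."""
    return [x[1], -x[0]]

def segm(x0, lam, iters):
    x = list(x0)
    for _ in range(iters):
        u = [xi - lam * ai for xi, ai in zip(x, A_rot(x))]  # x_n - lam A x_n
        y = proj_box(u)                                      # the only projection onto C
        a = [ui - yi for ui, yi in zip(u, y)]                # normal vector of the half-space T_n
        z = [xi - lam * ai for xi, ai in zip(x, A_rot(y))]   # x_n - lam A y_n
        x = proj_halfspace(z, a, y)                          # explicit projection onto T_n
    return x

x = segm([1.0, 0.5], lam=0.5, iters=300)
print(math.hypot(*x))  # distance from the unique solution 0
```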

The theory of pseudomonotone operators is crucial in nonlinear analysis, variational inequalities and optimization (see, e.g., [17,18,19,20]). An important class of pseudomonotone operators was introduced in 1976 by Karamardian [21] and has been used to solve problems in variational inequalities, optimization and economics (see, e.g., [17,20]). In this paper, we call operators that are pseudomonotone in the sense of Karamardian K-pseudomonotone. Yao [20] used K-pseudomonotone operators to solve some variational inequality problems in Banach spaces. He established new existence results that extend many known results in infinite-dimensional spaces under weak assumptions, and he also proved uniqueness results for the complementarity problem with K-pseudomonotone operators in Banach spaces. The purpose of the present paper is to introduce two new inertial subgradient extragradient iterative algorithms for solving K-pseudomonotone variational inequality problems in the framework of real Hilbert spaces.

The inertial type iterative algorithms are based on a discrete version of a second-order dissipative dynamical system (see [22,23]). Such algorithms can be seen as a means of accelerating the convergence of a given method (see, e.g., [24,25,26]). In 2001, Alvarez and Attouch [24] used the inertial technique to derive a proximal algorithm for finding a zero of a maximal monotone operator A. Their method is given as follows: given $x_{n-1}, x_n \in H$ and two parameters $\theta_n \in [0,1)$ and $\lambda_n > 0$, obtain $x_{n+1} \in H$ such that

$$0 \in \lambda_n A(x_{n+1}) + x_{n+1} - x_n - \theta_n (x_n - x_{n-1}).$$

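This algorithm can be written equivalently as follows:

$$x_{n+1} = J_{\lambda_n}^A\big(x_n + \theta_n (x_n - x_{n-1})\big),$$

where $J_{\lambda_n}^A = (I + \lambda_n A)^{-1}$ is the resolvent of the operator A with parameter $\lambda_n$, and the inertia is induced by the term $\theta_n (x_n - x_{n-1})$.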

Several researchers have developed fast iterative algorithms by using inertial techniques. These include the inertial Douglas–Rachford splitting method (see, e.g., [27]), inertial forward–backward splitting methods (see, e.g., [28]), inertial ADMM (see, e.g., [29]), the inertial proximal–extragradient method (see, e.g., [30]), the inertial forward–backward–forward method (see, e.g., [31]), the inertial contraction method (see, e.g., [32]), the inertial Tseng method (see, e.g., [33]) and the inertial Mann method (see, e.g., [11]).
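The inertial proximal iteration of Alvarez and Attouch, $x_{n+1} = J_{\lambda}^A(x_n + \theta(x_n - x_{n-1}))$, can be sketched for a case where the resolvent is explicit. We assume, purely for illustration, the linear monotone operator $A(x_1,x_2) = (x_2, -x_1)$ on $\mathbb{R}^2$, whose unique zero is the origin, together with constant hypothetical parameters $\theta = 0.3$ and $\lambda = 1$.

```python
import math

def resolvent_rot(v, lam):
    """Resolvent (I + lam*A)^{-1} for the linear operator A(x1, x2) = (x2, -x1).

    I + lam*A = [[1, lam], [-lam, 1]], whose inverse is [[1, -lam], [lam, 1]] / (1 + lam^2).
    """
    d = 1.0 + lam * lam
    return [(v[0] - lam * v[1]) / d, (lam * v[0] + v[1]) / d]

def inertial_proximal(x_prev, x_curr, theta, lam, iters):
    # x_{n+1} = J_lam(x_n + theta * (x_n - x_{n-1})): extrapolate, then apply the resolvent
    for _ in range(iters):
        w = [xc + theta * (xc - xp) for xc, xp in zip(x_curr, x_prev)]
        x_prev, x_curr = x_curr, resolvent_rot(w, lam)
    return x_curr

x = inertial_proximal([1.0, 1.0], [1.0, 1.0], theta=0.3, lam=1.0, iters=200)
print(math.hypot(*x))  # distance from the zero of A (the origin)
```

The extrapolated point $w$ carries the "momentum" term $\theta(x_n - x_{n-1})$; setting `theta=0.0` recovers the plain proximal point method.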

Inspired by the results above, we propose two inertial subgradient extragradient methods for solving the (VIP) with a K-pseudomonotone and Lipschitz continuous operator. Our first iterative algorithm is a hybrid of the inertial subgradient extragradient method [11], the viscosity method [34] and the Picard–Mann method [35]. Our second method combines the inertial subgradient extragradient method [11] and the Picard–Mann method [35].

This paper is organized as follows. In Section 2, we give some preliminary definitions of concepts and results that will be crucial in this study. In Section 3, we present our proposed iterative algorithms and prove some convergence results for them. In Section 4, we present some numerical experiments to support the convergence of our proposed iterative algorithms. In Section 5, we give the concluding remarks of the study.

2. Preliminaries

In this paper, the set C denotes a nonempty closed convex subset of a real Hilbert space $H$. The inner product of H is denoted by $\langle \cdot, \cdot \rangle$ and the induced norm by $\|\cdot\|$.

We denote the weak convergence of the sequence $\{x_n\}$ to x by $x_n \rightharpoonup x$ as $n \to \infty$, and the strong convergence of $\{x_n\}$ to x by $x_n \to x$ as $n \to \infty$.

For each and we recall the following inequalities in Hilbert spaces:

A mapping $T: H \to H$ is said to be nonexpansive if for each $x, y \in H$ we have $\|Tx - Ty\| \le \|x - y\|$.

For each $x \in H$, there exists a unique nearest point in C, denoted by $P_C x$, such that

$$\|x - P_C x\| \le \|x - y\|$$

for each $y \in C$. Then $P_C$ is known as the metric projection of H onto C. It is well known that the mapping $P_C$ is nonexpansive.
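As a concrete instance of the metric projection, the closed ball $B(c, r) \subset \mathbb{R}^n$ has the explicit projection $P(x) = c + r\,(x - c)/\max\{r, \|x - c\|\}$. The sketch below, a toy illustration with hypothetical dimension and sampling range, implements it and checks nonexpansiveness on random pairs.

```python
import math
import random

def proj_ball(x, center, radius):
    """Metric projection onto the closed ball B(center, radius) in R^n."""
    d = [xi - ci for xi, ci in zip(x, center)]
    norm = math.sqrt(sum(di * di for di in d))
    if norm <= radius:
        return list(x)            # points of C are their own nearest points
    s = radius / norm             # otherwise pull x radially back onto the sphere
    return [ci + s * di for ci, di in zip(center, d)]

def dist(u, v):
    return math.sqrt(sum((ui - vi) ** 2 for ui, vi in zip(u, v)))

rng = random.Random(0)
c, r = [0.0, 0.0, 0.0], 1.0
worst = 0.0
for _ in range(1000):
    x = [rng.uniform(-3, 3) for _ in range(3)]
    y = [rng.uniform(-3, 3) for _ in range(3)]
    # nonexpansiveness: ||P x - P y|| - ||x - y|| should never be positive
    worst = max(worst, dist(proj_ball(x, c, r), proj_ball(y, c, r)) - dist(x, y))
print(worst <= 1e-12)
```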

Lemma 1

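([36]). Suppose that C is a closed convex subset of a real Hilbert space H and $x \in H$. Then the following hold:

- (i) $\|P_C x - P_C y\|^2 \le \langle P_C x - P_C y, x - y \rangle$ for all $y \in H$;

- (ii) $\|P_C x - y\|^2 \le \|x - y\|^2 - \|x - P_C x\|^2$ for all $y \in C$;

- (iii) Given $x \in H$ and $z \in C$, we have $z = P_C x$ if and only if $\langle x - z, z - y \rangle \ge 0$ for all $y \in C$.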

For more properties of the metric projection, the interested reader should see Section 3 of [36].
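The standard projection properties can be checked numerically for a set with an explicit projection. This sketch assumes a hypothetical box $C = [-1, 2]^4$ and random samples, and tests firm nonexpansiveness $\|P_C x - P_C y\|^2 \le \langle P_C x - P_C y, x - y\rangle$, the inequality $\|P_C x - c\|^2 \le \|x - c\|^2 - \|x - P_C x\|^2$ for $c \in C$, and the characterization $\langle x - P_C x, P_C x - c\rangle \ge 0$ for $c \in C$. Random sampling is only a sanity check, not a proof.

```python
import random

def proj_box(x, lo, hi):
    """Metric projection onto the box C = [lo, hi]^n."""
    return [min(max(xi, lo), hi) for xi in x]

def dot(u, v):
    return sum(ui * vi for ui, vi in zip(u, v))

def sub(u, v):
    return [ui - vi for ui, vi in zip(u, v)]

rng = random.Random(1)
lo, hi, n, tol = -1.0, 2.0, 4, 1e-9
ok = {"i": True, "ii": True, "iii": True}
for _ in range(2000):
    x = [rng.uniform(-5, 5) for _ in range(n)]
    y = [rng.uniform(-5, 5) for _ in range(n)]   # arbitrary point of H
    c = [rng.uniform(lo, hi) for _ in range(n)]  # arbitrary point of C
    px, py = proj_box(x, lo, hi), proj_box(y, lo, hi)
    d = sub(px, py)
    # (i) firm nonexpansiveness
    ok["i"] &= dot(d, d) <= dot(d, sub(x, y)) + tol
    # (ii) ||P x - c||^2 <= ||x - c||^2 - ||x - P x||^2 for c in C
    ok["ii"] &= dot(sub(px, c), sub(px, c)) <= dot(sub(x, c), sub(x, c)) - dot(sub(x, px), sub(x, px)) + tol
    # (iii) <x - P x, P x - c> >= 0 for c in C
    ok["iii"] &= dot(sub(x, px), sub(px, c)) >= -tol
print(ok)
```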

The fixed point problem involves finding a fixed point of an operator $A: H \to H$. The set of fixed points of the operator A is denoted by $F(A)$, and we assume that it is nonempty, that is, $F(A) \neq \emptyset$. The fixed point problem is then formulated as follows: find $x \in H$ such that $Ax = x$.

In this paper, our problem of interest is to find a point such that

Definition 1.

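Let $A: H \to H$ be a mapping. Then, for all $x, y \in H$:

- (i) A is said to be L-Lipschitz continuous with $L > 0$ if $\|Ax - Ay\| \le L \|x - y\|$. If $L < 1$, then A is called a contraction mapping.

- (ii) A is said to be monotone if $\langle Ax - Ay, x - y \rangle \ge 0$.

- (iii) A is said to be pseudomonotone in the sense of Karamardian [21], or K-pseudomonotone for short, if $\langle Ax, y - x \rangle \ge 0 \implies \langle Ay, y - x \rangle \ge 0$.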
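Every monotone operator is K-pseudomonotone, but not conversely. A simple scalar illustration, assumed here for exposition only, is $A(x) = e^{-x}$ on $H = \mathbb{R}$: it is positive, so $A(x)(y - x) \ge 0$ forces $y \ge x$ and hence $A(y)(y - x) \ge 0$, yet it is strictly decreasing and therefore not monotone. The sketch checks both definitions on a finite grid.

```python
import math

def A(x):
    """Hypothetical scalar operator A(x) = exp(-x) on H = R."""
    return math.exp(-x)

grid = [i / 10.0 for i in range(-30, 31)]  # sample points in [-3, 3]

def is_monotone(op, pts):
    # (A x - A y)(x - y) >= 0 for every sampled pair
    return all((op(x) - op(y)) * (x - y) >= 0 for x in pts for y in pts)

def is_k_pseudomonotone(op, pts):
    # <A x, y - x> >= 0 implies <A y, y - x> >= 0 for every sampled pair
    return all(op(y) * (y - x) >= 0 for x in pts for y in pts if op(x) * (y - x) >= 0)

print(is_monotone(A, grid), is_k_pseudomonotone(A, grid))  # False True
```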

The following lemmas will be needed in this paper.

Lemma 2.

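([37]). Suppose $\{\Gamma_n\}$ is a real sequence of nonnegative numbers such that there is a subsequence $\{\Gamma_{n_j}\}$ of $\{\Gamma_n\}$ such that $\Gamma_{n_j} < \Gamma_{n_j + 1}$ for all $j \in \mathbb{N}$. Then there is a nondecreasing sequence $\{\tau(n)\}$ of $\mathbb{N}$ such that $\lim_{n \to \infty} \tau(n) = \infty$ and the following properties are fulfilled for each (sufficiently large) number $n \in \mathbb{N}$:

$$\Gamma_{\tau(n)} \le \Gamma_{\tau(n)+1}, \qquad \Gamma_n \le \Gamma_{\tau(n)+1}.$$

In fact, $\tau(n)$ is the largest number k in the set $\{1, 2, \ldots, n\}$ such that $\Gamma_k < \Gamma_{k+1}$.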
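The index map $\tau(n) = \max\{k \le n : \Gamma_k < \Gamma_{k+1}\}$ is easy to compute for a finite sample. The sketch below uses a hypothetical sequence (0-indexed) and asserts both properties of Lemma 2 for every n at which $\tau(n)$ is defined.

```python
def tau(gamma, n):
    """Largest k <= n with gamma[k] < gamma[k + 1]; None if no such k exists."""
    for k in range(min(n, len(gamma) - 2), -1, -1):
        if gamma[k] < gamma[k + 1]:
            return k
    return None

gamma = [5, 3, 4, 2, 1, 6, 2, 1, 7, 0]  # hypothetical sample sequence
for n in range(len(gamma)):
    t = tau(gamma, n)
    if t is None:
        continue  # n is not yet "sufficiently large"
    assert gamma[t] <= gamma[t + 1]  # first property
    assert gamma[n] <= gamma[t + 1]  # second property
    print(n, t)
```

The second property holds because, between $\tau(n)+1$ and $n$, the sequence never increases.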

Lemma 3.

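([38]). Let $\{a_n\}$ be a sequence of nonnegative real numbers such that

$$a_{n+1} \le (1 - \alpha_n) a_n + \alpha_n b_n$$

for all $n \ge 0$, where $\{\alpha_n\} \subset (0,1)$ and $\{b_n\}$ is a sequence such that

- (a) $\sum_{n=0}^{\infty} \alpha_n = \infty$;

- (b) $\limsup_{n \to \infty} b_n \le 0$.

Then $\lim_{n \to \infty} a_n = 0$.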
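A quick numerical illustration of Lemma 3 with the hypothetical choices $\alpha_n = 1/(n+2)$ (so $\sum \alpha_n = \infty$) and $b_n = 1/(n+1)$ (so $\limsup b_n = 0$), taking the recursion with equality. In this case $c_n = (n+1)a_n$ satisfies $c_{n+1} = c_n + 1/(n+1)$, so $a_N = (a_0 + H_N)/(N+1)$ with $H_N$ the N-th harmonic number, which tends to 0 slowly.

```python
def run_recursion(a0, N):
    """Iterate a_{n+1} = (1 - alpha_n) a_n + alpha_n b_n with alpha_n = 1/(n+2), b_n = 1/(n+1)."""
    a = a0
    for n in range(N):
        alpha, b = 1.0 / (n + 2), 1.0 / (n + 1)
        a = (1 - alpha) * a + alpha * b
    return a

for N in (10, 1000, 100000):
    print(N, run_recursion(1.0, N))
```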

3. Main Results

The following condition will be needed in this study.

Condition 3.1

The operator $A: H \to H$ is K-pseudomonotone and L-Lipschitz continuous on the real Hilbert space H, the solution set $VI(C,A)$ of the (VIP) (1) is nonempty, and $f: H \to H$ is a contraction mapping with contraction parameter in $[0,1)$. The feasible set C is nonempty, closed and convex.

3.1. The Viscosity Inertial Subgradient Extragradient Algorithm

We propose the following algorithm

Algorithm 3.1

Step 0: Given for some and satisfying the following conditions:

Choose initial and set

Step 1: Compute

If then stop, is a solution to the (VIP) problem. Otherwise, go to Step 2.

Step 2: Construct the half-space

and compute

Step 3: Calculate

and compute

Let and return to Step 1.
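The update formulas of Algorithm 3.1 did not survive in this version of the text, so we do not reproduce them. As an illustration of its viscosity component alone (Moudafi [34]), the iteration $x_{n+1} = \alpha_n f(x_n) + (1 - \alpha_n) T x_n$ drives a nonexpansive map T toward the fixed point p with $p = P_{F(T)} f(p)$. The sketch assumes a hypothetical setting: $T = P_C$ for the box $C = [0,1]^2$ (so $F(T) = C$), the contraction $f(x) = x/2 + (2, 2)$ and $\alpha_n = 1/(n+2)$; then $p = (1, 1)$.

```python
def proj_box(x, lo=0.0, hi=1.0):
    """Nonexpansive map T = P_C for the box C = [0, 1]^n; its fixed point set is C."""
    return [min(max(xi, lo), hi) for xi in x]

def f(x):
    """Hypothetical contraction f(x) = x/2 + (2, ..., 2) with contraction parameter 1/2."""
    return [0.5 * xi + 2.0 for xi in x]

def viscosity(x0, iters):
    # x_{n+1} = alpha_n f(x_n) + (1 - alpha_n) T x_n with alpha_n = 1/(n+2)
    x = list(x0)
    for n in range(iters):
        alpha = 1.0 / (n + 2)
        fx, tx = f(x), proj_box(x)
        x = [alpha * a + (1 - alpha) * b for a, b in zip(fx, tx)]
    return x

print(viscosity([0.0, 0.0], 10000))  # approaches p = (1, 1), which satisfies p = P_C f(p)
```

The anchor term $\alpha_n f(x_n)$ is what selects one particular fixed point and yields strong convergence; its vanishing weight makes the error decay only like $O(1/n)$ here.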

Next, we prove the following result, which will be useful in this study.

Lemma 4.

Let be a sequence generated by Algorithm 3.1. Then

for all

Proof.

Since then by Equation (10) and Lemma 2 (i) we have the following

Hence, from Equation (25) we obtain

Using the condition that A is K-pseudomonotone, we have that  Adding this to the right-hand side of inequality (25), we obtain the following

Next, we have the following estimates using the condition that A is L-Lipschitz continuous

Since and we obtain This implies that

Using Equations (28) and (29) in Equation (27), we obtain:

The proof of Lemma 4 is completed. □

Next, we prove the following result for Algorithm 3.1.

Theorem 1.

Assume that the sequence is chosen such that

Suppose that is a sequence generated by our Algorithm 3.1, then converges strongly to an element where we have that

Proof.

Claim I

We need to prove that the sequence is bounded, for each By Lemma 4 we have

This implies that

Using Equation (18), we have

Using the condition that it follows that there exists a constant such that

Hence, using Equations (34) and (35) in Equation (33) we obtain

Using (23) and the condition that f is a contraction mapping, we have

By Equation (22), we have

Using Equation (38) in Equation (37), we obtain:

Using Equation (36) in Equation (39), we have

This means that is bounded. Hence, it follows that and are bounded.

Claim II

for some By Equation (23), we have

for some From Equation (22), we have

for some Using Equation (32) in Equation (43), we obtain

From Equation (36), we have

This implies that

for some Combining Equations (44) and (46), we have

Using Equation (47) in Equation (42), we have

This implies that

where

Claim III

for some Using Equations (10) and (18), we have

By Equations (10) and (23), we have

Using Equations (9) and (22), we have

Claim IV

We need to prove that the sequence converges to zero by considering two possible cases.

Case I

There exists a number such that for each This implies that exists and by Claim II, we have

The fact that the sequence is bounded implies that there exists a subsequence of that converges weakly to some such that

Using Equation (56) and Lemma 3, we get From Equation (57) and the fact that we get

Next, we prove that

Clearly,

Combining Equations (56) and (60) we have

Using Equations (58) and (59) we have

Hence by Lemma 3 and Claim III we have

Case II

We can find a subsequence of satisfying for each Hence, by Lemma 2 it follows that we can find a nondecreasing real sequence of satisfying and we get the following inequalities for every :

By Claim II we get

Hence, we have

By similar arguments as in the proof of Case I, we have

and

By Claim III we obtain

By Equations (63) and (68) we have:

Hence, we have

Therefore we obtain:

Combining Equations (63) and (71) we obtain this means that The proof of Theorem 1 is completed. □

Remark 1.

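Suantai et al. [39] observed that condition (31) can easily be implemented in numerical computations, since the value of $\|x_n - x_{n-1}\|$ is known before $\theta_n$ is chosen. We can choose $\theta_n$ as follows:

$$\theta_n = \begin{cases} \min\left\{\theta, \dfrac{\epsilon_n}{\|x_n - x_{n-1}\|}\right\}, & \text{if } x_n \ne x_{n-1}, \\ \theta, & \text{otherwise,} \end{cases}$$

where $\theta \ge 0$ and $\{\epsilon_n\}$ is a positive sequence such that $\lim_{n \to \infty} \epsilon_n / \alpha_n = 0$.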
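The point of such a rule is that it is computable from quantities already available at iteration n. Here is a minimal sketch, assuming the commonly used form $\theta_n = \min\{\theta, \epsilon_n / \|x_n - x_{n-1}\|\}$ when $x_n \ne x_{n-1}$ (and $\theta$ otherwise); the values $\theta = 0.5$, $\alpha_n = 1/(n+2)$ and $\epsilon_n = 1/(n+2)^2 = o(\alpha_n)$ are hypothetical. By construction $\theta_n \|x_n - x_{n-1}\| \le \epsilon_n$.

```python
import math

def inertial_parameter(x_curr, x_prev, theta, eps_n):
    """theta_n = min(theta, eps_n / ||x_n - x_{n-1}||) if x_n != x_{n-1}, else theta."""
    diff = math.sqrt(sum((a - b) ** 2 for a, b in zip(x_curr, x_prev)))
    if diff == 0.0:
        return theta
    return min(theta, eps_n / diff)

# Hypothetical choices: theta = 0.5, alpha_n = 1/(n+2), eps_n = 1/(n+2)**2, so eps_n/alpha_n -> 0
n = 10
eps_n = 1.0 / (n + 2) ** 2
th = inertial_parameter([1.0, 2.0], [0.0, 0.0], theta=0.5, eps_n=eps_n)
print(th)
```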

3.2. Picard–Mann Hybrid Type Inertial Subgradient Extragradient Algorithm

We propose the following algorithm

Algorithm 3.2

Step 0: Given for some and satisfying the following conditions:

Choose initial and set

Step 1: Compute

If then stop, is a solution of the (VIP) problem. Otherwise, go to Step 2.

Step 2: Construct the half-space

and compute

Step 3: Calculate

and compute

Let and return to Step 1.
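The Picard–Mann component that gives Algorithm 3.2 its name is Khan's hybrid iteration [35]: $y_n = (1 - \alpha_n) x_n + \alpha_n T x_n$, $x_{n+1} = T y_n$. The full update of Algorithm 3.2 is not reproduced in this version of the text, so the sketch below only illustrates this building block on a hypothetical example: $T = \cos$ on $\mathbb{R}$, which maps $\mathbb{R}$ into $[-1,1]$ and is a contraction there (since $|\sin t| \le \sin 1 < 1$), with constant $\alpha_n = 0.5$.

```python
import math

def picard_mann(T, x0, alpha, iters):
    """Picard-Mann hybrid iteration: y_n = (1 - alpha) x_n + alpha T x_n, x_{n+1} = T y_n."""
    x = x0
    for _ in range(iters):
        y = (1 - alpha) * x + alpha * T(x)  # Mann averaging step
        x = T(y)                            # Picard step
    return x

p = picard_mann(math.cos, 1.0, alpha=0.5, iters=100)
print(p)  # approximates the unique fixed point of cos
```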

Next, we prove the following important result for Algorithm 3.2.

Theorem 2.

Suppose that is a real sequence such that the following condition holds:

Then the sequence generated by Algorithm 3.2 converges strongly to an element where

Proof.

We now examine the following claims:

Claim I

We claim that the sequence is bounded. Using similar arguments as in the proof of Theorem 1, we get

This implies that

Moreover, we have

for some

Using Equation (77) we have

Using Equations (10) and (82), we have the following estimate:

This implies that

Therefore, the sequence is bounded. It follows that and are all bounded.

Claim II

We want to show that

for some From Equation (42), we have

for some Using (10) and (77) we get

for some Using Equation (85) in Equation (92), we get

From Equation (36), we get

This implies that

for some Using Equation (96) in Equation (94), we get

for some Using Equation (97) in Equation (91), we have

for some This implies that

Claim III

We want to show that

for some

Using Equations (10) and (78), we have

Next, we have the following estimate, using Equations (10) and (77)

Claim IV

We need to prove that the real sequence converges to 0 by considering the following two cases:

Case I

There exists a number such that for every we have Hence, we have that exists so that by Claim II, we have

Since the sequence is bounded, it follows that there exists a subsequence of such that converges weakly to some such that

Using Equation (107) and Lemma 3, we get From Equation (108) and the fact that we get

Next, we prove that

Clearly,

Combining Equations (107) and (111) we have

Using Equations (109) and (110) we have

Hence by Lemma 3 and Claim III we have

Case II

We can find a subsequence of satisfying for each Hence, by Lemma 2 it follows that there is a nondecreasing real sequence of satisfying so that we get the following inequalities for every :

By Claim II we get

Hence, we have

By similar arguments as in the proof of Case I, we have

and

By Claim III we obtain

for some

By Equations (114) and (119) we have:

Hence, we have

Therefore we obtain:

Combining Equations (114) and (122) we obtain this means that The proof of Theorem 2 is completed. □

4. Numerical Illustrations

In this section, we consider two numerical examples to illustrate the convergence of Algorithms 3.1 and 3.2 and to compare them with other well-known algorithms. All numerical experiments were executed on an HP laptop with an Intel(R) Core(TM) i5-6200U CPU at 2.3 GHz and 4 GB of RAM. All codes were written in MATLAB 2015a. In the tables reporting our numerical results, ‘Iter.’, ‘Sec.’ and ‘Error’ denote the number of iterations, the CPU time in seconds and , respectively. We choose

for Algorithm 3.1, Algorithm 3.2, for Algorithm 3.2.

Example 1.

Suppose that with the inner product

and the induced norm

Let be the unit ball and define an operator by

and where and ,

It is easy to see that A is 1-Lipschitz continuous and monotone on C. Under these conditions on C and A, the solution set of the variational inequality problem (VIP) is given by

It is known that

and
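In a function space the projection onto the unit ball is still explicit: $P_C x = x$ if $\|x\| \le 1$ and $P_C x = x/\|x\|$ otherwise. The sketch below assumes, for illustration only, that H is $L^2([0,1])$ and approximates the norm $\big(\int_0^1 |x(t)|^2\,dt\big)^{1/2}$ with the midpoint rule on a uniform grid of N = 1000 cells; the test function $x(t) = 3t$ has exact norm $\sqrt{3}$.

```python
import math

N = 1000
h = 1.0 / N
t = [(i + 0.5) * h for i in range(N)]  # midpoints of a uniform grid on [0, 1]

def l2_norm(x):
    """Midpoint-rule approximation of (int_0^1 |x(t)|^2 dt)^(1/2)."""
    return math.sqrt(h * sum(v * v for v in x))

def proj_unit_ball(x):
    """P_C x = x if ||x|| <= 1, and x / ||x|| otherwise."""
    nx = l2_norm(x)
    return list(x) if nx <= 1.0 else [v / nx for v in x]

x = [3.0 * s for s in t]  # x(t) = 3t, exact norm sqrt(3)
px = proj_unit_ball(x)
print(l2_norm(x), l2_norm(px))
```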

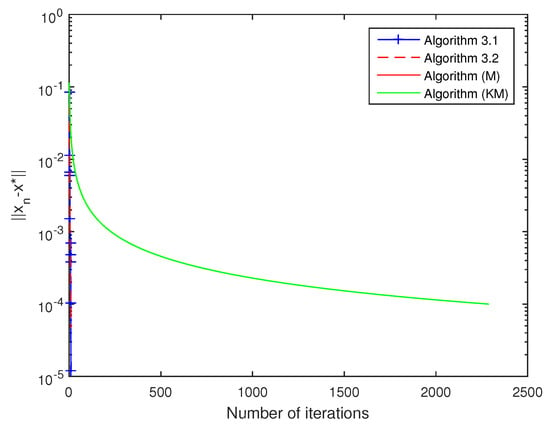

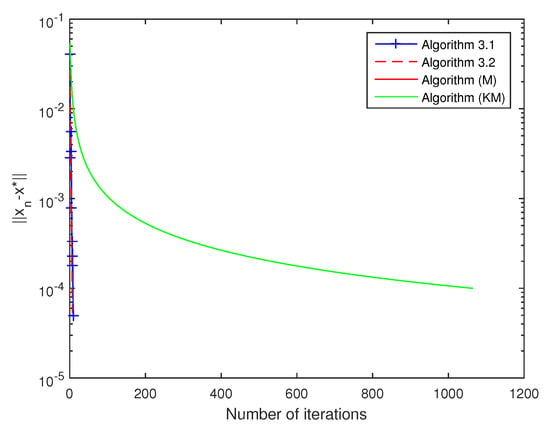

Now, we apply Algorithm 3.1, Algorithm 3.2, Maingé’s algorithm [37] and Kraikaew and Saejung’s algorithm [40] to solve the variational inequality problem (VIP). We choose for Maingé’s algorithm and Kraikaew and Saejung’s algorithm and for all algorithms. We stop each algorithm when the error tolerance is reached or after 3000 iterations (Iter ≤ 3000), whichever comes first. The numerical results of all algorithms for different starting points are reported in Table 1 below:

Table 1.

Numerical results obtained by other algorithms.

The convergence behaviour of the algorithms for different starting points is shown in Figure 1 and Figure 2. In these figures, the y-axis shows the error of each algorithm and the x-axis the number of iterations.

Figure 1.

Comparison of all algorithms with .

Figure 2.

Comparison of all algorithms with .

Example 2.

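Assume that $A: \mathbb{R}^m \to \mathbb{R}^m$ is defined by $A(x) = Mx + q$ with $M = BB^T + S + D$, where S is an $m \times m$ skew-symmetric matrix, B is an $m \times m$ matrix, D is an $m \times m$ diagonal matrix whose diagonal entries are positive (so M is positive definite), q is a vector in $\mathbb{R}^m$ and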

Clearly, the operator A is monotone and Lipschitz continuous with Lipschitz constant $L = \|M\|$. Given that $q = 0$, the unique solution of the corresponding (VIP) is $x^* = 0$.
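The test operator of Example 2 can be assembled in a few lines. In this sketch the size m = 5, the construction $S = R - R^T$ from a random matrix R, and the diagonal $D_{ii} = |N(0,1)| + 10^{-3}$ are our own assumptions made to enforce skew-symmetry and positivity; the feasible set C is not reproduced. It checks $x^T M x > 0$ on random samples, that $A(0) = 0$ when $q = 0$, and estimates the Lipschitz constant $\|M\|_2$ by power iteration on $M^T M$.

```python
import math
import random

def make_matrix(m, seed=0):
    """Return M = B B^T + S + D for random B, a skew-symmetric S and a positive diagonal D."""
    rng = random.Random(seed)
    B = [[rng.gauss(0.0, 1.0) for _ in range(m)] for _ in range(m)]
    R = [[rng.gauss(0.0, 1.0) for _ in range(m)] for _ in range(m)]
    S = [[R[i][j] - R[j][i] for j in range(m)] for i in range(m)]  # S^T = -S
    D = [abs(rng.gauss(0.0, 1.0)) + 1e-3 for _ in range(m)]        # D_ii > 0
    return [[sum(B[i][k] * B[j][k] for k in range(m)) + S[i][j] + (D[i] if i == j else 0.0)
             for j in range(m)] for i in range(m)]

def matvec(M, x):
    return [sum(mij * xj for mij, xj in zip(row, x)) for row in M]

def spectral_norm(M, iters=500):
    """Estimate ||M||_2 (the Lipschitz constant of A) by power iteration on M^T M."""
    m = len(M)
    Mt = [[M[j][i] for j in range(m)] for i in range(m)]
    v = [1.0] * m
    for _ in range(iters):
        w = matvec(Mt, matvec(M, v))
        nw = math.sqrt(sum(c * c for c in w))
        v = [c / nw for c in w]
    return math.sqrt(sum(c * c for c in matvec(M, v)))

m = 5
M = make_matrix(m)
q = [0.0] * m  # q = 0, as in the text

def A(x):
    """A(x) = M x + q."""
    return [a + b for a, b in zip(matvec(M, x), q)]

rng = random.Random(1)
samples = [[rng.gauss(0.0, 1.0) for _ in range(m)] for _ in range(100)]
pos_def = all(sum(xi * ai for xi, ai in zip(x, matvec(M, x))) > 0 for x in samples)
zero_ok = A([0.0] * m) == [0.0] * m  # x* = 0 satisfies A(x*) = 0
L = spectral_norm(M)
print(pos_def, zero_ok, L)
```

Since $x^T S x = 0$ for skew-symmetric S, the quadratic form reduces to $\|B^T x\|^2 + \sum_i D_{ii} x_i^2$, which is why positivity of D suffices.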

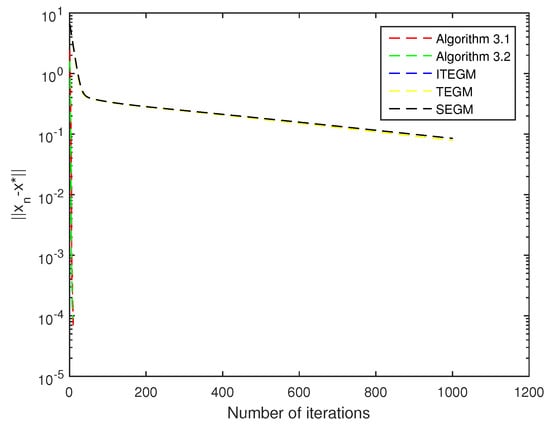

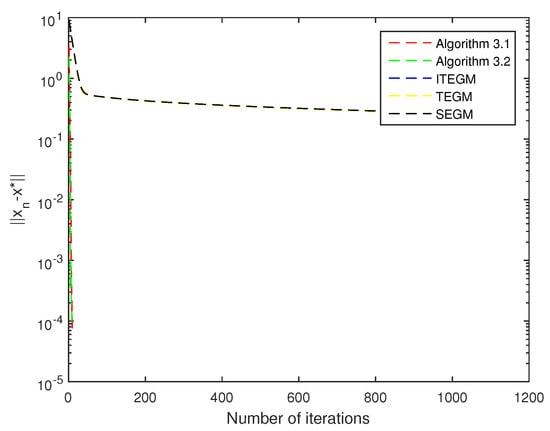

We compare Algorithms 3.1 and 3.2 with Tseng’s extragradient method (TEGM) [41], the inertial Tseng extragradient algorithm (ITEGM) of Thong and Hieu [33] and the subgradient extragradient method (SEGM) of Censor et al. [5]. We choose for all algorithms, where for the inertial Tseng extragradient algorithm. The starting points are

In this experiment, all entries of B, S and D are generated randomly from a normal distribution with mean zero and unit variance. We stop each algorithm when the error tolerance is reached or after 1000 iterations (Iter ≤ 1000), whichever comes first. The results are reported in Table 2 and in Figures 3 and 4.

Table 2.

Numerical results obtained by other algorithms.

Figure 3.

Comparison of all algorithms with .

Figure 4.

Comparison of all algorithms with .

Tables 1 and 2 and Figures 1 to 4 report the errors and execution times of Maingé’s algorithm [37], Kraikaew and Saejung’s algorithm [40], Tseng’s extragradient method (TEGM) [41], the inertial Tseng extragradient algorithm (ITEGM) [33], the subgradient extragradient method (SEGM) of Censor et al. [5] and Algorithms 3.1 and 3.2. In these experiments, Algorithms 3.1 and 3.2 required less CPU time and reached smaller errors than the algorithms of Maingé [37], Kraikaew and Saejung [40], Tseng [41], Thong and Hieu [33] and Censor et al. [5].

5. Conclusions

In this study, we developed two new iterative algorithms for solving K-pseudomonotone variational inequality problems in the framework of real Hilbert spaces. We established strong convergence theorems for the proposed algorithms under certain conditions. Several numerical experiments showed that the proposed algorithms perform better than those of Maingé [37], Kraikaew and Saejung [40], Tseng [41], Thong and Hieu [33] and Censor et al. [5].

Data Availability

The authors declare that all data relating to our results in this paper are available within the paper.

Author Contributions

All authors contributed equally to the writing of this paper. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by Basque Government: IT1207-19.

Acknowledgments

This paper was completed while the first author was visiting the Abdus Salam School of Mathematical Sciences (ASSMS), Government College University Lahore, Pakistan as a postdoctoral fellow. The authors wish to thank the referees for their comments and suggestions.

Conflicts of Interest

The authors declare no conflict of interest.


References

- Stampacchia, G. Formes bilinéaires coercitives sur les ensembles convexes. C. R. Acad. Sci. 1964, 258, 4413–4416.

- Browder, F.E. The fixed point theory of multivalued mapping in topological vector spaces. Math. Ann. 1968, 177, 283–301.

- Censor, Y.; Gibali, A.; Reich, S. The subgradient extragradient method for solving variational inequalities in Hilbert space. J. Optim. Theory Appl. 2011, 148, 318–335.

- Censor, Y.; Gibali, A.; Reich, S. Strong convergence of subgradient extragradient methods for the variational inequality problem in Hilbert space. Optim. Methods Softw. 2011, 26, 827–845.

- Censor, Y.; Gibali, A.; Reich, S. Algorithms for the split variational inequality problem. Numer. Algorithms 2012, 59, 301–323.

- Censor, Y.; Gibali, A.; Reich, S. Extensions of Korpelevich’s extragradient method for the variational inequality problem in Euclidean space. Optimization 2012, 61, 1119–1132.

- Gibali, A.; Shehu, Y. An efficient iterative method for finding common fixed point and variational inequalities in Hilbert spaces. Optimization 2019, 68, 13–32.

- Gibali, A.; Ha, N.H.; Thuong, N.T.; Trang, T.H.; Vinh, N.T. Polyak’s gradient method for solving the split convex feasibility problem and its applications. J. Appl. Numer. Optim. 2019, 1, 145–156.

- Khan, A.R.; Ugwunnadi, G.C.; Makukula, Z.G.; Abbas, M. Strong convergence of inertial subgradient extragradient method for solving variational inequality in Banach space. Carpathian J. Math. 2019, 35, 327–338.

- Maingé, P.E. Projected subgradient techniques and viscosity for optimization with variational inequality constraints. Eur. J. Oper. Res. 2010, 205, 501–506.

- Thong, D.V.; Vinh, N.T.; Cho, Y.J. Accelerated subgradient extragradient methods for variational inequality problems. J. Sci. Comput. 2019, 80, 1438–1462.

- Wang, F.; Pham, H. On a new algorithm for solving variational inequality and fixed point problems. J. Nonlinear Var. Anal. 2019, 3, 225–233.

- Wang, L.; Yu, L.; Li, T. Parallel extragradient algorithms for a family of pseudomonotone equilibrium problems and fixed point problems of nonself-nonexpansive mappings in Hilbert space. J. Nonlinear Funct. Anal. 2020, 2020, 13.

- Alber, Y.I.; Iusem, A.N. Extension of subgradient techniques for nonsmooth optimization in Banach spaces. Set-Valued Anal. 2001, 9, 315–335.

- Xiu, N.H.; Zhang, J.Z. Some recent advances in projection-type methods for variational inequalities. J. Comput. Appl. Math. 2003, 152, 559–587.

- Korpelevich, G.M. The extragradient method for finding saddle points and other problems. Ekon. Mat. Metod. 1976, 12, 747–756.

- Farouq, N.E. Pseudomonotone variational inequalities: Convergence of proximal methods. J. Optim. Theory Appl. 2001, 109, 311–326.

- Hadjisavvas, N.; Schaible, S.; Wong, N.-C. Pseudomonotone operators: A survey of the theory and its applications. J. Optim. Theory Appl. 2012, 152, 1–20.

- Kien, B.T.; Lee, G.M. An existence theorem for generalized variational inequalities with discontinuous and pseudomonotone operators. Nonlinear Anal. 2011, 74, 1495–1500.

- Yao, J.-C. Variational inequalities with generalized monotone operators. Math. Oper. Res. 1994, 19, 691–705.

- Karamardian, S. Complementarity problems over cones with monotone and pseudomonotone maps. J. Optim. Theory Appl. 1976, 18, 445–454.

- Attouch, H.; Goudou, X.; Redont, P. The heavy ball with friction. I. The continuous dynamical system. Commun. Contemp. Math. 2000, 2, 1–34.

- Attouch, H.; Czarnecki, M.O. Asymptotic control and stabilization of nonlinear oscillators with non-isolated equilibria. J. Differ. Equ. 2002, 179, 278–310.

- Alvarez, F.; Attouch, H. An inertial proximal method for maximal monotone operators via discretization of a nonlinear oscillator with damping. Set-Valued Anal. 2001, 9, 3–11.

- Maingé, P.E. Inertial iterative process for fixed points of certain quasi-nonexpansive mappings. Set-Valued Anal. 2007, 15, 67–69.

- Maingé, P.E. Convergence theorems for inertial KM-type algorithms. J. Comput. Appl. Math. 2008, 219, 223–236.

- Bot, R.I.; Csetnek, E.R.; Hendrich, C. Inertial Douglas-Rachford splitting for monotone inclusion problems. Appl. Math. Comput. 2015, 256, 472–487.

- Attouch, H.; Peypouquet, J.; Redont, P. A dynamical approach to an inertial forward-backward algorithm for convex minimization. SIAM J. Optim. 2014, 24, 232–256.

- Chen, C.; Chan, R.H.; Ma, S.; Yang, J. Inertial proximal ADMM for linearly constrained separable convex optimization. SIAM J. Imaging Sci. 2015, 8, 2239–2267.

- Bot, R.I.; Csetnek, E.R. A hybrid proximal-extragradient algorithm with inertial effects. Numer. Funct. Anal. Optim. 2015, 36, 951–963.

- Bot, R.I.; Csetnek, E.R. An inertial forward-backward-forward primal-dual splitting algorithm for solving monotone inclusion problems. Numer. Algorithms 2016, 71, 519–540.

- Dong, L.Q.; Cho, Y.J.; Zhong, L.L.; Rassias, T.M. Inertial projection and contraction algorithms for variational inequalities. J. Glob. Optim. 2018, 70, 687–704.

- Thong, D.V.; Hieu, D.V. Modified Tseng’s extragradient algorithms for variational inequality problems. J. Fixed Point Theory Appl. 2018, 20, 152.

- Moudafi, A. Viscosity approximation methods for fixed point problems. J. Math. Anal. Appl. 2000, 241, 46–55.

- Khan, S.H. A Picard-Mann hybrid iterative process. Fixed Point Theory Appl. 2013, 2013, 69.

- Goebel, K.; Reich, S. Uniform Convexity, Hyperbolic Geometry and Nonexpansive Mappings; Marcel Dekker: New York, NY, USA; Basel, Switzerland, 1984.

- Maingé, P.E. A hybrid extragradient-viscosity method for monotone operators and fixed point problems. SIAM J. Control Optim. 2008, 47, 1499–1515.

- Liu, L.S. Ishikawa and Mann iteration process with errors for nonlinear strongly accretive mappings in Banach spaces. J. Math. Anal. Appl. 1995, 194, 114–125.

- Suantai, S.; Pholasa, N.; Cholamjiak, P. The modified inertial relaxed CQ algorithm for solving the split feasibility problems. J. Ind. Manag. Optim. 2018, 14, 1595–1615.

- Kraikaew, R.; Saejung, S. Strong convergence of the Halpern subgradient extragradient method for solving variational inequalities in Hilbert spaces. J. Optim. Theory Appl. 2014, 163, 399–412.

- Tseng, P. A modified forward-backward splitting method for maximal monotone mappings. SIAM J. Control Optim. 2000, 38, 431–446.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).