1. Introduction

We are interested in the numerical solution of the Cauchy problem, that is, the first-order Ordinary Differential Equation (ODE)

$$y'(t) = f(y(t)), \quad t \in [t_0, T], \tag{1}$$

associated with the initial condition

$$y(t_0) = y_0, \tag{2}$$

where $f: \mathbb{R}^m \to \mathbb{R}^m$ is a sufficiently smooth function on its domain and $y_0 \in \mathbb{R}^m$ is assigned. Note that there is no loss of generality in assuming that the equation is autonomous. In this context, we focus on one-step Hermite–Obreshkov (HO) methods ([1], p. 277). Unlike Runge–Kutta schemes, HO methods reach a high order of convergence without adding stages. There is a price for this: total derivatives of the function $f$ are involved in the difference equation defining the method, and thus a suitable smoothness requirement on $f$ is necessary. Multiderivative methods have often been considered in the past for the numerical treatment of ODEs, for example in the context of boundary value methods [2], and in recent years there has been a renewed interest in this topic, also in view of its application to the numerical solution of differential algebraic equations; see, e.g., [3,4,5,6,7,8]. Here, we consider the numerical solution of Hamiltonian problems, which in canonical form can be written as follows:

$$y'(t) = J\,\nabla H(y(t)), \quad y(t_0) = y_0 \in \mathbb{R}^{2\ell}, \tag{3}$$

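with

$$y = \begin{pmatrix} q \\ p \end{pmatrix}, \qquad J = \begin{pmatrix} O & I_\ell \\ -I_\ell & O \end{pmatrix},$$

where $q, p \in \mathbb{R}^{\ell}$ are the generalized coordinates and momenta, $H: \mathbb{R}^{2\ell} \to \mathbb{R}$ is the Hamiltonian function and $I_\ell$ stands for the identity matrix of dimension $\ell$. Note that the flow $\varphi_t: y_0 \mapsto y(t)$ associated with the dynamical system (3) is symplectic; this means that its Jacobian satisfies:

$$\varphi_t'(y_0)^{T} J\, \varphi_t'(y_0) = J.$$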

A one-step numerical method $y_{n+1} = \Phi_h(y_n)$ with stepsize $h$ is symplectic if the discrete flow $\Phi_h$ satisfies:

$$\Phi_h'(y)^{T} J\, \Phi_h'(y) = J.$$

Two numerical methods $\Phi_h$ and $\Psi_h$ are conjugate to each other if there exists a global change of coordinates $\chi_h$ such that

$$\Phi_h = \chi_h \circ \Psi_h \circ \chi_h^{-1},$$

with $\chi_h(y) = y + O(h)$ uniformly for $y$ varying in a compact set, and $\circ$ denoting the composition operator [9]. A method that is conjugate to a symplectic method is said to be conjugate symplectic; this is a weaker requirement than symplecticity, and it still allows the numerical solution to have the same long-time behavior as that of a symplectic method. Observe that conjugate symplecticity here refers to a property of the discrete flows of the two numerical methods; it should not be confused with the group of conjugate symplectic matrices, that is, the set of matrices $A$ that satisfy $A^{H} J A = J$, where the superscript $H$ denotes the Hermitian conjugate [10].

A more relaxed property, shared by a wider class of numerical schemes, is the generalization of conjugate symplecticity introduced in [11]. A method $\Phi_h$ of order $p$ is conjugate-symplectic up to order $p + r$, with $r \ge 0$, if a global change of coordinates $\chi_h(y) = y + O(h^{p})$ exists such that $\Phi_h = \chi_h \circ \Psi_h \circ \chi_h^{-1}$, with the map $\Psi_h$ satisfying

$$\Psi_h'(y)^{T} J\, \Psi_h'(y) = J + O(h^{p+r+1}). \tag{7}$$

A consequence of property (7) is that the method $\Phi_h$ nearly conserves all quadratic first integrals and the Hamiltonian function over time intervals of length $O(h^{-r})$ (see [11]).

Recently, the class of Euler–Maclaurin methods for the solution of Hamiltonian problems has been analyzed in [12,13], where the conjugate symplecticity up to order $p+2$ of the $p$-th order methods was proven.

In this paper, we consider the symmetric one-step HO methods that were analyzed in [14,15] in the context of spline applications. We call them BSHO methods, since they are connected to B-splines, as we will show. BSHO methods have a formulation similar to that of the Euler–Maclaurin formulas, and the order-two and order-four schemes of the two families coincide. As a new result, we prove that the BSHO method of order $2R$ is conjugate symplectic up to order $2R+2$, as is the case for the Euler–Maclaurin methods [12,13]; hence, both families are suited to geometric integration.

BSHO methods are also strictly related to BS methods [16,17], a class of linear multistep methods, also based on B-splines, suited for addressing boundary value problems formulated as first-order differential problems. Note that BS methods were also first studied in [14,15], but at that time they were discarded in favor of BSHO methods since, when used as initial value methods, they are not convergent. In [16,17], the same schemes have been studied as boundary value methods, and they have been recovered in particular in connection with boundary value problems. As for BSHO methods, the discrete solution generated by a BS method can easily be extended to a continuous spline collocating the differential problem at the mesh points [18]. The idea now is to rely on B-splines with multiple inner knots in order to derive one-step HO schemes. The inner knot multiplicity is strictly connected to the number of derivatives of $f$ involved in the difference equations defining the method and, consequently, to the order of the method. The efficient approach introduced in [18] for computing the collocating spline extension of BS methods is here extended to BSHO methods, working with multiple knots. Note that we adopt a point of view opposite to that of [14,15]: we assume that the numerical solution generated by the BSHO method is already available, and we are interested in an efficient procedure for obtaining the B-spline coefficients of the associated spline.

The paper is organized as follows. In Section 2, one-step symmetric HO methods are introduced, focusing in particular on BSHO methods. Section 3 is devoted to proving that BSHO methods are conjugate symplectic methods up to order $2R+2$. Then, Section 4 first shows how these methods can be revisited in the spline collocation context. Next, an efficient procedure is introduced to compute the B-spline form of the collocating spline extension associated with the numerical solution produced by the $R$-th BSHO method, and it is shown that its convergence order equals that of the numerical solution.

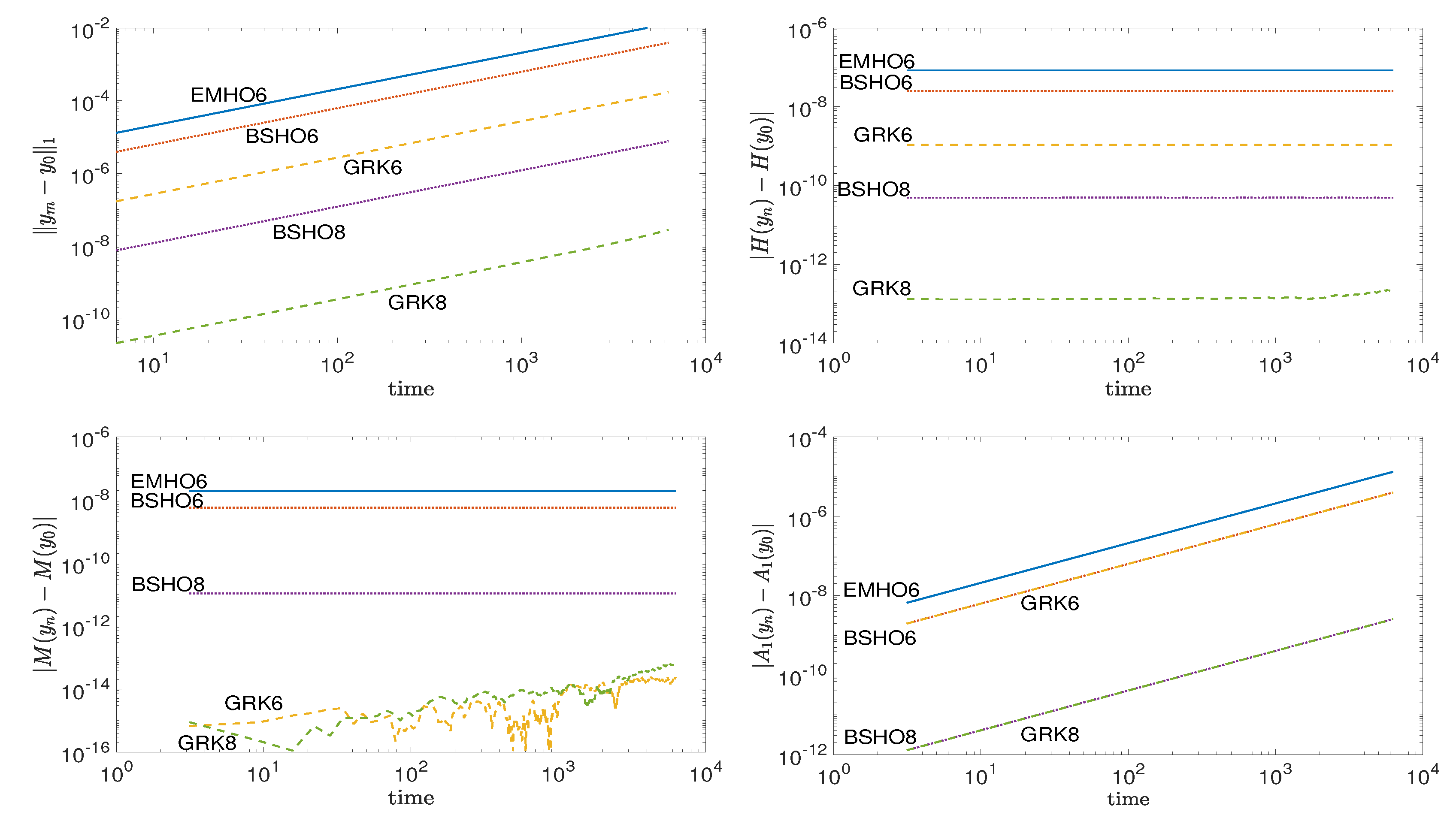

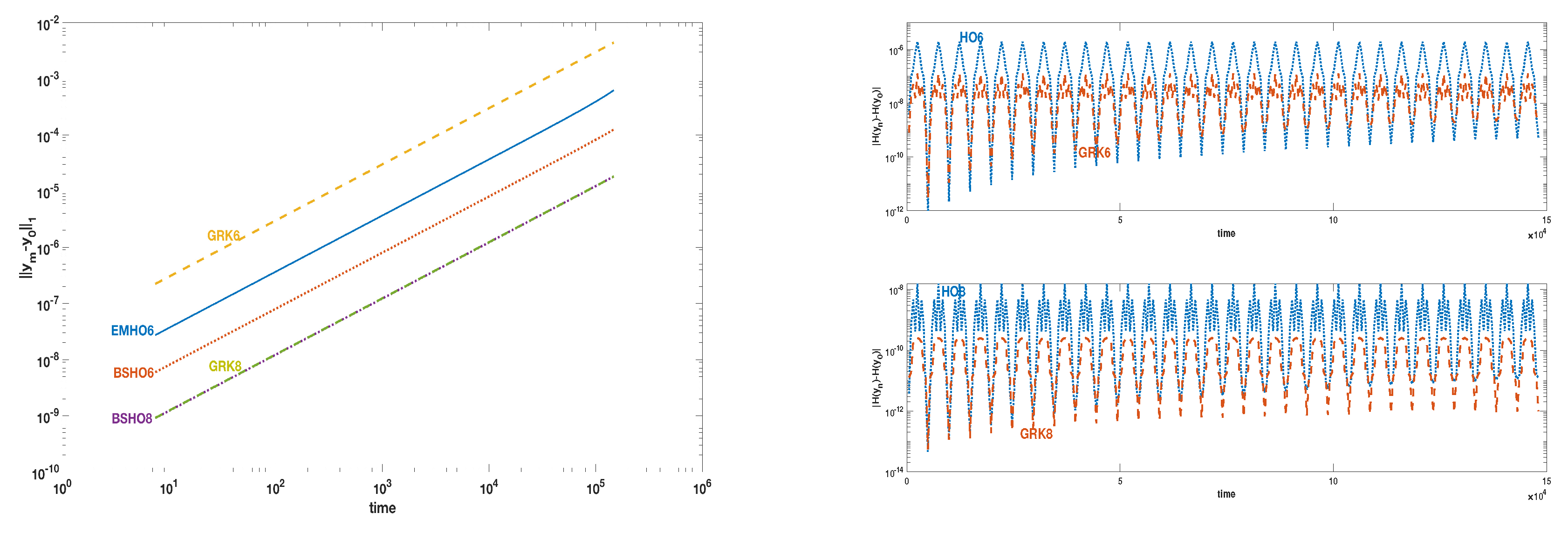

Section 6 presents some numerical results related to Hamiltonian problems, comparing them with those generated by Euler–Maclaurin and Gauss–Runge–Kutta schemes of the same order.

2. One-Step Symmetric Hermite–Obreshkov Methods

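Let $t_0 < t_1 < \dots < t_N = T$ be an assigned partition of the integration interval $[t_0, T]$, and let us denote by $y_n$ an approximation of $y(t_n)$. Any one-step symmetric Hermite–Obreshkov (HO) method can be written as follows, clearly setting $y_0 = y(t_0)$:

$$y_{n+1} = y_n + \sum_{j=1}^{R} \beta_j^{(R)} h_n^{j} \left( y_n^{(j)} + (-1)^{j-1} y_{n+1}^{(j)} \right), \quad n = 0, \dots, N-1, \tag{8}$$

where $h_n = t_{n+1} - t_n$ and where $y_n^{(j)}$, for $j = 1, \dots, R$, denotes the total $(j-1)$-th derivative of $f$ with respect to $t$ computed at $y_n$.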

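Note that $y_n^{(1)} = f(y_n)$ and, on the basis of (1), the analytical computation of the $j$-th derivative $y_n^{(j)}$ involves a tensor of order $j$. For example, $y^{(2)} = f'(y)\,f(y)$, where $f'(y)$ is the $m \times m$ Jacobian matrix of $f$ with respect to $y$ (a second-order tensor), while $y^{(3)} = f''(y)\big(f(y), f(y)\big) + f'(y)\,f'(y)\,f(y)$ also involves the third-order tensor $f''(y)$. As a consequence, each $y_n^{(j)}$ is a known function of $y_n$ only. We observe that the definition in (14) implies that only $y_{n+1}$ is unknown in (8), which in general is a nonlinear vector equation in $\mathbb{R}^m$ with respect to it.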
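For a linear test problem $y' = Ay$ the total derivatives reduce to $y^{(j)} = A^{j} y$, so the implicit relation (8) becomes a single linear solve per step. The following Python sketch illustrates this under stated assumptions: NumPy is available, the stepsize is constant, the harmonic oscillator is used as an illustrative test problem, and the helper names (`ho_step_linear`, `integrate`, `error_at_one`) are hypothetical. It uses $\beta_1 = 1/2$ for the trapezoidal rule ($R = 1$) and $\beta_1 = 1/2$, $\beta_2 = 1/12$ for the order-four scheme ($R = 2$).

```python
import numpy as np


def ho_step_linear(A, y, h, beta):
    """One step of (8) for y' = A y, where y^(j) = A^j y; beta[j-1] holds beta_j.

    Hypothetical helper: (I - sum_j beta_j (-1)^(j-1) (hA)^j) y_{n+1}
                         = (I + sum_j beta_j (hA)^j) y_n.
    """
    I = np.eye(A.shape[0])
    lhs, rhs = I.copy(), I.copy()
    hA_j = I.copy()
    for j, b in enumerate(beta, start=1):
        hA_j = hA_j @ (h * A)                # (hA)^j
        rhs += b * hA_j                      # terms acting on y_n
        lhs -= b * (-1) ** (j - 1) * hA_j    # y_{n+1} terms moved to the left
    return np.linalg.solve(lhs, rhs @ y)


def integrate(A, y0, h, n_steps, beta):
    """Apply n_steps constant-stepsize steps starting from y0."""
    y = np.asarray(y0, dtype=float)
    for _ in range(n_steps):
        y = ho_step_linear(A, y, h, beta)
    return y


# Harmonic oscillator q' = p, p' = -q (H = (q^2 + p^2) / 2); exact solution (cos t, -sin t)
A = np.array([[0.0, 1.0], [-1.0, 0.0]])
beta_R1 = [0.5]              # trapezoidal rule (R = 1)
beta_R2 = [0.5, 1.0 / 12.0]  # order-four scheme (R = 2)


def error_at_one(h, beta):
    """Euclidean error at t = 1 starting from y(0) = (1, 0)."""
    y = integrate(A, [1.0, 0.0], h, round(1.0 / h), beta)
    return np.linalg.norm(y - np.array([np.cos(1.0), -np.sin(1.0)]))
```

Halving $h$ should reduce `error_at_one` by a factor close to $4$ for $R = 1$ and close to $16$ for $R = 2$; for this linear Hamiltonian problem the quadratic energy $(q^2 + p^2)/2$ is preserved up to rounding, because each step matrix is a rotation.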

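For example, the one-step Euler–Maclaurin formulas [1] of order $2s$,

$$y_{n+1} = y_n + \frac{h_n}{2}\left( y_n^{(1)} + y_{n+1}^{(1)} \right) + \sum_{i=1}^{s-1} \frac{B_{2i}}{(2i)!}\, h_n^{2i} \left( y_n^{(2i)} - y_{n+1}^{(2i)} \right),$$

that is, (8) with $\beta_1 = 1/2$, $\beta_{2i} = B_{2i}/(2i)!$ and $\beta_{2i+1} = 0$ for $i \ge 1$ (where the $B_{2i}$ denote the Bernoulli numbers, which are reported in Table 2), belong to this class of methods. These methods will be referred to in the following with the label EMHO (Euler–Maclaurin Hermite–Obreshkov).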
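The EMHO coefficients can be generated directly from the Bernoulli numbers. A minimal sketch in exact rational arithmetic, assuming the convention $B_1 = -1/2$ and the standard recurrence $\sum_{k=0}^{n}\binom{n+1}{k}B_k = 0$ for $n \ge 1$; the function names are hypothetical:

```python
from fractions import Fraction
from math import comb, factorial


def bernoulli_numbers(n_max):
    """[B_0, ..., B_n_max] from sum_{k=0}^{n} C(n+1, k) B_k = 0 (convention B_1 = -1/2)."""
    B = [Fraction(1)]
    for n in range(1, n_max + 1):
        B.append(-sum(comb(n + 1, k) * B[k] for k in range(n)) / (n + 1))
    return B


def emho_coefficients(s):
    """beta_1, ..., beta_{2s-2} of the order-2s Euler-Maclaurin HO formula (hypothetical helper).

    beta_1 = 1/2, beta_{2i} = B_{2i} / (2i)!, and the odd-index coefficients beyond beta_1 vanish.
    """
    B = bernoulli_numbers(2 * s)
    beta = [Fraction(1, 2)]
    for j in range(2, 2 * s - 1):
        beta.append(B[j] / factorial(j) if j % 2 == 0 else Fraction(0))
    return beta
```

For $s = 2$ this returns $\beta_1 = 1/2$, $\beta_2 = 1/12$, the order-four formula; for $s = 3$ it adds $\beta_3 = 0$ and $\beta_4 = -1/720$.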

Here, we consider another class of symmetric HO methods, which can be obtained by defining as follows the polynomial $P$ appearing in ([1], Lemma 13.3), whose statement is reported in Lemma 1:

$$P(x) = x^{R}(x-1)^{R}. \tag{11}$$

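Lemma 1. Let $R$ be any positive integer and $P$ be a polynomial of exact degree $2R$. Then, the following one-step linear difference equation,

$$\sum_{j=0}^{2R} (-1)^{j} h^{j} \left( P^{(2R-j)}(1)\, y_{n+1}^{(j)} - P^{(2R-j)}(0)\, y_n^{(j)} \right) = 0, \qquad y_n^{(0)} = y_n,$$

defines a multiderivative method of order $2R$.

Referring to the methods obtainable by Lemma 1, if in particular the polynomial $P$ is defined as in (11), then we obtain the class of methods in which we are interested here. They can be written as in (8) with

$$\beta_j^{(R)} = \frac{R!\,(2R-j)!}{(2R)!\,j!\,(R-j)!}, \qquad j = 1, \dots, R, \tag{12}$$

which are reported in Table 1 for the first values of $R$. In particular, for $R = 1$ and $R = 2$, we obtain the trapezoidal rule and the Euler–Maclaurin method of order four, respectively.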
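As a consistency check of (11)–(12), the sketch below computes $\beta_j^{(R)}$ from the closed form $R!\,(2R-j)!/((2R)!\,j!\,(R-j)!)$ and, independently, from the difference equation obtained by repeated integration by parts with $P(x) = x^R(x-1)^R$, assumed here in the form $\sum_{j=0}^{2R}(-1)^j h^j\big(P^{(2R-j)}(1)\,y_{n+1}^{(j)} - P^{(2R-j)}(0)\,y_n^{(j)}\big) = 0$; after division by $P^{(2R)} = (2R)!$, the coefficient of $h^j y_n^{(j)}$ is $(-1)^j P^{(2R-j)}(0)/(2R)!$. Applying (8) to $y' = \lambda y$ also gives $y_{n+1} = \mathcal{R}(h\lambda)\,y_n$ with $\mathcal{R}(z) = N(z)/N(-z)$ and $N(z) = 1 + \sum_j \beta_j^{(R)} z^j$, whose coefficients are those of the $(R,R)$-Padé approximant of $e^z$ discussed next; the last two functions sample $\mathcal{R}$ at a few points only, a numerical illustration rather than a proof of A-stability. All function names are hypothetical.

```python
import math
from fractions import Fraction
from math import factorial


def bsho_coefficients(R):
    """beta_j^(R), j = 1..R, from the closed form (12), in exact arithmetic."""
    return [Fraction(factorial(R) * factorial(2 * R - j),
                     factorial(2 * R) * factorial(j) * factorial(R - j))
            for j in range(1, R + 1)]


def poly_mul(a, b):
    """Product of two polynomials given by coefficient lists (increasing powers)."""
    out = [0] * (len(a) + len(b) - 1)
    for i, ai in enumerate(a):
        for k, bk in enumerate(b):
            out[i + k] += ai * bk
    return out


def beta_from_lemma(R):
    """beta_j^(R) read off the integration-by-parts difference equation with P(x) = x^R (x - 1)^R."""
    P = [1]
    for _ in range(R):
        P = poly_mul(P, [0, 1])   # multiply by x
        P = poly_mul(P, [-1, 1])  # multiply by (x - 1)
    # P^(k)(0) = k! * P[k]; normalise by P^(2R) = (2R)! since P is monic
    return [Fraction((-1) ** j * factorial(2 * R - j) * P[2 * R - j], factorial(2 * R))
            for j in range(1, R + 1)]


def stability_function(R, z):
    """R(z) = N(z) / N(-z), N(w) = 1 + sum_j beta_j w^j, for real or complex z."""
    beta = [float(b) for b in bsho_coefficients(R)]

    def N(w):
        return 1 + sum(b * w ** j for j, b in enumerate(beta, start=1))

    return N(z) / N(-z)


def error_ratio(R, z):
    """|e^z - R(z)| / |e^(z/2) - R(z/2)|; close to 2^(2R+1) for small real z."""
    return (abs(math.exp(z) - stability_function(R, z))
            / abs(math.exp(z / 2) - stability_function(R, z / 2)))
```

For instance, `bsho_coefficients(3)` gives $1/2, 1/10, 1/120$, so for $R = 3$ the scheme differs from the order-six EMHO formula, whose second coefficient is $1/12$.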

These methods were originally introduced in the spline collocation context, dealing in particular with splines with multiple knots [14,15], as we will show in Section 4. We call them BSHO methods since we will show that they can be obtained by working with the standard B-spline basis. The stability function of the $R$-th one-step symmetric BSHO method is the rational function corresponding to the $(R,R)$-Padé approximation of the exponential function, as is that of the Runge–Kutta–Gauss method of the same order ([19], p. 72). It has been proven that methods with this stability function are A-stable ([19], Theorem 4.12). For the proof of the following corollary, which will be useful in the sequel, we refer to [15].

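Corollary 1. Let us assume that $f$ is sufficiently smooth in a suitable neighborhood of the exact solution. Then, there exists a positive constant $h_0$ such that, if $h = \max_n h_n \le h_0$ and $\{y_n\}$ denotes the related numerical solution produced by the $R$-th one-step symmetric BSHO method in (8)–(12), it is:

$$\max_{0 \le n \le N} \| y(t_n) - y_n \| = O(h^{2R}).$$

3. Conjugate Symplecticity of the Symmetric One-Step BSHO Methods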

Following the lines of the proof given in [13], in this section we prove that one-step symmetric BSHO methods are conjugate symplectic schemes up to order $2R+2$. The following lemma, proved in [20], is the starting point of the proof, and it makes use of the concept of B-series integrator. In this regard, referring to [9] for the details, we just recall that a B-series integrator is a numerical method that can be expressed as a formal B-series, that is, as a power series in the time step in which each term is a sum of elementary differentials of the vector field and where the number of terms is allowed to be infinite.

Lemma 2. Assume that Problem (1) admits a quadratic first integral $Q(y) = y^{T} S y$ (with $S$ denoting a constant symmetric matrix) and that it is solved by a B-series integrator $\Phi_h$. Then, the following properties, where all formulas have to be interpreted in the sense of formal series, are equivalent:

- (a) $\Phi_h$ has a modified first integral of the form $\widetilde{Q}(y) = Q(y) + h\,Q_1(y) + h^2 Q_2(y) + \dots$, where each $Q_j$ is a differential functional;
- (b) $\Phi_h$ is conjugate to a symplectic B-series integrator.

We observe that Lemma 2 is used in [21] to prove the conjugate symplecticity of symmetric linear multistep methods. Following the lines of the proof given in [13], we can prove that the $R$-th one-step symmetric BSHO method is conjugate symplectic up to order $2R+2$. With arguments similar to those of [13], we prove the following theorem, showing that the map $\Phi_h$ associated with the BSHO method is such that $\Phi_h(y) = \Psi_h(y) + O(h^{2R+3})$, where $\Psi_h$ is a suitable conjugate symplectic B-series integrator.

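Theorem 1. The map $y_{n+1} = \Phi_h(y_n)$ associated with the one-step method (8) admits a B-series expansion and is conjugate to a symplectic B-series integrator up to order $2R+2$.

Proof. The existence of a B-series expansion for $\Phi_h$ follows directly from [22], where a B-series representation of a generic multiderivative Runge–Kutta method has been obtained. By defining the two characteristic polynomials of the trapezoidal rule,

$$\rho(z) = z - 1, \qquad \sigma(z) = \frac{z + 1}{2},$$

and the shift operator $E\,y_n = y_{n+1}$, the $R$-th method described in (8) reads

$$\rho(E)\, y_n = h\, \sigma(E)\, y_n^{(1)} + \sum_{j=2}^{R} \beta_j^{(R)} h^{j} \left( 1 + (-1)^{j-1} E \right) y_n^{(j)}. \tag{13}$$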

Observe that $y_n^{(j)}$, for $j = 1, \dots, R$, denotes the $(j-1)$-th Lie derivative of $f$ computed at $y_n$,

$$y_n^{(j)} = D_f^{(j-1)} f(y_n), \qquad D_f^{(0)} = I,$$

where $I$ is the identity operator and $D_f^{(k)} g(y)$ is defined as the $k$-th total derivative of $g$ computed at $y$, where for the computation of the total derivative it is assumed that $y$ satisfies the differential equation in (1). Note that we use the subscript $f$ in the Lie operator to avoid confusion with the classical derivative operator of the same order, denoted in the following as $D^{k}$.

With this clarification on the definition of $D_f^{(k)}$, we now consider a generic function $g(t)$, a stepsize $h$ and the shift operator $E\,g(t) = g(t+h)$, and we look for a continuous function $y(t)$ that satisfies (13) in the sense of formal series (a series where the number of terms is allowed to be infinite), using the relation

$$E = e^{hD},$$

where $D$ is the classical derivative operator, $D\,g(t) = g'(t)$. Writing (13) in terms of $D$ through this relation and multiplying both sides by $D\,\rho(e^{hD})^{-1}$, so that the left-hand side becomes $y'(t)$, we obtain:

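Now, since the Bernoulli numbers define the Taylor expansion of the function $z/(e^{z}-1)$,

$$\frac{z}{e^{z} - 1} = \sum_{k \ge 0} \frac{B_k}{k!} z^{k},$$

with $B_1 = -1/2$ and $B_k = 0$ for the other odd $k$, we have:

$$\frac{z}{2}\,\frac{e^{z} + 1}{e^{z} - 1} = \sum_{k \ge 0} \frac{B_{2k}}{(2k)!} z^{2k}.$$

Thus, we can write (15) as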

Adding and subtracting terms involving the classical derivative operator $D$, we get

that we recast as

Due to the regularity conditions on the function $f$, the sums containing the derivative terms introduced in the previous step are of higher order in $h$; hence, the solution $y(t)$ of (16) is close, up to higher-order terms in $h$, to the solution of the initial value problem (17), which has been derived from (16) by neglecting those sums. Observe that the correction terms of (17) of order lower than $2R$ in $h$ vanish, since the method is of order $2R$ (see [9], Theorem 3.1, page 340). We may interpret (17) as the modified equation of a one-step method $\Psi_h$, where $\Psi_h$ is the time-$h$ flow associated with (17). The modified initial value problem associated with the numerical scheme is obtained by coupling (17) with the initial condition $y(t_0) = y_0$, and expanding its solution in Taylor series shows that $\Psi_h$ is a B-series integrator. The proof of the conjugate symplecticity of $\Psi_h$ follows exactly the same steps as the analogous proof in Theorem 1 of [13]. Since $\Phi_h(y) = \Psi_h(y) + O(h^{2R+3})$ and $\Psi_h$ is conjugate symplectic, the result follows using the same global change of coordinates $\chi_h$ associated with $\Psi_h$. ☐

In Table 2, we report the coefficients of the modified initial value problem (17) for the first values of $R$, together with the corresponding Bernoulli numbers. We can observe that the truncation error in the modified initial value problem is smaller than that of the EMHO methods of the same order (see [13]). Conjugate symplecticity up to order $2R+2$ makes a numerical scheme suitable for the solution of Hamiltonian problems. A well-known example of a conjugate symplectic method is the trapezoidal rule, which is conjugate to the symplectic midpoint rule. Observe that the trapezoidal rule belongs to both the BSHO and the EMHO classes of multiderivative methods, and its characteristic polynomials play an important role in the proof of Theorem 1.
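The following sketch is only an illustration of the long-time behavior discussed above, not a verification of Theorem 1. It applies the $R = 2$ scheme ($\beta_1 = 1/2$, $\beta_2 = 1/12$, the order-four member shared with EMHO) to the pendulum $H(q, p) = p^2/2 - \cos q$, chosen here as a hypothetical test problem, with the implicit equation solved by plain fixed-point iteration (an assumption; Newton's method would also do). All function names are hypothetical. The energy error is expected to remain small and bounded over long runs.

```python
import math


def f(y):
    """Pendulum vector field: q' = p, p' = -sin q."""
    q, p = y
    return (p, -math.sin(q))


def g(y):
    """Second total derivative y'' = f'(y) f(y) for the pendulum."""
    q, p = y
    return (-math.sin(q), -p * math.cos(q))


def bsho2_step(y, h, tol=1e-14, max_iter=100):
    """One step of (8) with R = 2 (beta_1 = 1/2, beta_2 = 1/12), solved by fixed-point iteration."""
    f0, g0 = f(y), g(y)
    y1 = y
    for _ in range(max_iter):
        f1, g1 = f(y1), g(y1)
        new = tuple(y[i] + h / 2 * (f0[i] + f1[i]) + h * h / 12 * (g0[i] - g1[i])
                    for i in range(2))
        done = max(abs(new[i] - y1[i]) for i in range(2)) < tol
        y1 = new
        if done:
            break
    return y1


def energy(y):
    """Hamiltonian H(q, p) = p^2 / 2 - cos q."""
    q, p = y
    return 0.5 * p * p - math.cos(q)


def max_energy_error(y0, h, n_steps):
    """Largest |H(y_n) - H(y_0)| along n_steps steps of the R = 2 scheme."""
    E0, y, worst = energy(y0), y0, 0.0
    for _ in range(n_steps):
        y = bsho2_step(y, h)
        worst = max(worst, abs(energy(y) - E0))
    return worst
```

Fixed-point iteration is adequate here because, for $h = 0.1$, the iteration map is a strong contraction; for stiff problems a Newton solve would be the natural replacement.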

4. The Spline Extension

A (vector) Hermite polynomial of degree $2R+1$ interpolating both $y_n$ and $y_{n+1}$, respectively at $t_n$ and $t_{n+1}$, together with the assigned derivatives $y_n^{(j)}$ and $y_{n+1}^{(j)}$, $j = 1, \dots, R$, can be computed using the Newton interpolation formula with multiple nodes. On the other hand, in his Ph.D. thesis [15], Loscalzo proved that a polynomial of degree $2R$ verifying the same conditions exists if and only if (8) is fulfilled with the $\beta$ coefficients defined as in (12). Note that the polynomial of degree $2R+1$ fulfilling these conditions is always unique and its principal coefficient is given by the generalized divided difference of order $2R+1$ associated with the given $R$-order Hermite data; hence, the $n$-th condition in (8) holds if and only if this coefficient vanishes. If all the conditions in (8) are fulfilled, it is possible to define a piecewise polynomial whose restriction to $[t_n, t_{n+1}]$ coincides with this polynomial, and it is clearly a $C^{R}$ spline of degree $2R$ with breakpoints at the mesh points. Now, when the definition given in (14) is used together with the assumption $y_0 = y(t_0)$, the conditions in (8) become a multiderivative one-step scheme for the numerical solution of (1). Thus, the numerical solution $\{y_n\}$ it produces and the associated derivative values defined as in (14) can be associated with the above-mentioned $C^{R}$ spline extension of degree $2R$. Such a spline collocates the differential equation at the mesh points with multiplicity $R$, that is, it verifies the given differential equation and also the equations obtained by differentiating it up to $R-1$ times at the mesh points. This piecewise representation of the spline is the one adopted in [15]. Here, we are interested in deriving its B-spline representation. Besides being more compact, this also allows us to clarify the connection between BSHO and BS methods previously introduced in [16,17,18]. For this aim, let us introduce some necessary notation. Let $\mathcal{S}_{2R}$ be the space of $C^{R}$ splines of degree $2R$ with breakpoints at $t_0 < t_1 < \dots < t_N$. Since we rely on the B-spline basis, we need to introduce the associated extended knot vector

$$\mathbf{T} = \{\underbrace{t_0, \dots, t_0}_{2R+1}, \underbrace{t_1, \dots, t_1}_{R}, \dots, \underbrace{t_{N-1}, \dots, t_{N-1}}_{R}, \underbrace{t_N, \dots, t_N}_{2R+1}\},$$

which means that all the inner breakpoints have multiplicity $R$ in $\mathbf{T}$ and both $t_0$ and $t_N$ have multiplicity $2R+1$. The associated B-spline basis is denoted as $\{B_i\}_{i=1}^{D}$, and the dimension of $\mathcal{S}_{2R}$ as $D$, with

$$D = (N+1)R + 1.$$
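The knot vector and the dimension count can be checked mechanically. A small sketch with hypothetical helper names, relying only on the standard fact that the number of B-splines equals the number of knots minus the order (here $2R+1$):

```python
def extended_knots(t, R):
    """Clamped knot vector for C^R splines of degree 2R with breakpoints t[0] < ... < t[-1]."""
    order = 2 * R + 1                                  # degree + 1
    inner = [x for x in t[1:-1] for _ in range(R)]     # inner breakpoints repeated R times
    return [t[0]] * order + inner + [t[-1]] * order


def spline_dimension(t, R):
    """Number of B-splines = number of knots minus the order 2R + 1."""
    return len(extended_knots(t, R)) - (2 * R + 1)
```

For example, with $N = 4$ intervals and $R = 2$ the knot vector has $16$ entries and the space has dimension $11 = (4+1)\cdot 2 + 1$.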

The above-mentioned result proven by Loscalzo is equivalent to saying that, if the $\beta$ coefficients are defined as in (12), any $C^{R}$ spline $s$ of degree $2R$ with breakpoints at the mesh points fulfills the relation in (8) with $y_n^{(j)}$ replaced by $s^{(j)}(t_n)$, the $j$-th spline derivative at $t_n$. In turn, this is equivalent to saying that such a relation holds for every element of the B-spline basis of $\mathcal{S}_{2R}$. Thus, considering the local support of the B-spline basis, the column vector of the coefficients of the $R$-th BSHO scheme (where the punctuation mark ";" denotes vertical concatenation) can also be characterized as the unique solution of the linear system (19), whose coefficient matrix, defined in (20), collects the values at the mesh points of the B-splines and of their derivatives $B_i^{(j)}$, where $B_i^{(j)}$ denotes the $j$-th derivative of $B_i$. Note that the last equation in (19) is just a normalization condition.
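Loscalzo's characterization implies, in particular, that every polynomial of degree at most $2R$ (the restriction of any spline of the space to one mesh interval) satisfies (8) exactly. The sketch below checks this in exact rational arithmetic on one arbitrarily chosen interval, assuming (8) in the form $y_{n+1} = y_n + \sum_j \beta_j h^j \big(y_n^{(j)} + (-1)^{j-1} y_{n+1}^{(j)}\big)$ with $\beta_j^{(R)} = R!\,(2R-j)!/((2R)!\,j!\,(R-j)!)$; a polynomial of degree $2R+1$ leaves a nonzero residual. Function names are hypothetical.

```python
from fractions import Fraction
from math import factorial


def bsho_coefficients(R):
    """beta_j^(R) = R!(2R-j)! / ((2R)! j! (R-j)!), j = 1..R."""
    return [Fraction(factorial(R) * factorial(2 * R - j),
                     factorial(2 * R) * factorial(j) * factorial(R - j))
            for j in range(1, R + 1)]


def poly_derivative(c, j):
    """Coefficients of the j-th derivative of sum_k c[k] x^k."""
    for _ in range(j):
        c = [k * c[k] for k in range(1, len(c))]
    return c


def poly_eval(c, x):
    """Evaluate sum_k c[k] x^k (an empty list evaluates to 0)."""
    return sum(ck * x ** k for k, ck in enumerate(c))


def bsho_residual(c, t0, t1, R):
    """Residual of relation (8) on [t0, t1] when y is the polynomial with coefficients c."""
    h = t1 - t0
    res = poly_eval(c, t1) - poly_eval(c, t0)
    for j, b in enumerate(bsho_coefficients(R), start=1):
        dj = poly_derivative(c, j)
        res -= b * h ** j * (poly_eval(dj, t0) + (-1) ** (j - 1) * poly_eval(dj, t1))
    return res
```

In exact arithmetic, a nonzero residual signals that the Hermite data on that interval cannot come from a single polynomial of degree $2R$.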

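In order to prove the nonsingularity of the matrix in (20), we need to introduce the following definition.

Definition 1. Given a non-decreasing set of abscissas $\Theta = \{\theta_1 \le \theta_2 \le \dots \le \theta_q\}$, we say that a function $g$ agrees with another function $u$ at $\Theta$ if

$$g^{(k)}(\theta_i) = u^{(k)}(\theta_i), \quad k = 0, \dots, \mu_i - 1, \quad i = 1, \dots, q,$$

where $\mu_i$ denotes the multiplicity of $\theta_i$ in $\Theta$.

Then, we can formulate the following proposition.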

Proposition 1. The matrix defined in (20) and associated with the B-spline basis of $\mathcal{S}_{2R}$ is nonsingular.

Proof. Observe that the restriction to $[t_0, t_1]$ of the splines in $\mathcal{S}_{2R}$ generates $\Pi_{2R}$, the space of polynomials of degree at most $2R$, since there are no inner knots in $(t_0, t_1)$. Then, restricted to $[t_0, t_1]$, $\Pi_{2R}$ can also be generated by the $2R+1$ B-splines of $\mathcal{S}_{2R}$ not vanishing in $(t_0, t_1)$, that is, by $B_1, \dots, B_{2R+1}$. Since the polynomial in $\Pi_{2R}$ agreeing with a given function at a set of $2R+1$ abscissas located at $t_0$ and $t_1$ (counted with multiplicity) is unique, it follows that the corresponding matrix collocating the spline basis active in $[t_0, t_1]$ is also nonsingular. Such a matrix is the principal submatrix of order $2R+1$ of the matrix in (20). Now, considering that the restriction to $[t_0, t_1]$ of any function in $\mathcal{S}_{2R}$ is a polynomial of degree at most $2R$, we prove by contradiction that the last row of the matrix in (20) cannot be a linear combination of the other rows. In fact, in the opposite case, there would exist a polynomial $P$ of degree at most $2R$ fulfilling the interpolation conditions associated with the other rows but not the last one. Considering the specific interpolation conditions, this $P$ does not fulfill the $n$-th condition in (8). This is absurd, since Loscalzo [15] has proven that such a condition is equivalent to requiring degree reduction for the unique polynomial of degree less than or equal to $2R+1$ fulfilling the $2R+2$ Hermite conditions at $t_n$ and $t_{n+1}$. ☐

Note that this alternative way of defining the coefficients of the $R$-th BSHO scheme is analogous to that adopted in [17] for defining a BS method on a general partition. However, in this case, the coefficients of the scheme do not depend on the mesh distribution, so there is no need to determine them by solving the above linear system. On the other hand, the nonsingularity of the matrix in (20) will be useful in the following for determining the B-spline form of the associated spline extension.

Thus, let us now see how the B-spline coefficients of the spline in

associated with the numerical solution generated by the

R-th BSHO can be efficiently obtained, considering that the following conditions have to be imposed,

Now, we are interested in deriving the B-spline coefficients

of

Relying on the representation in (

23), all the conditions in (

22) can be re-written in the following compact matrix form,

where

, with

is the identity matrix of size

D is the dimension of the spline space previously introduced and where:

with each

being a

-banded matrix of size

(see

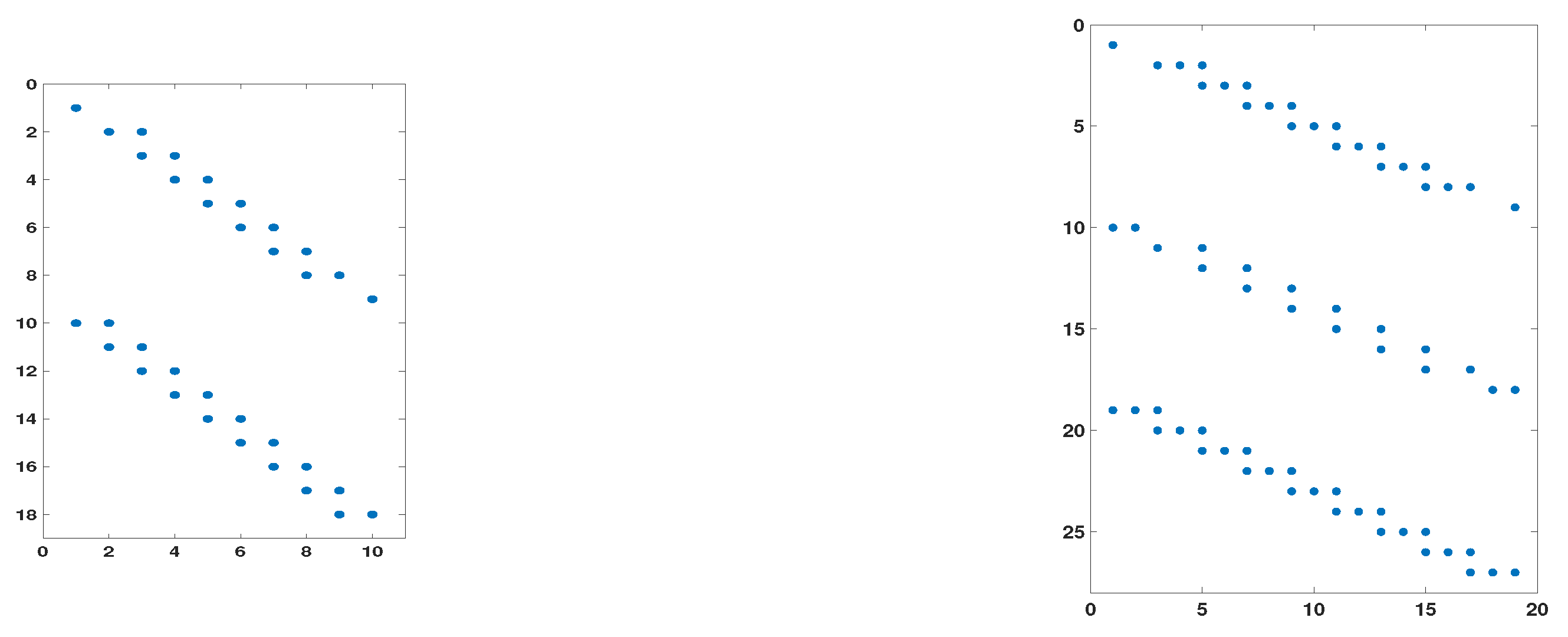

Figure 1) with entries defined as follows:

The following theorem, related to the rectangular linear system in (24), ensures that the collocating spline $s$ is well defined.

Theorem 2. The rectangular linear system in (24) always has a unique solution if the entries of the vector on its right-hand side satisfy the conditions in (8) with the $\beta$ coefficients given in (12).

Proof. The proof is analogous to that of Theorem 1 in [18], and it is omitted. ☐
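Theorem 2 can be illustrated numerically on a scalar linear problem. The following sketch assumes NumPy, evaluates the B-splines and their derivatives with a hand-written Cox–de Boor recursion, and solves the rectangular system (24) (with $m = 1$) by a global least-squares solve, which is not the local strategy of [18] described next; the non-uniform mesh, the test equation $y' = -y$ and all function names are illustrative assumptions. When the data come from the $R = 2$ BSHO method the residual is at rounding level, while perturbing one value of $y_n$ breaks (8) and makes the system inconsistent.

```python
import numpy as np
from functools import lru_cache
from math import factorial


def bsho_coefficients(R):
    """beta_j^(R), j = 1..R, from the closed form (12), as floats."""
    return [factorial(R) * factorial(2 * R - j) / (factorial(2 * R) * factorial(j) * factorial(R - j))
            for j in range(1, R + 1)]


def extended_knots(t, R):
    """Clamped knot vector: ends repeated 2R+1 times, inner breakpoints R times."""
    return [t[0]] * (2 * R + 1) + [x for x in t[1:-1] for _ in range(R)] + [t[-1]] * (2 * R + 1)


def bspline_derivative_row(knots, p, x, der):
    """der-th derivatives at x of all B-splines of degree p (Cox-de Boor recursion).

    The knot interval containing x is half-open on the right, except at the
    last knot, where the left limit is used.
    """
    last = knots[-1]
    mu = max(i for i in range(len(knots) - 1)
             if knots[i] <= x and knots[i] < knots[i + 1] and (x < knots[i + 1] or x == last))

    @lru_cache(maxsize=None)
    def B(i, k, d):
        if d > k:
            return 0.0
        if k == 0:
            return 1.0 if i == mu else 0.0
        left = knots[i + k] - knots[i]
        right = knots[i + k + 1] - knots[i + 1]
        val = 0.0
        if d == 0:  # value recursion
            if left > 0:
                val += (x - knots[i]) / left * B(i, k - 1, 0)
            if right > 0:
                val += (knots[i + k + 1] - x) / right * B(i + 1, k - 1, 0)
        else:       # derivative recursion
            if left > 0:
                val += k / left * B(i, k - 1, d - 1)
            if right > 0:
                val -= k / right * B(i + 1, k - 1, d - 1)
        return val

    return [B(i, p, der) for i in range(len(knots) - p - 1)]


def collocation_matrix(t, R):
    """Stacked blocks A_j with (A_j)[n, i] = B_i^(j)(t_n), j = 0..R (scalar case m = 1)."""
    knots = extended_knots(t, R)
    return np.array([bspline_derivative_row(knots, 2 * R, x, j)
                     for j in range(R + 1) for x in t])


def bsho_data_linear(t, R, lam, y0):
    """Right-hand side of (24) for y' = lam*y: blocks [y_n^(j)]_n with y_n^(j) = lam^j y_n."""
    beta = bsho_coefficients(R)
    y = [y0]
    for n in range(len(t) - 1):
        z = lam * (t[n + 1] - t[n])
        num = 1 + sum(b * z ** j for j, b in enumerate(beta, start=1))
        den = 1 - sum(b * (-1) ** (j - 1) * z ** j for j, b in enumerate(beta, start=1))
        y.append(y[-1] * num / den)
    y = np.array(y)
    return np.concatenate([lam ** j * y for j in range(R + 1)])


def lstsq_residual(M, rhs):
    """Norm of the least-squares residual of the rectangular system M c = rhs."""
    c = np.linalg.lstsq(M, rhs, rcond=None)[0]
    return np.linalg.norm(M @ c - rhs)


# Illustrative non-uniform mesh, R = 2 (C^2 quartic splines), y' = -y, y(0) = 1
t = [0.0, 0.3, 0.5, 1.0, 1.2]
M = collocation_matrix(t, 2)
rhs = bsho_data_linear(t, 2, -1.0, 1.0)
```

Here `M` has $(N+1)(R+1) = 15$ rows and $D = 11$ columns; full column rank corresponds to the uniqueness part of Theorem 2, and the consistency of the data to its existence part.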

We now introduce the strategy adopted for an efficient computation of the B-spline coefficients of $s$.

4.1. Efficient Spline Computation

Concerning the computation of the spline coefficient vectors $\mathbf{c}_i$, $i = 1, \dots, D$, the unique solution of (24) can be computed with several different strategies, which can have very different computational costs and can produce results with different accuracy when implemented in finite precision arithmetic. Here, we follow the local strategy used in [18]. Taking into account the banded structure of the coefficient matrix of (24), we can verify that (24) implies a set of local relations, which can be collected as in (27). Now, for each admissible integer $r$, we can define auxiliary vectors as the solutions of the linear systems (28), whose right-hand side is the $r$-th unit vector $\mathbf{e}_r$; that is, the auxiliary vectors define the $r$-th column of the inverse of the local coefficient matrix. Expressing the spline coefficients through these auxiliary vectors and considering (27), we can conclude that all the spline coefficients are obtained by solving all the systems (28). Note that, with this approach, we solve $D$ auxiliary systems, whose size does not depend on $N$, using only $N$ different coefficient matrices. Furthermore, only the information at $t_n$ and $t_{n+1}$ is necessary to compute the spline coefficients associated with the step $[t_n, t_{n+1}]$. Thus, the spline can be computed dynamically, at the same time as the numerical solution is advanced to a new time value. This is clearly of interest for a dynamical adaptation of the stepsize.
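A minimal sketch of the locality just described, under stated assumptions: rather than reproducing the B-spline-coefficient recursion of [18], it rebuilds the polynomial piece of the spline on one step $[t_n, t_{n+1}]$ in a shifted monomial basis (scalar data, NumPy least squares, hypothetical function names), using only the Hermite data at the two endpoints of the step. The $2R+2$ conditions in $2R+1$ unknowns are consistent exactly when the data satisfy (8), which is Loscalzo's degree-reduction condition.

```python
import numpy as np
from math import factorial


def local_piece(tn, tn1, data_n, data_n1):
    """Degree-2R polynomial on [tn, tn1], in powers of (t - tn), matching y^(j) at both ends.

    data_n, data_n1 = [y, y', ..., y^(R)] at tn and tn1. Returns (coefficients, residual).
    """
    R = len(data_n) - 1
    deg = 2 * R
    h = tn1 - tn
    rows, rhs = [], []
    for j in range(R + 1):
        # j-th derivative of (t - tn)^k at tn is j! if k == j, else 0
        rows.append([float(factorial(j)) if k == j else 0.0 for k in range(deg + 1)])
        rhs.append(data_n[j])
        # j-th derivative of (t - tn)^k at tn1 is k!/(k-j)! h^(k-j) for k >= j
        rows.append([factorial(k) / factorial(k - j) * h ** (k - j) if k >= j else 0.0
                     for k in range(deg + 1)])
        rhs.append(data_n1[j])
    M, b = np.array(rows), np.array(rhs, dtype=float)
    coef = np.linalg.lstsq(M, b, rcond=None)[0]
    return coef, np.linalg.norm(M @ coef - b)


def bsho2_scalar_step(y, lam, h):
    """R = 2 BSHO step for y' = lam*y (beta_1 = 1/2, beta_2 = 1/12)."""
    z = lam * h
    return y * (1 + z / 2 + z * z / 12) / (1 - z / 2 + z * z / 12)
```

Feeding the data produced by one BSHO step gives a residual at rounding level, whereas the exact values $e^{-h}$ (which do not satisfy (8)) leave a residual of the size of the local truncation error.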

In the following subsection, relying on its B-spline representation, we prove that the convergence order of the spline $s$ to $y$ is equal to that of the numerical solution. This result was already available in [15] (see Theorem 4.2 therein), but it was proven there with different and longer arguments.

4.2. Spline Convergence

Let us assume the following quasi-uniformity requirement for the mesh:

$$c_1 \le \frac{h_i}{h_j} \le c_2, \quad i, j = 0, \dots, N-1, \tag{30}$$

where $c_1$ and $c_2$ are positive constants not depending on $N$, with $h_i = t_{i+1} - t_i$ and $h = \max_i h_i$. Note that this requirement is a standard assumption in the refinement strategies of numerical methods for ODEs. We first prove the following result, which will be useful in the sequel.

Proposition 2. If the exact solution $y$ of (1) is a polynomial of degree at most $2R$, then

$$y_n^{(j)} = y^{(j)}(t_n), \quad j = 0, \dots, R, \quad n = 0, \dots, N,$$

and the spline extension coincides with $y$.

Proof. The result follows by considering that the generalized divided difference of order $2R+1$ vanishes and, as a consequence, the local truncation error of the method is null. ☐

Then, we can prove the following theorem (where, for notational simplicity, we restrict to the scalar case $m = 1$), whose statement is analogous to that on the convergence of the spline extension associated with BS methods [18]. In the proof, we rely on the quasi-interpolation approach to function approximation, whose peculiarity is being a local approach: in the spline context considered here, this means that only a local subset of a given discrete dataset is required to compute a B-spline coefficient of the approximant; see [23] for the details.

Theorem 3. Let us assume that the assumptions on $f$ made in Corollary 1 hold and that (30) holds. Then, the spline extension $s$ approximates the solution $y$ of (1) with an error of order $O(h^{2R})$, where $h = \max_i h_i$.

Proof. Let $\tilde{s}$ denote the spline belonging to $\mathcal{S}_{2R}$ obtained by quasi-interpolating $y$ with one of the rules introduced in Formula (5.1) in [23] by point evaluation functionals. From [23] (Theorem 5.2), under the quasi-uniformity assumption on the mesh distribution, we can derive that such a spline approximates $y$ with maximal approximation order also with respect to all the derivatives, as stated in (31), where $K$ is a constant not depending on $h$. On the other hand, by using the triangle inequality, we can state that

$$\|s - y\|_\infty \le \|s - \tilde{s}\|_\infty + \|\tilde{s} - y\|_\infty.$$

Thus, we need to consider the first term on the right-hand side of this inequality. In this regard, because of the nonnegativity and the partition of unity property of the B-splines, we can write

$$\|s - \tilde{s}\|_\infty \le \max_{1 \le i \le D} |c_i - \tilde{c}_i|,$$

where $c_i$ and $\tilde{c}_i$ are the B-spline coefficients of $s$ and $\tilde{s}$, respectively. Now, for any sufficiently smooth function $g$, we can define linear functionals that map the Hermite data of $g$ at the mesh points to B-spline coefficients through the auxiliary vectors defined in the previous section. Considering, from Proposition 2, that $\tilde{s}$, as well as any other spline belonging to $\mathcal{S}_{2R}$, is reproduced by these functionals, from (31) we can deduce a bound on the difference between $\tilde{c}_i$ and the functionals applied to $y$. Now, the auxiliary vectors are defined in (28) as the columns of the inverse of a local coefficient matrix. On the other hand, the entries of such a nonsingular matrix do not depend on $h$ but, because of the locality of the B-spline basis and of the multiplicity $R$ of the inner knots, only on ratios of consecutive stepsizes, which are uniformly bounded from below and from above because of (30). Thus, there exists a constant $C$, depending on the constants in (30) and on $R$, that bounds the norm of the inverse matrix, which implies that the same is true for any one of the mentioned auxiliary vectors. From the latter, we deduce an analogous bound for all indices. On the other hand, taking into account the result reported in Corollary 1 together with (31), we can easily derive that $\max_i |c_i - \tilde{c}_i| = O(h^{2R})$, which then implies that $\|s - y\|_\infty = O(h^{2R})$. ☐

5. Approximation of the Derivatives

The computation of the derivatives $y_n^{(j)}$ from the corresponding $y_n$ is quite expensive, and thus methods not requiring derivative values are usually preferred. Therefore, as for any other multiderivative method, it is of interest to associate with BSHO methods an efficient way to compute the derivative values at the mesh points. We are exploring a number of possibilities, such as:

- using generic symbolic tools, if the function $f$ is known in closed form;
- using an automatic differentiation tool, like ADiGator, a MATLAB Automatic Differentiation Tool [24];
- using the Infinity Computer Arithmetic, if the function $f$ is known as a black box [6,7,13];
- approximating the derivatives with, for example, finite differences.

As shown in the remainder of this section, when approximate derivatives are used, we obtain a different numerical solution, since the numerical scheme for its identification changes. In this case, the final formulation of the scheme is that of a standard linear multistep method, still derived from (8) with coefficients in (12), but with the derivatives of order higher than one replaced by their approximations. In this section, we just show the relation of these methods with a class of Boundary Value Methods (BVMs), the Extended Trapezoidal Rules (ETRs), which are linear multistep methods used with boundary conditions [25]. Similar relations have been found in [26] between HO methods and the equivalent class of super-implicit methods, which require the knowledge of functions not only at past but also at future time steps. The ETRs can be derived from BSHO methods when the derivatives are approximated by finite differences. Let us consider the order-four method, with $R = 2$, on a uniform mesh with stepsize $h$, and let us denote $f_n = f(y_n)$ and $f'_n = y_n^{(2)}$. In this case, the first derivative of $f$ could be approximated using central differences:

$$f'_n \approx \frac{f_{n+1} - f_{n-1}}{2h}.$$

The numerical scheme (8) is

$$y_{n+1} - y_n = \frac{h}{2}\left(f_n + f_{n+1}\right) + \frac{h^{2}}{12}\left(f'_n - f'_{n+1}\right),$$

which, after the approximation, becomes

$$y_{n+1} - y_n = \frac{h}{2}\left(f_n + f_{n+1}\right) + \frac{h}{24}\left(f_{n+1} - f_{n-1} - f_{n+2} + f_n\right);$$

rearranging, we recover the ETR of order four:

$$y_{n+1} - y_n = \frac{h}{24}\left(-f_{n-1} + 13 f_n + 13 f_{n+1} - f_{n+2}\right).$$

With similar arguments for the method of order six, $R = 3$, denoting $f''_n = y_n^{(3)}$ and approximating the derivatives with the fourth-order finite differences

$$f'_n \approx \frac{f_{n-2} - 8 f_{n-1} + 8 f_{n+1} - f_{n+2}}{12h}$$

and

$$f''_n \approx \frac{-f_{n-2} + 16 f_{n-1} - 30 f_n + 16 f_{n+1} - f_{n+2}}{12h^{2}},$$

and rearranging, we obtain the sixth-order ETR method:

$$y_{n+1} - y_n = \frac{h}{1440}\left(11 f_{n-2} - 93 f_{n-1} + 802 f_n + 802 f_{n+1} - 93 f_{n+2} + 11 f_{n+3}\right).$$

This relation allows us to derive a continuous extension of the ETR schemes from the continuous extension of the BSHO method, just by substituting the derivatives with the corresponding approximations. Naturally, a change of the stepsize will now change the coefficients of the linear multistep schemes. Observe that BVMs have been efficiently used for the solution of boundary value problems in [27], and BS methods also belong to this class [16].
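The ETR weights of order four and six can be rederived mechanically. The sketch below (hypothetical names; uniform stepsize assumed) substitutes finite-difference stencils for $h^{j-1} f^{(j-1)}_n$ into (8), written as $y_{n+1} - y_n = h \sum_k w_k f_{n+k}$, and collects the weights $w_k$ in exact arithmetic.

```python
from fractions import Fraction as F

# Hypothetical stencils: offset k -> weight c_k, so that h^(j-1) f^(j-1)_n ~ sum_k c_k f_{n+k}
D1_ORDER2 = {-1: F(-1, 2), 1: F(1, 2)}                                                 # h f'_n
D1_ORDER4 = {-2: F(1, 12), -1: F(-8, 12), 1: F(8, 12), 2: F(-1, 12)}                    # h f'_n
D2_ORDER4 = {-2: F(-1, 12), -1: F(16, 12), 0: F(-30, 12), 1: F(16, 12), 2: F(-1, 12)}  # h^2 f''_n


def etr_weights(beta, stencils):
    """Weights w_k (sorted by k) such that y_{n+1} - y_n = h * sum_k w_k f_{n+k}.

    beta[j-1] is beta_j of (8); stencils[j-2] replaces y^(j) = f^(j-1) for j >= 2.
    """
    w = {0: beta[0], 1: beta[0]}                       # beta_1 h (f_n + f_{n+1})
    for j, b in enumerate(beta[1:], start=2):
        sign = (-1) ** (j - 1)
        for k, c in stencils[j - 2].items():
            w[k] = w.get(k, 0) + b * c                 # derivative at t_n
            w[k + 1] = w.get(k + 1, 0) + sign * b * c  # same stencil shifted to t_{n+1}
    return [w[k] for k in sorted(w)]
```

With $\beta = (1/2, 1/12)$ and central differences it returns $(-1, 13, 13, -1)/24$; with $\beta = (1/2, 1/10, 1/120)$ and the fourth-order stencils it returns $(11, -93, 802, 802, -93, 11)/1440$, and in both cases the weights sum to one, as consistency requires.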

It has been proven in [21] that symmetric linear multistep methods are conjugate symplectic schemes. Naturally, in the context of linear multistep methods used with only initial conditions, this property is relevant only for the trapezoidal method; but when we solve boundary value problems, the correct use of a linear multistep formula is with boundary conditions, which makes the corresponding formulas stable, with a region of stability equal to the left half of the complex plane (see [25]). The conjugate symplecticity of these methods is the reason for their good behavior, shown in [28,29], when used in block form with a sufficiently large block for the solution of conservative problems.

Remark 1. We recall that, even when approximated derivatives are used, the numerical solution admits a $C^{R}$ spline extension of degree $2R$ verifying all the conditions in (24), where all the derivatives $y_n^{(j)}$, $j \ge 2$, appearing on the right-hand side have to be replaced with the adopted approximations. The exact solution of the rectangular system in (24) is still possible, since (8) with coefficients in (12) is still verified by the numerical solution, by its first derivatives $f(y_n)$ and by the approximations of the higher-order derivatives. The only difference in this case is that the continuous spline extension collocates at the breakpoints just the given first-order differential equation.