1. Introduction and Preliminaries

Throughout this study, $\mathbb{N}$ denotes the set of natural numbers. Let $C$ be a nonempty, closed, and convex subset of a Banach space $X$ equipped with a norm $\|\cdot\|$. Let $T: C \to C$ be a mapping, and let $F(T) = \{x \in C : Tx = x\}$ denote the set of all fixed points of $T$ in $C$.

Since Banach’s pioneering contribution in 1922 [1], the Banach contraction principle, based on the class of contraction mappings, has attracted significant attention in mathematical research. A mapping $T$ on a normed space $(X, \|\cdot\|)$ is called a $\lambda$-contraction if there exists a constant $\lambda \in [0, 1)$ such that

$$\|Tx - Ty\| \le \lambda \|x - y\| \quad \text{for all } x, y \in X. \tag{1}$$

Owing to its fundamental properties and strong potential for addressing complex problems across various disciplines, this class of mappings has been extensively studied under different structural frameworks. Consequently, it has emerged as a powerful tool in fixed-point theory. However, recognizing both the strengths and inherent limitations of contraction mappings, researchers have introduced more general classes that relax certain constraints while extending their applicability under broader conditions (see [2,3,4,5,6,7,8]). These generalized mappings have been investigated in terms of stability, existence, and uniqueness of fixed points, data dependence, and other qualitative aspects within diverse mathematical settings, including metric, topological, and normed spaces (see [9,10,11,12,13,14,15,16]). Moreover, numerous iterative algorithms have been developed to approximate the fixed points of such mappings, specifically addressing the challenges posed by modern computational problems (see [17,18,19,20,21,22,23,24,25]). While classical fixed-point iterations, such as those of Picard and Krasnoselskij, continue to serve as fundamental methods for solving nonlinear equations, emerging applications in high-dimensional optimization, machine learning, and perturbed dynamical systems expose the limitations of these traditional approaches. These domains require algorithms that offer enhanced stability, accelerated convergence, and robustness to approximation errors. To meet these demands, Filali et al. [26] and Alam and Rohen [27] recently introduced two distinct approaches, hereinafter referred to as the “DF iteration algorithm” (Algorithm 1) and the “AR iteration algorithm” (Algorithm 2), respectively, which are examined in detail in the subsequent sections.
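For orientation, the two classical schemes mentioned above can be sketched on a toy problem. The sketch below assumes the hypothetical map $T(x) = \cos(x)/2$ on $\mathbb{R}$, which is a $\lambda$-contraction with $\lambda = 1/2$ because $|T'(x)| = |\sin x|/2 \le 1/2$; the names `picard`, `krasnoselskij`, and the step size `alpha` are illustrative choices, not taken from [26] or [27]. It also checks the a priori Banach estimate $|x_n - x^*| \le \lambda^n (1-\lambda)^{-1} |x_1 - x_0|$.

```python
import math

LAMBDA = 0.5  # |T'(x)| = |sin x| / 2 <= 1/2, so T below is a 1/2-contraction on R


def T(x: float) -> float:
    """Hypothetical contraction used only for illustration: T(x) = cos(x) / 2."""
    return 0.5 * math.cos(x)


def picard(x0: float, n_steps: int) -> list:
    """Picard iteration x_{n+1} = T(x_n); returns the whole trajectory."""
    xs = [x0]
    for _ in range(n_steps):
        xs.append(T(xs[-1]))
    return xs


def krasnoselskij(x0: float, n_steps: int, alpha: float = 0.5) -> list:
    """Krasnoselskij iteration x_{n+1} = (1 - alpha) x_n + alpha T(x_n)."""
    xs = [x0]
    for _ in range(n_steps):
        x = xs[-1]
        xs.append((1 - alpha) * x + alpha * T(x))
    return xs


p = picard(2.0, 60)
k = krasnoselskij(2.0, 60)
x_star = p[-1]  # after 60 Picard steps the remaining error is far below machine precision

for n in (5, 10, 20):
    # A priori Banach estimate: |x_n - x*| <= lambda^n / (1 - lambda) * |x_1 - x_0|
    bound = LAMBDA**n / (1 - LAMBDA) * abs(p[1] - p[0])
    print(n, abs(p[n] - x_star), bound, abs(k[n] - x_star))
```

Both trajectories approach the same fixed point; the averaged Krasnoselskij step trades some speed for damping, which is the kind of trade-off that motivates more elaborate schemes such as the DF and AR algorithms.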

| Algorithm 1: DF iteration algorithm |

| Input: A mapping , |

| initial point , |

| , , , |

| and budget N. |

| 1: for do |

| 2: |

| |

| |

| |

| 3: end for |

| Output: Approximate solution |

| Algorithm 2: AR iteration algorithm |

| Input: A mapping , |

| initial point , |

| , , , |

| and budget N. |

| 1: for do |

| 2: |

| |

| |

| |

| 3: end for |

| Output: Approximate solution |

Both studies presented a range of theoretical results, including convergence and stability analyses, as well as insights into the sensitivity of fixed points with respect to perturbations in contraction mappings. More specifically, the authors derived the following results concerning the data dependence of fixed points:

Theorem 1. Let $T, S: C \to C$ be two contractions with contractivity constant $\lambda$ and fixed points $x^*$ and $y^*$, respectively. Suppose that there exists a maximum admissible error $\varepsilon > 0$ such that $\|Tx - Sx\| \le \varepsilon$ for all $x \in C$ (in this case, $T$ and $S$ are called approximate operators of each other).

(i) ([26], Theorem 5) Let and be the iterative sequences generated by the DF iteration algorithm associated with $T$ and $S$, respectively. If the sequences , , in the DF iteration algorithm satisfy the conditions for all and , then it holds that

(ii) ([27], Theorem 8) Let and be the iterative sequences generated by the AR iteration algorithm associated with $T$ and $S$, respectively. If the sequences , , in the AR iteration algorithm satisfy either of

then it holds that

We should immediately point out that, in both results, the restrictions imposed on the control sequences and the contraction condition imposed on the mapping $S$ significantly restrict the applicability of these results. Indeed, when $T$ and $S$ are approximate operators of each other and $T$ is a $\lambda$-contraction, the mapping $S$ satisfies the following condition for all $x, y \in C$:

$$\|Sx - Sy\| \le \lambda \|x - y\| + \delta, \tag{4}$$

where $\delta = 2\varepsilon$. This follows from the triangle inequality: $\|Sx - Sy\| \le \|Sx - Tx\| + \|Tx - Ty\| + \|Ty - Sy\| \le \lambda \|x - y\| + 2\varepsilon$. Thus $S$ inherits a weakened contraction-like property, deviating from strict contractivity by an additive constant $\delta$ that depends on the approximation error $\varepsilon$. Clearly, any $\lambda$-contraction satisfies condition (4). However, as the following example illustrates, a mapping $S$ that satisfies (4) need not satisfy the contraction condition (1).

Example 1. Consider the space equipped with the usual absolute value norm. Define the mappings as follows:

Then, we have

So, we can choose . Since is discontinuous, it is not a contraction. However, it possesses a unique fixed point at . On the other hand, the mapping satisfies condition (4) with and . To verify this, we examine the following cases:

Cases 1 and 2: If or , then for any , we have

Cases 3 and 4: If and (or vice versa), then

Thus, considering all cases together, we conclude that the mapping satisfies condition (4) with and . (Note that while the theory requires , this example shows that is sufficient for the inequality to hold, indicating a tighter bound for this specific mapping.)

The next example shows that condition (4) alone does not guarantee that the mapping $S$ has a fixed point.

Example 2. Let us consider the set , equipped with the norm induced by the usual absolute value metric. Define the mappings , as follows:

Then, we have

So, we can choose . We now demonstrate that the mapping satisfies the inequality in (4) in four cases.

Cases 1 and 2: If or , then for any , we obtain

and

respectively. Hence, in both cases, the mapping satisfies the inequality in (4) for any and any .

Cases 3 and 4: If and (or vice versa), then and . It is important to note that for every and for every , indicating that and are increasing functions. Consequently,

reaches its maximum value when and . This implies that

meaning that for every and , . On the other hand, employing similar arguments, we obtain

indicating that for every and , . Thus, if and , then for any and any , the following inequality holds:

As a result, for every , the mapping satisfies the inequality in (4) for any and for any . (Note that while the theory guarantees the inequality for , this example demonstrates that a smaller is sufficient, indicating a tighter bound for this specific mapping.) However, upon solving the equation , we find that for , , and for , . Consequently, the mapping does not possess any fixed points for .

By Banach’s fixed-point theorem, the contraction mapping $T$ has a unique fixed point $x^*$. However, the approximate operator $S$, while satisfying inequality (4), deviates from strict contractivity because of the additive perturbation term $\delta$. Although $S$ lacks the classical contraction property, its structural proximity to $T$ permits an analysis of fixed-point stability. Specifically, if $\delta$ is sufficiently small, which is governed by the bounded approximation error $\varepsilon$, then the fixed points of $S$, should they exist (say $y^*$), lie within a neighborhood of $x^*$. By utilizing inequality (4) and a non-asymptotic argument, we derive a concrete upper bound for $\|x^* - y^*\|$ without the asymptotic assumptions required in Theorem 1. Specifically, we establish the following bound:

which leads to

This result formalizes the intuition that small perturbations of the operator propagate controllably to its fixed points. While $S$ does not inherit the contraction property, the weakened inequality still yields meaningful conclusions about fixed-point proximity, illustrating the robustness of contraction-based frameworks under bounded approximations. Such insights are pivotal in applied settings, where numerical or modeling errors require tolerance analyses in dynamical systems and iterative algorithms.
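A minimal sketch of how such a bound can be obtained, assuming $Tx^* = x^*$, $Sy^* = y^*$, and $\|Tx - Sx\| \le \varepsilon$ on $C$. This is one possible derivation, shown for orientation, and not necessarily the exact chain of inequalities used here. The first route uses only (4); the second uses the contractivity of $T$ directly and gives a smaller constant.

```latex
% Route via (4):
\|x^* - y^*\| = \|Tx^* - Sy^*\|
  \le \|Tx^* - Sx^*\| + \|Sx^* - Sy^*\|
  \le \varepsilon + \lambda \|x^* - y^*\| + \delta
  \quad\Longrightarrow\quad
  \|x^* - y^*\| \le \frac{\varepsilon + \delta}{1 - \lambda}
  \;\Big(= \frac{3\varepsilon}{1 - \lambda} \text{ if } \delta = 2\varepsilon\Big).

% Route via the contractivity of T:
\|x^* - y^*\| = \|Tx^* - Sy^*\|
  \le \|Tx^* - Ty^*\| + \|Ty^* - Sy^*\|
  \le \lambda \|x^* - y^*\| + \varepsilon
  \quad\Longrightarrow\quad
  \|x^* - y^*\| \le \frac{\varepsilon}{1 - \lambda}.
```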

The preceding discussion indicates that the applicability of the data-dependence results in parts (i) and (ii) of Theorem 1 can be further extended by relaxing the contraction assumption imposed on the mapping $S$ and instead treating $S$ merely as an approximate operator of the mapping $T$.
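The phenomena of Examples 1 and 2 can be reproduced with a simple pair of maps on $\mathbb{R}$. The sketch below assumes the hypothetical choices $T(x) = x/2$ (so $\lambda = 1/2$, with fixed point $0$) and a piecewise perturbation $S$ with $|Sx - Tx| = \varepsilon$ everywhere; these maps are illustrative and differ from those in Examples 1 and 2. Sampling confirms (4) with $\delta = 2\varepsilon$, while $S$ is discontinuous (hence not a contraction) and has no fixed point.

```python
import random

LAM = 0.5   # contraction constant of the hypothetical T
EPS = 0.1   # admissible error: |S(x) - T(x)| = EPS for every x


def T(x: float) -> float:
    """Hypothetical lambda-contraction on R with unique fixed point 0."""
    return LAM * x


def S(x: float) -> float:
    """Hypothetical approximate operator of T that jumps by 2*EPS at x = EPS."""
    return LAM * x + EPS if x <= EPS else LAM * x - EPS


def satisfies_condition_4(n_pairs: int = 100_000, seed: int = 0) -> bool:
    """Check |Sx - Sy| <= LAM*|x - y| + delta, delta = 2*EPS, on random pairs."""
    rng = random.Random(seed)
    delta = 2 * EPS
    for _ in range(n_pairs):
        x, y = rng.uniform(-5.0, 5.0), rng.uniform(-5.0, 5.0)
        if abs(S(x) - S(y)) > LAM * abs(x - y) + delta + 1e-12:
            return False
    return True


def has_fixed_point() -> bool:
    """S(x) = x forces x = 2*EPS on the branch x <= EPS and x = -2*EPS on the
    branch x > EPS; neither lies on its own branch, so checking both suffices."""
    return any(abs(S(c) - c) < 1e-12 for c in (2 * EPS, -2 * EPS))


print(satisfies_condition_4())  # True
print(has_fixed_point())        # False

# No Lipschitz constant below 1 can work across the jump at x = EPS:
h = 1e-6
print(abs(S(EPS) - S(EPS + h)), LAM * h)
```

The random check is only a sanity test; (4) itself follows from the triangle inequality for any $S$ within distance $\varepsilon$ of $T$.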

In this study, we establish a convergence-equivalence result between the DF and AR iteration algorithms for approximating the fixed point of a contraction mapping. Moreover, we derive enhanced versions of the data-dependence results in parts (i) and (ii) of Theorem 1 by not only removing the contraction condition imposed on the mapping $S$ but also eliminating the constraints on the control sequences of both algorithms.

The following lemma plays a key role in establishing our results:

Lemma 1 ([28]). Let , be three sequences such that for each , for all , , , and

It then holds that .

2. Main Results

In this section, we establish our main theoretical findings concerning the convergence behavior of the iterative processes under consideration. In particular, we compare the trajectories generated by the DF iteration and the AR iteration when applied to a contraction mapping. The significance of the following theorem is that it provides conditions under which both iterative schemes not only converge to the unique fixed point of $T$ but also approach each other asymptotically. This comparison allows us to assess the relative stability and efficiency of the algorithms and highlights the robustness of their convergence under mild assumptions on the control sequences. We now state the results precisely:

Theorem 2. Let $T: C \to C$ be a $\lambda$-contraction mapping with a fixed point $x^*$. Consider the sequences and , which are generated by the DF and AR iteration algorithms, respectively. The following statements are equivalent:

(i) Define the sequence for all as

If the sequence is bounded and , then the sequence converges strongly to 0, and converges strongly to $x^*$.

(ii) Define the sequence for all as

If the sequence is bounded and , then the sequence converges strongly to zero and converges strongly to $x^*$.

Proof. (i) Using the inequality in (1) and employing the DF and AR iteration algorithms, we obtain the following for every $n \in \mathbb{N}$:

where

Now, we set the following for every $n \in \mathbb{N}$:

Since is bounded, there exists a number such that for every $n \in \mathbb{N}$, the following holds:

Moreover, since

according to ([27], Theorem 3), for any given , there exists an such that for all , . Thus, for every , we have

which implies , i.e., . Consequently, the inequality in (6) satisfies all the requirements of Lemma 1, and therefore we obtain . On the other hand, since , we can conclude that .

(ii) Utilizing the inequality in (1) with the AR and DF iteration algorithms, we obtain the following for all $n \in \mathbb{N}$:

where

Define the following for all $n \in \mathbb{N}$:

Given that is bounded, there exists such that for all $n \in \mathbb{N}$:

Furthermore, as

according to ([26], Theorem 2), for any , there exists an such that for all , . Hence, for each ,

This implies , or . Thus, the inequality in (7) satisfies the conditions of Lemma 1, leading to . Moreover, since

we conclude that . □
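Both parts of the proof end by feeding a recursive estimate into Lemma 1. The sketch below assumes Lemma 1 has the common form: if $a_n \ge 0$, $a_{n+1} \le (1-\mu_n)a_n + b_n$, $\mu_n \in (0,1)$, $\sum_n \mu_n = \infty$, and $b_n/\mu_n \to 0$, then $a_n \to 0$. This form is our assumption for illustration. The code runs the equality case with the hypothetical choice $\mu_n = 1/(n+2)$ and contrasts a vanishing ratio $b_n/\mu_n$ with a non-vanishing one.

```python
import math


def run_recursion(n_steps, a0, mu, ratio):
    """Iterate the equality case a_{n+1} = (1 - mu_n) a_n + b_n with b_n = ratio(n) * mu_n."""
    a = [a0]
    for n in range(n_steps):
        m = mu(n)
        a.append((1.0 - m) * a[-1] + ratio(n) * m)
    return a


def mu(n):
    """Step sequence in (0, 1) whose sum diverges."""
    return 1.0 / (n + 2)


# b_n / mu_n = 1/sqrt(n + 1) -> 0: the assumed lemma predicts a_n -> 0.
vanishing = run_recursion(100_000, 1.0, mu, lambda n: 1.0 / math.sqrt(n + 1))
# b_n / mu_n = 0.3 does not vanish: a_n settles near 0.3 instead of 0.
constant = run_recursion(100_000, 1.0, mu, lambda n: 0.3)

for n in (10, 1_000, 100_000):
    print(n, vanishing[n], constant[n])
```

With $\mu_n = 1/(n+2)$ the recursion telescopes to $(N+1)a_N = a_0 + \sum_{n<N} b_n/\mu_n$, so $a_N$ is a running average of $b_n/\mu_n$. This explains why the first case converges slowly (roughly like $N^{-1/2}$) and why the condition $b_n/\mu_n \to 0$ cannot be dropped.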

To demonstrate the applicability of Theorem 2, we will now provide an example based on a nonlinear differential equation. This example serves two purposes. First, it shows how an abstract contraction mapping arising from an integral operator can be constructed in a concrete functional setting. Second, it illustrates that the theoretical convergence results established in Theorem 2 can be verified numerically by examining the behavior of the DF and AR iteration schemes. In particular, we consider an initial value problem whose solution can be reformulated as a fixed-point problem, and then show that the corresponding operator is indeed a contraction mapping. This allows us to apply Theorem 2 directly and validate the convergence through numerical simulations.

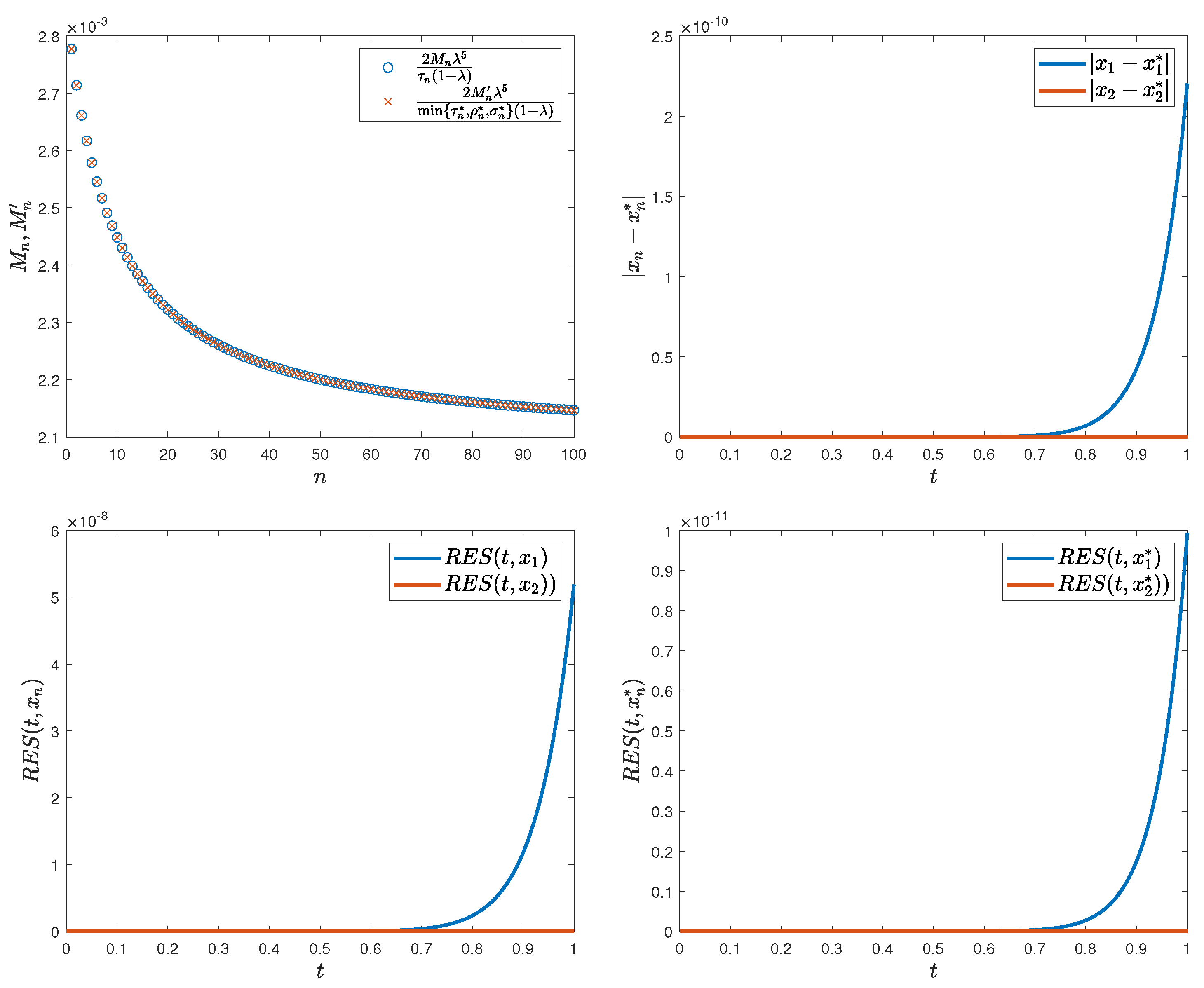

Example 3. Let denote the set of functions defined on that possess continuous second-order derivatives, equipped with the supremum norm . It is well known that forms a Banach space. Now, consider the following initial value problem:

A potential solution to this problem can be expressed in the following integral form:

Now, let . Then, the operator , defined as

is a contraction mapping. Indeed, it satisfies the contraction property

Now, for each , we consider the following sequences:

As illustrated in Figure 1 (top left), the sequences

are both bounded, thereby satisfying the hypotheses of Theorem 2. Let

be the residual error for . Figure 1 (top right) shows that, starting with the initial norm , where , the sequence converges to 0, while the lower panels, together with Table 1, illustrate that the sequences and converge to the fixed point of the mapping . Numbers in parentheses indicate decimal exponents.

Theorem 3 below provides theoretical error bounds when the exact operator $T$ is replaced by a perturbed operator $S$, which can be interpreted as an approximation arising in practical applications. To illustrate the usefulness of this result, we consider a modified nonlinear differential equation. Such an example demonstrates how the admissible error $\varepsilon$ can be explicitly quantified in a functional setting, and how the theoretical estimates (9) and (10) compare with the numerical deviations observed in practice.
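The functional setting can be imitated numerically on a grid. The sketch below assumes a hypothetical problem (not the one in Example 3 or Example 4): $x'(t) = \tfrac12 \sin x(t)$, $x(0) = 1$ on $[0,1]$, whose integral operator $(Tx)(t) = 1 + \int_0^t \tfrac12 \sin x(s)\,ds$ is a contraction in the supremum norm with $\lambda = 1/2$. The perturbed operator `S` adds $\varepsilon t$, so $\sup_t |(Sx)(t) - (Tx)(t)| = \varepsilon$ exactly. Picard iteration with the sup-norm residual is used for both operators (a stand-in for the DF and AR schemes), and the distance between the two fixed points is compared with $\varepsilon/(1-\lambda)$.

```python
import math

# Hypothetical IVP: x'(t) = 0.5*sin(x(t)), x(0) = 1, on [0, 1].
# |0.5 sin a - 0.5 sin b| <= 0.5|a - b| and the interval has length 1,
# so the integral operator T is a contraction in the sup norm with lambda = 0.5.
LAM = 0.5
EPS = 0.01
N = 2000                      # number of grid subintervals
H = 1.0 / N
GRID = [k * H for k in range(N + 1)]


def cumtrapz(f):
    """Cumulative trapezoidal integral of samples f on GRID, starting from 0."""
    out = [0.0]
    for k in range(N):
        out.append(out[-1] + 0.5 * H * (f[k] + f[k + 1]))
    return out


def T(x):
    return [1.0 + v for v in cumtrapz([0.5 * math.sin(xi) for xi in x])]


def S(x):
    # Hypothetical perturbed operator: sup_t |(S x)(t) - (T x)(t)| = EPS * max(t) = EPS.
    return [v + EPS * tk for v, tk in zip(T(x), GRID)]


def sup_dist(x, y):
    return max(abs(a - b) for a, b in zip(x, y))


def picard(op, x0, tol=1e-12, max_iter=200):
    """Picard iteration, stopped when the residual ||x_{n+1} - x_n||_inf drops below tol."""
    x, residuals = x0, []
    for _ in range(max_iter):
        x_new = op(x)
        residuals.append(sup_dist(x_new, x))
        x = x_new
        if residuals[-1] < tol:
            break
    return x, residuals


x_star, res_T = picard(T, [1.0] * (N + 1))
y_star, res_S = picard(S, [1.0] * (N + 1))
gap = sup_dist(x_star, y_star)
print(len(res_T), len(res_S), gap, EPS / (1 - LAM))
```

Because the trapezoidal sums preserve the Lipschitz estimate, the discrete operators inherit $\lambda \le 1/2$, so the computed gap must respect $\varepsilon/(1-\lambda) = 0.02$; the observed gap is noticeably smaller, which illustrates that such bounds can be conservative.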

Theorem 3. Let $T: C \to C$ be a $\lambda$-contraction mapping with a fixed point $x^*$, and let $S: C \to C$ be a mapping. Consider the sequences and generated by the DF and AR iteration algorithms associated with $S$, which are defined as follows:

| Algorithm 3: DF iteration algorithm for $S$ |

| Input: A mapping , |

| initial point , |

| , , , |

| and budget N. |

| 1: for do |

| 2: |

| |

| |

| |

| 3: end for |

| Output: Approximate solution |

| Algorithm 4: AR iteration algorithm for $S$ |

| Input: A mapping , |

| initial point , |

| , , , |

| and budget N. |

| 1: for do |

| 2: |

| |

| |

| |

| 3: end for |

| Output: Approximate solution |

Suppose the following conditions hold:

(C1) There exists a maximum admissible error $\varepsilon > 0$ such that $\|Tx - Sx\| \le \varepsilon$ for all $x \in C$.

(C2) There exists $y^* \in C$ such that $Sy^* = y^*$, and both iterative sequences generated by Algorithms 3 and 4 converge to $y^*$.

Then, the following bounds hold for the iterative sequences generated by Algorithms 3 and 4, respectively:

and

Proof. We begin by deriving the bound presented in (9), utilizing the DF iteration algorithms associated with the mappings $T$ and $S$. Using the contraction property of $T$, condition (C1), and the DF iteration algorithm for both $T$ and $S$, we obtain the following estimates:

By combining these inequalities, we obtain

Since for every $n \in \mathbb{N}$, , , and and , we conclude that

From ([26], Theorem 2), we have , and under assumption (C2), we also have . Taking the limit on both sides of the final inequality yields

Next, we derive the bound specified in (10) by utilizing the AR iteration algorithms associated with the mappings $T$ and $S$. By leveraging the contraction property of $T$, assumption (C1), and the AR iteration algorithm for both $T$ and $S$, the following estimates can be derived in a manner similar to the previous ones:

By successively substituting these bounds, we obtain the following:

Since for every $n \in \mathbb{N}$, , , and and , it follows that

From ([27], Theorem 3), we know that , and under assumption (C2), we also have . Taking limits on both sides of the last inequality, we finally obtain

which completes the proof. □

To clarify the applicability of Theorem 3, we present a concrete example constructed from a nonlinear differential equation. This will allow us to explicitly see how the perturbation of the operator affects the fixed point and how the theoretical error bounds are reflected in practice.

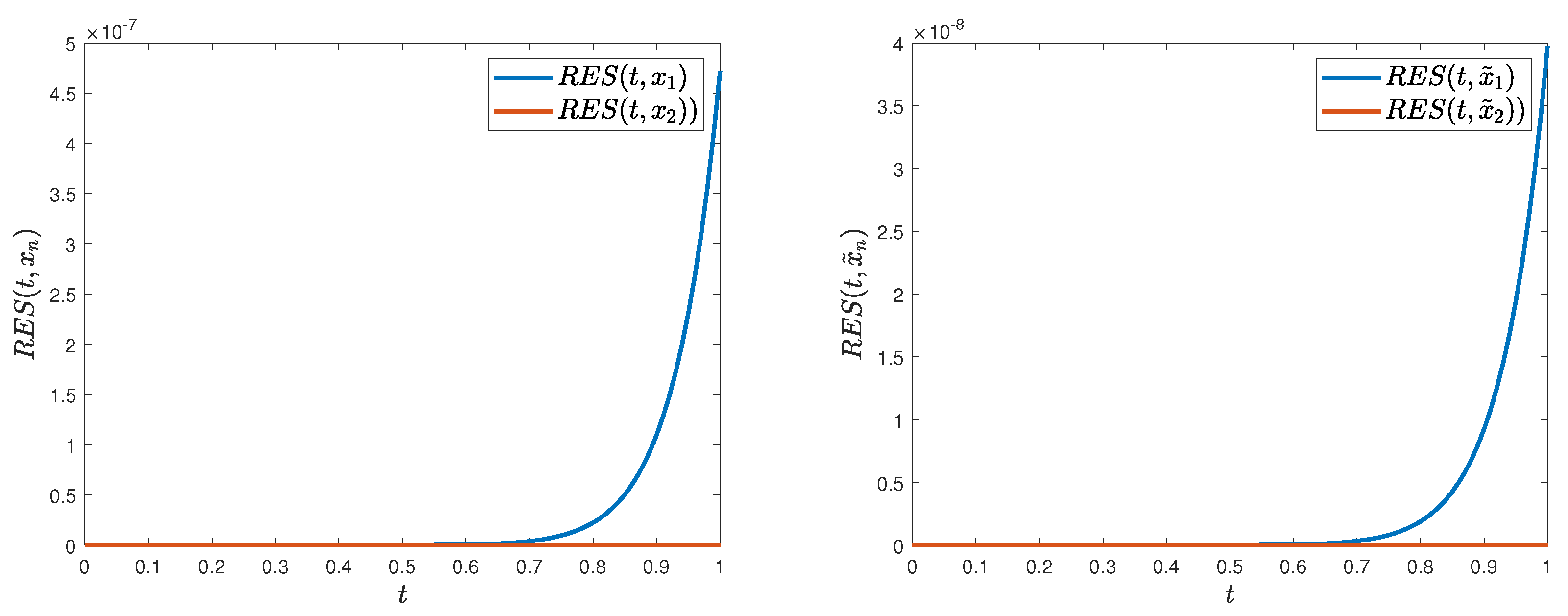

Example 4. Let , , , , , , , , and be defined as in Example 3. We now consider the following second-order initial value problem:

A possible solution to this problem can be formulated as an integral equation:

Define an operator as follows:

Thus, we can establish the following bound:

The obtained value represents the admissible tolerance ε, showing that the error in the approximation does not exceed this bound. As in Example 3, we introduce the residual error for the sequence by

From Table 2 and Figure 2, starting with the initial function , it is evident that the sequences and converge toward a fixed point of . As a result, all the conditions of Theorem 3 are satisfied, which confirms the validity of the estimates in (9) and (10), as demonstrated below:

The numerical estimates show that the deviations are bounded by and , which remain well within the respective theoretical tolerances and , thereby confirming both the accuracy of the numerical scheme and the validity of the established analytical bounds.

The numerical outcomes presented in Table 2 and Figure 2 highlight two important observations. First, the residual errors decrease rapidly with each iteration, confirming the strong convergence of both DF and AR schemes toward the fixed point of . Second, the measured deviations between and remain well within the analytical error bounds derived in Theorem 3. This shows that estimates (9) and (10) are mathematically valid and consistent with the observed numerical behavior, while the margin between the observed deviations and the bounds indicates that the bounds are conservative for this example. Consequently, Example 4 provides concrete evidence of the stability and reliability of the proposed iterative methods when small perturbations are introduced into the underlying operator.

Remark 1. (1) The estimates provided in (9) and (10) of Theorem 3 for the quantity offer substantially more precise approximations than the corresponding ones presented in parts (i) and (ii) of Theorem 1. Additionally, a comparison of the estimates in (9) and (10) reveals that the bound in (9) is more accurate than that in (10). Furthermore, by utilizing the identity

we derive the following asymptotic results:

(D1) Taking the limit as under the assumption that , , , the inequality given in (11) leads to

(D2) As , assuming , , , the inequality in (11) results in

(D3) Taking the limit as with , , , the inequality in (12) yields

(D4) Finally, as under the condition , , , applying the limit to the inequality in (12) gives

Based on these results, in cases (D1) and (D2), the estimates obtained in (13) and (14) are more precise than the bound in (9). Similarly, in cases (D3) and (D4), the estimates in (15) and (16) yield more refined approximations than that in (10).

(2) Let the mappings $T$ and $S$ be as defined in Theorem 3. Then, we observe that

where, due to condition (C1), we have

which leads to

Thus, using a more straightforward approach, we obtain a tighter bound for compared to those provided in (9) and (10).

(3) Table 3 presents a comparison of the results obtained from the analyses conducted to establish an upper bound for :

Example 5. Let , , , and be defined as in Example 4.

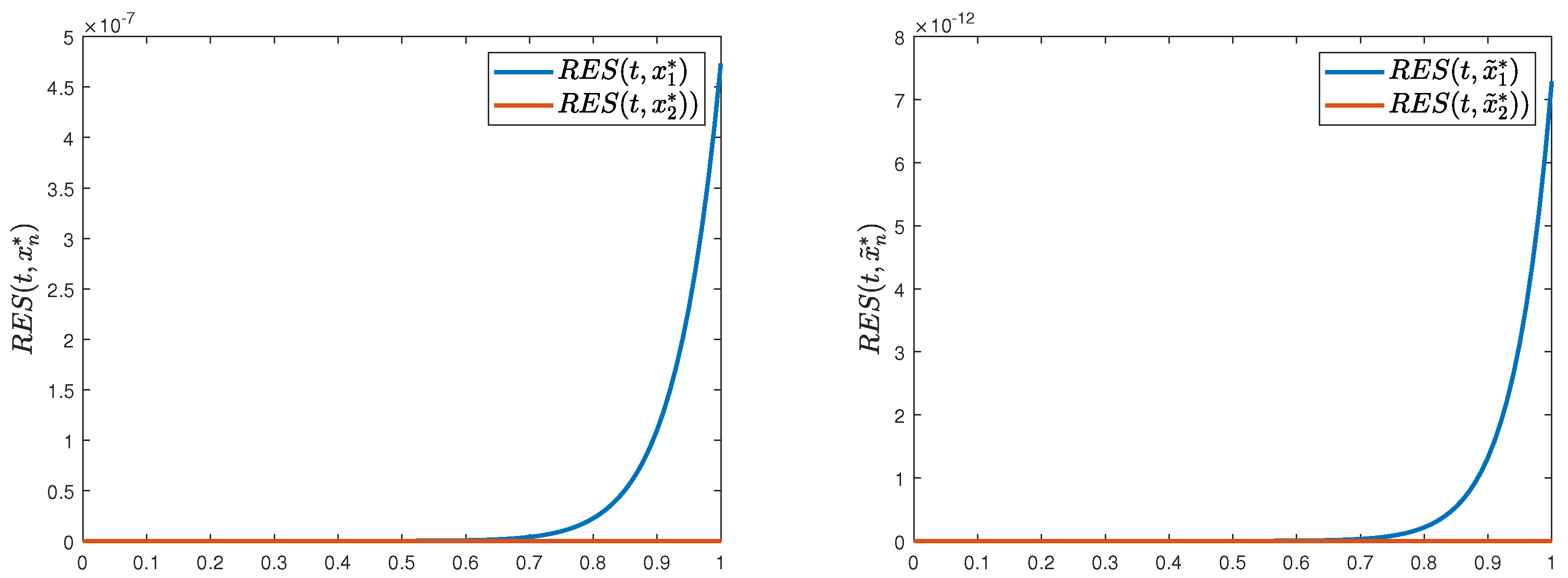

(D1)

Consider the parametrized sequences , , , prescribed by

for all . These sequences exhibit asymptotic behavior as $n \to \infty$. As Figure 3 shows, the iterative sequence , starting with the initial , converges to the fixed point of , while , starting with the initial , converges to the fixed point of . A quantitative assessment yields

(D2)

Building on Example 4, define the sequences

for all . Here, as . Graphical results in Figure 3 (left and right) confirm that and , starting with the initial , converge to and , respectively. The error propagation adheres to the bound:

(D3)

Let the sequences , , and be specified via

for all . These sequences satisfy , , as $n \to \infty$. As depicted in Figure 4 (left and right), and , starting with the initial , converge to and , respectively. The deviation between fixed points is bounded by

(D4)

Adopting the framework of Example 4, define

for all . Then, , , as $n \to \infty$. Starting with the initial , Figure 1 (bottom-right) and Figure 2 (right) illustrate the convergence of and to and , respectively. The empirical error remains well within the theoretical upper limit.