Abstract

In this paper, we present a technique that improves the applicability of a result obtained by Cordero et al. in 2024 for solving nonlinear equations. Cordero et al. assumed the involved operator to be at least five times differentiable in order to extend a two-step method of order p to a method of order p + 3. We obtain the convergence order of Cordero et al.'s method by assuming the existence of derivatives of the operator only up to the third order. Our analysis is carried out in the more general setting of a commutative Banach algebra and provides a radius of the convergence ball. Finally, we validate our theoretical findings with several numerical examples, and we discuss the basins of attraction of the methods through examples.

Keywords:

Fréchet derivative; order of convergence; Newton method; Taylor series expansion; nonlinear equations; iterative method; Julia set; Fatou set

MSC:

65J15; 65H10; 47H99; 65D99

1. Introduction

In science and engineering, we commonly encounter problems that require solving nonlinear equations of the form

$$F(x) = 0, \tag{1}$$

where $F: \Omega \subseteq X \to Y$ is a nonlinear operator. Here, X is a commutative Banach algebra, Y is a Banach space, and $\Omega$ is a non-empty open convex subset of X. Many real-life problems, including optimal control, transport theory, neurophysiology, reactors and steering, kinematics, and synthesis problems, fit into Equation (1) (for details, see [1,2,3,4,5,6]). However, it is not always possible to obtain an analytical or closed-form solution of Equation (1), so iterative methods are used to approximate such solutions. Under suitable conditions, a solution ξ of (1) can be obtained as a fixed point of an appropriate iteration function, approximated by the iterates it generates from an initial guess $x_0$ of ξ. The Newton method [7], defined by

$$x_{n+1} = x_n - F'(x_n)^{-1} F(x_n), \qquad n = 0, 1, 2, \ldots, \tag{2}$$

is probably the most popular and widely used iterative method for approximating a solution of (1). Under some conditions, (2) has order of convergence two (see [7]). It is natural to seek algorithms that solve such problems more efficiently. In recent years, numerous authors have developed modified versions of (2) with a higher order of convergence (see Definition 2). For example, Behl et al. [8] proposed a method of order seven, and Lotfi et al. [9] proposed a method of order six. In both cases, the analysis relied on Taylor series expansions, which require the involved operator to be at least seven times Fréchet differentiable on $\Omega$. Cordero et al. [10] proved that the method

has order of convergence p + 3 whenever the underlying iterative map has convergence order p. Here, I is the identity operator. The works of Behl et al. and Lotfi et al. are particular cases of the work of Cordero et al. [10]. However, the techniques used in [10] require higher-order Fréchet differentiability of the involved operator, and the analysis was conducted in Euclidean spaces. For example, consider the function defined by

We can observe that is a simple solution of and is unbounded on . Hence, the function is not differentiable more than three times on . Thus, the analysis given in [8,9,10] does not guarantee the convergence of method (3) to the solution of . To reduce the need for higher-order derivatives in convergence analyses, several authors have obtained similar results in [11,12,13]. The main contributions of our work are as follows:

- (i) We obtain the convergence order of method (3) without using Taylor series expansions.
- (ii) We impose conditions only on the operator and its Fréchet derivatives up to the third order.
- (iii) We provide a radius of the convergence ball, which was not given in earlier studies.
- (iv) The analysis in [10] was conducted in Euclidean spaces, whereas our study is carried out in the more general setting of a commutative Banach algebra.

This paper is organised as follows. In Section 2, we recall some concepts used in our study. The convergence analysis of method (3) is presented in Section 3. Several numerical examples are given in Section 4 to validate our results, and the basins of attraction of the method are presented in Section 5. Finally, Section 6 draws conclusions from the theoretical and numerical results.

2. Preliminary Concepts

Let , and , where and .

Lemma 1

(cf. [7], (Theorem 1.1.12)). Let T be a bounded linear operator defined on X with $\|T\| < 1$. Then, the operator $I - T$ is invertible, and

$$\|(I - T)^{-1}\| \le \frac{1}{1 - \|T\|}.$$

Definition 1

(See [14]). The efficiency index for comparing iterative methods is defined by $E = p^{1/\theta}$, where p is the order of convergence and θ is the total number of function and derivative evaluations per iteration.
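As a small illustration of Definition 1, the helper below evaluates $E = p^{1/\theta}$. It is a minimal sketch; the evaluation count used for Newton's method (one evaluation of the operator and one of its derivative per iteration) is the usual scalar convention, and counting conventions for systems may differ.

```python
def efficiency_index(p: float, theta: int) -> float:
    """Ostrowski efficiency index E = p**(1/theta).

    p     -- order of convergence of the method
    theta -- number of function and derivative evaluations per iteration
    """
    if p <= 0 or theta <= 0:
        raise ValueError("p and theta must be positive")
    return p ** (1.0 / theta)


# Newton's method: order 2, one function and one derivative evaluation per step.
newton_E = efficiency_index(2, 2)
print(f"Newton: E = {newton_E:.4f}")  # Newton: E = 1.4142
```

A method with a higher order but more evaluations per step can therefore have a lower index, which is why order alone is not used to rank methods.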

Definition 2

(See [15,16]). Let and for Then, the sequence is said to converge to ξ with an R order of at least p if the following conditions hold:

- (i)

- ,

- (ii)

- There exist , , and such that

Definition 3

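(See [16]). Let ξ be a solution of Equation (1), and suppose that $x_{n-2}$, $x_{n-1}$, $x_n$, and $x_{n+1}$ are four consecutive iterates near ξ. Then, the approximated computational order of convergence (ACOC) is defined by

$$\rho \approx \frac{\ln\left(\|x_{n+1} - x_n\| / \|x_n - x_{n-1}\|\right)}{\ln\left(\|x_n - x_{n-1}\| / \|x_{n-1} - x_{n-2}\|\right)}.$$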

Remark 1.

The ACOC is not a reliable measure when the approximations oscillate or converge slowly in the initial stage [17].
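The ACOC can be computed directly from stored iterates. The sketch below assumes the standard four-iterate formula of [16] (differences of consecutive iterates, so ξ is not needed) and uses the max norm; the test problem $x^2 - 2 = 0$ solved by Newton's method is a hypothetical example, not one of the paper's.

```python
import math
from typing import Sequence

import numpy as np


def acoc(iterates: Sequence) -> float:
    """ACOC from the last four iterates x_{n-2}, x_{n-1}, x_n, x_{n+1}.

    rho = ln(d3 / d2) / ln(d2 / d1), where d1, d2, d3 are the norms of the
    differences between consecutive iterates.
    """
    if len(iterates) < 4:
        raise ValueError("need at least four iterates")
    x = [np.atleast_1d(np.asarray(v, dtype=float)) for v in iterates[-4:]]
    # Max norm of consecutive differences; any norm could be used instead.
    d1, d2, d3 = (np.linalg.norm(x[k + 1] - x[k], ord=np.inf) for k in range(3))
    return math.log(d3 / d2) / math.log(d2 / d1)


# Newton iterates for the scalar equation x**2 - 2 = 0, starting at x0 = 1.
xs = [1.0]
for _ in range(4):
    x = xs[-1]
    xs.append(x - (x * x - 2.0) / (2.0 * x))

print(f"ACOC = {acoc(xs):.4f}")  # close to 2, the order of Newton's method
```

Stopping one or two iterations before the differences reach machine precision keeps the logarithms meaningful, which is consistent with the caution in Remark 1.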

We use the following conditions on the operator in our study:

- (C1)

- (C2)

- ;

- (C3)

- ;

- (C4)

- ;

- (C5)

- ;

- (C6)

- .

By condition , we have

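The next tool, the Mean Value Theorem (MVT) in integral form [18], is used frequently in our study; for the operator F of Equation (1) and x, y in its domain, it reads

$$F(x) - F(y) = \int_0^1 F'(y + t(x - y))(x - y)\,dt.$$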
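The integral form of the MVT can be checked numerically. In this sketch, the map $F: \mathbb{R}^2 \to \mathbb{R}^2$ and the points x, y are hypothetical choices for illustration, and the integral over [0, 1] is approximated by Gauss–Legendre quadrature.

```python
import numpy as np


def F(v: np.ndarray) -> np.ndarray:
    """Hypothetical smooth map F: R^2 -> R^2, used only for illustration."""
    x, y = v
    return np.array([x**3 + np.sin(y), x * y**2 - 1.0])


def dF(v: np.ndarray) -> np.ndarray:
    """Jacobian (Frechet derivative) of the hypothetical F."""
    x, y = v
    return np.array([[3.0 * x**2, np.cos(y)],
                     [y**2, 2.0 * x * y]])


def mvt_integral(x: np.ndarray, y: np.ndarray, n_nodes: int = 10) -> np.ndarray:
    """Approximate int_0^1 F'(y + t(x - y)) (x - y) dt by Gauss-Legendre quadrature."""
    nodes, weights = np.polynomial.legendre.leggauss(n_nodes)
    t = 0.5 * (nodes + 1.0)   # map nodes from [-1, 1] to [0, 1]
    w = 0.5 * weights         # rescale weights to the unit interval
    d = x - y
    return sum(wk * dF(y + tk * d) @ d for tk, wk in zip(t, w))


x = np.array([0.7, -0.3])
y = np.array([0.2, 0.5])
lhs = F(x) - F(y)
rhs = mvt_integral(x, y)
print(np.max(np.abs(lhs - rhs)))  # only quadrature and rounding error remain
```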

3. Main Results

First, we introduce some functions and parameters used in this analysis. Define the following functions by

and . Clearly, and g are continuous on . Each of the terms in is non-negative and non-decreasing on ; hence, is non-decreasing on . Therefore, g is also non-decreasing () with and . By the Intermediate Value Theorem, there exists a smallest such that . Observe that .
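Because g is continuous and non-decreasing, the smallest solution guaranteed by the Intermediate Value Theorem can be located by bisection. The paper's functions are not reproduced here, so this sketch assumes a hypothetical $g(t) = t/(1 - t)$ and a defining equation of the form $g(t) = 1$, chosen only to show the procedure.

```python
from typing import Callable


def smallest_root(g: Callable[[float], float], level: float, a: float, b: float,
                  tol: float = 1e-12) -> float:
    """Smallest t in [a, b] with g(t) = level, for continuous non-decreasing g.

    Requires g(a) < level <= g(b). Since g is non-decreasing, the set
    {t : g(t) >= level} is an interval [r, b]; bisection keeps g(lo) < level
    and g(hi) >= level, so both ends converge to its left endpoint r, which
    by continuity is the smallest solution of g(t) = level.
    """
    if not (g(a) < level <= g(b)):
        raise ValueError("need g(a) < level <= g(b)")
    lo, hi = a, b
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if g(mid) < level:
            lo = mid
        else:
            hi = mid
    return hi


# Hypothetical g(t) = t / (1 - t): continuous, non-decreasing, g(0) = 0.
r = smallest_root(lambda t: t / (1.0 - t), level=1.0, a=0.0, b=0.9)
print(round(r, 10))  # 0.5
```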

Theorem 1.

Assume that the conditions hold. Then, the sequence generated by (3) with an initial value is well defined, and , . Moreover, the following holds:

Proof.

Using the principle of mathematical induction, we prove that , . First, we verify the result for . Using (3) and (5) with and , we write as follows:

Using (6) with in the first term, and in the second and third terms above, we obtain

Adding and subtracting in the integrands above (first, third, and fourth terms), we obtain

Using (7) (by taking suitable x and y) in the first and fourth terms in the above equation, we obtain

where

and

For convenience, we denote , and .

By adding and subtracting in the integrand of the last term of Equation (10), we have

Again, by adding and subtracting in the integrand in the third, sixth, and seventh terms above, we obtain

where

and

By adding in the third term and subtracting the same in the sixth term, and combining the fourth and fifth terms in (11), we obtain

By using the relation in the third term, and by adding and subtracting the term in the above equation, we obtain

By applying (5) (with suitable x and y) in the third and sixth terms, and using the relation , , we obtain

By using (6) (with suitable x and y) in the third and sixth terms above, we obtain

where and . By adding and subtracting in the integrands of the third and sixth terms above, we obtain

where

and

By adding and subtracting , we obtain

where . By the commutativity of X, we have

Again, by adding and subtracting , we obtain

By using the identity , we obtain

where

and

Before moving further, we find some useful estimates. By , we have

By Lemma 1 (with , and ), we obtain

Since , and by using (5), we have

and

By using , (4) and (12), we obtain

and

By (14), we obtain

Since in (3) is of order p (without loss of generality, we assume that and in Definition 2), we have

We shall find an upper bound of for each . Using and (12), we obtain

By using , (12), (13) and (16), we find estimates for and :

and

By utilising and (12), we obtain

By using and (12), we have

Similarly, by using , (12), (13) and (16), we obtain

and

By , (12), (14) and (16), we obtain

From and (12), we obtain

By using , (12), (13), (14), and (16), we have

Similarly, by , (12), and (16), we obtain

Using , (12), and (16), we obtain

From , (12), and (16), we get

Applying , (12), (14), and (16), we obtain

and

Similarly, using , (12), (15), and (16), we obtain

and

By using (17) in all the above upper bounds for , and by the triangle inequality, we obtain

Since , we have , and hence . Thus, the iteration . The function is non-decreasing on and , so we obtain

We assume that the result is true for any i.e., . If we replace , and by , and , respectively, in the above computations, we obtain and

Therefore, by the principle of induction, we have and

This proves (9). Hence, by Definition 2, method (3) has order of convergence p + 3. □

Remark 2.

Some special cases of in (3) are given below.

The next result concerns the uniqueness of the solution of (1).

Proposition 1.

Assume that and hold. Suppose that there exists such that

Then, Equation (1) has a unique solution .

Proof.

The proof is similar to that of Proposition 2.2 in [11]. □

4. Numerical Examples

This section considers five examples. In Example 1, we discuss a Hammerstein-type nonlinear integral equation, which has many applications in science and technology (for details, see [1,2,20,21]). In Example 2, we solve a system of nonlinear equations on ; in Example 3, we consider a scalar equation for which the analysis in [8,9,10] does not guarantee convergence; and in Example 4, we consider a system of nonlinear equations. Finally, in Example 5, we solve the Van der Pol differential equation. This equation has a wide range of applications in seismology and in the physical and biological sciences, for example, in modelling the geological fault between two plates, the electric current in a vacuum tube, and the action potential of a neuron (see [22,23,24]).

Example 1.

Let and , where .

Consider the integral equation

We are interested in finding a function which satisfies , where

The Fréchet derivatives of up to the third order are given by

and

To verify our conditions given in Section 2, we consider . Observe that , the identity map, so we have

and

All conditions are verified, and and , and . Thus, the results in Theorem 1 apply to this particular nonlinear integral equation.

Next, we convert the integral equation into a system of nonlinear equations using the Gauss–Legendre quadrature formula given in [25]. We solve the resulting system using method (3) with as in (18)–(20) and the initial point . The results of each iteration are shown in Table 1, which shows that method (3), with each of the choices (18)–(20), converges to within two iterations. Hence, the results of Theorem 1 are validated for this example.
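The quadrature-to-system step can be sketched as follows. The integral equation of Example 1 is not reproduced here, so the equation $x(s) = 1 + \lambda \int_0^1 G(s,t)\,x(t)^2\,dt$ with the Green's kernel $G(s,t) = s(1-t)$ for $s \le t$ and $t(1-s)$ otherwise, λ = 0.5, and n = 8 nodes are all hypothetical. Newton's method (2) is used as a stand-in for method (3), whose choices (18)–(20) are not implemented.

```python
import numpy as np


def solve_hammerstein(n: int = 8, lam: float = 0.5, tol: float = 1e-12,
                      max_iter: int = 50):
    """Nystrom (Gauss-Legendre) discretization plus Newton's method for the
    HYPOTHETICAL equation x(s) = 1 + lam * int_0^1 G(s, t) x(t)^2 dt."""
    z, w = np.polynomial.legendre.leggauss(n)
    t = 0.5 * (z + 1.0)          # Gauss-Legendre nodes mapped to [0, 1]
    w = 0.5 * w                  # matching weights on [0, 1]
    S, T = np.meshgrid(t, t, indexing="ij")
    G = np.where(S <= T, S * (1.0 - T), T * (1.0 - S))
    A = lam * G * w              # A[i, j] = lam * w_j * G(t_i, t_j)

    x = np.ones(n)               # initial guess x_0(s) = 1
    for k in range(1, max_iter + 1):
        residual = x - A @ x**2 - 1.0
        jacobian = np.eye(n) - A * (2.0 * x)   # column j scaled by 2 x_j
        step = np.linalg.solve(jacobian, residual)
        x = x - step
        if np.max(np.abs(step)) < tol:
            return t, x, k
    raise RuntimeError("Newton's method did not converge")


nodes, x_approx, iterations = solve_hammerstein()
print(iterations, x_approx.round(6))
```

Each unknown $x_i$ approximates $x(t_i)$ at a quadrature node, so the discrete system has one equation per node; this is the structure to which an iterative method such as (3) is then applied.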

Table 1.

Approximated solutions using method (3) with different choices of for Example 1.

Example 2.

Let with max norm and be defined by

The Fréchet derivatives up to the third order are given as follows:

and

All the conditions given in Section 2 are verified with , and the constants are , , and . Hence, Theorem 1 applies to . Taking the initial value , method (3), with each of the choices (18)–(20), converges to within three iterations. The approximated solutions are shown in Table 2.

Table 2.

Approximated solutions using method (3) with different choices of for Example 2.

Example 3.

Consider the scalar function defined by

Note that and the fourth derivative does not exist on . Therefore, the analysis in [8,9,10] cannot guarantee the convergence of the iterative method to the simple solution . Here, the function q satisfies the assumptions – with , , and . From Table 3, one can compare the convergence order, efficiency index, and the number of iterations required to converge to the solution from the initial point for different choices of in (3) and the methods mentioned in the table.

Table 3.

Comparison table for Example 3.

Example 4.

Consider the following system of equations studied in [28]

We take the initial value . In this case, all coordinates of the approximated solutions are equal, i.e., , where . The approximated solutions of system (21), obtained by method (3) with as in (18)–(20) with an accuracy of , are given in Table 4.

Table 4.

Comparison table for Example 4.

Table 4 also allows a comparison of the number of iterations required for convergence, the corresponding errors, and the ACOC.

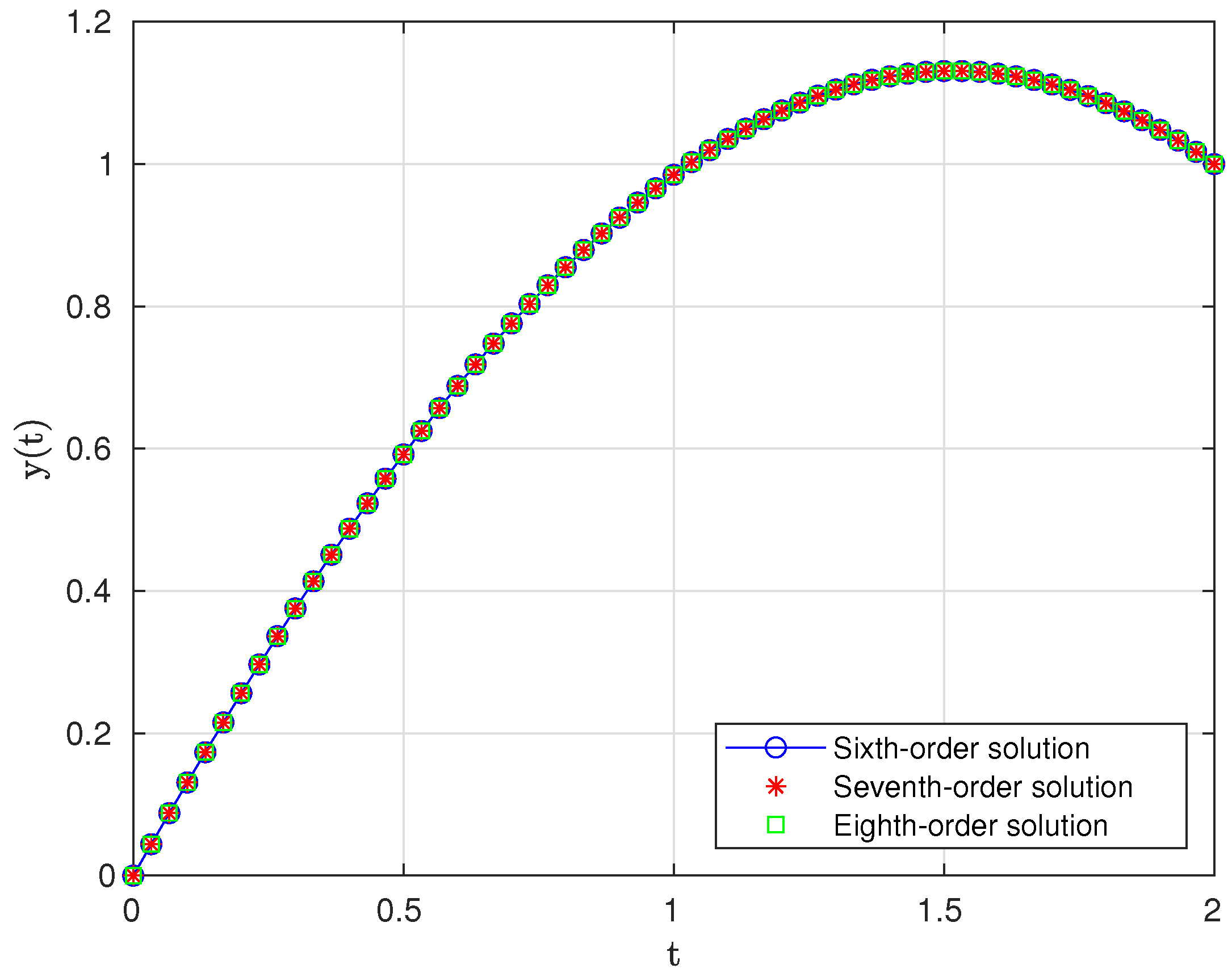

Example 5.

Consider the Van der Pol equation [29], which is described as follows:

We consider the nodes satisfying , where , and write , . Discretizing (22) with divided differences yields the following system of nonlinear equations:

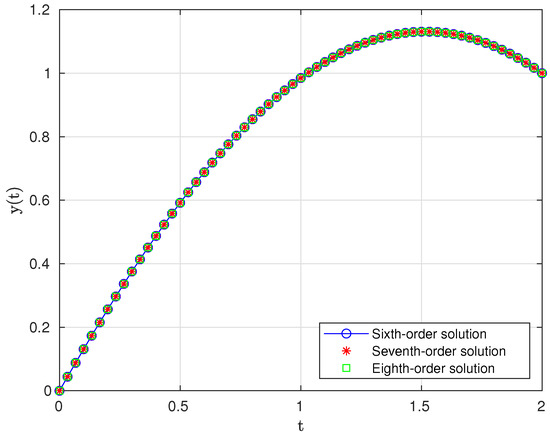

Let us take the value of , initial approximation , and . For this case, we obtain a system of nonlinear equations. The graph of the approximated solution of (22) corresponding to the choices of as in (18)–(20) is plotted in Figure 1.
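A discretization of this kind can be sketched as below for the standard Van der Pol equation $y'' - \mu(y^2 - 1)y' + y = 0$. The parameter values of Example 5 are not reproduced here, so μ = 0.5, the interval [0, 2], the boundary values y(0) = 0 and y(2) = 1, and 49 interior nodes are hypothetical choices. Central differences are one common divided-difference scheme, and Newton's method (2) stands in for method (3).

```python
import numpy as np


def van_der_pol_bvp(mu: float = 0.5, a: float = 0.0, b: float = 2.0,
                    ya: float = 0.0, yb: float = 1.0, n: int = 49,
                    tol: float = 1e-10, max_iter: int = 50):
    """Central differences for y'' - mu (y^2 - 1) y' + y = 0 on [a, b],
    y(a) = ya, y(b) = yb (HYPOTHETICAL data); n interior nodes; Newton solve."""
    h = (b - a) / (n + 1)
    t = np.linspace(a, b, n + 2)
    y = ya + (yb - ya) * (t - a) / (b - a)     # linear initial guess

    def residual(y):
        ym, yk, yp = y[:-2], y[1:-1], y[2:]
        return ((yp - 2 * yk + ym) / h**2
                - mu * (yk**2 - 1) * (yp - ym) / (2 * h) + yk)

    for k in range(1, max_iter + 1):
        ym, yk, yp = y[:-2], y[1:-1], y[2:]
        # Tridiagonal Jacobian with respect to the interior unknowns y_1..y_n.
        lower = 1 / h**2 + mu * (yk**2 - 1) / (2 * h)
        diag = -2 / h**2 - mu * yk * (yp - ym) / h + 1
        upper = 1 / h**2 - mu * (yk**2 - 1) / (2 * h)
        J = np.diag(diag) + np.diag(lower[1:], -1) + np.diag(upper[:-1], 1)
        step = np.linalg.solve(J, residual(y))
        y[1:-1] -= step
        if np.max(np.abs(step)) < tol:
            return t, y, k
    raise RuntimeError("Newton's method did not converge")


t, y, iterations = van_der_pol_bvp()
print(iterations, y[::10].round(4))
```

The boundary values stay fixed and only the interior unknowns are updated, so each Newton step solves an n × n tridiagonal system.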

Figure 1.

Graph of the solutions of (22).

5. Basin of Attractions

This section discusses the basin of attraction of a solution of the nonlinear system of Equation (1), considering with the usual norm. Let be an iterative function for (1). A point is called an attracting fixed point of F if it satisfies and . We denote by $F^n$ the n-fold composition of F. For an iterative method, suppose that the sequence converges to the solution ; then, any such point is often referred to as an initial point or initial guess. The difficulty lies in choosing a suitable initial guess. The set

is known as the basin of attraction of . Such a set is always open but not connected in general. The connected component of containing is called an immediate basin of . The set of correct guesses is precisely the union of all the basins corresponding to solutions of (1). The Fatou set is denoted by

and the complement of , denoted by , is known as the Julia set. Here, is an open set, and is a closed set. For any attracting fixed point of F, we have and the boundary of , . For more details, see [30,31,32,33,34,35].

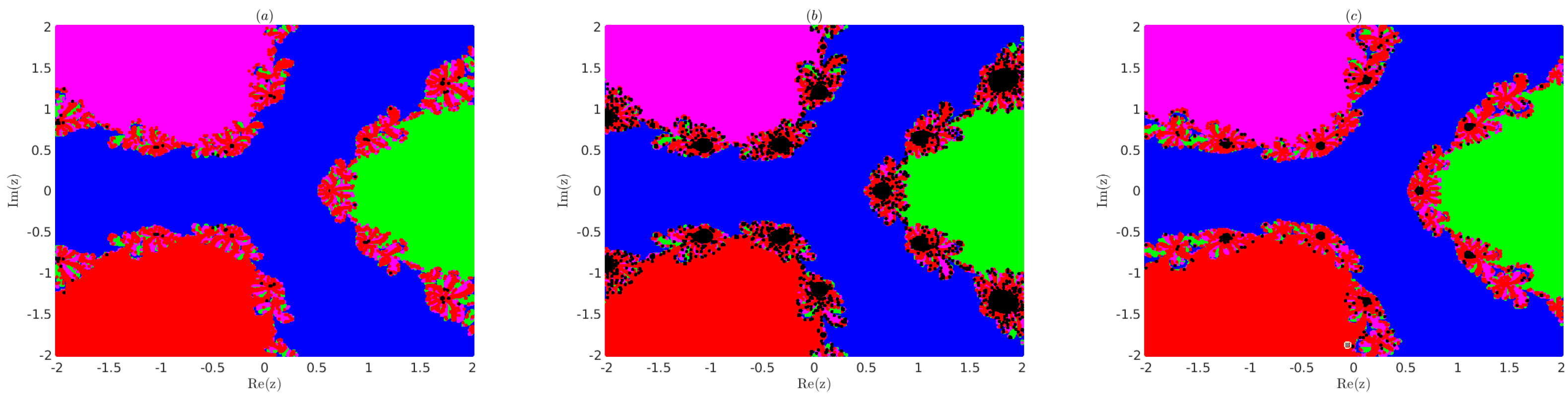

Example 6.

Consider the following two complex-variable polynomials

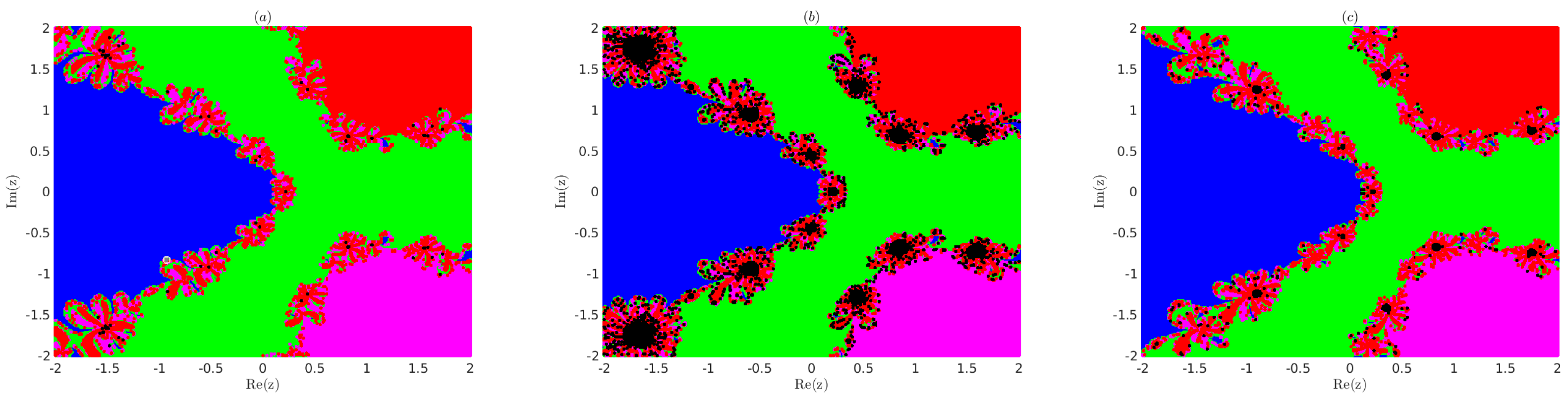

Note that the zeros of P are , , , and , and those of Q are , and . We divide a region R, which contains all the roots of (23), into equally spaced grid points. Next, we apply method (3) with as in (18)–(20), using each grid point as the initial point, and obtain the basins of attraction of the roots of P and Q, shown in Figure 2 for (23) and Figure 3 for (24).

We assign the colours blue, green, magenta, and red to the grid points from which the sequence converges, with an accuracy of , to the roots , , , and , respectively. Grid points from which the sequence diverges are coloured black in Figure 2 and Figure 3. Figures 2 and 3 show that, within R, the convergence region of method (3) with choice (19) is smaller than those with choices (18) and (20).
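The colouring procedure can be sketched as follows. Method (3) with choices (18)–(20) is not implemented here; Newton's method is used as a stand-in, and the polynomial $z^4 - 1$ (roots 1, −1, i, −i), the 400 × 400 grid on $[-2, 2]^2$, the 50-iteration cap, and the tolerance are hypothetical choices.

```python
import numpy as np


def newton_labels(z0, roots, f, df, max_iter=50, tol=1e-6):
    """Index of the root reached from each starting point, or -1 (black)."""
    z = np.asarray(z0, dtype=complex)
    roots = np.asarray(roots, dtype=complex)
    with np.errstate(all="ignore"):          # e.g. z = 0 yields inf/nan -> -1
        for _ in range(max_iter):
            z = z - f(z) / df(z)
        dist = np.abs(z[..., None] - roots)  # distance to every root
        idx = np.argmin(dist, axis=-1)
        closest = np.take_along_axis(dist, idx[..., None], axis=-1)[..., 0]
        return np.where(closest < tol, idx, -1)


# Hypothetical test polynomial z^4 - 1 and its derivative.
roots = [1, -1, 1j, -1j]
f = lambda z: z**4 - 1
df = lambda z: 4 * z**3

# Label a grid of initial points; rows follow the imaginary axis.
axis = np.linspace(-2.0, 2.0, 400)
grid = axis[None, :] + 1j * axis[:, None]
labels = newton_labels(grid, roots, f, df)
```

Mapping labels 0–3 to four colours and −1 to black gives a basin picture of the kind described above; the boundaries between colours approximate the Julia set of the iteration function.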

6. Conclusions

In this work, we obtained the convergence order p + 3 of a two-step extension of an iterative method of order p, under weaker conditions than those used in earlier studies. Numerical examples were discussed, and the performance of the considered methods was compared with some existing methods. From Equation (9), one can obtain a bound for the asymptotic error constant as . Our work is limited to operators that satisfy conditions (C1)–(C6). Therefore, there is scope to weaken these conditions further to enhance the applicability of the considered methods.

Author Contributions

Conceptualization, validation, formal analysis, investigation, and visualization by I.B., M.M., S.G., K.S., I.K.A. and S.R. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The original contributions presented in the study are included in the article; further inquiries can be directed to the corresponding author.

Acknowledgments

Santhosh George thanks Science and Engineering Research Board, Govt. of India for support under Project Grant No. CRG/2021/004776. Indra Bate and Muniyasamy M would like to thank the National Institute of Technology Karnataka, India, for their support.

Conflicts of Interest

The authors declare no conflicts of interest.

References

1. Sakawa, Y. Optimal control of a certain type of linear distributed-parameter systems. IEEE Trans. Automat. Control 1966, 11, 35–41.

2. Hu, S.; Khavanin, M.; Zhuang, W. Integral equations arising in the kinetic theory of gases. Appl. Anal. 1989, 34, 261–266.

3. Cercignani, C. Nonlinear problems in the kinetic theory of gases. In Trends in Applications of Mathematics to Mechanics (Wassenaar, 1987); Springer: Berlin/Heidelberg, Germany, 1988; pp. 351–360.

4. Lin, Y.; Bao, L.; Jia, X. Convergence analysis of a variant of the Newton method for solving nonlinear equations. Comput. Math. Appl. 2010, 59, 2121–2127.

5. Grosan, C.; Abraham, A. A New Approach for Solving Nonlinear Equations Systems. IEEE Trans. Syst. Man Cybern.-Part A Syst. Humans 2008, 38, 698–714.

6. Moré, J.J. A collection of nonlinear model problems. In Computational Solution of Nonlinear Systems of Equations. Lectures in Applied Mathematics; American Mathematical Society: Providence, RI, USA, 1990; Volume 26, pp. 723–762.

7. Argyros, I.K. Convergence and Applications of Newton-Type Iterations; Springer Science & Business Media: Berlin/Heidelberg, Germany, 2008.

8. Behl, R.; Arora, H. CMMSE: A novel scheme having seventh-order convergence for nonlinear systems. J. Comput. Appl. Math. 2022, 404, 113301.

9. Lotfi, T.; Bakhtiari, P.; Cordero, A.; Mahdiani, K.; Torregrosa, J.R. Some new efficient multipoint iterative methods for solving nonlinear systems of equations. Int. J. Comput. Math. 2015, 92, 1921–1934.

10. Cordero, A.; Leonardo-Sepúlveda, M.A.; Torregrosa, J.R.; Vassileva, M.P. Increasing in three units the order of convergence of iterative methods for solving nonlinear systems. Math. Comput. Simul. 2024, 223, 509–522.

11. George, S.; Bate, I.; Muniyasamy, M.; Chandhini, G.; Senapati, K. Enhancing the applicability of Chebyshev-like method. J. Complex. 2024, 83, 101854.

12. George, S.; Kunnarath, A.; Sadananda, R.; Jidesh, P.; Argyros, I.K. On obtaining order of convergence of Jarratt-like method without using Taylor series expansion. Comput. Appl. Math. 2024, 43, 243.

13. Muniyasamy, M.; Chandhini, G.; George, S.; Bate, I.; Senapati, K. On obtaining convergence order of a fourth and sixth order method of Hueso et al. without using Taylor series expansion. J. Comput. Appl. Math. 2024, 452, 116136.

14. Ostrowski, A.M. Solution of Equations and Systems of Equations; Academic Press: New York, NY, USA, 1960.

15. Weerakoon, S.; Fernando, T. A variant of Newton's method with accelerated third-order convergence. Appl. Math. Lett. 2000, 13, 87–93.

16. Cordero, A.; Martínez, E.; Torregrosa, J.R. Iterative methods of order four and five for systems of nonlinear equations. J. Comput. Appl. Math. 2009, 231, 541–551.

17. Petković, M.S.; Neta, B.; Petković, L.D.; Džunić, J. Multipoint methods for solving nonlinear equations: A survey. Appl. Math. Comput. 2014, 226, 635–660.

18. Argyros, I.K. The Theory and Applications of Iteration Methods, 2nd ed.; CRC Press: Boca Raton, FL, USA, 2022.

19. Cordero, A.; Gómez, E.; Torregrosa, J.R. Efficient high-order iterative methods for solving nonlinear systems and their application on heat conduction problems. Complexity 2017, 1, 6457532.

20. Kelley, C.T. Approximation of solutions of some quadratic integral equations in transport theory. J. Integral Equ. 1982, 4, 221–237.

21. Hernández-Verón, M.A.; Martínez, E.; Singh, S. On the Chandrasekhar integral equation. Comput. Math. Methods 2021, 3, e1150.

22. Burridge, R.; Knopoff, L. Model and theoretical seismicity. Bull. Seismol. Soc. Amer. 1967, 57, 341–371.

23. van der Pol, B. The nonlinear theory of electric oscillations. Proc. IRE 1934, 22, 1051–1086.

24. FitzHugh, R. Impulses and Physiological States in Theoretical Models of Nerve Membrane. Biophys. J. 1961, 1, 445–466.

25. Hernández, M. Chebyshev's approximation algorithms and applications. Comput. Math. Appl. 2001, 41, 433–445.

26. George, S.; Sadananda, R.; Padikkal, J.; Argyros, I.K. On the Order of Convergence of the Noor–Waseem Method. Mathematics 2022, 10, 4544.

27. Ham, Y.; Chun, C. A fifth-order iterative method for solving nonlinear equations. Appl. Math. Comput. 2007, 194, 287–290.

28. Grau-Sánchez, M.; Grau, À.; Noguera, M. On the computational efficiency index and some iterative methods for solving systems of nonlinear equations. J. Comput. Appl. Math. 2011, 236, 1259–1266.

29. Behl, R.; Cordero, A.; Motsa, S.S.; Torregrosa, J.R. Stable high-order iterative methods for solving nonlinear models. Appl. Math. Comput. 2017, 303, 70–88.

30. Amat, S.; Busquier, S.; Plaza, S. Review of some iterative root-finding methods from a dynamical point of view. Sci. Ser. A Math. Sci. 2004, 10, 3–35.

31. Varona, J.L. Graphic and numerical comparison between iterative methods. Math. Intell. 2002, 24, 37–46.

32. Campos, B.; Canela, J.; Vindel, P. Dynamics of Newton-like root finding methods. Numer. Algorithms 2023, 93, 1453–1480.

33. Husain, A.; Nanda, M.N.; Chowdary, M.S.; Sajid, M. Fractals: An Eclectic Survey, Part-I. Fractal Fract. 2022, 6, 89.

34. Husain, A.; Nanda, M.N.; Chowdary, M.S.; Sajid, M. Fractals: An Eclectic Survey, Part-II. Fractal Fract. 2022, 6, 379.

35. Laplante, P.A.; Laplante, C. Introduction to Chaos, Fractals and Dynamical Systems; World Scientific: London, UK, 2023.

Disclaimer/Publisher's Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).