MSGS-SLAM: Monocular Semantic Gaussian Splatting SLAM

Abstract

1. Introduction

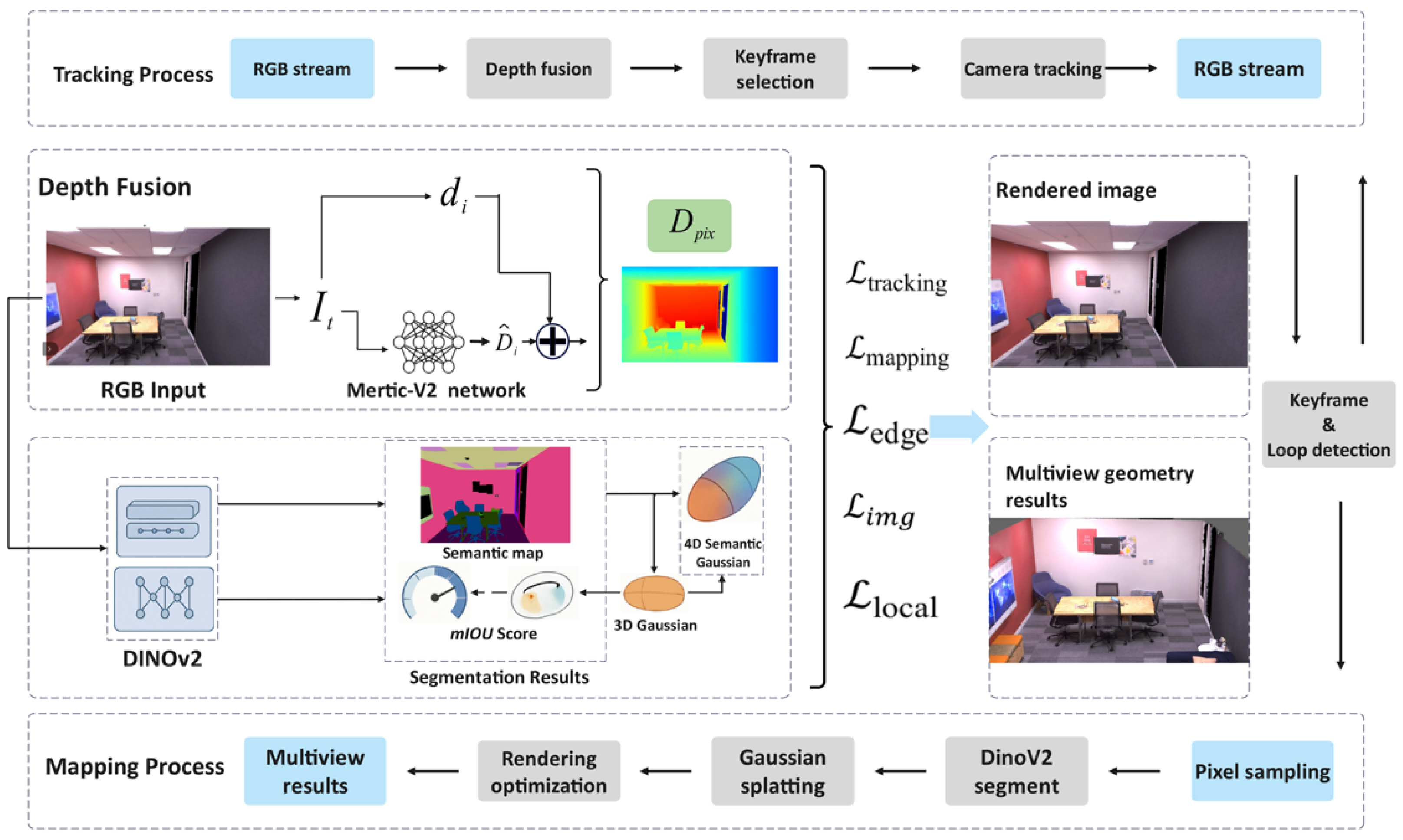

- We propose MSGS-SLAM, a monocular semantic SLAM system based on 3D Gaussian splatting. The system integrates Metric3D-V2 [13] for proxy depth estimation to address scale ambiguity, and uses a four-dimensional semantic Gaussian representation for unified geometric, appearance, and semantic optimization. This approach achieves high-fidelity reconstruction comparable to RGB-D methods while requiring only monocular input.

- We develop innovative technical components including uncertainty-aware proxy depth fusion, semantic-guided keyframe selection, and multi-level loop detection mechanisms. The system employs adaptive optimization strategies that leverage appearance, geometric, and semantic information to ensure robust tracking and global consistency correction.

- Our monocular approach eliminates the requirement for specialized depth sensors (e.g., LiDAR, structured light sensors) that typically cost $200–2000 compared to standard RGB cameras ($10–100), enabling deployment with ubiquitous consumer devices, existing video datasets, and resource-constrained environments. This fundamental hardware simplification expands applicability to historical data analysis, large-scale urban modeling, and scenarios where RGB-D sensors are impractical due to cost, power, or environmental constraints.
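The contributions above mention uncertainty-aware proxy depth fusion, but the formulation itself is not reproduced in this text. The following is only a minimal sketch of one way a per-pixel uncertainty could down-weight unreliable proxy depth when comparing it with rendered depth. The function name `uncertainty_weighted_depth_l1`, the inverse-variance weighting, and the `proxy > 0` validity test are assumptions made for illustration, not the authors' method.

```python
import numpy as np

def uncertainty_weighted_depth_l1(rendered, proxy, sigma, eps=1e-6):
    """Weighted mean absolute depth error (hypothetical helper).

    rendered: depth rendered from the map, shape (H, W)
    proxy:    proxy depth from a monocular depth network, shape (H, W)
    sigma:    assumed per-pixel standard deviation of the proxy depth, shape (H, W)
    Pixels with proxy <= 0 are treated as invalid and ignored (an assumption).
    """
    rendered = np.asarray(rendered, dtype=np.float64)
    proxy = np.asarray(proxy, dtype=np.float64)
    sigma = np.asarray(sigma, dtype=np.float64)

    valid = proxy > 0
    # Inverse-variance weights: confident pixels contribute more.
    weights = np.where(valid, 1.0 / (sigma ** 2 + eps), 0.0)
    total = weights.sum()
    if total == 0:
        return 0.0
    return float((weights * np.abs(rendered - proxy)).sum() / total)
```

With equal `sigma` everywhere this reduces to the plain mean absolute error over valid pixels; a large `sigma` at a pixel makes its residual nearly irrelevant.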

2. Related Work

2.1. NeRF-Based SLAM

2.2. 3D Gaussian Splatting SLAM

2.3. Semantic SLAM

2.4. Depth Estimation for Monocular SLAM

3. Method

3.1. Proxy Depth Fusion

3.2. Four-Dimensional Semantic Gaussian Representation and Multi-Channel Rendering

3.3. Semantic-Guided System Tracking

3.4. Semantic-Guided System Mapping

3.5. Semantic Loop Detection and System Optimization

4. Experiments

4.1. Experimental Setup

- Datasets. We conduct systematic evaluations on both synthetic and real-world scene datasets to validate the effectiveness and generalization capability of our method. To ensure a fair comparison with existing SLAM systems, we select the Replica dataset [36] and ScanNet dataset [37] as benchmark testing platforms. Specifically, the camera trajectories and semantic maps in the Replica dataset are derived from precise simulation environments, while the reference camera poses in the ScanNet dataset are reconstructed using the BundleFusion algorithm. All two-dimensional semantic labels used in the experiments are directly provided by the original datasets, ensuring consistency and reliability of the evaluation benchmarks.

- Metrics. We evaluate system performance along three dimensions. For scene reconstruction quality, we report PSNR [38], Depth L1, SSIM [39], and LPIPS [40]. For camera pose estimation accuracy, we report the Absolute Trajectory Error (ATE), as both mean and RMSE. For semantic segmentation, we report mean Intersection over Union (mIoU) [41].

- Baselines. We compare the tracking and mapping performance of our system with current state-of-the-art methods, including SplaTAM, SGS-SLAM, MonoGS, and SNI-SLAM. For semantic segmentation accuracy evaluation, we select SGS-SLAM and SNI-SLAM, which represent the cutting-edge level in the field of three-dimensional Gaussian semantic scene understanding, as benchmark standards.

- Implementation Details. In this section, we detail the experimental setup and key hyperparameter configurations. To address the complexity of hyperparameter tuning in practical applications, we provide principled guidelines for parameter selection based on extensive empirical validation across diverse scene types. All experiments are conducted on a workstation equipped with a 3.80 GHz Intel i7-10700K CPU and NVIDIA RTX 3090 GPU.

- Hyperparameter Selection Methodology. Our hyperparameter values were determined through systematic grid search validation on a held-out subset of Replica scenes (Room0, Office0) followed by cross-validation on ScanNet scenes. We evaluated 125 different parameter combinations using a multi-objective optimization approach that balances reconstruction quality (PSNR), tracking accuracy (ATE), and semantic performance (mIoU). The reported values represent the Pareto-optimal configuration that achieves the best trade-off across all metrics.

- Parameter Sensitivity Analysis. Our sensitivity analysis shows that the method is robust to ±20% variations in most parameters; the tracking loss weights are the most critical for convergence. The regularization parameter in Equation (20) was set to 0.2 through SSIM optimization, and the temporal decay in Equation (13) was set to 0.1 based on keyframe retention analysis. Although these values are not guaranteed to be globally optimal because of computational constraints, they are practical choices validated across multiple scene types and lighting conditions.
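The hyperparameter selection is described only at a high level. As a rough illustration of how non-dominated configurations can be filtered from grid-search results on (PSNR, ATE, mIoU), consider the sketch below. The helpers `dominates` and `pareto_front` and the candidate scores are hypothetical; they are not the 125 configurations or any values from the experiments.

```python
def dominates(a, b):
    """True if a is no worse than b on every metric and strictly better on one.

    Each configuration is a tuple (psnr, ate, miou):
    higher PSNR and mIoU are better, lower ATE is better.
    """
    no_worse = a[0] >= b[0] and a[1] <= b[1] and a[2] >= b[2]
    better = a[0] > b[0] or a[1] < b[1] or a[2] > b[2]
    return no_worse and better

def pareto_front(configs):
    """Keep only the configurations that no other configuration dominates."""
    return [c for c in configs if not any(dominates(o, c) for o in configs)]

# Made-up scores for four candidate configurations.
candidates = [
    (19.0, 9.0, 68.0),
    (19.5, 8.0, 69.0),  # dominates the first and the last candidate
    (18.0, 7.5, 67.0),  # lowest ATE, so nothing dominates it
    (19.5, 8.5, 69.0),
]
front = pareto_front(candidates)
print(front)  # [(19.5, 8.0, 69.0), (18.0, 7.5, 67.0)]
```

A Pareto front generally contains more than one configuration, so picking a single setting from it needs an additional rule (for example a weighted score), which the text does not specify.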

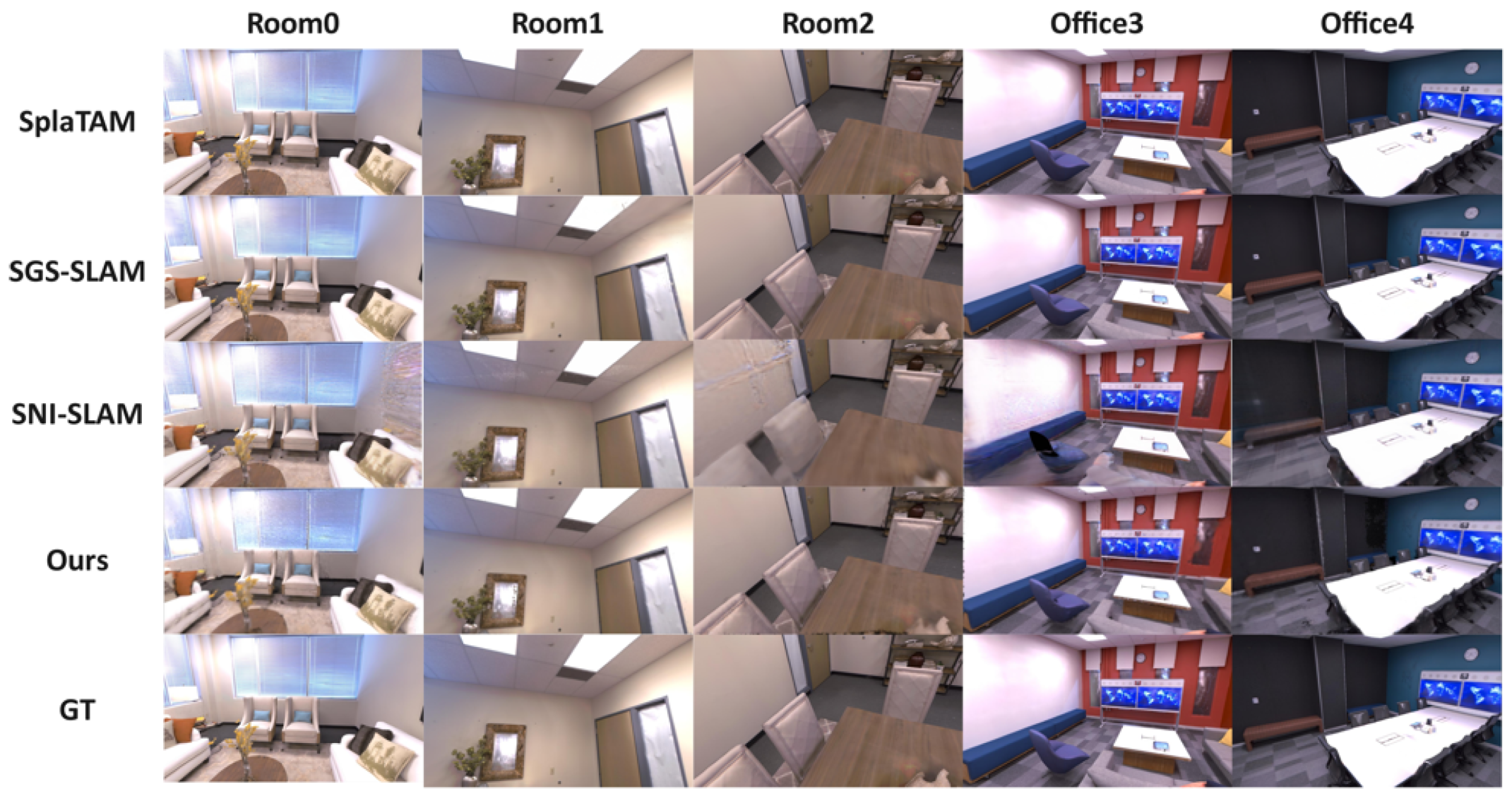

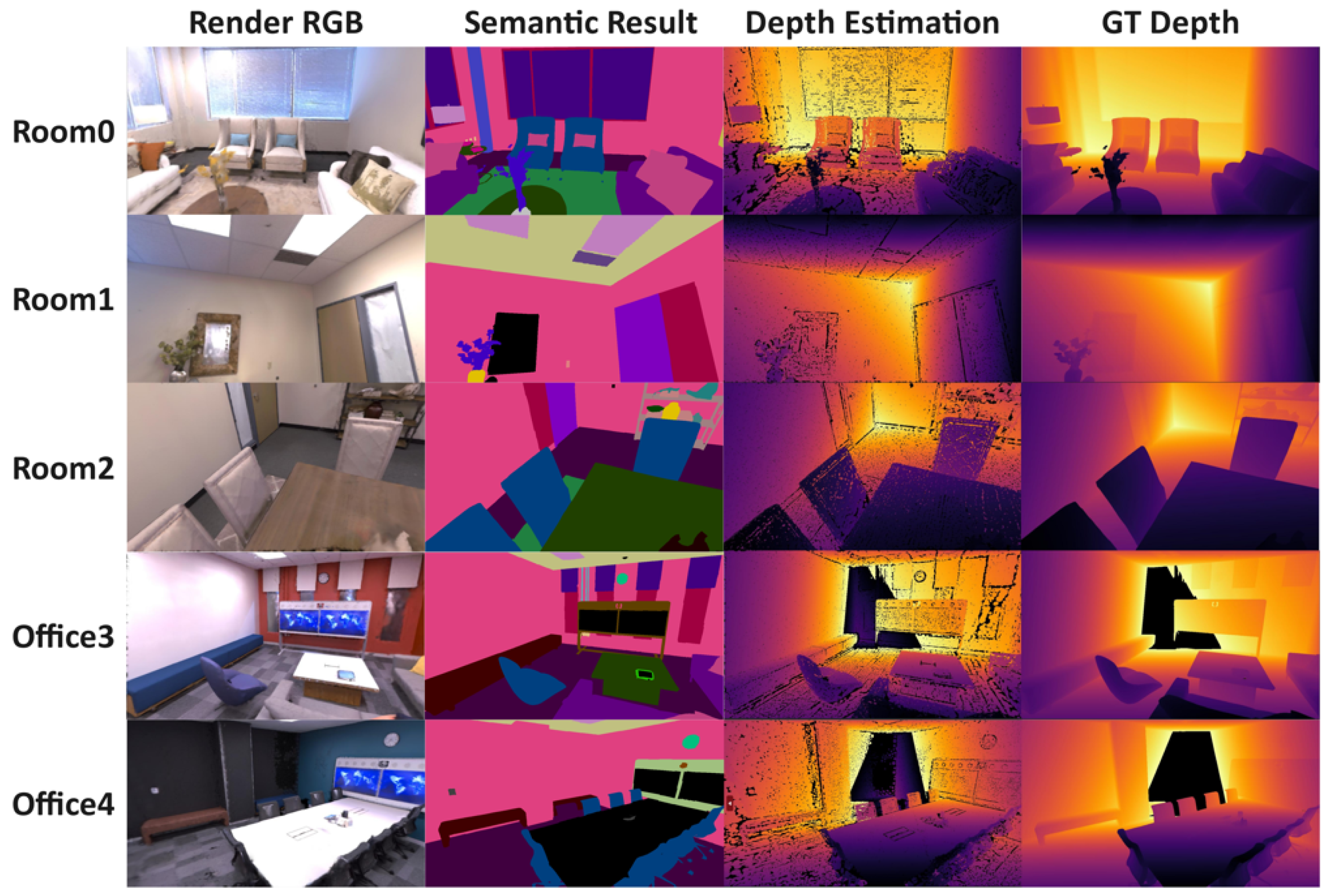

4.2. Evaluation of Mapping, Tracking, and Semantic Segmentation on the Replica Dataset

- Rendering Quality Analysis. As shown in Table 1, MSGS-SLAM achieves the best average score on all four rendering metrics. It attains the highest average PSNR of 34.48 dB, surpassing SGS-SLAM by 0.36 dB, with the largest per-scene gain in Office3 (31.60 vs. 31.29 dB). The average SSIM of 0.957 and the lowest average LPIPS of 0.108 indicate excellent structural preservation and perceptual quality. Notably, our average Depth L1 error of 0.345 is far below that of the monocular competitor MonoGS (27.23), validating the effectiveness of Metric3D-V2 proxy depth integration.

- Tracking Accuracy and Efficiency. Table 2 shows that our method achieves the best trajectory accuracy, with an ATE RMSE of 0.408 cm and an ATE Mean of 0.319 cm, significantly outperforming monocular methods such as MonoGS (12.823 cm mean error). Despite operating on monocular input, our approach maintains competitive performance with RGB-D methods while achieving real-time capability (2.05 SLAM FPS). The superior tracking performance stems from our semantic-guided keyframe selection and uncertainty-aware proxy depth optimization.

- Semantic Segmentation Performance. Our semantic evaluation in Table 3 shows an average mIoU of 92.68%, surpassing SGS-SLAM by 0.80 percentage points and SNI-SLAM by 4.34 percentage points. The consistent performance across scenes, particularly 93.51% in Office0, validates our four-dimensional semantic Gaussian representation. Unlike RGB-D systems limited by depth sensor constraints, our proxy depth approach maintains robust semantic understanding across varying conditions.
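For readers who want to reproduce numbers of the kind reported in Tables 1 and 3, the sketch below shows the standard definitions of PSNR and mIoU. It assumes images scaled to [0, 1] and integer label maps; the function names are illustrative, and details such as how classes absent from a scene are handled are assumptions, since the evaluation code is not part of this text.

```python
import numpy as np

def psnr(rendered, reference, max_val=1.0):
    """Peak signal-to-noise ratio in dB between two images of equal shape."""
    rendered = np.asarray(rendered, dtype=np.float64)
    reference = np.asarray(reference, dtype=np.float64)
    mse = np.mean((rendered - reference) ** 2)
    if mse == 0:
        return float("inf")
    return float(10.0 * np.log10(max_val ** 2 / mse))

def mean_iou(pred, target, num_classes):
    """Mean Intersection over Union over the classes present in pred or target."""
    pred = np.asarray(pred).ravel()
    target = np.asarray(target).ravel()
    ious = []
    for c in range(num_classes):
        p, t = pred == c, target == c
        union = np.logical_or(p, t).sum()
        if union == 0:
            continue  # class absent from both maps: skipped (an assumption)
        ious.append(np.logical_and(p, t).sum() / union)
    return float(np.mean(ious)) if ious else float("nan")
```

For example, a constant error of 0.1 on a [0, 1] image gives an MSE of 0.01 and therefore a PSNR of 20 dB.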

4.3. Evaluation of Mapping, Tracking, and Semantic Segmentation on the ScanNet Dataset

- Rendering Quality Analysis. Table 4 demonstrates MSGS-SLAM’s superior rendering quality in natural scenes, achieving 19.48 dB PSNR and 0.738 SSIM while maintaining competitive depth accuracy (6.25 L1 error) comparable to RGB-D methods despite using proxy depth estimation.

- Tracking Accuracy and Efficiency. Our method achieves the best trajectory accuracy (8.05 cm mean ATE) in Table 5, demonstrating effective scale ambiguity resolution and robust performance under real-world visual complexity while maintaining practical real-time operation (2.08 SLAM FPS).

- Semantic Segmentation Performance. Table 6 shows our 69.15% mIoU outperforms existing semantic SLAM methods, validating our four-dimensional Gaussian representation’s effectiveness in maintaining scene understanding despite the additional challenges of natural lighting and surface variations.
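The ATE Mean and ATE RMSE values in the tracking tables are computed from per-frame position errors after aligning the estimated trajectory to the reference. The sketch below uses a rigid (rotation plus translation) least-squares alignment via the Kabsch method. Whether the authors align with a rigid or a similarity transform is not stated in this text, so the choice here is an assumption, as are the function names.

```python
import numpy as np

def align_rigid(est, gt):
    """Least-squares R, t such that R @ est_i + t approximates gt_i (Kabsch)."""
    est_c, gt_c = est.mean(axis=0), gt.mean(axis=0)
    H = (est - est_c).T @ (gt - gt_c)            # 3x3 cross-covariance
    U, _, Vt = np.linalg.svd(H)
    # Flip the last axis if needed so that R is a proper rotation (det = +1).
    d = 1.0 if np.linalg.det(Vt.T @ U.T) >= 0 else -1.0
    R = Vt.T @ np.diag([1.0, 1.0, d]) @ U.T
    t = gt_c - R @ est_c
    return R, t

def ate(est, gt):
    """Return (mean, RMSE) of position errors after rigid alignment.

    est, gt: arrays of shape (N, 3) with time-synchronised camera positions.
    The result is in the same unit as the inputs.
    """
    est = np.asarray(est, dtype=np.float64)
    gt = np.asarray(gt, dtype=np.float64)
    R, t = align_rigid(est, gt)
    aligned = est @ R.T + t
    err = np.linalg.norm(aligned - gt, axis=1)
    return float(err.mean()), float(np.sqrt(np.mean(err ** 2)))
```

Because the RMSE weights large errors more heavily, it is never smaller than the mean error, which matches the ordering of the two columns in the tracking tables.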

4.4. Ablation Study

- Effectiveness of Proxy Depth Guidance: As shown in Table 7, introducing Metric3D-V2 proxy depth guidance improves PSNR from 18.07 dB to 19.13 dB (a 5.87% improvement) while reducing the depth L1 error from 8.34 to 6.12 (a 26.6% reduction). This enhancement stems from our proxy depth fusion mechanism with uncertainty quantification, which addresses the scale ambiguity problem of monocular vision.

- Critical Role of Semantic Loss: Table 8 confirms the importance of semantic information in scene reconstruction. Integrating the semantic loss yields a 9.8% PSNR improvement and raises mIoU from 61.85% to 69.85%, an absolute gain of 8.00 percentage points (12.9% relative improvement). This gain benefits from our four-dimensional semantic Gaussian representation, which enables collaborative optimization of geometric, appearance, and semantic information.

- Robustness of Semantic-Guided Loop Detection: Table 9 demonstrates significant trajectory accuracy improvement through semantic-guided loop detection. Our method reduces ATE RMSE from 12.34 cm to 11.15 cm while improving global consistency from 0.742 to 0.824. This advantage originates from our multi-level semantic similarity assessment, effectively overcoming traditional geometric methods’ limitations in challenging scenarios.

- Synergistic Effects of Multi-modal Loss: Table 10 shows our complete multi-modal loss function achieves optimal performance balance. Compared to color-only configurations, our full system significantly improves PSNR, depth accuracy, and perceptual quality, validating effective synergy among depth constraints, appearance consistency, and semantic guidance.
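The relative changes quoted in the ablation discussion can be recomputed directly from the values in Tables 7 and 8. The short script below does that arithmetic; `relative_change` is simply a helper name chosen here.

```python
def relative_change(before, after):
    """Signed change from `before` to `after`, as a percentage of `before`."""
    return (after - before) / before * 100.0

# Table 7: without vs. with proxy depth guidance
psnr_depth = relative_change(18.07, 19.13)
depth_l1 = relative_change(8.34, 6.12)

# Table 8: without vs. with semantic loss
psnr_sem = relative_change(17.42, 19.13)
miou_sem = relative_change(61.85, 69.85)

print(round(psnr_depth, 1))  # 5.9
print(round(depth_l1, 1))    # -26.6
print(round(psnr_sem, 1))    # 9.8
print(round(miou_sem, 1))    # 12.9
```

For mIoU, the absolute gain is 8.00 percentage points, which is about 12.9% relative to the 61.85% baseline; the two ways of stating the improvement should not be mixed.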

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

1. Durrant-Whyte, H.; Bailey, T. Simultaneous localization and mapping: Part I. IEEE Robot. Autom. Mag. 2006, 13, 99–110.

2. Grisetti, G.; Stachniss, C.; Burgard, W. Improved techniques for grid mapping with Rao-Blackwellized particle filters. IEEE Trans. Robot. 2007, 34–46.

3. Mur-Artal, R.; Montiel, J.M.M.; Tardos, J.D. ORB-SLAM: A versatile and accurate monocular SLAM system. IEEE Trans. Robot. 2015, 31, 1147–1163.

4. Mur-Artal, R.; Tardós, J.D. ORB-SLAM2: An open-source SLAM system for monocular, stereo, and RGB-D cameras. IEEE Trans. Robot. 2017, 33, 1255–1262.

5. Mildenhall, B.; Srinivasan, P.P.; Tancik, M.; Barron, J.T.; Ramamoorthi, R.; Ng, R. NeRF: Representing scenes as neural radiance fields for view synthesis. In Proceedings of the European Conference on Computer Vision, Scotland, UK, 23–28 August 2020; Springer: Berlin/Heidelberg, Germany, 2020; pp. 405–421.

6. Li, M.; Zhou, Y.; Jiang, G.; Deng, T.; Wang, Y.; Wang, H. DDN-SLAM: Real-time dense dynamic neural implicit SLAM. arXiv 2024, arXiv:2401.01545.

7. Rumelhart, D.E.; Hinton, G.E.; Williams, R.J. Learning representations by back-propagating errors. Nature 1986, 323, 533–536.

8. Li, M.; He, J.; Wang, Y.; Wang, H. End-to-end RGB-D SLAM with multi-MLPs dense neural implicit representations. IEEE Robot. Autom. Lett. 2023, 8, 7138–7145.

9. Liu, S.; Deng, T.; Zhou, H.; Li, L.; Wang, H.; Wang, D.; Li, M. MG-SLAM: Structure Gaussian splatting SLAM with Manhattan world hypothesis. IEEE Trans. Autom. Sci. Eng. 2025, 22, 17034–17049.

10. Li, M.; Chen, W.; Cheng, N.; Xu, J.; Li, D.; Wang, H. Garad-SLAM: 3D Gaussian splatting for real-time anti dynamic SLAM. arXiv 2025, arXiv:2502.03228.

11. Zhou, Y.; Guo, Z.; Li, D.; Guan, R.; Ren, Y.; Wang, H.; Li, M. Dsosplat: Monocular 3D Gaussian SLAM with direct tracking. IEEE Sens. J. 2025.

12. Li, M.; Liu, S.; Deng, T.; Wang, H. Densesplat: Densifying Gaussian splatting SLAM with neural radiance prior. arXiv 2025, arXiv:2502.09111.

13. Hu, M.; Yin, W.; Zhang, C.; Cai, Z.; Long, X.; Chen, H.; Wang, K.; Yu, G.; Shen, C.; Shen, S. Metric3D v2: A versatile monocular geometric foundation model for zero-shot metric depth and surface normal estimation. IEEE Trans. Pattern Anal. Mach. Intell. 2024, 46, 10579–10596.

14. Yen-Chen, L.; Florence, P.; Barron, J.T.; Rodriguez, A.; Isola, P.; Lin, T.-Y. iNeRF: Inverting neural radiance fields for pose estimation. In Proceedings of the IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Prague, Czech Republic, 27 September–1 October 2021; pp. 1323–1330.

15. Sucar, E.; Liu, S.; Ortiz, J.; Davison, A.J. iMAP: Implicit mapping and positioning in real-time. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), Montreal, QC, Canada, 10–17 October 2021; pp. 6229–6238.

16. Zhu, Z.; Peng, S.; Larsson, V.; Xu, W.; Bao, H.; Cui, Z.; Oswald, M.R.; Pollefeys, M. NICE-SLAM: Neural implicit scalable encoding for SLAM. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), New Orleans, LA, USA, 18–24 June 2022; pp. 12786–12796.

17. Wang, H.; Wang, J.; Agapito, L. Co-SLAM: Joint coordinate and sparse parametric encodings for neural real-time SLAM. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Vancouver, BC, Canada, 17–24 June 2023; pp. 13293–13302.

18. Deng, Z.; Yunus, R.; Deng, Y.; Cheng, J.; Pollefeys, M.; Konukoglu, E. NICER-SLAM: Neural implicit scene encoding for RGB SLAM. In Proceedings of the 2024 International Conference on 3D Vision (3DV), Davos, Switzerland, 18–21 March 2024; pp. 82–91.

19. Sandström, E.; Li, Y.; Gool, L.V.; Oswald, M.R. Point-SLAM: Dense neural point cloud-based SLAM. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), Paris, France, 18–23 October 2023; pp. 18433–18444.

20. Kerbl, B.; Kopanas, G.; Leimkühler, T.; Drettakis, G. 3D Gaussian splatting for real-time radiance field rendering. ACM Trans. Graph. 2023, 42, 1–14.

21. Matsuki, H.; Murai, R.; Kelly, P.H.J.; Davison, A.J. Gaussian Splatting SLAM. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 16–22 June 2024; pp. 18039–18048.

22. Keetha, N.; Karhade, J.; Jatavallabhula, K.M.; Yang, G.; Scherer, S.; Ramanan, D.; Luiten, J. SplaTAM: Splat, track & map 3D Gaussians for dense RGB-D SLAM. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 17–18 June 2024; pp. 21357–21366.

23. Yan, C.; Qu, D.; Xu, D.; Zhao, B.; Wang, Z.; Wang, D.; Li, X. GS-SLAM: Dense visual SLAM with 3D Gaussian splatting. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 16–24 June 2024; pp. 19221–19230.

24. Sun, L.C.; Bhatt, N.P.; Liu, J.C.; Fan, Z.; Wang, Z.; Humphreys, T.E. MM3DGS SLAM: Multi-modal 3D Gaussian splatting for SLAM using vision, depth, and inertial measurements. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 16–24 June 2024; pp. 23403–23413.

25. He, K.; Gkioxari, G.; Dollár, P.; Girshick, R. Mask R-CNN. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 2961–2969.

26. Salas-Moreno, R.F.; Newcombe, R.A.; Strasdat, H.; Kelly, P.H.; Davison, A.J. SLAM++: Simultaneous localisation and mapping at the level of objects. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Portland, OR, USA, 23–28 June 2013; pp. 1352–1359.

27. Rosinol, A.; Abate, M.; Chang, Y.; Carlone, L. Kimera: An open-source library for real-time metric-semantic localization and mapping. In Proceedings of the IEEE International Conference on Robotics and Automation (ICRA), Paris, France, 31 May–31 August 2020; pp. 1689–1696.

28. Zhu, S.; Wang, G.; Blum, H.; Liu, J.; Song, L.; Pollefeys, M.; Wang, H. SNI-SLAM: Semantic neural implicit SLAM. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 17–18 June 2024; pp. 21167–21177.

29. Li, M.; Liu, S.; Zhou, H.; Zhu, G.; Cheng, N.; Deng, T.; Wang, H. SGS-SLAM: Semantic Gaussian splatting for neural dense SLAM. In Proceedings of the European Conference on Computer Vision (ECCV), Milan, Italy, 29 September–4 October 2024; Springer: Berlin/Heidelberg, Germany, 2024; pp. 168–185.

30. Long, J.; Shelhamer, E.; Darrell, T. Fully convolutional networks for semantic segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 3431–3440.

31. Triggs, B.; McLauchlan, P.F.; Hartley, R.I.; Fitzgibbon, A.W. Bundle adjustment—A modern synthesis. In Vision Algorithms: Theory and Practice; Springer: Berlin/Heidelberg, Germany, 2000; pp. 298–372.

32. Dönmez, A.; Köseoğlu, B.; Araç, M.; Günel, S. vemb-slam: An efficient embedded monocular SLAM framework for 3D mapping and semantic segmentation. In Proceedings of the 2025 7th International Congress on Human-Computer Interaction, Optimization and Robotic Applications (ICHORA), Ankara, Turkiye, 23–24 May 2025; pp. 1–10.

33. Wang, H.; Yang, M.; Zheng, N. G2-monodepth: A general framework of generalized depth inference from monocular RGB+X data. IEEE Trans. Pattern Anal. Mach. Intell. 2023, 46, 3753–3771.

34. Oquab, M.; Darcet, T.; Moutakanni, T.; Vo, H.; Szafraniec, M.; Khalidov, V.; Fernandez, P.; Haziza, D.; Massa, F.; El-Nouby, A.; et al. DINOv2: Learning robust visual features without supervision. In Proceedings of the International Conference on Machine Learning, PMLR, Honolulu, HI, USA, 23–29 July 2023; pp. 27380–27400.

35. Kingma, D.P.; Ba, J. Adam: A method for stochastic optimization. arXiv 2014, arXiv:1412.6980.

36. Straub, J.; Whelan, T.; Ma, L.; Chen, Y.; Wijmans, E.; Green, S.; Engel, J.J.; Mur-Artal, R.; Ren, C.; Verma, S.; et al. The Replica dataset: A digital replica of indoor spaces. arXiv 2019, arXiv:1906.05797.

37. Dai, A.; Chang, A.X.; Savva, M.; Halber, M.; Funkhouser, T.; Nießner, M. ScanNet: Richly-annotated 3D reconstructions of indoor scenes. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 5828–5839.

38. Horé, A.; Ziou, D. Image quality metrics: PSNR vs. SSIM. In Proceedings of the 2010 20th International Conference on Pattern Recognition, Istanbul, Turkey, 23–26 August 2010; pp. 2366–2369.

39. Wang, Z.; Bovik, A.C.; Sheikh, H.R.; Simoncelli, E.P. Image quality assessment: From error visibility to structural similarity. IEEE Trans. Image Process. 2004, 13, 600–612.

40. Zhang, R.; Isola, P.; Efros, A.A.; Shechtman, E.; Wang, O. The unreasonable effectiveness of deep features as a perceptual metric. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 586–595.

41. Everingham, M.; Gool, L.V.; Williams, C.K.; Winn, J.; Zisserman, A. The Pascal Visual Object Classes (VOC) Challenge. Int. J. Comput. Vis. 2010, 88, 303–338.

Table 1. Rendering quality on the Replica dataset.

| Methods | Metrics | Avg. | Room0 | Room1 | Room2 | Office0 | Office1 | Office2 | Office3 |
|---|---|---|---|---|---|---|---|---|---|
| SplaTAM | PSNR↑ | 33.52 | 31.72 | 33.12 | 34.34 | 37.33 | 38.11 | 31.06 | 28.98 |
| | SSIM↑ | 0.953 | 0.952 | 0.952 | 0.965 | 0.961 | 0.963 | 0.946 | 0.931 |
| | LPIPS↓ | 0.117 | 0.093 | 0.123 | 0.095 | 0.109 | 0.121 | 0.129 | 0.151 |
| | Depth L1↓ | 0.533 | 0.485 | 0.542 | 0.501 | 0.518 | 0.555 | 0.567 | 0.563 |
| SGS-SLAM | PSNR↑ | 34.12 | 31.81 | 33.56 | 34.38 | 37.60 | 38.22 | 31.98 | 31.29 |
| | SSIM↑ | 0.954 | 0.954 | 0.976 | 0.958 | 0.961 | 0.957 | 0.948 | 0.947 |
| | LPIPS↓ | 0.111 | 0.088 | 0.117 | 0.087 | 0.107 | 0.109 | 0.126 | 0.143 |
| | Depth L1↓ | 0.359 | 0.325 | 0.315 | 0.385 | 0.331 | 0.394 | 0.375 | 0.388 |
| MonoGS | PSNR↑ | 31.27 | 29.05 | 30.95 | 31.55 | 34.35 | 34.67 | 29.73 | 28.59 |
| | SSIM↑ | 0.910 | 0.895 | 0.908 | 0.915 | 0.921 | 0.925 | 0.902 | 0.904 |
| | LPIPS↓ | 0.208 | 0.185 | 0.201 | 0.195 | 0.224 | 0.235 | 0.198 | 0.217 |
| | Depth L1↓ | 27.23 | 24.85 | 26.12 | 25.78 | 29.45 | 31.20 | 26.90 | 26.33 |
| SNI-SLAM | PSNR↑ | 28.97 | 25.18 | 27.95 | 28.94 | 33.89 | 30.05 | 28.25 | 28.53 |
| | SSIM↑ | 0.928 | 0.877 | 0.905 | 0.935 | 0.962 | 0.925 | 0.945 | 0.945 |
| | LPIPS↓ | 0.343 | 0.395 | 0.378 | 0.335 | 0.275 | 0.330 | 0.338 | 0.352 |
| | Depth L1↓ | 1.167 | 1.123 | 1.386 | 1.247 | 1.095 | 0.874 | 1.152 | 1.092 |
| Ours | PSNR↑ | 34.48 | 31.75 | 33.85 | 34.65 | 37.85 | 38.18 | 32.25 | 31.60 |
| | SSIM↑ | 0.957 | 0.955 | 0.954 | 0.968 | 0.964 | 0.966 | 0.947 | 0.954 |
| | LPIPS↓ | 0.108 | 0.085 | 0.114 | 0.090 | 0.104 | 0.106 | 0.123 | 0.144 |
| | Depth L1↓ | 0.345 | 0.314 | 0.306 | 0.369 | 0.415 | 0.386 | 0.365 | 0.380 |

Table 2. Tracking accuracy and runtime on the Replica dataset.

| Methods | ATE Mean [cm] ↓ | ATE RMSE [cm] ↓ | Track. FPS [f/s] ↑ | Map. FPS [f/s] ↑ | SLAM FPS [f/s] ↑ |
|---|---|---|---|---|---|
| SplaTAM | 0.349 | 0.451 | 5.47 | 3.94 | 2.13 |
| SGS-SLAM | 0.328 | 0.416 | 5.20 | 3.53 | 2.11 |
| MonoGS | 12.823 | 14.533 | 0.85 | 0.62 | 0.51 |
| SNI-SLAM | 0.516 | 0.631 | 17.25 | 3.60 | 2.94 |
| Ours | 0.319 | 0.408 | 4.58 | 3.26 | 2.05 |

Table 3. Semantic segmentation (mIoU) on the Replica dataset.

| Methods | Avg. mIoU [%]↑ | Room0 [%]↑ | Room1 [%]↑ | Room2 [%]↑ | Office0 [%]↑ |
|---|---|---|---|---|---|
| SGS-SLAM | 91.88 | 92.02 | 92.17 | 90.94 | 92.25 |
| SNI-SLAM | 88.34 | 89.35 | 88.21 | 87.10 | 88.63 |
| Ours | 92.68 | 92.58 | 92.74 | 91.89 | 93.51 |

Table 4. Rendering quality on the ScanNet dataset.

| Methods | Metrics | Avg. | 0000 | 0059 | 0106 | 0169 | 0181 | 0207 |
|---|---|---|---|---|---|---|---|---|
| SplaTAM | PSNR↑ | 18.64 | 18.92 | 18.59 | 17.15 | 21.62 | 16.29 | 19.20 |
| | SSIM↑ | 0.693 | 0.637 | 0.764 | 0.674 | 0.752 | 0.660 | 0.673 |
| | LPIPS↓ | 0.429 | 0.505 | 0.361 | 0.454 | 0.357 | 0.495 | 0.410 |
| | Depth L1↓ | 11.33 | 11.45 | 9.87 | 12.56 | 9.23 | 13.78 | 11.09 |
| SGS-SLAM | PSNR↑ | 18.96 | 19.06 | 18.45 | 17.98 | 19.57 | 18.82 | 19.68 |
| | SSIM↑ | 0.726 | 0.721 | 0.704 | 0.678 | 0.751 | 0.728 | 0.774 |
| | LPIPS↓ | 0.383 | 0.390 | 0.407 | 0.439 | 0.352 | 0.386 | 0.324 |
| | Depth L1↓ | 6.48 | 6.16 | 7.12 | 7.89 | 5.84 | 6.55 | 5.32 |
| MonoGS | PSNR↑ | 16.83 | 17.24 | 15.89 | 15.67 | 17.48 | 16.92 | 17.78 |
| | SSIM↑ | 0.634 | 0.645 | 0.612 | 0.598 | 0.661 | 0.638 | 0.683 |
| | LPIPS↓ | 0.587 | 0.598 | 0.623 | 0.641 | 0.559 | 0.587 | 0.513 |
| | Depth L1↓ | 18.76 | 19.84 | 21.35 | 22.67 | 17.23 | 18.94 | 12.53 |
| SNI-SLAM | PSNR↑ | 17.86 | 17.42 | 16.89 | 16.73 | 18.35 | 17.94 | 19.83 |
| | SSIM↑ | 0.658 | 0.641 | 0.625 | 0.614 | 0.686 | 0.663 | 0.719 |
| | LPIPS↓ | 0.512 | 0.528 | 0.551 | 0.567 | 0.489 | 0.514 | 0.423 |
| | Depth L1↓ | 7.34 | 7.85 | 8.42 | 9.16 | 6.78 | 7.23 | 4.60 |
| Ours | PSNR↑ | 19.48 | 19.13 | 19.05 | 18.76 | 21.06 | 19.55 | 19.33 |
| | SSIM↑ | 0.738 | 0.728 | 0.725 | 0.712 | 0.748 | 0.732 | 0.775 |
| | LPIPS↓ | 0.368 | 0.378 | 0.359 | 0.424 | 0.342 | 0.373 | 0.318 |
| | Depth L1↓ | 6.25 | 6.12 | 6.95 | 7.75 | 5.72 | 6.45 | 4.48 |

Table 5. Tracking accuracy and runtime on the ScanNet dataset.

| Methods | ATE Mean [cm] ↓ | ATE RMSE [cm] ↓ | Track. FPS [f/s] ↑ | Map. FPS [f/s] ↑ | SLAM FPS [f/s] ↑ |
|---|---|---|---|---|---|
| SplaTAM | 8.23 | 9.87 | 4.85 | 3.21 | 1.98 |
| SGS-SLAM | 9.54 | 11.37 | 4.67 | 3.05 | 1.89 |
| MonoGS | 18.75 | 21.42 | 0.73 | 0.54 | 0.46 |
| SNI-SLAM | 12.84 | 15.67 | 14.32 | 3.12 | 2.58 |
| Ours | 8.05 | 9.65 | 4.95 | 3.15 | 2.08 |

Table 6. Semantic segmentation (mIoU) on the ScanNet dataset.

| Methods | Avg. mIoU [%]↑ | 0000 [%]↑ | 0059 [%]↑ | 0106 [%]↑ | 0169 [%]↑ |
|---|---|---|---|---|---|
| SGS-SLAM | 68.52 | 69.45 | 67.89 | 68.21 | 68.53 |
| SNI-SLAM | 64.89 | 65.85 | 63.68 | 64.31 | 65.72 |
| Ours | 69.15 | 69.85 | 68.37 | 68.94 | 69.18 |

Table 7. Ablation of proxy depth guidance.

| Configuration | Mapping (ms) ↓ | PSNR (dB) ↑ | Depth L1 ↓ |
|---|---|---|---|
| W/o proxy depth guidance | 28.4 | 18.07 | 8.34 |
| W/ proxy depth guidance | 31.7 | 19.13 | 6.12 |

Table 8. Ablation of the semantic loss.

| Configuration | Mapping (ms) ↓ | PSNR (dB) ↑ | mIoU (%) ↑ | SSIM ↑ |
|---|---|---|---|---|
| W/o semantic loss | 26.8 | 17.42 | 61.85 | 0.665 |
| W/ semantic loss | 31.7 | 19.13 | 69.85 | 0.728 |

Table 9. Ablation of semantic-guided loop detection.

| Configuration | ATE RMSE (cm) ↓ | Tracking FPS ↑ | PSNR (dB) ↑ | Global Consistency ↑ |
|---|---|---|---|---|
| W/o loop closure | 12.34 | 5.28 | 18.25 | 0.742 |
| W/ semantic loop closure | 11.15 | 5.08 | 19.13 | 0.824 |

Table 10. Ablation of the multi-modal loss.

| Configuration | Mapping (ms) ↓ | PSNR (dB) ↑ | Depth L1 ↓ | LPIPS ↓ |
|---|---|---|---|---|
| W/o depth loss | 29.5 | 17.85 | 7.94 | 0.425 |
| W/o color & depth loss | 24.1 | 16.93 | 9.17 | 0.468 |
| W/ full loss (Ours) | 31.7 | 19.13 | 6.12 | 0.378 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Yang, M.; Ge, S.; Wang, F. MSGS-SLAM: Monocular Semantic Gaussian Splatting SLAM. Symmetry 2025, 17, 1576. https://doi.org/10.3390/sym17091576
