Symmetry-Aware Face Illumination Enhancement via Pixel-Adaptive Curve Mapping

Abstract

1. Introduction

- (1) A symmetry-aware illumination intensity measurement algorithm is proposed, which combines a novel nested U-Net structure for face shadow detection with Gaussian convolution.

- (2) A high-order enhancement curve controlled by the illumination intensity is proposed; it maps pixels to a wider dynamic range while keeping the enhancement of symmetrical facial features balanced.
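
Contribution (2) describes a high-order curve whose strength is controlled by the illumination intensity, but the formula is not given here. The following is a minimal sketch under stated assumptions, not the authors' curve. It borrows the quadratic light-enhancement curve LE(x) = x + αx(1 − x) from Zero-DCE [18] and applies it iteratively to obtain a higher-order mapping. It also derives a per-pixel coefficient α from an illumination map in [0, 1]. The control rule `alpha = strength * (1 - illumination)` and the names `enhance`, `strength` and `iterations` are hypothetical.

```python
import numpy as np


def enhance(image: np.ndarray, illumination: np.ndarray,
            strength: float = 0.8, iterations: int = 4) -> np.ndarray:
    """Pixel-adaptive, iterated quadratic curve (illustrative sketch only).

    image:        floats in [0, 1], shape (H, W) or (H, W, C)
    illumination: floats in [0, 1], shape (H, W); 1 means fully lit
                  (a hypothetical convention, not taken from the paper)
    """
    if not 0.0 <= strength <= 1.0:
        raise ValueError("strength must lie in [0, 1]")
    # Hypothetical control rule: the darker a pixel's illumination,
    # the larger its curve coefficient; fully lit pixels get alpha = 0.
    alpha = strength * (1.0 - np.clip(illumination, 0.0, 1.0))
    if image.ndim == 3:
        alpha = alpha[..., np.newaxis]  # same coefficient for every channel
    x = np.clip(np.asarray(image, dtype=np.float64), 0.0, 1.0)
    for _ in range(iterations):
        # LE(x) = x + alpha * x * (1 - x); for alpha in [0, 1] this is
        # monotonic on [0, 1] and keeps values inside [0, 1].
        x = x + alpha * x * (1.0 - x)
    return x
```

Each iteration is quadratic in x, so `iterations = n` yields a polynomial of degree 2^n per pixel. Because α depends only on the illumination value, two mirrored pixels (for example, on the left and right cheek) with equal intensity and equal illumination receive identical outputs. This is one simple reading of "balanced enhancement of symmetrical facial features."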

2. Related Work

2.1. Low-Light Image Enhancement

2.2. Shadow Detection

2.3. Literature Review

3. The Proposed Method

3.1. Pixel-Wise Facial Illumination Intensity Measurement Algorithm

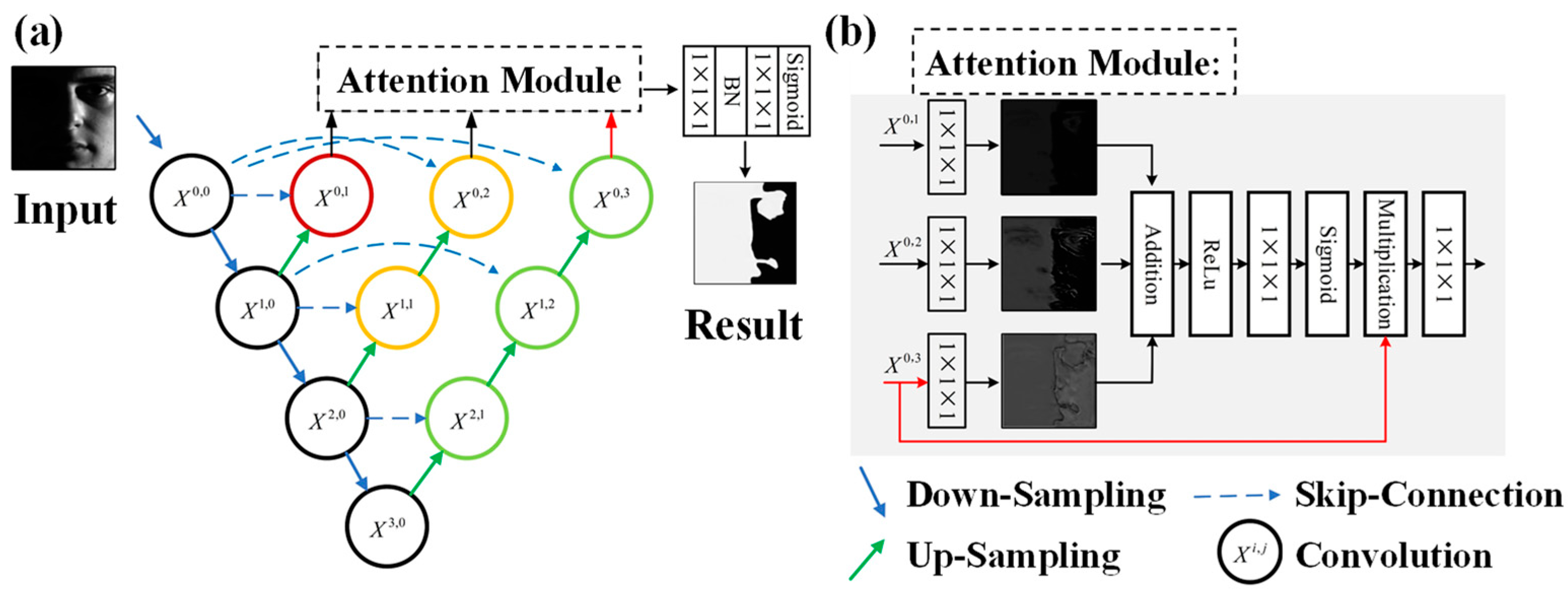

3.1.1. Face Shadow Detection Network (FSDN)

3.1.2. Facial Illumination Intensity Map

3.2. Illumination Enhancement Curve

4. Results

4.1. Dataset

4.2. Implementation Details

4.3. Results of Face Shadow Detection

4.4. Illumination Enhancement Results

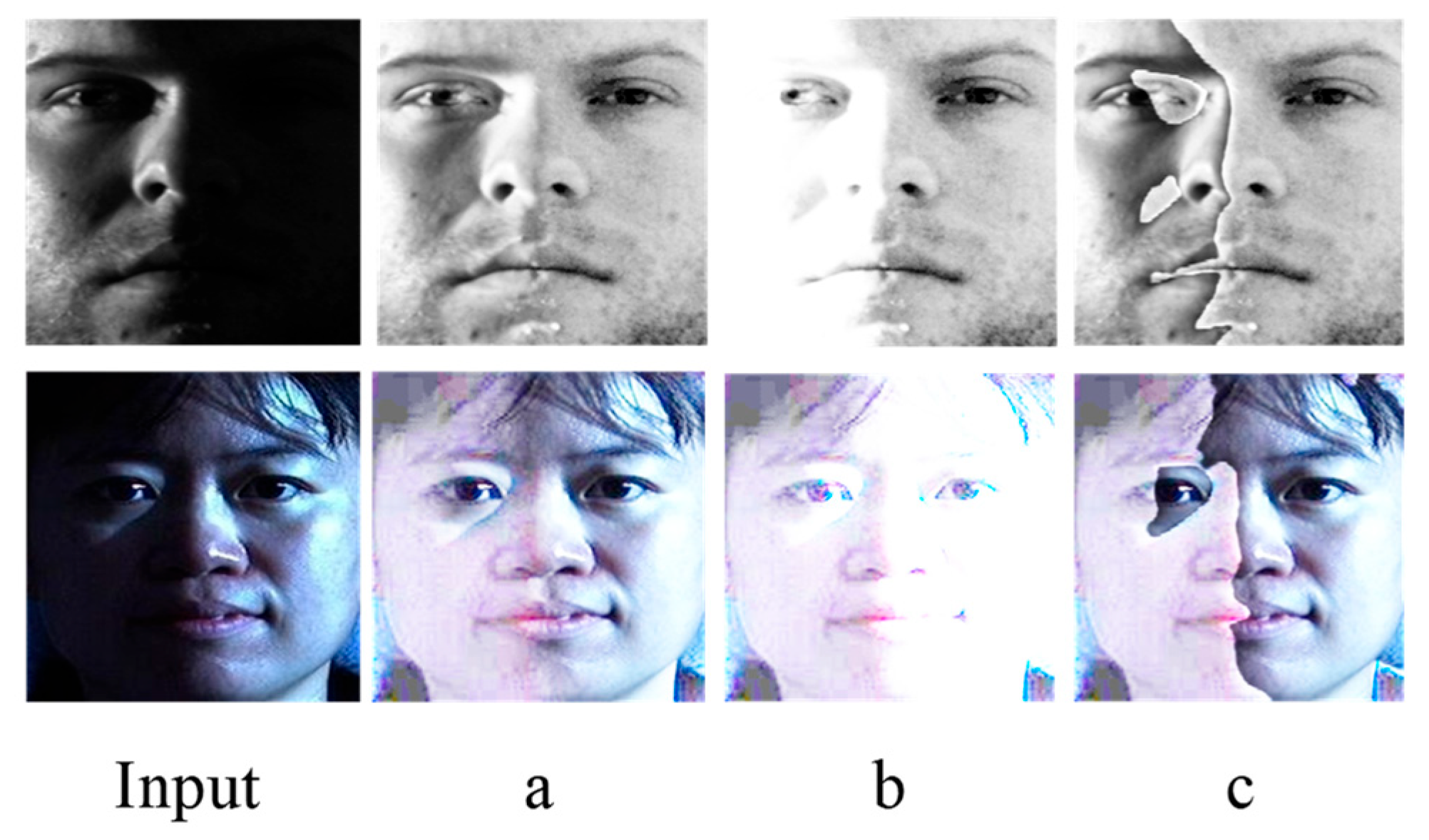

4.4.1. Qualitative Results

4.4.2. Quantitative Results

4.4.3. Ablation Study

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Adini, Y.; Moses, Y.; Ullman, S. Face recognition: The problem of compensating for changes in illumination direction. IEEE Trans. Pattern Anal. Mach. Intell. 1997, 19, 721–732.

2. Emadi, M.; Khalid, M.; Yusof, R.; Navabifar, F. Illumination normalization using 2D wavelet. Procedia Eng. 2012, 41, 854–859.

3. Chen, W.; Er, M.J.; Wu, S. Illumination compensation and normalization for robust face recognition using discrete cosine transform in logarithm domain. IEEE Trans. Syst. Man Cybern. Part B (Cybern.) 2006, 36, 458–466.

4. Pizer, S.M.; Amburn, E.P.; Austin, J.D.; Cromartie, R.; Geselowitz, A.; Greer, T.; ter Haar Romeny, B.; Zimmerman, J.B.; Zuiderveld, K. Adaptive histogram equalization and its variations. Comput. Vis. Graph. Image Process. 1987, 39, 355–368.

5. Xie, X.; Lam, K.-M. Face recognition under varying illumination based on a 2D face shape model. Pattern Recognit. 2005, 38, 221–230.

6. Xie, X.; Lam, K.-M. An Efficient Method for Face Recognition Under Varying Illumination. In Proceedings of the 2005 IEEE International Symposium on Circuits and Systems, Kobe, Japan, 23–26 May 2005; pp. 3841–3844.

7. Xie, X.; Lam, K.-M. An efficient illumination normalization method for face recognition. Pattern Recognit. Lett. 2006, 27, 609–617.

8. Vishwakarma, V.P.; Pandey, S.; Gupta, M.N. Adaptive histogram equalization and logarithm transform with rescaled low frequency DCT coefficients for illumination normalization. Int. J. Recent Trends Eng. 2009, 1, 318.

9. Lee, P.-H.; Wu, S.-W.; Hung, Y.-P. Illumination compensation using oriented local histogram equalization and its application to face recognition. IEEE Trans. Image Process. 2012, 21, 4280–4289.

10. Basri, R.; Jacobs, D. Lambertian reflectance and linear subspaces. IEEE Trans. Pattern Anal. Mach. Intell. 2003, 25, 218–233.

11. Xie, X.; Zheng, W.-S.; Lai, J.; Yuen, P.C.; Suen, C.Y. Normalization of face illumination based on large- and small-scale features. IEEE Trans. Image Process. 2010, 20, 1807–1821.

12. Matsukawa, T.; Okabe, T.; Sato, Y. Illumination Normalization of Face Images with Cast Shadows. In Proceedings of the 21st International Conference on Pattern Recognition (ICPR2012), Tsukuba, Japan, 11–15 November 2012; pp. 1848–1851.

13. Lore, K.G.; Akintayo, A.; Sarkar, S. LLNet: A deep autoencoder approach to natural low-light image enhancement. Pattern Recognit. 2017, 61, 650–662.

14. Shen, L.; Yue, Z.; Feng, F.; Chen, Q.; Liu, S.; Ma, J. MSR-net: Low-light image enhancement using deep convolutional network. arXiv 2017, arXiv:1711.02488.

15. Wei, C.; Wang, W.; Yang, W.; Liu, J. Deep retinex decomposition for low-light enhancement. arXiv 2018, arXiv:1808.04560.

16. Zhang, Y.; Zhang, J.; Guo, X. Kindling the darkness: A practical low-light image enhancer. In Proceedings of the 27th ACM International Conference on Multimedia, Nice, France, 21–25 October 2019; Association for Computing Machinery: New York, NY, USA, 2019; pp. 1632–1640.

17. Jiang, Y.; Gong, X.; Liu, D.; Cheng, Y.; Fang, C.; Shen, X.; Yang, J.; Zhou, P.; Wang, Z. EnlightenGAN: Deep light enhancement without paired supervision. IEEE Trans. Image Process. 2021, 30, 2340–2349.

18. Guo, C.; Li, C.; Guo, J.; Loy, C.C.; Hou, J.; Kwong, S.; Cong, R. Zero-Reference Deep Curve Estimation for Low-Light Image Enhancement. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 1777–1786.

19. Xie, C.; Fei, L.; Tao, H.; Hu, Y.; Zhou, W.; Hoe, J.T.; Hu, W.; Tan, Y.-P. Residual quotient learning for zero-reference low-light image enhancement. IEEE Trans. Image Process. 2025, 34, 365–378.

20. Yu, C.; Han, G.; Pan, M.; Wu, X.; Deng, A. Zero-TCE: Zero reference tri-curve enhancement for low-light images. Appl. Sci. 2025, 15, 701.

21. Yan, Q.; Feng, Y.; Zhang, C.; Pang, G.; Shi, K.; Wu, P.; Dong, W.; Sun, J.; Zhang, Y. HVI: A new color space for low-light image enhancement. arXiv 2025, arXiv:2502.20272.

22. Sim, T.; Baker, S.; Bsat, M. The CMU Pose, Illumination, and Expression (PIE) Database. In Proceedings of the Fifth IEEE International Conference on Automatic Face and Gesture Recognition, Washington, DC, USA, 21 May 2002; pp. 53–58.

23. Georghiades, A.S.; Belhumeur, P.N.; Kriegman, D.J. From few to many: Illumination cone models for face recognition under variable lighting and pose. IEEE Trans. Pattern Anal. Mach. Intell. 2001, 23, 643–660.

24. Finlayson, G.D.; Hordley, S.D.; Lu, C.; Drew, M.S. On the removal of shadows from images. IEEE Trans. Pattern Anal. Mach. Intell. 2005, 28, 59–68.

25. Finlayson, G.D.; Drew, M.S.; Lu, C. Entropy minimization for shadow removal. Int. J. Comput. Vis. 2009, 85, 35–57.

26. Guo, R.; Dai, Q.; Hoiem, D. Single-image shadow detection and removal using paired regions. In Proceedings of the 2011 IEEE Conference on Computer Vision and Pattern Recognition, Colorado Springs, CO, USA, 20–25 June 2011; pp. 2033–2040.

27. Guo, R.; Dai, Q.; Hoiem, D. Paired regions for shadow detection and removal. IEEE Trans. Pattern Anal. Mach. Intell. 2012, 35, 2956–2967.

28. Vicente, T.F.Y.; Yu, C.-P.; Samaras, D. Single Image Shadow Detection Using Multiple Cues in a Supermodular MRF. In Proceedings of the British Machine Vision Conference, Bristol, UK, 9–13 September 2013.

29. Yuan, X.; Ebner, M.; Wang, Z. Single-image shadow detection and removal using local colour constancy computation. IET Image Process. 2015, 9, 118–126.

30. Liu, J.; Tang, Q.; Wang, Y.; Lu, Y.; Zhang, Z. Defects’ geometric feature recognition based on infrared image edge detection. Infrared Phys. Technol. 2014, 67, 387–390.

31. Hamza, S.A.; Jesser, A. A study on advancing Edge Detection and Geometric Analysis through Image Processing. In Proceedings of the 2024 8th International Conference on Graphics and Signal Processing (ICGSP ’24), Tokyo, Japan, 14–16 June 2024; Association for Computing Machinery: New York, NY, USA, 2024; pp. 9–13.

32. Yang, D.; Peng, B.; Al-Huda, Z.; Malik, A.; Zhai, D. An overview of edge and object contour detection. Neurocomputing 2022, 488, 470–493.

33. Khan, S.H.; Bennamoun, M.; Sohel, F.; Togneri, R. Automatic Feature Learning for Robust Shadow Detection. In Proceedings of the 2014 IEEE Conference on Computer Vision and Pattern Recognition (CVPR 2014), Columbus, OH, USA, 23–28 June 2014; pp. 1939–1946.

34. Vicente, T.F.Y.; Hou, L.; Yu, C.P.; Hoai, M.; Samaras, D. Large-scale training of shadow detectors with noisily-annotated shadow examples. In Proceedings of the European Conference on Computer Vision (ECCV 2016), Amsterdam, The Netherlands, 11–14 October 2016; Springer: Cham, Switzerland, 2016.

35. Hosseinzadeh, S.; Shakeri, M.; Zhang, H. Fast Shadow Detection from a Single Image Using a Patched Convolutional Neural Network. In Proceedings of the 2018 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS 2018), Madrid, Spain, 1–5 October 2018; pp. 3124–3129.

36. Nguyen, V.; Vicente, T.F.Y.; Zhao, M.; Hoai, M.; Samaras, D. Shadow Detection with Conditional Generative Adversarial Networks. In Proceedings of the IEEE International Conference on Computer Vision (ICCV 2017), Venice, Italy, 22–29 October 2017; pp. 4520–4528.

37. Le, H.; Vicente, T.F.Y.; Nguyen, V.; Hoai, M.; Samaras, D. A+D net: Training a shadow detector with adversarial shadow attenuation. In Proceedings of the European Conference on Computer Vision (ECCV 2018), Munich, Germany, 8–14 September 2018.

38. Zhu, L.; Deng, Z.; Hu, X.; Fu, C.-W.; Xu, X.; Qin, J.; Heng, P.-A. Bidirectional feature pyramid network with recurrent attention residual modules for shadow detection. In Proceedings of the European Conference on Computer Vision (ECCV 2018), Munich, Germany, 8–14 September 2018.

39. Zhou, H.; Yi, J. FFSDF: An improved fast face shadow detection framework based on channel spatial attention enhancement. J. King Saud Univ. Comput. Inf. Sci. 2023, 35, 101766.

40. Zheng, Q.; Qiao, X.; Cao, Y.; Lau, R.W. Distraction-Aware Shadow Detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR 2019), Long Beach, CA, USA, 15–20 June 2019; pp. 5162–5171.

41. Zhang, J.; Li, F.; Zhang, X.; Cheng, Y.; Hei, X. Multi-Task Mean Teacher Medical Image Segmentation Based on Swin Transformer. Appl. Sci. 2024, 14, 2986.

42. Shi, P.; Hu, J.; Yang, Y.; Gao, Z.; Liu, W.; Ma, T. Centerline boundary dice loss for vascular segmentation. In Proceedings of Medical Image Computing and Computer Assisted Intervention (MICCAI 2024); Springer Nature: Cham, Switzerland, 2024; pp. 46–56.

43. Ronneberger, O.; Fischer, P.; Brox, T. U-net: Convolutional networks for biomedical image segmentation. In Proceedings of the International Conference on Medical Image Computing and Computer-Assisted Intervention (MICCAI 2015), Munich, Germany, 5–9 October 2015; Springer: Cham, Switzerland, 2015.

44. Aadarsh, Y.G.; Singh, G. Comparing UNet, UNet++, FPN, PAN and Deeplabv3+ for Gastrointestinal Tract Disease Detection. In Proceedings of the 2023 International Conference on Evolutionary Algorithms and Soft Computing Techniques (EASCT), Bengaluru, India, 20–21 October 2023; pp. 1–7.

45. Yuan, Y.; Chen, X.; Wang, J. Object-contextual representations for semantic segmentation. In Proceedings of the Computer Vision-ECCV 2020: 16th European Conference, Glasgow, UK, 23–28 August 2020; Proceedings, Part VI; Springer International Publishing: Cham, Switzerland, 2020.

46. Kingma, D.P.; Ba, J. Adam: A method for stochastic optimization. arXiv 2014, arXiv:1412.6980.

47. Chollet, F. Xception: Deep Learning with Depthwise Separable Convolutions. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 1800–1807.

48. Bakurov, I.; Buzzelli, M.; Schettini, R.; Castelli, M.; Vanneschi, L. Structural similarity index (SSIM) revisited: A data-driven approach. Expert Syst. Appl. 2022, 189, 116087.

49. Cao, Q.; Shen, L.; Xie, W.; Parkhi, O.M.; Zisserman, A. VGGFace2: A Dataset for Recognising Faces Across Pose and Age. In Proceedings of the 13th IEEE International Conference on Automatic Face & Gesture Recognition (FG 2018), Xi’an, China, 15–19 May 2018; pp. 67–74.

| Methods | IoU | SER | NER | BER |
|---|---|---|---|---|
| U-Net [43] | 0.84 | 8.06 | 5.15 | 6.61 |
| U-Net++ [44] | 0.84 | 7.92 | 4.97 | 6.45 |
| OCRNet [45] | 0.83 | 8.98 | 4.83 | 6.91 |
| BDRAR [38] | 0.86 | 8.06 | 5.15 | 6.61 |
| DSC [47] | 0.83 | 8.67 | 3.67 | 6.21 |
| DSD [40] | 0.91 | 4.36 | 2.50 | 3.43 |
| FSDN | 0.92 | 3.06 | 2.81 | 2.94 |
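
The shadow-detection table reports IoU together with the shadow error rate (SER), non-shadow error rate (NER) and balanced error rate (BER), without restating their definitions. The sketch below assumes the convention that is common in the shadow-detection literature: SER = 100(1 − TP/N_p), NER = 100(1 − TN/N_n) and BER = (SER + NER)/2. Here N_p and N_n are the numbers of ground-truth shadow and non-shadow pixels. The function name `shadow_metrics` and the handling of empty masks are illustrative choices.

```python
import numpy as np


def shadow_metrics(pred: np.ndarray, gt: np.ndarray) -> dict[str, float]:
    """IoU, SER, NER and BER (in %) for binary shadow masks, True = shadow."""
    pred = np.asarray(pred, dtype=bool)
    gt = np.asarray(gt, dtype=bool)
    if pred.shape != gt.shape:
        raise ValueError("prediction and ground truth must have the same shape")
    tp = np.count_nonzero(pred & gt)      # shadow pixels detected as shadow
    tn = np.count_nonzero(~pred & ~gt)    # non-shadow pixels left unmarked
    n_pos = np.count_nonzero(gt)          # ground-truth shadow pixels
    n_neg = gt.size - n_pos               # ground-truth non-shadow pixels
    union = np.count_nonzero(pred | gt)
    # Assumed conventions for degenerate masks: empty vs empty counts as a
    # perfect match, and an absent class contributes zero error.
    iou = tp / union if union else 1.0
    ser = 100.0 * (1.0 - tp / n_pos) if n_pos else 0.0
    ner = 100.0 * (1.0 - tn / n_neg) if n_neg else 0.0
    return {"IoU": iou, "SER": ser, "NER": ner, "BER": (ser + ner) / 2.0}
```

Under this definition, BER is simply the mean of the two error rates. For example, FSDN's SER of 3.06 and NER of 2.81 average to 2.935, which is reported as 2.94.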

| Methods | SSIM, Yale B | SSIM, CMU-PIE | Face Recognition Error Rate, Yale B (%) | Face Recognition Error Rate, CMU-PIE (%) |
|---|---|---|---|---|
| HE | 0.42 | 0.54 | 2.9 | 0.3 |
| MSR (MSRCR) | 0.47 | 0.54 | 4.74 | 0.2 |
| Retinex-Net | 0.45 | 0.53 | 6.56 | 0.4 |
| Zero-DCE | 0.44 | 0.58 | 1.3 | 0.3 |
| This work | 0.48 | 0.59 | 1.3 | 0.2 |
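
SSIM compares the luminance, contrast and structure of two images. The text does not specify which SSIM variant or reference image was used, so the sketch below should be read only as an illustration of the formula. It computes a simplified single-window (global) SSIM with the usual constants C1 = (0.01L)² and C2 = (0.03L)², where L is the dynamic range. Standard SSIM averages the same expression over local Gaussian windows, as `skimage.metrics.structural_similarity` in scikit-image does. Values from this function are therefore not directly comparable with the table. The name `global_ssim` is illustrative.

```python
import numpy as np


def global_ssim(x: np.ndarray, y: np.ndarray, data_range: float = 1.0) -> float:
    """Single-window SSIM over the whole image (a simplification of windowed SSIM)."""
    x = np.asarray(x, dtype=np.float64)
    y = np.asarray(y, dtype=np.float64)
    if x.shape != y.shape:
        raise ValueError("images must have the same shape")
    c1 = (0.01 * data_range) ** 2  # stabilises the luminance term
    c2 = (0.03 * data_range) ** 2  # stabilises the contrast/structure term
    mu_x, mu_y = x.mean(), y.mean()
    var_x, var_y = x.var(), y.var()
    cov_xy = np.mean((x - mu_x) * (y - mu_y))
    numerator = (2 * mu_x * mu_y + c1) * (2 * cov_xy + c2)
    denominator = (mu_x ** 2 + mu_y ** 2 + c1) * (var_x + var_y + c2)
    return float(numerator / denominator)
```

An image compared with itself scores 1. An inverted copy scores below 0 because the covariance term turns negative.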

| Loss Term | SSIM, Yale B | SSIM, CMU-PIE | Face Recognition Error Rate, Yale B (%) | Face Recognition Error Rate, CMU-PIE (%) |
|---|---|---|---|---|
| | 0.38 | 0.35 | 0.542 | 37.2 |
| w/o Gaussian smoothing | 0.44 | 0.54 | 0.033 | 0.2 |
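
The "w/o Gaussian smoothing" row removes the Gaussian convolution that contribution (1) pairs with the shadow detector. The formula is not given here, so one plausible reading is assumed: a binary shadow mask is blurred into a continuous illumination map, so that enhancement strength changes gradually across shadow boundaries instead of jumping. The sketch below implements that step with a separable Gaussian in NumPy. The names `illumination_map` and `gaussian_blur`, the 3σ kernel truncation and the convention 1 = lit, 0 = shadow are all hypothetical. `scipy.ndimage.gaussian_filter` provides a comparable blur, though its default boundary handling and truncation differ slightly.

```python
import numpy as np


def gaussian_kernel1d(sigma: float) -> np.ndarray:
    """Normalised 1-D Gaussian kernel truncated at about 3 sigma."""
    radius = max(1, int(round(3.0 * sigma)))
    t = np.arange(-radius, radius + 1, dtype=np.float64)
    kernel = np.exp(-0.5 * (t / sigma) ** 2)
    return kernel / kernel.sum()


def gaussian_blur(image: np.ndarray, sigma: float) -> np.ndarray:
    """Separable Gaussian blur with mirrored borders; output keeps the input shape."""
    kernel = gaussian_kernel1d(sigma)
    r = kernel.size // 2
    padded = np.pad(np.asarray(image, dtype=np.float64), r, mode="symmetric")

    def conv(v: np.ndarray) -> np.ndarray:
        return np.convolve(v, kernel, mode="valid")

    rows = np.apply_along_axis(conv, 1, padded)  # blur along x
    return np.apply_along_axis(conv, 0, rows)    # then along y


def illumination_map(shadow_mask: np.ndarray, sigma: float = 2.0) -> np.ndarray:
    """Hypothetical illumination map: 1 = lit, 0 = shadow, softened by a Gaussian."""
    lit = 1.0 - np.asarray(shadow_mask, dtype=np.float64)
    if sigma <= 0:
        return lit  # the "w/o Gaussian smoothing" variant: hard 0/1 steps
    return gaussian_blur(lit, sigma)
```

A pixel-adaptive curve driven by the unsmoothed map would switch its strength abruptly at the shadow edge. That is one possible explanation for the lower SSIM in this row (0.44 and 0.54, compared with 0.48 and 0.59 for the full method on Yale B and CMU-PIE), though the paper's own explanation may differ.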

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Yang, J.; Lu, Y.; Liu, J.; Yi, J. Symmetry-Aware Face Illumination Enhancement via Pixel-Adaptive Curve Mapping. Symmetry 2025, 17, 1560. https://doi.org/10.3390/sym17091560

Chicago/Turabian Style: Yang, Jieqiong, Yumeng Lu, Jiaqi Liu, and Jizheng Yi. 2025. "Symmetry-Aware Face Illumination Enhancement via Pixel-Adaptive Curve Mapping" Symmetry 17, no. 9: 1560. https://doi.org/10.3390/sym17091560