In this section, a stock price trend dataset is used as an example to test the validity of the prediction model based on HBRB-b. Section 5.1 introduces the dataset. Section 5.2 develops a stock price trend prediction model based on HBRB-b. Section 5.3 verifies the interpretability of the model. Section 5.4 reports the cross-validation, and Section 5.5 presents a comparative experiment.

5.1. Introduction to the Dataset

This research used the dataset available at https://www.kaggle.com/datasets/gelasiusgalvindy/stock-indices-around-the-world (accessed on 11 September 2025). The dataset, collected from Yahoo Finance, consists of daily stock trading indicators for the period from 17 July 2017 to 2 July 2022. It includes daily price data for multiple major global stock indices, including the Dow Jones Industrial Average (DJI), the S&P 500 Index (SPX), the Nasdaq Composite Index (IXIC), the CBOE Volatility Index (VIX), the FTSE 100 Index (FTSE), the Paris CAC40 Index (FCHI), the European STOXX 600 Index (STOXX), the Dutch AEX Index, the Spanish IBEX Index, the Russian MOEX Index, the Turkish BIST Index, the Hong Kong Hang Seng Index (HSI), and the Shanghai Composite Index (SSE), among others. Stock data exhibit nonlinearity, non-stationarity, and high complexity. A stock price prediction system should therefore not only handle uncertain information effectively but also be interpretable, so that its predictions are credible. Detailed descriptions of the selected indicators are given in Table 1. The dataset includes 1280 index data points; the first 60% are used as training data and the last 40% as test data.
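Because the split keeps the first 60% for training and the last 40% for testing, it is chronological rather than random. A minimal sketch of such a split, assuming the records are already sorted by date (the function name is illustrative):

```python
def chronological_split(series, train_ratio=0.6):
    """Split a time-ordered sequence into a leading training part and a trailing test part.

    No shuffling is performed, so the test set always lies after the training set in time.
    """
    n_train = int(round(len(series) * train_ratio))
    return series[:n_train], series[n_train:]


train, test = chronological_split(list(range(1280)))
print(len(train), len(test))  # 768 512
```

With 1280 records this yields 768 training and 512 test samples, matching the counts reported in Section 5.2.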

To prepare the data for modeling, the following preprocessing steps were applied:

Handling Missing Values: The dataset was first inspected for missing or anomalous values. Any days with missing entries were removed from the analysis to ensure data integrity. This resulted in a clean dataset of 1280 consecutive trading days.

Normalization: Because the features differ in units and numerical ranges, model training can converge more slowly. The data were therefore normalized to the range 0 to 1 using the formula given in Formula (21).
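Formula (21) is not reproduced in this section. The sketch below assumes the common min-max form x' = (x − min) / (max − min), applied column by column to the four price features; the function name is illustrative.

```python
import numpy as np


def min_max_scale(X):
    """Scale each column of X to [0, 1] with min-max normalization (assumed form of Formula (21))."""
    X = np.asarray(X, dtype=float)
    col_min = X.min(axis=0)
    col_range = X.max(axis=0) - col_min
    col_range[col_range == 0] = 1.0  # avoid division by zero for constant columns
    return (X - col_min) / col_range


# Each row: one day; each column: one feature (e.g., open, close, high, low).
print(min_max_scale([[1, 10], [3, 20], [2, 30]]))
```

In practice, computing the minimum and maximum on the training portion only and reusing them for the test portion avoids leaking future information into the model; whether the authors did so is not stated here.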

Figure 4 presents the relationship between the four normalized attributes and the stock price of the next day.

5.2. Construction and Optimization of Stock Price Trend Prediction Model Based on HBRB-b

The proposed HBRB-b model for stock price prediction is configured as follows:

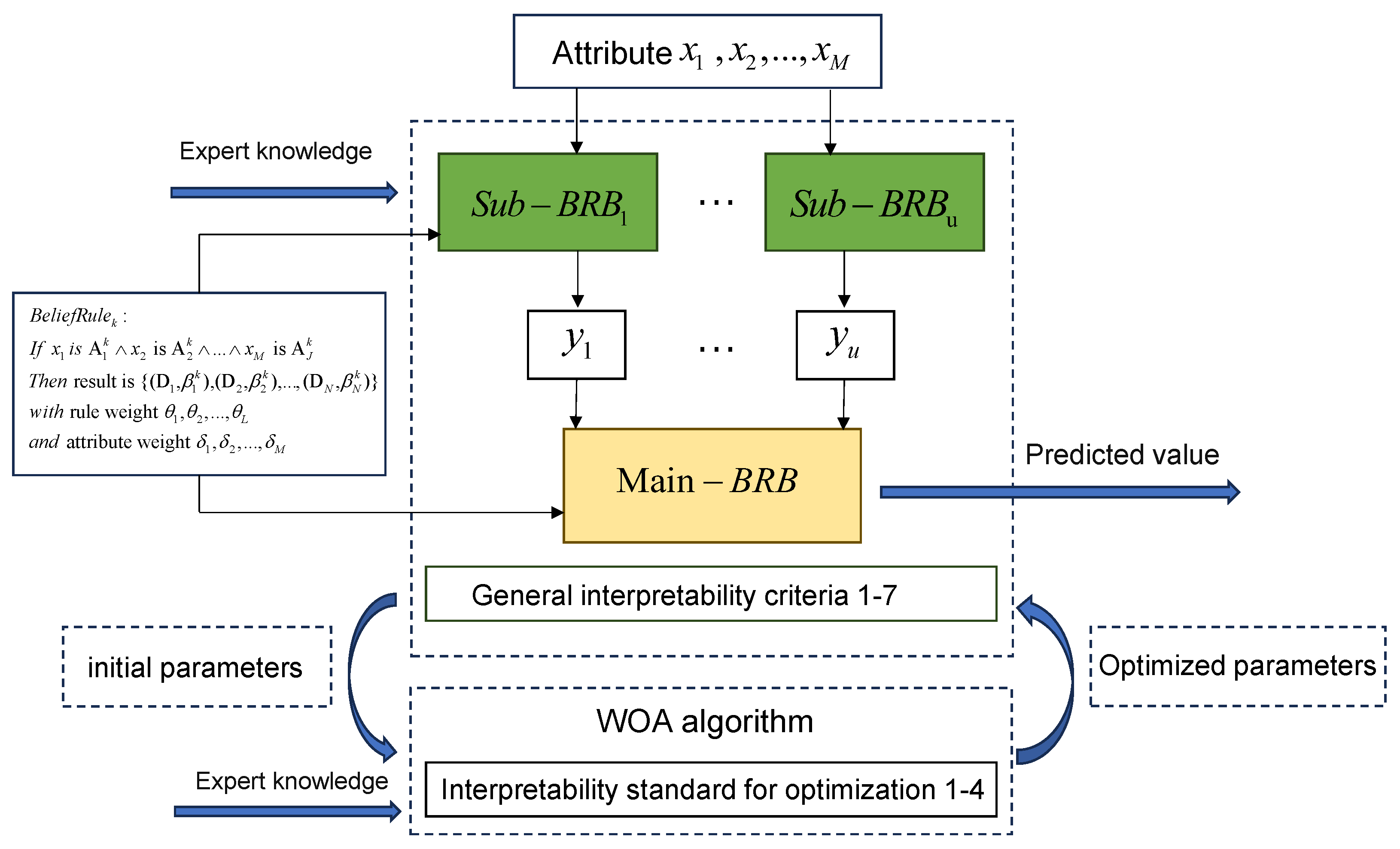

Input Attributes: Four input features are selected: the normalized daily opening price, the normalized daily closing price, the normalized daily high price, and the normalized daily low price. These features are divided into two groups.

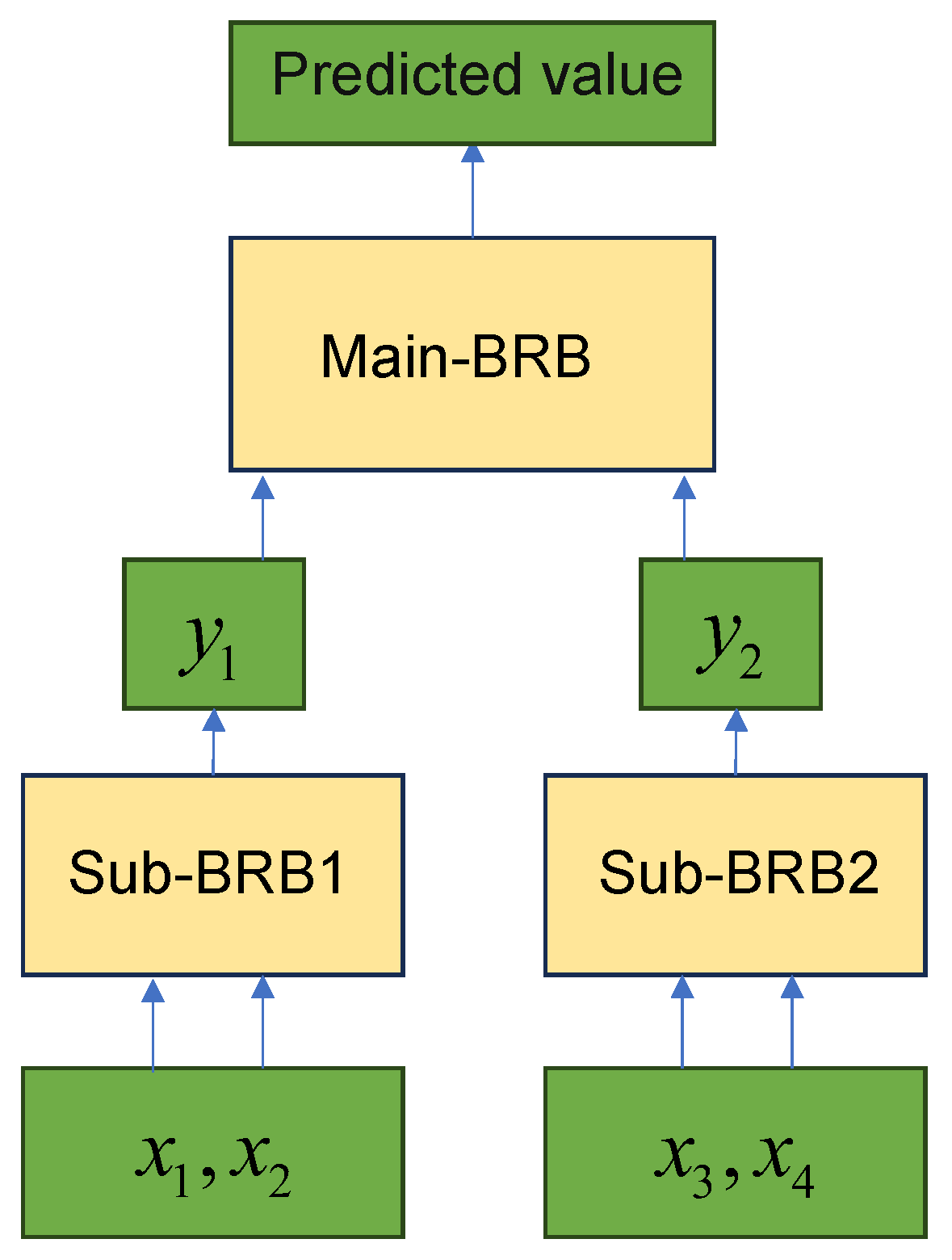

Sub-BRB1: Takes the two features of the first group as inputs and produces an intermediate result y1. Sub-BRB2: Takes the two features of the second group as inputs and produces an intermediate result y2. The Main-BRB integrates these results to output the final prediction of the next day’s normalized closing price. Using daily data enables the model to capture short-term market trends and volatility, which are essential for daily trading decisions.

Rule Base Size: Each sub-BRB contains 25 rules (5 reference points for input 1 × 5 reference points for input 2). The Main-BRB also contains 25 rules. This structure effectively reduces the total number of rules from $5^4 = 625$ (in a traditional BRB) to 25 + 25 + 25 = 75, demonstrating its advantage in combating combinatorial explosion.

Table 2 compares the complexity of different numbers of attributes for the traditional BRB model and the HBRB-b model.

The HBRB-b model fundamentally improves scalability through its hierarchical structure. As the comparative analysis shows, the number of rules in a traditional BRB grows exponentially with the number of input features, leading to a combinatorial explosion when the number of features is large. HBRB-b reduces this complexity to a polynomial level, generating only hundreds or, at most, thousands of rules under the same conditions, a reduction of several orders of magnitude. This significantly enhances the model’s ability to handle high-dimensional problems.
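The rule-count arithmetic can be reproduced with a short sketch. It assumes that every attribute, and every intermediate output, has 5 reference values, and that the hierarchy is a binary tree of two-input BRBs, so M attributes need M − 1 BRBs of 25 rules each. This grouping is an illustrative assumption and may differ from the configurations behind Table 2.

```python
def traditional_rules(n_attrs, n_refs=5):
    """Rules in a flat BRB: one rule per combination of reference values."""
    return n_refs ** n_attrs


def hierarchical_rules(n_attrs, n_refs=5):
    """Rules in a binary tree of two-input BRBs (assumed structure).

    A binary tree with n_attrs leaves has n_attrs - 1 internal nodes,
    and each two-input BRB holds n_refs ** 2 rules.
    """
    if n_attrs < 2:
        raise ValueError("need at least two attributes")
    return (n_attrs - 1) * n_refs ** 2


for m in (4, 6, 8, 10):
    print(m, traditional_rules(m), hierarchical_rules(m))
```

For the four attributes used here, the sketch gives 625 rules for the flat BRB versus 75 for the hierarchy.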

Output: The Main-BRB outputs the predicted trend as a belief distribution over the linguistic terms VL, L, M, H, and VH, which is then defuzzified into a numerical value using utility scores.

To construct the initial model based on HBRB-b, belief rules need to be formulated. According to the evaluation of experts, five semantic levels—“Very Low” (VL), “Low” (L), “Medium” (M), “High” (H), and “Very High” (VH)—were selected to represent the state of the system, as shown in Formula (22).
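The output belief distribution over these five levels is turned into a number through utility scores. The sketch below assumes the usual expected-utility form y = Σ u(Dₙ) βₙ and a complete distribution (beliefs summing to 1). The utility values are hypothetical placeholders for the output reference values in Table 3.

```python
import numpy as np

# Hypothetical utilities for VL, L, M, H, VH; the actual output reference values are in Table 3.
UTILITIES = np.array([0.0, 0.25, 0.5, 0.75, 1.0])


def defuzzify(beliefs, utilities=UTILITIES):
    """Expected utility y = sum_n u(D_n) * beta_n of a complete belief distribution."""
    beliefs = np.asarray(beliefs, dtype=float)
    return float(np.dot(utilities, beliefs))


# Optimized belief distribution of Rule 25 (Section 5.3).
print(round(defuzzify([0, 0, 0.025, 0.098, 0.877]), 4))  # 0.963
```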

This model consists of two sub-rule bases, named sub-BRB1 and sub-BRB2. Each sub-rule base integrates one group of two attributes and generates an output, y1 or y2, respectively. The top-level BRB, called the Main-BRB, integrates the outputs of the sub-BRBs to infer the final prediction. The structure of the model is shown in Figure 5.
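To make the inference step of one two-input sub-BRB concrete, here is a minimal sketch. It assumes the standard BRB inference scheme: rule activation as the product of the two inputs’ matching degrees, followed by the analytical evidential reasoning (ER) combination. All rule and attribute weights are set to 1. The rule ordering k = i·5 + j, the function names, and the uniform belief table are illustrative assumptions; the paper’s own inference equations and trained parameters are not reproduced here.

```python
import numpy as np


def activation_weights(alpha1, alpha2, theta=None):
    """Normalized activation weight of each rule in a two-input sub-BRB.

    Assumes rule k corresponds to reference levels (i, j) with k = i * len(alpha2) + j,
    and attribute weights of 1, so the exponent in the usual formula drops out.
    """
    joint = np.outer(alpha1, alpha2).ravel()  # joint matching degree of each rule
    theta = np.ones_like(joint) if theta is None else np.asarray(theta, dtype=float)
    w = theta * joint
    total = w.sum()
    return w / total if total > 0 else w


def er_aggregate(w, beta):
    """Analytical ER combination of all rules.

    w: (L,) activation weights; beta: (L, N) belief degrees of each rule over N grades.
    """
    w = np.asarray(w, dtype=float)[:, None]      # shape (L, 1)
    beta = np.asarray(beta, dtype=float)         # shape (L, N)
    n_grades = beta.shape[1]
    row_sum = beta.sum(axis=1, keepdims=True)    # total belief assigned by each rule
    a = np.prod(w * beta + 1.0 - w * row_sum, axis=0)  # one product per grade
    b = np.prod(1.0 - w * row_sum)
    c = np.prod(1.0 - w)
    mu = 1.0 / (a.sum() - (n_grades - 1) * b)
    return mu * (a - b) / (1.0 - mu * c)


# Hypothetical belief table: every rule uniform except Rule 7 (index 6),
# which uses the initial expert beliefs quoted in Section 5.3.
beta = np.full((25, 5), 0.2)
beta[6] = [0.2, 0.7, 0.1, 0.0, 0.0]
alpha = np.array([0.0, 1.0, 0.0, 0.0, 0.0])  # both inputs sit exactly on "L"
print(er_aggregate(activation_weights(alpha, alpha), beta))  # reproduces Rule 7's beliefs
```

When a single rule is fully activated, the ER combination returns that rule’s beliefs unchanged. When several rules fire, their beliefs are fused into one distribution over VL to VH.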

Based on expert knowledge and a detailed analysis of the indicator data, five reference values were defined for each indicator. The matching degrees between the current input and these reference values each lie in [0, 1], and their sum is at most 1.
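The matching degrees can be computed with the rule-based input transformation commonly used in BRB models, which is assumed here. An input lying between two adjacent reference values is split linearly between them, and inputs outside the range are clipped to the end points. Under this scheme, the degrees sum to exactly 1, within the bound stated above. The reference values in the example are hypothetical placeholders for those in Table 3.

```python
import numpy as np


def matching_degrees(x, refs):
    """Distribute input x over sorted reference values refs (piecewise-linear transformation)."""
    refs = np.asarray(refs, dtype=float)
    alpha = np.zeros(len(refs))
    if x <= refs[0]:
        alpha[0] = 1.0                       # below the lowest reference value
    elif x >= refs[-1]:
        alpha[-1] = 1.0                      # above the highest reference value
    else:
        j = int(np.searchsorted(refs, x, side="right")) - 1  # refs[j] <= x < refs[j + 1]
        span = refs[j + 1] - refs[j]
        alpha[j] = (refs[j + 1] - x) / span
        alpha[j + 1] = (x - refs[j]) / span
    return alpha


REFS = [0.0, 0.25, 0.5, 0.75, 1.0]  # hypothetical VL, L, M, H, VH reference values
print(matching_degrees(0.3, REFS))   # mostly "L", partly "M"
```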

Table 3 shows the reference points and reference values of the input indicators and output results. Furthermore, the stock price prediction model based on HBRB-b is constructed using professional experience in the field, and Appendix B.1 lists the initial rule configuration of this model.

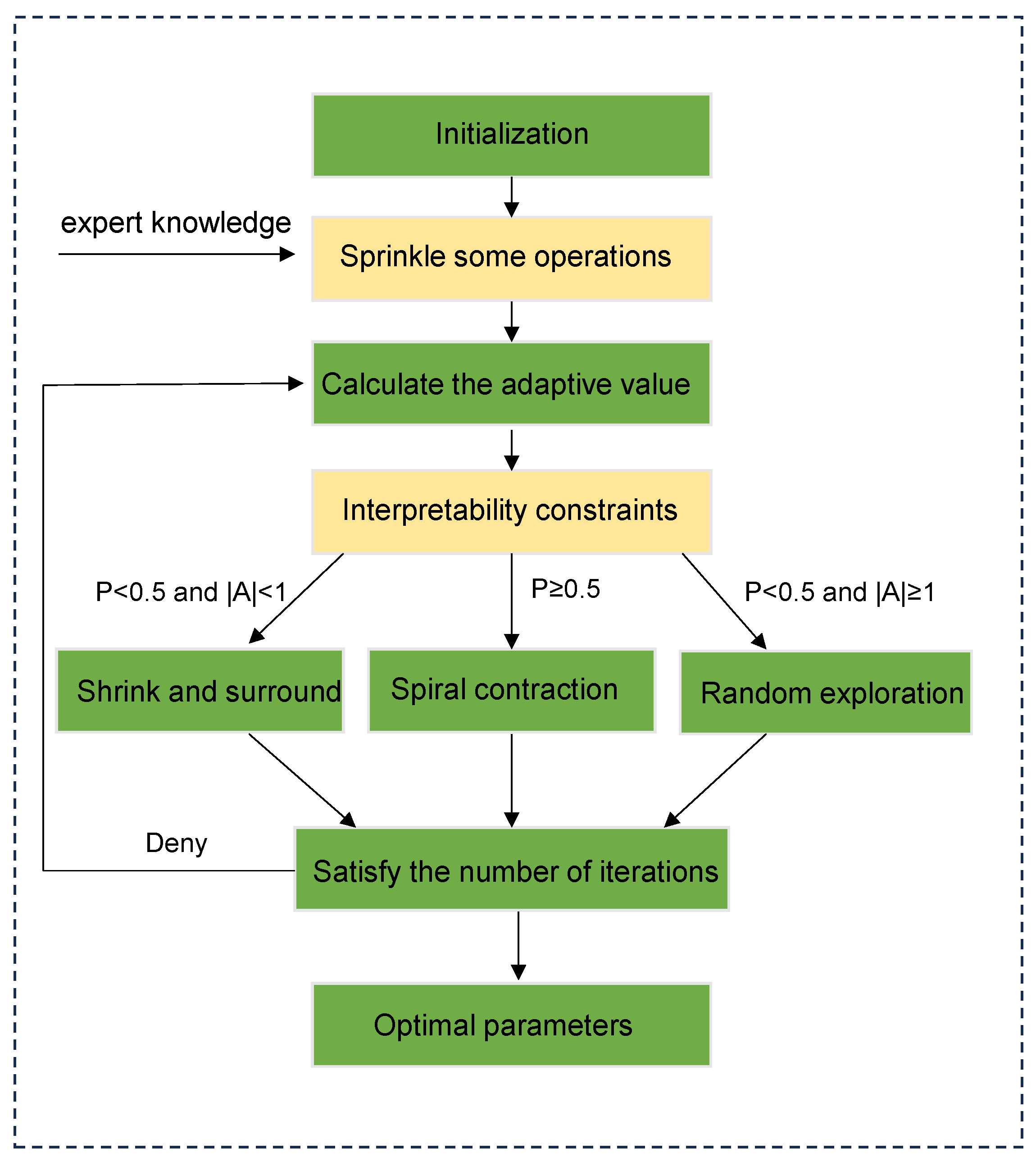

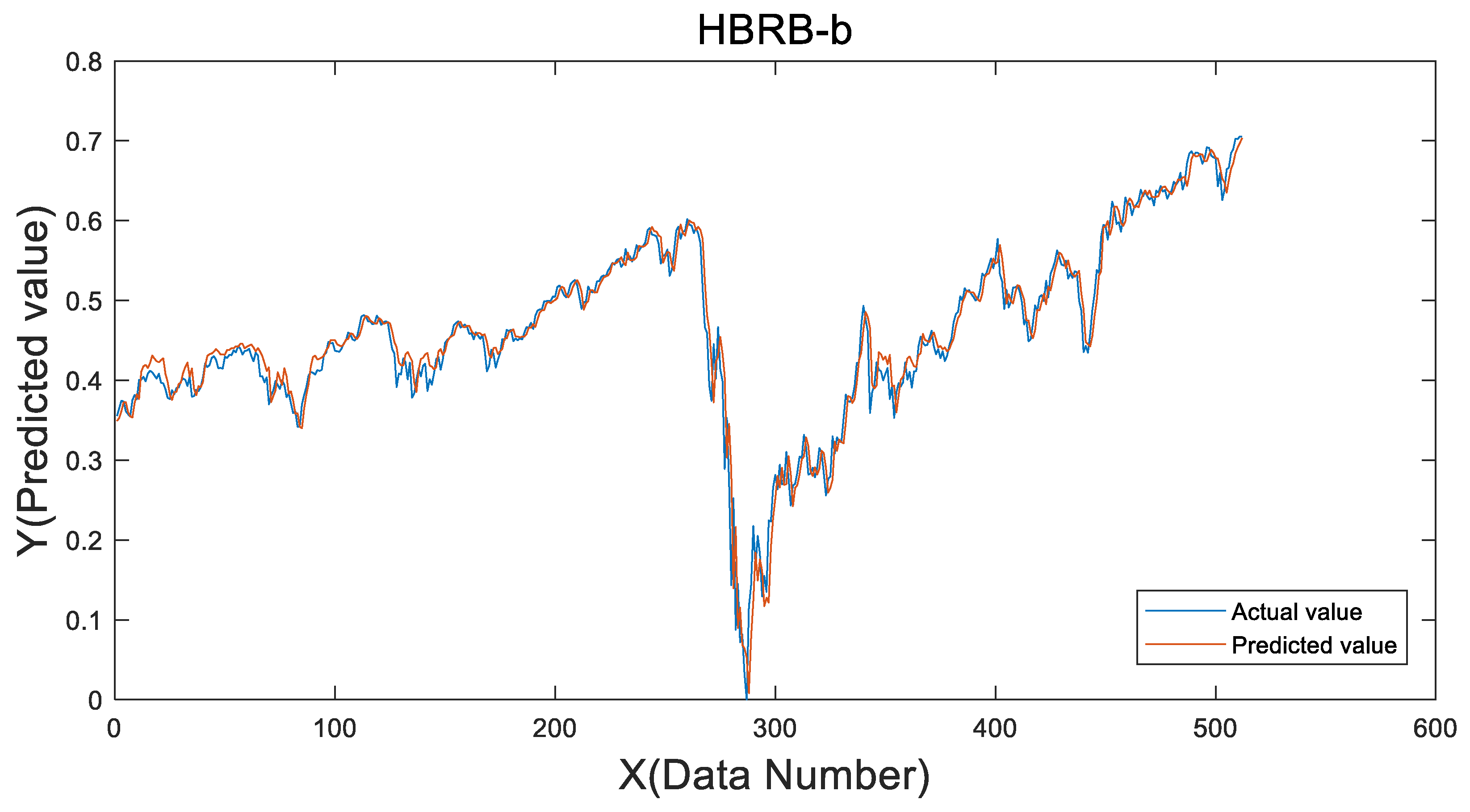

The initial model was optimized using the improved WOA. Because the results obtained by optimizing each sub-BRB separately often lack comprehensiveness, a global optimization approach was adopted to avoid falling into local optima. The initial parameters of the WOA are set as follows: a population size of 20, 400 iterations, and an optimization dimension of 152. The stock price trend dataset contains 1280 sets of data, of which 768 are used for training and the remaining 512 for testing. The prediction results of the optimized model are shown in Figure 6, and the mean square error between the predicted and actual values is $4.5392 \times 10^{-4}$. This indicates that the HBRB-b model fits the data well and produces accurate predictions. The optimized rule parameters are detailed in Appendix B.2.
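For orientation, the basic whale optimization algorithm (encircling, random search, and bubble-net spiral updates, with the coefficient a decreasing linearly from 2 to 0) can be sketched as follows. This is the standard WOA, not the improved variant used in this study, whose modifications are not described in this section. The BRB-specific constraints (for example, each rule’s beliefs summing to 1) are not enforced. The toy objective stands in for the training MSE. Only the population size of 20, the 400 iterations, and the dimension of 152 come from the text; everything else is an assumption.

```python
import numpy as np


def woa_minimize(objective, dim, n_whales=20, n_iter=400, lb=0.0, ub=1.0, b=1.0, seed=0):
    """Basic whale optimization algorithm; returns (best position, best value, initial best value)."""
    rng = np.random.default_rng(seed)
    X = rng.uniform(lb, ub, size=(n_whales, dim))
    fitness = np.array([objective(x) for x in X])
    best = X[fitness.argmin()].copy()
    best_f = float(fitness.min())
    init_best_f = best_f
    for t in range(n_iter):
        a = 2.0 - 2.0 * t / n_iter                # decreases linearly from 2 to 0
        for i in range(n_whales):
            A = 2.0 * a * rng.random() - a
            C = 2.0 * rng.random()
            l = rng.uniform(-1.0, 1.0)
            if rng.random() < 0.5:
                # |A| < 1: encircle the best whale; otherwise explore around a random whale
                ref = best if abs(A) < 1.0 else X[rng.integers(n_whales)]
                X[i] = ref - A * np.abs(C * ref - X[i])
            else:
                # bubble-net attack: logarithmic spiral around the best whale
                dist = np.abs(best - X[i])
                X[i] = dist * np.exp(b * l) * np.cos(2.0 * np.pi * l) + best
            X[i] = np.clip(X[i], lb, ub)          # keep parameters inside their bounds
            f = objective(X[i])
            if f < best_f:                        # greedily track the best solution found
                best, best_f = X[i].copy(), f
    return best, best_f, init_best_f


def sphere(x):
    """Toy objective standing in for the training MSE of the rule base."""
    return float(np.sum((x - 0.3) ** 2))


best, best_f, init_f = woa_minimize(sphere, dim=152)
print(init_f, best_f)
```

This sketch evaluates each whale immediately after it moves, a common simplification of the original synchronous update.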

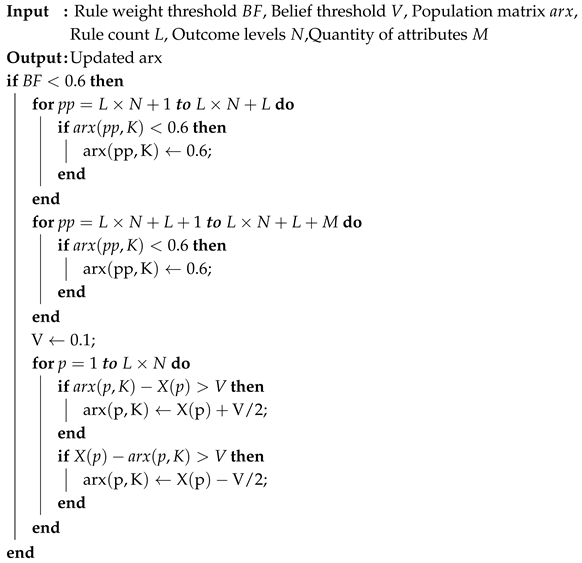

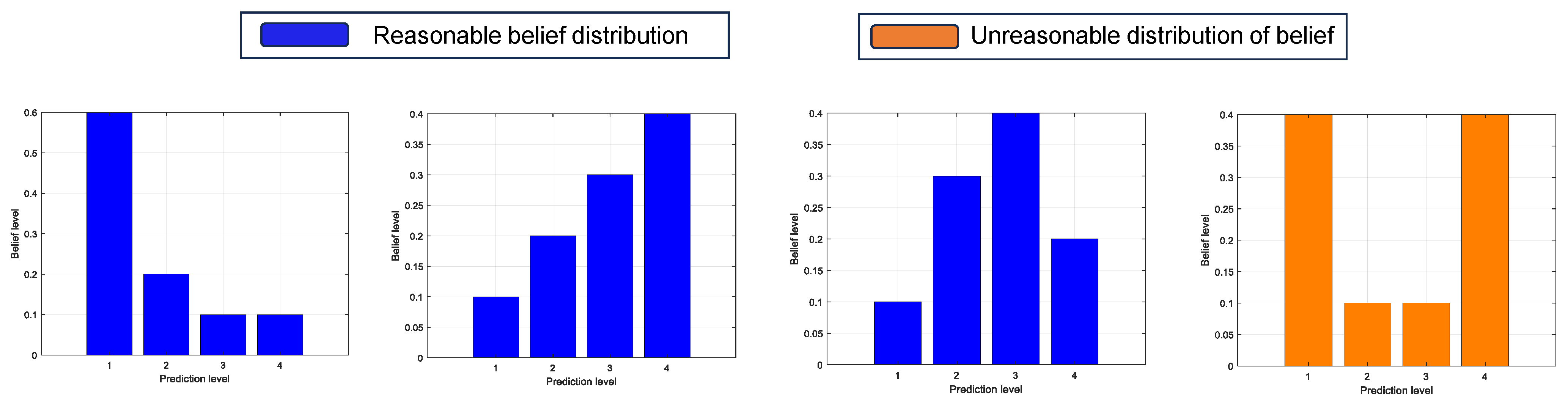

5.3. Interpretability Analysis

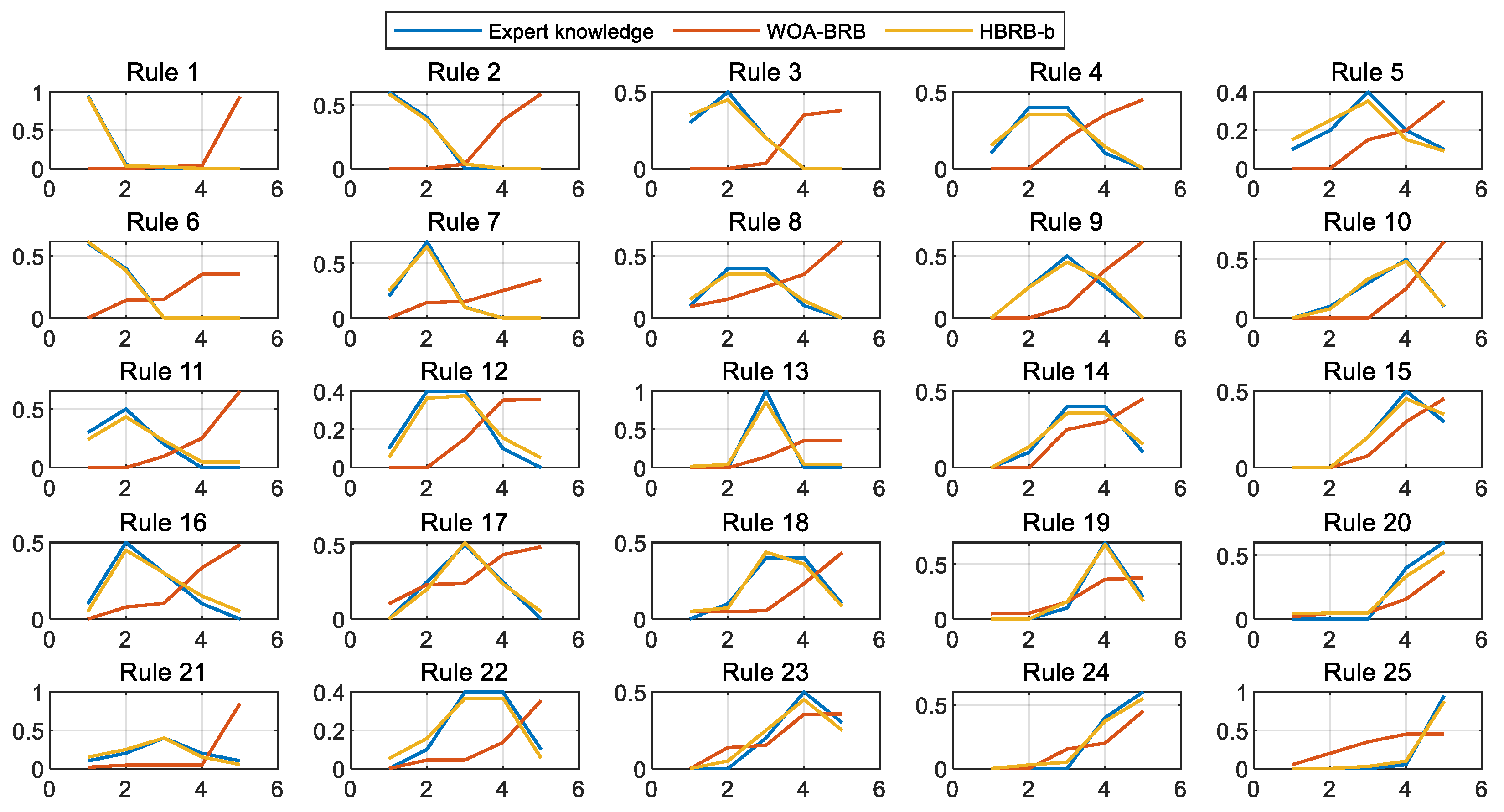

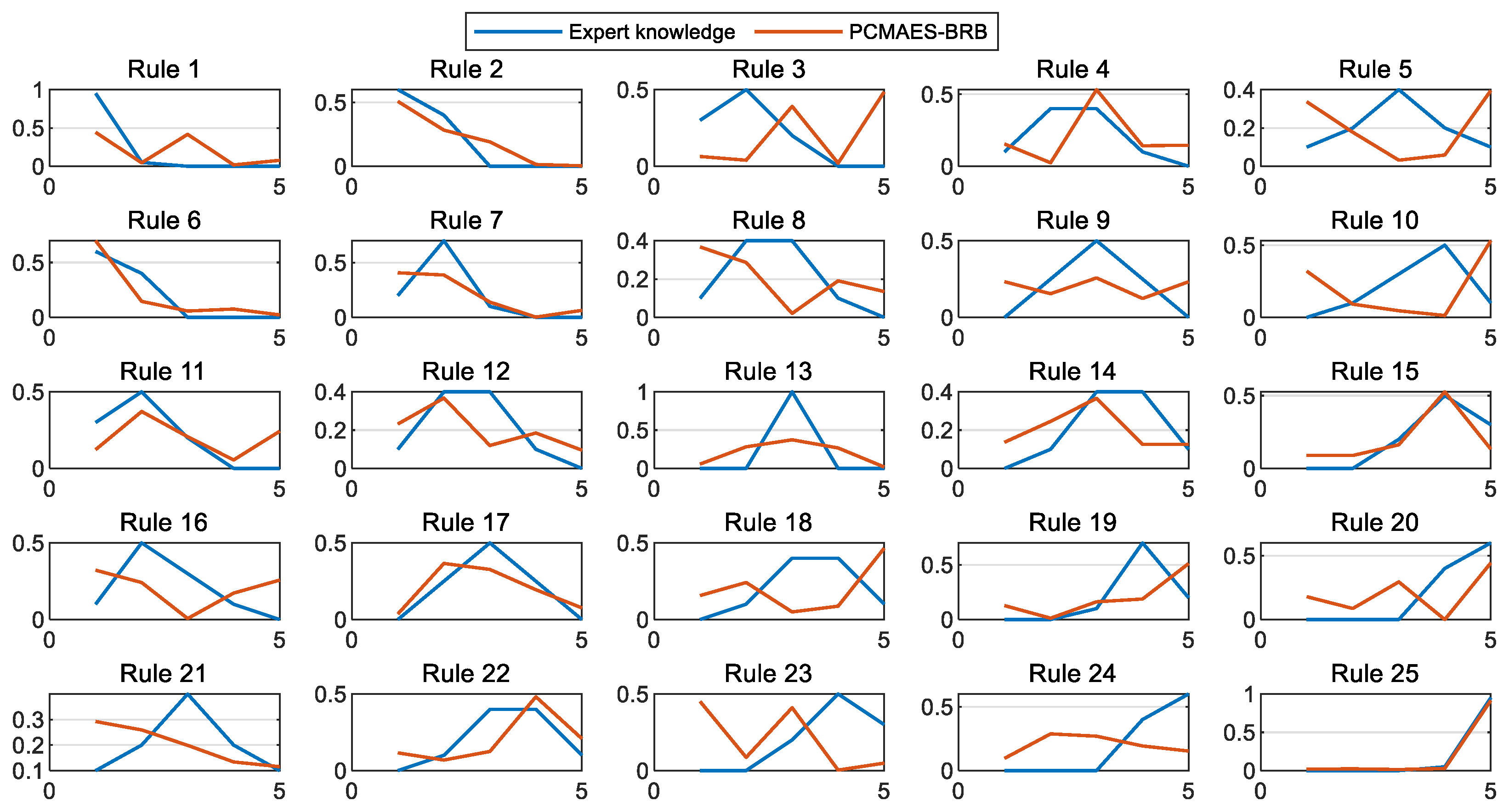

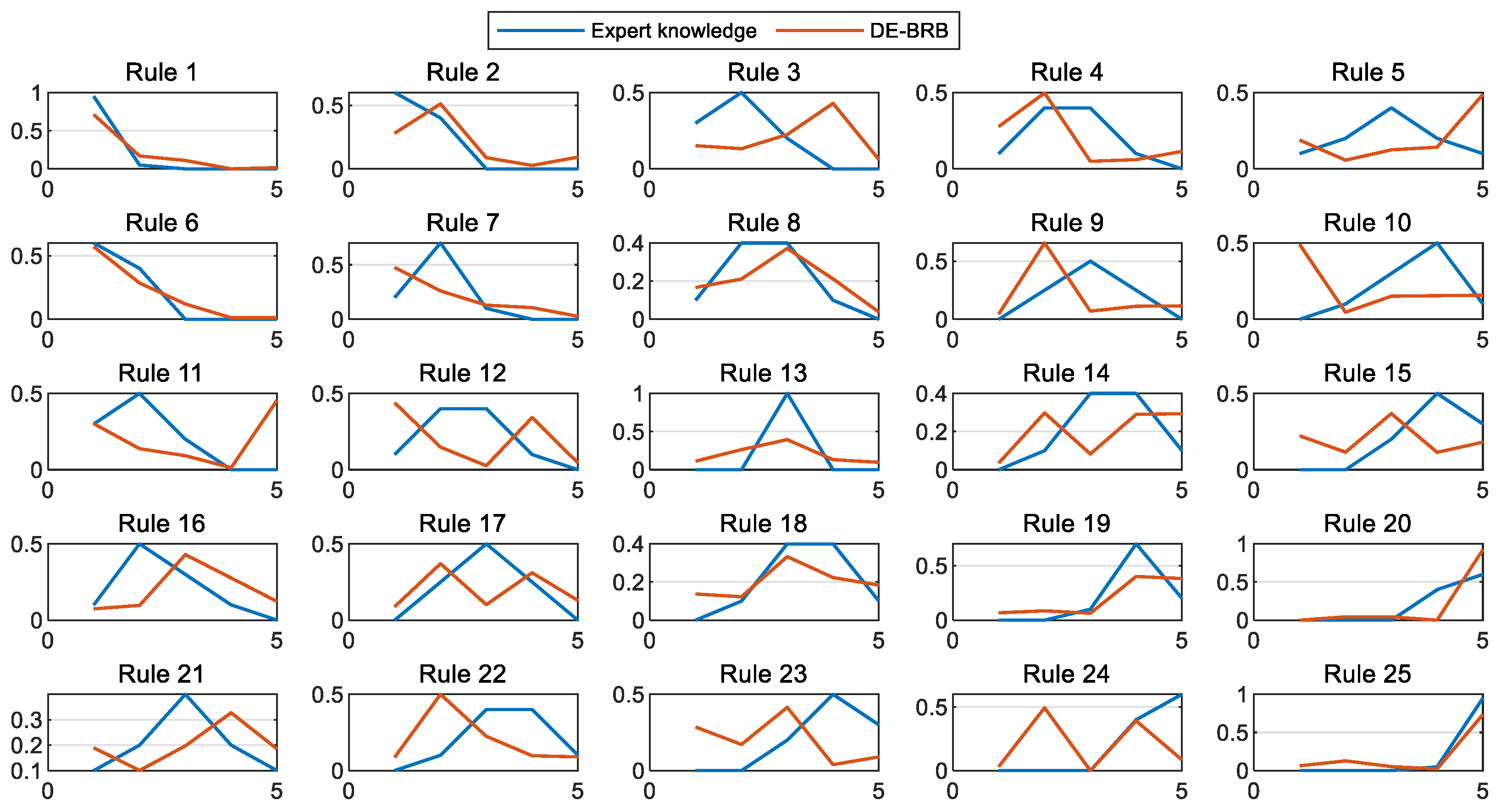

To evaluate the interpretability of the models, this section compares the PCMAES-BRB, DE-BRB, WOA-BRB, and HBRB-b models, with a focus on the belief distributions of their initial and optimized rules. As shown in Figure 7, Figure 8, and Figure 9, the optimized belief distribution of the HBRB-b model remains consistent with expert knowledge. This demonstrates that the model’s interpretability is effectively maintained and that the optimized rules retain high credibility. In contrast, the other three models deviated significantly from the initial expert judgments during optimization, substantially weakening their interpretability.

Derived from the Pythagorean theorem, the Euclidean distance measures the straight-line distance between points in a multidimensional space. It is computed as the square root of the sum of squared differences across all dimensions. It is intuitive, symmetric, and non-negative, and it satisfies the triangle inequality. In machine learning, it is widely used to measure the similarity and proximity of data points. The interpretability of the HBRB-b model stems from its foundation in expert knowledge: financial analysts and investment practitioners systematically transform accumulated experience into belief rules. These rules abstract the factors that influence stock prices and potential market behaviors, formalizing expert insights into rule parameters with explicit practical meaning. By keeping the optimized rules and parameters consistent with expert judgments, the HBRB-b model maintains its interpretability in stock price prediction tasks. This study therefore uses the Euclidean distance between the optimized parameters and the initial expert-given parameters as the core metric of interpretability, calculated as in Equation (8). The comparative results in Table 4 show that the HBRB-b model minimizes the deviation from expert knowledge while preserving prediction accuracy. It thus strikes a balance between optimization and interpretability that is particularly valuable in financial decision-making scenarios requiring both transparency and reliability. The mathematical properties of the Euclidean distance make it well suited to verifying the consistency between data-driven optimization and domain expertise.
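As a worked illustration, the distance can be computed for a single rule using the Rule 7 beliefs reported later in this section. The sketch assumes that Equation (8) applies the standard Euclidean distance to the initial and optimized parameters flattened into vectors. For the whole model, the same function would be applied to all rule parameters at once.

```python
import numpy as np


def euclidean_distance(initial, optimized):
    """Euclidean distance between expert-given and optimized parameters, flattened into vectors."""
    p = np.ravel(np.asarray(initial, dtype=float))
    q = np.ravel(np.asarray(optimized, dtype=float))
    return float(np.sqrt(np.sum((q - p) ** 2)))


rule7_initial = [0.2, 0.7, 0.1, 0.0, 0.0]
rule7_optimized = [0.241, 0.621, 0.092, 0.029, 0.014]
print(round(euclidean_distance(rule7_initial, rule7_optimized), 4))  # 0.095
```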

During the optimization process, the HBRB-b model is centered on expert knowledge. Through precise parameter adjustment and rule optimization, the Euclidean distance between the optimized rules and expert knowledge is minimized. This minimization is not only numerical: it also means that the optimized rules remain highly consistent with the experts’ empirical judgment in logic and structure, thereby meeting interpretability Standards 1 and 2. Furthermore, Appendix B.2 shows that rules 2, 3, 4, 5, 6, 10, 15, and 16 did not participate in the optimization, indicating that these rules were not activated; this meets interpretability Standard 3. Meanwhile, the optimized belief distributions are monotonic or convex in shape, which conforms to interpretability Standard 4. Therefore, the HBRB-b model shows the best interpretability among all the compared models and can provide users with a more intuitive, reliable, and understandable basis for decision-making.

To analyze the optimized rules in detail, we examined the specific parameter changes between Appendix B.1 and Appendix B.2 and interpreted them in financial terms. The examples below indicate that the optimization process refined rather than overturned expert knowledge.

Rule 7: The initial belief distribution was (0.2, 0.7, 0.1, 0, 0), placing most of the belief on a “Low” outcome. After optimization, the distribution became (0.241, 0.621, 0.092, 0.029, 0.014). Although belief is now spread across more levels, the core semantics are preserved and refined: the model still assigns the highest belief (62.1%) to “Low” and the second highest (24.1%) to “Very Low”. Two “Low” signals most likely lead to a “Low” outcome rather than exclusively a “Very Low” one, which is a financially reasonable adjustment.

Rule 12: The initial belief distribution for this input scenario was a neutral (0.1, 0.4, 0.4, 0.1, 0). Optimization adjusted it to (0.049, 0.366, 0.381, 0.153, 0.050), breaking the tie between “Low” and “Medium” in favor of “Medium” while keeping about three quarters of the belief on these two central levels. When all signals are average, the most probable outcome is therefore a stable “Medium” state, which is readily interpretable.

Rule 25: The initial rule was (0, 0, 0, 0.05, 0.95), and the optimized rule is (0, 0, 0.025, 0.098, 0.877). The model learns from historical data that, even under the most extreme bullish signals, the market rarely moves in one direction with certainty. It therefore slightly reduces the belief in “Very High” (from 95% to 87.7%) and reallocates the freed belief (approximately 7.3%) to the “Medium” and “High” outcomes. This does not create a distribution that contradicts financial logic (for example, one that assigns belief to both extremely low and extremely high outcomes). Instead, the belief still increases monotonically from “Medium” to “Very High”.

This examination of individual rules illustrates that the HBRB-b model’s interpretability is not merely a theoretical claim backed by aggregate metrics but is visible in the rule base itself. The optimization process acts as a collaborator to the expert: it adjusts the initial beliefs into more accurate distributions, discovers nuanced patterns within financially logical boundaries, and leaves rules that are never activated untouched. The result is a rule base that is both more accurate and transparently explainable to a financial analyst.

A pertinent consideration is the potential trade-off between interpretability and adaptability, especially during extreme market events such as financial crises. In calm markets, unconstrained models may achieve high accuracy by overfitting to subtle noise and transient correlations, patterns that typically break down during regime shifts. In contrast, the HBRB-b model captures fundamental, robust relationships encoded in its expert rules, such as the link between a high opening price combined with a narrow trading range and downward pressure, which are more likely to persist in both normal and crisis periods. Therefore, rather than impairing performance, these interpretability constraints are likely to shield the model from overfitting and enhance its generalization during periods of market stress. This hypothesis is supported by the model’s superior stability in our robustness analysis (Section 5.5.2).

5.4. Cross-Validation

Cross-validation is vital for model performance evaluation because it estimates how reliably a model performs on unseen data. The method divides the dataset into multiple training and validation sets, so that the model is repeatedly trained and validated on different data combinations. This provides a more accurate assessment of generalization ability and helps detect overfitting to the training data.

The study adopted a systematic 5-fold cross-validation process. The complete dataset was evenly partitioned into five subsets, each comprising 20% of the total data. During each iteration, one subset served as the test set while the remaining four subsets formed the training set. This procedure resulted in five independent model training cycles, followed by a comprehensive analysis of all training outcomes.
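The partitioning can be sketched as follows. The sketch assumes contiguous, unshuffled folds of equal size; the text does not state whether the data were shuffled before partitioning, and the function name is illustrative.

```python
def five_fold_indices(n_samples, k=5):
    """Return (train_indices, test_indices) for k contiguous folds; the last fold absorbs any remainder."""
    fold_size = n_samples // k
    folds = []
    for f in range(k):
        start = f * fold_size
        stop = n_samples if f == k - 1 else start + fold_size
        test_idx = list(range(start, stop))
        train_idx = list(range(0, start)) + list(range(stop, n_samples))
        folds.append((train_idx, test_idx))
    return folds


for train_idx, test_idx in five_fold_indices(1280):
    print(len(train_idx), len(test_idx))  # 1024 training and 256 test samples per fold
```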

The experimental analysis compared four stock price trend prediction models: PCMAES-BRB, DE-BRB, WOA-BRB, and HBRB-b. Mean square error (MSE), calculated as in Equation (23), was selected as the primary evaluation metric. The results in Table 5 demonstrate that the HBRB-b model achieves superior generalization performance for stock price trend prediction while avoiding overfitting.
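A minimal sketch of the evaluation is given below, assuming the standard definition MSE = (1/n) Σ (yᵢ − ŷᵢ)² for Equation (23). It also summarizes the per-fold scores by their mean and standard deviation. How Table 5 aggregates the five folds is not stated here, so this summary is an assumption.

```python
import numpy as np


def mse(y_true, y_pred):
    """Mean square error between actual and predicted values."""
    y_true = np.asarray(y_true, dtype=float)
    y_pred = np.asarray(y_pred, dtype=float)
    return float(np.mean((y_true - y_pred) ** 2))


def cross_validated_mse(fold_pairs):
    """Mean and (population) standard deviation of per-fold MSE; fold_pairs holds (y_true, y_pred)."""
    scores = [mse(t, p) for t, p in fold_pairs]
    return float(np.mean(scores)), float(np.std(scores))


print(mse([0, 1, 2], [0, 1, 4]))  # one miss of size 2 among three points
```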

5.5. Comparative Experiment

5.5.1. Performance Analysis

The comparative analysis evaluated seven models: PCMAES-BRB, DE-BRB, WOA-BRB, Backpropagation Neural Network (BP), Radial Basis Function (RBF), Random Forest (RF), and the proposed HBRB-b model. To assess prediction performance comprehensively, we added Mean Absolute Error (MAE) as an evaluation metric alongside the existing measures. MAE quantifies the average magnitude of prediction errors as the mean absolute difference between predicted and actual values; lower values correspond to higher predictive accuracy. MAE is calculated as in Equation (24).
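Assuming Equation (24) is the standard definition MAE = (1/n) Σ |yᵢ − ŷᵢ|, the metric is a one-line computation. Unlike MSE, it grows linearly with the size of an error, so a single large miss weighs less heavily.

```python
import numpy as np


def mae(y_true, y_pred):
    """Mean absolute error between actual and predicted values."""
    y_true = np.asarray(y_true, dtype=float)
    y_pred = np.asarray(y_pred, dtype=float)
    return float(np.mean(np.abs(y_true - y_pred)))


# One miss of size 2 among three points: MAE = 2/3, whereas MSE would be 4/3.
print(mae([0, 1, 2], [0, 1, 4]))
```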

Table 6 presents the comparison results of all the models.

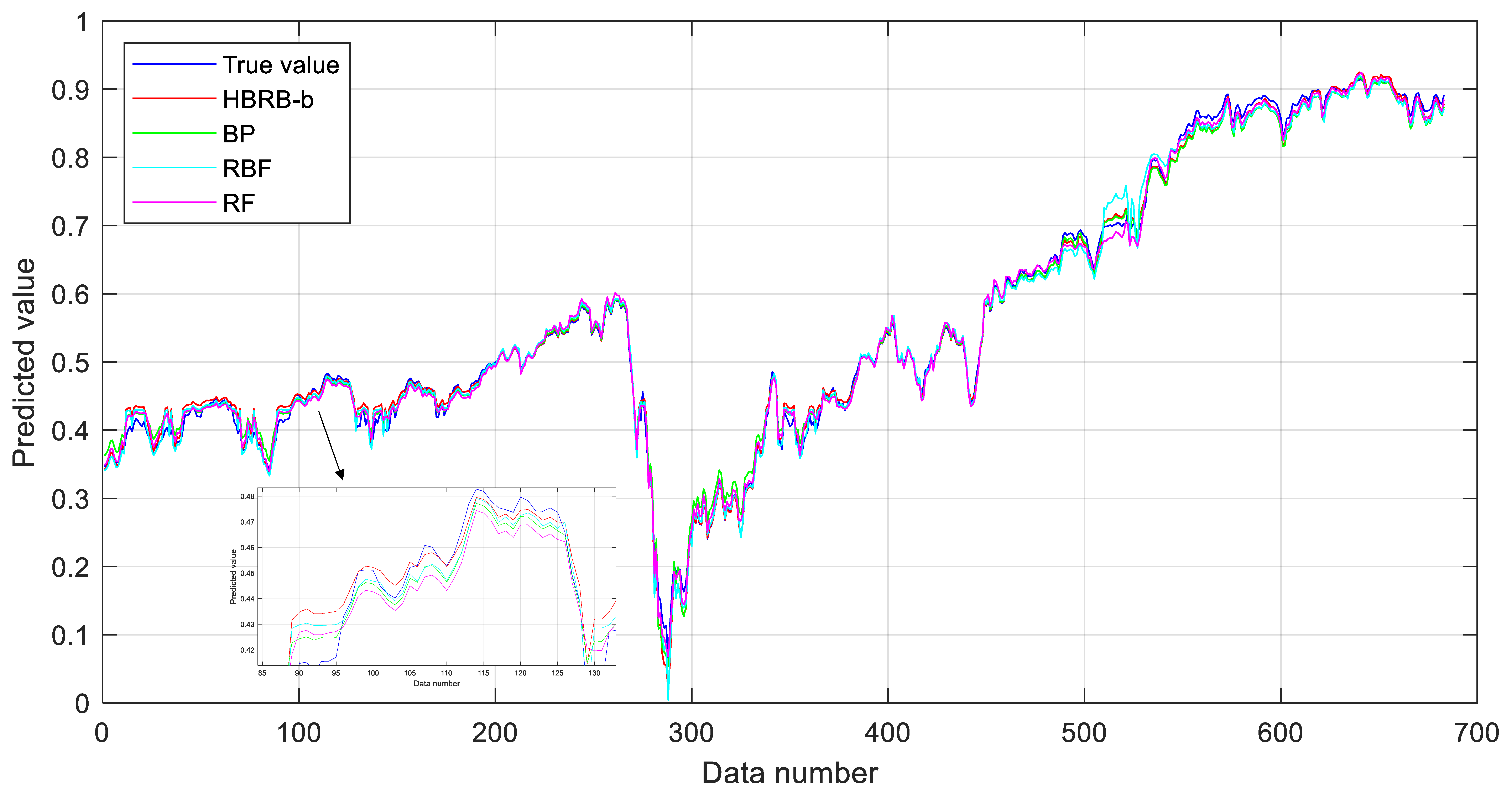

Figure 10 compares the predictions of all BRB-based models with the actual values, and Figure 11 compares the predicted and true values of HBRB-b and the other three machine learning models. Overall, the prediction curves of all models follow the trend of the true values closely. However, between samples 100 and 150, where the values fluctuate sharply, the predictions of the HBRB-b and RBF models are closer to the true values, showing better adaptability and accuracy. The Euclidean distance, as defined in Equation (8), measures how far the optimized rule parameters deviate from the initial expert knowledge: the smaller its value, the better the model’s interpretability. Among all models listed in Table 6, the HBRB-b model achieves the best performance, with a Euclidean distance of 0.3193. The PCMAES-BRB and DE-BRB models show larger distances of 1.4161 and 1.7652, respectively, and the WOA-BRB model has the largest distance, 2.8082. Because BP, RBF, and RF are essentially black-box models, they lack transparency in their predictions and therefore have no inherent interpretability that could be measured in this way. Overall, the HBRB-b-based stock price prediction model demonstrates clear advantages in both accuracy and interpretability, and the experimental results support the proposed hypotheses.

5.5.2. Robustness Analysis

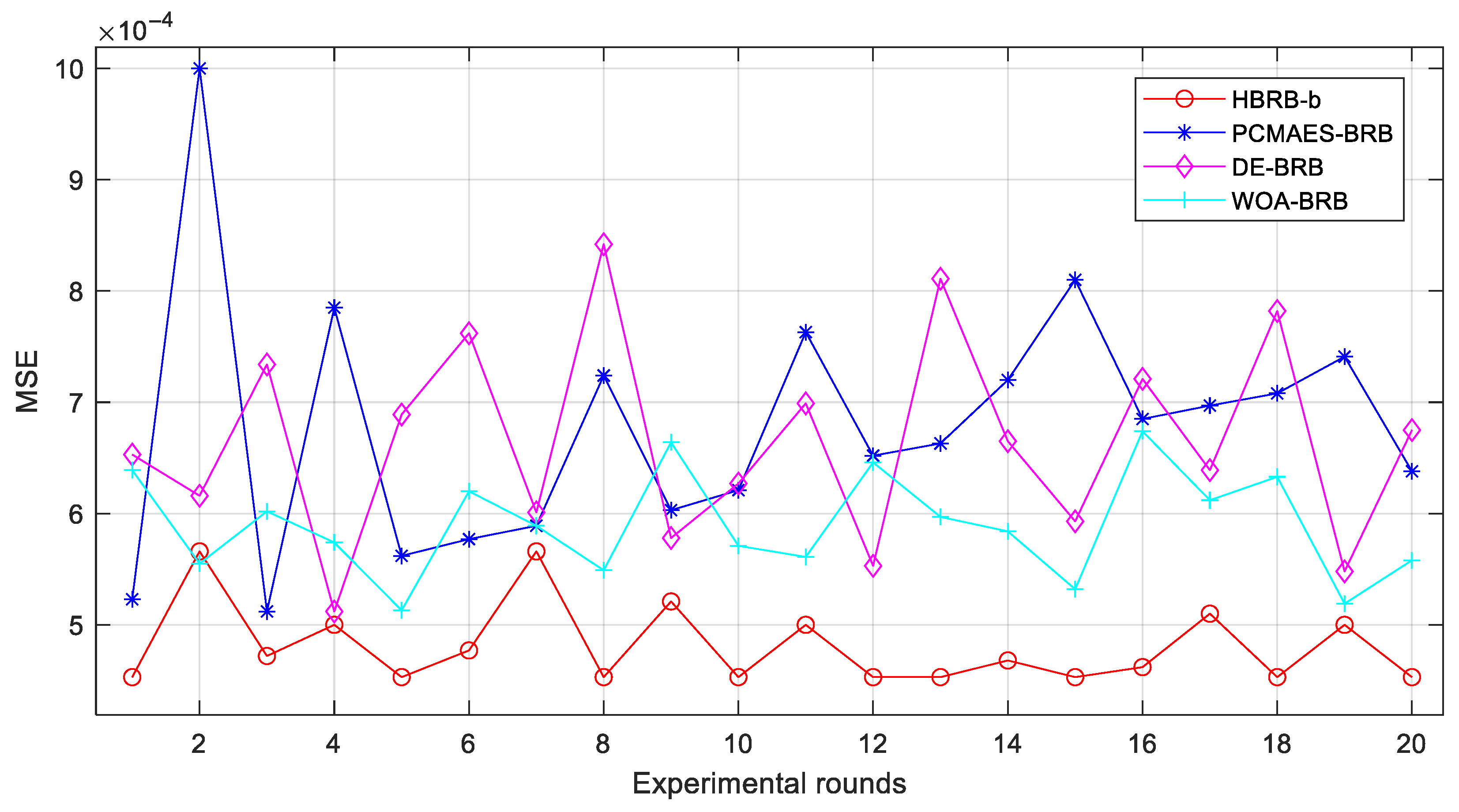

To ensure methodological robustness, twenty repeated optimization trials were conducted for each of the seven compared models: PCMAES-BRB, DE-BRB, WOA-BRB, Backpropagation Neural Network (BP), Radial Basis Function (RBF), Random Forest (RF), and the proposed HBRB-b model. The robustness analysis results are presented in Appendix C.1, while Figure 12 and Figure 13 provide visual comparisons of the prediction performance of all evaluated models in terms of mean square error (MSE).
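The repeated-trial protocol amounts to running the full optimization with different random seeds and summarizing the spread of the resulting test MSE. In the sketch below, `run_trial` is a hypothetical callable standing in for one complete training and evaluation run. The summary statistics (mean, sample standard deviation, minimum, maximum) are an illustrative choice.

```python
import statistics


def summarize_trials(run_trial, n_trials=20):
    """Run n_trials seeded trials; run_trial(seed) is a hypothetical callable returning test MSE."""
    scores = [run_trial(seed) for seed in range(n_trials)]
    return {
        "mean": statistics.mean(scores),
        "std": statistics.stdev(scores),  # sample standard deviation across trials
        "min": min(scores),
        "max": max(scores),
    }


# Dummy trial function for illustration only; a real run would retrain and evaluate the model.
print(summarize_trials(lambda seed: 0.001 * (1 + seed % 2)))
```

A small standard deviation and a narrow min-max range across trials are what the stability claims below rely on.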

The experimental results reveal that the HBRB-b model maintains consistently low MSE values with minimal variation across repeated trials, demonstrating remarkable stability in stock price trend prediction. This stability stems from three improvements brought about by the interpretability criterion. First, it confines the parameter search space within reasonable bounds. Second, it reduces optimization volatility during training. Third, it ensures that optimal solutions align with domain expert knowledge. Together, these effects enhance optimization efficiency and improve the reliability of prediction outcomes, making the model more robust and practical for real-world financial applications.

The inherent instability of BP and RF models originates from their core algorithmic mechanisms: BP networks are highly sensitive to random weight initialization and may converge to divergent local optima during training, resulting in substantial performance variance. Similarly, while robust, RF models introduce variability through bootstrapped sampling and random feature selection at each node, meaning different random seeds can construct meaningfully different forests. In contrast, HBRB-b’s stability is engineered by its interpretability constraints, which act as a powerful regularizer. By severely restricting the parameter search space to a financially plausible and consistent region, these constraints effectively anchor the optimization process, preventing it from overfitting to stochastic noise and making its results far less dependent on random initial conditions.

For portfolio or risk managers, the stability of HBRB-b can translate into lower model risk and higher trust, as the model’s performance in real-time trading is predictable and consistent with the results of its backtesting. This reliability reduces the operational burden and cost associated with frequent model monitoring, validation, and recalibration. Ultimately, it provides decision-makers with the confidence that artificial intelligence predictions are robust and reliable.

5.5.3. Discussion on Interpretability and Practical Implications

The excellent performance of the HBRB-b model is not merely a statistical outcome but a direct result of its foundational architecture. Unlike black-box models which function as opaque function approximators, HBRB-b integrates domain knowledge through its belief rules, effectively constraining its optimization to financially plausible patterns. For instance, rules linking a high opening price with a low trading range to a bearish sentiment encapsulate classic technical analysis wisdom. The hierarchical structure mitigates the curse of dimensionality, allowing the model to capture complex interactions without overfitting the prevalent noise in market data. Furthermore, the interpretability constraints applied during optimization prevent the model from learning spurious, non-generalizable relationships, thereby enhancing its robustness in volatile markets.

The practical implications of this interpretable accuracy are significant for various financial market participants. For the quantitative analyst, the model offers a transparent tool for strategy development and validation: the ability to audit which rules activate for a given prediction enables continuous refinement of the rule base using new financial insights. For the portfolio manager, the model’s output, a belief distribution, provides a nuanced view of risk and opportunity. A hypothetical output such as (Down, 0.6), (Neutral, 0.3), (Up, 0.1) communicates a likely downward trend with a quantified level of uncertainty, enabling more informed asset allocation and hedging decisions than a single-point estimate. For regulators, the model’s transparency is a key advantage for market monitoring and stress testing, because its reasoning can be scrutinized to understand the drivers of predicted market downturns.

This stands in stark contrast to the compared black-box models. While BP, RBF, and RF can achieve competitive accuracy, their predictions are not auditable. A fund manager would find it difficult to justify a multi-million-dollar trade on the basis of a prediction from an unexplainable neural network. The RF model can rank feature importance, but it cannot articulate the complex conditional logic between features.

Table 7 compares the HBRB-b model with the other machine learning models in depth. Although these black-box models have strong approximation capabilities, their predictions lack the transparency required for full trust in critical financial decision-making. The HBRB-b model addresses this gap by offering a solution that is accurate, actionable, and trustworthy.