EBiDNet: A Character Detection Algorithm for LCD Interfaces Based on an Improved DBNet Framework

Abstract

1. Introduction

- (1) Introducing a Bidirectional Feature Pyramid Network (BiFPN) structure to achieve comprehensive multiscale feature fusion, thereby improving detection accuracy and stability for characters of varying sizes;

- (2) Constructing and expanding a dedicated LCD character dataset tailored to industrial scenarios. The dataset better reflects the distribution of high-density, fine-grained characters and strengthens the model’s generalization under complex backgrounds and varying lighting conditions;

- (3) Replacing the original ResNet50 backbone with the more efficient EfficientNetV2-S, which reduces the parameter count while strengthening feature extraction, thereby achieving a favorable balance between accuracy and computational cost.

2. Related Works

3. Methods

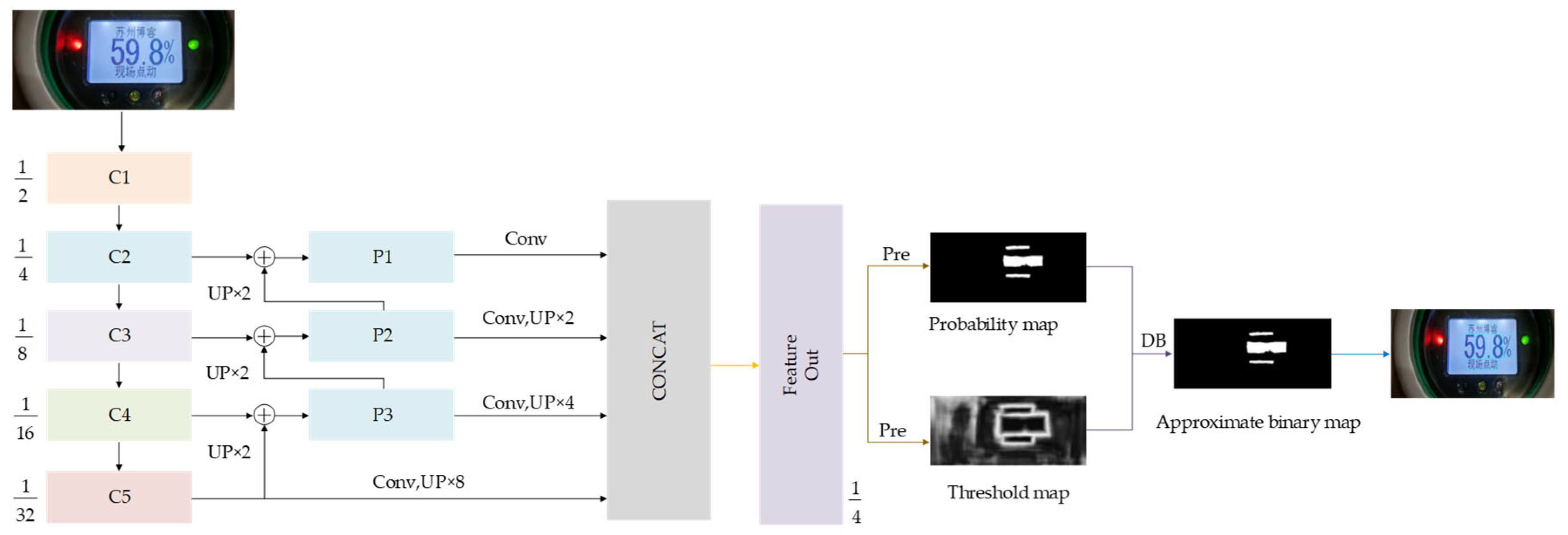

3.1. DBNet Architecture

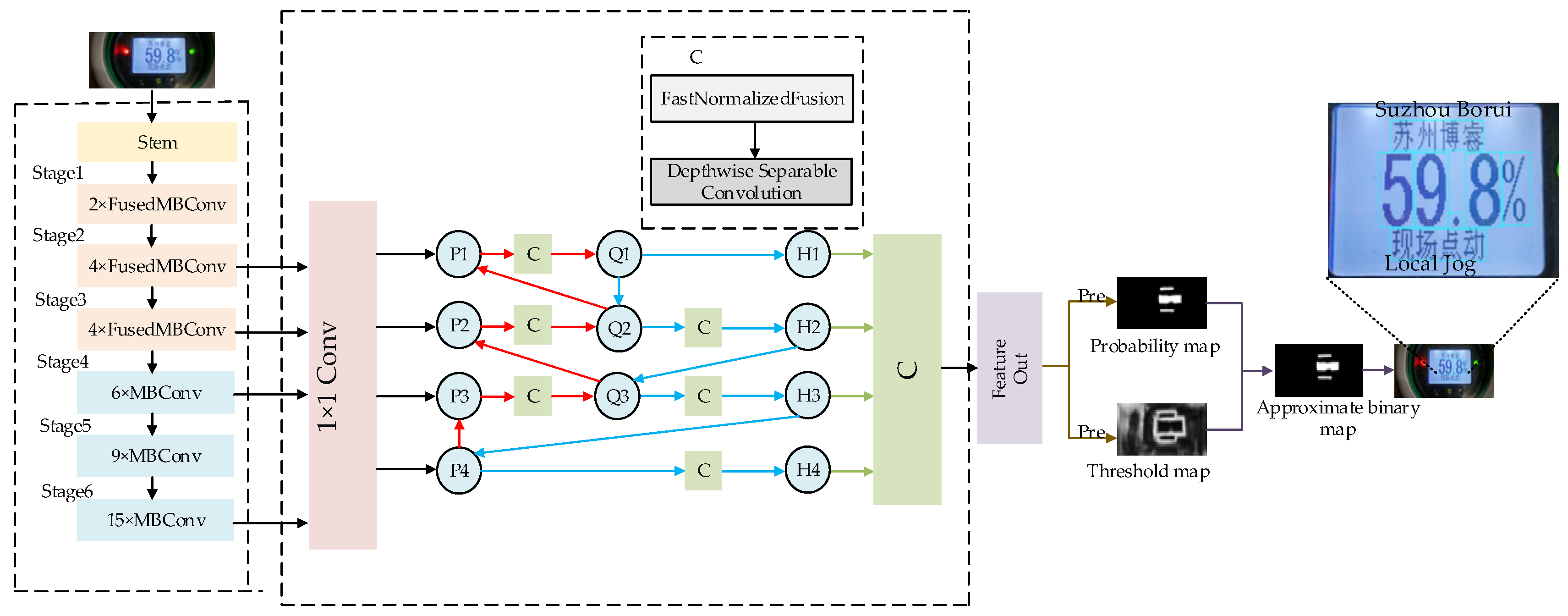

3.2. EBiDNet—Improved DBNet Network Structure

- (1)

- The lightweight network EfficientNetV2-S, which offers a better balance between model accuracy and computational efficiency, is introduced as the backbone network to replace the original ResNet. This substitution reduces both the model parameters and computational complexity while also enhancing feature extraction capability.

- (2)

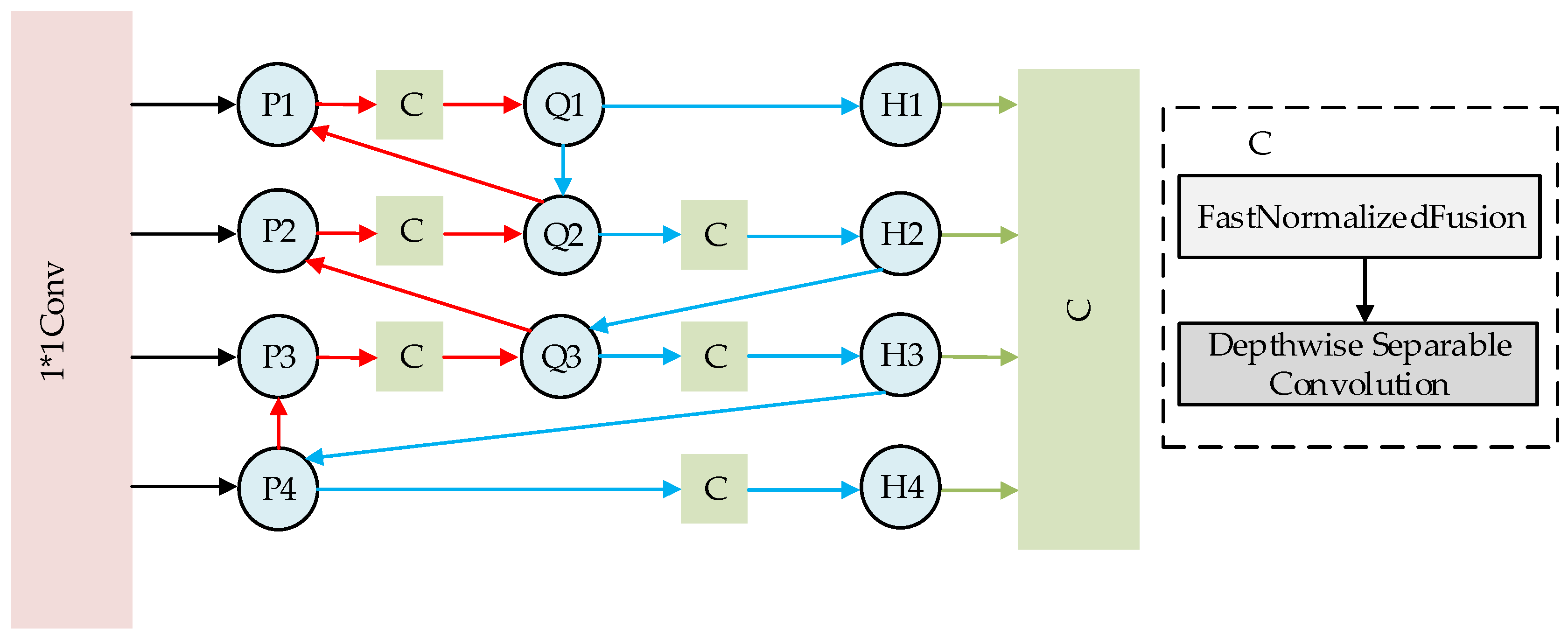

- The Bidirectional Feature Pyramid Network (BiFPN) is incorporated into the neck network to fuse multiscale features through bidirectional cross scale connections.

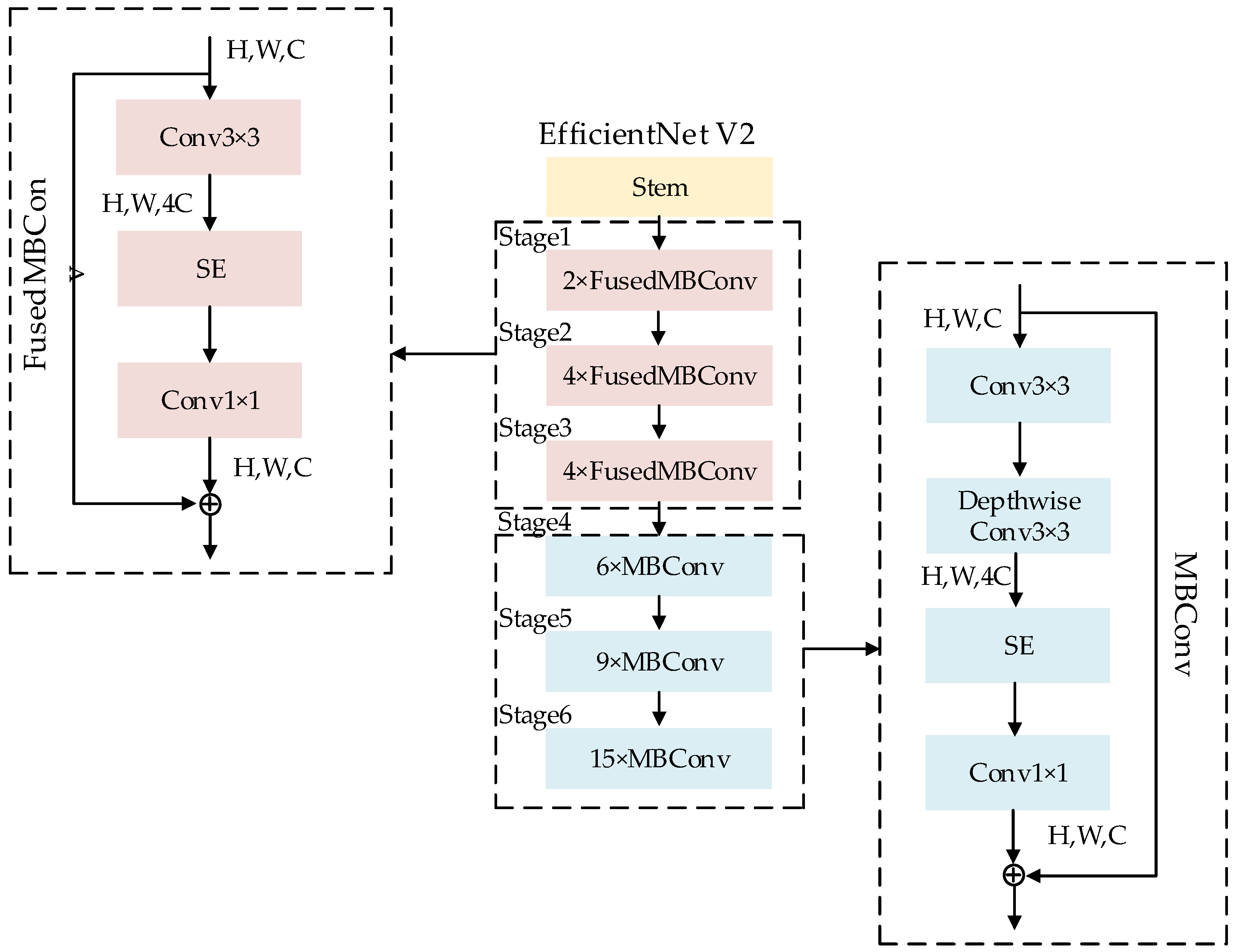

3.3. Backbone

- (1) The core modules in each stage are a Conv 3 × 3 layer, Fused-MB Conv modules, and MB Conv modules. The backbone configuration table lists the stride, the number of output channels, and the number of times each module is repeated within its stage.

- (2) In the module names, the number that follows Fused-MB Conv or MB Conv (1, 4, or 6) denotes the expansion ratio, k3 × 3 denotes a 3 × 3 convolutional kernel, and SE0.25 denotes a squeeze-and-excitation module with a ratio of 0.25.

- (3) When the expansion ratio is 1, the main branch of a Fused-MB Conv module contains only a 3 × 3 convolution. When the expansion ratio differs from 1, a 1 × 1 convolution follows the initial 3 × 3 convolution in the main branch.
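To make item (3) concrete, the sketch below counts the convolution weights in the main branch of a Fused-MB Conv block for both cases and compares it with an MB Conv block (1 × 1 expansion, 3 × 3 depthwise, 1 × 1 projection). It is a minimal sketch that assumes bias-free convolutions and ignores batch normalization, shortcuts, and SE modules; the helper names are hypothetical and are not part of EfficientNetV2 or PaddlePaddle.

```python
def fused_mbconv_weights(c_in: int, c_out: int, expand: int, k: int = 3) -> int:
    """Convolution weights in the main branch of a Fused-MB Conv block (hypothetical helper)."""
    if expand == 1:
        # Expansion ratio 1: a single k x k convolution maps c_in directly to c_out.
        return k * k * c_in * c_out
    hidden = c_in * expand
    # Expansion ratio != 1: a k x k convolution expands to `hidden` channels,
    # then a 1 x 1 convolution projects back down to c_out channels.
    return k * k * c_in * hidden + hidden * c_out


def mbconv_weights(c_in: int, c_out: int, expand: int, k: int = 3) -> int:
    """Convolution weights in an MB Conv block without SE (hypothetical helper)."""
    hidden = c_in * expand
    # The 1 x 1 expansion convolution is skipped when the ratio is 1.
    expansion = 0 if expand == 1 else c_in * hidden
    # k x k depthwise convolution (one filter per channel) and 1 x 1 projection.
    return expansion + k * k * hidden + hidden * c_out


if __name__ == "__main__":
    # First block of stage 1 (24 -> 24, ratio 1) and of stage 2 (24 -> 48, ratio 4).
    print(fused_mbconv_weights(24, 24, 1))  # 5184
    print(fused_mbconv_weights(24, 48, 4))  # 25344
    print(mbconv_weights(24, 48, 4))        # 7776
```

The fused form carries more weights for the same shape; EfficientNetV2 nevertheless uses it in the early, high-resolution stages because regular convolutions there tend to run faster on accelerators than depthwise ones.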

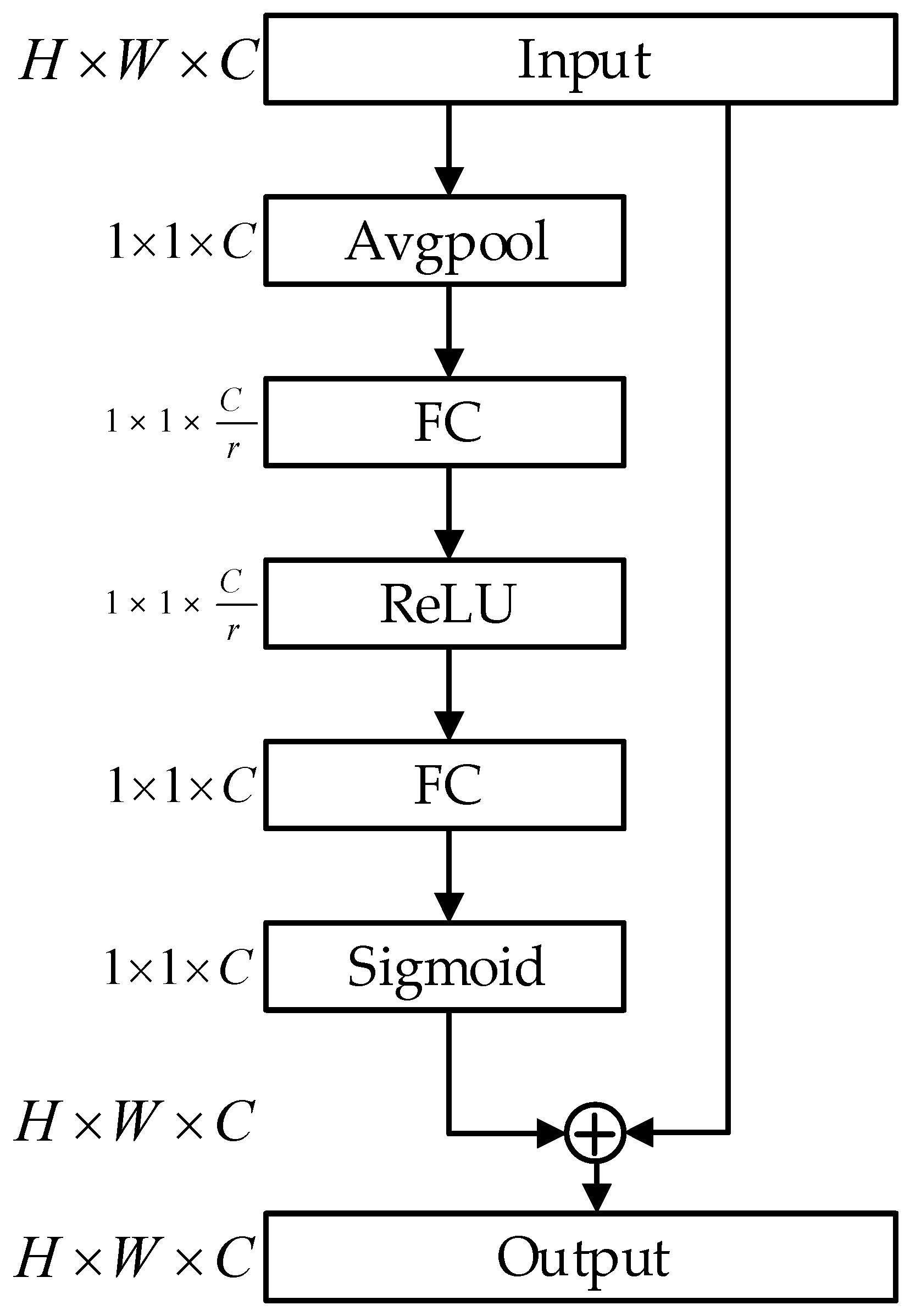

- (1) Squeeze Stage

- (2) Excitation Stage

- (3) Recalibration Stage
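The three stages of the squeeze-and-excitation (SE) module can be sketched in plain Python on a tiny feature map stored as nested lists. This is a minimal illustration rather than the backbone’s actual implementation: the function name and weight layout are hypothetical, the hidden width R would follow from the SE ratio (0.25 in the backbone table), and ReLU is assumed as the hidden activation, as in the original SENet (EfficientNet-family implementations commonly use SiLU instead).

```python
import math
from typing import List

FeatureMap = List[List[List[float]]]  # indexed as [channel][row][column]


def squeeze_excite(x: FeatureMap,
                   w1: List[List[float]], b1: List[float],
                   w2: List[List[float]], b2: List[float]) -> FeatureMap:
    """Squeeze-and-excitation on a C x H x W map.

    w1: R x C reduction weights, w2: C x R expansion weights (hypothetical layout).
    """
    # (1) Squeeze: global average pooling yields one descriptor per channel.
    z = [sum(sum(row) for row in ch) / (len(ch) * len(ch[0])) for ch in x]
    # (2) Excitation: FC (C -> R) + ReLU, then FC (R -> C) + sigmoid gate in (0, 1).
    hidden = [max(0.0, sum(w * zc for w, zc in zip(row, z)) + b)
              for row, b in zip(w1, b1)]
    gates = [1.0 / (1.0 + math.exp(-(sum(w * h for w, h in zip(row, hidden)) + b)))
             for row, b in zip(w2, b2)]
    # (3) Recalibration: rescale every position of channel c by its gate.
    return [[[v * g for v in row] for row in ch] for ch, g in zip(x, gates)]


# Two channels of size 2 x 2 and a bottleneck of R = 1.
x = [[[1.0, 2.0], [3.0, 4.0]], [[4.0, 4.0], [4.0, 4.0]]]
# All-zero weights give gates of sigmoid(0) = 0.5, so every value is halved.
out = squeeze_excite(x, w1=[[0.0, 0.0]], b1=[0.0], w2=[[0.0], [0.0]], b2=[0.0, 0.0])
print(out)
```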

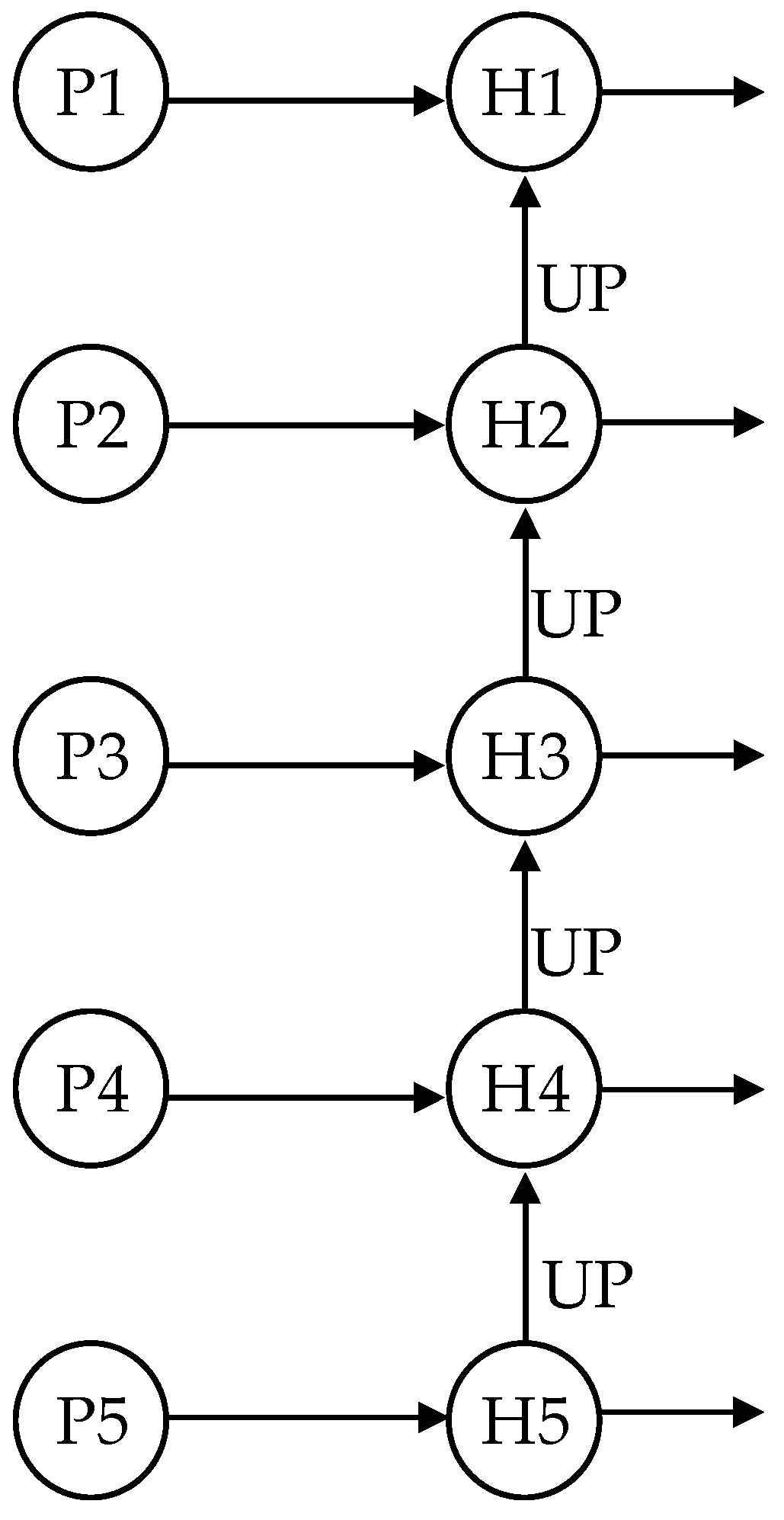

3.4. Neck

- (1) Bottom-up path (red arrows)

- (2) Top-down path (blue arrows)

- (1)

- Fast Normalized Fusion

- (a)

- Non-negative Rectification via ReLU:

- (b)

- Weight Normalization:

- (c)

- Weighted Fusion Output:

- (2)

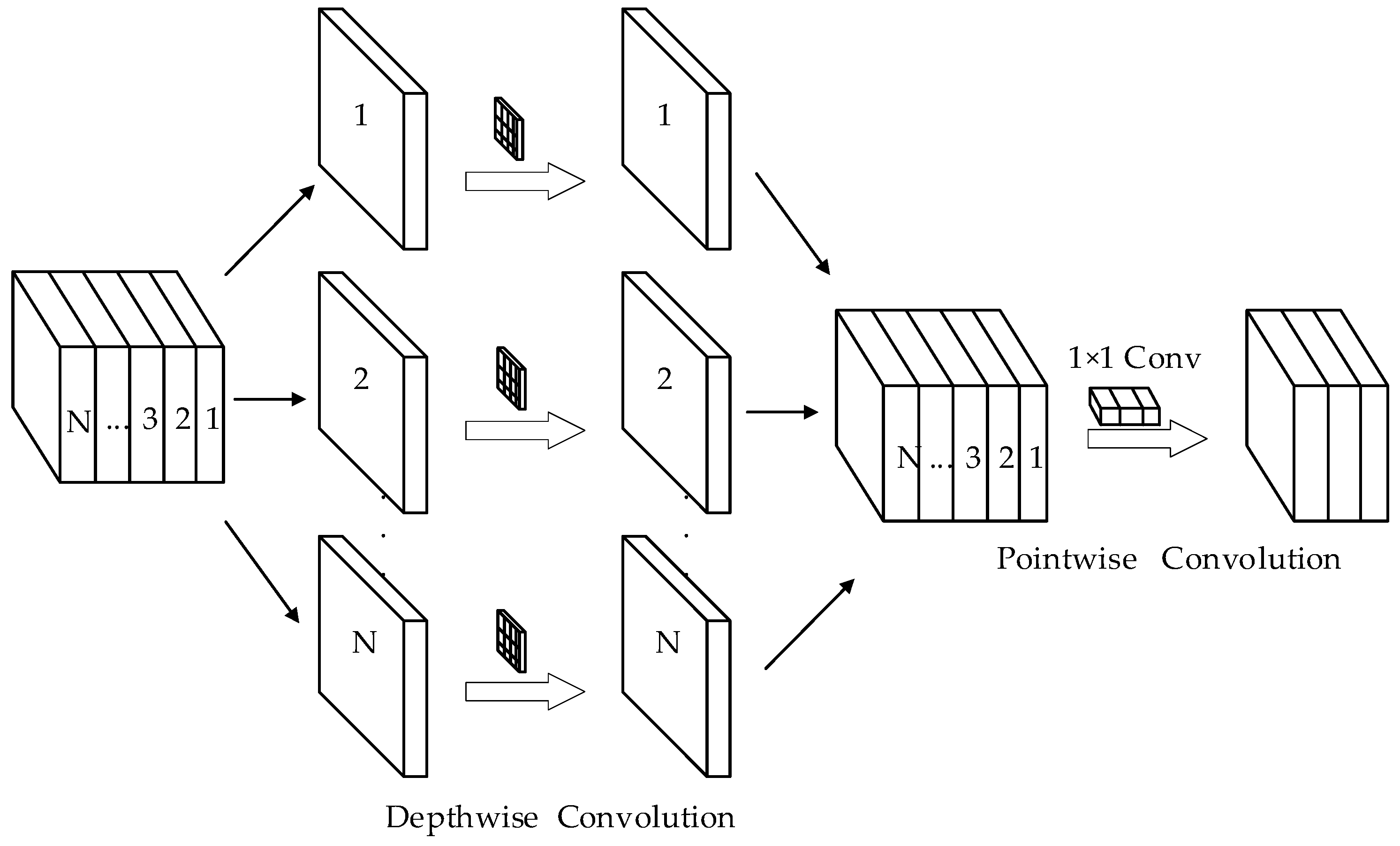

- Depthwise Separable Convolution
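Fast normalized fusion, in the EfficientDet formulation that BiFPN comes from, rectifies each learnable weight with ReLU, divides it by ε plus the sum of all weights, and returns the weighted sum of the inputs. The sketch below follows those three steps with each feature map flattened to a list of floats and assumed to be already resized to a common resolution. The function name and the default ε = 1e-4 (the value used in EfficientDet) are assumptions; the paper’s own setting is not given here.

```python
from typing import List, Sequence


def fast_normalized_fusion(inputs: Sequence[Sequence[float]],
                           weights: Sequence[float],
                           eps: float = 1e-4) -> List[float]:
    """Fuse same-sized, flattened feature maps with BiFPN-style normalized weights."""
    if len(inputs) != len(weights):
        raise ValueError("expected one weight per input feature map")
    # (a) Non-negative rectification: ReLU clips negative learnable weights to 0.
    w = [max(0.0, wi) for wi in weights]
    # (b) Normalization: eps keeps the division stable when all weights are 0.
    denom = eps + sum(w)
    w_hat = [wi / denom for wi in w]
    # (c) Weighted fusion: element-wise weighted sum of the inputs.
    return [sum(wh * feat[k] for wh, feat in zip(w_hat, inputs))
            for k in range(len(inputs[0]))]


fused = fast_normalized_fusion([[1.0, 2.0], [3.0, 4.0]], weights=[1.0, -5.0])
# The negative weight is rectified to 0, so the output is roughly [0.9999, 1.9998].
print(fused)
```

Compared with a softmax over the weights, this normalization avoids the exponentials while still keeping every normalized weight in [0, 1).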

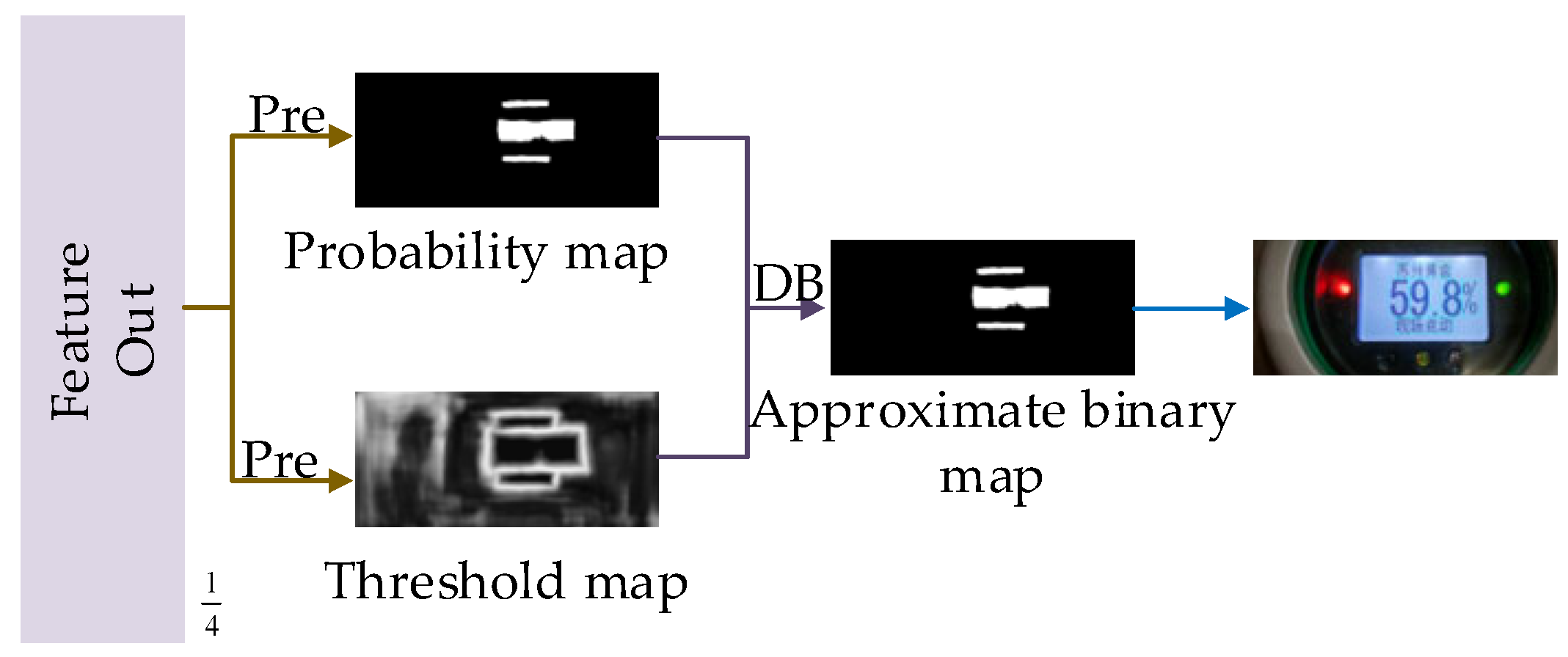
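The saving from depthwise separable convolution can be checked with a short weight count: a standard k × k convolution needs k²·C_in·C_out weights, whereas a depthwise k × k convolution followed by a 1 × 1 pointwise convolution needs k²·C_in + C_in·C_out, a ratio of 1/C_out + 1/k². The 64-channel width below is a hypothetical example rather than the neck width used in EBiDNet, and biases are ignored.

```python
def standard_conv_weights(k: int, c_in: int, c_out: int) -> int:
    # One k x k x c_in filter per output channel.
    return k * k * c_in * c_out


def depthwise_separable_weights(k: int, c_in: int, c_out: int) -> int:
    # Depthwise: one k x k filter per input channel; pointwise: 1 x 1 channel mixing.
    return k * k * c_in + c_in * c_out


k, c = 3, 64  # hypothetical kernel size and channel width
std = standard_conv_weights(k, c, c)        # 36864
sep = depthwise_separable_weights(k, c, c)  # 4672
print(std, sep, sep / std)                  # the ratio equals 1/64 + 1/9
```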

3.5. Head

3.5.1. Head Network Architecture

3.5.2. Principle of Differentiable Binarization
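In the original DBNet formulation [14], standard binarization (B = 1 if P ≥ t, else 0) is replaced by the approximate step function B̂ = 1 / (1 + e^(−k(P − T))), where P is the probability map, T is the learned threshold map, and the amplification factor k is set to 50. The per-pixel sketch below assumes those original settings; the value of k used by EBiDNet is not stated in this section, and the threshold 0.3 in the loop is only an example pixel value.

```python
import math


def standard_binarization(p: float, t: float) -> float:
    """Hard step: zero gradient everywhere except an undefined jump at p == t."""
    return 1.0 if p >= t else 0.0


def differentiable_binarization(p: float, t: float, k: float = 50.0) -> float:
    """Approximate step B = 1 / (1 + exp(-k (p - t))) as defined in DBNet."""
    return 1.0 / (1.0 + math.exp(-k * (p - t)))


def db_grad_wrt_p(p: float, t: float, k: float = 50.0) -> float:
    """dB/dp = k * B * (1 - B): largest where p is close to the threshold t."""
    b = differentiable_binarization(p, t, k)
    return k * b * (1.0 - b)


for p in (0.1, 0.29, 0.3, 0.31, 0.9):
    print(p, standard_binarization(p, 0.3),
          round(differentiable_binarization(p, 0.3), 4),
          round(db_grad_wrt_p(p, 0.3), 4))
```

Because the gradient is concentrated near the threshold, training pushes pixels that are close to the text border to be separated more sharply, which is the motivation DBNet gives for the approximate step.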

3.5.3. Adaptive Threshold
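In the reference DBNet label generation, each annotated text polygon is shrunk or dilated by an offset D = A(1 − r²)/L, where A is the polygon area, L is its perimeter, and r = 0.4 is the shrink ratio; within the dilated band, the threshold-map target rises from thresh_min = 0.3 at distance D from the border to thresh_max = 0.7 on the border. The sketch below reproduces that rule for a single point. These defaults come from the original DBNet implementation and are assumptions about this paper’s settings; the helper names are hypothetical.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float]


def area_and_perimeter(poly: List[Point]) -> Tuple[float, float]:
    """Shoelace area and perimeter of a closed polygon."""
    twice_area, perimeter = 0.0, 0.0
    for (x1, y1), (x2, y2) in zip(poly, poly[1:] + poly[:1]):
        twice_area += x1 * y2 - x2 * y1
        perimeter += math.hypot(x2 - x1, y2 - y1)
    return abs(twice_area) / 2.0, perimeter


def offset_distance(poly: List[Point], r: float = 0.4) -> float:
    """D = A (1 - r^2) / L, the shrink/dilate offset used for DB labels."""
    area, perimeter = area_and_perimeter(poly)
    return area * (1.0 - r * r) / perimeter


def point_to_segment(p: Point, a: Point, b: Point) -> float:
    (px, py), (ax, ay), (bx, by) = p, a, b
    dx, dy = bx - ax, by - ay
    length_sq = dx * dx + dy * dy
    if length_sq == 0.0:
        return math.hypot(px - ax, py - ay)
    # Project p onto the segment and clamp the projection to its endpoints.
    t = max(0.0, min(1.0, ((px - ax) * dx + (py - ay) * dy) / length_sq))
    return math.hypot(px - (ax + t * dx), py - (ay + t * dy))


def threshold_target(p: Point, poly: List[Point], r: float = 0.4,
                     t_min: float = 0.3, t_max: float = 0.7) -> float:
    """t_max on the text border, falling linearly to t_min at distance D or more."""
    d = offset_distance(poly, r)
    dist = min(point_to_segment(p, a, b) for a, b in zip(poly, poly[1:] + poly[:1]))
    closeness = 1.0 - min(dist / d, 1.0)
    return t_min + (t_max - t_min) * closeness


box = [(0.0, 0.0), (100.0, 0.0), (100.0, 20.0), (0.0, 20.0)]  # hypothetical text box
print(round(offset_distance(box), 4))                 # 7.0
print(round(threshold_target((50.0, 0.0), box), 4))   # 0.7 (on the border)
print(round(threshold_target((50.0, 10.0), box), 4))  # 0.3 (farther than D from it)
```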

3.5.4. Loss Calculation
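For reference, the original DBNet [14] optimizes a weighted sum of three terms; whether EBiDNet changes the weighting is not stated here, so α = 1 and β = 10 below are the original DBNet values rather than this paper’s settings. The probability-map loss L_s and the binary-map loss L_b are binary cross-entropy losses over a sampled pixel set S_l (hard negative mining with a positive-to-negative ratio of 1:3), and the threshold-map loss L_t is an L1 distance over the pixels R_d inside the dilated text polygons:

$$L = L_s + \alpha L_b + \beta L_t$$

$$L_s = L_b = -\sum_{i \in S_l} \left[ y_i \log x_i + (1 - y_i) \log (1 - x_i) \right]$$

$$L_t = \sum_{i \in R_d} \left| y_i^{*} - x_i^{*} \right|$$

where $x_i$ and $y_i$ are the predicted and ground-truth values of pixel $i$ in the probability (or binary) map, and $x_i^{*}$ and $y_i^{*}$ are the predicted and ground-truth threshold-map values.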

4. Experiments and Results

4.1. Dataset

4.1.1. Construction of the Dataset Acquisition Platform

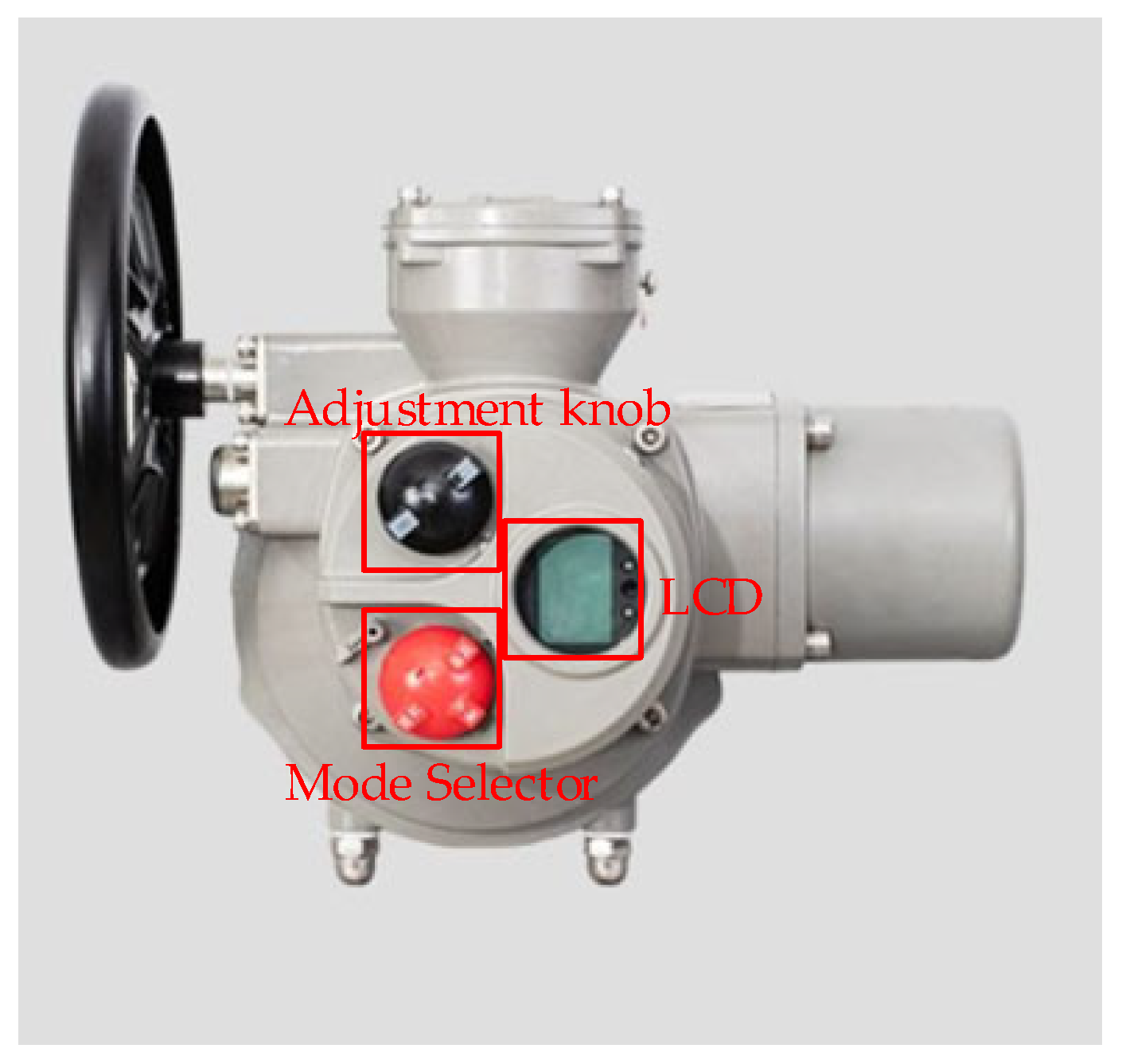

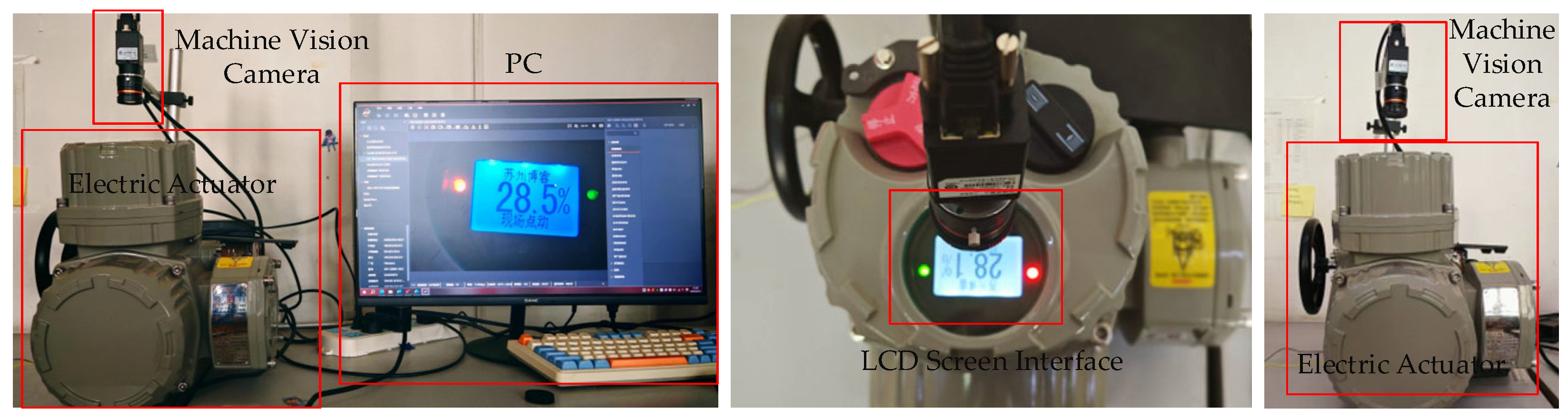

- (1) Electric Actuator: As a critical industrial control device, it provides precise actuation for valves, dampers, and related mechanisms. Its embedded sensors provide real-time feedback on status parameters such as valve opening position, motor rotation speed, and current. As the primary data acquisition target, it supplies the execution-end data on which industrial process control relies.

- (2) Machine Vision Camera: Equipped with high-definition imaging modules and intelligent algorithms, it captures and analyzes visual data from the electric actuator’s display interface, physical appearance, and operational status, and converts this information into digital signals for non-contact data acquisition. This approach overcomes a limitation of conventional sensors, which typically measure only a single parameter.

- (3) PC: It coordinates the actuator and the camera, ensures precise timing of data acquisition, and handles the reception, storage, and preliminary processing of the data, laying the groundwork for subsequent analysis.

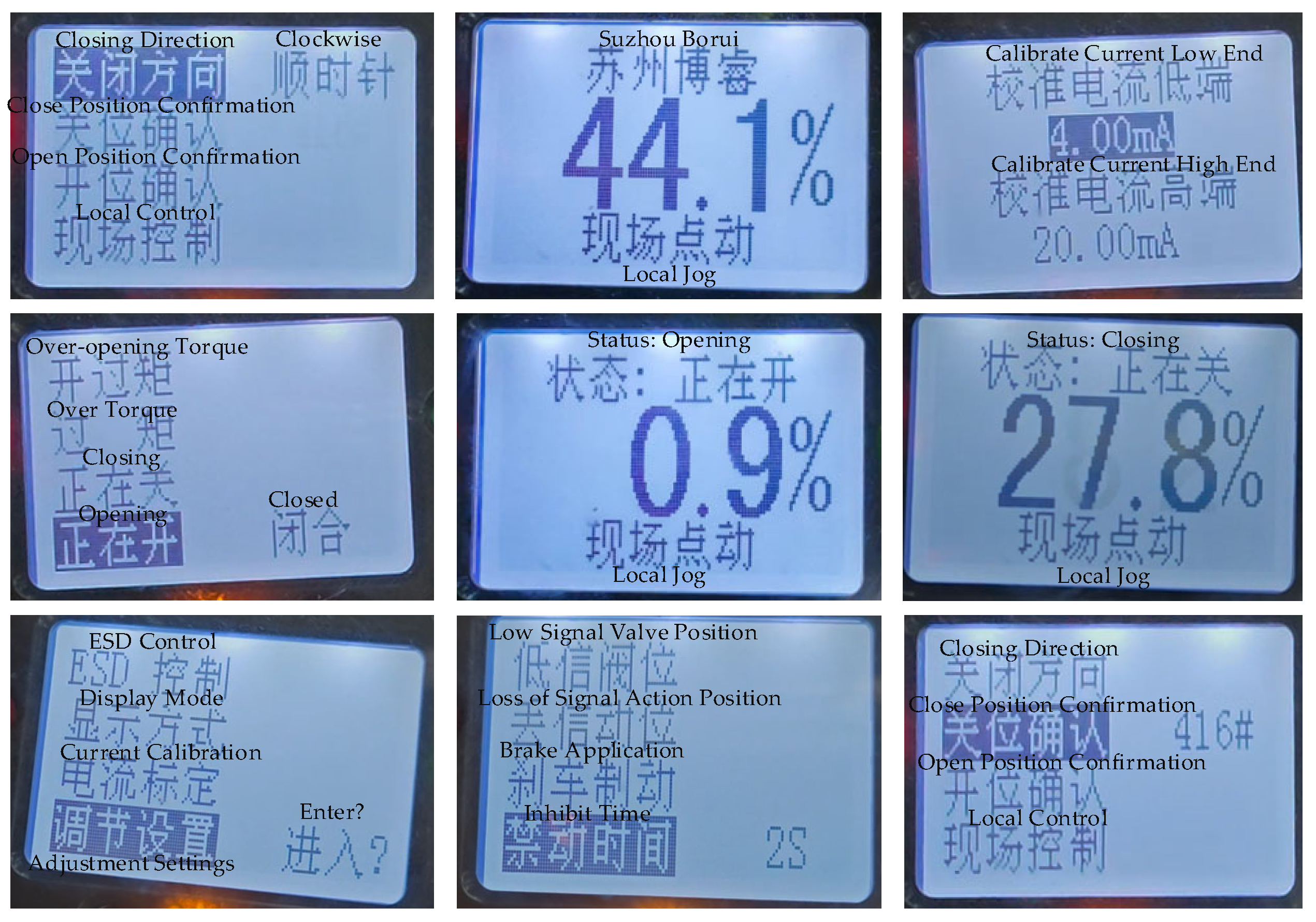

4.1.2. Data Collection and Partitioning

4.1.3. Training Augmentation Strategies

- (1) Horizontal Flipping: Applied with a probability of 0.5 to increase the diversity of character orientation along the horizontal axis.

- (2) Random Rotation: Performed within the range [−10°, 10°] to improve the model’s handling of slightly skewed characters.

- (3) Random Scaling: Performed with a scaling factor in the range [0.5, 3] to improve detection of characters of varying sizes.

- (4) Random Cropping: Images are randomly cropped to an output size of 640 × 640 while preserving the aspect ratio, strengthening the model’s recognition of local features and partially occluded characters.
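The four augmentations above also require the ground-truth polygons to be transformed consistently with the image. The sketch below samples parameters from the stated ranges and applies them to polygon coordinates. It is a hypothetical plain-Python helper: it assumes rotation about the image centre and mirroring about the vertical centre line, and it leaves the image resampling itself, and any padding of images smaller than 640 × 640, to the training framework.

```python
import math
import random
from dataclasses import dataclass
from typing import List, Tuple

Point = Tuple[float, float]


@dataclass
class AugParams:
    flip: bool
    angle_deg: float
    scale: float


def sample_augmentation(rng: random.Random) -> AugParams:
    """Draw one parameter set from the ranges listed above."""
    return AugParams(
        flip=rng.random() < 0.5,             # horizontal flip with p = 0.5
        angle_deg=rng.uniform(-10.0, 10.0),  # rotation in [-10, 10] degrees
        scale=rng.uniform(0.5, 3.0),         # scaling factor in [0.5, 3]
    )


def transform_polygon(poly: List[Point], width: float, height: float,
                      p: AugParams) -> List[Point]:
    """Map polygon vertices through flip, rotation about the centre, then scaling."""
    cx, cy = width / 2.0, height / 2.0
    theta = math.radians(p.angle_deg)
    cos_t, sin_t = math.cos(theta), math.sin(theta)
    out = []
    for x, y in poly:
        if p.flip:
            x = width - x  # mirror about the vertical centre line
        dx, dy = x - cx, y - cy
        rx = cx + dx * cos_t - dy * sin_t
        ry = cy + dx * sin_t + dy * cos_t
        out.append((rx * p.scale, ry * p.scale))
    if p.flip:
        out.reverse()  # mirroring reverses the winding direction; restore it
    return out


def random_crop_origin(rng: random.Random, width: int, height: int,
                       size: int = 640) -> Tuple[int, int]:
    """Top-left corner of a size x size window inside the image (0 if it is smaller)."""
    return rng.randint(0, max(0, width - size)), rng.randint(0, max(0, height - size))


rng = random.Random(0)
W, H = 1280, 960  # hypothetical source image size
params = sample_augmentation(rng)
box = [(10.0, 5.0), (60.0, 5.0), (60.0, 25.0), (10.0, 25.0)]
moved = transform_polygon(box, W, H, params)
x0, y0 = random_crop_origin(rng, int(W * params.scale), int(H * params.scale))
cropped = [(x - x0, y - y0) for x, y in moved]  # polygon in crop coordinates
print(params, cropped)
```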

4.2. Training Details

4.3. Evaluation Indicators

- (1) Precision: the ratio of correctly detected positive samples (true positives, TP) to all samples predicted as positive, i.e., TP plus false positives (FP): $P = \frac{TP}{TP + FP}$.

- (2) Recall: the ratio of correctly detected positive samples to all actual positive samples, i.e., TP plus false negatives (FN): $R = \frac{TP}{TP + FN}$.

- (3) F1-Score: the harmonic mean of precision and recall, which measures how well the model balances the two: $F1 = \frac{2 \times P \times R}{P + R}$.

- (4) FPS (Frames Per Second): the number of image frames the model processes per second. In this experiment, FPS is obtained from the average time the model needs to process 105 images: $\mathrm{FPS} = \frac{N}{\sum_{i=1}^{N} t_i}$, where $N$ is the number of images and $t_i$ is the processing time of the $i$-th image.
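The four indicators can be computed as in the sketch below. The helper names are illustrative, the counts in the example are hypothetical, and FPS follows the definition above as the number of images divided by the total processing time. Because the tables report precision and recall rounded to two decimals, recomputing F1 from them can differ from the reported F1 in the last digit.

```python
from typing import Tuple


def detection_metrics(tp: int, fp: int, fn: int) -> Tuple[float, float, float]:
    """Precision, recall and F1 from matched-detection counts."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    denom = precision + recall
    f1 = 2 * precision * recall / denom if denom else 0.0
    return precision, recall, f1


def f1_from_pr(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall (fractions or percentages)."""
    return 2 * precision * recall / (precision + recall)


def frames_per_second(num_images: int, total_seconds: float) -> float:
    """FPS = N / total time, i.e. the reciprocal of the mean per-image time."""
    return num_images / total_seconds


p, r, f1 = detection_metrics(tp=90, fp=10, fn=20)  # hypothetical counts
print(round(p, 4), round(r, 4), round(f1, 4))      # 0.9 0.8182 0.8571
print(round(f1_from_pr(84.50, 72.04), 2))          # DBNet row of the ablation: 77.77
```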

4.4. Ablation Experiment

4.5. Comparison Experiments

5. Conclusions

- (1) Superior detection performance: EBiDNet achieves an F1-score of 91.94% on the original dataset, 14.17 percentage points higher than DBNet, while reducing the parameter count by 17.96% (from 24.55 M to 20.14 M), confirming the complementary benefits of EfficientNetV2-S and BiFPN;

- (2) Enhanced robustness and adaptability: EBiDNet remains robust across diverse and complex industrial scenarios and handles a wide range of LCD character interfaces;

- (3) Comparative advantages: EBiDNet clearly outperforms mainstream methods such as DBNet, EAST, and FCE in both detection accuracy and the completeness of detected character regions (F1-scores of 91.94% versus 77.77%, 76.02%, and 89.78%, respectively). However, some other improved algorithms, such as EDPNet [17], report higher scores, so the proposed method still has limitations. Future work could explore channel and spatial attention mechanisms, adversarial data augmentation, and deformable convolutions to further improve character detection in complex scenarios.

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- Chen, S.F.; Lai, C.C. The Defect Classification of TFT-LCD Array Photolithography Process via Using Back-Propagation Neural Network. Appl. Mech. Mater. 2013, 378, 340–345.

- Shah, R.; Doss, A.S.A.; Lakshmaiya, N. Advancements in AI-Enhanced Collaborative Robotics: Towards Safer, Smarter, and Human-Centric Industrial Automation. Results Eng. 2025, 27, 105704.

- Xue, C.; Bai, X. Probabilistic Carbon Emission Flow Calculation of Power System with Latin Hypercube Sampling. Energy Rep. 2025, 14, 751–765.

- El Zomor, M.A.; Ahmed, M.H.; Ahmed, F.S.; Elhelaly, M.A. Failure Analysis of Bolts in Deluge Valve Bonnet in Cooling Tower System in Petrochemical Plant. Sci. Rep. 2025, 15, 14133.

- Peng, W.X. Research on Industrial Inspection Technology Based on Computer Vision. Mod. Manuf. Technol. Equip. 2023, 59, 112–114.

- Zhao, X.; Kargoll, B.; Omidalizarandi, M.; Xu, X.; Alkhatib, H. Model Selection for Parametric Surfaces Approximating 3D Point Clouds for Deformation Analysis. Remote Sens. 2018, 10, 634.

- Liao, M.; Shi, B.; Bai, X.; Wang, X.; Liu, W. TextBoxes: A Fast Text Detector with a Single Deep Neural Network. In Proceedings of the AAAI Conference on Artificial Intelligence, San Francisco, CA, USA, 4–9 February 2017.

- Zhang, S.; Duan, A.; Sun, Y. A Text-Detecting Method Based on Improved CTPN. J. Phys. Conf. Ser. 2023, 2517, 012014.

- Ma, X.; Tian, Y.H.; Zhao, W. A Review of Neural Network-Based Machine Translation Research. Comput. Eng. Appl. 2025, online ahead of print. Available online: https://link.cnki.net/urlid/11.2127.TP.20250522.1548.014 (accessed on 22 May 2025).

- Zhou, X.; Yao, C.; Wen, H.; Wang, Y.; Zhou, S.; He, W.; Liang, J. EAST: An Efficient and Accurate Scene Text Detector. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 2642–2651.

- Wang, R.; Yang, C.Y. Transmission Cable Model Identification Based on Dual-Stage Correction. Meas. Control Technol. 2025, 44, 26–34.

- Feng, L.; Chen, Y.; Zhou, T.; Hu, F.; Yi, Z. Review of Human Lung and Lung Lesion Regions Segmentation Methods Based on CT Images. J. Image Graph. 2022, 27, 722–749.

- Deng, D.; Liu, H.; Li, X.; Cai, D. PixelLink: Detecting Scene Text via Instance Segmentation. In Proceedings of the AAAI Conference on Artificial Intelligence, New Orleans, LA, USA, 2–7 February 2018.

- Liao, M.; Wan, Z.; Yao, C.; Chen, K.; Bai, X. Real-Time Scene Text Detection with Differentiable Binarization. In Proceedings of the AAAI Conference on Artificial Intelligence, New York, NY, USA, 7–12 February 2020; Volume 34, pp. 11474–11481.

- Zhu, Y.; Chen, J.; Liang, L.; Kuang, Z.; Jin, L.; Zhang, W. Fourier Contour Embedding for Arbitrary-Shaped Text Detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Nashville, TN, USA, 19–25 June 2021; pp. 3122–3130.

- Liu, L.L.; Liu, G.M.; Qi, B.Y.; Deng, X.S.; Xue, D.Z.; Qian, S.S. Efficient Inference Techniques of Large Models in Real-World Applications: A Comprehensive Survey. Comput. Sci. 2025, online ahead of print. Available online: https://link.cnki.net/urlid/50.1075.TP.20250703.1601.002 (accessed on 3 July 2025).

- Guan, S.; Niu, Z.; Kong, M.; Wang, S.; Hua, H. EDPNet (Efficient DB and PARSeq Network): A Robust Framework for Online Digital Meter Detection and Recognition Under Challenging Scenarios. Sensors 2025, 25, 2603.

- Wei, W.; Long, N.; Tian, Y.; Kang, B.; Wang, D.L.; Zhao, W.B. Text Detection Method for Power Equipment Nameplates Based on Improved DBNet. High Volt. Eng. 2023, 49 (Suppl. S1), 63–67.

- Yao, L.; Tang, C.; Wan, Y. Advanced Text Detection of Container Numbers via Dual-Branch Adaptive Multiscale Network. Appl. Sci. 2025, 15, 1492.

- Li, Z.X.; Zhou, Y.T. iSFF-DBNet: An improved text detection algorithm in e-commerce images. Comput. Eng. Sci. 2023, 45, 2008–2017.

- Zheng, Q.; Zhang, Y. Text Detection and Recognition for X-Ray Weld Seam Images. Appl. Sci. 2024, 14, 2422.

- Huang, B.; Bai, A.; Wu, Y.; Yang, C.; Sun, H. DB-EAC and LSTR: DBnet based seal text detection and Lightweight Seal Text Recognition. PLoS ONE 2024, 19, e0301862.

- Mai, R.; Wang, J. UM-YOLOv10: Underwater Object Detection Algorithm for Marine Environment Based on YOLOv10 Model. Fishes 2025, 10, 173.

- Ding, Y.; Han, B.; Jiang, H.; Hu, H.; Xue, L.; Weng, J.; Tang, Z.; Liu, Y. Application of Improved YOLOv8 Image Model in Urban Manhole Cover Defect Management and Detection: Case Study. Sensors 2025, 25, 4144.

- Rossi, M.J.; Vervoort, R.W. Enhancing Inundation Mapping with Geomorphological Segmentation: Filling in Gaps in Spectral Observations. Sci. Total Environ. 2025, 997, 180180.

- Xie, W.; Cui, Y.R. Identification of Maize Leaf Diseases Based on Improved EfficientNetV2 Model. Jiangsu Agric. Sci. 2025, 53, 207–215.

- Yin, L.; Wang, N.; Li, J. Electricity Terminal Multi-Label Recognition with a “One-Versus-All” Rejection Recognition Algorithm Based on Adaptive Distillation Increment Learning and Attention MobileNetV2 Network for Non-Invasive Load Monitoring. Appl. Energy 2025, 382, 125307.

- Mao, Y.; Tu, L.; Xu, Z.; Jiang, Y.; Zheng, M. Combinatorial Spider-Hunting Strategy to Design Multilayer Skin-Like Pressure-Stretch Sensors with Precise Dual-Signal Self-Decoupled and Smart Object Recognition Ability. Res. Astron. Astrophys. 2025, 25, 1147.

- Zhuang, J.; Chen, W.; Huang, X.; Yan, Y. Band Selection Algorithm Based on Multi-Feature and Affinity Propagation Clustering. Remote Sens. 2025, 17, 193.

- Lin, T.Y.; Dollár, P.; Girshick, R.; He, K.; Hariharan, B.; Belongie, S. Feature Pyramid Networks for Object Detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 936–944.

- Guo, Y.; Zhan, W.; Zhang, Z.; Zhang, Y.; Guo, H. FRPNet: A Lightweight Multi-Altitude Field Rice Panicle Detection and Counting Network Based on Unmanned Aerial Vehicle Images. Agronomy 2025, 15, 1396.

- Yu, X.; Lin, S.J. Research on Improved Text Detection Algorithm for Prosecutorial Scenarios Based on DBNet. SmartTech Innov. 2024, 30, 7.

- Liao, M.; Zou, Z.; Wan, Z.; Yao, C.; Bai, X. Real-Time Scene Text Detection with Differentiable Binarization and Adaptive Scale Fusion. IEEE Trans. Pattern Anal. Mach. Intell. 2022, 45, 919–931.

- Zhang, S.X.; Zhu, X.; Hou, J.B.; Liu, C.; Yang, C.; Wang, H.; Yin, X.C. Deep Relational Reasoning Graph Network for Arbitrary Shape Text Detection. arXiv 2020, arXiv:2003.07493.

- Sheng, T.; Chen, J.; Lian, Z. CentripetalText: An Efficient Text Instance Representation for Scene Text Detection. arXiv 2022, arXiv:2107.05945.

- Li, X.T.; Deng, Y.H. DDP-YOLOv8 model for battery character defect detection. J. Electron. Meas. Instrum. 2025, online ahead of print, 1–10. Available online: https://link.cnki.net/urlid/11.2488.TN.20250512.1511.004 (accessed on 13 May 2025).

- Deng, W.C.; Yu, X.C.; Zhu, J.B.; Ma, Q.S.; Chen, Y.; Ye, C.; Zhang, C.Z.; Ge, C.Y. Text detection model based on DBNet-CST. Commun. Inf. Technol. 2025, 1, 100–103.

- Cai, W.T.; Zhao, H.; Wang, H.; Zhang, H. An Improved Inkjet Character Recognition Algorithm Based on Multi-Granularity Attention. Manuf. Autom. 2024, 46, 148–155.

| Stage | Operator | Stride | Channels | Layers |
|---|---|---|---|---|
| 0 | Conv 3 × 3 | 2 | 24 | 1 |
| 1 | Fused-MB Conv1, k3 × 3 | 1 | 24 | 2 |
| 2 | Fused-MB Conv4, k3 × 3 | 2 | 48 | 4 |
| 3 | Fused-MB Conv4, k3 × 3 | 2 | 64 | 4 |
| 4 | MB Conv4, k3 × 3, SE0.25 | 2 | 128 | 6 |
| 5 | MB Conv6, k3 × 3, SE0.25 | 1 | 160 | 9 |
| 6 | MB Conv6, k3 × 3, SE0.25 | 2 | 256 | 15 |
| 7 | Conv 3 × 3 & Pooling & FC | - | 1280 | 1 |
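The Stride column determines the spatial resolution of each stage’s output. The sketch below accumulates the strides for a 640 × 640 input (the crop size used in training) and assumes “same” padding, so each stride-2 stage halves the size with ceiling rounding. Which stages feed the neck is not stated in this section; taking the last stage at each of the 1/4, 1/8, 1/16, and 1/32 scales, as DBNet does with ResNet features, is an assumption.

```python
from typing import List

# Strides of stages 0-6 from the table; stage 7 (pooling + FC head) is not used for detection.
STAGE_STRIDES = [2, 1, 2, 2, 2, 1, 2]


def stage_output_sizes(input_size: int, strides: List[int] = STAGE_STRIDES) -> List[int]:
    """Spatial size after each stage, assuming 'same' padding (ceiling division)."""
    sizes, size = [], input_size
    for s in strides:
        size = -(-size // s)  # ceil(size / s)
        sizes.append(size)
    return sizes


sizes = stage_output_sizes(640)
print(sizes)                      # [320, 320, 160, 80, 40, 40, 20]
print([640 // s for s in sizes])  # downsampling factors: [2, 2, 4, 8, 16, 16, 32]
```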

| Item | Configuration |
|---|---|
| Operating System | Windows 10 Professional |
| CPU | Intel Core i5-13400F |
| GPU | RTX 4060 |
| Python | 3.10.16 |
| PaddlePaddle | 2.6 |
| CUDA | 11.8 |

| Model | Precision | Recall | F1-Score | Params (M) | FPS |
|---|---|---|---|---|---|
| DBNet | 84.50% | 72.04% | 77.77% | 24.55 | 18.87 |
| DBNet + BiFPN | 86.55% | 79.11% | 82.66% | 24.09 | 20.88 |
| DBNet + EfficientNetV2-S | 92.43% | 84.48% | 88.28% | 20.29 | 17.02 |
| DBNet + EfficientNetV2-S + BiFPN (Ours) | 93.63% | 90.32% | 91.94% | 20.14 | 18.61 |
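The headline figures quoted in the conclusions can be recomputed directly from this ablation table. The short script below does so for each variant relative to the DBNet baseline, and also reports the relative FPS change.

```python
# (F1-score %, Params in M, FPS) copied from the ablation table above.
rows = {
    "DBNet": (77.77, 24.55, 18.87),
    "DBNet + BiFPN": (82.66, 24.09, 20.88),
    "DBNet + EfficientNetV2-S": (88.28, 20.29, 17.02),
    "EBiDNet (Ours)": (91.94, 20.14, 18.61),
}

base_f1, base_params, base_fps = rows["DBNet"]
for name, (f1, params, fps) in rows.items():
    if name == "DBNet":
        continue  # the baseline itself
    f1_gain = f1 - base_f1                                    # percentage points
    param_cut = (base_params - params) / base_params * 100.0  # relative reduction, %
    fps_change = (fps - base_fps) / base_fps * 100.0          # relative change, %
    print(f"{name:<26} F1 {f1_gain:+.2f} pp  params -{param_cut:.2f}%  FPS {fps_change:+.2f}%")
```

For EBiDNet this gives an F1 gain of 14.17 percentage points and a 17.96% parameter reduction, matching the conclusions, while FPS stays close to the baseline (about 1.4% lower).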

| Model | Precision | Recall | F1-Score |
|---|---|---|---|
| EAST | 84.41% | 69.17% | 76.02% |
| FCE | 90.08% | 89.49% | 89.78% |
| DB++ [33] | 90.89% | 82.66% | 86.58% |
| DRRG [34] | 89.92% | 80.91% | 85.18% |
| CT [35] | 88.68% | 81.70% | 85.05% |
| EBiDNet (Ours) | 93.63% | 90.32% | 91.94% |

| Model | Precision | Recall | F1-Score |
|---|---|---|---|
| EDPNet [17] | 100.00% | 99.59% | 99.79% |
| F1 [18] | 84.70% | 79.20% | 82.20% |
| iSFF-DBNet [20] | 82.60% | 68.10% | 74.60% |
| DB-EAC [22] | 90.29% | 85.17% | 87.65% |
| DDP-YOLOv8 [36] | 86.30% | 97.00% | 91.30% |
| DBNet-CST [37] | 92.20% | 81.10% | 86.30% |
| F2 [38] | 98.82% | 84.20% | 90.92% |
| EBiDNet (Ours) | 93.63% | 90.32% | 91.94% |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Wang, K.; Wu, Y.; Yan, Z. EBiDNet: A Character Detection Algorithm for LCD Interfaces Based on an Improved DBNet Framework. Symmetry 2025, 17, 1443. https://doi.org/10.3390/sym17091443

Wang K, Wu Y, Yan Z. EBiDNet: A Character Detection Algorithm for LCD Interfaces Based on an Improved DBNet Framework. Symmetry. 2025; 17(9):1443. https://doi.org/10.3390/sym17091443

Chicago/Turabian Style: Wang, Kun, Yinchuan Wu, and Zhengguo Yan. 2025. "EBiDNet: A Character Detection Algorithm for LCD Interfaces Based on an Improved DBNet Framework" Symmetry 17, no. 9: 1443. https://doi.org/10.3390/sym17091443

APA Style: Wang, K., Wu, Y., & Yan, Z. (2025). EBiDNet: A Character Detection Algorithm for LCD Interfaces Based on an Improved DBNet Framework. Symmetry, 17(9), 1443. https://doi.org/10.3390/sym17091443