1. Introduction

In today's internet-dominated world, user-generated content, especially reviews of movies, TV shows, and streaming services, has become an essential source of public opinion. These reviews are dense with subjective sentiment, and many are organized around specific aspects: plot, acting, visual effects, direction, and soundtrack. Analyzing them is not easy, however, because Aspect-Based Sentiment Analysis (ABSA) of such reviews must account for emotional tone and contextual layering. Common sentiment analysis methods cannot capture the fine-grained interrelation between context and emotion. This is why more sophisticated, architecture-aware deep learning methods have come to the fore. This research compares deep learning architectures and chooses the best one for interpreting film and TV content from the perspective of ABSA [1].

The fundamental problem is how to systematically compare and rank deep learning models along several conflicting dimensions. The candidates include BERT (Bidirectional Encoder Representations from Transformers) + GRU hybrids, transformer-based encoders, attention-enhanced CNNs, and other hybrid networks. The criteria include classification accuracy, model interpretability, computational efficiency, and cross-domain generalizability. Conventional ranking and multi-criteria decision-making (MCDM) methods usually cannot capture the vagueness of subjective human preferences. Therefore, we combine the Deck of Cards Method (DoCM) [2] with Circular Pythagorean Fuzzy Sets (CPFS) [3].

DoCM is a flexible, easy-to-understand decision support technique. It allows experts to perform pairwise comparisons based not on numeric scores but on explicit statements of preference. By adopting circular logic, we allow preferences to be described directionally, recognizing that two options may lie near to or far from each other within a conceptual cycle of value. Moreover, the framework embeds Pythagorean fuzzy sets (PFS), which represent membership (support for an option), non-membership (opposition), and hesitation. This captures the vague indecision that is realistic whenever subjective models are assessed.

The example problem in this research involves five candidate architectures, evaluated against four essential criteria based on expert knowledge and dataset performance. The procedure starts with fuzzy evaluations of each alternative, derives Pythagorean fuzzy scores, and then produces circular rankings using the logic of the Deck of Cards matrix. The approach is mathematically sound, interpretable, and visually constructive for choosing the most appropriate ABSA model. Finally, it gives researchers, media analysts, and AI practitioners a transparent, human-centric framework that identifies the best-performing sentiment analysis model according to metrics, human intuition, and uncertainty. These are essential advantages for recommendation systems, opinion monitoring services, and media analytics systems [4].

1.1. Background

The digital world is a data-driven society in which media platforms produce thousands of terabytes of unstructured information every day, reflecting people's opinions, emotions, and sentiments. Accurately perceiving this sentiment matters for applications such as political prediction, brand management, mental health monitoring, and even public safety. Traditional approaches to sentiment analysis are somewhat practical. However, they frequently fail to address the vagueness, ambiguity, and subjectivity inherent in human language. Consequently, there is growing demand for more intelligent, flexible, and uncertainty-aware decision-making frameworks, especially for selecting optimal deep learning architectures for such applications.

To address this problem, the current paper proposes a new decision-making scheme that combines CPFS with an existing decision-making tool, the DoC technique. This hybrid scheme better articulates human-like reasoning in uncertain settings where information possesses symmetry, and it facilitates the systematic elicitation of pairwise preferences. Embedded in a multi-criteria decision-making (MCDM) environment, the model allows one to choose the most appropriate deep learning model for interpreting sentiment in media. Beyond capturing complex fuzzy relationships, the CPFS structure adds circular geometry. This expresses the hesitancy and relative strength of preferences better than classical fuzzy theories.

Fuzzy Set (FS) theory was first introduced by Lotfi Zadeh in 1965 [5] and revolutionized how uncertainty and imprecision are treated in computational models. Over the decades, numerous extensions have been developed to address the limitations of FS; some of them are summarized in Table 1.

Each successive model addresses the weaknesses of the previous one, improving how it models uncertainty, hesitancy, and the relationship between membership and non-membership degrees. In particular, CPFS introduces a circular geometric structure. This structure captures the degree of uncertainty as well as the balance and trade-off between opposing states, offering richer semantic insight for decision-making.
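For reference, the constraints behind this progression can be written compactly as follows. This is a sketch of the commonly used forms, not the paper's own definitions, which Section 3 develops. In particular, the admissible range of the radius $r$ depends on the CPFS definition adopted and is left open here.

$$
\begin{aligned}
\text{IFS:}\quad & \mu_A(x) + \nu_A(x) \le 1,\\
\text{PFS:}\quad & \mu_A(x)^2 + \nu_A(x)^2 \le 1, \qquad \pi_A(x) = \sqrt{1 - \mu_A(x)^2 - \nu_A(x)^2},\\
\text{CPFS:}\quad & A_r = \{\langle x, \mu_A(x), \nu_A(x); r \rangle : x \in X\}, \qquad \mu_A(x)^2 + \nu_A(x)^2 \le 1,\ r \ge 0.
\end{aligned}
$$

Here $\mu_A$ and $\nu_A$ are the membership and non-membership degrees, and $\pi_A$ is the hesitation degree. In a CPFS, each pair $(\mu_A(x), \nu_A(x))$ is read as the centre of a circle of radius $r$ rather than as a single point.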

1.2. Problem Statement

The abundance of user-generated reviews on film and television sites creates a rich but complex data environment. In this environment, sentiment tends to concentrate on specific aspects, such as storyline, acting, cinematography, and direction. Extracting these aspect-level sentiments requires advanced deep learning models. However, the large number of candidate architectures, such as attention-based networks, BERT hybrids, and transformer variants, raises a problem of its own: which deep learning-based semi-supervised approaches best suit aspect-specific sentiment analysis of media reviews?

Existing performance appraisal approaches are incomplete because they have three fundamental shortcomings:

They depend heavily on crisp metrics and disregard indecisiveness.

Their comparative model judgments are not interpretable by humans.

They cannot incorporate subjective, circular preferences or fuzzy reasoning.

An adequate framework must therefore support multi-criteria assessment, represent human reasoning, and accommodate fuzzy uncertainty and preference loops. This study fills the research gap by offering CPF-DoCM, a Circular Pythagorean Fuzzy Deck of Cards Method, to measure and rank deep learning architectures for media sentiment interpretation.

1.3. Research Gap

Deep learning and sentiment analysis have made substantial progress. Nevertheless, an important gap remains: there is no framework for evaluating and choosing deep learning architectures in scenarios marked by uncertainty, hesitation, and experts' personal interpretations. Current model selection frameworks mostly use crisp measures, such as accuracy or loss functions. They therefore cannot cover human-focused elements, such as interpretability, computational workability, and scenario-specific relevance. These elements are particularly important in sensitive areas like media sentiment interpretation.

Fuzzy set theories have been used in decision-making. In most cases, however, the conventional variants have been applied, such as ordinary fuzzy sets, intuitionistic fuzzy sets, or Pythagorean fuzzy sets. None of these models circular geometry, which can express trade-offs and preference imprecision more smoothly. In addition, the most commonly used MCDM techniques lack an easy-to-use procedure for eliciting experts' priorities. This makes them harder to apply in real-world, multi-stakeholder settings.

To date, no unified framework has combined Circular Pythagorean Fuzzy Sets (CPFS) and the Deck of Cards (DoC) method to both select a deep learning model and explain that selection clearly. This study addresses the gap with a new CPFS-DoC framework designed to find the optimal architecture in the uncertain, complex field of media sentiment analysis.

1.4. Research Question and Motivation

The research questions of this study are as follows:

How can deep learning architectures for aspect-based sentiment analysis of film and television content be evaluated and ranked in a criterion-consistent, practical way using a multi-criteria decision-making (MCDM) approach?

How can Pythagorean fuzzy sets improve the representation of doubt, ambivalence, and subjective expert evaluation in model selection for sentiment analysis?

How can circular reasoning be incorporated into comparative decision models to account for the non-linear, cyclic ordering of choices when comparing deep learning architectures?

Would combining the Deck of Cards Method with Circular Pythagorean fuzzy logic offer a more structured, interpretable, transparent, and cognitively consistent system for choosing optimal deep learning models in media sentiment interpretation?

How does applying the proposed hybrid framework affect the accuracy, reliability, and explainability of deep learning model selection for aspect-specific sentiment analysis in the entertainment domain?

The motivation of our study is as follows:

As streaming services and user-generated reviews have grown in popularity, the entertainment sector has become a gold mine of sentiment. Audiences no longer rely on star ratings alone. Instead, they write descriptive reviews that convey nuanced feelings about aspects such as story, characters, soundtrack, and so on. Aspect-based sentiment analysis (ABSA) has already proved valuable for interpreting such fine-grained opinions. Even so, selecting a deep learning architecture for the task remains a multifaceted dilemma. Candidate models differ in retraining cost, scalability, and accuracy, ranging from CNN- and LSTM-based frameworks to more advanced transformer-based models (e.g., BERT and GPT).

Available evaluation methods usually rely on single measures (such as accuracy or F1-score) and do not reflect the multidimensional nature of model suitability. In addition, classic model selection approaches ignore experts' subjective judgments, ambiguous preferences, and hesitation between models that perform similarly. This research is therefore driven by the need for a more human-centered and flexible decision-making methodology. Such a methodology should incorporate expert opinion, deal with ambiguity, and represent a cyclic model of preferences when models perform similarly on several criteria. Accordingly, this paper proposes a hybrid system that integrates DoCM, a pictorial and intuitive ranking-based approach, with CPFS theory. The combination gives the sentiment-model evaluation procedure uncertainty-sensitive, circular comparative inference.

1.5. Objective and Contribution

To develop a new MCDM method combining DoCM with CPFS.

To compare the suitability of different deep learning architectures (e.g., BERT + BiGRU, Transformer-XL, CNN-LSTM) for aspect-specific sentiment analysis of film and television texts.

To model human-like comparative reasoning through circular pairwise judgments, hesitation modeling, and symmetric fuzzy preference aggregation.

To demonstrate how the framework can be applied to a real-world scenario through a case study, and to evaluate performance against several criteria (e.g., accuracy, interpretability, computational efficiency, and generalization).

To confirm the effectiveness of the proposed approach through score computation, aggregation, ranking, and visual analysis.

This study makes an innovative, multidisciplinary contribution by proposing a CPF-DoCM model for assessing and selecting deep learning models for media sentiment analysis. To our knowledge, it is the first attempt to unite circular fuzzy reasoning, PFS theory, and DoCM in an integrated model selection pipeline suited to the demanding task of aspect-specific sentiment interpretation.

First, the paper provides a new approach to subjective model evaluation, realized through Pythagorean fuzzy numbers (PFNs), which express the degrees of membership, non-membership, and hesitation in human judgments. Second, it employs circular logic, allowing non-linear, direction-aware comparisons that account for subtle preference cycles among alternatives, something ordinary ranking systems cannot do. Third, DoCM provides an intuitive interface that lets a decision-maker rank model alternatives through visual, card-based pairwise comparisons rather than arbitrarily assigned numerical scores.

By combining circular fuzzy reasoning, hesitation modeling, and the Deck of Cards method, the framework studies quantitative performance and qualitative interpretability together, and thus addresses the research gap identified above. To illustrate its usefulness, the framework is applied in a case study comparing deep learning models on several key criteria, including accuracy, interpretability, generalization, and computational efficiency. The approach identifies the most relevant model in context, increasing transparency, flexibility, and trust in the model selection process.
This contribution is relevant to researchers and practitioners in natural language processing, decision science, sentiment analysis, and AI ethics, especially in the entertainment and media analytics domain.

1.6. Layout

This article applies the DoC method to CPF information. The remainder of the article is organized as follows. Section 1 presents an overview of this research work, and Section 2 reviews the literature on the DoC method. In Section 3, we explore CPFS and some fundamental operational laws for CPF information. Section 4 extends the DoC technique, methodology, and algorithm to the CPF framework for MCDM problems; we then apply the proposed approach to a numerical example and conduct sensitivity and comparison analyses. Section 5 presents the article's conclusions, outlines its limitations, and discusses the scope of future research.

4. Evaluation of Optimal Deep Learning Architecture in Media Sentiment Interpretation

Evaluating deep learning models for interpreting sentiment in media involves several, often conflicting, performance measures, such as accuracy, interpretability, computational efficiency, and generalization ability. Conventional evaluation often considers accuracy alone. It ignores the trade-offs that matter in real-life applications, particularly in high-stakes fields such as journalism, politics, and mental health monitoring. This paper uses CPF-DoCM as an MCDM method to evaluate deep learning architectures and rank them rigorously.

Five architectures, namely BERT, LSTM, Transformer-XL, RoBERTa, and BiLSTM, are assessed against four key criteria. The assessment uses expert linguistic judgments recorded as Circular Pythagorean Fuzzy Numbers (CPFNs). Such fuzzy judgments let decision-makers represent preference and indecisiveness among criteria more naturally, avoiding the drawbacks of binary or crisp evaluation models. The DoC method calculates the relative importance weights of the criteria through a visual way of expressing preference. This makes stakeholder input cognitively convenient and mathematically sound, so that expert views are represented correctly in the fuzzy MCDM setup. Once the weights are obtained, advanced fuzzy operators aggregate the CPFNs, and the scores of the alternatives are calculated.

4.1. Case Study

The case study uses simulated data rather than a real-world benchmark dataset. It aims to demonstrate how the CPF-DoCM framework operates in a controlled setting and how expert estimates and fuzzy preferences can inform the ranking of models. The hypothetical performance values were chosen to align with trends reported in recent ABSA benchmark studies [12,15]. A subsequent step will be to verify empirically the practical effectiveness of the ranked models on real review data from websites such as IMDb or Rotten Tomatoes.

To increase transparency and explainability for practitioners, the CPF-DoCM process used in this case study represents every step of the decision-making process in a traceable, interpretable manner. The Deck of Cards Method (DoCM) lets professionals reveal their preferences intuitively and graphically through pairwise comparisons, while CPFS handles hesitation and uncertainty in a well-structured way. Moreover, the overall score of each alternative can be decomposed to show how much each criterion contributed to its final rank. This layered structure makes the justification for the model selection outcome transparent.

Here, we solve an illustrative example with four criteria and five alternatives (Table 2). We rank the alternatives against these criteria using the DoC method within the CPF framework and identify the best one.

The alternatives are denoted $A_1, \dots, A_5$ and the criteria $C_1, \dots, C_4$. Each criterion is weighted using DoCM, with blank cards placed between pairs of criteria to calculate the normalized weights.

These models were not implemented or trained in this study; they were chosen as representative alternatives to be evaluated within the CPFS-DoCM framework.

4.2. Numerical Evaluation

Step 1. Decision-makers rank the alternatives (or criteria) from most to least preferred. Let $A_1, \dots, A_m$ be the alternatives and $C_1, \dots, C_n$ the criteria (here $m = 5$ and $n = 4$). The ranking of the alternatives on these criteria is shown in Table 3.

Step 2. A comparison table is created by specifying the number of blank cards (gaps) between each pair of elements. The matrix includes all pairwise comparisons and may consist of interval or uncertain values, as shown in Table 4.
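A minimal sketch of Step 2 in Python. It assumes the decision-maker supplies a strict ranking plus the number of blank cards between each consecutive pair. The full table is then derived under the Deck of Cards reading $e_{ik} = e_{ij} + e_{jk} + 1$, in which each intermediate card adds one unit of distance. The criterion labels and blank counts are hypothetical, and interval or uncertain entries are not handled.

```python
# Hypothetical input: criteria ranked from most to least important, and the
# number of blank cards placed between each consecutive pair.
ranking = ["C2", "C1", "C4", "C3"]
blanks = [1, 0, 2]  # len(blanks) == len(ranking) - 1


def pairwise_blank_table(ranking, blanks):
    """Return {(a, b): e_ab} for every pair where a is ranked above b.

    Assumes the Deck of Cards reading e_ik = e_ij + e_jk + 1, i.e. every
    intermediate card between two items counts as one extra unit.
    """
    if len(blanks) != len(ranking) - 1:
        raise ValueError("need one blank count per consecutive pair")
    table = {}
    for i in range(len(ranking)):
        for k in range(i + 1, len(ranking)):
            # blanks between consecutive items from position i to k,
            # plus the (k - i - 1) cards lying strictly between them
            table[(ranking[i], ranking[k])] = sum(blanks[i:k]) + (k - i - 1)
    return table


table = pairwise_blank_table(ranking, blanks)
for (a, b), e in table.items():
    print(f"e({a},{b}) = {e}")
```

For example, the entry for (C2, C3) counts the 1 + 0 + 2 blanks plus the two intermediate cards C1 and C4, giving 5.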

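Step 3. Check the consistency of the table using the rule shown in Table 5. For all elements ranked $i \succ j \succ k$, the table is consistent if $e_{ik} = e_{ij} + e_{jk} + 1$, where $e_{ij}$ denotes the number of blank cards between elements $i$ and $j$. If inconsistencies are found, revise the table through interaction with the decision-maker.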
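The consistency check in Step 3 can be sketched as follows. The code assumes the transitivity rule $e_{ik} = e_{ij} + e_{jk} + 1$ for every triple ranked $i \succ j \succ k$. The table is hypothetical and deliberately contains one inconsistent entry, which the check reports so it can be revised with the decision-maker.

```python
from itertools import combinations

# Hypothetical pairwise table entered by a decision-maker: e[(a, b)] is the
# number of blank cards between a (preferred) and b. The (C2, C3) entry is
# deliberately inconsistent for illustration.
order = ["C2", "C1", "C4", "C3"]  # most to least important
e = {
    ("C2", "C1"): 1, ("C2", "C4"): 2, ("C2", "C3"): 4,
    ("C1", "C4"): 0, ("C1", "C3"): 3, ("C4", "C3"): 2,
}


def inconsistencies(order, e):
    """List triples (i, j, k), ranked i above j above k, that violate the
    assumed rule e_ik = e_ij + e_jk + 1, with the observed and implied values."""
    bad = []
    for i, j, k in combinations(order, 3):  # combinations keep the ranking order
        expected = e[(i, j)] + e[(j, k)] + 1
        if e[(i, k)] != expected:
            bad.append((i, j, k, e[(i, k)], expected))
    return bad


for i, j, k, got, want in inconsistencies(order, e):
    print(f"({i}, {j}, {k}): e({i},{k}) = {got}, rule implies {want}")
```

Both reported triples point to the same entry, (C2, C3). Correcting that single value to 5 clears every violation, which is how the sketch supports the revision loop described in Step 3.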

Step 4. Once consistency is achieved, calculate the weight for each item, as shown in Table 6.

Then, normalize the results, as shown in Table 7.
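Steps 1 to 4 can be condensed into a short weight computation. This sketch follows the usual Deck of Cards idea that each blank card adds one unit of value between consecutive ranks. It fixes the least important criterion at one unit, which is a simplifying assumption; the revised Simos procedure instead derives the unit from a ratio $z$ between the most and least important criteria. The ranking and blank counts are hypothetical.

```python
# Hypothetical ranking (most to least important) and blank cards between
# consecutive criteria, as elicited in Steps 1 and 2.
ranking = ["C2", "C1", "C4", "C3"]
blanks = [1, 0, 2]


def docm_weights(ranking, blanks, base=1.0):
    """Sketch of Deck of Cards weights.

    Assumption (not taken from the paper's tables): the least important
    criterion is worth `base` units, and each step up the ranking adds
    (blank cards + 1) units. Raw values are then normalized to sum to 1.
    """
    values = {ranking[-1]: base}
    for pos in range(len(ranking) - 2, -1, -1):
        values[ranking[pos]] = values[ranking[pos + 1]] + blanks[pos] + 1
    total = sum(values.values())
    return {c: v / total for c, v in values.items()}


weights = docm_weights(ranking, blanks)
print({c: round(w, 3) for c, w in weights.items()})
```

With blanks [1, 0, 2], the raw values are 7, 5, 4, and 1 units for C2, C1, C4, and C3. The normalized weights are therefore 7/17, 5/17, 4/17, and 1/17.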

Step 5. Each evaluation is expressed as a Pythagorean fuzzy number, as shown in Table 8.
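Step 5 can be sketched with a small value type that does three things. It enforces the Pythagorean constraint $\mu^2 + \nu^2 \le 1$, derives the hesitation degree $\pi = \sqrt{1 - \mu^2 - \nu^2}$, and applies the common PFN score function $\mu^2 - \nu^2$. The class name `PFN` and the sample judgment are hypothetical. The circular radius of a CPFN is deliberately left out, so this covers only the Pythagorean part of an evaluation.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class PFN:
    """Hypothetical Pythagorean fuzzy number (mu, nu) with mu^2 + nu^2 <= 1."""
    mu: float  # membership: support for the alternative
    nu: float  # non-membership: opposition to the alternative

    def __post_init__(self):
        if not (0 <= self.mu <= 1 and 0 <= self.nu <= 1):
            raise ValueError("mu and nu must lie in [0, 1]")
        if self.mu ** 2 + self.nu ** 2 > 1 + 1e-12:
            raise ValueError("mu^2 + nu^2 must not exceed 1")

    @property
    def hesitation(self) -> float:
        # pi = sqrt(1 - mu^2 - nu^2); max() guards against tiny negative rounding
        return math.sqrt(max(0.0, 1 - self.mu ** 2 - self.nu ** 2))

    def score(self) -> float:
        # common PFN score function mu^2 - nu^2, which lies in [-1, 1]
        return self.mu ** 2 - self.nu ** 2


# Hypothetical expert judgment: fairly strong support, with clear reservations.
a = PFN(0.7, 0.6)
print(round(a.score(), 2), round(a.hesitation, 3))
```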

Step 6. For each criterion, construct the corresponding evaluation matrix, as given in Table 9.

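Step 7. Compute the local scores, as given in Table 10.

For each alternative $A_i$ ($i = 1, \dots, m$), the unweighted overall score over the $n$ criteria is $S_i = \sum_{j=1}^{n} s_{ij}$, and the weighted overall score is $S_i^{w} = \sum_{j=1}^{n} w_j s_{ij}$. Here $s_{ij}$ is the local score of alternative $A_i$ on criterion $C_j$, and $w_j$ is the normalized weight of $C_j$ from Step 4.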
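Steps 7 and 8 can be sketched as a weighted sum followed by a descending sort. The sketch assumes two things: each local score $s_{ij}$ is a crisp number already oriented so that higher is better (for example, a PFN score), and the weights are the normalized DoCM weights. The alternatives, criteria, and numbers are hypothetical, and the paper's own aggregation operators may differ.

```python
# Hypothetical local scores s_ij (higher is better) and normalized weights w_j.
weights = {"C1": 0.4, "C2": 0.3, "C3": 0.2, "C4": 0.1}
local = {
    "A1": {"C1": 0.39, "C2": 0.20, "C3": 0.10, "C4": 0.50},
    "A2": {"C1": 0.30, "C2": 0.45, "C3": 0.25, "C4": 0.05},
    "A3": {"C1": 0.10, "C2": 0.15, "C3": 0.60, "C4": 0.40},
}


def overall(local, weights=None):
    """Overall score per alternative: sum_j s_ij if weights is None,
    otherwise sum_j w_j * s_ij."""
    return {
        alt: sum((1.0 if weights is None else weights[c]) * s for c, s in row.items())
        for alt, row in local.items()
    }


unweighted = overall(local)
weighted = overall(local, weights)
# Step 8: rank alternatives in descending order of weighted score
ranking = sorted(weighted, key=weighted.get, reverse=True)
print(ranking)
```

With these illustrative numbers, the weighted ranking is A2, A1, A3. The unweighted sums would instead order them A3, A1, A2, which shows how strongly the criterion weights can shape the outcome.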

Step 8. Rank the alternatives in descending order of their overall scores, as given in Table 11.

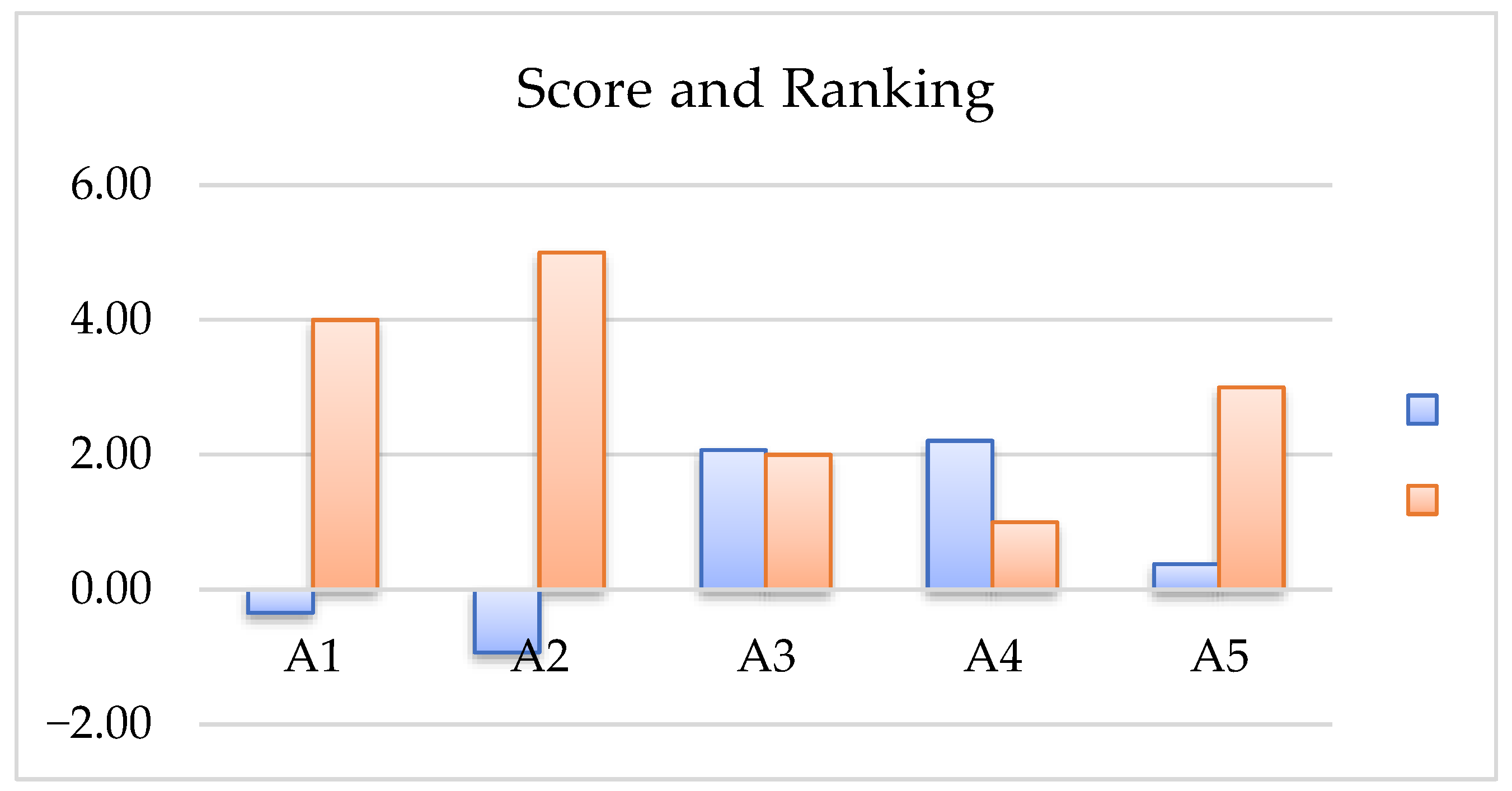

Step 9. Figure 2 gives a pictorial representation of the ranking of the alternatives.

4.3. Result Discussion and Comparison

The findings of the CPF-DoC model indicate that the top-ranked alternative in Table 11 is the optimal deep learning architecture for interpreting media sentiment. This conclusion rests on the aggregated scores across the four evaluation criteria, and the normalized aggregate score indicates stable and uniform assessments. In particular, this alternative stood out in interpretability while remaining competitive on all other measures. This suggests that it offers a good balance between modeling long-range dependencies and the semantic depth that matters in fine-grained sentiment processing.

The second-best alternative was somewhat more precise and adaptive but weaker in semantic representation, and thus less holistic. Another alternative underperformed, showing less consistent performance and lower robustness and explainability. These results support the effectiveness of the CPF-DoC approach in reflecting expert judgment and processing vague, uncertain assessments. Its ability to model qualitative gaps while preserving ordinal preferences is very useful in sophisticated decision-making scenarios, such as selecting deep learning models for sentiment analysis tasks.

Integrating CPFS and DoCM is computationally more demanding than conventional methods, because it involves calculating fuzzy membership and hesitation degrees as well as pairwise comparisons. For the current study (five models and four criteria), this is easily manageable. In very large-scale comparisons (e.g., hundreds of models), however, three challenges arise: the memory and time needed to handle large fuzzy matrices, the greater effort required to obtain expert input, and the need for parallel processing schemes and automated judgment tools. This is an area for future improvement.

Furthermore, when the circular scoring approach of CPFS-DoCM is used, the final scores of alternatives can be very close or even tied. This reflects real-life uncertainty in expert judgment and does not undermine the validity of the method. In such situations, the decision-maker can apply tie-breaking methods, such as reconsidering specific high-weight criteria, adding an auxiliary criterion, or seeking additional expert opinions. Because the scoring is visual and circular, it helps reveal when alternatives are almost identical, so that decisions can be refined deliberately rather than forced into a strict ranking.
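One of the tie-breaking options above, reconsidering the highest-weight criterion, can be sketched as follows. The following are assumptions for illustration, as are all the numbers: the tolerance `TOL` that defines a near tie, and the rule of preferring the alternative with the higher local score on the top-weighted criterion.

```python
# Hypothetical normalized weights and local scores (higher is better).
weights = {"C1": 0.40, "C2": 0.35, "C3": 0.25}
local = {
    "A1": {"C1": 0.50, "C2": 0.42, "C3": 0.30},
    "A2": {"C1": 0.55, "C2": 0.36, "C3": 0.30},
    "A3": {"C1": 0.30, "C2": 0.30, "C3": 0.30},
}
TOL = 0.01  # hypothetical gap below which two overall scores count as a near tie


def overall_scores(local, weights):
    """Weighted-sum overall score for each alternative."""
    return {a: sum(weights[c] * s for c, s in row.items()) for a, row in local.items()}


def near_ties(scores, tol=TOL):
    """Adjacent pairs in the descending ranking whose scores differ by less than tol."""
    ordered = sorted(scores, key=scores.get, reverse=True)
    return [(a, b) for a, b in zip(ordered, ordered[1:]) if scores[a] - scores[b] < tol]


def break_tie(a, b, local, weights):
    """Prefer the alternative with the higher local score on the top-weighted criterion."""
    top = max(weights, key=weights.get)
    return a if local[a][top] >= local[b][top] else b


scores = overall_scores(local, weights)
for a, b in near_ties(scores):
    print(f"{a} vs {b}: near tie, prefer {break_tie(a, b, local, weights)}")
```

Here A1 leads A2 by about 0.001 overall, which falls within the tolerance. A2 is preferred because it scores higher on C1, the highest-weight criterion.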

Decision explainability is particularly significant when ranking complex deep learning models. CPF-DoCM increases transparency in several critical ways:

Explicit weighting of criteria. Unlike black-box optimization approaches, CPF-DoCM displays the relative importance of each criterion (e.g., Accuracy, Interpretability, Complexity) and how these weights influence the rankings.

Decomposition of final scores. The score of an alternative can be broken down into the contributions of individual criteria, making it clear why a given model is ranked above another.
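The score decomposition described above is straightforward when the overall score is a weighted sum, because each criterion then contributes $w_j s_{ij}$. The sketch below assumes that aggregation form. It uses hypothetical weights and local scores, oriented so that higher is better; a low-complexity model therefore receives a high Complexity score.

```python
# Hypothetical normalized weights and local scores (higher is better).
weights = {"Accuracy": 0.45, "Interpretability": 0.35, "Complexity": 0.20}
local = {
    "A1": {"Accuracy": 0.60, "Interpretability": 0.30, "Complexity": 0.20},
    "A2": {"Accuracy": 0.40, "Interpretability": 0.60, "Complexity": 0.30},
}


def contributions(row, weights):
    """Per-criterion contributions w_j * s_ij; they sum to the overall score."""
    return {c: weights[c] * s for c, s in row.items()}


for alt, row in local.items():
    parts = contributions(row, weights)
    total = sum(parts.values())
    print(alt, round(total, 4), {c: round(v, 4) for c, v in parts.items()})
```

A2 (0.45) ranks above A1 (0.415) even though A1 contributes more through Accuracy (0.27 versus 0.18). The reason is that A2's Interpretability contribution (0.21 versus 0.105) more than offsets that gap.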

The comparative study of fuzzy set extensions highlights the distinctive benefits of the CPFS model used in this paper. Conventional fuzzy models, such as IFS and PFS, provide basic functionality for representing uncertainty and non-membership. However, they are not geometric and do not support fine-grained expression of preference. CIFS goes further in some respects, providing radius-based membership and the ability to visualize membership. Still, it does not incorporate expert-based preference elicitation techniques, such as the DoC framework, that suit the intuitive nature of such tasks.

In contrast, CPFS supports circular geometry (semantically richer, although still limited in geometric scope). It also retains all classical fuzzy properties, namely uncertainty accounting, hesitancy, and explicit non-membership, which gives it a distinctive ability to model complex human judgments. Combined with the DoC technique, CPFS enables an interactive, scale-based, cognitively natural decision-making process. It is thus particularly suited to identifying optimal deep learning architectures for sentiment analysis. A characteristic-based comparison is given in Table 12.
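A short calculation shows why the Pythagorean condition admits judgments that the intuitionistic one rejects. The pair below is a hypothetical expert evaluation, chosen only for the arithmetic:

$$
(\mu, \nu) = (0.8, 0.5):\quad \mu + \nu = 1.3 > 1 \ \text{(not admissible in IFS)}, \qquad \mu^2 + \nu^2 = 0.64 + 0.25 = 0.89 \le 1 \ \text{(admissible in PFS)},
$$

$$
\pi = \sqrt{1 - 0.89} = \sqrt{0.11} \approx 0.332.
$$

So an expert who strongly supports an alternative (0.8) yet retains noticeable reservations (0.5) can be represented directly in a PFS-based model, including CPFS, with a small residual hesitation.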

To support a classical performance comparison, Table 13 reports averaged traditional assessment scores (e.g., Precision, Recall, F1-score, and training time) for all five deep learning models. These values are either simulated under reasonable assumptions or derived from published sentiment analysis benchmarks. These metrics offer a robust empirical comparison for model selection. However, they do not reflect explainability, cross-domain generalization, or preference-based trade-offs across criteria. The proposed CPFS-DoCM framework combines these quantitative measures with qualitative and strategic criteria (e.g., interpretability and decision-maker preferences), providing a more holistic and flexible model selection methodology.

Interpretability is assessed qualitatively from the model architecture and insights reported in the literature. The model with the highest F1-score also demands more training resources. The final CPFS-DoCM aggregate score combines these classical scores with the decision-maker's other criteria, illustrating how the method integrates accuracy, efficiency, and interpretability.

4.4. Sensitivity Analysis

The sensitivity analysis shows that Transformer-XL remains the highest-ranked architecture even when the weights of individual criteria, such as accuracy or interpretability, are varied. This indicates that the CPF-DoC model identifies the best architecture robustly. Perturbations raise or lower the scores of other alternatives, but the ranking order remains largely consistent, supporting the stability and soundness of the developed method.

Table 14 shows the sensitivity analysis of the best alternatives.
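A one-at-a-time weight perturbation of the kind summarized in Table 14 can be sketched as follows. The perturbation scheme is an assumption chosen for illustration: one weight at a time is scaled by a factor between 0.5 and 1.5 and the weights are then renormalized. The weights and local scores are likewise hypothetical and are not the paper's data.

```python
# Hypothetical normalized weights and local scores (higher is better).
weights = {"C1": 0.35, "C2": 0.30, "C3": 0.20, "C4": 0.15}
local = {
    "A1": {"C1": 0.50, "C2": 0.45, "C3": 0.40, "C4": 0.30},
    "A2": {"C1": 0.40, "C2": 0.50, "C3": 0.30, "C4": 0.20},
    "A3": {"C1": 0.30, "C2": 0.20, "C3": 0.45, "C4": 0.50},
}
DELTAS = (-0.5, -0.2, 0.2, 0.5)  # relative changes applied to one weight at a time


def top_alternative(local, weights):
    """Return the alternative with the highest weighted-sum score."""
    scores = {a: sum(weights[c] * s for c, s in row.items()) for a, row in local.items()}
    return max(scores, key=scores.get)


def perturb(weights, crit, delta):
    """Scale one criterion weight by (1 + delta), then renormalize to sum to 1."""
    w = dict(weights)
    w[crit] = max(0.0, w[crit] * (1 + delta))
    total = sum(w.values())
    return {c: v / total for c, v in w.items()}


baseline = top_alternative(local, weights)
results = {
    (crit, d): top_alternative(local, perturb(weights, crit, d))
    for crit in weights
    for d in DELTAS
}
stable = all(top == baseline for top in results.values())
print(baseline, stable)
```

With these hypothetical numbers, A1 stays on top under all 16 perturbations. This is the kind of stability the sensitivity analysis reports for the best-ranked architecture.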

4.5. Practical Implications

This study has significant practical implications, especially for AI-based decision-making in media sentiment analysis. By merging CPFS with the DoC method, the model provides a systematic yet flexible framework for evaluating and selecting deep learning architectures under uncertainty, imprecision, and subjectivity. This is particularly relevant to media corporations, social platforms, and policymakers who need on-the-fly sentiment analysis to provide real-time analytics, identify misinformation, and track trends in public opinion. The model lets decision-makers incorporate expert linguistic judgment into a mathematically sound ranking procedure. This makes the choice of the best-performing models, such as Transformer-XL or BERT-based hybrids, more transparent and traceable. In addition, its ability to record subtle preferences and trade-offs supports high-value, context-specific decisions that align with operational objectives and ethical considerations in AI deployment.

Although the CPF-DoCM framework is applied here to media sentiment interpretation, the framework itself is domain-independent. Its ability to handle uncertainty and integrate subjective preferences makes it suitable for other fields that involve strategic model choice. Examples include healthcare (model selection), finance (portfolio optimization), and cybersecurity (threat detection). Adapting the framework requires defining domain-specific criteria, but its computational structure remains unchanged.

5. Conclusions

This paper proposed a new decision-making model that combines DoCM with CPFS to determine the best deep learning architecture for interpreting media sentiment. By incorporating subjective expert opinions, the model accounts for the ambiguity and hesitation involved in assessing deep learning options. The co-constructive character of DoCM allowed us to elicit preferences in a nuanced manner. The CPFS framework, in turn, allowed us to handle uncertainty effectively, which is especially relevant in sentiment-sensitive domains. In the illustrative implementation, the model identified architectures that balance performance, interpretability, and complexity, showing its effectiveness in the media analysis context. The proposed approach can therefore serve as a systematic, comprehensible, and adaptable framework for guiding DL architecture choices, including in high-stakes, sentiment-based settings.

However, the assessment in this paper was limited to five deep learning models and four criteria in a specific media sentiment scenario. Although the results are encouraging, further study is required to validate the framework in broader contexts. Such study should involve more domain-specific examples, such as news, music, or product reviews, as well as additional decision criteria. Future extensions will make the framework more broadly applicable and test its robustness across a wider range of nuanced, real-world sentiment analysis problems.

Limitations and Future Directions

Despite its merits, this research has several limitations.

First, the model's effectiveness depends directly on the quality and consistency of expert input, which may be biased or contain inconsistent estimates.

Second, although CPFS handles hesitation well, it is computationally demanding in large architecture search spaces. The approach is practical for small and medium-sized decision problems but can become inefficient in large applications with many models.

Third, the model relies on static expert opinion and does not account for real-time performance updates or shifting attitudes.

Fourth, it assumes that decision-makers clearly understand acceptable levels of model performance. This may not always hold, especially when technical expertise is limited.

Finally, this is a conceptual study based on simulated (hypothetical) data that illustrates the decision-making framework. Such data allow controlled testing, but they do not support direct generalization to real-world information. Confirmatory tests on genuine review data, together with validation against benchmark datasets, are therefore needed to substantiate the soundness, external validity, and generalizability of the proposed model. Moreover, although the framework was designed with transferability in mind, empirical validation in other domains has not been addressed in this work and will be pursued in future studies.

This model might be extended to include real-time feedback from deployed DL architectures, enabling a dynamic learning-decision loop. Subjectivity could also be reduced by integrating automated meta-learning methods to complement expert judgments. Moreover, group decision-making in distributed environments would improve the model's scalability in collaborative settings. The model may also be enhanced by adopting other fuzzy extensions, such as interval-valued [26], spherical, hesitant [27], or complex fuzzy sets [28,29]. Another option is to incorporate trust and transparency mechanisms through explainability in model selection. Future work may also combine symmetry-based aggregation operators with the DoC method. Lastly, extending this hybrid framework to other NLP tasks (e.g., misinformation detection or toxic language filtering) would broaden its practical use.