Synthesizing Olfactory Understanding: Multimodal Language Models for Image–Text Smell Matching

Abstract

1. Introduction

- We propose a method to model semantic symmetry between images and texts by aligning their representations through shared olfactory references. This enables improved detection of smell-related elements in multimodal data using a multilingual ensemble of language-specific models.

- We explore the potential of fine-tuning multimodal large language models (MM-LLMs), particularly Qwen-VL-Chat, for significantly improved olfactory recognition in the MUSTI task.

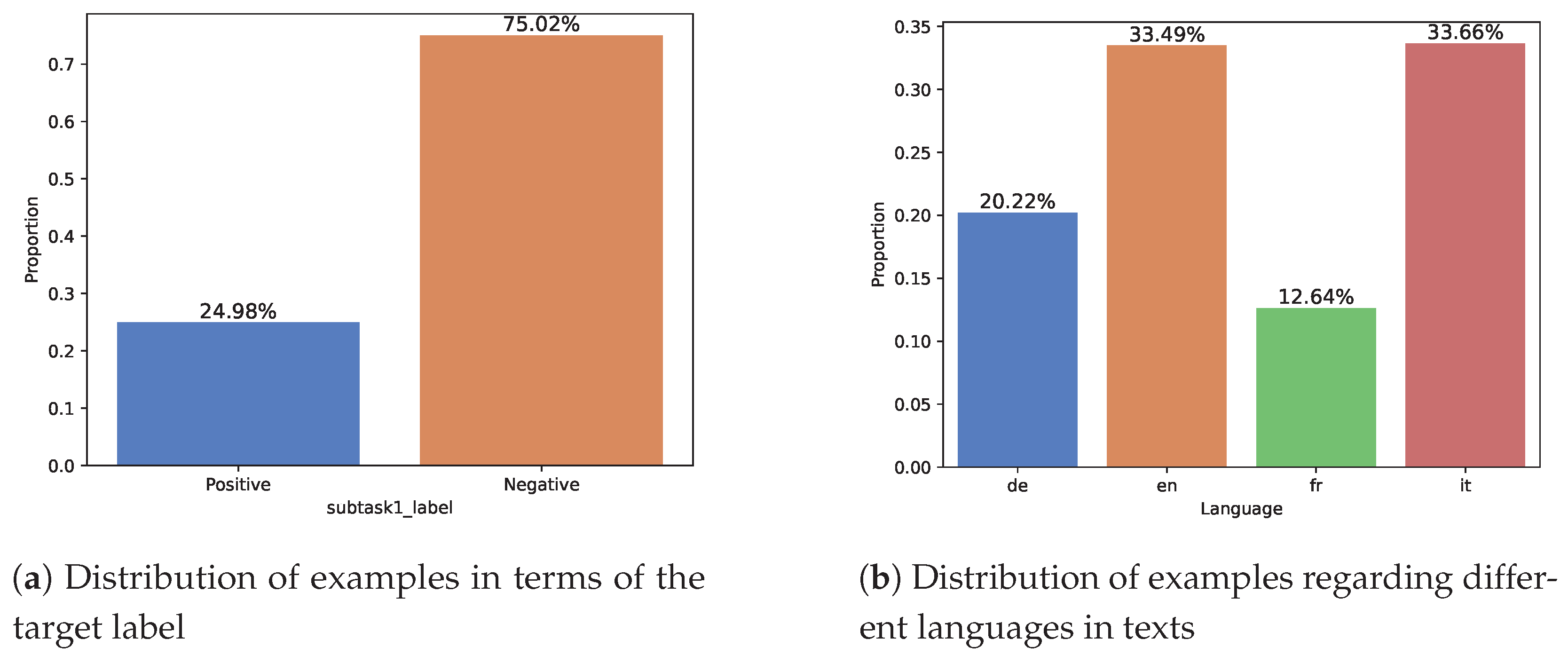

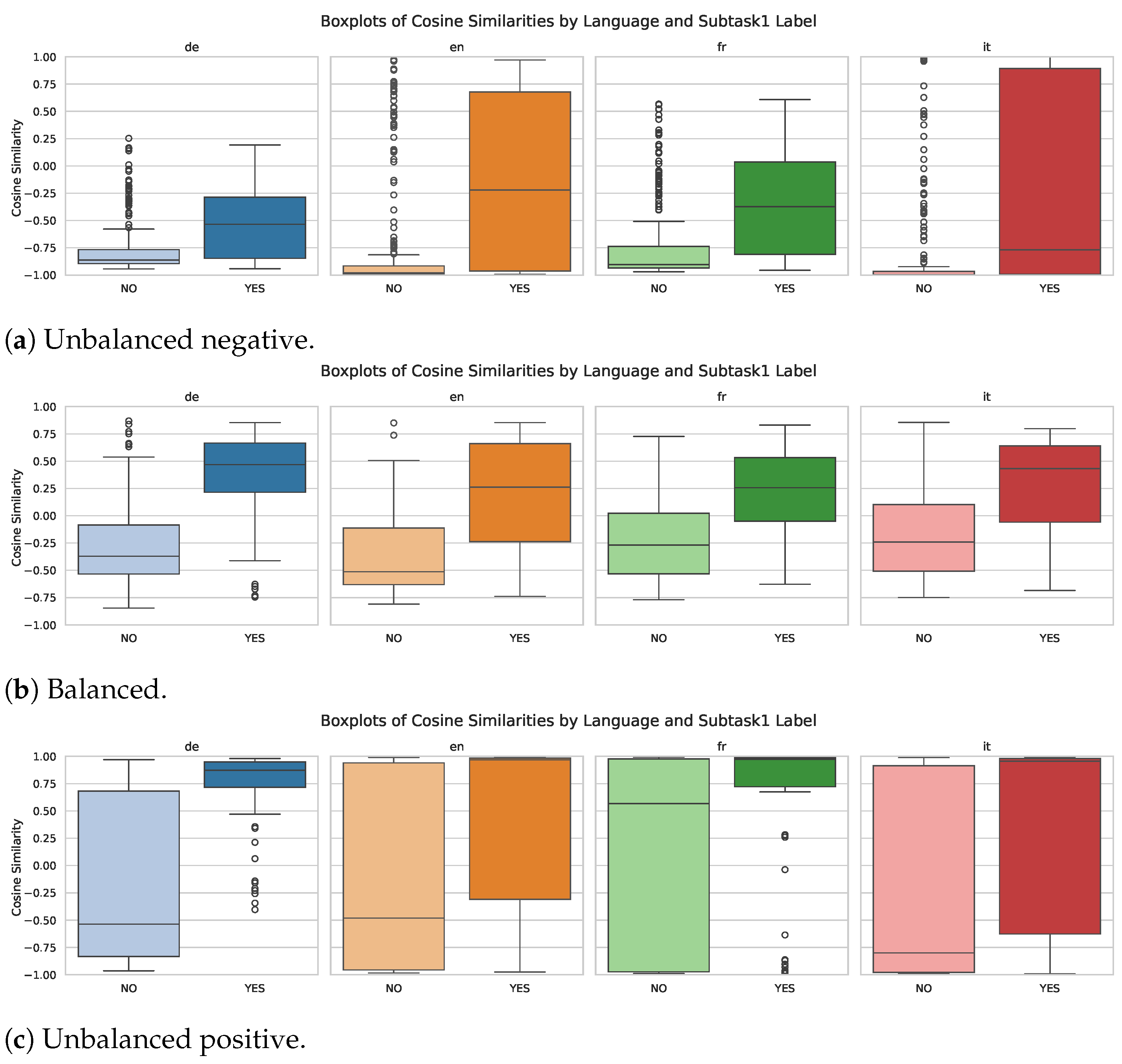

- We conduct a systematic evaluation of class imbalance mitigation strategies, including majority-class downsampling, to address the pronounced label skew in the MUSTI dataset and improve model robustness.
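A minimal sketch of majority-class downsampling, as mentioned in the last contribution, is given below. The function name, the `(sample, label)` pair representation, and the fixed random seed are illustrative assumptions rather than the authors' implementation. The class counts are taken from the dataset table (593 positives, 1781 negatives, 625 negatives in the balanced setup).

```python
import random

def downsample_majority(pairs, majority_label, target_size, seed=0):
    """Keep every minority example; randomly keep `target_size` majority examples."""
    rng = random.Random(seed)  # fixed seed is an assumption, used for reproducibility
    majority = [p for p in pairs if p[1] == majority_label]
    minority = [p for p in pairs if p[1] != majority_label]
    kept = rng.sample(majority, min(target_size, len(majority)))
    result = minority + kept
    rng.shuffle(result)
    return result

# Toy data mirroring the skewed label distribution: 593 positives, 1781 negatives.
pairs = [(f"pos-{i}", 1) for i in range(593)] + [(f"neg-{i}", 0) for i in range(1781)]
balanced = downsample_majority(pairs, majority_label=0, target_size=625)

n_pos = sum(1 for _, y in balanced if y == 1)
n_neg = sum(1 for _, y in balanced if y == 0)
print(n_pos, n_neg, len(balanced))
```

Sampling without replacement leaves the positive examples untouched, so only the label ratio changes between setups.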

2. Related Work

2.1. Multimodal Image–Text Alignment Overview

2.2. Multimodal Image–Text Alignment Based on Smell Sources

2.3. Multimodal Large Language Model Overview

2.4. Multimodal Large Language Models for Smell Identification

3. Data

4. Approach

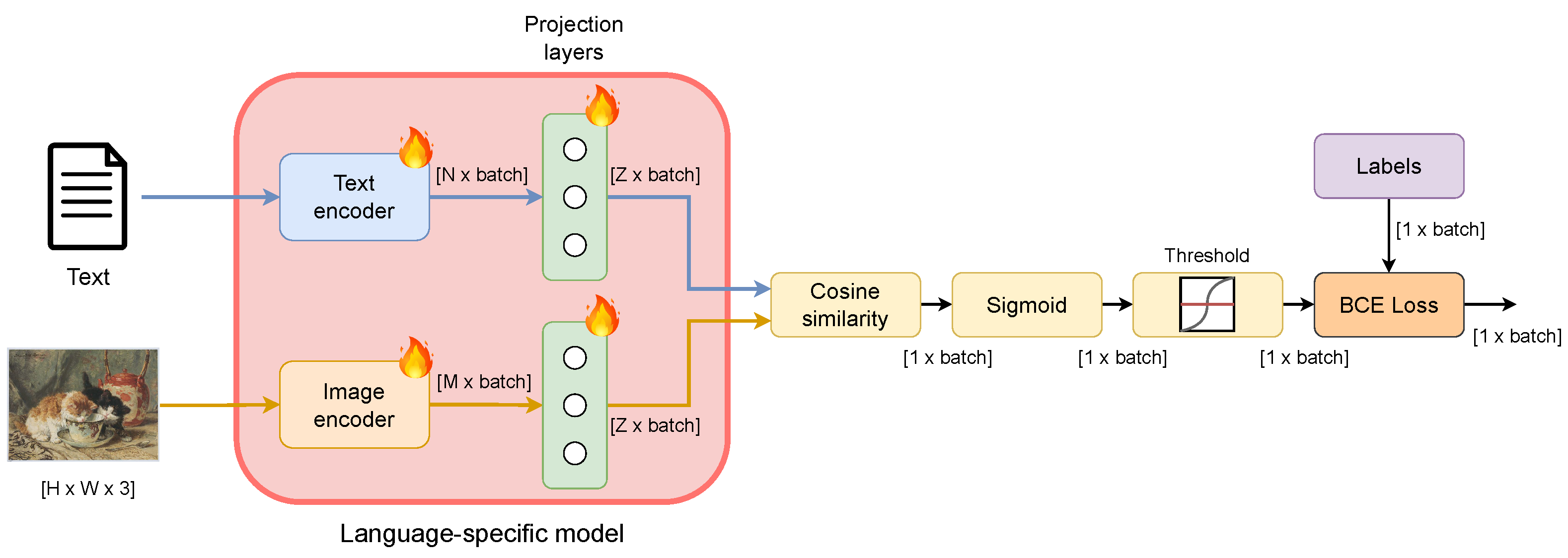

4.1. Language-Specific Models

- Imbalanced Data: Our dataset is heavily skewed toward negative examples (i.e., no matching smell). Applying the CLIP training scheme in this context would require discarding a substantial portion of the training data, approximately 75%, as it is designed to align representations between positive pairs while pushing non-matching pairs apart. As a result, negative relationships would be excluded from training, limiting the model’s ability to learn from the full dataset.

- Captioning Design Mismatch: CLIP assumes a strong correlation between images and their captions, where each caption directly describes the image or references its elements or context. This assumption does not hold in our case. Even for positive pairs, the text may not explicitly describe the smell depicted in the image: it can consist of passages that refer to olfactory concepts without directly relating to the visual content.
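The first point can be made concrete with a small sketch. It assumes the class counts of the unbalanced-negative setup from the dataset table (593 positive, 1781 negative pairs) and contrasts a positives-only contrastive scheme with a per-pair binary loss that accepts both labels. The helper names, the toy embeddings, and the `scale` factor are hypothetical and do not come from the paper.

```python
import math

def usable_fraction_contrastive(n_pos, n_neg):
    """A CLIP-style objective trains on matching pairs only, so labeled negatives are dropped."""
    return n_pos / (n_pos + n_neg)

def cosine(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)

def pair_bce_loss(img_emb, txt_emb, label, scale=5.0):
    """Binary cross-entropy on a scaled cosine similarity; defined for label 0 and label 1."""
    logit = scale * cosine(img_emb, txt_emb)  # `scale` is an illustrative assumption
    prob = 1.0 / (1.0 + math.exp(-logit))
    return -(label * math.log(prob) + (1 - label) * math.log(1.0 - prob))

kept = usable_fraction_contrastive(593, 1781)
print(f"kept: {kept:.1%}, discarded: {1 - kept:.1%}")

# The same pair of embeddings yields a low loss if labeled as a match
# and a high loss if labeled as a non-match, so negatives carry signal.
loss_if_match = pair_bce_loss([1.0, 0.0], [0.9, 0.1], label=1)
loss_if_no_match = pair_bce_loss([1.0, 0.0], [0.9, 0.1], label=0)
print(loss_if_match < loss_if_no_match)
```

With these counts, roughly a quarter of the pairs remain under the positives-only scheme, which is consistent with the approximately 75% figure quoted above.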

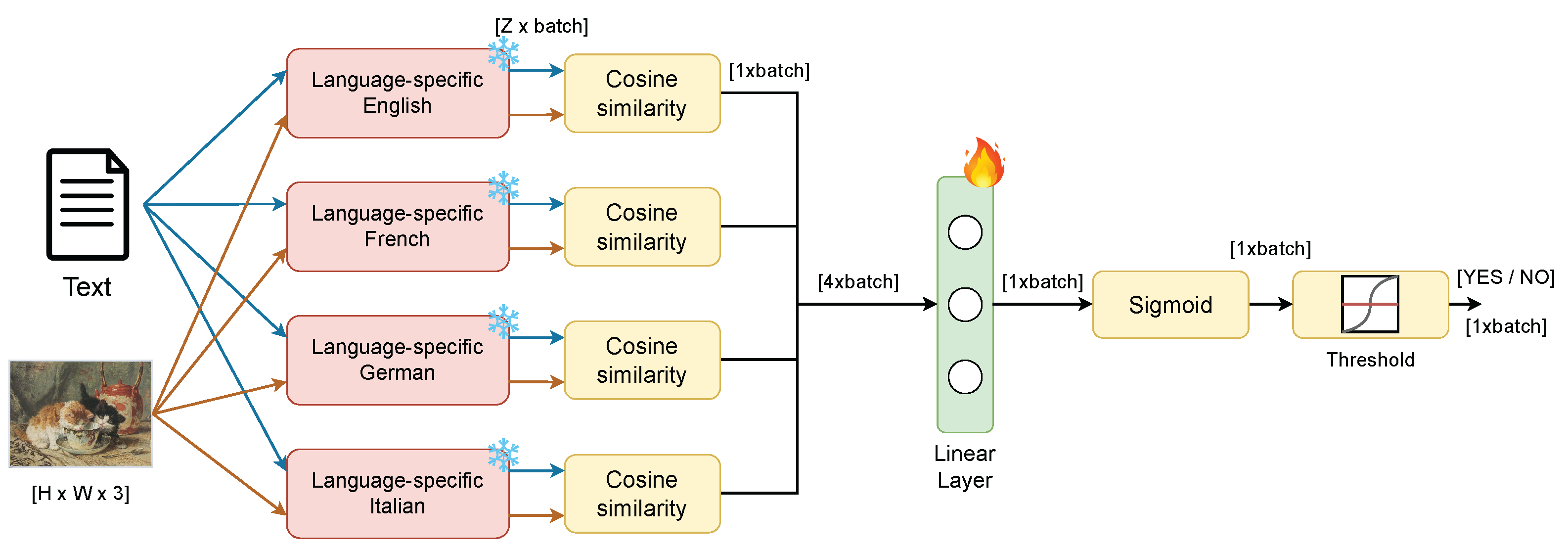

4.2. Mixture of Experts (MoE)

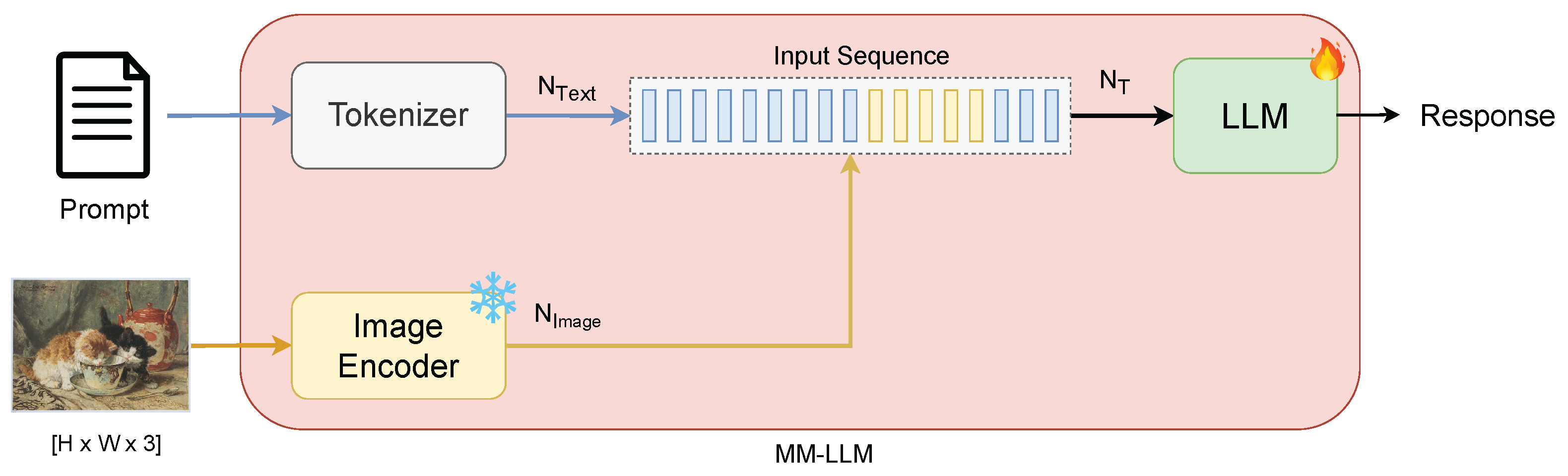

4.3. MM-LLMs as Olfactory Experts

4.3.1. Zero-Shot Evaluation

4.3.2. Fine-Tuning Qwen-VL

5. Results and Discussion

5.1. Language-Specific Models

5.1.1. Results on In-House Datasets

5.1.2. Results on Challenge Dataset

5.2. Mixture of Experts

5.3. MM-LLMs

5.3.1. Zero-Shot Performance on In-House Dataset

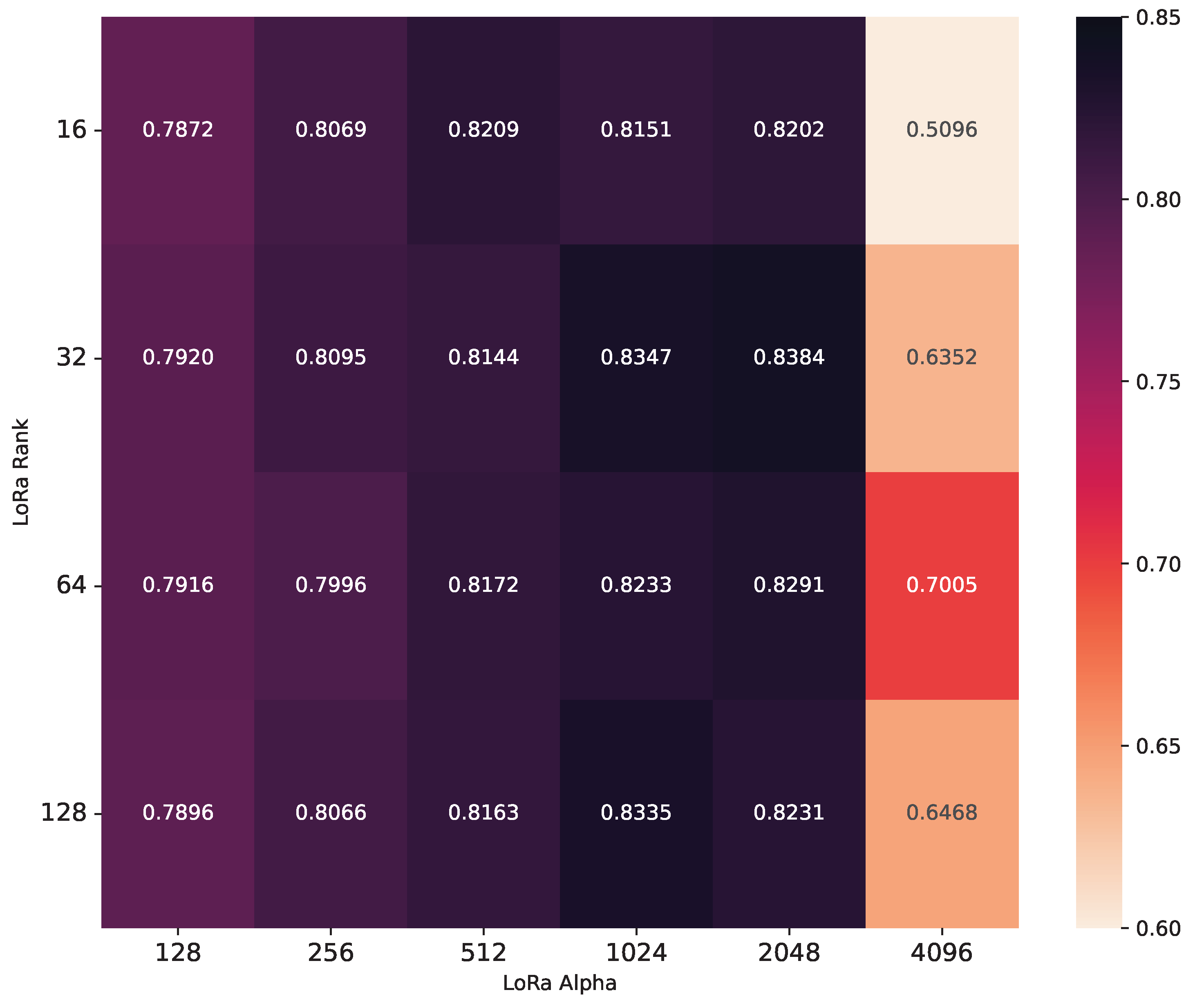

5.3.2. Fine-Tuning Qwen-VL-Chat on In-House Dataset

5.3.3. Comparison with Language-Specific Models on In-House Dataset

5.3.4. Challenge Dataset Results

6. Conclusions and Future Work

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Exp. Setup | Total | Pos. | Neg. | Test |
|---|---|---|---|---|
| Unbal. Neg. | 2374 | 593 | 1781 | 356 (15%) |
| Balanced | 1218 | 593 | 625 | 122 (10%) |
| Unbal. Pos. | 994 | 593 | 401 | 146 (15%) |

| Model | EN | FR | DE | IT |
|---|---|---|---|---|
| Parameters | 196 M | 423 M | 196 M | 196 M |

| Model | Prompt |
|---|---|
| Kosmos-2 | “<grounding>Determine if the following text and image share common elements, with a specific focus on smell sources. Look for entities such as objects, animals, fruits, or any other elements that could be potential sources of smells. Answer YES or NO. Text: <text>. Answer:” |
| Qwen-VL | “Question: Is there any object that appear in both the text and in Picture 1 with a specific focus on smell sources?. Text: <text>. Answer only YES or NO. ANSWER:” |
| Qwen-VL-Chat and Qwen-VL-Chat-Int4 | “Question: Is there any object that appear in both the text and in <img>{im_path}</img>with a specific focus on smell sources?. Text: <text>. Answer only YES or NO. ANSWER:” |
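As a rough illustration of how such a prompt could be filled in and the model reply mapped to a binary label, consider the sketch below. The template string is copied from the Qwen-VL-Chat row, with the `<text>` placeholder rewritten as `{text}` for Python formatting. The helper names `build_prompt` and `parse_answer`, and the rule of taking the first standalone YES or NO in the reply, are assumptions made for this example and are not described in the source.

```python
import re

# Template from the Qwen-VL-Chat row; wording kept verbatim, `<text>` rewritten as `{text}`.
QWEN_VL_CHAT_TEMPLATE = (
    "Question: Is there any object that appear in both the text and in "
    "<img>{im_path}</img>with a specific focus on smell sources?. "
    "Text: {text}. Answer only YES or NO. ANSWER:"
)

def build_prompt(im_path, text):
    """Fill the image path and passage into the zero-shot prompt (hypothetical helper)."""
    return QWEN_VL_CHAT_TEMPLATE.format(im_path=im_path, text=text)

def parse_answer(reply):
    """Return 1 for YES, 0 for NO, or None when the reply contains neither word."""
    match = re.search(r"\b(YES|NO)\b", reply.upper())
    if match is None:
        return None
    return 1 if match.group(1) == "YES" else 0

prompt = build_prompt("images/still_life.jpg", "The scent of roses filled the room")
print(prompt)
print(parse_answer("Yes, both mention roses."))
```

Returning `None` for unparseable replies keeps malformed generations visible instead of silently counting them as one of the two classes.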

| Experiment Setup | Threshold |
|---|---|
| Unbalanced Negative | 0.3 |
| Balanced | 0.5 |
| Unbalanced Positive | 0.6 |
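The thresholds above could be applied to model outputs along the following lines. This is a sketch under two assumptions that the table itself does not state: that the model emits a probability for the positive (YES) class, and that a score equal to the threshold counts as positive. The names `THRESHOLDS` and `predict_labels` are illustrative.

```python
# Decision thresholds per experimental setup, taken from the table above.
THRESHOLDS = {
    "Unbalanced Negative": 0.3,
    "Balanced": 0.5,
    "Unbalanced Positive": 0.6,
}

def predict_labels(probabilities, setup):
    """Map positive-class probabilities to YES/NO using the setup's threshold."""
    threshold = THRESHOLDS[setup]
    return ["YES" if p >= threshold else "NO" for p in probabilities]

scores = [0.2, 0.35, 0.55, 0.7]
for setup in THRESHOLDS:
    print(setup, predict_labels(scores, setup))
```

A lower threshold in the negative-heavy setup makes the classifier more willing to predict the rarer YES class, and a higher one in the positive-heavy setup does the opposite.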

| Experiment | Hyperparameters |
|---|---|
| 5-fold Cross-Validation (CV) for our in-house experimental datasets (see Table 6) | Batch size: 16 |
| | Learning rate: |
| | Early stopping: 10 epochs |
| | Early stop improvement: 0.005 |
| | Max epochs: 100 |
| MUSTI Challenge official setup (see Table 7, Table 8 and Table 9) | Batch size: 16 |
| | Learning rate: |
| | Early stopping: 10 epochs |
| | Early stop improvement: 0.005 |
| | Max epochs: 15 |
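The early-stopping settings in the table (10 epochs, minimum improvement 0.005, epoch cap) can be read as the following loop. This is a sketch, not the authors' training code: it assumes the monitored quantity is a validation score where higher is better and that the improvement is measured as an absolute difference. The class and function names are hypothetical, and the validation scores are made up for the demonstration.

```python
class EarlyStopping:
    """Stop when the score has not improved by `min_improvement` for `patience` epochs."""

    def __init__(self, patience=10, min_improvement=0.005):
        self.patience = patience
        self.min_improvement = min_improvement
        self.best = None
        self.stale_epochs = 0

    def step(self, score):
        """Record one validation score (higher is better); return True to stop."""
        if self.best is None or score > self.best + self.min_improvement:
            self.best = score
            self.stale_epochs = 0
        else:
            self.stale_epochs += 1
        return self.stale_epochs >= self.patience

def run(val_scores, max_epochs=100):
    """Iterate over precomputed validation scores, honoring the epoch cap."""
    stopper = EarlyStopping()
    for epoch, score in enumerate(val_scores[:max_epochs], start=1):
        if stopper.step(score):
            return epoch, stopper.best
    return min(len(val_scores), max_epochs), stopper.best

# Invented scores: three real improvements, then a plateau below the 0.005 margin.
scores = [0.50, 0.60, 0.65] + [0.651] * 20
stopped_at, best = run(scores)
print(stopped_at, best)

# With the 15-epoch cap of the challenge setup, the cap can end training first.
capped_at, _ = run([0.5 + 0.01 * i for i in range(30)], max_epochs=15)
print(capped_at)
```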

| Exp. Setup | English | French | German | Italian | Average | MoE |
|---|---|---|---|---|---|---|
| Unbal. Neg. | 0.6532 | 0.7031 | 0.6814 | 0.6311 | 0.6672 | 0.6226 |
| Balanced | 0.6889 | 0.7253 | 0.7015 | 0.6504 | 0.6915 | 0.6490 |
| Unbal. Pos. | 0.7043 | 0.6873 | 0.7125 | 0.6542 | 0.6895 | 0.5950 |

| Class | Metric | EN | FR | DE | IT | Avg. | MoE |
|---|---|---|---|---|---|---|---|
| NO | Precision | 0.7589 | 0.7694 | 0.7880 | 0.7705 | 0.7717 | 0.6832 |
| NO | Recall | 0.7657 | 0.7818 | 0.5850 | 0.6064 | 0.6847 | 0.8640 |
| NO | F1-score | 0.7622 | 0.7755 | 0.6715 | 0.6787 | 0.7220 | 0.7630 |
| YES | Precision | 0.4739 | 0.5020 | 0.4171 | 0.4102 | 0.4508 | 0.2830 |
| YES | Recall | 0.4646 | 0.4843 | 0.6535 | 0.6024 | 0.5512 | 0.1180 |
| YES | F1-score | 0.4692 | 0.4930 | 0.5092 | 0.4880 | 0.4899 | 0.1667 |
| Overall | Macro F1 | 0.6157 | 0.6342 | 0.5903 | 0.5834 | 0.6059 | 0.4648 |
| Overall | Weighted F1 | 0.6707 | 0.6872 | 0.6208 | 0.6191 | 0.6495 | 0.5767 |
| Overall | Accuracy | 0.6716 | 0.6888 | 0.6064 | 0.6052 | 0.6430 | 0.6310 |
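For readers who want to check how the per-class and macro scores in this table relate, the sketch below recomputes the EN column from its reported precision and recall values, using the standard definitions (F1 as the harmonic mean of precision and recall, macro F1 as the unweighted mean of the per-class F1 scores). Because the inputs are already rounded to four decimals, the recomputed values are expected to agree with the table only up to rounding.

```python
def f1(precision, recall):
    """Harmonic mean of precision and recall."""
    return 2 * precision * recall / (precision + recall)

def macro_f1(per_class_f1):
    """Unweighted mean of the per-class F1 scores."""
    return sum(per_class_f1) / len(per_class_f1)

# EN column: precision and recall for the NO and YES classes, as reported above.
en_no = f1(0.7589, 0.7657)
en_yes = f1(0.4739, 0.4646)
en_macro = macro_f1([en_no, en_yes])

print(f"NO F1: {en_no:.4f}, YES F1: {en_yes:.4f}, macro F1: {en_macro:.4f}")
```

The weighted F1 cannot be recomputed the same way from the table alone, since it also depends on the per-class support, which is not listed here.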

| Class | Metric | EN | FR | DE | IT | Avg. | MoE |
|---|---|---|---|---|---|---|---|
| NO | Precision | 0.7288 | 0.7872 | 0.7784 | 0.7015 | 0.7490 | 0.6743 |
| NO | Recall | 0.8605 | 0.7281 | 0.7227 | 0.7317 | 0.7608 | 0.8479 |
| NO | F1-score | 0.7892 | 0.7565 | 0.7495 | 0.7163 | 0.7529 | 0.7512 |
| YES | Precision | 0.4902 | 0.4865 | 0.4728 | 0.3478 | 0.4493 | 0.2273 |
| YES | Recall | 0.2953 | 0.5669 | 0.5472 | 0.3150 | 0.4311 | 0.0984 |
| YES | F1-score | 0.3686 | 0.5232 | 0.5073 | 0.3306 | 0.4324 | 0.1374 |
| Overall | Macro F1 | 0.5789 | 0.6401 | 0.6284 | 0.5234 | 0.5927 | 0.4443 |
| Overall | Weighted F1 | 0.6578 | 0.6838 | 0.6739 | 0.5958 | 0.6528 | 0.5594 |
| Overall | Accuracy | 0.6839 | 0.6777 | 0.6679 | 0.6015 | 0.6578 | 0.6138 |

| Class | Metric | EN | FR | DE | IT | Avg. | MoE |
|---|---|---|---|---|---|---|---|
| NO | Precision | 0.7235 | 0.7203 | 0.8218 | 0.7438 | 0.7524 | 0.6850 |
| NO | Recall | 0.5617 | 0.3363 | 0.5939 | 0.5403 | 0.5081 | 0.3345 |
| NO | F1-score | 0.6324 | 0.4585 | 0.6895 | 0.6259 | 0.6016 | 0.4495 |
| YES | Precision | 0.3536 | 0.3279 | 0.4450 | 0.3686 | 0.3738 | 0.3111 |
| YES | Recall | 0.5276 | 0.7126 | 0.7165 | 0.5906 | 0.6118 | 0.6614 |
| YES | F1-score | 0.4234 | 0.4491 | 0.5490 | 0.4539 | 0.4689 | 0.4232 |
| Overall | Macro F1 | 0.5279 | 0.4538 | 0.6193 | 0.5399 | 0.5352 | 0.4363 |
| Overall | Weighted F1 | 0.5671 | 0.4556 | 0.6456 | 0.5722 | 0.5601 | 0.4413 |
| Overall | Accuracy | 0.5510 | 0.4539 | 0.6322 | 0.5560 | 0.5483 | 0.4367 |

| MM-LLM Strategy | Exp. Setup | Kosmos-2 | Qwen-VL | Qwen-VL-Chat-Int4 | Qwen-VL-Chat |
|---|---|---|---|---|---|
| Zero-shot | Unbal. Neg. | 0.3253 | 0.5581 | 0.4446 | 0.5661 |

| Class | Metric | Qwen-VL r = 128 = 2048 | Qwen-VL r = 16 = 2048 | Qwen-VL-Int4 r = 128 = 1024 | Qwen-VL-Int4 r = 32 = 2048 |
|---|---|---|---|---|---|
| NO | Precision | 0.7622 | 0.8308 | 0.8325 | 0.8354 |
| NO | Recall | 0.8945 | 0.8694 | 0.8980 | 0.8623 |
| NO | F1-score | 0.8230 | 0.8497 | 0.8640 | 0.8486 |
| YES | Precision | 0.6242 | 0.6798 | 0.7286 | 0.6737 |
| YES | Recall | 0.3858 | 0.6102 | 0.6024 | 0.6260 |
| YES | F1-score | 0.4769 | 0.6432 | 0.6595 | 0.6490 |
| Overall | Macro F1 | 0.6500 | 0.7464 | 0.7618 | 0.7488 |
| Overall | Weighted F1 | 0.7149 | 0.7851 | 0.8001 | 0.7862 |
| Overall | Accuracy | 0.7355 | 0.7884 | 0.8057 | 0.7884 |


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Esteban-Romero, S.; Martín-Fernández, I.; Gil-Martín, M.; Fernández-Martínez, F. Synthesizing Olfactory Understanding: Multimodal Language Models for Image–Text Smell Matching. Symmetry 2025, 17, 1349. https://doi.org/10.3390/sym17081349
