1. Introduction

Code generation has become a crucial aspect of modern software engineering, significantly enhancing productivity by automating the transformation of natural language descriptions into executable code. Recent advances in large language models (LLMs) have spurred the development of code generation methods that leverage these models’ ability to understand and synthesize code across many programming languages [1,2,3,4,5,6,7,8]. Such methods have proven effective on tasks ranging from simple syntax generation to problems that require multi-step reasoning and logic. LLM-based approaches therefore offer a promising direction for automating the coding process, making it accessible even to developers with limited programming experience. As a result, a variety of LLM-based code generation solutions have emerged, showing substantial improvements in task performance and expanding the scope of possible applications.

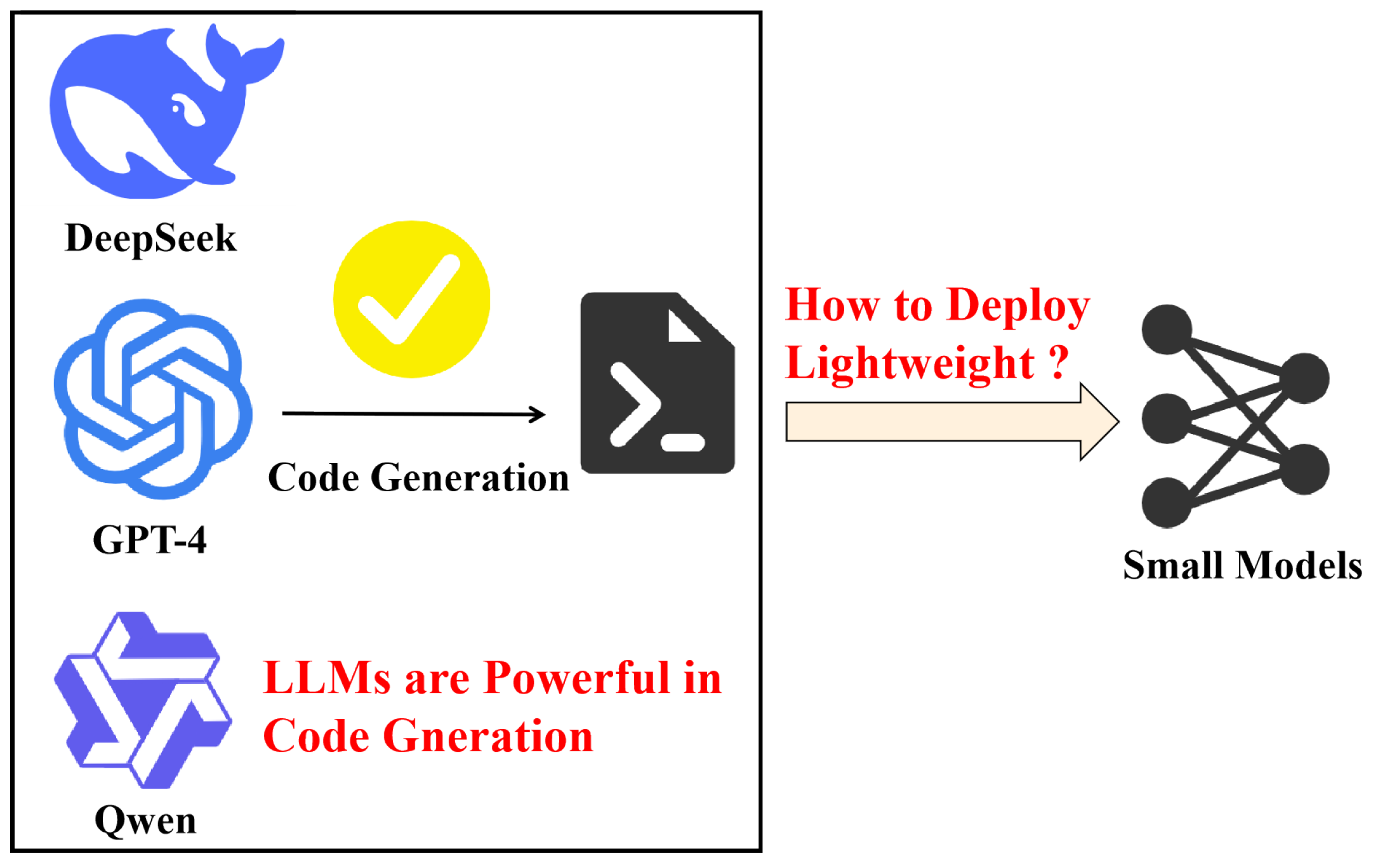

However, as shown in Figure 1, despite the impressive capabilities of LLMs in code generation, their deployment remains a significant challenge because of the enormous computational resources these models require [9,10,11]. The sheer size of modern LLMs, some with hundreds of billions of parameters [12,13,14,15], presents substantial obstacles to practical use in real-world scenarios. These models often demand vast amounts of memory and specialized infrastructure, which makes them impractical for widespread deployment, especially where resources are constrained. Consequently, deploying lightweight models that retain the high-level capabilities of LLMs while being more resource-efficient is a crucial yet overlooked area of research. Addressing this challenge involves several complementary techniques, including model compression, distillation, and efficient inference. The problem remains largely underexplored but holds significant potential to make advanced code generation practical for diverse applications, particularly in resource-limited contexts.

The motivation behind our approach is to distill the step-by-step reasoning process of LLMs into smaller, more efficient models [16,17]. The idea is to transfer not just the final outputs but also the intermediate reasoning steps, essentially the cognitive chain, that LLMs use to solve complex tasks. By capturing and transferring these steps, smaller models can learn to replicate the problem-solving strategies of larger models, enhancing their ability to understand and generate language. The distillation pipeline proceeds as follows: (1) the large model generates a series of intermediate reasoning steps; (2) these steps are converted into a form that the smaller model can learn from; (3) the smaller model is trained to mimic the large model’s reasoning process; and (4) the smaller model is evaluated on its ability to generate accurate results. This approach improves the language understanding and generation capabilities of smaller models while avoiding the computational and memory demands of deploying the LLMs themselves. Because the framework is general, it can be applied across a range of tasks, enabling efficient deployment of strong reasoning capabilities at substantially lower resource cost.

The challenge lies in transferring the sophisticated problem-solving strategies of LLMs to smaller, more efficient models without sacrificing performance. The task is further complicated by the need to maintain symmetry between the reasoning process (i.e., pseudocode generation) and the final code output. While LLMs excel at generating both the reasoning path and the final solution, smaller models must replicate the same cognitive structure, so that the intermediate steps and the final code remain aligned. Preserving this symmetry is crucial for the smaller models to retain the reasoning capabilities of the larger models despite their reduced computational and memory budgets. Achieving this balance remains an underexplored yet vital research problem.

In this paper, we propose a novel method for distilling the code generation capabilities of LLMs into smaller models through a multi-task learning framework. Our approach mirrors the reasoning process of LLMs by transferring both the intermediate thought process, i.e., pseudocode, and the final code to smaller models. This symmetry between the large and small models enables the smaller models to replicate the problem-solving strategies of their larger counterparts, preserving the balance between understanding the problem, breaking it down into pseudocode, and generating executable code. In this way, the smaller models can learn the same cognitive patterns without requiring extensive computational resources. We evaluate the framework with several teacher LLMs, including CodeLlama-7B, CodeQwen-7B, DeepSeek, and GPT-4, and demonstrate significant performance improvements on the CodeSearchNet benchmark [18]. The results show that the distillation framework enhances the performance of smaller models, as reflected in both automatic metrics and human evaluations, while maintaining efficiency, making it suitable for resource-constrained environments. In particular, distilling from larger models, especially GPT-4, yields the most substantial improvements, achieving top accuracy scores on various tasks while substantially reducing memory usage and inference time.

The contributions of this paper are as follows:

We propose a novel distillation framework that transfers the reasoning and code generation capabilities of LLMs into smaller, more efficient models by leveraging intermediate reasoning steps such as pseudocode, enabling smaller models to replicate the problem-solving strategies of larger models.

We evaluate the proposed distillation framework using several LLMs, including CodeLlama-7B, CodeQwen-7B, DeepSeek, and GPT-4, and demonstrate significant performance improvements in code generation on the CodeSearchNet benchmark, in both automatic metrics and human evaluations.

Our experiments show that distilling from larger models, especially GPT-4, yields the most substantial improvements, achieving top accuracy scores on various tasks while maintaining efficiency in terms of memory usage and inference time, making the framework suitable for deployment in resource-constrained environments.

The remainder of this paper is structured as follows.

Section 2 reviews the background and related work on neural code generation and knowledge distillation, highlighting recent advances in large language models and their limitations in deployment.

Section 3 introduces the proposed cognitive-chain distillation framework, which distills the reasoning process (e.g., problem decomposition and pseudocode planning) from LLMs into smaller code generation models.

Section 4 presents the experimental setup, evaluation metrics, benchmark datasets, baseline comparisons, and ablation studies to validate the effectiveness and efficiency of our approach.

Section 5 discusses threats to validity. Finally, Section 6 concludes the paper and outlines potential future directions for enhancing lightweight, high-performance natural-language-to-code (NL2Code) systems.

2. Background and Related Work

2.1. Chain-of-Thought Planning for Code Generation

In our proposed methodology for code generation using chain-of-thought (CoT), we introduce a two-phase process that incorporates self-planning by the large language models before generating the actual code. This process is delineated as follows:

Planning phase: This phase leverages the in-context learning capabilities of LLMs for self-planning. A prompt $C$ comprising $k$ concatenated tuples, $C = \langle x_1 \cdot y_1 \rangle \,\|\, \langle x_2 \cdot y_2 \rangle \,\|\, \cdots \,\|\, \langle x_k \cdot y_k \rangle$, is provided. In each tuple, $x_i$ represents the human intent and $y_i$ the plan, an organized schedule of subproblems abstracted and decomposed from the intent. The plan is formulated as $y_i = \langle q_i^1, q_i^2, \ldots, q_i^{n} \rangle$, where each $q_i^j$ is a subproblem. During inference, the test-time intent $x$ is appended to the prompt, forming $C \,\|\, x$, which the LLM $\mathcal{M}$ processes to generate a test-time plan $y$. Notably, $k$ is small, indicating that effective self-planning can be achieved with only a few planning demonstrations.

Implementation phase: In this phase, the test-time plan $y$ is combined with the intent $x$ as input to the model $\mathcal{M}$, which generates the final code $z$.

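The entire process can be formalized as follows:

$$P(z \mid x, C) = \sum_{\hat{y}} P(z \mid \hat{y}, x, C) \cdot P(\hat{y} \mid x, C) \approx P(z \mid y, x, C) \cdot P(y \mid x, C),$$

where the sum over all candidate plans $\hat{y}$ is approximated by the single generated plan $y$. Assuming that the code $z$ is conditionally independent of the demonstrations $C$ given $y$ and $x$, we further simplify this to

$$P(z \mid x, C) \approx P(z \mid y, x) \cdot P(y \mid x, C).$$

This factorization separates the two phases: the demonstrations are needed only to produce the plan, after which the code is generated from the plan and the intent alone.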
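To make the two phases concrete, the following Python sketch chains a planning call and an implementation call. It assumes a hypothetical `llm` callable that maps a prompt string to a completion; the `Intent:`/`Plan:`/`Code:` labels and the stub model are illustrative choices, not part of the original method.

```python
from typing import Callable, Sequence, Tuple

# Any text-completion function: prompt string -> completion string (hypothetical interface).
LLM = Callable[[str], str]


def build_planning_prompt(demos: Sequence[Tuple[str, str]], intent: str) -> str:
    """Form C || x: k (intent, plan) demonstrations followed by the test-time intent."""
    blocks = [f"Intent: {x}\nPlan: {y}" for x, y in demos]
    blocks.append(f"Intent: {intent}\nPlan:")
    return "\n\n".join(blocks)


def self_planning_generate(llm: LLM, demos: Sequence[Tuple[str, str]], intent: str) -> Tuple[str, str]:
    # Planning phase: obtain a plan y from P(y | x, C).
    plan = llm(build_planning_prompt(demos, intent)).strip()
    # Implementation phase: generate code z from P(z | y, x). The demonstrations C
    # are omitted here, mirroring the conditional-independence simplification.
    code = llm(f"Intent: {intent}\nPlan: {plan}\nCode:").strip()
    return plan, code


def stub_llm(prompt: str) -> str:
    """Deterministic stand-in for a real model, used only to exercise the pipeline."""
    if prompt.endswith("Plan:"):
        return " 1. Iterate over the list. 2. Accumulate the sum."
    return " def total(xs):\n    return sum(xs)"


plan, code = self_planning_generate(stub_llm, [("add two numbers", "1. Return a + b.")], "sum a list")
print(plan)
print(code)
```

Dropping the demonstrations from the second prompt is the practical counterpart of the conditional-independence assumption; keeping them would correspond to modeling $P(z \mid y, x, C)$ instead.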

2.2. Chain-of-Thought Reasoning for LLMs

Chain-of-thought reasoning has emerged as a critical method for enhancing the problem-solving capabilities of LLMs, particularly on complex tasks requiring multi-step reasoning [2,19,20,21,22]. Several studies have explored the power of CoT and its theoretical foundations, shedding light on its efficacy and potential mechanisms. Wei et al. explored chain-of-thought prompting to enhance LLMs’ reasoning abilities, showing that generating a series of intermediate reasoning steps significantly improves performance on arithmetic, commonsense, and symbolic reasoning tasks. Their empirical results demonstrated that CoT prompting with only a few exemplars achieved state-of-the-art accuracy on math word problem benchmarks, even surpassing fine-tuned models [19]. Feng et al. took a theoretical approach, examining the expressivity of LLMs with CoT in solving mathematical and decision-making problems. Using circuit complexity theory, they showed that LLMs with CoT reasoning can handle decision-making problems and solve tasks such as dynamic programming, which helps explain their power in tackling complex real-world challenges [20]. Xia et al. extended the CoT paradigm by surveying a range of chain-of-X (CoX) methods inspired by the sequential structure of CoT. Their survey categorizes CoX techniques used to improve reasoning across diverse domains and tasks, offering a comprehensive resource for researchers interested in applying CoT to broader scenarios [21]. Zhang et al. introduced chain of preference optimization (CPO), which fine-tunes LLMs using search trees from the tree-of-thought (ToT) approach to optimize CoT reasoning paths. Their experiments showed that CPO improved LLM performance on complex problem-solving tasks such as question answering, arithmetic reasoning, and fact verification, without the heavy computational burden of ToT [22].

Together, these studies highlight the evolution of CoT as a powerful tool in LLM-based reasoning tasks. From practical prompting techniques to theoretical insights and optimization strategies, these works contribute to our understanding of how LLMs process complex reasoning tasks and provide potential pathways for further improving these models.

2.3. Knowledge Distillation of LLMs

Knowledge distillation (KD) has become a crucial technique in the LLM era, enabling the transfer of knowledge from larger, more complex models to smaller, more efficient ones [23,24,25,26,27,28,29]. Various studies have explored different approaches to and applications of KD in LLMs, focusing on model compression, efficiency improvements, and the mitigation of limitations such as hallucinations. Yang et al. introduced LLM-NEO, a parameter-efficient knowledge distillation method that integrates low-rank adaptation (LoRA) with KD to improve knowledge transfer efficiency. Their approach performs well when compressing models such as Llama 2 and Llama 3.2 and offers insights into hyperparameter optimization and the robustness of LoRA in KD applications [23]. Song et al. explored privacy-preserving data augmentation using KD, focusing on medical text classification. Their method combines differential privacy (DP) with KD to generate pseudo samples while maintaining privacy guarantees: LLMs act as teachers, and student models are trained to select private data samples with calibrated noise, protecting privacy while improving the classification model’s performance [30]. Li et al. proposed prompt distillation (POD) to improve the efficiency of LLM-based recommendation systems. Their work distills discrete prompts into continuous vectors, making LLM training more efficient; although the method improved training efficiency, it also highlighted the need for further advances in inference efficiency for recommendation tasks [25]. Xu et al. provided a comprehensive survey of knowledge distillation for LLMs, emphasizing its role in transferring knowledge to open-source models such as LLaMA and Mistral. The survey discusses KD mechanisms, the integration of KD with data augmentation (DA), and the potential for the self-improvement of open-source models, laying a foundation for understanding how KD can narrow the gap between proprietary and open-source models while enhancing their contextual understanding and ethical alignment [31]. McDonald et al. focused on reducing hallucinations in LLMs through knowledge distillation. They demonstrated that transferring knowledge from high-capacity teacher models to smaller student models can significantly reduce hallucination rates while improving the accuracy of generated responses; on the MMLU benchmark, their method showed substantial improvements in robustness and accuracy, suggesting that KD can be an effective tool for refining LLMs in real-world applications [32]. Further enhancing distillation robustness may require causal feature separation to improve out-of-distribution generalization [33], especially when confounded instruments (e.g., biased training data or prompts) affect the student model’s causal inference [34]. Additionally, modeling time-varying dependencies in the distillation process through state-space counterfactual prediction [35] could help adapt to dynamic knowledge shifts between teacher and student models.

In summary, knowledge distillation has become a critical area of LLM research, with applications ranging from privacy preservation, recommendation efficiency, and model robustness to hallucination reduction. These studies highlight the diversity of KD applications and point toward further research on optimizing knowledge transfer to make LLM-derived models more efficient and reliable across domains.

Table 1 provides a categorized summary of existing studies on chain-of-thought reasoning and knowledge distillation for large language models, highlighting their key contributions and limitations. This comparison reveals that prior work either focuses on prompting strategies or general-purpose distillation, without addressing the integration of reasoning processes into distilled models. In contrast, our work uniquely combines CoT reasoning with knowledge distillation, targeting enhanced performance in code generation tasks with smaller models.

3. Methodology

3.1. Overall Architecture

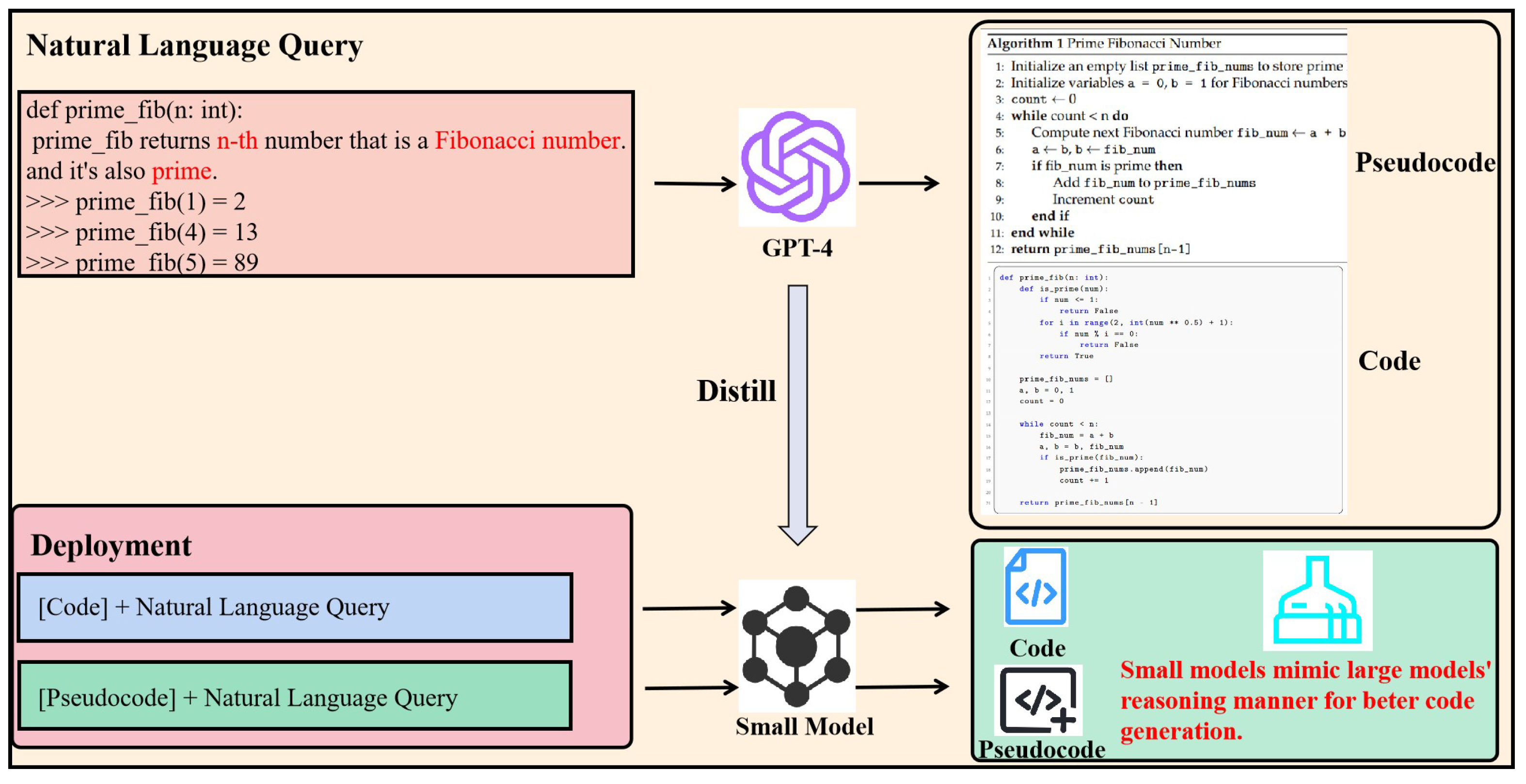

The proposed method distills the cognitive process of LLMs into smaller models for code generation tasks, focusing specifically on the transfer of intermediate reasoning steps such as pseudocode. The motivation is to improve the performance of smaller models by enabling them to mimic the problem-solving strategies of larger, more powerful models, thereby enhancing their ability to generate high-quality code. The method uses a multi-task learning framework in which the smaller model is trained to perform two tasks simultaneously: (1) generating pseudocode and (2) generating the final code. This dual-task setup enables the model to learn both the reasoning path (pseudocode) and the end result (code), mirroring the plan-then-implement process of the larger models.

As shown in Figure 2, the overall pipeline begins with the large model generating both the pseudocode and the final code. The pseudocode, representing the logical steps taken by the large model, serves as an intermediate representation. The distillation process then trains the smaller model to replicate both pseudocode generation and final code generation, capturing the larger model’s reasoning process while simultaneously learning to produce the desired code outputs.

3.2. Pseudocode Extraction

To extract the reasoning process (pseudocode) from a large model during code generation, we leverage in-context learning. This method capitalizes on the model’s ability to utilize the surrounding context provided in the prompt to generate intermediate reasoning steps, such as pseudocode, before producing the final code. The underlying idea is that by conditioning the model on a well-structured prompt that encourages it to break down the problem into logical steps, the model will naturally generate the reasoning steps necessary for solving the task.

The process begins with a carefully designed prompt that not only provides the input query but also encourages the model to generate intermediate steps that clarify the approach to solving the problem. The prompt is structured as follows:

Problem:

Pseudocode:

1. [First reasoning step]

2. [Second reasoning step]

3. [Additional reasoning steps]

4. […]

Code:

Explanation:

This structure guides the model to first generate intermediate reasoning steps (i.e., pseudocode) from the given problem description. The Pseudocode section lets the model break the problem down into smaller, logically coherent steps, which it then uses to generate the final Code section. Laying out these high-level steps first encourages the model to work out the flow and logic of the task before proceeding to actual code generation.

In this prompt structure, LLMs are explicitly instructed to generate pseudocode first. This pseudocode typically includes high-level steps such as initializing variables, performing loops, and checking conditions, which are essential for solving the problem. Conditioning the model on this structure encourages it to produce the necessary intermediate reasoning before generating the final code.

This approach mimics how human developers often approach programming: first by planning out the steps (pseudocode), followed by the actual implementation (code). By embedding this structure into the prompt, we harness the model’s contextual understanding, enabling it to reason more effectively and generate high-quality code based on its learned thought process.
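A minimal sketch of how the teacher prompt could be assembled and how the teacher’s completion could be split back into pseudocode and code. The helper names, the few-shot demonstration format, and the assumption that the first `Code:` marker separates the two sections are all hypothetical; the parsing procedure is not specified in the text.

```python
from typing import List, Sequence, Tuple

# (problem, pseudocode, code) demonstrations for in-context learning; contents are hypothetical.
Demo = Tuple[str, str, str]


def build_extraction_prompt(demos: Sequence[Demo], problem: str) -> str:
    """Few-shot prompt following the Problem / Pseudocode / Code structure."""
    parts = [f"Problem: {p}\nPseudocode:\n{ps}\nCode:\n{c}" for p, ps, c in demos]
    # The test problem ends right after "Pseudocode:" so the model writes the steps first.
    parts.append(f"Problem: {problem}\nPseudocode:\n")
    return "\n\n".join(parts)


def parse_completion(completion: str) -> Tuple[List[str], str]:
    """Split the teacher's continuation into pseudocode steps and the final code.

    Assumes the first occurrence of "Code:" marks the start of the code section.
    """
    pseudo, sep, code = completion.partition("Code:")
    if not sep:
        raise ValueError("completion has no 'Code:' section")
    steps = [line.strip() for line in pseudo.splitlines() if line.strip()]
    return steps, code.strip()


steps, code = parse_completion(
    "1. Set total to 0\n2. Add each item to total\nCode:\ndef total(xs):\n    return sum(xs)\n"
)
print(steps)
print(code)
```

The extracted `steps` and `code` would then serve as the teacher’s pseudocode and code targets for the student.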

3.3. Multi-Task Learning Framework

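To learn pseudocode generation and code generation jointly, we formulate the multi-task learning framework as follows. Let $\mathcal{L}_{\text{pseudo}}$ be the loss function for generating pseudocode and $\mathcal{L}_{\text{code}}$ be the loss function for generating the final code. The total loss for the multi-task learning objective is

$$\mathcal{L}_{\text{total}} = \lambda_1 \mathcal{L}_{\text{pseudo}} + \lambda_2 \mathcal{L}_{\text{code}},$$

where $\lambda_1$ and $\lambda_2$ are hyperparameters that control the relative importance of the two tasks. These losses are computed from the difference between the model’s predicted outputs and the ground-truth pseudocode or code. Specifically, for a given input query $x$, the model generates pseudocode $\hat{p}$ and code $\hat{c}$, and the respective losses are computed as

$$\mathcal{L}_{\text{pseudo}} = \sum_{t=1}^{|p|} \mathrm{CE}(p_t, \hat{p}_t), \qquad \mathcal{L}_{\text{code}} = \sum_{t=1}^{|c|} \mathrm{CE}(c_t, \hat{c}_t),$$

where $p_t$ and $c_t$ are the true pseudocode and code tokens, respectively, $\hat{p}_t$ and $\hat{c}_t$ are the predicted tokens (more precisely, the predicted token distributions at position $t$), and $\mathrm{CE}$ denotes the cross-entropy. By optimizing this combined objective, the model learns to generate accurate pseudocode and final code simultaneously, mimicking the cognitive process of larger models.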
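The objective can be checked numerically with a small pure-Python sketch. It takes per-position logits, computes the log-softmax cross-entropy summed over token positions, and combines the two task losses with weights $\lambda_1$ and $\lambda_2$. The default of 0.5 for both matches the setting reported in Section 4.1; in an actual training setup, a framework loss such as PyTorch’s cross-entropy (which averages rather than sums by default) would replace these helpers.

```python
import math
from typing import Sequence


def token_cross_entropy(logits: Sequence[Sequence[float]], targets: Sequence[int]) -> float:
    """Sum over positions t of CE(y_t, y_hat_t) = -log softmax(logits_t)[y_t]."""
    if len(logits) != len(targets):
        raise ValueError("need one logit row per target token")
    total = 0.0
    for row, y in zip(logits, targets):
        m = max(row)  # subtract the max before exponentiating, for numerical stability
        log_partition = m + math.log(sum(math.exp(v - m) for v in row))
        total += log_partition - row[y]
    return total


def multitask_loss(pseudo_logits, pseudo_targets, code_logits, code_targets,
                   lam1: float = 0.5, lam2: float = 0.5) -> float:
    """L_total = lam1 * L_pseudo + lam2 * L_code."""
    l_pseudo = token_cross_entropy(pseudo_logits, pseudo_targets)
    l_code = token_cross_entropy(code_logits, code_targets)
    return lam1 * l_pseudo + lam2 * l_code


uniform = [0.0, 0.0, 0.0, 0.0]  # vocabulary of 4 tokens, no preference
loss = multitask_loss([uniform], [1], [uniform, uniform], [0, 3])
print(loss)  # approximately 1.5 * log(4)
```

With uniform logits every token costs $\log 4$, so one pseudocode token and two code tokens give $0.5 \log 4 + 0.5 \cdot 2 \log 4 = 1.5 \log 4$.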

This approach trains the smaller model not only to produce correct final outputs but also to reproduce the reasoning process that leads to them, which is intended to improve its ability to solve complex programming tasks. Furthermore, the dual training process makes the smaller model attend to both the intermediate reasoning and the final goal, leading to a more robust understanding of code generation as a whole.

3.4. Distillation of Cognitive Process

In our approach, we utilize different masks to trigger the generation of various components, [pseudocode] or [code], within the model. The mask serves as a mechanism to guide the model in generating specific parts of the solution, based on the task at hand. For instance, when the mask is applied to prompt the model for pseudocode generation, the model focuses on producing intermediate reasoning steps that break down the problem. Conversely, when the mask is applied to trigger code generation, the model generates the final executable code based on the previously generated pseudocode. This masked conditioning helps the model distinguish between generating high-level logical steps (pseudocode) and the final solution (code), ensuring that both components are learned and generated efficiently in the multi-task learning framework.
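One plausible way to realize these masks is to prefix each training input with a task tag, so that a single sequence-to-sequence student learns both tasks from the same sample. The sketch below is an assumption about the data format: the tag strings, the `Pseudocode:` separator, and feeding the teacher’s pseudocode into the code task are hypothetical choices, not the paper’s exact implementation.

```python
from typing import List, Tuple

# Hypothetical task tags standing in for the "[pseudocode]" and "[code]" masks.
PSEUDO_TAG = "[pseudocode]"
CODE_TAG = "[code]"


def make_multitask_examples(query: str, pseudocode: str, code: str) -> List[Tuple[str, str]]:
    """Expand one teacher-labelled sample into two (source, target) training pairs."""
    return [
        # Task 1: the tag asks the student for the intermediate reasoning steps.
        (f"{PSEUDO_TAG} {query}", pseudocode),
        # Task 2: the tag asks for code; the pseudocode is placed in the input so the
        # code is generated from it (here the teacher's pseudocode, i.e. teacher forcing).
        (f"{CODE_TAG} {query}\nPseudocode:\n{pseudocode}", code),
    ]


for source, target in make_multitask_examples(
        "Return the sum of a list",
        "1. Iterate over xs\n2. Accumulate the sum",
        "def total(xs):\n    return sum(xs)"):
    print(repr(source), "->", repr(target))
```

At inference time, the same student can be queried with the pseudocode tag first and then with the code tag, using its own generated pseudocode in place of the teacher’s.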

The distillation of the cognitive process is essential for transferring the problem-solving strategies from the large model to the smaller model. The larger model generates both pseudocode and the corresponding code for a given problem, serving as the “teacher” in the distillation framework. The smaller model, acting as the “student,” learns to generate both pseudocode and code by mimicking the teacher’s reasoning process.

The key idea behind this process is that intermediate steps—such as pseudocode generation—are critical for understanding how to approach and solve a coding problem. By training the smaller model to generate these intermediate steps, the model can better grasp the logical flow of a solution, leading to more accurate and efficient code generation. Through multi-task learning, the model is not only trained to generate the final code but also to follow the reasoning path, which enhances its language understanding and coding proficiency.

This distillation approach effectively captures the cognitive chain of LLMs and applies it to smaller models, making advanced code generation techniques more accessible for deployment in environments with limited computational resources. The use of pseudocode as an intermediate step ensures that the reasoning process is transferred, allowing smaller models to perform at a higher level despite their reduced size.

Algorithm 1 outlines the distillation framework for transferring the cognitive reasoning of LLMs to smaller models in code generation tasks. In each iteration, the large teacher model generates both pseudocode and final code for a given task, which serves as ground truth for training the student model. The student model is simultaneously trained to generate both pseudocode and final code, with the loss function combining the errors in pseudocode and code generation. The total loss is a weighted sum of the CrossEntropy losses for both tasks, and the student model is optimized through backpropagation. This process enables the smaller model to learn both the intermediate reasoning steps and the final code generation, effectively transferring the problem-solving capabilities of the larger model while reducing computational and memory requirements.

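Algorithm 1 Distillation of cognitive process from large to small models

1: Input: Teacher model $\mathcal{T}$, student model $\mathcal{S}$, training data $\mathcal{D}$, hyperparameters $\lambda_1, \lambda_2$

2: for each task $x$ in $\mathcal{D}$ do

3: Step 1: Pseudocode generation

4: $p \leftarrow \mathcal{T}(x)$

5: Step 2: Code generation

6: $c \leftarrow \mathcal{T}(x, p)$

7: Step 3: Student model output

8: $\hat{p} \leftarrow \mathcal{S}(x, \text{[pseudocode]})$

9: $\hat{c} \leftarrow \mathcal{S}(x, \text{[code]})$

10: Step 4: Loss calculation

11: $\mathcal{L}_{\text{pseudo}} \leftarrow \mathrm{CrossEntropy}(p, \hat{p})$

12: $\mathcal{L}_{\text{code}} \leftarrow \mathrm{CrossEntropy}(c, \hat{c})$

13: $\mathcal{L}_{\text{total}} \leftarrow \lambda_1 \mathcal{L}_{\text{pseudo}} + \lambda_2 \mathcal{L}_{\text{code}}$

14: Step 5: Optimization

15: Update $\mathcal{S}$ by backpropagating $\mathcal{L}_{\text{total}}$

16: end for

17: Output: Trained student model $\mathcal{S}$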
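Algorithm 1 can be expressed as a framework-agnostic Python loop in which the teacher, the student loss, and the optimizer update are injected as callables. This is a sketch under stated assumptions: in a PyTorch setup, `student_loss` would return a loss tensor and `optimizer_step` would call `backward()` followed by the optimizer’s `step()`; the tag strings and the stub components are hypothetical.

```python
from typing import Any, Callable, Iterable, List


def distill(
    tasks: Iterable[str],
    teacher_pseudocode: Callable[[str], str],    # Step 1: p = T(x)
    teacher_code: Callable[[str, str], str],     # Step 2: c = T(x, p)
    student_loss: Callable[[str, str], Any],     # CE of the student's output on (tagged input, target)
    optimizer_step: Callable[[Any], None],       # Step 5: backpropagate the loss and update S
    lam1: float = 0.5,
    lam2: float = 0.5,
) -> List[Any]:
    """Framework-agnostic loop mirroring Algorithm 1; returns the per-task total losses."""
    history = []
    for x in tasks:
        p = teacher_pseudocode(x)
        c = teacher_code(x, p)
        # Steps 3-4: student predictions under each task tag, scored against the teacher.
        l_pseudo = student_loss(f"[pseudocode] {x}", p)
        l_code = student_loss(f"[code] {x}\nPseudocode:\n{p}", c)
        total = lam1 * l_pseudo + lam2 * l_code
        optimizer_step(total)
        history.append(total)
    return history


# Toy run with stub components: the "loss" is just the target length.
updates = []
losses = distill(["a", "b"], lambda x: "P" + x, lambda x, p: "C" + x,
                 lambda src, tgt: float(len(tgt)), updates.append)
print(losses)
```

Because the teacher’s outputs do not depend on the student, a practical variant generates them once and caches them, rather than querying the teacher inside every training iteration as the loop literally does.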

4. Experiment Results

4.1. Experimental Settings

All experiments were conducted on a server equipped with 8 NVIDIA Tesla V100 GPUs (32 GB memory per GPU), running a Linux-based environment with CUDA 11.2 and cuDNN 8.2. The teacher models, CodeLlama-7B and CodeQwen-7B, were pre-trained on large-scale code datasets, while the student model, CodeT5-base (220 M parameters), was selected for its efficiency in resource-constrained environments. We used the CodeSearchNet dataset, which was pre-processed and split into training, validation, and test sets with an 80–10–10 ratio.
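The 80–10–10 split can be reproduced with a seeded shuffle. This is a hedged sketch: how examples were shuffled, which seed was used, and whether splitting was done per language or per repository are not stated, so those choices are assumptions here. Note that CodeSearchNet also ships with its own predefined train, validation, and test partitions.

```python
import random
from typing import List, Sequence, Tuple, TypeVar

T = TypeVar("T")


def split_80_10_10(items: Sequence[T], seed: int = 0) -> Tuple[List[T], List[T], List[T]]:
    """Shuffle deterministically, then cut into train/validation/test at 80/10/10."""
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)   # a private RNG keeps the split reproducible
    n = len(shuffled)
    n_train = n * 8 // 10                   # integer arithmetic avoids float rounding
    n_val = n // 10
    return (shuffled[:n_train],
            shuffled[n_train:n_train + n_val],
            shuffled[n_train + n_val:])     # the remainder (about 10%) goes to test


train, val, test = split_80_10_10(range(1000), seed=42)
print(len(train), len(val), len(test))
```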

Regarding the distillation process, the hyperparameters $\lambda_1$ and $\lambda_2$ were both set to 0.5 to balance learning between the two tasks. The Adam optimizer with a learning rate of was used, and the batch size was set to 16. Models were trained for up to 30 epochs with early stopping based on validation loss. Performance was evaluated using accuracy on the CodeSearchNet (CSN) test set, supplemented by human evaluation for the qualitative assessment of the generated code.

To further validate the deployability of the distilled student models in resource-constrained environments, we conducted additional runtime experiments on a consumer-grade machine equipped with a single NVIDIA RTX 3060 GPU (12 GB memory) and on a Raspberry Pi 5 with 8 GB RAM (ARM-based). On the RTX 3060, the CodeT5-base model achieved an average inference latency of 120 ms per sample, maintaining real-time responsiveness. On the Raspberry Pi, batch inference was not feasible due to memory constraints, but the model successfully executed single-sample code generation with an average latency of 3.8 s using a quantized model in ONNX Runtime. These results indicate that the distilled models, especially CodeT5-base, can operate effectively on lower-end hardware, supporting our claim of suitability for deployment in resource-constrained environments.

4.2. Research Questions

In this section, we introduce the research questions, the dataset, the baseline models, and the evaluation details, and then present the experimental results.

RQ1: Effectiveness of distilling LLMs into smaller models: How does distilling the reasoning and code generation capabilities of LLMs into smaller models impact the performance of the smaller models in code generation tasks? Specifically, we investigate how different LLMs, such as CodeLlama-7B and CodeQwen-7B, perform when their reasoning processes are distilled into smaller models like CodeT5-base.

RQ2: Ablation study on the chain-of-thought process: What is the impact of disrupting the intermediate reasoning steps (e.g., pseudocode) during the distillation process? We perform an ablation study by randomly shuffling or replacing the chain-of-thought pseudocode with other random content to assess how such modifications affect the performance of the distilled smaller models.

RQ3: Human evaluation of distilled model outputs: How do human evaluators perceive the quality of code generated by the distilled smaller models? We incorporate a human evaluation to assess the generated code in terms of accuracy, readability, and overall utility, providing an empirical measure of the real-world effectiveness of the distilled models in practical scenarios.

These research questions guide our investigation into the viability, impact, and practical applications of distilling large language models’ reasoning capabilities into smaller, more deployable models for code generation.
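The two RQ2 corruptions, shuffling the pseudocode steps and replacing them with unrelated content, can be sketched as follows. Representing pseudocode as a list of step strings and drawing replacements from a `pool` of unrelated text (for example, steps taken from other samples) are assumptions for illustration.

```python
import random
from typing import List, Sequence


def shuffle_steps(steps: Sequence[str], rng: random.Random) -> List[str]:
    """Variant 1: keep the same pseudocode steps but destroy their order."""
    corrupted = list(steps)  # copy so the original chain of thought is left untouched
    rng.shuffle(corrupted)
    return corrupted


def replace_steps(steps: Sequence[str], pool: Sequence[str], rng: random.Random) -> List[str]:
    """Variant 2: replace every step with unrelated text drawn from `pool`."""
    return [rng.choice(pool) for _ in steps]


steps = ["1. Set total to 0", "2. Loop over xs", "3. Add x to total", "4. Return total"]
print(shuffle_steps(steps, random.Random(0)))
print(replace_steps(steps, ["Open the file", "Sort the keys"], random.Random(0)))
```

The corrupted steps would then replace the teacher’s pseudocode targets before training, leaving the code targets unchanged.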

4.3. Dataset

The dataset used for distillation consists of bimodal data pairs, each pairing a natural language description with a code snippet in a specific programming language.

Table 2 provides the statistics of the dataset, showing the number of bimodal data pairs for each language.

4.4. Evaluation Metrics

In our study, we comprehensively evaluate code generation capability, focusing on the similarity between the generated code and the reference (oracle) code. We use four metrics established in prior research [36]:

Token match (TM): This metric is computed using the standard BLEU score [37], commonly used to evaluate natural language generation. TM measures the n-gram overlap between the generated code and the reference. The BLEU score is defined as

$$\text{BLEU} = \text{BP} \cdot \exp\left(\sum_{n=1}^{N} w_n \log p_n\right),$$

where $p_n$ is the modified n-gram precision, $w_n$ is the weight for each n-gram order (typically uniform), and BP is the brevity penalty, which penalizes short candidates.
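As a reference point, the following is a compact, unsmoothed sentence-level BLEU in Python with uniform weights $w_n = 1/N$ and a single reference. It is a sketch: standard evaluations usually compute corpus-level BLEU, may apply smoothing, and depend on the tokenizer, so scores from this function will not necessarily match published numbers. The brevity penalty uses the usual definition, $\text{BP} = 1$ if $c > r$ and $e^{1 - r/c}$ otherwise, where $c$ and $r$ are the candidate and reference lengths.

```python
import math
from collections import Counter
from typing import Sequence


def ngram_counts(tokens: Sequence[str], n: int) -> Counter:
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))


def sentence_bleu(candidate: Sequence[str], reference: Sequence[str], max_n: int = 4) -> float:
    """BLEU = BP * exp(sum_n w_n log p_n) with w_n = 1/max_n, one reference, no smoothing."""
    if not candidate:
        return 0.0
    log_sum = 0.0
    for n in range(1, max_n + 1):
        cand, ref = ngram_counts(candidate, n), ngram_counts(reference, n)
        # Modified precision p_n: candidate n-gram counts are clipped by reference counts.
        clipped = sum(min(count, ref[gram]) for gram, count in cand.items())
        total = sum(cand.values())
        if clipped == 0:
            return 0.0  # log(0) is undefined; an unsmoothed BLEU is zero here
        log_sum += math.log(clipped / total) / max_n
    c, r = len(candidate), len(reference)
    bp = 1.0 if c > r else math.exp(1 - r / c)  # brevity penalty
    return bp * math.exp(log_sum)


ref = "return a + b".split()
print(sentence_bleu(ref, ref))                          # 1.0
print(sentence_bleu(ref, ref + "if a else 0".split()))  # exp(1 - 8/4) = exp(-1)
```

In the second call every n-gram precision is 1, so the score is determined entirely by the brevity penalty for a 4-token candidate against an 8-token reference.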

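Syntax match (SM): SM evaluates the similarity between the abstract syntax trees (ASTs) of the predicted code ($T_{\text{pred}}$) and the reference code ($T_{\text{ref}}$). We calculate the tree edit distance (TED) and normalize it:

$$\text{SM} = 1 - \frac{\text{TED}(T_{\text{pred}}, T_{\text{ref}})}{\max\left(|T_{\text{pred}}|, |T_{\text{ref}}|\right)},$$

where $\text{TED}(\cdot, \cdot)$ denotes the tree edit distance and $|T|$ is the size (number of nodes) of an AST. A higher SM indicates better syntactic similarity.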

Dataflow match (DM): DM assesses whether data dependencies (i.e., variable usage and flow) are preserved. Let $D_{\text{pred}}$ and $D_{\text{ref}}$ denote the sets of data dependency edges (e.g., def-use chains) in the predicted and reference code. We define

$$\text{DM} = \frac{|D_{\text{pred}} \cap D_{\text{ref}}|}{|D_{\text{pred}} \cup D_{\text{ref}}|},$$

which measures the Jaccard similarity between the dataflow graphs.
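DM reduces to a Jaccard index once the dependency edges are extracted. The sketch below pairs the Jaccard computation with a deliberately simplified, hypothetical extractor that only handles top-level assignments in Python and records an edge `(used_variable, assigned_variable)`; real dataflow extraction handles control flow, attribute access, and variable renaming.

```python
import ast
from typing import Set, Tuple

Edge = Tuple[str, str]


def simple_dataflow_edges(source: str) -> Set[Edge]:
    """Hypothetical, simplified extractor: for each top-level `name = expr`,
    add an edge (v, name) for every variable v read in `expr`."""
    edges: Set[Edge] = set()
    for node in ast.parse(source).body:
        if not isinstance(node, ast.Assign):
            continue  # loops, branches, calls, etc. are ignored in this sketch
        targets = [t.id for t in node.targets if isinstance(t, ast.Name)]
        reads = {n.id for n in ast.walk(node.value)
                 if isinstance(n, ast.Name) and isinstance(n.ctx, ast.Load)}
        edges.update((v, tgt) for tgt in targets for v in reads)
    return edges


def dataflow_match(pred: Set[Edge], ref: Set[Edge]) -> float:
    """DM = |D_pred & D_ref| / |D_pred | D_ref| (Jaccard index)."""
    union = pred | ref
    if not union:
        return 1.0  # convention: two programs with no dependencies match perfectly
    return len(pred & ref) / len(union)


ref_code = "a = x + 1\nb = a * 2"
pred_code = "a = x + 1\nb = x * 2"
print(dataflow_match(simple_dataflow_edges(pred_code), simple_dataflow_edges(ref_code)))
```

Here the prediction computes `b` from `x` instead of `a`, so only the edge `(x, a)` is shared among three distinct edges, giving a DM of one third.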

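CodeBLEU (CB): CodeBLEU [38] is a weighted combination of four components: n-gram match, keyword-weighted n-gram match, syntax match, and dataflow match. It is computed as

$$\text{CB} = \alpha \cdot \text{TM} + \beta \cdot \text{KM} + \gamma \cdot \text{SM} + \delta \cdot \text{DM},$$

where $\alpha + \beta + \gamma + \delta = 1$ and KM is the keyword match score, i.e., an n-gram match in which programming-language keywords receive higher weight. In our evaluation, we follow the default weights suggested in [38].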
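Combining the component scores is then a weighted sum. In this sketch the uniform weights of 0.25 are an assumption for illustration; the weights actually used should be taken from [38].

```python
from typing import Tuple


def codebleu(tm: float, km: float, sm: float, dm: float,
             weights: Tuple[float, float, float, float] = (0.25, 0.25, 0.25, 0.25)) -> float:
    """CB = alpha*TM + beta*KM + gamma*SM + delta*DM, with alpha+beta+gamma+delta = 1."""
    alpha, beta, gamma, delta = weights
    if abs(sum(weights) - 1.0) > 1e-9:
        raise ValueError("CodeBLEU weights must sum to 1")
    return alpha * tm + beta * km + gamma * sm + delta * dm


print(codebleu(tm=0.4, km=0.6, sm=0.8, dm=0.2))  # approximately 0.5
```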

These metrics collectively provide a multi-dimensional assessment of code quality, ranging from lexical similarity to syntactic and semantic alignment. For a more detailed explanation of these metrics, readers are referred to [36]. Together, they allow us to assess code generation approaches from several complementary angles. Additionally, Top-1 accuracy measures the percentage of cases in which the model’s highest-ranked prediction is correct, while Top-5 accuracy measures the percentage of cases in which the correct answer appears among the model’s top five predictions.
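Top-k accuracy can be computed from a ranked list of candidates per example. This sketch treats a prediction as correct when it exactly equals the reference, which is an assumption; the correctness criterion (exact match, test execution, or otherwise) is not specified here.

```python
from typing import Sequence


def top_k_accuracy(ranked: Sequence[Sequence[str]], references: Sequence[str], k: int) -> float:
    """Fraction of examples whose reference appears among the first k ranked predictions."""
    if len(ranked) != len(references):
        raise ValueError("need one ranked candidate list per reference")
    if not references:
        return 0.0
    hits = sum(ref in preds[:k] for preds, ref in zip(ranked, references))
    return hits / len(references)


ranked = [["sum(xs)", "len(xs)"], ["max(xs)", "sorted(xs)[-1]"], ["xs[0]", "xs[1]"]]
refs = ["sum(xs)", "sorted(xs)[-1]", "min(xs)"]
print(top_k_accuracy(ranked, refs, k=1))  # one hit out of three
print(top_k_accuracy(ranked, refs, k=5))  # two hits out of three
```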

4.5. Baseline Methods

In our study, we focus on several baseline models for distillation, each representing a state-of-the-art approach in code generation. These models serve as foundational elements in our distillation process, allowing us to capture and transfer their capabilities into smaller, more efficient models.

Tranx: Tranx [39] predicts a sequence of actions that construct an abstract syntax tree, from which the source code is generated. It relies on the abstract syntax description language (ASDL) framework, a grammatical formalism for representing ASTs. At each timestep, the model predicts one of three types of actions to expand the tree until it is fully constructed. Given its influential role in AST-based generation, as evidenced by numerous follow-up studies [36], Tranx is chosen as the representative technique of this family in our study.

CodeT5: Following the T5 architecture [40], CodeT5 [41] processes both code and text tokens. It features a distinctive pre-training task, NL-PL dual generation, which enables the model to generate code from text and vice versa. This dual-generation capability, together with its encoder–decoder framework, makes CodeT5 a strong candidate for code generation tasks. We use the CodeT5-base version because of its demonstrated effectiveness.

NatGen: Building upon CodeT5, NatGen [42] introduces an additional pre-training task called “Code Naturalizing.” This task trains the model to convert unnatural code into a form resembling human-written code, enhancing its understanding of code semantics and its ability to generate human-like code.

SPT-Code: Another advanced pre-trained model, SPT-Code [43], also follows the encoder–decoder framework. It differs from CodeT5 in its pre-training input: it includes the AST of the code to exploit syntactic information, and it uses the method name and called methods as a natural language description, thus eliminating the need for a bilingual corpus.

Each of these models has distinct strengths, which makes them suitable student models for our study on distilling large language model capabilities into smaller, more deployable models. Together, they form the basis of our approach to improving the efficiency and efficacy of code generation in resource-constrained environments.

To ensure a fair comparison across all models, we standardized the training and distillation procedures by using the same set of hyperparameters where applicable. Specifically, all student models were trained using the Adam optimizer with a learning rate of , a batch size of 16, and a maximum of 30 epochs with early stopping based on validation loss. For the distillation process, we set the loss balancing weights and to equally weigh the task-specific loss and the distillation loss. Each model was trained on the same training split of the CodeSearchNet dataset, and evaluations were conducted under identical conditions. For models with architectural differences (e.g., CodeT5 vs. Tranx), we retained their original layer configurations and embedding dimensions as reported in their respective papers to preserve their design integrity, while ensuring consistent training conditions across all experiments.
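The following is a minimal sketch of the combined objective. It assumes both terms are token-level negative log-likelihoods: the task-specific loss is computed against the reference code, and the distillation loss against the teacher's output. It also assumes weights of 0.5 each as one reading of "equally weigh". The weight values, the exact form of the distillation loss, and the per-token probability inputs are all illustrative assumptions.

```python
import math
from typing import Sequence


def sequence_nll(token_probs: Sequence[float]) -> float:
    """Mean negative log-likelihood, given the probability the student
    assigns to each target token (hypothetical input format)."""
    if not token_probs:
        raise ValueError("empty target sequence")
    return -sum(math.log(p) for p in token_probs) / len(token_probs)


def combined_loss(task_probs: Sequence[float], distill_probs: Sequence[float],
                  alpha: float = 0.5, beta: float = 0.5) -> float:
    """alpha * task loss + beta * distillation loss.

    alpha = beta = 0.5 is an assumed reading of "equally weigh".
    """
    task_loss = sequence_nll(task_probs)        # vs. reference code
    distill_loss = sequence_nll(distill_probs)  # vs. teacher output (assumed)
    return alpha * task_loss + beta * distill_loss


print(round(combined_loss([0.5, 0.5], [0.25]), 4))  # 1.0397
```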

4.6. Large Language Models

The LLMs used for distillation in this paper are as follows:

DeepSeek: DeepSeek is a general-purpose large language model known for its versatility across a wide range of tasks, from text generation to problem-solving. It is designed to handle diverse inputs and can be fine-tuned for specific applications, showing strong performance in natural language understanding and generation. We use DeepSeek-671B in our experiments.

GPT-4: GPT-4 is one of the most advanced general-purpose language models developed by OpenAI. It excels in complex reasoning tasks, including multi-step problem solving, creative writing, and language comprehension, setting new benchmarks for large language models in various NLP applications.

CodeLlama-7B: CodeLlama-7B is a specialized code generation model developed for software engineering tasks. With 7 billion parameters, it is designed to handle programming-related tasks such as code completion, bug fixing, and code synthesis, offering a more efficient solution compared to larger models while maintaining strong performance.

CodeQwen-7B: CodeQwen-7B is another code-focused large model with 7 billion parameters, optimized for various programming languages and development environments. It is specifically fine-tuned for generating code from natural language descriptions, offering a more compact alternative to larger models without sacrificing quality.

Our approach is designed to be model-agnostic, meaning it can be seamlessly integrated with various LLMs in a plug-and-play manner. This flexibility allows for future explorations with even more advanced LLMs, potentially unlocking new avenues in code generation and LLM applications.
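The plug-and-play property can be illustrated with a minimal sketch. In it, any teacher that exposes a text-in, text-out `generate` method can supply distillation targets, and the teacher's answer is split into the Problem/Pseudocode/Code/Explanation layout used in this paper's prompt example. The `Teacher` protocol, the prompt wording, and the parsing rules are hypothetical and do not describe the authors' implementation.

```python
from dataclasses import dataclass
from typing import Protocol, Tuple


class Teacher(Protocol):
    """Any LLM wrapper with a text-in, text-out call (hypothetical interface)."""
    def generate(self, prompt: str) -> str: ...


@dataclass
class DistillationExample:
    problem: str
    pseudocode: str   # intermediate reasoning the student learns to reproduce
    code: str
    explanation: str


def parse_teacher_output(text: str) -> Tuple[str, str, str]:
    """Split a 'Pseudocode: ... Code: ... Explanation: ...' response."""
    head, sep, rest = text.partition("Code:")  # case-sensitive: skips "Pseudocode:"
    if not sep:
        raise ValueError("teacher output has no 'Code:' section")
    pseudocode = head.replace("Pseudocode:", "", 1).strip()
    code, _, explanation = rest.partition("Explanation:")
    return pseudocode, code.strip(), explanation.strip()


def build_example(teacher: Teacher, problem: str) -> DistillationExample:
    # Hypothetical prompt template modeled on the Problem/Pseudocode/Code layout.
    prompt = (f"Problem: {problem}\n"
              "Answer with 'Pseudocode:', 'Code:' and 'Explanation:' sections.")
    pseudocode, code, explanation = parse_teacher_output(teacher.generate(prompt))
    return DistillationExample(problem, pseudocode, code, explanation)
```

Under this design, swapping GPT-4 for DeepSeek or a 7B code model only requires another object with a `generate` method. The student-side data pipeline stays the same.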

4.7. Results

4.7.1. RQ1: Effectiveness of Distilling LLMs into Smaller Models

To ensure that the model is well-trained and neither underfits nor overfits, we monitored the training and validation loss across 30 epochs. As shown in Figure 3, both losses exhibit a smooth and consistent decline, converging around epoch 26. The validation loss closely follows the training loss throughout, indicating that the model generalizes well to unseen data. These results justify our decision to use 30 epochs with early stopping based on validation loss, as the model reaches a stable state without overtraining.
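The early-stopping rule can be sketched with validation losses supplied as a precomputed list, so the logic runs without a model. The patience of 3 epochs is an assumption; the text only specifies a 30-epoch maximum with early stopping on validation loss.

```python
from typing import Sequence, Tuple


def early_stopping_epoch(val_losses: Sequence[float], max_epochs: int = 30,
                         patience: int = 3) -> Tuple[int, int]:
    """Return (best_epoch, stop_epoch), both 1-indexed.

    Training stops once the validation loss has failed to improve for
    `patience` consecutive epochs (assumed value), or at `max_epochs`.
    """
    best_loss = float("inf")
    best_epoch = 0
    epochs_without_improvement = 0
    epoch = 0
    for epoch, loss in enumerate(val_losses[:max_epochs], start=1):
        if loss < best_loss:
            best_loss, best_epoch = loss, epoch
            epochs_without_improvement = 0
        else:
            epochs_without_improvement += 1
            if epochs_without_improvement >= patience:
                break
    return best_epoch, epoch


print(early_stopping_epoch([3.0, 2.0, 1.0, 1.1, 1.2, 1.3, 0.9]))  # (3, 6)
```

In practice, the checkpoint saved at `best_epoch` would be restored after stopping. The late improvement at epoch 7 in the toy list is never seen, which is the usual trade-off of a small patience value.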

As shown in Table 3, our distillation framework yields clear improvements for the smaller models across various code generation tasks. For instance, distilling from CodeQwen-7B raises CodeT5’s Top-1 accuracy from 9.2 to 12.0, and Tranx sees a similar boost from 2.5 to 3.1. These results suggest that even relatively small 7B teacher models can effectively transfer key reasoning steps, enabling the student models to generate better code. Notably, CodeQwen-7B distillation yields a larger gain for CodeT5 than for the other student models, which suggests that CodeQwen-7B may be particularly effective at transferring knowledge for more complex tasks, especially in generating code that aligns well with expected patterns.

Larger teacher models, i.e., DeepSeek and GPT-4, provide even greater performance gains, particularly for more complex tasks. For example, distilling from DeepSeek boosts CodeT5’s Top-1 accuracy from 9.2 to 15.5, while GPT-4 distillation achieves a higher Top-1 accuracy of 16.0. This trend holds for the other student models, where distillation from DeepSeek and GPT-4 outperforms distillation from both CodeQwen-7B and CodeLlama-7B in Top-1 and Top-5 accuracy. Specifically, NatGen’s Top-1 accuracy increases from 9.5 to 12.0 with DeepSeek and to 13.0 with GPT-4, suggesting that the larger models’ stronger reasoning capabilities lead to more accurate code generation, especially as task complexity increases.
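The gains quoted above can be restated as absolute and relative Top-1 improvements. This snippet only recomputes figures already given in this section (the Table 3 values as quoted in the text).

```python
from typing import Dict, Tuple

# Top-1 accuracy (base, distilled) pairs as quoted in the text above.
RESULTS: Dict[Tuple[str, str], Tuple[float, float]] = {
    ("CodeT5", "CodeQwen-7B"): (9.2, 12.0),
    ("Tranx", "CodeQwen-7B"): (2.5, 3.1),
    ("CodeT5", "DeepSeek"): (9.2, 15.5),
    ("CodeT5", "GPT-4"): (9.2, 16.0),
    ("NatGen", "DeepSeek"): (9.5, 12.0),
    ("NatGen", "GPT-4"): (9.5, 13.0),
}


def gains(base: float, distilled: float) -> Tuple[float, float]:
    """Absolute gain in points and relative gain in percent, 1 decimal."""
    absolute = distilled - base
    relative = 100.0 * absolute / base
    return round(absolute, 1), round(relative, 1)


for (student, teacher), (base, distilled) in RESULTS.items():
    abs_gain, rel_gain = gains(base, distilled)
    print(f"{student} <- {teacher}: +{abs_gain} points ({rel_gain}% relative)")
```

By both measures, CodeQwen-7B helps CodeT5 more than Tranx (+2.8 points or 30.4%, versus +0.6 points or 24.0%). Among the quoted pairs, GPT-4 gives CodeT5 the largest gain (+6.8 points, 73.9%).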

Therefore, our distillation framework not only provides clear performance improvements for all small models but also shows that larger teacher models offer more pronounced benefits. GPT-4 and DeepSeek distillation consistently lead to the highest performance, with GPT-4 achieving the best Top-1 accuracy across all tasks (e.g., 16.0 for CodeT5 and 13.0 for NatGen). CodeQwen-7B, on the other hand, offers solid improvements, though smaller than those from the larger models. This indicates that the framework is versatile: it improves small models whether the teacher is a relatively small 7B model or a larger, more powerful one, making it a scalable solution for efficient code generation.

4.7.2. RQ2: Ablation Study on the Chain-of-Thought Process

To assess the role of the chain-of-thought process, we introduce random disturbances into the distilled pseudocode. This ablation tests how important it is to preserve the reasoning chain (i.e., the pseudocode) during distillation: we randomly shuffle the pseudocode or replace it with irrelevant content, and compare the resulting performance with that of the baseline models and the best-performing GPT-4 distilled model.
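The random disturbance used in this ablation (shuffling the pseudocode or replacing it with irrelevant content) can be sketched as a small perturbation function. The distractor pool, the seeding, and the choice to replace every step are assumptions; the exact perturbation procedure is not specified beyond "shuffle or replace".

```python
import random
from typing import List, Sequence


def perturb_pseudocode(steps: Sequence[str], mode: str,
                       distractors: Sequence[str] = (), seed: int = 0) -> List[str]:
    """Return a disturbed copy of a pseudocode chain.

    mode="shuffle": keep the steps but randomize their order.
    mode="replace": swap every step for an unrelated distractor line.
    """
    rng = random.Random(seed)
    original = list(steps)
    if mode == "shuffle":
        shuffled = original[:]
        rng.shuffle(shuffled)
        # Reshuffle if the order happened to survive (needs two distinct steps).
        while shuffled == original and len(set(original)) > 1:
            rng.shuffle(shuffled)
        return shuffled
    if mode == "replace":
        if not distractors:
            raise ValueError("replace mode needs a distractor pool")
        return [rng.choice(distractors) for _ in original]
    raise ValueError(f"unknown mode: {mode!r}")


steps = ["Initialize an empty result list.",
         "Iterate through each element in the input list.",
         "Return the result list."]
# Hypothetical distractor pool of irrelevant lines.
noise = ["Open a database connection.", "Sort the dictionary keys."]
print(perturb_pseudocode(steps, "shuffle"))
print(perturb_pseudocode(steps, "replace", noise))
```

Shuffling keeps the content of each step but destroys the reasoning order. Replacement removes the content entirely. Comparing the two would separate the effect of step order from the effect of step content.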

Table 4 presents the results of the ablation study, where we compare the original distilled models with variants in which the pseudocode reasoning (chain of thought) was randomly disturbed. As the table shows, random disturbance of the pseudocode lowers performance for all models, as reflected in lower Top-1 and Top-5 accuracy. For example, Tranx-random-distill shows a clear reduction in performance: its Top-1 accuracy drops from 2.5 (the undistilled Tranx baseline) to 2.0, and its Top-5 accuracy also declines. Similarly, CodeT5-random-distill and SPT-Code-random-distill exhibit noticeable drops in performance.

The GPT-4 distilled models, by contrast, consistently achieve the highest performance, further supporting the value of preserving the chain-of-thought pseudocode during distillation. The comparison between the random-distilled models and their base counterparts indicates that the reasoning steps embedded in the pseudocode are crucial for achieving strong performance in code generation tasks. This ablation study therefore supports the effectiveness of our distillation framework in transferring meaningful reasoning processes from LLMs to smaller models, and it highlights the importance of preserving the reasoning chain during distillation.

4.7.3. Results of Human Evaluation

For the human evaluation, we selected 100 samples from the test set and focused on the top-performing student family, specifically the five models from the CodeT5 series: the baseline version and four versions distilled from LLMs (CodeLlama-7B, CodeQwen-7B, DeepSeek, and GPT-4). These 100 samples were evenly distributed among five experts with over six years of programming experience, with each expert evaluating 100 samples. Each model’s output was assessed on three criteria (correctness, completeness, and fluency), with scores ranging from 1 to 5, where 1 represents “Poor” and 5 represents “Excellent.” To ensure consistency, a cross-validation process was applied, and the final scores were averaged for each model.
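The final averaging step can be sketched as grouping 1–5 ratings by model and criterion and taking the mean. The rating-record layout is an assumption, and the cross-validation protocol itself is not modeled. The toy ratings below are illustrative placeholders, not data from this study.

```python
from collections import defaultdict
from statistics import mean
from typing import DefaultDict, Dict, Iterable, List, Tuple

Rating = Tuple[str, str, str, int]  # (expert, model, criterion, score 1-5)


def average_scores(ratings: Iterable[Rating]) -> Dict[Tuple[str, str], float]:
    """Mean score per (model, criterion), rounded to two decimals."""
    buckets: DefaultDict[Tuple[str, str], List[int]] = defaultdict(list)
    for _expert, model, criterion, score in ratings:
        if not 1 <= score <= 5:
            raise ValueError(f"score out of range: {score}")
        buckets[(model, criterion)].append(score)
    return {key: round(mean(vals), 2) for key, vals in buckets.items()}


# Illustrative placeholder ratings only.
toy = [("E1", "CodeT5", "Correctness", 3),
       ("E2", "CodeT5", "Correctness", 4),
       ("E1", "CodeT5", "Fluency", 5)]
print(average_scores(toy))
```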

The results of the human evaluation, shown in Table 5, further confirm the effectiveness of our distillation framework. Across the three criteria (Correctness, Completeness, and Fluency), we observe clear improvements in the distilled models, particularly those distilled from larger models, which supports the findings from the automatic evaluation metrics.

Firstly, the distillation from CodeQwen-7B shows notable improvements compared to the baseline CodeT5. For example, the Correctness score increases from 2.8 to 3.4, the Completeness score increases from 2.9 to 3.4, and the Fluency score increases from 3.2 to 3.6. These results align with the trends observed in the automatic evaluation, where the distillation from CodeQwen-7B similarly improved performance across the board. Furthermore, distillation from more powerful models like DeepSeek and GPT-4 results in even greater improvements, achieving Correctness scores of 4.1 and 4.3, respectively. These improvements in human evaluation are consistent with the gains observed in the automatic metrics, reinforcing the idea that the distillation framework can successfully transfer reasoning capabilities from larger models to smaller ones.

The highest performance in Correctness, Completeness, and Fluency is achieved by the distillation from GPT-4, with scores of 4.3, 4.2, and 4.3, respectively, outperforming all other models in the human evaluation. This confirms that our distillation framework not only improves automated evaluation metrics but also translates into tangible improvements in human-assessed qualities such as code Correctness, Completeness, and Fluency. Therefore, our results demonstrate that the distillation framework is effective in enhancing the performance of smaller models, with both automated and human evaluations confirming the benefits of distilling from larger models.

To provide a qualitative comparison, we further analyzed representative code samples generated by our distilled models and the baseline models. Compared with baseline models such as CodeT5 and NatGen, the code generated by our distilled models, especially those distilled from GPT-4 and DeepSeek, exhibited more coherent logic, clearer structure, and better alignment with programming conventions. In particular, the distilled models were more likely to include meaningful variable names, complete function definitions, and properly structured control flow, whereas the baseline models occasionally produced syntactically valid but semantically incomplete or overly generic code. We attribute this improvement largely to the preservation of chain-of-thought reasoning during distillation, which guides the model through multi-step generation in a more interpretable and human-like manner.

4.7.4. Prompt Examples of the Distillation Process

To illustrate how our distillation framework operates in real scenarios, we provide representative prompt examples used during training and evaluation. Below is an example of a training prompt given to the teacher model (e.g., GPT-4) and its corresponding output, which is used to supervise the student model:

Problem: Write a Python function that takes a nested list of arbitrary depth and returns a flat list containing all the elements.

Pseudocode:

1. Define a function that takes a list as input.

2. Initialize an empty result list.

3. Iterate through each element in the input list.

4. If the element is a list, recursively flatten it and extend the result list.

5. If the element is not a list, append it directly to the result list.

6. Return the result list after processing all elements.

Code: [Image: Symmetry 17 01325 i002]

Explanation:

This function uses recursion to handle arbitrarily nested lists. For each element, it checks if it is a list; if so, it calls itself recursively and flattens that sublist. Otherwise, it appends the element directly. This process ensures that the final result contains no nested structures, regardless of input depth.
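In the source, the code in this example appears only as an image. The function below is a reconstruction written from the six pseudocode steps and the explanation, not the teacher's verbatim output. It assumes "list" means Python's built-in `list`, so tuples and other iterables are kept as single elements. Comments map each line to its pseudocode step.

```python
from typing import Any, List


def flatten(nested: List[Any]) -> List[Any]:
    """Flatten a list nested to arbitrary depth (pseudocode steps 1-6)."""
    result: List[Any] = []                 # step 2: empty result list
    for element in nested:                 # step 3: iterate over elements
        if isinstance(element, list):      # step 4: recurse into sublists
            result.extend(flatten(element))
        else:                              # step 5: append non-list elements
            result.append(element)
    return result                          # step 6: return the result


print(flatten([1, [2, [3, [4]], 5], []]))  # [1, 2, 3, 4, 5]
```

The recursive form mirrors the pseudocode directly. For extremely deep nesting it can hit Python's default recursion limit (1000 frames), in which case an iterative version with an explicit stack would be the safer design.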

5. Threats to Validity

5.1. Internal Validity

One potential threat to internal validity in our study is the reliance on expert human evaluators, which may introduce subjective biases in the scoring process. While we selected five experts with over six years of programming experience to ensure a reasonable level of expertise, variations in individual judgment could influence the evaluation of criteria such as Correctness, Completeness, and Fluency. Although we employed a cross-validation process and averaged the scores to mitigate this issue, the small number of evaluators and their subjective interpretations of the evaluation criteria could still introduce inconsistency in the results. Furthermore, there is the possibility that the model outputs evaluated in the human study may not cover the full range of real-world code generation tasks, as the selected test set might not be entirely representative of the complexity encountered in different programming environments.

Another internal validity concern is the limited diversity in the test set. Although we selected a diverse range of 100 samples from the dataset, this subset might not comprehensively represent the vast spectrum of programming tasks encountered in practical applications. As the difficulty and nature of programming problems can vary greatly, the specific sample selection process might unintentionally favor certain types of code or problem domains, leading to biased performance metrics. This could affect the fairness of the evaluation, as some models may perform better on the selected test set due to its particular composition rather than a generalized ability to handle diverse real-world scenarios.

Additionally, while our framework is designed to preserve reasoning patterns through the use of intermediate supervision (e.g., pseudocode), we do not directly analyze the internal representations of student models to verify the depth of reasoning transfer. Our current conclusions are based on performance improvements and output structure. Future work may incorporate probing techniques or attention-based interpretability analyses to more rigorously evaluate whether internal cognitive-like mechanisms are being transferred during distillation.

5.2. External Validity

In terms of external validity, a major concern arises from the limited scope of our study, as it only focuses on specific LLMs for distillation (i.e., CodeLlama-7B, CodeQwen-7B, DeepSeek, and GPT-4). While these models represent advanced state-of-the-art architectures, the findings from our experiments might not directly apply to other large language models or distillation methods that were not included in this study. For example, the effectiveness of distillation from models not covered here, such as those specialized in other domains or with different architectural properties, remains unclear. Therefore, the conclusions drawn about the benefits of distilling reasoning capabilities may not generalize to other, less widely used models or novel techniques not considered in our study.

Moreover, the test set used for both automatic and human evaluations may not fully reflect the real-world programming tasks typically encountered in production environments. While our dataset is diverse in terms of programming languages and problem types, its composition might still introduce biases that affect how well the results generalize to broader use cases. For example, code generation tasks in more specialized domains or those requiring complex reasoning might differ from those represented in our test set. Thus, the results of this study may be most applicable to code generation tasks within the scope of the evaluated test set, limiting the broader applicability of our findings to all possible programming scenarios.

While our primary training and evaluation were conducted on a high-performance server, we recognize the need to verify real-world deployability. To address this, we performed additional testing on consumer-grade and ARM-based devices, which demonstrated that the distilled student models can run efficiently with limited computational resources. However, performance may vary across deployment contexts depending on device memory, optimization level (e.g., quantization), and runtime framework, which warrants further profiling in production scenarios.

5.3. Theoretical and Practical Implications

This study offers both theoretical and practical contributions to the field of code generation and LLM distillation. Theoretically, it demonstrates that preserving chain-of-thought reasoning during knowledge distillation significantly enhances the performance of smaller models, providing empirical support for integrating intermediate reasoning steps as transferable knowledge units. This finding advances our understanding of how reasoning traces from LLMs can be effectively internalized by compact models, bridging the gap between model interpretability and performance. Practically, our proposed distillation framework enables the deployment of lightweight, resource-efficient models that retain strong reasoning capabilities, making them suitable for real-world applications such as IDE integration, automated code assistants, and edge-device programming tools. Furthermore, the model-agnostic design of our framework ensures its adaptability across various LLMs and code generation tasks, promoting its utility in both academic and industrial settings.

5.4. Security Evaluation

While our primary evaluation focuses on the functional correctness of generated code, we acknowledge that security is a critical dimension often overlooked in code generation tasks. To address this concern, we conducted a preliminary security analysis using static analysis tools, including Semgrep and CodeQL, to assess potential vulnerabilities in the code generated by both baseline and distilled models.

Specifically, we analyzed 1000 code snippets generated from the test set and scanned them for common security issues, such as unsafe input handling, use of insecure APIs, and missing input validation. Our findings show that the code generated by distilled models, particularly those distilled from GPT-4 and DeepSeek, tends to trigger fewer security warnings than code from their undistilled counterparts (e.g., CodeT5 and NatGen). This suggests that reasoning-aware distillation may help student models capture secure programming patterns implicitly learned by the teacher LLMs.
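The sketch below is a much smaller stand-in for a static security scan and does not reproduce Semgrep or CodeQL rules. It uses Python's `ast` module to flag two kinds of insecure-API usage: calls to `eval`/`exec`, and any call that passes `shell=True` (as with `subprocess.run`). The rule set is purely illustrative. Real scanners also track data flow from untrusted inputs, which this sketch does not.

```python
import ast
from typing import List

# Illustrative rule set only; not Semgrep or CodeQL rules.
INSECURE_CALLS = {"eval", "exec"}


def security_warnings(source: str) -> List[str]:
    """Return one warning string per flagged call in `source`."""
    warnings: List[str] = []
    for node in ast.walk(ast.parse(source)):
        if not isinstance(node, ast.Call):
            continue
        func = node.func
        if isinstance(func, ast.Name):
            name = func.id
        elif isinstance(func, ast.Attribute):
            name = func.attr
        else:
            name = None
        if name in INSECURE_CALLS:
            warnings.append(f"line {node.lineno}: call to {name}()")
        for kw in node.keywords:
            if (kw.arg == "shell" and isinstance(kw.value, ast.Constant)
                    and kw.value.value is True):
                warnings.append(f"line {node.lineno}: call with shell=True")
    return warnings


snippet = "import subprocess\nsubprocess.run(cmd, shell=True)\nx = eval(user_input)\n"
for warning in security_warnings(snippet):
    print(warning)
```

Counting such warnings per snippet for baseline and distilled models would give a simple comparison of the kind described above. The real tools cover far more rule categories.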

However, vulnerabilities still occasionally emerge, especially in edge cases where the model attempts complex input/output operations or constructs SQL queries or shell commands. While the reduction in security warnings is promising, this preliminary evaluation highlights the need for incorporating explicit security-aware objectives or constraints in future distillation pipelines.

6. Conclusions

In this work, we propose a novel distillation framework designed to transfer the reasoning and code generation capabilities of large language models into smaller, more efficient models. Our approach leverages the intermediate reasoning steps, such as pseudocode, during the distillation process, enabling smaller models to replicate the problem-solving strategies of larger models. We evaluate the framework on multiple tasks using several LLMs, including CodeLlama-7B, CodeQwen-7B, DeepSeek, and GPT-4, and demonstrate significant improvements in performance across various code generation benchmarks. Our results show that the distillation framework not only improves the performance of smaller models, as evidenced by both automatic metrics and human evaluations, but also enhances code generation capabilities while maintaining efficiency in resource-constrained environments. Notably, distilling from larger models, particularly GPT-4, leads to the most substantial improvements, achieving top accuracy scores in multiple tasks.

Looking ahead, there are several promising directions for future work. First, we plan to explore the application of our distillation framework to other tasks beyond code generation, such as question answering and text summarization, to assess its broader applicability. Additionally, investigating more advanced techniques for preserving the reasoning steps during distillation, such as hierarchical or multi-modal reasoning, could further improve the quality of the distilled models. Another avenue for future work involves expanding the dataset to include a wider variety of programming languages and more complex real-world tasks to test the generalizability of the framework in diverse scenarios. Moreover, we aim to evaluate the scalability of our framework with even larger teacher models and systematically explore the trade-offs between model size, reasoning quality, and computational efficiency. Finally, integrating our approach into real-world systems and comparing its performance with existing tools, such as Kiro (https://kiro.dev/, accessed on 11 August 2025), will be an important step towards validating its practical impact and usability.