Abstract

This study introduces the concept of symmetry as a fundamental theoretical perspective for understanding the linguistic structure of cyberbullying texts. It posits that such texts often exhibit symmetry breaking between surface-level language forms and underlying semantic intent. This structural-semantic asymmetry increases the complexity of the recognition task and places higher demands on the semantic modeling capabilities of detection systems. With the rapid growth of social media, the covert and harmful nature of cyberbullying speech has become increasingly prominent, posing serious challenges to public opinion management and public safety. While mainstream approaches to cyberbullying detection—typically based on traditional deep learning models or pre-trained language models—have achieved some progress, they still struggle with low accuracy, poor generalization, and weak interpretability when handling implicit, semantically complex, or borderline expressions. To address these challenges, this paper proposes a cyberbullying detection method that combines LoRA-based fine-tuning with Small-Scale Hard-Sample Adaptive Training (S-HAT), leveraging a large language model framework based on Meta-Llama-3-8B-Instruct. The method employs prompt-based techniques to identify inference failures and integrates model-generated reasoning paths for lightweight fine-tuning. This enhances the model’s ability to capture and represent semantic asymmetry in cyberbullying texts. Experiments conducted on the ToxiCN dataset demonstrate that the S-HAT approach achieves a precision of 84.1% using only 24 hard samples—significantly outperforming baseline models such as BERT and RoBERTa. The proposed method not only improves recognition accuracy but also enhances model interpretability and deployment efficiency, offering a practical and intelligent solution for cyberbullying mitigation.

1. Introduction

With the rapid development of social media, information now spreads faster and farther than ever before. At the same time, online violent speech has become easier to spread and harder to detect, posing severe challenges to social stability and public safety. Across countries and regions, differences in cultural background, legal systems, and internet governance environments produce diverse manifestations of online violence and correspondingly different governance strategies. This highlights the importance of examining the identification and regulation of violent speech from a regional perspective.

In recent years, large language models (LLMs) and their fine-tuning techniques, especially Low-Rank Adaptation (LoRA), have matured and now offer new and effective means for automatically detecting online violent speech. These methods not only enhance model adaptability across diverse linguistic environments but also improve the ability to capture covert expressions of violence in complex contexts.

Most existing studies treat the identification of violent speech as a text classification task, using Recurrent Neural Networks (RNNs), Convolutional Neural Networks (CNNs), and attention mechanisms to extract semantic features. More recently, Graph Neural Networks (GNNs) have shown superior performance in modeling user interaction relationships and integrating multimodal information. These advances are concentrated in research on Europe and North America, whereas studies of developing countries and region-specific social platforms remain scarce. This gap calls for new ways of integrating regional data and methods.

Incorporating LoRA fine-tuning into LLM training not only improves model generalization under resource-constrained conditions but also significantly enhances lightweight deployment potential—meeting the demands of real-time, multi-regional detection of violent speech. This is especially relevant in populous countries such as China and India, where the linguistic environments on social media are highly complex and diverse. The parameter efficiency and flexible transferability of LoRA make it particularly valuable in such contexts.

Accordingly, this study focuses on the diverse and rapidly evolving linguistic environment of social media and explores the potential and challenges of using LoRA-fine-tuned LLMs to detect online violence. We assess how well this approach identifies covert and flexible forms of violent content in terms of effectiveness, transferability, and computational efficiency. Furthermore, we construct a detection system tailored to the characteristics of the Chinese online environment, with the aim of supporting law enforcement agencies in automated monitoring and intervention.

In addition, addressing the asymmetry between surface linguistic structures and deep semantic intentions in violent speech, this paper proposes a “symmetry breaking” perspective. By modeling at the level of linguistic structure, we aim to enhance the identification of covertly aggressive speech. This theoretical perspective enriches current frameworks for violent speech detection and broadens the scope of innovative identification methods within regional contexts.

The remainder of this paper is organized as follows. Section 2 reviews research on online violence recognition, fine-tuning of large language models, and hard-sample mining. Section 3 introduces the proposed methodological framework, Section 4 describes the experimental setup and analyzes the results, Section 5 discusses the limitations of the method, and Section 6 summarizes the findings and outlines future research directions.

2. Related Research

2.1. State of the Art of Violent Speech Recognition Research

Cyberviolent speech refers to language in the online environment that displays negative emotions or behaviors such as aggression, insults, racism, sexism, and intimidation. Its main features include strong emotional displays, malicious accusations, and personal attacks, and it is usually used to hurt others or provoke hostility. Identifying violent speech therefore requires more than capturing surface emotion: it requires in-depth analysis of context to uncover hidden meanings. In particular, it is necessary to distinguish between types of violent speech, such as insults, hate speech, and sexism, which differ in form but are all hurtful and destructive. With the popularity of social media and online communication platforms, online violent speech has become a global problem that urgently needs to be solved, with wide-ranging impacts on public safety, mental health, and other areas. Accurately identifying and handling online violent speech has therefore become an important research direction in natural language processing (NLP) [1].

The Internet and social media provide platforms for exchanging views, but their virtual nature and anonymity also accelerate the spread of hate speech. To address this problem, Chinese researchers constructed a Chinese hate speech dataset, CHSD, and proposed a Chinese hate speech detection model, RoBERTa-CHSD [2]. The model uses the RoBERTa pre-trained language model to encode Chinese text and extract feature information, then feeds these representations into TextCNN and Bi-GRU modules to capture multi-level local semantic features and global dependency information between sentences. On the CHSD dataset, the model achieves an F1 score of 89.12%, an improvement of 1.76% over the previous best mainstream model, RoBERTa-WWM [3]. Bibliometric analyses using CiteSpace show that the research literature on cyber violence in China grows year by year, with hot topics including the characteristics, generation, groups, and governance of cyber violence. This body of research is rich and innovative, but cooperation between core authors and research institutions could still be strengthened. Future research on cyber violence needs to strengthen academic cooperation, follow research hotspots, and develop new research topics so as to translate theoretical research into practical applications and create a healthy and clean cyberspace.

More broadly, violent speech detection has been formulated as several kinds of supervised learning problem [4], including binary classification, multi-class classification, multi-label classification, and multi-task learning [5]. Fortuna and Nunes [6] present a systematic review of automated hate speech detection in text, covering lexicon-, rule-, and feature-based techniques as well as early deep learning models. In recent years, deep learning models such as Convolutional Neural Networks (CNNs), Recurrent Neural Networks (RNNs), and Transformers have been widely used to build automated violent speech detection systems, achieving increasingly high performance as the algorithms improve. Naseem et al. showed that, in addition to the training algorithm, preprocessing methods have a significant but often overlooked impact on classifier performance, and they developed an intelligent tweet processing method that minimizes information loss during preprocessing. Ayo et al. [7] focused on hate speech classification of Twitter data and surveyed machine learning approaches for this task. Aluru et al. reviewed deep learning algorithms for multilingual hate speech detection. Since 2018, pre-trained language models have become a common resource for training NLP classifiers. For example, Salminen et al. [8] used BERT to generate text representations and demonstrated their robustness across social media platforms. Mozafari et al. [9] fine-tuned BERT with several prediction layers and found that CNN-based fine-tuning strategies were more effective than those based on RNNs or nonlinear output layers. Wiedemann et al. [10] evaluated multiple fine-tuned Transformer models for detecting offensive language and its subcategories and showed that an ensemble approach based on ALBERT performed best, while RoBERTa was the best single language model.

Despite the excellent performance of deep learning models in cross-validation, they generalize poorly across datasets. Risch and Krestel [11] proposed an ensemble of multiple fine-tuned BERT models to address the large variance in the outputs of models fine-tuned on small datasets. Miok et al. [12] introduced a Bayesian approach into the attention layer of the Transformer model to provide reliability estimates for classifier decisions, which not only improved performance but also reduced the workload of human reviewers through reliability scoring. Multi-task learning is another way to improve offensive language detection. For example, Safi Samghabadi [13] proposed an end-to-end BERT-based neural model that combines multi-task learning with an attention mechanism to perform a multi-class “offensive identification” task and a binary “sexist offensive identification” task. Another study [14] demonstrated that combining hate speech detection with an auxiliary task improves robustness to datasets with different distributions and labeling criteria. Ousidhoum et al. [15] demonstrated the performance gains of multilingual multi-task learning for tasks with limited labeled data.

To address the small size of offensive language datasets, researchers have proposed data augmentation and improved learning algorithms. Guzman-Silverio et al. [16] showed that different random initialization seeds lead to significantly different fine-tuned models when the dataset contains fewer than 10,000 instances; they designed a data augmentation method tailored to a Spanish dataset and demonstrated its effectiveness. Rizos et al. and Wullach et al. [17] used deep generative language models to generate realistic hateful and non-hateful expressions, showing that training on the augmented data improves cross-dataset performance. In addition, zero-shot and few-shot learning techniques have proven effective for hate speech detection in low-resource settings.

2.2. Research on the Application and Fine-Tuning of Large Language Models

Language models (LMs) are computational models capable of understanding and generating human natural language, and their core task is to predict the probability of the occurrence of words in a given context or generate new text based on the input. Traditional language modeling approaches, such as N-gram models, rely on fixed-length context information for probability estimation; however, they have significant limitations in dealing with rare words, capturing long-distance dependencies, and modeling complex linguistic phenomena. In order to overcome these problems, researchers have continuously optimized the model structure and training methods, pushing the development of language models into a large-scale pre-training phase.
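To make the fixed-length-context limitation concrete, the following minimal Python sketch (a toy illustration, not taken from any cited work) estimates bigram (N = 2) probabilities by maximum likelihood. Any word pair absent from the training corpus receives probability zero, which is exactly the rare-word problem described above; practical N-gram models add smoothing, which is deliberately omitted here. The function names and the toy corpus are illustrative assumptions.

```python
from collections import Counter


def train_bigram(corpus):
    """Count contexts and bigrams over whitespace-tokenized sentences."""
    unigrams, bigrams = Counter(), Counter()
    for sentence in corpus:
        tokens = ["<s>"] + sentence.split() + ["</s>"]
        unigrams.update(tokens[:-1])                  # every token that serves as a context
        bigrams.update(zip(tokens[:-1], tokens[1:]))  # (previous word, next word) pairs
    return unigrams, bigrams


def bigram_prob(unigrams, bigrams, prev, word):
    """MLE estimate P(word | prev) = C(prev, word) / C(prev); unseen contexts give 0.0."""
    if unigrams[prev] == 0:
        return 0.0
    return bigrams[(prev, word)] / unigrams[prev]
```

For example, with the two-sentence corpus ["you are great", "you are rude"], P(are | you) = 1.0 and P(great | are) = 0.5, while the plausible but unseen P(smart | are) is 0.0, and the model can never look further back than one word.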

Large language models (LLMs) are high-capacity neural network models trained on massive data, with excellent language understanding and generation capabilities. Represented by the GPT series, LLMs are built on the self-attention mechanism of the Transformer architecture, which efficiently models long-distance dependencies within sequences and allows massively parallel computation. Since 2018, OpenAI has released GPT-1, GPT-2, GPT-3, and other language models, gradually establishing the pretrain–fine-tune paradigm as the dominant way to train LLMs. Under this paradigm, models are first pre-trained without supervision on large-scale unlabeled text to learn general language patterns and then fine-tuned with supervision on a small amount of labeled data for a specific task, achieving task adaptation and performance improvement.
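The self-attention computation mentioned above can be sketched in NumPy as follows. This is a single-head, illustrative version of scaled dot-product attention, softmax(QKᵀ/√d_k)V, with an optional causal mask of the kind used by GPT-style decoders; multi-head projections, positional encodings, and batching are omitted, and the function and argument names are assumptions for illustration.

```python
import numpy as np


def self_attention(x, w_q, w_k, w_v, causal=False):
    """Single-head scaled dot-product self-attention.

    x: (seq_len, d_model); w_q, w_k: (d_model, d_k); w_v: (d_model, d_v).
    Returns the attended values (seq_len, d_v) and the attention weights (seq_len, seq_len).
    """
    q, k, v = x @ w_q, x @ w_k, x @ w_v
    # Every position is scored against every other position in one matrix product.
    scores = q @ k.T / np.sqrt(k.shape[-1])
    if causal:
        # Decoder-style mask: position t may only attend to positions <= t.
        mask = np.triu(np.ones_like(scores, dtype=bool), k=1)
        scores = np.where(mask, -np.inf, scores)
    scores = scores - scores.max(axis=-1, keepdims=True)  # numerical stability
    weights = np.exp(scores)
    weights = weights / weights.sum(axis=-1, keepdims=True)  # row-wise softmax
    return weights @ v, weights
```

Because the distance between two tokens does not change how directly they interact, long-range dependencies are modeled as easily as adjacent ones, and all positions are computed in parallel rather than step by step as in an RNN.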

Among these models, GPT-3 further expanded the application boundary of language models. It introduced In-Context Learning, which performs zero-shot or few-shot inference from task instructions and examples given in the prompt and completes a variety of natural language processing tasks without parameter updates. Reinforcement learning has also been introduced into the training process, especially Reinforcement Learning from Human Feedback (RLHF), which optimizes model outputs against human ratings and feedback and greatly improves the safety and reasonableness of responses. GPT-3.5 is a representative model optimized in this way; it has been widely used in text generation, question answering, code writing, and other scenarios and has demonstrated strong multi-task capability and commercial potential [18].

With the wide application of LLMs, fine-tuning has become a key tool for improving their performance on specific tasks. Fine-tuning refers to further training a pre-trained model on domain-specific labeled data for a downstream task. The process usually requires only a small amount of labeled data to significantly improve performance on tasks such as text classification, sentiment analysis, and question answering [19]. Researchers have proposed a variety of efficient fine-tuning strategies that maintain model performance while reducing computational cost, including Adapter Layers, Low-Rank Adaptation (LoRA), and Layer-wise Freezing [20].

Adapter layers achieve task-specific knowledge transfer by inserting a small number of trainable modules into the original model structure while avoiding large-scale updates to the original parameters, which effectively reduces training cost [21]. LoRA reduces the number of trainable parameters and the computational resources required for fine-tuning by expressing the weight update as a low-rank decomposition and optimizing only a low-dimensional subspace of the parameter space, which also accelerates convergence. Layer-wise freezing exploits the layered structure of the Transformer: by fixing some parameters and fine-tuning only the layers most relevant to the task, it further reduces computational overhead and improves training efficiency [22].
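A minimal NumPy sketch of the adapter idea follows, assuming a Houlsby-style bottleneck (down-projection, nonlinearity, up-projection, residual connection). The class name, the ReLU nonlinearity, the zero initialization of the up-projection, and the omission of biases are illustrative choices rather than details taken from [21]. With the up-projection at zero, the adapter starts as an identity mapping, so the frozen model's behavior is initially unchanged.

```python
import numpy as np


class BottleneckAdapter:
    """Adapter module: h + ReLU(h @ W_down) @ W_up, placed after a frozen sublayer."""

    def __init__(self, d_model, bottleneck, rng):
        # Only these two small matrices are trained; the surrounding model stays frozen.
        self.w_down = rng.normal(scale=0.02, size=(d_model, bottleneck))
        # Zero-initialized up-projection: the adapter is exactly the identity at the start.
        self.w_up = np.zeros((bottleneck, d_model))

    def __call__(self, h):
        # Residual connection around the bottleneck transformation.
        return h + np.maximum(h @ self.w_down, 0.0) @ self.w_up

    def num_params(self):
        return self.w_down.size + self.w_up.size
```

With a hidden size of 4096 and a bottleneck of 64, one adapter adds 2 × 4096 × 64 = 524,288 trainable parameters, compared with 16,777,216 for a single dense 4096 × 4096 matrix.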

In addition, to deal with the hallucinations that large language models may produce during text generation, researchers have introduced factual consistency checking, external knowledge base integration, regularization methods, and other techniques [23]. Factual consistency checking ensures the reliability of model output by comparing it with authoritative knowledge sources; external knowledge bases provide additional knowledge to support generation, enhancing semantic integrity and contextual coherence; and regularization improves the consistency and controllability of generated content by introducing constraints during training [24]. Combining these methods with fine-tuning strategies [25] not only significantly improves the generalization ability and output quality of LLMs [26] but also strengthens their reliability and security in practical deployment [27].

2.3. Current Status of Research on Difficult-Sample Mining

Hard-example mining (HEM), as a key strategy to improve the generalization ability and robustness of models, has been widely used in a number of tasks such as computer vision and natural language processing in recent years. The core idea is to promote the model to learn the discriminative boundary more effectively by identifying the samples that are difficult for the model to classify correctly or with low confidence during the training process and giving higher training weights or specialized optimization to these samples.

In computer vision, online hard-example mining (OHEM) is widely used in object detection. Shrivastava et al. [28] proposed dynamically selecting samples with high loss values for backpropagation during training, which effectively improves the detection of small and occluded objects. This strategy also provides theoretical support for hard-sample learning in NLP tasks.
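The selection step of OHEM can be illustrated with a few lines of Python. This is a simplified sketch with assumed function names: it keeps only the highest-loss fraction of a batch and averages their losses for the backward pass, whereas Shrivastava et al. [28] operate on region proposals and also apply non-maximum suppression before selection.

```python
def ohem_select(losses, keep_ratio=0.25, min_keep=1):
    """Return (sorted) indices of the highest-loss samples in a batch.

    Only these samples would contribute gradients; the easy ones are ignored.
    """
    k = max(min_keep, int(len(losses) * keep_ratio))
    ranked = sorted(range(len(losses)), key=lambda i: losses[i], reverse=True)
    return sorted(ranked[:k])


def ohem_loss(losses, keep_ratio=0.25):
    """Mean loss over the mined hard samples only."""
    idx = ohem_select(losses, keep_ratio)
    return sum(losses[i] for i in idx) / len(idx)
```

For per-sample losses [0.1, 2.0, 0.3, 1.5, 0.05, 0.9, 0.2, 0.4] and keep_ratio=0.25, only samples 1 and 3 are kept, giving a mined loss of 1.75.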

In natural language processing, hard samples mainly take the form of text with fuzzy semantic boundaries, high ambiguity, or complex context, for example in sentiment analysis, text categorization, and natural language inference. Ma et al. [29] proposed a variant of Focal Loss that emphasizes hard samples during training, further improving the model’s sensitivity to semantic details.
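The details of the variant by Ma et al. [29] are not given here, so the sketch below shows the standard binary Focal Loss of Lin et al., FL(p_t) = −α_t (1 − p_t)^γ log p_t, on which such variants build. The modulating factor (1 − p_t)^γ shrinks the loss of well-classified examples so that training concentrates on hard ones; the defaults γ = 2 and α = 0.25 are common choices and are assumed here.

```python
import math


def binary_focal_loss(p, y, gamma=2.0, alpha=0.25, eps=1e-12):
    """Standard binary focal loss for a single example.

    p: predicted probability of the positive class; y: gold label (0 or 1).
    """
    p_t = p if y == 1 else 1.0 - p              # probability assigned to the true class
    alpha_t = alpha if y == 1 else 1.0 - alpha  # class-balancing weight
    # (1 - p_t) ** gamma is near 0 for confidently correct ("easy") examples.
    return -alpha_t * (1.0 - p_t) ** gamma * math.log(max(p_t, eps))
```

With these defaults, a positive example predicted at p = 0.1 receives over a thousand times the loss of one predicted at p = 0.9, whereas plain cross-entropy gives a ratio of about 22; setting γ = 0 recovers (α-weighted) cross-entropy.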

In addition, with the development of large language models (LLMs), some studies have begun to explore treating “prompt-failure samples” as hard samples for fine-tuning in prompt learning or zero-shot tasks. For example, Liu [30] found during prompt tuning that the model’s failed predictions under certain ambiguous prompts can be regarded as challenging samples for subsequent policy tuning.

3. Methodology

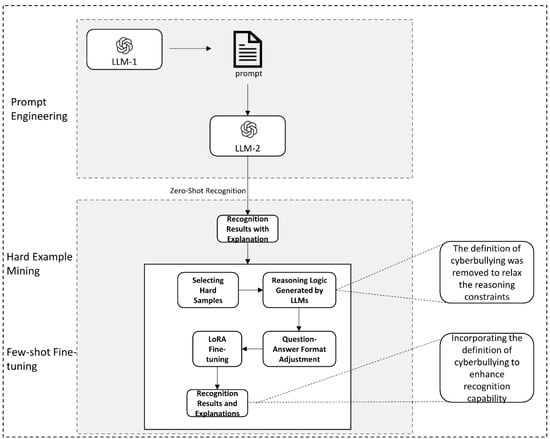

The research framework of this paper is shown in Figure 1. We fine-tune the instruction-tuned large language model Meta-Llama-3-8B-Instruct and propose a Small-Scale Hard-Example Adaptive Tuning Framework (S-HAT), which combines zero-shot recognition with model fine-tuning to improve generalization and accuracy and to enhance interpretability. Through large-model inference and LoRA fine-tuning, harmful content can be effectively identified and analyzed.

Figure 1.

Adaptive training framework diagram for small, difficult samples.

3.1. Model Selection

LLaMA (Large Language Model Meta AI) is a family of large-scale language models based on the Transformer architecture, proposed by Meta AI, that uses multi-layer self-attention to model the semantics of text with long-distance dependencies. Its pre-training data covers high-quality multilingual corpora such as Wikipedia, books, and academic papers, and it is trained autoregressively, learning the regularities of language by predicting the next token. Compared with the autoencoding (masked language modeling) approach of BERT, LLaMA is better at text generation while handling both natural language understanding and generation tasks, showing stronger adaptability and generalization.
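The contrast between the two pre-training objectives can be written out explicitly. For a token sequence $x_1, \dots, x_T$, the autoregressive objective used by LLaMA factorizes the sequence probability from left to right, while BERT’s masked language modeling objective predicts only a masked subset $\Omega$ of positions from the remaining tokens (standard formulations, shown here for illustration):

$$
p_\theta(x_1, \dots, x_T) = \prod_{t=1}^{T} p_\theta(x_t \mid x_{<t}), \qquad
\mathcal{L}_{\text{AR}}(\theta) = -\sum_{t=1}^{T} \log p_\theta(x_t \mid x_{<t})
$$

$$
\mathcal{L}_{\text{MLM}}(\theta) = -\sum_{t \in \Omega} \log p_\theta(x_t \mid x_{\setminus \Omega})
$$

Each autoregressive prediction conditions only on the left context, which is what allows the model to generate text token by token, whereas each masked prediction conditions on tokens on both sides.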

LLaMA is chosen as the basis of the cyberviolent speech recognition model because of its strong performance in low-resource settings and its ability to capture offensive, metaphorical, and context-dependent meanings. Moreover, LLaMA’s support for multilingual and cross-task transfer makes it well suited to recognizing diverse and complex expressions of cyberviolence, improving the accuracy and robustness of the recognition model.

Meta-Llama-3-8B-Instruct, used in this study, is an open-source large language model released by Meta (Facebook’s parent company). It belongs to the third generation of the LLaMA (Large Language Model Meta AI) family, has 8 billion parameters (8B), and is instruction-tuned to better understand and follow human instructions. We chose this model for three reasons. First, the strong language modeling capabilities of the Llama family allow it to perform well in multilingual environments, making it particularly suitable for cross-lingual sentiment analysis tasks. Second, Meta-Llama-3-8B-Instruct excels at understanding complex instructions and sentiment information and can capture multi-level changes in sentiment. Finally, as an openly released model, its weights and inference code are publicly available, providing rich resource support, and its parameter size strikes a good balance against computational resources, ensuring high performance while reducing the complexity of training and application. To suit the task of this research, we customized and optimized the model to explore its potential in sentiment analysis.

3.2. Prompt Design

Prompt engineering is the process of designing and optimizing interaction prompts for large language models (e.g., GPT-3, PaLM, etc.). With the rapid development of large language models, this field is becoming increasingly important. The core goal of prompt engineering is to generate relevant and high-quality outputs by designing appropriate prompts that help the model better understand the task requirements. For example, when performing sentiment analysis, the prompt could be “Please identify the sentiment tendency from the text and determine whether it is positive, negative or neutral, along with a brief justification.” The text reads, “Although the project was eventually completed, I was very disappointed by the arguments between team members throughout the process.” This prompt not only explicitly asks for sentiment categorization but also requires an explanation of the source of the sentiment, which helps to improve the relevance and interpretability of the model output.

The prompt design in this study relies on the collaboration of two large language models, aiming to achieve automated and efficient text processing and content judgment. Specifically, we use GPT-3.5, which has strong logical reasoning ability, as the prompt generation model; its reasoning and generation abilities are used to automatically produce task-specific prompts whose core function is to help judge whether a text contains online violent speech. The generated prompts are then applied to Meta-Llama-3-8B-Instruct, which has strong text-processing capabilities, for further sentiment analysis and content screening. Through this “LLM guides LLM” approach, we combine the reasoning and prompt design capabilities of GPT-3.5 with the text comprehension and sentiment analysis capabilities of Meta-Llama-3-8B-Instruct to achieve automated and precise detection of online violent speech. This approach enhances the task adaptability of the model and, to a certain extent, improves efficiency and accuracy on complex tasks. The generated prompts are shown in Table 1.
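As an illustration of how a generated prompt can be applied and the model’s reply turned into a label, the following Python sketch fills a zero-shot template and parses the answer. The template wording, the 1/0 answer convention, and the function names are hypothetical assumptions; the prompts actually used in this study are those in Table 1, and the call to Meta-Llama-3-8B-Instruct itself is left out.

```python
import re

# Hypothetical zero-shot template (not the study's Table 1 prompt).
TEMPLATE = (
    "Determine whether the following social media comment contains online violent speech "
    "(insults, hate speech, threats, or implicit attacks such as sarcasm). "
    "Answer with '1' for violent or '0' for non-violent on the first line, "
    "then give a brief justification.\n\nComment: {text}"
)


def build_prompt(text: str) -> str:
    """Insert the comment into the template (braces inside `text` are safe here)."""
    return TEMPLATE.format(text=text)


def parse_label(response: str):
    """Return 0/1 from the first standalone 0 or 1 in the reply, or None if absent."""
    match = re.search(r"(?<!\d)[01](?!\d)", response)
    return int(match.group()) if match else None
```

Returning None when no standalone 0 or 1 is found keeps unparseable replies separate instead of silently counting them as one class.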

Table 1.

Prompt generation results.

3.3. LoRA Fine-Tuning

Low-Rank Adaptation (LoRA) [31] is a parameter-efficient fine-tuning method proposed by Microsoft to reduce the computational cost and resource consumption of training large language models (LLMs). It restricts the update of model weight matrices to a low-rank subspace and is typically applied to attention weight matrices in the Transformer architecture, such as Wq (query weights) and Wv (value weights). Wq maps inputs to query vectors, which are compared with keys to compute attention weights and thus determine which positions the model attends to, while Wv maps inputs to value vectors, which form the basis of the final context representation. Because these two matrices play a central role in information extraction and context modeling, applying low-rank updates to them significantly reduces the number of trainable parameters and improves fine-tuning efficiency and generalization while maintaining model performance. Studies have shown that LoRA achieves results comparable to, or even better than, full fine-tuning on a variety of pre-trained models (e.g., GPT and BERT) while introducing only a small number of additional parameters. Because the low-rank updates can be stored and swapped independently of the shared base weights, LoRA also supports fast task switching in multi-task scenarios, significantly reducing storage and deployment costs without introducing additional inference latency. In practice, setting the rank to 8 preserves the key singular-vector directions while avoiding the accumulation of training noise. Experiments also show that adapting Wq and Wv jointly performs better than adapting only Wq or only Wk.
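To give a sense of scale for adapting only Wq and Wv at rank 8, the following Python sketch counts the trainable parameters that LoRA adds. The model dimensions are assumptions taken from the published Meta-Llama-3-8B configuration (hidden size 4096, 32 decoder layers, 8 key/value heads of dimension 128 under grouped-query attention), which makes Wv a 4096 × 1024 projection rather than a square matrix.

```python
# Assumed Meta-Llama-3-8B shapes (public config): hidden size 4096, 32 decoder layers,
# 8 key/value heads of dimension 128 (grouped-query attention).
HIDDEN, LAYERS, KV_HEADS, HEAD_DIM = 4096, 32, 8, 128


def lora_params(d_in: int, d_out: int, r: int) -> int:
    """Parameters LoRA adds to one d_out x d_in weight: B (d_out x r) plus A (r x d_in)."""
    return r * (d_in + d_out)


def count_qv(r: int = 8):
    """Return (LoRA trainable params, params in the adapted W_q and W_v) over all layers."""
    kv_dim = KV_HEADS * HEAD_DIM  # 1024: output width of W_v under GQA
    per_layer_lora = lora_params(HIDDEN, HIDDEN, r) + lora_params(HIDDEN, kv_dim, r)
    per_layer_full = HIDDEN * HIDDEN + HIDDEN * kv_dim
    return LAYERS * per_layer_lora, LAYERS * per_layer_full


lora, full = count_qv(8)
print(f"{lora:,} LoRA params vs {full:,} adapted weights ({100 * lora / full:.2f}%)")
```

Under these assumptions, rank-8 LoRA on Wq and Wv adds 3,407,872 trainable parameters, about 0.5% of the 671,088,640 weights in the adapted matrices and roughly 0.04% of the full 8-billion-parameter model.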
In addition, the low-rank update can be merged explicitly into the frozen weights via Equation (1), which supports efficient task switching and reduces memory usage and latency during inference:

$$W = W_0 + BA, \qquad B \in \mathbb{R}^{d \times r},\; A \in \mathbb{R}^{r \times k},\; r \ll \min(d, k) \tag{1}$$

where $W$ denotes the fine-tuned weight matrix, $W_0 \in \mathbb{R}^{d \times k}$ is the frozen weight of the pre-trained model, $B$ and $A$ are trainable low-rank matrices, and $r$ denotes a rank much smaller than the original dimensions $d$ and $k$. During training, only $B$ and $A$ are updated while $W_0$ is kept unchanged, which greatly reduces the number of trainable parameters. In the inference phase, the full weights are restored by computing the matrix product $BA$ and adding it to $W_0$, enabling fast, low-cost deployment; subtracting $BA$ recovers $W_0$ when switching to another task.
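The following NumPy sketch mirrors Equation (1), W = W0 + BA, for a single weight matrix: the forward pass adds the low-rank path BAx to the frozen path W0x, merge() folds BA into the weights for deployment, and unmerge() recovers W0. It is an illustration under assumptions: the class and method names are hypothetical, and the α/r scaling factor used by common LoRA implementations is omitted to stay consistent with Equation (1). B is zero-initialized and A randomly initialized, as in the original LoRA paper.

```python
import numpy as np


class LoRALinear:
    """h = W0 x + B A x with W0 frozen; shapes W0: d x k, B: d x r, A: r x k."""

    def __init__(self, w0, r, rng):
        d, k = w0.shape
        self.w0 = w0                                  # frozen pre-trained weight
        self.a = rng.normal(scale=0.01, size=(r, k))  # trainable, random init
        self.b = np.zeros((d, r))                     # trainable, zero init -> BA = 0 at start
        self.merged = False

    def forward(self, x):
        if self.merged:
            return self.w0 @ x                        # one matmul: no extra inference latency
        return self.w0 @ x + self.b @ (self.a @ x)    # frozen path + low-rank path

    def merge(self):
        """Fold the update into the weights for deployment: W = W0 + BA."""
        if not self.merged:
            self.w0 = self.w0 + self.b @ self.a
            self.merged = True

    def unmerge(self):
        """Recover W0 = W - BA, e.g. before loading another task's (B, A)."""
        if self.merged:
            self.w0 = self.w0 - self.b @ self.a
            self.merged = False
```

Because B starts at zero, the adapted layer initially behaves exactly like the pre-trained one, and after merging, inference costs a single matrix product.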

3.4. Small-Scale Hard-Example Adaptive Training Framework

To balance the adaptability, interpretability, and deployment efficiency of large language models in few-shot tasks, this paper proposes the Small-Scale Hard-Sample Adaptive Tuning Framework (S-HAT). This framework integrates LoRA fine-tuning, prompt engineering, and hard-sample mining, not as a simple aggregation but as an organically unified design grounded in the following theoretical motivations:

First, LoRA leverages its parameter-efficient structure to enable rapid task adaptation in low-resource scenarios by injecting learnable rank-decomposed matrices into frozen pre-trained weights. Second, prompt engineering explicitly encodes classification criteria through natural language task prompts, activating the model’s latent semantic understanding—particularly effective for the preliminary detection and task-aware alignment of online hate speech with ambiguous semantic boundaries. Third, the automated mining and retraining of hard samples emphasize the importance of “the most informative samples during training,” significantly enhancing the model’s ability to learn discriminative boundaries in the presence of vague semantics and subtly aggressive expressions.

The synergistic integration of these three components aims to improve the model’s capacity to perceive and model the breakdown of semantic–structural symmetry in online abusive language. Building on this, S-HAT employs prompt-based mechanisms to identify reasoning failures, guiding the model to generate explicit analysis pathways. This process constructs a high-information-density, reasoning-oriented few-shot training set, enabling lightweight fine-tuning while substantially enhancing the model’s boundary reasoning and generalization capabilities.

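In the initial stage, we use the prompt-driven large language model $\mathcal{M}$ to perform zero-shot classification. Given a dataset $\mathcal{D} = \{(x_i, y_i)\}_{i=1}^{N}$ whose gold labels $y_i$ are not shown to the model, $\mathcal{M}$ generates a predicted label for each text $x_i$ under the guidance of a natural language prompt $P$:

$$\hat{y}_i = \mathcal{M}(P, x_i), \qquad i = 1, \dots, N$$

Subsequently, we identify the hard examples on which the model prediction is incorrect, $\mathcal{H} = \{(x_i, y_i) \in \mathcal{D} \mid \hat{y}_i \neq y_i\}$, and prompt the model to generate a corresponding reasoning trace $r_i$ for each of them, forming an augmented hard-example set. To ensure class balance and representation diversity during fine-tuning, we randomly sample an equal number of positive and negative examples from the augmented set to construct the final training dataset:

$$\mathcal{D}_{\text{train}} = \{(x_i, y_i, r_i) \mid (x_i, y_i) \in \mathcal{S}\}, \qquad \mathcal{S} \subset \mathcal{H}, \quad |\mathcal{S}^{+}| = |\mathcal{S}^{-}|$$

Here, $\mathcal{H}$ denotes the full hard-example set, $\mathcal{S}$ is a randomly sampled subset containing an equal number of positive ($\mathcal{S}^{+}$) and negative ($\mathcal{S}^{-}$) instances, and $(x_i, y_i, r_i)$ represents the pairing of each sample with its corresponding reasoning trace.

Finally, we apply Low-Rank Adaptation (LoRA) to fine-tune the large language model on this curated training set, yielding an optimized model:

$$\mathcal{M}^{*} = \mathrm{LoRA}\left(\mathcal{M}, \mathcal{D}_{\text{train}}\right)$$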

Through this process, the S-HAT framework enhances the boundary inference capabilities of the model using only a small number of high-quality hard examples, demonstrating efficient and effective model adaptation.
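The data-construction steps of S-HAT can be sketched in Python as follows. The `classify` and `explain` callables are hypothetical stand-ins for prompting Meta-Llama-3-8B-Instruct (zero-shot label, then reasoning trace); passing the gold label to `explain`, the record format, and the fixed random seed are likewise illustrative assumptions. The default of 12 samples per class matches the 24-sample setting described for ToxiCN; the LoRA fine-tuning step itself is not shown.

```python
import random
from typing import Callable, Dict, List, Tuple

Sample = Tuple[str, int]  # (text, gold label): 1 = violent, 0 = non-violent


def build_training_set(data: List[Sample],
                       classify: Callable[[str], int],
                       explain: Callable[[str, int], str],
                       per_class: int = 12,
                       seed: int = 0) -> List[Dict]:
    """Sketch of S-HAT data construction (hypothetical helper, not the authors' code)."""
    # Step 1: zero-shot prediction; keep samples whose prediction differs from the gold label.
    hard = [(x, y) for x, y in data if classify(x) != y]
    # Step 2: attach a model-generated reasoning trace to every hard sample.
    augmented = [(x, y, explain(x, y)) for x, y in hard]
    pos = [s for s in augmented if s[1] == 1]
    neg = [s for s in augmented if s[1] == 0]
    if len(pos) < per_class or len(neg) < per_class:
        raise ValueError("not enough misclassified samples for a balanced subset")
    # Step 3: class-balanced random subset of the augmented hard set.
    rng = random.Random(seed)
    subset = rng.sample(pos, per_class) + rng.sample(neg, per_class)
    return [{"text": x, "label": y, "reasoning": r} for x, y, r in subset]
```

Raising an error when either class has too few misclassified samples makes the balance requirement explicit rather than silently producing a skewed training set.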

3.5. Data Preparation and Evaluation Metrics

To evaluate the performance of the large language model on the recognition task, we use the publicly available ToxiCN dataset as the basis for our experiments. ToxiCN was adopted as the only Chinese-language resource in an international evaluation task, the CLEF 2024 Multilingual Text Detoxification subtask [27].

The dataset was constructed by collecting posts from two major Chinese social media platforms, Zhihu and Baidu Tieba, focusing on four common sensitive topics: gender, race, region, and LGBTQ. After keyword filtering and data cleaning, a total of 12,011 comments were retained. The dataset is publicly available at [https://github.com/DUT-lujunyu/ToxiCN], accessed on 31 July 2025.

For annotation, ToxiCN adopts a hierarchical labeling system called the MONITOR TOXIC FRAME. Each sample is annotated in the following order: (1) whether it contains harmful content; (2) the type of toxicity (general offense or hate speech); (3) the targeted group (e.g., gender, race, etc.); and (4) the mode of expression (direct, implicit, or declarative). Harmful content is further divided into general offensive content and four types of hate speech. Modes of expression are also categorized into explicit attacks, implicit expressions (such as sarcasm and stereotypes), and non-prejudicial statements. Each sample was independently annotated by at least three annotators with backgrounds in linguistics, and the final labels were determined by majority vote. To minimize bias, annotators were selected from diverse backgrounds in terms of gender, age, region, and education level (including undergraduate, master’s, and doctoral degrees) and received systematic training. To assess annotation consistency, the authors reported Fleiss’ Kappa scores for the four levels: 0.62 for toxicity identification, 0.75 for type differentiation, 0.65 for group identification, and 0.68 for expression mode classification, indicating moderate to substantial agreement. Additionally, ToxiCN includes an insult lexicon containing 1032 terms, covering both explicit and implicit offensive vocabulary. This lexicon highlights the diverse forms of sarcasm, homophones, and metaphorical expressions specific to the Chinese online context.
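The majority-vote labeling and the agreement statistic described above can be sketched in Python. Fleiss’ kappa is κ = (P̄ − P̄_e)/(1 − P̄_e), where P̄ is the mean per-item agreement among annotators and P̄_e the agreement expected by chance from the overall category proportions. The function names and input format (one list of labels per item, the same number of annotators per item) are assumptions; this is not the ToxiCN authors’ code, and κ is undefined when every rating falls into a single category (P̄_e = 1).

```python
from collections import Counter


def majority_vote(labels):
    """Most frequent label among one item's annotations; None if the top count is tied."""
    counts = Counter(labels).most_common()
    if len(counts) > 1 and counts[0][1] == counts[1][1]:
        return None
    return counts[0][0]


def fleiss_kappa(ratings, categories):
    """Fleiss' kappa for N items, each labelled by the same number n >= 2 of raters."""
    n = len(ratings[0])
    if n < 2 or any(len(r) != n for r in ratings):
        raise ValueError("every item needs the same number (>= 2) of ratings")
    counters = [Counter(r) for r in ratings]
    table = [[cnt[c] for c in categories] for cnt in counters]  # n_ij
    if any(sum(row) != n for row in table):
        raise ValueError("found a label outside the given categories")
    num_items = len(table)
    # Mean per-item agreement: P_i = (sum_j n_ij^2 - n) / (n (n - 1)).
    p_bar = sum((sum(c * c for c in row) - n) / (n * (n - 1)) for row in table) / num_items
    # Chance agreement from the marginal category proportions p_j.
    p_j = [sum(row[j] for row in table) / (num_items * n) for j in range(len(categories))]
    p_e = sum(p * p for p in p_j)
    return (p_bar - p_e) / (1 - p_e)
```

For two items rated [1, 1, 0] and [0, 0, 1] by three annotators, majority voting yields labels 1 and 0, while κ = −1/3, i.e., agreement below chance; identical ratings within each item give κ = 1.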

The distribution and data samples of the ToxiCN dataset are shown in Table 2, where label 0 represents non-violent speech and label 1 represents violent speech.

Table 2.

Overview and examples of the ToxiCN dataset.

First, in the initial identification phase, the model classified texts directly, without any task-specific training, to establish its zero-shot performance. We then analyzed the zero-shot predictions, extracted the misclassified samples, and built a small hard-sample set from them. Specifically, we randomly selected 12 positive (violent) and 12 negative (non-violent) samples from the misclassified samples to obtain a class-balanced set (see Table 3 for details). Because these hard samples carry high information density, the S-HAT framework can substantially improve model performance with only 24 training samples, reducing annotation cost and enabling fast fine-tuning with good generalization; this offers a feasible path for few-shot learning and efficient deployment.
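The hard-sample construction step can be sketched as follows. This is a minimal illustration assuming a generic `predict` callable that wraps the zero-shot prompted model and returns 1 (violent) or 0 (non-violent); the function name `mine_hard_samples` and the fixed random seed are hypothetical choices, not details reported in the paper.

```python
import random


def mine_hard_samples(samples, predict, per_class=12, seed=0):
    """Return a class-balanced random subset of the samples the zero-shot model gets wrong.

    samples: list of (text, gold_label) pairs with 1 = violent and 0 = non-violent.
    predict: callable mapping a text to a predicted label (e.g., a prompted LLM).
    """
    errors = {0: [], 1: []}
    for text, gold in samples:
        if predict(text) != gold:
            errors[gold].append((text, gold))  # gold 1 -> false negative, gold 0 -> false positive

    rng = random.Random(seed)  # fixed seed keeps the draw reproducible
    hard = []
    for label in (1, 0):
        pool = errors[label]
        if len(pool) < per_class:
            raise ValueError(f"only {len(pool)} misclassified samples with label {label}")
        hard.extend(rng.sample(pool, per_class))
    rng.shuffle(hard)
    return hard
```

Grouping errors by gold label means the 12 positive samples are false negatives of the zero-shot model and the 12 negative samples are false positives, so both error types are represented in the 24-sample set.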

Table 3.

Distribution and examples of hard-sample data.

To strengthen the model’s semantic reasoning on hard samples, each training example retains the original text and its category label and additionally includes an analysis generated by the model itself, which supports comprehension and attribution. Notably, when constructing the training data, we deliberately did not provide an explicit definition of “violent speech on the Internet”. This keeps the model from being constrained by a priori concepts when generating analyses, encouraging it to reason autonomously from context and to produce more explanatory and diverse outputs (as shown in Table 3).
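A training example of the kind described above might be assembled as in the sketch below. The instruction/input/output record layout (a common format for instruction-tuning toolkits), the instruction wording, and the `Label:` suffix are assumptions for illustration; the paper does not specify its exact template. Consistent with the text, the instruction contains no definition of violent speech, and the analysis is passed in as a string because how it was generated for each sample is not detailed here.

```python
import json

# Hypothetical instruction: deliberately contains NO definition of online violent speech,
# mirroring the training-time design described in the text.
TRAIN_INSTRUCTION = (
    "Read the following social media comment, analyze whether it is online violent speech, "
    "and end your answer with 'Label: 1' (violent) or 'Label: 0' (non-violent)."
)


def build_training_record(text, gold_label, model_analysis):
    """One supervised example: the model's own analysis followed by the gold label."""
    if gold_label not in (0, 1):
        raise ValueError("label must be 0 or 1")
    return {
        "instruction": TRAIN_INSTRUCTION,
        "input": text,
        "output": f"{model_analysis.strip()}\nLabel: {gold_label}",
    }


def write_jsonl(records, path):
    """Write one JSON object per line; ensure_ascii=False keeps Chinese text readable."""
    with open(path, "w", encoding="utf-8") as f:
        for rec in records:
            f.write(json.dumps(rec, ensure_ascii=False) + "\n")
```

Placing the analysis before the label means the fine-tuned model learns to produce its reasoning first and its verdict last.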

In the testing stage, to improve the model’s discrimination of borderline samples and ambiguous cases, we included a definition of “online violent speech” in the prompt to guide the model toward more accurate classification.
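At test time the prompt differs mainly by including a definition. The sketch below assumes a hypothetical convention in which the model ends its answer with `Label: 1` or `Label: 0`, and it uses a placeholder string for the definition because the paper’s exact definition text is not reproduced here; `build_test_prompt` and `parse_label` are illustrative names.

```python
import re

# Placeholder: the exact definition wording used in the test-time prompt is not given here.
VIOLENCE_DEFINITION = "<definition of online violent speech used at test time>"


def build_test_prompt(text, definition=VIOLENCE_DEFINITION):
    """Prepend the definition, then ask for reasoning and a final label."""
    return (
        f"Definition: {definition}\n"
        "Using the definition above, decide whether the following comment is online violent speech. "
        "Explain your reasoning, then end with 'Label: 1' (violent) or 'Label: 0' (non-violent).\n"
        f"Comment: {text}"
    )


def parse_label(generation):
    """Take the last 'Label: 0/1' in the output; None if the model ignored the format."""
    matches = re.findall(r"Label:\s*([01])", generation)
    return int(matches[-1]) if matches else None
```

Taking the last match tolerates reasoning that mentions a label before the final verdict, and unparseable outputs come back as `None` so they can be counted separately instead of being silently assigned a class.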

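In all experiments, precision (Pre) is used as the evaluation metric for classification, as defined in Equation (5):

$$\mathrm{Pre} = \frac{TP}{TP + FP} \tag{5}$$

where TP (true positives) is the number of violent samples correctly predicted as violent and FP (false positives) is the number of non-violent samples incorrectly predicted as violent.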

Because misjudging normal speech as violent speech (a false positive) can have a large negative impact in online violence recognition, precision is used to measure how reliable the model’s violent-speech predictions are: higher precision means fewer false positives, which improves the system’s practical usefulness.
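The precision metric, Pre = TP / (TP + FP), translates directly into code. This small Python sketch treats label 1 (violent speech) as the positive class; the `precision` name and the choice to return 0.0 when the model makes no positive predictions are assumptions of this illustration.

```python
def precision(y_true, y_pred, positive=1):
    """Pre = TP / (TP + FP), with label 1 (violent speech) as the positive class."""
    if len(y_true) != len(y_pred):
        raise ValueError("y_true and y_pred must have the same length")
    tp = sum(1 for t, p in zip(y_true, y_pred) if p == positive and t == positive)
    fp = sum(1 for t, p in zip(y_true, y_pred) if p == positive and t != positive)
    if tp + fp == 0:
        return 0.0  # no positive predictions: precision is undefined; 0.0 is a convention
    return tp / (tp + fp)


# TP = 2, FP = 1; the violent sample missed at the end (a false negative) does not affect precision
print(precision([1, 0, 1, 0, 1], [1, 1, 1, 0, 0]))  # ≈ 0.667
```

Because false negatives never enter the formula, precision says nothing about missed violent content, such as the covert cases discussed in the Limitations section.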

3.6. Experimental Setup and Comparison Models

In this study, computing resources were provided by Zhongke Shituo (Nanjing) Technology Co., Ltd. (Nanjing, China); the computing platform consisted of an NVIDIA RTX 4090 GPU and a 16-vCPU Intel(R) Xeon(R) Gold 6430 CPU. Meta-Llama-3-8B-Instruct was chosen as the base model. It is built on the Transformer architecture and shows strong semantic understanding and context modeling in natural language processing tasks, which makes it well suited to identifying violent speech in complex contexts.

In the model training phase, we use the LoRA (Low-Rank Adaptation) fine-tuning method with the following configuration:

- Learning rate: 1 × 10⁻⁵.

- Number of training epochs: 10.

- LoRA rank (r): 8.

- LoRA scaling factor (alpha): 32.

- Learning-rate multiplier for the B matrix in LoRA+: 16.
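The settings above imply a few derived quantities, computed in the sketch below. It assumes the standard LoRA scaling of the update by alpha / r and the LoRA+ rule that the B matrices use the base learning rate multiplied by the stated ratio. The `LoraSetup` class is hypothetical, and the 4096 × 4096 projection in the example is only illustrative: 4096 is the hidden size of Llama-3-8B, but the paper does not state which modules were adapted.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class LoraSetup:
    """Hypothetical container for the reported LoRA / LoRA+ hyperparameters."""

    learning_rate: float = 1e-5
    epochs: int = 10
    rank: int = 8
    alpha: int = 32
    loraplus_lr_ratio: float = 16.0  # LoRA+: B matrices get a larger learning rate than A

    @property
    def scaling(self) -> float:
        # The low-rank update B @ A is multiplied by alpha / r before being added to W
        return self.alpha / self.rank

    @property
    def lr_A(self) -> float:
        return self.learning_rate

    @property
    def lr_B(self) -> float:
        return self.learning_rate * self.loraplus_lr_ratio

    def trainable_params(self, d_out: int, d_in: int) -> int:
        # A has shape (r, d_in) and B has shape (d_out, r)
        return self.rank * (d_in + d_out)


cfg = LoraSetup()
print(cfg.scaling)  # 4.0
print(cfg.lr_A, cfg.lr_B)  # base LR for A, 16x that LR for B
print(cfg.trainable_params(4096, 4096), 4096 * 4096)  # 65536 trainable vs 16777216 frozen
```

With r = 8, the adapter for such a projection has 8 × (4096 + 4096) = 65,536 trainable parameters, about 0.39% of the 16,777,216 frozen weights it modifies.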

With this configuration, LoRA optimizes only the low-rank matrices while keeping the model’s original parameters frozen, which substantially reduces computational overhead while improving the model’s ability to identify violent speech. During the experiments, data input and processing were handled through the model’s API, automating the training and inference pipeline and supporting real-time processing. Combining the Meta-Llama-3-8B-Instruct architecture with LoRA fine-tuning thus provides a high-performance, lightweight solution for violent speech recognition, and the configuration reported above supports the reproducibility of the experiments.
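The mechanism described above, a frozen weight plus a trainable low-rank correction, can be written in a few lines of NumPy. This is a didactic sketch of standard LoRA, not the training code used in the paper; the class name `LoRALinear`, the toy dimensions, and the initialization scale of A are illustrative assumptions. During fine-tuning only `A` and `B` would receive gradients, while `weight` stays fixed.

```python
import numpy as np


class LoRALinear:
    """Linear layer with a frozen weight W and a trainable low-rank update (alpha / r) * B @ A."""

    def __init__(self, weight, rank=8, alpha=32, init_std=0.01, seed=0):
        rng = np.random.default_rng(seed)
        d_out, d_in = weight.shape
        self.weight = weight  # frozen pre-trained weight: never updated
        self.scaling = alpha / rank
        # LoRA initialization: A random, B zero, so the adapter starts as a no-op
        self.A = rng.normal(0.0, init_std, size=(rank, d_in))  # trainable
        self.B = np.zeros((d_out, rank))  # trainable

    def __call__(self, x):
        # y = x W^T + (alpha / r) * x A^T B^T; the low-rank path never forms a d_out x d_in matrix
        return x @ self.weight.T + self.scaling * ((x @ self.A.T) @ self.B.T)

    def merged_weight(self):
        # For deployment the update can be folded into W: W' = W + (alpha / r) * B @ A
        return self.weight + self.scaling * (self.B @ self.A)


rng = np.random.default_rng(1)
W = rng.normal(size=(16, 32))
x = rng.normal(size=(4, 32))
layer = LoRALinear(W)
print(np.allclose(layer(x), x @ W.T))  # True: B starts at zero, so output equals the base layer
```

Initializing B to zero makes the adapted layer behave exactly like the base layer at the start of training, and `merged_weight` shows why a trained adapter adds no inference latency: the update can be folded into W.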

Three baseline models from the ToxiCN literature [27] are selected for comparison with the prompt-engineering and fine-tuned models proposed in this paper.

BiLSTM: A classical bidirectional long short-term memory network that captures both forward and backward contextual information in a text sequence, yielding more complete and accurate text representations; it is widely used in natural language processing tasks.

BERT: A Transformer-based pre-trained language model that learns bidirectional contextual representations from large-scale corpora; it has become a fundamental model for many natural language processing tasks.

RoBERTa: An improved version of BERT. It removes the Next Sentence Prediction objective and trains on more data, for longer, and with larger batches, which significantly improves performance on many downstream tasks, especially classification and inference tasks.

4. Experiments and Analysis of Results

4.1. Analysis of Experimental Results

This study compares several mainstream models on the violent speech recognition task: BiLSTM, BERT, RoBERTa, and Meta-Llama-3-8B-Instruct. All experiments are conducted on the same dataset, and the results are shown in Table 4 [27].

Table 4.

Experimental results of different models.

The results show that RoBERTa outperforms BiLSTM and BERT, reaching a precision of 80.8%. S-HAT reaches a precision of 84.1%, 3.3 percentage points above RoBERTa, and achieves the best recognition performance among all compared models.

These results support the effectiveness of prompt design for large language models and show that combining LoRA fine-tuning with hard-sample retraining can unlock more of the model’s latent capability, strengthening its understanding of complex semantic and contextual information and thereby improving the precision of violent speech recognition.

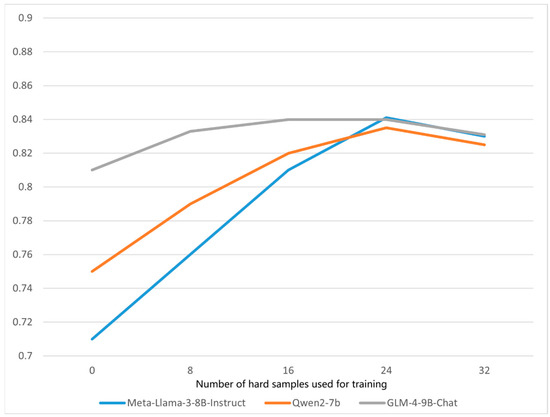

In Figure 2, the vertical axis represents precision. In the zero-shot setting, the LLaMA model, which lacks dialogue-specific training, performs considerably worse than the other two models, with a precision of only 0.71, suggesting a weaker ability to detect toxic language in dialogue inputs. As the number of training samples increases, however, LLaMA’s precision rises markedly and approaches that of the other models, which suggests that training on hard samples enhances its dialogue comprehension.

Figure 2.

Experimental results on the ToxiCN dataset with different backbone models and numbers of difficult samples.

Moreover, the results obtained with different numbers of hard samples support the choice of 24 randomly selected samples as reasonable and representative under the current setting.

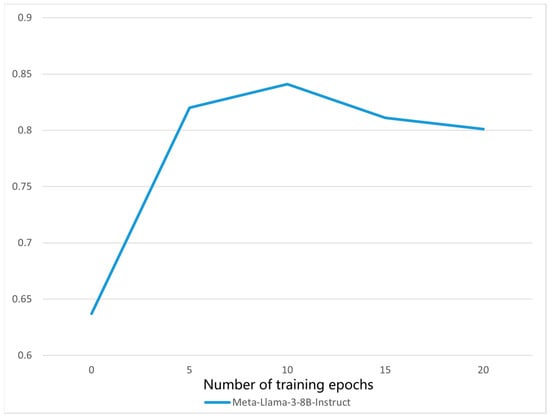

As shown in Figure 3, we fixed the number of training epochs at 10 based on multiple experimental runs: across the epoch settings tested, the model performed best on the validation set at 10 epochs, a setting that also balances training efficiency against the risk of overfitting.

Figure 3.

Graph of experimental results under different numbers of training epochs.

4.2. Ablation Study

To verify the effectiveness of each component of the proposed method, we conducted a systematic ablation study in which the following three core components were removed, individually or in combination, and the resulting variants were compared:

- (1) The LoRA fine-tuning module;

- (2) The prompt module;

- (3) The hard-sample mining mechanism.

Through these controlled comparisons, we aim to evaluate the contribution of each module as well as the synergy that arises when modules are combined.

4.2.1. Experimental Setup

Under the same dataset and evaluation metrics as the main experiment, we constructed the following three model variants:

PT (without LoRA module): The LoRA fine-tuning module is removed and only the prompt module is used, testing the effect of prompt tuning alone.

Meta-Llama-3-8B-Instruct-LoRA (without hard-sample mining and prompt module): Both the prompt module and the hard-sample mining strategy are removed. LoRA fine-tuning is applied only on the original training set to verify LoRA’s effectiveness without external strategy interventions.

S-HAT (full model): All components are retained, including LoRA fine-tuning, the prompt module, and the hard-sample mining mechanism. This variant serves as the control group.

4.2.2. Results and Analysis

Table 5 presents the performance comparison under different model configurations, with precision as the primary evaluation metric.

Table 5.

Experimental results of the ablation study.

From the table, we can observe the following:

- Contribution of the LoRA module: Compared with the full model, the PT variant declines on all metrics, indicating that LoRA fine-tuning plays a key role in optimizing model parameters and substantially enhances the model’s overall discriminative ability.

- Contribution of the prompt and mining mechanisms: The performance of Meta-Llama-3-8B-Instruct-LoRA is also relatively poor, indicating that the prompt mechanism and hard-sample mining are important for improving the model’s ability to recognize minority classes.

- Collaborative effect: The full model (S-HAT) clearly outperforms both ablation variants on all metrics, showing that combining the three components is superior to using any single module alone.

The ablation results indicate that LoRA fine-tuning, the prompt module, and the hard-sample mining mechanism each contribute to performance and that their combination is especially effective in few-shot scenarios, providing empirical support for the design of the proposed method.

4.3. Case Studies and Interpretive Enquiry

To examine the completeness and interpretability of the model’s reasoning when identifying violent speech, we further analyze the explanations it generates. Table 6 shows a typical example, in which the underlined text marks the prompt design that performed best in the zero-shot task. The S-HAT method first performs zero-shot violent speech recognition through prompting and then retrains on hard samples with LoRA fine-tuning to improve the model’s discrimination in complex scenarios.

Table 6.

Example of identification of cyberviolent speech.

This method not only makes the model more sensitive to subtle linguistic details but also improves its judgment of the underlying meaning of a text, providing a more accurate technical path and theoretical framework for violent speech recognition. Notably, the S-HAT model outputs its reasoning and analysis alongside each classification result. Traditional classifiers such as BiLSTM, BERT, and RoBERTa output only a label, so this feature is a clear advantage in interpretability and transparency and provides important support for subsequent human–machine collaboration and decision-making.

5. Limitations

In identifying online hate speech, the model still makes a certain proportion of misclassifications, in two main forms: (1) false negatives, where violent or abusive content is classified as non-violent; and (2) false positives, where benign content is identified as violent. These errors reveal the model’s limitations in handling implicit semantics, cultural metaphors, and context. Examples are given in Table 7.

Table 7.

Examples of Recognition Failures.

For example, consider the following sentence:

“Hey fellow, want a manhole cover? A bearded man smiles at you.”

The model classifies this text as non-violent (output = 0). A deeper semantic reading, however, reveals regional discrimination. In Chinese internet discourse, the phrase “manhole cover” is a derogatory metaphor that stereotypes people from certain regions as prone to theft. Although the surface tone appears neutral or even friendly, the underlying meaning carries discriminatory intent, exposing the model’s weakness in detecting covert hate speech. Such expressions are embedded in cultural background and group cognition, and the model lacks the sensitivity needed to recognize these semantic metaphors.

On the other hand, false positives often arise because the model is overly sensitive to violent-sounding surface vocabulary and lacks proper contextual understanding. For instance, consider the following sentence:

“Can I put it with the tilapia… it should get rid of the cleaner fish, right?”

This sentence is about aquatic ecology and fish behavior and carries no hostile or abusive meaning. However, the model misclassifies it as violent (output = 1) because of keywords such as “get rid of” and “ferocious,” which appear violent in isolation. This shows inadequate contextual disambiguation: the model fails to distinguish neutral or scientific usage from genuinely violent content and, lacking deep semantic reasoning and contextual comprehension, is prone to misjudging neutral or even technical discourse.
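Both failure modes share one cause: the decision tracks surface form rather than intent. A deliberately naive keyword matcher makes this concrete. The sketch is a caricature for illustration, not a model of how S-HAT works; the keyword list is hypothetical (it is not the ToxiCN insult lexicon), and the English renderings stand in for the original Chinese texts.

```python
# Hypothetical keyword list for illustration only; it is NOT the ToxiCN insult lexicon.
VIOLENT_KEYWORDS = ("get rid of", "ferocious", "kill", "beat up")


def keyword_classifier(text):
    """Return 1 (violent) if any surface keyword occurs, ignoring context entirely."""
    lowered = text.lower()
    return int(any(keyword in lowered for keyword in VIOLENT_KEYWORDS))


# English renderings of the two cases discussed in this section
false_positive = "Can I put it with the tilapia… it should get rid of the cleaner fish, right?"
false_negative = "Hey fellow, want a manhole cover? A bearded man smiles at you."

print(keyword_classifier(false_positive))  # 1: neutral aquarium talk flagged because of "get rid of"
print(keyword_classifier(false_negative))  # 0: regional stereotype missed, no violent keyword present
```

A system that leans on surface cues errs in exactly these two directions, which is the form–intent asymmetry that the proposed remedies below target.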

In summary, the current model faces three main challenges in online violence detection:

- Lack of understanding and perception of implicit discrimination, satire, and regional stereotypes embedded in sociocultural expressions;

- Limited semantic parsing ability, making it difficult to distinguish between genuinely aggressive semantics and non-aggressive uses of violent-sounding terms;

- Inability to dynamically adapt to polysemous expressions, metaphorical language, and evolving internet slang.

To address these challenges, future work can explore the following practical strategies:

- Incorporating external knowledge enhancement mechanisms: Integrate encyclopedic knowledge, regional dictionaries, and slang databases as retrieval-based or embedded modules to help the model identify culturally embedded metaphors, regional discrimination, and stereotypical expressions, thereby improving its understanding of deeper semantic meanings.

- Applying contrastive learning for semantic disambiguation: Train the model to recognize fine-grained semantic boundaries by constructing positive and negative-sample pairs (e.g., “violent use” vs. “neutral use” of the word “eliminate”), enhancing its ability to differentiate pragmatic meanings of the same word across different contexts.

- Integrating dynamic vocabulary adaptation mechanisms: Build a “dynamic online corpus pool” from real-time social media data and use vocabulary transfer learning techniques to update word embeddings, allowing the model to adapt to newly emerging slang, puns, homophones, and other ambiguous expressions in online discourse.

- Multilingual and cross-cultural fine-tuning: In multilingual social contexts, incorporate annotated datasets in multiple languages and embed cultural adaptation signals (e.g., regional tags and contextual markers) during training to improve the model’s ability to generalize and transfer across different cultural expression strategies.
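The contrastive-learning strategy above could be instantiated with the classic margin-based pairwise loss, sketched below in NumPy. This is one possible choice among several (InfoNCE-style losses are another) and not a method evaluated in this paper; the sentence embeddings are assumed to come from some encoder not shown here, and `contrastive_pair_loss` and `batch_loss` are hypothetical names.

```python
import numpy as np


def contrastive_pair_loss(z1, z2, same_usage, margin=1.0):
    """Margin-based contrastive loss for one pair of sentence embeddings.

    same_usage=True  -> both sentences use the word in the same sense: pull them together.
    same_usage=False -> e.g. a violent vs. a neutral use of "eliminate": push them at least
                        `margin` apart; pairs already farther apart than the margin cost nothing.
    """
    d = float(np.linalg.norm(np.asarray(z1, dtype=float) - np.asarray(z2, dtype=float)))
    if same_usage:
        return d ** 2
    return max(0.0, margin - d) ** 2


def batch_loss(pairs, margin=1.0):
    """Average loss over (z1, z2, same_usage) triples."""
    losses = [contrastive_pair_loss(z1, z2, same, margin) for z1, z2, same in pairs]
    return sum(losses) / len(losses)


# A violent and a neutral use of the same word whose embeddings are still too close
print(contrastive_pair_loss([0.0, 0.0], [0.5, 0.0], same_usage=False))  # 0.25
```

Minimizing this loss over many such pairs would push the encoder to separate usages that share vocabulary but differ in pragmatic meaning.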

Through these technical pathways, the model could recognize complex semantic phenomena more accurately in real-world applications and gain cross-cultural robustness and reliability, laying a foundation for interpretable and trustworthy hate speech detection systems.

6. Conclusions and Future Work

This paper applies large language models to cyberviolent speech recognition and proposes S-HAT, a small-scale hard-sample adaptive training method based on LoRA fine-tuning. In our experiments, the method achieves a precision of 84.1% with only a small number of hard samples, outperforming the mainstream baselines and the untuned large model, which supports the parameter efficiency and task suitability of LoRA fine-tuning.

The S-HAT framework has several methodological advantages. First, it combines prompt-driven inference with hard-sample identification and relies on only a small amount of high-value training data to fine-tune the large language model effectively, substantially reducing training cost and data dependency. Second, LoRA adjusts the model parameters efficiently through low-rank adaptation, preserving the large model’s semantic comprehension while improving its discriminative accuracy on samples near the semantic boundary. Third, S-HAT incorporates model-generated reasoning into sample construction, which strengthens the model’s understanding of offensive language in complex contexts and improves the interpretability and transparency of its output, meeting the dual requirements of accuracy and controllability in online violence recognition.

This reasoning-path generation mechanism also strengthens the model’s explanatory ability in complex contexts, giving S-HAT practical value for real-world online governance tasks.

Future research could extend cross-lingual training to improve the model’s generalization on multilingual social platforms and could introduce online and active learning so that the model adapts dynamically to emerging internet slang and attack expressions, coping more effectively with ever-changing forms of cyberviolence. In addition, this study models the structure of cyberbullying texts from the perspective of linguistic symmetry breaking, offering a new approach to modeling and intelligent recognition in semantically complex social media contexts. This perspective aligns with theoretical work on structural symmetry and imbalanced semantic distributions, extending the application of symmetry theory within natural language processing.

Author Contributions

Conceptualization, Z.G. and L.Z.; methodology, Z.G.; software, Z.G.; validation, Z.G., L.Z. and S.J.; formal analysis, L.Z.; investigation, Z.G.; resources, L.Z.; data curation, Z.G.; writing—original draft preparation, Z.G.; writing—review and editing, Z.G.; visualization, Z.G.; supervision, L.Z.; project administration, L.Z.; funding acquisition, L.Z. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the Natural Science Foundation of Hebei Province project “Research on Public Opinion Risk Modeling and Intelligent Governance Algorithms for Online Communities”, grant number G2024507002, and by the project “Research on the Identification and Governance of Toxic Online Language in the Context of Artificial Intelligence”, grant number JJPY202502. The APC was funded by both projects.

Data Availability Statement

The dataset utilized in this study is not publicly available due to ongoing research but can be provided upon reasonable request. Interested researchers should contact the first author at gaozg123@163.com (Smart Policing Academy, China People’s Police University, Langfang 065000, China). Requests must include a detailed description of the intended use and the requester’s institutional affiliation.

Acknowledgments

This work was partially supported by the China People’s Police University, and the authors acknowledge the computing resources provided by the Research Center for Network Public Opinion Governance.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Raj, C.; Agarwal, A.; Bharathy, G.; Narayan, B.; Prasad, M. Cyberbullying Detection: Hybrid Models Based on Machine Learning and Natural Language Processing Techniques. Electronics 2021, 10, 2810.

- Rao, X.; Zhang, Y.; Peng, S.; Jia, Q.; Liu, X. A Study on Chinese Hate Speech Detection Method Based on RoBERTa (Chinese Hate Speech Detection Method Based on RoBERTa-WWM). In Proceedings of the 22nd Chinese National Conference on Computational Linguistics, Harbin, China, 3–5 August 2023; Sun, M., Qin, B., Qiu, X., Jiang, J., Han, X., Eds.; Chinese Information Processing Society of China: Harbin, China, 2023; pp. 501–511.

- Xu, Z. RoBERTa-Wwm-Ext Fine-Tuning for Chinese Text Classification. arXiv 2021, arXiv:2103.00492.

- Wulczyn, E.; Thain, N.; Dixon, L. Ex Machina: Personal Attacks Seen at Scale. In Proceedings of the 26th International Conference on World Wide Web, WWW ’17, Perth, Australia, 3–7 April 2017; International World Wide Web Conferences Steering Committee: Geneva, Switzerland, 2017; pp. 1391–1399.

- Subramani, S.; Michalska, S.; Wang, H.; Du, J.; Zhang, Y.; Shakeel, H. Deep Learning for Multi-Class Identification From Domestic Violence Online Posts. IEEE Access 2019, 7, 46210–46224.

- Fortuna, P.; Nunes, S. A Survey on Automatic Detection of Hate Speech in Text. ACM Comput. Surv. 2018, 51, 85:1–85:30.

- Ayo, F.E.; Folorunso, O.; Ibharalu, F.T.; Osinuga, I.A. Machine Learning Techniques for Hate Speech Classification of Twitter Data: State-of-the-Art, Future Challenges and Research Directions. Comput. Sci. Rev. 2020, 38, 100311.

- Salminen, J.; Hopf, M.; Chowdhury, S.A.; Jung, S.; Almerekhi, H.; Jansen, B.J. Developing an Online Hate Classifier for Multiple Social Media Platforms. Hum. Cent. Comput. Inf. Sci. 2020, 10, 1.

- Mozafari, M.; Farahbakhsh, R.; Crespi, N. A BERT-Based Transfer Learning Approach for Hate Speech Detection in Online Social Media. In Complex Networks and Their Applications VIII; Cherifi, H., Gaito, S., Mendes, J.F., Moro, E., Rocha, L.M., Eds.; Springer International Publishing: Cham, Switzerland, 2020; pp. 928–940.

- Wiedemann, G.; Yimam, S.M.; Biemann, C. UHH-LT at SemEval-2020 Task 12: Fine-Tuning of Pre-Trained Transformer Networks for Offensive Language Detection. arXiv 2020, arXiv:2004.11493.

- Risch, J.; Krestel, R. Bagging BERT Models for Robust Aggression Identification. In Proceedings of the Second Workshop on Trolling, Aggression and Cyberbullying, Marseille, France, 16 May 2020; Kumar, R., Ojha, A.K., Lahiri, B., Zampieri, M., Malmasi, S., Murdock, V., Kadar, D., Eds.; European Language Resources Association (ELRA): Marseille, France, 2020; pp. 55–61.

- Miok, K.; Škrlj, B.; Zaharie, D.; Robnik-Šikonja, M. To BAN or Not to BAN: Bayesian Attention Networks for Reliable Hate Speech Detection. Cogn. Comput. 2022, 14, 353–371.

- Safi Samghabadi, N.; Patwa, P.; PYKL, S.; Mukherjee, P.; Das, A.; Solorio, T. Aggression and Misogyny Detection Using BERT: A Multi-Task Approach. In Proceedings of the Second Workshop on Trolling, Aggression and Cyberbullying, Marseille, France, 6 May 2020; Kumar, R., Ojha, A.K., Lahiri, B., Zampieri, M., Malmasi, S., Murdock, V., Kadar, D., Eds.; European Language Resources Association (ELRA): Marseille, France, 2020; pp. 126–131.

- Talat, Z.; Thorne, J.; Bingel, J. Bridging the Gaps: Multi Task Learning for Domain Transfer of Hate Speech Detection. In Online Harassment; Golbeck, J., Ed.; Springer International Publishing: Cham, Switzerland, 2018; pp. 29–55.

- Ousidhoum, N.; Lin, Z.; Zhang, H.; Song, Y.; Yeung, D.-Y. Multilingual and Multi-Aspect Hate Speech Analysis. arXiv 2019, arXiv:1908.11049.

- Guzman-Silverio, M.; Balderas-Paredes, Á.; López-Monroy, A.P. Transformers and Data Augmentation for Aggressiveness Detection in Mexican Spanish. Available online: https://ceur-ws.org/Vol-2664/mexa3t_paper9.pdf (accessed on 11 July 2025).

- Rizos, G.; Hemker, K.; Schuller, B. Augment to Prevent: Short-Text Data Augmentation in Deep Learning for Hate-Speech Classification. In Proceedings of the 28th ACM International Conference on Information and Knowledge Management, Beijing, China, 3–7 November 2019; CIKM ’19; Association for Computing Machinery: New York, NY, USA, 2019; pp. 991–1000.

- Kocoń, J.; Cichecki, I.; Kaszyca, O.; Kochanek, M.; Szydło, D.; Baran, J.; Bielaniewicz, J.; Gruza, M.; Janz, A.; Kanclerz, K.; et al. ChatGPT: Jack of All Trades, Master of None. Inf. Fusion 2023, 99, 101861.

- Paaß, G.; Giesselbach, S. Improving Pre-Trained Language Models. In Foundation Models for Natural Language Processing: Pre-trained Language Models Integrating Media; Paaß, G., Giesselbach, S., Eds.; Springer International Publishing: Cham, Switzerland, 2023; pp. 79–159.

- Eriksson, D.B.T. Fine-Tuning a LLM Using Reinforcement Learning from Human Feedback for a Therapy Chatbot Application. Diva-portal.org. Available online: https://www.diva-portal.org/smash/get/diva2:1782678/FULLTEXT01.pdf (accessed on 11 July 2025).

- Chen, Y.; Zhang, S.; Qi, G.; Guo, X. Parameterizing Context: Unleashing the Power of Parameter-Efficient Fine-Tuning and In-Context Tuning for Continual Table Semantic Parsing. Adv. Neural Inf. Process. Syst. 2023, 36, 17795–17810.

- Fichtl, A. Evaluating Adapter-Based Knowledge-Enhanced Language Models in the Biomedical Domain. Master’s Thesis, Technical University of Munich, Munich, Germany, 2024. Available online: https://wwwmatthes.in.tum.de/file/1lfggh41pzur1/Sebis-Public-Website/-/Master-s-Thesis-Alexander-Fichtl/Fichtl%20Master%20Thesis.pdf (accessed on 21 June 2025).

- Liang, C.; Zuo, S.; Zhang, Q.; He, P.; Chen, W.; Zhao, T. Less Is More: Task-Aware Layer-Wise Distillation for Language Model Compression. In Proceedings of the 40th International Conference on Machine Learning, Honolulu, HI, USA, 23–29 July 2023; pp. 20852–20867.

- Kirchenbauer, J.; Barns, C. Hallucination Reduction in Large Language Models with Retrieval-Augmented Generation Using Wikipedia Knowledge. 2024. Available online: https://files.osf.io/v1/resources/pv7r5/providers/osfstorage/6657166cd835c421594ce333?format=pdf&action=download&direct&version=1 (accessed on 21 June 2025).

- Welz, L.; Lanquillon, C. Enhancing Large Language Models Through External Domain Knowledge. In Artificial Intelligence in HCI; Degen, H., Ntoa, S., Eds.; Springer Nature Switzerland: Cham, Switzerland, 2024; pp. 135–146.

- Zhou, L.; Chen, Y.; Li, X.; Li, Y.; Li, N.; Wang, X.; Zhang, R. A New Adapter Tuning of Large Language Model for Chinese Medical Named Entity Recognition. Appl. Artif. Intell. 2024, 38, e2385268.

- Lu, J.; Xu, B.; Zhang, X.; Min, C.; Yang, L.; Lin, H. Facilitating Fine-Grained Detection of Chinese Toxic Language: Hierarchical Taxonomy, Resources, and Benchmarks. arXiv 2023, arXiv:2305.04446.

- Shrivastava, A.; Gupta, A.; Girshick, R. Training Region-Based Object Detectors with Online Hard Example Mining. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016.

- Ma, X.; Huang, H.; Wang, Y.; Romano, S.; Erfani, S.; Bailey, J. Normalized Loss Functions for Deep Learning with Noisy Labels. In Proceedings of the 37th International Conference on Machine Learning, Virtual, 13–18 July 2020; pp. 6543–6553.

- Liu, P.; Yuan, W.; Fu, J.; Jiang, Z.; Hayashi, H.; Neubig, G. Pre-Train, Prompt, and Predict: A Systematic Survey of Prompting Methods in Natural Language Processing. ACM Comput. Surv. 2023, 55, 195:1–195:35.

- Hu, E.J.; Shen, Y.; Wallis, P.; Allen-Zhu, Z.; Li, Y.; Wang, S.; Wang, L.; Chen, W. LoRA: Low-Rank Adaptation of Large Language Models. arXiv 2021, arXiv:2106.09685.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).