2.1. UMAP

The factors influencing power load are diverse and include both natural and human factors, and they exhibit nonlinear and nonstationary characteristics. Although the original dataset contains rich information, it is challenging to analyze and forecast directly in a high-dimensional space. This problem has substantially limited the forecasting accuracy of previous power load forecasting models.

To effectively handle the complex feature structure of high-dimensional power load data, this paper employs Uniform Manifold Approximation and Projection (UMAP) [17] for data reconstruction and dimensionality reduction. UMAP is a dimensionality reduction method based on manifold learning and algebraic topology theory. By constructing a fuzzy topological representation of data samples and optimizing the low-dimensional embedding space, UMAP can achieve efficient dimensionality reduction while preserving the topological structure of the data. Compared with traditional dimensionality reduction methods, UMAP not only effectively maintains the global structure and local neighborhood relationships of the data but also offers advantages such as fast convergence and strong scalability. Additionally, UMAP demonstrates lower computational complexity and better data structure preservation than t-distributed Stochastic Neighbor Embedding (t-SNE) when processing large-scale datasets. The dimensionality reduction process of UMAP mainly includes the following steps:

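Step 1: Construct a weighted k-neighbor graph:

Let the input dataset be $X = \{x_1, \ldots, x_N\}$, with a dissimilarity measure $d: X \times X \rightarrow \mathbb{R}_{\geq 0}$. For each $x_i$, let $x_{i_1}, \ldots, x_{i_k}$ denote its $k$ nearest neighbors under $d$; define $\rho_i$ and $\sigma_i$ for each $x_i$:

$\rho_i$ expresses the constraint of local connectivity:

$$\rho_i = \min\{ d(x_i, x_{i_j}) \mid 1 \leq j \leq k,\ d(x_i, x_{i_j}) > 0 \} \tag{1}$$

$\sigma_i$ serves as the corresponding normalization factor and is calculated by the following formula:

$$\sum_{j=1}^{k} \exp\left( \frac{-\max\left(0,\ d(x_i, x_{i_j}) - \rho_i\right)}{\sigma_i} \right) = \log_2(k) \tag{2}$$

Step 2: Calculate the weight function $w$:

$$w\left((x_i, x_{i_j})\right) = \exp\left( \frac{-\max\left(0,\ d(x_i, x_{i_j}) - \rho_i\right)}{\sigma_i} \right) \tag{3}$$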

Step 3: Calculate the weight between the i-th data point and the j-th data point in the original space:

Step 4: Calculate the symmetric weight between the i-th data point and the j-th data point in the original space:

where $a$ and $b$ are hyperparameters, and $d_{ij}$ is the distance between the i-th and j-th data points.

Step 5: Calculate the UMAP cost function:

Step 6: Take the positions of the data points in the optimized low-dimensional space as the final result, given by the following formula:

Here, the components of each embedded point represent its coordinates in each dimension of the low-dimensional space.

In Formulas (1) and (2), $\rho_i$ and $\sigma_i$ represent the local connectivity constraint and normalization factor, respectively, which are used to determine the connection range between data points.

In Formulas (3)–(5), the conditional probability of data points in the high-dimensional space, the asymmetric weight, and the symmetric weight are calculated, respectively, in order to construct the topological structure of the data.

The cost function in Formula (6) is used to evaluate the degree to which the topological structure is preserved before and after dimensionality reduction; the smaller the value, the better the dimensionality reduction performance.

Formula (7) provides the coordinate representation of the data points after dimensionality reduction, indicating their positions in each dimension of the new space.
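To make the roles of the local connectivity constraint and the normalization factor concrete, the following minimal sketch computes both quantities for a small synthetic dataset and derives the directed edge weights from them. It follows the standard formulation in the UMAP literature, which may differ in notation from Formulas (1)–(7). The function names, the Euclidean distance, the bisection bracket, and the random test points are all assumptions made for illustration.

```python
import math
import random


def knn_distances(points, k):
    """For each point, return the sorted distances to its k nearest neighbours."""
    result = []
    for i, p in enumerate(points):
        dists = sorted(math.dist(p, q) for j, q in enumerate(points) if j != i)
        result.append(dists[:k])
    return result


def rho_and_sigma(dists, n_steps=64):
    """Local connectivity (rho) and normalization factor (sigma) for one point."""
    k = len(dists)
    rho = min(d for d in dists if d > 0.0)   # nearest non-zero distance
    target = math.log2(k)
    lo, hi = 1e-12, 1e6                      # assumed bisection bracket for sigma
    for _ in range(n_steps):
        mid = 0.5 * (lo + hi)
        total = sum(math.exp(-max(0.0, d - rho) / mid) for d in dists)
        if total > target:                   # the sum grows with sigma
            hi = mid
        else:
            lo = mid
    return rho, 0.5 * (lo + hi)


def edge_weights(dists, rho, sigma):
    """Directed edge weights from one point to its k nearest neighbours."""
    return [math.exp(-max(0.0, d - rho) / sigma) for d in dists]


# Illustrative data: 30 random 2-D points, k = 5 neighbours (both assumed).
rng = random.Random(0)
points = [(rng.random(), rng.random()) for _ in range(30)]
k = 5
all_dists = knn_distances(points, k)
params = [rho_and_sigma(d) for d in all_dists]
weights = [edge_weights(d, rho, sigma)
           for d, (rho, sigma) in zip(all_dists, params)]
```

Because the nearest neighbour always sits at distance $\rho_i$, its weight is exactly 1, which is how the local connectivity constraint guarantees that every point is connected to at least one neighbour.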

2.2. MSCSO

The Sand Cat Swarm Optimization (SCSO) algorithm [18] achieves model parameter optimization by simulating the unique predatory behavior of sand cat swarms. SCSO mainly consists of two stages: exploration and exploitation. In the initial phase, the population is initialized using a random generation method, which cannot guarantee the quality and diversity of the initial population. As the number of iterations increases, the search mechanism in the exploration stage demonstrates low search efficiency. Due to the sensitivity settings, the algorithm is prone to falling into local optima traps. Although the algorithm has a simple structure and few parameters, the exploration range of population individuals in the search space decreases as the iterations proceed, which can lead to search stagnation and a reduction in the global search performance of the algorithm. The implementation details of SCSO are as follows:

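Step 1: Generate an initial matrix of size $N \times D$ randomly within the given interval, where $N$ is the population size and $D$ is the dimension of the search space.

$$X = \begin{bmatrix} X_1 \\ \vdots \\ X_i \\ \vdots \\ X_N \end{bmatrix} = \begin{bmatrix} x_{1,1} & \cdots & x_{1,j} & \cdots & x_{1,D} \\ \vdots & & \vdots & & \vdots \\ x_{i,1} & \cdots & x_{i,j} & \cdots & x_{i,D} \\ \vdots & & \vdots & & \vdots \\ x_{N,1} & \cdots & x_{N,j} & \cdots & x_{N,D} \end{bmatrix} \tag{8}$$

Here, $X_i$ is the i-th sand cat individual, and $x_{i,j}$ represents the position of the i-th sand cat in the j-th dimension of the space.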

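Step 2: The position update equation in the exploration phase is as follows:

$$Pos(t+1) = r \cdot \left( Pos_{bc}(t) - rand(0,1) \cdot Pos_c(t) \right) \tag{9}$$

$Pos_{bc}(t)$ represents the best candidate position of the sand cat individual at the current iteration, and $Pos_c(t)$ is the current position of the sand cat.

Here, $rand(0,1)$ is a random number in the range (0, 1), and $r$ denotes the sensitivity of the sand cat individual:

$$r = r_G \times rand(0,1) \tag{10}$$

Here, $r_G$ represents the general formula for sand cat sensitivity, as follows:

$$r_G = s_M - \frac{s_M \times iter_c}{iter_{max}} \tag{11}$$

In Formula (11), $s_M$ is set to a fixed value of 2, $iter_c$ is the current iteration number, and $iter_{max}$ is the maximum number of iterations.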

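Step 3: The position update equation in the exploitation phase is as follows:

$$Pos_{rnd} = \left| rand(0,1) \cdot Pos_b(t) - Pos_c(t) \right| \tag{12}$$

$$Pos(t+1) = Pos_b(t) - r \cdot Pos_{rnd} \cdot \cos(\theta) \tag{13}$$

In the above equations, $Pos_{rnd}$ represents a random position that ensures the sand cat is close to the prey, $Pos_b(t)$ is the best position of the sand cat in the t-th iteration, and $Pos(t+1)$ is the updated position of the sand cat. $\theta$ is a random angle within the range [0°, 360°].

The transition formula between the exploration phase and the exploitation phase is as follows:

$$R = 2 \times r_G \times rand(0,1) - r_G \tag{14}$$

$$Pos(t+1) = \begin{cases} Pos_b(t) - r \cdot Pos_{rnd} \cdot \cos(\theta), & |R| \leq 1 \ \text{(exploitation)} \\ r \cdot \left( Pos_{bc}(t) - rand(0,1) \cdot Pos_c(t) \right), & |R| > 1 \ \text{(exploration)} \end{cases} \tag{15}$$

In Formula (15), the parameter $R$ controls the phase switching of the sand cat.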

In Formula (8), $X_i$ represents the i-th sand cat individual, and $x_{i,j}$ denotes the position of that individual in the j-th dimension of the space, used for initializing the population distribution.

In Formulas (9)–(11), $Pos(t+1)$ represents the new position of the sand cat in the exploration phase, $Pos_{bc}(t)$ is the current best candidate position, and $Pos_c(t)$ is the current position.

$r$ and $r_G$ represent the individual sensitivity and the general sensitivity formula, respectively, where $s_M$ is set to a fixed value of 2, $iter_c$ is the current iteration number, and $iter_{max}$ is the maximum number of iterations.

In Formulas (12)–(15), $Pos_{rnd}$ represents a random position that ensures the sand cat is close to the prey, $Pos_b(t)$ is the current best sand cat position, $Pos(t+1)$ is the updated position, $\theta$ is a random angle within the range [0°, 360°], $R$ is the parameter that controls the phase switching, and $rand(0,1)$ is a random number in the range (0, 1).
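The interaction between the sensitivity, the phase-switching parameter, and the two position updates can be sketched in a few lines. The version below is a simplified illustration of the baseline SCSO loop as described in the SCSO literature, not the implementation used in this paper: it assumes the global best position stands in for both the best and the best-candidate positions, draws the angle uniformly from [0°, 360°], and clips positions to the bounds. The function name `scso` and all parameter defaults are hypothetical.

```python
import math
import random


def scso(objective, dim, lower, upper, n_cats=30, max_iter=200, seed=0):
    """Simplified baseline SCSO for minimization (illustrative sketch)."""
    rng = random.Random(seed)
    s_m = 2.0  # maximum sensitivity
    cats = [[rng.uniform(lower, upper) for _ in range(dim)]
            for _ in range(n_cats)]
    best = min(cats, key=objective)[:]
    best_val = objective(best)

    for t in range(max_iter):
        r_g = s_m - s_m * t / max_iter          # general sensitivity, 2 -> 0
        for cat in cats:
            r = r_g * rng.random()              # individual sensitivity
            big_r = 2.0 * r_g * rng.random() - r_g   # phase-switching value
            for j in range(dim):
                if abs(big_r) <= 1.0:           # exploitation
                    theta = math.radians(rng.uniform(0.0, 360.0))
                    pos_rnd = abs(rng.random() * best[j] - cat[j])
                    new = best[j] - r * pos_rnd * math.cos(theta)
                else:                           # exploration
                    new = r * (best[j] - rng.random() * cat[j])
                cat[j] = min(upper, max(lower, new))   # assumed bound handling
            val = objective(cat)
            if val < best_val:                  # greedy update of the best cat
                best, best_val = cat[:], val
    return best, best_val


def sphere(x):
    """Simple convex test objective, used here only for illustration."""
    return sum(v * v for v in x)


best_pos, best_value = scso(sphere, dim=5, lower=-10.0, upper=10.0)
```

Because the general sensitivity decays to zero, the phase-switching value can exceed 1 in magnitude only during the first half of the run, so exploration is confined to the early iterations in this baseline.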

2.2.1. UTCM

The SCSO algorithm possesses some of the advantages mentioned earlier; however, its shortcomings are also evident. First, the initial population generated by the random generation method cannot ensure consistently high-quality populations. Second, due to the sensitivity settings, the algorithm is prone to falling into local optima traps and may return suboptimal solutions. Third, the search mechanism in the exploration phase demonstrates low search efficiency and is also prone to getting stuck in local optima, which is unfavorable for global exploration.

The initial population of SCSO is generated using a random generation method, which may result in the presence of low-quality individuals within the initial population. Moreover, the random generation method tends to produce individuals in similar regions, limiting the diversity of the population. To address this problem, this paper innovatively improves the Tent Chaos Mapping [19] and proposes the Uniformization Tent Chaos Mapping (UTCM) to increase the diversity of individuals within the initial population. Incorporating UTCM into the population generation stage enhances diversity among individuals, achieves uniform distribution, and improves the overall quality of the sand cat population. The specific formulas are as follows:

Map the input value to the interval [0, 1] using the following formula:

Here, $lb$ and $ub$ represent the lower and upper bounds of the sand cat population, respectively. Apply the Tent mapping function for uniform mapping using the following formula:

Remap the mapped value back to the original interval $[lb, ub]$ using the following formula:

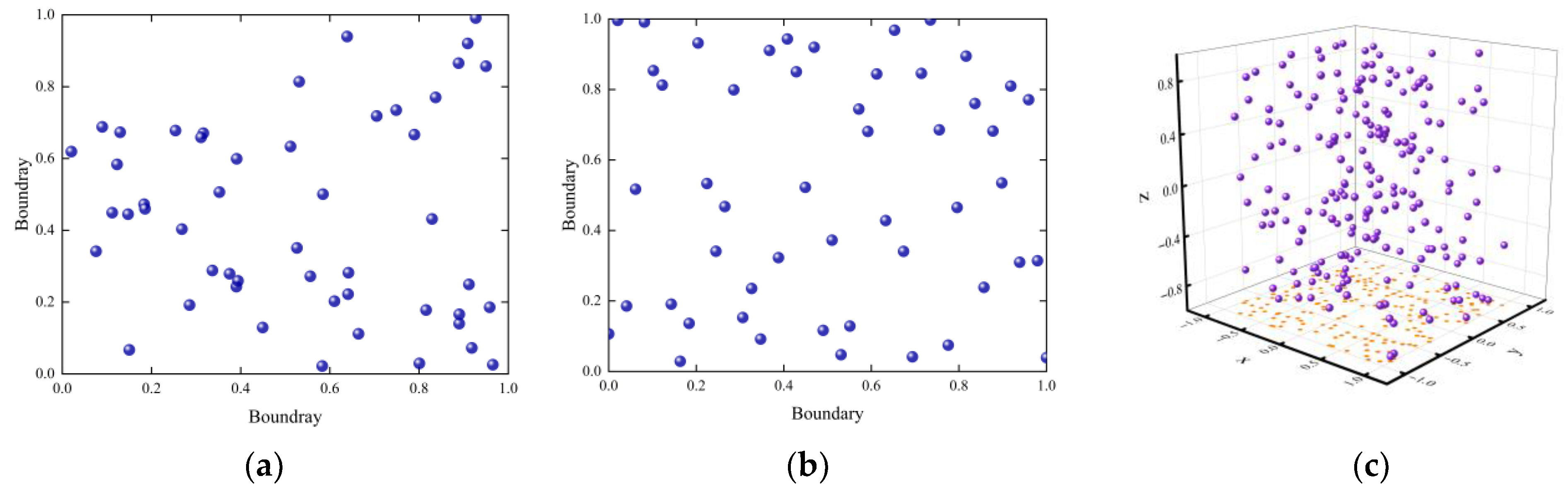

The population distributions of the sand cat populations generated by random initialization and by UTCM are shown, respectively, in Figure 1.

As shown in Figure 1, although the population generated using the random generation method exhibits strong randomness, it is not uniformly distributed and presents a phenomenon of regional clustering. In contrast, the population generated using the UTCM method is more evenly distributed and exhibits significant diversity, which helps the algorithm avoid local optima traps and enhances the possibility of exploring the global optimum. The chaotic nature of UTCM makes it sensitive to slight changes in initial conditions, thereby improving the robustness of the algorithm.
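The three-stage procedure described above (map to [0, 1], apply a Tent mapping, remap to the original interval) can be sketched as follows. This example uses the classical Tent map with a breakpoint parameter rather than the uniformized variant proposed in this paper, whose exact formula is not reproduced here; the names `tent_map` and `tent_init`, the breakpoint value 0.7, and the bounds are assumptions for illustration only.

```python
import random


def tent_map(z, alpha=0.7):
    """Classical Tent map on [0, 1] with breakpoint alpha (assumed value)."""
    return z / alpha if z < alpha else (1.0 - z) / (1.0 - alpha)


def tent_init(n_cats, dim, lb, ub, alpha=0.7, seed=0):
    """Generate an initial population by passing random values through the Tent map."""
    rng = random.Random(seed)
    population = []
    for _ in range(n_cats):
        individual = []
        for _ in range(dim):
            x = rng.uniform(lb, ub)
            z = (x - lb) / (ub - lb)               # map the input to [0, 1]
            z = tent_map(z, alpha)                 # apply the Tent mapping
            individual.append(lb + z * (ub - lb))  # remap to [lb, ub]
        population.append(individual)
    return population


# Illustrative call: 50 individuals in 3 dimensions on [-500, 500].
population = tent_init(50, 3, -500.0, 500.0)
```

Every generated coordinate stays inside the original interval because the Tent map sends [0, 1] onto [0, 1], so no additional bound handling is needed at initialization.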

2.2.2. Sensitivity Improvement

The sensitivity of sand cat individuals determines their responsiveness to environmental changes or the ability to acquire information from other individuals during the optimization process. Sensitivity affects their decision-making and movement within the search space. The sensitivity defined by SCSO has limitations; the fixed sensitivity used during iterations lacks adaptability throughout the optimization process, which may result in a suboptimal exploration–exploitation trade-off and premature convergence into local optima traps. MSCSO proposes a dynamic cyclic pattern sensitivity variation mechanism that enhances the diversity of sand cat individuals while allowing the algorithm to gradually transition from the exploration phase to the exploitation phase, thereby improving the quality of solutions. Additionally, because the new sensitivity mechanism incorporates randomness, it facilitates better exploration of the search space and helps escape local optima traps.

The sensitivity variation trend of SCSO with the number of iterations, calculated according to Equations (10) and (11), is shown in Figure 2. Although the sensitivity exhibits good nonlinearity, it is evident that the sensitivity is positive in the first half and negative in the second half, which limits the global exploration capability. Therefore, a cosine evolution adaptive factor is introduced to improve the sensitivity, as expressed by the following formula:

Here,

is the cosine-evolution adaptive factor, and

is the adaptive coefficient, which achieves optimal performance when

.

Figure 3 shows the variation trend of the improved sensitivity during the iteration process:

Figure 2. Sensitivity of SCSO.


As shown in Figure 3, the sensitivity maintains a relatively high value throughout the entire iteration process, overcoming the shortcomings of a small exploration range and significant variation between the early and later stages of the iteration. This improvement enhances the algorithm’s global exploration capability during the middle and later stages of the iteration.

2.2.3. Introduction of the Lévy Flight Strategy to Optimize the Exploitation Phase

Since the exploration range of population individuals in the position update mechanism decreases as the number of iterations increases, the exploitation range of the algorithm also gradually shrinks. This approach tends to cause the optimal solution to converge toward a particular location, which may lead the algorithm into search stagnation and subsequently into local optima traps. To address this limitation, the Lévy flight [20] is introduced, and its formula is as follows:

Here, $\beta$ is a fixed value of 1.05, $\sigma$ is the standard deviation of the Lévy flight step size, and $s$ is the step size of the Lévy flight.

The position update equation in the exploration phase after introducing Lévy flight is as follows:

Here, the adjustment coefficient takes a fixed value of 0.13.

The introduction of Lévy flight enhances the global search capability of the algorithm, providing a strong ability to escape when encountering local optima traps. The heavy-tailed step length allows the algorithm to occasionally perform large jumps, enabling it to traverse distant regions and increasing the likelihood of discovering better solutions. This facilitates more effective exploration of the search space.
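The heavy-tailed step length can be illustrated with Mantegna's algorithm, a common way of generating Lévy-distributed steps. Whether this paper uses exactly this construction is an assumption; the exponent 1.05 comes from the text, while the function names and the sample size are hypothetical.

```python
import math
import random


def levy_sigma(beta):
    """Standard deviation of the numerator in Mantegna's algorithm."""
    num = math.gamma(1.0 + beta) * math.sin(math.pi * beta / 2.0)
    den = math.gamma((1.0 + beta) / 2.0) * beta * 2.0 ** ((beta - 1.0) / 2.0)
    return (num / den) ** (1.0 / beta)


def levy_step(beta, rng):
    """Draw one Lévy flight step s = u / |v|^(1/beta)."""
    u = rng.gauss(0.0, levy_sigma(beta))
    v = rng.gauss(0.0, 1.0)
    return u / abs(v) ** (1.0 / beta)


rng = random.Random(0)
beta = 1.05                                   # exponent stated in the text
steps = [levy_step(beta, rng) for _ in range(1000)]
largest = max(abs(s) for s in steps)          # occasional long jumps
typical = sorted(abs(s) for s in steps)[500]  # median step magnitude
```

Comparing `largest` with `typical` shows the behavior described above: most steps are short, but a few are far larger, and these rare long jumps are what allow the search to leave a local optimum.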

2.2.4. MSCSO Algorithm Testing

To verify the effectiveness and stability of the MSCSO algorithm, Schwefel’s 2.26 function (f1(x)) and the Shekel function (f2(x)) were selected to test the optimization capabilities of each algorithm on multimodal and fixed-dimension multimodal test functions, respectively. The function plots are shown in Figure 4 and Figure 5, and the specific parameters of the Shekel function are listed in Table 1. The standard SCSO, Snake Optimization (SO) algorithm [21], and Sparrow Search Algorithm (SSA) [22] were selected for comparison to evaluate the performance of the MSCSO algorithm. In this section, all experiments were conducted using MATLAB 2023a. The specific formulas of the test functions are as follows.

All algorithms used an initial population size of 50 and 300 iterations. The experimental results are detailed in Table 2 and Table 3, and the iterative optimization processes for the two functions are shown in Figure 6 and Figure 7.

Figure 4 and Figure 5 illustrate the graphical representations of the Schwefel’s 2.26 function and the Shekel function, respectively. As shown by the function plots, the Schwefel’s 2.26 function contains multiple extreme points, exhibiting a complex high-dimensional structure with numerous local optima. This makes optimization algorithms prone to becoming trapped in local optima, especially when the search space is large or the strategy is not sufficiently comprehensive. The Shekel function represents a fixed-dimension multimodal optimization problem, containing multiple local minima and one global minimum, presenting a relatively complex multimodal structure. Therefore, it places high demands on the global search capability of the algorithm.
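For reference, the widely used definition of Schwefel's 2.26 function can be written as a short function. The sketch below follows the standard benchmark form, with the usual search range of [-500, 500] per dimension; the dimension used in the usage lines is an arbitrary choice for illustration, not the setting used in the experiments. The Shekel function is omitted because it depends on the parameters listed in Table 1.

```python
import math


def schwefel_226(x):
    """Schwefel's 2.26 benchmark: f(x) = -sum(x_i * sin(sqrt(|x_i|)))."""
    return -sum(xi * math.sin(math.sqrt(abs(xi))) for xi in x)


n = 10                                           # illustrative dimension
near_optimum = schwefel_226([420.9687] * n)      # close to -418.9829 * n
at_origin = schwefel_226([0.0] * n)              # exactly 0
```

The global minimum lies near the boundary of the search range while many local minima are spread across the interior, which is why this function is a demanding test of an algorithm's ability to escape local optima.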

As shown in Figure 6, the proposed MSCSO, through its strategy improvements, effectively enhanced global search capability, successfully overcame local optima traps, and was able to quickly find the global optimum. In contrast, although the SCSO algorithm possesses a certain ability to escape local optima and can find the global optimum within 300 iterations, its convergence speed is slow, and its performance lags significantly behind that of the proposed MSCSO.

Additionally, while the SO algorithm has some capacity to escape local optima, its search strategy is designed to expand the search range only after more than half of the total iterations, resulting in a longer time required to escape local optima. Moreover, the SO algorithm clearly failed to find the global optimum, indicating insufficient local optima escape capability. Therefore, when dealing with complex high-dimensional optimization problems, the SO algorithm shows poor stability, and its optimization results are not necessarily the global optimum.

The SSA algorithm’s single search strategy revealed its weakness in global search capability. When handling high-dimensional complex functions, it is prone to becoming trapped in local optima. Especially in optimization problems with multimodal or complex structures, the SSA algorithm’s search efficiency drops significantly, exhibiting a strong tendency toward premature convergence.

Figure 7 illustrates the performance differences of each algorithm on the Shekel function. Since the number of iterations required by each algorithm to find the optimal solution was less than 300, only the first 150 iteration curves are plotted in Figure 7 for ease of observation. As with Figure 6, Figure 7 also highlights the clear differences among the algorithms in terms of solution quality, convergence speed, global search capability, and stability.

Although both the SO algorithm and the SSA algorithm found the global optimum, their convergence speeds were relatively slow. During the iteration process, both algorithms repeatedly became trapped in local optima, resulting in significant fluctuations. These fluctuations not only consumed more time during the optimization process but also affected the robustness of the algorithms. The SO algorithm frequently fell into local optima and required excessive iterations throughout the optimization process. Additionally, the SO algorithm exhibited large fluctuations during optimization, making it unsuitable for complex search spaces. The SSA algorithm encountered the same issue observed in Figure 6, where its poor global search strategy led to a tendency for premature convergence. Compared with SO and SSA, the SCSO demonstrated stronger local optima escape capability and faster convergence speed, but its performance was still far inferior to that of MSCSO.

The MSCSO algorithm, leveraging its flexible global search strategy and local exploitation mechanism, was able to quickly find the global optimum with the fewest iterations while achieving the highest convergence speed. This advantage primarily stems from the multi-strategy integration mechanism adopted by the MSCSO algorithm, which not only achieves high optimization speed but also balances global exploration and local exploitation, effectively avoiding local optima traps.

Based on the above analysis of Figure 6 and Figure 7, the MSCSO algorithm demonstrates the advantages of a fast convergence speed and high solution accuracy in complex optimization problems. In contrast, the SCSO, SO, and SSA algorithms each exhibit issues such as a slow convergence speed or insufficient stability when applied to high-dimensional complex problems.

Table 2 and Table 3 list the optimal solutions obtained by each algorithm on the f1(x) and f2(x) functions, as well as the average value and standard deviation of the best values calculated in each of the 300 iterations. These evaluation metrics can intuitively reflect the performance differences among the algorithms.

In terms of the optimal solution, both the MSCSO and SCSO algorithms successfully found the global optimum for both functions. The SO algorithm did not reach the global optimum of the f1(x) function but found a solution closer to the global optimum than that obtained by the SSA algorithm. In this metric, the MSCSO algorithm, benefiting from its strong global search capability, clearly outperformed the SSA and SO algorithms.

Average value serves as another important metric that can offset the occasional bias that may arise when evaluating by optimum value, and it quantitatively measures the reliability and robustness of the algorithm. As shown in Table 2, the average value of MSCSO is the closest to the optimum value, indicating that the MSCSO algorithm can stably approach the optimal solution within relatively few iterations, with minimal fluctuations in the best solutions found during the search process and a high consistency in the solutions obtained. This demonstrates that the MSCSO algorithm possesses not only powerful global search capability but also exhibits excellent robustness. In contrast, the average value and optimum value differences are larger for the other algorithms.

Standard deviation is an important metric for measuring the stability and reliability of an algorithm. When comparing different algorithms, a lower standard deviation usually indicates that the algorithm possesses stronger robustness and stability in complex optimization problems. As shown in Table 2, the standard deviation value for MSCSO is the lowest, indicating that the solutions obtained by the algorithm are highly stable and can consistently yield relatively uniform and near-optimal results across multiple iterations. In contrast, the other algorithms exhibit larger standard deviations, reflecting greater fluctuations in their solutions and lower stability.

Similarly, by comparing the optimum value and average value in Table 3, it is evident that MSCSO continues to demonstrate superior performance on the f2(x) function. It is important to note that although SSA’s standard deviation value is relatively low, this does not indicate high stability. On the contrary, this phenomenon results from SSA repeatedly becoming trapped in the same local optimum, making effective global exploration difficult.

Based on the above analysis of the figures and tables, the MSCSO algorithm exhibits significant advantages in solving complex optimization problems, particularly in terms of convergence speed, solution accuracy, stability, and robustness. Therefore, MSCSO is well-suited to the subsequent modeling steps, where it will be responsible for optimizing the key parameters of the forecasting model and identifying the optimal parameter set.

2.2.5. Ablation Study of MSCSO Algorithm

To further investigate the individual contributions of each strategy within the proposed MSCSO algorithm, we designed and conducted a set of ablation experiments. In these experiments, we systematically removed or disabled key strategies within the algorithm to evaluate their specific impact on overall optimization performance.

Figure 8 presents the performance of various strategy combinations on the Schwefel’s 2.26 function and the Shekel function, while Table 4 and Table 5 list the best value, mean, and standard deviation of the results for each combination.

Due to the complex structure and large number of local optima in the Schwefel’s 2.26 function, the influence of each strategy on the optimization process is more pronounced. As shown clearly in Figure 8a, combining different improvement strategies with the baseline SCSO algorithm leads to notable differences in optimization performance. As initialization methods and search strategies are gradually introduced, the performance of the baseline SCSO improves consistently.

Specifically, both SCSO + UTCM and SCSO + Sensitivity combinations enhance the search capabilities of the baseline algorithm to a certain extent. UTCM improves the uniformity of the initial population distribution, providing better starting points for subsequent search, while Sensitivity Optimization directly modifies the algorithm’s search behavior. Though their mechanisms differ, they show comparable performance improvements in the figure. The combination of SCSO + UTCM + Sensitivity integrates the advantages of both strategies, improving accuracy while maintaining search stability.

Among all the improvements, Lévy flight contributes the most significant enhancement. Owing to its strong jump behavior and powerful global search capability, it effectively helps the algorithm escape local optima. When Lévy flight is combined with the UTCM initialization strategy and the Sensitivity adjustment mechanism, optimization performance is further boosted, ultimately forming the complete MSCSO algorithm.

Notably, as shown in Table 4, the standard deviation of SCSO + Lévy + UTCM is smaller than that of SCSO + Lévy + Sensitivity. This is because UTCM produces a more evenly distributed initial population, enabling higher-quality solutions in the early stages and thus improving solution stability. Although its best and mean values are slightly lower than those of SCSO + Lévy + Sensitivity, its reduced volatility indicates better convergence consistency.

The Shekel function features multiple local optima and a relatively concentrated solution space, which leads to more similar convergence curves among different combinations. Figure 8b and Table 5 display the optimization performance of various MSCSO module combinations on this function. The results also confirm that with the incremental introduction of strategies, both convergence precision and result stability improve consistently.

In summary, the ablation study results clearly demonstrate the independent contributions of each strategy in enhancing the optimization performance of MSCSO. UTCM effectively improves population diversity and uniformity, offering a superior starting point for the search process. Sensitivity Improvement enhances the algorithm’s adaptability in fine-grained local searches. The Lévy flight mechanism, with its long-jump distribution, significantly increases the algorithm’s ability to escape local optima and strengthens global exploration. These strategies act synergistically at different levels, significantly improving the convergence speed, solution accuracy, and stability of SCSO, ultimately resulting in an MSCSO framework with superior overall performance.

2.3. TCN

The Temporal Convolutional Network (TCN) is a newly improved and optimized network structure based on traditional convolutional neural networks and recurrent neural networks [23]. Addressing the complexity and long time span characteristics of power load time series, TCN combines causal convolution and dilated convolution to achieve deep capture of historical load data and strict unidirectional temporal information propagation. As shown in Figure 9, the core structure of TCN includes causal convolution layers, dilated convolution layers, and residual connections. This design not only enables accurate modeling of long-term load variation patterns but also achieves efficient parallel computation. Compared with RNN family models (such as LSTM and GRU), TCN effectively avoids the vanishing gradient problem in power load forecasting and demonstrates higher computational efficiency and numerical stability.

The receptive field of TCN is jointly determined by the kernel size, dilation factor, and network depth. Its convolution operation can be expressed as Equation (29):

$$F(s) = (x *_d f)(s) = \sum_{i=0}^{k-1} f(i) \cdot x_{s - d \cdot i} \tag{29}$$

In Equation (29), $x$ represents the input sequence, $*_d$ denotes the convolution operation, $d$ is the dilation factor, $k$ is the kernel size, $f(i)$ is the i-th element of the convolution kernel, and $x_{s - d \cdot i}$ is the corresponding input element.
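A small worked example helps show how the dilation factor spaces out the inputs that contribute to each output. The sketch below implements a single-channel causal dilated convolution directly from the summation over kernel elements, assuming zero padding on the left, and adds a helper for the receptive field of a stack with one convolution per dilation level. Both function names are hypothetical, and a residual block with two convolutions per level would have a larger receptive field than this helper reports.

```python
def dilated_causal_conv(x, kernel, dilation):
    """Causal dilated 1-D convolution: out[s] = sum_i kernel[i] * x[s - dilation*i]."""
    out = []
    for s in range(len(x)):
        total = 0.0
        for i, weight in enumerate(kernel):
            idx = s - dilation * i
            if idx >= 0:                 # inputs before the sequence start count as zero
                total += weight * x[idx]
        out.append(total)
    return out


def receptive_field(kernel_size, dilations):
    """Receptive field of stacked layers, assuming one convolution per dilation."""
    return 1 + (kernel_size - 1) * sum(dilations)


series = [1, 2, 3, 4, 5]
output = dilated_causal_conv(series, kernel=[1, 1], dilation=2)
# Each output adds the current value and the value two steps earlier.
field = receptive_field(kernel_size=3, dilations=[1, 2, 4])
```

Since every output depends only on the current and earlier inputs, no future load values leak into the forecast, and doubling the dilation at each level lets the receptive field grow quickly with depth.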

The Residual Block of TCN consists of causal dilated convolution, weight normalization, ReLU activation function, and a dropout regularization layer, as shown in Figure 10.

This structural arrangement utilizes weight normalization to effectively suppress gradient explosion and accelerate convergence. The ReLU activation function and Dropout layer within the residual blocks work together to mitigate overfitting, while the residual branch implements dimension-matching nonlinear mapping through a 1 × 1 convolution.

To address the shortcomings of the above-mentioned TCN, this paper adopts the following two targeted modifications.

2.3.1. SwishPlus Activation Function Replacing the ReLU Activation Function

The standard TCN adopts the ReLU activation function [24] to achieve data nonlinearity. Due to the complexity of power load data, the characteristics of ReLU present certain drawbacks, making the model prone to the problem of neuron paralysis during the training process. Therefore, based on the Swish activation function [25], this paper innovatively proposes the SwishPlus activation function to replace ReLU. Its non-saturating property can effectively address this defect. The specific formulas for the ReLU function, Swish function, and SwishPlus function are as follows:

Figure 11 shows the comparison between the SwishPlus activation function and the ReLU activation function curves. As observed from Figure 11, when x > 0, both the ReLU function and the SwishPlus function output non-zero values, but the SwishPlus activation function exhibits better nonlinearity. When x < 0, the ReLU function outputs zero values, whereas the SwishPlus function outputs non-zero and nonlinear values. Although this sacrifices part of the model’s overfitting suppression capability, it effectively resolves the problem of neuron death.

The SwishPlus function shows a significant improvement in stimulating neurons compared to the Swish function. When the input value is greater than 0, the output values of the Swish function and the ReLU function are similar, whereas there is a considerable difference between their values and those of the SwishPlus function. Using the SwishPlus function enables smoother and more continuous activation in neural networks, which facilitates more efficient gradient propagation. This is mainly because the SwishPlus function retains small activation values even when the input is negative, unlike the ReLU function, which truncates values on the negative half-axis directly to zero. This characteristic of retaining negative activation values helps maintain better information flow and gradient propagation in deep networks. Therefore, in terms of gradient updates and model convergence speed, the SwishPlus function demonstrates superior properties, significantly improving model performance in specific tasks.

Figure 12 shows the residual connection unit after replacing the ReLU function with the SwishPlus function.
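The difference between truncating and retaining negative activations can be checked numerically with the two baseline functions. The sketch below implements ReLU and the standard Swish function (input multiplied by a sigmoid) only; the SwishPlus formula proposed in this paper is not reproduced here. The scaling parameter `beta` and the sample inputs are assumptions for illustration.

```python
import math


def relu(x):
    """ReLU: passes positive inputs, truncates negative inputs to zero."""
    return max(0.0, x)


def sigmoid(x):
    """Numerically stable logistic function."""
    if x >= 0.0:
        return 1.0 / (1.0 + math.exp(-x))
    z = math.exp(x)
    return z / (1.0 + z)


def swish(x, beta=1.0):
    """Standard Swish: x * sigmoid(beta * x)."""
    return x * sigmoid(beta * x)


inputs = [-3.0, -1.0, 0.0, 1.0, 3.0]
relu_values = [relu(v) for v in inputs]
swish_values = [swish(v) for v in inputs]
```

For the negative inputs, `relu_values` is exactly zero while `swish_values` stays slightly below zero, which is the property that keeps a gradient flowing through neurons that ReLU would silence.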

2.3.2. Self-Attention Mechanism

The Self-Attention mechanism [22] models direct associations between arbitrary time steps in a sequence through an attention weight matrix to capture long-term dependencies in the data. It computes attention weights based on the similarity between the Query and Key, enabling adaptive weight assignment to different time steps. This dynamic weight allocation mechanism can effectively handle sudden changes and abnormal patterns in load data. The detailed formulas of the Self-Attention mechanism are provided below.

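Given the input sequence $X \in \mathbb{R}^{n \times d}$, where $n$ denotes the sequence length and $d$ denotes the feature dimension:

Linear projection transformation:

$$Q = XW_Q, \qquad K = XW_K, \qquad V = XW_V$$

$W_Q$ is the Query projection matrix, $W_K$ is the Key projection matrix, and $W_V$ is the Value projection matrix.

Attention weight calculation:

$$S = \frac{QK^{\top}}{\sqrt{d_k}}, \qquad Z = \mathrm{Attention}(Q, K, V) = \mathrm{softmax}(S)\,V$$

Here, $\mathrm{Attention}(\cdot)$ is the attention function, $S$ is the similarity matrix scaled by the Key dimension $d_k$, and $Z$ is the final output representation matrix.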

The following are the advantages provided by the Self-Attention mechanism in short-term load forecasting tasks:

Enhanced local temporal association: In short-term load forecasting, load data exhibit significant intraday fluctuations and interday correlations. The Self-Attention mechanism can adaptively learn the dependencies between arbitrary time points and accurately capture these periodic features.

Powerful global modeling capability: The Self-Attention mechanism allows each moment in the load sequence to directly associate with all historical moments, effectively extracting similar daily load patterns. This is particularly important for accurately forecasting load variations under abnormal conditions such as holidays or special weather events.

Improved parallel computing efficiency: Compared to traditional Attention mechanisms, the Self-Attention mechanism derives $Q$, $K$, and $V$ from the same input sequence, making the computation more efficient and suitable for handling high-frequency sampled load data.

This structural design that combines Self-Attention with TCN not only enhances the advantage of TCN in local feature extraction but also strengthens the model’s capability to capture global dependencies in the load sequence through Self-Attention, while achieving significant improvements in computational efficiency.
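The projection and weighting steps of Self-Attention can be traced on a toy sequence. The following sketch implements single-head scaled dot-product self-attention with plain Python lists; it assumes the standard scaling by the square root of the Key dimension, and the helper names, the three-step input, and the identity projection matrices are hypothetical choices made only to keep the example readable.

```python
import math


def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)]
            for row in a]


def softmax(row):
    """Numerically stable softmax over one row."""
    peak = max(row)
    exps = [math.exp(v - peak) for v in row]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(x, w_q, w_k, w_v):
    """Single-head scaled dot-product self-attention."""
    q, k, v = matmul(x, w_q), matmul(x, w_k), matmul(x, w_v)
    d_k = len(k[0])
    k_t = [list(col) for col in zip(*k)]             # transpose of K
    scores = [[s / math.sqrt(d_k) for s in row]      # scaled similarity matrix
              for row in matmul(q, k_t)]
    weights = [softmax(row) for row in scores]       # one distribution per time step
    return matmul(weights, v), weights


# Toy sequence: 3 time steps, 2 features; identity projections (assumed).
x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
identity = [[1.0, 0.0], [0.0, 1.0]]
output, weights = self_attention(x, identity, identity, identity)
```

Each row of `weights` sums to one and tells how strongly a given time step attends to every other step, which is the adaptive weight assignment described above.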

2.3.3. Establishment of the MSCSO + SA TCN Power Load Forecasting Model

Since the hyperparameters (kernel size, number of filters, and batch size) in the TCN model significantly affect its forecasting performance, the MSCSO algorithm is employed to optimize these parameters and construct the MSCSO + SA TCN load forecasting model. The specific implementation steps are detailed below, and the model construction process is illustrated in Figure 13.

- (1) UMAP is applied to reduce the dimensionality of the historical power load data, and the output data are used as the model input data.
- (2) The input data obtained in step (1) are divided into a testing set and a training set in a ratio of 30% to 70%.
- (3) Identify the important parameters in SA TCN that require optimization (kernel size, number of filters, and batch size) and use MSCSO to optimize the parameter set.
- (4) Assign the optimal parameter set obtained by MSCSO to the SA TCN.
- (5) Train the SA TCN power load forecasting model using the training set.
- (6) Evaluate the forecasting performance of SA TCN using the testing set data.
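Steps (2) to (4) can be sketched as two small helpers: one that splits the reduced data 70% to 30%, and one that turns a continuous optimizer position into integer hyperparameters. This is only an illustrative sketch: it assumes the split is chronological (the text does not state the ordering), and the function names, the search bounds, and the example position vector are all hypothetical.

```python
def chronological_split(samples, train_ratio=0.7):
    """Split a time-ordered sequence into training and testing parts."""
    cut = round(len(samples) * train_ratio)
    return samples[:cut], samples[cut:]


def decode_position(position, bounds):
    """Round and clip an optimizer position to integer hyperparameters."""
    params = {}
    for value, (name, (low, high)) in zip(position, bounds.items()):
        params[name] = int(min(high, max(low, round(value))))
    return params


# Hypothetical search bounds for the three optimized hyperparameters.
bounds = {
    "kernel_size": (2, 8),
    "num_filters": (16, 128),
    "batch_size": (16, 256),
}

samples = list(range(10))                     # stand-in for UMAP output rows
train_set, test_set = chronological_split(samples)
params = decode_position([3.6, 47.2, 31.9], bounds)
```

In a full pipeline, the objective passed to the optimizer would train the SA TCN with the decoded parameters on the training set and return a validation error, so that the best position corresponds to the parameter set assigned in step (4).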