Modeling Based on Machine Learning and Synthetic Generated Dataset for the Needs of Multi-Criteria Decision-Making Forensics

Abstract

1. Introduction

- Atmospheric variables (temperature, humidity, pressure, wind speed, light, …);

- Physical variables (target distance, trigger pull force, …);

- Biomechanical (grip stability, …);

- Behavioral variables (shooter experience, shooter heart rate, …).

- First, we examined a multi-layer model that integrates MCDM and ML methods. In the first layer, MCDM methods are used to verify that the dataset supplied to the model is suitable. In the second layer, an ensemble methodology integrates two widely used approaches, regression and filter-based feature selection with classification algorithms, into a single ensemble ML model. In the third and final layer, a voting ensemble makes the final decision. The proposed model is thus designed as an asymmetric optimization procedure. The integration aims to leverage the strengths of each method to improve overall performance. Given the complexity of the problem and the wide array of available algorithms and integration strategies for ensemble modeling, the proposed model provides a flexible foundation for future extensions and refinements. To the authors’ knowledge, a model combining modern ML techniques and MCDM in the proposed configuration for the forensic analysis of handgun selection has not previously been addressed in the literature.

- Second, the proposed methodology was evaluated through a case study involving the forensic assessment of the U.S. Army’s 1985 decision to adopt the Beretta 92 handgun in place of the previously used Colt 1911. To enhance the realism of the analysis, the Glock 17, the third most widely used handgun globally, was included in the evaluation [18]. Due to the impracticality of conducting real-world experiments and the lack of publicly available datasets on factors affecting handgun accuracy, the authors utilized synthetic data generated using ChatGPT-4, one of the most prominent AI-based text generation tools, for model evaluation.

- First (conditional) hypothesis: It is possible to use synthetically generated data from the ChatGPT-4 AI tool for this type of forensic analysis.

- Second (final) hypothesis: It is possible to construct a novel ensemble model that solves the problem of handgun type selection in a more effective manner than existing state-of-the-art approaches.

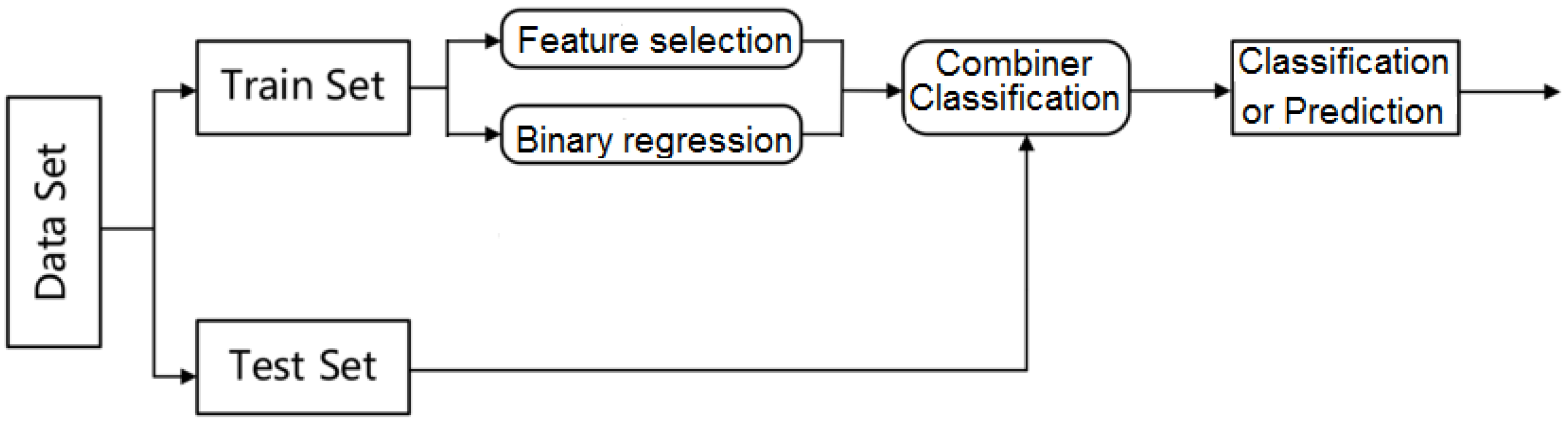

2. Materials and Methods

- The Input Layer, which handles essential dataset preparation and validation for its suitability in solving the given problem, using MCDM methodology;

- The Base Layer, which integrates two widely adopted approaches—classical regression techniques and modern ML-based feature selection—into a unified ensemble model through an asymmetric optimization procedure;

- The Output Layer, which applies a simple voting mechanism to determine the final decision based on the number of significant factors shared across the considered handgun types.

2.1. Methods

2.1.1. Logistic (Binary) Regression

2.1.2. Classification Algorithms

2.1.3. Feature Selection Techniques

- Filter methods, such as Relief, CorrelationAttributeEval, InfoGain, etc.;

- Wrapper methods, such as Greedy Stepwise, BestFirst, Genetic Search, etc.;

- Embedded methods, which combine the strengths of filters and wrappers, for example, Ridge Regression and decision tree-based algorithms like NBTree.
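As an illustration of the filter group, the sketch below ranks attributes by the absolute Pearson correlation between each attribute and the class, which is the idea behind correlation-based attribute evaluation. It is a minimal sketch, not the WEKA implementation: the function names `pearson` and `rank_by_correlation` and the toy values are hypothetical and are not taken from the paper's datasets.

```python
from math import sqrt
from typing import Dict, List, Sequence


def pearson(x: Sequence[float], y: Sequence[float]) -> float:
    """Pearson correlation coefficient between two equally long sequences."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    spread_x = sqrt(sum((a - mean_x) ** 2 for a in x))
    spread_y = sqrt(sum((b - mean_y) ** 2 for b in y))
    return cov / (spread_x * spread_y)


def rank_by_correlation(columns: Dict[str, Sequence[float]],
                        target: Sequence[float]) -> List[str]:
    """Order attribute names from most to least correlated with the class."""
    scores = {name: abs(pearson(col, target)) for name, col in columns.items()}
    return sorted(scores, key=scores.get, reverse=True)


# Hypothetical toy data: 1 = hit, 0 = miss.
outcome = [1, 0, 1, 0, 1]
attributes = {
    "Humidity": [50, 51, 49, 50, 50],
    "Wind_Speed": [2, 9, 3, 8, 1],
}
ranking = rank_by_correlation(attributes, outcome)
```

A filter of this kind scores each attribute independently of any classifier, which is what distinguishes it from the wrapper methods listed above.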

- Filter methods

- Wrapper methods

| Algorithm 1: The greedy search technique |
|---|
| 1. The solution set is empty at the beginning. |
| 2. In each step, an item is added to the solution set, continuing until a final solution is reached. |
| 3. The current item is kept only if the solution set remains feasible. |
| 4. Otherwise, the item is rejected and is never considered again. |
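The four steps of Algorithm 1 can be sketched in a few lines of Python. This is a generic illustration under stated assumptions: the function name `greedy_select`, the feasibility rule, and the candidate list are hypothetical and are not the evaluation criterion used by the authors' wrapper method.

```python
from typing import Callable, Iterable, List


def greedy_select(candidates: Iterable[str],
                  is_feasible: Callable[[List[str]], bool]) -> List[str]:
    """Build a solution one item at a time, following Algorithm 1."""
    solution: List[str] = []           # step 1: start from an empty solution set
    for item in candidates:            # step 2: consider the next item
        trial = solution + [item]
        if is_feasible(trial):         # step 3: keep the item if still feasible
            solution = trial
        # step 4: otherwise the item is dropped and never revisited
    return solution


# Hypothetical feasibility rule: at most three attributes may be selected.
picked = greedy_select(
    ["Wind_Speed", "Precision", "Humidity", "Heart_Rate"],
    lambda subset: len(subset) <= 3,
)
```

In a wrapper setting, the feasibility check would typically be replaced by a test of whether adding the attribute improves a classifier's performance.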

2.1.4. Ensemble Methods

- Bootstrap aggregating (Bagging);

- Boosting;

- Voting;

- Stacking.

- Ensemble learning combines multiple ML and other types of algorithms into a single unified model to improve overall performance. Bagging primarily aims to reduce variance, boosting focuses on minimizing bias, while stacking seeks to enhance classification or prediction accuracy. For these reasons, the authors applied stacking in their proposed model to address the handgun type selection forensics problem, formulating it as a binary classification task.

- Ensemble learning offers several key advantages, including improved accuracy, interpretability, robustness, and model combination.

- It is important to note that in many real-world problems—including the case study presented in this paper—real-time computation is not a strict constraint. Moreover, the continuous advancement of computing technologies enables increasingly faster processing, making the additional computational demands of ensemble models less problematic.

- Voting

- Majority Voting: Each model votes for a class label, and the class receiving the majority of votes is selected as the final classification or prediction. This is the approach used by the authors to make the final decision in their model. In the event of a tie (i.e., equal number of votes), a tie-breaking rule is applied, such as selecting the factor with the higher associated probability.

- Weighted Voting: Each model’s vote is assigned a weight based on its individual performance, giving more influence to stronger models.

- Soft Voting: This scheme is used when models provide probability estimates or confidence scores for each class label rather than discrete class predictions.
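The three voting schemes above can be compared with a short sketch. The function names and the example votes are hypothetical; in particular, the tie rule in `majority_vote` (the first label seen wins) is a simplification and not the probability-based tie-breaking rule mentioned for the authors' model.

```python
from collections import Counter
from typing import Dict, Sequence


def majority_vote(labels: Sequence[str]) -> str:
    """Return the label with the most votes (first-seen label wins a tie)."""
    return Counter(labels).most_common(1)[0][0]


def weighted_vote(labels: Sequence[str], weights: Sequence[float]) -> str:
    """Sum each model's weight per label and return the heaviest label."""
    totals: Dict[str, float] = {}
    for label, weight in zip(labels, weights):
        totals[label] = totals.get(label, 0.0) + weight
    return max(totals, key=totals.get)


def soft_vote(probabilities: Sequence[Dict[str, float]]) -> str:
    """Average per-class probabilities across models and pick the largest."""
    classes = probabilities[0].keys()
    averages = {
        c: sum(p[c] for p in probabilities) / len(probabilities) for c in classes
    }
    return max(averages, key=averages.get)


# Hypothetical outputs of three models for one shot.
votes = ["hit", "miss", "hit"]
hard = majority_vote(votes)
weighted = weighted_vote(votes, [0.2, 0.9, 0.3])
soft = soft_vote([
    {"hit": 0.60, "miss": 0.40},
    {"hit": 0.20, "miss": 0.80},
    {"hit": 0.55, "miss": 0.45},
])
```

The example is chosen so that the schemes disagree: the same three models yield "hit" under majority voting but "miss" once model weights or class probabilities are taken into account.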

- Stacking

2.2. Materials

- ChatGPTsyntheticGeneratedDatasetHandgunShooting-Colt1911.xls;

- ChatGPTsyntheticGeneratedDatasetHandgunShooting-Bereta92.xls;

- ChatGPTsyntheticGeneratedDatasetHandgunShooting-Glock17.xls.

2.2.1. Generation and Preprocessing of the Required Dataset

- ChatGPTsyntheticGeneratedDatasetHandgunShooing-Colt1911Bereta92Glock17.xls;

- ChatGPTsyntheticGeneratedDatasetHandgunShooting-Colt1911.xls;

- ChatGPTsyntheticGeneratedDatasetHandgunShooting-Bereta92.xls;

- ChatGPTsyntheticGeneratedDatasetHandgunShooting-Glock17.xls.

2.2.2. MCDM Evaluation of the Synthetic Generated Data to Solve the Considered Problem

- AHP

- TOPSIS

3. Proposed Ensemble Method for Handgun Type Selection Forensics

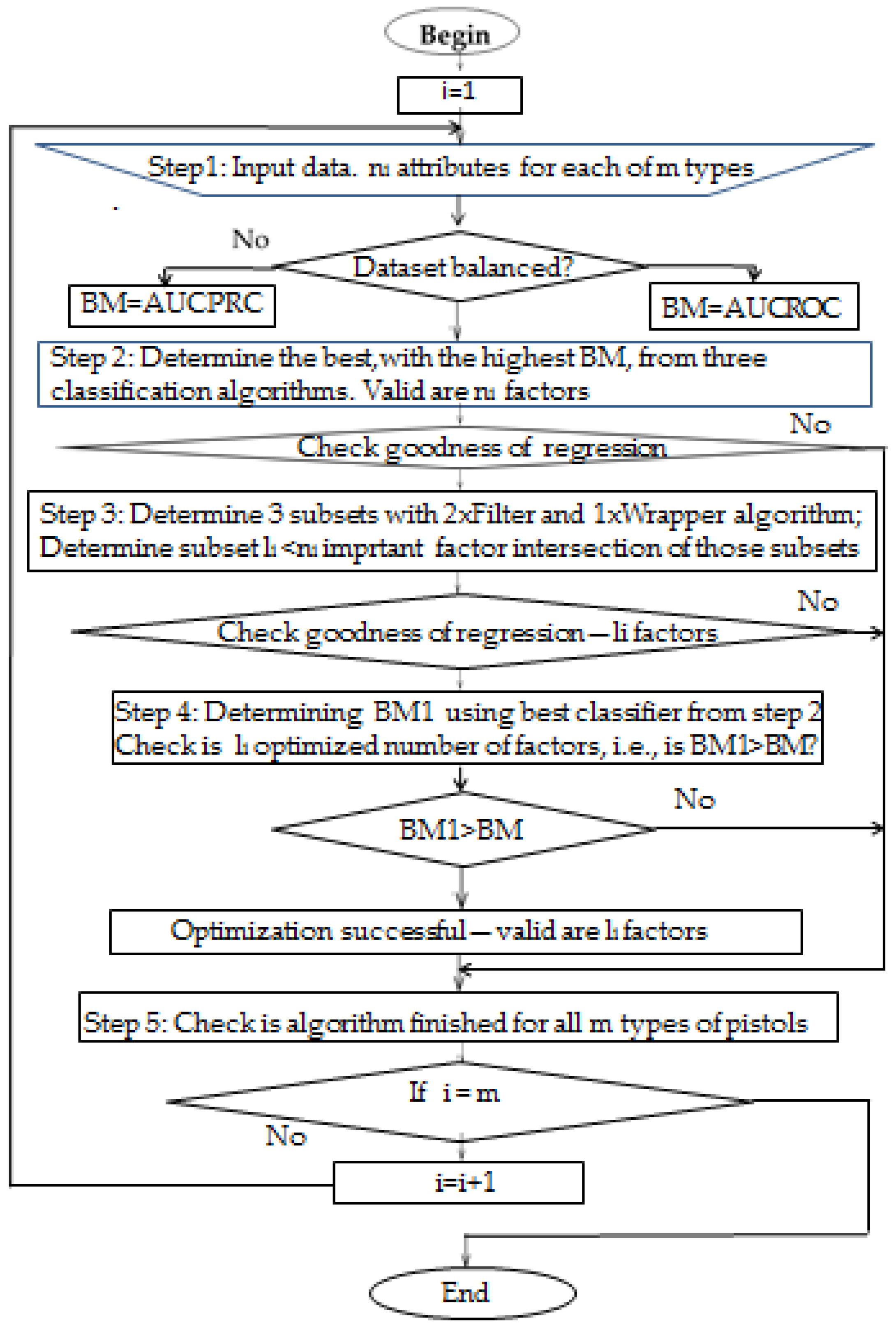

| Algorithm 2: Determining biomechanical and atmospheric predictors |
|---|
| Input: for each of the m types of pistols, a dataset with $n_i$ valid attributes |
| * 1. Input the preprocessed, verified data for one of the m types of pistols; check the balance of the uploaded data; IF the dataset is balanced, BM = AUCROC; ELSE BM = AUCPRC; GO TO next step |
| ** 2. Determine which of the three classification algorithms (AB, PART, and RF) has the highest value of BM. Perform regression with the $n_i$ attributes; check regression goodness; IF regression OK (Hosmer–Lemeshow), GO TO next step; ELSE retain the $n_i$ attributes and GO TO step 5 |
| *** 3. Implement feature selection with two filter algorithms and one wrapper algorithm; determine the reduced set of factors as the intersection of the obtained results; check regression goodness with the reduced set; IF regression OK, retain the reduced set and GO TO next step; ELSE retain the $n_i$ attributes and GO TO step 5 |
| **** 4. Determine BM1 with the best classifier determined in step 2; check BM1 against BM; IF the check is passed, retain the reduced set and GO TO next step; ELSE retain the $n_i$ attributes and GO TO step 5 |
| ***** 5. Check whether the algorithm has finished for all types of pistols; IF not, GO TO step 1; ELSE GO TO end |

- * The input consists of already preprocessed and validated data, where for each of the m types of pistols, $n_i$ attributes influencing shooting success are considered. The dataset is then checked for class balance. If the dataset is balanced, the primary performance metric for evaluating the proposed model will be BM = AUC-ROC; otherwise, BM = AUC-PRC will be used.

- ** A binary logistic regression is performed using 11 biomechanical attributes ($n_i$ in the general case) as predictors. The dependent variable represents the binary outcome, either a hit or a miss. Any predictors that exhibit unacceptable levels of multicollinearity must be excluded from the proposed model. A classification table is used to evaluate the model’s classification accuracy and to compare it against the accuracy expected from random classification. To assess the proportion of variance explained by the model, the Cox–Snell R Square and Nagelkerke R Square statistics are calculated. Finally, the Hosmer and Lemeshow test is applied as the key indicator of the model’s goodness of fit. If the test indicates an adequate fit, the algorithm continues with the next step; otherwise, it continues with Step 5 without optimizing the number of factors, which remain unchanged. The authors propose that the classification performance of the model be evaluated using the AUC-ROC or AUC-PRC, depending on whether the dataset is balanced, in accordance with standard measures for binary classification problems [30,31]. To determine the best-performing classification algorithm, three algorithms representing distinct classification paradigms, as provided in the WEKA tool, are initially tested: Random Forest (from the Decision Tree group), PART (from the Rule-based group), and AdaBoost (from the Meta-learning group). The best-performing algorithm among these is selected for use in Step 4, where it evaluates the attribute subset produced by the feature selection methods of Step 3.

- *** Attribute selection is performed using three feature selection algorithms: two from the filter group (ReliefF and CorrelationAttributeEval) and one from the wrapper group (GreedyStepwise). The intersection of the three resulting attribute sets identifies the common attributes. At the end of this step, the quality of the selected attribute subset is evaluated using binary logistic regression, as described in Step 2, with the regression model now constructed using only the attributes retained after selection. Model evaluation is performed using a classification table, Cox–Snell R Square, Nagelkerke R Square, and the Hosmer and Lemeshow test, as previously explained. If the evaluation confirms the adequacy of the reduced model, the procedure continues using the optimized subset of attributes. Otherwise, the process terminates with the original attributes retained in the model.

- **** The value of the performance measure BM, defined as in Step 1 and now denoted BM1, is determined using the most effective classifier identified in Step 2 of this algorithm. BM1 is then checked against BM: if the check is passed, the reduced subset of factors is adopted as the optimized subset; otherwise, the optimized subset consists of the original $n_i$ attributes.

- ***** In the final step of the proposed algorithm, it is checked whether all three datasets (or m datasets in the general case) corresponding to the considered types of pistols have been processed. If this condition is met, the algorithm terminates; otherwise, it returns to Step 1 and continues the procedure.
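Two of the decisions in Algorithm 2 are simple enough to sketch directly: choosing the performance measure from the class balance (Step 1) and intersecting the attribute sets returned by the feature selectors (Step 3). The sketch below is illustrative only. The balance tolerance of 0.1, the function names, and the example selector outputs are assumptions, since the source does not state a numeric balance threshold or list the individual selector results here.

```python
from typing import Sequence, Set


def choose_metric(labels: Sequence[int], tolerance: float = 0.1) -> str:
    """Pick AUC-ROC for a balanced binary dataset, AUC-PRC otherwise.

    `tolerance` (allowed distance of the positive share from 0.5) is an
    assumed threshold, not a value given in the source.
    """
    positive_share = sum(labels) / len(labels)
    return "AUC-ROC" if abs(positive_share - 0.5) <= tolerance else "AUC-PRC"


def common_attributes(selections: Sequence[Set[str]]) -> Set[str]:
    """Intersect the attribute sets returned by the feature selectors."""
    return set.intersection(*selections)


# Hypothetical hit (1) / miss (0) labels and selector outputs.
metric_balanced = choose_metric([1, 0, 1, 0, 1, 0, 1, 0, 1, 0])
metric_skewed = choose_metric([1, 0, 0, 0, 0, 0, 0, 0, 0, 0])
kept = common_attributes([
    {"Wind_Speed", "Precision", "Humidity"},      # e.g. first filter
    {"Wind_Speed", "Precision", "Temperature"},   # e.g. second filter
    {"Precision", "Wind_Speed", "Heart_Rate"},    # e.g. wrapper
])
```

Taking the intersection keeps only attributes that all three selectors agree on, which makes the reduced set conservative by construction.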

4. Results and Findings

4.1. First Layer of the Proposed Model: Checking the Usability of the Synthetic Generated Dataset

4.2. Second Layer of Proposed Model: Stacking Ensemble for Determining Most Important Factors

- The following eight important factors for the Colt 1911 dataset:

- Target distance, shooter experience, grip stability, trigger pull force, light, temperature, humidity, and precision.

- For the Glock 17 dataset, seven important factors were identified:

- Wind speed, light, heart rate, ammunition quality, temperature, humidity, and precision.

4.3. Third Layer of Proposed Model: Final Decision Making Using Voting

- It can be concluded that only three of the considered factors are not important for successful shooting using the three types of pistols considered: Colt 1911, Beretta 92, and Glock 17.

- It can be concluded that the Beretta 92 pistol shares seven out of eight significant factors with the Colt 1911, while only one factor is not common. In contrast, the Glock 17 shares only four significant factors with the Colt 1911, while the remaining four are not matched. A logical conclusion follows from this analysis: the decision made by the U.S. Army to replace the Colt 1911 with the Beretta 92 was well founded and justified.
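The comparison underlying this vote is a count of shared significant factors, which can be checked with set operations. The sketch below uses the Colt 1911 and Glock 17 factor lists given in Section 4.2; the Beretta 92 list is not reproduced in this section, so it is left out rather than guessed. The variable names are illustrative.

```python
# Significant factors as listed in Section 4.2.
colt_1911 = {
    "target distance", "shooter experience", "grip stability",
    "trigger pull force", "light", "temperature", "humidity", "precision",
}
glock_17 = {
    "wind speed", "light", "heart rate", "ammunition quality",
    "temperature", "humidity", "precision",
}

shared = colt_1911 & glock_17          # factors significant for both pistols
unmatched = colt_1911 - glock_17       # Colt 1911 factors with no Glock 17 match
```

With these lists, the intersection contains four factors and four Colt 1911 factors remain unmatched, in agreement with the counts stated above.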

4.4. Discussion of the Obtained Results

- The results demonstrated the feasibility of constructing an ensemble ML model capable of forensically evaluating the correctness of a weapon selection decision.

- The results indicate that such a model can be constructed and applied to similar forensic problems in other fields.

- The conditional hypothesis, asserting that it is possible to use synthetically generated data—such as that produced by ChatGPT-4 or similar tools—in decision analysis;

- The final hypothesis, stating that it is possible to construct a novel ensemble model that addresses the problem of handgun type selection forensics more effectively than existing state-of-the-art models.

- Reducing overfitting: It helps identify overfitting issues by evaluating model performance on data that was not seen during training.

- Providing a realistic assessment of performance: By using different subsets for training and validation, it offers a more robust evaluation of how well the model generalizes to new, unseen data.

- Detecting data quality issues: If the synthetic data does not accurately represent real-world data, poor performance on validation sets may reveal underlying quality issues.

- Improving model generalization: By validating models on different data partitions, it ensures the model learns generalizable patterns rather than memorizing training data.
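The benefits listed above depend on evaluating the model on partitions it was not trained on. One common way to build such partitions is k-fold splitting, sketched below. The choice of k-fold and the function name `k_fold_indices` are assumptions made for illustration, since this section does not specify the partitioning scheme.

```python
from typing import List, Tuple


def k_fold_indices(n_samples: int, k: int) -> List[Tuple[List[int], List[int]]]:
    """Return k (training, validation) index pairs covering all samples."""
    # Assign sample i to fold i % k so fold sizes differ by at most one.
    folds = [list(range(i, n_samples, k)) for i in range(k)]
    splits = []
    for i, validation in enumerate(folds):
        training = [idx for j, fold in enumerate(folds) if j != i for idx in fold]
        splits.append((training, validation))
    return splits


splits = k_fold_indices(n_samples=10, k=5)
```

Each sample appears in exactly one validation fold, so every record contributes to both training and evaluation across the k rounds.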

- To explore the possibility of using new synthetic multi-criteria decision analysis approaches, such as fuzzy methods and group decision-making techniques, to enhance the characteristics of the proposed model [64]. Of particular interest for the first layer of the model are approaches related to large-group decision making, such as the rough integrated asymmetric cloud model under a multi-granularity linguistic environment [65].

- To include a broader set of classification and feature selection algorithms in order to introduce n-modular redundancy into the second layer of the proposed ensemble algorithm [66]. This enhancement aims to improve prediction performance in similar problem domains across various fields.

- To incorporate an ablation study within the ensemble of machine learning (ML) and multi-criteria decision-making (MCDM) components. Such a study would systematically remove or disable components of the ensemble model to evaluate their individual contributions, thereby identifying which components are most impactful and guiding further performance improvements.

- Given that the model is structurally organized for forensic decision analysis, it is worthwhile to explore its application in other domains beyond the choice of weapons. Potential application areas include transportation and traffic [67], biology [68], medicine [69], economics [70], and the public sector [71].

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| MCDM | Multi-criteria decision making |

| ML | Machine learning |

| ROC | Receiver operating characteristic |

| PRC | Precision–recall curve |

| AUCROC | Area under the curve ROC |

| AUCPRC | Area under the curve PRC |

| AHP | Analytic hierarchy process |

| TOPSIS | Technique for order preference by similarity to ideal solution |

| LLM | Large language model |

| IT | Information technologies |

References

- Aleksić, A.; Nedeljković, S.; Jovanović, M.; Ranđelović, M.; Vuković, M.; Stojanović, V.; Radovanović, R.; Ranđelović, M.; Ranđelović, D. Prediction of Important Factors for Bleeding in Liver Cirrhosis Disease Using Ensemble Data Mining Approach. Mathematics 2020, 8, 1887.

- Kemiveš, A.; Ranđelović, M.; Barjaktarović, L.; Đikanović, P.; Čabarkapa, M.; Ranđelović, D. Identifying Key Indicators for Successful Foreign Direct Investment through Asymmetric Optimization Using Machine Learning. Symmetry 2024, 16, 1346.

- Ranđelović, M.; Aleksić, A.; Radovanović, R.; Stojanović, V.; Čabarkapa, M.; Ranđelović, D. One Aggregated Approach in Multidisciplinary Based Modeling to Predict Further Students’ Education. Mathematics 2022, 10, 2381.

- Aleksić, A.; Ranđelović, M.; Ranđelović, D. Using Machine Learning in Predicting the Impact of Meteorological Parameters on Traffic Incidents. Mathematics 2023, 11, 479.

- Mikhaylova, S.S.; Grineva, N.V. Development of a binary classification model based on small data using machine learning methods. Econ. Probl. Leg. Pract. 2024, 20, 129–140.

- Mišić, J.; Kemiveš, A.; Ranđelović, M.; Ranđelović, D. An Asymmetric Ensemble Method for Determining the Importance of Individual Factors of a Univariate Problem. Symmetry 2023, 15, 2050.

- Guyon, I.; Elisseeff, A. An Introduction to Variable and Feature Selection. J. Mach. Learn. Res. 2003, 3, 1157–1182.

- White, M.D. Identifying Situational Predictors of Police Shootings Using Multivariate Analysis. Polic. Int. J. Police Strateg. Manag. 2002, 25, 726–751.

- Mehmet Asaf Düzena, M.A.; Bölükbaşıa, I.B.; Çalıka, E. How to combine ML and MCDM techniques: An extended bibliometric analysis. J. Innov. Eng. Nat. Sci. 2024, 4, 642–657.

- Dagdeviren, M.; Yavuz, S.; Kılınç, N. Weapon Selection Using the AHP and TOPSIS Methods under Fuzzy Environment. Expert Syst. Appl. 2009, 36, 8143–8151.

- Ashari, H.; Parsaei, M. Application of the multi-criteria decision method ELECTRE III for the Weapon selection. Decis. Sci. Lett. 2014, 3, 511–522.

- Jiang, J.; Liu, X.; Garg, H.; Zhang, S. Large group decision-making based on interval rough integrated cloud model. Adv. Eng. Inform. 2023, 56, 101964.

- Kaya, V.; Tuncer, S.; Baran, A. Detection and Classification of Different Weapon Types Using Deep Learning. Appl. Sci. 2021, 11, 7535.

- Jenkins, S.; Lowrey, D. A Comparative Analysis of Current and Planned Small Arms Weapon Systems; MBA Professional Report; Naval Postgraduate School: Monterey, CA, USA, 2004.

- Kukolj, M. Pištolj ili revolver za starješine JNA. Vojnoteh. Glas. 1991, 39, 24–34.

- Mason, B.R.; Cowan, L.F.; Gonczol, T. Factors Affecting Accuracy in Pistol Shooting. In EXCEL Publication of the Australian Institute of Sport; Fricker, P., Telford, R., Eds.; Australian Institute of Sport: Canberra, Australia, 1990; Volume 6, pp. 2–6.

- Goonetilleke, R.S.; Hoffmann, E.R.; Lau, W.C. Pistol Shooting Accuracy as Dependent on Experience, Eyes Being Opened, and Available Viewing Time. Appl. Ergon. 2009, 40, 500–508.

- Verma, G.K.; Dhillon, A. A Handheld Gun Detection using Faster R-CNN Deep Learning. In Proceedings of the 7th International Conference on Computer and Communication Technology, Allahabad, India, 24–26 November 2017; pp. 84–88.

- Pugliese, R.; Regondi, S.; Marini, R. Machine Learning-Based Approach: Global Trends, Research Directions, and Regulatory Standpoints. Data Sci. Manag. 2021, 4, 19–29.

- Sarker, I.H. Machine Learning: Algorithms, Real-World Applications and Research Directions. SN Comput. Sci. 2021, 2, 160.

- Nayerifard, T.; Amintoosi, H.; Bafghi, A.; Dehghantanha, A. Machine Learning in Digital Forensics: A Systematic Literature Review. arXiv 2023, arXiv:2306.04965.

- Krivchenkov, A.; Misnevs, B.; Pavlyuk, D. Intelligent Methods in Digital Forensics: State of the Art. In Reliability and Statistics in Transportation and Communication, RelStat 2018; Kabashkin, I., Yatskiv (Jackiva), I., Prentkovskis, O., Eds.; Lecture Notes in Networks and Systems; Springer: Cham, Switzerland, 2019; Volume 68.

- Ubani, S.; Polat, S.O.; Nielsen, R. ZeroShotDataAug: Generating and Augmenting Training Data with ChatGPT. arXiv 2023, arXiv:2304.14334.

- Lingo, R. Exploring the Potential of AI-Generated Synthetic Datasets: A Case Study on Telematics Data with ChatGPT. arXiv 2023, arXiv:2306.13700.

- Domínguez-Almendros, S.; Benítez-Parejo, N.; Gonzalez-Ramirez, A.R. Logistic Regression Models. Allergol. Immunopathol. 2011, 39, 295–305.

- SPSS Statistics 17.0 Brief Guide. Available online: http://www.sussex.ac.uk/its/pdfs/SPSS_Statistics_Brief_Guide_17.0.pdf (accessed on 20 March 2025).

- Romero, C.; Ventura, S.; Espejo, P.; Hervas, C. Data Mining Algorithms to Classify Students. In Proceedings of the 1st International Conference on Educational Data Mining (EDM08), Montreal, QC, Canada, 20–21 June 2008; pp. 20–21.

- Witten, H.; Eibe, F. Data Mining: Practical Machine Learning Tools and Techniques, 2nd ed.; Morgan Kaufmann: Burlington, MA, USA, 2005.

- Benoit, G. Data Mining. Annu. Rev. Inf. Sci. Technol. 2002, 36, 265–310.

- Gong, M. A Novel Performance Measure for Machine Learning Classification. Int. J. Manag. Inf. Technol. 2021, 13, 11–19.

- Watson, D.; Reichard, K.; Isaacson, A. A Case Study Comparing ROC and PRC Curves for Imbalanced Data. Annu. Conf. PHM Soc. 2023, 15.

- University of Waikato. WEKA. Available online: http://www.cs.waikato.ac.nz/ml/weka (accessed on 20 March 2025).

- Friedman, J.; Hastie, T.; Tibshirani, R. Additive Logistic Regression: A Statistical View of Boosting. Ann. Stat. 2000, 28, 337–407.

- Loh, W.Y. Classification and Regression Trees. Wiley Interdiscip. Rev. Data Min. Knowl. Discov. 2011, 1, 14–23.

- Rucco, M.; Giannini, F.; Lupinetti, K.; Monti, M. A methodology for part classification with supervised machine learning. Artif. Intell. Eng. Des. Anal. Manuf. 2018, 33, 1–14.

- Kumbhakar, S.C.; Lovell, C.A.K. Stochastic Frontier Analysis; Cambridge University Press: Cambridge, UK, 2000.

- MacKay, D. Information Theory, Inference, and Learning Algorithms; Cambridge University Press: Cambridge, UK, 2003.

- Mitchell, T. Machine Learning; McGraw-Hill Science/Engineering/Math: New York, NY, USA, 1997.

- Russell, S.; Norvig, P. Artificial Intelligence: A Modern Approach; Prentice Hall: Hoboken, NJ, USA, 2003.

- Urbanowicz, R.J.; Meeker, M.; La Cava, W.; Olson, R.S.; Moore, J.H. Relief-Based Feature Selection: Introduction and Review. arXiv 2018, arXiv:1711.08421.

- Sugianela, Y.; Ahmad, T. Pearson Correlation Attribute Feature Selection Evaluation-based for Intrusion Detection System. In Proceedings of the International Conference on Smart Technology and Applications (ICoSTA) 2020, Surabaya, Indonesia, 20 February 2020; pp. 1–5.

- Blessie, E.C.; Eswaramurthy, K. Sigmis: A Feature Selection Algorithm Using Correlation-Based Method. J. Algorithms Comput. Technol. 2012, 6, 385–394.

- Programiz. Greedy Algorithm. Available online: https://www.programiz.com/dsa/greedy-algorithm (accessed on 15 March 2025).

- Girish, S.; Chandrashekar, F. A Survey on Feature Selection Methods. Comput. Electr. Eng. 2014, 40, 16–28.

- Zhou, Z.H. Ensemble Methods Foundations and Algorithm; Chapman and Hall/CRC: New York, NY, USA, 2012.

- Ahad, A. Vote-Based: Ensemble Approach. Sak. Univ. J. Sci. 2021, 25, 858–866.

- Yousefi, Z.; Alesheikh, A.A.; Jafari, A.; Torktatari, S.; Sharif, M. Stacking Ensemble Technique Using Optimized Machine Learning Models with Boruta–XGBoost Feature Selection for Landslide Susceptibility Mapping: A Case of Kermanshah Province, Iran. Information 2024, 15, 689.

- Goyal, M.; Mahmoud, Q.H. A Systematic Review of Synthetic Data Generation Techniques Using Generative AI. Electronics 2024, 13, 3509.

- SANNA. Available online: https://nb.vse.cz/~jablon/sanna.htm (accessed on 15 May 2025).

- AHP Online System—AHP–OS. Available online: https://bpmsg.com/ahp/ahp.php (accessed on 15 May 2025).

- Saaty, T.L. The Analytic Hierarchy Process; McGraw-Hill: New York, NY, USA, 1980.

- Yoon, K.; Hwang, C.L. Multiple Attribute Decision Making: An Introduction; Sage Publications: Thousand Oaks, CA, USA, 1995; Volume 104.

- Shah, M. Re: Could Someone Explain Me about Nagelkerke R Square in Logit Regression Analysis? 2023. Available online: https://www.researchgate.net/post/Could_someone_explain_me_about_Nagelkerke_R_Square_in_Logit_Regression_analysis/63f330cfe22cf468000037c9/citation/download (accessed on 10 May 2025).

- Hosmer, D.W.; Hosmer, T.; le Cessie, S.; Lemeshow, S. A comparison of goodness-of-fit tests for the logistic regression model. Stat. Med. 1997, 16, 965–980.

- Hosmer, D.W.; Lemeshow, S. Applied Logistic Regression, 2nd ed.; John Wiley and Sons Inc.: New York, NY, USA, 2000.

- Monteiro, A.d.R.D.; Feital, T.d.S.; Pinto, J.C. A Numerical Procedure for Multivariate Calibration Using Heteroscedastic Principal Components Regression. Processes 2021, 9, 1686.

- Hair, J.F.; Anderson, R.E.; Tatham, R.L.; Black, W.C. Multivariate Data Analysis; Prentice-Hall, Inc.: New York, NY, USA, 1998.

- Yang, T.; Ying, Y. AUC Maximization in the Era of Big Data and AI: A Survey. ACM Comput. Surv. 2022, 37, 1–37.

- Gorriz, J.M.; Segovia, F.; Ramirez, J.; Ortiz, A.; Suckling, J. Is K-fold cross validation the best model selection method for Machine Learning? arXiv 2024, arXiv:2401.16407.

- Emerson, P. Majority Voting—A Critique Preferential Decision-Making—An Alternative. J. Politics Law 2024, 17, 47–57.

- Abramov, M. Ensuring Quality and Realism in Synthetic Data. Available online: https://keymakr.com/blog/ensuring-quality-and-realism-in-synthetic-data/ (accessed on 20 July 2025).

- Abbasi-Azar, M.; Teimouri, M.; Nikray, M. Blind protocol identification using synthetic dataset: A case study on geographic protocols. Forensic Sci. Int. Digit. Investig. 2025, 53, 301911.

- Göbel, T.; Schäfer, T.; Hachenberger, J.; Türr, J.; Baier, H. A Novel Approach for Generating Synthetic Datasets for Digital Forensics. In Advances in Digital Forensics XVI. DigitalForensics 2020; Peterson, G., Shenoi, S., Eds.; IFIP Advances in Information and Communication Technology; Springer: Cham, Switzerland, 2020; Volume 589.

- Anand, M.C.; Kalaiarasi, K.; Martin, N.; Ranjitha, B.; Priyadharshini, S.S.; Tiwari, M. Fuzzy C-Means Clustering with MAIRCA -MCDM Method in Classifying Feasible Logistic Suppliers of Electrical Products. In Proceedings of the 2023 First International Conference on Cyber Physical Systems, Power Electronics and Electric Vehicles (ICPEEV), Hyderabad, India, 28–30 September 2023; pp. 1–7.

- Jiang, J.; Liu, X.; Wang, Z.; Ding, W.; Zhang, S.; Xu, H. Large group decision-making with a rough integrated asymmetric cloud model under multi-granularity linguistic environment. Inf. Sci. 2024, 678, 120994.

- Lyons, E.; Vanderkulk, W. The Use of Triple-Modular Redundancy to Improve Computer Reliability. IBM J. Res. Dev. 1962, 6, 200–209.

- Manzolli, J.A.; Yu, J.; Miranda-Moreno, L. Synthetic multi-criteria decision analysis (S-MCDA): A new framework for participatory transportation planning. Transp. Res. Interdiscip. Perspect. 2025, 31, 101463.

- Sanduleanu, S.; Ersahin, K.; Bremm, J.; Talibova, N.; Damer, T.; Erdogan, M.; Kottlors, J.; Goertz, L.; Bruns, C.; Maintz, D.; et al. Feasibility of GPT-3.5 versus Machine Learning for Automated Surgical Decision-Making Determination: A Multicenter Study on Suspected Appendicitis. AI 2024, 5, 1942–1954.

- Chowdhury, N.K.; Kabir, M.A.; Rahman, M.; Islam, S.M.S. Machine learning for detecting COVID-19 from cough sounds: An ensemble-based MCDM method. Comput. Biol. Med. 2022, 145, 105405.

- Chowdhury, S.J.; Mahi, M.I.; Saimon, S.A.; Urme, A.N.; Nabil, R.H. An Integrated Approach of MCDM Methods and Machine Learning Algorithms for Employees’ Churn Predict. In Proceedings of the 2023 3rd International Conference on Robotics, Electrical and Signal Processing Techniques (ICREST), Dhaka, Bangladesh, 7–8 January 2023; pp. 68–73.

- Fischer-Abaigar, U.; Kern, C.; Barda, N.; Kreuter, F. Bridging the gap: Towards an expanded toolkit for AI-driven decision-making in the public sector. Gov. Inf. Q. 2024, 41, 101976.

| | | Predicted class | |
|---|---|---|---|
| | | Positive | Negative |
| Actual class | Positive | TP (true positive) | FN (false negative) |
| | Negative | FP (false positive) | TN (true negative) |
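The four cells of this table are the inputs to the quantities plotted on ROC and precision-recall curves: the ROC curve plots the true positive rate against the false positive rate, and the PRC plots precision against recall. A minimal sketch of these rates follows; the function name `rates` and the counts in the example are hypothetical and are not results from the paper.

```python
from typing import Dict


def rates(tp: int, fn: int, fp: int, tn: int) -> Dict[str, float]:
    """Derive common binary classification rates from confusion-matrix counts."""
    return {
        "accuracy": (tp + tn) / (tp + fn + fp + tn),
        "precision": tp / (tp + fp),
        "recall": tp / (tp + fn),               # also the true positive rate
        "false_positive_rate": fp / (fp + tn),
    }


# Hypothetical counts for 100 shots.
example = rates(tp=40, fn=10, fp=20, tn=30)
```

Precision and recall ignore the TN cell entirely, which is one reason the precision-recall curve is preferred when the negative class dominates.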

| | C1 | C2 | C3 | C4 | C5 | C6 | C7 | C8 | C9 | C10 | C11 | C12 | C13 | Weights |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Distance_to_Target/C1 | 1 | 1 | 7/5 | 7/3 | 7/3 | 7/3 | 7/5 | 7/5 | 7 | 7 | 7/9 | 1 | 7/9 | 0.107 |
| Wind_Speed/C2 | | 1 | 7/5 | 7/3 | 7/3 | 7/3 | 7/5 | 7/5 | 7 | 7 | 7/9 | 1 | 7/9 | 0.107 |
| Shooter_Experience/C3 | | | 1 | 5/3 | 5/3 | 5/3 | 1 | 1 | 5 | 5 | 5/9 | 5/7 | 5/9 | 0.076 |
| Grip_Stability/C4 | | | | 1 | 1 | 1 | 3/5 | 3/5 | 3 | 3 | 3/9 | 3/7 | 3/9 | 0.046 |
| Trigger_Pull_Force/C5 | | | | | 1 | 1 | 3/5 | 3/5 | 3 | 3 | 3/9 | 3/7 | 3/9 | 0.046 |
| Light_Conditions/C6 | | | | | | 1 | 3/5 | 3/5 | 3 | 3 | 3/9 | 3/7 | 3/9 | 0.046 |
| Heart_Rate/C7 | | | | | | | 1 | 1 | 5 | 5 | 5/9 | 5/7 | 5/9 | 0.076 |
| Ammo_Quality/C8 | | | | | | | | 1 | 5 | 5 | 5/9 | 5/7 | 5/9 | 0.076 |
| Temperature/C9 | | | | | | | | | 1 | 1 | 1/9 | 1/7 | 1/9 | 0.015 |
| Humidity/C10 | | | | | | | | | | 1 | 1/9 | 1/7 | 1/9 | 0.015 |
| Precision/C11 | | | | | | | | | | | 1 | 9/7 | 1 | 0.138 |
| Outcome/C12 | | | | | | | | | | | | 1 | 7/9 | 0.107 |
| Price/C13 | | | | | | | | | | | | | 1 | 0.138 |
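The weights in the last column can be approximately recovered from the pairwise comparison matrix. The sketch below uses the row geometric mean method, a standard AHP approximation. It makes two assumptions that should be read as such: the importance scores 7, 7, 5, 3, 3, 3, 5, 5, 1, 1, 9, 7, 9 are inferred from the ratios in the table rather than stated in the source, and the lower triangle is taken to hold the reciprocals of the upper triangle. The function name `ahp_weights` is illustrative.

```python
from math import prod
from typing import List

# Assumption: per-criterion scores inferred from the ratios in the table (C1..C13).
scores = [7, 7, 5, 3, 3, 3, 5, 5, 1, 1, 9, 7, 9]

# Full reciprocal matrix: entry (i, j) is score_i / score_j.
matrix = [[si / sj for sj in scores] for si in scores]


def ahp_weights(pairwise: List[List[float]]) -> List[float]:
    """Approximate AHP priority weights by normalized row geometric means."""
    n = len(pairwise)
    geometric_means = [prod(row) ** (1.0 / n) for row in pairwise]
    total = sum(geometric_means)
    return [g / total for g in geometric_means]


weights = ahp_weights(matrix)
```

Under these assumptions the matrix is perfectly consistent, so each weight equals its score divided by 65, for example 7/65 ≈ 0.1077 for C1, which agrees with the tabulated 0.107 to three decimals.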

| | 1. Alternative Colt1911 | 2. Alternative Bereta92 | 3. Alternative Glock17 | Weights |
|---|---|---|---|---|
| Distance_to_Target | 31.09893188 | 28.89752522 | 30.10498801 | 0.10700 |
| Wind_Speed | 9.987965214 | 10.13798654 | 9.818743842 | 0.10700 |
| Shooter_Experience | 0.67721519 | 0.662921348 | 0.716463415 | 0.07600 |
| Grip_Stability | 5.48497407 | 5.583323259 | 5.342132363 | 0.04600 |
| Trigger_Pull_Force | 3.523007728 | 3.482858324 | 3.48476578 | 0.04600 |
| Light_Conditions | 560.5809053 | 540.6561038 | 547.8948968 | 0.04600 |
| Heart_Rate | 117.9697391 | 117.5425839 | 119.9949317 | 0.07600 |
| Ammo_Quality | 0.683544304 | 0.643258427 | 0.670731707 | 0.07600 |
| Temperature | 15.02015166 | 14.85945511 | 15.00268663 | 0.01500 |
| Humidity | 50.66014474 | 50.28772977 | 48.44931696 | 0.01500 |
| Precision | 74.45222776 | 74.78560132 | 72.75204747 | 0.13800 |
| Outcome | 0.522151899 | 0.471910112 | 0.548780488 | 0.10700 |
| Price | 74 | 111 | 100 | 0.13800 |
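The step from this decision matrix to weighted normalized values can be sketched for a single criterion. The sketch assumes vector (Euclidean) normalization, which is the usual TOPSIS choice, and an unrounded weight of 7/65 for Distance_to_Target; both are assumptions, chosen because they reproduce the weighted normalized values reported in the paper for this criterion (0.0643, 0.0598, 0.0623). The function name `weighted_normalized` is illustrative.

```python
from math import sqrt
from typing import List, Sequence


def weighted_normalized(values: Sequence[float], weight: float) -> List[float]:
    """Vector-normalize one criterion across alternatives, then apply its weight."""
    norm = sqrt(sum(v * v for v in values))
    return [weight * v / norm for v in values]


# Distance_to_Target for Colt1911, Bereta92, Glock17 (from the table above).
distance = [31.09893188, 28.89752522, 30.10498801]
row = weighted_normalized(distance, weight=7 / 65)  # assumed unrounded weight
```

Repeating this for every criterion gives the weighted normalized matrix from which the ideal and anti-ideal solutions are then taken.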

| Criterion | Alternative 1: Colt1911 | Alternative 2: Beretta92 | Alternative 3: Glock17 | Weights |
|---|---|---|---|---|
| Distance_to_Target | 0.0643 | 0.0598 | 0.0623 | 0.107 |
| Wind_Speed | 0.0622 | 0.0631 | 0.0611 | 0.107 |
| Shooter_Experience | 0.0436 | 0.0427 | 0.0461 | 0.076 |
| Grip_Stability | 0.0268 | 0.0272 | 0.0261 | 0.046 |
| Trigger_Pull_Force | 0.0269 | 0.0266 | 0.0266 | 0.046 |
| Light_Conditions | 0.0272 | 0.0263 | 0.0266 | 0.046 |
| Heart_Rate | 0.0439 | 0.0438 | 0.0447 | 0.076 |
| Ammo_Quality | 0.0453 | 0.0426 | 0.0445 | 0.076 |
| Temperature | 0.0087 | 0.0086 | 0.0087 | 0.015 |
| Humidity | 0.0088 | 0.0088 | 0.0084 | 0.015 |
| Precision | 0.0807 | 0.0810 | 0.0788 | 0.138 |
| Outcome | 0.0630 | 0.0569 | 0.0662 | 0.107 |
| Price | 0.0616 | 0.0925 | 0.0833 | 0.138 |
| di− | 0.21318 | 0.03101 | 0.02405 | |
| di+ | 0.03114 | 0.01131 | 0.00999 | |
| ci | 0.213 | 0.732 | 0.706 | |
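The closeness coefficient in the last row follows from the two distance rows through the usual TOPSIS relation ci = di− / (di− + di+). A minimal sketch, using the tabulated distances for the Beretta 92 and Glock 17 columns (the function and variable names are illustrative):

```python
def closeness(d_minus: float, d_plus: float) -> float:
    """Relative closeness to the ideal solution: d- / (d- + d+)."""
    return d_minus / (d_minus + d_plus)


# (di-, di+) pairs copied from the table above.
distances = {
    "Beretta 92": (0.03101, 0.01131),
    "Glock 17": (0.02405, 0.00999),
}

ci = {name: closeness(dm, dp) for name, (dm, dp) in distances.items()}
ranking = sorted(ci, key=ci.get, reverse=True)

for name in ranking:
    print(f"{name}: {ci[name]:.4f}")
```

Both values agree with the listed ci to within 0.001 and reproduce the ordering of Beretta 92 ahead of Glock 17. The Colt 1911 column is left out because its tabulated di− does not reproduce the listed ci under this formula, which may point to a transcription slip in that cell.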

Binary Regression: Variables in the Equation

| Step 1 | B | S.E. | Wald | df | Sig. | Exp(B) | 95% C.I. for Exp(B), Lower | 95% C.I. for Exp(B), Upper |
|---|---|---|---|---|---|---|---|---|
| Distance_to_Target | −0.005 | 0.009 | 0.285 | 1 | 0.594 | 0.995 | 0.977 | 1.013 |
| Wind_Speed | −0.037 | 0.018 | 4.232 | 1 | 0.040 | 0.963 | 0.930 | 0.998 |
| Shooter_Experience | 0.017 | 0.224 | 0.006 | 1 | 0.939 | 1.017 | 0.656 | 1.577 |
| Grip_Stability | 0.096 | 0.158 | 0.368 | 1 | 0.544 | 1.101 | 0.807 | 1.500 |
| Trigger_Pull_Force | 0.188 | 0.114 | 2.729 | 1 | 0.099 | 1.206 | 0.966 | 1.507 |
| Light_Conditions | 0.000 | 0.000 | 0.159 | 1 | 0.690 | 1.000 | 0.999 | 1.001 |
| Heart_Rate | −0.001 | 0.003 | 0.090 | 1 | 0.765 | 0.999 | 0.992 | 1.006 |
| Ammo_Quality | 0.111 | 0.226 | 0.243 | 1 | 0.622 | 1.118 | 0.718 | 1.741 |
| Temperature | 0.009 | 0.008 | 1.341 | 1 | 0.247 | 1.009 | 0.994 | 1.024 |
| Humidity | 0.005 | 0.005 | 0.982 | 1 | 0.322 | 1.005 | 0.996 | 1.014 |
| Precision | −0.013 | 0.017 | 0.584 | 1 | 0.445 | 0.987 | 0.954 | 1.021 |

Classification Table a,b

| Observed | Predicted Outcome = 0 | Predicted Outcome = 1 | Percentage Correct |
|---|---|---|---|
| Step 1, Outcome = 0 | 128 | 60 | 68.1 |
| Step 1, Outcome = 1 | 94 | 74 | 44.0 |
| Overall Percentage | | | 56.7 |

a. Constant is included in the model. b. The cut-off value is 0.500.

Model Summary

| Step | −2 Log likelihood | Cox–Snell R Square | Nagelkerke R Square |
|---|---|---|---|
| 1 | 483.891 c | 0.036 | 0.027 |

c. Estimation terminated at iteration 3 because parameter estimates changed by less than 0.001.

Hosmer and Lemeshow Test

| Step | Chi-square | df | Sig. |
|---|---|---|---|
| 1 | 3.528 | 8 | 0.897 |
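The percentages in the classification table follow directly from its four cell counts. The short sketch below recomputes them; the function name and argument names are illustrative, with outcome 1 treated as the positive class.

```python
def classification_percentages(tn, fp, fn, tp):
    """Per-class and overall percentage correct for a 2x2 classification table."""
    correct_0 = 100 * tn / (tn + fp)  # observed 0 that were predicted as 0
    correct_1 = 100 * tp / (fn + tp)  # observed 1 that were predicted as 1
    overall = 100 * (tn + tp) / (tn + fp + fn + tp)
    return correct_0, correct_1, overall


# Cell counts from the classification table above (cut-off value 0.500).
pct_0, pct_1, pct_all = classification_percentages(tn=128, fp=60, fn=94, tp=74)
print(f"{pct_0:.1f} {pct_1:.1f} {pct_all:.1f}")  # 68.1 44.0 56.7
```

For context, always predicting the more frequent class (outcome 0, 188 of 356 cases) would be correct about 52.8% of the time, so the overall 56.7% is only a modest improvement over that baseline.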

| Algorithm | Precision | Recall | F1 Measure | AUCROC |
|---|---|---|---|---|
| RandomForest | 0.479 | 0.483 | 0.479 | 0.461 |
| Ada Boost | 0.441 | 0.458 | 0.437 | 0.433 |
| PART | 0.422 | 0.466 | 0.409 | 0.444 |

| Majority Voting | Relief | CorrelationAttributeEval | GreedyStepwise | |

|---|---|---|---|---|

| Distance_to_Target | ● | ● | ● | |

| Wind_Speed | ● | |||

| Shooter_Experience | ● | ● | ● | |

| Grip_Stability | ● | |||

| Trigger_Pull_Force | ● | ● | ● | |

| Light_Conditions | ● | ● | ● | |

| Heart_Rate | ● | |||

| Ammo_Quality | ● | ● | ● | |

| Temperature | ● | ● | ● | |

| Humidity | ● | ● | ● | |

| Precision | ● | ● | ● |

| Algorithm | Precision | Recall | F1 Measure | AUCROC |
|---|---|---|---|---|
| RandomForest (8 factors) | 0.502 | 0.506 | 0.502 | 0.489 |
| RandomForest (11 factors) | 0.479 | 0.483 | 0.479 | 0.461 |
| JRip | 0.437 | 0.441 | 0.438 | 0.417 |
| Bagging | 0.440 | 0.444 | 0.441 | 0.444 |
| REPTree | 0.478 | 0.486 | 0.476 | 0.457 |

Binary Regression: Variables in the Equation

| Step 1 | B | S.E. | Wald | df | Sig. | Exp(B) | 95% C.I. for Exp(B), Lower | 95% C.I. for Exp(B), Upper |
|---|---|---|---|---|---|---|---|---|
| Distance_to_Target | −0.009 | 0.009 | 0.951 | 1 | 0.329 | 0.991 | 0.974 | 1.009 |
| Shooter_Experience | 0.001 | 0.220 | 0.000 | 1 | 0.996 | 1.001 | 0.650 | 1.542 |
| Trigger_Pull_Force | 0.118 | 0.107 | 1.222 | 1 | 0.269 | 1.125 | 0.913 | 1.387 |
| Light_Conditions | 0.000 | 0.000 | 0.613 | 1 | 0.433 | 1.000 | 0.999 | 1.000 |
| Ammo_Quality | 0.043 | 0.222 | 0.037 | 1 | 0.847 | 1.044 | 0.675 | 1.614 |
| Temperature | 0.007 | 0.007 | 0.935 | 1 | 0.334 | 1.007 | 0.993 | 1.022 |
| Humidity | 0.003 | 0.004 | 0.388 | 1 | 0.534 | 1.003 | 0.994 | 1.011 |
| Precision | −0.005 | 0.004 | 1.217 | 1 | 0.270 | 0.995 | 0.987 | 1.004 |

Classification Table a,b

| Observed | Predicted Outcome = 0 | Predicted Outcome = 1 | Percentage Correct |
|---|---|---|---|
| Step 1, Outcome = 0 | 124 | 64 | 66.0 |
| Step 1, Outcome = 1 | 108 | 60 | 35.7 |
| Overall Percentage | | | 51.7 |

a. Constant is included in the model. b. The cut-off value is 0.500.

Model Summary

| Step | −2 Log likelihood | Cox–Snell R Square | Nagelkerke R Square |
|---|---|---|---|
| 1 | 489.011 c | 0.013 | 0.017 |

c. Estimation terminated at iteration 3 because parameter estimates changed by less than 0.001.

Hosmer and Lemeshow Test

| Step | Chi-square | df | Sig. |
|---|---|---|---|
| 1 | 9.335 | 8 | 0.315 |

| Majority Voting | Colt1911 | Beretta92 | Glock17 | |

|---|---|---|---|---|

| Distance_to_Target | ● | ● | ● | |

| Wind_Speed | ● | |||

| Shooter_Experience | ● | ● | ● | |

| Grip_Stability | ● | |||

| Trigger_Pull_Force | ● | ● | ● | |

| Light_Conditions | ● | ● | ● | ● |

| Heart_Rate | ● | |||

| Ammo_Quality | ● | ● | ● | |

| Temperature | ● | ● | ● | ● |

| Humidity | ● | ● | ● | ● |

| Precision | ● | ● | ● | ● |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Aleksić, A.; Radovanović, R.; Joksimović, D.; Ranđelović, M.; Vuković, V.; Ilić, S.; Ranđelović, D. Modeling Based on Machine Learning and Synthetic Generated Dataset for the Needs of Multi-Criteria Decision-Making Forensics. Symmetry 2025, 17, 1254. https://doi.org/10.3390/sym17081254
