Abstract

Human Pose Estimation (HPE) aims to accurately locate the positions of human key points in images or videos. However, the performance of HPE is often significantly reduced in practical application scenarios due to environmental interference. To address this challenge, we propose a ladder side-tuning method for the Vision Transformer (ViT) pre-trained model based on multi-path feature fusion to improve the accuracy of HPE in highly interfering environments. First, we extract the global features, frequency features and multi-scale spatial features through the ViT pre-trained model, the discrete wavelet convolutional network and the atrous spatial pyramid pooling network (ASPP). By comprehensively capturing the information of the human body and the environment, the ability of the model to analyze local details, textures, and spatial information is enhanced. In order to efficiently fuse these features, we devise an adaptive symmetric feature fusion strategy, which dynamically adjusts the intensity of feature fusion according to the similarity among features to achieve the optimal fusion effect. Finally, a multi-graph feature aggregation method is developed. We construct graph structures of different features and deeply explore the subtle differences among the features based on the dual fusion mechanism of points and edges to ensure the information integrity. The experimental results demonstrate that our method achieves 4.3% and 4.2% improvements in the AP metric on the MS COCO dataset and a custom high-interference dataset, respectively, compared with the HRNet. This highlights its superiority for human pose estimation tasks in both general and interfering environments.

1. Introduction

Human Pose Estimation (HPE) is an important research direction in the field of computer vision. It supports downstream tasks such as human–computer interaction, action recognition, and behavior analysis [1,2,3]. The purpose of human pose estimation is to accurately identify the key points of the human pose in the image. However, in practical applications the accuracy of key point localization is often limited by interference factors such as environmental reflection, image blur, and large variations in target scale. Therefore, achieving anti-interference and high-precision human pose estimation remains a challenging problem.

The methods of human pose estimation are mainly divided into two categories: traditional methods and deep learning-based methods. Traditional methods mainly rely on handcrafted features and graph models to establish the connectivity between human parts, and model the key points of the human body in combination with the constraints of human kinematics. These approaches are easy to compute and have a quick inference speed. However, handcrafted features cannot adequately express human feature information [4,5,6], which restricts these methods to relatively simple, specific scenarios. With the development of deep learning, researchers have developed human pose estimation methods based on this technique. These methods learn richer and more abstract representations from the original image by automatically extracting features, thereby achieving more accurate human pose estimation in complex and changing environments. Early research was mainly based on Convolutional Neural Networks (CNNs). Although CNNs perform well in extracting low-level features, they are not very efficient in capturing global dependencies [7]. The emergence of the Transformer [8] provides a new idea for solving this problem. Transformers have a natural advantage in capturing pairwise or high-order interactions because their attention mechanism can flexibly capture the interaction information between any positions. Therefore, various Vision Transformer structures have been widely used in pose estimation tasks. However, since the Transformer architecture focuses more on global information, it has difficulty mining local detail information, leading to a significant reduction in estimation performance in high-blur situations. As a result, in high-blur and multi-scale interference environments, relying solely on CNN or Transformer architectures makes it difficult to meet the needs of human pose estimation.

In order to effectively and comprehensively extract human and environmental information in images under high-interference environments, we propose a ladder side-tuning method for the ViT pre-trained model based on multi-path feature fusion. The main contributions of this study are summarized as follows:

- Since the ViT model focuses on capturing global features while ignoring local detail information, we use discrete wavelet convolution and atrous spatial pyramid pooling. This introduces frequency features and multi-scale spatial features to improve the model’s ability to capture local details, texture information, and spatial information. This also improves the model’s anti-interference ability, as well as its generalization and robustness in high-interference environments.

- To address the issues of insufficient and excessive feature fusion, this study proposes an adaptive symmetric feature fusion strategy. We analyze the similarity between the newly introduced features and the pre-trained model features, and dynamically and symmetrically adjust the number of blocks of the two feature types. The cross-attention mechanism is then used to fuse the features of each block with the global features of the other feature type, achieving the optimal feature fusion effect while minimizing resource consumption.

- To achieve deep fusion of the ViT global features, frequency domain features, and spatial features, this paper proposes a multi-graph aggregation method. This method constructs a graph structure for each feature and achieves the structural fusion of the three graphs through point and edge attention mechanisms. This approach aims to capture the consistency and complementarity of information between different features while maintaining the integrity of each piece of information.

- The human pose estimation method proposed in this paper was verified on the COCO dataset and the self-built high-blur dataset. Compared with existing methods, this method achieves significant improvement in performance, confirming the excellent anti-interference ability of our method.

The following part of this paper is structured as follows: Section 2 introduces the related work on human pose estimation and ViT fine-tuning, Section 3 focuses on the network architecture and modules in this research, Section 4 describes and analyzes the experimental results, and Section 5 summarizes the entire study and proposes future prospects.

2. Related Work

2.1. Human Pose Estimation Method

In recent years, research on human pose estimation has explored numerous ways to improve estimation accuracy. The methods of human pose estimation can be divided into traditional, CNN-based, and Transformer-based methods. Traditional human pose estimation approaches [9,10,11,12,13,14] depend on pre-defined handcrafted features. They construct a graph model by using human joints as nodes and combine a priori human kinematic restrictions to continually reduce the state space. However, traditional methods are limited by manual feature settings, making it difficult to adequately express human body feature information. They also cannot distinguish between different individuals, resulting in insufficient generalization capabilities in natural scenes.

With the rapid development of deep learning technology, scholars have developed CNN-based methods. Compared with the traditional methods, this approach can automatically learn the representation information of human body parts from images. DeepPose [15] applied convolutional neural networks to human pose estimation for the first time. By constructing a multi-stage deep convolutional network model, it achieved a significant improvement in estimation performance. On this basis, Sun et al. [16] further used the re-parameterized representation of bones to integrate human body information and pose structure. Rogez et al. [17] implemented key point regression by introducing anchor pose classification. Luvizon et al. [18] utilized the Argmax function within a fully differentiable framework to convert feature maps into joint coordinates. Although the above methods have achieved certain results, direct mapping from feature maps to key points still faces challenges. In order to provide more auxiliary information in the human pose estimation process, researchers proposed CNN methods based on heat maps, which highlight areas in the image that may contain key points by predicting a probability for each pixel. Wei et al. [19] proposed the Convolutional Pose Machine (CPM), which generates heat maps by cascading multiple groups of convolution modules to obtain human pose estimation results with a large receptive field. Newell et al. [20] reviewed an approach based on multi-level feature convolution stacked hourglass networks. The approach used dense blocks instead of residual modules as the basic building blocks, which allows subsequent layers to access all previous feature maps. Bulat et al. [21] used a part detection network to generate a heat map, and then used a regression network to further refine the heat map. However, the above methods do not fully consider the impact of image resolution on the estimation results. Therefore, Sun et al. [22] proposed a high-resolution network, HRNet, which connects branches of different resolutions in parallel to maintain the highest-resolution feature map throughout the entire network model. HigherHRNet [23] further improves on HRNet. By combining the feature map of HRNet with the higher-resolution feature map obtained by transposed convolution upsampling, it strengthens the multi-scale perception ability of the model. This also improves the performance of human pose estimation in different scenarios. Although CNNs can efficiently extract hierarchical features of images and capture different levels of texture and spatial information, their ability to process global information is weak, so they cannot fully consider the global correlation between the joint points of the human body.

Recently, Transformer-based methods have attracted attention due to their attention module’s ability to capture long-distance dependencies and global features. TransPose [24] uses the attention layer to predict key point heat maps. This model can learn fine-grained features of human poses. Li et al. [25] captured constraint cues and visual appearance relationships by using token representations. In order to improve the expressive ability of the model at different resolutions, Yuan et al. [26] proposed a high-resolution transformer named HRFormer, which replaces the blocks in HRNet with Transformer modules. Fang et al. [27] used two transformer modules for human body detection and key point extraction, respectively. These methods mainly improve the global information extraction capabilities. However, they do not yet introduce local feature information. Hence, they are still insufficient in processing local details and spatial information, which leaves them unable to cope with high-interference pose estimation situations.

In summary, we propose a new ladder side-tuning method for the ViT pre-trained model based on multi-path feature fusion, which combines the CNN architecture and the Transformer architecture. This method uses wavelet convolution and Atrous Spatial Pyramid Pooling (ASPP) to extract frequency features and multi-scale spatial features of the image, respectively. This enhances the model’s ability to capture local details and image spatial features, which improves the model’s ability to mine joint points in a blurry scene. At the same time, the ViT pre-trained model is used to extract the global features and strengthen the capacity to mine the overall structure of the human body and the correlation between joint points. Integrating the two architectures improves the accuracy and generalization of human pose estimation in complex scenes.

2.2. ViT Fine-Tuning Method

The ViT model has shown excellent performance in the field of computer vision, but pre-trained models usually have a large number of parameters, which makes full fine-tuning expensive. Therefore, researchers are committed to exploring parameter-efficient transfer learning (PETL) methods [28,29,30], aiming to achieve efficient transfer of model capabilities. The PETL methods can be mainly divided into three categories: parameter-based, prompt-based, and adapter-based fine-tuning methods.

Parameter-based fine-tuning methods adjust the weights or biases of specific layers in the pre-trained model while keeping other parameters unchanged to adapt to downstream tasks. In terms of bias fine-tuning, Zaken et al. [31] achieved fine-tuning by only adjusting the bias part of the pre-trained model. Bu et al. [32] proposed the differential private bias fine-tuning method named DP-BiTFiT, which aggregates the bias gradient norms of each layer and introduces Gaussian noise to perform gradient descent on the bias term. In terms of weight fine-tuning, Hu et al. [33] proposed the LoRA fine-tuning method, which introduces a trainable low-rank decomposition matrix in each transformer layer to update the model weights. After training, the adapter module can be integrated into the original weight matrix of the model. Valipour et al. [34] improved LoRA and proposed to split the ranking parameters into multiple parts and optimize them sequentially without relying on the search mechanism.

Prompt-based fine-tuning methods transfer the generalization ability of pre-trained models to various downstream tasks by introducing prompt parameters. Prompt-based fine-tuning methods can be divided into two categories. The first approach involves explicitly introducing prompt parameters. Gao et al. [35] introduced learnable visual prompt parameters in ViT and optimized only these source-initialized prompts. Herzig et al. [36] designed task-specific prompts to achieve downstream task transfer by introducing a small set of dedicated parameters. The second type of method is to develop a subnetwork to construct visual prompts. Zhang et al. [37] utilized a pre-trained ViT network with a lightweight module to divide the input image into foreground and background, allowing them to add foreground and background signals before input. Wang et al. [38] enlarged the discriminative area of the input image to obtain visual prompts by designing a lightweight sampling network. Gan et al. [39] learned domain-specific and domain-agnostic prompts through subnetworks to capture source domain knowledge and maintain domain-shared knowledge. Compared with the fine-tuning method that directly introduces learnable prompts parameters, these methods can improve the interpretability of visual prompts.

Adapter-based fine-tuning methods promote the learning efficiency of downstream tasks by introducing additional trainable parameters into the pre-trained model while keeping other parameters frozen. Nie et al. [40] built multiple phased prompt blocks and used semantic information at different levels to enrich the feature space. The dynamic stacked network [41] is used as an adapter to activate the pre-trained model for visual tasks in a plug-and-play manner. Ermis et al. [42] added adapters before layer normalization and feedforward layers for continuous learning. A spatiotemporal adapter [43] is proposed to provide image models with spatiotemporal reasoning capabilities for video understanding. These methods adopt a serial adapter structure. For further exploration, researchers have proposed a parallel adapter module. Chen et al. [44] introduced image-related inductive biases in parallel without pre-trained adapters for image-dense prediction tasks. Also, Chen et al. [45] designed frozen and trainable model branches to adapt the visual transformer to video action recognition. However, these methods need to calculate the gradients of all parameters when performing gradient backpropagation, resulting in low training efficiency. Therefore, the side fine-tuning method proposed by Zhang et al. [46] avoids calculating the ViT pre-trained model gradient during the model backpropagation process by learning a side model and combining it with the last layer of the pre-trained model to significantly reduce memory requirements. On this basis, Sung et al. [47] proposed LST, which uses the intermediate features of the pre-trained model as supplementary input to train the side network and prevent the loss of the intermediate features of the pre-trained model. 
Since the interaction between the intermediate features of the pre-trained model and the side network is usually directly implemented through the cross-attention mechanism, this limits the strength of feature fusion, resulting in problems such as feature loss or excessive interaction.

Therefore, we propose a multi-path feature fusion method based on LST fine-tuning to achieve efficient feature fusion while keeping the training resource consumption to a minimum. For the two-path feature fusion, this study designs an adaptive symmetric feature fusion strategy. By comparing the similarity between the newly introduced features and the pre-trained model features, the block granularity of the two feature types is dynamically and symmetrically adjusted, and the cross-attention mechanism is used to fuse these blocks with the global features. Because the features share some identical information, which makes subtle differences difficult to discover, this study also proposes a multi-graph aggregation method. By constructing graph structures for the three feature paths and applying point and edge attention mechanisms, the information in the final ViT global features, frequency domain features, and spatial features is deeply mined, ensuring the integrity and complementarity of the information.

3. Method

3.1. Network Structure

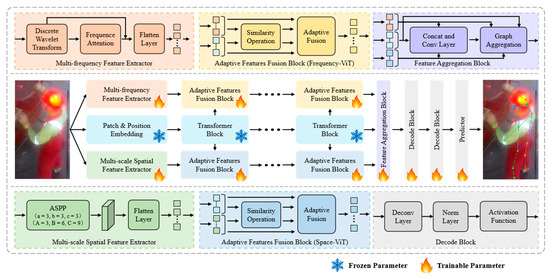

This study proposes a ladder side-tuning method for pre-trained models based on multi-path feature fusion, aiming to enhance the anti-interference ability of the model in the task of human pose estimation. First, the ViT pre-trained model, discrete wavelet convolution, and atrous spatial pyramid pooling are used to extract global features, frequency features, and multi-scale spatial features, respectively, ensuring comprehensive coverage of human body and environmental information. Subsequently, an adaptive symmetric feature fusion strategy is proposed to achieve two-way fusion between frequency features and global features, as well as between multi-scale spatial features and global features. This strategy can dynamically adjust the fusion strength to improve the efficiency of feature fusion. Finally, we design a multi-graph feature aggregation method, which constructs a graph structure for each of the three feature paths. We realize the deep fusion of the three-way features through point and edge attention mechanisms, ensuring that both the commonalities and the subtle differences between features are retained. The overall process of the proposed fine-tuning method is shown in Figure 1.

Figure 1.

Flowchart of ladder side-tuning based on multi-path feature fusion.

The network architecture is divided into three parallel paths, each of which independently extracts features of a different category to enrich image semantic information, aiming to enhance the model’s adaptability to different environments. The first path uses the inherent prior knowledge of the ViT pre-trained model to capture global semantic information, and the model parameters remain frozen during the fine-tuning stage. The second path extracts the frequency features of the image through a discrete wavelet convolutional network to reduce the impact of environmental factors such as blur and interference on the model performance. The third path uses the ASPP network to obtain multi-scale spatial features of the image to enhance the model’s ability to capture features at different scales.

We use discrete wavelet convolution to extract frequency features of different categories in the image, and set the low-pass filter as and the high-pass filter as . Four convolution kernels and are constructed, and each convolution kernel has a step size of 2. The input features are decomposed into low-frequency features , horizontal high-frequency features , vertical high-frequency features , and diagonal high-frequency features . To be specific, the low-frequency features capture the main structural information of the image, while the three types of high-frequency features provide image detail information at different angles. The formulas for generating each feature are as follows,

where represents the cross-product operation. To reduce noise interference, we introduced a multi-frequency attention mechanism. Specifically, we added the features containing high-frequency information and low-frequency information along the channel dimension, respectively. We also compressed the different frequency information into the latent space of each frequency by using a 1 × 1 convolution layer,

where represents a standard convolution with a kernel of size 1, and represents the addition operation of element dimensions. To extract the key information in each frequency feature and suppress the influence of non-key information, we added the two types of frequency features and obtained the attention map through the softmax function. Then, we multiplied the original frequency feature by the attention map. This can be expressed as follows:

where represents the multiplication operation of element dimensions and represents the concatenation operation between features.
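The four-way decomposition described above can be illustrated with a small NumPy sketch. It assumes Haar filters (the text does not name the wavelet) and reads the "cross-product" as the outer product of two 1-D filters; the names `LOW`, `HIGH`, `KERNELS`, and `haar_dwt2` are hypothetical and not taken from the paper. The multi-frequency attention step is not reproduced here.

```python
import numpy as np

# Assumption: Haar filters; the wavelet actually used is not stated in the text.
LOW = np.array([1.0, 1.0]) / np.sqrt(2.0)    # 1-D low-pass filter
HIGH = np.array([1.0, -1.0]) / np.sqrt(2.0)  # 1-D high-pass filter

# Four 2x2 kernels built as outer products of the 1-D filters.
# The first filter acts along the height axis, the second along the width axis.
KERNELS = {
    "LL": np.outer(LOW, LOW),    # low-pass on both axes (main structure)
    "LH": np.outer(LOW, HIGH),   # high-pass along the width axis
    "HL": np.outer(HIGH, LOW),   # high-pass along the height axis
    "HH": np.outer(HIGH, HIGH),  # high-pass on both axes (diagonal detail)
}


def haar_dwt2(x):
    """Decompose an (H, W) array with even H and W into four half-resolution sub-bands."""
    h, w = x.shape
    # View the input as non-overlapping 2x2 patches: shape (H/2, W/2, 2, 2).
    patches = x.reshape(h // 2, 2, w // 2, 2).transpose(0, 2, 1, 3)
    # A stride-2 convolution with a 2x2 kernel is a weighted sum over each patch.
    return {name: np.einsum("ijkl,kl->ij", patches, k) for name, k in KERNELS.items()}


image = np.arange(16, dtype=float).reshape(4, 4)
bands = haar_dwt2(image)
print({name: band.shape for name, band in bands.items()})
```

Because the stride equals the kernel size, each sub-band has half the input resolution, and with orthonormal Haar filters the total energy of the four sub-bands equals that of the input.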

We utilized the ASPP network to achieve multi-scale spatial feature extraction. It captures multi-scale contextual information by applying atrous (dilated) convolution layers with different dilation rates in parallel, which is crucial for processing target objects of different sizes. Specifically, we designed three atrous convolution kernels with different dilation rates for feature extraction. The processing is as follows,

where is the multi-scale fusion feature output by ASPP, indicates a dilated convolution operation with a convolution kernel size of m and a dilation rate of n, and indicates the global average pooling operation. Furthermore, the output features obtained by the discrete wavelet convolution and ASPP modules are flattened so that they match the number of feature patches output by each transformer layer in ViT. This ensures that features from different sources can effectively interact and integrate in the same dimensional space.
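As a rough illustration of the parallel dilated branches plus global average pooling described above, the following single-channel NumPy sketch may help. The dilation rates `(1, 2, 3)`, the averaging kernels, and the function names are placeholders chosen for the example, not values from the paper; a real ASPP module would also fuse the stacked branches, typically with a 1 × 1 convolution.

```python
import numpy as np


def effective_kernel_size(k, rate):
    """Spatial extent covered by a k x k kernel dilated by `rate`."""
    return k + (k - 1) * (rate - 1)


def dilated_conv2d(x, kernel, rate):
    """Zero-padded 'same' 2-D correlation of x (H, W) with an odd k x k kernel dilated by `rate`."""
    k = kernel.shape[0]
    pad = rate * (k // 2)
    xp = np.pad(x, pad)
    h, w = x.shape
    out = np.zeros((h, w), dtype=float)
    for i in range(k):
        for j in range(k):
            # Kernel tap (i, j) reads the padded input shifted by rate * (i, j).
            out += kernel[i, j] * xp[i * rate:i * rate + h, j * rate:j * rate + w]
    return out


def aspp(x, kernels, rates):
    """Stack one dilated branch per rate plus a global-average-pooling branch."""
    branches = [dilated_conv2d(x, k, r) for k, r in zip(kernels, rates)]
    # Global average pooling, broadcast back to the input resolution.
    branches.append(np.full_like(x, x.mean(), dtype=float))
    return np.stack(branches, axis=0)  # shape: (len(rates) + 1, H, W)


feature = np.arange(49, dtype=float).reshape(7, 7)
kernels = [np.ones((3, 3)) / 9.0] * 3   # placeholder weights
multi_scale = aspp(feature, kernels, rates=(1, 2, 3))
print(multi_scale.shape)
```

Larger dilation rates widen the receptive field without adding parameters, which is why the branches respond to targets of different sizes.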

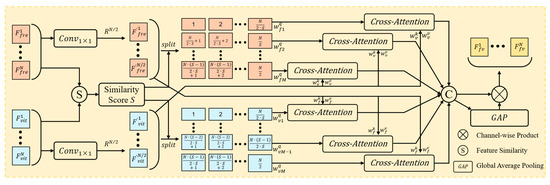

3.2. Adaptive Symmetric Feature Fusion

In order to achieve efficient fusion between ViT pre-trained model features and other feature types including multi-frequency features and multi-scale spatial features, we propose an adaptive symmetric feature fusion strategy. The algorithm structure is shown in Figure 2. In the feature fusion stage, this strategy can dynamically divide various features symmetrically according to the similarity between features, thereby achieving feature interaction at the optimal granularity level. This method effectively avoids the low fusion efficiency caused by insufficient interaction and the waste of resources caused by excessive interaction.

Figure 2.

Structure of adaptive symmetric feature fusion algorithm. Taking the fusion of features from the ViT pre-trained model and multi-frequency features as an example.

This method first calculates the similarity scores between multi-frequency features and other feature types, and then grades the granularity of feature fusion according to the similarity scores. It is divided into four levels: ultra-high-granularity fusion (feature similarity is between 0% and 25%, and the features are divided into four parts), high-granularity fusion (feature similarity is between 25% and 50%, and the features are divided into three parts), medium-granularity fusion (feature similarity is between 50% and 75%, and the features are divided into two parts), and low-granularity fusion (feature similarity is between 75% and 100%, and the features are not divided). The calculation formulas are listed as follows,

where is the feature of the ViT pre-trained model, and represents the cosine similarity function. Moreover, the original feature dimension is compressed to half through convolution operation. The features are divided into various blocks according to the level score. The block features and global features of other feature types are merged by cross-attention operation. Then, we combine and splice these features, and the expression is shown below,

where indicates the block after undergoes 1 × 1 convolution, indicates the cross attention operation, and the symbol means logical or operation. Finally, the concatenated matrix is processed through a global average pooling layer to obtain the weight coefficients of each block, and these coefficients are multiplied with the corresponding blocks to output the dual-path fusion features,

where is the fusion feature of multi-frequency features and the ViT pre-training model, is the fusion feature of multi-scale spatial features and the ViT pre-training model, and indicates the product operation of channel dimensions.
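The similarity-to-granularity mapping described in this section can be sketched as follows. This is a minimal illustration under several assumptions that the text does not settle: negative cosine similarities are clipped to 0, the level boundaries are treated as half-open intervals, and features are split along the token axis. The cross-attention and weighting steps are omitted, and all function names are hypothetical.

```python
import numpy as np


def cosine_similarity(a, b):
    """Cosine similarity between two feature maps, compared as flat vectors."""
    a, b = np.ravel(a), np.ravel(b)
    return float(a @ b / (np.linalg.norm(a) * np.linalg.norm(b) + 1e-12))


def num_blocks(similarity):
    """Map a similarity score to the number of blocks for the four fusion levels."""
    s = min(max(similarity, 0.0), 1.0)  # assumption: clip to [0, 1]
    if s < 0.25:
        return 4  # ultra-high-granularity fusion
    if s < 0.50:
        return 3  # high-granularity fusion
    if s < 0.75:
        return 2  # medium-granularity fusion
    return 1      # low-granularity fusion: features are not divided


def split_symmetric(feat_a, feat_b):
    """Split two (tokens, channels) feature maps into the same number of token blocks."""
    n = num_blocks(cosine_similarity(feat_a, feat_b))
    return np.array_split(feat_a, n, axis=0), np.array_split(feat_b, n, axis=0)


rng = np.random.default_rng(0)
vit_feat = rng.normal(size=(8, 16))
freq_feat = rng.normal(size=(8, 16))
blocks_a, blocks_b = split_symmetric(vit_feat, freq_feat)
print(len(blocks_a), len(blocks_b))
```

The split is symmetric in the sense that both feature types always receive the same number of blocks, so each block of one type has a counterpart of the other type.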

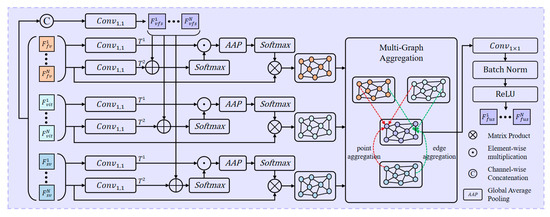

3.3. Multi-Graph Feature Aggregation

Since the ViT pre-trained model features are introduced into the three-way features output after the two-way feature fusion, these features inevitably contain highly similar information. However, for the traditional splicing method, it is difficult to distinguish the subtle differences between different features. Therefore, we design a feature fusion method based on multi-graph aggregation, as shown in Figure 3. In the process of multi-graph fusion, we utilize the attention mechanism of nodes and edges to strengthen the mining of difference information among features, aiming to achieve effective integration of common and different features.

Figure 3.

Structure of feature fusion method based on multi-graph aggregation.

This method first aggregates similar information among features via the interaction of different features with global features. Given the ViT pre-trained model features , multi-frequency fusion features , and multi-scale spatial fusion features , these features are concatenated and reduced to a unified dimension by using convolution operations to produce joint features that incorporate all of the information from each feature. Next, the features of each channel are reduced in dimension through two convolution operations to obtain and . Then, we add feature and joint features , and obtain preliminary fusion features of various information types by multiplying them with features . The formula is shown as follows,

After that, we convert the attention features and the original fusion features into graph structures. and are respectively used to generate attention maps by adaptive average pooling and softmax function. And the original fusion features are mapped to the graph domain through the attention map, so that similar feature information is aggregated to the same vertex. The formula is shown as follows,

where represents adaptive average pooling. In order to capture the consistency and complementarity between different features, this paper designs a dual-fusion strategy based on the node attributes and edge attributes of the graph. Through the full connection among the graph nodes, we extract the edge features between the connected graph nodes. It is defined as follows,

where and are the learnable parameters, indicates the edge features between point and point , and is the feature of the point. Based on the learnable feature graph embedding, the fusion of node features and edge features can be expressed as follows,

where and are the learnable parameters, is the total number of points in the graph, and is the total number of edges in the graph. By calculating the attention coefficient of each edge, we obtain the final aggregate features of each point, which are calculated as follows,

where indicates the features of the point in the graph after fusion. Finally, we perform convolution operations on the fused features and normalize them,

where indicates batch norm operation.
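Since the exact edge and node formulas are not recoverable from the text, the following sketch substitutes a generic GAT-style attention over a fully connected graph to illustrate the idea of weighting each edge by an attention coefficient and aggregating neighbor features per node. It is a stand-in under that assumption, not the paper's formulation; `edge_attention_aggregate` and its parameters `w` and `a` are hypothetical names.

```python
import numpy as np


def softmax(z, axis=-1):
    """Numerically stable softmax."""
    z = z - z.max(axis=axis, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)


def edge_attention_aggregate(nodes, w, a, slope=0.2):
    """GAT-style aggregation on a fully connected graph.

    nodes: (N, D) node features; w: (D, D_out) projection; a: (2 * D_out,) edge scorer.
    Returns the aggregated node features (N, D_out) and the attention matrix (N, N).
    """
    h = nodes @ w
    d = h.shape[1]
    # Edge score for every ordered pair (i, j): a^T [h_i || h_j].
    scores = (h @ a[:d])[:, None] + (h @ a[d:])[None, :]
    scores = np.where(scores > 0, scores, slope * scores)  # LeakyReLU
    alpha = softmax(scores, axis=1)  # attention of node i over all nodes j
    return alpha @ h, alpha


rng = np.random.default_rng(0)
graph_nodes = rng.normal(size=(5, 8))
fused, attention = edge_attention_aggregate(
    graph_nodes, rng.normal(size=(8, 4)), rng.normal(size=8)
)
print(fused.shape, attention.shape)
```

Each row of the attention matrix sums to 1, so every fused node feature is a convex combination of the projected features of all nodes in the graph.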

3.4. Loss Function

We propose a joint loss function that combines the dual constraints of key points and skeleton positions, aiming to optimize the accuracy and naturalness of human pose estimation. The key point position loss function is the mean square error between the predicted key point heat map and the actual key point heat map. The skeleton position loss function is the norm of the difference between the predicted vector connecting adjacent joint points and the actual vector. This joint strategy not only improves the positioning accuracy of skeleton key points, but also enhances the coherence of limb movements by integrating the natural constraints of the human skeleton. The specific formula of the overall loss function is as follows,

where is the weight parameter of the loss function, represents the loss function of the key points positions, and represents the loss function of skeleton positions. The formulas for and are as follows,

where and are the numbers of key points and skeletons, and indicate the heat maps of the predicted and actual key points, and and indicate the predicted vector and actual vector of the joint.
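A minimal NumPy sketch of the two loss terms follows. The way they are combined is an assumption: the sketch uses a convex combination, `weight * keypoint + (1 - weight) * skeleton`, which is one plausible reading of a single weight parameter but is not confirmed by the text. The bone list and all function names are hypothetical, and the normalization (a plain mean over all elements) is likewise assumed.

```python
import numpy as np


def keypoint_loss(pred_heatmaps, true_heatmaps):
    """Mean square error between predicted and actual key point heat maps."""
    diff = np.asarray(pred_heatmaps, float) - np.asarray(true_heatmaps, float)
    return float(np.mean(diff ** 2))


def skeleton_loss(pred_xy, true_xy, bones):
    """Mean norm of the difference between predicted and actual bone vectors.

    pred_xy, true_xy: (K, 2) key point coordinates; bones: list of (start, end) index pairs.
    """
    pred_xy, true_xy = np.asarray(pred_xy, float), np.asarray(true_xy, float)
    start, end = np.array(bones).T
    pred_vec = pred_xy[end] - pred_xy[start]
    true_vec = true_xy[end] - true_xy[start]
    return float(np.mean(np.linalg.norm(pred_vec - true_vec, axis=1)))


def joint_loss(pred_heatmaps, true_heatmaps, pred_xy, true_xy, bones, weight=0.7):
    # Assumption: convex combination of the two terms; the paper's exact form is not shown.
    return (weight * keypoint_loss(pred_heatmaps, true_heatmaps)
            + (1.0 - weight) * skeleton_loss(pred_xy, true_xy, bones))


heat = np.zeros((2, 4, 4))
true_pts = np.array([[0.0, 0.0], [0.0, 0.0]])
pred_pts = np.array([[0.0, 0.0], [3.0, 4.0]])
print(joint_loss(heat, heat, pred_pts, true_pts, bones=[(0, 1)]))
```

Note that the skeleton term is invariant to a uniform translation of all key points, so it constrains limb length and direction rather than absolute position; the heat map term supplies the absolute localization.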

4. Experiment

4.1. Dataset Introduction

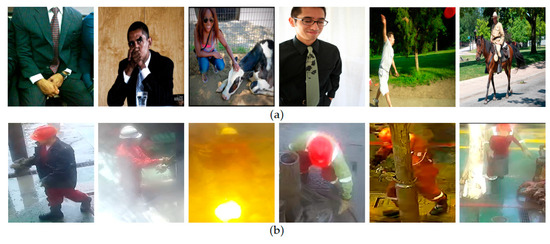

For the task of human pose estimation, we select the COCO dataset [48] and a self-built high-blur dataset, as shown in Figure 4. The COCO dataset is known for the diversity of human poses in daily scenes. This dataset contains about 120,000 images and 17 human key points. Our self-built dataset is collected from monitoring data of a drilling platform in the South China Sea from January to April 2023, and contains a total of 1000 images. This dataset contains normal human images and images affected by environmental factors, such as local over-brightness, image blur, and different distances between employees and equipment. Our criteria for selecting and filtering industrial images are as follows: (1) If the keypoints cannot be discerned by the human eye, the image is deemed too blurry and is excluded; (2) If over 30% of the human body’s image area is overexposed, making it difficult to identify keypoints, the image is excluded; (3) If at least 13 out of the 17 keypoints are visible, the image is included. In order to ensure the standardization of annotations and the comparability of model evaluation, we use the LabelImg tool (v1.8.1) to annotate these images in the COCO format.
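Criterion (3) can be checked mechanically once annotations are in the COCO keypoint format, where each key point is stored as an `(x, y, v)` triplet and `v = 2` marks a labeled and visible point. The sketch below assumes that "visible" corresponds to `v == 2`; the function names are hypothetical, and criteria (1) and (2) still require human judgment.

```python
def count_visible(keypoints):
    """Count visible key points in a flat COCO list [x1, y1, v1, x2, y2, v2, ...]."""
    # Assumption: "visible" means COCO visibility flag v == 2.
    return sum(1 for v in keypoints[2::3] if v == 2)


def keep_annotation(keypoints, min_visible=13):
    """Apply criterion (3): keep a person annotation with enough visible key points."""
    return count_visible(keypoints) >= min_visible


# Example annotation: 14 visible key points followed by 3 unlabeled ones.
example = [10, 20, 2] * 14 + [0, 0, 0] * 3
print(keep_annotation(example))
```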

Figure 4.

Partial images of two datasets: (a) partial images of the COCO dataset. (b) partial images of the self-built dataset.

4.2. Evaluation Indicators

We use Average Precision (AP) and Average Recall (AR) as evaluation indicators to evaluate the key point detection performance of different methods. Both are computed from the object keypoint similarity (OKS). The OKS calculation formula is as follows,

$$\mathrm{OKS} = \frac{\sum_i \exp\left(-\dfrac{d_i^2}{2 s^2 k_i^2}\right)\,\delta(v_i > 0)}{\sum_i \delta(v_i > 0)}, \qquad d_i = \sqrt{(x_i - \hat{x}_i)^2 + (y_i - \hat{y}_i)^2}$$

where $d_i$ is the Euclidean distance between the detected position and the actual position of the $i$-th key point, $s$ is the scaling factor, $k_i$ is the weight of the key point, $x_i$ and $y_i$ are the real coordinates of the point, $\hat{x}_i$ and $\hat{y}_i$ are the predicted coordinates of the point, $v_i$ indicates whether the key point is marked, and $\delta$ represents the indicator function; its value is 1 when the key point is marked, and 0 when it is not marked. We select AP, AP50, AP75, and AR as evaluation indicators. AP and AR represent the precision and recall averaged over OKS thresholds from 0.50 to 0.95 in steps of 0.05, respectively. AP50 and AP75 represent the precision at OKS thresholds of 0.5 and 0.75, respectively.
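The OKS computation described above can be sketched in a few lines of NumPy, following the standard COCO definition. The function name and argument layout are illustrative assumptions; in particular, `scale` and `k` must be supplied by the caller, since the per-keypoint constants are not listed in this section.

```python
import numpy as np


def oks(pred_xy, true_xy, visibility, scale, k):
    """Object keypoint similarity for one person instance.

    pred_xy, true_xy: (N, 2) coordinates; visibility: (N,) flags, > 0 means marked;
    scale: object scale factor; k: (N,) per-keypoint weights.
    """
    pred_xy, true_xy = np.asarray(pred_xy, float), np.asarray(true_xy, float)
    k = np.asarray(k, float)
    marked = np.asarray(visibility) > 0
    if not marked.any():
        return 0.0  # no marked key points, so similarity is undefined; report 0
    d2 = np.sum((pred_xy - true_xy) ** 2, axis=1)       # squared Euclidean distances
    similarity = np.exp(-d2 / (2.0 * scale ** 2 * k ** 2))
    return float(similarity[marked].mean())             # average over marked points only


truth = np.array([[10.0, 10.0], [20.0, 20.0], [30.0, 30.0]])
guess = truth + np.array([[1.0, 0.0], [0.0, 2.0], [5.0, 5.0]])
print(oks(guess, truth, visibility=[2, 2, 0], scale=10.0, k=np.full(3, 0.1)))
```

A prediction then counts as correct at a given threshold (for example 0.5 for AP50) when its OKS with the matched ground truth is at least that threshold.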

4.3. Experimental Environment and Parameter Configuration

In this study, we use a high-performance server running the Ubuntu operating system and equipped with two Nvidia A800 GPUs. The ViT pre-trained large model used in this paper contains about 630 million parameters. All experiments are implemented in the Python (v3.7) programming language with the PyTorch (v1.7.1) neural network framework. During training, the batch size is set to 64 and the number of training epochs is set to 100. We use the AdamW optimizer to optimize the model parameters. The specific experimental parameters, including the initial learning rate and the learning rate decay coefficient, are shown in Table 1.

Table 1.

Experimental environment parameter configuration table.

4.4. Comparative Experiment

To determine the optimal value of the weighting parameter in the joint loss function, we conducted experiments on both the COCO dataset and the self-built dataset with the weight set to 0.1, 0.3, 0.5, 0.7, and 0.9, respectively. The comparative results are presented in Table 2. As can be seen from the table, the evaluation results are best when the weight is 0.7. This indicates that the model performs best when training is primarily guided by the keypoint loss, with the skeleton constraints as a supplement. Therefore, we adopted a weight of 0.7 in our final model.

Table 2.

Comparison of AP under different weight values on the COCO dataset and the self-built dataset.

In this study, we compare the proposed model with seven other models: CPM, HRNet, LiteHRNet, MobileNetV2, MSPN, PVTV2, and SCNet. Table 3 and Table 4 show the comparison results on the COCO dataset and the self-built dataset, respectively. On the COCO dataset, the proposed method achieves the highest precision and recall: its AP, AP50, AP75, and AR reach 79.3%, 92.0%, 85.7%, and 84.2%, respectively. Compared with the other seven methods, it improves AP by 3.2 to 17.1 percentage points and AR by 2.2 to 15.9 percentage points. Since the data in the COCO dataset mainly come from high-definition images of daily scenes, these results confirm the superiority of the proposed model in handling complex backgrounds and diverse poses in daily environments. Our method also shows significant performance advantages on the self-built dataset, which contains more low-light, highly reflective, and blurry images that pose greater challenges to human pose estimation algorithms. Despite this, Table 4 shows that our method still achieves 69.1%, 81.8%, 73.3%, and 71.8% in AP, AP50, AP75, and AR, respectively. Compared with the other models, AP and AR improve by 3.6 to 17.1 percentage points and 3.1 to 14.6 percentage points, respectively. These results further demonstrate the high-precision human pose estimation capability of this method in high-interference environments and show that the ViT pre-trained model can be efficiently transferred from clear scenes to high-interference scenes.

Table 3.

Performance comparison of different algorithms on the COCO dataset.

Table 4.

Performance comparison of different algorithms on the self-built dataset.

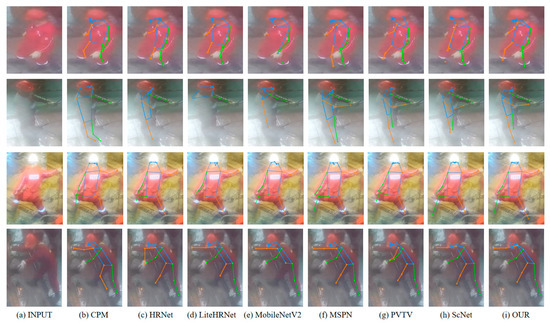

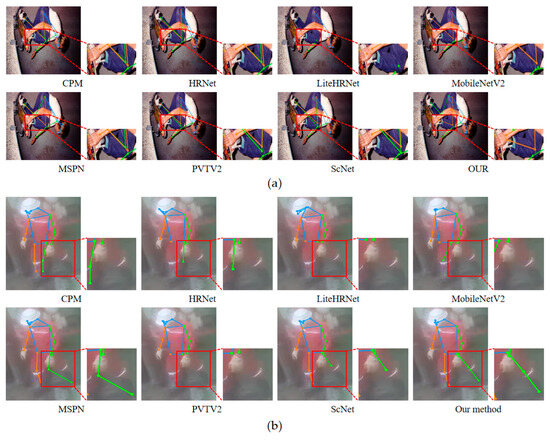
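The improvement ranges quoted in the comparison can be turned back into the range of scores achieved by the competing methods. The short sketch below does this arithmetic, assuming the reported gains are absolute percentage-point differences, as the text states; the helper name `implied_baseline_range` is illustrative.

```python
def implied_baseline_range(ours, min_gain, max_gain):
    """Return (lowest, highest) competing score implied by a reported gain range."""
    return (round(ours - max_gain, 1), round(ours - min_gain, 1))


# (our score, smallest gain, largest gain), all taken from the text of this section
reported = {
    "COCO AP": (79.3, 3.2, 17.1),
    "COCO AR": (84.2, 2.2, 15.9),
    "self-built AP": (69.1, 3.6, 17.1),
    "self-built AR": (71.8, 3.1, 14.6),
}

for name, (ours, min_gain, max_gain) in reported.items():
    low, high = implied_baseline_range(ours, min_gain, max_gain)
    print(f"{name}: competing methods span {low} to {high}")
```

Under this reading, the strongest competing method reaches an AP of 76.1 on COCO and 65.5 on the self-built dataset.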

We visually analyze some human pose samples from the COCO dataset and the self-built dataset, as shown in Figure 5 and Figure 6. The results indicate that the proposed method achieves more accurate pose estimation in various challenging environments. Compared with other methods, it significantly reduces missed detections, false detections, and misconnections. For instance, in the second-row example of Figure 5, the highly complex human movement caused most existing models to confuse the left and right arms. In the first-row sample of Figure 6, the blurred image quality caused some existing models to miss detections, with most failing to accurately estimate the position of the right knee or right ankle.

Figure 5.

A visual comparison of various algorithms’ estimated results for the COCO dataset.

Figure 6.

A visual comparison of various algorithms’ estimated results on the self-built dataset.

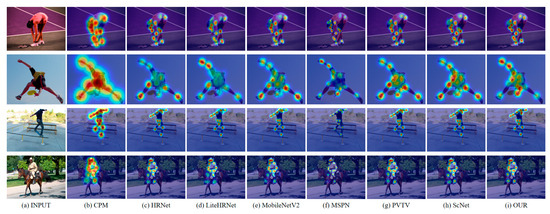

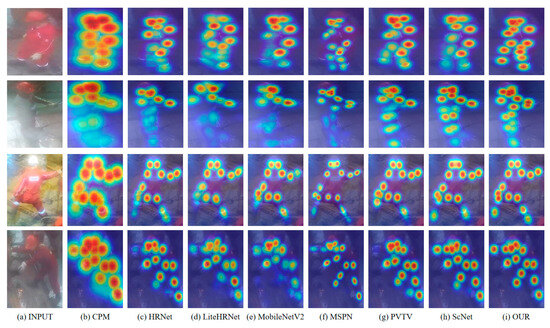

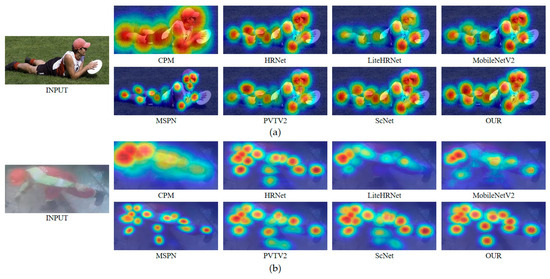

To further reveal the behavior of the model during joint point detection, we visualize the heat maps of the estimated joint points, as shown in Figure 7 and Figure 8. The depth of the color in the figures represents the probability of a location being identified as a joint point. The results show that our method produces a distinct high-probability hotspot at the position of each joint point. In contrast, the hotspot areas of the other methods are vaguely defined, making it difficult to focus clearly on the joint points. Moreover, the probability values of these methods are low at multiple joint points, indicating that they cannot definitively determine whether these regions are joint locations, which restricts the accuracy of joint point recognition.

Figure 7.

A heatmap comparison of various algorithms’ estimated results on the COCO dataset.

Figure 8.

A heatmap comparison of various algorithms’ estimated results on the self-built dataset.

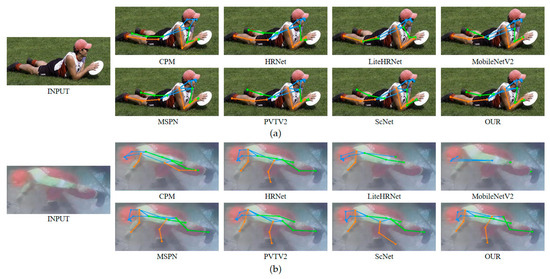
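The heat map comparison rests on two properties: how high the peak probability is and how tightly the probability mass is concentrated around it. The sketch below illustrates one simple way to measure both on a single-joint heat map stored as a nested list. The argmax decoding and the concentration measure are illustrative assumptions, not the decoding procedure used in the paper.

```python
def decode_heatmap(heatmap):
    """Return ((row, col), peak_value) of the highest-scoring cell in a 2D heat map."""
    best_val = float("-inf")
    best_pos = (0, 0)
    for r, row in enumerate(heatmap):
        for c, val in enumerate(row):
            if val > best_val:
                best_val, best_pos = val, (r, c)
    return best_pos, best_val


def peak_concentration(heatmap, radius=1):
    """Fraction of the total heat map mass lying within `radius` cells of the peak."""
    (pr, pc), _ = decode_heatmap(heatmap)
    total = sum(sum(row) for row in heatmap)
    if total <= 0:
        return 0.0
    near = sum(
        val
        for r, row in enumerate(heatmap)
        for c, val in enumerate(row)
        if abs(r - pr) <= radius and abs(c - pc) <= radius
    )
    return near / total
```

A sharp hotspot gives a high peak value and a concentration close to 1, whereas a diffuse heat map gives a low peak value and a concentration close to the share of cells inside the window.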

In real-world application scenarios, the human pose is not limited to the upright state; leaning poses also occur. The distribution of key points differs considerably between the two: in the upright state, the key points are mainly arranged in the vertical direction, whereas in the leaning state they are mostly distributed in the horizontal direction. To compare the generalization ability of different algorithms in more depth, we visualize their results on human images in the leaning state, as shown in Figure 9 and Figure 10. The comparison shows that our method also performs well on leaning poses, indicating that it captures the relationships between human key points more deeply and comprehensively and has stronger generalization ability.

Figure 9.

A visual comparison of different algorithms for estimating people in a leaning position. (a) The test image of the COCO dataset. (b) The test image of the self-built dataset.

Figure 10.

A heatmap comparison of different algorithms for estimating people in a leaning position. (a) The test image of the COCO dataset. (b) The test image of the self-built dataset.

To intuitively reveal the performance differences among the algorithms in the pose estimation task, we select key points that are difficult to estimate and enlarge them for display, as shown in Figure 11. In Figure 11a, LiteHRNet misses the detection, while the other compared methods all produce false detections, mistaking the left ankle for the right ankle. In Figure 11b, HRNet, LiteHRNet, MobileNetV2, PVTV2, and SCNet all fail to detect the left ankle key point due to the high blur of the image, and CPM and MSPN do not correctly locate the left knee. These results show that the proposed method has clear advantages in robustness and key point positioning accuracy.

Figure 11.

Detailed display of pose keypoints. (a) The test image of the COCO dataset. (b) The test image of the self-built dataset.

4.5. Ablation Experiment

In order to further evaluate the contribution of each module to the overall network structure, we conduct ablation studies on the COCO dataset and the self-built dataset to quantify the impact of each module on network performance. We measure the effectiveness of each module by comparing four indicators: AP, AP50, AP75, and AR. The specific experimental results are shown in Table 5 and Table 6.

Table 5.

Comparison results of ablation experiments for the COCO dataset.

Table 6.

Comparison results of ablation experiments for the self-built dataset.

According to the evaluation results in Table 5 and Table 6, the model performs worst when no additional features are introduced. When multi-frequency features are applied, the performance improves significantly, especially on the self-built high-blur dataset. This shows that multi-frequency features can effectively alleviate the negative effects of environmental blur and interference, thereby improving the anti-interference ability of the model. In addition, when the image is relatively clear, introducing multi-scale spatial features further improves the estimation results. Integrating both types of features not only improves the accuracy of the model but also makes it more adaptable to complex and changing environmental conditions. Moreover, compared with the traditional additive fusion method, the adaptive symmetric feature fusion module and the multi-graph feature aggregation module make more effective use of these two feature types. They fuse the various feature information more effectively, which significantly enhances the robustness of the model.

5. Conclusions

Because human pose estimation models perform poorly in high-interference scenarios, we propose a ladder side-tuning method for pre-trained human pose estimation models based on multi-path feature fusion, which significantly enhances the anti-interference ability of the model. First, the ViT pre-trained model, the discrete wavelet convolutional network, and the atrous spatial pyramid pooling network are applied to extract global features, frequency features, and multi-scale spatial features, respectively, thereby comprehensively capturing human body and environmental information. Second, to achieve the optimal feature fusion effect, we design an adaptive symmetric feature fusion method, which dynamically adjusts the intensity of feature fusion based on the similarity between features. Finally, we propose a multi-graph feature aggregation method to deeply mine the similarity and difference information between features and ensure the integrity of the feature information. This also effectively models the relationships between body key points, which improves the accuracy of pose estimation. The experimental results on the COCO dataset and the self-built high-blur dataset verify the effectiveness of this method on both clear and blurry images, and it achieves a significant performance improvement over existing methods. In the future, we will systematically explore strategies for reducing the number of keypoints in order to improve the model’s inference speed while maintaining accuracy. Additionally, we plan to construct or incorporate specialized datasets that include diverse body types, such as children and individuals with disabilities, to more comprehensively evaluate and enhance the model’s generalizability.

Author Contributions

Conceptualization, Z.L. and Y.S.; methodology, Y.L.; software, Y.S. and B.J.; validation, Z.L., Y.S., Y.L., and B.J.; formal analysis, Y.L. and T.D.; investigation, Y.S.; resources, Z.L.; data curation, B.J. and T.D.; writing—original draft preparation, Y.S.; writing—review and editing, Y.L. and T.D.; visualization, Y.S. and B.J.; supervision, Y.L.; project administration, Z.L.; funding acquisition, Z.L. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the Technology Projects of China National Offshore Oil Corporation: KJGG-2024-15-0501 and the National Natural Science Foundation of China: No. 61972353.

Institutional Review Board Statement

This research is licensed to allow unrestricted reuse.

Informed Consent Statement

Informed consent was obtained from all subjects involved in the study.

Data Availability Statement

All data included in this study are available upon request by contact with the corresponding author.

Conflicts of Interest

Authors Yinliang Shi, Zhaonian Liu, Bin Jiang, and Tianqi Dai were employed by the company CNOOC Research Institute Ltd. The remaining authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

References

1. Wang, J.; Qiu, K.; Peng, H.; Fu, J.; Zhu, J. Ai coach: Deep human pose estimation and analysis for personalized athletic training assistance. In Proceedings of the 27th ACM International Conference on Multimedia, Nice, France, 21–25 October 2019; pp. 374–382.

2. Liu, Z.; Zhang, H.; Chen, Z.; Wang, Z.; Ouyang, W. Disentangling and unifying graph convolutions for skeleton-based action recognition. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 143–152.

3. Difini, G.M.; Martins, M.G.; Barbosa, J.L.V. Human pose estimation for training assistance: A systematic literature review. In Proceedings of the Brazilian Symposium on Multimedia and the Web, Belo Horizonte, Brazil, 5–12 November 2021; pp. 189–196.

4. Gkioxari, G.; Arbeláez, P.; Bourdev, L.; Malik, J. Articulated pose estimation using discriminative armlet classifiers. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Portland, OR, USA, 23–28 June 2013; pp. 3342–3349.

5. Wren, C.R.; Azarbayejani, A.; Darrell, T.; Pentland, A.P. Pfinder: Real-time tracking of the human body. IEEE Trans. Pattern Anal. Mach. Intell. 1997, 19, 780–785.

6. Dalal, N.; Triggs, B. Histograms of oriented gradients for human detection. In Proceedings of the 2005 IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR’05), San Diego, CA, USA, 20–25 June 2005; pp. 886–893.

7. Ramachandran, P.; Parmar, N.; Vaswani, A.; Bello, I.; Levskaya, A.; Shlens, J. Stand-alone self-attention in vision models. Adv. Neural Inf. Process. Syst. 2019, 32–44.

8. Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, Ł.; Polosukhin, I. Attention is all you need. Adv. Neural Inf. Process. Syst. 2017, 30–40.

9. Felzenszwalb, P.F.; Huttenlocher, D.P. Pictorial structures for object recognition. Int. J. Comput. Vis. 2005, 61, 55–79.

10. Eichner, M.; Ferrari, V. Better appearance models for pictorial structures. In Proceedings of the British Machine Vision Conference, London, UK, 7–10 September 2009.

11. Freifeld, O.; Weiss, A.; Zuffi, S.; Black, M.J. Contour people: A parameterized model of 2D articulated human shape. In Proceedings of the 2010 IEEE Computer Society Conference on Computer Vision and Pattern Recognition, San Francisco, CA, USA, 13–18 June 2010; pp. 639–646.

12. Yang, Y.; Ramanan, D. Articulated pose estimation with flexible mixtures-of-parts. In Proceedings of the CVPR 2011, Providence, RI, USA, 20–25 June 2011; pp. 1385–1392.

13. Yang, Y.; Ramanan, D. Articulated human detection with flexible mixtures of parts. IEEE Trans. Pattern Anal. Mach. Intell. 2012, 35, 2878–2890.

14. Achilles, F.; Ichim, A.-E.; Coskun, H.; Tombari, F.; Noachtar, S.; Navab, N. Patient MoCap: Human pose estimation under blanket occlusion for hospital monitoring applications. In Proceedings of the Medical Image Computing and Computer-Assisted Intervention–MICCAI 2016: 19th International Conference, Athens, Greece, 17–21 October 2016; Proceedings, Part I 19. Springer: Cham, Switzerland, 2016; pp. 491–499.

15. Toshev, A.; Szegedy, C. Deeppose: Human pose estimation via deep neural networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 23–28 June 2014; pp. 1653–1660.

16. Sun, X.; Shang, J.; Liang, S.; Wei, Y. Compositional human pose regression. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 2602–2611.

17. Rogez, G.; Weinzaepfel, P.; Schmid, C. Lcr-net: Localization-classification-regression for human pose. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 3433–3441.

18. Luvizon, D.C.; Tabia, H.; Picard, D. Human pose regression by combining indirect part detection and contextual information. Comput. Graph. 2019, 85, 15–22.

19. Wei, S.-E.; Ramakrishna, V.; Kanade, T.; Sheikh, Y. Convolutional pose machines. In Proceedings of the IEEE conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 4724–4732.

20. Newell, A.; Yang, K.; Deng, J. Stacked hourglass networks for human pose estimation. In Proceedings of the Computer Vision–ECCV 2016: 14th European Conference, Amsterdam, The Netherlands, 11–14 October 2016; Proceedings, Part VIII 14. Springer: Cham, Switzerland, 2016; pp. 483–499.

21. Bulat, A.; Tzimiropoulos, G. Human pose estimation via convolutional part heatmap regression. In Proceedings of the Computer Vision–ECCV 2016: 14th European Conference, Amsterdam, The Netherlands, 11–14 October 2016; Proceedings, Part VII 14. Springer: Cham, Switzerland, 2016; pp. 717–732.

22. Sun, K.; Xiao, B.; Liu, D.; Wang, J. Deep high-resolution representation learning for human pose estimation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 5693–5703.

23. Cheng, B.; Xiao, B.; Wang, J.; Shi, H.; Huang, T.S.; Zhang, L. Higherhrnet: Scale-aware representation learning for bottom-up human pose estimation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 5386–5395.

24. Yang, S.; Quan, Z.; Nie, M.; Yang, W. Transpose: Keypoint localization via transformer. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 10–17 October 2021; pp. 11802–11812.

25. Li, Y.; Zhang, S.; Wang, Z.; Yang, S.; Yang, W.; Xia, S.-T.; Zhou, E. Tokenpose: Learning keypoint tokens for human pose estimation. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 10–17 October 2021; pp. 11313–11322.

26. Yuan, Y.; Fu, R.; Huang, L.; Lin, W.; Zhang, C.; Chen, X.; Wang, J. Hrformer: High-resolution vision transformer for dense predict. Adv. Neural Inf. Process. Syst. 2021, 34, 7281–7293.

27. Fang, H.-S.; Li, J.; Tang, H.; Xu, C.; Zhu, H.; Xiu, Y.; Li, Y.-L.; Lu, C. Alphapose: Whole-body regional multi-person pose estimation and tracking in real-time. IEEE Trans. Pattern Anal. Mach. Intell. 2022, 45, 7157–7173.

28. Jia, M.; Tang, L.; Chen, B.-C.; Cardie, C.; Belongie, S.; Hariharan, B.; Lim, S.-N. Visual prompt tuning. In Proceedings of the European Conference on Computer Vision, Tel Aviv, Israel, 23–27 October 2022; pp. 709–727.

29. Yu, B.X.; Chang, J.; Wang, H.; Liu, L.; Wang, S.; Wang, Z.; Lin, J.; Xie, L.; Li, H.; Lin, Z. Visual tuning. ACM Comput. Surv. 2024, 56, 1–38.

30. Liu, H.; Li, C.; Wu, Q.; Lee, Y.J. Visual instruction tuning. Adv. Neural Inf. Process. Syst. 2023, 36, 34892–34916.

31. Zaken, E.B.; Ravfogel, S.; Goldberg, Y. Bitfit: Simple parameter-efficient fine-tuning for transformer-based masked language-models. arXiv 2021, arXiv:2106.10199.

32. Bu, Z.; Wang, Y.-X.; Zha, S.; Karypis, G. Differentially private bias-term only finetuning of foundation models. arXiv 2023, arXiv:2210.00036.

33. Hu, E.J.; Shen, Y.; Wallis, P.; Allen-Zhu, Z.; Li, Y.; Wang, S.; Wang, L.; Chen, W. Lora: Low-rank adaptation of large language models. ICLR 2022, 1, 3.

34. Valipour, M.; Rezagholizadeh, M.; Kobyzev, I.; Ghodsi, A. Dylora: Parameter efficient tuning of pre-trained models using dynamic search-free low-rank adaptation. arXiv 2022, arXiv:2210.07558.

35. Gao, Y.; Shi, X.; Zhu, Y.; Wang, H.; Tang, Z.; Zhou, X.; Li, M.; Metaxas, D.N. Visual prompt tuning for test-time domain adaptation. arXiv 2022, arXiv:2210.04831.

36. Herzig, R.; Abramovich, O.; Ben Avraham, E.; Arbelle, A.; Karlinsky, L.; Shamir, A.; Darrell, T.; Globerson, A. Promptonomyvit: Multi-task prompt learning improves video transformers using synthetic scene data. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision, Waikoloa, HI, USA, 3–8 January 2024; pp. 6803–6815.

37. Zhang, J.-W.; Sun, Y.; Yang, Y.; Chen, W. Feature-proxy transformer for few-shot segmentation. Adv. Neural Inf. Process. Syst. 2022, 35, 6575–6588.

38. Wang, S.; Chang, J.; Wang, Z.; Li, H.; Ouyang, W.; Tian, Q. Fine-grained retrieval prompt tuning. In Proceedings of the AAAI Conference on Artificial Intelligence, Washington, DC, USA, 7–14 February 2023; pp. 2644–2652.

39. Gan, Y.; Bai, Y.; Lou, Y.; Ma, X.; Zhang, R.; Shi, N.; Luo, L. Decorate the newcomers: Visual domain prompt for continual test time adaptation. In Proceedings of the AAAI Conference on Artificial Intelligence, Washington, DC, USA, 7–14 February 2023; pp. 7595–7603.

40. Nie, X.; Ni, B.; Chang, J.; Meng, G.; Huo, C.; Xiang, S.; Tian, Q. Pro-tuning: Unified prompt tuning for vision tasks. IEEE Trans. Circuits Syst. Video Technol. 2023, 34, 4653–4667.

41. Wang, H.; Zhang, T.; Yu, M.; Sun, J.; Ye, W.; Wang, C.; Zhang, S. Stacking networks dynamically for image restoration based on the plug-and-play framework. In Proceedings of the Computer Vision–ECCV 2020: 16th European Conference, Glasgow, UK, 23–28 August 2020; Proceedings, Part XIII 16. Springer: Cham, Switzerland, 2020; pp. 446–462.

42. Ermis, B.; Zappella, G.; Wistuba, M.; Rawal, A.; Archambeau, C. Continual learning with transformers for image classification. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 3774–3781.

43. Pan, J.; Lin, Z.; Zhu, X.; Shao, J.; Li, H. St-adapter: Parameter-efficient image-to-video transfer learning. Adv. Neural Inf. Process. Syst. 2022, 35, 26462–26477.

44. Chen, Z.; Duan, Y.; Wang, W.; He, J.; Lu, T.; Dai, J.; Qiao, Y. Vision transformer adapter for dense predictions. arXiv 2022, arXiv:2205.08534.

45. Chen, S.; Ge, C.; Tong, Z.; Wang, J.; Song, Y.; Wang, J.; Luo, P. Adaptformer: Adapting vision transformers for scalable visual recognition. Adv. Neural Inf. Process. Syst. 2022, 35, 16664–16678.

46. Zhang, J.O.; Sax, A.; Zamir, A.; Guibas, L.; Malik, J. Side-tuning: A baseline for network adaptation via additive side networks. In Proceedings of the Computer Vision–ECCV 2020: 16th European Conference, Glasgow, UK, 23–28 August 2020; Proceedings, Part III 16. Springer: Cham, Switzerland, 2020; pp. 698–714.

47. Sung, Y.-L.; Cho, J.; Bansal, M. Lst: Ladder side-tuning for parameter and memory efficient transfer learning. Adv. Neural Inf. Process. Syst. 2022, 35, 12991–13005.

48. Lin, T.-Y.; Maire, M.; Belongie, S.; Hays, J.; Perona, P.; Ramanan, D.; Dollár, P.; Zitnick, C.L. Microsoft coco: Common objects in context. In Proceedings of the Computer vision–ECCV 2014: 13th European conference, Zurich, Switzerland, 6–12 September 2014; Proceedings, Part v 13. Springer: Cham, Switzerland, 2014; pp. 740–755.

49. Li, B.; Tang, S.; Li, W. LMFormer: Lightweight and multi-feature perspective via transformer for human pose estimation. Neurocomputing 2024, 594, 127884.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).