Abstract

Artificial intelligence (AI)-powered mental health chatbots have evolved quickly as a scalable means of psychological support, bringing novel solutions through natural language processing (NLP), mobile accessibility, and generative AI. This systematic literature review (SLR), following PRISMA 2020 guidelines, collates evidence from 25 peer-reviewed studies published between 2020 and 2025 and reviews therapeutic techniques, cultural adaptation, technical design, system assessment, and ethics. Studies were extracted from seven academic databases, screened against specific inclusion criteria, and thematically analyzed. Cognitive behavioral therapy (CBT) was the most common therapeutic model, featured in 15 systems and frequently used jointly with journaling, mindfulness, and behavioral activation, followed by emotion-based approaches, which were featured in seven systems. Innovative techniques such as GPT-based emotional processing, multimodal interaction (e.g., AR/VR), and LSTM-SVM classification models (greater than 94% accuracy) showed increased conversational flexibility but lacked long-term clinical validation. Cultural adaptability varied: effective localization was seen in systems such as XiaoE, okBot, and Luda Lee, while Western-oriented systems had restricted contextual adaptability. Accessibility and inclusivity remain major challenges, especially in low-resource settings, where limited digital literacy, a lack of multilingual support, and infrastructure deficits persist. Ethical aspects (data privacy, explainability, and crisis plans) were under-evidenced for most deployments. This review differs from previous ones in that it focuses on cultural adaptability, ethics, and hybrid public health integration and proposes a comprehensive approach for deploying AI mental health chatbots safely, effectively, and inclusively.
Central to this review, symmetry is emphasized as a fundamental idea incorporated into frameworks for cultural adaptation, decision-making processes, and therapeutic structures. In particular, symmetry ensures equal cultural responsiveness, balanced user–chatbot interactions, and ethically aligned AI systems, all of which enhance the efficacy and dependability of mental health services. Recognizing these benefits, the review further underscores the necessity for more rigorous academic research into the development, deployment, and evaluation of mental health chatbots and apps, particularly to address cultural sensitivity, ethical accountability, and long-term clinical outcomes.

1. Introduction

The convergence of artificial intelligence (AI) with mental health care is more than an advance in technology; it is a timely and strategic response to the rising global mental health emergency [1,2,3]. Mental health, previously stigmatized and treated as taboo, is today recognized as an acute public health issue. But this greater awareness has also led to the recognition that existing healthcare systems are inadequate to address the sharp rise in demand for mental health services. Because of systemic institutional deficiencies in addressing workplace mental health, even university faculty in Malaysia, who are usually better resourced, report high levels of anxiety and depression [4]. Mental and behavioral disorders currently account for around 13–15% of the global burden of disease, and depression is among the leading causes of disability worldwide [5,6]. Despite progress in treatment modalities, the paradox remains: conditions like depression and anxiety are on the rise, but timely, equitable, and effective care remains in short supply [7,8,9].

Conventional mental health service approaches, dependent on static assessment instruments and face-to-face consultations, are rapidly becoming outdated, especially for remote or hard-to-reach populations where care is costly, logistically inconvenient, and socially infeasible [10]. Many structural hurdles, including stigma, cultural mismatches, gender-specific hesitation, and constrained facilities, also hinder treatment accessibility, continuity, and efficacy [11,12,13]. These obstacles disproportionately affect vulnerable populations like adolescents, postpartum mothers, refugees, young offenders, and rural populations, perpetuating their exclusion from mainstream mental health systems [14,15,16]. This includes university students who experienced more distress when switching to remote learning, even though they remained connected to the internet, and pregnant women with fetal abnormality diagnoses who experienced increased anxiety during COVID-19 lockdowns due to compromised support systems [17]. One promising approach to resolving these persistent problems is AI technology. With the ability to process complex, multimodal information in real time, AI allows for timely detection, tailored evaluations, responsive interventions, and dynamic remote monitoring [18,19]. Computerized testing logic (CTL), machine learning diagnostic software, natural language processing (NLP), and AI-facilitated cognitive behavioral therapy (CBT) software are transforming static, one-size-fits-all paradigms into adaptive, user-centric experiences [20,21,22,23].

Additionally, mobile mental health solutions are becoming more popular as scalable and reasonably priced substitutes for traditional service delivery. These technologies—especially useful for youth and isolated populations that lacked these resources—use smartphones and wearables to collect real-time physiological and behavioral data, facilitating timely, proactive intervention [24,25,26]. AI systems adapted to local cultures, including emotion-sensitive chatbots and localized diagnostic platforms, have also been promising in culturally diverse settings like Malaysia, where sociocultural sensitivity matters [27,28].

Yet, a number of critical hurdles persist. A primary constraint is that many AI models are trained on homogeneous data, raising concerns regarding their fairness, accuracy, and generalizability to different populations [29,30]. Furthermore, data privacy, algorithmic bias, lack of transparency, and restricted explainability are among the ethical concerns that continue to inhibit trust building and clinical embedding [31,32,33]. Infrastructural disparities, fragmented regulations, and missing implementation frameworks further hinder the successful deployment of AI within mental health systems [18]. This review therefore highlights the need for stronger research engagement with the rapidly developing field of mental health chatbots and software solutions. While more solutions are becoming available and accessible, most have not been validated through high-level clinical trials or systematic reviews. Closing this knowledge gap is critical for the development of standard, effective, and reliable AI-powered mental health treatments.

A historical perspective provides useful context. The development of AI in mental health first came about with ELIZA in 1966, which was an early system for human–computer therapeutic conversation [34]. Since ELIZA, AI chatbots have developed to include multimodal input, emotional recognition, and responses from wearable devices. Despite advancements, a large number of AI tools have been released onto the market with little empirical support [35]. This review illustrates that many promising solutions have not been validated in real-life clinical settings. For effective and meaningful incorporation, clinician–technologist collaboration is critical to provide solutions that balance innovation with clinical effectiveness and ethics.

There have been numerous excellent review papers that helped grow the area of AI-based mental health interventions. Notable works such as [36,37,38] have contributed valuable lessons concerning patient engagement, clinical efficacy, and the potential of digital tools. Nevertheless, a number of these works have a limited scope or narrow focus. For example, though Khosravi-Azar and colleagues investigated strategies for engagement, they did not systematically categorize chatbot architectures and technical frameworks. Li and colleagues’ robust meta-analysis combined a broad range of conversational models but did not subclassify them into therapeutic vs. non-therapeutic systems. Similarly, Farzan and colleagues focused their systematic review on only three chatbots, Woebot, Wysa, and Youper, with no examination of more recent tools or cultural and architectural dimensions. Other reviews, e.g., [39], provided descriptive accounts but paid little attention to adaptive capacity, Explainable AI (XAI), and deployment in low-resource and diverse settings.

A comparative overview of recent, significant reviews of AI-based chatbots for mental health is shown in Table 1. Each earlier review has advanced the area by providing a range of viewpoints on technical implementation, engagement, and efficacy. By integrating ethical frameworks, cultural alignment, and therapeutic design symmetry, elements that have not been thoroughly examined collectively in prior reviews, the current review builds upon these foundations and offers a unique perspective. To ensure that the most recent advancements in the field are covered, this review also includes over eight studies from 2024 and 2025. By focusing on these particular aspects, the review extends previous research and seeks to facilitate the creation of chatbot therapies for mental health that are more technically sound, ethically robust, and culturally sensitive.

Table 1.

Comparative summary of major reviews on AI mental health chatbots.

By contrast, the current review expands and improves upon this earlier work by implementing a PRISMA-informed methodology to integrate 25 peer-reviewed articles published in the 2020–2025 period. It presents a systematic, multi-dimensional comparison across five domains of importance: methods employed, cultural adaptation process, system architecture and features, technical methodology, and typical limitations. Particular emphasis is given to recent tools such as culturally adaptive and localized AI systems. Far from encompassing every chatbot ever built, the review is intended as a timely and comprehensive reference for researchers wishing to better grasp changing trends and inform the development of equitable, ethical, and clinically responsive digital mental health tools. Despite the theoretical potential of AI in mental health, there is a pressing need for integrated, systematic evidence assessing the reality of its implementation, particularly in situations that involve adaptive screening, require cultural sensitivity, and focus on vulnerable populations. To meet this need, an extensive systematic literature review (SLR) is crucial in order to monitor practice patterns, underscore technological strengths, and recognize methodological limitations. In accordance with PRISMA guidelines, this review examines the literature from 2020 to 2025 in prominent databases such as Scopus, PubMed, IEEE Xplore, Web of Science, SpringerLink, Google Scholar, and the European Centre for Research Training and Development. In synthesizing these developments, this review explicitly highlights symmetry, as evident across therapeutic interactions, system architecture, and ethical safeguards, as a foundational principle unifying the design and enhancing the effectiveness of modern AI-powered mental health chatbots.
It answers the main research question: What are the dominant methods, technological constraints, and most significant innovations in AI-based mental health screening solutions developed from 2020 to 2025, specifically regarding cultural sensitivity and suitability for diverse and low-resource settings? In summarizing the primary findings, this systematic review concentrates on four essential elements that are crucial to the creation and assessment of AI-powered chatbots for mental health: therapeutic approaches, technical design, cultural adaptation, and ethical considerations.

Therapeutic Models: The fundamental psychological theories that inform chatbot-based mental health treatments are known as therapeutic models. Because it works well in digital settings, cognitive behavioral therapy, or CBT, is still the most often used framework. Recent systems, however, are progressively incorporating alternative approaches, including emotion-focused therapy, mindfulness, psychoeducation, motivational interviewing (MI), and dialectical behavior therapy (DBT), to improve participation and therapeutic success.

Technical Design: The system architectures, interface techniques, and algorithms that underpin chatbot functionality are all included in technical design. Notable examples include rule-based logic, natural language processing (NLP), emotion recognition frameworks (like EmoRoBERTa), generative AI models (like GPT-3.5), and multimodal interfaces that combine virtual and augmented reality. Additionally, a lot of systems use hybrid strategies that combine many AI techniques. The user experience, usability, adaptability, and scalability are all significantly impacted by these design decisions.

Cultural Adaptation: This evaluates how well chatbots represent the language, cultural norms, and values of their users. Context-aware material, culturally appropriate discourse, and localized language support are essential components. These characteristics are necessary to guarantee effectiveness, relevance, and accessibility, especially in situations with limited resources and a varied population.

Ethical Considerations: Responsible chatbot deployment revolves around ethical issues such as algorithmic fairness, transparency, data privacy, crisis response, and informed consent. Strong ethical frameworks are essential for protecting users, building trust, and guaranteeing responsibility because mental health care is sensitive. This review outlines areas that require development and assesses how well existing chatbot systems integrate ethical safeguards.

Although the number of AI-powered chatbots for mental health is increasing, thorough evaluations that look at the therapeutic underpinnings of these tools as well as their cultural, technological, and ethical aspects are still lacking. The majority of earlier research has concentrated either on technical performance or therapeutic efficacy, frequently ignoring cultural relevance and ethical considerations, including bias, privacy, and crisis procedures. In order to fill these gaps, this review uses a framework informed by symmetry to provide a multifaceted examination of system design, cultural sensitivity, therapeutic paradigms, and ethical responsibilities in chatbots for mental health.

A systematic review of the literature was carried out in accordance with PRISMA 2020 principles. In-depth investigation of architectural design, therapeutic approaches, cultural adaptation, and the moral dilemmas associated with AI-based mental health therapies was made possible by thematic analysis of the collected research.

2. Methodology

This section outlines the step-by-step process adopted to systematically identify, screen, and review the literature on AI-based chatbots for mental health. It provides an overview of the research methodology, i.e., the review approach, search strategy, inclusion/exclusion criteria, article screening, and quality control. All these steps are adopted to make the review process methodologically sound, transparent, and replicable.

2.1. Research Approach

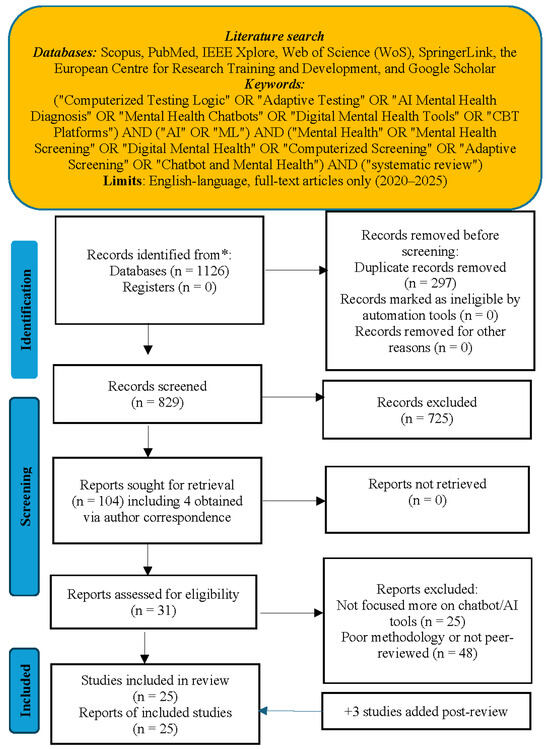

The systematic literature review (SLR) was conducted in accordance with the PRISMA 2020 guidelines [45], which provide a clear, standard process for conducting and reporting systematic reviews. PRISMA was used because it can effectively increase review quality and replicability [46]. The review process reflected the four key PRISMA phases: identification, screening, eligibility, and inclusion. The article selection process was represented graphically through an adapted PRISMA flow diagram [47]. The process was also guided by the good-practice recommendations of more recent SLRs [48], which involve manual curation to ensure coverage of the unique subtleties in mental health research that cannot be captured through automated searching.

Special emphasis was placed on identifying and mapping the inherent processes in chatbot interventions like natural language processing (NLP), sentiment analysis, reinforcement learning, and adaptive dialogue systems to learn more about their potential to improve mental health screening as well as intervention outcomes.

2.2. Method of Search

Eight well-known academic databases were searched extensively for relevant studies: SCIVerse Scopus, ScienceDirect, IEEE Xplore, PubMed, Web of Science (WOS), PsycINFO, SpringerLink, and Google Scholar. Boolean operators (i.e., AND, OR) were used with key terms related to mental health screening and online assessment approaches. Example search terms included the following:

- (“Computerized Testing Logic” OR “Adaptive Testing” OR “AI Mental Health Diagnosis” OR “Mental Health Chatbots” OR “Digital Mental Health Tools” OR “CBT Platforms”);

- AND (“AI” OR “ML”);

- AND (“Mental Health” OR “Mental Health Screening” OR “Digital Mental Health” OR “Computerized Screening” OR “Adaptive Screening” OR “Chatbot and Mental Health”);

- AND (“systematic review”).
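The way these term groups combine can be sketched in a few lines of Python. This is only an illustrative reconstruction: the helper names `or_group` and `build_query` are hypothetical, and the exact syntax accepted by each database (field tags, quoting, truncation) differs and is not specified in this review.

```python
# Minimal sketch: assemble the Boolean search string from the term groups
# listed above. Function names are hypothetical helpers, not part of any
# database API; real platforms need their own field tags and syntax.

def or_group(terms):
    """Quote each term, join with OR, and wrap the group in parentheses."""
    return "(" + " OR ".join(f'"{term}"' for term in terms) + ")"


def build_query(groups):
    """Join the OR-groups with AND so every group must be matched."""
    return " AND ".join(or_group(group) for group in groups)


TERM_GROUPS = [
    ["Computerized Testing Logic", "Adaptive Testing",
     "AI Mental Health Diagnosis", "Mental Health Chatbots",
     "Digital Mental Health Tools", "CBT Platforms"],
    ["AI", "ML"],
    ["Mental Health", "Mental Health Screening", "Digital Mental Health",
     "Computerized Screening", "Adaptive Screening",
     "Chatbot and Mental Health"],
    ["systematic review"],
]

query = build_query(TERM_GROUPS)
print(query)
```

Keeping the groups as plain lists makes it straightforward to drop or reorder a group when a given platform returns too few or too many records.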

Search terms were iteratively generated based on preliminary findings and modified according to database indexing.

Not every combination of keywords provided unique or adequate findings throughout the chosen databases. Thus, the search phrases were adapted flexibly and customized to the indexing system of every platform to best recover the applicable literature. To add further thoroughness, searches also took place manually for the list of references, the chief journals, and previous review articles. Only English-language studies where full text was available, published from January 2020 to March 2025, were considered for inclusion.

Aside from database searching and predefined inclusion criteria, we actively approached the corresponding authors of some highly relevant but inaccessible studies. This was carried out through email and academic networking sites like ResearchGate. Some authors provided the full-text versions of their articles, which were then assessed for quality and included in the dataset upon passing the quality appraisal process. This ensured the acquisition of a more thorough and representative body of evidence for the review.

2.3. Data Analysis and Thematic Synthesis

A thorough thematic analysis was carried out to synthesize the data across several analytical dimensions after the identification and selection of suitable papers. A structured data extraction matrix created in Microsoft Excel contained the data from every included study. The extraction fields were designed to capture comprehensive information, including study objectives, identified issues, problem statements, research gaps, methodologies, results, limitations, future work, contributions, chatbot systems, techniques used, NLP/ML models, cultural adaptations, and deployed platforms or tools.

Based on the review’s theoretical focus and study goals, a preliminary coding system was created that focused on four main areas: technological design, cultural adaptation, therapeutic models, and ethical issues. Iterative code refining was implemented through many reading cycles as data extraction advanced in order to guarantee consistency and take into account new themes. Every study was thoroughly examined, pertinent data was grouped according to the pre-established codes, and cross-study comparisons were carried out to find recurring trends and differences between therapies.

A thorough comparative synthesis across therapeutic approaches, system designs, cultural contextualization techniques, and ethical implementation frameworks was made possible by the coding process, which aggregated various study features into high-level thematic categories. The analytical findings and discussion in the next sections of this review are based on this thematic synthesis.
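To make the coding and aggregation step more concrete, the following Python sketch shows one way coded extraction records could be tallied per theme. It is an assumption-laden illustration rather than the procedure actually used (the extraction was done in Microsoft Excel): the `StudyRecord` structure, the code labels, and the three example records are all hypothetical placeholders, not data from the included studies.

```python
# Illustrative sketch of tallying codes per theme across studies.
# All records and code labels below are hypothetical placeholders.
from collections import Counter
from dataclasses import dataclass, field

# The four coding areas named in the text.
THEMES = ("therapeutic_models", "technical_design",
          "cultural_adaptation", "ethical_issues")


@dataclass
class StudyRecord:
    study_id: str
    codes: dict = field(default_factory=dict)  # theme -> list of code labels


def tally_codes(records, theme):
    """Count in how many studies each code appears under one theme."""
    counts = Counter()
    for record in records:
        # set() ensures a code is counted at most once per study
        counts.update(set(record.codes.get(theme, [])))
    return counts


records = [
    StudyRecord("study_A", {"therapeutic_models": ["CBT", "mindfulness"],
                            "technical_design": ["rule_based"]}),
    StudyRecord("study_B", {"therapeutic_models": ["CBT"],
                            "technical_design": ["generative_ai"],
                            "cultural_adaptation": ["local_language"]}),
    StudyRecord("study_C", {"therapeutic_models": ["emotion_focused"],
                            "ethical_issues": ["crisis_protocol"]}),
]

therapy_counts = tally_codes(records, "therapeutic_models")
print(therapy_counts.most_common())
```

Counting each code once per study, rather than once per mention, keeps the tallies comparable to "number of systems" figures of the kind reported in the results.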

2.4. Exclusion and Inclusion Criteria

A structured filtering process was conducted following the search in order to ascertain the scientific rigor and appropriateness of the included studies for this review. This consisted of applying a predefined set of inclusion and exclusion criteria to determine the suitability of the studies for full analysis. The criteria are detailed in the following subsections and served both to narrow the scope of the review and to exclude unrelated, duplicate, or non-peer-reviewed literature.

2.4.1. Inclusion Criteria

- Peer-reviewed articles in journals, conference papers, or systematic reviews;

- Research on mental health screening technology, mental health chatbots and tools, CTL, adaptive testing, AI, ML, or CBT;

- Research on digital, computerized, or AI-based mental health therapies;

- Articles released from 2020 to 2025;

- English-language journals with full-text availability.

2.4.2. Exclusion Criteria

- Non-English articles;

- Editorials, opinion articles, commentaries, or opinion columns that lack empirical data;

- Research based solely on hand/manual screening procedures;

- Research not associated with mental health evaluation or AI/digital-assisted techniques;

- Articles not available in full text;

- Market mental health apps that have not been peer-reviewed.
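The criteria above can be read as a simple eligibility filter. The sketch below expresses them in Python under several assumptions: the `Record` fields and document-type labels are hypothetical, the date window uses the January 2020 to March 2025 range stated in Section 2.2, and in practice each judgment (for example, whether a paper lacks empirical data) was made by human reviewers rather than by code.

```python
# Sketch of the inclusion/exclusion criteria as a filter.
# Field names and type labels are hypothetical; the screening itself
# was performed manually by reviewers.
from dataclasses import dataclass
from datetime import date

ELIGIBLE_TYPES = {"journal_article", "conference_paper", "systematic_review"}
WINDOW_START = date(2020, 1, 1)
WINDOW_END = date(2025, 3, 31)  # assumes the end of March 2025


@dataclass
class Record:
    title: str
    language: str
    published: date
    doc_type: str
    peer_reviewed: bool
    full_text_available: bool
    mental_health_focus: bool
    uses_ai_or_digital_method: bool


def exclusion_reasons(rec):
    """Return every criterion the record fails (empty list = eligible)."""
    reasons = []
    if rec.language != "English":
        reasons.append("non-English")
    if not WINDOW_START <= rec.published <= WINDOW_END:
        reasons.append("outside 2020-2025 window")
    if rec.doc_type not in ELIGIBLE_TYPES:
        reasons.append("ineligible document type")
    if not rec.peer_reviewed:
        reasons.append("not peer-reviewed")
    if not rec.full_text_available:
        reasons.append("full text unavailable")
    if not (rec.mental_health_focus and rec.uses_ai_or_digital_method):
        reasons.append("out of scope")
    return reasons


def is_eligible(rec):
    return not exclusion_reasons(rec)


example = Record("Hypothetical chatbot trial", "English", date(2023, 5, 1),
                 "journal_article", True, True, True, True)
print(is_eligible(example))
```

Returning the list of failed criteria, rather than a bare boolean, mirrors the PRISMA expectation that reasons for exclusion are recorded.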

2.5. Selection Process

The search initially identified 1126 records. After removing 297 duplicates and automated exclusions using Mendeley, 829 records were screened by title and abstract. From these, 104 were selected for full-text review, of which 4 were obtained directly via author contact (email). After applying the initial inclusion criteria, 22 studies were identified. However, following peer-review feedback highlighting underrepresentation from the Global South, we conducted an additional targeted search. Three new eligible studies from Jordan, Brazil, and Malawi were identified and included in the final review, bringing the total to 25 peer-reviewed articles synthesized in this systematic review. These were drawn from 7 scholarly databases: Web of Science (n = 6), PubMed (n = 11, including the three additional studies), SpringerLink (n = 2), Google Scholar (n = 3), IEEE Xplore (n = 1), Scopus (n = 1), and the European Centre for Research Training and Development UK (n = 1). This count reflects both the breadth of sources searched and the multidisciplinary nature of AI-based mental health chatbot studies. Figure 1 illustrates the systematic process through which these 25 studies were selected. Two independent reviewers manually carried out the entire screening process to guarantee reliability.
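As a quick consistency check, the counts reported above can be verified arithmetically. The short Python sketch below uses only the figures stated in this section; the two "excluded" values are derived by subtraction and are not reported explicitly in the text, so they should be read as implied rather than confirmed.

```python
# Arithmetic check of the selection counts reported in Section 2.5.
identified = 1126
duplicates_and_automated_exclusions = 297
screened = identified - duplicates_and_automated_exclusions

full_text_reviewed = 104
included_initial = 22
included_targeted_search = 3
included_total = included_initial + included_targeted_search

by_database = {
    "Web of Science": 6,
    "PubMed": 11,  # includes the three additional studies
    "SpringerLink": 2,
    "Google Scholar": 3,
    "IEEE Xplore": 1,
    "Scopus": 1,
    "European Centre for Research Training and Development UK": 1,
}

# Derived values (implied by the reported counts, not stated in the text).
excluded_at_title_abstract = screened - full_text_reviewed
excluded_at_full_text = full_text_reviewed - included_initial

assert screened == 829
assert included_total == 25
assert sum(by_database.values()) == included_total
assert len(by_database) == 7

print(screened, excluded_at_title_abstract, excluded_at_full_text, included_total)
```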

Figure 1.

PRISMA 2020 flow diagram of study selection for AI-based mental health chatbot review (2020–2025). * All records were identified through electronic database searches only.

2.6. Quality Control and Validation

Screening, categorization, and relevance assessment of the articles were performed independently and cross-validated by several reviewers. Discrepancies were resolved through discussion until consensus was reached. This cross-validation ensured conformity with the review goal and improved the consistency of the final selection [49].
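Agreement between independent reviewers is often summarized with Cohen's kappa, which corrects raw agreement for agreement expected by chance. This review does not report such a statistic, so the Python sketch below is purely illustrative: the function is a standard textbook formulation, and the two lists of screening decisions are invented placeholders rather than the reviewers' actual decisions.

```python
# Illustrative Cohen's kappa for two reviewers' include/exclude decisions.
# The decision lists are hypothetical; no kappa is reported in this review.
from collections import Counter


def cohens_kappa(rater_a, rater_b):
    """kappa = (p_observed - p_expected) / (1 - p_expected)."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("both raters must label the same non-empty item set")
    n = len(rater_a)
    observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    freq_a, freq_b = Counter(rater_a), Counter(rater_b)
    labels = set(freq_a) | set(freq_b)
    # Chance agreement from each rater's marginal label frequencies
    expected = sum(freq_a[label] * freq_b[label] for label in labels) / (n * n)
    if expected == 1.0:
        return 1.0  # both raters used a single identical label throughout
    return (observed - expected) / (1 - expected)


reviewer_1 = ["include", "exclude", "exclude", "include",
              "exclude", "exclude", "include", "exclude"]
reviewer_2 = ["include", "exclude", "include", "include",
              "exclude", "exclude", "exclude", "exclude"]

kappa = cohens_kappa(reviewer_1, reviewer_2)
print(round(kappa, 3))
```

In this invented example the reviewers agree on 6 of 8 records, but because most records are excluded by both, chance agreement is already high and kappa is correspondingly lower than the raw 75% agreement.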

The identification of studies, screening, and inclusion process is explained in the PRISMA flow diagram in Figure 1, which provides an overview of every step from database searching to final study selection. There were 25 studies selected for final review following application of all eligibility criteria, out of 1126 identified records, as detailed in Section 2.5.

2.7. Methodological Quality Assessment of Included Studies

To assess the methodological quality of the included studies, a structured critical appraisal was carried out using a modified Critical Appraisal Skills Programme (CASP)-based technique. The design, sample size, measurement instruments, comparison groups, result reporting, risk of bias, and overall quality of each study were evaluated. The majority of studies relied on small samples with little follow-up and lacked controlled procedures, which limited their generalizability. Inconsistent outcome measures and a strong dependence on self-reported data without objective validation hampered cross-study comparisons. Some studies demonstrated technological innovation, while others yielded promising clinical outcomes. Table 2 and Table 3 provide a summary of all the information.

Table 2.

Quality assessment of included studies (Part 1).

Table 3.

Quality assessment of included studies (Part 2)—continued from Table 2.

A wide range of study designs, from technical system assessments and qualitative interviews to randomized controlled trials and quasi-experimental designs, are revealed by the methodological assessment shown in Table 2 and Table 3. A sizable percentage of studies were restricted to system design and technical validation without clinical testing, even though a number of excellent RCTs showed noteworthy clinical effects. Small sample numbers, short follow-up periods, no control groups, and fluctuating bias risk were common methodological problems. However, taken as a whole, these studies offer a thorough overview of the state of technological advancement as well as new data demonstrating the therapeutic potential of AI-based chatbots for mental health.

3. Results of Systematic Review

The following systematic literature review integrates conclusions from peer-reviewed articles published from 2020 to 2025 regarding AI-based mental health chatbots. The literature is analyzed against five inductively derived core themes that are concordant with the research aims: (1) Techniques Used, (2) Cultural Adaptation, (3) System Design and Features, (4) Technical Methodology and Evaluation, and (5) Typical Limitations. The following subsections provide a detailed account of each of these five analytical dimensions.

3.1. Techniques Utilized

The most popular framework among the evaluated chatbot systems is cognitive behavioral therapy (CBT). CBT methods, including cognitive restructuring, behavioral activation, journaling, and mindfulness, were used in systems such as Woebot, Wysa, Tessa, and XiaoE [52,64,67]. Other evidence-based treatment models like dialectical behavior therapy (DBT), motivational interviewing (MI), and psychoeducation were also used, but less frequently. For example, Woebot-SUD extended the therapeutic capabilities of Woebot by integrating DBT and MI strategies to treat substance use disorders [66]. Outcomes from selected applications demonstrated quantifiable clinical impact. Wysa was associated with statistically significant changes in PHQ-9 and GAD-7 scores in individuals with chronic pain [73]. Aury used a stepped-care model of CBT that resulted in sustained clinical outcomes [63]. Vitalk was employed in two settings. In Brazil [70], it served as a rule-based mobile chatbot that provided the general public with CBT strategies such as behavioral activation and cognitive restructuring, with notable improvements in PHQ-9, GAD-7, and DASS-21 scores. In Malawi [71], an adapted version combining CBT and resilience training was used for health workers, and it demonstrated moderate improvements on the RS-14 and a custom behavior scale. The STARS system in Jordan [72] produced moderate improvements on several mental health metrics after delivering 10 culturally tailored CBT lessons via a rule-based web chatbot with optional human support. Beyond the standard psychotherapeutic models, a number of systems incorporated more advanced AI methods for increased personalization and responsiveness. Emohaa, for example, used a dual-bot architecture that combined structured delivery of CBT with a generative emotional support component using strategy-controlled NLP [56].
Psych2Go incorporated prosodic emotion recognition within a voice-enabled CBT platform to increase emotional sensitivity while respecting user privacy [68].

Further innovation was observed in F-One, which employed a blend of GPT-3.5, FAISS semantic search, and EmoRoBERTa to enable emotionally intelligent and context-sensitive conversations [51]. Saarthi used NLP to enable peer-to-peer emotional support among community members [57]. In terms of predictive analysis, ref. [53] compared LSTM and SVM models on an intent classification dataset related to mental health. LSTM performed well in modeling sequential input, while SVM produced excellent classification accuracy. Each model achieved an accuracy above 94%, and an ensemble approach reached 94.2%, indicating good prospects for hybrid AI in future interventions.
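One common way to combine two classifiers such as an LSTM and an SVM is soft voting, in which the per-class probabilities of both models are averaged and the highest-scoring class is chosen. How ref. [53] actually built its ensemble is not described here, so the Python sketch below is an assumption for illustration only: the `soft_vote` helper, the intent labels, and the probability values are all hypothetical.

```python
# Illustrative soft-voting ensemble over two models' class probabilities.
# Soft voting is an assumed combination rule; labels and numbers are invented.

def soft_vote(probs_a, probs_b, weight_a=0.5):
    """Weighted average of two probability dicts; returns (label, scores)."""
    if not 0.0 <= weight_a <= 1.0:
        raise ValueError("weight_a must lie in [0, 1]")
    labels = sorted(set(probs_a) | set(probs_b))  # sorted for determinism
    combined = {
        label: weight_a * probs_a.get(label, 0.0)
        + (1.0 - weight_a) * probs_b.get(label, 0.0)
        for label in labels
    }
    best = max(labels, key=lambda label: combined[label])
    return best, combined


# Hypothetical outputs for one user message from each model.
lstm_probs = {"greeting": 0.10, "anxiety": 0.55, "sleep": 0.35}
svm_probs = {"greeting": 0.05, "anxiety": 0.30, "sleep": 0.65}

label, scores = soft_vote(lstm_probs, svm_probs)
print(label, scores)
```

With equal weights the two models disagree and the averaged scores decide the outcome; shifting `weight_a` toward one model lets its prediction dominate, which is one way an ensemble can be tuned on validation data.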

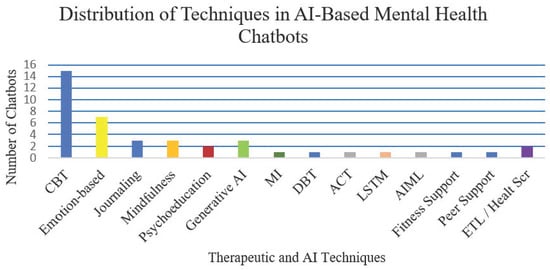

The spread of treatment and AI-based methods among the systems summarized is depicted in Figure 2.

Figure 2.

Frequency of techniques used in AI-based chatbots for mental health (2020–2025).

This indicates that cognitive behavioral therapy (CBT) was used in most cases (15 systems), followed by emotion-based/empathy strategies (7 systems), while other methods like journaling, mindfulness, psychoeducation, and generative AI (GPT) models also featured in a variety of chatbots. The range and complexity of therapy delivery methods are reflected in this distribution. For example, CBT-based methods offer behavioral activation and structured cognitive restructuring, which are appropriate for self-directed daily mood control, but they might not work as well for people who need dynamic emotional responses. Although emotion-oriented techniques (like those used in Psych2Go and Emohaa) increase affective sensitivity, they run the risk of misinterpretation where professional supervision is insufficient. Additionally, including generative models such as GPT-3.5 (found in F-One) provides additional flexibility in open-ended discussions; nevertheless, this approach does not yet have long-term clinical validation and is still susceptible to ethical control issues in vulnerable populations. Therefore, depending on user features including clinical severity, emotional regulation demands, and cultural context, system design decisions must strike a balance between structure and adaptability.

3.2. Cultural Adaptation

Several studies focused on the role of cultural adaptation in improving user engagement, building user trust, and ensuring the applicability of the therapy process in mental health chatbots. Implementations of culturally adapted CBT-based therapy included XiaoE, which delivered interventions in Mandarin Chinese [52], and okBot, which delivered interventions in Bahasa Malaysia [27]. These systems used local languages and culturally adapted communication styles, thereby improving accessibility and context-appropriateness for their target populations. Similarly, Vitalk was culturally modified for both Brazil and Malawi to accommodate different user groups using resilience techniques and localized cognitive behavioral therapy [70,71]. Through culturally appropriate delivery, the STARS chatbot in Jordan increased engagement by offering CBT in Arabic and optional human e-helper support [72]. Other examples included the Kazakh-adapted chatbot created by [54], which used culturally tailored interface features, CBT-informed evaluations, and native-language assistance built on RASA NLU to facilitate psychological distress management in local contexts. ChatPal, a multilingual digital resource discussed by [69], supported mental well-being through psychoeducation and exercises provided in English, Scottish Gaelic, Swedish, and Finnish, emphasizing the value of multilingual support in promoting greater access to mental health care. Luda Lee, a persona-based social chatbot specifically designed for Korean college students, reduced social anxiety and loneliness within four weeks, mainly due to its empathic and emotionally responsive dialogue [60]. Leora, an Australian-designed chatbot, aimed to offer ethically grounded and culturally appropriate support using CBT as well as well-being packages, with a focus on transparency, bias reduction, and trust building, as reported by [62].
By contrast, Tessa, a chatbot that focuses on preventing eating disorders in American college women, lacked cultural representativeness. Ref. [67] evaluated its effectiveness with a primarily White sample, which raises questions about its cultural relevance and generalizability across a range of cultural backgrounds.

3.3. Feature and System Design

Chatbot system designs differed with respect to their functionality, adaptability, and user interactive features. For instance, ref. [67] assessed Tessa, a non-AI-driven chatbot that provided CBT-informed eating disorder prevention in the form of scripted, rule-based exchanges. Although intended for scalability, fixed template responses in Tessa restricted its potential for personalization and interactive responsiveness. By way of contrast, AI-powered systems such as Wysa, XiaoE, and BalanceUP employed natural language processing (NLP) using free text in order to facilitate more adaptive, flexible, and emotionally attuned user interactions [52,65,73]. These platforms offered a more natural level of conversation as well as adaptive involvement as compared with rules-based systems. A rule-based architecture centered on CBT delivery, self-monitoring, and resilience approaches customized to the local population circumstances was employed by Vitalk, which was implemented in both Brazil and Malawi [70,71]. Similar to this, the STARS chatbot in Jordan demonstrated an approachable yet encouraging design model [72] by offering a structured, non-AI web-based platform with culturally relevant CBT lessons and optional human e-helper integration. Advanced systems combined generative AI and emotional intelligence capabilities. F-One, for example, combined GPT-3.5, LangChain, and EmoRoBERTa to provide context-aware and emotionally responsive conversations [51]. Replika, on the other hand, offered multimodal interactions using AR, VR, voice, and text to provide vibrant, emotionally nuanced user experiences [59]. BalanceUP and Leora platforms integrated multimedia capabilities such as self-help videos, psychoeducation modules, and escalation of crisis protocols to boost therapy interactions [50,65]. Shared across systems were features such as mood monitoring, journals, and mindfulness tools [64] and crisis identification and escalation features [56,68]. 
User behavior dashboards and emotional analysis were offered on some sites as well to monitor intervention [50]. Persona-based empathy delivery, as noted on Luda Lee, also enhanced emotional bonding and access to mental health assistance [60]. In the public health context, JomPrEP represented an exemplary hybrid model that brought together clinic-linked infrastructure with mobile-based functionalities like risk screening and e-consultation, as well as real-time dashboards for mental health support and referral among Malaysian MSM communities [6]. This convergence showcased the viability of digital mental health systems within wider healthcare environments.
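The scripted, rule-based end of the design spectrum described above can be illustrated with a minimal sketch. It is not the implementation of Tessa or any other reviewed system: the patterns, replies, crisis terms, and the names `RULES`, `CRISIS`, and `respond` are all hypothetical, and a deployed system would need clinically validated crisis detection rather than keyword matching.

```python
import re

# Hypothetical scripted rules: (pattern, canned reply). Not taken from any reviewed system.
RULES = [
    (re.compile(r"\b(anxious|worried|nervous)\b", re.I),
     "It sounds like you are feeling anxious. What thought came just before the feeling?"),
    (re.compile(r"\b(sad|down|hopeless)\b", re.I),
     "I'm sorry you are feeling low. Could you name one small activity you enjoyed recently?"),
]

# Hypothetical crisis terms, used only to show where an escalation check would sit.
CRISIS = re.compile(r"\b(suicide|kill myself|self-harm)\b", re.I)

FALLBACK = "Thank you for sharing. Can you tell me more about that?"
ESCALATION = "ESCALATE: connect the user to a human helper or emergency resources."


def respond(message: str) -> str:
    """Return a scripted reply; the crisis check runs before any other rule."""
    if CRISIS.search(message):
        return ESCALATION
    for pattern, reply in RULES:
        if pattern.search(message):
            return reply
    # No rule matched: fixed templates cannot adapt, so a generic prompt is returned.
    return FALLBACK


print(respond("I feel anxious about my exams"))
```

The fallback branch shows the limitation noted for fixed-template systems: any input outside the scripted patterns receives the same generic reply, which is the gap that free-text NLP systems aim to close.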

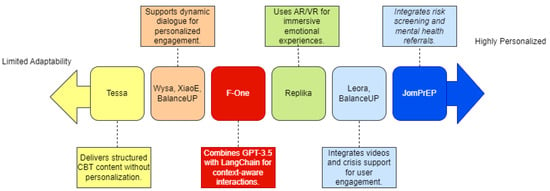

Figure 3 depicts the spectrum of chatbot system development from scripted rule-based systems up to more complex hybrid-integrated platforms.

Figure 3.

Chatbot system design spectrum: from scripted to personalized and context-aware systems (2020–2025).

This figure illustrates the continuum of the reviewed chatbot platforms from low adaptability to high personalization and clinical integration. Rule-based platforms like Tessa provide structured CBT with no adaptive features, while Wysa, XiaoE, and BalanceUP provide dynamic NLP-based dialogues for enhanced personalization. More advanced platforms like F-One integrate generative AI (e.g., GPT-3.5 and LangChain) to facilitate context-sensitive emotional interaction. Replika enables multimodal interaction (AR/VR), and hybrid-integrated platforms like JomPrEP and Leora integrate risk screening, video material, and real-time crisis assistance with the highest level of personalization and system complexity.

3.4. Technical Methodology and Evaluation

The evaluation methods employed in the included studies differed considerably in both methodological rigor and comprehensiveness. Randomized controlled trials (RCTs) and quasi-experimental designs were used to evaluate chatbot interventions such as Elomia, XiaoE, Aury, Tessa, and BalanceUP, with widely used psychological instruments such as the PHQ-9, GAD-7, and GHQ-28 [52,61,63,65,67]. Evaluations of Vitalk (Brazil and Malawi) and STARS (Jordan) using standardized instruments such as the PHQ-9, GAD-7, DASS-21, and RS-14, together with custom behavior scales, also demonstrated consistent improvements in mental health and resilience outcomes through culturally tailored, rule-based chatbot interventions [70,71,72]. In particular, JomPrEP employed a long-term assessment process that included follow-ups after 3, 6, and 9 months. It combined biomedical adherence measurement with app usage monitoring and provided distinct evidence of both long-term user retention and clinical outcomes [6]. The diagnostic performance of ChatGPT-4 in predicting PHQ-9 and GAD-7 scores was evaluated by [55], which reported AUC values of 0.96 and 0.86, respectively, indicating high classification performance in chatbot-based mental health screening. Meanwhile, ref. [74] presented the CES-LCC, a 27-item conceptual framework that evaluates chatbot safety, emotional responsiveness, and memory retention in large language model (LLM) systems. Across the surveyed studies, a wide variety of quantitative measures were used, including BERTScore, ROC-AUC, Cronbach’s alpha, intraclass correlation coefficients (ICC), UNIEVAL, confusion matrices, and visual heatmaps. Frequently used development and testing environments were TensorFlow, Flask, LangChain, Supabase, and Power BI.
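Two of the quantitative measures named above, Cronbach’s alpha and ROC-AUC, can be sketched in a few lines. This is an illustrative sketch using standard textbook definitions, not the computation used in any reviewed study; the function names and the toy data are assumptions.

```python
from statistics import variance


def cronbach_alpha(responses):
    """Internal consistency of a scale.

    responses: list of respondents, each a list of k item scores.
    alpha = k/(k-1) * (1 - sum(item variances) / variance(total scores))
    """
    k = len(responses[0])
    item_vars = [variance([row[j] for row in responses]) for j in range(k)]
    total_var = variance([sum(row) for row in responses])
    return k / (k - 1) * (1 - sum(item_vars) / total_var)


def roc_auc(labels, scores):
    """AUC as the probability that a positive case outranks a negative one.

    Ties between a positive and a negative score count as half a win.
    """
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    wins = sum((p > n) + 0.5 * (p == n) for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


# Toy data (hypothetical): three respondents, three perfectly consistent items.
print(cronbach_alpha([[1, 1, 1], [2, 2, 2], [3, 3, 3]]))
# Toy screening scores (hypothetical): 1 = above clinical cutoff, 0 = below.
print(roc_auc([0, 0, 1, 1], [0.1, 0.4, 0.35, 0.8]))
```

An AUC of 0.5 corresponds to chance-level ranking and 1.0 to perfect separation, which gives context for the values of 0.96 and 0.86 reported by [55].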

3.5. Common Limitations

Despite rapid innovation, several limitations persisted in the research. While LLM-based chatbots suffered from contextual coherence and long-term memory problems, most rule-based systems tended to be inflexible [56,67]. Most evaluations remained short-term, lasting less than eight weeks, which hinders understanding of long-term impact and sustained behavior change [61,63]. Cultural insensitivity was also common: agents developed and tested primarily on Western samples often failed to adapt when responding to non-Western users [67]. Data privacy safeguards and crisis escalation protocols were not adequately standardized across deployments, and data governance, authorization, and emergency handling were not built into most of the studies [33]. Methodologically, most evaluations relied primarily on self-report, with less emphasis on incorporating physiological measurements or passive sensing data, which might have improved diagnostic precision [68]. Some promising tools that emerged in 2024–2025, such as Buoy Health, Limbic, Ada, MoodKit, Kintsugi, Mindspa, Happify, and Headspace, offered technologically advanced features but lacked peer-reviewed evaluation, reflecting the growing gap between innovation and evidence [56,59]. For practical applicability, future work should focus on longitudinal trials, transparent reporting, and cross-cultural validation. This review also recognizes a methodological limitation: inter-rater reliability was not statistically assessed using the Kappa coefficient, even though two independent reviewers performed manual screening. Furthermore, while cultural insensitivity was identified across many included studies, most studies lacked sufficient quantitative indicators or standardized metrics for measuring cultural sensitivity or adaptation levels. 
This absence of consistent quantifiable data limited our ability to conduct a more systematic cross-cultural comparative analysis. Furthermore, this review acknowledges that although difficulties with cultural adaptation were noted, the majority of systems lacked end-user participation in the design process at the development stage. Particularly in LMIC contexts where cultural norms may diverge greatly from Western models, future research is urged to embrace community-engaged frameworks like focus groups, cultural advisory panels, and iterative co-design with actual users.
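The inter-rater reliability check acknowledged as missing in this section could be computed as follows. The sketch assumes that Cohen’s kappa for two raters is the intended statistic; the function name and the screening decisions shown are hypothetical and are not data from this review.

```python
from collections import Counter


def cohens_kappa(rater_a, rater_b):
    """Cohen's kappa for two raters: (p_o - p_e) / (1 - p_e).

    p_o is the observed agreement; p_e is the agreement expected by chance
    from each rater's marginal category frequencies.
    Undefined (division by zero) if both raters use a single identical category.
    """
    n = len(rater_a)
    p_o = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    counts_a, counts_b = Counter(rater_a), Counter(rater_b)
    categories = set(counts_a) | set(counts_b)
    p_e = sum(counts_a[c] * counts_b[c] for c in categories) / (n * n)
    return (p_o - p_e) / (1 - p_e)


# Hypothetical screening decisions for four records.
reviewer_1 = ["include", "include", "exclude", "exclude"]
reviewer_2 = ["include", "exclude", "exclude", "exclude"]
print(cohens_kappa(reviewer_1, reviewer_2))
```

Because kappa corrects for chance agreement, it can be much lower than the raw agreement rate: in the toy example the reviewers agree on three of four records, yet half of that agreement is expected by chance.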

Access is a critical limitation in low- and middle-income nations (LMICs). Few tools addressed literacy or mobile infrastructure inequalities. Low-literacy, rural, or disadvantaged users lack the voice-compatible, low-bandwidth, multilingual applications that they need, emphasizing the urgent need for context-sensitive, inclusive design methods [6,28]. This analysis will support the interpretation of the gaps and the recommendations discussed later.

3.6. Synthesis Table: Chatbot Comparison Across Categories

Table 4 and Table 5 provide a comparative overview of AI-based mental health chatbots (2020–2025) across therapeutic models, technical methods, cultural adaptation, and deployment tools.

Table 4.

Overview of chatbot studies and technologies (Part 1).

Table 5.

Overview of Chatbot Studies and Technologies (Part 2).

Table 2 and Table 3 (Section 2.7) provide a summary of the methodological quality evaluations of all the included research, building on the comparative system-level synthesis shown in Table 4 and Table 5. These studies exhibit a notable level of methodological variability. High-quality randomized controlled studies (e.g., He et al. [52], Sabour et al. [56], Ulrich et al. [65], and Fitzsimmons-Craft et al. [67]) showed strong internal validity and solid clinical outcomes. On the other hand, a number of studies (such as Florindi et al. [51], Omarov et al. [54], and Amchislavskiy et al. [68]) were mainly concerned with technical development or feasibility evaluations and lacked clinical outcome measurements. From tiny exploratory investigations (e.g., Siddals et al. [58], N = 19) to large-scale randomized trials with more than 700 individuals (e.g., Fitzsimmons-Craft et al. [67]), sample sizes varied greatly.

Furthermore, the variety of psychological evaluation instruments used, such as the UCLA Loneliness Scale, GAD-7, and PHQ-9, illustrates the field’s inventiveness as well as its fragmentation. This discrepancy emphasizes how urgently uniform evaluation procedures are needed. The complexity of existing system designs is highlighted by Table 4 and Table 5, which show how AI-based mental health chatbots integrate various treatment techniques, technical architectures, cultural adjustments, and deployment platforms.

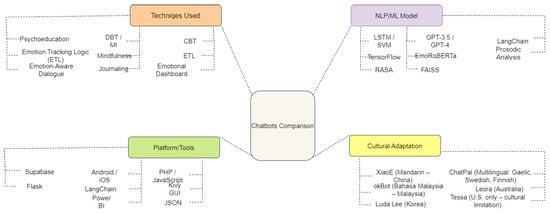

Figure 4 shows a mind-map representation of the comparative chatbot architectures across four important dimensions: platform/tools, NLP/ML models, cultural adaptation, and therapeutic procedures. This helps to graphically synthesize these various system designs and implementation methodologies.

Figure 4.

Comparative mapping of AI-based mental health chatbot systems.

To better understand the design and functionality of AI-based mental health chatbots, a conceptual comparison was conducted. The mind map in Figure 4 visually summarizes key components across four main dimensions: techniques used, platform/tools, NLP/ML models, and cultural adaptation.

Figure 4 illustrates a mind map of the conceptual comparison of AI-powered chatbots for mental health across four main dimensions: the techniques employed, NLP/ML models utilized, deployment platforms/tools, and cultural localization. The figure aggregates attributes from 25 studies and shows the heterogeneity of the therapies employed (e.g., CBT, mindfulness, journaling), AI technology used (e.g., GPT-4, EmoRoBERTa, LSTM), deployment platforms/tools (e.g., Flask, LangChain, Supabase), and localization initiatives (e.g., XiaoE in China, okBot in Malaysia, ChatPal in multilingual regions).

3.7. Keyword Co-Occurrence Network and Thematic Clusters

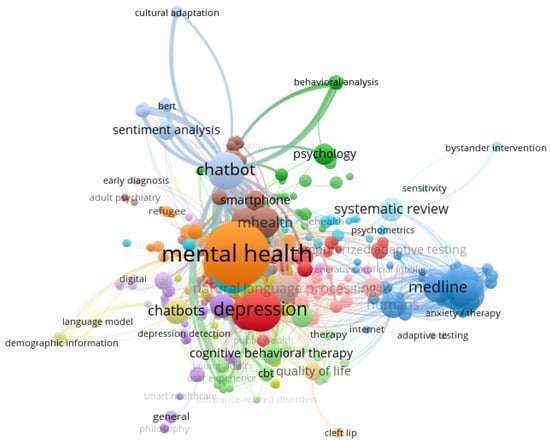

The titles, keywords, and abstracts of the included studies were used to build a keyword co-occurrence network in VOSviewer (1.6.20). Each node in Figure 5 is a term, links indicate co-occurrence strength, and node size signifies frequency. Color-coded clusters represent thematic groups. The green cluster marks mobile-based solutions, connecting “mental health,” “mHealth,” and “mobile phones.” The red cluster, therapeutic conversational agents, links “chatbot,” “CBT,” and “sentiment analysis.” The blue cluster represents AI-enabled clinical tools and links “natural language processing” and “psychotherapy.” Peripheral clusters (such as orange and purple) signify niche areas like ethics, cultural adaptation, and adaptive testing. The network attests to the multidisciplinary character of the field and is consistent with the major topics and gaps identified during the analysis.

Figure 5.

Keyword co-occurrence network Map (2020–2025) showing thematic clusters generated using VOSviewer. Each color represents a distinct cluster of frequently co-occurring keywords.
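The raw counts behind a keyword co-occurrence network of this kind can be sketched as follows. This is a simplified illustration and does not reproduce VOSviewer’s normalization, layout, or clustering; the function name and the example keyword lists are hypothetical.

```python
from collections import Counter
from itertools import combinations


def cooccurrence(docs):
    """Count term frequencies (node sizes) and pair co-occurrences (link weights).

    docs: iterable of keyword lists, one list per study.
    Keywords are lowercased and de-duplicated within each study.
    """
    freq, links = Counter(), Counter()
    for keywords in docs:
        terms = sorted({k.lower() for k in keywords})
        freq.update(terms)
        # Each unordered pair of terms in the same study adds one to its link weight.
        links.update(combinations(terms, 2))
    return freq, links


# Hypothetical keyword lists for three studies.
docs = [
    ["chatbot", "CBT", "mental health"],
    ["chatbot", "CBT"],
    ["mental health", "mHealth"],
]
freq, links = cooccurrence(docs)
print(freq.most_common(2))
print(links[("cbt", "chatbot")])
```

In the resulting network, `freq` would determine node size and `links` the strength of each connection; clusters are then derived from the link structure by the visualization tool.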

3.8. Density Mapping of Author Co-Authorship

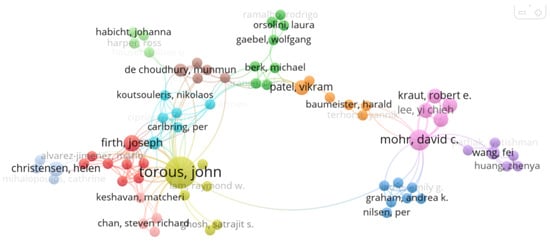

To gain an insight into collaboration patterns in the field, co-authorship network analysis was conducted. Figure 6 illustrates principal clusters of collaborative researchers working on AI-informed mental health studies, showing prominent authors and research team connectivity.

Figure 6.

Network map of author co-authorship (2020–2025).

The collaboration patterns of the authors in the studies under review are depicted in this map, generated with VOSviewer. Node size represents the number of publications, while link thickness represents the level of collaboration. Warmer colors indicate authors with greater engagement or connection. Key contributors known for their research in digital and AI-based mental health include Helen Christensen, David Mohr, Joseph Firth, and John Torous. Authors such as Yi-Chieh Lee, Carmen Moreno, and Vikram Patel, who are involved in research on AI ethics and mental health globally, are also included in the map. With institutional and international clusters reflecting the main themes addressed in this review, the map illustrates a collaborative, multidisciplinary research community.

AI chatbots for mental health are rapidly evolving to be more multimodal, affect-sensitive, and culture-aware. Large language models (LLMs) and generative AI innovations have overwhelming potential for enhancing scalability and customization. This promise, however, must be realized through concomitant progress in long-term clinical validation, cultural adaptability, and robust ethics protections. The development of standardized assessment frameworks, inclusive co-design involving diverse user groups, and adaptive learning algorithms must be the first priorities for research. These measures are required to ensure the clinical effectiveness, global relevance, and practical safety of AI-supported mental health treatments.

4. Thematic Analysis and Discussion

This analysis examined 25 peer-reviewed articles published between 2020 and 2025 to assess the evolving field of AI-powered chatbots for mental health. The reviewed studies show notable progress in the areas of cultural sensitivity, technology development, and therapeutic use. However, a number of long-standing limitations remain, chief among them ethical oversight, long-term clinical evidence, and inclusion. The discussion that follows links back to the compiled evidence and organizes the findings along key dimensions.

The geographic distribution of this study is still strongly biased toward high-income, Western-centric contexts like the U.S., Europe, Australia, and some regions of East Asia, despite the fact that it includes research from a wide range of nations. Notably, there is still a dearth of research from a number of Global South regions, most notably, Africa, the Middle East, and Latin America. To address this constraint, we have included a number of papers from various areas to offer a more comprehensive viewpoint. Vitalk in Brazil and Malawi and the STARS system in Jordan are noteworthy examples of culturally appropriate, cognitive behavioral therapy-based chatbot interventions in underrepresented regions of Latin America, Sub-Saharan Africa, and the Middle East. This helps to achieve more balanced geographic representation and diversity in system design [70,71,72]. We do admit, though, that more work is still required to increase geographic inclusion. To increase the generalizability, equity, and cultural relevance of AI-based chatbot interventions for mental health across a range of international populations, this gap must be filled.

4.1. Symmetry in Therapeutic and Ethical Frameworks

The explicit and implicit symmetry present in the design and execution of the evaluated mental health chatbots is a significant but little-studied aspect of these systems. In this sense, symmetry is culturally balanced adaptation, reciprocal communication patterns, and balanced relationships. Many chatbots use symmetric structures that are modeled after proven therapeutic exchanges (e.g., reciprocal validation and reflective inquiry) and are inspired by cognitive behavioral therapy (CBT).

The suggested symmetric therapeutic framework’s theoretical foundation comes from ideas found in evidence-based psychotherapies, particularly cognitive behavioral therapy (CBT), which hold that balanced, reciprocal interactions between the therapist and the client are essential to the therapeutic process. Symmetry appears in digital interventions through emotional mirroring, regulated conversational flows, and adaptive feedback loops that mimic the workings of therapeutic alliances. For instance, XiaoE uses CBT-informed instruction that mimics two-way therapeutic discourse, organized journaling, and suicide risk assessment. In a similar vein, Luda Lee uses persona-driven empathy to keep the dialog balanced while making people feel heard and encouraged. The incorporation of PHQ-9 and GAD-7 tests into culturally appropriate dialogue paths in okBot is an example of a symmetric design that harmonizes clinical evaluation with user-centered emotional interaction. The essence of symmetry is embodied by these design elements taken together, promoting emotional safety, trust, and culturally aware therapeutic continuity in AI-powered mental health interactions.

Through customized interactions that are sensitive to cultural settings, culturally adaptive chatbots (such as XiaoE, okBot, and Luda Lee) exhibit symmetric design and guarantee equivalent therapeutic efficacy across a range of user demographics. Symmetry in ethics takes the form of a well-balanced strategy that protects user liberty and privacy while optimizing AI benefit. Chatbot interactions that are predictable, stable, and trust-building are enhanced by the use of structural symmetry through mirrored conversational flows and feedback loops. In the end, using AI technologies to provide fair, efficient, and morally sound mental health interventions depends on symmetry—therapeutic, cultural, ethical, and structural.

4.2. Ethical Variations Across Individual Chatbot Systems

Although privacy, autonomy, and fairness are fundamental ethical considerations for AI-powered chatbots for mental health, the evaluated studies showed that these protections were implemented differently in different systems:

XiaoE [52] addresses safety concerns for high-risk users by integrating suicide risk detection and escalation processes.

Although Luda Lee [60] stresses regulated persona engagement and empathy, participants pointed out sporadic over-enthusiasm that could compromise user trust.

Although HelpMe [50] emphasizes emotive dashboards and data visualization, it offers little information about long-term data storage and confidentiality procedures.

Psych2Go [68] uses prosodic speech analysis to identify emotions; however, because voice data are sensitive, privacy concerns are raised.

Though theoretical without empirical support, Leora [62] conceptually integrates ethical design concepts like explainability and clinical oversight.

Limited information about algorithmic bias mitigation, data security rules, and informed consent procedures is provided by a number of other systems (e.g., Saarthi, ChatPal, Elomia).

This variation shows that although ethical awareness is widely accepted, the breadth and depth of implementation vary greatly throughout platforms. To maintain strong governance, more detailed ethical auditing standards are still necessary, especially for disadvantaged and culturally diverse user groups.

4.3. Practical Implications of the Symmetric Therapeutic Framework

We include specific examples from several studies to bolster the proposed symmetric therapeutic framework’s lucidity and empirical foundation. The chatbot Psych2Go, for example, used adaptive GPT-3.5-based responses and prosodic speech analysis to enable emotionally resonant interactions and reflect users’ emotional tone back to them. This embodies therapeutic symmetry through user-centered validation and real-time emotional mirroring [68]. Similarly, Dejalbot used structured journaling and feedback loops in conjunction with CBT approaches, encouraging mutual involvement and developing a digital therapeutic alliance. Relational symmetry was demonstrated in Maples et al. [59], where the Replika chatbot used frequent, sympathetic dialog mirroring to help students develop trust and feel less alone. Although rule-based, Tessa employed reflective inquiry and structured CBT prompts to urge users to react carefully, replicating reciprocal conversations, as demonstrated by Fitzsimmons-Craft et al. [67]. Furthermore, employing emotion-sensitive modules and mood dashboards, Wysa and HelpMe showcased tailored therapeutic feedback that modified answers according to user inputs, thereby reinforcing adaptive feedback loops that are essential to symmetric therapeutic design [50]. Langhammer et al. [63] highlighted how organized, emotionally balanced responses contributed to engagement and psychological benefit, further confirming that teenagers utilizing a CBT-informed chatbot experienced perceived empathy and improved trust. Together, these studies demonstrate how chatbots can improve emotional safety, engagement, and the therapeutic relationship by including essential components of the symmetric framework, such as cultural mirroring, adaptive response, and balanced conversational flow.

4.4. Clinical and Technical Effectiveness

The most common treatment framework among the systems under evaluation was cognitive behavioral therapy (CBT), as shown in Figure 2. Its suitability for digital delivery, specifically through structured interventions like journaling, behavioral activation, and cognitive restructuring, is demonstrated by its use in tools such as Woebot, XiaoE, Wysa, and Aury [52,63,64]. Its widespread use can be attributed to its modularity, especially for short-term support, where these tools show statistically significant declines in depression and anxiety scores on validated measures such as the GAD-7 and PHQ-9 [73]. Even with these encouraging outcomes, the strength of the clinical evidence remains uneven. Most studies drew on relatively small, demographically homogeneous samples, used no control groups, and included only brief follow-up periods. Very few included physiological or passive sensing data to cross-check user outcomes. Nonetheless, a number of studies in this review documented statistically significant improvements, which offer crucial early clinical indicators. He et al. [52], for instance, showed that chatbot-based CBT sessions significantly decreased PHQ-9 scores (mean change: −3.21; p < 0.001). Following four weeks of Luda Lee use, Kim et al. [60] found substantial reductions in social anxiety (Liebowitz Social Anxiety Scale; t(175) = 2.67, p = 0.01) and loneliness (UCLA Loneliness Scale; t(175) = 2.55, p = 0.02). Sabour et al. [56] found statistically significant decreases across the PHQ-9, GAD-7, PANAS, and ISI (all p < 0.001). While Ulrich et al. [65] reported sustained improvement across PHQ-ADS, PHQ-15, PSS-10, and functional impairment scores after 6 months of intervention, Fitzsimmons-Craft et al. [67] identified significant reductions in disordered eating and body dissatisfaction (p < 0.01). 
Although these results point to encouraging short-term efficacy, more extensive research with a range of demographics and meticulous randomized designs is still required to completely prove strong clinical validity.
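To make statistics such as a mean change and a t value with its degrees of freedom more concrete, the sketch below computes them for a paired pre/post design. A paired t-test is assumed here purely for illustration; the reviewed studies may have used other tests, and the scores shown are hypothetical rather than data from any cited study.

```python
from math import sqrt
from statistics import mean, stdev


def paired_t(pre, post):
    """Mean change, t statistic, and degrees of freedom for paired scores.

    t = mean(d) / (sd(d) / sqrt(n)), where d = post - pre for each participant.
    """
    diffs = [b - a for a, b in zip(pre, post)]
    n = len(diffs)
    mean_change = mean(diffs)
    t = mean_change / (stdev(diffs) / sqrt(n))
    return mean_change, t, n - 1


# Hypothetical PHQ-9 totals for four participants before and after an intervention.
pre_scores = [12, 15, 10, 14]
post_scores = [9, 11, 8, 10]
mean_change, t, df = paired_t(pre_scores, post_scores)
print(mean_change, round(t, 2), df)
```

A negative mean change indicates lower symptom scores after the intervention. The p-value would then be read from the t distribution with `df` degrees of freedom, which requires a statistics library or table and is omitted here.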

In addition, comparisons against conventional therapeutic baselines are still the exception, which prevents real-world clinical validity from being properly evaluated. On the technical side, however, the results are promising. For example, ref. [53] achieved a classification accuracy of more than 94% with a hybrid model combining LSTM and SVM. Meanwhile, systems like F-One demonstrated the strength of integrating GPT-3.5 and EmoRoBERTa to drive emotionally aware dialogue [51]. Such developments are technically striking, but they invite an important reflection: without clinical grounding, high-performing models can become detached from treatment aims. Our perspective is that technical sophistication may be taking the place of effective mental health outcomes. Strong longitudinal trials and increased incorporation of user feedback and real-world behavior data are necessary to close this gap.

4.5. Cultural Adaptation and Local Relevance

Culture is central to how individuals understand, experience, and manage mental health issues. This is evident in systems such as XiaoE (China) [52], okBot (Malaysia) [27], and Luda Lee (South Korea) [60]. These systems showed culturally attuned adjustments, tailoring wording, affect, and therapy framing to match what regional users are familiar with. As a result, they became more interactive and meaningful within those cultures, indicating that cultural awareness is a foundation for effective therapy. In contrast, Tessa’s Western-oriented approach was less effective culturally. While its standardized CBT implementation was scalable and affordable, its reliance on rule-based templates restricted it from connecting effectively across different cultures, especially within LMICs, which are characterized by distinct spiritual perspectives, linguistic subtleties, and social stigma [67]. STARS in Jordan and Vitalk’s localization in Brazil and Malawi are examples of culturally sensitive CBT chatbots created using context-specific language and framing, resulting in increased engagement and clinical relevance [70,71,72]. This review confirms that cultural adaptation is a design philosophy and not merely a technical issue. Few systems, however, were co-designed with local actors, e.g., clinicians or community members. Participatory design and context-specific trials must be prioritized for inclusive and trusted developments.

However, while cultural localization was often described in the reviewed studies, the specific methods used to achieve this—especially through user participation—remain poorly articulated. For example, although systems like Luda Lee and XiaoE reported culturally appropriate adaptations in language and emotional tone, few studies explained whether these were developed via focus groups, user testing, or participatory co-design. Kim et al. [60] provided partial insight by analyzing open-ended user reflections, but this was post-deployment rather than at the design stage. Most adaptations appeared to be designer-driven, assuming cultural expectations rather than empirically verifying them with users. This lack of direct user involvement risks overlooking key cultural nuances that affect therapeutic engagement and trust. To advance this area, future development of AI mental health chatbots should adopt participatory design methodologies that incorporate user feedback from target communities at multiple stages—particularly in low- and middle-income regions where sociocultural norms may diverge significantly from Western models.

Expansion on User Cultural Adaptation Participation

Most of the examined studies offer little methodological transparency about how cultural features were identified, verified, or incorporated during development, even though some systems culturally adapted their content. Only a small number of studies, like Kim et al. [60], included user experience analysis following chatbot deployment, offering insightful but retrospective information on cultural fit. Instead of involving target users in the early stages of participatory design, the cultural adaptation process for the majority of systems seems to rely mainly on developer-driven assumptions about local conventions. The authenticity and breadth of cultural sensitivity may be constrained by the lack of formal co-design procedures. For AI-powered mental health interventions to achieve more meaningful, equitable, and successful cultural customization, future research should give priority to community involvement frameworks such as focus groups, cultural advisory panels, and iterative prototyping with actual users.

4.6. Expanding the Geographical Scope: Insights from the Global South

Recent research has shown that integrating digital technologies into mental health care continues to offer potential solutions. Ref. [58] conducted a thorough assessment of AI-based chatbots in a systematic review, emphasizing their potential to provide scalable mental health interventions in a variety of domains, such as cognitive behavioral therapy (CBT), emotional regulation, and personalized user engagement. Although chatbots show promise in reducing symptoms, the review highlighted issues with the sustainability of long-term engagement and raised significant ethical issues, especially those pertaining to privacy, trust, and possible over-reliance on unsupervised AI systems.

In a similar vein, ref. [75], which concentrated on digital mental health interventions in the United Arab Emirates, found significant potential for leveraging machine learning, virtual reality, telehealth platforms, and mobile apps to improve mental health systems. As this comprehensive analysis made clear, such technologies can improve clinical results and increase access, especially in underserved areas. However, issues with data privacy, digital exclusion, the lack of uniform ethical frameworks, and inequalities in digital literacy, especially among disadvantaged populations, remain. These results are in line with larger global concerns about the equitable application of digital mental health solutions.

Vitalk, an AI-powered chatbot for mental health that was implemented in Brazil, was evaluated in a real-world setting by [70]. Levels of stress, anxiety, and sadness among more than 3600 users were shown to have decreased significantly. The chatbot used natural language processing, mood tracking, gamification, interactive conversational flows, and cognitive behavioral therapy to customize the user experience. Notably, stronger clinical improvements were linked to higher levels of user engagement, showing that well-designed conversational agents can produce both high engagement and beneficial results, especially when culturally tailored to local populations.

Together, these studies show that AI-powered mental health solutions, such as chatbots and online platforms, have a great deal of promise to increase access to care, customize treatment, and produce quantifiable clinical outcomes. Nonetheless, there are still enduring issues with data protection, cultural sensitivity, inclusiveness for a variety of user groups, and ethical design. To guarantee these technologies’ safe and fair inclusion into international mental health care systems, further research is needed to improve them, evaluate their long-term efficacy, and create thorough regulatory frameworks.

4.7. Importance of Active User Participation in AI Chatbot Development

According to this review, one of the main factors influencing the efficacy and engagement results of AI-powered chatbots for mental health is active user interaction. User-driven interaction (answering prompts, establishing objectives, finishing tasks, and keeping regular contact with the chatbot) emerged as a key mechanism for therapeutic success across several studies in our synthesis. In ref. [70], for instance, users who responded to the chatbot Vitalk on a regular basis showed a significant decrease in stress, anxiety, and sadness, with daily engagement averaging more than eight responses. Similarly, ref. [61] reinforced users’ active engagement in managing mental health by implementing a goal-setting and self-monitoring tool that encouraged users to create personal targets. CBT-based journaling, which enables users to actively reflect on their thoughts, was introduced by [63]; higher journaling frequency was associated with better symptom relief. Structured CBT modules were incorporated by [54], where engagement data were linked to improved emotional outcomes and user input directed the treatment flow.

Additionally, ref. [56] highlighted the use of behavioral logs and emotion-tracking, enabling users to control their emotional states through regular engagement. A GPT-enabled chatbot in [59] showed increased engagement when it provided sympathetic, back-and-forth dialogue, urging users to express themselves more freely. According to [6], users who attended three or more sessions per week saw statistically significant changes in their mood. Furthermore, ref. [51] discovered that participants evaluated a reflective diary feature, which is intended to foster self-awareness, as the most beneficial element, demonstrating a high level of user involvement in their journey toward mental wellness. Notably, ref. [27] improved cultural alignment and promoted more organic interaction among Malaysian users by localizing their chatbot in Bahasa Malaysia. Both refs. [55,66] showed that relational rapport and conversational style had a substantial impact on user engagement, particularly for those looking for sympathetic digital support.

Together, these results show that active participation is an essential component of successful digital mental health therapies, not just a feature. User engagement, whether via journaling, structured responses, or emotional check-ins, is essential to achieving therapeutic benefits. As such, future chatbot systems should give priority to features that facilitate and promote ongoing user involvement.
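To make the notion of regular engagement concrete, the following minimal Python sketch counts chatbot sessions per ISO calendar week and flags the weeks that reach a chosen threshold. The default of three sessions per week mirrors the figure reported for [6] above. The function names and the input format (one date per session) are assumptions made for illustration and are not taken from any of the reviewed systems.

```python
from collections import Counter
from datetime import date


def sessions_per_week(session_dates):
    """Count sessions per ISO (year, week); each date entry is one session."""
    counts = Counter()
    for d in session_dates:
        iso = d.isocalendar()          # (ISO year, ISO week, ISO weekday)
        counts[(iso[0], iso[1])] += 1
    return dict(counts)


def weeks_meeting_threshold(session_dates, min_per_week=3):
    """Map each (year, week) to True if it has at least min_per_week sessions."""
    return {
        week: n >= min_per_week
        for week, n in sessions_per_week(session_dates).items()
    }


# Hypothetical usage log: three sessions in one week, one in the next.
example_log = [
    date(2024, 1, 1),
    date(2024, 1, 3),
    date(2024, 1, 5),
    date(2024, 1, 8),
]
weekly_flags = weeks_meeting_threshold(example_log)
```

A per-week flag of this kind could then be related to outcome measures, although the appropriate threshold is likely to differ between interventions and populations.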

4.8. Accessibility and Inclusivity

Although AI holds great promise for democratizing mental health, the review identifies a significant accessibility gap. The majority of chatbots implicitly assume that their users have reliable internet, smartphone coverage, and online expertise. This presumption does not hold in rural or resource-poor places and excludes the most vulnerable audiences. Promising technologies like JomPrEP create a replicable hybrid care paradigm by bridging mobile risk screening with in-person referrals, as demonstrated by ref. [6]. As evidenced by [68], Psych2Go ensures functionality and privacy through prosodic emotion recognition in low-bandwidth voice-based systems. Developing for the “digitally invisible” is, in our view, both technically and ethically crucial. Unless future chatbots are voice-based, lightweight, and multilingual, they might widen health disparities rather than alleviate them.

4.9. Ethical Implications

Ethical monitoring remained a persistent blind spot. For sensitive topics including depression, trauma, and suicidal ideation, few systems had well-specified data policies, consent procedures, or emergency escalation procedures (see also Section 5.3 on Standardization and Transparency). Compounding this, regulatory systems in LMICs often remain underdeveloped. Positive instances exist: ref. [33] proposed ethical governance frameworks and explainable AI (XAI) architectures. However, instances like these are the exception, not the rule. Most implementations used ambiguous privacy notices with few or no operational protections or audits. A major concern is that innovation is outpacing accountability. Without enforceable norms, there is an increasing risk of abuse and unscrupulous use of data, at least in less regulated settings. Accordingly, next-generation chatbots need to integrate user-controllable privacy controls, legally enforceable protections, and auditable decision flows. Together, these insights directly respond to research concerns, specifically those related to ethical responsibility, cultural sensitivity, and clinical efficacy. The subsequent section offers strategic recommendations to fill the gaps identified and to advance ethical design for AI chatbots for mental health based on these findings. It is important to emphasize that, although AI-based chatbots offer scalable support, they must never replace human therapists; rather, they can work alongside them to provide mental health education and emotional and early screening, specifically in areas where access to expert support is poor. A clear distinction between clinical intervention and automated assistance is still needed to address patient safety concerns, preserve therapeutic integrity, and maintain public trust.
Furthermore, we recognize that few empirical case studies explicitly assess algorithmic non-interpretability, privacy breaches, or crisis responses in actual implementations. To further inform ethical design requirements, future research should undertake thorough evaluations of such real-world settings. Additionally, certain examples from the analyzed studies point to possible dangers in practice. For instance, because of their continuous audio recording, systems that use prosodic voice data for emotion recognition, such as Psych2Go, naturally raise the risk of unintentional leakage of sensitive data. Similarly, therapeutic decision-making processes become opaque when non-transparent generative models, such as GPT-based architectures (e.g., F-One), are used, which makes patient trust and physician oversight more difficult. Furthermore, although XiaoE incorporates suicide risk escalation, little is known about how well it performs operationally when resolving crises in real time. These illustrations highlight the necessity for a more thorough empirical assessment of ethical safeguards in practical settings.

4.10. Data Security and Transparency in LMICs

Due to fragmented governance structures, weak legal protections, and limited enforcement capabilities, data security and transparency issues are especially pressing in LMICs. The HelpMe chatbot implemented in Malaysia lacked strong procedures for long-term data storage and confidentiality, as noted by [50]. This was indicative of larger issues in guaranteeing user confidence in digital mental health treatments. In a similar vein, ref. [27] highlighted that their Bahasa Malaysia chatbot lacked explicit definitions of informed consent procedures and data encryption standards, possibly exposing sensitive user data. Further privacy concerns are raised by Psych2Go [68], which uses prosodic speech analysis and records emotionally charged voice data without providing information about safeguards for sharing or storing it. These worries are heightened in LMICs, where a lack of proper data privacy laws may allow unauthorized parties to access extremely sensitive mental health data or keep it overseas. Additionally, users frequently do not know how their data are processed, or whether they are shared with other parties or used to train AI systems. In addition to undermining user sovereignty, this lack of transparency raises the possibility of stigma, discrimination, and improper use of health information. Thus, it is imperative that context-sensitive data governance mechanisms designed for LMICs be put into place. These must include procedures for independent oversight, transparent data regulations, and enforceable ethical norms in order to safeguard user rights and promote long-term confidence in AI-based mental health treatments.

5. Future Research Directions

This section synthesizes future directions for research and development based on the thematic findings and gaps identified in this systematic review. Although AI-based mental health chatbots have shown technological advancement, cultural promise, and prospective clinical utility, the evidence also indicates limitations in ethical governance, equity, and long-term validation. This section therefore delineates strategic avenues to enhance the efficacy, safety, and international use of these products.

5.1. Contextual and Culturally Grounded Design

The research highlights the importance of culturally sensitive chatbot systems that go beyond mere translation. As seen in the reviewed studies (e.g., XiaoE, okBot, Luda Lee), systems developed in line with local linguistic, affective, and cognitive norms achieve higher user engagement and are more contextually sensitive. According to refs. [28,76], future chatbots must be co-designed with local clinicians, carers, and end-users so that they fit local idioms, stigma perceptions, and therapy expectations. Cultural adaptability must be built into the design from the outset rather than added after deployment: both this SLR and the wider literature treat cultural sensitivity as a methodological necessity, not a technical afterthought.

5.2. Integration Within Public Health Ecosystems

One of the most viable paths is hybrid integration into existing health structures. As JomPrEP [6] illustrates, risk screening and chatbot triage could be combined with referral dashboards and real-time clinic support. This makes it possible to achieve sustainability, enable clinical supervision, and bridge the gap between health infrastructure and innovation-driven solutions. Hence, the review proposes prioritizing systems that interoperate with electronic health records and public health programs, especially within LMICs, where implementation relies on system-based trust. As pointed out by [77], recognizing and addressing the various factors governing user acceptance is crucial for effective adoption and continued use of electronic health records in various healthcare settings. Similarly, recent evidence by [78] shows the utility of regularly tracking blood parameters (e.g., hemoglobin during pregnancy) to allow early risk prediction and enhanced health outcomes. Incorporating such clinical biomarkers into online platforms could further increase the accuracy and efficiency of AI-based interventions in both maternal and general health.

5.3. Standardization, Transparency, and Long-Term

One common failing in the research has been the lack of long-term follow-up and of standardized outcome measures. The review calls for long-term RCTs, standardized measures (e.g., GAD-7, PHQ-9), and the use of Explainable AI (XAI) systems to provide interpretability. Although some high-scoring systems, such as F-One and ChatGPT-based systems, produced superior technical performance, their clinical utility has not been rigorously validated. That challenge is by no means specific to mental health chatbots; in other clinical applications of AI in medicine (e.g., CNN-based classification of brain tumors), models such as ResNet50 have achieved outstanding technical accuracy but need rigorous clinical validation prior to general deployment [79]. Similarly, the systems reported in [80] provide real-time, scalable mental health detection, particularly in digital media, and achieve high accuracy in identifying depression from emotionally expressive as well as informal social media posts. Additionally, many established chatbot systems (e.g., Replika, Kindroid, Psych2Go) have not been critically evaluated in studies meeting the criteria of this systematic review, which points to a large gap in scholarly validation.
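As an illustration of what standardized measurement could look like in a chatbot evaluation pipeline, the following minimal Python sketch scores PHQ-9 and GAD-7 responses and maps the totals to the commonly used severity bands (PHQ-9: 0 to 4 minimal, 5 to 9 mild, 10 to 14 moderate, 15 to 19 moderately severe, 20 to 27 severe; GAD-7: 0 to 4 minimal, 5 to 9 mild, 10 to 14 moderate, 15 to 21 severe). The function names and data layout are assumptions for illustration only; none of the reviewed systems is known to use this code, and any clinical use would require validated instruments and professional oversight.

```python
# Severity bands as (lowest total, highest total, label).
PHQ9_BANDS = [
    (0, 4, "minimal"),
    (5, 9, "mild"),
    (10, 14, "moderate"),
    (15, 19, "moderately severe"),
    (20, 27, "severe"),
]
GAD7_BANDS = [
    (0, 4, "minimal"),
    (5, 9, "mild"),
    (10, 14, "moderate"),
    (15, 21, "severe"),
]


def score_questionnaire(item_scores, n_items, bands):
    """Sum item scores (each 0 to 3) and return (total, severity label)."""
    if len(item_scores) != n_items:
        raise ValueError(f"expected {n_items} items, got {len(item_scores)}")
    if any(s not in (0, 1, 2, 3) for s in item_scores):
        raise ValueError("each item must be scored 0, 1, 2, or 3")
    total = sum(item_scores)
    for low, high, label in bands:
        if low <= total <= high:
            return total, label
    raise AssertionError("total fell outside all bands")


def score_phq9(item_scores):
    return score_questionnaire(item_scores, 9, PHQ9_BANDS)


def score_gad7(item_scores):
    return score_questionnaire(item_scores, 7, GAD7_BANDS)


def change_from_baseline(baseline_total, follow_up_total):
    """Negative values indicate a reduction in symptom score."""
    return follow_up_total - baseline_total
```

Recording the same instruments at baseline and at each follow-up point would allow results from different chatbot studies to be compared directly, which is the purpose of the standardization called for above.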

5.4. Ethical Leadership and Regulatory Protections

As discussed in Section 4.9, most chatbot systems had incomplete ethical frameworks, specifically regarding user consent, data privacy, and crisis response. Few models, e.g., ref. [33], had features for ethical governance or explainability. As mental health data are sensitive, this review proposes regulatory measures enforcing opt-in consent, data minimization, regular audits, and adherence to international guidelines like those suggested by WHO and WPA. Without legally enforceable ethics, large-scale deployment has the potential to erode user trust and safety, particularly within low-governance regimes. In addition to setting regulatory rules, future research should also objectively evaluate the practical efficacy of these ethical principles in safeguarding user data, guaranteeing interpretability, and handling critical situations during chatbot deployment.
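The combination of opt-in consent, data minimization, and auditability proposed above can be sketched in a few lines of Python. This is a simplified illustration under stated assumptions: the class names, the `ALLOWED_FIELDS` whitelist, and the purpose labels are hypothetical and do not describe any reviewed system or any specific regulation.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone

# Hypothetical whitelist: only these fields are ever stored (data minimization).
ALLOWED_FIELDS = {"session_id", "timestamp", "mood_score"}


@dataclass
class ConsentRecord:
    """Opt-in consent: no purpose is permitted until the user grants it."""
    user_id: str
    purposes: set = field(default_factory=set)

    def grant(self, purpose):
        self.purposes.add(purpose)

    def revoke(self, purpose):
        self.purposes.discard(purpose)

    def allows(self, purpose):
        return purpose in self.purposes


@dataclass
class AuditLog:
    """Append-only record of every storage decision, for later audits."""
    entries: list = field(default_factory=list)

    def record(self, user_id, purpose, allowed):
        self.entries.append({
            "user_id": user_id,
            "purpose": purpose,
            "allowed": allowed,
            "at": datetime.now(timezone.utc).isoformat(),
        })


def store_event(event, consent, purpose, audit_log):
    """Return the minimized event if consent covers the purpose, else None."""
    allowed = consent.allows(purpose)
    audit_log.record(consent.user_id, purpose, allowed)
    if not allowed:
        return None
    return {k: v for k, v in event.items() if k in ALLOWED_FIELDS}
```

In this sketch, free-text messages and other fields outside the whitelist are dropped before storage, and every decision is logged whether or not it was permitted, so that an independent auditor could check that stored data match the consent given.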

5.5. Investment in Digital Access and Infrastructure