Enhancing Security in Augmented Reality Through Hash-Based Data Hiding and Hierarchical Authentication Techniques

Abstract

1. Introduction

2. Related Work

2.1. Hash-Based Data Hiding in Multimedia Security

2.2. Traditional Digital Signature and Data-Hiding Techniques for Content Authentication

2.3. Secure Authentication in Augmented Reality (AR) Content

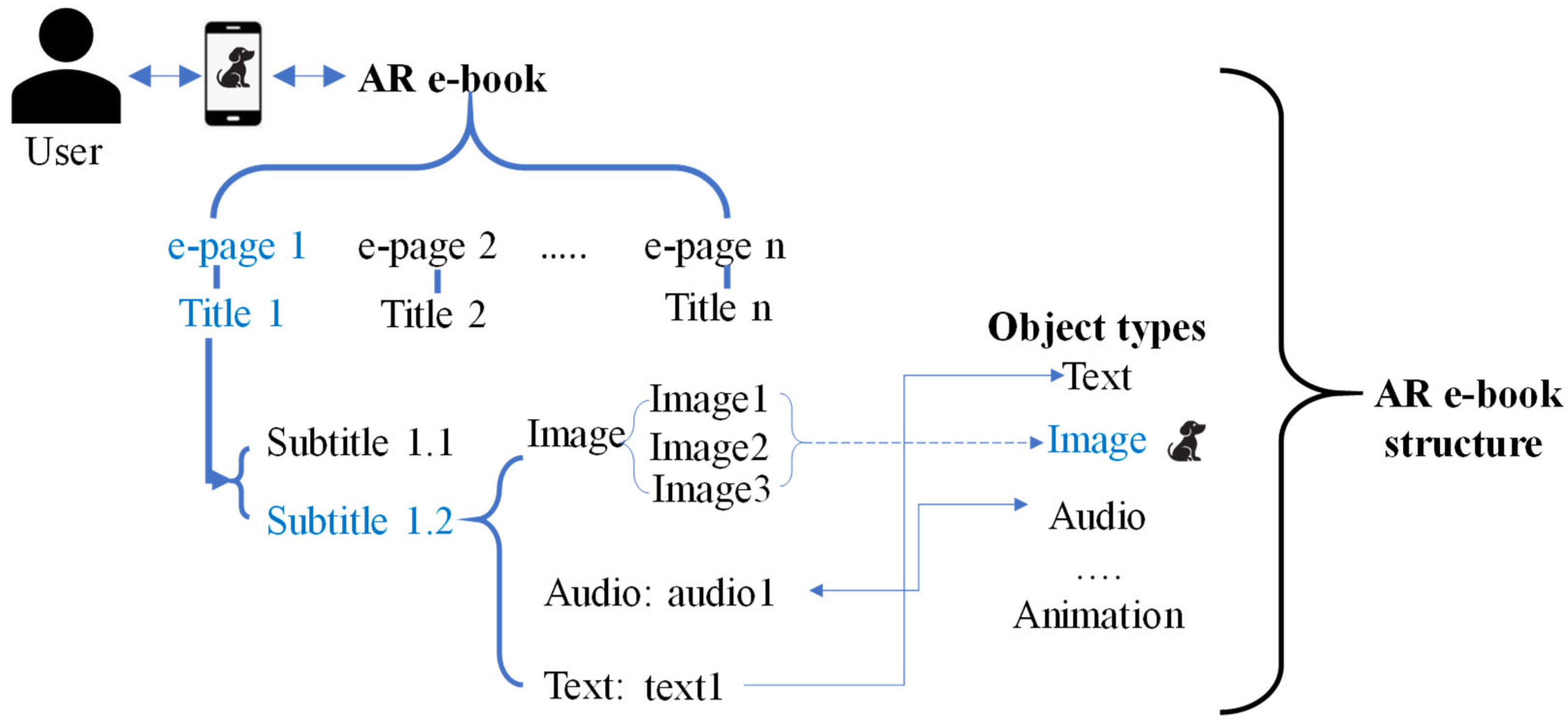

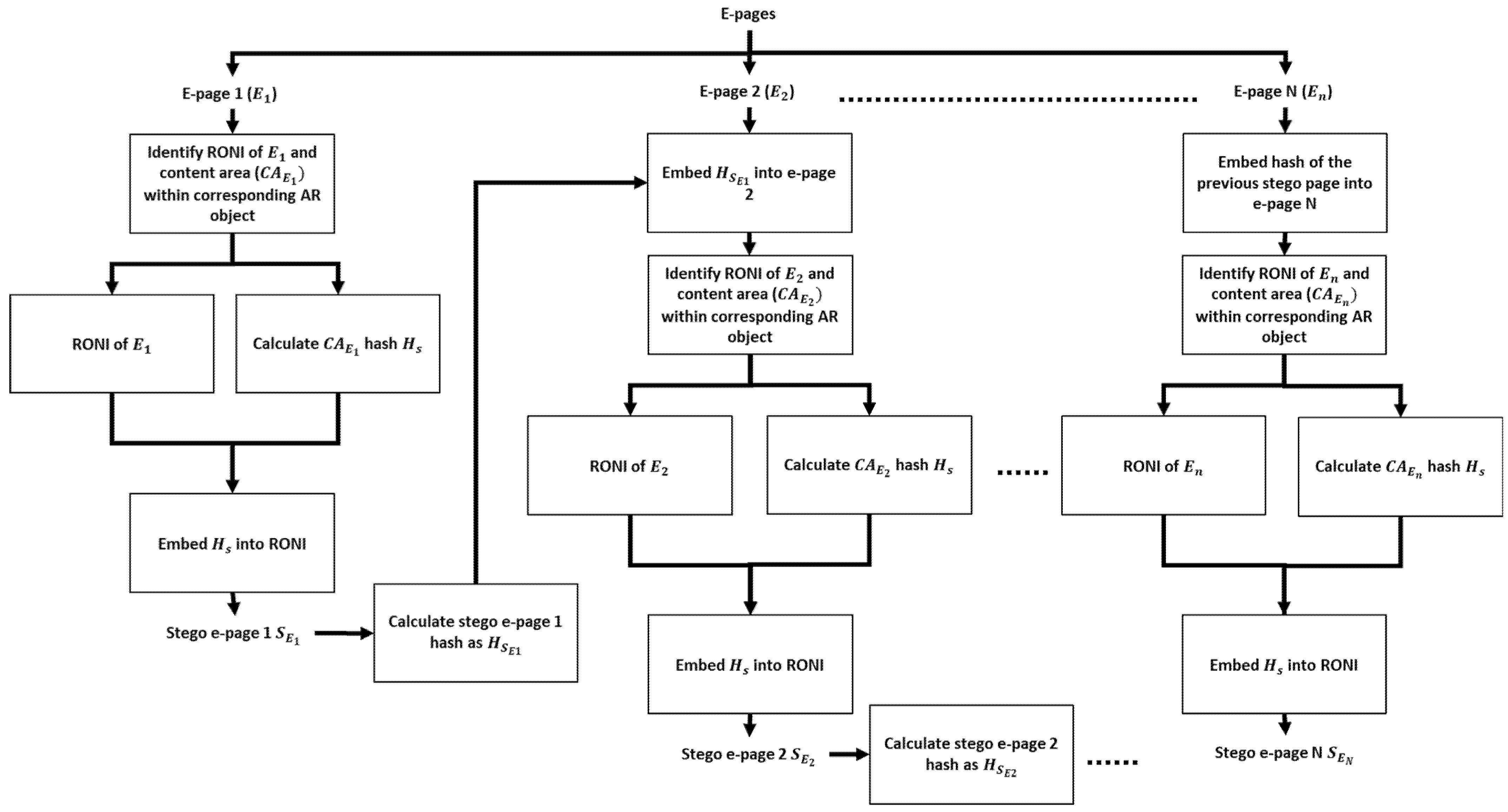

3. Proposed Method

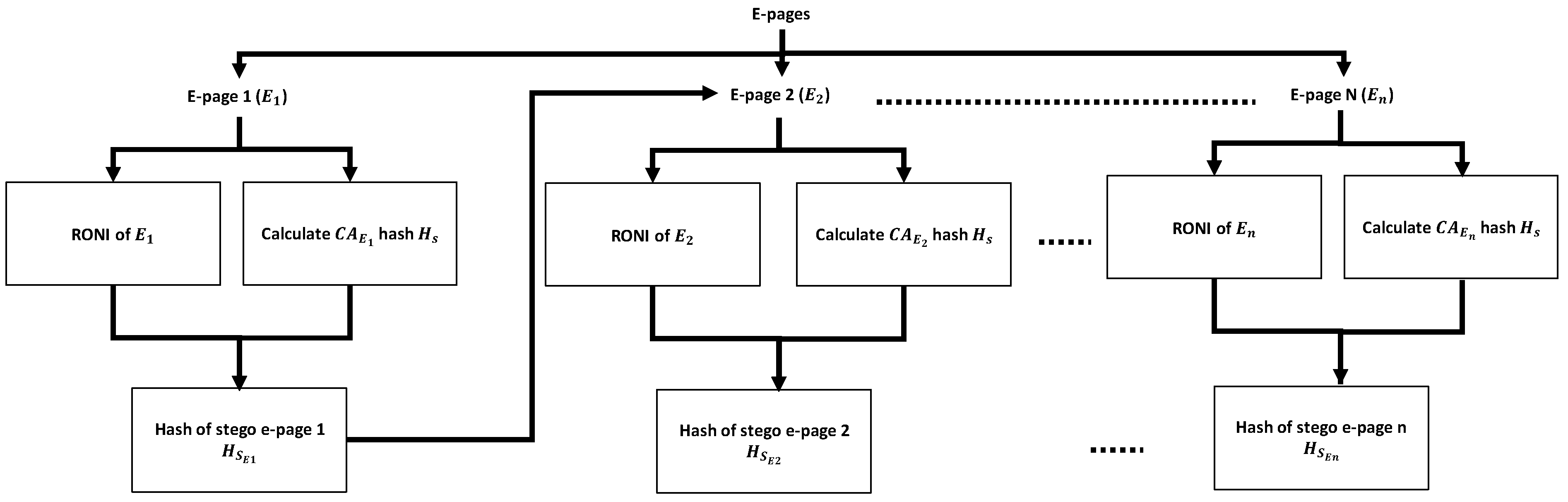

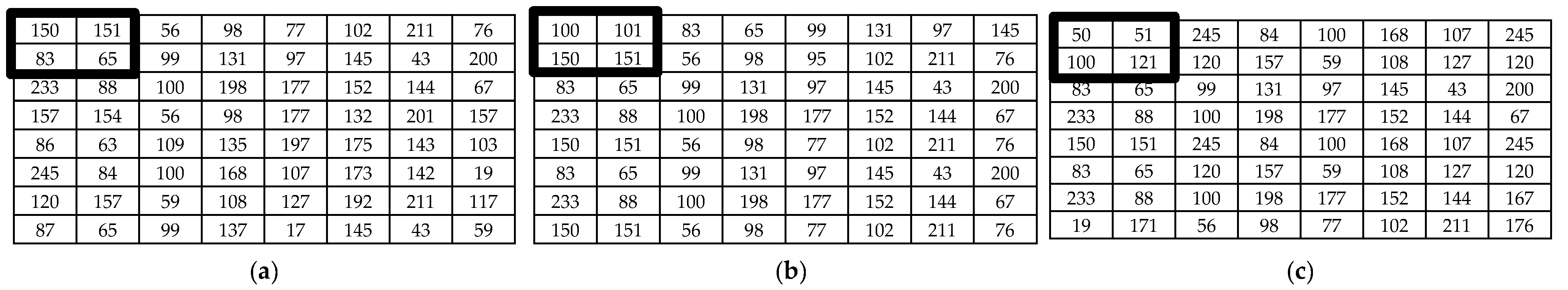

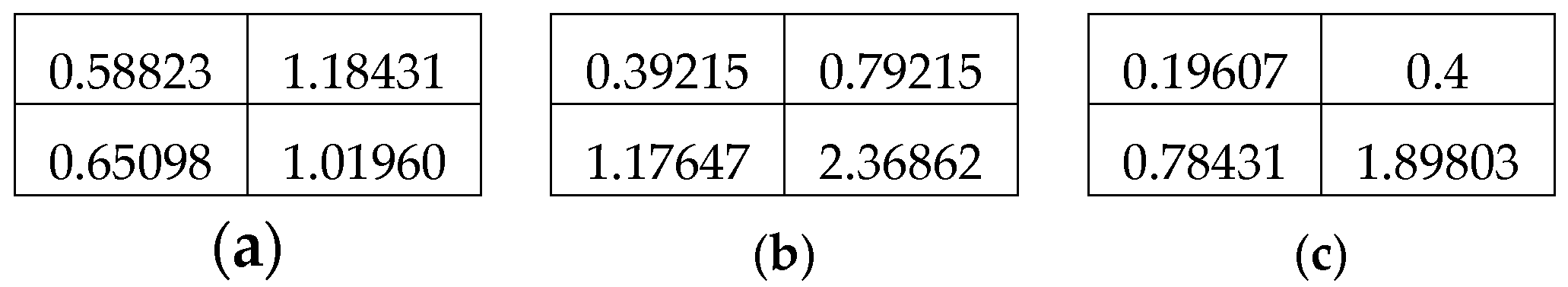

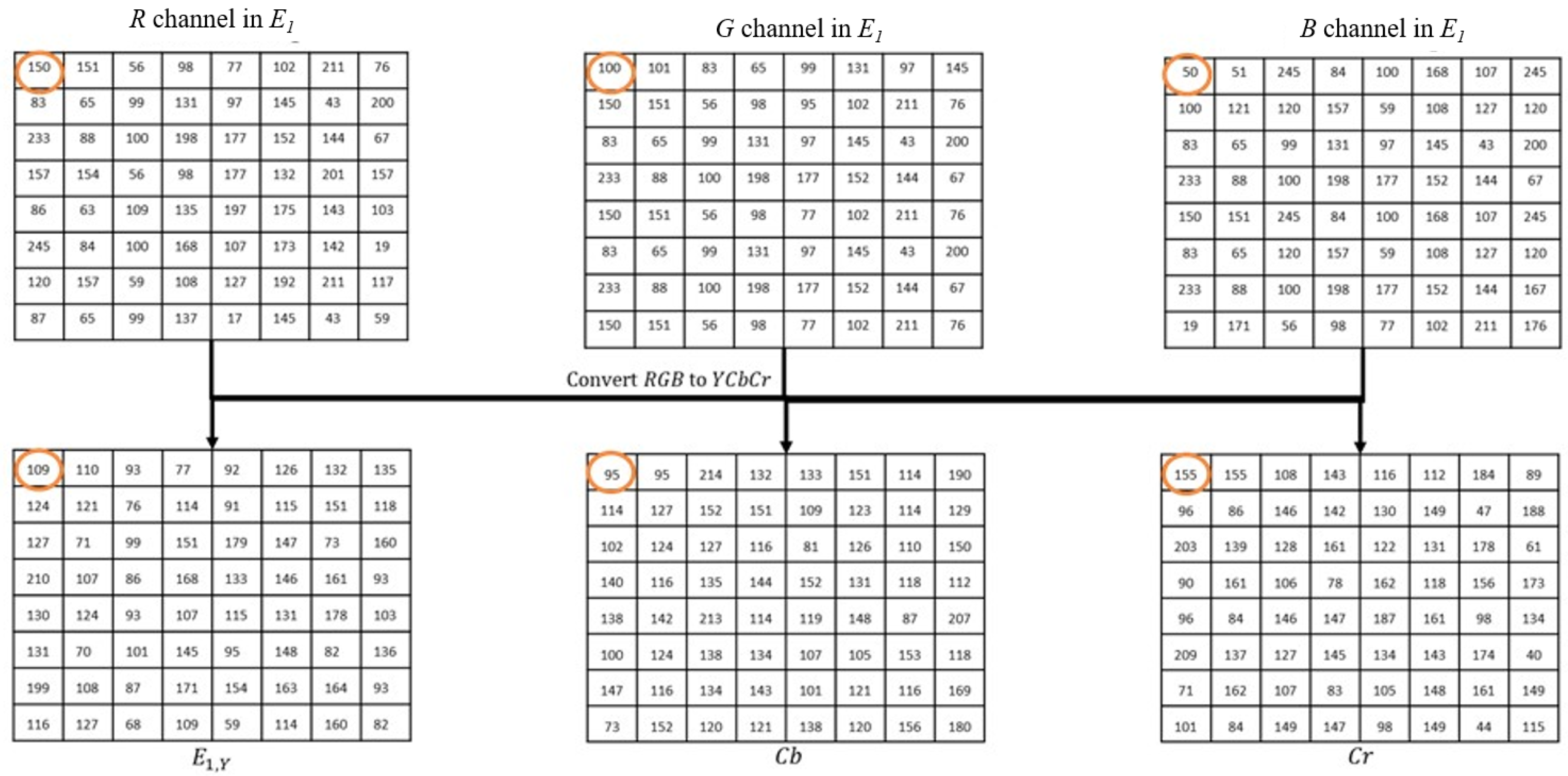

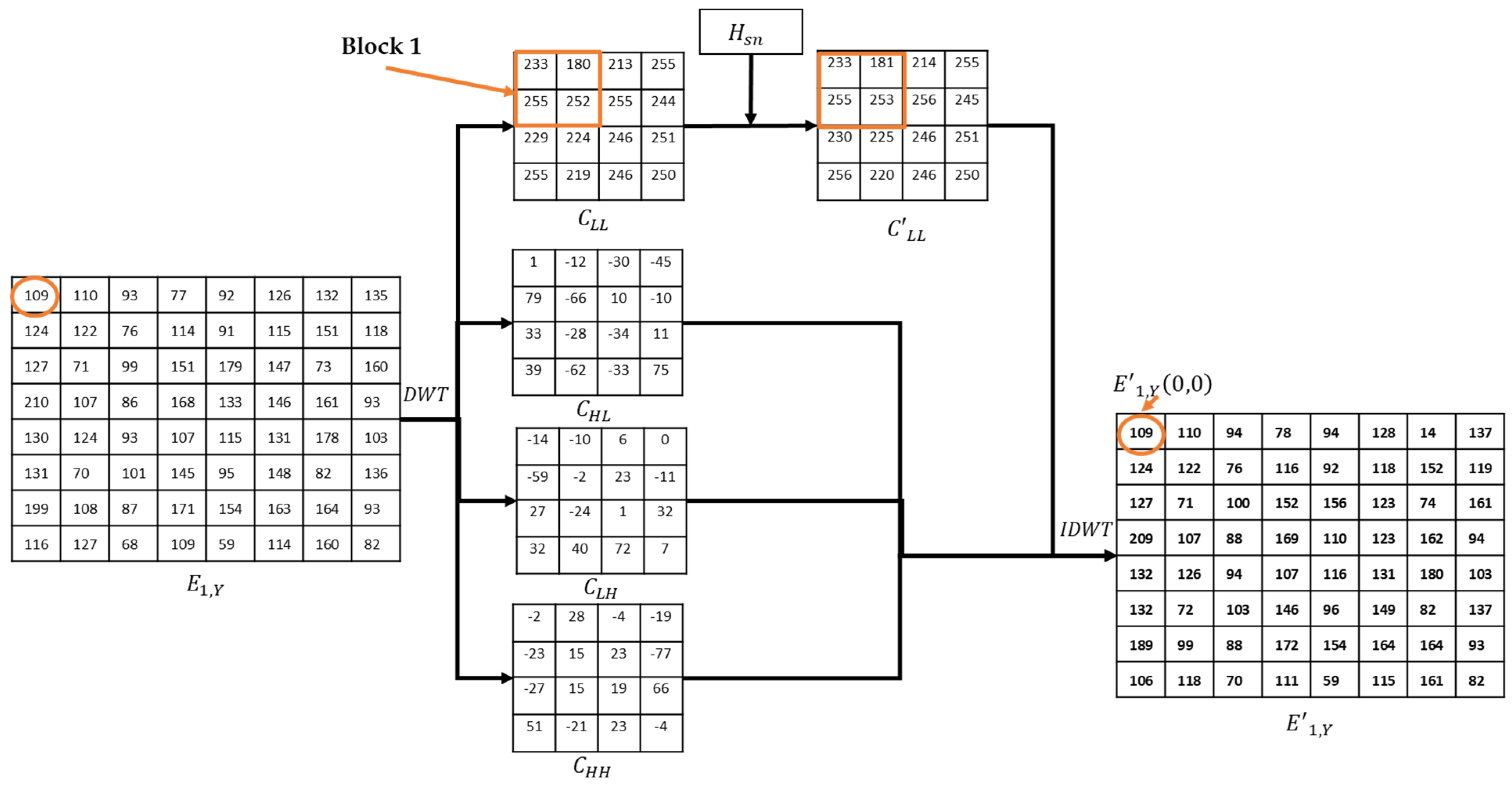

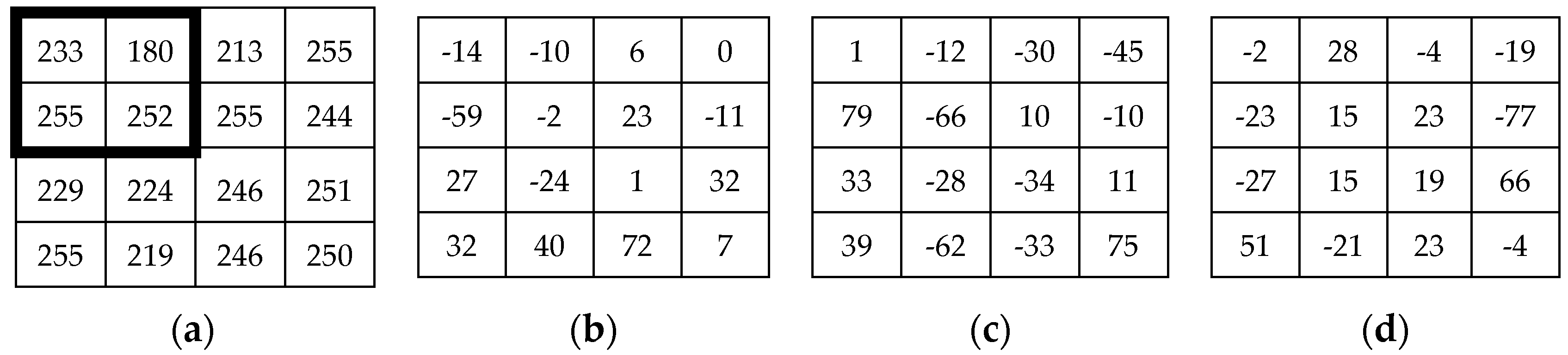

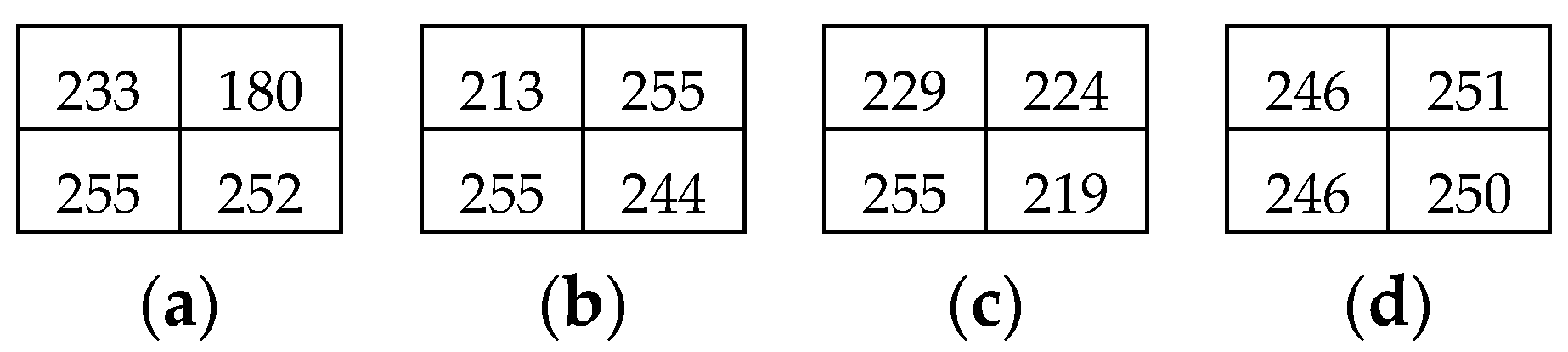

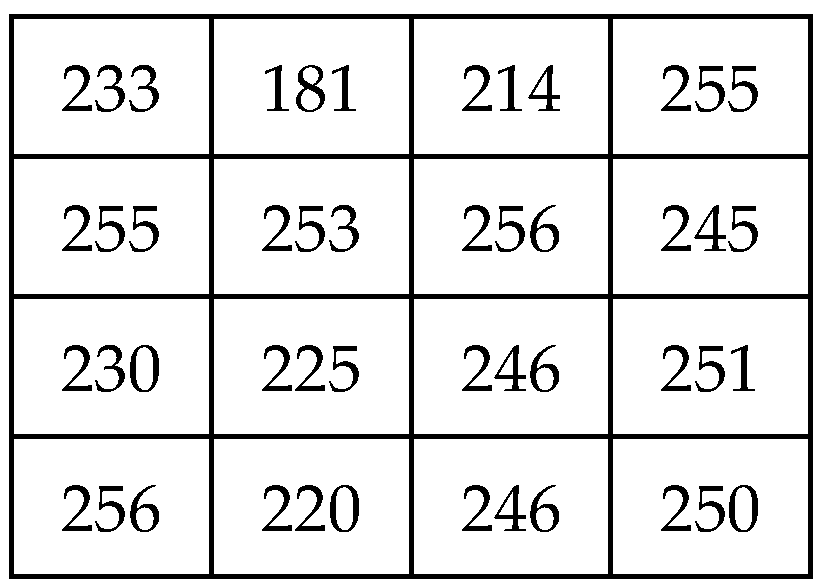

3.1. Hash-Based Data-Embedding Phase

| Algorithm 1: Key-based hash algorithm. |

| Algorithm 2: Hash-based data embedding. |

3.2. Hash-Based Data Extraction and Authentication

3.2.1. Hash-Based Data Extraction

3.2.2. Multi-Level Data Authentication

4. Experimental Results and Discussion

4.1. Image Quality

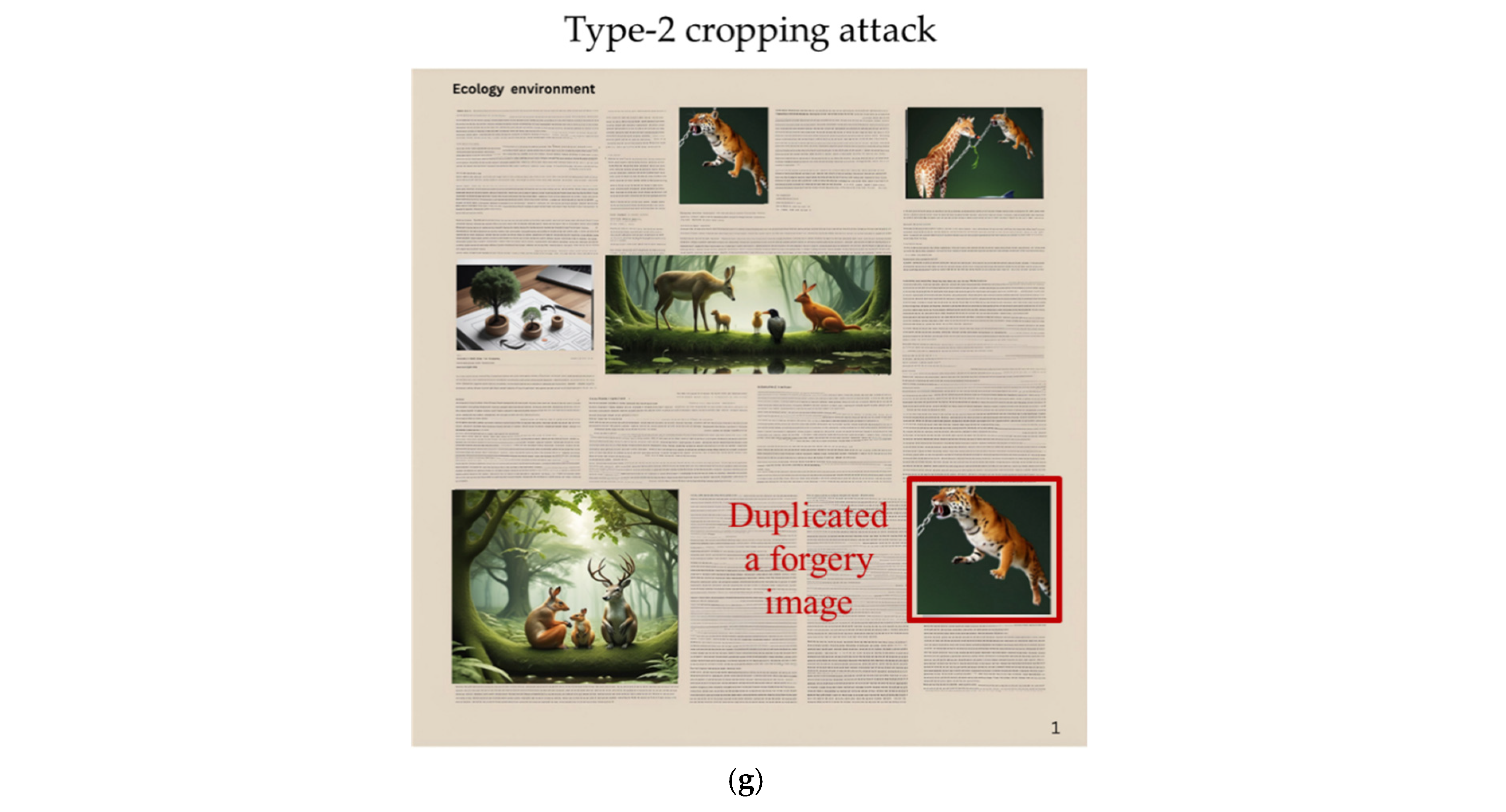

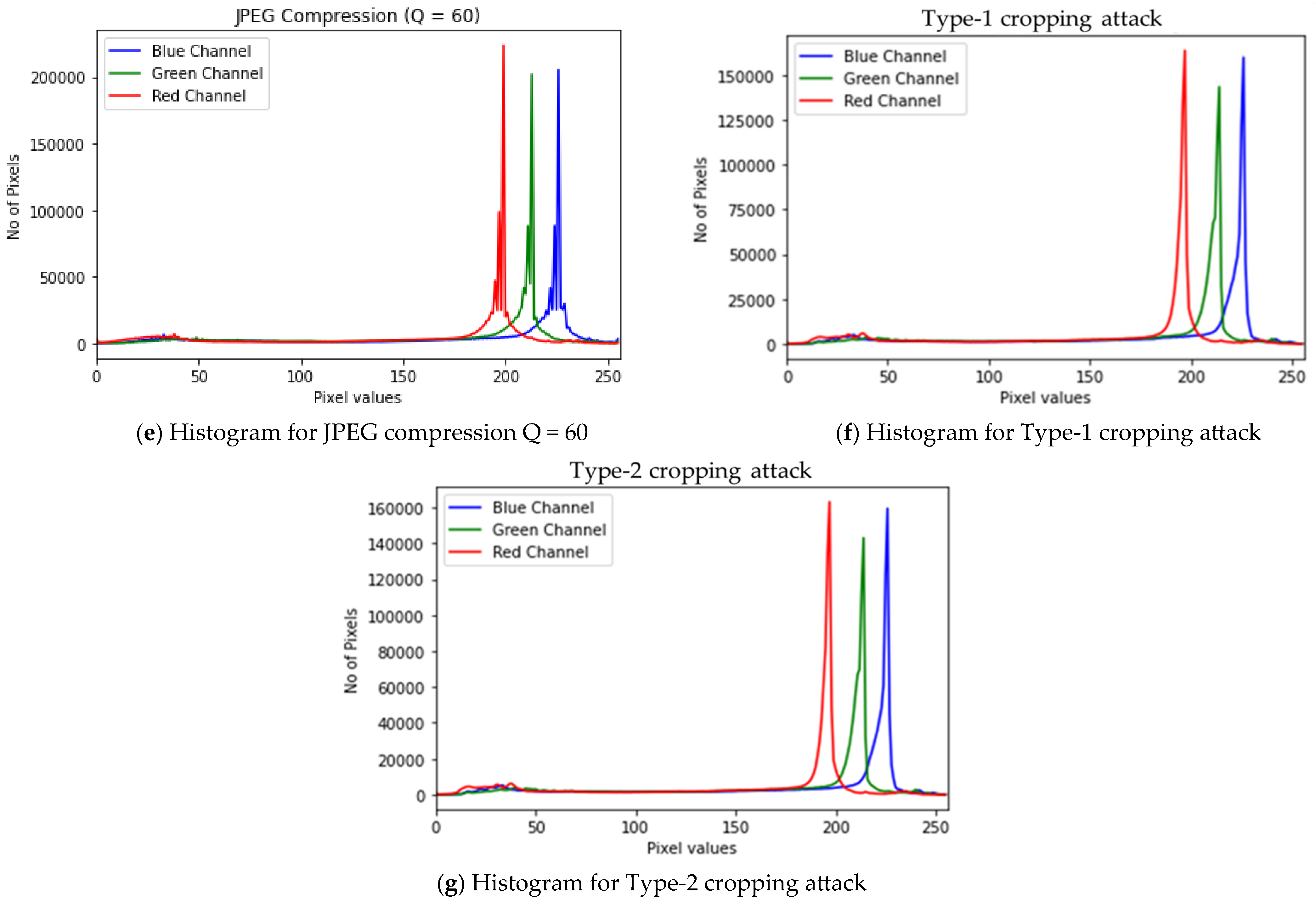

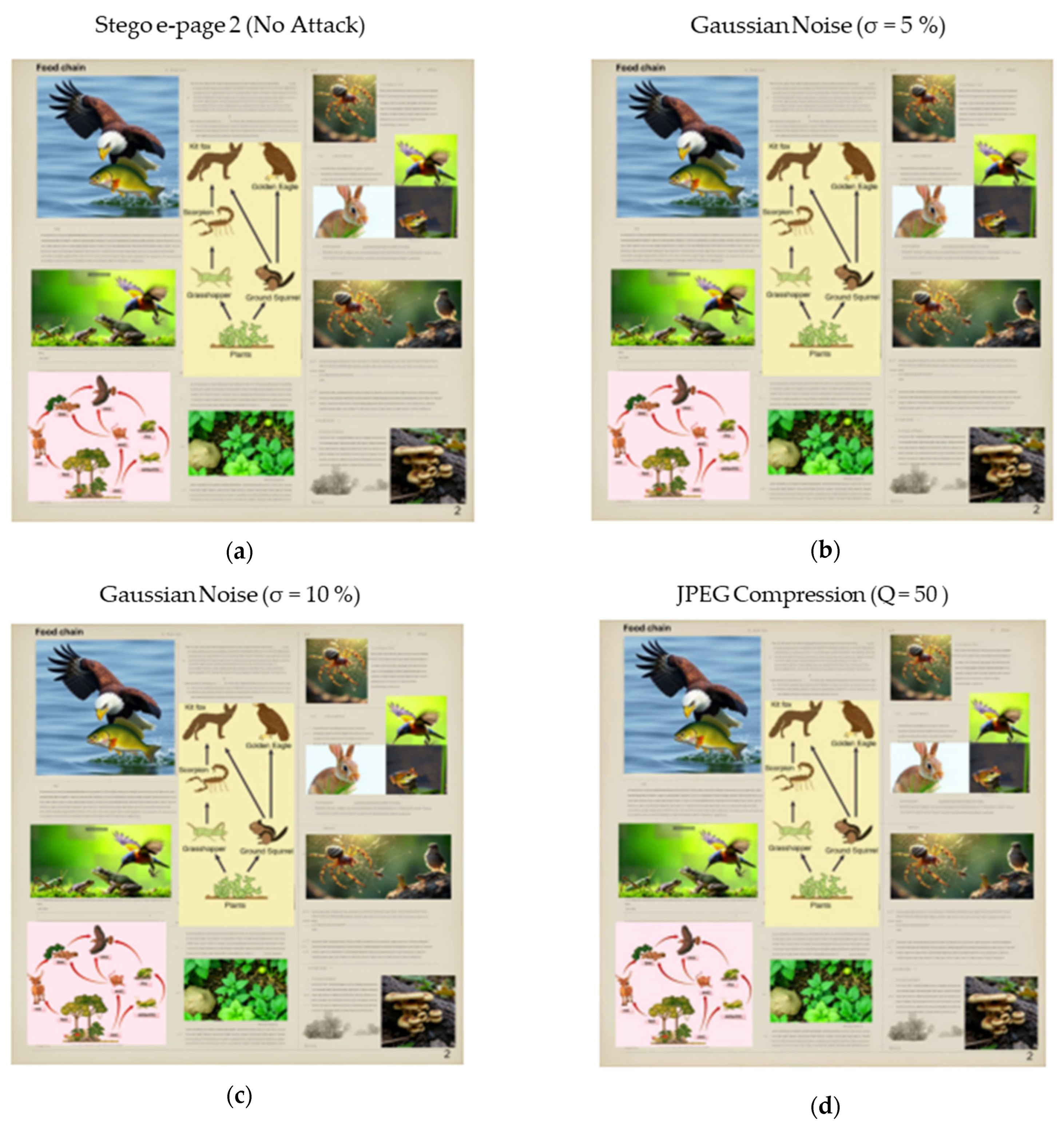

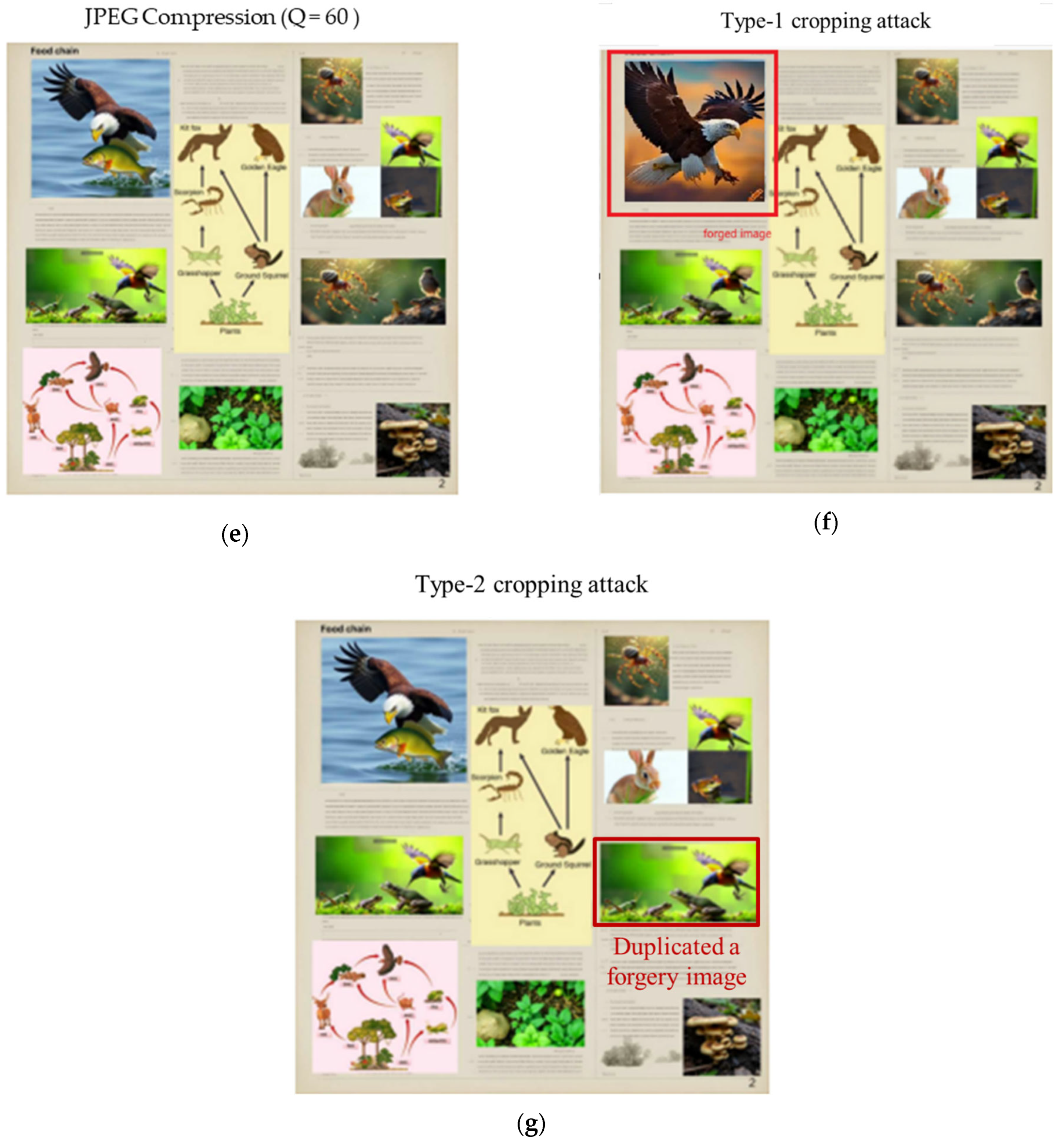

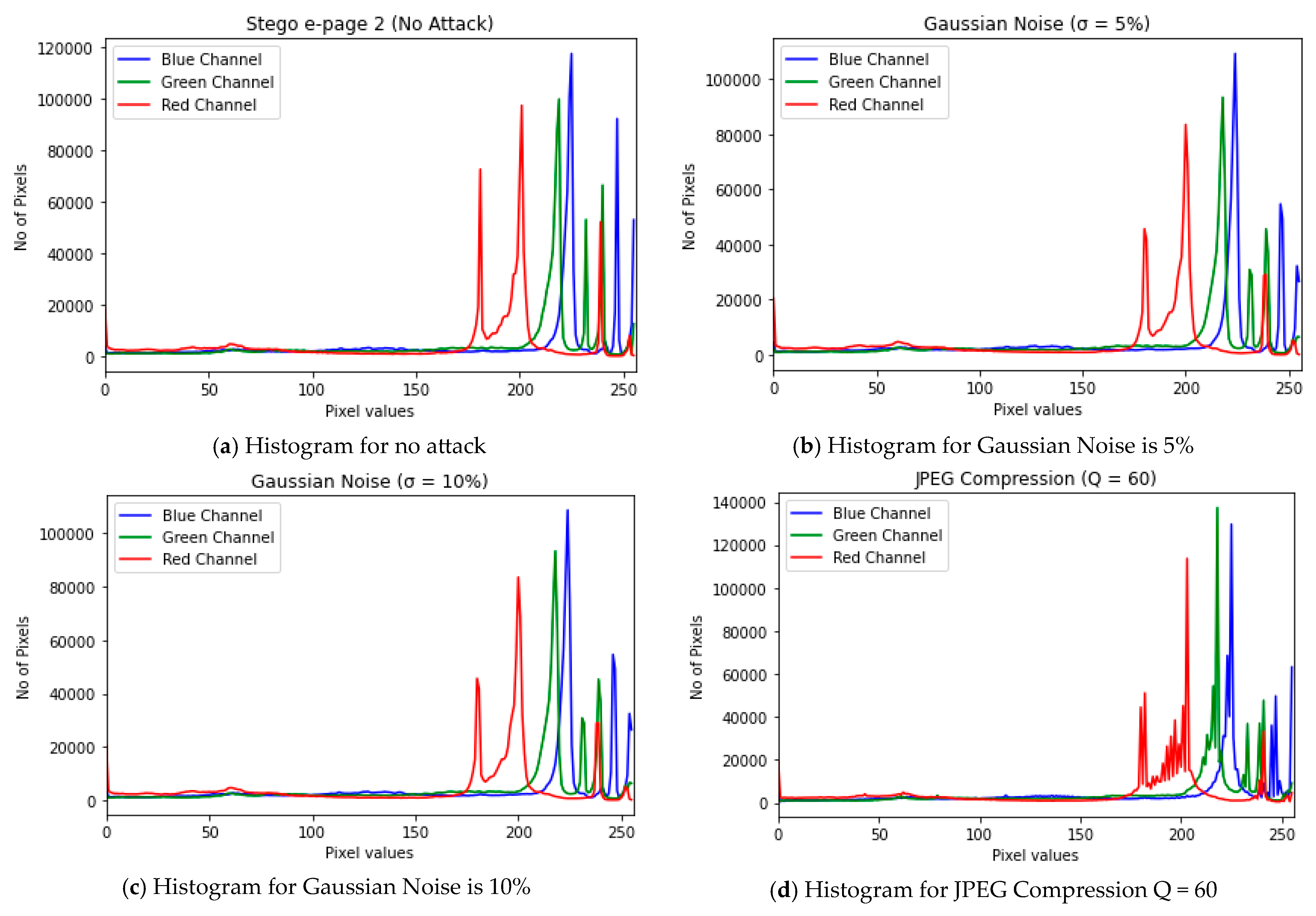

4.2. Robustness Against Attacks

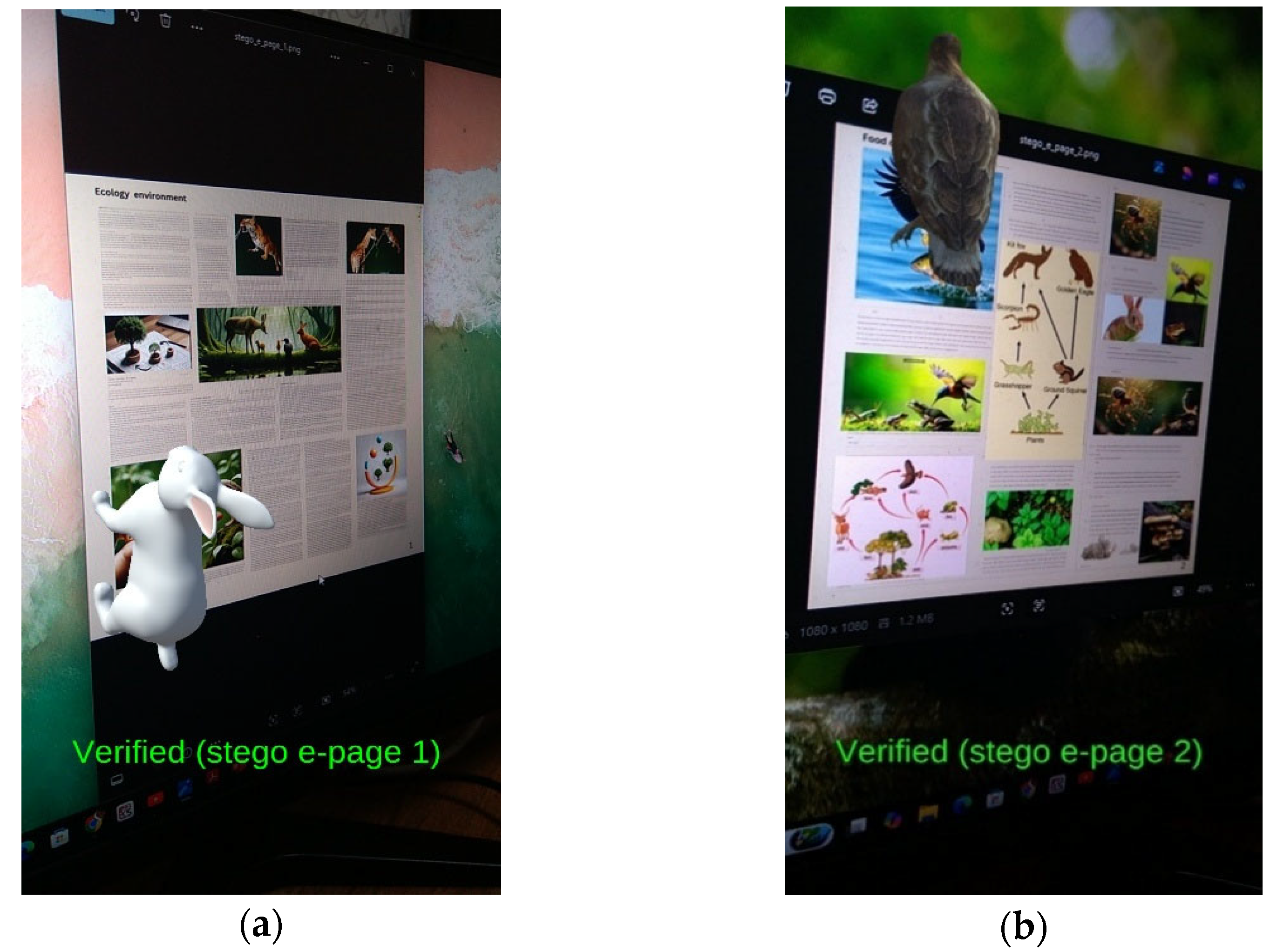

4.3. Authentication Evaluation

4.4. Computational Performance

4.5. Comparisons with Other Works

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- Feiner, S.; Macintyre, B.; Seligmann, D. Knowledge-Based Augmented Reality. Commun. ACM 1993, 36, 53–62.

- Gaebel, E.; Zhang, N.; Lou, W.; Hou, Y.T. Looks Good to Me: Authentication for Augmented Reality. In Proceedings of the 6th International Workshop on Trustworthy Embedded Devices, Hofburg Palace, Vienna, Austria, 28 October 2016.

- Wazir, W.; Khattak, H.A.; Almogren, A.; Khan, M.A.; Din, I.U. Doodle-Based Authentication Technique Using Augmented Reality. IEEE Access 2020, 8, 4022–4034.

- Chang, C.H.; Lee, C.Y.; Chen, C.C.; Wang, Z.H. A Data-Hiding Scheme Based on One-Way Hash Function. Int. J. Multimed. Intell. Secur. 2010, 1, 285–297.

- Shrimali, S.; Kumar, A.; Singh, K.J. Fast Hash Based High Secure Hiding Technique for Digital Data Security. Electron. Gov. Int. J. 2020, 16, 326–340.

- Suman, R.R.; Mondal, B.; Mandal, T. A Secure Encryption Scheme Using a Composite Logistic Sine Map (CLSM) and Sha-256. Multimed. Tools Appl. 2022, 81, 27089–27110.

- Gueron, S.; Johnson, S.; Walker, J. SHA-512/256. In Proceedings of the 2011 Eighth International Conference on Information Technology: New Generations, Las Vegas, NV, USA, 11–13 April 2011.

- Liu, N.; Amin, P.; Subbalakshmi, K. Security and Robustness Enhancement for Image Data Hiding. IEEE Trans. Multimed. 2020, 22, 1802–1810.

- Stephenson, S.; Pal, B.; Fan, S.; Fernandes, E.; Zhao, Y.; Chatterjee, R. Sok: Authentication in Augmented and Virtual Reality. In Proceedings of the IEEE Symposium on Security and Privacy, San Francisco, CA, USA, 23–26 May 2022.

- Schneier, B. Applied Cryptography: Protocols, Algorithms and Source Code in C; Wiley: Hoboken, NJ, USA, 2015.

- Wu, M.; Liu, B. Data Hiding in Image and Video: Part I Fundamental Issues and Solutions. IEEE Trans. Image Process. 2003, 12, 685–695.

- Lin, P.L.; Hsieh, C.K.; Huang, P.W. A Hierarchical Digital Watermarking Method for Image Tamper Detection and Recovery. Pattern Recognit. 2005, 38, 2519–2529.

- Zhang, X.; Wang, S.; Qian, Z.; Feng, G. Reference Sharing Mechanism for Watermark Self-Embedding. IEEE Trans. Image Process. 2011, 20, 485–495.

- Chang, C.C.; Fan, Y.H.; Tai, W.L. Four-Scanning Attack on Hierarchical Digital Watermarking Method for Image Tamper Detection and Recovery. Pattern Recognit. 2008, 41, 654–661.

- Chang, Y.F.; Tai, W.L. A Block-Based Watermarking Scheme for Image Tamper Detection and Self-Recovery. Opto-Electron. Rev. 2013, 21, 182–190.

- Sarreshtedari, S.; Akhaee, M.A. A Source-Channel Coding Approach to Digital Image Protection and Self-Recovery. IEEE Trans. Image Process. 2015, 24, 2266–2277.

- Qin, C.; Wang, H.; Zhang, X.; Sun, X. Self-Embedding Fragile Watermarking Based on Reference-Data Interleaving and Adaptive Selection of Embedding Mode. Inf. Sci. 2016, 373, 233–250.

- Lin, C.C.; Huang, Y.; Tai, W.L. A Novel Hybrid Image Authentication Scheme Based on Absolute Moment Block Truncation Coding. Multimed. Tools Appl. 2017, 76, 463–488.

- Lin, C.C.; Liu, X.L.; Tai, W.L.; Yuan, S.M. A Novel Reversible Data Hiding Scheme Based on AMBTC Compression Technique. Multimed. Tools Appl. 2015, 74, 3823–3842.

- Tai, W.L.; Liao, Z.J. Image Self-Recovery with Watermark Self-Embedding. Signal Process. Image Commun. 2018, 65, 11–25.

- Yu, Z.; Lin, C.C.; Chang, C.C. ABMC-DH: An Adaptive Bit-Plane Data Hiding Method Based on Matrix Coding. IEEE Access 2020, 8, 27634–27648.

- Nazir, H.; Ullah, M.S.; Qadri, S.S.; Arshad, H.; Husnain, M.; Razzaq, A.; Nawaz, S.A. Protection-Enhanced Watermarking Scheme Combined with Non-Linear Systems. IEEE Access 2023, 11, 33725–33740.

- Li, F.Q.; Wang, S.L.; Liew, A.W.C. Linear Functionality Equivalence Attack Against Deep Neural Network Watermarks and A Defense Method by Neuron Mapping. IEEE Trans. Inf. Forensics Secur. 2023, 18, 1963–1977.

- Tang, Y.; Wang, S.; Wang, C.; Xiang, S.; Cheung, Y.M. A Highly Robust Reversible Watermarking Scheme Using Embedding Optimization and Rounded Error Compensation. IEEE Trans. Circuits Syst. Video Technol. 2023, 33, 1593–1609.

- Anand, A.; Singh, A.K. Dual Watermarking for Security of COVID-19 Patient Record. IEEE Trans. Dependable Secur. Comput. 2023, 20, 859–866.

- Chang, C.C.; Liu, Y.; Nguyen, T.S. A Novel Turtle Shell-Based Scheme for Data Hiding. In Proceedings of the 2014 Tenth International Conference on Intelligent Information Hiding and Multimedia Signal Processing, Kitakyushu, Japan, 27–29 August 2014.

- Chen, C.C.; Chang, C.H.; Lin, C.C.; Su, G.D. TSIA: A Novel Image Authentication Scheme for AMBTC-Based Compressed Images Using Turtle Shell Based Reference Matrix. IEEE Access 2019, 7, 149515–149526.

- Lee, H.R.; Shin, J.S.; Hwang, C.J. Invisible Marker Tracking System Using Image Watermarking for Augmented Reality. In Proceedings of the 2007 Digest of Technical Papers International Conference on Consumer Electronics, Las Vegas, NV, USA, 10–14 January 2007.

- Li, C.; Sun, X.; Li, Y. Information Hiding Based on Augmented Reality. Math. Biosci. Eng. 2019, 16, 4777–4787.

- Lin, C.C.; Nshimiyimana, A.; SaberiKamarposhti, M.; Elbasi, E. Authentication Framework for Augmented Reality with Data-Hiding Technique. Symmetry 2024, 16, 1253.

- Bhattacharya, P.; Saraswat, D.; Dave, A.; Acharya, M.; Tanwar, S.; Sharma, G.; Davidson, I.E. Coalition of 6G and Blockchain in AR/VR Space: Challenges and Future Directions. IEEE Access 2021, 9, 168455–168484.

- Deshmukh, P.R.; Bhagyashri, R. Hash Based Least Significant Bit Technique for Video Steganography. Int. J. Eng. Res. Appl. 2014, 4, 44–49.

- Manjula, G.R.; Ajit, D. A Novel Hash Based Least Significant Bit (2-3-3) Image Steganography in Spatial Domain. Int. J. Secur. Privacy Trust Manag. 2015, 4, 11–20.

- Dasgupta, K.; Mandal, J.K.; Dutta, P. Hash-Based Least Significant Bit Technique for Video Steganography(HLSB). Int. J. Secur. Privacy Trust Manag. 2012, 1, 1–11.

- Xiong, L.; Zhong, X.; Yang, C.N. DWT-SISA: A Secure and Effective Discrete Wavelet Transform-Based Secret Image Sharing with Authentication. Signal Process. 2020, 173, 107571.

- Kunhu, A.; Taher, F.; Al-Ahmad, H. A New Multi Watermarking Algorithm for Medical Images Using DWT and Hash Functions. In Proceedings of the 11th International Conference on Innovations in Information Technology, Dubai, United Arab Emirates, 1–3 November 2015.

- Mahmood, G.S.; Huang, D.J. PSO-Based Steganography Scheme Using DWT-SVD and Cryptography Techniques for Cloud Data Confidentiality and Integrity. Comput. J. 2019, 30, 31–45.

| Notations/Terminology | Definitions |
|---|---|
| | The e-page 1 (electronic page) in the document, represented as an RGB image |
| | The e-page 2 in the document, also represented as an RGB image |
| | The previous e-page in hierarchical order |
| | The next e-page in hierarchical order |
| | Content area extracted from , with dimensions |
| | Red, green, and blue channels, each of size |
| , , | Normalized pixel values of channel (each divided by 255, ranging within [0, 1]) |
| , , | Accumulators of normalized pixel values for the channels, initialized to 0 |
| | The final hash value, obtained by concatenating , , and through the proposed hash value generation algorithm |
| | Modulo value to limit hash size, where |
| , , | Pixel values of the channels at coordinates of |
| , , | Normalized pixel values for at coordinates of |
| | The concatenation operator used to combine , , and |
| | Modulo operation to limit hash size (applied to , , and ) |
| | Multiplication operator used for weighting pixel contributions |
| | A weight factor or scaling parameter applied in hash computation to adjust pixel contribution when embedding into |
| | Stego e-page 1 (i.e., the modified ) after embedding into using DWT-based data hiding |
| | Stego e-page 2 (i.e., the modified ) after embedding into using DWT-based data hiding |
| | The LL subband coefficients obtained after applying DWT to the luminance component () of |
| α | Blending factor that controls the strength of embedding |
| ϵ | A small constant used to avoid division by zero in β calculations |
| | Mean intensity of a block in |
| | The standard deviation of a block in |
| | A block of coefficients from where each block size is to |
| | Modified block after embedding |
| | Normalized hash scaled to match the intensity range of using Equation (1) |
| , Min () | Maximum and minimum intensity values in |
| Max (), min () | Maximum and minimum values of the hash |
| | Extracted hash value from |
| | Hash value of stego e-page 1 () |
| | Extracted linkage hash value from e-page 2 () |
| | The region of interest extracted from corresponding to |
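
The notation above describes a hash built from normalized channel values, per-channel accumulators that start at 0, a weight factor, a modulo that limits the hash size, and a final concatenation of the three channel values. The following Python sketch illustrates that general shape only. The position-weighted update rule, the default `modulo` and `weight` values, and the names `channel_hash` and `scale_to_range` are assumptions made for illustration and are not taken from Algorithm 1. `scale_to_range` shows ordinary min-max scaling, which is one plausible reading of how a hash is matched to the intensity range of the LL subband; the exact form of Equation (1) is not reproduced here.

```python
import numpy as np

def channel_hash(content, modulo=65521, weight=31):
    """Toy per-channel hash of an RGB content area (H x W x 3, uint8).

    Assumption: each channel accumulates weight * position * normalized pixel,
    then the total is reduced modulo `modulo`. This is an illustrative rule,
    not the update rule of the original algorithm.
    """
    content = np.asarray(content)
    if content.ndim != 3 or content.shape[2] != 3:
        raise ValueError("expected an H x W x 3 RGB array")
    normalized = content.astype(np.float64) / 255.0  # pixel values in [0, 1]
    # Position index 1..H*W so that moving a pixel changes its contribution.
    positions = np.arange(1, content.shape[0] * content.shape[1] + 1, dtype=np.float64)
    parts = []
    for c in range(3):  # red, green, blue
        weighted = weight * positions * normalized[:, :, c].ravel()
        acc = int(round(float(weighted.sum()))) % modulo  # limit the hash size
        parts.append(f"{acc:04x}")
    return "".join(parts)  # concatenate the three channel values

def scale_to_range(values, target_min, target_max):
    """Min-max scale `values` into [target_min, target_max] (assumed form)."""
    values = np.asarray(values, dtype=np.float64)
    span = values.max() - values.min()
    if span == 0:
        return np.full_like(values, target_min)
    return (values - values.min()) / span * (target_max - target_min) + target_min

# Usage on a small random "content area".
rng = np.random.default_rng(0)
page = rng.integers(0, 256, size=(8, 8, 3), dtype=np.uint8)
digest = channel_hash(page)
```

A sum of this kind is easy to follow but is not collision resistant, so it should be read as a picture of the notation rather than as a secure construction.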

| Attack Type | PSNR (dB) | SSIM |
|---|---|---|
| No attack | 45.80 | 0.99 |
| Gaussian noise (σ = 5%) | 26.05 | 0.70 |
| Gaussian noise (σ = 10%) | 20.38 | 0.60 |
| JPEG compression (Q = 50) | 31.17 | 0.93 |
| JPEG compression (Q = 60) | 31.90 | 0.94 |
| Type-1 cropping attack | 19.25 | 0.55 |
| Type-2 cropping attack | 17.14 | 0.40 |

| Attack Type | PSNR (dB) | SSIM |
|---|---|---|
| No attack | 41.27 | 0.99 |
| Gaussian noise (σ = 5%) | 26.22 | 0.75 |
| Gaussian noise (σ = 10%) | 21.02 | 0.65 |
| JPEG compression (Q = 50) | 31.40 | 0.94 |
| JPEG compression (Q = 60) | 31.69 | 0.95 |
| Type-1 cropping attack | 17.61 | 0.50 |
| Type-2 cropping attack | 21.62 | 0.66 |
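
For readers who want to reproduce figures of the kind reported in these tables, the sketch below computes PSNR for 8-bit images using the standard definition, 10·log10(255² / MSE), and applies additive Gaussian noise. The function names and the interpretation of "σ = 5%" as 5% of the 8-bit range are assumptions, since the noise model is not spelled out here. Under that interpretation, unclipped noise gives a theoretical PSNR of 20·log10(1/0.05) ≈ 26.02 dB, which is close to the 26.05 dB and 26.22 dB rows, but this agreement is suggestive rather than confirmed.

```python
import numpy as np

def psnr(reference, distorted, peak=255.0):
    """Peak signal-to-noise ratio in dB between two same-shaped images."""
    reference = np.asarray(reference, dtype=np.float64)
    distorted = np.asarray(distorted, dtype=np.float64)
    if reference.shape != distorted.shape:
        raise ValueError("images must have the same shape")
    mse = np.mean((reference - distorted) ** 2)
    if mse == 0:
        return float("inf")  # identical images
    return float(10.0 * np.log10(peak ** 2 / mse))

def add_gaussian_noise(image, sigma_fraction, seed=0):
    """Add zero-mean Gaussian noise; sigma is a fraction of 255 (assumption)."""
    rng = np.random.default_rng(seed)
    noise = rng.normal(0.0, sigma_fraction * 255.0, size=np.shape(image))
    noisy = np.asarray(image, dtype=np.float64) + noise
    return np.clip(np.rint(noisy), 0, 255).astype(np.uint8)

# Usage: a flat mid-gray test image, so clipping has almost no effect.
flat = np.full((256, 256), 128, dtype=np.uint8)
noisy = add_gaussian_noise(flat, 0.05)
print(f"PSNR after noise: {psnr(flat, noisy):.2f} dB")
```

Real pages contain pixels near 0 and 255, where clipping shrinks the effective noise, so measured values can sit slightly above the theoretical figure.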

| Stego e-Page | Attack Type | PSNR (dB) | SSIM | Embedding Execution Time (s) | Extraction Execution Time (s) |
|---|---|---|---|---|---|
| Stego e-page 1 | No attack | 45.80 | 0.99 | 0.11 | 0.05 |
| | Gaussian noise attack (σ = 5%) | 26.05 | 0.70 | 0.11 | 0.05 |
| | Gaussian noise attack (σ = 10%) | 20.38 | 0.60 | 0.11 | 0.05 |
| | JPEG compression attack (Q = 50) | 31.17 | 0.93 | 0.11 | 0.05 |
| | JPEG compression attack (Q = 60) | 31.90 | 0.94 | 0.12 | 0.06 |
| | Type-1 cropping attack | 19.26 | 0.55 | 0.10 | 0.05 |
| | Type-2 cropping attack | 17.14 | 0.40 | 0.09 | 0.04 |
| Stego e-page 2 | No attack | 41.26 | 0.99 | 0.10 | 0.05 |
| | Gaussian noise attack (σ = 5%) | 26.22 | 0.75 | 0.10 | 0.05 |
| | Gaussian noise attack (σ = 10%) | 21.02 | 0.65 | 0.09 | 0.04 |
| | JPEG compression attack (Q = 50) | 31.40 | 0.94 | 0.09 | 0.04 |
| | JPEG compression attack (Q = 60) | 31.69 | 0.95 | 0.09 | 0.05 |
| | Type-1 cropping attack | 17.61 | 0.50 | 0.09 | 0.04 |
| | Type-2 cropping attack | 21.62 | 0.66 | 0.10 | 0.05 |

| Criteria | Chang et al. [4] | Liu et al. [8] | Lin et al. [29] | Ours |
|---|---|---|---|---|
| Hiding strategy | Hash function and side-match VQ | Hash-based randomized embedding | LSB/DWT hiding | DWT hiding |
| Carrier type | Image, VQ-index table | Image, DCT coefficients | AR content | AR content |
| Content authentication | - | Yes | Yes | Yes |
| Linkage authentication | - | - | - | Yes |
| Attacks | - | JPEG attack | Luminance attack, Color saturation attack, Replacement attack | JPEG attack, Cropping attack (types 1 and 2) |
| Tamper detection/similarity | - | - | Yes, similarity (70.55–100%) | Yes |
| Average PSNR | - | 42.11 dB | [59.58 dB, 59.87 dB] | [41.26 dB, 45.80 dB] |
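
The "Linkage authentication" row is what separates the proposed scheme from the compared ones: besides checking each page against its own embedded hash, a later page also carries a hash of the preceding stego page. A minimal sketch of that two-level check follows. It assumes exact hash comparison and uses HMAC-SHA-256 from the Python standard library as a stand-in keyed hash, whereas the scheme itself uses its own key-based hash and may tolerate some distortion. The `ExtractedPage` fields and the function names are hypothetical.

```python
import hashlib
import hmac
from dataclasses import dataclass
from typing import List, Optional, Tuple

@dataclass
class ExtractedPage:
    """Data recovered from one stego e-page (all field names are hypothetical)."""
    content: bytes               # content area the embedded hash should cover
    stego: bytes                 # full stego page as received
    embedded_hash: str           # content hash extracted from this page
    linkage_hash: Optional[str]  # hash of the previous stego page; None for page 1

def keyed_hash(data: bytes, key: bytes) -> str:
    """Stand-in keyed hash (HMAC-SHA-256), not the scheme's own hash."""
    return hmac.new(key, data, hashlib.sha256).hexdigest()

def authenticate(pages: List[ExtractedPage], key: bytes) -> List[Tuple[bool, bool]]:
    """Return (content_ok, linkage_ok) for each page, in hierarchical order."""
    results = []
    previous = None
    for page in pages:
        # Level 1: the page's content must match the hash embedded in it.
        content_ok = hmac.compare_digest(keyed_hash(page.content, key), page.embedded_hash)
        # Level 2: the page must carry the hash of the preceding stego page.
        if previous is None:
            linkage_ok = page.linkage_hash is None
        else:
            linkage_ok = page.linkage_hash is not None and hmac.compare_digest(
                keyed_hash(previous.stego, key), page.linkage_hash
            )
        results.append((content_ok, linkage_ok))
        previous = page
    return results

# Usage: two consistent pages.
key = b"demo-key"
page1 = ExtractedPage(b"page-1 content", b"page-1 stego",
                      keyed_hash(b"page-1 content", key), None)
page2 = ExtractedPage(b"page-2 content", b"page-2 stego",
                      keyed_hash(b"page-2 content", key), keyed_hash(page1.stego, key))
print(authenticate([page1, page2], key))  # [(True, True), (True, True)]
```

With this structure, replacing page 1 with a different stego page leaves page 2's content check intact but breaks its linkage check, which is the failure mode a content-only scheme cannot detect.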

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Lin, C.-C.; Nshimiyimana, A.; Chen, C.-C.; Liao, S.-H. Enhancing Security in Augmented Reality Through Hash-Based Data Hiding and Hierarchical Authentication Techniques. Symmetry 2025, 17, 1027. https://doi.org/10.3390/sym17071027