Abstract

Although machine learning (ML) models are widely used in many fields, their prediction processes are often hard to understand. This lack of transparency makes it harder for people to trust them, especially in high-stakes fields like healthcare and finance. Human-interpretable explanations for model predictions are crucial in these contexts. While existing local interpretation methods have been proposed, many suffer from low local fidelity, instability, and limited effectiveness when applied to highly nonlinear models. This paper presents SVM-X, a model-agnostic local explanation approach designed to address these challenges. By leveraging the inherent symmetry of the SVM hyperplane, SVM-X precisely captures the local decision boundaries of complex nonlinear models, providing more accurate and stable explanations. Experimental evaluations on the UCI Adult dataset, the Bank Marketing dataset, and the Amazon Product Review dataset demonstrate that SVM-X consistently outperforms state-of-the-art methods like LIME and LEMNA. Notably, SVM-X achieves up to a 27.2% improvement in accuracy over LIME. Our work introduces a reliable and interpretable framework for understanding machine learning predictions, offering a promising new direction for future research.

1. Introduction

In recent years, machine learning (ML) has become a core component of many real-world applications, ranging from computer vision [1] to natural language processing [2]. Despite its popularity and success, the prediction process of ML models is often incomprehensible to humans. Their predictions cannot be acted upon blindly, especially when ML models are used in critical scenarios such as medical diagnosis or finance. Therefore, providing human-understandable interpretations of an ML model's predictions has important implications for current ML systems [3,4].

This paper focuses on presenting a model-agnostic approach to explain the predictions made by ML models from a local perspective. In the following, we first briefly introduce the field of Explainable Machine Learning (XML), and then review existing explanation methods for ML models, followed by our contributions.

1.1. Explainable Machine Learning

Due to their complex structures and large number of parameters, ML models are difficult for humans to interpret, especially when trying to understand the reasoning behind their predictions. XML is a research domain dedicated to developing techniques and algorithms that produce high-quality, interpretable, and intuitive explanations for the decisions made by ML models. Recent developments in XML reflect a growing awareness of ethical considerations, trustworthiness, bias within ML models, and the security risks posed by potential attacks on ML models [5].

Early XML works focus on explaining the overall behavior of ML models, addressing feature attributions at a global level rather than for individual inputs. Ghorbani et al. [6] introduce a method named Automatic Concept-based Explanations (ACE) that aims to provide global explanations for a trained classifier without human supervision. ConceptSHAP [7] provides semantically meaningful clusters through segmentation and introduces the notion of completeness, which quantifies how sufficient a given set of concepts is for explaining a model's prediction behavior. Once the relevant concepts are identified, ConceptSHAP computes the importance of each concept and uses these scores to elucidate the model's decision-making process. CaCE [8] defines the causal concept effect as the causal impact of a human-interpretable concept (whether present or absent) on the predictions of a deep neural network. In this way, CaCE captures the model's predictive process rather than merely reflecting correlations between features and predictions.

One prevalent limitation of existing explanation methods for the prediction behavior of ML models is their predominant focus on interpreting predictions from a global perspective [5,9]. Global explanations offer valuable insights into the overall decision-making process of ML models. They present a comprehensive overview of attributions across a set of samples but may neglect the nuances essential for understanding the prediction for an individual instance. Moreover, global explanation methods typically have limited applicability and are generally tailored to a specific type of ML model.

1.2. Existing Efforts on Local Explanations

Recently, there has been a growing recognition of the limitations inherent in global explanation methods, and emerging research emphasizes the need for a more nuanced, localized approach to understanding model predictions. In particular, LIME [10] was proposed as a local explanation method that can explain the predictions of any type of ML model from a local perspective. Local explanation methods articulate individual feature attributions for a single sample, making the model's reasoning easier to comprehend. LIME uses a linear model to approximate the prediction behavior of an ML model locally and then derives the prediction explanation from the feature weights of this local model. However, LIME assumes that the local decision boundary of the black-box model is approximately linear, whereas many ML models exhibit highly nonlinear decision boundaries. This fundamental limitation results in poor local fidelity and can lead to misleading or inaccurate interpretations when the true decision boundary is complex. The same issue exists in other works that describe the local behavior of the model using linear explanations [11,12].

To overcome the above limitation, the follow-up work LEMNA [13] exploits a mixture regression model enhanced by fused lasso to generate high-fidelity explanation results. While LEMNA improves upon LIME by incorporating nonlinear components, it still relies on a weighted combination of linear models, which may not fully capture highly nonlinear decision boundaries. Additionally, LEMNA’s explanation stability is compromised when dealing with instances far from the decision boundary, as these points contribute disproportionately to the explanation model, reducing its robustness.

Another work [14] focuses on explanation coverage, proposing a model-agnostic explanation method called Anchor that provides clearer and more interpretable rule-based explanations. However, the rules (anchors) generated by Anchor are often too specific or fragmented, resulting in low coverage and limited generalization ability. Moreover, Anchor does not address local fidelity, making it less effective for models with highly irregular decision boundaries.

To address these issues, this paper proposes SVM-X, a novel local explanation method that leverages Support Vector Machines (SVMs) to better capture nonlinear decision boundaries. Unlike LIME and LEMNA, SVM-X avoids oversimplified linear approximations and achieves higher local fidelity. In addition, a distance-based weighting mechanism is introduced to enhance the stability of interpretations, especially near complex boundaries. SVM-X also offers wider applicability without sacrificing coverage. Experiments show that SVM-X outperforms existing methods on most evaluation metrics, making it a more accurate and stable solution for interpreting black-box models.

1.3. Challenges and Motivation

This paper presents a local explanation method dubbed SVM-X, which can explain different types of ML models by extracting feature weights from local support vector machine (SVM) models. The basic idea is that SVM models can approximate nonlinear decision boundaries well, so they are used here to approximate the prediction behavior of the target model locally around the target record. The contribution of each feature of the target record can then be obtained as the explanation. With SVM models serving as the local explanation model, SVM-X can obtain prediction explanations with higher stability and fidelity than existing work.

Although the basic idea is simple, the design of SVM-X faces two major challenges. The first challenge is how to make the predictions of SVM models explainable. Since traditional SVM models use kernel functions to map data into high-dimensional (for some kernels, infinite-dimensional) feature spaces, the feature weights of such SVMs are not intuitively understandable to humans. To address this issue, this paper uses a linear kernel function and aggregates the weights of each feature and its mapped features into the corresponding explanation weight. In this way, each feature's contribution to the model's predictions can be assessed more precisely. The second challenge is how to measure the extent to which records in the vicinity of the target record should contribute to the local model. Since SVM-X aims to make the predictions of the local model and the target model as close as possible, a distance measurement based on the predictions of the target model is designed. This measurement significantly improves the local fidelity of the explanation models.

We summarize our main contributions as follows.

- This paper presents SVM-X, a local explanation method that can fit the decision boundary of a black-box ML model with a more reasonable explanation of the prediction on the given record.

- This paper proposes a measure of the distance between two data records based on the model prediction output, by which SVM-X can effectively improve the fidelity of the local explanation model.

- This paper evaluates the performance of SVM-X on five different types of ML models. Extensive experiments with comparisons to four baselines demonstrate that the local interpretation model constructed by SVM-X has higher fidelity and that its explanations achieve higher stability.

The remainder of this paper is organized as follows. Section 2 introduces the preliminary information about SVM and the definition of the explanation based on the weight of the features. Section 3 describes the detailed design of SVM-X, followed by the performance evaluation in Section 4. Section 5 summarizes related work, and finally Section 6 concludes this paper.

2. Preliminary

2.1. Support Vector Machine

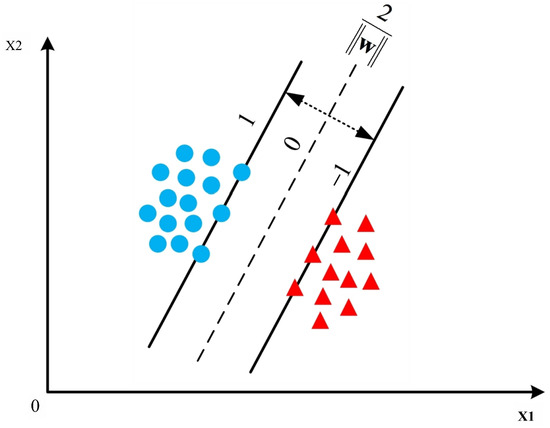

SVM is a supervised learning model designed for classification and regression analysis. Its core objective is to identify the optimal decision hyperplane within the training data space that separates the data into two distinct classes, each residing on opposing sides of this hyperplane. Intuitively, the best hyperplane is the one that maximizes the margin, defined as the distance between the hyperplane and the closest data points from each class. Consequently, SVM primarily focuses on these critical data points, known as support vectors, which lie precisely on the margin boundaries. As shown in Figure 1, which illustrates the decision boundary and margin for a binary classification scenario, the goal is to position the hyperplane such that the geometric margin between the support vectors of the two classes is as large as possible. This maximizes the separation between the classes and enhances the model’s robustness.

Figure 1.

Illustration of SVM decision boundary and margin for binary classification.

Formally, the training process for SVM models can be expressed as follows. Given a training data set $D$ and a hyperplane $w^\top x + b = 0$, where $w$ is the weight vector (normal vector) of the hyperplane and $b$ is the bias term, $D$ can be described as follows:

$$D = \{(x_1, y_1), (x_2, y_2), \dots, (x_N, y_N)\},$$

where $x_i \in \mathbb{R}^d$ is the feature vector of the $i$-th sample, $y_i \in \{+1, -1\}$ is the corresponding label, and $N$ is the number of training samples. The point $x_i$ is defined as positive (resp. negative) when $y_i = +1$ (resp. $y_i = -1$). SVM assumes that the training data set can be linearly separated in a (possibly high-dimensional) space, and the geometric margin between the hyperplane and the sample $(x_i, y_i)$ is defined as follows:

$$\gamma_i = y_i \left( \frac{w^\top x_i + b}{\lVert w \rVert} \right),$$

where $\lVert w \rVert$ denotes the Euclidean norm of $w$. The geometric margin $\gamma_i$ represents the signed distance from sample $x_i$ to the hyperplane; it is positive when $x_i$ is correctly classified.

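The minimum geometric margin of the hyperplane over all sample points is defined as follows:

$$\gamma = \min_{i = 1, \dots, N} \gamma_i.$$

The maximum-margin hyperplane problem of the SVM model can then be expressed as the following constrained optimization problem:

$$\max_{w, b} \ \gamma \quad \text{s.t.} \quad y_i \left( \frac{w^\top x_i + b}{\lVert w \rVert} \right) \ge \gamma, \quad i = 1, \dots, N.$$

Because rescaling $w$ and $b$ does not change the hyperplane, the functional margin can be fixed to 1, which gives the equivalent problem $\min_{w, b} \frac{1}{2} \lVert w \rVert^2$ s.t. $y_i (w^\top x_i + b) \ge 1$, $i = 1, \dots, N$.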

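For samples that are not linearly separable in the original finite-dimensional space, a mapping $\phi$ is used to project them into a higher-dimensional feature space, and the SVM is learned there by maximizing the margin, which yields a nonlinear SVM. Let $\phi(x)$ denote the image of $x$ in the higher-dimensional feature space. The dual problem of the (soft-margin) nonlinear SVM can then be written as follows:

$$\max_{\alpha} \ \sum_{i=1}^{N} \alpha_i - \frac{1}{2} \sum_{i=1}^{N} \sum_{j=1}^{N} \alpha_i \alpha_j y_i y_j \, \phi(x_i)^\top \phi(x_j) \quad \text{s.t.} \quad \sum_{i=1}^{N} \alpha_i y_i = 0, \quad 0 \le \alpha_i \le C, \quad i = 1, \dots, N,$$

where $\alpha_i$ is the Lagrange multiplier associated with the $i$-th training constraint $y_i (w^\top \phi(x_i) + b) \ge 1 - \xi_i$, with slack variable $\xi_i \ge 0$, and $\mu_i$ is the Lagrange multiplier associated with $\xi_i \ge 0$; eliminating $\mu_i = C - \alpha_i \ge 0$ yields the box constraint $0 \le \alpha_i \le C$. $C$ is the regularization parameter that controls the trade-off between maximizing the margin and minimizing the classification error.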

Since the dual is a quadratic maximization problem subject to linear constraints, it can be solved efficiently by quadratic programming, after which the separating hyperplane can be recovered.
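As a concrete check of the primal/dual relationship above, the following sketch fits a linear-kernel SVM with scikit-learn on invented toy data. A very large `C` approximates the hard-margin problem. In scikit-learn, `SVC.dual_coef_` stores $\alpha_i y_i$ for the support vectors, so $w = \sum_i \alpha_i y_i x_i$ is a single matrix product; the sketch then computes the geometric margins $\gamma_i$ and confirms that the minimum margin is approximately $1/\lVert w \rVert$. This is an illustration of standard SVM theory, not code from the paper.

```python
import numpy as np
from sklearn.svm import SVC

# Toy, linearly separable 2-D data (invented for illustration).
X = np.array([[2.0, 2.0], [3.0, 3.0], [2.5, 3.5],
              [-2.0, -2.0], [-3.0, -1.0], [-2.5, -3.0]])
y = np.array([1, 1, 1, -1, -1, -1])

# A very large C approximates the hard-margin SVM.
svm = SVC(kernel="linear", C=1e6).fit(X, y)

# dual_coef_ holds alpha_i * y_i for the support vectors only,
# so w = sum_i alpha_i y_i x_i is one matrix product.
w_from_dual = svm.dual_coef_ @ svm.support_vectors_
w, b = svm.coef_[0], svm.intercept_[0]

# Geometric margins gamma_i = y_i (w^T x_i + b) / ||w||.
gamma = y * (X @ w + b) / np.linalg.norm(w)

print("w from dual:  ", w_from_dual.ravel())
print("w from coef_: ", w)
print("min geometric margin:", gamma.min(), " 1/||w||:", 1 / np.linalg.norm(w))
```

The support vectors have functional margin $y_i(w^\top x_i + b) \approx 1$, which is why the smallest geometric margin coincides with $1/\lVert w \rVert$.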

This paper leverages SVM models to locally approximate the prediction behavior of the target model around the target record, so as to explain the target model's prediction. Because SVM models can fit nonlinear prediction boundaries well, this approach can achieve better explanation stability and local fidelity than previous works that rely on linear local models.

2.2. Linear Model-Based Explanation

Linear regression models are widely used for interpretation in academic fields such as medicine, sociology, and psychology. Linear models predict the target as a weighted sum of the feature inputs, which makes their predictions easy to interpret [15]. During training, the model assigns each feature a weight that can be regarded as the importance of that feature. These weights are easy for humans to understand because they indicate each feature's contribution to the predicted output.

Motivated by the interpretability of linear models, LIME [10] uses linear models as local interpretation models to explain a complex model's predictions, which makes the approach applicable to any type of model. Subsequently, by replacing the single linear model with a mixture model, LEMNA [13] approximates the decision boundary around the target record more precisely and thus derives a more credible prediction explanation from the feature weights of the local mixture model.

Similar to the above two works, SVM-X is also an interpretation method based on feature weights. However, since the SVM model can fit the nonlinear decision boundary better than linear models, SVM-X would have better fitting performance and interpretation stability.

3. Design of SVM-X

This paper formalizes the task of SVM-X as follows. Given prediction access to an ML model $f$ and a target record $x$, SVM-X aims to obtain the feature importance of the target record, which serves as the explanation of the target model's prediction on $x$. More formally, the goal of SVM-X can be expressed as follows:

$$e = \mathcal{E}(f, x),$$

where $\mathcal{E}$ is the explanation function. Its output $e$ is a vector with the same shape as $x$, and each value in $e$ is the importance of the corresponding feature.

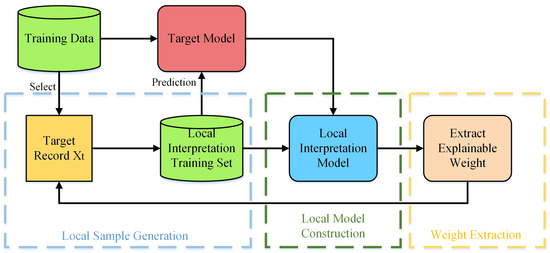

Figure 2 shows the framework of SVM-X. SVM-X first randomly samples a set of records around $x$ by perturbing the feature values and then constructs a local model using the linear SVM algorithm. Specifically, SVM-X involves the following three steps.

Figure 2.

Framework of SVM-X: Key steps in local explanation generation.

- (1) Local sample generation: Given a target record $x$ selected from the training data, this step generates a local interpretation training set by perturbing the feature values of $x$ to create a set of neighborhood samples. Each perturbed sample is passed through the target model $f$ to obtain its prediction. The perturbed samples and their associated outputs from $f$ form the local training data.

- (2) Local model construction: Using the locally generated samples and their corresponding predictions, SVM-X trains a linear Support Vector Machine (SVM) to approximate the target model's behavior around $x$. The linear SVM serves as a surrogate model that mimics the decision boundary of $f$ in the neighborhood of $x$. Because it is linear, the SVM model is highly interpretable: its weight vector directly reflects the influence of each feature.

- (3) Explainable weight extraction: Once the linear SVM is trained, SVM-X extracts the weight vector $w$ from the model. Since the decision function of a linear SVM is $g(z) = \operatorname{sign}(w^\top z + b)$, the magnitude $\lvert w_i \rvert$ of each weight indicates the importance of feature $i$. These values are normalized to form the explanation vector $e$, which serves as the feature attribution for the prediction made by $f$ on $x$.

3.1. Local Sample Generation

The goal of local sample generation is to generate a set of records around the given target instance $x$. Assuming that the distribution of each feature of the target record is known, the values of randomly selected features of $x$ can be replaced accordingly.

In this step, a set of features, denoted as $S$, is first selected uniformly at random from $x$. Then, the value of each selected feature is replaced in turn with another value chosen from the value range of the corresponding feature according to that feature's distribution. Specifically, since prior knowledge of the distribution of every feature is available, the value range and the proportion of each feature value can be determined. Formally, suppose a feature $x_j$ of $x$ has $n$ possible values $v_1, \dots, v_n$ with proportions $p_1, \dots, p_n$, respectively ($\sum_{k=1}^{n} p_k = 1$). Each of the $n$ values is weighted by its proportion, and one value from the feature range is randomly selected according to $p_1, \dots, p_n$. However, in some cases, the statistical information of the features of $x$ cannot be obtained.

To address this issue, every value is assigned the same proportion (i.e., $p_1 = \dots = p_n = 1/n$), and the value of $x_j$ is then replaced. Finally, one sampled record, denoted as $z$, is derived. Note that the number of selected features is also chosen randomly at the beginning of generating each sample.
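The sampling procedure above can be sketched in Python as follows. This is an illustrative sketch, not the authors' implementation: the function name `sample_neighbor` and its arguments are hypothetical, features are assumed to be discrete (or already discretized), and a draw that happens to equal the original value is not rejected, since the text does not specify whether it should be.

```python
import numpy as np


def sample_neighbor(x, value_ranges, value_probs=None, rng=None):
    """Generate one perturbed record z around the target record x (hypothetical helper).

    x            : 1-D array of discrete feature values of the target record
    value_ranges : value_ranges[j] lists the possible values v_1..v_n of feature j
    value_probs  : optional; value_probs[j][k] is the observed proportion p_k of
                   value_ranges[j][k]. If None, every value gets weight 1/n.
    """
    rng = np.random.default_rng() if rng is None else rng
    z = np.array(x, copy=True)
    d = len(x)
    # The number of features to perturb is itself drawn at random (1..d).
    m = rng.integers(1, d + 1)
    # Select the m features uniformly at random, without replacement.
    selected = rng.choice(d, size=m, replace=False)
    for j in selected:
        values = np.asarray(value_ranges[j])
        if value_probs is None:
            p = np.full(len(values), 1.0 / len(values))  # uniform fallback
        else:
            p = np.asarray(value_probs[j], dtype=float)  # empirical proportions
        # Draw a replacement value according to the feature's distribution.
        z[j] = rng.choice(values, p=p)
    return z


rng = np.random.default_rng(0)
print(sample_neighbor(np.array([0, 1, 2]), [[0, 1], [0, 1], [0, 1, 2]], rng=rng))
```

Passing `value_probs=None` corresponds to the case in which the feature statistics are unavailable and each of the $n$ values receives proportion $1/n$.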

Intuitively, generated samples at different distances from the target record should contribute differently to the construction of the local interpretation model: a sample far from the target record should contribute relatively little, and a nearby sample relatively much. Therefore, a sample weight $\pi_z$ is assigned to $z$ according to the distance between $z$ and $x$ by the following equation:

$$\pi_z = W\big(d(x, z)\big),$$

where $W$ is the weight generation function, and $d(\cdot, \cdot)$ is the distance measurement between two samples.

The sample distance is measured using the prediction probabilities of the target model. Specifically, the prediction probabilities of $x$ and $z$ are obtained from the target model $f$. For clarity, the predicted class probability vectors for $x$ and $z$ are denoted by $p_x$ and $p_z$, and the class probability vector of points on the decision boundary is denoted by $p_b$. For a $C$-class classification problem, $p_b = (1/C, \dots, 1/C)$, i.e., a point on the boundary is equally likely to belong to each class.

Since SVM relies on decision hyperplanes for classification, the decision boundary plays an important role in determining the neighborhood of the target record. $p_b$ can then be used to define the distance measurement using the following function:

where $d(\cdot, \cdot)$ represents the distance. The above steps are repeated to generate local samples and their corresponding sample weights around $x$ until enough samples have been collected.
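The distance-based weighting can be illustrated with a small sketch. The exact distance function of SVM-X, which also involves the boundary vector $p_b$, is not reproduced here. As a loudly labeled assumption, this sketch uses the Euclidean distance between predicted probability vectors for $d$ and an exponential kernel $W(d) = \exp(-d^2/\sigma^2)$ (the kernel family LIME uses) for the weight function; the helper names and `sigma` are hypothetical.

```python
import numpy as np


def boundary_probs(num_classes):
    """p_b: on the decision boundary, each of the C classes is equally likely."""
    return np.full(num_classes, 1.0 / num_classes)


def prob_distance(p_x, p_z):
    """ASSUMED d(x, z): Euclidean distance between predicted probability vectors."""
    return float(np.linalg.norm(np.asarray(p_x, dtype=float) - np.asarray(p_z, dtype=float)))


def sample_weight(dist, sigma=0.25):
    """ASSUMED W: exponential kernel, so samples closer to x receive larger weights."""
    return float(np.exp(-(dist ** 2) / sigma ** 2))


p_x = [0.9, 0.1]  # target model's prediction for the target record x
for p_z in ([0.8, 0.2], [0.2, 0.8]):
    print(p_z, sample_weight(prob_distance(p_x, p_z)))
print("p_b for C = 2:", boundary_probs(2))
```

Measuring distance in prediction space rather than feature space means that a perturbed sample whose prediction stays close to $f(x)$ is treated as a near neighbor even if several feature values changed.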

3.2. Local Model Construction

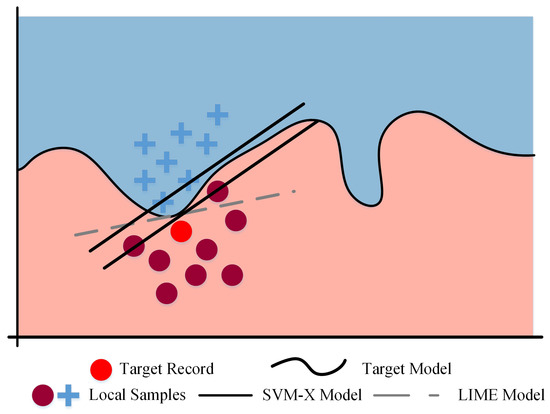

Intuitively, local fidelity is one of the most important properties of a local interpretation model. If the local model makes predictions similar to those of the target model on the data sampled around $x$, it is locally faithful and can give a reasonable explanation of the target model's behavior on the target record. Previous works such as LIME [10] and LEMNA [13] build the local interpretable model from linear models, which can fit only linear decision boundaries well. In practice, the decision boundary of an ML model is nonlinear in most cases, so a local linear model cannot achieve high fidelity to the target model's prediction behavior. To address this issue, an SVM model, whose decision boundary can be nonlinear, is used to construct the local interpretable model. Figure 3 shows a toy example comparing SVM-X and LIME, in which the local model of SVM-X fits the decision boundary better than the linear model.

Figure 3.

Comparison of local decision boundary fitting between LIME and SVM-X.

Now that the data sampled around the target record and the corresponding sample weights have been obtained, the next step is to train the local model with the SVM training algorithm. First, the target model $f$ is used to predict the sampled data $z_i$ and obtain the corresponding category labels $\hat{y}_i$. Then, the local model $g$ can be trained by minimizing the following loss function:

where $g_{\hat{y}_i}(z_i)$ represents the probability predicted by $g$ that $z_i$ belongs to the class $\hat{y}_i$ predicted by $f$. In other words, the mean squared error (MSE) loss, weighted by the sample weights $\pi_{z_i}$, is used to construct the local SVM model.

3.3. Explainable Weight Extraction

Given the local SVM model $g$, which is locally faithful to the target model $f$, the next step is to extract the explainable features from $g$. In this step, the feature weights assigned by the local model are used to explain the target model's prediction. The weight vector obtained from the local model is first normalized, and then the top $K$ features, i.e., the $K$ features with the largest absolute weights in the local SVM model, are selected. The result is a set of explainable features together with the corresponding weight of each feature of the target record. The explanation process of SVM-X is outlined in Algorithm 1.
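The weight-extraction step can be sketched as below. The text states only that the weights are "normalized"; this sketch assumes L1 normalization (dividing by the sum of absolute weights), and the function name `top_k_explanation` is hypothetical.

```python
import numpy as np


def top_k_explanation(w, k, feature_names=None):
    """Normalize local-model weights and keep the K largest in absolute value."""
    w = np.asarray(w, dtype=float)
    total = np.abs(w).sum()
    # ASSUMPTION: L1 normalization; guard against an all-zero weight vector.
    w_norm = w / total if total > 0 else w
    # Indices of the K largest |weights|, largest first.
    order = np.argsort(-np.abs(w_norm))[:k]
    names = feature_names if feature_names is not None else [f"x{j}" for j in range(len(w))]
    return [(names[j], float(w_norm[j])) for j in order]


print(top_k_explanation([0.5, -2.0, 1.0, 0.5], k=2,
                        feature_names=["age", "education", "hours", "race"]))
# → [('education', -0.5), ('hours', 0.25)]
```

Ranking by absolute value keeps features that push strongly toward either class; the sign of each normalized weight still tells the reader which direction the feature pushes.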

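| Algorithm 1 Explanations using SVM-X | |
|---|---|
| **Input:** Target model $f$, target record $x$, number of samples $N$, weight function $W$, length of explanation $K$ | |
| **Output:** Explainable features $w$ (top $K$ features with their weights) | |
| $\mathcal{Z} \leftarrow \emptyset$; **for** $i = 1, \dots, N$ **do** | |
| $\quad z_i \leftarrow$ perturb the features of $x$ (Section 3.1) | ▹ Generate local samples |
| $\quad \pi_{z_i} \leftarrow W(d(x, z_i))$ | ▹ Calculate sample weight using distance-based weighting |
| $\quad \hat{y}_i \leftarrow f(z_i)$ | ▹ Get prediction from the target model for the sample |
| $\quad \mathcal{Z} \leftarrow \mathcal{Z} \cup \{(z_i, \hat{y}_i, \pi_{z_i})\}$ | ▹ Add sample, prediction, and weight to the dataset |
| **end for** | |
| $g \leftarrow$ train a weighted SVM on $\mathcal{Z}$ (Section 3.2) | ▹ Fit local model |
| $w \leftarrow$ normalized feature weights of $g$ | ▹ Extract K explainable features |
| $w \leftarrow$ top $K$ entries of $w$ ranked by $\lvert w_j \rvert$ | ▹ Sort features by the absolute values of their weights |
| **return** $w$ | |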
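To make Algorithm 1 concrete, the following end-to-end sketch runs all three steps against a scikit-learn random forest on synthetic data. It is a sketch under stated assumptions, not the authors' code: `explain_svmx` is a hypothetical name; replacement values are drawn from rows of a reference dataset (an empirical feature distribution); the prediction-space distance and exponential kernel width `sigma` are assumed, not taken from the paper; and scikit-learn's `SVC` minimizes a sample-weighted hinge loss, which stands in for the weighted MSE objective of Section 3.2.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.svm import SVC


def explain_svmx(f, x, X_ref, n_samples=500, k=3, sigma=0.75, seed=0):
    """Hypothetical sketch of Algorithm 1 for a binary target model f."""
    rng = np.random.default_rng(seed)
    d = x.shape[0]
    # Step 1: perturb a random number of randomly chosen features of x,
    # drawing replacement values from the empirical distribution (rows of X_ref).
    Z = np.repeat(x[None, :], n_samples, axis=0)
    for i in range(n_samples):
        m = rng.integers(1, d + 1)
        cols = rng.choice(d, size=m, replace=False)
        rows = rng.integers(0, len(X_ref), size=m)
        Z[i, cols] = X_ref[rows, cols]
    # Query the target model; its predicted labels act as ground truth.
    p_x = f.predict_proba(x[None, :])[0]
    P = f.predict_proba(Z)
    y_hat = P.argmax(axis=1)
    if np.unique(y_hat).size < 2:
        raise ValueError("all samples got the same label; perturb more strongly")
    # ASSUMED distance (squared Euclidean in probability space) and kernel.
    weights = np.exp(-np.sum((P - p_x) ** 2, axis=1) / sigma ** 2)
    # Step 2: weighted linear SVM (hinge loss stands in for the weighted MSE).
    g = SVC(kernel="linear", C=1.0).fit(Z, y_hat, sample_weight=weights)
    # Step 3: L1-normalize the weight vector and keep the K largest |w_j|.
    w = g.coef_[0] / np.abs(g.coef_[0]).sum()
    top = np.argsort(-np.abs(w))[:k]
    fidelity = float(np.mean(g.predict(Z) == y_hat))  # local accuracy vs. f
    return top, w[top], fidelity


X, y = make_classification(n_samples=500, n_features=6, n_informative=3, random_state=0)
f = RandomForestClassifier(n_estimators=50, random_state=0).fit(X, y)
top, weights, fid = explain_svmx(f, X[0], X)
print("top features:", top, "weights:", np.round(weights, 3), "local accuracy:", fid)
```

The returned `fidelity` is the accuracy metric of Section 4.1.3, computed on the local samples with the target model's labels as ground truth.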

4. Results

4.1. Experiment Setup

4.1.1. Dataset

Three datasets are used to evaluate the explanation performance of SVM-X, including one commonly used benchmark dataset and two additional datasets for comprehensive comparison.

Amazon Product Review [16]. This dataset consists of 2000 product reviews for Books and DVDs from Amazon, with each instance containing a rating score, product name, and review text. The classification task is to determine whether a review expresses a positive or negative sentiment. A sentiment vocabulary is manually constructed by selecting the most frequent sentiment-bearing words. Each review is then converted into a binary feature vector, where each element indicates the presence or absence of a corresponding sentiment word.

UCI Adult (Census Income) [17]. This dataset contains 48,842 entries with 14 attributes such as work class, age, race, and education level. The task is to predict whether an individual’s income exceeds $50K per year. Categorical features are one-hot encoded, while continuous attributes are normalized to ensure consistency and compatibility with machine learning models.

Bank Marketing [18]. This dataset includes information on 45,211 clients from a Portuguese bank, with 17 attributes like age, job type, education, and contact method. The goal is to predict whether a client will subscribe to a term deposit. Preprocessing includes one-hot encoding of categorical variables and normalization of numerical features to facilitate effective training and explanation extraction.

4.1.2. Target Model

The performance of SVM-X is evaluated on five different types of machine learning models, with the specific models as follows:

- Logistic Regression (LR) [19]: A linear model used for binary classification that estimates probabilities using the logistic function.

- Random Forest (RF) [20]: An ensemble learning method that builds multiple decision trees and aggregates their predictions to improve accuracy and control overfitting.

- XGBoost (XGB) [21]: A gradient boosting framework that uses decision trees as base learners and optimizes performance with regularization techniques to prevent overfitting.

- Decision Tree (DT) [22]: A tree-based model that splits the data into subsets using feature thresholds to create a model that can be interpreted visually.

- Deep Neural Network (DNN) [23]: A type of artificial neural network with multiple layers, capable of learning complex patterns in large datasets through backpropagation.

Among these models, the first three are consistent with those evaluated in LIME [10]. The models to be explained are built with the standard training procedures of Scikit-Learn 1.5.0 (for LR, RF, and DT), the XGBoost library (for XGB), and PyTorch 2.3.1 (for DNN).

4.1.3. Evaluation Metrics

In the experiments, multiple evaluation metrics are used to assess the interpretation quality of SVM-X from two different perspectives. To measure the local model’s fidelity (i.e., the similarity of prediction behaviors between the target model and the local model), the standard metrics for evaluating ML models, including accuracy, recall, and F1 Score, are used. It is noted that the prediction results of the target model are used as the ground truth for both local samples and target records; therefore, the meaning of these standard metrics slightly changes in this work.

In particular, accuracy is the proportion of data records for which the local interpretation model and the target model predict the same class. Accuracy is chosen because it gives an overall view of the agreement between the predictions of the local model and those of the target model.

Recall is the fraction of local samples that are correctly predicted as belonging to the same class as the corresponding target record. Recall is included to assess the local model's ability to capture all relevant instances in the data.

F1 Score measures the accuracy of a two-class model by balancing precision and recall. As the harmonic mean of precision and recall, it provides a robust single summary measure.

To measure the fidelity of the local model at a finer granularity, MSE is used to quantify the difference in prediction probability between the local model and the target model, calculated as follows:

$$\mathrm{MSE} = \frac{1}{M} \sum_{i=1}^{M} \left( p_f(z_i) - p_g(z_i) \right)^2,$$

where $p_f(z_i)$ (resp. $p_g(z_i)$) is the prediction probability of the record $z_i$ obtained from the target model (resp. the local model), and $M$ is the number of evaluated records. MSE is particularly useful for capturing subtle differences in probability predictions, providing a more detailed measure of local fidelity than categorical metrics such as accuracy.
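The four fidelity metrics can be computed with scikit-learn as in the sketch below. The helper name `fidelity_metrics` is hypothetical. Following the definitions above, the target model's labels serve as ground truth, and `pos_label` is set to the class of the target record so that recall counts local samples correctly assigned to that class.

```python
import numpy as np
from sklearn.metrics import accuracy_score, f1_score, recall_score


def fidelity_metrics(target_labels, local_labels, target_probs, local_probs, pos_label):
    """Agreement between a local model g and a target model f on the same records.

    target_labels / local_labels : class predictions of f and g
    target_probs / local_probs   : prediction probabilities p_f(z_i) and p_g(z_i)
    pos_label                    : the class the target model assigns to the target record
    """
    return {
        "accuracy": accuracy_score(target_labels, local_labels),
        "recall": recall_score(target_labels, local_labels, pos_label=pos_label),
        "f1": f1_score(target_labels, local_labels, pos_label=pos_label),
        "mse": float(np.mean((np.asarray(target_probs) - np.asarray(local_probs)) ** 2)),
    }


print(fidelity_metrics([1, 1, 0, 0, 1], [1, 0, 0, 0, 1],
                       [0.9, 0.6, 0.2, 0.1, 0.8], [0.8, 0.4, 0.3, 0.1, 0.8], pos_label=1))
```

In this toy example the local model agrees with the target model on four of five records (accuracy 0.8) but misses one record of the target class, so recall drops to 2/3.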

Furthermore, to assess the consistency of the explanations, a metric named weighted mean variance is introduced, defined as the mean variance of the feature weights over a set of target records. Specifically, $N$ points are sampled around a target record $x$. These points are treated as new target records, and their local interpretations are obtained, yielding $N$ interpretation vectors $e_1, \dots, e_N \in \mathbb{R}^d$. The variance of each component is then computed separately and averaged over all $d$ components:

$$\mathrm{WMV} = \frac{1}{d} \sum_{j=1}^{d} \frac{1}{N} \sum_{i=1}^{N} \left( e_{i,j} - \bar{e}_j \right)^2,$$

where $\bar{e} = \frac{1}{N} \sum_{i=1}^{N} e_i$ is the mean of the explanations of the sampled records. Weighted mean variance is crucial for evaluating the stability of explanations: a lower value indicates that the local model provides more consistent interpretations for similar target instances.
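A minimal sketch of this stability metric follows the verbal description: compute the variance of each explanation component across the $N$ explanation vectors, then average over components. The text mentions no per-sample weights, so none are applied, and the population variance (dividing by $N$) is an assumption; the function name `mean_variance` is hypothetical.

```python
import numpy as np


def mean_variance(explanations):
    """Average over features of the per-feature variance across N explanation vectors."""
    E = np.asarray(explanations, dtype=float)  # shape (N, d): one explanation per row
    per_feature_var = E.var(axis=0)            # population variance (divides by N)
    return float(per_feature_var.mean())


# Two explanations that disagree only on the first feature.
print(mean_variance([[1.0, 0.0], [3.0, 0.0]]))  # → 0.5
```

Identical explanations for all neighboring records give a value of 0, the most stable case.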

4.1.4. Comparison Methods

The following comparison methods are considered in the experiments:

LIME [10]. This method constructs a local linear model to approximate the prediction behavior of the target model around the target record. Then, it extracts the weight of each feature from the local model and selects the top K features with the highest weights as the explanation of the prediction of the target model on the target record.

LEMNA [13]. This method uses mixture regression models to approximate the decision boundary of the target model around the target record. Then, it identifies the linear component from the mixture model that has the best approximation of the local decision boundary. After that, LEMNA uses the same feature selection method as LIME on the selected linear component to extract the explanation of the target model.

Random [10]. This method randomly selects K features from the target record as the explanation for the target model’s prediction; the feature weight is also randomly assigned.

Greedy [10]. This method greedily selects K features that contribute most to the target model’s prediction. It sequentially removes the features in the target record and then takes the feature that causes the greatest reduction in the model’s prediction accuracy as the selected feature.

It is worth noting that the last two methods (i.e., Random and Greedy) are consistent with the baselines of LIME. In the experiments, is set for the Amazon Product Review dataset and for both the Adult and Bank datasets.
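The Greedy baseline can be sketched as follows. Two assumptions are labeled here and in the code: "removing" a feature means setting it to a baseline value (0 by default, natural for the binary bag-of-words features of the Amazon dataset), and the reduction is measured on the target model's predicted probability for the originally predicted class. `greedy_explanation` and the toy model are hypothetical.

```python
import numpy as np


def greedy_explanation(predict_proba, x, k, target_class, baseline_value=0.0):
    """Greedy baseline: repeatedly remove the feature whose removal most reduces
    the target model's probability for target_class.

    ASSUMPTION: "removing" a feature means setting it to baseline_value.
    """
    x_cur = np.array(x, dtype=float)
    remaining = list(range(len(x_cur)))
    selected = []
    for _ in range(k):
        base = predict_proba(x_cur[None, :])[0, target_class]
        best_j, best_drop = None, -np.inf
        for j in remaining:
            x_try = x_cur.copy()
            x_try[j] = baseline_value
            drop = base - predict_proba(x_try[None, :])[0, target_class]
            if drop > best_drop:
                best_j, best_drop = j, drop
        selected.append((best_j, float(best_drop)))
        x_cur[best_j] = baseline_value  # keep the feature removed for later rounds
        remaining.remove(best_j)
    return selected


def toy_proba(X):
    """Hypothetical logistic target model with fixed coefficients."""
    p = 1.0 / (1.0 + np.exp(-(X @ np.array([3.0, 1.0, 0.0, -2.0]))))
    return np.column_stack([1.0 - p, p])


print(greedy_explanation(toy_proba, [1, 1, 1, 1], k=2, target_class=1))
```

Because each round re-evaluates the model after the previous removal, the procedure needs on the order of $K \cdot d$ model queries per record.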

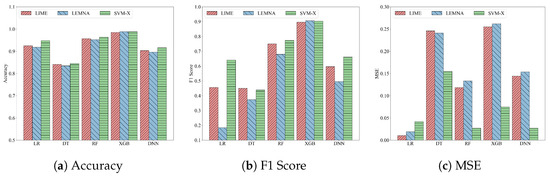

4.2. Performance of SVM-X

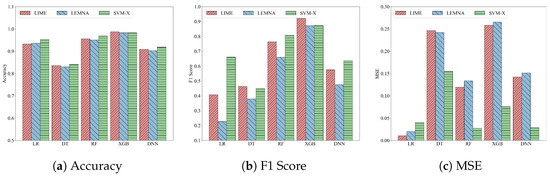

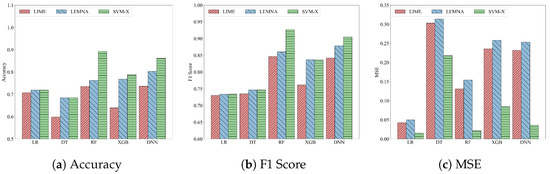

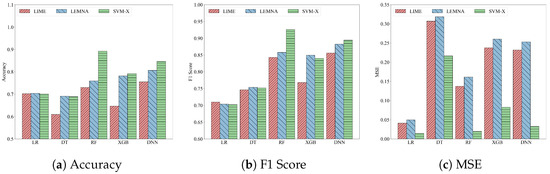

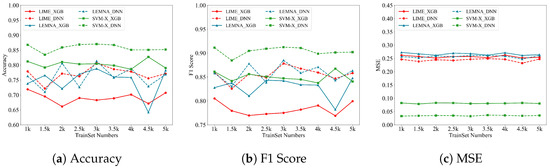

In this part, two tabular datasets (UCI Adult and Bank Marketing) are used for evaluation. The results are shown in Figure 4, Figure 5, Figure 6 and Figure 7.

Figure 4.

Fidelity comparison on Adult training data: accuracy, F1 Score, and MSE.

Figure 5.

Fidelity comparison on Adult test data: accuracy, F1 Score, and MSE.

Figure 6.

Fidelity comparison on Bank training data: accuracy, F1 Score, and MSE.

Figure 7.

Fidelity comparison on Bank test data: accuracy, F1 Score, and MSE.

For the UCI Adult dataset, Figure 4 (resp. Figure 5) shows the F1 Score, accuracy, and MSE of SVM-X, LIME, and LEMNA on the Adult training data (resp. Adult test data). The three metrics follow similar trends in Figure 4 and Figure 5, indicating that the results generalize consistently from the training data to the test data. Figure 4b shows that SVM-X and LEMNA outperform LIME on all target models. Specifically, when the target model is DT, RF, XGB, or LR, the accuracy of SVM-X is substantially higher than that of LIME, with improvements of 11.9%, 14.9%, 17.8%, and 27.2%, respectively. For DNN, however, SVM-X and LIME are relatively close in accuracy, with an improvement of 5.24% on the Adult training data and only 3.95% on the Adult test data. As Figure 4c shows, SVM-X obtains a lower MSE than LIME and LEMNA on every target model except LR, indicating that SVM-X fits the target model better in the local neighborhood of the target instance. This advantage is particularly evident for RF, where the model's structured nonlinearity aligns well with SVM's kernel-based local approximation.

For the Bank Marketing dataset, Figure 6 and Figure 7 show that SVM-X performs best when the target model is RF. For the RF model trained on the Bank training dataset, SVM-X achieves an F1 Score of 0.926 and an accuracy of 0.928, corresponding to improvements of 9.5% and 25.7%, respectively. When the target model is one of the other four models, SVM-X also performs slightly better than LIME and LEMNA. The performance gap on the Bank dataset is smaller than on the Adult dataset, possibly because the Bank dataset has lower feature dimensionality and less sparsity, which reduces the variance among the interpretation methods. Overall, SVM-X outperforms LIME and LEMNA on most models, especially when the target model has a relatively complex structure, such as RF and XGB. This suggests that SVM-X excels at capturing the structured nonlinearity of complex models, whereas its performance may be limited for simpler or less stable models, such as DNN, whose decision boundaries are highly dynamic.

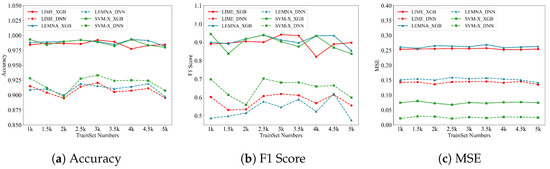

4.3. Impact of the Number of Training Data

In SVM-X, the number of sampled points used to train the local interpretation model may strongly influence its performance. This section therefore evaluates this influence on two datasets (Adult and Bank) and two target models (XGB and DNN) and compares the results with those of LIME and LEMNA. For each target model, a point is randomly selected from the training set as the target record; points are then sampled around the target record and used to train the local interpretation model.
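The sampling-and-fitting loop described above can be sketched in Python with NumPy. This is a minimal illustration under stated assumptions, not the authors' implementation: it assumes isotropic Gaussian perturbations around the target record and, to stay dependency-free, trains a linear SVM with Pegasos-style stochastic subgradient descent on the hinge loss instead of a library solver. `target_model`, `local_svm_surrogate`, and the values of `scale`, `lam`, and `epochs` are hypothetical.

```python
import numpy as np


def train_linear_svm(X, y, lam=0.01, epochs=10, seed=0):
    """Pegasos-style SGD on the L2-regularized hinge loss; y must be in {-1, +1}.

    A constant column is appended so that the bias is learned with the weights.
    """
    rng = np.random.default_rng(seed)
    Xb = np.hstack([X, np.ones((X.shape[0], 1))])
    w = np.zeros(Xb.shape[1])
    t = 0
    for _ in range(epochs):
        for i in rng.permutation(len(Xb)):
            t += 1
            eta = 1.0 / (lam * t)                 # decreasing step size
            violates = y[i] * (Xb[i] @ w) < 1     # margin check before the update
            w *= 1.0 - eta * lam                  # shrinkage from the regularizer
            if violates:
                w += eta * y[i] * Xb[i]           # hinge-loss subgradient step
    return w[:-1], w[-1]                          # (feature weights, bias)


def local_svm_surrogate(predict_fn, x0, n_samples, scale=0.5, seed=0):
    """Sample around x0, label the samples with the black box, fit a local linear SVM."""
    rng = np.random.default_rng(seed)
    # Assumed sampling scheme: isotropic Gaussian perturbations of the target record.
    X = x0 + rng.normal(0.0, scale, size=(n_samples, x0.shape[0]))
    y = np.where(predict_fn(X) == 1, 1.0, -1.0)   # query the target model
    Z = X - x0                                    # features relative to the target record
    w, b = train_linear_svm(Z, y, seed=seed)
    fidelity = np.mean(np.sign(Z @ w + b) == y)   # agreement with the target model
    return w, b, fidelity


def target_model(X):
    """Hypothetical black box: class 1 when x1 + 2*x2 > 1."""
    return (X[:, 0] + 2 * X[:, 1] > 1).astype(int)


x0 = np.array([0.5, 0.25])
for n in (100, 1000, 4000):                        # vary the amount of training data
    w, b, fid = local_svm_surrogate(target_model, x0, n)
    print(n, np.round(w, 2), round(float(fid), 3))
```

The returned `w` plays the role of the per-feature explanation weights; repeating the loop for different `n` mirrors the experiment in this section.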

Figure 8 and Figure 9 show F1, accuracy, and MSE as functions of the number of training samples on the UCI Adult and Bank datasets, respectively. For XGB and DNN trained on the Adult dataset, SVM-X achieves higher F1 and accuracy than LIME and LEMNA as well as the lowest MSE, indicating that it fits the target models best. The amount of training data has a smaller influence on the results when the target model is XGB. On the Adult dataset, the amount of training data has a relatively larger impact, and the trend is nonlinear and relatively complicated. The main reason is that the Adult dataset has more features than the Bank dataset, so more training data are needed to cover the neighborhood of the target record adequately.

Figure 8.

Accuracy, F1 and MSE versus the number of training samples on the Adult dataset.

Figure 9.

Accuracy, F1 and MSE versus the number of training samples on the Bank dataset.

Figure 9 shows that these two methods perform best when the number of training samples is about 4000. When the target model is DNN, the performance gap among the three methods is more pronounced. Consequently, the effect of the amount of training data depends on both the dataset and the target model. Moreover, the more complex a dataset is, the more the results depend on the amount of training data.

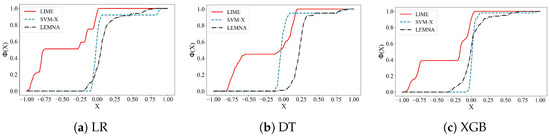

4.4. Weight Stability of Local Interpretation Models

In this subsection, the weight stability of the local interpretation models is evaluated and compared with that of LIME and LEMNA. For each target model, 100 samples are randomly selected around a given record as new target records, and each is used to train a new local interpretation model. These new local interpretation models use the same parameters as the original local interpretation model. This yields 100 weight vectors, from which the variance of each feature weight is calculated. The mean weight variance and the weight-difference curve are then derived for LIME, LEMNA, and SVM-X.
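The stability procedure described above reduces to stacking the weight vectors of the 100 local models and averaging the per-feature variances. The sketch below is a minimal NumPy illustration under stated assumptions: the new target records are drawn as Gaussian perturbations with a hypothetical `radius`, `explain_fn` stands for any local explainer (SVM-X, LIME, or LEMNA), and `toy_explainer` is invented purely to exercise the code.

```python
import numpy as np


def mean_weight_variance(weight_vectors):
    """Mean over features of the variance of each feature weight across local models.

    weight_vectors has shape (n_models, n_features): one row per local model.
    """
    W = np.asarray(weight_vectors, dtype=float)
    per_feature_var = W.var(axis=0)     # population variance of each feature weight
    return float(per_feature_var.mean()), per_feature_var


def stability_experiment(explain_fn, x0, n_models=100, radius=0.1, seed=0):
    """Explain n_models records drawn near x0 and summarize the weight variance.

    explain_fn(record) must return a 1-D array of feature weights.
    """
    rng = np.random.default_rng(seed)
    # Assumed sampling scheme: Gaussian perturbations of x0 with std `radius`.
    records = x0 + rng.normal(0.0, radius, size=(n_models, x0.shape[0]))
    W = np.vstack([explain_fn(r) for r in records])
    return mean_weight_variance(W)


def toy_explainer(record):
    """Hypothetical explainer whose weights drift slightly with the record."""
    return np.array([1.0, -2.0]) + 0.5 * record


mean_var, per_feature = stability_experiment(toy_explainer, np.array([0.0, 0.0]))
print(mean_var, per_feature)
```

A lower mean variance means that nearby records receive more similar explanations, which is the property compared across methods in Table 1.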

Intuitively, the feature weights of the local interpretation models should be stable, since the interpretation models are supposed to give similar explanations for data that are close to each other. Table 1 shows the mean variance of the local weights for these three methods. For the Adult dataset, SVM-X is more stable than LIME on all target models. Specifically, when the target model is LR, DT, RF, XGB, or DNN, the mean variance of the local weights of SVM-X is lower than that of LIME by 64.9%, 34.1%, 55.4%, 74.5%, and 73.5%, respectively. For the Book and DVD datasets, the results of the three methods are relatively close, since both are text datasets. Nevertheless, SVM-X still shows the strongest local weight stability on all target models except DNN. The main reason why SVM-X does not perform well on DNN is that DNN training involves randomness, including dropout regularization and asynchronous stochastic gradient descent. Overall, SVM-X consistently outperforms LIME in local weight stability, and the size of the gain depends on the target model and the dataset. Although LEMNA performs better than LIME on DT for the Adult dataset, SVM-X is still more stable than LEMNA.

Table 1.

Comparison of the Mean Variance of Local Feature Weights for Different Interpretation Methods Across Models and Datasets.

Figure 10 shows the CDF curves of the local weight differences for SVM-X, LIME, and LEMNA on the DVD dataset. The CDF curves clearly show that the weight differences of SVM-X are more concentrated around zero than those of LIME, indicating that SVM-X is more stable than LIME across all target models. For the decision tree and XGBoost models in particular, the weight differences of SVM-X are concentrated around zero, while part of those of LIME are concentrated around 0.1. Even on the LR model, where SVM-X performs worst, nearly 90% of the SVM-X weight differences are concentrated around 0, which is better than LIME. LEMNA is also relatively stable compared with LIME. However, even on DNN, where LEMNA performs best, only 55% of its weight differences are concentrated around 0, which is lower than for SVM-X.
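The CDF in Figure 10 can be computed from the weight vectors collected in the stability experiment. The section does not define the weight difference precisely, so this NumPy sketch assumes it is the absolute difference between each new local model's feature weights and those of the original local model, pooled over models and features. It also treats a difference as “around 0” when it falls below a hypothetical tolerance `eps`; the toy weights are invented.

```python
import numpy as np


def weight_difference_cdf(new_weights, original_weights):
    """Empirical CDF of |w_new - w_orig|, pooled over all local models and features."""
    diffs = np.abs(np.asarray(new_weights, float) - np.asarray(original_weights, float)).ravel()
    xs = np.sort(diffs)
    cdf = np.arange(1, xs.size + 1) / xs.size   # P(difference <= xs[i])
    return xs, cdf


def fraction_near_zero(new_weights, original_weights, eps=0.01):
    """Share of weight differences no larger than eps (assumed reading of 'around 0')."""
    diffs = np.abs(np.asarray(new_weights, float) - np.asarray(original_weights, float))
    return float(np.mean(diffs <= eps))


# Toy input: two new local models compared with the original weights [1.0, 2.0].
W_new = [[1.0, 2.0], [1.005, 2.2]]
xs, cdf = weight_difference_cdf(W_new, [1.0, 2.0])
print(xs, cdf, fraction_near_zero(W_new, [1.0, 2.0]))
```

Plotting `cdf` against `xs` for each method gives curves of the kind shown in Figure 10; a curve that rises earlier indicates more stable weights.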

Figure 10.

Cumulative distribution function (CDF) of weight differences across local models.

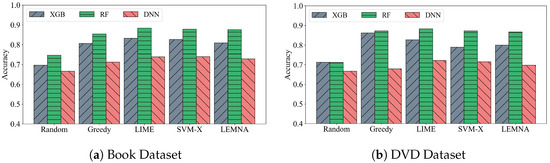

4.5. Fidelity of Local Interpretation Models

In SVM-X, a local interpretation model is constructed around a given instance to reveal the basis on which the target model makes its prediction. Intuitively, how closely the local interpretation model mimics the target model is central to the method. Here, the local fidelity of the local interpretation models is evaluated and compared with that of other feature selection methods, namely LIME, LEMNA, Random, and Greedy, as mentioned before. For each target model, a sample is randomly selected from its training data as the target instance, and the local interpretation model is constructed. The 20 features with the largest absolute weights are then selected and used to train a new model with the same algorithm and parameters as the target model. The accuracy of these new models is then computed for each evaluated method. For the recall metric, the 20 features with the largest absolute weights of the target model are first selected and then compared with the features selected by each evaluated method.
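The top-20 selection and the recall metric above can be sketched with NumPy as follows. This is a minimal illustration, assuming that `reference_weights` holds the target model's own coefficients or importances (for example, LR coefficients) and that recall is the share of the reference top-k features recovered by the explainer's top-k. The function names are hypothetical, and the toy example uses k = 2 instead of 20.

```python
import numpy as np


def top_k_features(weights, k=20):
    """Indices of the k features with the largest absolute weights (ties keep index order)."""
    w = np.abs(np.asarray(weights, dtype=float))
    return np.argsort(-w, kind="stable")[:k]


def recall_at_k(explainer_weights, reference_weights, k=20):
    """Fraction of the reference top-k features that also appear in the explainer's top-k."""
    selected = set(top_k_features(explainer_weights, k).tolist())
    relevant = set(top_k_features(reference_weights, k).tolist())
    return len(selected & relevant) / len(relevant)


# Toy example with k=2 instead of 20.
expl_w = [0.1, -0.9, 0.5, 0.0]
ref_w = [0.8, -0.7, 0.05, 0.0]
print(top_k_features(expl_w, 2), recall_at_k(expl_w, ref_w, 2))

# Retraining step (sketch): keep only the selected columns before refitting a model
# with the target model's algorithm and parameters.
X_toy = np.arange(12.0).reshape(3, 4)
X_selected = X_toy[:, top_k_features(expl_w, 2)]
```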

Figure 11 compares the accuracy of the new models constructed by each method on two datasets: Book (Figure 11a) and DVD (Figure 11b). The dataset used to train the target models has only a small impact on the accuracy results; the trends of the histograms in Figure 11a,b are almost the same. This is because the two datasets are relatively similar and are converted into tabular data with the same method before the target model is trained. SVM-X is very similar to LIME and LEMNA in terms of accuracy and outperforms Random and Greedy on all target models. SVM-X performs best on RF, with accuracy improvements of 17.5% over Random and 2.8% over Greedy. Even on DNN, where SVM-X performs worst, its accuracy is still slightly higher than that of Random and Greedy.

Figure 11.

Prediction accuracy comparison on the Book and DVD datasets.

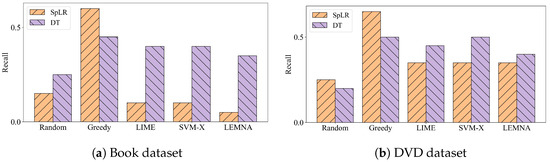

Figure 12 shows the local recall on the Book dataset (Figure 12a) and the DVD dataset (Figure 12b). The target model plays an essential role in the results. Compared with SpLR, SVM-X performs better when the target model is DT, improving recall by 300%. The main reason may be that a linear SVM fits the decision boundary of the tree model better. The type of dataset has little effect on the results, consistent with the analysis of Figure 11. Figure 12 also shows that the recall of SVM-X and LIME is similar, indicating that the two methods have similar local fidelity.

Figure 12.

The prediction recall comparison results.

To compare LIME and SVM-X more clearly, the two methods are applied to a given text instance. Figure 13 shows the individual prediction explanations produced by LIME and SVM-X. The bar chart shows the importance assigned to the most relevant words, which are also highlighted in the text: red bars mark words that contribute to “Positive”, and blue bars mark words that contribute to “Negative”. As Figure 13 shows, the explanation of SVM-X is more reasonable than that of LIME. LIME treats words such as “more” and “very”, which are not closely related to positive sentiment, as major contributors to the prediction, which is implausible. In contrast, SVM-X correctly selects positive sentiment words such as “good” and “great” as the basis of the model's prediction.
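The bar chart in Figure 13 is derived from the signed word weights of each local explanation model. A minimal Python sketch of that step follows, assuming that an explanation is a dictionary of word weights in which positive values support “Positive” and negative values support “Negative”. The function name and the weights are hypothetical, not values from Figure 13.

```python
def split_word_contributions(word_weights, top_n=10):
    """Rank words by |weight| and split them by the class they support.

    word_weights maps each word to its weight in a local linear model: a positive
    weight pushes the prediction toward "Positive" (red bars), a negative weight
    toward "Negative" (blue bars).
    """
    ranked = sorted(word_weights.items(), key=lambda kv: abs(kv[1]), reverse=True)[:top_n]
    positive = [(word, w) for word, w in ranked if w > 0]
    negative = [(word, w) for word, w in ranked if w < 0]
    return positive, negative


# Hypothetical weights, for illustration only.
weights = {"good": 0.40, "great": 0.35, "boring": -0.30, "the": 0.01, "book": -0.02}
pos, neg = split_word_contributions(weights, top_n=3)
print(pos, neg)
```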

Figure 13.

Explanations of individual predictions of classifiers on whether a book review is “positive” or “negative”.

5. Related Work

For a more intuitive comparison, Table 2 compares this work with previous work along four main aspects. The following subsections introduce these related works in more detail.

Table 2.

Comparison of key characteristics of prior work and the proposed SVM-X.

5.1. Model-Specific Explanation

Automatic rule extraction is a prominent model-specific explanation technique specifically designed to interpret the prediction results of decision trees [26]. In early work, Andrews et al. [27] survey rule extraction techniques and propose a useful taxonomy of rule extraction methods along five dimensions, including the quality of the extracted rules. Averkin et al. [28] review several classical rule extraction algorithms for DNNs and attempt to extract rules from neural networks using neuro-fuzzy models.

With the success of DNNs on many tasks, interpreting the predictions of DNN models has attracted the interest of numerous researchers. Some researchers aim to extract a set of rules that explains the prediction behavior of DNN models. Others extract rules by directly mapping inputs to outputs rather than considering the internal state of the DNN model; these methods treat the DNN model as a black box and look for the relationship between its inputs and outputs. In addition, some studies use genetic algorithms [29] or reverse-engineering techniques [30] to extract explainable rules.

Recently, some works have leveraged the gradients of a DNN's output with respect to its input to highlight the pixels of input images that are most relevant to the classification result. Among these works, the saliency map [31] is the most representative and widely used technique. However, since such derivatives may lose important information that flows through a network, many other approaches have been designed to propagate quantities other than gradients through the network, such as DeepLIFT [32], CAM [33], Grad-CAM [34], and SmoothGrad [35]. Adebayo et al. [36] then propose a practical method to evaluate which kinds of explanations saliency-map methods can provide. Kindermans et al. [37] focus on the reliability of saliency methods that aim to explain the predictions of DNNs, assessing reliability by adding a constant shift to the input data. Jin et al. [38] propose the modality-specific feature importance (MSFI) metric to examine whether saliency maps can highlight modality-specific important features, which is important in the biomedical domain. In addition, a recent study [39] introduces an explainable AI method specifically designed for deep learning-based time series classifiers. Mamalakis et al. [40] propose a framework that integrates multiple methods to enhance explanations for DNNs, particularly in tasks involving 2D objects and 3D neuroimaging data.

5.2. Model-Agnostic Explanation

Unlike model-specific methods, model-agnostic explanation techniques require no information about the target model, such as its type, structure, or parameters. These techniques treat ML models as “black boxes” and derive explanations by analyzing the outputs corresponding to a set of modified input records [26].

The most representative model-agnostic explanation method is LIME [10], which can faithfully explain the predictions of any classifier or regressor. However, the coverage of LIME's explanations is unclear. Ribeiro et al. [14] therefore propose Anchors, a model-agnostic explanation method whose explanations have clear coverage. Some researchers find that LIME's interpretations are unstable because the decision boundary of an ML model is not always linear. To address this, Guo et al. [13] propose LEMNA, which uses a mixture regression model enhanced by fused lasso to generate high-fidelity explanations. To improve the robustness of explanations to small variations, Botari et al. [41] propose sampling training points along the submanifold on which the training dataset lies and interpreting the model prediction based on these sampled points.

Moreover, recent model-agnostic techniques have explored new directions. For example, explanation-driven self-adaptation [42] embeds interpretable techniques into adaptive systems to enhance both transparency and adaptability. Another approach extends model-agnostic interpretability measures to quantum neural networks [43], demonstrating the broadening scope of explainable AI in emerging domains such as quantum computing. Delaunay [44] introduced a method to generate local explanations for deployed ML models, addressing practical concerns in real-world applications. Whitten et al. [45] proposed a hybrid architecture that combines explainable and non-explainable components and introduced a new metric to evaluate model effect while optimizing decision quality.

5.3. Explanation for SVM

Since SVM-X uses an SVM to approximate and then explain the target model's prediction locally, this subsection specifically reviews explanation techniques for SVM models.

A rule-based explanation for linear SVM models has been proposed [24], which transforms the decision process of SVM models into a set of human-understandable, non-overlapping rules. Another work [46] applies rule extraction from SVMs to diagnosing hypertension among diabetics. Zhu et al. [47] propose a rule extraction technique based on consistent region covering reduction, named CRCR-SVM, to explain the prediction process of SVM models. An example-based explanation method [25] has also been proposed to explain the predictions of SVM models; it first selects from the training dataset the instances that contribute most to the target model's prediction and then uses them to explain the behavior of the SVM model. A related work [48] proposes a hybrid approach for extracting rules from SVMs for customer relationship management (CRM). More recently, Shakerin et al. [49] focus on inducing logic programs to explain SVM models.

Unlike the above works, which attempt to interpret the prediction behavior of SVM models, SVM-X uses an SVM to approximate the target model locally and interprets the prediction for a target record through the extracted weight of each feature. Furthermore, since SVM models can map data records into a higher-dimensional space, they can fit nonlinear decision boundaries much better than the linear models used in LIME and LEMNA. Therefore, SVM-X does not assume that the decision boundary of the target model near the target record is linear, and can thus provide a more stable explanation.
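The claim that a kernel-based surrogate can follow nonlinear local boundaries that a linear surrogate cannot can be illustrated with a small NumPy experiment. To stay dependency-free, this sketch uses an RBF kernel ridge classifier as a stand-in for a kernel SVM (both fit a linear model in the feature space induced by the kernel, but with different losses) and a hypothetical XOR-like target model; the kernel width `gamma` and the regularization value 0.1 are arbitrary choices.

```python
import numpy as np


def rbf_kernel(A, B, gamma=10.0):
    """Gaussian (RBF) kernel matrix exp(-gamma * ||a - b||^2)."""
    sq_dists = (A ** 2).sum(1)[:, None] + (B ** 2).sum(1)[None, :] - 2.0 * A @ B.T
    return np.exp(-gamma * sq_dists)


def xor_target(X):
    """Hypothetical black box with a nonlinear (XOR-like) decision boundary."""
    return np.where(X[:, 0] * X[:, 1] > 0, 1.0, -1.0)


def add_bias(X):
    return np.hstack([X, np.ones((len(X), 1))])


rng = np.random.default_rng(0)
X_train = rng.uniform(-1, 1, size=(800, 2))   # sampled neighborhood
X_test = rng.uniform(-1, 1, size=(400, 2))    # fresh points for measuring fidelity
y_train, y_test = xor_target(X_train), xor_target(X_test)

# Linear surrogate: least-squares fit of the labels with a bias term.
w, *_ = np.linalg.lstsq(add_bias(X_train), y_train, rcond=None)
linear_acc = np.mean(np.sign(add_bias(X_test) @ w) == y_test)

# Kernel surrogate: kernel ridge regression on the labels, thresholded at 0.
alpha = np.linalg.solve(rbf_kernel(X_train, X_train) + 0.1 * np.eye(len(X_train)), y_train)
kernel_acc = np.mean(np.sign(rbf_kernel(X_test, X_train) @ alpha) == y_test)

print(f"linear fidelity: {linear_acc:.2f}, kernel fidelity: {kernel_acc:.2f}")
```

No half-plane can separate the XOR-like classes, so the linear surrogate stays far from perfect agreement, while the kernel surrogate tracks the target closely.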

6. Conclusions

In this paper, a model-agnostic interpretation method named SVM-X is proposed, which interprets the prediction of any black-box ML model for an individual instance by extracting the contribution of each feature. Compared with previous works, SVM-X approximates the target model around the target record more accurately, providing more stable and reasonable explanations. The experimental results demonstrate that the local models constructed by SVM-X achieve higher local fidelity than existing methods such as LIME and LEMNA. Moreover, analysis of the extracted feature weights shows that SVM-X offers more stable interpretations, even when the target record varies around a point in the data space. Overall, SVM-X provides stable and reliable interpretations that can assist in model selection, in evaluating the trustworthiness of predictions, and in improving the reliability of untrustworthy ML models. This work offers a new approach to the local explanation of machine learning models, improving both the efficiency and accuracy of model interpretation, thereby deepening the understanding of machine learning models' inner workings and supporting the credibility and security of artificial intelligence.

SVM-X offers several advantages over traditional interpretation methods. It provides more stable and consistent local explanations for black-box models, especially complex models such as random forests and XGBoost. Furthermore, its flexibility and ability to approximate nonlinear decision boundaries give it an advantage over linear methods such as LIME and LEMNA. However, SVM-X has limitations. The method relies on linear kernels for local approximation, which may limit its effectiveness on highly complex interactions or datasets with intricate dependencies. Moreover, although it performs well on many common models, its performance on deep neural networks (DNNs) still needs improvement, primarily due to the inherent stochasticity and complexity of these models.

Looking ahead, there are several promising future directions for the SVM-X method. First, it can be extended to handle more complex data types, such as time-series and graph-structured data, which involve more intricate feature dependencies. To address the challenges of capturing complex interactions, a variety of kernel methods could be explored to enhance the fitting ability of nonlinear boundaries. Additionally, real-time data processing can benefit from the integration of incremental learning frameworks, which would reduce computational overhead and allow for dynamic updates, making SVM-X more scalable and adaptable to evolving datasets. These extensions would enhance the applicability and performance of SVM-X, ensuring its continued relevance in diverse, real-world scenarios.

Author Contributions

Conceptualization, B.X.; methodology, J.X.; validation, B.O.; investigation, B.Z. and J.Z.; data curation, H.G.; writing—original draft preparation, Z.Z.; writing—review and editing, J.W. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded in part by the Key Research and Development Program of Hubei Province, China under Grant 2024BAB016; in part by the Key Research and Development Program of Hubei Province, China under Grant 2024BAB031; and in part by the Key Research and Development Program of Hubei Province, China under Grant 2023BAB074.

Data Availability Statement

The Amazon Product Review dataset can be accessed through the following link (accessed on 11 June 2025): https://huggingface.co/datasets/katossky/multi-domain-sentiment. The UCI Adult dataset can be accessed through the following link (accessed on 11 June 2025): https://archive.ics.uci.edu/dataset/2/adult. The Bank Marketing dataset can be accessed through the following link (accessed on 11 June 2025): https://archive.ics.uci.edu/dataset/222/bank%2Bmarketing.

Conflicts of Interest

The authors declare no conflicts of interest.

References

1. Zhai, X.; Kolesnikov, A.; Houlsby, N.; Beyer, L. Scaling vision transformers. In Proceedings of the IEEE/CVF CVPR, New Orleans, LA, USA, 18–22 June 2022; pp. 12104–12113.

2. Min, B.; Ross, H.; Sulem, E.; Veyseh, A.P.B.; Nguyen, T.H.; Sainz, O.; Agirre, E.; Heintz, I.; Roth, D. Recent advances in natural language processing via large pre-trained language models: A survey. ACM Comput. Surv. 2023, 56, 1–40.

3. Rawal, A.; McCoy, J.; Rawat, D.B.; Sadler, B.M.; Amant, R.S. Recent advances in trustworthy explainable artificial intelligence: Status, challenges, and perspectives. IEEE Trans. Artif. Intell. 2022, 3, 852–866.

4. Zou, L.; Goh, H.L.; Liew, C.J.Y.; Quah, J.L.; Gu, G.T.; Chew, J.J.; Kumar, M.P.; Ang, C.G.L.; Ta, A.W.A. Ensemble image explainable AI (XAI) algorithm for severe community-acquired pneumonia and COVID-19 respiratory infections. IEEE Trans. Artif. Intell. 2023, 4, 242–254.

5. Saleem, R.; Yuan, B.; Kurugollu, F.; Anjum, A.; Liu, L. Explaining deep neural networks: A survey on the global interpretation methods. Neurocomputing 2022, 513, 165–180.

6. Yeh, C.K.; Kim, B.; Arik, S.; Li, C.L.; Pfister, T.; Ravikumar, P. On Completeness-aware Concept-Based Explanations in Deep Neural Networks. In Proceedings of the NeurIPS, Virtual, 6–12 December 2020; Volume 33, pp. 20554–20565.

7. Ghorbani, A.; Wexler, J.; Zou, J.Y.; Kim, B. Towards Automatic Concept-based Explanations. In Proceedings of the NeurIPS, Vancouver, BC, Canada, 8–14 December 2019; Volume 32.

8. Goyal, Y.; Feder, A.; Shalit, U.; Kim, B. Explaining classifiers with causal concept effect (CaCE). arXiv 2019, arXiv:1907.07165.

9. Lundberg, S.M.; Erion, G.; Chen, H.; DeGrave, A.; Prutkin, J.M.; Nair, B.; Katz, R.; Himmelfarb, J.; Bansal, N.; Lee, S.I. From local explanations to global understanding with explainable AI for trees. Nat. Mach. Intell. 2020, 2, 56–67.

10. Ribeiro, M.T.; Singh, S.; Guestrin, C. Why should I trust you? Explaining the predictions of any classifier. In Proceedings of the ACM SIGKDD, San Francisco, CA, USA, 13–17 August 2016; pp. 1135–1144.

11. Baehrens, D.; Schroeter, T.; Harmeling, S.; Kawanabe, M.; Hansen, K.; Müller, K.R. How to explain individual classification decisions. J. Mach. Learn. Res. 2010, 11, 1803–1831.

12. Strumbelj, E.; Kononenko, I. An efficient explanation of individual classifications using game theory. J. Mach. Learn. Res. 2010, 11, 1–18.

13. Guo, W.; Mu, D.; Xu, J.; Su, P.; Wang, G.; Xing, X. LEMNA: Explaining deep learning based security applications. In Proceedings of the 2018 ACM SIGSAC Conference on Computer and Communications Security, Toronto, ON, Canada, 15–19 October 2018; pp. 364–379.

14. Ribeiro, M.T.; Singh, S.; Guestrin, C. Anchors: High-Precision Model-Agnostic Explanations. In Proceedings of the AAAI, New Orleans, LA, USA, 2–7 February 2018; Volume 18, pp. 1527–1535.

15. He, Y.; Lou, J.; Qin, Z.; Ren, K. FINER: Enhancing State-of-the-art Classifiers with Feature Attribution to Facilitate Security Analysis. In Proceedings of the 2023 ACM SIGSAC CCS, Copenhagen, Denmark, 26–30 November 2023; pp. 416–430.

16. Blitzer, J.; Dredze, M.; Pereira, F. Biographies, bollywood, boom-boxes and blenders: Domain adaptation for sentiment classification. In Proceedings of the ACL, Prague, Czech Republic, 23–27 June 2007; pp. 440–447.

17. Kohavi, R. Scaling up the accuracy of naive-Bayes classifiers: A decision-tree hybrid. In Proceedings of the ACM SIGKDD, Portland, OR, USA, 2–4 August 1996; Volume 96, pp. 202–207.

18. Moro, S.; Laureano, R.; Cortez, P. Using data mining for bank direct marketing: An application of the CRISP-DM methodology. EUROSIS-ETI 2011. Available online: https://hdl.handle.net/1822/14838 (accessed on 11 June 2025).

19. Wright, R.E. Logistic Regression; American Psychological Association: Washington, DC, USA, 1995.

20. Svetnik, V.; Liaw, A.; Tong, C.; Culberson, J.C.; Sheridan, R.P.; Feuston, B.P. Random forest: A classification and regression tool for compound classification and QSAR modeling. J. Chem. Inf. Comput. Sci. 2003, 43, 1947–1958.

21. Chen, T.; Guestrin, C. XGBoost: A scalable tree boosting system. In Proceedings of the ACM SIGKDD, San Francisco, CA, USA, 13–17 August 2016; pp. 785–794.

22. Safavian, S.R.; Landgrebe, D. A survey of decision tree classifier methodology. IEEE Trans. Syst. Man, Cybern. 1991, 21, 660–674.

23. Liu, W.; Wang, Z.; Liu, X.; Zeng, N.; Liu, Y.; Alsaadi, F.E. A survey of deep neural network architectures and their applications. Neurocomputing 2017, 234, 11–26.

24. Fung, G.; Sandilya, S.; Rao, R.B. Rule Extraction from Linear Support Vector Machines. In Proceedings of the ACM SIGKDD, Chicago, IL, USA, 21–24 August 2005; pp. 32–40.

25. Wachter, S.; Mittelstadt, B.; Russell, C. Counterfactual explanations without opening the black box: Automated decisions and the GDPR. Harv. JL Tech. 2017, 31, 841.

26. Dwivedi, R.; Dave, D.; Naik, H.; Singhal, S.; Omer, R.; Patel, P.; Qian, B.; Wen, Z.; Shah, T.; Morgan, G.; et al. Explainable AI (XAI): Core ideas, techniques, and solutions. ACM Comput. Surv. 2023, 55, 1–33.

27. Andrews, R.; Diederich, J.; Tickle, A.B. Survey and critique of techniques for extracting rules from trained artificial neural networks. Knowl.-Based Syst. 1995, 8, 373–389.

28. Averkin, A.; Yarushev, S. Fuzzy rules extraction from deep neural networks. In Proceedings of the EEKM, Moscow, Russia, 8–9 December 2021.

29. Hailesilassie, T. Rule extraction algorithm for deep neural networks: A review. arXiv 2016, arXiv:1610.05267.

30. Zilke, J.R.; Mencía, E.L.; Janssen, F. DeepRED: Rule extraction from deep neural networks. In Proceedings of the ICDS, Venice, Italy, 24–28 April 2016; pp. 457–473.

31. Zeiler, M.D.; Fergus, R. Visualizing and understanding convolutional networks. In Proceedings of the ECCV, Zurich, Switzerland, 6–12 September 2014; pp. 818–833.

32. Shrikumar, A.; Greenside, P.; Kundaje, A. Learning important features through propagating activation differences. arXiv 2017, arXiv:1704.02685.

33. Zhou, B.; Khosla, A.; Lapedriza, A.; Oliva, A.; Torralba, A. Learning deep features for discriminative localization. In Proceedings of the IEEE CVPR, Las Vegas, NV, USA, 27–30 June 2016; pp. 2921–2929.

34. Selvaraju, R.R.; Cogswell, M.; Das, A.; Vedantam, R.; Parikh, D.; Batra, D. Grad-CAM: Visual explanations from deep networks via gradient-based localization. In Proceedings of the IEEE ICCV, Venice, Italy, 22–29 October 2017; pp. 618–626.

35. Smilkov, D.; Thorat, N.; Kim, B.; Viégas, F.; Wattenberg, M. SmoothGrad: Removing noise by adding noise. arXiv 2017, arXiv:1706.03825.

36. Adebayo, J.; Gilmer, J.; Muelly, M.; Goodfellow, I.; Hardt, M.; Kim, B. Sanity checks for saliency maps. Adv. Neural Inf. Process. Syst. 2018, 31, 1–11.

37. Kindermans, P.J.; Hooker, S.; Adebayo, J.; Alber, M.; Schütt, K.T.; Dähne, S.; Erhan, D.; Kim, B. The (Un)reliability of saliency methods. In Explainable AI: Interpreting, Explaining and Visualizing Deep Learning; Springer: Berlin/Heidelberg, Germany, 2019; pp. 267–280.

38. Jin, W.; Li, X.; Hamarneh, G. One map does not fit all: Evaluating saliency map explanation on multi-modal medical images. arXiv 2021, arXiv:2107.05047.

39. Mekonnen, E.T.; Longo, L.; Dondio, P. A global model-agnostic rule-based XAI method based on Parameterized Event Primitives for time series classifiers. Front. Artif. Intell. 2024, 7, 1381921.

40. Mamalakis, M.; Mamalakis, A.; Agartz, I.; Mørch-Johnsen, L.E.; Murray, G.; Suckling, J.; Lio, P. Solving the enigma: Deriving optimal explanations of deep networks. arXiv 2024, arXiv:2405.10008.

41. Botari, T.; Izbicki, R.; de Carvalho, A.C. Local Interpretation Methods to Machine Learning Using the Domain of the Feature Space. In Proceedings of the Joint European Conference on Machine Learning and Knowledge Discovery in Databases, Würzburg, Germany, 16–20 September 2019; pp. 241–252.

42. Negri, F.R.; Nicolosi, N.; Camilli, M.; Mirandola, R. Explanation-driven Self-adaptation using Model-agnostic Interpretable Machine Learning. In Proceedings of the 19th International Symposium on Software Engineering for Adaptive and Self-Managing Systems, Lisbon, Portugal, 15–16 April 2024; pp. 189–199.

43. Pira, L.; Ferrie, C. On the interpretability of quantum neural networks. Quantum Mach. Intell. 2024, 6, 52.

44. Delaunay, J. Explainability for Machine Learning Models: From Data Adaptability to User Perception. Ph.D. Thesis, Université de Rennes, Rennes, France, 2023.

45. Whitten, P.; Wolff, F.; Papachristou, C. An AI Architecture with the Capability to Explain Recognition Results. arXiv 2024, arXiv:2406.08740.

46. Singh, N.; Singh, P.; Bhagat, D. A rule extraction approach from support vector machines for diagnosing hypertension among diabetics. Expert Syst. Appl. 2019, 130, 188–205.

47. Zhu, P.; Hu, Q. Rule extraction from support vector machines based on consistent region covering reduction. Knowl.-Based Syst. 2013, 42, 1–8.

48. Farquad, M.A.H.; Ravi, V.; Raju, S.B. Churn prediction using comprehensible support vector machine: An analytical CRM application. Appl. Soft Comput. J. 2014, 19, 31–40.

49. Shakerin, F.; Gupta, G. White-box induction from SVM models: Explainable AI with logic programming. Theory Pract. Log. Program. 2020, 20, 656–670.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).