3. Methodology

In this paper, 294 companies from the S&P 500 index that were continuously listed over the analysis period (1 January 2010–1 June 2022) are clustered. The S&P 500 was chosen because it comprises 500 of the largest companies by market capitalization on the most developed capital market, the US equity market, and because most investors, hedge funds, and fund managers worldwide use it as their primary performance benchmark and as an indicator of the US stock market. Concentrating on S&P 500 constituents ensures substantial liquidity, which makes clustering-based trading methods practical. Although this choice may limit generalizability to other markets, it raises the study’s relevance and applicability for real investors looking to beat the benchmark. Because companies are added to and removed from the index as their eligibility changes, firms that were not listed throughout the whole period were excluded in order to achieve robust clustering. This is consistent with the core AI/ML tenet of “Garbage In, Garbage Out”.

This research is motivated by the premise that clustering companies with k-means on financial parameters can identify groups that consistently beat the S&P 500 benchmark on a risk-adjusted return basis. The study intends to help investors build well-diversified portfolios that enhance risk-adjusted returns by using important liquidity and solvency criteria.

Python was chosen for data processing and clustering because it is widely used and highly versatile in machine learning. Python 3.9 served as the basis for all data wrangling and analysis. The requests package was used to query the Calcbench REST API, scikit-learn (StandardScaler, KMeans, silhouette_score) for modeling and validation, matplotlib for visualization, yfinance for Yahoo Finance price pulls, pandas for tabular manipulation, and NumPy for numerical operations. Price data were obtained from Yahoo Finance through its Application Programming Interface (API), while the Calcbench API was used to extract fundamental financial data from Securities and Exchange Commission (SEC) filings. Before modeling, the raw Calcbench and Yahoo Finance pull was cleaned as follows: (i) to reduce survivorship-bias noise from temporary constituents, the universe was limited to the 294 firms that remained in the S&P 500 for the entire 2010–2022 window; (ii) quarterly fundamentals with missing observations were forward-filled for up to one year, and any remaining gaps (<0.3% of cells) were removed. To prevent outliers from dominating the distance measure in k-means, extreme ratio values beyond the 1st and 99th percentiles were winsorized. Before applying machine learning methods, all input variables were standardized to avoid potential data distortions or biased outcomes. Using the scikit-learn Python library’s StandardScaler, the standard score (z-score), denoted by

z, was determined as follows:

$$z = \frac{x - \mu}{\sigma}$$

where $\mu$ is the training sample mean, $\sigma$ is the training sample standard deviation, and $x$ is the sample [42]. The study optimizes the efficacy of the clustering process and the accuracy of its conclusions by guaranteeing consistent data preparation.
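As a rough illustration of the winsorizing and standardization steps described above, the following sketch uses NumPy only. The function name, the sample ratio values, and the order of operations (winsorize first, then standardize) are assumptions made for illustration and are not taken from the study’s code. The standard deviation uses the population form (ddof = 0), which is what scikit-learn’s StandardScaler applies.

```python
import numpy as np

def winsorize_and_standardize(values, lower_pct=1.0, upper_pct=99.0):
    """Clip values to the given percentiles, then convert them to z-scores."""
    x = np.asarray(values, dtype=float)
    lo, hi = np.percentile(x, [lower_pct, upper_pct])
    clipped = np.clip(x, lo, hi)   # winsorize extreme ratio values
    mu = clipped.mean()            # sample mean
    sigma = clipped.std()          # population standard deviation (ddof=0)
    return (clipped - mu) / sigma  # z = (x - mu) / sigma

# Hypothetical ratio values for six firms, including one extreme outlier.
ratios = np.array([0.05, 0.08, 0.10, 0.12, 0.15, 9.50])
z = winsorize_and_standardize(ratios)
```

After this transformation the values have zero mean and unit variance, so no single firm dominates the Euclidean distances used later by k-means. The sketch does not handle the degenerate case in which all values are identical (sigma equal to zero).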

Clustering, which involves grouping related data points into discrete groups known as clusters, is an essential step that follows data aggregation and normalization. As an unsupervised learning technique, clustering finds hidden patterns and structures in the dataset without using pre-assigned labels. Because stock market data are complex and carry no obvious classification labels, the algorithm analyzes the data on its own to identify the most suitable clusters of stocks. This helps investors find businesses with comparable financial traits and make well-informed portfolio decisions, which makes the method very helpful for stock selection and diversification.

Finding the ideal number of clusters (k) is a crucial phase in the clustering process. Although visual inspection or a preset number of clusters could be used, objective techniques are available to limit human bias. The Elbow Method, among the most popular approaches, runs the clustering algorithm for a range of values of k and computes the Within-Cluster Sum of Squares (WCSS) for each. The WCSS measures how compact the clusters are and is calculated as follows:

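$$\mathrm{WCSS} = \sum_{i=1}^{n} \lVert x_i - c_i \rVert^2$$

where $x_i$ represents the i-th of the $n$ data points, and $c_i$ is the centroid of the cluster to which it belongs. The optimal number of clusters is typically identified as the value of k beyond which the WCSS no longer decreases sharply, forming an “elbow” shape on the graph.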
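To make the WCSS definition concrete, the sketch below computes it for a fixed assignment of points to clusters. The function name and the four one-dimensional points are hypothetical and chosen only so the arithmetic can be checked by hand.

```python
import numpy as np

def wcss(X, labels):
    """Within-Cluster Sum of Squares for a given assignment of points to clusters."""
    X = np.asarray(X, dtype=float)
    labels = np.asarray(labels)
    total = 0.0
    for c in np.unique(labels):
        members = X[labels == c]
        centroid = members.mean(axis=0)             # centroid of cluster c
        total += ((members - centroid) ** 2).sum()  # squared Euclidean distances
    return total

# Four hypothetical points forming two obvious groups.
X = np.array([[0.0], [2.0], [10.0], [12.0]])
wcss_k1 = wcss(X, [0, 0, 0, 0])  # one cluster: centroid 6, WCSS = 36 + 16 + 16 + 36 = 104
wcss_k2 = wcss(X, [0, 0, 1, 1])  # two clusters: centroids 1 and 11, WCSS = 4
```

The drop from 104 to 4 when moving from one to two clusters is the kind of sharp decrease the Elbow Method looks for; adding further clusters to this toy data could only reduce the WCSS by at most 4.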

The Elbow Method is not necessarily the most accurate approach for identifying the most suitable number of clusters. If the inflection point is not readily apparent, the choice of k becomes unclear. In such circumstances, it may be necessary to test a range of values for k and to consider alternative clustering evaluation methods in order to obtain the most relevant stock segmentation.

Silhouette Analysis is another popular method for determining the ideal number of clusters (k). Using a Silhouette Score that ranges from −1 to 1, this method assesses how well each data point fits into its assigned cluster compared with other clusters. A score near −1 indicates that the data point has probably been assigned to the wrong cluster. A score near 1 indicates that the data point is well matched to its cluster. A score close to 0 indicates that clusters overlap, which weakens the separation between them. The number of clusters (k) that produces Silhouette Scores closest to one is chosen [43]. The Silhouette Score S(i) for a data point i is determined using the following formula:

$$S(i) = \frac{b(i) - a(i)}{\max\{a(i),\, b(i)\}}$$

where a(i) represents the average intra-cluster distance, or the mean distance between i and all other points in the same cluster, and b(i) represents the average inter-cluster distance, or the mean distance from i to all points in the nearest neighboring cluster.
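The quantities a(i) and b(i) can be computed directly from pairwise distances. The following NumPy sketch evaluates S(i) = (b(i) − a(i)) / max{a(i), b(i)} for every point; the function name and toy data are hypothetical, and points in single-member clusters are given a score of 0 by convention. It assumes at least two clusters and no duplicated points.

```python
import numpy as np

def silhouette_scores(X, labels):
    """Per-point Silhouette Scores for a given clustering (needs >= 2 clusters)."""
    X = np.asarray(X, dtype=float)
    labels = np.asarray(labels)
    # Pairwise Euclidean distances between all points.
    dist = np.linalg.norm(X[:, None, :] - X[None, :, :], axis=2)
    scores = np.zeros(len(X))
    for i in range(len(X)):
        same = labels == labels[i]
        same[i] = False                  # exclude the point itself
        if not same.any():
            continue                     # single-member cluster: score stays 0
        a = dist[i, same].mean()         # mean distance within own cluster
        b = min(dist[i, labels == c].mean()
                for c in np.unique(labels) if c != labels[i])  # nearest other cluster
        scores[i] = (b - a) / max(a, b)
    return scores

X = np.array([[0.0], [2.0], [10.0], [12.0]])
scores = silhouette_scores(X, [0, 0, 1, 1])
mean_score = scores.mean()
```

For the first point, a = 2 and b = (10 + 12) / 2 = 11, giving S = 9/11; for the second, a = 2 and b = 9, giving S = 7/9. Averaging the per-point scores yields the overall Silhouette Score that is compared across candidate values of k.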

By integrating insights from the Elbow Method and Silhouette Analysis, the optimal number of clusters (k) for this study was determined to be 2. The k-means clustering technique was chosen because of its efficiency, scalability, and broad application in unsupervised machine learning. K-means is a partitional clustering algorithm that divides the dataset into k distinct clusters, each represented by a cluster centroid. It accepts unlabeled data as input and assigns each data point to the nearest cluster centroid, seeking to minimize the sum of squared distances between each point and its corresponding centroid. Density-based (DBSCAN) and distributional (Gaussian Mixture Model, GMM) approaches were examined but not implemented: DBSCAN requires an ε-neighborhood parameter that is difficult to calibrate in this eight-ratio, 12-year panel, while GMMs add stronger distributional assumptions and a significantly longer run-time without providing a clearer economic interpretation. K-means is a local optimization approach that does not always find the global minimum of the sum of squared distances between data points and centroids, but it is quite effective in practice. Because it minimizes Euclidean distance, k-means implicitly assumes roughly spherical clusters with similar within-cluster variance; z-score standardization of all input features supports this assumption by balancing scale and variation across dimensions. To increase accuracy and reduce the chance of convergence to a poor solution, the method is run several times with different random initializations, and the best clustering output across all runs is chosen.

The steps of the algorithm are as follows:

1. Determine the number of clusters (k).

2. Choose k data points at random as the initial cluster centroids.

3. Assign each data point to its closest cluster centroid.

4. Relocate each cluster centroid to the mean of all data points assigned to it.

5. Repeat steps 3 and 4 until the cluster centroids no longer move, at which point the data is clustered.
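The steps above can be sketched in a few lines of NumPy. This is a minimal, single-run illustration and not the scikit-learn implementation used in the study; the function name, the fixed seed, and the toy data are assumptions, and a cluster that becomes empty simply keeps its previous centroid.

```python
import numpy as np

def kmeans(X, k, max_iter=300, seed=0):
    """Single run of the basic k-means procedure (max_iter must be >= 1)."""
    X = np.asarray(X, dtype=float)
    rng = np.random.default_rng(seed)
    # Step 2: pick k distinct data points as the initial centroids.
    centroids = X[rng.choice(len(X), size=k, replace=False)]
    for _ in range(max_iter):
        # Step 3: assign each point to its nearest centroid.
        dist = np.linalg.norm(X[:, None, :] - centroids[None, :, :], axis=2)
        labels = dist.argmin(axis=1)
        # Step 4: move each centroid to the mean of its assigned points.
        new_centroids = np.array([
            X[labels == j].mean(axis=0) if np.any(labels == j) else centroids[j]
            for j in range(k)
        ])
        # Step 5: stop once the centroids no longer move.
        if np.allclose(new_centroids, centroids):
            break
        centroids = new_centroids
    return labels, centroids

# Two hypothetical, well-separated groups of points.
X = np.array([[0.0, 0.0], [0.0, 1.0], [10.0, 10.0], [10.0, 11.0]])
labels, centroids = kmeans(X, k=2)
```

Because the result depends on the random starting centroids, a fuller version would repeat this run with several seeds and keep the solution with the lowest sum of squared distances.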

With all companies clustered into two separate groups (cluster 0 and cluster 1) based on profitability measures for each year, the next step is to determine whether these clusters provide real benefit to traders and investors when building portfolios. A one-year backtest period was used to balance data availability against meaningful performance assessment. A one-year period can incorporate annual financial statements, which provide a comprehensive evaluation of a company’s profitability, liquidity, and solvency. A year also gives businesses ample opportunity to adjust their plans and react to shifting market dynamics, which increases the relevance of performance evaluations.

In practical terms, investment funds and portfolio managers usually assess performance annually, using profit and loss statements one year apart. Because the research design follows this standard business practice, its results remain directly relevant to actual investment decision-making. Furthermore, year-long periods yield several non-overlapping observations, which strengthens the robustness of the analysis without adding transient market noise. The assessment is carried out through backtesting, which uses historical data to estimate how each cluster would have performed over time.

Backtesting uses financial information from January 1 to December 31 of Year 0. Because companies disclose audited financial data at different times, the clustering process is completed by May 31 of Year 1 to accommodate reporting delays. After clustering is completed, two equally weighted portfolios are created, each comprising all of the companies from its respective cluster. Each stock’s weight is allocated equally, calculated as 100% divided by the total number of firms in the portfolio. Equal-weighted portfolios were employed to reduce concentration risk and avoid overexposure to the biggest corporations. A market-cap weighted strategy would limit potential outperformance by producing portfolios that are too similar to the S&P 500. Equal weighting also makes it easier to replicate a portfolio without constantly re-balancing, making it more affordable for investors with a smaller budget. As prices fluctuate, market-cap weighting would need to be adjusted regularly, which would raise transaction costs and portfolio turnover. From June 1 of Year 1 to June 1 of Year 2, these portfolios are backtested to compare their performance to one another and to the overall market. The SPY ETF is used as the market benchmark, as it closely tracks the S&P 500 index, which is widely regarded by asset managers as the performance benchmark to surpass. Given this methodology, backtesting results are valid until June 1, 2022, based on clustering from 2020 profitability ratios. While clustering for 2021 profitability ratios is available, the backtesting period is still incomplete; consequently, it was excluded to prevent drawing hasty conclusions. To allow for direct performance comparisons, all three portfolios were normalized to a starting value of 1.0 (or 100%). This uniformity ensures that relative performance variations are easily discernible over time.

Real-world trading restrictions, including transaction charges, liquidity limitations, and re-balancing frictions, are not included in this analysis. The main goal is to isolate the pure economic signal produced by the clustering methodology without introducing time-varying cost assumptions that are challenging to quantify over extended periods of time. In addition, this method stays in line with earlier research, which typically abstracts from implementation costs in order to concentrate on theoretical and structural insights. Subsequent research should naturally incorporate real-world trading limitations to assess the strategy’s viability in applied portfolio management. After the backtests had been completed, key performance indicators such as return, volatility, and Sharpe Ratio were determined for each portfolio. Alternative measures such as Jensen’s alpha (CAPM abnormal return) and Treynor ratio (excess return per unit of systematic risk) were considered but omitted from the headline results because (i) they both embed a single-factor risk model that the study purposefully avoids; (ii) initial estimates (reported in the online appendix) revealed no qualitative change in conclusions relative to Sharpe; and (iii) they require a stable beta estimate, which is noisy for annually re-balanced, equally weighted clusters of varying sector composition.
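The portfolio construction described above (equal initial weights, normalization to 1.0, no trading costs) can be illustrated with a short NumPy sketch. It assumes a buy-and-hold portfolio that is not re-balanced within the one-year window, which is one reading of the text rather than a confirmed detail; the function name and the price matrix are hypothetical.

```python
import numpy as np

def equal_weight_portfolio(prices):
    """Value path of an equally weighted buy-and-hold portfolio, starting at 1.0.

    prices: array of shape (days, firms) with each firm's price per day.
    """
    prices = np.asarray(prices, dtype=float)
    relative = prices / prices[0]   # each stock normalized to 1.0 on the first day
    return relative.mean(axis=1)    # each stock carries weight 1 / number of firms

# Hypothetical prices for two firms over three days.
prices = np.array([[10.0, 20.0],
                   [11.0, 20.0],
                   [12.0, 22.0]])
values = equal_weight_portfolio(prices)  # 1.0, 1.05, 1.15
```

Applying the same normalization to the benchmark price series (dividing by its first value) puts all three curves on a common starting point of 1.0.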

Figure 1 presents the simplified process flow diagram.

Because the yearly, overlapping return series violate the i.i.d. assumption and the sample size (12 rolling windows) is too small for reliable resampling, formal significance tests (such as Jobson–Korkie or bootstrapped Sharpe differences) were not conducted. As a result, the analysis views Sharpe differences as economically, rather than statistically, informative, leaving rigorous inference to future work. The Sharpe Ratio, developed by William F. Sharpe [44], is a popular tool among financial professionals for evaluating risk-adjusted returns. This metric assesses the reward-to-variability tradeoff, providing a more accurate representation of portfolio performance by adjusting returns for risk. The Sharpe Ratio is the primary measure of risk-adjusted performance in this study. Both researchers and practitioners use and understand it extensively, and it is often regarded as one of the most significant metrics in investment evaluation. Since annualized Sharpe Ratios are reported in the majority of conventional asset pricing and factor-anomaly literature, its adoption also makes comparisons with previous research easier.

Other performance measures, such as the Sortino Ratio or Maximum Drawdown, provide additional information, but they also introduce more parameters and customization options. This may raise concerns about “metric mining”, in which selective reporting favors the most flattering outcomes. As a result, methodological clarity and conformity to accepted norms receive top priority in the current analysis. Further research should focus on broadening the range of performance criteria, especially for a deeper assessment of drawdown sensitivity or downside risk.

The Sharpe Ratio is computed using the following formula:

$$\text{Sharpe Ratio} = \frac{R_p - R_f}{\sigma_p}$$

where $R_p$ represents the portfolio return, $R_f$ is the risk-free rate, approximated using the average annual yield of the 10-year US Treasury Note as a proxy, and $\sigma_p$ denotes the standard deviation of the portfolio’s excess return, serving as a measure of its volatility. The Sharpe Ratio is selected as the study’s main efficiency statistic because it closely corresponds with Markowitz mean-variance theory: it evaluates excess return per unit of total portfolio volatility, the quantity minimized along the efficient frontier. In keeping with the data-driven clustering approach, using σ instead of β avoids imposing a single-factor CAPM structure and keeps the evaluation model-free. The time-varying annual average yield of the 10-year US Treasury Note, $R_f$, is sourced from the FRED series DGS10; an updated annual mean is computed for each backtest window (for example, June 2016–May 2017) so that the risk-free rate changes in response to market conditions.
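A minimal sketch of the Sharpe Ratio calculation for a single one-year backtest window is given below. Several details are assumptions, since the text does not specify them: the portfolio values are daily, volatility is annualized with 252 trading days, and the risk-free rate is held constant within the window, so the standard deviation of excess returns equals that of raw returns. The function name and sample values are hypothetical.

```python
import numpy as np

def sharpe_ratio(values, risk_free_rate, periods_per_year=252):
    """Sharpe Ratio (R_p - R_f) / sigma_p for one backtest window.

    values: portfolio values over the window (e.g. normalized to start at 1.0).
    risk_free_rate: annual risk-free rate for the window, as a decimal.
    """
    values = np.asarray(values, dtype=float)
    period_returns = values[1:] / values[:-1] - 1.0
    total_return = values[-1] / values[0] - 1.0  # R_p over the window
    # sigma_p: sample standard deviation of period returns, annualized.
    volatility = period_returns.std(ddof=1) * np.sqrt(periods_per_year)
    return (total_return - risk_free_rate) / volatility

# Hypothetical portfolio path and a 2% risk-free rate.
example_values = [1.0, 1.02, 1.01, 1.05]
example_sharpe = sharpe_ratio(example_values, risk_free_rate=0.02)
```

In the study’s setting, `risk_free_rate` would be the mean of the DGS10 yield over the corresponding backtest window, expressed as a decimal.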

When interpreting the data, the following metrics were used, as seen in Table 1 below:

To provide a structured examination of portfolio performance, the results are divided into three categories of financial metrics: profitability, liquidity, and solvency. Each category is studied in the subsequent subchapters, where the impact of various financial indicators on volatility and risk-adjusted performance (Sharpe Ratio) is evaluated. This breakdown provides a better understanding of how various financial parameters affect portfolio clustering outcomes and investment decisions.

Companies with more favorable profit ratios show a greater ability to generate profits and cash flows, making them more appealing to fundamental investors than less profitable or loss-making businesses. Highly profitable corporations typically command higher market prices. There are exceptions, however, particularly among high-growth technology companies that may operate at a loss for a considerable time while spending aggressively on R&D and client acquisition. Even in such circumstances, investors frequently value these businesses on their expected future profitability rather than their current earnings. Given this dynamic, it is understandable that some investors may want to diversify their portfolios based on indicators of profitability in order to increase risk-adjusted returns.

Studying profitability ratios in isolation provides limited information on a company’s financial health. A more effective strategy is to compare these measures among different companies in the same sector or field. Profitability levels differ greatly among sectors; for example, companies in the Consumer Staples sector frequently maintain low but consistent profit margins, whereas firms in the Technology sector may go through years of unprofitability before achieving high profit margins as they grow. Understanding these variations is critical for making sound investing decisions.

This paper analyzes eight important profitability measures typically used in evaluating publicly traded companies to help investors diversify their portfolios while enhancing risk-adjusted returns. These indicators are derived from balance sheets, cash flow statements, and income statements, which corporations publish quarterly. This study used the following profitability ratios: Return on Assets (ROA), Return on Equity (ROE), Return on Invested Capital (ROIC), Gross Profit Margin (GPM), Net Profit Margin (NPM), Operating Profit Margin (OPM), Operating Cash Flow Margin (OCFM), and Earnings Before Interest, Taxes, Depreciation, and Amortization Margin (EBITDA Margin).

After consolidating and adjusting the data, the next phase in the process is clustering, which entails categorizing organizations with similar economic features into discrete groups known as clusters. Clustering is an unsupervised learning technique that detects hidden patterns and structural correlations in data without using predefined labels. Given the complexities of stock market data and the lack of established groups, the algorithm examines the dataset autonomously to identify the most significant clusters of stocks. This strategy is very helpful for discovering stocks with similar profitability measures, which helps investors with stock selection and diversification. The clustering process was performed on an annual basis over a 12-year period, involving the 294 organizations that remained continuously listed during the study. For each year, clustering used quarterly reported financial figures, which were combined to determine each company’s yearly financial standing. Each of the eight profitability ratios was clustered separately, which means that for each year, all 294 companies were allocated to clusters according to their performance on a single profitability measure. This results in eight separate clusterings each year, for a total of 96 clusterings over the twelve-year study period.

Finding the right number of clusters (k) is an important stage in clustering analysis. While visual inspection and domain expertise can provide a first estimate, more objective methods exist to reduce human bias in the selection process. The Elbow Method is an accepted approach in which the clustering algorithm is run for various k values and the Within-Cluster Sum of Squares (WCSS) is calculated for each case. WCSS assesses cluster homogeneity by calculating the sum of squared distances between every data point and its assigned cluster centroid.

Figure 2 illustrates the Elbow Method applied to Gross Profit Margin for the year 2017, to aid in choosing the number of clusters.

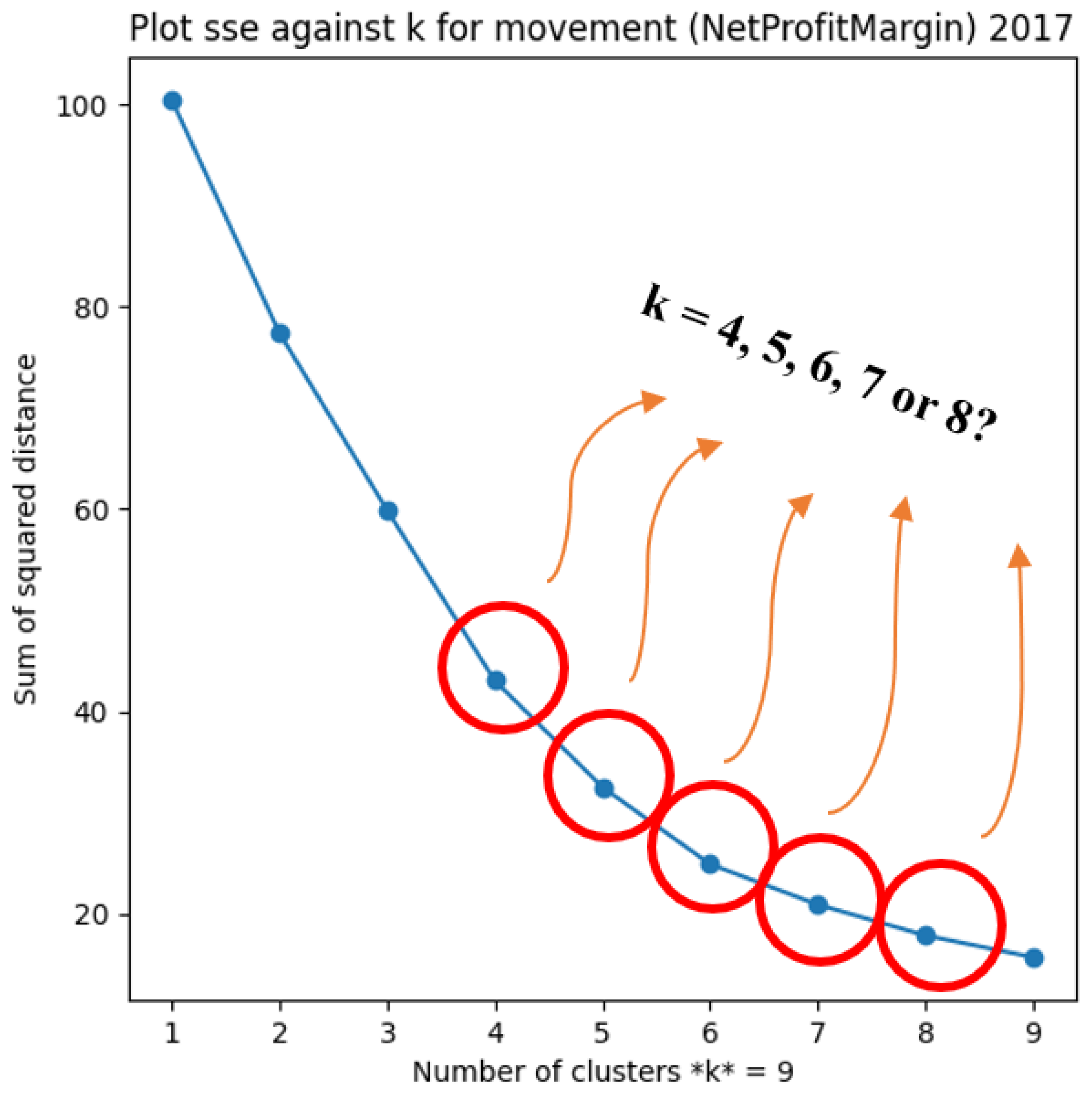

The Elbow Method indicates that the optimal number of clusters is two, as demonstrated by the inflection point in the WCSS graph. While this method is extensively used, it is not necessarily the most reliable way to determine the appropriate number of clusters. In other scenarios, visual inspection alone may not provide a clear indication of the best k value, making the selection procedure more difficult. As illustrated in Figure 3, when the curve’s inflection point is not clearly evident, the choice of k becomes less obvious. In such cases, relying on the Elbow Method alone may lead to ambiguity, necessitating further validation approaches. Alternative techniques, such as Silhouette Analysis, can aid in the selection of the best cluster count by assessing how well each data point fits into its given group. When the Elbow Method fails to provide a conclusive answer, testing other cluster numbers and analyzing the results with additional clustering validation measures can improve segmentation.

Because no distinct elbow is visible in the plot, determining the appropriate number of clusters (k) using the Elbow Method becomes difficult. Given that this method does not always yield a conclusive answer, an additional methodology called Silhouette Analysis is used to find the optimal number of clusters. Silhouette Analysis evaluates how well a data point fits within its given cluster when compared to other clusters, generating a Silhouette Score ranging from −1 to 1. A score around −1 indicates that the data point was misclassified and would be better suited to another cluster; a score near 1 indicates that the data point was appropriately assigned, implying that it is well separated from other clusters; and a score near 0 indicates that clusters overlap, making classification less distinct. To identify the appropriate number of clusters (k), the value that maximizes the Silhouette Score, ideally close to one, is chosen, as per Banerji (2021) [43]. Based on the Silhouette Analysis results for the Net Profit Margin in 2017 (Figure 4), it is clear that the best number of clusters is two, as shown by a Silhouette Score of nearly 0.9. This strong separation between clusters demonstrates that two distinct groups are well formed, which supports the choice of k equal to 2 for this dataset.

The highest Silhouette Score suggests that the best number of clusters is two. After collecting the results from both the Elbow Method and the Silhouette Analysis, it became clear that the Elbow Method was not an especially trustworthy technique for establishing the correct number of clusters in the context of profitability ratio clustering. With a few exceptions, the Elbow Method yielded unclear findings, making it difficult to choose a suitable k. In contrast, Silhouette Analysis produced more precise and trustworthy results, providing a better basis for determining the optimum number of clusters. In most cases, the value k = 2 was chosen as the best option. While there are a few exceptions, this study uses two-cluster segmentation throughout for two reasons: (1) to ensure comparability across all clustering scenarios, which allows for meaningful cross-sectional analysis; and (2) to provide a structured foundation for the future refinement and development of this clustering framework.

The k-means clustering method was selected because of its efficiency, scalability, and extensive use in unsupervised machine learning. K-means is a partitional clustering algorithm that separates the dataset into k different groups, each with its own centroid. The algorithm receives unlabeled data as input and allocates each data point to one of the k cluster centroids by minimizing the sum of squared distances between the data points and their allocated cluster centers. K-means is a local optimization algorithm that does not always identify the global minimum of the sum of squared distances, although it is quite effective in practice. To reduce the danger of suboptimal clustering, the algorithm is run several times with different random initializations, and the best result across all runs is kept as the final clustering. Scikit-learn’s k-means++ seeding is applied in each run (n_init = 10, max_iter = 300), and the solution with the lowest final inertia is chosen, which favors convergence toward a stable local minimum. This makes the clustering process more robust and reliable.
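The configuration described above might look roughly as follows in scikit-learn. The parameter values (two clusters, k-means++ seeding, n_init = 10, max_iter = 300) come from the text; the function name, the fixed random_state, and the sample Gross Profit Margin values are hypothetical additions for illustration.

```python
import numpy as np
from sklearn.cluster import KMeans
from sklearn.preprocessing import StandardScaler

def cluster_one_ratio(values, random_state=0):
    """Cluster firms into two groups on a single profitability ratio."""
    X = np.asarray(values, dtype=float).reshape(-1, 1)  # one feature per firm
    X_std = StandardScaler().fit_transform(X)           # z-score standardization
    model = KMeans(
        n_clusters=2,
        init="k-means++",   # seeding strategy named in the text
        n_init=10,          # ten runs; the lowest-inertia run is kept
        max_iter=300,
        random_state=random_state,  # assumption: fixed only for reproducibility
    )
    labels = model.fit_predict(X_std)
    return labels, model.inertia_

# Hypothetical Gross Profit Margin values for six firms in one year.
gpm = [0.21, 0.25, 0.23, 0.71, 0.68, 0.74]
labels, inertia = cluster_one_ratio(gpm)
```

Note that the numeric cluster labels (0 or 1) returned by KMeans are arbitrary, so a consistent meaning for “cluster 0” and “cluster 1” across years would have to be imposed separately, for example by ordering clusters by their centroid value.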

To broaden the analysis and improve investment decision-making, liquidity and solvency criteria need to be integrated. Liquidity measures indicate a company’s ability to satisfy short-term obligations, whereas solvency metrics represent the company’s financial stability and ability to handle debt. By integrating profitability, liquidity, and solvency, investors can build more resilient portfolios that reduce risk while optimizing risk-adjusted returns. This integrated approach serves as the foundation for the next round of research, which will look into how liquidity and solvency measurements may assist in the discovery and selection of high-performing equities. This builds on the profitability-based analysis and gives a more thorough foundation for establishing stronger investment portfolios. The liquidity metrics considered for this clustering were Cash Ratio, Current Ratio, and Quick Ratio, and the solvency metrics considered were Short-Term Debt to Equity Ratio, Long-Term Debt to Equity Ratio, Times Interest Earned, Debt to EBITDA, Payables Turnover, Asset to Equity Ratio, Days Sales Outstanding (DSO), Debt to Equity Ratio, Days Payables Outstanding (DPO), and Debt Ratio.

4. Results

After successfully clustering all companies into two groups (cluster 0 and cluster 1) for each year and across all profitability metrics, the next critical step, moving from theory to practice, is to determine whether these clusters provide tangible advantages to traders and investors in portfolio construction. This is accomplished through backtesting, which simulates historical performance to evaluate the effectiveness of the clustering process. To conduct the backtest, financial data were obtained from 1 January to 31 December of Year 0. Companies provide audited financial data at different times; therefore, clustering is completed by 31 May of Year 1, allowing for any delays in SEC filings and shareholder reporting. Following clustering, two equally weighted portfolios are produced, each incorporating all companies from the respective cluster. Each stock’s weight is evenly distributed, calculated by dividing 100% by the total number of firms in the cluster.

From 1 June of Year 1 to 1 June of Year 2, the two portfolios are backtested to determine their performance in comparison with one another and the overall market. The SPY ETF is utilized as a market benchmark since it closely tracks the S&P 500 index, which is acknowledged as the industry standard for measuring investing performance. Based on clustering of 2020 profitability ratios, backtesting results are valid until 1 June 2022. Although clustering for the 2021 profitability ratios was completed, the backtesting period is still incomplete; hence, it was removed to avoid presenting premature or inconclusive results. To allow for the easy display and comparison of results, all three portfolios were normalized to a starting value of 1.0 (or 100%) at the beginning of the backtest.

Figure 5 presents the backtest for the year 2017, based on clustering of Gross Profit Margin data from 2016.

Figure 6 illustrates an example of such a backtest. The test begins in 2017, using k-means clustering applied to Gross Profit Margin data from 2016. The red line reflects the SPY ETF performance, while the blue and green lines reflect the cluster 0 and cluster 1 portfolios, respectively. This visualization assists with determining whether profitability-based clustering produces portfolios that outperform or underperform the market benchmark over time.

The following metrics are presented in the tables below: Table 2 (profitability metrics), Table 3 (liquidity metrics), and Table 4 (solvency metrics). To provide a comprehensive view of performance, Table 5 averages and aggregates the results of 11 years of backtesting for each profitability parameter. The table is organized as follows: the first three columns represent the portfolios’ average returns; the middle three columns present the average volatility levels; and the final three columns report the average Sharpe Ratios. The table displays portfolio outcomes in the following order: (1) cluster 0 portfolios, (2) cluster 1 portfolios, and (3) SPY (the S&P 500 market equivalent). This organized presentation provides an effective comparison of cluster-based portfolio outcomes to the market benchmark, assisting in determining whether clustering based on profitability criteria leads to higher risk-adjusted returns.

The findings show a clear pattern in portfolio performance: cluster 1 portfolios have the highest returns and volatility, whilst the SPY (market portfolio) has the lowest return and volatility. Cluster 0 portfolios consistently fall between the two.

Although some traders deliberately seek volatility for short-term trading opportunities, most investors prefer higher returns accompanied by lower volatility. The present research focuses on this majority of investors, who favor risk-adjusted returns over speculative, volatility-driven methods. A heatmap depiction of the data shows that cluster-based portfolios often provide higher returns than the market benchmark. Still, as the volatility columns show, cluster 1 portfolios also exhibit the highest levels of volatility, indicating that this increased return comes with additional risk. The SPY market portfolio, on the other hand, has lower volatility, which is consistent with its steadier, broad-market exposure. Once more, cluster 0 portfolios balance risk and return by maintaining an intermediate position.

Higher returns are frequently associated with more volatility, as is well known in both academic research and investment practice. The Sharpe Ratio was used to estimate risk-adjusted returns in order to evaluate performance beyond absolute returns. According to this analysis, the market portfolio regularly produces the lowest Sharpe Ratio, whereas the two cluster portfolios alternate in delivering the highest risk-adjusted return. In Figure 7, the grey line shows the average Sharpe Ratio of the SPY market portfolio, whereas the blue and orange lines show the average Sharpe Ratios of the cluster 0 and cluster 1 portfolios, respectively. This variation implies that although both cluster-based portfolios beat the market when risk is taken into account, which of the two leads varies depending on the time period and particular market conditions.

Cluster 1 portfolios based on GPM, OCFM, and ROA have the best Sharpe Ratios, according to the analysis. In particular, three portfolios with Sharpe Ratios of 1.22 (GPM), 1.22 (OCFM), and 1.21 (ROA) stand out as having higher risk-adjusted returns. According to these research results, businesses in cluster 1 provide the optimum return-to-volatility balance when categorized using these profitability criteria.

These portfolios produce the greatest Sharpe Ratios. In terms of both absolute return and risk-adjusted return, they routinely beat the market benchmark (SPY) and the cluster 0 portfolios. Their average real return exceeded that of the cluster 0 portfolios by 12.06% and that of the market portfolio (SPY) by 27.95%. Their average real volatility was 19.46% higher than that of cluster 0 and 35.08% higher than that of the market portfolio (SPY), and their Average Excess Sharpe showed an advantage of 0.01 over cluster 0 and 0.23 over the market portfolio (SPY). These portfolios’ greater risk-adjusted returns were further supported by their Sharpe Ratio excess of 0.13 over cluster 0 and 0.33 over the market portfolio. These findings show that clustering based on these indicators of profitability may offer a workable approach for portfolio creation, as more risk-taking in cluster 1 portfolios produced both higher returns and a better risk-adjusted reward.

For cluster 0, in contrast to cluster 1, no single profitability criterion emerged as a clear winner in terms of performance. Rather, in terms of both returns and risk-adjusted performance, cluster 0 portfolios consistently fell between the market portfolio (SPY) and the cluster 1 portfolios. These findings support the notion that cluster 0 portfolios represent a compromise, offering a more balanced risk–return tradeoff than the higher-risk, higher-reward profile of cluster 1.

Because they are more volatile, cluster 1 portfolios frequently suffer a more significant decline during turbulent markets, occasionally falling even further than the SPY. They do, however, recover from downturns more quickly and robustly, providing greater upside when markets rebound. These portfolios can boost returns for investors who are able to capitalize on such corrections. Cluster 0 portfolios, on the other hand, are more stable and offer capital protection during tumultuous times. Investors who anticipate higher volatility may prefer these safer choices. A clear illustration of this tradeoff between risk and opportunity is the COVID-19 crash in 2020, when cluster 1 portfolios fell more than SPY but later outperformed it in the rebound.
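One way to quantify the decline-and-recovery pattern described above is to measure the maximum drawdown and the subsequent rebound of each portfolio. The sketch below is illustrative only: maximum drawdown is not a measure reported in this study, the helper names `max_drawdown` and `rebound_from_trough` are hypothetical, and the two price paths are invented numbers rather than study data.

```python
import numpy as np

def max_drawdown(prices):
    """Largest peak-to-trough decline, returned as a negative fraction."""
    prices = np.asarray(prices, dtype=float)
    running_peak = np.maximum.accumulate(prices)  # highest price seen so far
    drawdowns = prices / running_peak - 1.0
    return float(drawdowns.min())

def rebound_from_trough(prices):
    """Return from the lowest price in the series to its final price."""
    prices = np.asarray(prices, dtype=float)
    trough = int(prices.argmin())
    return float(prices[-1] / prices[trough] - 1.0)

# Hypothetical price paths (not study data): a benchmark-like series and
# a more volatile, cluster-1-like series over the same crash and recovery.
benchmark_like = [100, 95, 80, 90, 105]
volatile_like = [100, 92, 70, 88, 112]

for name, path in [("benchmark-like", benchmark_like), ("volatile-like", volatile_like)]:
    print(name, round(max_drawdown(path), 3), round(rebound_from_trough(path), 3))
```

In this invented example the more volatile series falls further but also gains more from its trough, which is the qualitative pattern described for cluster 1 portfolios during the 2020 crash.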

The analysis demonstrates that the returns and volatility levels of cluster 0 portfolios lie between those of the SPY and cluster 1 portfolios. This confirms the notion that risk is typically higher when returns are higher. Positioned between the lower-risk, lower-return SPY market portfolio and the higher-risk, higher-return cluster 1 portfolios, cluster 0 portfolios constitute a moderate-risk, moderate-return investment choice.

Plotting the return and volatility of the examined portfolios on a pair of axes further supports this result. This graphical illustration, shown in Figure 8, intuitively confirms the clustering results by visualizing the link between risk and return. It is also remarkable that the portfolios remain aligned with the initial clustering on profitability ratios, even when assessed on the basis of return and risk. This consistency indicates that the profitability-based classification successfully divides businesses into distinct investment profiles and provides additional evidence of the precision and dependability of the clustering process used.

The study’s conclusions support the notion that the portfolios in clusters 0 and 1 show distinct grouping patterns, with both showing better return potential and more volatility than the market. With the market benchmark represented by the SPY ETF, which tracks the S&P 500 index, these results empirically confirm the premise that groups of companies that exceed the market benchmark on a risk-adjusted basis can be successfully identified by applying k-means clustering to profitability measures. The methodological approach of this research divided the companies into two groups, each with its own performance traits relative to the SPY market portfolio: (1) cluster 0 portfolios generated higher returns with higher volatility; (2) cluster 1 portfolios showed even better returns, but with a corresponding rise in volatility.

Nevertheless, both the cluster 0 and cluster 1 portfolios outperformed the market portfolio when evaluated using the risk-adjusted return framework, as seen in their greater Sharpe Ratios. The most favorable risk-adjusted returns were notably shown by portfolios built from companies in cluster 1 based on GPM, OCFM, and ROA. This suggests that these indicators of profitability are especially important in selecting businesses with a better return per unit of risk. Both clustered portfolios provide good investment options, and an investor’s risk tolerance and return goals will determine which option they choose. Cluster 1 portfolios may be preferred by investors looking for better absolute returns, while cluster 0 portfolios may be more attractive to those who take a more balanced approach to risk and return. By adding companies that match their volatility preferences or risk-adjusted return expectations, as indicated by the Sharpe Ratio, investors can further improve their portfolio construction process, using the clustering results as a further stock selection criterion.

A significant methodological finding from this study is that Silhouette Analysis produced more trustworthy results than the Elbow Method when it came to figuring out the ideal number of clusters. This result emphasizes how important it is to use the right clustering validation methods, especially for financial applications where patterns and data distributions are very changeable. Although the study’s results are encouraging, it is advised that this methodology not be applied alone but rather in conjunction with other risk management and portfolio development methodologies. Although the profitability ratios examined in this study offer a thorough foundation for clustering, future research could build on this by adding more financial indicators, such as solvability and liquidity ratios, to further strengthen the clustering process’s resilience.
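The comparison between the two validation methods can be sketched as follows, using the scikit-learn classes named in the methodology. The function name `evaluate_k`, the candidate range of k, the random seed, and the synthetic two-feature dataset are assumptions made for illustration; they do not reproduce the study's inputs or settings.

```python
import numpy as np
from sklearn.cluster import KMeans
from sklearn.metrics import silhouette_score
from sklearn.preprocessing import StandardScaler

def evaluate_k(features, k_values=range(2, 7), seed=0):
    """Return {k: (inertia, silhouette)} for k-means on standardized features."""
    X = StandardScaler().fit_transform(features)
    results = {}
    for k in k_values:
        model = KMeans(n_clusters=k, n_init=10, random_state=seed).fit(X)
        # Inertia feeds the Elbow Method; the silhouette score is a bounded
        # quality measure in [-1, 1] that can be maximized directly.
        results[k] = (model.inertia_, silhouette_score(X, model.labels_))
    return results

# Synthetic "ratio" data with two well-separated groups (not study data).
rng = np.random.default_rng(42)
ratios = np.vstack([
    rng.normal(loc=[0.2, 0.05], scale=0.02, size=(60, 2)),
    rng.normal(loc=[0.6, 0.15], scale=0.02, size=(60, 2)),
])

scores = evaluate_k(ratios)
best_k = max(scores, key=lambda k: scores[k][1])
for k, (inertia, silhouette) in scores.items():
    print(k, round(inertia, 2), round(silhouette, 3))
print("k with highest silhouette:", best_k)
```

Inertia tends to keep falling as k grows, so the Elbow Method requires a visual judgment about where the curve bends, whereas the silhouette score gives a single value per k that can be compared directly. This is one plausible reason for the difference in reliability noted above.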

Furthermore, future studies should examine the viability of forecasting full-year financial ratios using partial-year data, as publicly traded corporations report financial data on a quarterly basis. By clustering financial data based on one, two, or three quarters, investors may be able to make investment decisions earlier and benefit from new trends and stock price changes. More broadly, there remains considerable room to refine this clustering-based investment method so that it adjusts to shifting market conditions and new investment opportunities as financial markets continue to change.

The findings related to liquidity metrics are summarized in Table 6, which compares the performance of the portfolios against each other and the market benchmark.

For the same three liquidity metrics, the figure below presents the plot of Sharpe Ratios for the portfolios made from companies in cluster 0, cluster 1, and SPY.

The findings show that, in every instance, the average Sharpe Ratio was lowest for the SPY ETF and highest for companies in cluster 0, with cluster 1 companies falling in between. The average real return of the cluster 1 portfolios was 13.62% above that of the cluster 0 portfolios and 29.68% above that of the market portfolio (SPY). For average real volume, cluster 1 exceeded cluster 0 by 27.70% and the market portfolio (SPY) by 42.83%, and for Average Excess Sharpe the difference was −0.08 relative to cluster 0 and 0.14 relative to the market portfolio (SPY). This implies that, all other variables being equal, investors should choose portfolios made up of businesses in cluster 0, since they provide a higher risk-adjusted return.

Additionally, the figure below uses k-means clustering on liquidity measures to plot average volatility (x-axis) versus average return (y-axis) for the SPY ETF and the portfolios formed from cluster 0 and cluster 1 in order to visually demonstrate the correlation between risk and return. The influence of liquidity-based clustering on portfolio performance in comparison to market benchmarks is shown graphically in this analysis.

The average return and volatility of each cluster for the portfolios built on liquidity metrics are illustrated in Figure 9.

Higher risk exposure is typically linked to higher return potential, as the graph shows a positive linear relationship between volatility and returns. This finding is consistent with well-established financial theories, which hold that investors demand greater remuneration in exchange for taking on greater risk. Nevertheless, this relationship indicates diminishing marginal gains, as demonstrated by the Sharpe Ratio analysis. The rate of return increase in relation to the additional volatility assumed gradually decreases, even while cluster 1 portfolios obtain greater absolute returns. Because more volatility does not always equate to proportionately larger returns, this study emphasizes the significance of assessing risk-adjusted performance measures. Therefore, when building optimized investment portfolios, investors should take into account both the efficiency of risk-taking and absolute performance.
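The two observations above, a positive linear relationship and diminishing marginal gains, can be checked numerically with a short sketch. The three (volatility, return) pairs below are hypothetical values chosen for illustration, ordered as a benchmark-like, a cluster-0-like, and a cluster-1-like portfolio; they are not figures from the study.

```python
import numpy as np

# Hypothetical annualized volatility and return for three portfolios
# (benchmark-like, cluster-0-like, cluster-1-like); not study data.
volatility = np.array([0.15, 0.19, 0.22])
avg_return = np.array([0.10, 0.14, 0.16])

# Least-squares line: avg_return is approximately slope * volatility + intercept.
slope, intercept = np.polyfit(volatility, avg_return, deg=1)
correlation = np.corrcoef(volatility, avg_return)[0, 1]

# Extra return earned per extra unit of volatility between consecutive portfolios.
marginal_gain = np.diff(avg_return) / np.diff(volatility)

print("slope:", round(float(slope), 3))
print("correlation:", round(float(correlation), 3))
print("marginal return per unit of volatility:", np.round(marginal_gain, 3))
```

A positive slope with a high correlation corresponds to the linear pattern in the plot, while a falling sequence in `marginal_gain` is what diminishing marginal gains would look like: each additional unit of volatility buys less additional return.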

Furthermore, Table 7 presents the performance results of portfolios built using the clustering of solvency criteria. A comparison of the portfolios in relation to the market benchmark and to one another can be found in this table.

For the same 10 solvency metrics, Figure 10 presents the plot of Sharpe Ratios for the portfolios made from companies in cluster 0, cluster 1, and SPY.

For portfolios made up of cluster 0 businesses, the average Sharpe Ratio stayed largely stable. Portfolios derived from cluster 1 companies, on the other hand, show more variability, with values ranging from levels near the SPY’s Sharpe Ratio to levels above those of the cluster 0 portfolios for certain solvency metrics, such as Debt to Equity Ratio, Days Payables Outstanding, and Debt Ratio. The average real return of the cluster 1 portfolios was 14.61% above that of the cluster 0 portfolios and 30.71% above that of the market portfolio (SPY). For average real volume, cluster 1 exceeded cluster 0 by 24.21% and the market portfolio (SPY) by 39.41%, and for Average Excess Sharpe the difference was −0.02 relative to cluster 0 and 0.19 relative to the market portfolio (SPY). Given this variability, and assuming that all other variables stay the same, an investor looking for the best risk-adjusted returns would probably choose the cluster 1 portfolios produced via k-means clustering based on the debt ratio, because these portfolios have demonstrated the greatest potential for higher Sharpe Ratios.

In Figure 11, average volatility (x-axis) is plotted against average return (y-axis) for the SPY ETF, cluster 0 portfolios, and cluster 1 portfolios to further show the link between return and volatility for solvency-based grouping. In contrast to the market benchmark, this graphic offers more information on how solvency parameters affect the risk–return dynamics of a portfolio.

This graph also clearly shows the positive linear link between returns and volatility. However, the risk–return characteristics of the cluster 1 portfolios obtained from solvency-based clustering show more dispersion than in the previous analysis using liquidity parameters. One noteworthy finding is that, out of all the portfolios, the one created from cluster 1 companies based on Debt to EBITDA shows the most volatility, suggesting a higher level of risk exposure. By contrast, the portfolio made up of cluster 1 companies based on debt ratio shows the least volatility within cluster 1, which suggests a more consistent risk–return profile among solvency-driven clusters. This variability demonstrates how different solvency measures affect the overall risk–return tradeoff and further supports the need for investors to take specific financial indicators into account when building portfolios with solvency-based clustering approaches.