1. Introduction

In recent years, two-wheeled self-balancing carts have attracted significant attention due to their potential applications in various fields, such as intelligent transportation, logistics automation, and service robotics [1,2,3,4]. These systems have inherently unstable structures which are similar to inverted pendulums. Thus, sophisticated control algorithms are required to maintain balance and ensure accurate steering. Traditional control methods, including PID (Proportional–Integral–Derivative) control, fuzzy logic control, and some other complex algorithmic control approaches [5,6], have been widely adopted. However, these methods often face challenges when dealing with complex and dynamic environments. They rely on predefined rules and fixed parameters, which may not adapt well to changing conditions, especially in the context of symmetrical steering control.

With the rapid development of computer vision technology, image-based control strategies have emerged as a promising approach for self-balancing carts. By utilizing cameras, these systems can acquire rich environmental information, enabling more intelligent and adaptive control. Linear CCD (Charge-Coupled Device) sensors, in particular, offer high-resolution linear imaging capabilities, which are advantageous for precise path tracking and obstacle detection [7,8,9,10]. Nevertheless, most existing image-based control methods for two-wheeled self-balancing carts focus on short-term motion prediction and lack the ability to handle long-term dependencies and temporal dynamics within image sequences.

Long Short-Term Memory (LSTM) networks, a type of recurrent neural network (RNN), have demonstrated outstanding performance in processing sequential data with long-term dependencies. LSTM networks can selectively remember and forget information over time, making them suitable for tasks that require an understanding of temporal context, such as speech recognition, time series prediction, and video analysis [11,12,13,14,15]. Although models based on TimeGPT and other advanced architectures have recently shown better performance than LSTM-based models [16,17,18], the LSTM is still used in this paper for the following reasons. First, the data used in this research has specific characteristics and constraints. LSTM models are well studied and have proven effective in handling sequential data with temporal dependencies, which aligns well with the nature of the linear CCD data collected from the two-wheeled vehicle. In contrast, TimeGPT-based models, although promising, require a large amount of data for training and fine-tuning, which was not available in this study due to the limited data collection resources. Second, the computational resources and time available for this study were also limiting factors. LSTM-based models are computationally more efficient than the complex architectures of TimeGPT-based models, allowing for the timely completion of the research. Finally, the novelty of this study lies in the way the CCD data are preprocessed for LSTM-based turning judgment. While TimeGPT-based models are an interesting area of research, they do not directly contribute to the main objectives of this study.

Some of the latest studies about two-wheeled cart control are detailed as follows [19,20,21,22]: In [19], by training the model with historical motion and environmental data, it is able to predict the future states of the mobile robot. The proposed approach is expected to improve the robot’s adaptability and control performance. In [20], the focus of the research is on the coordination of the robot’s wheels, its body posture, and its interaction with the environment. By formulating a comprehensive control algorithm, the authors aim to improve the robot’s performance in terms of balance maintenance, maneuverability, and load-carrying capacity. In [21], based on their model, an optimal control strategy is proposed to maintain the robot’s balance and achieve desired motions. The control approach likely aims to minimize certain cost functions related to energy consumption, balance error, and tracking performance. In [22], the feedback mechanism is integrated to enhance the controller’s performance by continuously adjusting the control signals according to the actual system states. This combination aims to improve the system’s response time, stability, and robustness against disturbances. The authors conduct extensive simulations and, possibly, real-world experiments to evaluate the performance of the proposed controller.

In this paper, we propose a novel symmetrical steering control method for linear CCD-based two-wheeled self-balancing carts that integrates image tracking and LSTM networks. The image tracking module extracts dynamic features from linear CCD images, while the LSTM network models the long-term temporal relationships in the tracking data to predict future motion states. By combining these two components, our approach aims to enhance the control performance of self-balancing carts in complex and dynamic environments, overcoming the limitations of traditional control methods and existing image-based approaches. The contributions of this study include the development of an LSTM-based control framework for self-balancing carts and the integration of image tracking and LSTM for more accurate motion prediction.

During the implementation process, we first use the Python language [23] to train the LSTM neural network. After training is completed, we use the TensorFlow Lite 2.1 [24] package to convert the LSTM weights into C-language code and integrate them with the cart control code using the Keil IDE (Integrated Development Environment) uVision 5 [25]. Experimental results on two-wheeled self-balancing carts [26] validate the effectiveness and superiority of the proposed method. The design objectives of this paper are to prove the feasibility of the method through simulation and then implement the method using an actual two-wheeled self-balancing cart for symmetrical operation.

2. Designing Symmetrical Steering Motion of Cart

Similarly to the forward-motion model, we can also establish a simplified symmetrical steering motion model, as shown in Figure 1 [26]. The symmetrical steering motion arises from the unequal horizontal reaction forces exerted on the cart body by the left and right wheels. By applying the rotational form of Newton’s second law to a rigid body with a fixed axis, we obtain the fundamental equations that govern the rotational motion of the cart. These equations form the foundation for the subsequent simulation analysis and experimental verification. The dynamic equation is as follows:

After considering the displacement relationship between the left and right wheels, the dynamic equation is established as follows: When the speeds of the left and right wheels are not equal, the cart body turns, as shown in Figure 1. From the geometric relationship, we can derive

After subtracting Equation (2) from Equation (1), we obtain:

Then, the dynamic model of symmetrical steering of the cart can be obtained as

Since the magnitude of the motor output torque is difficult to control directly, it is converted into the acceleration of the two wheels according to the law of rigid-body fixed-axis rotation. The resulting model includes a lumped term that is defined as the disturbances and the uncertainties.

The parameters of the model are defined as follows:

: Horizontal displacement of right wheel (m);

: Horizontal displacement of left wheel (m);

: Yaw angle of the cart (rad);

: Moment of inertia of the cart body when rotating around the z-axis (kg·m²);

: Wheel mass (kg);

: Radius of wheel (m);

: Distance from right wheel to rotation center (m);

: Distance from left wheel to rotation center (m);

: Velocity of cart (m/s);

: Velocity of right wheel (m/s);

: Velocity of left wheel (m/s);

: Right wheel acceleration, which is also the right control output (m/s²);

: Left wheel acceleration, which is also the left control output (m/s²);

: Distance between wheels (m);

: Output torque of the right wheel motor (N·m);

: Output torque of the left wheel motor (N·m);

: Magnitude of the horizontal component of the force acting on the right wheel (N);

: Magnitude of the horizontal component of the force acting on the left wheel (N);

: Moment of inertia of the wheel (kg·m²).
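The geometric relationship between unequal wheel speeds and turning can be illustrated with the standard differential-drive kinematic relations. The following is a minimal sketch, not the dynamic model of this paper: the function name, the argument names, and the sign convention (a positive yaw rate denotes a right turn) are assumptions made only for illustration.

```python
def steering_kinematics(v_left, v_right, wheel_distance):
    """Return (forward_velocity, yaw_rate) for a two-wheeled cart.

    Assumed sign convention: a positive yaw rate means a right turn,
    which occurs when the left wheel is faster than the right wheel.
    Velocities are in m/s, wheel_distance in m, yaw rate in rad/s.
    """
    if wheel_distance <= 0:
        raise ValueError("wheel_distance must be positive")
    # The cart body moves at the mean of the two wheel velocities.
    forward_velocity = (v_left + v_right) / 2.0
    # The velocity difference across the wheel base sets the turning rate.
    yaw_rate = (v_left - v_right) / wheel_distance
    return forward_velocity, yaw_rate
```

With equal wheel speeds the yaw rate is zero and the cart moves straight; any speed difference produces a turn toward the slower wheel.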

To prove the stability of the designed system, we first define its error. Then, based on this error, we design an ideal controller as follows:

Substituting this equation into Formula (7), we can derive the error dynamic equation as follows:

According to the Laplace transformation, as long as the controller gains are selected so that the characteristic roots of Equation (5) are located in the left half-plane, the system is guaranteed to be stable, and the system error will converge to zero.
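The left-half-plane condition can be checked numerically. The sketch below assumes, purely for illustration, that the error dynamics are second order with the characteristic equation s² + k1·s + k2 = 0; the gain names k1 and k2 and the second-order form are assumptions, not notation taken from this paper.

```python
import cmath


def characteristic_roots(k1, k2):
    """Roots of the assumed characteristic equation s**2 + k1*s + k2 = 0."""
    disc = cmath.sqrt(k1 * k1 - 4.0 * k2)
    return ((-k1 + disc) / 2.0, (-k1 - disc) / 2.0)


def is_stable(k1, k2):
    """True when both roots lie strictly in the left half-plane."""
    return all(root.real < 0 for root in characteristic_roots(k1, k2))
```

For this assumed second-order form, both roots lie in the left half-plane exactly when both gains are positive, which the function reproduces: `is_stable(2.0, 5.0)` holds, while a negative gain in either position makes the check fail.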

The ideal controller involves excessive uncertainties, necessitating the design of a robust controller to address these challenges.

The control forces for the left and right wheels are determined by the symmetrical steering direction. For instance, when turning right, the left wheel must accelerate; when turning left, the right wheel must accelerate. Their relationship with the ideal control input is as follows:

3. LSTM Neural Network Design and Simulation Results

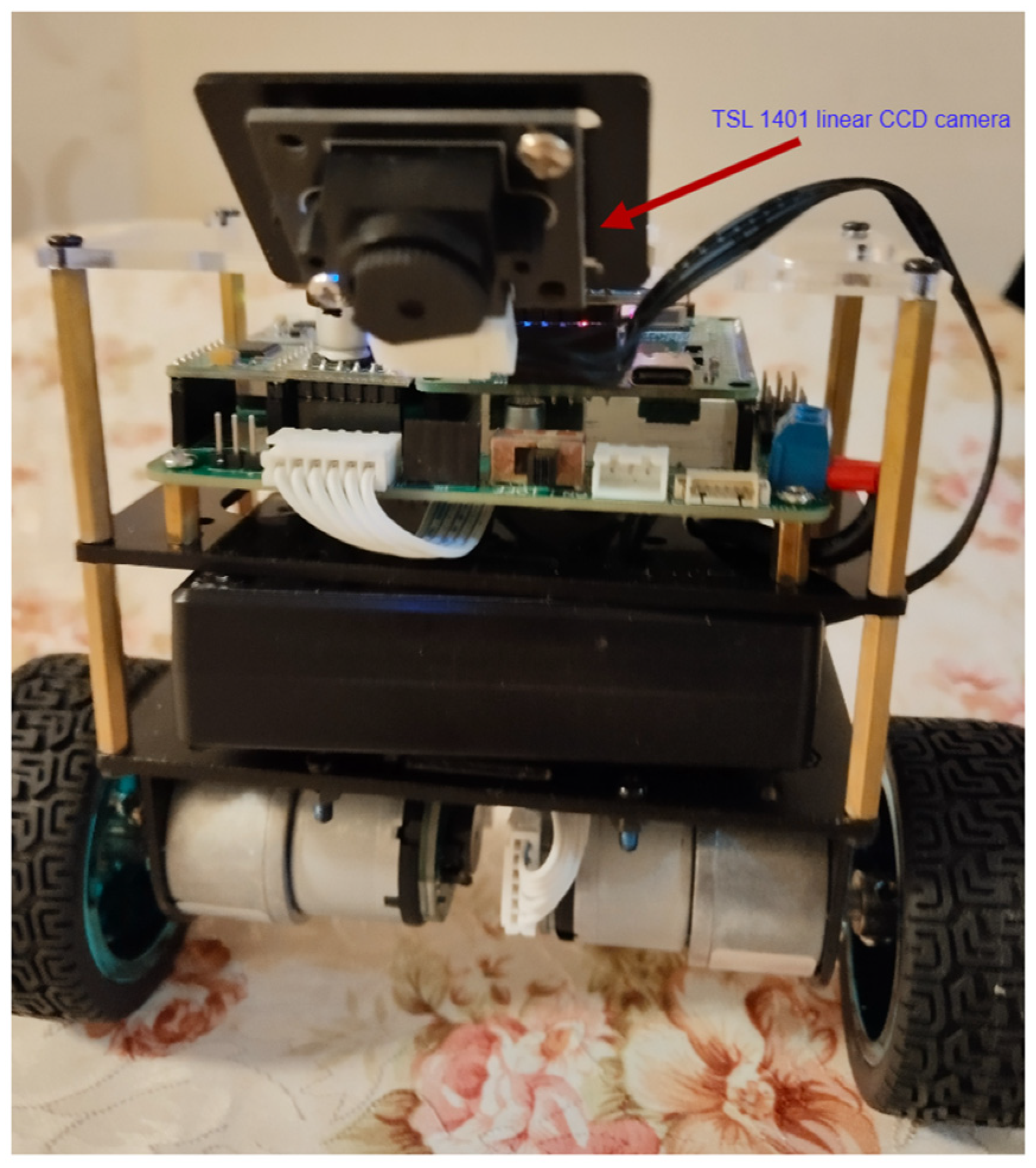

First, the characteristics of the TSL1401 linear CCD [26] need to be introduced.

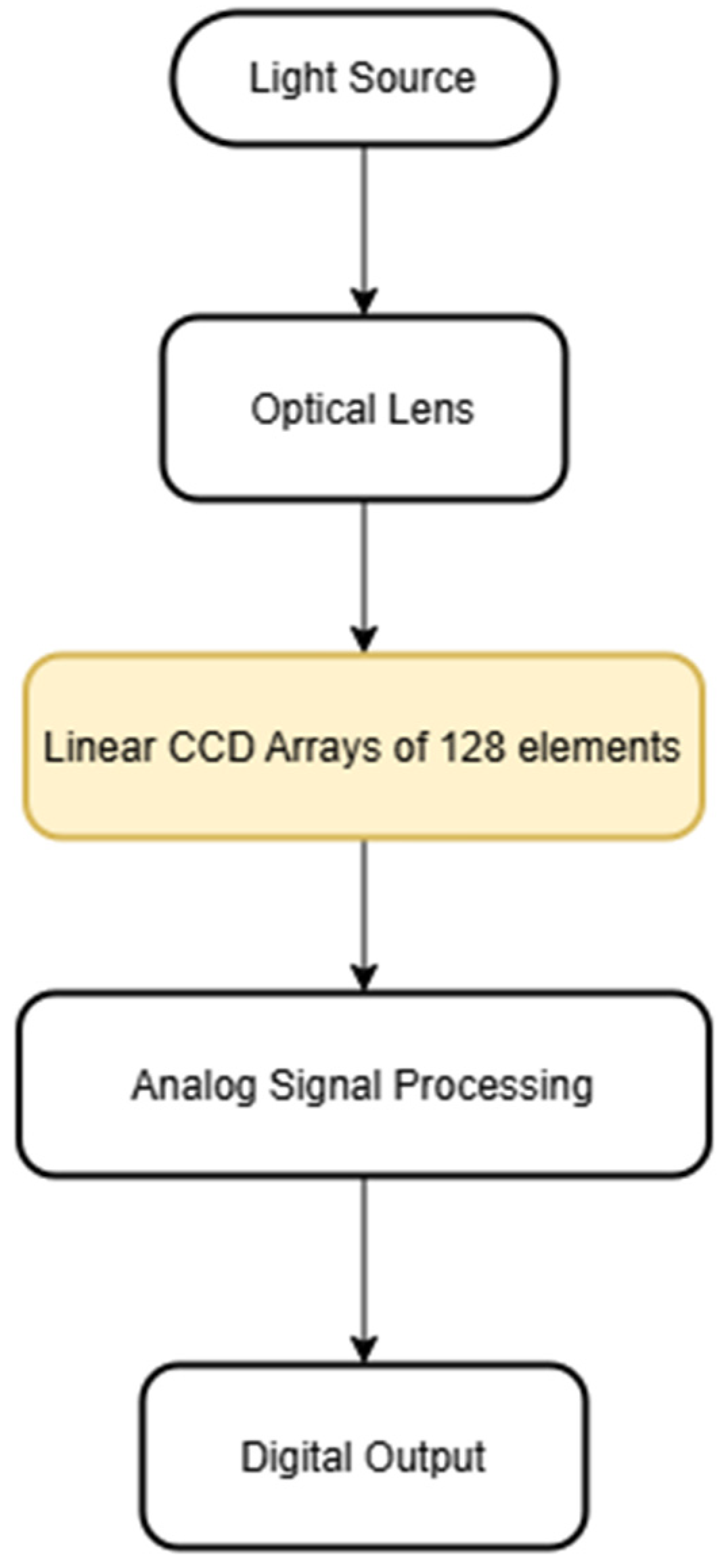

Figure 2 illustrates the structure of the TSL1401, a linear image sensor celebrated for its high sensitivity and extensive dynamic range, rendering it well suited for light detection applications. It consists of 128 photodiodes arranged in a single row, allowing for highly precise light intensity detection. With low dark current and high responsivity, the sensor ensures reliable performance even in low-light conditions. Moreover, its simple serial interface simplifies its integration with microcontrollers and other digital systems.

Each photodiode in the array converts incident light into an electrical charge, with the amount of charge generated being proportional to the local light intensity. These charges are then sequentially transferred and processed. The sensor samples the charge from each photodiode, converts it into a voltage-proportional signal, and outputs the data serially. By analyzing the output values, users can determine the light distribution across the 128-element array, which finds application in various scenarios, including light-level monitoring, edge detection, and optical signal analysis.

This paper utilizes this CCD to track the black tape on the ground and control the direction of the cart, with the goal of enabling the cart to move along the black tape. Previously, infrared sensors were predominantly used due to their short-range detection and relatively low susceptibility to interference. Control laws such as PID and LQR were applied to ensure the cart moved stably along the tape. In this study, image-based tracking is adopted, which presents significant challenges. Images are vulnerable to environmental brightness, reflections, and the reflective background of the tape, all of which can impact tracking accuracy. Therefore, simulations are first conducted, and an LSTM neural network is employed to memorize the cart’s movement patterns. Similarly to human cognitive abilities, the system can then still identify the position of the black tape in ambiguous environments.

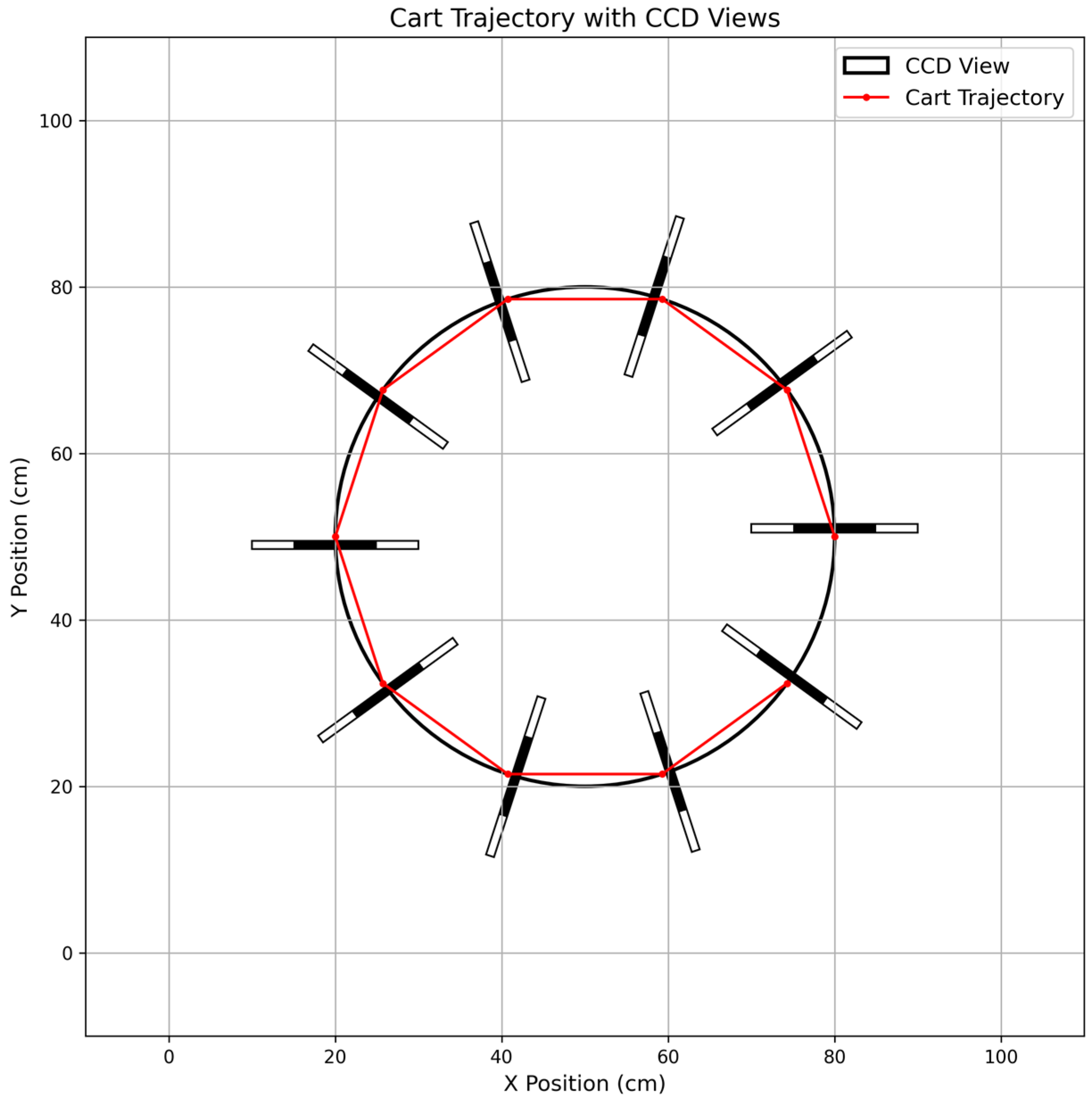

We select 64 elements from the middle of the 128-element CCD as the target values. Specifically, the control objective is to maintain the lowest voltage value at the 64th position, as shown in Figure 3. To accomplish this, we create learning samples for an LSTM network to be trained on. LSTM networks are particularly adept at predicting time-series data, making them highly suitable for this task.
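The control objective above can be sketched as locating the darkest pixel of one scan and comparing it with the target position. This is a minimal illustration: the function names are hypothetical, the scan is assumed to be a plain list of 128 voltage samples, and the target is assumed to be the zero-based index 64.

```python
def tape_position(scan):
    """Index of the lowest-voltage (darkest) pixel in one 128-element scan."""
    if len(scan) != 128:
        raise ValueError("expected 128 samples from the linear sensor")
    # min() over indices returns the first index holding the lowest voltage.
    return min(range(len(scan)), key=scan.__getitem__)


def tracking_error(scan, target_index=64):
    """Signed offset of the tape from the target pixel (assumed index 64)."""
    return tape_position(scan) - target_index
```

A positive error means the tape appears at a higher pixel index than the target, and a negative error means it appears at a lower one; which physical side this corresponds to depends on how the sensor is mounted.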

Python is an ideal programming language for this task due to its simplicity, readability, and extensive libraries. Its high-level syntax allows for rapid development, enabling researchers to quickly prototype and iterate on the LSTM model. With libraries like NumPy and Pandas, data preprocessing, manipulation, and analysis become straightforward, which are crucial for handling the linear CCD data used in this paper. TensorFlow, on the other hand, provides a powerful and flexible framework for building and training neural networks. It offers high-level APIs, such as Keras, that simplify the construction of complex models like LSTM, making it accessible even for those new to deep learning. TensorFlow also supports automatic differentiation, which streamlines the computation of gradients during the training process, significantly reducing the development time. Additionally, it has excellent support for distributed training, which can accelerate the training of large-scale LSTM models. TensorFlow’s compatibility with various hardware platforms, including GPUs and TPUs, further boosts the training efficiency.

The neural network architecture consists of one LSTM layer followed by a densely connected layer, as illustrated in

Figure 4, with its operational flow depicted in

Figure 5. We implement this model using Python [

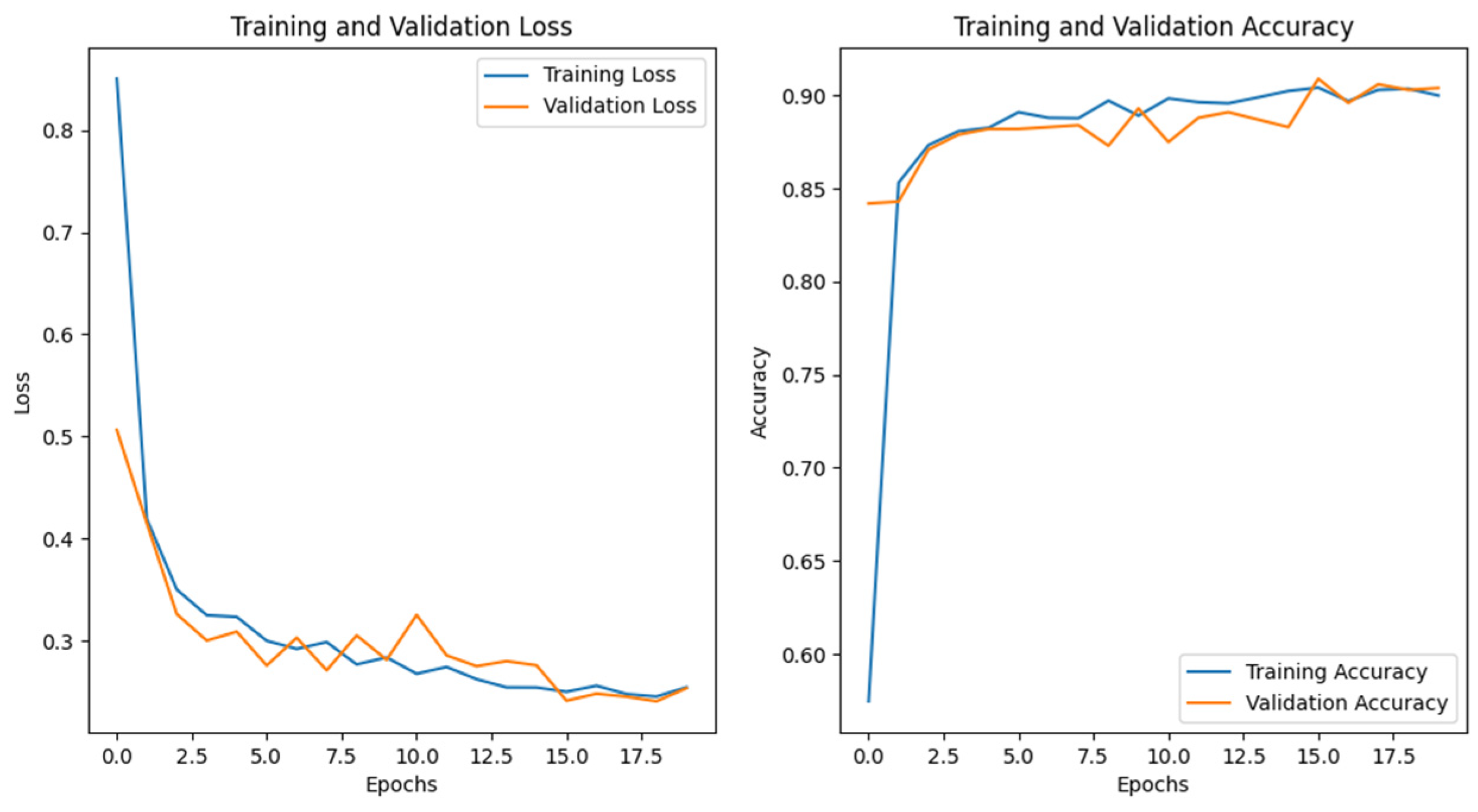

23]. To mitigate overfitting and ensure reliable predictions, we carefully regulate the training batch size. The model is trained with a learning rate of 0.001 for 20 epochs, with a batch size of 32. After training, simulations are conducted. The model consists of an LSTM layer with 32 units followed by two Dense layers, and the activation function of the output layer is ‘softmax’. Regarding the hyperparameters, the learning rate is initially set at 0.001 and tuned within the range of 0.0001–0.01. Through the ‘adam’ optimizer and a grid search combined with cross-validation, the optimal learning rate is determined to be 0.001. The final accuracy of the model is 0.9, and the loss is 0.25. The training process is depicted in

Figure 6.
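A model with the stated hyperparameters could be set up in Keras roughly as follows. This is a hedged sketch rather than the code used in this study: the hidden Dense width, the number of time steps, the feature count, the three-class output, and the loss function are assumptions, and TensorFlow is imported inside the function so that the constants can be inspected without it.

```python
# Hyperparameters quoted in the text.
HPARAMS = {
    "lstm_units": 32,
    "learning_rate": 0.001,
    "epochs": 20,
    "batch_size": 32,
}

# Assumptions made only for this sketch.
N_CLASSES = 3            # forward, right turn, left turn
HIDDEN_DENSE_UNITS = 16  # width of the first Dense layer
TIMESTEPS = 10           # length of each input sequence
N_FEATURES = 1           # one tape-position value per time step


def build_model():
    """Build and compile an LSTM classifier (requires TensorFlow)."""
    import tensorflow as tf  # lazy import: only needed when building

    model = tf.keras.Sequential([
        tf.keras.Input(shape=(TIMESTEPS, N_FEATURES)),
        tf.keras.layers.LSTM(HPARAMS["lstm_units"]),
        tf.keras.layers.Dense(HIDDEN_DENSE_UNITS, activation="relu"),
        tf.keras.layers.Dense(N_CLASSES, activation="softmax"),
    ])
    model.compile(
        optimizer=tf.keras.optimizers.Adam(
            learning_rate=HPARAMS["learning_rate"]),
        loss="sparse_categorical_crossentropy",  # assumed: integer labels
        metrics=["accuracy"],
    )
    return model
```

Training would then call `model.fit(x, y, epochs=HPARAMS["epochs"], batch_size=HPARAMS["batch_size"])`, where `x` has shape `(samples, TIMESTEPS, N_FEATURES)` and `y` holds integer class indices.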

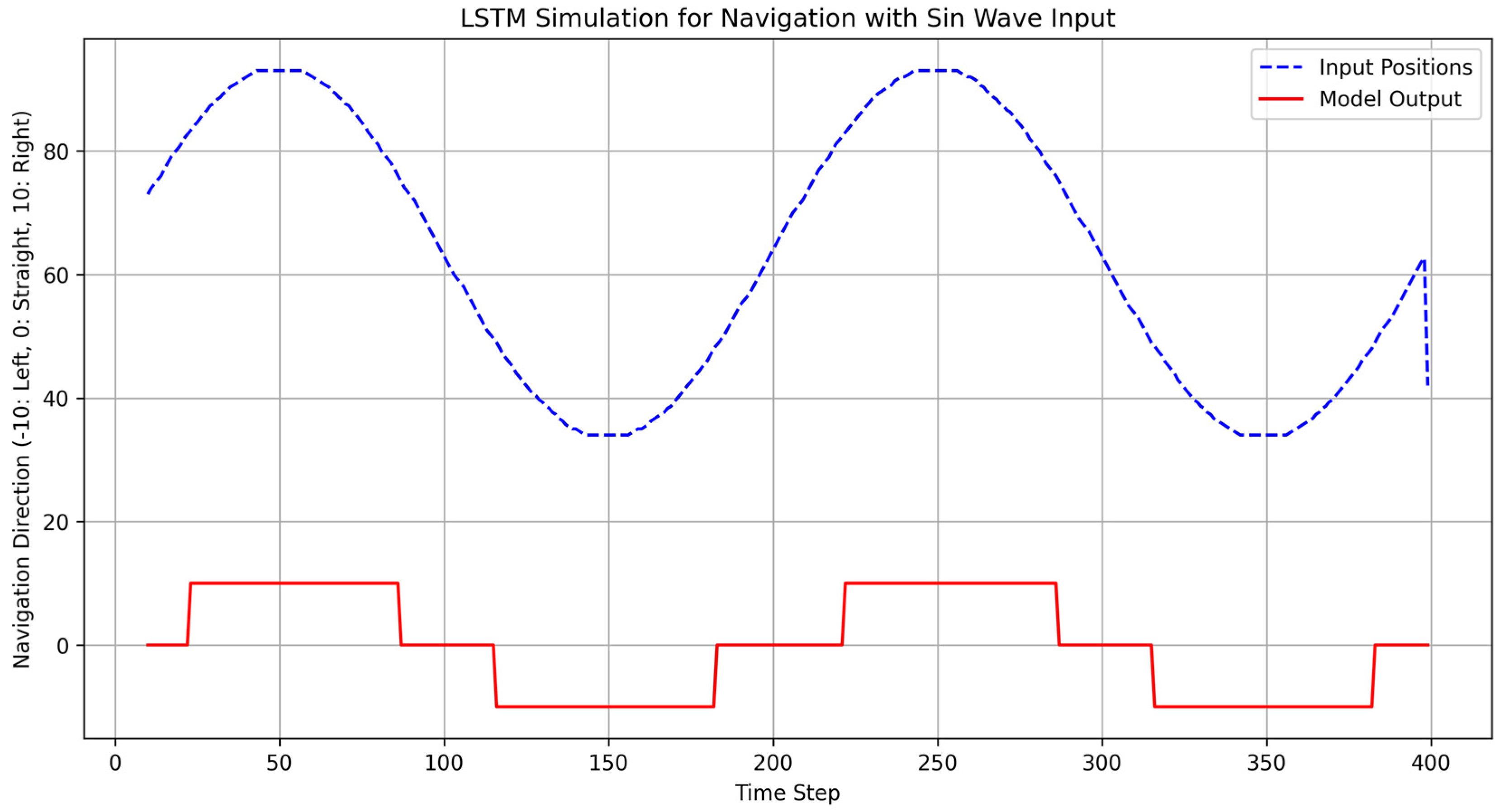

We iteratively generate 128 training points within the range of 35 to 95, enabling the LSTM model to learn three types of movements: moving forward (output 0), turning right (output 10), and turning left (output −10), as shown in Figure 7. The results clearly show that when the input value is around 64, the model outputs 0, indicating forward movement; when the input is approximately 40, it outputs −10, signifying a left turn; and when the input is near 80, the output is 10, corresponding to a right turn.
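The labelling of such training points can be sketched as follows. The even spacing of the points and the dead band of ±8 pixels around the center are assumptions chosen only so that inputs near 64, 40, and 80 map to the three outputs described above; the function names are hypothetical.

```python
FORWARD, RIGHT, LEFT = 0, 10, -10
CENTER = 64
DEAD_BAND = 8  # assumed half-width of the "go straight" zone, in pixels


def steering_label(position):
    """Map a tape position (pixel index) to one of the three outputs."""
    if position > CENTER + DEAD_BAND:
        return RIGHT
    if position < CENTER - DEAD_BAND:
        return LEFT
    return FORWARD


def make_training_points(n_points=128, low=35, high=95):
    """Generate evenly spaced positions in [low, high] with their labels."""
    step = (high - low) / (n_points - 1)
    positions = [low + i * step for i in range(n_points)]
    labels = [steering_label(p) for p in positions]
    return positions, labels
```

Calling `make_training_points()` yields 128 position and label pairs that cover all three movement types.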

In the absence of the LSTM component, the system loses its predictive ability. As demonstrated in Figure 8, relying solely on a PD (proportional-derivative) controller leads to subpar control performance. This method uses the distance between the image point and the mid-point as the error and adds its derivative term to form a simple traditional control law. In contrast, Figure 9 presents the simulation results after integrating the trained LSTM model and its learned weights. The results clearly indicate that the inclusion of LSTM enables the cart to follow the desired path accurately, while without it, the cart fails to execute proper turns and moves erratically. This is mainly due to the characteristics of the data and the problem at hand. The data source, i.e., the linear CCD data for the two-wheeled cart used in this study, contains complex, non-linear, and time-varying patterns that are difficult to model accurately using traditional control methods.
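The PD baseline described above can be sketched as a small controller class. The class name, the gains, the sample time, and the backward-difference derivative are assumptions for illustration; the gains used in the simulation are not stated here.

```python
class PDController:
    """Proportional-derivative control on the tape offset from the mid-point."""

    def __init__(self, kp, kd, dt):
        self.kp = kp          # proportional gain (assumed value)
        self.kd = kd          # derivative gain (assumed value)
        self.dt = dt          # sample time in seconds (assumed value)
        self._prev_error = None

    def update(self, position, midpoint=64):
        """Return the steering command for the current tape position."""
        error = position - midpoint
        if self._prev_error is None:
            derivative = 0.0  # no history on the first sample
        else:
            derivative = (error - self._prev_error) / self.dt
        self._prev_error = error
        return self.kp * error + self.kd * derivative
```

Because this law reacts only to the current offset and its rate of change, it has no memory of earlier scans, which is the limitation the LSTM is intended to address.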

4. Practical Implementation Results

The cart body used in the actual experimental implementation is shown in Figure 10. In real-world applications, especially when implementing the model on embedded systems or devices with limited computational resources, such as the two-wheeled self-balancing cart in this study, machine code offers significant advantages. Machine code is the lowest level of programming representation that can be directly executed by the hardware. By converting the trained weights into machine code, we can eliminate the overhead associated with higher level programming languages and frameworks. This results in faster execution speeds, reduced memory consumption, and improved real-time performance, which are crucial for the real-time control and operation of the self-balancing cart. Moreover, machine code enables better integration with the specific hardware architecture of the target device. It allows for fine-grained optimization tailored to the unique features and capabilities of the hardware, further enhancing the overall efficiency of the model.

When moving from simulation to real-world testing, several significant challenges emerge. Firstly, the simulation environment is often an idealized representation that fails to account for the complex and unpredictable real-world factors. For instance, in the case of the two-wheeled self-balancing cart, simulation may not accurately replicate variations in terrain, ambient noise, and interference from external electromagnetic fields. These real-world elements can significantly affect the performance of the control system based on the trained LSTM model. Secondly, hardware limitations pose a major hurdle. In real-world testing, the physical sensors and actuators of the self-balancing cart may have inherent inaccuracies, non-linearities, and signal delays that are not present in simulations. Calibrating these components to work in harmony with the model can be extremely challenging and time-consuming. Another critical factor is data deviation. The data collected during real-world testing may have different statistical characteristics compared to the simulated data used for training. This data mismatch can lead to the poor generalization of the model, resulting in suboptimal performance.

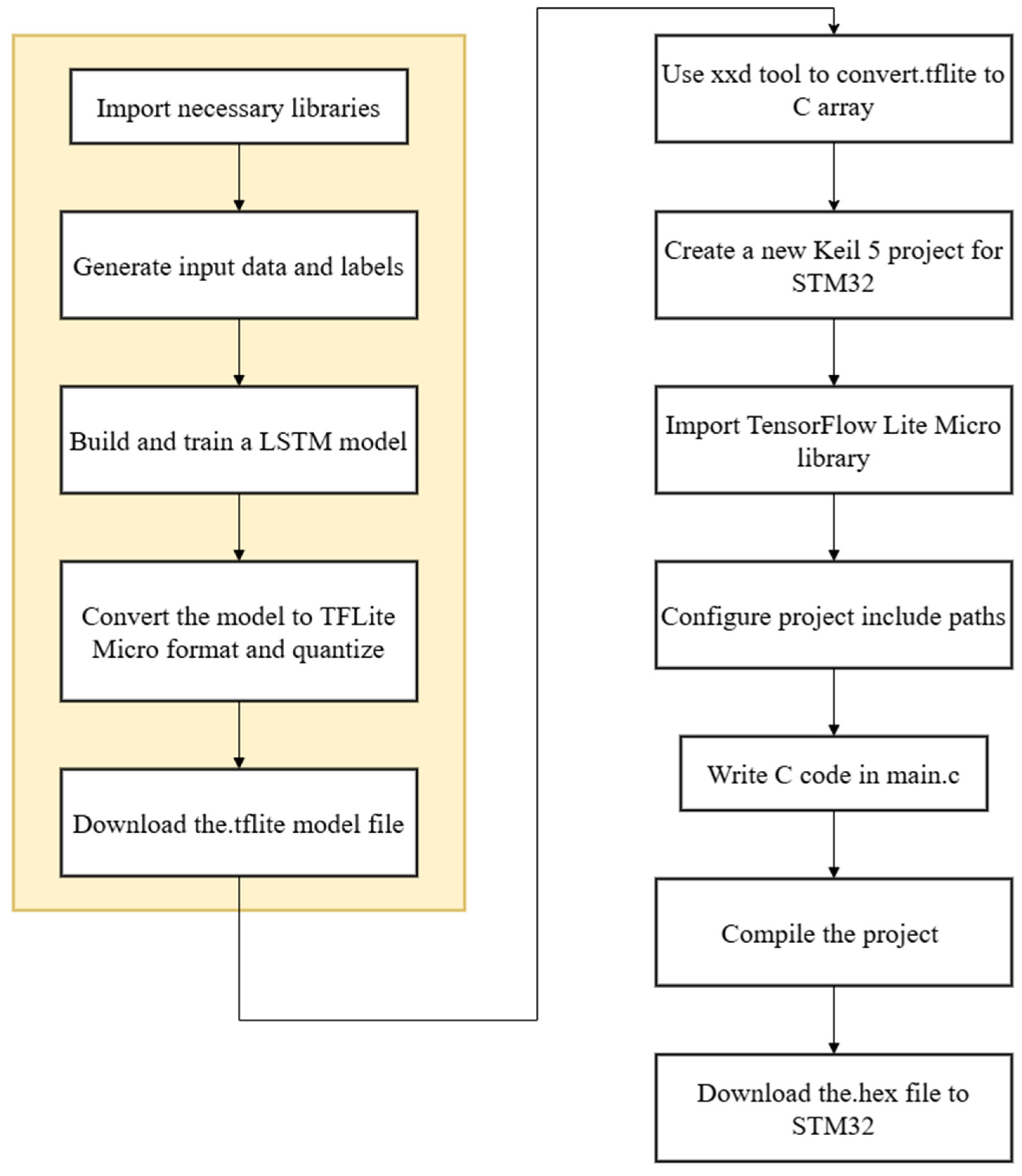

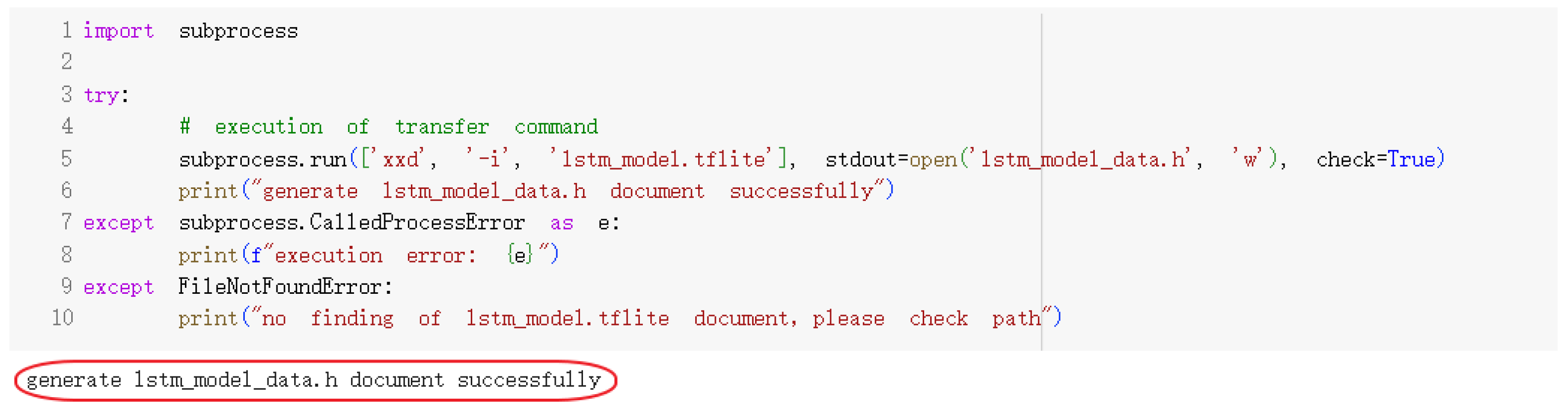

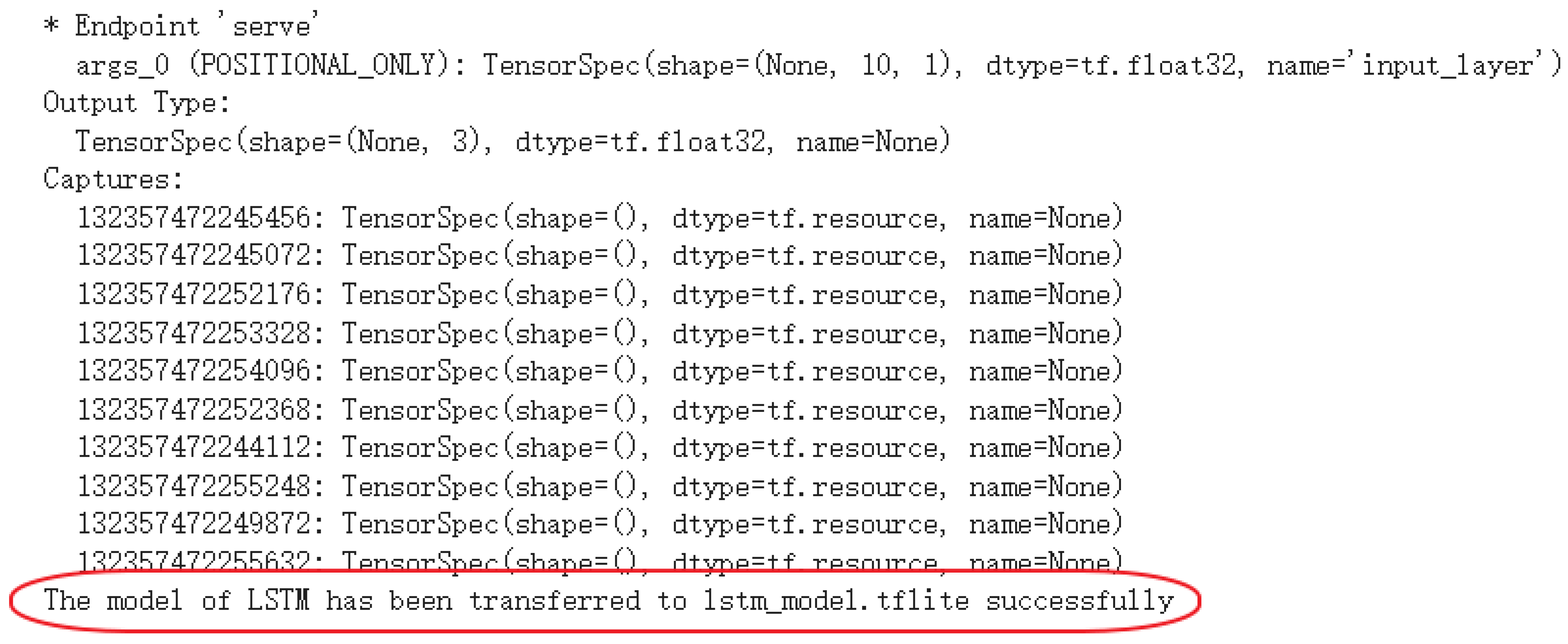

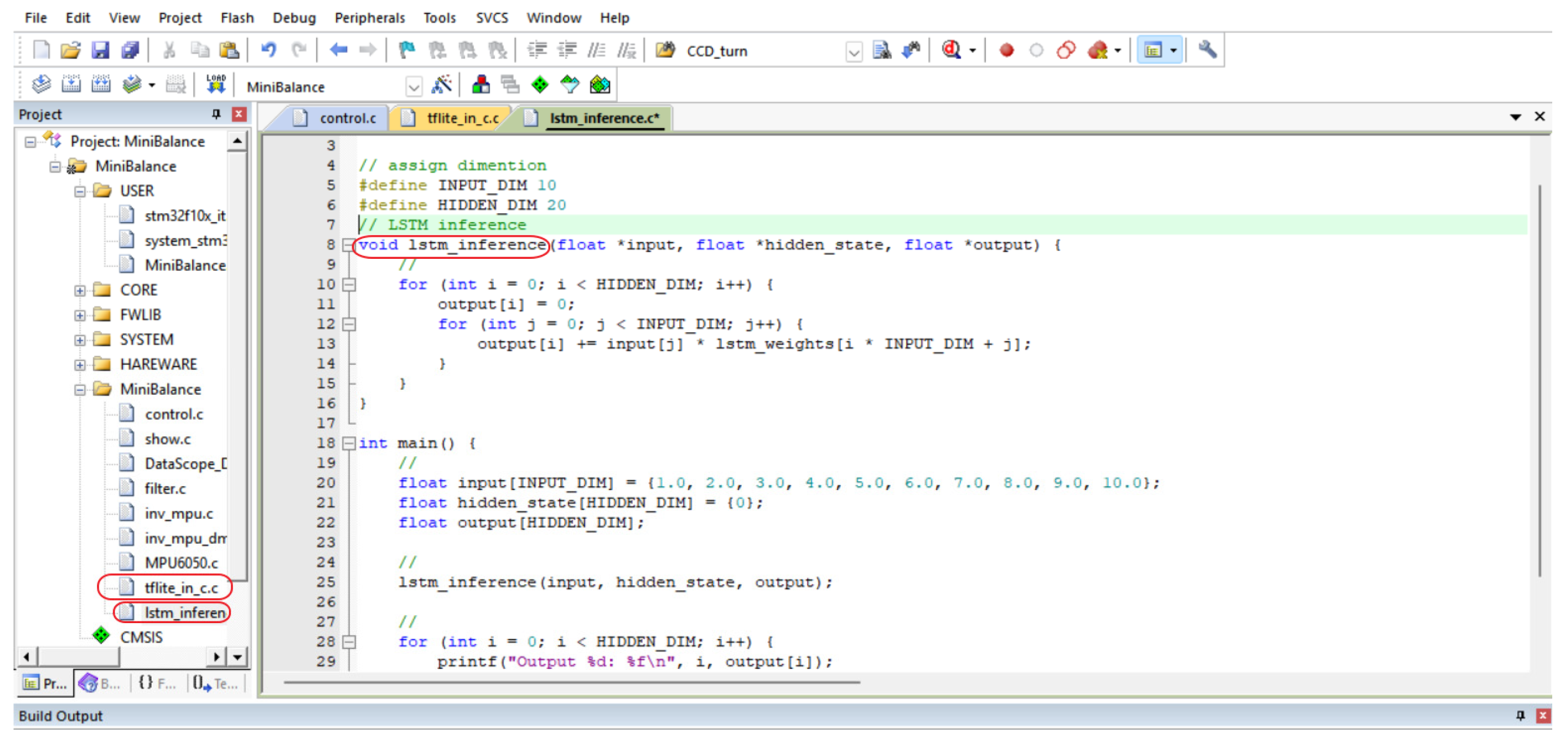

Since we need to load the TensorFlow model data into the STM32 MCU [27], an installation step is necessary. We employ TensorFlow Lite [24], and its implementation steps are illustrated in Figure 11. The screenshots of several important software files during generation are shown in Figure 12 and Figure 13. The software development environment, uVision 5 developed by Keil Corp. [25], is utilized to generate machine code, as depicted in Figure 14.
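The model-to-C step can be sketched in Python as follows. This is an illustrative sketch, not the exact tool chain of this study: the function names and the array name `lstm_model` are hypothetical, and TensorFlow is imported lazily so that the C-array helper runs on its own. Depending on the TensorFlow version, converting LSTM layers may require additional converter settings, none of which are shown here.

```python
def bytes_to_c_array(data, name="lstm_model", per_line=12):
    """Render a byte string as C source defining an array and its length."""
    lines = [f"const unsigned char {name}[] = {{"]
    for i in range(0, len(data), per_line):
        chunk = data[i:i + per_line]
        lines.append("  " + ", ".join(f"0x{b:02x}" for b in chunk) + ",")
    lines.append("};")
    lines.append(f"const unsigned int {name}_len = {len(data)};")
    return "\n".join(lines) + "\n"


def convert_keras_model(model):
    """Serialize a trained Keras model to TensorFlow Lite bytes."""
    import tensorflow as tf  # lazy import: only needed for conversion

    converter = tf.lite.TFLiteConverter.from_keras_model(model)
    return converter.convert()
```

The string returned by `bytes_to_c_array(convert_keras_model(model))` can be written to a `.h` or `.c` file and added to the embedded project, where it is compiled together with the control code.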

Figure 11,

Figure 12 and

Figure 13 illustrate a continuous process. This process primarily demonstrates how we utilize Python to convert an LSTM model into a .tflite header file. Subsequently, we transform this header file into a C-language module. This module can be integrated into our software project for joint compilation, generating machine code that is then flashed into the program memory of the hardware. The experimental results are presented in

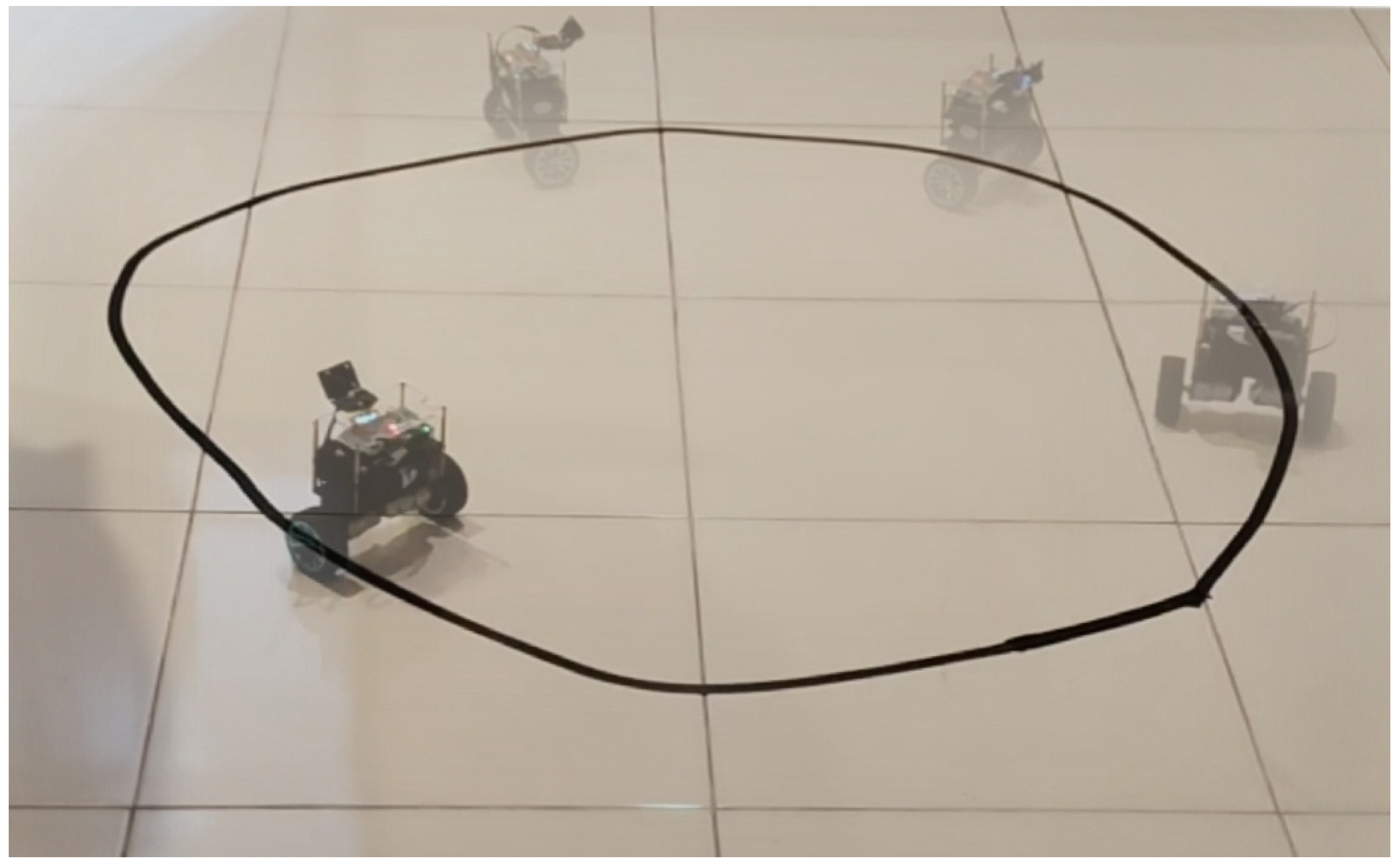

Figure 15. In practice, we observe that the image tracking performance of the two-wheeled self-balancing cart is excellent.

This approach still has the following limitations. First, the method is highly dependent on the quality and quantity of the linear CCD data. When the data contain substantial noise or outliers, the performance of the method may deteriorate. Moreover, the proposed approach is validated only under specific experimental conditions, namely controlled indoor environments. Its effectiveness in more intricate real-world scenarios, including varying lighting conditions or high-speed motion, still needs to be further explored.

5. Conclusions

This paper presents an application that harnesses the memory capabilities of Long Short-Term Memory (LSTM) neural networks for sequence signals, providing a new solution for the precise control of two-wheeled self-balancing carts. A linear array CCD sensor is used to detect the black tape on the ground. After establishing a series of continuous training sample points, Python and TensorFlow are employed to train the LSTM network and optimize its weights. The efficacy of the training results is verified by simulating the symmetrical steering control of a two-wheeled cart. This process validates the proposed design method. Experimental results demonstrate that this method is effective, with robust image-tracking capabilities and efficient computational performance.

The proposed LSTM-based control strategy can be applied in various real-world scenarios. For example, in two-wheeled self-balancing carts used in industrial automation, logistics, or domestic services, it can be developed into an effective control strategy to enhance the safety, stability, and efficiency of the robots in these applications. It may also extend to other types of mobile robots, intelligent transportation systems, or wearable devices that require precise symmetrical motion control.