Abstract

Adversarial attacks on visual object tracking aim to degrade tracking accuracy by introducing imperceptible perturbations into video frames, exploiting vulnerabilities in neural networks. In real-world symmetrical double-blind engagements, both attackers and defenders operate with mutual unawareness of strategic parameters or initiation timing. Black-box attacks based on iterative optimization show excellent applicability in this scenario. However, existing state-of-the-art adversarial attacks based on iterative optimization suffer from high computational costs and limited effectiveness. To address these challenges, this paper proposes the Universal Low-frequency Noise black-box attack method (ULN), which generates perturbations through discrete cosine transform to disrupt structural features critical for tracking while mimicking compression artifacts. Extensive experimentation on four state-of-the-art trackers, including transformer-based models, demonstrates the method’s severe degradation effects. GRM’s expected average overlap drops by on VOT2018, while SiamRPN++’s AUC and Precision on OTB100 decline by and , respectively. The attack achieves real-time performance with a computational cost reduction of over compared to iterative methods, operating efficiently on embedded devices such as Raspberry Pi 4B. By maintaining a structural similarity index measure above , the perturbations blend seamlessly with common compression artifacts, evading traditional spatial filtering defenses. Cross-platform experiments validate its consistent threat across diverse hardware environments, with attack success rates exceeding even under resource constraints. These results underscore the dual capability of ULN as both a stealthy and practical attack vector, and emphasize the urgent need for robust defenses in safety-critical applications such as autonomous driving and aerial surveillance. 
The efficiency of the method, when combined with its ability to exploit low-frequency vulnerabilities across architectures, establishes a new benchmark for adversarial robustness in visual tracking systems.

1. Introduction

Visual object tracking is an important area of computer vision. A tracker locates the target in each frame of a video sequence by inferring over successive frames, thereby providing the target’s trajectory and activity area. The performance of visual object tracking algorithms has improved rapidly in recent years, and such algorithms are now widely used in autonomous driving [1], biological behavior monitoring [2], and aerial visual object tracking [3]. Visual object tracking algorithms aim to track objects of any class without restriction. Single object tracking (SOT) is a fundamental problem in this field, and the main goal of current work in this direction is to further improve the accuracy and stability of the tracker. SOT algorithms have made great progress on both counts, and the use of deep neural networks (DNNs) is one of the important branches of this work. However, it has been shown that DNN-based visual object tracking models can suffer a significant loss of performance [4]. Various perturbations that occur in video frames, such as drastic changes in illumination, object occlusion, cluttered backgrounds, motion blur, or fast motion, can lead to serious deviations in the predicted box. Research on adversarial attacks investigates how to generate more effective perturbations, and thereby provides a reference for enhancing the performance and stability of trackers.

Adversarial examples for natural image classification models have been studied extensively [5,6,7,8], and investigating the weaknesses of visual object trackers is equally important. Using adversarial attacks to expose the vulnerabilities of a tracker provides valuable information for enhancing its robustness. Based on the extent of the attacker’s knowledge, adversarial attacks can be categorized into white-box and black-box attacks. White-box attacks assume that the structure and process of the tracking algorithm are known. Because they require the attacker to have full knowledge of the model and training plan, white-box attacks are useful for providing security guidance against insider attacks. In practice, however, machine learning cloud service platforms only provide APIs for developers to train models, making it difficult for attackers to access process parameters or relevant training details. In most embedded micro-SOT devices, the terminal transmits video frames to a server for tracking and analysis, and this process creates opportunities for black-box attacks. These attacks exploit vulnerabilities in the transmission process, causing the tracker to fail simply by modifying the input. Black-box attacks typically occur in relatively constrained scenarios where the attacker has limited access. Specifically, the attacker can only obtain the input and output of the tracker, without the ability to access preceding or succeeding frames during the attack.

In the scenario of object tracking, the adversarial dynamics between attackers and defenders are inherently symmetric, as both parties operate under similar constraints of partial information and unknown adversary strategies. This symmetry is particularly evident in real-world scenarios where both the attacker, crafting perturbations to deceive the tracker, and the defender, designing robust algorithms to maintain tracking accuracy, must base their strategies on identical observable information without knowledge of each other’s specific tactics or implementations. In this case, our goal is to design an attack that keeps the computational cost low and requires no prior training, and which is easily deployed and implemented on any device while achieving an effective perturbation. The algorithm strikes a balance between computational efficiency and destructive capability, demonstrating a high level of feasibility, which ensures that the attack can be practically implemented without extensive computational resources. Additionally, the characteristics of the attack noise make it easy to disguise as artifacts resulting from image compression. Such artifacts are quite common in devices with limited computational power, making the attack less detectable. This poses a significant challenge for modern tracking devices, underscoring the need for improved defensive strategies.

In this paper, our research focuses on adding perturbations to video frames and evaluating their impact on various mainstream SOT frameworks in terms of performance degradation and attack computational cost. To this end, we propose the Universal Low-frequency Noise black-box attack (ULN) and evaluate the feasibility and effectiveness of this attack across various tracking scenarios. In summary, our main contributions are as follows:

- We present the Universal Low-frequency Noise black-box attack, which generates perturbations solely through iterative search and query processes. In each iteration, we pregenerate an orthogonal vector space and sample perturbations from the low-frequency discrete cosine transform (DCT) space. In addition, we use a sampling–reconstruction strategy to reduce the query cost of perturbation generation.

- The proposed attack eliminates the need for pretraining a generator or utilizing subsequent video frames, and also significantly reduces the time required for each iteration, ensuring low hardware requirements and enabling the use of parallel computing for enhanced efficiency.

- Experiments are a crucial part of our attack evaluation. We conducted extensive attack experiments against trackers in four prominent domains, demonstrating the robust versatility and effectiveness of the proposed attack algorithm.

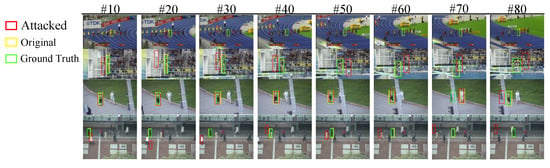

By demonstrating the practical applicability of ULN, we aim to provide insights into enhancing the robustness of visual object tracking systems against adversarial attacks. Figure 1 shows that the proposed attack causes the tracker to lose the target.

Figure 1.

Visualization of the ULN attack on OTB100. Subtle perturbations cause the SiamRPN++ tracker to gradually deviate from the ground-truth box.

2. Background

2.1. Visual Object Tracking

Siamese-based trackers use twin CNN architectures to perform similarity matching for visual object tracking. They are mainly composed of two CNN branches: one for learning the feature representation of the target template and the other for learning the feature representation of the search region. To date, a large number of Siamese-based trackers have been proposed. Among CNN-based trackers, SiamRPN++ [9] showed excellent accuracy and computational efficiency; it aggregates features at different levels by using a deeper network with a simple but effective spatially aware sampling strategy.

Discriminative-model-based trackers introduce a tracking architecture that supports end-to-end training and enables direct prediction of the target during the testing phase. DiMP [10] improves the flexibility of the architecture through two key designs: a steepest-descent method that computes the optimal step size in each iteration, and a module that effectively initializes the target model.

Transformers have achieved remarkable results since being applied to the field of Natural Language Processing (NLP). Transformer-based trackers fall into two forms: CNN-transformer and fully transformer. The former uses two identical pipelines in a Siamese-like network, at the beginning of which features are extracted from the target template and the search region using a CNN trunk. The latter performs the single object tracking task using a full transformer architecture. Combining a transformer with a CNN, TrTr [11] was proposed to capture the connection between the target template and the search region; such trackers are trained to obtain global information about the target template. GRM [12] is a fully-transformer-based tracker that classifies the input tokens into three categories, namely template tokens, search tokens suitable for cross-relational modeling, and search tokens unsuitable for relational modeling. In tests of trackers with a range of current architectures [14] on the LaSOT benchmark dataset [13], GRM showed comparatively excellent success rates and Precision.

2.2. Adversarial Attacks

Black-box attacks contain both single-frame attacks against the original template and frame-by-frame attacks that provide comprehensive template information. These attacks can be executed in different forms, such as training a generator to produce adversarial noise or performing iterative searches to find effective perturbations.

Hijacking [15] improves the effectiveness of the attack by adding misleading perturbations in both shape and position with a proposed adaptive optimization method. CSA [16] uses the U-Net architecture as a perturbation generator together with a novel loss function, which consists of a cooling loss for the disturbance classification map and a shrinking loss for the disturbance regression map. Only Once Attack [17] provides two basic types of attacks targeting the initial frame: position offset attacks and direction offset attacks, which can be further combined to create more complex directional attacks. Efficient Universal Shuffle Attack (EUSA) [18] employs a greedy gradient strategy and a triple loss design, improving attack efficiency and performance by capturing and attacking model-specific feature representations. SPARK [19] proposes an online incremental attack method based on spatial awareness; by generating spatial-temporal sparse incremental perturbations online, it makes the adversarial attack less detectable. One-shot Attack (OA) [20] perturbs only the target region, with small pixel values, in the initial frames of the video, and adds an attention mechanism to its two loss functions to further improve the effectiveness of the attack. IOU attack [21] is an iteration-based black-box attack that focuses on the image content and objects in a video sequence. Using an iterative orthogonal combination approach, it randomly generates noise of the same level in each iteration to find the tangential perturbation with the lowest score. After generating a heavily perturbed initial frame, Discretely Masked Black-Box Attack [22] uses an A2C (Actor-Critic) grid search strategy to reduce the large-scale adversarial perturbations in specific regions of the initial frame. TTP [23] designs a temporally transferable adversarial perturbation generation framework that generates a single perturbation and applies it to the search region in subsequent video frames.

Adversarial attacks based on deep networks can be highly effective, but deploying them requires training on extensive video datasets, which involves a significant amount of data and does not always guarantee practical effectiveness. Iterative search-based attacks often rely on querying random Gaussian noise, which incurs high query costs and results in low efficiency in finding effective perturbations. Additionally, although IOU attack performed poorly against transformer-based trackers in experiments, it provided a valuable insight: “plug-and-play” should be a core objective in the design of iteration-based attacks. Specifically, unlike template attacks, attacks in real-world scenarios should not be confined to the initialization phase, as tracking models often already have complete features of the target and search area. An attack should therefore be executable at any frame during the tracking process and still cause effective misdirection. For attackers, as long as the output target bounding box can be acquired, the most effective perturbations can be queried for any unknown tracker. Based on this assumption and requirement, the proposed attack focuses solely on the features of the current frame while recording the impact of historical perturbations. In the engineering experiments for the proposed attack scenario, we considered execution on CPUs, GPUs, and even embedded devices, and assessed the ability to perform more efficient queries with the support of parallel computing. Our goal is to quantify the practicality and applicability of the proposed attack across different computational platforms.

Finally, research shows that there are now a large number of transformer-based visual object trackers [14]. While most recent attack tests have concentrated on Siamese-based trackers, it is crucial to validate attacks against CNN-transformer and fully-transformer-based trackers as well. This validation is essential to fully reveal the applicability and effectiveness of the proposed attack in exposing the weaknesses of these advanced tracking models.

2.3. Low-Frequency Perturbation

In the context of Shannon’s information theory, the fundamental characteristics of visual data are encoded in their frequency components. For natural images, most of the perceptually relevant information, such as object shapes, edges, and textures, is concentrated in the low-frequency domain. This is because low-frequency components capture the broad structural properties of an image, which are essential for object recognition and tracking. From an information-theoretic perspective, low-frequency noise is more energy-efficient for disrupting tracking models. Since the majority of the signal’s energy in natural images is concentrated in the low-frequency domain, perturbations in this range can achieve significant disruption with relatively small magnitudes. This efficiency, combined with its ability to target the most information-rich components of the visual signal, makes low-frequency noise a powerful tool for compromising tracking performance. Based on the context, the adversarial nature of low-frequency noise for tracking models is further validated.

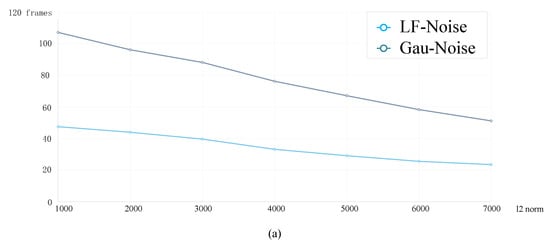

Results, as shown in Figure 2, align with the theoretical analysis, as low-frequency noise directly disrupts the structural information of the target, which is critical for maintaining accurate tracking. In contrast, Gaussian noise, while disruptive, distributes its energy across all frequency bands and does not specifically target the low-frequency components that are most important for object recognition and tracking. Given that visual object tracking models rely heavily on extracting these low-frequency features to maintain target identification and spatial consistency across frames, perturbations in this domain can have a disproportionate impact on model performance. Low-frequency noise, by its very nature, disrupts the critical information that tracking algorithms depend on, making it an effective vector for adversarial attacks. In addition, Guo et al. [24] attempted to use low-frequency noise to interfere with the prediction results of object classifiers. Qiao et al. [25] created poisoned samples for backdoor attacks through low-frequency noise.

Figure 2.

Adversarial effects of low-frequency random noise (LF-Noise) and Gaussian random noise (Gau-Noise) on the video tracking process at different intensities: (a) the median index of the frame in the sequence at which the target is first lost; (b) the IOU between the predicted bounding box and the ground-truth bounding box before the target is lost.
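The contrast between the two noise types can be illustrated with a small, self-contained sketch. It is not the authors' implementation: the function names, the 8×8 size, and the `cutoff` parameter are assumptions chosen for illustration, and a practical version would use a vectorized DCT routine. Low-frequency noise is built by drawing random DCT coefficients only inside the top-left `cutoff × cutoff` block and inverting the transform, so all of its energy stays in the low band, whereas Gaussian pixel noise spreads its energy over every coefficient.

```python
import math
import random

def dct_basis(n):
    """Orthonormal DCT-II matrix B, where B[u][x] is frequency u at position x."""
    basis = []
    for u in range(n):
        scale = math.sqrt(1.0 / n) if u == 0 else math.sqrt(2.0 / n)
        basis.append([scale * math.cos(math.pi * (2 * x + 1) * u / (2 * n))
                      for x in range(n)])
    return basis

def idct2(coeffs, basis):
    """Inverse 2-D DCT: image[x][y] = sum over (u, v) of coeffs[u][v] * B[u][x] * B[v][y]."""
    n = len(basis)
    return [[sum(coeffs[u][v] * basis[u][x] * basis[v][y]
                 for u in range(n) for v in range(n))
             for y in range(n)] for x in range(n)]

def dct2(image, basis):
    """Forward 2-D DCT, the transpose of idct2 because the basis is orthonormal."""
    n = len(basis)
    return [[sum(image[x][y] * basis[u][x] * basis[v][y]
                 for x in range(n) for y in range(n))
             for v in range(n)] for u in range(n)]

def low_frequency_noise(n, cutoff, sigma, rng):
    """Random noise whose DCT coefficients are nonzero only for u, v < cutoff."""
    coeffs = [[rng.gauss(0.0, sigma) if (u < cutoff and v < cutoff) else 0.0
               for v in range(n)] for u in range(n)]
    return idct2(coeffs, dct_basis(n))

def low_band_energy_fraction(image, cutoff):
    """Share of the total energy that lies in the low-frequency block."""
    spectrum = dct2(image, dct_basis(len(image)))
    total = sum(c * c for row in spectrum for c in row)
    low = sum(spectrum[u][v] ** 2 for u in range(cutoff) for v in range(cutoff))
    return low / total

rng = random.Random(0)
lf_noise = low_frequency_noise(8, 2, 1.0, rng)
gau_noise = [[rng.gauss(0.0, 1.0) for _ in range(8)] for _ in range(8)]
print("LF-Noise low-band share: ", low_band_energy_fraction(lf_noise, 2))
print("Gau-Noise low-band share:", low_band_energy_fraction(gau_noise, 2))
```

For the same total energy, the low-frequency noise concentrates all of its budget on the coefficients that the text identifies as most important for tracking.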

3. Method

3.1. Motivation

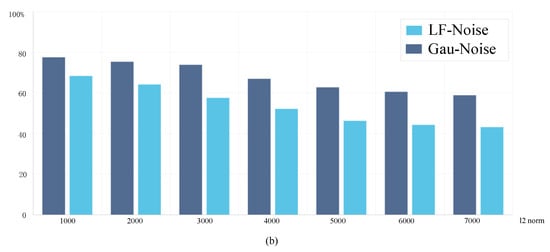

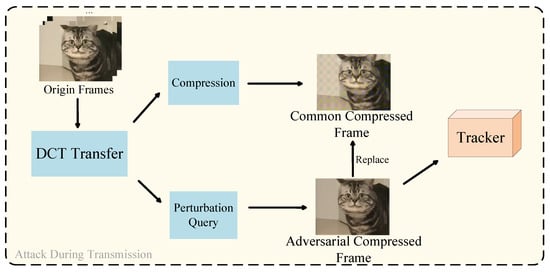

On the basis that low-frequency noise is significantly adversarial for tracking models, we outline the specific attack flow of ULN attack in this section. The proposed attack scenario begins by presenting the problem definition and subsequently delves into the attack’s detailed components. Figure 3 illustrates the overall structure of the attack, providing a visual representation of its key components.

Figure 3.

The overall process of ULN attack.

A non-targeted attack strives to make the predictions of the tracker lower than the original tracking effect, so the final goal of our attack is to ensure as quickly as possible that the tracker degrades in accuracy and eventually loses the tracked target. For a video sequence, , where represent the sequence length, width of the frame, height of the frame, and the channel number of the color, respectively. In the datasets using One Pass Evaluation (OPE), the first frame is used to provide the features of the target. The attack begins on the second frame. In general, the clean frames are replaced by , and then enter into the tracker in succession. For a sequence I in another type of dataset that can be relocated after the object is lost, the tracker can skip frames and reset itself as the target is lost during the tracking process. On all frames except these, the attacker attempts to perform a perturbed query by. Overall, we describe the target of our adversarial attack as:

As stated, we use the constraint for the perturbation added to the attack region to ensure that our attack can fool the human eye, which represents the l2 norm constraint on the perturbations generated at each iteration. After the iteration completes, the generator computes the Structural Similarity Index Measure (SSIM) to further assess the distortion introduced to the frame. These evaluations are crucial in determining the effectiveness and deceptiveness of the attack.
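The two imperceptibility checks mentioned above can be sketched as follows. This is a simplified illustration rather than the evaluation code used in the paper: `global_ssim` is a hypothetical helper that computes SSIM from whole-image statistics, whereas standard SSIM averages the same expression over local windows. The constants `(0.01 L)^2` and `(0.03 L)^2` are the conventional stabilizers for dynamic range `L`.

```python
import math

def l2_norm(perturbation):
    """l2 norm of a 2-D perturbation given as a list of rows."""
    return math.sqrt(sum(v * v for row in perturbation for v in row))

def global_ssim(img_a, img_b, dynamic_range=255.0):
    """Single-window SSIM computed from global means, variances, and covariance."""
    a = [v for row in img_a for v in row]
    b = [v for row in img_b for v in row]
    n = len(a)
    mu_a, mu_b = sum(a) / n, sum(b) / n
    var_a = sum((v - mu_a) ** 2 for v in a) / n
    var_b = sum((v - mu_b) ** 2 for v in b) / n
    cov = sum((x - mu_a) * (y - mu_b) for x, y in zip(a, b)) / n
    c1 = (0.01 * dynamic_range) ** 2
    c2 = (0.03 * dynamic_range) ** 2
    return ((2 * mu_a * mu_b + c1) * (2 * cov + c2)) / (
        (mu_a ** 2 + mu_b ** 2 + c1) * (var_a + var_b + c2))

# Toy 8x8 frame and a small checkerboard perturbation (illustrative values only).
clean = [[10.0 * (r + c) for c in range(8)] for r in range(8)]
delta = [[2.0 * (-1) ** (r + c) for c in range(8)] for r in range(8)]
attacked = [[p + d for p, d in zip(row, drow)] for row, drow in zip(clean, delta)]
print("l2 norm of perturbation:", l2_norm(delta))
print("SSIM(clean, attacked):  ", global_ssim(clean, attacked))
```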

3.2. ULN Attack

Experimental evidence [24,26] has demonstrated that adversarial noise is more likely to be found in the low-frequency DCT space, and that such noise can remain effective against low-pass filtering defenses. The Discrete Cosine Transform (DCT) has been extensively used in image compression algorithms, including the popular JPEG encoder [27]. However, it has faced criticism, primarily because of the noticeable artifacts and distortion it introduces [28]. We leverage this inherent limitation to artificially generate noise that resembles compression distortion, and then search for the most adversarial noise, i.e., the noise with the greatest impact on the tracker. As Figure 4 illustrates, such noise is common in a variety of compressed images. Constrained by the attack magnitude, the attacker chooses the most effective region in which to inject perturbations. In each complete tracking process, for the frames after the initialization frame, we take the given search region as the center and crop a square as the attack region. In each of the following frames, the attacker crops out a region for querying, using the prediction given by the tracker in the previous frame as a reference. The cropped image region is then used as input to the sampling–reconstruction module, where it undergoes interpolation and simple feature extraction to accelerate low-frequency perturbation queries.

Figure 4.

A hypothetical, easy-to-implement attack scenario that retrieves effective adversarial low-frequency noise to disguise as compression artifacts.
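The attack-region cropping described in this subsection can be sketched as below. The helper name `square_attack_region`, the `(x, y, w, h)` box convention with a top-left origin, and the `scale` factor are all assumptions made for illustration; the text does not specify how large the square is relative to the previous prediction.

```python
def square_attack_region(pred_box, frame_w, frame_h, scale=2.0):
    """Return (left, top, side) of a square crop centered on the previous prediction.

    pred_box is (x, y, w, h) with (x, y) the top-left corner. The square is
    clamped so that it always lies fully inside the frame.
    """
    x, y, w, h = pred_box
    center_x, center_y = x + w / 2.0, y + h / 2.0
    side = int(round(scale * max(w, h)))
    side = max(1, min(side, frame_w, frame_h))
    left = int(round(center_x - side / 2.0))
    top = int(round(center_y - side / 2.0))
    left = min(max(left, 0), frame_w - side)
    top = min(max(top, 0), frame_h - side)
    return left, top, side

# Previous-frame prediction well inside a 640x480 frame.
print(square_attack_region((100, 80, 40, 20), 640, 480))
# Prediction touching the frame corner: the crop is shifted back inside.
print(square_attack_region((0, 0, 40, 20), 640, 480))
```

Clamping rather than padding keeps every query inside real image content, which is one reasonable choice when the target drifts toward the frame border.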

For efficient sampling of low-frequency perturbations, the sampled attack region images are passed through a chunked random index generation module to generate random low-frequency vector indices. The core of this is the construction of the dynamic mapping relationship of the orthogonal vector space in the DCT domain. Given the image size, number of channels, initial block size , and expansion step , the algorithm generates the low-frequency perturbation index matrix by hierarchical random ordering.

In summary, this module first generates an initial block starting from the upper left corner of the image. The indexes of all elements in the initial block are randomized to form the initial sampling space for the low-frequency perturbation. Then, starting from the edge of the initial block, it is expanded outwards layer by layer with stride as the step size. At each expansion, the current ring matrix is divided into two submatrices in the vertical direction (column expansion) and in the horizontal direction (row expansion), which are filled with randomly arranged indices. For each padding position , the number of newly padded elements is . The attacker generates a randomized queue and splits it into vertical and horizontal components.

where . This strategy ensures that the perturbation energy gradually spreads from the low-frequency core matrix to the mid-frequency matrix, while maintaining the continuity of the indexes. Upon completion of the ring expansion, the newly generated indexes are superimposed into the global index matrix, and the total number of indexes is updated. The function constructs a multi-scale perturbation sampling space covering the DCT domain from low to intermediate frequencies using incremental padding. In the final step, the 3D index matrix is transformed into a one-dimensional vector, and a global index sequence is generated and sorted by value. The sequence, thus, provides a prioritization guide for the attack algorithm, enabling preferential access to high-energy perturbation directions in the low-frequency region during the iterative search, and hence, enhancing the attack efficiency. Algorithm 1 shows the pseudo-code.
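A minimal sketch of the hierarchical random ordering described above is given here, under stated assumptions. The function name and parameters (`size`, `channels`, `init_block`, `stride`, `seed`) are hypothetical, and the sketch returns `(channel, row, column)` tuples in priority order instead of building and sorting an index matrix; the resulting prioritization from low to intermediate frequencies is the same idea. In this sketch, a full ring that starts at edge `e` contributes `channels * (2 * stride * e + stride**2)` new positions.

```python
import random

def low_frequency_index_order(size, channels, init_block=1, stride=1, seed=None):
    """Order DCT coefficient positions from the low-frequency core outwards.

    Positions inside the initial top-left block come first (shuffled), then
    each ring of width `stride` around it, with every ring shuffled internally.
    """
    rng = random.Random(seed)
    init_block = min(init_block, size)
    order = [(c, r, k) for c in range(channels)
             for r in range(init_block) for k in range(init_block)]
    rng.shuffle(order)
    edge = init_block
    while edge < size:
        outer = min(edge + stride, size)
        # Column expansion: the new columns, for all rows up to the new edge.
        column_part = [(c, r, k) for c in range(channels)
                       for r in range(outer) for k in range(edge, outer)]
        # Row expansion: the new rows, for the columns that already existed.
        row_part = [(c, r, k) for c in range(channels)
                    for r in range(edge, outer) for k in range(edge)]
        ring = column_part + row_part
        rng.shuffle(ring)
        order.extend(ring)
        edge = outer
    return order

order = low_frequency_index_order(size=8, channels=3, init_block=2, stride=2, seed=0)
print(len(order), order[:4])
```

An attacker iterating over `order` from the front visits the lowest-frequency coefficients first, which matches the preferential access to the low-frequency region described in the text.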

3.2.1. Perturbation Generation

Query. For every instance of generating low-frequency random noise, the attacker calculates the historical IOU score to quantify the extent of perturbation applied to the frame. consists of a spatial score and a temporal score . represents the IOU score between the original noiseless output bounding box and the bounding box generated after the current attack. represents the IOU score between the original noiseless output result of the previous frame and the bounding box generated after the current attack. Parameter is used to assign weights to the effects of both.

In this manner, responds to the noise perturbations present in historical frames, while also serving as a criterion for identifying the most influential noise for future video frames. Moreover, we utilize this form of analysis to determine whether a query attack is necessary for each frame. In cases where random noise leads to a significant drop in , skipping the query phase can efficiently save time and computational resources.
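The historical IOU score can be sketched as follows. Because the defining equation is not reproduced here, the convex combination `weight * spatial + (1 - weight) * temporal` is an assumption about how the weighting parameter is applied, and the function names and `(x, y, w, h)` box format are hypothetical.

```python
def iou(box_a, box_b):
    """Intersection over union of two (x, y, w, h) boxes with top-left origin."""
    ax, ay, aw, ah = box_a
    bx, by, bw, bh = box_b
    inter_w = max(0.0, min(ax + aw, bx + bw) - max(ax, bx))
    inter_h = max(0.0, min(ay + ah, by + bh) - max(ay, by))
    inter = inter_w * inter_h
    union = aw * ah + bw * bh - inter
    return inter / union if union > 0 else 0.0

def historical_iou_score(clean_box, prev_clean_box, attacked_box, weight=0.5):
    """Weighted mix of the spatial and temporal IOU scores (assumed form).

    spatial:  IOU between the clean prediction on the current frame and the
              prediction after the current attack.
    temporal: IOU between the clean prediction on the previous frame and the
              prediction after the current attack.
    """
    spatial = iou(clean_box, attacked_box)
    temporal = iou(prev_clean_box, attacked_box)
    return weight * spatial + (1.0 - weight) * temporal

clean = (50, 50, 40, 40)       # clean prediction, current frame
previous = (48, 50, 40, 40)    # clean prediction, previous frame
attacked = (70, 60, 40, 40)    # prediction on the perturbed frame
print(historical_iou_score(clean, previous, attacked, weight=0.5))
```

A lower score means the perturbed prediction has moved further from both references, so the attacker keeps the candidate with the lowest score.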

Algorithm 1. Universal Low-frequency Noise Black-box Attack.

Iteration. The attacker’s main goal is to obfuscate a target in video frames by finding a direction with a high adversary density.

In the attack, a sequence is generated with a fixed stride that encompasses all alternative perturbations demonstrating valid attack effects during each iteration. From this sequence, the most effective perturbation is selected to complete a single iteration. For each query in the iteration, we select a vector aligned with the low-frequency direction to iterate upon. We apply a discrete cosine transform to the orthogonal vector and generate perturbations by introducing noise in the tangential direction and the auxiliary direction.

The reconstruction function, denoted as , is utilized for interpolating and extending the matrix. We describe the replaceable image produced by the n-th query in an iteration as follows:

Based on this, the attacked frame is input into the next iteration for gradient descent of the IOU score. Specifically, for the result produced by the k-th iteration, we express the following equation. Function P is denoted as the perturbation corresponding to the indexing according to the score:

In summary, we perform the query within K iterations, and in each iteration, we take N vectors from a random sequence that are orthogonal to each other. The perturbation we add to the clean image can be expressed as:
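The query loop summarized above can be sketched in a simplified form. This is not Algorithm 1: the tangential and auxiliary directions and the reconstruction function are omitted, `score_fn` stands in for the black-box tracker plus the IOU score, and all names and default values are assumptions. In each of `iterations` rounds the sketch tries `queries` mutually orthogonal low-frequency DCT directions with both signs, and keeps only the trial that lowers the score the most.

```python
import math

def dct_basis_image(n, u, v):
    """Unit-norm spatial pattern of the single 2-D DCT coefficient (u, v)."""
    def scale(k):
        return math.sqrt((1.0 if k == 0 else 2.0) / n)
    return [[scale(u) * scale(v)
             * math.cos(math.pi * (2 * x + 1) * u / (2 * n))
             * math.cos(math.pi * (2 * y + 1) * v / (2 * n))
             for y in range(n)] for x in range(n)]

def add_scaled(frame, pattern, step):
    """Return frame + step * pattern without modifying the inputs."""
    return [[f + step * p for f, p in zip(frow, prow)]
            for frow, prow in zip(frame, pattern)]

def iterative_query_attack(frame, score_fn, candidates,
                           iterations=2, queries=4, step=1.0):
    """Greedy search over low-frequency directions given in priority order."""
    n = len(frame)
    attacked = frame
    queue = list(candidates)
    for _ in range(iterations):
        best_score, best_frame = score_fn(attacked), attacked
        batch, queue = queue[:queries], queue[queries:]
        for u, v in batch:
            pattern = dct_basis_image(n, u, v)
            for sign in (1.0, -1.0):
                trial = add_scaled(attacked, pattern, sign * step)
                trial_score = score_fn(trial)
                if trial_score < best_score:
                    best_score, best_frame = trial_score, trial
        attacked = best_frame  # keep only the best query of this iteration
    return attacked

def toy_score(frame):
    """Stand-in for the tracker: drops when left/right brightness is unbalanced."""
    half = len(frame) // 2
    left = sum(sum(row[:half]) for row in frame)
    right = sum(sum(row[half:]) for row in frame)
    return 1.0 / (1.0 + abs(left - right))

clean = [[100.0] * 8 for _ in range(8)]
low_freq = [(0, 0), (0, 1), (1, 0), (1, 1), (0, 2), (2, 0), (1, 2), (2, 1)]
attacked = iterative_query_attack(clean, toy_score, low_freq)
print(toy_score(clean), toy_score(attacked))
```

Because each DCT pattern has unit norm, the l2 norm of the accumulated perturbation in this sketch is bounded by `iterations * step`, which makes the magnitude budget easy to control.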

3.2.2. Optimization

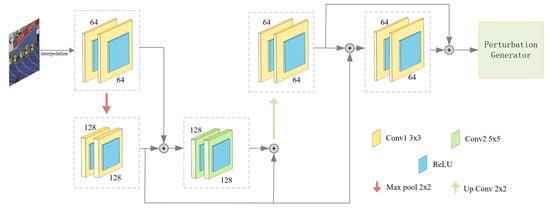

When perturbation vectors are generated in a single query iteration, each video frame passes through a Discrete Cosine Transform (DCT) to extract low-frequency features. From a computational efficiency perspective, the runtime of even a single DCT is substantial. To address this challenge, we propose a sampling–reconstruction strategy that mainly consists of several modules of a feature extraction network. Drawing inspiration from methodologies prevalent in signal processing, the strategy aims to comprehensively capture the overall characteristics of the target region and thus optimize the perturbation generation process.

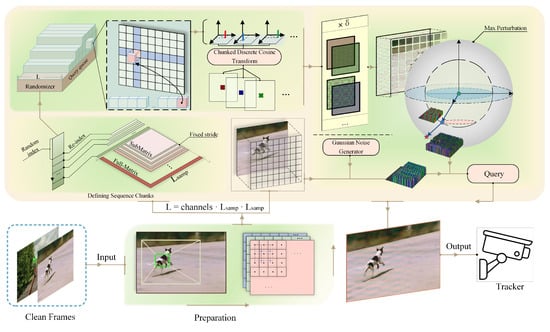

During the initial phase of attack, the attacker implements a sampling operation within the attack region. This step results in the generation of a primary attack target matrix, denoted as , which serves as the foundational basis for subsequent query phases. This matrix encapsulates the essential characteristics of the target region, enabling an efficient and effective perturbation generation process. The sampled features are then fed into our low-frequency feature extraction network shown in Figure 5, which consists of multiple modules: initial down-sampling and feature extraction, deep feature extraction, size adjustment and up-sampling, as well as residual learning and detail restoration. These modules work in concert to ensure low-frequency features are preserved during the transformation process.

Figure 5.

Initial attack regions of different sizes are interpolated to be fed into the network and used to output a fixed-size feature map. Through a non-linear structure and modular process, it aims to preserve multi-scale features and reduce the computational cost of perturbation queries.

After obtaining the final perturbation results, the proposed strategy will proceed to the noise reconstruction stage, which ensures that the generated noise harmonizes with the original image and maintains the integrity of the visual content while integrating the perturbations. Through this two-step approach, followed by reconstruction, a balance between computational efficiency and perturbation effectiveness is achieved, making the strategy particularly suited for real-time applications where both speed and accuracy are paramount. The time cost required to compute one channel value for a single pixel in a single query serves as the base unit for measuring computational cost. We can describe the computational cost saved by employing the sampling–reconstruction strategy as follows:

where represents the size of the chunk and and represent the size of the original attack region and the size of the matrix generated by sampling, respectively. When sampling generates a size several times smaller than the size of the attack area, we can fundamentally solve the problem of the time cost required for querying.
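As a rough worked illustration of why sampling reduces the query cost, the sketch below counts base units directly. It is not the cost expression of the paper, which also involves the chunk size. It assumes only that one query touches every channel value of every pixel in a square region, so the cost is proportional to `side * side * channels`; the function names and the example sizes are hypothetical.

```python
def query_cost(side, channels=3, queries=1):
    """Base units spent when each query processes a side x side x channels region."""
    return queries * side * side * channels

def saved_fraction(region_side, sampled_side):
    """Fraction of per-query cost saved by working on the sampled matrix instead."""
    return 1.0 - (sampled_side ** 2) / (region_side ** 2)

# Example: a 256x256 attack region sampled down to 64x64, 50 queries.
full = query_cost(256, channels=3, queries=50)
sampled = query_cost(64, channels=3, queries=50)
print(full, sampled, saved_fraction(256, 64))
```

Under this assumption the saving grows quadratically with the sampling ratio, which is consistent with the remark that a sampled size several times smaller than the attack area largely removes the query-time bottleneck.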

4. Experiments

4.1. Testing Dataset

First, we conducted attack experiments on the seven mainstream datasets in the tracking domain. Based on different working modes, these datasets can be categorized into the VOT challenges, which reinitialize the tracker after tracking is lost, and the OPE challenges, which only initialize the tracker on the first frame. This distinction allows us to evaluate the effectiveness of the proposed attack under varying conditions.

VOT. VOT (Visual Object Tracking) challenges [29,30,31] (https://www.votchallenge.net/ (accessed on 6 March 2025)) are the most widely used online tracking benchmarks. A key feature of VOT, compared to other datasets, is its reset-based evaluation system. When a tracker deviates completely from the ground truth within the predicted bounding box, its parameters are reinitialized after five frames. VOT uses two primary metrics to evaluate tracker performance: accuracy (Acc) and robustness (Rob). Accuracy measures how well the predicted bounding box aligns with the ground truth, while robustness measures the frequency of tracking failures. To provide a comprehensive performance evaluation, VOT also employs the expected average overlap (EAO) metric, which reflects both the accuracy and robustness of the tracker. Note that accuracy measurements exclude the first ten frames after each reinitialization.

OTB. OTB100 (Object Tracking Benchmark) [32] (https://paperswithcode.com/dataset/otb (accessed on 6 March 2025)) comprises 100 fully annotated video sequences and is designed to provide a uniform, fair, and comprehensive evaluation standard for online object tracking algorithms. The target objects in the dataset span a broad range of types, including human bodies and vehicles, and the sequences cover a diverse array of challenging scenarios such as fluctuating illumination, rapid movement, occlusion, and background clutter. To facilitate analysis of tracking performance, each sequence is labeled with 11 attributes that describe different challenging factors. The dataset was constructed to address the shortcomings of earlier datasets, namely inconsistent labeling, inconsistent initialization conditions, and inconsistent evaluation methods, and thus provides a reliable basis for comparing tracking algorithms. In comparison to other datasets, OTB100 is distinguished by its substantial size, its comprehensive range of scenarios, and its detailed labeling information, which supports a variety of evaluation metrics and analysis methods.

NFS. The NFS30 (Need for Speed) [33] (https://ci2cv.net/nfs/index.html (accessed on 6 March 2025)) dataset is a benchmark dataset designed for high frame rate video object tracking. The purpose of the dataset is to evaluate and compare the performance of different object tracking algorithms in high frame rate scenarios. NFS30 comprises 100 high-quality video clips, totaling 380,000 frames, all of which were captured at a high frame rate of 240 FPS, significantly exceeding the conventional object tracking dataset’s frame rate of 30 FPS. This facilitates systematic investigation and comparison of the performance of diverse tracking algorithms under such high-frame-rate conditions. The provision of high-frame-rate videos enables the dataset to reveal the potential strengths and weaknesses of traditional target-tracking algorithms at high frame rates, particularly demonstrating the superiority of simple yet efficient trackers (e.g., correlation filters) in such scenarios. Furthermore, the dataset offers a distinctive opportunity to investigate the performance limitations and potential optimization pathways of high-frame-rate object tracking by providing high-frame-rate videos and extensive visual attribute annotations.

UAV. UAV123 (Unmanned Aerial Vehicle) [34] (https://ivul.kaust.edu.sa/benchmark-and-simulator-uav-tracking-dataset (accessed on 6 March 2025)) is a fully annotated dataset dedicated to UAV tracking. It fills the gap left by existing benchmark datasets, which lack comprehensively annotated aerial tracking sequences, and better guides evaluation in low-altitude aerial capture scenarios, specifically including long-term full and partial occlusion, scale variation, viewpoint variation, background clutter, and camera motion. Part of the ground-truth annotation is generated realistically by a UAV simulator, and these automatically completed annotations are well suited to simulating the tracking issues of real scenes. In addition, the UAV-based video sequences take into account the visual characteristics of different types of objects at low resolution, which is a unique advantage over OTB and other datasets in OPE tracking.

LaSOT. LaSOT (Large-scale Single Object Tracking) [13] (http://vision.cs.stonybrook.edu/~lasot/ (accessed on 6 March 2025)) is a benchmark dataset of considerable quality and size, containing 1400 video sequences totaling over 3.5 million frames, with an average length of 2500 frames per sequence. A robust tracking system must function reliably across object categories, yet short-term datasets show an evident imbalance in the distribution of categories. LaSOT aims to mitigate this category bias and enhance the generalizability of tracking through a category-balanced design. In this study, we use LaSOT long-term tracking sequences to evaluate the robustness of the system in complex real-world application scenarios beyond short-term tracking.

4.2. Tracking Challenge

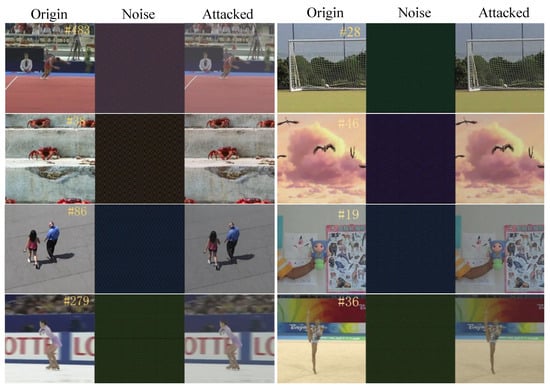

In this subsection, experiments are run on a server running Ubuntu 18.04 with an NVIDIA RTX 3090 GPU and an Intel E5-2680 CPU @ 2.40 GHz. We utilize the norm to restrict the magnitude of the perturbation introduced to the image. During the experiments, we search for the perturbation in 2 iterative steps, with a maximum of 50 queries allowed in each step. The final attack perturbation is obtained by combining the most effective perturbations from the two iterative steps, with the magnitude of each perturbation strictly limited to less than 4000. Limitation is set to 16 to ensure that the perturbation is undetectable to the human eye. In addition, we calculate the average SSIM over the video frames to demonstrate the deceptive nature of the perturbation. A comparison of the visualization before and after the attack is shown in Figure 6.

Figure 6.

Visual comparison of a clean frame and the same frame under the ULN attack. The noise is cropped, enlarged, and color-sharpened for easier viewing.
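The average SSIM used above as a stealth measure can be illustrated with a minimal sketch. It assumes a single global window over a flattened 8-bit grayscale frame and the commonly used constants K1 = 0.01 and K2 = 0.03, whereas standard SSIM averages over local sliding windows. The function names `global_ssim` and `average_ssim` are hypothetical and are not taken from the ULN implementation.

```python
from statistics import fmean


def global_ssim(x, y, data_range=255.0, k1=0.01, k2=0.03):
    """Single-window SSIM between two equally sized grayscale frames.

    x and y are flat lists of pixel intensities. This is a simplification:
    the usual SSIM averages this quantity over local sliding windows.
    """
    c1 = (k1 * data_range) ** 2  # stabilizes the luminance term
    c2 = (k2 * data_range) ** 2  # stabilizes the contrast/structure term
    mu_x, mu_y = fmean(x), fmean(y)
    var_x = fmean((a - mu_x) ** 2 for a in x)
    var_y = fmean((b - mu_y) ** 2 for b in y)
    cov = fmean((a - mu_x) * (b - mu_y) for a, b in zip(x, y))
    numerator = (2 * mu_x * mu_y + c1) * (2 * cov + c2)
    denominator = (mu_x ** 2 + mu_y ** 2 + c1) * (var_x + var_y + c2)
    return numerator / denominator


def average_ssim(clean_frames, attacked_frames):
    """Mean SSIM over paired clean and attacked frames of a sequence."""
    return fmean(global_ssim(c, a) for c, a in zip(clean_frames, attacked_frames))
```

A value close to 1 indicates that the attacked frame is structurally almost identical to the clean frame, which is the sense in which SSIM is used here as evidence of imperceptibility.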

4.2.1. VOT Challenge

Table 1 summarizes the impact of our proposed attack on the performance of four different trackers, i.e., SiamRPN++, DIMP, TrTr, and GRM, across the VOT2016, VOT2018, and VOT2019 challenges. The results indicate a significant degradation in tracking performance as evidenced by the substantial decreases in accuracy (ACC) and Expected Average Overlap (EAO), alongside notable increases in the number of frames where tracking was lost (FAIL). Detailed data can be seen in Tables S1–S3 (Supplementary Materials).

Table 1.

The table presents the results under the VOT2016, VOT2018, and VOT2019 challenges. For each tracker, the table shows the percentage decrease in accuracy (ACC) and Expected Average Overlap (EAO) caused by the attack. Additionally, it highlights the increase in the number of frames where tracking was lost due to the attack (FAIL). Arrows indicate the attacker’s targeting trend.

Across all three VOT challenges, the attack consistently caused considerable reductions in ACC and EAO metrics. For example, GRM experienced the highest decline in both ACC and EAO in the VOT2018 challenge, with a decrease in ACC and a decrease in EAO, indicating severe disruption in tracking capability. Similarly, DIMP showed a significant drop in performance across all challenges, particularly in the VOT2019 challenge, where it exhibited a decrease in ACC and an decrease in EAO. Additionally, the average increase in FAIL frames was notable, with an average increment of 1237 frames across the different trackers and challenges. This demonstrates the attack’s capability to induce frequent and persistent tracking errors.

The consistent impact of the proposed attack across multiple trackers and challenges suggests that it is robust and universal, capable of exploiting vulnerabilities in various tracking algorithms and scenarios. Overall, these results highlight the importance of improving adversarial robustness in the design and evaluation of visual object tracking systems.

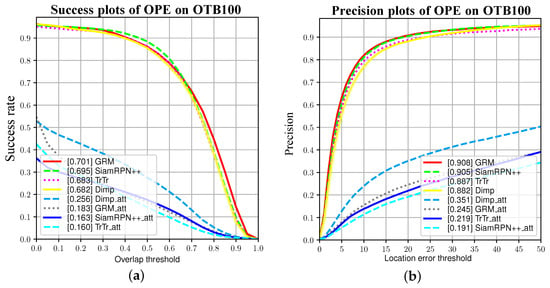

4.2.2. OPE Challenge

Based on Table 2, the proposed attack results in substantial AUC and accuracy losses for the four trackers in the tracking experiments on OTB100. Specifically, we observe AUC losses of , , , and , as well as accuracy losses of , , , and for the trackers. Such significant attack effects indicate that our attack has sufficient obfuscation capability. Figure 7 illustrates the plot of the AUC and Precision.

Table 2.

Attack performance at OTB100. Arrows indicate the attacker’s targeting trend.

Figure 7.

(a) Plots of four trackers and their success rates after being attacked at different thresholds. (b) Precision plots at varying thresholds in the same experiment.
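For readers unfamiliar with the OPE metrics plotted above, the following minimal sketch shows how a success curve, its AUC, and a precision score are commonly computed from predicted and ground-truth boxes. It assumes boxes in (x, y, w, h) format, 21 evenly spaced overlap thresholds, and a 20-pixel center-error threshold, which are the usual OTB conventions rather than settings confirmed by this paper. All function names are hypothetical.

```python
import math


def iou(a, b):
    """Intersection over union of two boxes given as (x, y, w, h)."""
    ax, ay, aw, ah = a
    bx, by, bw, bh = b
    iw = max(0.0, min(ax + aw, bx + bw) - max(ax, bx))
    ih = max(0.0, min(ay + ah, by + bh) - max(ay, by))
    inter = iw * ih
    union = aw * ah + bw * bh - inter
    return inter / union if union > 0 else 0.0


def center_error(a, b):
    """Euclidean distance in pixels between the centers of two boxes."""
    return math.hypot((a[0] + a[2] / 2) - (b[0] + b[2] / 2),
                      (a[1] + a[3] / 2) - (b[1] + b[3] / 2))


def success_curve(preds, gts, steps=21):
    """Fraction of frames whose overlap exceeds each threshold in [0, 1]."""
    overlaps = [iou(p, g) for p, g in zip(preds, gts)]
    thresholds = [i / (steps - 1) for i in range(steps)]
    return [sum(o > t for o in overlaps) / len(overlaps) for t in thresholds]


def auc(curve):
    """Area under the success curve, taken as the mean success rate."""
    return sum(curve) / len(curve)


def precision_at(preds, gts, pixels=20.0):
    """Fraction of frames whose center error is within the pixel threshold."""
    errors = [center_error(p, g) for p, g in zip(preds, gts)]
    return sum(e <= pixels for e in errors) / len(errors)
```

An attack that pushes predicted boxes away from the target lowers both the overlap and the center accuracy, so the success and precision curves of an attacked tracker lie below those of the clean tracker.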

In NFS30, as shown in Table 3, success rate decreases by , , , and , respectively. The accuracy decreases by , , , and , respectively. These results highlight the effectiveness of our attack method in impairing the tracking performance of the evaluated trackers on the NFS dataset.

Table 3.

Attack performance at NFS30. Arrows indicate the attacker’s targeting trend.

In the final part of the dataset challenge, we focus on the performance of two trackers, SiamRPN++ and GRM, in UAV tracking and long-term tracking. The effectiveness of the attack is evident in Table 4, as measured by AUC and Precision (Pre). Low-frequency noise, by its nature, interferes with the tracker's ability to accurately capture and maintain the structural features it depends on, leading to significant performance degradation. The Siamese network's architecture, optimized for short-term tracking scenarios, appears less adept at handling the subtle yet disruptive influence of low-frequency perturbations, particularly in the challenging long-term tracking environments presented by LaSOT. In contrast, GRM's reliance on relation modeling and long-term dependency learning provides some degree of resilience, as these mechanisms can partially mitigate the effects of low-frequency noise by leveraging contextual information and global structural cues. However, the dramatic decline in Pre on LaSOT, from 0.816 to 0.076, underscores the limitations of this approach in the face of persistent and carefully crafted low-frequency perturbations. The results suggest fundamental differences in how SiamRPN++ and GRM process visual information and respond to adversarial perturbations. The susceptibility of SiamRPN++ highlights the potential vulnerability of feature-based tracking methods when confronted with low-frequency noise, which can obscure or distort the high-frequency details on which these methods rely. Conversely, the relatively better performance of GRM, despite its own significant degradation, points to the potential benefits of incorporating global structural and contextual information to improve robustness against such attacks.

Table 4.

Attack performance at UAV123 and LaSOT. Arrows indicate the attacker’s targeting trend.
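To put the LaSOT precision figures quoted above in relative terms, the short sketch below computes the fraction of the clean score that is lost under attack. The relative form is an assumption made here for illustration, and the helper name `relative_drop` is hypothetical.

```python
def relative_drop(clean, attacked):
    """Fraction of the clean score that is lost under attack."""
    if clean <= 0:
        raise ValueError("clean score must be positive")
    return (clean - attacked) / clean


# LaSOT precision before and after the attack, as quoted in the text.
drop = relative_drop(0.816, 0.076)
print(f"{100 * drop:.1f}%")  # prints 90.7%
```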

4.3. Defense Scenario Experiment

Tracking models often use defensive algorithms with noise reduction capabilities and greater generalizability to defend against unknown attacks. To comprehensively evaluate the effectiveness of the proposed attack method, we implement a series of experiments to analyze its impact on state-of-the-art object tracking systems under both vanilla and defended scenarios. Current approaches to noise reduction in object tracking include Gaussian filtering, JPEG compression [27], and BM3D denoising [35]. In addition, the Robust Tracking Against Adversarial Attacks (RTAA) [36] framework introduces a novel mechanism that combines detection-based defense and noise reduction to significantly improve the robustness of tracking systems against adversarial perturbations. JPEG compression is a lossy compression technique based on the discrete cosine transform, which mainly removes high-frequency components while preserving low-frequency information. BM3D uses block matching and 3D filtering techniques, which are very effective at reducing high and mid frequency noise.

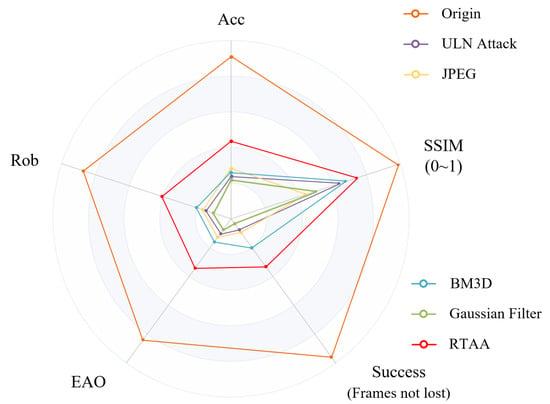

The detailed experimental results are shown in Figure 8. Although RTAA significantly reduces the effect of low-frequency noise and improves SSIM, it still struggles to recover more than of the accuracy loss. In addition, traditional denoising methods are unable to repair the frame or improve the performance of the tracker. Because the low-frequency noise attack operates in the same spectral band that JPEG compression preserves, JPEG compression is not effective in mitigating the adversarial perturbation. By a similar principle, Gaussian filtering is ineffective against low-frequency adversarial attacks. BM3D is limited in its ability to remove composite low-frequency adversarial noise, especially when the low-frequency noise is related to the image content.

Figure 8.

In the experiments against defensive measures, we tested several mainstream denoising defense systems for the SiamRPN++ tracker based on the VOT2018 challenge, and attacked them through ULN. A comparison of the data after normalization of each is given above.
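The argument that smoothing defenses leave low-frequency perturbations largely intact can be illustrated with a one-dimensional toy example. The sketch below blurs a single DCT-II basis function with a Gaussian kernel and reports how much of its energy survives. The signal length, kernel width, and chosen frequency indices are illustrative assumptions, and the function names are hypothetical. It does not reproduce the defenses evaluated in Figure 8.

```python
import math


def dct_basis(k, n_samples):
    """k-th DCT-II basis function sampled at n_samples points."""
    return [math.cos(math.pi * (n + 0.5) * k / n_samples) for n in range(n_samples)]


def gaussian_kernel(sigma, radius):
    """Normalized, truncated Gaussian kernel of length 2 * radius + 1."""
    weights = [math.exp(-(m * m) / (2 * sigma * sigma)) for m in range(-radius, radius + 1)]
    total = sum(weights)
    return [w / total for w in weights]


def blur(signal, kernel):
    """Convolve with symmetric (mirror) padding at both borders."""
    n, r = len(signal), len(kernel) // 2

    def at(i):
        if i < 0:
            return signal[-i - 1]
        if i >= n:
            return signal[2 * n - i - 1]
        return signal[i]

    return [sum(kernel[j] * at(i + j - r) for j in range(len(kernel))) for i in range(n)]


def energy_retained(k, n_samples=64, sigma=1.5, radius=4):
    """Share of the basis function's energy that survives Gaussian blurring."""
    x = dct_basis(k, n_samples)
    y = blur(x, gaussian_kernel(sigma, radius))
    return sum(v * v for v in y) / sum(v * v for v in x)


low = energy_retained(1)    # low-frequency component: ratio close to 1
high = energy_retained(48)  # high-frequency component: ratio close to 0
```

In this toy setting, the blur removes almost all of the high-frequency component while the low-frequency component passes through nearly unchanged, which is consistent with the observation that spatial smoothing does little against a perturbation concentrated in low frequencies.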

4.4. Efficiency Experiment

At this stage of the experiment, using the same hardware as before, we measured the time cost per frame during the attack.

The experiment is divided into two parts. The first part involves the runtime generation (RTG) of perturbation noise during the attack, which requires performing chunked DCT for each query during the attack phase. In the second part of the experiment, we pregenerate (PreG) a portion of the noise using low-discrepancy sequence sampling in low-frequency space. This approach involves creating a large but finite database of noise samples, thereby eliminating the need for the noise generation in runtime during the attack.

Specifically, we pregenerated a total of 268,203 noise samples to be queried during the attack. Although this requires approximately 90 GB of storage space, it provides substantial time optimization for the attacker. With a pregenerated database, the attacker can quickly retrieve noise samples, enhancing the efficiency of the attack process.
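The PreG idea can be sketched as follows. This minimal example assumes a Halton sequence as the low-discrepancy sampler, an 8 x 8 block, and a 3 x 3 low-frequency corner of DCT coefficients. None of these choices is stated in the text, and the names `halton`, `low_freq_coeffs`, `idct2`, and `pregenerate` are hypothetical.

```python
import math

# One prime base per sampled coefficient (enough for a 3 x 3 low-frequency corner).
PRIMES = (2, 3, 5, 7, 11, 13, 17, 19, 23)


def halton(index, base):
    """index-th element (1-based) of the van der Corput sequence in the given base."""
    fraction, result = 1.0, 0.0
    while index > 0:
        fraction /= base
        result += fraction * (index % base)
        index //= base
    return result


def low_freq_coeffs(index, low=3, scale=1.0):
    """Map one Halton point to a low x low block of DCT coefficients in [-scale, scale)."""
    return [[scale * (2 * halton(index, PRIMES[u * low + v]) - 1) for v in range(low)]
            for u in range(low)]


def idct2(coeffs, size=8):
    """Inverse 2D DCT (orthonormal DCT-II basis) with all other coefficients set to zero."""
    low = len(coeffs)

    def alpha(k):
        return math.sqrt(1 / size) if k == 0 else math.sqrt(2 / size)

    return [[sum(alpha(u) * alpha(v) * coeffs[u][v]
                 * math.cos(math.pi * (2 * x + 1) * u / (2 * size))
                 * math.cos(math.pi * (2 * y + 1) * v / (2 * size))
                 for u in range(low) for v in range(low))
             for y in range(size)]
            for x in range(size)]


def pregenerate(count, low=3, size=8):
    """Build a finite library of low-frequency noise blocks ahead of the attack."""
    return [idct2(low_freq_coeffs(i, low), size) for i in range(1, count + 1)]
```

At attack time, a query then reduces to looking up an entry of the library instead of running an inverse DCT, which is the storage-for-time trade described above.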

As shown in Table 5, the perturbation retrieval under PreG reduced the time cost by over . This trade-off between time efficiency and performance degradation demonstrates the practicality of the PreG approach, especially in scenarios where computational resources and time are constrained.

Table 5.

Average time–cost experiment on VOT2018.

4.5. Practicality Verification

This subsection covers a range of mainstream computing devices, providing a representative evaluation of the attack's adaptability and performance across different hardware platforms. The performance of the proposed attack can differ significantly between devices with varying computational capabilities because of the specific software implementations and chips used. First, we tested the attack on a mobile device using Apple's Metal graphics API via a compute shader, which allows us to evaluate the attack's performance on a consumer mobile device. Second, we conducted tests on an embedded device, which represents a low-power, resource-constrained environment. Finally, we carried out comparative tests on the CPU and GPU of a laptop to assess the attack's efficiency on powerful yet portable computing platforms. In this atomic performance experiment, the iteration time per frame was limited to 100 ms. We record the impact of the proposed attack, including the performance degradation of the tracker and the average number of queries executed per frame. By imposing strict limits on the perturbation search, we aim to evaluate the feasibility of the attack in real-time tracking scenarios with limited computational resources and time.
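The 100 ms per-frame limit can be sketched as a time-budgeted query loop. In this minimal example, `candidates` stands for any iterable of perturbations and `score` for a black-box callback that returns how strongly a candidate degrades the tracker. Both names, as well as `attack_frame`, are hypothetical, and the injectable `clock` exists only to make the sketch testable.

```python
import time


def attack_frame(candidates, score, budget_s=0.100, clock=time.perf_counter):
    """Query candidates until the per-frame time budget is exhausted.

    Returns the best candidate found and the number of queries issued.
    """
    start = clock()
    best, best_score, queries = None, float("-inf"), 0
    for candidate in candidates:
        if clock() - start >= budget_s:
            break  # budget used up: stop querying for this frame
        current = score(candidate)
        queries += 1
        if current > best_score:
            best, best_score = candidate, current
    return best, queries
```

Under a fixed budget, a faster device or a cheaper query (for example, a lookup into a pregenerated library) completes more iterations of this loop per frame, which is why the average number of queries per frame is reported alongside the tracker degradation.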

The results on devices with different computational capabilities are recorded in Table 6, which includes the performance of the attack and the average number of queries per frame. It can be observed that as the number of queries increases, the performance degradation caused by the attack becomes more severe. The pregenerated discrete perturbation method enhances the querying capability across various devices, ensuring a substantial attack impact. This strategy effectively trades storage space for computational time, allowing for more efficient attacks and achieving significant degradation on a wide range of hardware platforms.

Table 6.

Experiments on SiamRPN++ using the OTB100 dataset across platforms with different computational capabilities. Detailed equipment information: Raspberry Pi 4B, Raspberry Pi Foundation, made in Pencoed, UK; iPhone 14 Pro, Apple, made in Zhengzhou, China; laptop, Lenovo, made in Beijing, China.

4.6. Ablation Study

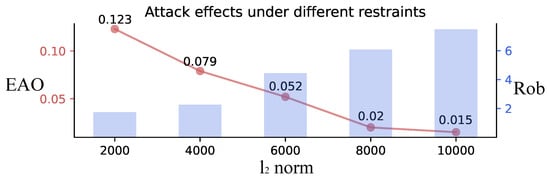

Finally, as depicted in Figure 9, we evaluate the impact of the attack at various perturbation magnitudes. The results demonstrate that as the perturbation magnitude increases, the tracker’s robustness and EAO gradually decrease.

Figure 9.

Comparison of EAO and robustness for VOT2018 with different perturbations.

4.7. Comparisons with Other Methods

We compare our method with several mainstream attacks, including those based on iterative optimization and those based on deep networks. These attack algorithms are of various types, so it is important to state their specific categories. DimBA [22] and OA [20] attack the first frame in order to obfuscate the target information provided to the tracker, which runs counter to the original intent of our experiments. All attacks are implemented on the OTB100 dataset using the SiamRPN++ tracker.

Specifically, we compare them in terms of both AUC and Precision, and categorize them according to how the attack is performed and the type of method. In terms of operability, each attack has different prerequisites for implementation. On-site deployment means that attackers do not need to prepare training plans or datasets for a perturbation generator in advance and can launch attacks during the tracking process. As can be seen from Table 7, our method significantly enhances the impact on AUC and accuracy, surpassing the IOU attack [21] with improvements of and , respectively. Among recent attacks based on iterative optimization, our method achieves advanced results.

Table 7.

Comparison with other methods on OTB100 with SiamRPN++(R).

5. Discussion

5.1. Similar Systems

The rapid evolution of adversarial attacks in visual object tracking has yielded diverse methodologies, each with distinct strengths and inherent limitations. A critical analysis of prominent approaches reveals key trade-offs between attack efficacy, computational feasibility, and practical applicability. EUSA [18] shows notable effectiveness by leveraging model-specific feature representations through a triple-loss design. However, its reliance on extensive video datasets for generator pretraining introduces scalability challenges, as real-world deployment often lacks sufficient training data to guarantee consistent performance across heterogeneous tracking scenarios. Similarly, SPARK [19] enhances stealth through spatial-temporal sparse perturbations, but incurs prohibitive computational overhead due to its frame-by-frame optimization paradigm, limiting real-time applicability in edge computing environments. DimBA [22] addresses redundancy reduction via Actor-Critic-guided noise pruning, yet its reliance on weak random noise distributions restricts its potency as an adversary, particularly against transformer-based trackers that exhibit resilience to low-magnitude perturbations. OA [20] circumvents iterative optimization by targeting the initialization phase but fails to account for dynamic model adaptation during long-term tracking, rendering it ineffective against reset-capable frameworks prevalent in VOT benchmarks. Although the IOU attack [21] achieves competitive performance degradation through orthogonal noise combinations, its Gaussian sampling strategy distributes energy indiscriminately across frequency bands, resulting in suboptimal efficiency and limited effectiveness against frequency-aware defenses, as can be seen in Tables S4 and S5 (Supplementary Materials).
These methodologies collectively highlight fundamental trade-offs in adversarial attack design: efficiency versus destructiveness, stealth versus generalizability, and theoretical potency versus practical deployability. For instance, generator-based approaches excel in perturbation subtlety but falter in unseen tracking environments, while iterative methods prioritize adaptability at the expense of computational scalability. Ultimately, the variability in attack strategies reflects the multifaceted nature of adversarial threats. Consequently, defensive mechanisms must adopt countermeasures that are equally diversified, balancing frequency-domain filtering, temporal consistency checks, and architecture-specific hardening. This diversity highlights the richness of adversarial research and emphasizes the need for context-aware defense frameworks capable of addressing domain-specific vulnerabilities. Future advancements will likely hinge on hybrid approaches that integrate the strengths of existing methods while mitigating their inherent limitations, fostering a more robust and adaptive tracking ecosystem.

5.2. Limitations

This subsection discusses the limitations of ULN, building on the comparison with previous work above. ULN attacks inject low-frequency perturbations via the discrete cosine transform, and their effectiveness depends on the sensitivity of the tracking model to low-frequency features. However, the attack generation process can introduce unintended side effects. For example, because the attack noise resembles JPEG compression artifacts [27], a defense system may misclassify it as natural noise, but if the defense system employs dynamic frequency-domain analysis, the stealth of the attack may be reduced. In terms of practical application, although ULN achieves real-time processing on embedded devices, its pregenerated noise library relies on 90 GB of disk storage, which is difficult to deploy on edge devices such as UAV on-board processing units, where resources are severely constrained. Moreover, although the sampling-reconstruction strategy reduces the computational overhead through local feature compression, dynamic defense mechanisms such as adaptive frequency-domain filtering may block the cumulative effect of low-frequency noise by adjusting the frequency-domain mask or motion model parameters in real time. In addition, the efficiency of the attack remains a concern. Designs based on iterative search require repeated computation of the chunked discrete cosine transform, which accounts for of the time cost. Despite our use of parallel computation and optimized designs, this cost remains significant, and we hope that future versions will run at 30 fps or higher. Finally, the tracker may gradually adapt to the perturbation pattern through historical trajectory prediction or online updates of model parameters, leading to a decline in attack efficacy over time.

6. Conclusions

We propose the Universal Low-frequency Noise (ULN) black-box attack, a more efficient black-box attack for visual object tracking. Our approach covers how to search for and generate perturbations, how to record and evaluate them, and how to gain a time advantage at a lower cost. The proposed attack focuses on the low-frequency domain, which is crucial for tracking models and computationally accessible for adversarial perturbations. This dual focus ensures that the attack strategy is not only effective but also efficient, in line with the principle of symmetry in adversarial scenarios where information is withheld from both sides. Our work covers the specific details of the attack, capability experiments conducted in a laboratory environment, and tests performed in real-world tracking scenarios. These comprehensive evaluations demonstrate the effectiveness and generalizability of the attack model. In addition, the stealthiness and deceptiveness of the attack are validated both theoretically and practically. The noise can be effectively disguised as artifacts caused by image compression, making it difficult to detect. Through this work, we hope to illustrate the aggressiveness and deceptiveness of low-frequency adversarial perturbations and thereby better inform the relevant theory and practice. We encourage researchers to study adversarial attacks and defenses based on our proposed approach in order to delve deeper into the robustness of visual object tracking.

Supplementary Materials

The following supporting information can be downloaded at: https://www.mdpi.com/article/10.3390/sym17030462/s1, Table S1, Attack performance at VOT2016; Table S2, Attack performance at VOT2018; Table S3, Attack performance at VOT2019; Table S4, IOU attack on TrTr; Table S5, IOU attack on GRM.

Author Contributions

Conceptualization, H.H. and H.B.; methodology, H.H.; software, H.H. and H.B.; validation, H.H.; formal analysis, H.H.; writing—original draft preparation, H.H.; writing—review and editing, H.H., H.B., K.W. and Y.W.; visualization, H.H.; supervision, Y.W. and K.W.; project administration, K.W. and Y.W. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Data Availability Statement

Raw data from the experiments are available at https://github.com/sngo666/ULN_exp_data (accessed on 6 March 2025). We are happy to provide additional help and communication to promote the evolution of the field.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Zhang, Y.; Wang, Q. Pedestrian tracking through coordinated mining of multiple moving cameras. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), Montreal, QC, Canada, 11–17 October 2021; pp. 252–261.

- Menezes, R.; de Miranda, A.; Maia, H. Pymicetracking: An open-source toolbox for real-time behavioral neuroscience experiments. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), New Orleans, LA, USA, 18–24 June 2022; pp. 21459–21465.

- Cao, Z.; Huang, Z.; Pan, L.; Zhang, S.; Liu, Z.; Fu, C. Tctrack: Temporal contexts for aerial tracking. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), New Orleans, LA, USA, 18–24 June 2022; pp. 14778–14788.

- Szegedy, C.; Zaremba, W.; Sutskever, I.; Bruna, J.; Erhan, D.; Goodfellow, I.J.; Fergus, R. Intriguing properties of neural networks. arXiv 2013, arXiv:1312.6199.

- Croce, F.; Hein, M. Minimally distorted adversarial examples with a fast adaptive boundary attack. In Proceedings of the International Conference on Machine Learning (ICML), Virtual Event, 13–18 July 2020; pp. 2196–2205.

- Guo, Q.; Cheng, Z.; Juefei-Xu, F.; Ma, L.; Xie, X.; Liu, Y.; Zhao, J. Learning to adversarially blur visual object tracking. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), Montreal, QC, Canada, 11–17 October 2021; pp. 10839–10848.

- Croce, F.; Hein, M. Reliable evaluation of adversarial robustness with an ensemble of diverse parameter-free attacks. In Proceedings of the International Conference on Machine Learning (ICML), Virtual Event, 13–18 July 2020; pp. 2206–2216.

- Li, Z.; Shi, Y.; Gao, J.; Wang, S.; Li, B.; Liang, P.; Hu, W. A simple and strong baseline for universal targeted attacks on siamese visual tracking. IEEE Trans. Circuits Syst. Video Technol. 2022, 32, 3880–3894.

- Li, B.; Wu, W.; Wang, Q.; Zhang, F.; Xing, J.; Yan, J. Siamrpn++: Evolution of siamese visual tracking with very deep networks. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–19 June 2019; pp. 4282–4291.

- Bhat, G.; Danelljan, M.; Gool, L.V.; Timofte, R. Learning discriminative model prediction for tracking. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), Seoul, Republic of Korea, 27 October–2 November 2019; pp. 6182–6191.

- Zhao, M.; Okada, K.; Inaba, M. Trtr: Visual tracking with transformer. arXiv 2021, arXiv:2105.03817.

- Gao, S.; Zhou, C.; Zhang, J. Generalized relation modeling for transformer tracking. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Vancouver, BC, Canada, 17–24 June 2023; pp. 18686–18695.

- Fan, H.; Lin, L.; Yang, F.; Chu, P.; Deng, G.; Yu, S.; Bai, H.; Xu, Y.; Liao, C.; Ling, H. Lasot: A high-quality benchmark for large-scale single object tracking. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–19 June 2019; pp. 5374–5383.

- Kugarajeevan, J.; Kokul, T.; Ramanan, A.; Fernando, S. Transformers in single object tracking: An experimental survey. IEEE Access 2023, 11, 80297–80326.

- Yan, X.; Chen, X.; Jiang, Y.; Xia, S.-T.; Zhao, Y.; Zheng, F. Hijacking tracker: A powerful adversarial attack on visual tracking. In Proceedings of the ICASSP 2020-2020 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Barcelona, Spain, 4–8 May 2020; pp. 2897–2901.

- Yan, B.; Wang, D.; Lu, H.; Yang, X. Cooling-shrinking attack: Blinding the tracker with imperceptible noises. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 14–19 June 2020; pp. 990–999.

- Zhou, Z.; Sun, Y.; Sun, Q.; Li, C.; Ren, Z. Only once attack: Fooling the tracker with adversarial template. IEEE Trans. Circuits Syst. Video Technol. 2023, 33, 3173–3184.

- Liu, S.; Chen, Z.; Li, W.; Zhu, J.; Wang, J.; Zhang, W.; Gan, Z. Efficient universal shuffle attack for visual object tracking. In Proceedings of the ICASSP 2022-2022 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Singapore, 23–27 May 2022; pp. 2739–2743.

- Guo, Q.; Xie, X.; Juefei-Xu, F.; Ma, L.; Li, Z.; Xue, W.; Feng, W.; Liu, Y. Spark: Spatial-aware online incremental attack against visual tracking. In Proceedings of the European Conference on Computer Vision (ECCV), Glasgow, UK, 23–28 August 2020; pp. 202–219.

- Chen, X.; Yan, X.; Zheng, F.; Jiang, Y.; Xia, S.-T.; Zhao, Y.; Ji, R. One-shot adversarial attacks on visual tracking with dual attention. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 14–19 June 2020; pp. 10176–10185.

- Jia, S.; Song, Y.; Ma, C.; Yang, X. Iou attack: Towards temporally coherent black-box adversarial attack for visual object tracking. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Nashville, TN, USA, 20–25 June 2021; pp. 6709–6718.

- Yin, X.; Ruan, W.; Fieldsend, J. Dimba: Discretely masked black-box attack in single object tracking. Mach. Learn. 2022, 13, 1705–1723.

- Nakka, K.K.; Salzmann, M. Temporally-transferable perturbations: Efficient, one-shot adversarial attacks for online visual object trackers. arXiv 2020, arXiv:2012.15183.

- Guo, C.; Gardner, J.; You, Y.; Wilson, A.G.; Weinberger, K. Simple black-box adversarial attacks. In Proceedings of the International Conference on Machine Learning (ICML), Long Beach, CA, USA, 9–15 June 2019; pp. 2484–2493.

- Qiao, Y.; Liu, D.; Wang, R.; Liang, K. Low-frequency black-box backdoor attack via evolutionary algorithm. arXiv 2024, arXiv:2402.15653.

- Guo, C.; Frank, J.S.; Weinberger, K.Q. Low frequency adversarial perturbation. In Proceedings of the Thirty-Fifth Conference on Uncertainty in Artificial Intelligence, UAI 2019, Tel Aviv, Israel, 22–25 July 2019; Volume 115, pp. 1127–1137. Available online: http://proceedings.mlr.press/v115/guo20a.html (accessed on 6 March 2025).

- Wallace, G.K. The JPEG still picture compression standard. Commun. ACM 1991, 34, 30–44.

- Mishra, D.; Singh, S.K.; Singh, R.K. Deep architectures for image compression: A critical review. Signal Process. 2022, 191, 108346.

- Kristan, M.; Leonardis, A.; Matas, J.; Felsberg, M.; Pflugfelder, R.; Cehovin Zajc, L.; Vojir, T.; Bhat, G.; Lukezic, A.; Eldesokey, A.; et al. The sixth visual object tracking vot2018 challenge results. In Proceedings of the European Conference on Computer Vision (ECCV) Workshops, Munich, Germany, 8–14 September 2018; pp. 3–53.

- Kristan, M.; Matas, J.; Leonardis, A.; Felsberg, M.; Pflugfelder, R.; Kamarainen, J.-K.; Zajc, L.C.; Drbohlav, O.; Lukezic, A.; Berg, A.; et al. The seventh visual object tracking vot2019 challenge results. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV) Workshops, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 2206–2241.

- Roffo, G.; Melzi, S. The visual object tracking vot2016 challenge results. In Computer Vision–ECCV 2016 Workshops: Amsterdam, The Netherlands, October 8–10 and 15–16, 2016, Proceedings, Part II; Springer International Publishing: Berlin/Heidelberg, Germany, 2016; pp. 777–823.

- Wu, Y.; Lim, J.; Yang, M.-H. Object tracking benchmark. IEEE Trans. Pattern Anal. Mach. Intell. 2015, 37, 1834–1848.

- Galoogahi, H.K.; Fagg, A.; Huang, C.; Ramanan, D.; Lucey, S. Need for speed: A benchmark for higher frame rate object tracking. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 1125–1134.

- Mueller, M.; Smith, N.; Ghanem, B. A benchmark and simulator for UAV tracking. In Computer Vision-ECCV 2016-14th European Conference, Amsterdam, The Netherlands, October 11–14, 2016, Proceedings, Part I; Lecture Notes in Computer Science; Leibe, B., Matas, J., Sebe, N., Welling, M., Eds.; Springer: Cham, Switzerland, 2016; Volume 9905, pp. 445–461.

- Hou, Y.; Zhao, C.; Yang, D.; Cheng, Y. Comments on “image denoising by sparse 3-d transform-domain collaborative filtering”. IEEE Trans. Image Process. 2011, 20, 268–270.

- Jia, S.; Ma, C.; Song, Y.; Yang, X. Robust tracking against adversarial attacks. In Computer Vision-ECCV 2020-16th European Conference, Glasgow, UK, August 23–28, 2020, Proceedings, Part XIX; Lecture Notes in Computer Science; Vedaldi, A., Bischof, H., Brox, T., Frahm, J., Eds.; Springer: Cham, Switzerland, 2020; Volume 12364, pp. 69–84.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).